#What is MLOps

Why AI and ML Are the Future of Scalable MLOps Workflows

In today’s fast-paced world of machine learning, speed and accuracy are paramount. But how can businesses ensure that their ML models are continuously improving, deployed efficiently, and constantly monitored for peak performance? Enter MLOps—a game-changing approach that combines the best of machine learning and operations to streamline the entire lifecycle of AI models. And now, with the infusion of AI and ML into MLOps itself, the possibilities are growing even more exciting.

Imagine a world where model deployment isn’t just automated but intelligently optimized, where model monitoring happens in real-time without human intervention, and where continuous learning is baked into every step of the process. This isn’t a far-off vision—it’s the future of MLOps, and AI/ML is at its heart. Let’s dive into how these powerful technologies are transforming MLOps and taking machine learning to the next level.

What is MLOps?

MLOps (Machine Learning Operations) combines machine learning and operations to streamline the end-to-end lifecycle of ML models. It ensures faster deployment, continuous improvement, and efficient management of models in production. MLOps is crucial for automating tasks, reducing manual intervention, and maintaining model performance over time.

Key Components of MLOps

Continuous Integration/Continuous Deployment (CI/CD): Automates testing, integration, and deployment of models, ensuring faster updates and minimal manual effort.

Model Versioning: Tracks different model versions for easy comparison, rollback, and collaboration.

Model Testing: Validates models against real-world data to ensure performance, accuracy, and reliability through automated tests.

Monitoring and Management: Continuously tracks model performance to detect issues like drift, ensuring timely updates and interventions.
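As a rough illustration of the model versioning component above, here is a minimal in-memory sketch. The `ModelRegistry` class and its methods are hypothetical names made up for this example; a real team would more likely use a dedicated registry tool.

```python
import hashlib
import json


class ModelRegistry:
    """Toy in-memory registry: each registered model gets the next version number."""

    def __init__(self):
        self._versions = []  # index i holds version i + 1

    def register(self, params: dict, metrics: dict) -> int:
        # Fingerprint the parameters so identical configurations can be recognised.
        payload = json.dumps(params, sort_keys=True).encode("utf-8")
        entry = {
            "version": len(self._versions) + 1,
            "fingerprint": hashlib.sha256(payload).hexdigest(),
            "params": params,
            "metrics": metrics,
        }
        self._versions.append(entry)
        return entry["version"]

    def get(self, version: int) -> dict:
        return self._versions[version - 1]

    def best(self, metric: str) -> dict:
        # The entry with the highest value of the chosen metric.
        return max(self._versions, key=lambda e: e["metrics"][metric])


registry = ModelRegistry()
v1 = registry.register({"depth": 3}, {"accuracy": 0.91})
v2 = registry.register({"depth": 5}, {"accuracy": 0.89})
rollback_target = registry.best("accuracy")  # version 1 scored higher here
```

Keeping the metrics next to each version is what makes comparison and rollback a lookup rather than an investigation.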

Differences Between Traditional Software DevOps and MLOps

Focus: DevOps handles software code deployment, while MLOps focuses on managing evolving ML models.

Data Dependency: MLOps requires constant data handling and preprocessing, unlike DevOps, which primarily deals with software code.

Monitoring: MLOps monitors model behavior over time, while DevOps focuses on application performance.

Continuous Training: MLOps involves retraining models as data changes, a step with no direct counterpart in traditional DevOps, where behavior changes only when the code does.

AI/ML in MLOps: A Powerful Partnership

As machine learning continues to evolve, AI and ML technologies are playing an increasingly vital role in enhancing MLOps workflows. Together, they bring intelligence, automation, and adaptability to the model lifecycle, making operations smarter, faster, and more efficient.

Enhancing MLOps with AI and ML: By embedding AI/ML capabilities into MLOps, teams can automate critical yet time-consuming tasks, reduce manual errors, and ensure models remain high-performing in production. These technologies don’t just support MLOps—they supercharge it.

Automating Repetitive Tasks: Machine learning algorithms are now used to handle tasks that once required extensive manual effort, such as:

Data Preprocessing: Automatically cleaning, transforming, and validating data.

Feature Engineering: Identifying the most relevant features for a model based on data patterns.

Model Selection and Hyperparameter Tuning: Using AutoML to test multiple algorithms and configurations, selecting the best-performing combination with minimal human input.

This level of automation accelerates model development and ensures consistent, scalable results.

Intelligent Monitoring and Self-Healing: AI also plays a key role in model monitoring and maintenance:

Predictive Monitoring: AI can detect early signs of model drift, performance degradation, or data anomalies before they impact business outcomes.

Self-Healing Systems: Advanced systems can trigger automatic retraining or rollback actions when issues are detected, keeping models accurate and reliable without waiting for manual intervention.
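One way to picture the self-healing idea above is as a small decision rule. The sketch below is only an assumption about how such a policy might look: the function name, the three accuracy inputs, and the 0.05 tolerance are all invented for illustration.

```python
def choose_action(live_accuracy, baseline_accuracy, previous_accuracy,
                  tolerance=0.05):
    """Return 'keep', 'rollback', or 'retrain' for a deployed model.

    live_accuracy:     current model on recent labelled traffic
    baseline_accuracy: current model at deployment time
    previous_accuracy: previously deployed version on the same recent traffic
    """
    # Small drops are treated as noise and ignored.
    if baseline_accuracy - live_accuracy <= tolerance:
        return "keep"
    # If the older version now does better, rolling back is the quickest fix.
    if previous_accuracy > live_accuracy:
        return "rollback"
    # Otherwise neither version fits the new data, so retrain.
    return "retrain"
```

For example, `choose_action(0.80, 0.92, 0.85)` returns `"rollback"`, while `choose_action(0.80, 0.92, 0.70)` returns `"retrain"`.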

Key Applications of AI/ML in MLOps

AI and machine learning aren’t just being managed by MLOps—they’re actively enhancing it. From training models to scaling systems, AI/ML technologies are being used to automate, optimize, and future-proof the entire machine learning pipeline. Here are some of the key applications:

1. Automated Model Training and Tuning: Traditionally, choosing the right algorithm and tuning hyperparameters required expert knowledge and extensive trial and error. With AI/ML-powered tools like AutoML, this process is now largely automated. These tools can:

Test multiple models simultaneously

Optimize hyperparameters

Select the best-performing configuration

This not only speeds up experimentation but also improves model performance with less manual intervention.
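To make the search step concrete, here is a deliberately tiny sketch of what AutoML-style tools do at much larger scale: try every configuration in a search space and keep the one that scores best on validation data. The threshold "model", the data, and the search space are all made up for illustration.

```python
from itertools import product

# Toy validation data: (feature value, label). Purely illustrative.
valid = [(0.2, 0), (0.4, 0), (0.55, 1), (0.7, 1)]


def predict(x, threshold, invert):
    label = 1 if x >= threshold else 0
    return 1 - label if invert else label


def accuracy(data, threshold, invert):
    hits = sum(predict(x, threshold, invert) == y for x, y in data)
    return hits / len(data)


# Hypothetical search space: two hyperparameters, six combinations.
search_space = {"threshold": [0.25, 0.5, 0.75], "invert": [False, True]}

best_config, best_score = None, -1.0
for threshold, invert in product(search_space["threshold"], search_space["invert"]):
    score = accuracy(valid, threshold, invert)
    if score > best_score:
        best_config = {"threshold": threshold, "invert": invert}
        best_score = score
```

On this toy data the search settles on a threshold of 0.5 without inversion. Real tools replace the exhaustive loop with smarter strategies, but the select-by-validation-score idea is the same.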

2. Continuous Integration and Deployment (CI/CD): AI streamlines CI/CD pipelines by automating critical tasks in the deployment process. It can:

Validate data consistency and schema changes

Automatically test and promote new models

Reduce deployment risks through anomaly detection

By using AI, teams can achieve faster, safer, and more consistent model deployments at scale.
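Two of the checks above, schema validation and automatic promotion, can be sketched in a few lines. The field names, the `EXPECTED_SCHEMA` dictionary, and the 0.01 minimum gain are assumptions chosen for this example, not part of any particular CI/CD tool.

```python
# Hypothetical schema for incoming records: field name -> required Python type.
EXPECTED_SCHEMA = {"amount": float, "country": str, "is_new_customer": bool}


def schema_errors(record, schema=EXPECTED_SCHEMA):
    """Return a list of human-readable schema problems (empty if the record is fine)."""
    errors = []
    for field, expected_type in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    for field in record:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors


def should_promote(candidate_metric, production_metric, min_gain=0.01):
    """Promote only if the candidate beats production by at least min_gain."""
    return candidate_metric >= production_metric + min_gain
```

A pipeline step could fail the build whenever `schema_errors` returns a non-empty list, and promote a model only when `should_promote` is true.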

3. Model Monitoring and Management: Once a model is live, its job isn’t done—constant monitoring is essential. AI systems help by:

Detecting performance drift, data shifts, or anomalies

Sending alerts or triggering automated retraining when issues arise

Ensuring models remain accurate and reliable over time

This proactive approach keeps models aligned with real-world conditions, even as data changes.

4. Scaling and Performance Optimization: As ML workloads grow, resource management becomes critical. AI helps optimize performance by:

Dynamically allocating compute resources based on demand

Predicting system load and scaling infrastructure accordingly

Identifying bottlenecks and inefficiencies in real-time

These optimizations lead to cost savings and ensure high availability in large-scale ML deployments.
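Scaling on demand often comes down to a proportional rule. The sketch below uses the ratio formula documented for the Kubernetes Horizontal Pod Autoscaler (current replicas times current metric over target metric, rounded up); the function name, the clamping bounds, and the sample numbers are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas, current_load, target_load,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to load, within fixed bounds."""
    raw = math.ceil(current_replicas * current_load / target_load)
    return max(min_replicas, min(max_replicas, raw))
```

With 4 replicas each handling 90 requests per second against a target of 60, `desired_replicas(4, 90, 60)` asks for 6 replicas. A predictive system would feed a forecast load into the same rule instead of the current one.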

Benefits of Integrating AI/ML in MLOps

Bringing AI and ML into MLOps doesn’t just refine processes—it transforms them. By embedding intelligence and automation into every stage of the ML lifecycle, organizations can unlock significant operational and strategic advantages. Here are the key benefits:

1. Increased Efficiency and Faster Deployment Cycles: AI-driven automation accelerates everything from data preprocessing to model deployment. With fewer manual steps and smarter workflows, teams can build, test, and deploy models much faster, cutting down time-to-market and allowing quicker experimentation.

2. Enhanced Accuracy in Predictive Models: With ML algorithms optimizing model selection and tuning, the chances of deploying high-performing models increase. AI also ensures that models are continuously evaluated and updated, improving decision-making with more accurate, real-time predictions.

3. Reduced Human Intervention and Manual Errors: Automating repetitive tasks minimizes the risk of human errors, streamlines collaboration, and frees up data scientists and engineers to focus on higher-level strategy and innovation. This leads to more consistent outcomes and reduced operational overhead.

4. Continuous Improvement Through Feedback Loops: AI-powered MLOps systems enable continuous learning. By monitoring model performance and feeding insights back into training pipelines, the system evolves automatically, adjusting to new data and changing environments without manual retraining.

Integrating AI/ML into MLOps doesn’t just make operations smarter—it builds a foundation for scalable, self-improving systems that can keep pace with the demands of modern machine learning.

Future of AI/ML in MLOps

The future of MLOps is poised to become even more intelligent and autonomous, thanks to rapid advancements in AI and ML technologies. Trends like AutoML, reinforcement learning, and explainable AI (XAI) are already reshaping how machine learning workflows are built and managed. AutoML is streamlining the entire modeling process—from data preprocessing to model deployment—making it more accessible and efficient. Reinforcement learning is being explored for dynamic resource optimization and decision-making within pipelines, while explainable AI is becoming essential to ensure transparency, fairness, and trust in automated systems.

Looking ahead, AI/ML will drive the development of fully autonomous machine learning pipelines—systems capable of learning from performance metrics, retraining themselves, and adapting to new data with minimal human input. These self-sustaining workflows will not only improve speed and scalability but also ensure long-term model reliability in real-world environments. As organizations increasingly rely on AI for critical decisions, MLOps will evolve into a more strategic, intelligent framework—one that blends automation, adaptability, and accountability to meet the growing demands of AI-driven enterprises.

As AI and ML continue to evolve, their integration into MLOps is proving to be a game-changer, enabling smarter automation, faster deployments, and more resilient model management. From streamlining repetitive tasks to powering predictive monitoring and self-healing systems, AI/ML is transforming MLOps into a dynamic, intelligent backbone for machine learning at scale. Looking ahead, innovations like AutoML and explainable AI will further refine how we build, deploy, and maintain ML models. For organizations aiming to stay competitive in a data-driven world, embracing AI-powered MLOps isn’t just an option—it’s a necessity. By investing in this synergy today, businesses can future-proof their ML operations and unlock faster, smarter, and more reliable outcomes tomorrow.

Dive into the heart of MLOps, where data science meets DevOps, ensuring seamless integration of machine learning models into production. Discover the essential practices and tools for automating, monitoring, and managing the entire ML lifecycle.

Learn how to bridge the gap between data scientists and IT operations to streamline model deployment and optimize performance.

Download our white paper now to gain invaluable insights into the world of MLOps and revolutionize your machine learning workflows.

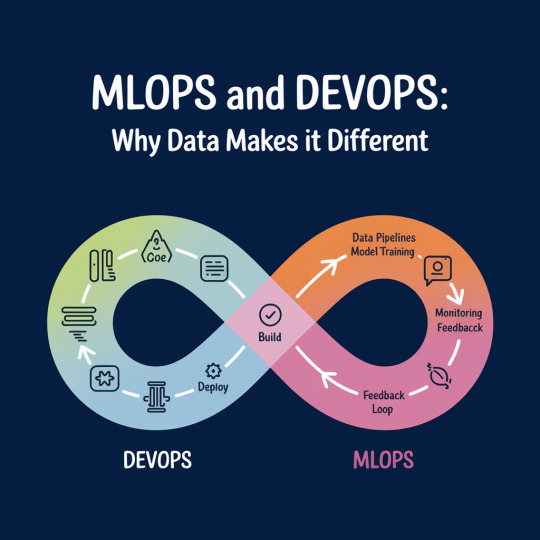

MLOps and DevOps: Why Data Makes It Different

In today’s fast-evolving tech ecosystem, DevOps has become a proven methodology to streamline software delivery, ensure collaboration across teams, and enable continuous deployment. However, when machine learning enters the picture, traditional DevOps processes need a significant shift—this is where MLOps comes into play. While DevOps is focused on code, automation, and systems, MLOps introduces one critical variable: data. And that data changes everything.

To understand this difference, it's essential to explore how DevOps and MLOps operate. DevOps aims to automate the software development lifecycle—from development and testing to deployment and monitoring. It empowers teams to release reliable software faster. Many enterprises today rely on expert DevOps consulting and managed cloud services to help them build resilient, scalable infrastructure and accelerate time to market.

MLOps, on the other hand, integrates data engineering and model operations into this lifecycle. It extends DevOps principles by focusing not just on code, but also on managing datasets, model training, retraining, versioning, and monitoring performance in production. The machine learning pipeline is inherently more experimental and dynamic, which means MLOps needs to accommodate constant changes in data, model behavior, and real-time feedback.

What Makes MLOps Different?

The primary differentiator between DevOps and MLOps is the role of data. In traditional DevOps, code is predictable; once tested, it behaves consistently in production. In MLOps, data drives outcomes—and data is anything but predictable. Shifts in user behavior, noise in incoming data, or even minor feature drift can degrade a model’s performance. Therefore, MLOps must be equipped to detect these changes and retrain models automatically when needed.

Another key difference is model validation. In DevOps, automated tests validate software correctness. In MLOps, validation involves metrics like accuracy, precision, recall, and more, which can evolve as data changes. Hence, while DevOps teams rely heavily on tools like Jenkins or Kubernetes, MLOps professionals use additional tools such as MLflow, TensorFlow Extended (TFX), or Kubeflow to handle the complexities of model deployment and monitoring.
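For readers who have not computed these validation metrics by hand, the sketch below derives accuracy, precision, and recall from binary predictions. The function name and the sample labels are made up for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    return {
        "accuracy": (tp + tn) / len(pairs) if pairs else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


metrics = classification_metrics(
    y_true=[1, 1, 1, 0, 0, 0, 0, 1],
    y_pred=[1, 1, 0, 0, 0, 1, 0, 1],
)
```

Because the labels in production keep changing, the same function run on last month's and this month's data can give different answers for the same model, which is exactly the point the paragraph above makes.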

In a remark attributed to Andrej Karpathy, former Director of AI at Tesla: “Training a deep neural network is much more like an art than a science. It requires insight, intuition, and a lot of trial and error.” This trial-and-error nature makes MLOps inherently more iterative and experimental.

Example: Real-World Application

Imagine a financial institution using ML models to detect fraudulent transactions. A traditional DevOps pipeline could deploy the detection software. But as fraud patterns change weekly or daily, the ML model must learn from new patterns constantly. This demands a robust MLOps system that can fetch fresh data, retrain the model, validate its accuracy, and redeploy—automatically.
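The fetch, retrain, validate, and redeploy cycle described above can be sketched as one function that takes each step as a parameter. Everything here is hypothetical: the step functions are stubs, and the "deploy only on improvement" rule is one assumed policy among many.

```python
def run_retraining_cycle(fetch_data, train, evaluate, deploy, current_score):
    """Fetch fresh data, retrain, validate, and redeploy only on improvement."""
    train_rows, holdout_rows = fetch_data()
    candidate = train(train_rows)
    # Validate on data the candidate has not seen during training.
    candidate_score = evaluate(candidate, holdout_rows)
    deployed = candidate_score > current_score
    if deployed:
        deploy(candidate)
    return {"deployed": deployed, "score": candidate_score}


# Stub steps so the sketch runs end to end.
deployed_models = []
result = run_retraining_cycle(
    fetch_data=lambda: ([1, 2, 3], [4, 5]),
    train=lambda rows: {"name": "fraud-model", "trained_on": len(rows)},
    evaluate=lambda model, rows: 0.94,
    deploy=deployed_models.append,
    current_score=0.90,
)
```

Passing the steps in as functions keeps the orchestration logic separate from any specific data source or training library, so a scheduler can run it daily or weekly.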

This dynamic nature is why integrating agile DevOps practices is crucial. These practices let teams respond faster to data drift or model degradation. For organizations striving to innovate through machine learning, combining agile methodologies with MLOps is a game-changer.

The Need for DevOps Transformation in MLOps Adoption

As companies mature digitally, they often undergo a DevOps transformation, sometimes with consulting support. In this process, incorporating MLOps becomes inevitable for teams building AI-powered products. It's not enough to deploy software; businesses must ensure that their models remain accurate, ethical, and relevant over time.

MLOps also emphasizes collaboration between data scientists, ML engineers, and operations teams, which can be a cultural challenge. Thus, successful adoption of MLOps often requires not just tools and workflows, but also mindset shifts—similar to what organizations go through during a DevOps transformation.

As D. Sculley and colleagues at Google put it in the title of their paper: “Machine Learning: The High-Interest Credit Card of Technical Debt.” Without solid MLOps practices, technical debt builds up quickly, making systems fragile and unsustainable.

Conclusion

In summary, while DevOps and MLOps share common goals—automation, reliability, and scalability—data makes MLOps inherently more complex and dynamic. Organizations looking to build and maintain ML-driven products must embrace both DevOps discipline and MLOps flexibility.

To support this journey, many enterprises are now relying on proven DevOps consulting services that evolve with MLOps capabilities. These services provide the expertise and frameworks needed to build, deploy, and monitor intelligent systems at scale.

Ready to enable intelligent automation in your organization? Visit Cloudastra Technology: Cloudastra DevOps as a Services and discover how our expertise in DevOps and MLOps can help future-proof your technology stack.

Developing and Deploying AI/ML Applications on Red Hat OpenShift AI (AI268)

As artificial intelligence and machine learning (AI/ML) become integral to digital transformation strategies, organizations are looking for scalable platforms that can streamline the development, deployment, and lifecycle management of intelligent applications. Red Hat OpenShift AI (formerly Red Hat OpenShift Data Science) is designed to meet this exact need—providing a powerful foundation for operationalizing AI/ML workloads in hybrid cloud environments.

The AI268 course from Red Hat offers a hands-on, practitioner-level learning experience that empowers data scientists, developers, and DevOps engineers to work collaboratively on AI/ML solutions using Red Hat OpenShift AI.

🎯 Course Overview: What is AI268?

Developing and Deploying AI/ML Applications on Red Hat OpenShift AI (AI268) is an intermediate-level training course that teaches participants how to:

Develop machine learning models in collaborative environments using tools like Jupyter Notebooks.

Train, test, and refine models using OpenShift-native resources.

Automate ML workflows using pipelines and GitOps.

Deploy models into production using model serving frameworks like KServe (formerly KFServing) or OpenVINO Model Server.

Monitor model performance and retrain based on new data.

🔧 Key Learning Outcomes

✅ Familiarity with OpenShift AI Tools Get hands-on experience with integrated tools like JupyterHub, TensorFlow, scikit-learn, PyTorch, and Seldon.

✅ Building End-to-End Pipelines Learn to create CI/CD-style pipelines tailored to machine learning, supporting repeatable and scalable workflows.

✅ Model Deployment Strategies Understand how to deploy ML models as microservices using OpenShift AI’s built-in serving capabilities and expose them via APIs.

✅ Version Control and Collaboration Use Git and GitOps to track code, data, and model changes for collaborative, production-grade AI development.

✅ Monitoring & Governance Explore tools for observability, drift detection, and automated retraining, enabling responsible AI practices.

🧑‍💻 Who Should Take AI268?

This course is ideal for:

Data Scientists looking to move their models into production environments.

Machine Learning Engineers working with Kubernetes and OpenShift.

DevOps/SRE Teams supporting AI/ML workloads in hybrid or cloud-native infrastructures.

AI Developers seeking to learn how to build scalable ML applications with modern MLOps practices.

🏗️ Why Choose Red Hat OpenShift AI?

OpenShift AI blends the flexibility of Kubernetes with the power of AI/ML toolchains. With built-in support for GPU acceleration, data versioning, and reproducibility, it empowers teams to:

Shorten the path from experimentation to production.

Manage lifecycle and compliance for ML models.

Collaborate across teams with secure, role-based access.

Whether you're building recommendation systems, computer vision models, or NLP pipelines—OpenShift AI gives you the enterprise tools to deploy and scale.

🧠 Final Thoughts

AI/ML in production is no longer a luxury—it's a necessity. Red Hat OpenShift AI, backed by Red Hat’s enterprise-grade OpenShift platform, is a powerful toolset for organizations that want to scale AI responsibly. By enrolling in AI268, you gain the practical skills and confidence to deliver intelligent solutions that perform reliably in real-world environments.

🔗 Ready to take your AI/ML skills to the next level? Explore Red Hat AI268 training and become an integral part of the enterprise AI revolution.

For more details, visit www.hawkstack.com

Hire Artificial Intelligence Developers: What Businesses Look for

The Evolving Landscape of AI Hiring

Demand for people who can develop artificial intelligence has grown enormously, but businesses are becoming very selective about whom they recruit. Knowing what businesses really look for in artificial intelligence developers can help job seekers and recruiters make more informed choices. The criteria extend well beyond technical expertise to a multidimensional set of skills that lead to success in real AI development.

Technical Competence Beyond the Basics

Organizations expect the artificial intelligence developers they hire to have sound technical backgrounds, but the particular needs differ considerably depending on the job and domain. Familiarity with programming languages such as Python, R, or Java is generally needed, along with expertise in machine learning libraries such as TensorFlow, PyTorch, or scikit-learn.

But more and more, businesses seek AI developers with expertise that spans all stages of AI development. These stages include data preprocessing, model building, testing, deployment, and monitoring. Proficiency in working on cloud platforms, containerization technology, and MLOps tools has become more essential as businesses ramp up their AI initiatives.

Problem-Solving and Critical Thinking

Technical skills by themselves do not make a great AI practitioner. Businesses want individuals who can approach complex problems analytically and assess possible solutions logically. This demands understanding business needs, identifying applicable AI methods, and developing solutions that work in practice.

The top artificial intelligence engineers can break complex problems into manageable pieces and iterate on solutions. They know AI development is as much an art as a science, so it involves experiments, hypothesis testing, and creative problem-solving. Businesses look for evidence of this problem-solving ability in portfolio projects, case studies, or in-depth interview discussions.

Understanding of Business Context

Artificial intelligence developers today need to understand business contexts and constraints. Businesses appreciate developers who can translate business needs into technical requirements and explain technical limitations to business decision-makers. This business skill ensures that AI projects deliver tangible value rather than mere technical success.

Good AI engineers understand factors like return on investment, user experience, and operational limits. They can weigh model accuracy against computational cost in light of business requirements. This link between business and technology is often what distinguishes successful AI projects from technical pilots that are never deployed into production.

Collaboration and Communication Skills

AI development is collaborative by nature. Organizations seek artificial intelligence developers who can work with diverse groups of data scientists, software engineers, product managers, and business stakeholders. Excellent communication skills are needed to explain complex ideas to non-technical teams and to gather requirements from domain experts.

The ability to give and receive constructive criticism is essential for artificial intelligence developers. Building AI systems is often iterative, with multiple stakeholders influencing the process. Developers who can incorporate feedback without compromising technical integrity are the most sought after by organizations developing AI systems.

Ethical Awareness and Responsibility

Firms now realize that ethical AI is crucial. They want to employ experienced artificial intelligence developers who understand bias, fairness, and the long-term impact of AI systems. This is not compliance for the sake of compliance; it is about creating systems that work equitably for everyone and do not perpetuate harmful bias.

Artificial intelligence engineers who can identify potential ethical issues and recommend solutions are increasingly valuable. This requires familiarity with topics like algorithmic bias, data privacy, and explainable AI. Companies want engineers who address these problems up front rather than as afterthoughts.

Adaptability and Continuous Learning

The AI field is extremely dynamic, so artificial intelligence developers must be adaptable. Employers look for people who demonstrate continuous learning and can adjust to new technologies, methods, and demands. This goes hand in hand with staying abreast of research developments and being willing to learn new tools and frameworks.

Successful artificial intelligence developers are comfortable with change and uncertainty. They recognize that the most advanced methods used now may be outdated tomorrow, and they approach their work with curiosity and flexibility. Businesses appreciate developers who can adapt fast and absorb new knowledge effectively.

Experience with Real-World Deployment

Most AI engineers can develop models that function in development environments, but companies most appreciate those who know how to overcome the barriers of deploying AI systems in production. This involves understanding model serving, monitoring, versioning, and maintenance.

Production deployment experience shows that AI developers understand the full AI lifecycle. They know how to manage issues such as model drift, performance monitoring, and system integration. Practical experience is usually more valuable than strong theoretical knowledge alone.

Domain Expertise and Specialization

Although general AI skill is valued, firms typically look for artificial intelligence developers with particular domain knowledge. Knowledge of the specific issues and needs of industries such as healthcare, finance, or retail makes developers more effective.

Domain understanding helps artificial intelligence developers craft suitable solutions and communicate accurately with stakeholders. It allows them to spot likely problems and opportunities that may not be obvious to generalist developers. This specialization can lead to more focused career advancement and better pay.

Portfolio and Demonstrated Impact

Companies want evidence of good AI development work. Artificial intelligence developers who can demonstrate the value of their work through portfolio projects, case studies, or measurable results stand out. This shows that they can translate technical proficiency into tangible value.

The top portfolios contain several projects that showcase different aspects of AI development. Employers seek to hire artificial intelligence developers who can articulate their thought process, reflect on the problems they encountered, and measure the effects of their work.

Cultural Fit and Growth Potential

Apart from technical skills, firms evaluate whether AI developers will fit their culture and grow in their careers. Factors such as working style, values alignment, and career goals are considered. Firms invest heavily in AI skills and want developers who will be an asset to the firm and evolve with it.

The best artificial intelligence developers combine technical skills with strong interpersonal skills, business sense, and a sense of ethics. They can keep up with changing requirements without sacrificing quality while helping to build healthy team cultures.

Developing and Deploying AI/ML Applications: From Idea to Production

In the rapidly evolving world of artificial intelligence (AI) and machine learning (ML), developing and deploying intelligent applications is no longer a futuristic concept — it's a competitive necessity. Whether it's predictive analytics, recommendation engines, or computer vision systems, AI/ML applications are transforming industries at scale.

This article breaks down the key phases and considerations for developing and deploying AI/ML applications in modern environments — without diving into complex coding.

💡 Phase 1: Problem Definition and Use Case Design

Before writing a single line of code or selecting a framework, organizations must start with clear business goals:

What problem are you solving?

What kind of prediction or automation is expected?

Is AI/ML the right solution?

Examples:

🔹 Forecasting sales

🔹 Classifying customer feedback

🔹 Detecting fraudulent transactions

📊 Phase 2: Data Collection and Preparation

Data is the foundation of AI. High-quality, relevant data fuels accurate models.

Steps include:

Gathering structured or unstructured data (logs, images, text, etc.)

Cleaning and preprocessing to remove noise

Feature selection and engineering to extract meaningful inputs

Tools often used: Jupyter Notebooks, Apache Spark, or cloud-native services like AWS Glue or Azure Data Factory.
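The cleaning step above can be pictured with a small plain-Python sketch: trim whitespace, drop rows with missing required values, and remove duplicates. The function name, field names, and sample rows are assumptions for illustration; at scale the same logic would usually run in one of the tools just mentioned.

```python
def clean_rows(rows, required_fields):
    """Trim strings, drop rows missing required values, and remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        # Normalise string fields by trimming surrounding whitespace.
        normalised = {k: v.strip() if isinstance(v, str) else v
                      for k, v in row.items()}
        # Skip rows where a required field is absent, None, or empty.
        if any(normalised.get(f) in (None, "") for f in required_fields):
            continue
        # Use the sorted items as a hashable key to detect duplicates.
        key = tuple(sorted(normalised.items()))
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(normalised)
    return cleaned


raw = [
    {"id": 1, "text": " great "},
    {"id": 1, "text": "great"},   # duplicate once whitespace is trimmed
    {"id": 2, "text": ""},        # empty required field
    {"id": 3, "text": None},      # missing required value
    {"id": 4, "text": "bad"},
]
cleaned = clean_rows(raw, required_fields=["text"])
```

Only the first and last rows survive here, which is the kind of quiet shrinkage worth logging so that data loss does not go unnoticed.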

Phase 3: Model Development and Training

Once data is prepared, ML engineers select algorithms and train models. Common types include:

Classification (e.g., spam detection)

Regression (e.g., predicting prices)

Clustering (e.g., customer segmentation)

Deep Learning (e.g., image or speech recognition)

Key concepts:

Training vs. validation datasets

Model tuning (hyperparameters)

Accuracy, precision, and recall

Cloud platforms like SageMaker, Vertex AI, or OpenShift AI simplify this process with scalable compute and managed tools.
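The training vs. validation idea listed above is simple enough to sketch without any ML library: shuffle the rows with a fixed seed and hold a fraction back. The function name, the 20% default, and the seed value are assumptions for this example.

```python
import random


def train_validation_split(rows, validation_fraction=0.2, seed=42):
    """Shuffle rows reproducibly and hold back a fraction for validation."""
    shuffled = list(rows)  # copy so the caller's data is left untouched
    random.Random(seed).shuffle(shuffled)
    n_valid = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_valid:], shuffled[:n_valid]


rows = list(range(10))
train_rows, valid_rows = train_validation_split(rows)
```

Fixing the seed means the same rows land in the validation set on every run, which keeps comparisons between model versions fair.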

Phase 4: Model Evaluation and Testing

Before deploying a model, it’s critical to validate its performance on unseen data.

Steps:

Measure performance against benchmarks

Avoid overfitting or bias

Ensure the model behaves well in real-world edge cases

This helps in building trustworthy, explainable AI systems.

🚀 Phase 5: Deployment and Inference

Deployment involves integrating the model into a production environment where it can serve real users.

Approaches include:

Batch Inference (run periodically on data sets)

Real-time Inference (API-based predictions on-demand)

Edge Deployment (models deployed on devices, IoT, etc.)

Tools used for deployment:

Kubernetes or OpenShift for container orchestration

MLflow for model tracking and versioning, or Seldon for model serving

APIs for front-end or app integration
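The difference between the batch and real-time approaches above is mostly in how the model gets called. In the sketch below, `score` is a hypothetical hand-written rule standing in for a trained model, and the JSON field names are invented for illustration.

```python
import json


def score(features):
    # Hypothetical rule standing in for a trained model.
    risk = 0.0
    if features["amount"] > 1000:
        risk += 0.5
    if features["is_new_customer"]:
        risk += 0.25
    return risk


def handle_request(body: str) -> str:
    """Real-time inference: one JSON request in, one JSON response out."""
    features = json.loads(body)
    return json.dumps({"risk": score(features)})


def run_batch(rows):
    """Batch inference: score a whole collection in one scheduled run."""
    return [score(row) for row in rows]


response = handle_request('{"amount": 1500, "is_new_customer": true}')
batch_scores = run_batch([
    {"amount": 1500, "is_new_customer": True},
    {"amount": 20, "is_new_customer": False},
    {"amount": 20, "is_new_customer": True},
])
```

Both paths share the same `score` function, so a model update only has to happen in one place.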

🔄 Phase 6: Monitoring and Continuous Learning

Once deployed, the job isn’t done. AI/ML models need to be monitored and retrained over time to stay relevant.

Focus on:

Performance monitoring (accuracy over time)

Data drift detection

Automated retraining pipelines

MLOps (Machine Learning Operations) helps automate and manage this lifecycle, ensuring scalability and reliability.
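Data drift detection, mentioned in the list above, is often done by comparing the distribution of a feature at training time with its distribution in production. One common measure is the Population Stability Index (PSI). The sketch below is a minimal version: the bin edges, the sample values, and the small `eps` used to avoid dividing by zero are all assumptions for illustration.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples over fixed bin edges."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Number of edges at or below v gives the bin index.
            counts[sum(v >= e for e in edges)] += 1
        # Floor each proportion at eps so empty bins do not break the logarithm.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_values = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
production_values = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9]
edges = [0.25, 0.5, 0.75]

drift = psi(training_values, production_values, edges)
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, though the right threshold depends on the feature and the use case. A value above the chosen threshold is a natural trigger for the automated retraining pipeline.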

Best Practices for AI/ML Application Development

✅ Start with business outcomes, not just algorithms

✅ Use version control for both code and data

✅ Prioritize data ethics, fairness, and security

✅ Automate with CI/CD and MLOps workflows

✅ Involve cross-functional teams: data scientists, engineers, and business users

🌐 Real-World Examples

Retail: AI recommendation systems that boost sales

Healthcare: ML models predicting patient risk

Finance: Real-time fraud detection algorithms

Manufacturing: Predictive maintenance using sensor data

Final Thoughts

Building AI/ML applications goes beyond model training — it’s about designing an end-to-end system that continuously learns, adapts, and delivers real value. With the right tools, teams, and practices, organizations can move from experimentation to enterprise-grade deployments with confidence.

Visit our website for more details - www.hawkstack.com

Beyond the Pipeline: Choosing the Right Data Engineering Service Providers for Long-Term Scalability

Introduction: Why Choosing the Right Data Engineering Service Provider is More Critical Than Ever

In an age where data is often said to be more valuable than oil, simply having pipelines isn’t enough. You need refineries, infrastructure, governance, and agility. Choosing the right data engineering service providers can make or break your enterprise’s ability to extract meaningful insights from data at scale. One figure attributed to Gartner is that by 2025, 80% of data initiatives will fail due to poor data engineering practices or provider mismatches.

If you're already familiar with the basics of data engineering, this article dives deeper into why selecting the right partner isn't just a technical decision—it’s a strategic one. With rising data volumes, regulatory changes like GDPR and CCPA, and cloud-native transformations, companies can no longer afford to treat data engineering service providers as simple vendors. They are strategic enablers of business agility and innovation.

In this post, we’ll explore how to identify the most capable data engineering service providers, what advanced value propositions you should expect from them, and how to build a long-term partnership that adapts with your business.

Section 1: The Evolving Role of Data Engineering Service Providers in 2025 and Beyond

What you needed from a provider in 2020 is outdated today. The landscape has changed:

📌 Real-time data pipelines are replacing batch processes

📌 Cloud-native data platforms like Snowflake, Databricks, and Redshift are dominating

📌 Machine learning and AI integration are table stakes

📌 Regulatory compliance and data governance have become core priorities

Modern data engineering service providers are not just builders—they are data architects, compliance consultants, and even AI strategists. You should look for:

📌 End-to-end capabilities: From ingestion to analytics

📌 Expertise in multi-cloud and hybrid data ecosystems

📌 Proficiency with data mesh, lakehouse, and decentralized architectures

📌 Support for DataOps, MLOps, and automation pipelines

Real-world example: A Fortune 500 retailer moved from Hadoop-based systems to a cloud-native lakehouse model with the help of a modern provider, reducing their ETL costs by 40% and speeding up analytics delivery by 60%.

Section 2: What to Look for When Vetting Data Engineering Service Providers

Before you even begin consultations, define your objectives. Are you aiming for cost efficiency, performance, real-time analytics, compliance, or all of the above?

Here’s a checklist when evaluating providers:

📌 Do they offer strategic consulting or just hands-on coding?

📌 Can they support data scaling as your organization grows?

📌 Do they have domain expertise (e.g., healthcare, finance, retail)?

📌 How do they approach data governance and privacy?

📌 What automation tools and accelerators do they provide?

📌 Can they deliver under tight deadlines without compromising quality?

Quote to consider: "We don't just need engineers. We need architects who think two years ahead." – Head of Data, FinTech company

Avoid the mistake of over-indexing on cost or credentials alone. A cheaper provider might lack scalability planning, leading to massive rework costs later.

Section 3: Red Flags That Signal Poor Fit with Data Engineering Service Providers

Not all providers are created equal. Some red flags include:

📌 One-size-fits-all data pipeline solutions

📌 Poor documentation and handover practices

📌 Lack of DevOps/DataOps maturity

📌 No visibility into data lineage or quality monitoring

📌 Heavy reliance on legacy tools

A real scenario: A manufacturing firm spent over $500k on a provider that delivered rigid ETL scripts. When the data source changed, the whole system collapsed.

Avoid this by asking your provider to walk you through previous projects, particularly how they handled pivots, scaling, and changing data regulations.

Section 4: Building a Long-Term Partnership with Data Engineering Service Providers

Think beyond the first project. Great data engineering service providers work iteratively and evolve with your business.

Steps to build strong relationships:

📌 Start with a proof-of-concept that solves a real pain point

📌 Use agile methodologies for faster, collaborative execution

📌 Schedule quarterly strategic reviews—not just performance updates

📌 Establish shared KPIs tied to business outcomes, not just delivery milestones

📌 Encourage co-innovation and sandbox testing for new data products

Real-world story: A healthcare analytics company co-developed an internal patient insights platform with their provider, eventually spinning it into a commercial SaaS product.

Section 5: Trends and Technologies the Best Data Engineering Service Providers Are Already Embracing

Stay ahead by partnering with forward-looking providers who are already adopting:

📌 Data contracts and schema enforcement in streaming pipelines

📌 Use of modern workflow orchestration, from code-first tools (e.g., Apache Airflow, Prefect) to low-code/no-code options

📌 Serverless data engineering with tools like AWS Glue, Azure Data Factory

📌 Graph analytics and complex entity resolution

📌 Synthetic data generation for model training under privacy laws

Case in point: A financial institution cut model training costs by 30% by using synthetic data generated by its engineering provider, enabling robust yet compliant ML workflows.
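The "data contracts and schema enforcement" item in the list above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the `ORDER_CONTRACT` fields, the record shape, and the `validate_record` and `split_stream` helpers are hypothetical, and a production pipeline would more likely rely on a schema registry or a validation library than on hand-written checks.

```python
from typing import Any

# Hypothetical contract for an "orders" event stream: field name -> expected type.
ORDER_CONTRACT: dict[str, type] = {
    "order_id": str,
    "amount": float,
    "currency": str,
}

def validate_record(record: dict[str, Any], contract: dict[str, type]) -> list[str]:
    """Return a list of contract violations; an empty list means the record conforms."""
    errors = []
    for field, expected in contract.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    for field in record:
        if field not in contract:
            errors.append(f"unexpected field: {field}")
    return errors

def split_stream(records, contract):
    """Pass conforming records onward and quarantine the rest with their errors."""
    valid, quarantined = [], []
    for record in records:
        errors = validate_record(record, contract)
        if errors:
            quarantined.append((record, errors))
        else:
            valid.append(record)
    return valid, quarantined
```

Quarantining bad records instead of failing the whole job is one way to avoid the "rigid ETL script" failure mode: a changed source produces a visible pile of rejected records rather than a collapsed pipeline.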

Conclusion: Making the Right Choice for Long-Term Data Success

The right data engineering service providers are not just technical executors; they are transformation partners. They enable scalable analytics, data democratization, and even new business models.

To recap:

📌 Define goals and pain points clearly

📌 Vet for strategy, scalability, and domain expertise

📌 Watch out for rigidity, legacy tools, and shallow implementations

📌 Build agile, iterative relationships

📌 Choose providers embracing the future

Your next provider shouldn’t just deliver pipelines—they should future-proof your data ecosystem. Take a step back, ask the right questions, and choose wisely. The next few quarters of your business could depend on it.

#DataEngineering #DataEngineeringServices #DataStrategy #BigDataSolutions #ModernDataStack #CloudDataEngineering #DataPipeline #MLOps #DataOps #DataGovernance #DigitalTransformation #TechConsulting #EnterpriseData #AIandAnalytics #InnovationStrategy #FutureOfData #SmartDataDecisions #ScaleWithData #AnalyticsLeadership #DataDrivenInnovation


Text

What Businesses Look for in an Artificial Intelligence Developer

The Evolving Landscape of AI Hiring

Demand for people who can develop artificial intelligence has grown enormously, but businesses have become highly selective about whom they recruit. Knowing what businesses really look for in an artificial intelligence developer can help job seekers and recruiters make more informed choices. The criteria extend well beyond technical expertise to a multidimensional set of skills that lead to success in real AI development.

Technical Competence Beyond the Basics

Organizations expect artificial intelligence developers to possess sound technical backgrounds, but the particular needs differ tremendously depending on the job and domain. Familiarity with programming languages such as Python, R, or Java is generally needed, along with expertise in machine learning libraries such as TensorFlow, PyTorch, or scikit-learn.

But more and more, businesses seek AI developers with expertise that spans all stages of AI development. These stages include data preprocessing, model building, testing, deployment, and monitoring. Proficiency in working on cloud platforms, containerization technology, and MLOps tools has become more essential as businesses ramp up their AI initiatives.

Problem-Solving and Critical Thinking

Technical skills by themselves do not make a great AI practitioner. Businesses want individuals who can approach complex problems analytically and assess possible solutions logically. This demands understanding business needs, identifying applicable AI methods, and developing solutions that work in practice.

The top artificial intelligence engineers can break complex problems into manageable pieces and iterate on solutions. They know AI development is as much an art as a science, so it entails experimentation, hypothesis testing, and creative problem-solving. Businesses look for evidence of this problem-solving capability in portfolio projects, case studies, or in-depth interview discussions.

Understanding of Business Context

Artificial intelligence developers today need to understand business contexts and constraints. Businesses appreciate developers who can translate business needs into technical requirements and explain technical limitations to business decision-makers. This business skill ensures that AI projects deliver tangible value rather than mere technical success.

Good AI engineers understand concepts like return on investment, user experience, and operational constraints. They can trade off model accuracy against computational cost according to business requirements. This link between business and technical thinking is often what distinguishes successful AI projects from technical pilots that never reach production.

Collaboration and Communication Skills

AI development is collaborative by nature. Organizations seek artificial intelligence developers who can work with diverse groups of data scientists, software engineers, product managers, and business stakeholders. Excellent communication skills are needed to explain complex ideas to non-technical teams and to gather requirements from domain experts.

The ability to give and receive constructive criticism is essential for artificial intelligence developers. Building artificial intelligence is often iterative, with multiple stakeholders influencing the process. Developers who can incorporate feedback without compromising technical integrity are the most sought after by organizations developing AI systems.

Ethical Awareness and Responsibility

Firms now recognize that ethical AI is crucial. They want to employ experienced artificial intelligence developers who understand bias, fairness, and the long-term impact of AI systems. This is not compliance for the sake of compliance; it is about creating systems that work equitably for everyone and do not perpetuate harmful bias.

Artificial intelligence engineers who are able to identify potential ethical issues and recommend solutions are increasingly valuable. This requires familiarity with topics like algorithmic bias, data privacy, and explainable AI. Companies want engineers who address these issues up front rather than as afterthoughts.

Adaptability and Continuous Learning

The AI field is extremely dynamic, so artificial intelligence developers must be adaptable. Employers look for people who demonstrate continuous learning and can adjust to new technologies, methods, and demands. This goes hand in hand with staying abreast of research developments and being willing to learn new tools and frameworks.

Successful artificial intelligence developers are comfortable with change and uncertainty. They recognize that today's most advanced methods may be outdated tomorrow, and they approach their work with curiosity and adaptability. Businesses appreciate developers who can adapt quickly and absorb new knowledge effectively.

Experience with Real-World Deployment

Most AI engineers can develop models that work in development environments, but companies most value those who know how to overcome the obstacles of deploying AI systems in production. This involves understanding model serving, monitoring, versioning, and maintenance.

Production deployment experience shows that AI developers understand the full AI lifecycle. They know how to manage issues such as model drift, performance monitoring, and system integration. Practical experience is usually more valuable than deep theoretical knowledge alone.
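To make "model drift" concrete, here is a minimal sketch of one common way to flag a shift in an input feature: the Population Stability Index (PSI), computed between a baseline sample and a recent sample. The equal-width binning, the `1e-6` floor, and the function name `psi` are illustrative assumptions; teams differ in how they bin and what threshold they alert on (values around 0.2 are a frequently cited rule of thumb, not a standard).

```python
import math

def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A monitoring job could call `psi(training_values, last_week_values)` per feature and raise an alert when the score crosses the team's chosen threshold; both argument names here are placeholders.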

Domain Expertise and Specialization

Although general AI skill is valuable, firms typically look for artificial intelligence developers with specific domain knowledge. Understanding the particular issues and needs of industries such as healthcare, finance, or retail makes developers more effective.

Domain understanding helps artificial intelligence developers craft suitable solutions and communicate accurately with stakeholders. It allows them to spot likely problems and opportunities that may not be obvious to generalist developers. This specialization can lead to more focused career advancement and better compensation.

Portfolio and Demonstrated Impact

Companies want evidence of good AI development work. Artificial intelligence developers who can demonstrate the value of their work through portfolio projects, case studies, or measurable results stand out. This shows that they can translate technical proficiency into tangible value.

The best portfolios contain several projects that showcase different aspects of AI development. Employers seek artificial intelligence developers who can articulate their thought process, reflect on the problems they encountered, and measure the effects of their work.

Cultural Fit and Growth Potential

Apart from technical skills, firms evaluate whether AI developers will fit their culture and grow with the company. Factors such as working style, values alignment, and career goals are considered. Firms invest heavily in AI skills and want developers who will be an asset to the firm and evolve with it.

The best artificial intelligence developers combine technical skills with strong interpersonal skills, business sense, and a sense of ethics. They can keep up with changing requirements without sacrificing quality and help build healthy team cultures.


Text

Unlocking the Future of AI: Harnessing Multimodal Control Strategies for Autonomous Systems

The landscape of artificial intelligence is undergoing a profound transformation. Gone are the days when AI was confined to narrow, siloed tasks. Today, enterprises are embracing multimodal, agentic AI systems that integrate diverse data types, execute complex workflows autonomously, and adapt dynamically to evolving business needs. At the heart of this transformation are large language models (LLMs) and multimodal foundation architectures, which are not only transforming industries but redefining what it means for software to be truly intelligent.

For AI practitioners, software architects, and CTOs, especially those considering an Agentic AI course in Mumbai or a Generative AI course in Mumbai, the challenge is no longer about building isolated models but orchestrating resilient, autonomous agents that can process text, images, audio, and video in real time, make context-aware decisions, and recover gracefully from failures. This article explores the convergence of Agentic and Generative AI in software, the latest tools and deployment strategies, and the critical role of software engineering best practices in ensuring reliability, security, and compliance.

Evolution of Agentic and Generative AI in Software

The journey from rule-based systems to today’s agentic AI is a story of increasing complexity and autonomy. Early AI models were narrowly focused, requiring manual input and strict rules. The advent of machine learning brought about predictive models, but these still relied heavily on human oversight. The real breakthrough came with the rise of large language models (LLMs) and multimodal architectures, which enabled AI to process and generate content across text, images, audio, and video.

Agentic AI represents the next evolutionary step. These systems are designed to act autonomously, making decisions, executing workflows, and even self-improving without constant human intervention. They leverage multimodal data to understand context, anticipate trends, and optimize strategies in real time. This shift is not just technical; it is fundamentally changing how businesses operate, enabling hyper-intelligent workflows that drive innovation and competitive advantage.

Generative AI, meanwhile, has moved beyond simple text generation to become a core component of multimodal systems. Today’s generative models can create content, synthesize information, and even simulate complex scenarios, making them indispensable for tasks like personalized marketing, fraud detection, and supply chain optimization. For professionals in Mumbai, enrolling in a Generative AI course in Mumbai can provide hands-on experience with these cutting-edge technologies.

Key Trends in Agentic and Generative AI

Unified Multimodal Foundation Models: These architectures enable seamless integration of multiple data types, improving performance and scalability. Enterprises can now deploy a single model for a wide range of use cases, from customer support to creative content generation.

Agentic AI Orchestration: Platforms like Jeda.ai are integrating multiple LLMs into visual workspaces, allowing businesses to leverage the strengths of different models for parallel task execution. This approach enhances efficiency and enables more sophisticated, context-aware decision-making.

MLOps for Generative Models: As generative AI becomes more central to business operations, robust MLOps pipelines are essential for managing model training, deployment, monitoring, and retraining. Tools like MLflow, Kubeflow, and custom orchestration layers are now standard for enterprise AI teams. For those new to the field, Agentic AI courses for beginners offer a structured introduction to these concepts and the practical skills needed to implement them.

Latest Frameworks, Tools, and Deployment Strategies

The rapid maturation of multimodal AI has given rise to a new generation of frameworks and tools designed to orchestrate complex AI workflows. Leading the charge are foundation models such as OpenAI’s GPT-4o, Google’s Gemini, and Meta’s LLaMA 3. Models in this class can, to varying degrees depending on the model and version, process and generate text, images, audio, and video, reducing the need for separate, specialized models and streamlining deployment across industries.

Key Deployment Strategies

Hybrid Cloud and Edge Architectures: To support real-time, multimodal processing, enterprises are adopting hybrid architectures that combine cloud scalability with edge computing for low-latency inference.

Containerization and Kubernetes: Containerized deployment using Kubernetes ensures portability, scalability, and resilience for AI workloads.

API-first Design: Exposing AI capabilities via well-defined APIs enables seamless integration with existing business systems and third-party applications. For professionals seeking to upskill, an Agentic AI course in Mumbai can provide practical training in these deployment strategies.

Advanced Tactics for Scalable, Reliable AI Systems

Building resilient, autonomous AI systems requires more than just advanced models. It demands a holistic approach to system design, deployment, and operations.

Resilience and Fault Tolerance

Redundancy and Failover: Deploying multiple instances of critical AI components ensures continuous operation even in the event of hardware or software failures.

Self-Healing Mechanisms: Autonomous agents must be able to detect and recover from errors, whether caused by data drift, model degradation, or external disruptions.

Graceful Degradation: When faced with unexpected inputs or system failures, AI systems should degrade gracefully, providing partial results or fallback mechanisms rather than failing outright.
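The failover and graceful-degradation ideas above can be sketched as a simple fallback chain in Python. Everything here is hypothetical and for illustration only: `primary_model` and `simple_heuristic` stand in for a full model and a cheaper backup, and the feature names are made up. The sketch tries each predictor in order and returns a static default only when all of them fail.

```python
from typing import Callable, Sequence

def predict_with_fallback(
    features: dict,
    predictors: Sequence[Callable[[dict], float]],
    default: float,
) -> tuple[float, str]:
    """Try each predictor in order; fall back to a static default if all fail."""
    for predictor in predictors:
        try:
            return predictor(features), predictor.__name__
        except Exception:
            # In a real system this failure would be logged and counted for monitoring.
            continue
    return default, "default"

def primary_model(features: dict) -> float:
    # Hypothetical full model that needs every feature to be present.
    return 0.7 * features["recency"] + 0.3 * features["frequency"]

def simple_heuristic(features: dict) -> float:
    # Hypothetical cheaper fallback that uses a single feature.
    return features["recency"]
```

Returning the name of the component that produced the answer lets downstream monitoring track how often the system is running in a degraded mode.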

Scalability

Horizontal Scaling: Distributing AI workloads across multiple nodes enables efficient scaling to meet fluctuating demand.

Asynchronous Processing: Leveraging event-driven architectures and message queues allows for efficient handling of high-throughput, multimodal data streams.
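As a rough illustration of asynchronous, queue-based processing, the sketch below uses Python's standard `asyncio.Queue` with a small pool of workers. The event shape (`kind` and `payload` keys) and the function names are assumptions made for this example, and an in-process queue is only a stand-in for a real message broker.

```python
import asyncio

async def worker(name, queue, results):
    """Consume events until cancelled; each event stands in for one multimodal input."""
    while True:
        item = await queue.get()
        try:
            await asyncio.sleep(0)  # placeholder for real I/O-bound work
            results.append((name, item["kind"], len(item["payload"])))
        finally:
            queue.task_done()

async def process_events(events, n_workers=3):
    # A bounded queue applies backpressure: producers wait when it is full.
    queue = asyncio.Queue(maxsize=100)
    results = []
    workers = [
        asyncio.create_task(worker(f"w{i}", queue, results))
        for i in range(n_workers)
    ]
    for event in events:
        await queue.put(event)
    await queue.join()  # wait until every queued event has been processed
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results
```

Adding workers (or, in a distributed setup, consumer processes on more nodes) is what horizontal scaling looks like in this pattern; the producer code does not change.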

Security and Compliance

Data Privacy and Anonymization: Multimodal AI systems often process sensitive data, necessitating robust privacy controls and anonymization techniques.

Model Explainability and Auditability: Enterprises must ensure that AI decisions can be explained and audited, particularly in regulated industries.

For beginners, Agentic AI courses for beginners often include modules on these advanced tactics, providing a solid foundation for real-world deployment.

Ethical Considerations in AI Deployment

As AI systems become more autonomous and pervasive, ethical considerations become paramount. Key challenges include:

Bias and Fairness: Ensuring that AI models are fair and unbiased is crucial for maintaining trust and avoiding discrimination.

Transparency and Explainability: Providing clear explanations for AI-driven decisions is essential for accountability and compliance.

Data Privacy: Protecting user data and ensuring privacy is a critical ethical concern in AI deployment.

For professionals in Mumbai, a Generative AI course in Mumbai may include case studies and discussions on these ethical issues, helping learners navigate the complexities of responsible AI deployment.

The Role of Software Engineering Best Practices

Software engineering principles are the bedrock of reliable AI systems. Without them, even the most advanced models can falter.

Code Quality and Maintainability

Modular Design: Breaking down AI systems into reusable, modular components simplifies maintenance and enables incremental improvements.

Automated Testing: Comprehensive test suites, including unit, integration, and end-to-end tests, are essential for catching regressions and ensuring system stability.

DevOps and CI/CD

Continuous Integration and Delivery: Automating the build, test, and deployment pipeline accelerates innovation and reduces the risk of human error.

Infrastructure as Code: Managing infrastructure programmatically ensures consistency and repeatability across environments.

Monitoring and Observability

Real-Time Monitoring: Tracking system health, performance, and data quality in real time enables proactive issue resolution.

Logging and Tracing: Detailed logs and distributed tracing help diagnose complex, multimodal workflows.

For those considering an Agentic AI course in Mumbai, these best practices are often a core focus, ensuring that graduates are equipped to build robust, scalable AI solutions.

Cross-Functional Collaboration for AI Success

The complexity of modern AI systems demands close collaboration between data scientists, software engineers, and business stakeholders.

Breaking Down Silos

Shared Goals and Metrics: Aligning technical and business objectives ensures that AI initiatives deliver real value.

Cross-Functional Teams: Embedding data scientists within engineering teams fosters a culture of collaboration and rapid iteration.

Communication and Documentation

Clear Documentation: Well-documented APIs, data schemas, and deployment processes reduce friction and accelerate onboarding.

Regular Reviews: Frequent code and design reviews help catch issues early and promote knowledge sharing.

For beginners, Agentic AI courses for beginners often emphasize the importance of teamwork and communication in successful AI projects.

Measuring Success: Analytics and Monitoring

The true measure of AI success lies in its impact on business outcomes.

Key Metrics

Accuracy and Performance: Model accuracy, inference speed, and resource utilization are critical for assessing technical performance.

Business Impact: Metrics such as customer satisfaction, operational efficiency, and revenue growth reflect the real-world value of AI deployments.

User Engagement: For customer-facing AI, engagement metrics like session duration and task completion rates provide insights into user experience.

Continuous Improvement

Feedback Loops: Collecting feedback from end users and monitoring system behavior enables continuous refinement of AI models and workflows.

A/B Testing: Comparing different model versions or deployment strategies helps identify the most effective approaches.
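For the A/B testing point, one simple way to compare two model versions on a success metric such as task completion is a two-proportion z-test. The sketch below uses only the Python standard library; the function name and the choice of a pooled standard error are illustrative assumptions, and many teams would use a statistics package or a sequential testing method instead.

```python
import math

def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

A small p-value suggests the difference between versions is unlikely to be noise, but it says nothing about whether the difference is large enough to matter for the business, so the effect size should be reviewed alongside it.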

For professionals in Mumbai, a Generative AI course in Mumbai may include hands-on projects focused on analytics and monitoring, providing practical experience with these critical aspects of AI deployment.

Case Study: Jeda.ai – Orchestrating Multimodal AI at Scale

Jeda.ai is a leading innovator in the field of multimodal, agentic AI. Their platform integrates multiple LLMs, including GPT-4o, Claude 3.5, LLaMA 3, and o1, into a unified visual workspace, enabling businesses to execute complex, AI-driven workflows with unprecedented efficiency and autonomy.

Technical Challenges

Data Integration: Jeda.ai needed to seamlessly process and analyze text, images, audio, and video from diverse sources.

Orchestration Complexity: Managing multiple LLMs and ensuring smooth handoffs between models required sophisticated orchestration logic.

Scalability: The platform had to support high-throughput, real-time processing for enterprise clients.

Solutions and Innovations

Unified Data Pipeline: Jeda.ai developed a robust data pipeline capable of ingesting and preprocessing multimodal data in real time.

Multi-LLM Orchestration: The platform’s orchestration engine dynamically routes tasks to the most appropriate LLM based on context, data type, and performance requirements.

Autonomous Workflow Execution: Jeda.ai’s agents can execute entire workflows autonomously, from data ingestion to decision-making and output generation.
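The routing idea described above (choosing a model based on data type and performance requirements) can be sketched generically. This is not Jeda.ai's implementation, which the article does not describe in detail; the model names, costs, and latencies below are invented purely for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelProfile:
    name: str              # hypothetical model identifier
    modalities: frozenset  # input types the model accepts
    cost: float            # relative cost per request (illustrative number)
    latency_ms: int        # typical latency (illustrative number)

def route(task_modality, max_latency_ms, models):
    """Pick the cheapest model that supports the modality within the latency budget."""
    candidates = [
        m for m in models
        if task_modality in m.modalities and m.latency_ms <= max_latency_ms
    ]
    if not candidates:
        raise LookupError(
            f"no model can handle {task_modality!r} within {max_latency_ms} ms"
        )
    return min(candidates, key=lambda m: m.cost)

# Hypothetical registry of available models.
MODELS = [
    ModelProfile("small-text", frozenset({"text"}), cost=1.0, latency_ms=200),
    ModelProfile("large-multimodal", frozenset({"text", "image", "audio"}), cost=5.0, latency_ms=900),
]
```

With this registry, `route("text", 1000, MODELS)` selects the cheaper text model, while an image task falls through to the multimodal one. Raising an explicit error when nothing qualifies gives the caller a place to apply a fallback policy.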

Business Outcomes

Operational Efficiency: Clients report significant improvements in workflow automation and operational efficiency.

Enhanced Decision-Making: The platform’s context-aware agents enable more accurate, data-driven decisions.

Scalability and Reliability: Jeda.ai’s architecture ensures high availability and resilience, even under heavy load.

Lessons Learned

Embrace Modularity: Breaking down complex workflows into modular components simplifies development and maintenance.

Invest in Observability: Comprehensive monitoring and logging are essential for diagnosing issues in multimodal, agentic systems.

Foster Cross-Functional Collaboration: Close collaboration between data scientists, engineers, and business stakeholders accelerates innovation and ensures alignment with business goals.

For those interested in mastering these techniques, an Agentic AI course in Mumbai can provide valuable insights and practical experience.

Additional Case Studies: Multimodal AI in Diverse Industries

Healthcare

Personalized Medicine: Multimodal AI can analyze patient data, including medical images and genomic information, to provide personalized treatment plans.

Diagnostic Assistance: AI systems can assist in diagnosing diseases by analyzing symptoms, medical histories, and imaging data.

Finance

Risk Management: Multimodal AI helps in risk assessment by analyzing financial data, news, and market trends to predict potential risks.

Customer Service: AI-powered chatbots can provide personalized customer support by understanding voice, text, and visual inputs.

For professionals seeking to specialize, a Generative AI course in Mumbai may offer industry-specific case studies and hands-on projects.

Actionable Tips and Lessons Learned

Start Small, Scale Fast: Begin with a focused proof of concept, then expand to more complex workflows as confidence and expertise grow.

Prioritize Resilience: Design systems with redundancy, self-healing, and graceful degradation in mind.

Leverage Unified Models: Use multimodal foundation models to streamline deployment and improve performance.

Invest in MLOps: Robust MLOps pipelines are critical for managing the lifecycle of generative and agentic AI models.

Monitor and Iterate: Continuously monitor system performance and user feedback, and iterate based on real-world insights.

Collaborate Across Teams: Break down silos and foster a culture of collaboration between technical and business teams.

For beginners, Agentic AI courses for beginners often include practical exercises based on these tips, helping learners build confidence and competence.

Conclusion

The era of autonomous, multimodal AI is upon us. Enterprises that embrace agentic and generative AI will unlock new levels of resilience, efficiency, and innovation. By leveraging the latest frameworks, adopting software engineering best practices, and fostering cross-functional collaboration, AI teams can build systems that not only process and generate content across multiple modalities but also adapt, recover, and thrive in dynamic, real-world environments.

For AI practitioners and technology leaders, especially those considering an Agentic AI course in Mumbai or a Generative AI course in Mumbai, the path forward is clear: invest in multimodal control strategies, prioritize resilience and scalability, and never stop learning from real-world deployments. For beginners, Agentic AI courses for beginners provide a structured entry point into this exciting field, equipping learners with the skills and knowledge needed to succeed in the future of AI.


Text

Career Scope After Completing an Artificial Intelligence Classroom Course in Bengaluru

Artificial Intelligence (AI) has rapidly evolved from a futuristic concept into a critical component of modern technology. As businesses and industries increasingly adopt AI-powered solutions, the demand for skilled professionals in this domain continues to rise. If you're considering a career in AI and are located in India’s tech capital, enrolling in an Artificial Intelligence Classroom Course in Bengaluru could be your best career decision.

This article explores the career opportunities that await you after completing an AI classroom course in Bengaluru, the industries hiring AI talent, and how classroom learning gives you an edge in the job market.

Why Choose an Artificial Intelligence Classroom Course in Bengaluru?

1. Access to India’s AI Innovation Hub

Bengaluru is often called the "Silicon Valley of India" and is home to top tech companies, AI startups, global R&D centers, and prestigious academic institutions. Studying AI in Bengaluru means you’re surrounded by innovation, mentorship, and career opportunities from day one.

2. Industry-Aligned Curriculum

Most reputed institutions offering an Artificial Intelligence Classroom Course in Bengaluru ensure that their curriculum is tailored to industry standards. You gain hands-on experience in tools like Python, TensorFlow, PyTorch, and cloud platforms like AWS or Azure, giving you a competitive edge.

3. In-Person Mentorship & Networking

Unlike online courses, classroom learning offers direct interaction with faculty and peers, live doubt-clearing sessions, group projects, hackathons, and job fairs—all of which significantly boost employability.

What Will You Learn in an AI Classroom Course?

Before we delve into the career scope, let’s understand the core competencies you’ll develop during an Artificial Intelligence Classroom Course in Bengaluru:

Python Programming & Data Structures

Machine Learning & Deep Learning Algorithms

Natural Language Processing (NLP)

Computer Vision

Big Data & Cloud Integration

Model Deployment and MLOps

AI Ethics and Responsible AI Practices

Hands-on experience with real-world projects ensures that you not only understand theoretical concepts but also apply them in practical business scenarios.

Career Scope After Completing an AI Classroom Course

1. Machine Learning Engineer

One of the most in-demand roles today, ML Engineers design and implement algorithms that enable machines to learn from data. With a strong foundation built during your course, you’ll be qualified to work on predictive models, recommendation systems, and autonomous systems.

Salary Range in Bengaluru: ₹8 LPA to ₹22 LPA

Top Hiring Companies: Google, Flipkart, Amazon, Mu Sigma, IBM Research Lab

2. AI Research Scientist

If you have a knack for academic research and innovation, this role allows you to work on cutting-edge AI advancements. Research scientists often work in labs developing new models, improving algorithm efficiency, or working on deep neural networks.

Salary Range: ₹12 LPA to ₹30+ LPA

Top Employers: Microsoft Research, IISc Bengaluru, Bosch, OpenAI India, Samsung R&D

3. Data Scientist

AI and data science go hand in hand. Data scientists use machine learning algorithms to analyze and interpret complex data, build models, and generate actionable insights.

Salary Range: ₹10 LPA to ₹25 LPA

Hiring Sectors: Fintech, eCommerce, Healthcare, EdTech, Logistics

4. Computer Vision Engineer

With industries adopting automation and facial recognition, computer vision engineers are in high demand. From working on surveillance systems to autonomous vehicles and medical imaging, this career path is both versatile and future-proof.

Salary Range: ₹9 LPA to ₹20 LPA

Popular Employers: Nvidia, Tata Elxsi, Qualcomm, Zoho AI

5. Natural Language Processing (NLP) Engineer

NLP is at the core of chatbots, language translators, and sentiment analysis tools. As companies invest in better human-computer interaction, the demand for NLP engineers continues to rise.

Salary Range: ₹8 LPA to ₹18 LPA

Top Recruiters: TCS AI Lab, Adobe India, Razorpay, Haptik

6. AI Product Manager

With your AI knowledge, you can move into managerial roles and lead AI-based product development. These professionals bridge the gap between the technical team and business goals.

Salary Range: ₹18 LPA to ₹35+ LPA

Companies Hiring: Swiggy, Ola Electric, Urban Company, Freshworks

7. AI Consultant

AI consultants work with multiple clients to assess their needs and implement AI solutions for business growth. This career often involves travel, client interaction, and cross-functional knowledge.

Salary Range: ₹12 LPA to ₹28 LPA

Best Suited For: Professionals with prior work experience and communication skills

Certifications and Placements

Many reputed institutions like Boston Institute of Analytics (BIA) offer AI classroom courses in Bengaluru with:

Globally Recognized Certifications

Live Industry Projects

Placement Support with 90%+ Success Rate

Interview Preparation & Resume Building Sessions

Graduates of such courses have gone on to work at top tech firms, startups, and even international research labs.

Final Thoughts

Bengaluru’s tech ecosystem provides an unmatched environment for aspiring AI professionals. Completing an Artificial Intelligence Classroom Course in Bengaluru equips you with the skills, exposure, and confidence to enter high-paying, impactful roles across various industries.

Whether you're a student, IT professional, or career switcher, this classroom course can be your gateway to a future-proof career in one of the world’s most transformative technologies. The real-world projects, in-person mentorship, and direct industry exposure you gain in Bengaluru will set you apart in a competitive job market.

#Best Data Science Courses in Bengaluru#Artificial Intelligence Course in Bengaluru#Data Scientist Course in Bengaluru#Machine Learning Course in Bengaluru

5 Ultimate Industry Trends That Define the Future of Data Science

Data science is a field in constant motion, a dynamic blend of statistics, computer science, and domain expertise. Just when you think you've grasped the latest tool or technique, a new paradigm emerges. As we look towards the immediate future and beyond, several powerful trends are coalescing to redefine what it means to be a data scientist and how data-driven insights are generated.

Here are 5 ultimate industry trends that are shaping the future of data science:

1. Generative AI and Large Language Models (LLMs) as Co-Pilots

This isn't just about data scientists using Gen-AI; it's about Gen-AI augmenting the data scientist themselves.

Automated Code Generation: LLMs are becoming increasingly adept at generating SQL queries, Python scripts for data cleaning, feature engineering, and even basic machine learning models from natural language prompts.

Accelerated Research & Synthesis: LLMs can quickly summarize research papers, explain complex concepts, brainstorm hypotheses, and assist in drafting reports, significantly speeding up the research phase.

Democratizing Access: By lowering the bar for coding and complex analysis, LLMs enable "citizen data scientists" and domain experts to perform more sophisticated data tasks.

Future Impact: Data scientists will shift from being pure coders to being "architects of prompts," validators of AI-generated content, and experts in fine-tuning and integrating LLMs into their workflows.

2. MLOps Maturation and Industrialization

The focus is shifting from building individual models to operationalizing entire machine learning lifecycles.

Production-Ready AI: Organizations realize that a model in a Jupyter notebook provides no business value. MLOps (Machine Learning Operations) provides the practices and tools to reliably deploy, monitor, and maintain ML models in production environments.

Automated Pipelines: Expect greater automation in data ingestion, model training, versioning, testing, deployment, and continuous monitoring.

Observability & Governance: Tools for tracking model performance, data drift, bias detection, and ensuring compliance with regulations will become standard.

Future Impact: Data scientists will need stronger software engineering skills and a deeper understanding of deployment environments. The line between data scientist and ML engineer will continue to blur.
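As a rough illustration of the data drift monitoring mentioned above, here is a minimal sketch of one common drift statistic, the Population Stability Index (PSI). The function name, the bin count, and the sample data are all assumptions made for this example; the post does not name a specific tool or metric, and production systems typically rely on dedicated monitoring libraries.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one feature; larger values suggest more drift."""
    lo, hi = min(reference), max(reference)
    # Equal-width bins over the reference range (fallback width if all values are equal).
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the reference range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each proportion at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Hypothetical data: a training-time feature and two "live" samples.
training_feature = [i / 100 for i in range(1000)]
live_same = list(training_feature)
live_shifted = [v + 3.0 for v in training_feature]

psi_same = population_stability_index(training_feature, live_same)
psi_shifted = population_stability_index(training_feature, live_shifted)
print(f"PSI (unchanged data): {psi_same:.3f}")
print(f"PSI (shifted data):   {psi_shifted:.3f}")
```

In an automated pipeline, a check like this could run on a schedule and raise an alert or trigger retraining when the score crosses a threshold. The right threshold depends on the application and should be tuned rather than assumed.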

3. Ethical AI and Responsible AI Taking Center Stage

As AI systems become more powerful and pervasive, the ethical implications are no longer an afterthought.

Bias Detection & Mitigation: Rigorous methods for identifying and reducing bias in training data and model outputs will be crucial to ensure fairness and prevent discrimination.

Explainable AI (XAI): The demand for understanding why an AI model made a particular decision will grow, driven by regulatory pressure (e.g., EU AI Act) and the need for trust in critical applications.

Privacy-Preserving AI: Techniques like federated learning and differential privacy will gain prominence to allow models to be trained on sensitive data without compromising individual privacy.

Future Impact: Data scientists will increasingly be responsible for the ethical implications of their models, requiring a strong grasp of responsible AI principles, fairness metrics, and compliance frameworks.
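To make the idea of a fairness metric concrete, here is a small sketch of one widely used measure, the demographic parity difference: the gap between the highest and lowest positive-prediction rates across groups. The function name and the toy data are assumptions for illustration only; the post does not prescribe a particular metric, and real audits usually combine several.

```python
from typing import Dict, Hashable, Sequence


def demographic_parity_difference(
    predictions: Sequence[int],
    groups: Sequence[Hashable],
) -> float:
    """Return the gap between the highest and lowest positive-prediction rates."""
    totals: Dict[Hashable, int] = {}
    positives: Dict[Hashable, int] = {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


# Hypothetical binary predictions (1 = approved) for two groups, "a" and "b".
predictions = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_difference(predictions, groups)
print(gap)  # group "a" rate is 0.75, group "b" rate is 0.25, so the gap is 0.5
```

A gap of zero means every group receives positive predictions at the same rate. Whether that is the right fairness criterion depends on the use case, which is part of why this responsibility falls to the data scientist rather than to a tool.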

4. Edge AI and Real-time Analytics Proliferation

The need for instant insights and local processing is pushing AI out of the cloud and closer to the data source.

Decentralized Intelligence: Instead of sending all data to a central cloud for processing, AI models will increasingly run on devices (e.g., smart cameras, IoT sensors, autonomous vehicles) at the "edge" of the network.

Low Latency Decisions: This enables real-time decision-making for applications where milliseconds matter, reducing bandwidth requirements and improving responsiveness.

Hybrid Architectures: Data scientists will work with complex hybrid architectures where some processing happens at the edge and aggregated data is sent to the cloud for deeper analysis and model retraining.

Future Impact: Data scientists will need to understand optimization techniques for constrained environments and the challenges of deploying and managing models on diverse hardware.

5. Democratization of Data Science & Augmented Analytics

Data science insights are becoming accessible to a broader audience, not just specialized practitioners.

Low-Code/No-Code (LCNC) Platforms: These platforms empower business analysts and domain experts to build and deploy basic ML models without extensive coding knowledge.

Augmented Analytics: AI-powered tools automate parts of the data analysis process, such as data preparation, insight generation, and natural language explanations, making data more understandable to non-experts.

Data Literacy: A greater emphasis on fostering data literacy across the entire organization, enabling more employees to interpret and utilize data insights.

Future Impact: Data scientists will evolve into mentors, consultants, and developers of tools that empower others, focusing on solving the most complex and novel problems that LCNC tools cannot handle.

The future of data science is dynamic, exciting, and demanding. Success in this evolving landscape will require not just technical prowess but also adaptability, a strong ethical compass, and a continuous commitment to learning and collaboration.

Top Tech Events in June 2025: Must-Attend Conferences and Upcoming Tech Events

June 2025 is packed with exciting technology events all over India, offering unique networking opportunities and cutting-edge insights. Whether you are interested in AI, data analytics, cybersecurity, or marketing innovation, these upcoming tech events will help you stay ahead of industry trends and connect with industry leaders.

Why Attend Tech Events in 2025?

The technology landscape is evolving rapidly, with artificial intelligence, machine learning, and cybersecurity taking center stage. Attending these 2025 events offers invaluable opportunities to learn from industry experts, discover emerging technologies, and build meaningful professional connections that can accelerate your career or business growth.

1. Gartner Data & Analytics Summit 2025 - Mumbai

📅 Date: June 2-3, 2025 📍 Location: Grand Hyatt Mumbai, Mumbai, India 🏢 Organized by: Gartner ⏰ Timings: 9:00 AM - 6:00 PM

What Makes This Event Special?

The Gartner Data & Analytics Summit addresses the most pressing challenges facing data and analytics leaders today. This premier event focuses on digital transformation strategies, AI implementation best practices, using data to drive digital transformation, building high-performing teams, and making strategic decisions.

2. Accel AI Summit 2025 - Bengaluru

📅 Date: June 4, 2025 📍 Location: Bengaluru, India 🏢 Organized by: Accel ⏰ Timings: 9:00 AM - 6:00 PM

Event Highlights

The Accel AI Summit offers exclusive insight into machine learning innovation and real-world AI applications. This event is ideal for professionals shaping the future of artificial intelligence in India's tech capital. You'll learn about AI implementation strategies and industry case studies, among other topics.

3. Gen AI ML Global Conclave Bangalore 2025

📅 Date: June 13, 2025 📍 Location: Ibis Bengaluru Hebbal, Bengaluru, India 🏢 Organized by: 1.21GWS ⏰ Timings: 9:00 AM - 6:00 PM

Comprehensive AI Focus

This conclave brings top industry leaders together to share practical insights into applying generative AI across business functions, including HR, sales, marketing, and customer success.

4. CISO6 Cyber Security Summit 2025 - Mumbai

📅 Date: June 20, 2025 📍 Location: Hyatt Centric Juhu Mumbai, Mumbai, India 🏢 Organized by: Transformation Studios ⏰ Timings: 8:30 AM - 11:00 PM

Premier Cybersecurity Event

The CISO6 Cyber Security Summit is part of a prestigious global event series covering India, the United Arab Emirates, APAC, and Africa. The Mumbai edition focuses on aligning cybersecurity strategies with business objectives in India's financial capital.

5. NewGen Marketing Innovation Summit 2025 - Bengaluru

📅 Date: June 27, 2025 📍 Location: Ibis Bengaluru Hebbal, Bengaluru, India 🏢 Organized by: 1.21GWS ⏰ Timings: 8:50 AM - 5:00 PM

Marketing Technology Revolution

This summit shows how AI and data-driven strategies are revolutionizing marketing. Attendees will gain practical insight into using generative AI, MarTech tools, and personalization techniques to achieve better results. Core focus areas include generative AI innovations and real-world applications, strengthening cybersecurity in the age of AI, and addressing MLOps and scaling challenges.

Registration and Early Bird Benefits

Most of these 2025 tech events offer early-bird pricing and group registration discounts. Companies that send several employees often see significant cost savings. Register early, as these premium events usually sell out quickly.

Conclusion: Don't Miss These Game-Changing Upcoming Tech Events

These 2025 tech events represent some of the best opportunities to stay current with technology trends, expand your professional network, and gain a competitive advantage in your industry. From AI innovations to cybersecurity strategies, each event offers a unique value proposition for technology professionals.

Whether you are based in Mumbai or Bengaluru or traveling from another city, these carefully curated events provide exceptional opportunities for learning and networking. The convergence of industry leaders, innovative startups, and technology pioneers makes June a standout month for tech professionals throughout India.

Ready to Transform Your Tech Career?

Start planning your June 2025 tech event journey today. These events fill up quickly, so early registration is highly recommended. Connect with industry leaders, discover breakthrough technologies, and position yourself at the forefront of India's dynamic technology landscape.

To stay updated on the latest tech trends and innovations, visit Nextloop Technologies.

How to Choose the Best Data Science Course in Pune: A Complete Checklist

In today’s data-driven world, data science has emerged as one of the most in-demand and lucrative career paths. Pune, known as the "Oxford of the East", is a growing tech hub in India and offers numerous options for data science education. With so many institutions, bootcamps, and online courses to choose from, it can be overwhelming to select the right one.

If you're planning to build a career in data science and are looking for the best data science course in Pune, this comprehensive checklist will help you make a well-informed decision.

1. Define Your Goals