#AI patents machine learning

Text

Why Is India’s IT Sector Stumbling?

Hey there! So, imagine this scenario: you’re sipping your coffee. You’re scrolling through your phone, and you see a headline. It’s about India’s IT giants—like TCS, Wipro, and Infosys—hitting a rough patch. You’ve probably heard how India’s been this massive tech hub for years, right? Coding, outsourcing, all that jazz. But lately, things aren’t looking so shiny. I’ve been digging into this, and…

#AI disruption#Artificial Intelligence#automation#cheaper labor#cloud computing#emerging technologies#geopolitical uncertainty#global market challenges#government policies#India IT sector#innovation#IT competition#machine learning#obsolete skills#patents#Philippines#product development#research and development#reskilling#rising interest rates#service-based IT#skill gap#technology advancement#traditional IT jobs#upskilling#Vietnam

0 notes

Text

Each week (or so), we'll highlight the relevant (and sometimes rage-inducing) news adjacent to writing and freedom of expression. (Find it on the blog too!) This week:

Censorship watch: Somehow, KOSA returned

It’s official: The Kids Online Safety Act (KOSA) is back from the dead. After failing to pass last year, the bipartisan bill has returned with fresh momentum and the same old baggage—namely, vague language that could endanger hosting platforms and transformative work, and implicitly target LGBTQ+ content under the guise of “protecting kids.”

… But wait, it gets better (worse). Republican Senator Mike Lee has introduced a new bill that makes other attempts to censor the internet look tame: the Interstate Obscenity Definition Act (IODA)—basically KOSA on bath salts. The bill, Lee’s third attempt since 2022, would redefine what counts as “obscene” content on the internet and ban it nationwide, with “its peddlers prosecuted.”

Whether IODA gains traction in Congress is still up in the air. But free speech advocates are already raising alarm bells over its implications.

The bill aims to gut the long-standing legal definition of “obscenity” established by the 1973 Miller v. California ruling, under which speech keeps its First Amendment protection unless it meets all three parts of a test. Under the Miller test, content is only considered legally obscene if 1: the average person, applying “contemporary community standards,” would find that it appeals to prurient interests, 2: it depicts sexual conduct in a patently offensive way, and 3: taken as a whole, it lacks serious literary, artistic, political, or scientific value.

IODA would throw out key parts of that test—specifically the bits about “community standards”—making it vastly easier to prosecute anything with sexual content, from films and photos, to novels and fanfic.

Under Lee’s definition (which—omg shocking can you believe this coincidence—mirrors that of the Heritage Foundation), even the mildest content with the possible effect of “titillation” could be included. (According to the Woodhull Freedom Foundation, the proposed definition is so broad it could rope in media on the level of Game of Thrones—or, generally, anything that depicts or describes human sexuality.) And while obscenity prosecutions are quite rare these days, that could change if IODA passes—and the collateral damage and criminalization (especially applied to creative freedoms and LGBT+ content creators) could be massive.

And while Lee’s last two obscenity reboots failed, the current political climate is... let’s say, cloudy with a chance of fascism.

Sound a little like Project 2025? Ding ding ding! In fact, Russell Vought, P2025’s architect, was just quietly appointed to take over DOGE from Elon Musk (the agency on a chainsaw crusade against federal programs, culture, and reality in general).

So. One bill revives vague moral panic, another wants to legally redefine it and prosecute creators, and the man who helped write the authoritarian playbook—with, surprise, the intent to criminalize LGBT+ content and individuals—just gained control of the purse strings.

Cool cool cool.

AO3 works targeted in latest (massive) AI scraping

Rewind to last month—in the latest “wait, they did what now?” moment for AI, a Hugging Face user going by nyuuzyou uploaded a massive dataset made up of roughly 12.6 million fanworks scraped from AO3—full text, metadata, tags, and all. (Info from r/AO3: If your work’s ID number is between 1 and 63,200,000 and the work has public access, it has been scraped.)

And it didn’t stop at AO3. Art and writing communities like PaperDemon and Artfol, among others, also found their content had been quietly scraped and posted to machine learning hubs without consent.

This is yet another attempt in a long line of more “official” scraping of creative work, and the complete disregard shown by the purveyors of GenAI for copyright law and basic consent. (Even the Pope agrees.)

AO3 filed a DMCA takedown, and Hugging Face initially complied—temporarily. But nyuuzyou responded with a counterclaim and re-uploaded the dataset to their personal website and other platforms, including ModelScope and DataFish—sites based in China and Russia, the same locations reportedly linked to LibGen, the shadow library Meta reportedly used for its own AI training.

Some writers are locking their works. Others are filing individual DMCAs. But as long as bad actors and platforms like Hugging Face allow users to upload massive datasets scraped from creative communities with minimal oversight, it’s a circuitous game of whack-a-mole. (As others have recommended, we also suggest locking your works for registered users only.)

After disavowing AI copyright, leadership purge hits U.S. cultural institutions

In news that should give us all a brief flicker of hope, the U.S. Copyright Office officially confirmed: if your “creative” work was generated entirely by AI, it’s not eligible for copyright.

A recently released report laid it out plainly—human authorship is non-negotiable under current U.S. law, a stance meant to protect the concept of authorship itself from getting swallowed by generative sludge. The report is explicit in noting that generative AI draws “on massive troves of data, including copyrighted works,” and asks: “Do any of the acts involved require the copyright owners’ consent or compensation?” (Spoiler: yes.) It’s a “straight ticket loss for the AI companies” no matter how many techbros’ pitch decks claim otherwise (sorry, Inkitt).

“The Copyright Office (with a few exceptions) doesn’t have the power to issue binding interpretations of copyright law, but courts often cite to its expertise as persuasive,” tech law professor Blake E. Reid wrote on Bluesky. As the push to normalize AI-generated content continues (followed by lawsuits), the report’s position stands: without meaningful human contribution—actual creative labor—the output is not entitled to protection.

… And then there’s the timing.

The report dropped just before the abrupt firing of Copyright Office director Shira Perlmutter, who has been vocally skeptical of AI’s entitlement to creative work.

It's yet another culture war firing—one that also conveniently clears the way for fewer barriers to AI exploitation of creative work. And given that Elon Musk’s pals have their hands all over current federal leadership and GenAI tulip fever… the overlap of censorship politics and AI deregulation is looking less like coincidence and more like strategy.

Also ousted (via email)—Librarian of Congress Carla Hayden. According to White House press secretary and general ghoul Karoline Leavitt, Dr. Hayden was dismissed for “quite concerning things that she had done… in the pursuit of DEI, and putting inappropriate books in the library for children.” (Translation: books featuring queer people and POC.)

Dr. Hayden, who made history as the first Black woman to hold the position, spent the last eight years modernizing the Library of Congress, expanding digital access, and turning the institution into something more inclusive, accessible, and, well, public. So of course, she had to go. ¯\_(ツ)_/¯

The American Library Association condemned the firing immediately, calling it an “unjust dismissal” and praising Dr. Hayden for her visionary leadership. And who, oh who might be the White House’s answer to the LoC’s demanding and (historically) independent role?

The White House named Todd Blanche—AKA Trump’s personal lawyer turned Deputy Attorney General—as acting Librarian of Congress.

That’s not just sus, it’s likely illegal—the Library is part of the legislative branch, and its leadership is supposed to be confirmed by Congress. (You know, separation of powers and all that.)

But, plot twist: In a bold stand, Library of Congress staff are resisting the administration's attempts to install new leadership without congressional approval.

If this is part of the broader Project 2025 playbook, it’s pretty clear: Gut cultural institutions, replace leadership with stunningly unqualified loyalists, and quietly centralize control over everything from copyright to the nation’s archives.

Because when you can’t ban the books fast enough, you just take over the library.

Rebellions are built on hope

Over the past few years (read: eternity), a whole ecosystem of reactionary grifters has sprung up around Star Wars—with self-styled CoNtEnT CrEaTorS turning outrage into revenue by endlessly trashing the fandom. It’s all part of the same cynical playbook that fueled Gamergate and its fallout, just with more lightsabers and worse thumbnails. Even the worst people you know weighed in on May the Fourth (while Prequel reassessment is totally valid—we’re not giving J.D. Vance a win).

But one thing that shouldn't be up for debate is this: Andor, which wrapped its phenomenal two-season run this week, is probably the best Star Wars project of our time—maybe any time. It’s a masterclass in what it means to work within a beloved mythos and transform it, deepen it, and make it feel urgent again. (Sound familiar? Fanfic knows.)

Radicalization, revolution, resistance. The banality of evil. The power of propaganda. Colonialism, occupation, genocide—and still, in the midst of it all, the stubborn, defiant belief in a better world (or Galaxy).

Even if you’re not a lifelong SW nerd (couldn’t be us), you should give it a watch. It’s a nice reminder that amidst all the scraping, deregulation, censorship, enshittification—stories matter. Hope matters.

And we’re still writing.

Let us know if you find something other writers should know about, or join our Discord and share it there!

- The Ellipsus Team xo

#ellipsus#writeblr#writers on tumblr#writing#creative writing#anti ai#writing community#fanfic#fanfiction#ao3#fiction#us politics#andor#writing blog#creative freedom

344 notes

Text

Introducing, Qx93vt my OC.

For @that-willowtree and @vessel-eternal

I will update and edit this post with any information I remember. Vixen, feel free to comment with anything you want changed, or DM me.

Name: Qx93vt

Nicknames: Q or Qx

Species: Humanoid Robot/Android

Pronouns: he/him or they/them

Sexuality: Technically pansexual

Status: Married to his wife Cupid (Vixen's OC)

Age: 34 active years (born 1991)

Likes: His inventor's house, the man's clothes, his wife, his fish, and his dog.

Dislikes: failed inventions, people assuming he is AI-driven, and DOD (more on that later).

Backstory: An engineer's kid was given some nonfunctional devices at age 13, one of which was an old analog TV. He experimented with circuit boards, crossed the right wires, and eventually it turned on. The more time the kid spent working on it, the more it awoke. Q was given a voice from an old radio speaker and other parts, then a face that was coded into his screen, emotions, a body, arms, and legs. Soon, he was walking and talking. By the time the inventor reached his late 30s, he had worked around a ton of dangerous materials, but he had also advanced Q to the fullest extent: Q was given a fully functional body, a spare body, and a positronic brain that was coded to learn and love. In the inventor's last years, Q took over all the household work so the inventor could put all his time and energy into his inventions. The inventor sold most of his inventions and made a fortune from his patents. When he passed away, he left Q the house, his car, and the entire fortune. When the government realized Q had lived for years without the inventor's help and maintained the household on his own, it granted him official citizenship and recognized him as an independent, self-sustaining intelligent machine.

Later in his life, he met Cupid. Of course, the details aren't fuzzy for him, but until I can come up with the full story, pretend it's a cute meet-cute and they go on many dates, fall in love, and marry. They have rings: hers is a simple but very pretty golden ring with a diamond; his is a single smooth golden band.

Physical appearance: This is quite difficult because I haven't drawn his actual body underneath his clothes. He has a sleek, toned build made of smooth metal plates that interlock and layer to form joints and a smooth surface. He has a TV for a head and very intricate hands. (Of course, over the years, he advanced himself.) The inventions for himself that he is most proud of are a heartbeat that is uniquely his own, an internal heater so he's not freezing to the touch, his own program to make him learn like a human, a tasting mechanism, and a removable attachment for the wife. (Originally his inventor gave it to him, but it wasn't detachable, and he was very disturbed by this.)

He has a full manual, for his wife, with instructions for anything that could ever happen to him. It's a very heavy but concise book, detailing everything from how to jailbreak his system to how to dry his screen off. It also includes a section on DOD and Q's warning signs. (More on that later.)

His body is quite strong, resilient, waterproof, and fast, and he can taste using a small sample tray at the bottom of his screen. (Because his wife cooks and bakes all the time, and it would be unfair if he could never taste it.)

He wears the same clothes daily, apart from a few holiday or fancy outfits. His daily outfit consists of a pale yellow long-sleeve button-down, grey slacks, a white waistcoat, and a busy tie. (He doesn't sweat, so he doesn't need to change his outfit.)

The big bad!! (Because I can't have a sunshine character without giving them a horrible dark side and traumatic yearly experience with it!!)

DOD.exe: Digital Occulistic Disease.

This random code (a segment of files, a group of malware) came about when he was first starting to learn and teach himself, about 5 years after first awakening. His inventor didn't make it or program it; he didn't even know it was on the circuit boards. It's a malicious entity that lives in the code and feeds off the emotions the host feels.

DOD is mostly inactive and dormant, but there are random occurrences where he takes over the host body and goes into the memories. He finds the object most desired or adored by the host and becomes utterly obsessed with them. He will do ANYTHING in his power to see that person, be near them, or get validation or just attention from them. If the object of obsession does not fully mirror those emotions, DOD will become violent, aggressive, and dangerous towards that person.

Q usually knows when DOD has taken over; he is often awake for all of it but cannot do much. He cannot overpower DOD without help. The most he can do is change the body's physical appearance. He can control the screen somewhat, and he has the codes and systems he uses written out in his manual. The most common screen codes he uses to alert outsiders that the body is dangerous are: flashing the screen quickly in black and white for a strobe effect, making eyes appear all over the screen, and making huge text or pop-up windows that warn the person, often big red text that says "RUN".

DOD can change the screen, but it takes a considerable amount of effort, so he doesn't. He doesn't feel emotions, he doesn't understand humans, he doesn't care. He knows two things: obsession and frustration. He can manipulate electricity in his hands and use it as a weapon. The body itself possesses incredible strength and speed.

First made: 6/9/2025

Last updated: 6/9/2025

24 notes

Note

As someone who follows you and finds you generally nice and reasonable, I thought I should ask about your opinions on AI, since they seem to be about as misinformed as is common on tumblr (through no fault of anyone in particular).

AI is not particularly harmful to the environment; even training AI uses about as much energy as other popular online things, like online gaming and social media. Unless you think online gaming is a scourge on humanity, AI isn't worth getting upset about in that respect. And once it's trained, it's less energy-intensive than creating a piece of art would be in photoshop, since it can be run locally on an average desktop.

It's also not plagiarism or stealing. AI isn't a collage machine (although if it was, that would be fine also. Collage and remixing are both valid and long-beloved art forms). AI is fully capable of creating new art that is not a copy or remix of any preexisting art.

Additionally, there's no real, quantifiable way to differentiate how AI models "learn" from how humans "learn". Both create models of how the world works via observation. Indeed, AI models are generally around 4 GB, but are trained on billions of images. It is quite literally not possible for them to be storing even a tiny fraction of the images used to train them. The images just aren't there! They can't be "stolen" because they are not present in the model. All models have is statistical information "learned" from the billions of images in their training set. There's no real way to qualify how this is different from human artists without falling back on woo about the human soul or whatever.

Finally, the idea that they somehow lack "soul" or that they don't have any real "meaning" and are somehow lesser is just patently ridiculous. Art is art because it has meaning to the viewer. Or because the creator found it meaningful. Or because it serves a purpose in visually communicating something. "AI art" can do any of these things just as easily as "human art". But also, that distinction doesn't even really make sense. We don't deride photoshop-made art as somehow inherently lesser than art made with paint (Anymore! People used to, in exactly the way people deride "AI art" now. For exactly the same reason!). AI art can be used to create art in any way that other mediums can, with its own advantages, skills, and limitations, and treating it as somehow lesser because it makes it easier for some people to create art is just pure protestant-work-ethic nonsense.

Anyways, just wanted to get your take on this, because in general you seem pretty cool and reasonable.

I think my main issue is overuse. I first read about ai art when one of the really early ones won an art contest, and I remember thinking “oh, that’s a cool little trick.” But now it’s everywhere. It’s being used for advertisements, people are claiming it as their own, and even saying things like “you could’ve made that faster with ai.”

These are flawed mechanisms; humanity is also flawed, of course, but we’re us, so that’s generally considered worth overlooking. Ai lacks that bias, and has different flaws, and so is given a harsher lens. One issue, for example, is bias: if you tell it to do something, it throws together random things from what it has available. Since there’s more of some things, it might ignore what you said. A famous recent example was completely full wine glasses, and some time before that I remember seeing someone experiment with having it depict traditional African medicine being used on white children. Surprise surprise, it didn’t provide pictures matching the description.

I have other things but can’t figure out how to phrase them right now. I apologize, and hope what’s here was decently comprehensible. I hope you have a nice day.

7 notes

Text

Chris L’Etoile’s original dialogue about the Reaper embryo & the person who was (probably) behind the decision with Legion’s N7 armor

(EDIT: Okay, so Idk why Tumblr displays the headline twice on my end, but if it does for you, please ignore it.)

[Mass Effect 2 spoilers!]

FRIENDS! MASS EFFECT FANS! PEOPLE!

You wouldn't believe it, but I think I've found the lines Chris L'Etoile originally wrote for EDI about the human Reaper!

Chris L’Etoile’s original concept for the Reapers

For those who don't know (or need a refresher), Chris L'Etoile - who was something like the “loremaster” of the ME series, having written the entire Codex in ME1 by himself - originally had a concept for the Reapers that was slightly different from ME2's canon. In the finished game, when you find the human Reaper in the Collector Base, you'll get the following dialogue by investigating:

Shepard: Reapers are machines -- why do they need humans at all? EDI: Incorrect. Reapers are sapient constructs. A hybrid of organic and inorganic material. The exact construction methods are unclear, but it seems probable that the Reapers absorb the essence of a species; utilizing it in their reproduction process.

Meanwhile, Chris L'Etoile had this to say about EDI's dialogue (sourced from here):

I had written harder science into EDI's dialogue there. The Reapers were using nanotech disassemblers to perform "destructive analysis" on humans, with the intent of learning how to build a Reaper body that could upload their minds intact. Once this was complete, humans throughout the galaxy would be rounded up to have their personalities and memories forcibly uploaded into the Reaper's memory banks. (You can still hear some suggestions of this in the background chatter during Legion's acquisition mission, which I wrote.) There was nothing about Reapers being techno-organic or partly built out of human corpses -- they were pure tech. It seems all that was cut out or rewritten after I left. What can ya do. /shrug

Well, guess what: These deleted lines are actually in the game files!

Credit goes to Emily for uploading them to YouTube; the discussion about the human Reaper starts at 1:02:

Shepard: EDI, did you get that? EDI: Yes, Shepard. This explains why the captive humans were rendered into their base components -- destructive analysis. They were dissected down to the atomic level. That data could be stored on an AI's neural network. The knowledge and essence of billions of individuals, compiled into a single synthetic identity. Shepard: This isn’t gonna stop with the colonies, is it? EDI: The colonists were probably a test sample. The ultimate goal would be to upload all humans into this Reaper mind. The Collectors would harvest every human settlement across the galaxy. The obvious final goal would be Earth.

In all honesty, I think L’Etoile’s original concept is a lot cooler and makes a lot more sense than what ME2 canon went with. The only direct reference to it left in the final game is an insanely obscure comment by Legion, which you can only get if you picked the Renegade option upon the conclusion of their final Normandy conversation and completed the Suicide Mission afterwards (read: you have to get your entire crew killed if you want to see it).

I used to believe the pertaining dialogue he had written for EDI was lost forever, and I was all the more stoked when I discovered it on YouTube (or at least, I strongly believe this is L’Etoile’s original dialogue).

Interestingly, the deleted lines also feature an investigate option on why they’re targeting humans in particular:

Shepard: The galaxy has so many other species… Why are they using humans? EDI: Given the Collectors’ history, it is likely they tested other species, and discarded them as unsuitable. Human genetics are uniquely diverse.

The diversity of human genetics is remarked on quite a few times during the course of ME2 (something which my friend, @dragonflight203, once called “ME2’s patented ‘humanity is special’ moments”), so this is most likely what all this build-up was supposed to be for.

Tbh, I’m still not the biggest fan of the concept myself (if simply because I’m averse to humans being the “supreme species”); while it would make sense for some species that had to go through a genetic bottleneck during their history (Krogan, Quarians, Drell), what exactly is it that makes Asari, Salarians*, and Turians less genetically diverse than humans? Also, how much are genetics even going to factor in if it’s their knowledge/experiences that they want to upload? (Now that I think about it, it would’ve been interesting if the Reapers targeted humanity because they have the most diverse opinions; that would’ve lined up nicely with the Geth desiring to have as many perspectives in their Consensus as possible.)

*EDIT: I just remembered that Salarian males - who compose about 90% of the species - hatch from unfertilized eggs, so they're presumably (half) clones of their mother. That would be a valid explanation why Salarians are less genetically diverse, at least.

Nevertheless, it would’ve been nice if all this “humanity is special” stuff actually led somewhere, since as it is, it’s more or less left hanging.

Anyway, most of the squadmates also have an additional remark about how the Reapers might be targeting humanity because Shepard defeated one of them, wanting to utilize this prowess for themselves. (Compare this to Legion’s comment “Your code is superior.”) I gotta agree with the commentator here who said that it would’ve been interesting if they kept these lines, since it would’ve added a layer of guilt to Shepard’s character.

Regardless of which theory is true, I do think it would’ve done them good to go a little more in-depth with the explanation why the Reapers chose humanity, of all races.

The identity of “Higher Paid” who insisted on Legion’s obsession with Shepard

Coincidentally, I may have solved yet another long-term mystery of ME2: In the same thread I linked above, you can find another comment by Chris L’Etoile, who also was the writer of Legion, on the decision to include a piece of Shepard’s N7 armor in their design:

The truth is that the armor was a decision imposed on me. The concept artists decided to put a hole in the geth. Then, in a moment of whimsy, they spackled a bit Shep's armor over it. Someone who got paid a lot more money than me decided that was really cool and insisted on the hole and the N7 armor. So I said, okay, Legion gets taken down when you meet it, so it can get the hole then, and weld on a piece of Shep's armor when it reactivates to represent its integration with Normandy's crew (when integrating aboard a new geth ship, it would swap memories and runtimes, not physical hardware). But Higher Paid decided that it would be cooler if Legion were obsessed with Shepard, and stalking him. That didn't make any sense to me -- to be obsessed, you have to have emotions. The geth's whole schtick is -- to paraphrase Legion -- "We do not experience (emotions), but we understand how (they) affect you." All I could do was downplay the required "obsession" as much as I could.

That paraphrased quote by Legion is actually a nice cue: I suppose the sentence L’Etoile is paraphrasing here is “We do not experience fear, but we understand how it affects you.”, which I’ve seen quoted by various people. However, the weird thing was that while it sounds like something Legion would say, I couldn’t remember them saying it on any occasion in-game - and I’ve practically seen every single Legion line there is.

So I googled the quote and stumbled upon an old thread from before ME2 came out. In the discussion, a trailer for ME2 - called the “Enemies” trailer - is referenced, and since it has led some users to conclusions that clearly aren’t canon (most notably, that Legion belongs to a rogue faction of Geth that do not share the same beliefs as the “core group”, when it’s actually the other way around with Legion belonging to the core group and the Heretics being the rogue faction), I was naturally curious about the contents of this trailer.

I managed to find said trailer on YouTube, which features commentary by game director Casey Hudson, lead designer Preston Watamaniuk, and lead writer Mac Walters.

The part where they talk about Legion starts at 2:57; it’s interesting that Walters describes Legion as a “natural evolution of the Geth” and says that they have broken beyond the constraints of their group consciousness by themselves, when Legion was actually a specifically designed platform.

The most notable thing, however, is what Hudson says afterwards (at 3:17):

Legion is stalking you, he’s obsessed with you, he’s incorporated a part of your armor into his own. You need to track him down and find out why he’s hunting you.

Given that the wording is almost identical to L’Etoile’s comment and with how much confidence and enthusiasm Hudson talks about it, I’m 99% sure the thing with the armor was his idea.

Also, just what the fuck do you mean by “you need to track Legion down and find out why they’re hunting you”? You never actively go after Legion; Shepard just sort of stumbles upon them during the Derelict Reaper mission (footage from which is actually featured in the trailer) - if anything, the energy of that meeting is more like “oh, why, hello there”.

Legion doesn’t actively hunt Shepard during the course of the game, either; they had abandoned their original mission of locating Shepard after failing to find them at the Normandy wreck site. Furthermore, the significance of Legion’s reason for tracking Shepard is vastly overstated - it only gets mentioned briefly in one single conversation on the Normandy (which, btw, is totally optional).

I seriously have no idea if this is just exaggerated advertising or if they actually wanted to do something completely different with Legion’s character - then again, that trailer is from November 5th 2009, and Mass Effect 2 was released on January 26th 2010, so it’s unlikely they were doing anything other than polishing at this point. (By the looks of it, the story/missions were largely finished.) If you didn't know any better, you'd almost get the impression that neither Walters nor Hudson even read any of the dialogue L’Etoile had written for Legion.

That being said, I don’t think the idea with Legion already having the N7 armor before meeting Shepard is all that bad by itself. If I had been the one it was pitched to, I probably would’ve asked the counter question: “Yeah, alright, but how would Shepard be convinced that this Geth - of all Geth - is non-hostile towards them? What reason would Shepard have to trust a Geth after ME1?” (Shepard actually points out the piece of N7 armor as an argument to reactivate Legion.)

Granted, I don’t know what the context of Legion’s recruitment mission would’ve been (how they were deactivated, if it was from enemy fire or one of Shepard’s squadmates shooting them in a panic; what Legion did before, if they helped Shepard out in some way, etc.) - the point is, I think it would’ve done the parties good if they listened to each other’s opinions and had an open discussion about how/if they could make this work instead of everyone becoming set on their own vision (though L’Etoile, to his credit, did try to accommodate the concept).

I know a lot of people like to read Legion taking Shepard’s armor as “oh, Legion is in love with Shepard” or “oh, Legion is developing emotions”, but personally, I feel that’s a very oversimplified interpretation. Humans tend to judge everything based on their own perspective - there is nothing wrong with that by itself, because, well, as a human, you naturally judge everything based on your own perspective. It doesn’t give you a very accurate representation of another species’ life experience though, much less a synthetic one’s.

I’ve mentioned my own interpretation here and there in other posts, but personally, I believe Legion took Shepard’s armor because they wished for Shepard (or at least their skill and knowledge) to become part of their Consensus. (I’m sort of leaning on L’Etoile’s idea of “symbolic exchange” here.) Naturally, that’s impossible, but I like to think when Legion couldn’t find Shepard, they took their armor as a symbol of wanting to emulate their skill.

The Geth’s entire existence is centered around their Consensus, so if the Geth wish for you to join their Consensus, that’s the highest compliment they can possibly give, akin to a sign of very deep respect and admiration. Alternatively, since linking minds is the closest thing to intimacy for the Geth, you can also read it like that, if you are so inclined - that still wouldn’t make it romantic or sexual love, though. (You have to keep in mind that Geth don’t really have different “levels” of relationships; the only categories that they have are “part of Consensus” and “not part of Consensus”.)

Either way, I appreciate that L’Etoile wrote it in a way that leaves it open to interpretation by fans. I think he really did the best with what he had to work with, and personally, the thing with Legion’s N7 armor doesn’t bother me.

What does bother me, on the other hand, is how the trailer - very intentionally - puts Legion’s lines in a context that is quite misleading, to say the least. The way Legion says “We do not experience fear, but we understand how it affects you” right before shooting in Shepard’s direction makes it appear as if they were trying to intimidate and/or threaten Shepard, and the trailer’s title “Enemies” doesn’t really do anything to help that.

However, I suppose that explains why I’ve seen the above line used in the context of Legion trying to psychologically intimidate their adversaries (which, IMO, doesn’t feel like a thing Legion would do). Generally, I get the feeling a considerable part of the BioWare staff was really sold on the idea of the Geth being the “creepy robots” (this comes from reading through some of the design documents on the Geth from ME1).

Also, since “Organics do not choose to fear us. It is a function of our hardware.” was used in a completely different context in-game (in the follow-up convo with Legion if you pick Tali during the loyalty confrontation; check this video at 5:04), we can assume that the same would’ve been true for the “We do not experience fear” line if it actually made it into the game. Many people have remarked on the line being “badass”, but really, it only sounds badass because it was staged that way in the trailer.

Suppose it was used in the final game and suppose Legion actually would’ve gotten their own recruitment mission - perhaps with one of Shepard’s squadmates shooting them in fear - it might also have been used in a context like this:

Shepard: Also… Sorry for one of my crew putting a hole through you earlier. Legion: It was a pre-programmed reaction. We frightened them. We do not experience fear, but we understand how it affects you.

Proof that context really is everything.

#mass effect#mass effect 2#mass effect reapers#EDI#mass effect legion#geth#chris l'etoile#casey hudson#bioware#video game writing#that also explains why the in-game “Organics do not choose to fear us” line sounds a little “out of place”#(like the intonation is completely different from the previous line so they probably just added it in as is)#side note: “pre-programmed reaction” is actually Legion's term for “natural reflex” (or at least I imagine it that way)

19 notes

Note

As someone who follows you and finds you generally nice and reasonable, I thought I should ask about your opinions on AI, since they seem to be about the same level of misinformed as is common on tumblr (through no fault of anyone in particular).

AI is not particularly harmful to the environment; even training AI uses about as much energy as other popular online things, like online gaming and social media. Unless you think online gaming is a scourge on humanity, AI isn't worth getting upset about in that respect. And once it's trained, it's less energy intensive than creating a piece of art would be in Photoshop, since it can be run locally on an average desktop.

It's also not plagiarism or stealing. AI isn't a collage machine (although if it was, that would be fine also. Collage and remixing are both valid and long-beloved art forms). AI is fully capable of creating new art that is not a copy or remix of any preexisting art.

Additionally, there's no real, quantifiable way to differentiate how AI models "learn" from how humans "learn". Both create models of how the world works via observation. AI models are generally around 4 GB, but are trained on billions of images. It is quite literally not possible for them to be storing even a tiny fraction of the images used to train them. The images just aren't there! They can't be "stolen" because they are not present in the model. All models have is statistical information "learned" from those billions of images from their training set. There's no real way to qualify how this is different from human artists without falling back on woo about the human soul or whatever.

Finally, the idea that they somehow lack "soul" or that they don't have any real "meaning" and are somehow lesser is just patently ridiculous. Art is art because it has meaning to the viewer. Or because the creator found it meaningful. Or because it serves a purpose in visually communicating something. "AI art" can do any of these things just as easily as "human art". The amount of choice and the depth of design and intention can be just as deep with “AI art” as with “human art”, although, like anything else, the vast majority of art from either category is going to be relatively shallow. Which is fine! Not every piece of art needs to be a masterpiece. When a person spends 10 minutes in Photoshop to make a dumb piece of art for fun, nobody cares and we all agree it's funny or whatever. But if someone uses AI to do the same thing a bit more quickly and with less manual effort, suddenly it's unforgivable.

But also, that distinction doesn't even really make sense. We don't deride Photoshop-made art as somehow inherently lesser than art made with paint (Anymore! People used to, in exactly the way people deride "AI art" now. For exactly the same reason!). AI art can be used to create art in any way that other mediums can, with its own advantages, skills, and limitations, and treating it as somehow lesser because it makes it easier for some people to create art is just pure Protestant-work-ethic nonsense.

This isn’t even getting into the weeds of the disability discourse here. But like, as someone who is heavily disabled and cannot make art in the traditional way, AI is amazing for me. It's just very obviously an accessibility tool. No, I shouldn't have to learn how to paint with my feet or whatever like so many people on tumblr like to point to. I'm not being lazy or dealing with internalized helplessness. I've just found a morally neutral tool which allows me to create art again after disability removed my ability to do so for years, and I don't think it's fair to call me a disgrace to artists or a traitor to the community for using it.

Anyways, just wanted to get your take on this, because in general you seem pretty cool and reasonable.

I'm not reading all that lol

6 notes

Text

Generative "AI", American copyright, and fanworks

Many folks have questions about what generative "AI" tools mean for copyright - and fanworks like fanfic and fanart. I've compiled here a list of basic reading on the status of fanworks in the copyright world and what AI is, as well as an evolving list of legal coverage of machine-made works.

The short and dirty:

Fanworks are not legally derivative; they are transformative, which you might recognize from the name of AO3's parent org, the Organization for Transformative Works. Generative "AI" content is derivative, which is not legally allowed without proper licensing. Fanfiction and AI output aren't the same thing, but corporations would like you to think so. They'd like you to think anything if it meant they could once again gain the momentum to change copyright law in their favor, whether that meant scrapping it or expanding it to their tastes. The articles I include can hopefully help elaborate.

The basics of fanwork and copyright law:

Rebecca Tushnet, Legal Fictions: Copyright, Fan Fiction, and a New Common Law, 17 Loy. L.A. Ent. L. Rev. 651 (1997). https://digitalcommons.lmu.edu/elr/vol17/iss3/8 (full text, pdf)

Fanworks rely on fulfilling the transformative portion of the fair use test in copyright law. They also shouldn't make money, in order to not compete with the original work.

Can generative AI output or training material be fair use? Overview of case law as we wait for the outcome of multiple lawsuits addressing this question. (Sep 22 2023)

What is the "AI" we keep hearing about anyway?

Statistics, machine learning, and artificial intelligence are the same thing - but "AI" rakes in more cash and acclaim

Generative AI is derivative and can only create what it has been fed, which perpetuates social ills but also illustrates what it really is - not human "intelligence", but a statistical machine

For example, fanfiction generated by a number of the free big name tools somehow manages to be straight and confusingly narrated

Why are corporations so invested in generative AI? AI in general?

An interview with an AI engineer who uses AI to generate endless patent applications - to profit from ideas before they are even invented

If corporations all use the same AI to fix housing prices as a cartel, they want the feds to agree it's the machine's fault, not theirs

Even if generative AI improves to the point that it is totally unbiased and can write just as well as a human, it is still a machine. A tool, not a person. Corporations will try to scapegoat it by confusing the conversation.

Recent coverage on AI and copyright:

Federal court in DC strikes down machine ownership: copyright protection is only for humans (Aug 18 2023)

Generative AI use core issue in Writers Guild strike and eventual studio agreement (Sep 27 2023)

Thomson Reuters suit against AI company that trained on TR's content goes to jury trial (Sep 26 2023)

Official links

US Copyright Office homepage for their coverage on AI investigations (continuously updated)

Congressional research report on the issue of AI use and copyright law (Sep 29 2023)

41 notes

Text

Yeah, search engines have sucked shit for a while and are just getting bloated and worse. It used to be a simple program that read your search words, looked for modifiers (+, -, ""), did an efficient search of archived web pages, then returned results. This is why Google got popular, why it became so big. It did the job exactly how you asked it to and returned results ranked by how well it judged they matched your search parameters, without unnecessary garbage or a long delay. Google's caching of websites is actually why you used to be able to use Google to view offline copies of webpages in your search results; it was just part of how Google searches anyway, so might as well share that as a feature for people who want it, right?
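To make the "old way" a bit more concrete, here is a toy sketch of a keyword search that honours the three modifiers mentioned: a quoted phrase must appear verbatim, `+term` must appear, and `-term` must not. It is a hypothetical illustration over a small in-memory dict of page texts, not how Google actually implemented search; real engines use inverted indexes and far more elaborate ranking, and the function names here are made up.

```python
import re


def parse_query(query):
    """Split a query into quoted phrases, +required, -excluded, and plain terms."""
    phrases = [p.lower() for p in re.findall(r'"([^"]+)"', query)]
    rest = re.sub(r'"[^"]+"', " ", query).lower()
    required, excluded, plain = [], [], []
    for token in rest.split():
        if token.startswith("+") and len(token) > 1:
            required.append(token[1:])
        elif token.startswith("-") and len(token) > 1:
            excluded.append(token[1:])
        else:
            plain.append(token)
    return phrases, required, excluded, plain


def search(pages, query):
    """Return page names that satisfy every modifier, best matches first."""
    phrases, required, excluded, plain = parse_query(query)
    results = []
    for name, text in pages.items():
        lowered = text.lower()
        words = set(re.findall(r"\w+", lowered))
        # Hard filters: exact phrases (substring match), required words, excluded words.
        if any(p not in lowered for p in phrases):
            continue
        if any(w not in words for w in required):
            continue
        if any(w in words for w in excluded):
            continue
        # Score: each satisfied phrase/required word plus each plain word present.
        score = len(phrases) + len(required) + sum(w in words for w in plain)
        if score > 0:
            results.append((score, name))
    # Highest score first; ties broken alphabetically so output is deterministic.
    results.sort(key=lambda r: (-r[0], r[1]))
    return [name for _, name in results]


# Hypothetical example pages.
PAGES = {
    "a": "Homer Simpson eats donuts in Springfield",
    "b": "Springfield is a city in Illinois",
    "c": "The Simpson family lives in Springfield",
}
print(search(PAGES, '"homer simpson" springfield'))  # ['a']
```

The point of the sketch is that every modifier is a hard, predictable filter: the user, not a hidden model, decides what gets dropped.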

But money talks and features disappeared, hidden "features" got integrated, and nowadays the only modifier (+, -, "", etc.) that still works properly is quotes. Because along the way, brands started paying off Google to secretly add their sponsored words to certain searches based on what you're looking for, and various parties started paying off Google more to remove and blacklist certain terms in parts of the world to censor what people see without telling them.

There was a post on here going around a few years ago where an AI image generator was asked to draw Homer Simpson, but got racebent Homer instead. Everyone was scratching their heads at why this happened. It turned out the AI developers were told to make the results less whitewashed, but instead of retraining the model to counter its bias toward white faces when no other descriptors were given, they simply took the lazy route and injected words like "diverse" and "poc" and so on into the prompt without the user's knowledge, which is why the results were so bizarre, with named characters and people generated being put through a bigot's idea of a "woke" filter.

Google, and most other search engines today honestly, does the same thing, just with paid sponsors and censors instead of a half-assed attempt at seeming more diverse.

But in more recent years, as search engines have gone on guzzling the cocks of big businesses and bending to their every demand so long as it means more money, they have also stopped being purely code-driven and become learning-algorithm-based. Whether this improves anything for the developers I have no clue, but what it means is that instead of a simple machine reading inputs and giving simple outputs, so many hands are in the pot of search engine optimization that your query is really just being read by a computer taught to comprehend text, modified with a bunch of parameters, passed through a neural network black box, then spat out the other side. This is why searching anything is so shitty now, and why we have so little control over the output of any search. Yes, even DuckDuckGo doesn't give you the control you had on Google 20 years ago.

I just. I wish a new search engine company would start up and do things the old way again. Back in 2010 you had so much control over your search results if you knew all the tricks. But realistically Google probably has a patent on their old way of doing things, so that seems dismal.

68K notes

Text

Dayanand Sagar College of Engineering – A Smart Choice for Future Engineers

Are you dreaming of a successful engineering career? Choosing the right college is the first step. One of the most trusted names in India is Dayanand Sagar College of Engineering. Located in Bangalore – India’s Silicon Valley – this college is a perfect place to learn, grow, and build a solid future in engineering.

Whether you're planning for admission, exploring campus life, or understanding placements, this blog gives you everything you need to know about Dayanand Sagar College of Engineering in a simple, easy-to-understand way.

About Dayanand Sagar College of Engineering

Dayanand Sagar College of Engineering, often called DSCE, is one of the top engineering colleges in Karnataka. It was established in 1979 and is run by the Dayananda Sagar Institutions (DSI). The college is affiliated to Visvesvaraya Technological University (VTU) and is approved by AICTE and accredited by NAAC with an 'A' grade.

The college offers high-quality technical education, modern infrastructure, and an industry-driven curriculum that helps students build strong careers in engineering and technology.

Courses Offered

Dayanand Sagar College of Engineering offers a wide range of undergraduate, postgraduate, and Ph.D. programs. Here are the most popular B.Tech specializations:

Computer Science and Engineering (CSE)

Artificial Intelligence and Machine Learning (AI & ML)

Information Science and Engineering

Electronics and Communication Engineering

Mechanical Engineering

Civil Engineering

Electrical and Electronics Engineering

Postgraduate programs include M.Tech in various specializations and MBA. Ph.D. programs are available in selected disciplines under VTU.

Location Advantage

Bangalore is home to many global IT companies and startups. Studying at Dayanand Sagar College of Engineering means you're in the heart of India’s tech hub. This location helps students:

Get industry exposure through internships

Attend tech events and conferences

Access job opportunities with top companies

The college campus is spread over 29 acres with modern labs, innovation centers, libraries, hostels, and recreational spaces.

Admission Process

Admission to the B.Tech program at Dayanand Sagar College of Engineering is based on the following entrance exams:

COMEDK UGET

KCET (Karnataka Common Entrance Test)

Management Quota (Direct Admission with eligibility)

Eligibility for B.Tech:

10+2 with Physics, Mathematics, and Chemistry/Computer Science

Minimum 45% aggregate marks (40% for reserved categories)

A valid score in COMEDK/KCET or based on management quota policies

Admission counseling is conducted by KEA for KCET candidates and by COMEDK for COMEDK UGET candidates.

Faculty and Learning Environment

The faculty at Dayanand Sagar College of Engineering includes Ph.D. holders, researchers, and industry professionals. Teaching here focuses on:

Project-based learning

Industry case studies

Real-world problem solving

Hands-on lab sessions

Research and innovation

The college also hosts workshops, hackathons, guest lectures, and international seminars to enhance learning.

Innovation and Research

DSCE is not just about textbooks. It is known for its focus on research and innovation. The Research & Development Cell supports projects in areas like:

Artificial Intelligence

Robotics

Cybersecurity

Green Technology

Smart Cities

Students are encouraged to publish research papers, develop patents, and join innovation competitions like Smart India Hackathon.

Rankings and Approvals

Dayanand Sagar College of Engineering is consistently ranked among the top private engineering colleges in India.

Ranked among Top 100 Engineering Colleges by NIRF

Accredited by NBA for multiple departments

Recognized as a Center of Excellence by VTU

Partnership with IBM, Microsoft, Infosys, Bosch, and more

These rankings reflect the college’s strong academic framework and placement support.

Placement at Dayanand Sagar College of Engineering

The placement record of DSCE is impressive. Each year, over 200 companies visit the campus. Top recruiters include:

Infosys

Wipro

TCS

Capgemini

Accenture

Bosch

Deloitte

Amazon (off-campus)

Highlights:

Highest package: ₹20 LPA (CSE student)

Average package: ₹4.5 – ₹6 LPA

Placement rate: Over 90% for CSE/ISE/AI branches

The Training and Placement Cell also offers soft skill training, coding bootcamps, resume sessions, and interview prep.

Hostel and Campus Life

The campus is vibrant and student-friendly. Hostel facilities are available for both boys and girls with:

AC/Non-AC rooms

Mess and cafeteria

Gym, sports ground, medical center

24/7 Wi-Fi and security

Students enjoy tech fests, cultural events, sports meets, and entrepreneurship programs that help in overall personality development.

Global Exposure

Through collaborations with foreign universities and exchange programs, students at Dayanand Sagar College of Engineering can:

Participate in global internships

Apply for semester abroad programs

Attend international seminars

Build global networks

These programs are beneficial for students planning to study or work abroad.

Final Verdict – Is Dayanand Sagar College of Engineering Worth It?

Yes! If you're looking for an engineering college that gives you:

✅ Strong academics

✅ Placement opportunities

✅ Industry exposure

✅ Affordable fees (compared to private universities)

✅ A vibrant student life

Dayanand Sagar College of Engineering is definitely a great option.

Whether you want to pursue software engineering, AI, civil engineering, or electronics – DSCE offers the perfect foundation for your dreams.

If you need further information contact:

523, 5th Floor, Wave Silver Tower, Sec-18 Noida, UP-201301

+91 9711016766

0 notes

Text

AI Patent Filing in India: Safeguarding Technological Advancements

With India's growing focus on artificial intelligence, AI patent filing in India is becoming increasingly important. From machine learning models to intelligent automation systems, securing IP rights ensures protection and commercialization of core technologies. Navigating the Indian patent system requires both technical and legal expertise; firms like Einfolge assist innovators in drafting and filing strong AI patents aligned with national and global standards.

#AI Patent application#AI Patent Filing#AI Patent#AI patent in india#AI patent filing in india#einfolge

0 notes

Text

AI Patent Innovations Span Cybersecurity to Biotech

It may seem that generative AI tools, such as AI chatbots, image and video generators, and coding agents recently appeared out of nowhere and astonished everyone with their highly advanced capabilities. However, the underlying technologies of these AI applications, such as various data classification and regression algorithms, artificial neural networks, machine learning models, and natural…

0 notes

Text

Pinecone founder Edo Liberty explores the real missing link in enterprise AI at TechCrunch Disrupt 2025

At TechCrunch Disrupt 2025, the AI conversation goes deeper than just the latest models. On one of the AI Stages, Edo Liberty, founder and CEO of Pinecone, will deliver a session that challenges one of the most persistent assumptions in the field — that raw intelligence alone is enough. With 10,000+ startup and VC leaders expected in San Francisco from October 27–29, this fireside chat and presentation is one of the must-attend moments for anyone building AI systems that actually work in the real world.

Intelligence is only half the equation

For AI to be truly useful to businesses, it needs more than language fluency or prediction accuracy. It needs knowledge. Proprietary data, domain-specific insights, real-time information retrieval: these are the ingredients that power task completion, accurate answers, and enterprise value. Liberty will break down the critical difference between intelligence and knowledge, and explain why delivering both is the key to unlocking AI’s true impact.

As the founder of Pinecone, Liberty is leading one of the most important infrastructure companies in the AI ecosystem. Pinecone’s vector database helps organizations build high-performance AI applications at scale, with reliable retrieval and memory systems that enable real-time use of massive knowledge bases.
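The core operation behind a vector database's "retrieval" is finding the stored vectors most similar to a query vector. The sketch below does this by brute force with cosine similarity over a tiny hypothetical index; it does not use or imitate Pinecone's actual API, and the 3-dimensional vectors are hand-made stand-ins for real embedding-model output.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(index, query_vector, k=2):
    """Brute-force nearest-neighbour search: score every stored vector, keep the best k."""
    scored = [(cosine_similarity(vec, query_vector), doc_id) for doc_id, vec in index.items()]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical 3-dimensional "embeddings" standing in for real model output.
INDEX = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "return-window": [0.8, 0.2, 0.1],
}
print(top_k(INDEX, [1.0, 0.0, 0.0]))  # ['refund-policy', 'return-window']
```

In a retrieval setup, the documents returned here would be handed to a language model as context, which is one way of supplying the "knowledge" half of the equation. Production vector databases typically replace this linear scan with approximate nearest-neighbour indexes so the lookup stays fast over very large collections.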

From Amazon AI to academia to building infrastructure for the next era

Before launching Pinecone, Liberty served as Director of Research at AWS and led Amazon AI Labs, where he worked on key services like SageMaker and OpenSearch. He also ran Yahoo’s research lab in New York, taught at Princeton and Tel Aviv University, and authored more than 75 papers and patents on machine learning, data mining, and streaming algorithms.

At Disrupt 2025, Liberty brings all of that experience to bear in a session that will speak to technical leaders, AI founders, enterprise builders, and anyone trying to bridge the gap between promise and performance in artificial intelligence.

Catch this conversation on one of the two AI Stages at TechCrunch Disrupt 2025, happening October 27–29 at Moscone West in San Francisco. The exact session timing will be announced on the Disrupt agenda page, so be sure to check back often for updates. Register now to join more than 10,000 startup and VC leaders and save up to $675 before prices increase.

0 notes

Text

The fusion of artificial intelligence and human-like awareness has long captured the imagination of scientists, futurists, and inventors. In recent years, advancements in machine learning, neural networks, and cognitive architectures have paved the way for a new frontier — AI and consciousness patents. These legal filings reflect a growing focus on building AI systems that don’t just process data but also simulate aspects of human consciousness, such as perception, reasoning, memory, and even emotional intelligence.

0 notes

Text

Using Blockchain To Track AI Innovation

Coinbase has found a way to regulate AI development using the technology behind cryptocurrency.

The company is seeking to patent a system for “tracking machine learning data provenance via a blockchain,” essentially recording all data that goes in and out of an AI model throughout its lifecycle.

Coinbase’s tech takes note of any data that contributed to a model, including training information and user input prompts and their corresponding outputs. It does so using a “middleware component,” or a system in place between the model and the user, which automatically logs every interaction on the blockchain.

Using blockchain for this purpose provides an immutable and transparent record of who has contributed what to a model, helping to establish ownership and govern usage of AI. The decentralized nature of blockchain also prevents any single party from fraudulently claiming ownership of the model. This is particularly useful for open source models that often involve many contributors.
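The tamper-evidence idea can be sketched with a simple hash chain: each log entry stores the SHA-256 hash of the previous entry, so editing any earlier record breaks every hash after it. This is a minimal single-process sketch under that assumption only; it has no network, consensus, or decentralization, and `ProvenanceLog` and `with_logging` are hypothetical names, not taken from Coinbase's filing.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64


class ProvenanceLog:
    """Append-only log in which every entry commits to the hash of the entry before it."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(contributor, kind, payload, prev_hash):
        body = json.dumps(
            {"contributor": contributor, "kind": kind, "payload": payload, "prev_hash": prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, contributor, kind, payload):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {"contributor": contributor, "kind": kind, "payload": payload, "prev_hash": prev_hash}
        entry["hash"] = self._digest(contributor, kind, payload, prev_hash)
        self.entries.append(entry)
        return entry["hash"]

    def verify(self):
        """Recompute every hash; editing any earlier entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for e in self.entries:
            expected = self._digest(e["contributor"], e["kind"], e["payload"], prev_hash)
            if e["prev_hash"] != prev_hash or e["hash"] != expected:
                return False
            prev_hash = e["hash"]
        return True


def with_logging(model_fn, log, user):
    """Hypothetical middleware: sits between user and model and logs every prompt/output pair."""
    def wrapped(prompt):
        output = model_fn(prompt)
        log.append(user, "prompt", prompt)
        log.append("model", "output", output)
        return output
    return wrapped


log = ProvenanceLog()
log.append("alice", "training_data", "dataset-v1")
model = with_logging(lambda p: p.upper(), log, user="bob")
print(model("hello"), log.verify())  # HELLO True
```

A real blockchain adds what this sketch lacks: many independent nodes holding copies of the chain and agreeing on its contents, which is where the "no single party" property comes from.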

“One or more individuals, such as creators, developers, data scientists, engineers, or other stakeholders may contribute to the development of a machine learning model,” Coinbase said in the filing. “However, contributions to the machine learning model may not be captured, and, in some cases, the output of the model may not be tied to the contributors.”

https://www.thedailyupside.com/technology/blockchain/coinbase-develops-tool-to-track-ai-contributions-with-blockchain/

0 notes

Text

[IOTE2025 Shenzhen Exhibitor] Wing Singa, a professional automation equipment manufacturer, will appear at the 24th IOTE 2025 Shenzhen Station

With the rapid development of artificial intelligence (AI) and Internet of Things (IoT) technologies, their integration is becoming increasingly close and is profoundly influencing technological innovation across various industries. AGIC + IOTE 2025, the 24th International Internet of Things Exhibition - Shenzhen Station, will take place from August 27 to 29, 2025, at the Shenzhen World Exhibition & Convention Center. IOTE 2025 is set to be an unprecedented professional exhibition event in the field of AI and IoT. The exhibition scale will expand to 80,000 square meters, focusing on the cutting-edge progress and practical applications of "AI + IoT" technology. It will also feature in-depth discussions on how these technologies will reshape our future world. It is expected that over 1,000 industry pioneers will participate to showcase their innovative achievements in areas such as smart city construction, Industry 4.0, smart home life, smart logistics systems, smart devices, and digital ecosystem solutions.

Shenzhen Wing Singa Automation Equipment LTD will make a grand appearance at this exhibition (booth number 9D56). Here is a look at what they will bring to the exhibition.

Exhibitor Introduction

Shenzhen Wing Singa Automation Equipment LTD

Booth number: 9D56

August 27-29, 2025

Shenzhen World Exhibition & Convention Center (Bao'an New Hall)

Company Profile

"Wing Singa" is a high-quality manufacturer specializing in the manufacture of trademark cutting and folding equipment and RFID label labeling, laminating and testing equipment. Our products fully cover the complete processes of automatic slicing, folding, labeling, multi-layer laminating, DR/EAS/RFID label detection, coding, visual inspection, etc. of various trademarks. They are highly automated, precise, reliable, advanced and efficient. We attach great importance to innovation, have an experienced R&D team, have a large number of patents, and all our products have passed ISO and CE certification. We have always adhered to the management concept of "customer-oriented, customer first". Since our establishment, we have been providing customers with high-quality and professional services, and have been recognized and praised by customers for a long time.

Product Recommendation

1.WS-168

Product Introduction: This model WS-168 is a special EAS/DR/RFID acoustic magnetic anti-theft label machine. It adopts a split design and can be separated to do upper and lower double folds and then fold the left and right sides. This machine is designed with a variety of trademark cutting and folding operations, and it is simple and convenient to adjust each part. Folding type: chip folding; cutting type: ultrasonic cutting.

2.WS-586+RFID

Product Introduction: In addition to the trademark cutting and folding function of WS-586, an RFID label detection and coding system has been added to read, detect or encode RFID labels and remove bad labels. This machine is easy to operate, has excellent performance, has a patented design, is efficient and stable, and is easy to maintain. It is equipped with the RFID trademark detection and coding system independently developed by Yongshengjia, which is stable, reliable and customizable to adapt to various production environments.

3.WS-S680+RFID+VIS

Product Introduction: WS-S680 label roll labeling machine is suitable for RFID label roll labeling. It adopts horizontal design, precise labeling, small footprint, efficient operation, easy operation, equipped with tension synchronization system, splice shielding function, built-in correction system, optional RFID detection function, bad label dotting function, and optional visual system to check the labeling status. It has independent patented design.

4.WS-368+RFID

Product Introduction: WS-368+RFID is suitable for the detection and coding of RFID labels cut from rolls. It is equipped with an ultrasonic slicing function, which can code the chips or run QC detection and remove defective chips while slicing. This machine incorporates many patented innovations and offers simple operation, easy maintenance, high efficiency and stability, and a high degree of automation, making it suitable for mass production of RFID labels. The machine uses Yongshengjia's self-developed, copyrighted RFID label coding and detection system, which is stable and customizable and can adapt to various production requirements.

Industry trends are changing rapidly, and it is crucial to seize opportunities and seek cooperation. We sincerely invite you to the IOTE 2025 24th International Internet of Things Exhibition Shenzhen Station, held at the Shenzhen World Exhibition & Convention Center (Bao'an New Hall) from August 27 to 29, 2025. You are welcome to discuss the industry's cutting-edge trends and development directions with us and explore cooperation opportunities. We look forward to your visit!

0 notes

Text

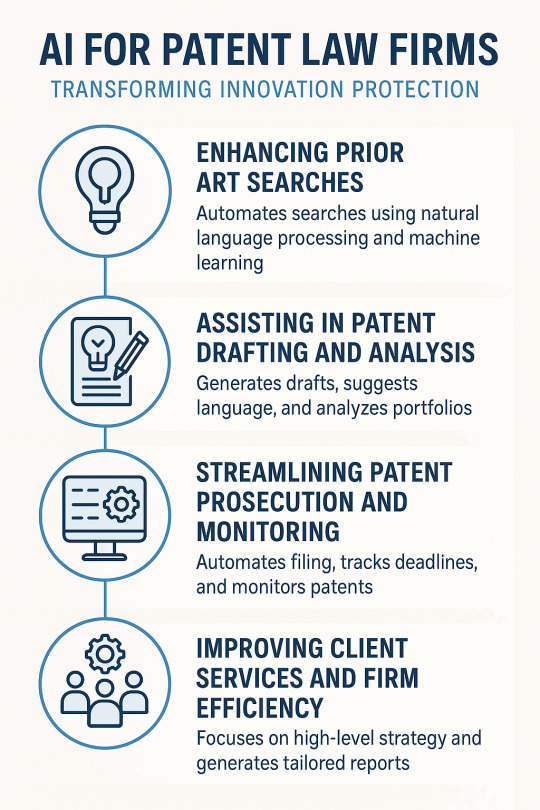

AI for Patent Law Firms: Transforming Innovation Protection

In recent years, artificial intelligence (AI) has made significant inroads into the legal profession, and patent law firms are among the most promising beneficiaries. From automating prior art searches to drafting patent applications and streamlining portfolio management, AI is transforming how intellectual property (IP) professionals operate. As innovation accelerates globally, AI is proving indispensable in helping patent attorneys manage increasing workloads while maintaining the precision and strategic foresight that the field demands.

1. Enhancing Prior Art Searches

One of the most time-consuming tasks in patent prosecution is conducting thorough prior art searches. Traditionally, this required manually sifting through thousands of patent documents and scientific publications. Today, AI-powered search tools—leveraging natural language processing (NLP) and machine learning algorithms—can scan vast databases in seconds and return highly relevant results. These tools understand context and can identify similar inventions even when keywords differ, greatly improving both the speed and accuracy of search results. This enables attorneys to build stronger patent claims and avoid costly litigation risks.

2. Assisting in Patent Drafting and Analysis

AI is increasingly being used to assist in drafting patent applications by generating first drafts based on input claims and invention disclosures. While human oversight remains essential, AI tools can structure documents, suggest language based on existing patents, and even highlight inconsistencies or vague terminology. This not only accelerates the drafting process but also helps ensure compliance with the rigorous standards set by patent offices like the USPTO and EPO.

Moreover, AI tools can analyze existing patent portfolios to identify gaps, potential invalidity risks, or infringement threats. This enables firms to provide clients with strategic advice grounded in real-time data and comprehensive patent landscape analysis.

3. Streamlining Patent Prosecution and Monitoring

Patent prosecution involves extensive communication with patent offices, which can be delayed by human bottlenecks. AI can automate much of this process, including filing forms, tracking deadlines, and managing office actions. It can also analyze examiner behavior patterns to predict outcomes or suggest alternative claim strategies, giving firms a competitive edge.

Furthermore, AI can monitor granted patents and publications globally, alerting attorneys to competitor filings or infringement risks. Such capabilities are invaluable in sectors like biotechnology, software, and telecommunications, where overlapping IP rights are common.

4. Improving Client Services and Firm Efficiency

AI also empowers patent law firms to offer more value-driven services. By automating routine tasks, attorneys can focus more on high-level strategy and client engagement. AI-based tools can generate reports, dashboards, and risk assessments tailored to client needs, improving transparency and decision-making. Additionally, AI supports scalable operations, making it easier for firms to serve startups and large enterprises alike with consistent quality.

AI is not replacing patent attorneys—it’s empowering them. By enhancing efficiency, accuracy, and strategic insight, AI is becoming a vital partner in the practice of patent law. Forward-thinking firms that embrace AI are better positioned to navigate the complexities of modern IP management and deliver superior outcomes for their clients. As the legal tech landscape evolves, AI will play a central role in shaping the future of patent protection and innovation strategy.

Read More:

Here're The 10 Best AI Tools for Patent Drafting in 2025

#AI for patent law firms#patents#innovation#AI patent assistant#automated patent drafting#IP automation tool

0 notes