#AI-generated CSAM

Text

How AI is Fueling Child Exploitation

AI tools are being weaponized by predators to exploit children in ways that were previously unimaginable. For instance, images of real-life child abuse victims are being fed into AI models to generate new, hyper-realistic depictions of abuse. This not only perpetuates the trauma of existing victims but also creates a vicious cycle of exploitation. Additionally, AI is being used to "de-age" celebrities or manipulate images of children to create graphic content, which is then used for blackmail or traded on the dark web.

The UK-based Internet Watch Foundation (IWF) reported a significant surge in CSAM following the proliferation of AI technologies. Predators are also using AI to disguise their identities, identify potential targets, and "groom" children online. These advancements have drastically reduced the time and effort required to carry out such heinous activities, making it easier for offenders to operate on a larger scale.

#AI child exploitation#AI-generated CSAM#child safety online#AI and cybercrime#India child protection laws#AI deepfake abuse#online child abuse prevention#AI crime laws India#AI misuse regulation#digital child safety

0 notes

Text

Oda (the creator of One Piece) is an enabler of Nobuhiro Watsuki, the creator who was convicted of possessing CSAM and didn't even go to jail

Whether or not the man's stance on AI is pro or anti, he's worth being wary of.

https://www.cbr.com/one-piece-fans-horrified-oda-support-rurouni-kenshin-mangaka/

https://boundingintocomics.com/manga/one-piece-mangaka-eiichiro-oda-faces-backlash-for-upcoming-interview-with-rurouni-kenshin-mangaka-nobuhiro-watsuki/

I know that article about Oda using AI one time in 2022 is being shown around like it's new, but I get it: back in 2022 the effects of gen AI were barely known, and he tried it once with his own work and pretty much said it wasn't worth it. It's still concerning to me that he used it, but I don't think he did again. However, his enabling shouldn't be swept under the rug and is honestly more of a concern to me.

I just wanted to post this so more misinformation doesn't get spread about that article and gen ai to where people put so much more focus on that than the guy enabling a predator for whatever bullshit reason.

It's just sad as fuck. I'm so mad to know of this. One Piece was a show I used to watch to remember my best friend who's not here anymore, who loved that show so much, and he soiled it so bad with this shit. I'm never giving them another penny.

At least she never had to see this 😭

Watsuki just got a fine of less than 2,000 US dollars...it's sickening how anyone could justify supporting that creep, especially knowing how many kids and teenagers follow Oda...he just doesn't care. I've been crying so hard it just hurts

I don't idolize anybody. I've known the dude's a celebrity and I don't know him, and so many celebrities and creeps enable each other, but this just felt like such a shot to my heart with how much I loved the series. Hell, I'm at 1090 rn. I wanted to finish it for my friend. I got my little brother into it and the games, and now it's always going to be soiled.

4 notes

Text

IF YOU ARE A PROSHIPPER STAY AWAY FROM ME AND MY BLOG! WHY DID I GET A BUNCH OF BRAINWASHED CHILDREN AND PREDATORS ON HERE REBLOGGING MY SHIT!??

Do Not Interact if you are or support any of the following:

Proshippers/Darkshippers/Ex-antis/anti-antis

AI USERS/LOVERS!

Radqueers

Shotacons, lolicons

Groomers/Pedophiles

"Consensual abuse" believers

If you think incest and rape are kinks

TERFs

Fujoshis + fundanshis

Pro-ana/eating disorder glamorizing blogs

Anti-furries

Anti-therians

Anti-age regression + anti-pet regression

Anti-LGBTQ

Lolita romanticizers

ABDL/Age play/DDLG/etc.

Vore fetishists

Melanie Martinez stans + supporters

Dream stans + supporters

Swifties

Daddy's Little Toy/Tori Woods defenders

MAP/NOMAP apologists

People who make "problematic jokes" and hide behind "dark humor"

"Age is just a number" people

Users of AI-generated CSAM or "lolicon/shota because it's not real" excuses

Alt-right, incel, or manosphere adjacent ideologies

Zoophile apologists or "zoo-neutral"

"Fiction ≠ reality" extremists who use that as a shield for vile content

People who treat triggers and boundaries as jokes or "overreactions"

---------------

YUMESHIPPERS/SELF INSERT ARE OKAY, I AM ONE AND AS LONG AS YOU AINT A PROSHIT!

#anti ao3#anti proship#anti proshitter#anti profic#fuck proshippers#anti proshipper#anti proshippers#anti twitter#anti shotacon#anti lolicon#dni proshitters#proshippers dni#proshitters dni#proship dni

50 notes

Note

I think what stresses me out about AI currently is that the companies pushing it are not being honest about its limitations and a lot of people using it are not thinking critically about its output and taking everything it says at face value.

Rolling Stone recently had a really good article about AI feeding into psychosis and i think that is the tip of the iceberg.

AI hit the general population very quickly and very uncritically for capitalism reasons and i think we will eventually work out the problems and it will provide great benefit but it rolled out in about the worst way it could have.

I can’t say i have used a representative sample of all gen AI. I’ve only used ChatGPT a bit and now Claude; Anthropic’s interface for the latter seems to stress quite clearly what kind of queries tend to be useful and what not, though, and to emphasize that Claude is fallible.

AI psychosis, deepfake porn of real people, CSAM, and similar problems are very real. People not understanding the limits of technology is a problem. But people have used the internet to harass people and distribute revenge porn; people have fallen for bullshit on television because it was presented in an authoritative format. We should be concerned about these problems but not panic more so than we might about similar problems with other technologies, i guess is my point. And i think these are problems inherent to easing costs of information creation and distribution generally. You would get them in one form or another even in the absence of capitalism. Indeed, the evil capitalists mostly try very hard to lock down their AI behind restrictive usage policies; afaict it’s the open source and freely distributed models you usually have to turn to if you want to easily generate (say) porn using the faces of real people.

21 notes

Text

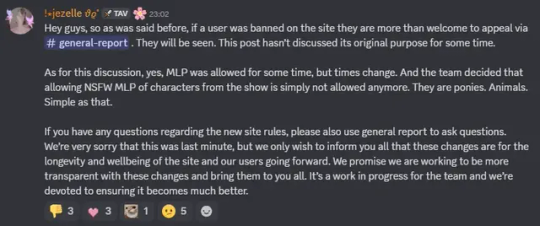

IMPORTANT, PLEASE READ!

REGARDING THE FUTURE OF MY JANITOR AI ACCOUNT AND BOTS:

firstly if you don't use jai, feel free to ignore this post. if you do, please read the entirety of it thoroughly.

janitor ai is notorious for its lack of censorship. also, for its horrible mod/dev team and horrible communication with its user base. they never tell us when anything is happening to the site, or if they do, it's after the fact. any site tests they do aren't on a separate code tester, they're on the up and running site itself. no matter how much we, the users, complain, they just say "oopsie! we'll do better next time guys!" with their fingers crossed behind their backs.

so recently shep, the head dev of janitor, made an announcement on discord instead of using the jai blog like a normal person would, where everyone can see it. the announcement said in so many words that janitor would start implementing a filter to stop illegal things from happening in people's private chats, such as CSAM, bestiality, and incest. here's what was said:

Here's an important comment thread as well:

now while anthros and animals are absolutely not my thing, the problem with this isn't the purging of the mlp bots in general but that it was done without any warning.

how does this affect me? well, i have bots that are at the very least morally questionable such as numerous stepcest bots, a serial killer bot, and even a snuff bot which was a request. but i also have many, many normal bots that significantly outnumber the others. i have a dad!han bot which is currently a target to be deleted and a catalyst for a potential ban of my account.

related to this, here's part of a reblog of a post from @fungalpieceofextras (apologies for the random tag but this is a very good point):

"We need to remember that morality and advertiser standards are not the same thing, any more than morality and legality are the same thing. We also need to remember, Especially Right Now, that fictional scenarios are Not Real, and that curating your experiences yourself is essential to making things tolerable. Tags exist for a reason, and that reason is to warn and/or state the content of something."

janitor has a dead dove tag. there are also custom tags where you can warn what the content of your bot is, and you also have the ability to do so in the bot description. you can block tags on janitor ai. many people don't like stepcest or infidelity bots and you know what they do? block the tags!

anyways, my overarching point for making this post is that i have dead dove bots that are illegal (eg dad!han and serial killer!sam). but it's all fictional. that seems to not matter. so, what does this mean for my janitor account and the future of making bots?

well, for right now, i don't want to get wrongfully banned. i also don't want any of my bots deleted. i spend lots and lots of time perfecting bot personalities and making the perfect intro message for you all, and if that hard work culminates into deletion against my will, i am going to be pissed. so, i'm going to save the code and messages for my "illegal" bots for later, and i'm going to consider archiving said bots.

i'm so so sorry that this is happening and that someone's favorite bot will maybe be taken from them. if ever it does get taken down please, by all means, shoot me a message and i'll slide you the doc with the code. but i also will be wary of this because my bots have been stolen. so long as you don't transfer my bots to other sites for people to chat with, meaning that you only use it for yourself as a private bot, then i don't mind at all.

thank you all for reading and if there's an update i'll let you all know! <3

7 notes

Note

did you hear about the LAION stuff?

yeah i mean it is obviously really fucking bad but i think a lot of the reporting on it is disingenuous in presenting this as a specific problem or failure of LAION / the AI sector when it is really just indicative of a much wider problem with the prevalence of CSAM on the internet. like, ending up with CSAM in your dataset is kind of a natural consequence of scraping the public facing internet, because places like facebook and twitter are fucking rife with it. facebook removed more than 70 million posts last year for violating its CSAM policy -- if we give them the extremely generous benefit of the doubt and say that their moderation is capable of catching 99% of it, that's still 700,000 CSAM images/videos left up in 2022 alone. like a lot of things with AI, it's just showcasing a much wider problem that's unfortunately much more difficult to resolve.
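The back-of-envelope estimate above can be checked with a short sketch. It assumes, as the post does, that the 70 million removals represent 99% of everything posted; the function name `estimate_missed` is hypothetical and introduced only for illustration.

```python
def estimate_missed(removed: int, catch_rate: float) -> float:
    """Estimate how many items were NOT caught, given how many were removed
    and an assumed catch rate (a hypothetical figure, not a measured one)."""
    if not 0 < catch_rate <= 1:
        raise ValueError("catch_rate must be in (0, 1]")
    # If removals are catch_rate of the total, the total is removed / catch_rate.
    total = removed / catch_rate
    return total - removed


# Figures quoted in the post: 70 million removed, 99% assumed catch rate.
missed = estimate_missed(70_000_000, 0.99)
print(f"roughly {missed:,.0f} items missed")
```

Under those assumptions the result lands slightly above 700,000, so the post's round figure holds as an approximation.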

85 notes

Text

The real problems with AI have nothing to do with art theft (which could easily be solved by companies just not stealing) or environmental concerns (which are actually a problem with databases in general). And taking away artists' jobs is a capitalism problem; if you’re worried about that, push for universal basic income. The real problem is that the stuff it produces will always be the lowest common denominator of human creativity. Also, the CSAM in the current image models is a much more pressing issue to me than “intellectual property rights”, which I think should be abolished for anything but straight-up plagiarism.

5 notes

Note

I would think some of the logic behind AI companies not allowing NSFW or sexual works to be used in training or to be created would be to prevent the creation of child abuse images. I don’t know what term to use, for CSAM or CSEM that is indistinguishable from a real photograph but not real, but with AI improving to photorealistic quality with fewer tells that it’s AI, a company banning people from generating sexual images and not training them on sexual images would prevent a scenario where it spits out what could look like real CSAM.

AI generation seems like a shit show right now, with the amount of wrong google search responses that are coming up, and tests I’ve seen where many have generated hate and nazi imagery easily. It makes the most business sense to me to only put resources to making sure 100% of sexually generated images look like adults only, only if generating sexual content is its thing. Otherwise, if there’s a chance it could produce an image that looks like real child sexual abuse? That’s prosecutable in the US, and has to be investigated as if it’s real.

--

23 notes

Text

I really don't want to talk about the present AO3 controversy (I have opinions, I think anyone paying attention does, but I'm so. Very. Tired. And the internet chews up tired people with opinions and spits them out for fun. So that's not happening).

HOWEVER!

I see many people out there championing Squidgeworld as an alternative to AO3. If you don't like AO3's staff or leadership and want to go somewhere else, this is actually pretty reasonable. The Squidge folks have been doing this a long time, they know what they're doing. They are a smaller team, hosting on smaller infrastructure (a dog knocked over their server once, apparently), but they seem up to the task of running an archive.

However. I also see people talking about how Squidgeworld's policies are better than AO3's in various regards. And I want to address this because it's... mostly wrong. There are some differences in the TOSes, but for most of you they will be the same.

First thing, top of the list: Squidge's TOS is much smaller and vaguer than AO3's. A whole ton of rules around tagging in the TOS are just... not there. There's a bunch of information in AO3's TOS about how complaints are treated, that's all gone, any specificity about how Squidgeworld defines what they do or do not consider acceptable behavior is just... not there. In their place, we have Wheaton's Law: "Don't be a Dick". You might think that covers it all, but a good TOS that makes it clear what is and is not allowed gives a lot of peace of mind. Nothing is worse than waking up to see that you've been given the boot because you and the mods disagreed about what the TOS meant. It sucks, you don't want that. It probably won't happen to most people, especially if you're not an asshole, but it is a thing.

The next thing in the TOS is the CSEM clause (the technical term for most of what people refer to as child pornography), and this is what I heard a lot of people talking about. I have heard people say that Squidge "actually bans child porn". However, the only difference between AO3's and Squidge's policies on CSEM (which is, to be clear, that they do not permit it) is this line: "This includes anything deemed pro-child sex or child-sex advocacy symbols." The thing is, that's subjective. A fic that involves this kind of content is not necessarily pro-child sex. Yes, even if it's RPF. And no, RPF is not CSAM. Nor is RPF CSEM, which is a broader umbrella term. At least, not under US law or US definitions, which is what both AO3 and Squidge operate under. I have done my best to get the official definitions for these things: RPF isn't covered. This has nothing to do with my opinions on RPF, it's just fact. In short, if you're upset at AO3 for not banning sexually explicit RPF containing real-life minors, Squidgeworld doesn't ban that either. Whether or not it takes down a fic with that kind of content in it is basically down to moderation staff opinion on whether it might be advocating for child sex.

Now here's the juicy stuff. Squidgeworld prohibits links to any kind of fundraising... except in the case of original work. So, if you are a writer who posts original work to fic archives and wants to link your Patreon... you can do that on Squidgeworld. Just don't do it on fanfics. Or in the comments or in your profile. Standard AO3 rules everywhere else. Honestly, unless squidgeworld takes off on a scale that is frankly unlikely, this probably won't matter, but for some of you, this is a good thing, and it's worth bringing up.

Squidgeworld, unlike AO3, does not allow AI-generated fanworks. Now, I'm sure a lot of you are very happy, but this does actually present some problems: Specifically, you can't always tell AI generated and human work apart. Sure, a lot of the time it's pretty easy, but you can mask it, and sometimes the output could be confused for real writing. More importantly, real people's writing could be confused for AI writing. I could easily see fic authors being attacked with accusations that their work is AI generated, or co-written with an AI. I don't know if that will happen, but... well, some people are assholes, and fan communities are often drama-laden. It could happen.

Squidgeworld prohibits web scraping for the purpose of use with AI. AO3 basically has the same policy, and they've taken more technical measures to prevent it now that we're aware it's a thing that happens (the first time AO3 was scraped was pre-ChatGPT, people just weren't paying attention to this stuff. I imagine squidgeworld takes similar anti-scraping technical measures, although I don't know for sure). I think people don't think AO3 does this because in the post where they explained this they also said they allowed AI-generated fics, and they went on to say that they couldn't make it impossible for someone to scrape the site and feed that into a machine learning model. That's something Squidgeworld can't do either: if you really want a website scraped, that website can be scraped. This is why AO3 went on to say that archive-locking your work would make it less likely for scrapers to catch it, and that you could do that if you were concerned.

So yeah. I think broadly that these policies shake out mostly the same as they do on AO3 in terms of content. There's more vagueness and subjectivity and more things that malicious users could potentially abuse to waste staff time and attack other users, but... well, you can always submit false reports. That's a problem on AO3 too. Same as it ever was. And hey, at least there won't be any blatantly AI-generated fics over on squidgeworld. Mind, I haven't seen any in any of my fandoms anyways...

56 notes

Text

Following the trend of tech companies in the AI race throwing privacy and caution to the wind, Microsoft unveiled plans this week to launch a tool on its forthcoming Copilot+ PCs called Recall that takes screenshots of its customers’ computers every few seconds. Microsoft says the tool is meant to give people the ability to “find the content you have viewed on your device.” The company also claims to have a range of protections in place and says the images are only stored locally in an encrypted drive, but the response has been roundly negative nonetheless, with some watchdogs reportedly calling it a possible “privacy nightmare.” The company notes that an intruder would need a password and physical access to the device to view any of the screenshots, which should rule out the possibility of anyone with legal concerns ever adopting the system. Ironically, Recall’s description sounds eerily reminiscent of computer monitoring software the FBI has used in the past. Microsoft even acknowledges that the system takes no steps to redact passwords or financial information.

29 notes

Text

i do genuinely think generative ai is pretty fucking cool outside of like, all the horrifically unethical ways it’s used and programmed (which like, people have been using it to disguise gore and csam images and post them online, the ethical issues are horrific and an endless depth, i don’t want to discount them they’re there, but I presume people on this blog Know that bc I’ve talked about it in depth bc I’ve been interested in ai for a long time and I’ve seen this shit go down and it’s awful and depressing) bc like. i think it’s really interesting that a pattern recognition machine exists and we can see the patterns it draws. remember that freaky nightmare woman it came up with like it just invented a pattern that doesn’t exist that’s cool as hell. like conceptually it’s so interesting to see it do that and link weird shit together and notice patterns that are imperceptible to humans bc it has no fucking idea what it’s looking at. i mean in practise it fucks up the environment and has so much illegal shit both in the training data and how people use it that cannot go overlooked gen ai as it is today fucking sucks bc of that. but I don’t think that’s bc it’s inherently uninteresting- noticing patterns in art and writing humanity doesnt, either bc of subconscious biases or bc they’re weird shit we wouldn’t come up with like the weird cryptid lady is neat! it’s just, y’know. people are using it cutting down rainforests to upload images of children dying to Instagram without it getting caught by their content filter and that’s fucking unjustifiable.

9 notes

Note

For something to be considered CSAM, it has to contain a real child. It is extremely important that we have a term to differentiate out content where there's a real child victim and that's what the term exists for. It's also how it's used in the study you linked to, exclusively to refer to media of real children.

I couldn't tell from the phrasing in your post if you already know this, but I make a point of correcting this misconception whenever I see it because it's important for discussing the topic accurately.

That is incorrect, even in the United States. CSAM does not need to depict an actual, real-world child to be classed as CSAM. Depictions of non-real children (like fictional children, computer edited/generated children, or AI-generated children) are legally classified as CSAM, even in the United States. Making explicit content where children are the sexual object of the fantasy and exploitation, or content that features sexual fantasies and exploitation about a child, is exactly that.

The AI-generated child, for example, may not technically exist (non-real), but it is still illegal to depict their likeness in an explicit way.

^^ Most states specify visual depictions, but not all laws are so explicit. But also? Not everywhere is the US. Many of us may be governed by different laws, and in other countries, you can face legal sanction for written depictions, too.

I linked to that Finnish study because it is comprehensive in how it studied the effect exposure has on victims and victimizers. Depictions of “fake” children accomplish the same outcome; the child being technically not real is not like methadone, as I argued in that post.

4 notes

Note

An investigation found hundreds of known images of child sexual abuse material (CSAM) in an open dataset used to train popular AI image generation models, such as Stable Diffusion. Models trained on this dataset, known as LAION-5B, are being used to create photorealistic AI-generated nude images, including CSAM. It is challenging to clean or stop the distribution of publicly distributed datasets as it has been widely disseminated. Future datasets could use freely available detection tools to prevent the collection of known CSAM. (SOURCE: https://cyber.fsi.stanford.edu/news/investigation-finds-ai-image-generation-models-trained-child-abuse)

This isn't some "gotcha". This is something that actually HAPPENED.

Firstly;

Secondly, pick a fucking argument, dude. You've gone from asking me if I think AI rape images are fine to asking if I think AI CSA is fine because it's using real CSEM to calling out that some AI datasets were contaminated.

So what's your argument? Are you arguing against my moral values or against AI? Those specific AI programs? People using art to fulfil unorthodox fantasy?

Copy-pasting statements after a furious little google search because I'm not bending over ass-backwards to agree with you is presenting what point, exactly?

AI bad?

Taboo kink bad?

Fictional content bad?

RPF bad?

Which is it? Help me out here. Is it some hybrid of all of those points?

Because I mean if your point is that AI is bad, I agree! Wholeheartedly. For a multitude of reasons, not least one of your copied points; that it is actually basically impossible to make the AI forget something it's learned. Hence; scrapping it all and starting over. Hence; stricter laws that only allow AI to be trained on specific open source datasets. Hence; requiring human approval for reference images and constant human monitoring rather than simply allowing the AI to run unsupervised, as most are.

I just don't agree that using various mediums to create fictional art that isn't created with a malicious directive is bad. Whether that's done by hand or using (god forbid) AI.

16 notes

Text

In Brazil, proposed AI regulation might compromise fight against child abuse material

Corporate lobbying has reshaped Brazil's AI legislation efforts, removing previously proposed safeguards against high-risk AI models that could generate exploitative content involving children, sources revealed to Nucleo

Corporate interests have quietly reshaped Brazil's most comprehensive proposed artificial intelligence regulation to date, leading its latest version in the Senate to abandon crucial safeguards against high-risk AI systems capable of generating child sexual abuse content, Nucleo's reporting has found.

The Senate bill – which sets rules for the creation, use, and management of AI in Brazil – initially mirrored the European Union's AI Act, with strict classifications for high-risk AI models. But the current version shifts toward self-regulation, a change championed by tech companies arguing that tighter controls would affect innovation in Brazil, according to multiple sources.

The shift follows multiple delays in the Senate's Commission on Artificial Intelligence, which began discussing regulation in August 2023. After five postponed votes since May 2024, the commission decided to revise its original proposal on high-risk AI systems last Tuesday (December 3, 2024).

The earlier draft completely banned several high-risk AI models, including those that could “enable, produce, disseminate, or facilitate” child sexual abuse material (CSAM). It also classified all AI applications on the internet as high-risk. Both provisions have now been removed.

#brazil#brazilian politics#politics#children's rights#artificial intelligence#image description in alt#mod nise da silveira

9 notes

Text

fyi if you are pro computer generated art/writing, 'ai' stuff, or whatever my blog is not the place for you 👍

the issues with ai art are the problems with profiting off of others' labour, the inherent disruption and unregulated aspects of the technology, problems with the inherent violation of data scraping on a large scale, alongside the database containing csam, and if you are in support of that you need to reevaluate your views.

24 notes

Text

For years, hashing technology has made it possible for platforms to automatically detect known child sexual abuse materials (CSAM) to stop kids from being retraumatized online. However, rapidly detecting new or unknown CSAM remained a bigger challenge for platforms as new victims continued to be victimized. Now, AI may be ready to change that.

Today, a prominent child safety organization, Thorn, in partnership with a leading cloud-based AI solutions provider, Hive, announced the release of an API expanding access to an AI model designed to flag unknown CSAM. It's the earliest use of AI technology striving to expose unreported CSAM at scale.

An expansion of Thorn's CSAM detection tool, Safer, the AI feature uses "advanced machine learning (ML) classification models" to "detect new or previously unreported CSAM," generating a "risk score to make human decisions easier and faster."

The model was trained in part using data from the National Center for Missing and Exploited Children (NCMEC) CyberTipline, relying on real CSAM data to detect patterns in harmful images and videos. Once suspected CSAM is flagged, a human reviewer remains in the loop to ensure oversight. It could potentially be used to probe suspected CSAM rings proliferating online.

It could also, of course, make mistakes, but Kevin Guo, Hive's CEO, told Ars that extensive testing was conducted to reduce false positives or negatives substantially. While he wouldn't share stats, he said that platforms would not be interested in a tool where "99 out of a hundred things the tool is flagging aren't correct."
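To make the two ideas in this excerpt concrete (a risk score that routes items to a human reviewer, and the share of flags that turn out to be correct), here is a minimal sketch. It is not the Safer or Hive API, whose interface the article does not describe; the function names, the threshold, and the sample scores are all hypothetical.

```python
from typing import List


def flag_for_review(scores: List[float], threshold: float) -> List[int]:
    """Return the indices of items whose risk score meets the threshold.
    Flagged items would go to a human reviewer; nothing is decided automatically."""
    return [i for i, score in enumerate(scores) if score >= threshold]


def precision(correct_flags: int, total_flags: int) -> float:
    """Share of flagged items that a reviewer confirmed as correct."""
    if total_flags == 0:
        raise ValueError("no items were flagged")
    return correct_flags / total_flags


# Hypothetical risk scores for five items, with a hypothetical threshold of 0.8.
sample_scores = [0.05, 0.92, 0.40, 0.81, 0.10]
print(flag_for_review(sample_scores, 0.8))

# The scenario Guo describes as unacceptable: 99 of 100 flags are wrong.
print(precision(1, 100))
```

In these terms, the quote describes a tool with a precision of about 1%, which would leave reviewers spending nearly all of their time on false alarms.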

9 notes
