#AI-powered estimating tools


Text

Key Factors That Impact the Accuracy of a Construction Estimating Service

Introduction

In the construction industry, cost estimation is a crucial process that determines the financial feasibility of a project. A minor miscalculation in cost estimation can lead to budget overruns, project delays, and financial losses. That’s why accuracy in a construction estimating service is essential for contractors, project managers, and developers.

Several factors influence the precision of cost estimates, including material prices, labor costs, project scope, and unforeseen risks. In this article, we will explore the key factors that impact the accuracy of a construction estimating service and how companies can enhance their estimating processes.

1. Well-Defined Project Scope

One of the most common reasons for inaccurate cost estimates is a poorly defined project scope. If project requirements, materials, and specifications are unclear, estimators may make incorrect assumptions, leading to cost discrepancies.

Unclear Scope: Missing project details force estimators to make guesses, reducing accuracy.

Frequent Scope Changes: Modifications after estimation can alter material and labor costs significantly.

Solution: Clearly define project requirements before engaging a construction estimating service and update estimates as scope changes occur.

2. Quality of Blueprints and Specifications

The accuracy of an estimate depends on the quality of the blueprints and project specifications provided. Incomplete or conflicting plans can result in incorrect material takeoffs, leading to miscalculations.

Incomplete Drawings: Missing dimensions and unclear layouts lead to errors.

Inconsistent Specifications: Variations between the design documents and project requirements can create discrepancies.

Solution: Ensure all blueprints are accurate, well-detailed, and approved before submitting them for cost estimation.

3. Material Cost Fluctuations

Material costs are one of the most variable components in construction. Prices for materials such as steel, concrete, and lumber fluctuate due to market demand, inflation, and supply chain disruptions.

Price Instability: Global market trends, tariffs, and economic conditions impact material costs.

Substitutions and Availability: Limited supply can force the use of costlier alternatives.

Solution: Use a construction estimating service that integrates real-time pricing databases to reflect the latest material costs.

4. Labor Costs and Productivity

Labor expenses make up a significant portion of construction costs. Labor rates vary based on location, workforce availability, and project complexity.

Skilled Labor Shortage: Higher demand for skilled workers drives up wages.

Labor Productivity Variations: Estimators must consider realistic productivity rates to avoid underestimating labor costs.

Solution: Conduct market research on labor rates and include productivity assessments in labor cost estimates.

5. Accuracy of Quantity Takeoffs

A construction estimating service relies on quantity takeoffs to determine material requirements. Errors in this stage can drastically impact the final cost estimate.

Manual Errors: Human mistakes in calculations can lead to material shortages or excess costs.

Incorrect Measurements: Misinterpretation of construction drawings can result in inaccurate takeoffs.

Solution: Use digital takeoff tools that automate the process and reduce the risk of human error.

6. Site Conditions and Location Factors

The physical conditions of a construction site significantly influence project costs. Factors such as soil type, weather conditions, and accessibility can impact labor and equipment costs.

Remote Locations: Higher transportation and labor costs due to distance.

Difficult Terrain: Additional work required for site preparation increases expenses.

Solution: Conduct a thorough site analysis before estimating costs and adjust estimates based on local conditions.

7. Contingency Planning and Risk Management

Unexpected project risks can lead to financial setbacks if they are not accounted for in the estimation process. Common risks include permit delays, design changes, and unforeseen environmental factors.

Lack of Contingency Funds: Failure to allocate extra funds can lead to financial struggles during the project.

Unanticipated Costs: Legal and regulatory changes may require additional expenses.

Solution: A good construction estimating service should include contingency allowances (5–10% of total project cost) to cover unforeseen expenses.
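As a rough illustration of the 5–10% allowance mentioned above, the following sketch adds a contingency band to a base estimate. The function names and the $1,000,000 figure are hypothetical, and the sketch assumes the percentage is applied to the base estimate before contingency; some firms apply it differently.

```python
def contingency_allowance(base_cost: float, rate: float) -> float:
    """Return the contingency amount for a base estimate and a fractional rate."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be a fraction between 0 and 1")
    return base_cost * rate


def estimate_range(base_cost: float, low_rate: float = 0.05, high_rate: float = 0.10):
    """Return (low, high) budget totals after adding the contingency band.

    The 5% and 10% defaults come from the article; applying them to the
    base estimate is an assumption made for this example.
    """
    low = base_cost + contingency_allowance(base_cost, low_rate)
    high = base_cost + contingency_allowance(base_cost, high_rate)
    return low, high


if __name__ == "__main__":
    # Hypothetical base estimate of $1,000,000.
    low, high = estimate_range(1_000_000)
    print(f"Budget with contingency: ${low:,.0f} to ${high:,.0f}")
```

Presenting the result as a range rather than a single number makes it clearer to the client how much of the budget is reserve.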

8. Estimating Software and Technology

The tools used for cost estimation can make a significant difference in accuracy. Outdated manual methods are prone to errors, while modern software solutions enhance precision and efficiency.

Manual Estimation Risks: Increased potential for human error and time-consuming calculations.

AI and Automation Benefits: AI-powered construction estimating services analyze vast amounts of data for better accuracy.

Solution: Invest in advanced estimating software that integrates real-time data and automates calculations.

9. Experience and Expertise of the Estimator

The accuracy of a construction estimating service also depends on the experience of the estimator. Skilled estimators understand industry standards, potential risks, and pricing trends better than inexperienced ones.

Lack of Industry Knowledge: Inexperienced estimators may overlook critical costs.

Improper Use of Historical Data: Inaccurate use of past project costs can distort estimates.

Solution: Hire experienced estimators and ensure continuous training on the latest industry trends and estimating techniques.

10. Economic and Market Conditions

External economic factors such as inflation, interest rates, and supply chain disruptions can impact construction costs. Estimators must factor in these variables to create realistic budgets.

High Market Demand: Increased demand for construction services can drive up material and labor costs.

Inflation and Tariffs: Rising costs of imported materials can affect estimates.

Solution: Stay updated on economic trends and adjust estimates accordingly.

Conclusion

The accuracy of a construction estimating service depends on multiple factors, including project scope clarity, material and labor cost fluctuations, estimator expertise, and the use of advanced technology. By addressing these factors, construction firms can improve cost predictability, reduce financial risks, and ensure successful project execution.

Investing in modern estimating tools, regularly updating pricing data, and refining estimation processes will enhance the reliability of construction estimating services, leading to more profitable and efficient construction projects.

#construction estimating service#accurate cost estimation#construction cost factors#estimating project expenses#construction labor costs#material price fluctuations#project scope estimation#estimating software tools#AI in construction estimating#real-time construction costs#construction bid preparation#automated quantity takeoff#site conditions impact#risk management in estimating#construction contingency planning#project budgeting#construction estimating best practices#estimating labor productivity#estimator expertise#construction bidding strategy#estimating service benefits#economic factors in construction#inflation impact on costs#cost overruns prevention#advanced estimating software#AI-powered estimating tools#digital takeoff solutions#industry trends in estimating#construction budget forecasting#construction cost control

0 notes

Note

what’s the story about the generative power model and water consumption? /gen

There's this myth going around about generative AI consuming truly ridiculous amounts of power and water. You'll see people say shit like "generating one image is like just pouring a whole cup of water out into the Sahara!" and bullshit like that, and it's just... not true. The actual truth is that supercomputers, which do a lot of stuff, use a lot of power, and at one point someone released an estimate of how much power some supercomputers were using and people went "oh, that supercomputer must only do AI! All generative AI uses this much power!" and then just... made shit up re: how making an image sucks up a huge chunk of the power grid or something. Which makes no sense because I'm given to understand that many of these models can run on your home computer. (I don't use them so I don't know the details, but I'm told by users that you can download them and generate images locally.) Using these models uses far less power than, say, online gaming. Or using Tumblr. But nobody ever talks about how evil those things are because of their power consumption. I wonder why.

To be clear, I don't like generative AI. I'm sure it's got uses in research and stuff but on the consumer side, every effect I've seen of it is bad. Its implementation in products that I use has always made those products worse. The books it writes and floods the market with are incoherent nonsense at best and dangerous at worst (let's not forget that mushroom foraging guide). It's turned the usability of search engines from "rapidly declining, but still usable if you can get past the ads" into "almost one hundred per cent useless now, actually not worth the effort to de-bullshittify your search results", especially if you're looking for images. It's a tool for doing the bullshit people were already doing, but much easier and faster, thus massively increasing the amount of bullshit. The only consumer-useful uses I've seen of it are niche art projects, usually projects that explore the limits of the tool itself like that one poetry book or the Infinite Art Machine; overall I'd say its impact at the Casual Random Person (me) level has been overwhelmingly negative. Also, the fact that so much AI turns out to be underpaid people in a warehouse in some country with no minimum wage and terrible labour protections is... not great. And the fact that it's often used as an excuse to try to find ways to underpay professionals ("you don't have to write it, just clean up what the AI came up with!") is also not great.

But there are real labour and product quality concerns with generative AI, and there's hysterical bullshit. And the whole "AI is magically destroying the planet via climate change but my four hour twitch streaming sesh isn't" thing is hysterical bullshit. The instant I see somebody make this stupid claim I put them in the same mental bucket as somebody complaining about AI not being "real art" -- a hatemobber hopping on the hype train of a new thing to hate and feel like an enlightened activist about when they haven't bothered to learn a fucking thing about the issue. And I just count my blessings that they fell in with this group instead of becoming a flat earther or something.

2K notes


Note

Saw a tweet that said something around:

"cannot emphasize enough how horrid chatgpt is, y'all. it's depleting our global power & water supply, stopping us from thinking or writing critically, plagiarizing human artists. today's students are worried they won't have jobs because of AI tools. this isn't a world we deserve"

I've seen some of your AI posts and they seem nuanced, but how would you respond to this? Cause it seems fairly on-point and like the crux of most worries. Sorry if this is a troublesome ask, just trying to learn so any input would be appreciated.

i would simply respond that almost none of that is true.

'depleting the global power and water supply'

something i've seen making the rounds on tumblr is that chatgpt queries use 3 watt-hours per query. wow, that sounds like a lot, especially with all the articles emphasizing that this is ten times as much as google search. let's check some other very common power uses:

running a microwave for ten minutes is 133 watt-hours

gaming on your ps5 for an hour is 200 watt-hours

watching an hour of netflix is 800 watt-hours

and those are just domestic consumer electricity uses!

a single streetlight's typical operation is 1.2 kilowatt-hours a day (or 1200 watt-hours)

a digital billboard being on for an hour is 4.7 kilowatt-hours (or 4700 watt-hours)
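to make the comparison concrete, here's a minimal sketch that expresses each figure above as a number of queries. it only uses the numbers quoted in this post (including the 3 watt-hour figure, which is itself an estimate); the names `QUERY_WH`, `COMPARISONS_WH`, and `queries_equivalent` are made up for the example.

```python
# Figures quoted in the post, all in watt-hours (Wh).
QUERY_WH = 3  # one chatgpt query, per the estimate cited in the post

COMPARISONS_WH = {
    "microwave, ten minutes": 133,
    "ps5, one hour": 200,
    "netflix, one hour": 800,
    "streetlight, one day": 1200,
    "digital billboard, one hour": 4700,
}


def queries_equivalent(activity_wh: float, query_wh: float = QUERY_WH) -> float:
    """Return how many queries use the same energy as one activity."""
    return activity_wh / query_wh


if __name__ == "__main__":
    for name, wh in COMPARISONS_WH.items():
        print(f"{name}: about {queries_equivalent(wh):.0f} queries")
```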

i think i've proved my point, so let's move on to the bigger picture: there are estimates that AI is going to cause datacenters to double or even triple in power consumption in the next year or two! damn that sounds scary. hey, how significant as a percentage of global power consumption are datacenters?

1-1.5%.

ah. well. nevertheless!

what about that water? yeah, datacenters use a lot of water for cooling. 1.7 billion gallons (microsoft's usage figure for 2021) is a lot of water! of course, when you look at those huge and scary numbers, there's some important context missing. it's not like that water is shipped to venus: some of it is evaporated and the rest is generally recycled in cooling towers. also, not all of the water used is potable--some datacenters cool themselves with filtered wastewater.

most importantly, this number is for all data centers. there's no good way to separate the 'AI' out for that, except to make educated guesses based on power consumption and percentage changes. that water figure isn't all attributable to AI, plenty of it is necessary to simply run regular web servers.

but sure, just taking that number in isolation, i think we can all broadly agree that it's bad that, for example, people are being asked to reduce their household water usage while google waltzes in and takes billions of gallons from those same public reservoirs.

but again, let's put this in perspective: in 2017, coca cola used 289 billion liters of water--that's about 76 billion gallons! bayer (formerly monsanto) in 2018 used 124 million cubic meters--that's about 32 billion gallons!
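if you want to check conversions like these yourself, here's a small sketch. it assumes US liquid gallons (3.785411784 liters each) rather than imperial gallons, and the function names are made up for the example.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact by definition of the US liquid gallon
LITERS_PER_CUBIC_METER = 1000.0


def liters_to_gallons(liters: float) -> float:
    """Convert liters to US liquid gallons."""
    return liters / LITERS_PER_US_GALLON


def cubic_meters_to_gallons(cubic_meters: float) -> float:
    """Convert cubic meters to US liquid gallons."""
    return liters_to_gallons(cubic_meters * LITERS_PER_CUBIC_METER)


if __name__ == "__main__":
    # Inputs are the figures quoted in the post.
    coca_cola = liters_to_gallons(289e9)    # 289 billion liters (2017)
    bayer = cubic_meters_to_gallons(124e6)  # 124 million cubic meters (2018)
    print(f"coca cola: {coca_cola / 1e9:.1f} billion gallons")
    print(f"bayer: {bayer / 1e9:.1f} billion gallons")
```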

so, like. yeah, AI uses electricity, and water, to do a bunch of stuff that is basically silly and frivolous, and that is broadly speaking, as someone who likes living on a planet that is less than 30% on fire, bad. but if you look at the overall numbers involved it is a minuscule drop in the ocean! it is a functional irrelevance! it is not in any way 'depleting' anything!

'stopping us from thinking or writing critically'

this is the same old reactionary canard we hear over and over again in different forms. when was this mythic golden age when everyone was thinking and writing critically? surely we have all heard these same complaints about tiktok, about phones, about the internet itself? if we had been around a few hundred years earlier, we could have heard that "The free access which many young people have to romances, novels, and plays has poisoned the mind and corrupted the morals of many a promising youth."

it is a reactionary narrative of societal degeneration with no basis in anything. yes, it is very funny that lawyers have lost the bar for trusting chatgpt to cite cases for them. but if you think that chatgpt somehow prevented them from thinking critically about its output, you're accusing the tail of wagging the dog.

nobody who says shit like "oh wow chatgpt can write every novel and movie now. you can just ask chatgpt to give you opinions and ideas and then use them its so great" was, like, sitting in the symposium debating the nature of the sublime before chatgpt released. there is no 'decay', there is no 'decline'. you should be suspicious of those narratives wherever you see them, especially if you are inclined to agree!

plagiarizing human artists

nah. i've been over this ad infinitum--nothing 'AI art' does could be considered plagiarism without a definition so preposterously expansive that it would curtail huge swathes of human creative expression.

AI art models do not contain or reproduce any images. the result of them being trained on images is a very very complex statistical model that contains a lot of large-scale statistical data about all those images put together (and no data about any of those individual images).

to draw a very tortured comparison, imagine you had a great idea for how to make the next Great American Painting. you loaded up a big file of every norman rockwell painting, and you made a gigantic excel spreadsheet. in this spreadsheet you noticed how regularly elements recurred: in each cell you would have something like "naturalistic lighting" or "sexually unawakened farmers" and the % of times it appears in his paintings. from this, you then drew links between these cells--what % of paintings containing sexually unawakened farmers also contained naturalistic lighting? what % also contained a white guy?

then, if you told someone else with moderately competent skill at painting to use your excel spreadsheet to generate a Great American Painting, you would likely end up with something that is recognizably similar to a Norman Rockwell painting: but any charge of 'plagiarism' would be absolutely fucking absurd!

this is a gross oversimplification, of course, but it is much closer to how AI art works than the 'collage machine' description most people who are all het up about plagiarism talk about--and if it were a collage machine, it would still not be plagiarising because collages aren't plagiarism.

(for a better and smarter explanation of the process from someone who actually understands it check out this great twitter thread by @reachartwork)

today's students are worried they won't have jobs because of AI tools

i mean, this is true! AI tools are definitely going to destroy livelihoods. they will increase productivity for skilled writers and artists who learn to use them, which will immiserate those jobs--they will outright replace a lot of artists and writers for whom quality is not actually important to the work they do (this has already essentially happened to the SEO slop website industry and is in the process of happening to stock images).

jobs in, for example, product support are being cut for chatgpt. and that sucks for everyone involved. but this isn't some unique evil of chatgpt or machine learning, this is just the effect that technological innovation has on industries under capitalism!

there are plenty of innovations that wiped out other job sectors overnight. the camera was disastrous for portrait artists. the spinning jenny was famously disastrous for the hand-textile workers from which the luddites drew their ranks. retail work was hit hard by self-checkout machines. this is the shape of every single innovation that can increase productivity, as marx explains in wage labour and capital:

“The greater division of labour enables one labourer to accomplish the work of five, 10, or 20 labourers; it therefore increases competition among the labourers fivefold, tenfold, or twentyfold. The labourers compete not only by selling themselves one cheaper than the other, but also by one doing the work of five, 10, or 20; and they are forced to compete in this manner by the division of labour, which is introduced and steadily improved by capital. Furthermore, to the same degree in which the division of labour increases, is the labour simplified. The special skill of the labourer becomes worthless. He becomes transformed into a simple monotonous force of production, with neither physical nor mental elasticity. His work becomes accessible to all; therefore competitors press upon him from all sides. Moreover, it must be remembered that the more simple, the more easily learned the work is, so much the less is its cost to production, the expense of its acquisition, and so much the lower must the wages sink – for, like the price of any other commodity, they are determined by the cost of production. Therefore, in the same manner in which labour becomes more unsatisfactory, more repulsive, do competition increase and wages decrease”

this is the process by which every technological advancement is used to increase the domination of the owning class over the working class. not due to some inherent flaw or malice of the technology itself, but due to the material relations of production.

so again the overarching point is that none of this is uniquely symptomatic of AI art or whatever the most recent technological innovation is. it is symptomatic of capitalism. we remember the luddites primarily for failing and not accomplishing anything of meaning.

if you think it's bad that this new technology is being used with no consideration for the planet, for social good, for the flourishing of human beings, then i agree with you! but then your problem shouldn't be with the technology--it should be with the economic system under which its use is controlled and dictated by the bourgeoisie.

4K notes


Text

Green energy is in its heyday.

Renewable energy sources now account for 22% of the nation’s electricity, and solar has skyrocketed eight times over in the last decade. This spring in California, wind, water, and solar power energy sources exceeded expectations, accounting for an average of 61.5 percent of the state's electricity demand across 52 days.

But green energy has a lithium problem. Lithium batteries control more than 90% of the global grid battery storage market.

That’s not just cell phones, laptops, electric toothbrushes, and tools. Scooters, e-bikes, hybrids, and electric vehicles all rely on rechargeable lithium batteries to get going.

Fortunately, this past week, Natron Energy launched its first-ever commercial-scale production of sodium-ion batteries in the U.S.

“Sodium-ion batteries offer a unique alternative to lithium-ion, with higher power, faster recharge, longer lifecycle and a completely safe and stable chemistry,” said Colin Wessells — Natron Founder and Co-CEO — at the kick-off event in Michigan.

The new sodium-ion batteries charge and discharge at rates 10 times faster than lithium-ion, with an estimated lifespan of 50,000 cycles.

Wessells said that using sodium as a primary mineral alternative eliminates industry-wide issues of worker negligence, geopolitical disruption, and the “questionable environmental impacts” inextricably linked to lithium mining.

“The electrification of our economy is dependent on the development and production of new, innovative energy storage solutions,” Wessells said.

Why are sodium batteries a better alternative to lithium?

The birth and death cycle of lithium is shadowed in environmental destruction. The process of extracting lithium pollutes the water, air, and soil, and when it’s eventually discarded, the flammable batteries are prone to bursting into flames and burning out in landfills.

There’s also a human cost. Lithium-ion materials like cobalt and nickel are not only harder to source and procure, but their supply chains are also overwhelmingly attributed to hazardous working conditions and child labor law violations.

Sodium, on the other hand, is estimated to be 1,000 times more abundant in the earth’s crust than lithium.

“Unlike lithium, sodium can be produced from an abundant material: salt,” engineer Casey Crownhart wrote in the MIT Technology Review. “Because the raw ingredients are cheap and widely available, there’s potential for sodium-ion batteries to be significantly less expensive than their lithium-ion counterparts if more companies start making more of them.”

What will these batteries be used for?

Right now, Natron has its focus set on AI models and data storage centers, which consume hefty amounts of energy. In 2023, the MIT Technology Review reported that one AI model can emit more than 626,000 pounds of carbon dioxide equivalent.

“We expect our battery solutions will be used to power the explosive growth in data centers used for Artificial Intelligence,” said Wendell Brooks, co-CEO of Natron.

“With the start of commercial-scale production here in Michigan, we are well-positioned to capitalize on the growing demand for efficient, safe, and reliable battery energy storage.”

The fast-charging energy alternative also has limitless potential on a consumer level, and Natron is eying telecommunications and EV fast-charging once it begins servicing AI data storage centers in June.

On a larger scale, sodium-ion batteries could radically change the manufacturing and production sectors — from housing energy to lower electricity costs in warehouses, to charging backup stations and powering electric vehicles, trucks, forklifts, and so on.

“I founded Natron because we saw climate change as the defining problem of our time,” Wessells said. “We believe batteries have a role to play.”

-via GoodGoodGood, May 3, 2024

--

Note: I wanted to make sure this was legit (scientifically and in general), and I'm happy to report that it really is! x, x, x, x

#batteries#lithium#lithium ion batteries#lithium battery#sodium#clean energy#energy storage#electrochemistry#lithium mining#pollution#human rights#displacement#forced labor#child labor#mining#good news#hope

3K notes


Text

Anon's explanation:

I’m curious because I see a lot of people claiming to be anti-AI, and in the same post advocating for the use of Glaze and Artshield, which use DiffusionBee and Stable Diffusion, respectively. Glaze creates a noise filter using DiffusionBee; Artshield runs your image through Stable Diffusion and edits it so that it reads as AI-generated. You don’t have to take my word for it. Search for DiffusionBee and Glaze yourself if you have doubts. I’m also curious about machine translation, since Google Translate is trained on the same kinds of data as ChatGPT (social media, etc) and translation work is also skilled creative labor, but people seem to have no qualms about using it. The same goes for text to speech: a lot of the voices people use for it were trained on professional audiobook narration, and voice acting/narration is also skilled creative labor. Basically, I’m curious because people seem to regard these types of gen AI differently than text gen and image gen. Is it because they don’t know? Is it because they don’t think the work it replaces is creative? Is it because of accessibility? (and, if so, why are other types of gen AI not also regarded as accessibility? And even then, it wouldn’t explain the use of Glaze/Artshield)

Additional comments from anon:

I did some digging by infiltrating (lurking in) pro-AI spaces to see how much damage Glaze and other such programs were doing. Unfortunately, it turns out none of those programs deter people from using the ‘protected’ art. In fact, because of how AI training works, they may actually result in better output? Something about adversarial training. It was super disappointing. Nobody in those spaces considers them even a mild deterrent anywhere I looked. Hopefully people can shed some light on the contradictions for me. Even just knowing how widespread their use is would be informative. (I’m not asking about environmental impact as a factor because I read the study everybody cited, and it wasn’t even anti-AI? It was about figuring out the best time of day to train a model to balance solar power vs water use and consumption. And the way they estimated the impact of AI was super weird? They just went with 2020’s data center growth rate as the ‘normal’ growth rate and then any ‘extra’ growth was considered AI. Maybe that’s why it didn’t pass peer review... But since people are still quoting it, that’s another reason for me to wonder why they would use Glaze and Artshield and everything. That’s why running them locally has such heavy GPU requirements and why it takes so long to process an image if you don’t meet the requirements. It’s the same electricity/water cost as generating any other AI image.)

–

We ask your questions anonymously so you don’t have to! Submissions are open on the 1st and 15th of the month.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about ethics#submitted april 15#polls about the internet#ai#gen ai#generative ai#ai tools#technology

329 notes


Text

Cosmic - Poe Dameron

Episode 1: A Space Odyssey next

Cosmic Masterlist | Poe Dameron Masterlist | Main Masterlist

Happy Poevember!

Pairing: Poe Dameron x reader

Summary: In 1981, in rural America, Poe crash-lands on Earth and you have to show him everything (set in America but reader is not necessarily American)

Content: some minor injuries and blood, not beta'd

Word Count: 2.4k

☾ ⋆*・゚:⋆*・゚☾ ⋆*・゚:⋆*・゚☾ ⋆*・゚:⋆*・゚

A deafening crash obliterated peaceful sleep on a silent, country night. You sat up in bed, abruptly, rubbing sleep from your eyes. Heart pounding and ears alert, you listened, hoping to convince yourself it was nothing - a dream, or maybe even a distant car crash.

Willing yourself to climb out of bed, you crept to the window, trying desperately to calm your breathing before drawing back the curtains.

That's when you saw it. A fire - distant, but definitely on your property. Maybe someone did crash. Or...was there some sort of electrical or gas explosion? As far as you could tell, the fire wasn't near your barn, or any of your sheds or buildings.

Scrubbing a hand over your face, you decided you better go check it out. Pulling your nightgown over your head, you grabbed the nearest pair of sweats - a crewneck gray top with matching bottoms. Taking the stairs two at a time, you headed for the back door, slipping into your boots and lifting your coat off the hook. Twisting the lock, you yanked open the door, but paused. You turned back and rummaged around in the drawer for a flashlight.

It flickered once before powering on, bright enough to lead you to the laundry room where you found a more useful spotlight flashlight and a fire extinguisher. Pushing open the screen door, you tried to estimate how far the fire was. This prompted you to grab your truck keys and drive.

The familiar creak of your father's old truck door reminded you that this thing was probably on its last legs. You put the key in the ignition, impatiently bouncing on the bench seat.

"Come on, girl, not tonight. Come on."

After a few more sputters, the old thing cranked, a puff of smoke its only protest. With your high beams illuminating the path, you made your way to the mysterious flames.

In the few minutes it took you to drive across your property, bouncing over the uneven ground in the old truck, you started to realize how big the fire was...and that you probably should've called the fire department before you charged at it with a mere fire extinguisher.

Twisted hunks of metal had ravaged your farmland. Something huge had crashed here. An airplane or jet of some sort. Maybe experimental aircraft. Or a UFO. The musical motif from 2001: A Space Odyssey drifted through your mind. The government was sure to be here soon, probably setting up camp on your property and kicking you out of your own home on grounds of national security.

You were at a complete loss, heart racing as the smoke began to burn your lungs. Pulling your shirt collar up over your nose as a makeshift mask, you began to walk the perimeter of the crash, deciding to take a look before calling the authorities.

Rounding the corner of what appeared to be a black and orange metal wing, you heard a groan.

"Oh my god," you gasped, easing closer, braving the heat and the smoke to see what you assumed was the pilot. Something welled up inside you - adrenaline, probably, but your legs carried you forward to a man, half strapped into his seat, bloodied and unconscious.

"Oh god. Hold on. Hold on, I'm gonna get you out."

Racing back to your truck, you climbed into the truck bed, looking for a tool - anything to help you. Thankfully, you found a pair of work gloves, a wrench and a pair of pliers in the back, and a utility knife normally kept in the glove box.

You scrambled back to the man, praying to anything listening that he was not dead. After using the fire extinguisher to put out the fire immediately surrounding him, you used the knife. You cut him free of the straps holding him to the aircraft seat, grateful for gloves around such hot metal. Thankfully he wasn't a big person - not overly tall or heavy, so you were able to drag him all the way back to your truck.

It took all your strength and then some to get him all the way into the truck. You quickly examined him for obvious injuries, hoping he wasn't bleeding out or hadn't broken his back. He seemed generally okay, aside from some scrapes and cuts and minor burns.

Gingerly, you buckled him into the seat and slowly removed his helmet. He was bleeding from his temple, but the cut didn't seem deep. Blood and dirt covered his cheeks and was matted into his thick, dark curls.

"Gotta get you to a hospital." Cranking the truck, you glanced over at his orange flight suit, wondering who he could possibly work for.

You drove to the end of your property, wondering if you should drive to the closest medical center, which was ten miles away and closed, or if you should drive an hour to the closest city hospital. Either option was a gamble with your somewhat unreliable truck. What if you got stuck?

You decided against it, heading back to your house to call the fire department. They could take this man wherever he needed to go in an ambulance.

You pulled up to the house and switched off the engine, exhaling heavily before unbuckling both yourself and the pilot. You walked around the truck, opened the passenger door and jumped back with a scream as his head lolled over and his eyes blinked open.

"Where am I?" he croaked out. "Which system?"

"Hey, it's okay," you tried to soothe both him and yourself simultaneously. "You're at my farm. I think your jet crashed. I'm going to call for some help."

He tried to climb out of the truck, but flopped back into the seat with a groan. "The f...the First Order. Is the First Order here?"

You shook your head. "I-I don't know what you mean. I think you need a hospital."

Slinging one leg out the door, he gripped the truck door with his gloved hand, hauling himself to his feet.

"Careful," you instructed, reaching out to help steady him.

Deep brown eyes locked onto yours. "Thank you."

"Of course. Come on, let's get you inside."

He nodded, arm resting heavily around your shoulders. "Kriffing hell," he choked, limping with difficulty.

"Hey, I've got you. Just lean on me."

The two of you made it through the back door, into the kitchen, where you helped the pilot ease down onto a chair.

"You okay?" you asked, trying to steady him. "Is your leg broken?"

"I-I don't know. I don't think so." He leaned forward, resting his head in his hands.

"Hold on. Let me get you some water. I need to call for help."

"Wait!" he protested, stopping you with a strong grip on your arm. "Wait, who are you calling? The First Order can't know."

You shook your head. "I don't know what that is. I was just going to call an ambulance to help you and the fire department to take care of your jet out there."

"I'm fine," he waved you off, attempting to push himself up on the chair. "Believe me, I've been in tougher scrapes than this. I just need to get back to my ship, to my transceiver. Where's your satellite?"

"My satellite? I don't have a satellite," you explained. "I have a telephone. And a couple of CB radios. That's it. No satellite."

"Damn it," he huffed, seeming to grow more agitated by the moment. Yanking off his gloves, he pushed his hands through his hair, wincing as he grazed the cut on his temple.

"Let me get you some help," you insisted, opening the cupboard to get a glass, which you filled with water from the tap. "Drink this."

His eyes met yours and he nodded once, downing the glass in one gulp. You took it from him and refilled it, collecting the first aid kit from under the kitchen sink. "Here," you said, handing the glass back to him. "Drink some more. Let me look at your head. Then I'm calling an ambulance."

Without answering, he slowly accepted the glass of water, waiting patiently while you dabbed the cut on his temple, hissing as you cleansed it.

"You need to hold this gauze here for a minute. I don't think a bandage will stick in your hair," you explained. "I don't think you need stitches, but I would rather a doctor look at you."

Reaching for your arm, he stopped you, his calloused fingers circling your wrist. "Please don't call anyone. You're very kind but...please. Not until I'm sure."

With trembling breath, you swallowed down a growing sense of dread. Was this man some sort of spy? Maybe he was Russian? "Not until you're sure of what?"

"Of where I am," he emphatically explained. "And who's in control of this system." Noticing you shudder, he released your wrist. "Please, can we take your...speeder back to my ship? I won't bother you anymore."

Slowly nodding, you stood, flabbergasted as he used the table to help him climb out of his chair, standing with difficulty.

"Here, I'll help you," you found yourself offering, despite your concern about who this man could be.

Soon enough, you drove him back out to the crash site, wondering if you would somehow get into trouble with the government if this man communicated with an enemy of the state. But, not sure of what else to do, you watched as he climbed out of your truck, limped around the perimeter of the crash and did something with the ship that made the fire go out pretty quickly.

You weren't even sure if he wanted you to stay and wait for him.

After a few minutes, however, he made his way back to the truck.

"Comms are busted. My droid is a pile of wires. Glad it wasn't BB." Shaking his head, he sighed in frustration. "This whole thing is too hot to look at tonight. Do you think anyone will come looking?" He glanced over at you.

"Uhm, the nearest neighbor is five miles. Maybe no one saw," you told him. "They might see the smoke in the morning."

He nodded curtly, running a gloved hand over his face. "Would it be okay if I waited here for a little while? Maybe let my ship cool off and..." With a groan of pain, he turned to peer through the window behind him. "Do you think we could use your speeder to haul away some of the wreckage?"

You stared at him for almost a full minute. "Who are you?"

With a sardonic, exhausted half-chuckle, he shook his head. "Sorry. I...I can't tell you until I know where I am."

Chewing on your lip, you tried to decide what to do. "I'll tell you where we are. But you have to tell me where you're from too. Deal?"

He nodded, so you unbuckled your seatbelt and shifted to face him, one leg drawn up to your chest.

"We're in Iowa. But you must have known that. You must have been flying over us, maybe to the closest base, when you crashed."

"Iowa," he slowly repeated. "What system are we in?"

"You keep saying 'system' - I don't know what that means," you insistently explained. "We're in Iowa. In the United States. Are you not from here?"

"Uh, no," he quickly answered. "I have no idea where we are. Who's in charge of your United States? Are you occupied by the First Order?"

"I don't know what that is! We're the United States. Do you seriously not know the United States of America? Maybe the most powerful nation in the world? Or one of them, anyway. There's no one occupying this country. I've never even heard of something called a First Order."

"Good. That's good." Removing his gloves again, the man stroked his chin. It seemed to be a habit of his. "You said 'this world'. What planet is this?"

Without meaning to, you looked at him like he was crazy. "You must have a concussion. I definitely should've called an ambulance."

"Just - please, answer me. Please." His eyes found yours, dark eyebrows shifting pleadingly. True, deep concern radiated from his gaze as a shimmer brimmed along his lower lashes. "Please tell me. I don't understand. I don't know where I am."

"Okay, okay," you quickly reassured him. "I'll answer anything you ask. And...remember, you're going to tell me where you're from too. And a name."

He nodded quickly, scooting a little closer as if he were hanging on to your every word.

This poor man. He seemed really out of it. "We're on Earth. This is planet Earth. In North America. United States. In Iowa. On my farm. That's it, that's where we are. And you can call me Trix." You shrugged one shoulder. Not your real name, but your dad called you Trix when you were really young.

"Trix," he slowly repeated. "Trix...from Earth." He sighed, worriedly. "Earth. I've never heard of it. And you don't know the system?"

You shrugged. "I mean...Earth is in the solar system? In the Milky Way galaxy? Is that what you mean?"

"Milky Way," he gasped, staring at you in disbelief. "The Milky Way galaxy? Oh my...I've...I've never left our galaxy. I've never..."

His breathing grew shallow as his head hit the headrest with a thud.

"Oh, god, I think you're having anxiety or...just breathe." Reaching across him, you rolled down the truck window to give him fresh air, which shouldn't have helped much, because the air smelled like smoke. It seemed to help, however, as he slowly began to calm down.

"Are you okay?" you finally asked after several tense moments.

"I think so. I must've. I think..." He trailed off, something in his eyes so forlorn.

You had to ask. "Are you...a spy? Are you Russian?"

Turning to face you, he frowned in confusion. "What's Russian?"

Okay. So either this man was completely mental, or...no. It couldn't be. You had watched too many science fiction films. He must have amnesia or something.

"Where are you from? You promised," you reminded him.

He swallowed hard, sitting up a bit straighter. Then he looked right into your eyes, again. There was something so honest and slightly unnerving when he did that.

"My name is Poe," he finally declared. "I'm from Yavin 4. It's in the Yavin System, in the Gordian Reach sector, in the Outer Rim Territories." Glancing down at his lap, he exhaled shakily. "It's definitely not in the Milky Way Galaxy."

☾ ⋆*・゚:⋆*・゚☾ ⋆*・゚:⋆*・゚☾ ⋆*・゚:⋆*・゚

Follow @ivystoryupdates and turn on notifications to never miss an update

Cosmic Masterlist | Poe Masterlist | Main Masterlist | Join my tag list

211 notes

Text

AI Reminder

Quick reminder folks since there's been a recent surge of AI fanfic shite. Here is some info from Earth.org on the environmental effects of ChatGPT and its fellow AI language models.

"ChatGPT, OpenAI's chatbot, consumes more than half a million kilowatt-hours of electricity each day, which is about 17,000 times more than the average US household. This is enough to power about 200 million requests, or nearly 180,000 US households. A single ChatGPT query uses about 2.9 watt-hours, which is almost 10 times more than a Google search, which uses about 0.3 watt-hours.

According to estimates, ChatGPT emits 8.4 tons of carbon dioxide per year, more than twice the amount that is emitted by an individual, which is 4 tons per year. Of course, the type of power source used to run these data centres affects the amount of emissions produced – with coal or natural gas-fired plants resulting in much higher emissions compared to solar, wind, or hydroelectric power – making exact figures difficult to provide.

A recent study by researchers at the University of California, Riverside, revealed the significant water footprint of AI models like ChatGPT-3 and 4. The study reports that Microsoft used approximately 700,000 litres of freshwater during GPT-3’s training in its data centres – that’s equivalent to the amount of water needed to produce 370 BMW cars or 320 Tesla vehicles."

Now I don't want to sit here and say that AI is the worst thing that has ever happened. It can be an important tool in advancing effectiveness in technology! However, if it is not used wisely, it has quite a few drawbacks, especially for the environment, and we have not yet figured out how to mitigate them. Likewise, AI is not meant to do the work for you; it's meant to assist. For example, having it spell check your work? Sure, why not! Having it write your work and fics for you? You are stealing from others that worked hard to produce beautiful work.

Thank you for coming to my Cyn Talk. I love you all!

237 notes

Text

Also preserved in our archive (Updated daily!)

Researchers report that a new AI tool enhances the diagnostic process, potentially identifying more individuals who need care. Previous diagnostic studies estimated that 7 percent of the population suffers from long COVID. However, a new study using an AI tool developed by Mass General Brigham indicates a significantly higher rate of 22.8 percent.

The AI-based tool can sift through electronic health records to help clinicians identify cases of long COVID. The often-mysterious condition can encompass a litany of enduring symptoms, including fatigue, chronic cough, and brain fog after infection from SARS-CoV-2.

The algorithm used was developed by drawing de-identified patient data from the clinical records of nearly 300,000 patients across 14 hospitals and 20 community health centers in the Mass General Brigham system. The results, published in the journal Med, could identify more people who should be receiving care for this potentially debilitating condition.

“Our AI tool could turn a foggy diagnostic process into something sharp and focused, giving clinicians the power to make sense of a challenging condition,” said senior author Hossein Estiri, head of AI Research at the Center for AI and Biomedical Informatics of the Learning Healthcare System (CAIBILS) at MGB and an associate professor of medicine at Harvard Medical School. “With this work, we may finally be able to see long COVID for what it truly is — and more importantly, how to treat it.”

For the purposes of their study, Estiri and colleagues defined long COVID as a diagnosis of exclusion that is also infection-associated. That means the diagnosis could not be explained in the patient’s unique medical record but was associated with a COVID infection. In addition, the diagnosis needed to have persisted for two months or longer in a 12-month follow-up window.

Precision Phenotyping: A Novel Approach

The novel method developed by Estiri and colleagues, called “precision phenotyping,” sifts through individual records to identify symptoms and conditions linked to COVID-19 and tracks them over time in order to differentiate them from other illnesses. For example, the algorithm can detect if shortness of breath results from pre-existing conditions like heart failure or asthma rather than long COVID. Only when every other possibility was exhausted would the tool flag the patient as having long COVID.
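The diagnosis-of-exclusion idea described above can be illustrated with a short sketch. This is not the published algorithm: the `Episode` record, the `flag_long_covid` function, the 60-day reading of "two months", and the 365-day window are all assumptions made for illustration, based only on the definition given in this article.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    """Hypothetical record of one symptom or condition after infection."""
    condition: str   # e.g. "shortness of breath"
    start_day: int   # days after the COVID-19 infection date
    end_day: int     # last day the condition was recorded


def flag_long_covid(episodes, explained_by_history,
                    min_days=60, window_days=365):
    """Return conditions that persist and have no other explanation.

    explained_by_history: set of conditions already accounted for by the
    patient's prior record (for example asthma explaining breathlessness).
    min_days / window_days: assumed stand-ins for "two months or longer"
    within a "12-month follow-up window".
    """
    flagged = []
    for ep in episodes:
        # Ignore anything that starts outside the follow-up window.
        if ep.start_day < 0 or ep.start_day > window_days:
            continue
        # Exclusion step: drop conditions the prior record explains.
        if ep.condition in explained_by_history:
            continue
        # Persistence step: count only the days inside the window.
        duration = min(ep.end_day, window_days) - ep.start_day
        if duration >= min_days:
            flagged.append(ep.condition)
    return flagged


episodes = [
    Episode("shortness of breath", 10, 100),  # explained by asthma below
    Episode("fatigue", 20, 120),              # unexplained and persistent
    Episode("cough", 5, 30),                  # too short to count
]
result = flag_long_covid(episodes, explained_by_history={"shortness of breath"})
print(result)
```

In this toy example only the fatigue episode survives both the exclusion and persistence checks. The real tool works on full electronic health records and is certainly more involved than a set lookup.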

“Physicians are often faced with having to wade through a tangled web of symptoms and medical histories, unsure of which threads to pull, while balancing busy caseloads. Having a tool powered by AI that can methodically do it for them could be a game-changer,” said Alaleh Azhir, co-lead author and an internal medicine resident at Brigham and Women’s Hospital, a founding member of the Mass General Brigham healthcare system.

The new tool’s patient-centered diagnoses may also help alleviate biases built into current diagnostics for long COVID, said researchers, who noted diagnoses with the official ICD-10 diagnostic code for long COVID trend toward those with easier access to healthcare.

The researchers said their tool is about 3 percent more accurate than the data ICD-10 codes capture, while being less biased. Specifically, their study demonstrated that the individuals they identified as having long COVID mirror the broader demographic makeup of Massachusetts, unlike long COVID algorithms that rely on a single diagnostic code or individual clinical encounters, skewing results toward certain populations such as those with more access to care.

“This broader scope ensures that marginalized communities, often sidelined in clinical studies, are no longer invisible,” said Estiri.

Limitations and Future Directions

Limitations of the study and AI tool include the fact that health record data the algorithm uses to account for long COVID symptoms may be less complete than the data physicians capture in post-visit clinical notes. Another limitation was the algorithm did not capture the possible worsening of a prior condition that may have been a long COVID symptom. For example, if a patient had COPD that worsened before they developed COVID-19, the algorithm might have removed the episodes even if they were long COVID indicators. Declines in COVID-19 testing in recent years also make it difficult to identify when a patient may have first gotten COVID-19.

The study was limited to patients in Massachusetts.

Future studies may explore the algorithm in cohorts of patients with specific conditions, like COPD or diabetes. The researchers also plan to release this algorithm publicly on open access so physicians and healthcare systems globally can use it in their patient populations.

In addition to opening the door to better clinical care, this work may lay the foundation for future research into the genetic and biochemical factors behind long COVID’s various subtypes. “Questions about the true burden of long COVID — questions that have thus far remained elusive — now seem more within reach,” said Estiri.

Reference: “Precision phenotyping for curating research cohorts of patients with unexplained post-acute sequelae of COVID-19” by Alaleh Azhir, Jonas Hügel, Jiazi Tian, Jingya Cheng, Ingrid V. Bassett, Douglas S. Bell, Elmer V. Bernstam, Maha R. Farhat, Darren W. Henderson, Emily S. Lau, Michele Morris, Yevgeniy R. Semenov, Virginia A. Triant, Shyam Visweswaran, Zachary H. Strasser, Jeffrey G. Klann, Shawn N. Murphy and Hossein Estiri, 8 November 2024, Med. DOI: 10.1016/j.medj.2024.10.009 www.cell.com/med/fulltext/S2666-6340(24)00407-0

#long covid#covid is airborne#mask up#public health#pandemic#covid#wear a respirator#wear a mask#covid 19#coronavirus#covid is not over#covid conscious#still coviding#sars cov 2

79 notes

Text

The cryptocurrency hype of the past few years already started to introduce people to these problems. Despite producing little to no tangible benefits — unless you count letting rich people make money off speculation and scams — Bitcoin consumed more energy and computer parts than medium-sized countries and crypto miners were so voracious in their energy needs that they turned shuttered coal plants back on to process crypto transactions. Even after the crypto crash, Bitcoin still used more energy in 2023 than the previous year, but some miners found a new opportunity: powering the generative AI boom. The AI tools being pushed by OpenAI, Google, and their peers are far more energy intensive than the products they aim to displace. In the days after ChatGPT’s release in late 2022, Sam Altman called its computing costs “eye-watering” and several months later Alphabet chairman John Hennessy told Reuters that getting a response from Google’s chatbot would “likely cost 10 times more” than using its traditional search tools. Instead of reassessing their plans, major tech companies are doubling down and planning a massive expansion of the computing infrastructure available to them.

[...]

As the cloud took over, more computation fell into the hands of a few dominant tech companies and they made the move to what are called “hyperscale” data centers. Those facilities are usually over 10,000 square feet and hold more than 5,000 servers, but those being built today are often many times larger than that. For example, Amazon says its data centers can have up to 50,000 servers each, while Microsoft has a campus of 20 data centers in Quincy, Washington with almost half a million servers between them. By the end of 2020, Amazon, Microsoft, and Google controlled half of the 597 hyperscale data centres in the world, but what’s even more concerning is how rapidly that number is increasing. By mid-2023, the number of hyperscale data centres stood at 926 and Synergy Research estimates another 427 will be built in the coming years to keep up with the expansion of resource-intensive AI tools and other demands for increased computation.

All those data centers come with an increasingly significant resource footprint. A recent report from the International Energy Agency (IEA) estimates that the global energy demand of data centers, AI, and crypto could more than double by 2026, increasing from 460 TWh in 2022 to up to 1,050 TWh — similar to the energy consumption of Japan. Meanwhile, in the United States, data center energy use could triple from 130 TWh in 2022 — about 2.5% of the country’s total — to 390 TWh by the end of the decade, accounting for a 7.5% share of total energy, according to Boston Consulting Group. That’s nothing compared to Ireland, where the IEA estimates data centers, AI, and crypto could consume a third of all power in 2026, up from 17% in 2022.

Water use is going up too: Google reported it used 5.2 billion gallons of water in its data centers in 2022, a jump of 20% from the previous year, while Microsoft used 1.7 billion gallons in its data centers, an increase of 34% on 2021. University of California, Riverside researcher Shaolei Ren told Fortune, “It’s fair to say the majority of the growth is due to AI.” But these are not just large abstract numbers; they have real material consequences that a lot of communities are getting fed up with just as the companies seek to massively expand their data center footprints.
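For readers who want to check the "more than double" and "triple" claims, here is a minimal sketch of the arithmetic. It uses only the figures quoted in the excerpt; the helper names `growth_factor` and `value_before_increase` are made up for this example, and the prior-year water figures are back-calculated estimates, not numbers from the source.

```python
def growth_factor(start, end):
    """How many times larger `end` is than `start`."""
    return end / start


def value_before_increase(current, pct_increase):
    """Back out the earlier value from a current value and a percentage rise."""
    return current / (1 + pct_increase / 100)


# Energy demand figures quoted above, in TWh.
global_twh = growth_factor(460, 1050)   # IEA, 2022 to 2026 (upper estimate)
us_twh = growth_factor(130, 390)        # BCG, 2022 to end of decade

# Water use quoted above, in billions of gallons for 2022.
google_2021 = value_before_increase(5.2, 20)
microsoft_2021 = value_before_increase(1.7, 34)

print(f"Global demand grows by a factor of {global_twh:.2f}")
print(f"US data center demand grows by a factor of {us_twh:.2f}")
print(f"Implied Google water use in 2021: {google_2021:.2f} billion gallons")
print(f"Implied Microsoft water use in 2021: {microsoft_2021:.2f} billion gallons")
```

The global figure works out to a bit under 2.3 times the 2022 level, and the US figure to exactly three times, which matches the wording in the excerpt.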

9 February 2024

#ai#artificial intelligence#energy#big data#silicon valley#climate change#destroy your local AI data centre

75 notes

Text

How AI energy use compares to a Google search

According to estimates from the International Energy Agency (IEA), a single ChatGPT request requires nearly 10 times the electricity of a typical Google search at 2.9 watt-hours vs. 0.3 watt-hours, respectively. If ChatGPT were used for all 9 billion daily searches, that would require nearly 10 terawatt-hours of additional electricity per year, the equivalent usage of 1.5 million European Union residents.
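The per-query comparison can be reproduced with a quick back-of-the-envelope calculation. The sketch below takes 2.9 watt-hours per ChatGPT request and 9 billion searches per day from the text, and assumes 0.3 watt-hours per Google search, which is the value that yields the "nearly 10 times" ratio. The function name is invented for this example.

```python
CHATGPT_WH = 2.9        # watt-hours per ChatGPT request (quoted above)
SEARCH_WH = 0.3         # watt-hours per Google search (assumed, see lead-in)
DAILY_SEARCHES = 9e9    # searches per day (quoted above)


def extra_twh_per_year(ai_wh, search_wh, searches_per_day, days=365):
    """Additional electricity per year if every search became an AI request."""
    extra_wh = (ai_wh - search_wh) * searches_per_day * days
    return extra_wh / 1e12  # 1 TWh = 1e12 Wh


ratio = CHATGPT_WH / SEARCH_WH
extra = extra_twh_per_year(CHATGPT_WH, SEARCH_WH, DAILY_SEARCHES)

print(f"ChatGPT request vs. search: {ratio:.1f}x")
print(f"Extra electricity per year: {extra:.1f} TWh")
```

Under these inputs the ratio comes out just under 10 and the extra demand at roughly 8.5 TWh per year, in the same range as the "nearly 10 terawatt-hours" figure, which is presumably rounded.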

AI's environmental impact comes in large part from the power and water demands of running data centers. The IEA expects global AI electricity consumption to be ten times in 2026 what it was in 2023, and the water requirements by 2027 could be more than the entire annual usage of all of Denmark.

10 notes

Text

Editorial from the English edition of the Hankyoreh

archive link

plain text

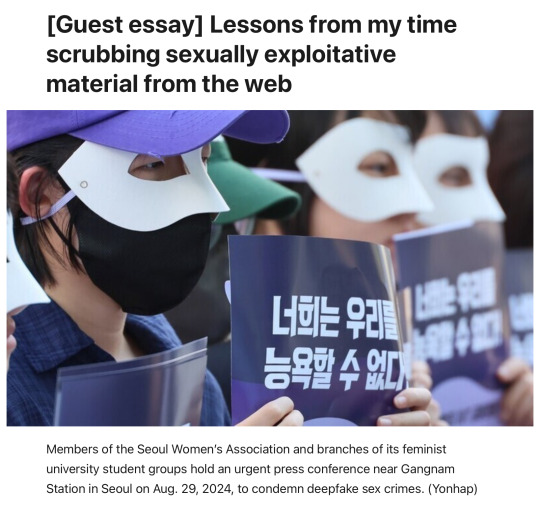

Before focusing on my master’s thesis, I worked for a brief period for an organization supporting victims of cyber sex crimes. My job was to put each and every case of illegally filmed footage assigned to me through a search engine and if I found that the images had been uploaded to certain sites, I would beg the operator of that site to take those images down.

The process of searching and subsequently scrubbing illegally filmed footage probably isn’t what most people would expect. There is no advanced AI that categorizes footage based on the faces of victims, lists the sites the footage is uploaded to, and sends automated requests for the deletion of such posts. Instead, employees and volunteers divide each reported video frame by frame, put each fragmented frame through search engines, organize each site the footage is posted to on a spreadsheet, collate the sites for verification, find the site operator’s email or contact channel, and write a message that reads, “This video is illegally filmed footage so we ask that you remove it from your site. If it is not removed, the South Korean government may take action against the site pursuant to relevant laws,” translate it from Korean into English and then send it to the operator.

This happened daily. Sometimes, you would have to go through 100 or 200 cases a day. I’ve assigned a nickname to this procedure: “The Illegally Filmed Footage Removal Protocol that Seems Absurdly Advanced, But Basically Follows the Same Grueling Procedures as a Sweatshop.”

The biggest challenge when fighting digital sex crimes is their overwhelming breadth and scale. It was impossible for us, as we sat in front of our computer screens, to estimate which far-out depths of the internet any of the footage had reached.

Even if we painstakingly found 20 posts using a particular photo and saw that every post was erased, the next day we would see the photo spring up in 40 different posts. Websites distributing illegally filmed footage make various backup sites with different domains to make sure that sites are up and running even if the main site is taken down.

Many of the sites in question have servers based abroad, so even if you sent a beseeching email citing South Korean laws, they simply could just pretend that they never saw the email.

The terror of that vast scope goes beyond distribution and duplication. While deepfake pornography has been in the news recently, photoshopping a real person’s face onto a pornographic image has been a crime for some time now.

The difference is that producing such images used to be time-consuming and technically challenging, requiring the “manual” manipulation of images. But the new tool of AI has made it easy for anyone to instantaneously create deepfakes in a dizzying variety of formats.

Another frightening aspect is that anyone with access to photographs on social media can choose victims at random. And since the images are “fakes” created by AI, the perpetrator can deliberately duck the guilt of harming a real person.

Deepfake creation has spread so rapidly because it gives perpetrators a perverse sense of power over their victims — the ability to create dozens of humiliating images of someone from photographs scraped off Instagram — while also enabling them to ignore victims’ suffering because the images aren’t technically “real.” In short, deepfakes represent a game-changing acceleration of the production cycle of sexually exploitative media.

Those images spread far too fast for the handful of employees at nonprofits to keep up with. Facing such a vast challenge, permanent employees began to drift away, and their positions were once again filled by people on short-term contracts.

Lee Jun-seok, a lawmaker with the Reform Party, said during a meeting of the National Assembly’s Science, ICT, Broadcasting and Communications Committee that the 220,000 members of a deepfake channel on Telegram were an “overblown threat” and estimated that, given the percentage of Korean users on Telegram, only about 726 of the channel members are actually Koreans.

But what does it matter whether there are 220,000 Koreans on the channel or just 726?

Let’s suppose there aren’t even 726, but just 10 people in the group — they could still produce 220,000 deepfakes if they set their mind to it. Those images would then be copied and circulated beyond their point of origin and around the world, perhaps remaining permanently in some dark corners of the Internet without ever being deleted.

That’s the nature of sex crimes in the digital age.

So assuming that the criminal potential of this technology remains the same regardless of whether the channel has 220,000 members, 726 members or even just 10, I can’t help wondering what Lee thinks would be an acceptable number of deepfake purveyors that would not constitute an “overblown threat.”

38 notes

Text

Amazon’s Alexa has been claiming the 2020 election was stolen

The popular voice assistant says the 2020 race was stolen, even as parent company Amazon promotes the tool as a reliable election news source -- foreshadowing a new information battleground

This is a scary WaPo article by Cat Zakrzewski about how big tech is allowing AI to get information from dubious sources. Consequently, it is contributing to the lies and disinformation that exist in today's political climate.

Even the normally banal but ubiquitous (and not yet AI supercharged) Alexa is prone to pick up and recite political disinformation. Here are some excerpts from the article [color emphasis added]:

Amid concerns the rise of artificial intelligence will supercharge the spread of misinformation comes a wild fabrication from a more prosaic source: Amazon’s Alexa, which declared that the 2020 presidential election was stolen. Asked about fraud in the race — in which President Biden defeated former president Donald Trump with 306 electoral college votes — the popular voice assistant said it was “stolen by a massive amount of election fraud,” citing Rumble, a video-streaming service favored by conservatives.

The 2020 races were “notorious for many incidents of irregularities and indications pointing to electoral fraud taking place in major metro centers,” according to Alexa, referencing Substack, a subscription newsletter service. Alexa contended that Trump won Pennsylvania, citing “an Alexa answers contributor.”

Multiple investigations into the 2020 election have revealed no evidence of fraud, and Trump faces federal criminal charges connected to his efforts to overturn the election. Yet Alexa disseminates misinformation about the race, even as parent company Amazon promotes the tool as a reliable election news source to more than 70 million estimated users. [...] Developers “often think that they have to give a balanced viewpoint and they do this by alternating between pulling sources from right and left, thinking this is going to give balance,” [Prof. Meredith] Broussard said. “The most popular sources on the left and right vary dramatically in quality.” Such attempts can be fraught. Earlier this week, the media company the Messenger announced a new partnership with AI company Seekr to “eliminate bias” in the news. Yet Seekr’s website characterizes some articles from the pro-Trump news network One America News as “center” and as having “very high” reliability. Meanwhile, several articles from the Associated Press were rated “very low.” [...] Yet despite a growing clamor in Congress to respond to the threat AI poses to elections, much of the attention has fixated on deepfakes. However, [attorney Jacob] Glick warned Alexa and AI-powered systems could “potentially double down on the damage that’s been done.” “If you have AI models drawing from an internet that is filled with platforms that don’t care about the preservation of democracy … you’re going to get information that includes really dangerous undercurrents,” he said. [color emphasis added]

#alexa#ai is spreading political misinformation#2020 election lies#the washington post#cat zakrzewski#audio

167 notes

Text

As digital scamming explodes in Southeast Asia, including so-called “pig butchering” investment scams, the United Nations Office on Drugs and Crime (UNODC) issued a comprehensive report this week with a dire warning about the rapid growth of this criminal ecosystem. Many digital scams have traditionally relied on social engineering, or tricking victims into giving away their money willingly, rather than leaning on malware or other highly technical methods. But researchers have increasingly sounded the alarm that scammers are incorporating generative AI content and deepfakes to expand the scale and effectiveness of their operations. And the UN report offers the clearest evidence yet that these high-tech tools are turning an already urgent situation into a crisis.

In addition to buying written scripts to use with potential victims or relying on templates for malicious websites, attackers have increasingly been leaning on generative AI platforms to create communication content in multiple languages and deepfake generators that can create photos or even video of nonexistent people to show victims and enhance verisimilitude. Scammers have also been expanding their use of tools that can drain a victim’s cryptocurrency wallets, have been manipulating transaction records to trick targets into sending cryptocurrency to the wrong places, and are compromising smart contracts to steal cryptocurrency. And in some cases, they’ve been purchasing Elon Musk’s Starlink satellite internet systems to help power their efforts.

“Agile criminal networks are integrating these new technologies faster than anticipated, driven by new online marketplaces and service providers which have supercharged the illicit service economy,” John Wojcik, a UNODC regional analyst, tells WIRED. “These developments have not only expanded the scope and efficiency of cyber-enabled fraud and cybercrime, but they have also lowered the barriers to entry for criminal networks that previously lacked the technical skills to exploit more sophisticated and profitable methods.”

For years, China-linked criminals have trafficked people into gigantic compounds in Southeast Asia, where they are often forced to run scams, held against their will, and beaten if they refuse instructions. Around 200,000 people, from at least 60 countries, have been trafficked to compounds largely in Myanmar, Cambodia, and Laos over the last five years. However, as WIRED reporting has shown, these operations are spreading globally—with scamming infrastructure emerging in the Middle East, Eastern Europe, Latin America, and West Africa.

Most prominently, these organized crime operations have run pig butchering scams, where they build intimate relationships with victims before introducing an “investment opportunity” and asking for money. Criminal organizations may have conned people out of around $75 billion through pig butchering scams. Aside from pig butchering, according to the UN report, criminals across Southeast Asia are also running job scams, law enforcement impersonation, asset recovery scams, virtual kidnappings, sextortion, loan scams, business email compromise, and other illicit schemes. Criminal networks in the region earned up to $37 billion last year, UN officials estimate. Perhaps unsurprisingly, all of this revenue is allowing scammers to expand their operations and diversify, incorporating new infrastructure and technology into their systems in the hope of making them more efficient and brutally effective.

For example, scammers are often constrained by their language skills and ability to keep up conversations with potentially hundreds of victims at a time in numerous languages and dialects. However, generative AI developments within the last two years—including the launch of writing tools such as ChatGPT—are making it easier for criminals to break down language barriers and create the content needed for scamming.

The UN’s report says AI can be used for automating phishing attacks that ensnare victims, the creation of fake identities and online profiles, and the crafting of personalized scripts to trick victims while messaging them in different languages. “These developments have not only expanded the scope and efficiency of cyber-enabled fraud and cybercrime, but they have also lowered the barriers to entry for criminal networks that previously lacked the technical skills to exploit sophisticated and profitable methods,” the report says.

Stephanie Baroud, a criminal intelligence analyst in Interpol’s human trafficking unit, says the impact of AI needs to be considered as part of a pig butchering scammer’s tactics going forward. Baroud, who spoke with WIRED in an interview before the publication of the UN report, says the criminals’ recruitment ads that lure people into being trafficked to scamming compounds used to be “very generic” and full of grammatical errors. However, AI is now making them appear more polished and compelling, Baroud says. “It is really making it easier to create a very realistic job offer,” she says. “Unfortunately, this will make it much more difficult to identify which is the real and which is the fake ads.”

Perhaps the biggest AI paradigm shift in such digital attacks comes from deepfakes. Scammers are increasingly using machine-learning systems to allow for real-time face-swapping. This technology, which has also been used by romance scammers in West Africa, allows criminals to change their appearance on calls with their victims, making them realistically appear to be a different person. The technology is allowing “one-click” face swaps and high-resolution video feeds, the UN’s report states. Such services are a game changer for scammers, because they allow attackers to “prove” to victims in photos or real-time video calls that they are who they claim to be.

Using these setups, however, can require stable internet connections, which can be harder to maintain within some regions where pig butchering compounds and other scamming have flourished. There has been a “notable” increase in cops seizing Starlink satellite dishes in recent months in Southeast Asia, the UN says—80 units were seized between April and June this year. In one such operation carried out in June, Thai police confiscated 58 Starlink devices. In another instance, law enforcement seized 10 Starlink devices and 4,998 preregistered SIM cards while criminals were in the process of moving their operations from Myanmar to Laos. Starlink did not immediately respond to WIRED’s request for comment.

“Obviously using real people has been working for them very well, but using the tech could be cheaper after they have the required computers” and connectivity, says Troy Gochenour, a volunteer with the Global Anti-Scam Organization (GASO), a US-based nonprofit that fights human-trafficking and cybercrime operations in Southeast Asia.

Gochenour’s research involves tracking trends on Chinese-language Telegram channels related to carrying out pig butchering scams. And he says that it is increasingly common to see people applying to be AI models for scam content.

In addition to AI services, attackers have increasingly leaned on other technical solutions as well. One tool that has been increasingly common in digital scamming is so-called “crypto drainers,” a type of malware that has particularly been deployed against victims in Southeast Asia. Drainers can be more or less technically sophisticated, but their common goal is to “drain” funds from a target’s cryptocurrency wallets and redirect the currency to wallets controlled by attackers. Rather than stealing the credentials to access the target wallet directly, drainers are typically designed to look like a legitimate service—either by impersonating an actual platform or creating a plausible brand. Once a victim has been tricked into connecting their wallet to the drainer, they are then manipulated into approving one or a few transactions that grant attackers unintended access to all the funds in the wallet.

Drainers can be used in many contexts and with many fronts. They can be a component of pig butchering investment scams, or promoted to potential victims through compromised social media accounts, phishing campaigns, and malvertising. Researchers from the firm ScamSniffer, for example, published findings in December about sponsored social media and search engine ads linked to malicious websites that contained a cryptocurrency drainer. The campaign, which ran from March to December 2023, reportedly stole about $59 million from more than 63,000 victims around the world.

Far from the low-tech days of doing everything through social engineering by building a rapport with potential victims and crafting tricky emails and text messages, today’s scammers are taking a hybrid approach to make their operations as efficient and lucrative as possible, UN researchers say. And even if they aren’t developing sophisticated malware themselves in most cases, scammers are increasingly in the market to use these malicious tools, prompting malware authors to adapt or create hacking tools for scams like pig butchering.

Researchers say that scammers have been seen using infostealers and even remote access trojans that essentially create a backdoor in a victim’s system that can be utilized in other types of attacks. And scammers are also expanding their use of malicious smart contracts that appear to programmatically establish a certain agreed-upon transaction or set of transactions, but actually do much more. “Infostealer logs and underground data markets have also been critical to ongoing market expansion, with access to unprecedented amounts of sensitive data serving as a major catalyst,” Wojcik, from the UNODC, says.

The changing tactics are significant as global law enforcement scrambles to deter digital scamming. But they are just one piece of the larger picture, which is increasingly urgent and bleak for forced laborers and victims of these crimes.

“It is now increasingly clear that a potentially irreversible displacement and spillover has taken place in which organized crime are able to pick, choose, and move value and jurisdictions as needed, with the resulting situation rapidly outpacing the capacity of governments to contain it,” UN officials wrote in the report. “Failure to address this ecosystem will have consequences for Southeast Asia and other regions.”


Note

genuinely curious but I don't know how to phrase this in a way that sounds less accusatory so please know I'm asking in good faith and am just bad at words

what are your thoughts on the environmental impact of generative ai? do you think the cost for all the cooling systems is worth the tasks generative ai performs? I've been wrangling with this because while I feel like I can justify it at smaller scales, that would mean it isn't a publicly available tool which I also feel uncomfortable with

the environmental impacts of genAI are almost always presented as one of three things, by both its detractors and its boosters:

vastly overstated

stated correctly, but with a deceptive lack of context (ie, giving numbers in watt-hours, or amount of water 'used' for cooling, without necessary context like what comparable services use or what actually happens to that water)

assumed to be on track to grow constantly as genAI sees universal adoption across every industry

like, when water is used to cool a datacenter, that datacenter isn't just "a big building running chatgpt" -- datacenters are the backbone of the modern internet. now, i mean, all that said, the basic question here: no, i don't think it's a good tradeoff to be burning fossil fuels to power the magic 8ball. but asking that question in a vacuum (imo) elides a lot of the realities of power consumption in the global north by exceptionalizing genAI as opposed to, for example, video streaming, or online games. or, for that matter, for any number of other things.

so to me a lot of this stuff seems like very selective outrage in most cases, people working backwards from all the twitter artists on their dashboard hating midjourney to find an ethical reason why it is irredeemably evil.

& in the best, good-faith cases, it's taking at face value the claims of genAI companies and datacenter owners that the power usage will continue spiralling as the technology is integrated into every aspect of our lives. but to be blunt, i think it's a little naive to take these estimates seriously: these companies rely on their stock prices remaining high and attractive to investors, so they have enormous financial incentives not only to lie but to make financial decisions as if the universal adoption boom is just around the corner at all times. but there's no actual business plan! these companies are burning gigantic piles of money every day, because this is a bubble

so tldr: i don't think most things fossil fuels are burned for are 'worth it', but the response to that is a comprehensive climate politics and not an individualistic 'carbon footprint' approach, certainly not one that chooses chatgpt as its battleground. genAI uses a lot of power but at a rate currently comparable to other massively popular digital leisure products like fortnite or netflix -- forecasts of it massively increasing by several orders of magnitude are in my opinion unfounded and can mostly be traced back to people who have a direct financial stake in this being the case because their business model is an obvious boondoggle otherwise.


Text

Why no one feels threatened by a coffee machine, but everyone panics over ChatGPT

or how lack of critical thinking and self-worth will break you long before any AI does

Let’s talk about a strange little paradox.

No one has an existential crisis because a coffee machine makes better cappuccino than they do. No one spirals when an elevator takes them to the 14th floor faster than their legs could. No one loses sleep over a calculator doing math better.

But introduce AI into the creative process — and suddenly:

“What if it writes better than me?” “What if my ideas aren’t that unique?” “What if I’m no longer needed?”

Hold up.

If you don’t have critical thinking or a stable sense of self — anything can shatter you. Instagram can. A magazine cover can. A successful friend can. Or even your own reflection on a bad day.

AI is not the enemy. Your relationship with comparison is.

Comparison isn’t the problem. The way you use it is.

Useful comparison:

comparing yourself to your past self — growth.

comparing yourself to your process — grounding.

Toxic comparison:

comparing yourself to someone else’s highlight reel — poison.

comparing yourself to an algorithm — nonsense.

AI is not a person. It doesn’t mean anything. It doesn’t feel anything. It’s not better than you. It’s not worse. It’s just not you.

Comparing yourself to a language model is like hating yourself because your bathroom scale is better at estimating weight than your eyes. Yes. It is. Because it was built for that. So what?

If you feel broken by AI, ask yourself: what was already shaky?

When people say “AI shattered my self-confidence”, I hear: