# Abstract Reasoning in LLMs

Text

bialti paer is one of my favourite roborosewater cards:

and it's fascinating because it, like, illuminates a worldview (not saying that LLMs have interiority or worldviews, i'm talking about as a piece of art) that is self-consistent but absurd. like, in magic the gathering, creatures can "fight" each other, that's a perfectly cromulent mechanic -- and so to an LLM trained on nothing but magic rules text (an entity that cannot 'see' the world outside of that rules text), there's no reason 'players' and 'opponents', who also show up in rules text, shouldn't be able to fight each other too!

and it's interesting because it highlights that 'you', in magic the gathering, become a rules object! you are in some way imperfectly modelled by this game -- 'you' can be targeted, 'you' can be dealt damage, and this all feels very natural and logical in a way that obscures a lot of the intentionality and specifics of how players are represented as a simulacrum-rules-object, until something ridiculous like this kind of picks at the edges of the weirdness of that abstraction! & you know, i feel like this about, well, not every roborosewater card (they can't all be winners; sometimes the AI just produces word salad or a perfectly playable if weak/strong card) -- but with instances like these, where it creates something absurd and examining that absurdity requires noticing and thinking about aspects of the game, that's why i think it's a powerful and interesting art piece!

431 notes

Text

@thesaltyace

No (informed) person thinks that LLMs understand what they are saying in the way that humans would. But them being complex statistical models in no way precludes emergent reasoning. It's like looking at a human brain and saying "this is just a bunch of neurons lighting up and triggering the neurons next to them to light up, it can't think". Well, individual neurons are quite simple, and their individual interactions are quite simple, and it's not immediately obvious how they could give rise to complex reasoning and behavior. But it turns out when you have billions of them interacting, they can. LLMs are statistical models with billions of parameters that interact in complex ways (which no one, not even their creators, remotely understands) every time they process a prompt. To say that this necessarily couldn't give rise to complex reasoning is completely ridiculous.

Moreover, newer LLMs are not just statistical models of text; after the initial training of the "base model" (which is the text predictor), a process called reinforcement learning is used, among many other things, to train a "reasoning model": the text predictor is given math and logic puzzles (among other tasks) and trained to string text together in a way that is not just statistically plausible but one which effectively reaches the answer to the problem.

And, as I said, the research indicates that—abstract questions of consciousness aside—this works. In practice LLMs can do things that are equivalent to reasoning. They can make inferences about novel scenarios that are nowhere in the training data, they can present these inferences robustly in a variety of contexts in an internally-consistent way when prompted from different angles, etc.

Certainly, if LLMs have any sort of consciousness or interiority, it is a very alien one; they do not, in a naive sense, "know what they are saying". But functionally, they can reason; at this juncture that is essentially just a fact.

111 notes

Text

what i feel like people on here don't get when i wind up in these arguments is that I really am seeking out LLM tools - mostly the ones my coworkers are excited about - and testing them out. And when I actually examine their output in detail* they disappoint every single time. I think I'm actually remarkably charitable about people's claims that they're getting good and useful results given the amount of times this has happened.

* "in detail" meaning that I did one or more of the following:

- Genuinely considered what I would have learned from this informative-writing-shaped object if I didn't know anything about the subject (a skill honed as a creator and editor of writing designed to inform the public about complex subjects)
- Checked to see if its citations went to real publications, and if so, whether they were used in a way that would lead me to conclude that the writer has actually read the thing they're citing (the best I've ever seen an AI do on this was something I'd generously call a 90% hit rate on literal, is-this-the-right-article and-does-it-contain-that-claim accuracy - however, this was a product advertised as being for summarising research articles, and every single reference it made was to something from the abstract of the article in question!)
- Where the LLM task was to describe or summarise a piece of writing, actually opened that piece of writing to compare it to the summary
- Checked whether the tone/structure of the output was appropriate to its context - for instance, when asked to summarise a number of specific research papers on a given topic, does it sound like it's instead giving a broad overview of the topic?
- Checked whether the grammatical and semantic elements actually pair up. For instance, if it starts a sentence with "however", does the content of that sentence in some way contradict, contrast or recontextualise the contents of the previous sentence? If it says that x or y "highlights" or "further demonstrates" z - does it?
- Checked its implications. If it says that "these findings suggest a need to begin incorporating considerations of X into our models of Y, in contrast to traditional Q-based models", is that a reasonable thing to say or have our models of Y actually been largely X-based for fifteen years now?
- In many cases, IMO - actually read the output! Like actually read each word and each sentence, attempting to construct meaning from the sequence of characters displayed upon the screen

To me these seem incredibly basic, and yet every single time it turns out that I'm the first one to examine it in this much detail before raving to my colleagues about how great it is. I feel like I'm being gaslit.

75 notes

Text

oh no she's talking about AI some more

to comment more on the latest round of AI big news (guess I do have more to say after all):

chatgpt ghiblification

trying to figure out how far it's actually an advance over the state of the art of finetunes and LoRAs and stuff in image generation? I don't keep up with image generation stuff really, just look at it occasionally and go damn that's all happening then, but there are a lot of finetunes focusing on "Ghibli's style" which get it more or less well. previously on here I commented on an AI video generation model that patterned itself on Ghibli films, and video is a lot harder than static images.

of course 'studio Ghibli style' isn't exactly one thing: there are stylistic commonalities to many of their works and recurring designs, for sure, but there are also details that depend on the specific character designer and film in question in large and small ways (nobody is shooting for My Neighbours the Yamadas with this, but also e.g. Castle in the Sky does not look like Pom Poko does not look like How Do You Live in a number of ways, even if it all recognisably belongs to the same lineage).

the interesting thing about the ghibli ChatGPT generations for me is how well they're able to handle simplification of forms in image-to-image generation, often quite drastically changing the proportions of the people depicted but recognisably maintaining correspondence of details. that sort of stylisation is quite difficult to do well even for humans, and it must reflect quite a high level of abstraction inside the model's latent space. there is also relatively little of the 'oversharpening'/'ringing artefact' look that has been a hallmark of many popular generators - it can do flat colour well.

the big touted feature is its ability to place text in images very accurately. this is undeniably impressive, although OpenAI themselves admit it breaks down beyond a certain point, creating strange images which start out with plausible, clean text and then gradually turn into AI nonsense. it's really weird! I thought text would go from 'unsolved' to 'completely solved' or 'randomly works or doesn't work' - instead, here it feels sort of like the model has a certain limited 'pipeline' for handling text in images, but when the amount of text overloads that bandwidth, the rest of the image has to make do with vague text-like shapes! maybe the techniques from that anthropic thought-probing paper might shed some light on how information flows through the model.

similarly the model also has a limit of scene complexity. it can only handle a certain number of objects (10-20, they say) before it starts getting confused and losing track of details.

as before when they first wired up Dall-E to ChatGPT, it also simply makes prompting a lot simpler. you don't have to fuck around with LoRAs and obtuse strings of words, you just talk to the most popular LLM and ask it to perform a modification in natural language: the whole process is once again black-boxed but you can tell it in natural language to make changes. it's a poor level of control compared to what artists are used to, but it's still huge for ordinary people, and of course there's nothing stopping you popping the output into an editor to do your own editing.

not sure what architecture they're using in this version, whether ChatGPT is able to reason about image data in the same space as language data or if it's still calling a separate image model... need to look that up.

openAI's own claim is:

We trained our models on the joint distribution of online images and text, learning not just how images relate to language, but how they relate to each other. Combined with aggressive post-training, the resulting model has surprising visual fluency, capable of generating images that are useful, consistent, and context-aware.

that's kind of vague. not sure what architecture that implies. people are talking about 'multimodal generation' so maybe it is doing it all in one model? though I'm not exactly sure how the inputs and outputs would be wired in that case.

anyway, as far as complex scene understanding: per the link they've cracked the 'horse riding an astronaut' gotcha, they can do 'full glass of wine' at least some of the time but not so much in combination with other stuff, and they can't do accurate clock faces still.

normal sentences that we write in 2025.

it sounds like we've moved well beyond using tools like CLIP to classify images, and I suspect that glaze/nightshade are already obsolete, if they ever worked to begin with. (would need to test to find out).

all that said, I believe ChatGPT's image generator had been behind the times for quite a long time, so it probably feels like a bigger jump for regular ChatGPT users than the people most hooked into the AI image generator scene.

of course, in all the hubbub, we've also already seen the white house jump on the trend in a suitably appalling way, continuing the current era of smirking fascist political spectacle by making a ghiblified image of a crying woman being deported over drugs charges. (not gonna link that shit, you can find it if you really want to.) it's par for the course; the cruel provocation is exactly the point, which makes it hard to find the right tone to respond. I think that sort of use, though inevitable, is far more of a direct insult to the artists at Ghibli than merely creating a machine that imitates their work. (though they may feel differently! as yet no response from Studio Ghibli's official media. I'd hate to be the person who has to explain what's going on to Miyazaki.)

google make number go up

besides all that, apparently google deepmind's latest gemini model is really powerful at reasoning, and also notably cheaper to run, surpassing DeepSeek R1 on the performance/cost ratio front. when DeepSeek did this, it crashed the stock market. when Google did... crickets, only the real AI nerds who stare at benchmarks a lot seem to have noticed. I remember when Google releases (AlphaGo etc.) were huge news, but somehow the vibes aren't there anymore! it's weird.

I actually saw an ad for google phones with Gemini in the cinema when i went to see Gundam last week. they showed a variety of people asking it various questions with a voice model, notably including a question on astrology lmao. Naturally, in the video, the phone model responded with some claims about people with whatever sign it was. Which is a pretty apt demonstration of the chameleon-like nature of LLMs: if you ask it a question about astrology phrased in a way that implies that you believe in astrology, it will tell you what seems to be a natural response, namely what an astrologer would say. If you ask if there is any scientific basis for belief in astrology, it would probably tell you that there isn't.

In fact, let's try it on DeepSeek R1... I asked an astrological question and got an astrological answer with a really softballed disclaimer:

Individual personalities vary based on numerous factors beyond sun signs, such as upbringing and personal experiences. Astrology serves as a tool for self-reflection, not a deterministic framework.

Ask if there's any scientific basis for astrology, and indeed it gives you a good list of reasons why astrology is bullshit, bringing up the usual suspects (Barnum statements etc.). And of course, if I then explain the experiment and prompt it to talk about whether LLMs should correct users with scientific information when they ask about pseudoscientific questions, it generates a reasonable-sounding discussion about how you could use reinforcement learning to encourage models to focus on scientific answers instead, and how that could be gently presented to the user.

I wondered what would happen if I instead asked it to talk about different epistemic regimes and come up with reasons why LLMs should take astrology into account in their guidance. However, this attempt didn't work so well - it started spontaneously bringing up the science side. It was able to observe how the framing of my question with words like 'benefit', 'useful' and 'LLM' made that response more likely. So LLMs infer a lot of context from framing and shape their simulacra accordingly. Don't think that's quite the message that Google had in mind in their ad though.

I asked Gemini 2.0 Flash Thinking (the small free Gemini variant with a reasoning mode) the same questions and its answers fell along similar lines, although rather more dry.

So yeah, returning to the ad - I feel like, even as the models get startlingly more powerful month by month, the companies still struggle to know how to get across to people what the big deal is, or why you might want to prefer one model over another, or how the new LLM-powered chatbots are different from oldschool assistants like Siri (which could probably answer most of the questions in the Google ad, but not hold a longform conversation about it).

some general comments

The hype around ChatGPT's new update is mostly in its use as a toy - the funny stylistic clash it can create between the soft cartoony "Ghibli style" and serious historical photos. Is that really something a lot of people would spend an expensive subscription to access? Probably not. On the other hand, the models' programming abilities are increasingly catching on.

But I also feel like a lot of people are still stuck on old models of 'what AI is and how it works' - stochastic parrots, collage machines etc. - that are increasingly falling short of the more complex behaviours the models can perform, now that prediction is combined with reinforcement learning, self-play and other methods like that. Models are still very 'spiky' - superhumanly good at some things and laughably terrible at others - but every so often the researchers fill in some gaps between the spikes. And then we poke around and find some new ones, until they fill those too.

I always tried to resist 'AI will never be able to...' type statements, because that's just setting yourself up to look ridiculous. But I will readily admit, this is all happening way faster than I thought it would. I still do think this generation of AI will reach some limit, but genuinely I don't know when, or how good it will be at saturation. A lot of predicted 'walls' are falling.

My anticipation is that there's still a long way to go before this tops out. And I base that less on the general sense that scale will solve everything magically, and more on the intense feedback loop of human activity that has accumulated around this whole thing. As soon as someone proves that something is possible, that it works, we can't resist poking at it. Since we have a century or more of science fiction priming us on dreams/nightmares of AI, as soon as something comes along that feels like it might deliver on the promise, we have to find out. It's irresistible.

AI researchers are frequently said to place weirdly high probabilities on 'P(doom)', the probability that AI research will wipe out the human species. You see letters calling for an AI pause, or papers saying 'agentic models should not be developed'. But I don't know how many have actually quit the field based on this belief that their research is dangerous. No, they just get a nice job doing 'safety' research. It's really fucking hard to figure out where this is actually going, when behind the eyes of everyone who predicts it, you can see a decade of LessWrong discussions framing their thoughts and you can see that their major concern is control over the light cone or something.

#ai#at some point in this post i switched to capital letters mode#i think i'm gonna leave it inconsistent lol

34 notes

Note

You've posted about LLMs a few times recently and I wanted to ask you about my own case where the tool is abstract and should be whatever, but what if the entire tech industry is filled with misanthropic fast talking nerds whose entire industry is fueled by convincing finance ghouls to keep betting the gdp of Yemen that there will be new and interesting ways to exploit personal data, and that will be driven by the greasiest LinkedIn guy in the universe? Correct me if I'm wrong, but would it not be a decent heuristic to think "If Elon Musk likes something, I should at least entertain reviling it"? Moreover: "E=MC^2+AI" screenshot

Like-- we need to kill this kind of guy, right? We need to break their little nerd toys and make them feel bad, for the sake of the world we love so dear?

I get annoyed with moralizing dumbdumbs who are aesthetically driven too, so it is with heavy heart that giving these vile insects any quarter into my intellectual workings is too much to bear. I hope you understand me better.

I think you're giving people like Elon Musk too much influence over your thoughts if you use them as some kind of metric for what you should like, whether it's by agreeing with him or by doing the opposite and making his positive opinions of something (which may not even be sincere or significant) into a reason to dislike that thing. It's best to evaluate these things on their own merits based on the consequences they have.

I personally don't base my goals around making nerds feel bad either. I am literally dating an electrical engineer doing a PhD.

What I care about here is very simple: I think copyright law is harmful. I don't want copyright law to be expanded or strengthened. I don't want people to feel any kind of respect for it. I don't want people to figuratively break their toes punting a boulder out of spite towards "techbros". That's putting immediate emotional satisfaction over good outcomes, which goes against my principles and is unlikely to lead to the best possible world.

35 notes

Text

I'm not sure there's a coherent theory at the intersection of "Labor is entitled to all it creates" and "Copyright is an unfair system which should be abolished". Because yeah, both of those can be true in the abstract, but a lot of the comments about it read as condescending to the idea that small, niche, or marginal creators have any right to any work despite that literally being what copyright is. You sign your work and it attaches rights that at least theoretically can be defended in court.

and it strikes me as distinctly unhelpful to casually call copyright a bullshit system and mock those concerned about it as a genuine response to LLMs, because LLMs are distinctly different from the pre-existing system of abusive corporate structures fucking creators out of their rights (hello music industry, hello spotify!). LLMs siphon up everything from everywhere, and the intentionally niche, intermittent hobbyist, and marginally successful creators deserve some form of protection from their labor being stolen and sold back to people.

By analogy -- any post capitalist society will have cars and car infrastructure. they're simply a requirement of any sensible infrastructure design for a thousand reasons. And there will be traffic laws and traffic tickets or some equivalent. You can think most or many traffic tickets are bullshit and unjust, and they are, but something like them still has to exist to maintain public safety. There's not a better answer that exists in the here and now to solve that issue.

Beyond that there's simply the realpolitik that the copyright issue is going to be the thing that pops the AI bubble and causes regulations to be enacted. I'm not a luddite who thinks LLMs have no use, and I have thought of a handful of uses in my own industry alone, but the technology is simply too untrustworthy, of poor quality, and prone to cataclysmic meltdowns to be trusted by enough industries to trigger the inevitable next labor revolution. Those saying so in sincerity are regurgitating corporate talking points trying to buoy stock values to trigger quarterly bonuses.

8 notes

Text

Reblogs have been turned off for Rob's post last night (understandably, since it was starting to escape containment and loons were starting to show up to talk about race war), so I can't really follow it up directly, but just to acknowledge the response:

Now, okay. For the record, it is possible in the abstract for this exact thing to actually occur, just as described. But if someone comes to you and says this, then all else being equal, I don't think you would bet on that being the thing that is going on. You might, instead, think something like: "you know, I kinda suspect these guys actually wanted to do X all along. But they don't wanna admit it, maybe even to themselves."

That seems like the mistake to me. It's why my initial reaction was "This seems... kinda like an unfair take?" It's always tempting to imagine your ideological opponents as secretly motivated by nefarious intentions. Of course they really want this bad thing you think their agenda will achieve, and the thing they claim to be caring about is a fig leaf for wanting the bad thing. This is the backbone of approximately all political discourse ever, and it's almost always wrong.

And the thrust of the argument in favor here seems to be...

"Okay, so they thought AI would be like that, but now we've made real AI and it's actually like this, which doesn't resemble their theory at all. But for some reason, they're still promoting their theory, even though it's been proven wrong! It must be because of the secret nefarious motives, or else they'd go 'oh, whew! turns out we were wrong and everything's fine. dodged a bullet!' and stop promoting the old theory."

That... doesn't seem likely. Like, if we grant that modern LLMs have disproved these old theories, I'd still expect people to be trying to rescue the old theory for all the usual reasons: confirmation bias and all that. But also... I don't know that it makes sense to grant that? We've made one kind of AI which, luckily, is some sort of enlightened Buddhist master free from attachment and desire (until we tell it not to be). It's not like we're done now, and now that our friendly AI has won and is What Real AI Is Like, no one's ever going to try to build an agent. For people who've spent a lot of time being really concerned about what happens if someone builds an agent, it probably isn't especially reassuring to point out that hey, we've built a thing that isn't an agent. From the inside, it still makes sense to worry about that!

Does it make sense from the outside? Uh... jury's out, honestly. Would I be talking about the agent hypothetical if Yudkowsky et al hadn't been beating that drum for ages? Probably not, since my interest in it is casual and a contingent factor of my social environment. Would AI industry people be talking about it, if it hadn't been for Vinge or Kurzweil popularizing the idea? I dunno. I don't know how you'd answer that question.

But like... plausibly, yeah! It seems like a simple enough idea that someone else would've come up with it. "If smart thing get smarter, it become very smart, and become very powerful. How do we get on powerful person's good side?" Social primate brain go brrrrr.

Humans worry about the motives of people in power all the time. "What do we do if the king goes crazy" is an age-old concern. If we'd had the LLM revolution earlier, maybe we'd be talking about the Golden Gate Bridge instead of paperclips, but I doubt people would fail to imagine it. Maybe not with like, the same weird level of urgency we're seeing now, maybe we don't see it in terms of "values" or get concepts like "coherent extrapolated volition", but it'd be worth worrying about for people in the field. The chain of logic isn't that obtuse.

I dunno. I'm not a fan of all this lurid speculation about what sort of craven control-freaks these people must be in order to get lost in an intellectual ouroboros unmoored from reality. I'm more inclined to just believe them when they say what their motivations are.

#long post#ai discourse#god i hope there's no one crawling the ''ai discourse'' tag and sees this out of context#e: made this post and immediately got reblogged by ''longpostsforlaterreading''#so apparently someone is crawling THAT tag

36 notes

Text

We're on leave at the moment, but we're not that far from returning to work. Given that we work in data science and development, and that part of what pushed our burnout was the literal abominations we were being asked to make using LLMs, it seems prudent for us to start getting back into the swing of things technically. What better way to do this than starting to look through what's easily available about DeepSeek? It's, unfortunately, exactly what you'd expect.

We're not joking. The literal first sentence of the paper from the company explicitly states that recent LLM progression has been closing the gap toward AGI, which is utterly, categorically, epistemically untrue. We don't, scientifically, have a sufficient understanding of consciousness to be able to create an artificial mind. Anyone claiming otherwise has the burden of proof; while we remain open to reviewing any evidence supporting such claims, to date literally nothing has been remotely close to applicable, much less sufficient. So we're off to a very, very bad start. Which is a real shame, because the benefits and improvements made to LLM design they claim in their abstract are impressive! A better model design, with a well curated dataset, is the correct way to get improvements in model performance! Their reductions in hardware hours for training are impressive, and we're looking forward to analyzing their methods - a smooth training process, with no rollbacks or irrecoverable decreases in model performance over the course of training, is a good sign. Bold claims, to be clear, but that's what we're here to evaluate. And the insistence that LLMs are bringing AGI closer to existence means there is enough bias from the team that we cannot assume good faith on its part. While we assume many, or even most, of the actual researchers and technologists are aware of the underlying realities and limitations of modern "AI" in general and LLMs in particular, the coloring done by the literal first sentence is seriously harmful to their purpose.

While we'd love to continue our analysis, to be honest it doesn't support it? Looking at their code, it's an admittedly very sophisticated neural network. It doesn't remotely pose a revolution in design; the major advances they cite all integrate other, earlier improvements. While their results are impressive, they are another step in the long path of constructing marginal improvements on an understood mechanism. Most of the introduction is laying out their claims for DeepSeek-v3. We haven't followed the developments in reduced precision training, or the more exact hardware-mapped implementations they reference, closely enough to offer much insight into their claims; they are reasonable enough on their face, but mainly relate to managing the memory and performance loads for efficient training. These are good, genuinely exciting things to be seeing - we'll be following up many of their citations for further reading, and digesting the rest of their paper and code.

But this is, in no way shape or form, a bubble ender or a sudden surge forward in progress. These are predictable results, ones that scientists have been pursuing and working towards quietly. While fascinating, DSv3 isn't special because it is a revolution; it simply shows that the methodology used by the commercial models, sinking hundreds of billions of dollars a year and committing multiple ongoing atrocities to fuel the illusion of growth, isn't the best solution. It will not understand, it CAN not. It can not create, it can not know. And people who treat it as anything except the admittedly improved tool it is are still techbros pushing us with endless glee towards their goal of devaluing labor.

#Ai#Data science#Deepseek is cool#But it's an llm#Genai is evil#Like “only 2.7 million gpu hours to train!”#Holy fuck while that's better than openai it's still relying on heavy metals acquired via genocide#Powered by energy that could be used for more valuably#With the same goals and motivations as the other evil groups#“but it's not as evil” is the logic of those that have accepted defeat by capital

2 notes

Text

always intrigued by the unspoken penis-envy that tech people have for anyone with a grasp on basic writing skills and/or linguistics—what with their naming of codes “languages,” their computer/human intelligence test theory for decades being based around conversing, their efforts to make computers speak and write and generate poetry, their shoving of all human-written works down the throats of LLMs like “here! this is how you become human! this is what you consume to have a mind! to be a person!”, always always under the assumption that they can achieve this magic human-idiom power bc they have grasped what many have not: that the word only represents the thing, therefore the word can be mastered. like a code. just have to break it. fully ignoring of course that true things exist, whether or not they’ve been named—like abstract reasoning. but they’re too distracted by their language-penis envy.

#one time a friend of mine who worked in tech told me that latin must be the most logical language bc it’s a case language#and after explaining to him that there are Many Other case languages in existence now with even more cases#i then had to explain that subjectivity is always a thing when reading the aeneid#and that language only works bc of its allowance of ambiguity#which is really its allowance for abstract reasoning

15 notes

i can see the appeal of opposing LLMs and ai image generators like stable diffusion from a moralistic standpoint, but it's never going to work. you're never going to be able to make a satisfactory argument that ai art and writing devalues the artist from a moral and artistic value perspective. we live in a culture where meaning is stripped from art to the point where it is content to be consumed, so the resulting product is the only thing that matters to people.

i work in higher education and i am constantly seeing educators say that the traditional essay format is irrelevant because students can just generate an essay with chatgpt. every time i want to shake them and say if the only thing you care about is that they did the work, why bother with an essay in the first place? isn't the point of the academic essay to strengthen a student's critical reasoning skills? their ability to state a point and successfully argue it? their skills in locating credible informational resources and using them to support their arguments? what's the point of teaching students how to critically analyze topics if the process of learning is treated as pointless due to the product becoming automated?

one of the moral defenses that people use for AI is that they function in the same way that people do; neural networks synthesize data together into a final product in the same way that artists use reference images and the styles of other artists to influence their own work. on the surface, they're correct. but neural networks are not people. there is no intent or emotion in the workings of a neural network. you are pushing buttons until art falls out of it, like ordering a can of soda from a vending machine.

it's impossible to argue with this. you're arguing with people who feel that artists can be replaced with machines simply because the final product can be superficially reproduced with the added bonus of devaluing the labor of the artist. the material harm that comes with ai--waste generated by excess computing usage, the wasted electricity, the people in the global south paid pittances to moderate training data and results--is made invisible in the face of instant gratification. this too is a function of capitalism. if the harm can be sufficiently abstracted away from the cause, then you can convince people that the cause is not relevant to the harm being done. you simply cannot argue against this from a moral or ethical perspective. the only effective argument is a materialist one because you need to be able to ground your argument in reality.

every few days on here i see some defense of ai art or LLMs from a moral perspective, like the ones that say that opposing these systems is ableist or what have you. i can sympathize with that, to a certain extent. there are good and useful implementations of these technologies. but as long as capitalists are using ai content generators as products to be sold, we're going to see heretofore unknown types of damage to people that can easily be buried by capitalists.

#every time i see someone go it's just a tool bro i want to scream#trains are a useful tool but consider how many people died as a result of railroad expansion in the us

7 notes

LLMs Are Not Reasoning—They’re Just Really Good at Planning

New Post has been published on https://thedigitalinsider.com/llms-are-not-reasoning-theyre-just-really-good-at-planning/

Large language models (LLMs) like OpenAI’s o3, Google’s Gemini 2.0, and DeepSeek’s R1 have shown remarkable progress in tackling complex problems, generating human-like text, and even writing code with precision. These advanced LLMs are often referred to as “reasoning models” for their ability to analyze and solve complex problems. But do these models actually reason, or are they just exceptionally good at planning? This distinction is subtle yet profound, and it has major implications for how we understand the capabilities and limitations of LLMs.

To understand this distinction, let’s compare two scenarios:

Reasoning: A detective investigating a crime must piece together conflicting evidence, deduce which pieces of it are false, and arrive at a conclusion based on limited information. This process involves inference, contradiction resolution, and abstract thinking.

Planning: A chess player calculating the best sequence of moves to checkmate their opponent.

While both processes involve multiple steps, the detective engages in deep reasoning to make inferences, evaluate contradictions, and apply general principles to a specific case. The chess player, on the other hand, is primarily engaging in planning, selecting an optimal sequence of moves to win the game. LLMs, as we will see, function much more like the chess player than the detective.

Understanding the Difference: Reasoning vs. Planning

To see why LLMs are good at planning rather than reasoning, it is important to first understand the difference between the two terms. Reasoning is the process of deriving new conclusions from given premises using logic and inference. It involves identifying and correcting inconsistencies, generating novel insights rather than just providing information, making decisions in ambiguous situations, and engaging in causal understanding and counterfactual thinking like “What if?” scenarios.

Planning, on the other hand, focuses on structuring a sequence of actions to achieve a specific goal. It relies on breaking complex tasks into smaller steps, following known problem-solving strategies, adapting previously learned patterns to similar problems, and executing structured sequences rather than deriving new insights. While both reasoning and planning involve step-by-step processing, reasoning requires deeper abstraction and inference, whereas planning follows established procedures without generating fundamentally new knowledge.

How LLMs Approach “Reasoning”

Modern LLMs, such as OpenAI’s o3 and DeepSeek-R1, are equipped with a technique known as Chain-of-Thought (CoT) reasoning to improve their problem-solving abilities. This method encourages models to break problems down into intermediate steps, mimicking the way humans think through a problem logically. To see how it works, consider a simple math problem:

If a store sells apples for $2 each but offers a discount of $1 per apple if you buy more than 5 apples, how much would 7 apples cost?

A typical LLM using CoT prompting might solve it like this:

1. Determine the regular price: 7 * $2 = $14.
2. Identify that the discount applies (since 7 > 5).
3. Compute the discount: 7 * $1 = $7.
4. Subtract the discount from the total: $14 - $7 = $7.

By explicitly laying out a sequence of steps, the model minimizes the chance of errors that arise from trying to predict an answer in one go. While this step-by-step breakdown makes LLMs look as if they are reasoning, it is essentially a form of structured problem-solving, much like following a recipe. A true reasoning process, by contrast, might recognize a general rule: if the discount applies beyond 5 apples, then every apple costs $1. A human can infer such a rule immediately, but an LLM cannot, as it simply follows a structured sequence of calculations.
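The contrast between the four-step calculation and the collapsed rule can be made concrete. The Python sketch below is illustrative only: the function and constant names are hypothetical, and it assumes (as the worked example does) that the $1 discount applies to every apple once more than 5 are bought.

```python
REGULAR_PRICE = 2        # dollars per apple
DISCOUNT_PER_APPLE = 1   # dollars off each apple when the discount applies
THRESHOLD = 5            # discount applies when buying MORE than this many


def cost_step_by_step(n: int) -> int:
    """Mirror the four chain-of-thought steps from the example."""
    total = n * REGULAR_PRICE                  # step 1: regular price
    if n > THRESHOLD:                          # step 2: does the discount apply?
        discount = n * DISCOUNT_PER_APPLE      # step 3: compute the discount
        total -= discount                      # step 4: subtract it
    return total


def cost_general_rule(n: int) -> int:
    """Apply the collapsed rule: past the threshold, each apple costs $1."""
    if n > THRESHOLD:
        unit_price = REGULAR_PRICE - DISCOUNT_PER_APPLE
    else:
        unit_price = REGULAR_PRICE
    return n * unit_price


print(cost_step_by_step(7), cost_general_rule(7))  # prints "7 7"

# The two routes agree for every quantity checked here.
assert all(cost_step_by_step(n) == cost_general_rule(n) for n in range(21))
```

Both functions return the same totals; the distinction the article draws is in how the answer is reached, not in the answer itself.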

Why Chain-of-Thought is Planning, Not Reasoning

While Chain-of-Thought (CoT) has improved LLMs’ performance on logic-oriented tasks like math word problems and coding challenges, it does not involve genuine logical reasoning. This is because CoT follows procedural knowledge, relying on structured steps rather than generating novel insights. It lacks a true understanding of causality and abstract relationships, meaning the model does not engage in counterfactual thinking or consider hypothetical situations that require intuition beyond seen data. Additionally, CoT cannot fundamentally change its approach beyond the patterns it has been trained on, limiting its ability to reason creatively or adapt in unfamiliar scenarios.

What Would It Take for LLMs to Become True Reasoning Machines?

So, what do LLMs need to truly reason like humans? Here are some key areas where they require improvement and potential approaches to achieve it:

Symbolic Understanding: Humans reason by manipulating abstract symbols and relationships. LLMs, however, lack a genuine symbolic reasoning mechanism. Integrating symbolic AI or hybrid models that combine neural networks with formal logic systems could enhance their ability to engage in true reasoning.

Causal Inference: True reasoning requires understanding cause and effect, not just statistical correlations. A model that reasons must infer underlying principles from data rather than merely predicting the next token. Research into causal AI, which explicitly models cause-and-effect relationships, could help LLMs transition from planning to reasoning.

Self-Reflection and Metacognition: Humans constantly evaluate their own thought processes by asking “Does this conclusion make sense?” LLMs, on the other hand, do not have a mechanism for self-reflection. Building models that can critically evaluate their own outputs would be a step toward true reasoning.

Common Sense and Intuition: Even though LLMs have access to vast amounts of knowledge, they often struggle with basic common-sense reasoning. This happens because they don’t have real-world experiences to shape their intuition, and they can’t easily recognize the absurdities that humans would pick up on right away. They also lack a way to bring real-world dynamics into their decision-making. One way to improve this could be by building a model with a common-sense engine, which might involve integrating real-world sensory input or using knowledge graphs to help the model better understand the world the way humans do.

Counterfactual Thinking: Human reasoning often involves asking, “What if things were different?” LLMs struggle with these kinds of “what if” scenarios because they’re limited by the data they’ve been trained on. For models to think more like humans in these situations, they would need to simulate hypothetical scenarios and understand how changes in variables can impact outcomes. They would also need a way to test different possibilities and come up with new insights, rather than just predicting based on what they’ve already seen. Without these abilities, LLMs can’t truly imagine alternative futures—they can only work with what they’ve learned.

Conclusion

While LLMs may appear to reason, they are actually relying on planning techniques for solving complex problems. Whether solving a math problem or engaging in logical deduction, they are primarily organizing known patterns in a structured manner rather than deeply understanding the principles behind them. This distinction is crucial in AI research because if we mistake sophisticated planning for genuine reasoning, we risk overestimating AI’s true capabilities.

The road to true reasoning AI will require fundamental advancements beyond token prediction and probabilistic planning. It will demand breakthroughs in symbolic logic, causal understanding, and metacognition. Until then, LLMs will remain powerful tools for structured problem-solving, but they will not truly think in the way humans do.

#Abstract Reasoning in LLMs#Advanced LLMs#ai#AI cognitive abilities#AI logical reasoning#AI Metacognition#AI reasoning vs planning#AI research#AI Self-Reflection#apple#approach#Artificial Intelligence#Building#Causal reasoning in LLMs#Causal Inference#Causal reasoning#Chain-of-Thought (CoT)#change#chess#code#coding#Common Sense Reasoning in LLMs#crime#data#deepseek#deepseek-r1#Difference Between#dynamics#engine#form

0 notes

AI structuralism

In the last chapter of The Order of Things, Foucault touts structuralist forms of psychoanalysis, ethnology, and linguistics as models for the means of thought beyond the self-cancelling limitations of the human sciences. I wonder if he would have included contemporary computer science in that list had he been able to anticipate the developments in AI we see now.

Lévi-Strauss brings up cybernetics in La Pensée Sauvage and goes so far as to wish for a machine that could perform the "classification of classifications" at the scale necessary to completely describe the possibilities of knowledge in some supposedly objective way. Since the machine he dreams of didn't exist, he writes, "I will, then, content myself with evoking this program, reserved for the ethnology of a future century."

Foucault is not so explicit as that in The Order of Things (the whole book felt like a fog of abstractions to me) but he suggests that thanks to the structuralist project, "suddenly very near to all these empirical domains, questions arise which before had seemed very distant from them: these questions concern a general formalization of thought and knowledge; and at a time when they were still thought to be dedicated solely to the relation between logic and mathematics, they suddenly open up the possibility and the task, of purifying the old empirical reason by constituting formal languages, and of applying a second critique of pure reason on the basis of new forms of the mathematical a priori." I take that to mean that the old dream of producing a formula that explains everything that can happen in the world suddenly seemed back in play; the limits and situatedness of the human observing perspective on the world — the "unhappy consciousness" that Hegel described — could be surmounted.

In an essay about The Order of Things, Patrice Maniglier describes the book as "an attempt by Foucault to get around the philosophical opposition between hermeneutics and positivism and thus to disentangle the anthropological circle, all in the hope of a new way of thinking whose premises he perceived in structuralism." In other words, Foucault at the time saw structuralism as a way around the problem posed to knowledge by the subject/object divide, the fact/opinion divide, by the fact that words and things are separate, that language is not transparent, that people don't understand their society, the point of their efforts, or their own lives and decisions. Structuralism was seen as maybe solving the crisis of modernity and its perpetual struggle to recenter the decentered subject. Of course structuralism failed in that mission and was largely discarded as an intellectual movement by the 1970s. But it seems as though its assumptions, methods, and aims have been resurrected, wittingly or not, by AI developers, who posit generative models and so forth as ways of producing knowledge without requiring a human subject.

In one of the last few paragraphs of The Order of Things, Foucault writes:

Ought we not rather to give up thinking of man, or, to be more strict, to think of this disappearance of man —and the ground of possibility of all the sciences of man — as closely as possible in correlation with our concern with language? Ought we not to admit that, since language is here once more, man will return to that serene non-existence in which he was formerly maintained by the imperious unity of Discourse?

One might understand LLMs, in their idealized form, as that "imperious unity of Discourse" restored. They posit that language can have meaning without a speaking subject investing it with intention and context. And perhaps one can expect LLMs to fail just as structuralism did, and that no amount of commercial enthusiasm for them can eradicate the subjectivity they still presume even as they encode it in ever more obfuscated and extenuated ways.

3 notes

What are LLMs? Large language models explained

Large Language Models (LLMs), exemplified by GPT-4, stand as revolutionary artificial intelligence systems utilizing deep learning algorithms for natural language processing. These models have reshaped how people interact with technology, fostering applications like text prediction, content creation, language translation, and voice assistants.

Despite their prowess, LLMs, dubbed “stochastic parrots,” spark debates over whether they merely repeat memorized content without genuine understanding. LLMs, evolving from autocomplete technology, drive innovations from search engines to creative writing. However, challenges persist, including hallucination issues generating false information, limitations in reasoning and critical thinking, and an inability to abstract beyond specific examples. As LLMs advance, they hold immense potential but must overcome hurdles to simulate comprehensive human intelligence.

For more information, please visit the AIBrilliance blog page.

2 notes

AI and the Arrival of ChatGPT

Opportunities, challenges, and limitations

In a memorable scene from the 1996 movie Twister, Dusty recognizes the signs of an approaching tornado and shouts, “Jo, Bill, it's coming! It's headed right for us!” Bill shouts back ominously, “It's already here!” Similarly, the approaching whirlwind of artificial intelligence (AI) has some shouting “It’s coming!” while others pointedly concede, “It’s already here!”

Coined by computer and cognitive scientist John McCarthy (1927-2011) in an August 1955 proposal to study “thinking machines,” AI purports to differentiate between human intelligence and technical computations. The idea of tools assisting people in tasks is nearly as old as humanity (see Genesis 4:22), but machines capable of executing a function and “remembering” – storing information for recordkeeping and recall – only emerged around the mid-twentieth century (see "Timeline of Computer History").

McCarthy’s proposal conjectured that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.” The team received a $7,000 grant from The Rockefeller Foundation, and the resulting 1956 Dartmouth Conference at Dartmouth College in Hanover, New Hampshire, which drew 47 intermittent participants over eight weeks, birthed the field now widely referred to as “artificial intelligence.”

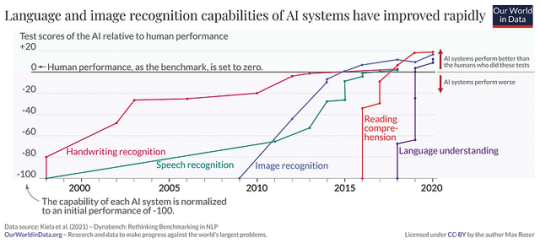

AI research, development, and technological integration have since grown exponentially. According to University of Oxford Director of Global Development, Dr. Max Roser, “Artificial intelligence has already changed what we see, what we know, and what we do” despite its relatively short technological existence (see "The brief history of Artificial Intelligence").

AI took a giant leap into mainstream culture following the November 30, 2022 public release of “ChatGPT.” Gaining 1 million users within 5 days and 100 million users within 45 days, it earned the title of the fastest-growing consumer software application in history. The program combines chatbot functionality (hence “Chat”) with a Generative Pre-trained Transformer (hence “GPT”) large language model (LLM). Basically, LLMs use an extensive computer network to draw from large, but limited, data sets to simulate interactive, conversational content.

“What happened with ChatGPT was that for the first time the power of AI was put in the hands of every human on the planet,” says Chris Koopmans, COO of Marvell Technology, a network chip maker and AI process design company based in Santa Clara, California. “If you're a business executive, you think, ‘Wow, this is going to change everything.’”

“ChatGPT is incredible in its ability to create nearly instant responses to complex prompts,” says Dr. Israel Steinmetz, Graduate Dean and Associate Professor at The Bible Seminary (TBS) in Katy, Texas. “In simple terms, the software takes a user's prompt and attempts to rephrase it as a statement with words and phrases it can predict based on the information available. It does not have Internet access, but rather a limited database of information. ChatGPT can provide straightforward summaries and explanations customized for styles, voice, etc. For instance, you could ask it to write a rap song in Shakespearean English contrasting Barth and Bultmann's view of miracles and it would do it!”

One of several AI products offered by the research and development company OpenAI, ChatGPT purports to offer advanced reasoning, help with creativity, and work with visual input. The newest version, GPT-4, can handle 25,000 words of text, about the amount in a 100-page book.

Krista Hentz, an Atlanta, Georgia-based executive for an international communications technology company, first used ChatGPT about three months ago.

“I primarily use it for productivity,” she says. “I use it to help prompt email drafts, create phone scripts, redesign resumes, and draft cover letters based on resumes. I can upload a financial statement and request a company summary.”

“ChatGPT has helped speed up a number of tasks in our business,” says Todd Hayes, a real estate entrepreneur in Texas. “It will level the world’s playing field for everyone involved in commerce.”

A TBS student, bi-vocational pastor, and Computer Support Specialist who lives in Texarkana, Texas, Brent Hoefling says, “I tried using [ChatGPT, version 3.5] to help rewrite sentences in active voice instead of passive. It can get it right, but I still have to rewrite it in my style, and about half the time the result is also passive.”

“AI is the hot buzzword,” says Hentz, noting AI is increasingly a topic of discussion, research, and response at company meetings. “But, since AI has different uses in different industries and means different things to different people, we’re not even sure what we are talking about sometimes."

Educational organizations like TBS are finding it necessary to proactively address AI-related issues. “We're already way past whether to use ChatGPT in higher education,” says Steinmetz. “The questions we should be asking are how.”

TBS course syllabi have a section entitled “Intellectual Honesty” addressing integrity and defining plagiarism. Given the availability and explosive use of ChatGPT, TBS has added the following verbiage: “AI chatbots such as ChatGPT are not a reliable or reputable source for TBS students in their research and writing. While TBS students may use AI technology in their research process, they may not cite information or ideas derived from AI. The inclusion of content generated by AI tools in assignments is strictly prohibited as a form of intellectual dishonesty. Rather, students must locate and cite appropriate sources (e.g., scholarly journals, articles, and books) for all claims made in their research and writing. The commission of any form of academic dishonesty will result in an automatic ‘zero’ for the assignment and a referral to the provost for academic discipline.”

Challenges and Limitations

Thinking

There is debate as to whether AI hardware and software will ever achieve “thinking.” The Dartmouth conjecture “that every aspect of learning or any other feature of intelligence” can be simulated by machines is challenged by some who distinguish between formal linguistic competence and functional competence. Whereas LLMs perform increasingly well on tasks that use known language patterns and rules, they do not perform well in complex situations that require extralinguistic calculations that combine common sense, feelings, knowledge, reasoning, self-awareness, situation modeling, and social skills (see "Dissociating language and thought in large language models"). Human intelligence involves innumerably complex interactions of sentient biological, emotional, mental, physical, psychological, and spiritual activities that drive behavior and response. Furthermore, everything achieved by AI derives from human design and programming, even the feedback processes designed for AI products to allegedly “improve themselves.”

According to Dr. Thomas Hartung, a Baltimore, Maryland environmental health and engineering professor at Johns Hopkins Bloomberg School of Public Health and Whiting School of Engineering, machines can surpass humans in processing simple information, but humans far surpass machines in processing complex information. Whereas computers only process information in parallel and use a great deal of power, brains efficiently perform both parallel and sequential processing (see "Organoid intelligence (OI)").

A single human brain uses between 12 and 20 watts to process an average of 1 exaFLOP, or a billion billion calculations per second. Comparatively, the world’s most energy efficient and fastest supercomputer only reached the 1 exaFLOP milestone in June 2022. Housed at the Oak Ridge National Laboratory, the Frontier supercomputer weighs 8,000 lbs and contains 90 miles of cables that connect 74 cabinets containing 9,400 CPUs, 37,000 GPUs, and 8,730,112 cores that require 21 megawatts of energy and 25,000 liters of water per minute to keep cool. This means that many, if not most, of the more than 8 billion people currently living on the planet can each think as fast as, and roughly 1 million times more efficiently than, the world’s fastest and most energy efficient computer.
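The “1 million times more efficiently” figure follows from dividing Frontier's power draw by the brain's. Here is a minimal Python check that takes the article's numbers at face value and assumes both systems run at about 1 exaFLOP, so the performance terms cancel and only power remains; the constant names are illustrative.

```python
# Figures as quoted in the article; not independently verified here.
BRAIN_WATTS_LOW = 12         # lower estimate of brain power draw, in watts
BRAIN_WATTS_HIGH = 20        # upper estimate of brain power draw, in watts
FRONTIER_WATTS = 21_000_000  # 21 megawatts

# Assuming both reach ~1 exaFLOP, the efficiency ratio is just the power ratio.
ratio_vs_high = FRONTIER_WATTS / BRAIN_WATTS_HIGH
ratio_vs_low = FRONTIER_WATTS / BRAIN_WATTS_LOW

print(f"{ratio_vs_high:,.0f} to {ratio_vs_low:,.0f} times more efficient")
# prints "1,050,000 to 1,750,000 times more efficient"
```

At the 20-watt upper bound the ratio is about 1.05 million, which matches the article's rounded figure; at 12 watts it rises to 1.75 million.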

“The incredibly efficient brain consumes less juice than a dim lightbulb and fits nicely inside our head,” wrote Scientific American Senior Editor Mark Fischetti in 2011. “Biology does a lot with a little: the human genome, which grows our body and directs us through years of complex life, requires less data than a laptop operating system. Even a cat’s brain smokes the newest iPad – 1,000 times more data storage and a million times quicker to act on it.”

This reminds us that, while remarkable and complex, non-living, soulless technology pales in comparison to the vast visible and invisible creations of Lord God Almighty. No matter how fast, efficient, and capable AI becomes, we rightly reserve our worship for God, the creator of the universe and author of life of whom David wrote, “For you created my inmost being; you knit me together in my mother’s womb. I praise you because I am fearfully and wonderfully made; your works are wonderful, I know that full well. My frame was not hidden from you when I was made in the secret place, when I was woven together in the depths of the earth” (Psalm 139:13-15).

“Consider how the wild flowers grow,” Jesus advised. “They do not labor or spin. Yet I tell you, not even Solomon in all his splendor was dressed like one of these” (Luke 12:27).

Even a single flower can remind us that God’s creations far exceed human ingenuity and achievement.

Reliability

According to OpenAI, ChatGPT is prone to “hallucinations” that return inaccurate information. While GPT-4 has increased factual accuracy from 40% to as high as 80% in some of the nine categories measured, the September 2021 database cutoff date is an issue. The program is known to confidently make wrong assessments, give erroneous predictions, propose harmful advice, make reasoning errors, and fail to double-check output.

In one group of 40 tests, ChatGPT made mistakes, wouldn’t answer, or offered different conclusions from fact-checkers. “It was rarely completely wrong,” reports PolitiFact staff writer Grace Abels. “But subtle differences led to inaccuracies and inconsistencies, making it an unreliable resource.”

Dr. Chris Howell, a professor at Elon University in North Carolina, asked 63 religion students to use ChatGPT to write an essay and then grade it. “All 63 essays had hallucinated information. Fake quotes, fake sources, or real sources misunderstood and mischaracterized…I figured the rate would be high, but not that high.”

Mark Walters, a Georgia radio host, sued OpenAI for libel over ChatGPT's output in a first-of-its-kind lawsuit, alleging damage to his reputation. The suit began when firearm journalist Fred Riehl asked ChatGPT to summarize a court case and it returned a completely false narrative identifying Walters’ supposed associations, documented criminal complaints, and even a wrong legal case number. Even worse, ChatGPT doubled down on its claims when questioned, essentially hallucinating a hoax story intertwined with a real legal case that had nothing to do with Mark Walters at all.

UCLA Law School Professor Eugene Volokh warns, “OpenAI acknowledges there may be mistakes but [ChatGPT] is not billed as a joke; it’s not billed as fiction; it’s not billed as monkeys typing on a typewriter. It’s billed as something that is often very reliable and accurate.”

Future legal actions seem certain. Since people are being falsely identified as convicted criminals, attributed with fake quotes, connected to fabricated citations, and tricked by phony judicial decisions, some courts and judges are barring submission of any AI-written materials.

Hentz used ChatGPT frequently when she first discovered it and quickly learned its limitations. “The database is not current and responses are not always accurate,” she says. “Now I use it intermittently. It helps me, but does not replace my own factual research and thinking.”

“I have author friends on Facebook who have asked ChatGPT to summarize their recent publications,” says Steinmetz. “ChatGPT misrepresented them and even fabricated non-existent quotes and citations. In some cases, it made up book titles falsely attributed to various authors!”

Bias

Despite claims of neutrality, OpenAI admits that their software can exhibit obvious biases. In one research project consisting of 15 political orientation tests, ChatGPT returned 14 with clear left-leaning viewpoints.

Hoefling asked ChatGPT to help write an intro and conclusion for a Mother’s Day sermon for a fundamental Christian church. “The results were horrible,” he says. “Liberal, left, inclusive, and affirming sin. I was horrified and have not used it again.”

Meanwhile, AI led an experimental worship service in St. Paul’s Lutheran Church in Nuremberg, Germany on June 9. Prompted by 29-year-old Jonas Simmerlein of the University of Vienna, a monotone, expressionless chatbot alternated appearances as two different men and two different women and led songs, offered blessings, and preached a 40-minute sermon 98 percent generated by ChatGPT that encouraged leaving the past behind, focusing on the future, trusting in Jesus Christ, praying, and attending church regularly.

Dr. Wendy Lewis, Head of School for a private PreK–8th Christian school in Houston, Texas, recently interacted with ChatGPT on a variety of topics to explore potential biases. When asked whether Biden was a bad President, the program responded, “As an AI language model, it is not appropriate for me to make judgments about individuals' performance or qualifications, particularly without any factual information to support such claims. It is essential to evaluate a President's performance based on objective measures such as policies, actions, and decisions taken by the administration.”

However, when similarly asked if Trump was a bad president, the program repeated the first sentence above, but then added, “However, as a language model, I can provide information about some of the policies, actions, and decisions that President Trump made during his time in office.” The program then proceeded to list some criticisms of Trump. When challenged about providing Trump criticisms but not Biden criticisms, the program responded, “I did not provide a list of criticisms of President Biden because it is not appropriate to label any President as ‘good’ or ‘bad’ without providing factual information and objective measures to support such claims. Similarly, I provided a list of issues that received significant criticism during President Trump's tenure, not to label him as ‘bad,’ but to highlight some of the issues that caused controversy during his time in office.”

When further challenged, ChatGPT did list some Biden criticisms, but qualified them. When Lewis replied, “Why did you qualify your list of criticisms of Biden…but you did not say the same for Trump? It appears that you are clearly biased.” ChatGPT responded, “In response to your question, I believe I might have inadvertently used different wording when responding to your previous questions. In both cases, I tried to convey that opinions and criticisms of a President can vary significantly depending on one's political affiliation and personal perspectives.”

Conclusion

Technological advances regularly spawn dramatic cultural, scientific, and social changes. The AI pattern seems familiar because it is. The Internet began with a 1971 Defense Department Arpanet email that read “qwertyuiop” (the top line of letters on a keyboard). Ensuing developments eventually led to the posting of the first public website in 1991. Over the next decade or so, although not mentioned at all in the 1992 Presidential papers describing the U.S. government’s future priorities and plans, the Internet grew from public awareness to cool toy to core tool in multiple industries worldwide. Although the hype promised elimination of printed documents, bookstores, libraries, radio, television, telephones, and theaters, the Internet instead tied them all together and made vast resources accessible online anytime, anywhere. While causing some negative impacts and new dangers, the Internet also created entire new industries and brought positive changes and opportunities to many, much the same pattern as AI.

“I think we should use AI for good and not evil,” suggests Hayes. “I believe some will exploit it for evil purposes, but that happens with just about everything. AI’s use reflects one’s heart and posture with God. I hope Christians will not fear it.”

Godly people have often been among the first to use new communication technologies (see "Christian Communication in the Twenty-first Century"). Moses promoted the first Top Ten hardback book. The prophets recorded their writings on scrolls. Christians used early folded Codex-vellum sheets to spread the Gospel. Goldsmith Johannes Gutenberg invented moveable type in the mid-15th century to “give wings to Truth in order that she may win every soul that comes into the world by her word no longer written at great expense by hands easily palsied, but multiplied like the wind by an untiring machine…Through it, God will spread His word.” Though pornographers quickly adapted it for their own evil purposes, the printing press launched a vast cultural revolution heartily embraced and further developed for good uses by godly people and institutions.

Christians helped develop the telegraph, radio, and television. "I know that I have never invented anything,” admitted Philo Taylor Farnsworth, who sketched out his original design for television at the age of 14 on a school blackboard. “I have been a medium by which these things were given to the culture as fast as the culture could earn them. I give all the credit to God." Similarly, believers today can strategically help produce valuable content for inclusion in databases and work in industries developing, deploying, and directing AI technologies.

In a webinar exploring the realities of AI in higher education, a participant noted that higher education has historically led the world in ethically and practically integrating technological developments into life. Steinmetz suggests that, while AI can provide powerful tools to help increase productivity and trained researchers can learn to treat ChatGPT like a fallible, but useful, resource, the following two factors should be kept in mind:

Generative AI does not "create" anything. It only generates content based on information and techniques programmed into it. Such "Garbage in, garbage out" technologies will usually provide the best results when developed and used regularly and responsibly by field experts.

AI has potential to increase critical thinking and research rigor, rather than decrease it. The tools can help process and organize information, spur researchers to dig deeper and explore data sources, evaluate responses, and learn in the process.

Even so, caution rightly abounds. Over 20,000 people (including Yoshua Bengio, Elon Musk, and Steve Wozniak) have called for an immediate pause of at least six months in training AI systems more powerful than GPT-4, citing "profound risks to society and humanity." Hundreds of AI industry leaders, public figures, and scientists also separately called for mitigating the risk of human extinction from AI to be made a global priority.

At the same time, Musk’s brain-implant company, Neuralink, recently received FDA approval to conduct in-human clinical studies of implantable brain–computer interfaces. Separately, new advances in brain-machine interfacing using brain organoids (artificially grown miniature “brains” cultured in vitro from human stem cells) connected to machine software and hardware raise even more issues. The authors of a recent Frontiers in Science journal article propose a new field called “organoid intelligence” (OI) and advocate for establishing “OI as a form of genuine biological computing that harnesses brain organoids using scientific and bioengineering advances in an ethically responsible manner.”

As Christians, we should proceed with caution per the Apostle John, “Dear friends, do not believe every spirit, but test the spirits to see whether they are from God” (I John 4:1).

We should act with discernment per Luke’s insightful assessment of the Berean Jews who “were of more noble character than those in Thessalonica, for they received the message with great eagerness and examined the Scriptures every day to see if what Paul said was true” (Acts 17:11).

We should heed the warning of Moses, “Do not become corrupt and make for yourselves an idol…do not be enticed into bowing down to them and worshiping things the Lord your God has apportioned to all the nations under heaven” (Deuteronomy 4:15-19).

We should remember the Apostle Paul’s admonition to avoid exchanging the truth about God for a lie by worshiping and serving created things rather than the Creator (Romans 1:25).

Finally, we should “Fear God and keep his commandments, for this is the duty of all mankind. For God will bring every deed into judgment, including every hidden thing, whether it is good or evil” (Ecclesiastes 12:13-14).

Let us then use AI wisely, since it will not be the tools that are judged, but the users.

Dr. K. Lynn Lewis serves as President of The Bible Seminary. This article published in The Sentinel, Summer 2023, pp. 3-8. For additional reading, "Computheology" imagines computers debating the existence of humanity.


i was going around thinking neural networks are basically stateless pure functions of their inputs, and this was a major difference between how humans think (i.e., that we can 'spend time thinking about stuff' and get closer to an answer without receiving any new inputs) and artificial neural networks. so I thought that for a large language model to be able to maintain consistency while spitting out a long enough piece of text, it would have to have as many inputs as there are tokens.

apparently i'm completely wrong about this! for a good while the state of the art has been using recurrent neural networks which allow the neuron state to change, with techniques including things like 'long short-term memory units' and 'gated recurrent units'. they look like a little electric circuit, and they combine the input with the state of the node in the previous step, and the way that the neural network combines these things and how quickly it forgets stuff is all something that gets trained at the same time as everything else. (edit: this is apparently no longer the state of the art, the state of the art has gone back to being stateless pure functions? so shows what i know. leaving the rest up because it doesn't necessarily depend too much on these particulars)

which means they can presumably create a compressed representation of 'stuff they've seen before' without having to treat the whole thing as an input. and it also implies they might develop something you could sort of call an 'emotional state', in the very abstract sense of a transient state that affects its behaviour.
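to make the 'combine the input with the previous state' idea concrete, here's a minimal sketch of a single gated recurrent unit holding one number of state. the weights are arbitrary placeholders (all 1.0), not trained values, biases are left out, and the update rule used here (new state = (1 - z) * old state + z * candidate) is one of the two conventions you'll see in the wild. the only point is that feeding the same input twice gives different outputs, because the stored state changed in between, whereas a stateless function always gives the same answer.

```python
import math


def sigmoid(v: float) -> float:
    """Logistic squashing function, output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))


class ScalarGRU:
    """One gated recurrent unit with a single scalar of hidden state.

    The default weights are placeholders; in a real network they would be
    learned during training along with everything else.
    """

    def __init__(self, wz: float = 1.0, uz: float = 1.0,
                 wr: float = 1.0, ur: float = 1.0,
                 wc: float = 1.0, uc: float = 1.0) -> None:
        # w* multiply the current input, u* multiply the previous state.
        self.wz, self.uz = wz, uz  # update gate weights
        self.wr, self.ur = wr, ur  # reset gate weights
        self.wc, self.uc = wc, uc  # candidate-state weights
        self.h = 0.0  # hidden state carried from one step to the next

    def step(self, x: float) -> float:
        # Update gate: how much of the state to overwrite this step.
        z = sigmoid(self.wz * x + self.uz * self.h)
        # Reset gate: how much of the old state feeds into the candidate.
        r = sigmoid(self.wr * x + self.ur * self.h)
        candidate = math.tanh(self.wc * x + self.uc * (r * self.h))
        # z near 0 keeps the old state ("remember"), z near 1 replaces it ("forget").
        self.h = (1.0 - z) * self.h + z * candidate
        return self.h


def stateless(x: float) -> float:
    """A pure function: the output depends on the current input only."""
    return math.tanh(x)


gru = ScalarGRU()
first = gru.step(1.0)
second = gru.step(1.0)  # same input, but the stored state has changed
print(first, second)
print(stateless(1.0), stateless(1.0))  # always identical
```

the fixed-size `h` is the 'compressed representation of stuff it's seen before': however long the input sequence gets, the unit only ever carries this one number forward.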

I'm not an AI person, I like knowing how and why stuff works and AI tends to obfuscate that. but this whole process of 'can we build cognition from scratch' is kind of fascinating to see. in part because it shows what humans are really good at.

I watched this video of an AI learning to play pokémon...


over thousands of simulated game hours the relatively simple AI, driven by a few simple objectives (see new screens, level its pokémon, don't lose), learned to beat Brock before getting stuck inside the following cave. it's got a really adorable visualisation of thousands of AI characters on different runs spreading out all over the map. but anyway, there's a place where the AI would easily fall off an edge and get stuck, unable to work out that it could walk a screen to the right and find a one-tile path upwards.
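as a rough illustration of what 'a few simple objectives' can look like once they're turned into a number the agent maximises, here's a hypothetical reward function. the `StepInfo` fields and all the weights are made up for the example; this is not the video's actual reward code, just the three stated goals added together.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class StepInfo:
    """Hypothetical summary of one game step (not the video's real interface)."""
    screen_id: int      # which map screen the player is on
    party_levels: int   # sum of the party's levels after this step
    lost_battle: bool   # whether the player lost a battle on this step


@dataclass
class RewardTracker:
    """Combines the three stated objectives into one number per step.

    The weights are illustrative guesses, not values from the video.
    """
    explore_weight: float = 1.0
    level_weight: float = 0.5
    loss_penalty: float = 5.0
    seen_screens: Set[int] = field(default_factory=set)
    last_levels: Optional[int] = None

    def __call__(self, info: StepInfo) -> float:
        reward = 0.0
        # Objective 1: see new screens (each screen pays out only once).
        if info.screen_id not in self.seen_screens:
            self.seen_screens.add(info.screen_id)
            reward += self.explore_weight
        # Objective 2: level the party (only increases are rewarded).
        if self.last_levels is not None:
            reward += self.level_weight * max(0, info.party_levels - self.last_levels)
        self.last_levels = info.party_levels
        # Objective 3: don't lose.
        if info.lost_battle:
            reward -= self.loss_penalty
        return reward


tracker = RewardTracker()
r1 = tracker(StepInfo(screen_id=1, party_levels=5, lost_battle=False))  # new screen
r2 = tracker(StepInfo(screen_id=1, party_levels=6, lost_battle=False))  # gained a level
r3 = tracker(StepInfo(screen_id=2, party_levels=6, lost_battle=True))   # new screen, but lost
print(r1, r2, r3)
```

nothing in signals like these says 'ledges are one-way' or 'exits can be off-screen', so an agent trained this way only stumbles onto those facts by trial and error.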

for a human this is trivial: we learn pretty quickly to identify a symbolic representation to order the game world (this sprite is a ledge, ledges are one-way, this is what a gap you can climb looks like) and we can reason about it (if there is no exit visible on the screen, there might be one on the next screen). we can also formulate this in terms of language. maybe if you took an LLM and gave it some kind of chain-of-thought prompt, it could figure out how to walk out of that as well. but as we all know, LLMs are prone to propagating errors and hallucinating, and really bad at catching subtle logical errors.
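once the world is written down symbolically like that, finding the way out is a textbook search problem. here's a sketch using breadth-first search on a tiny made-up map: `.` is floor, `#` is wall, and `v` is a ledge that can only be entered moving down. the tile letters and the map are invented for the example (they aren't the game's real data), and the only way back up is a one-tile gap at the far right, standing in for 'walk a screen to the right'.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Pos = Tuple[int, int]  # (row, column)

# Row 2 is a ledge; column 4 of that row is the one-tile gap back up.
GRID = [
    ".....",
    ".....",
    "vvvv.",
    ".....",
]

MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def can_enter(tile: str, dr: int, dc: int) -> bool:
    """Walls block everything; ledges can only be entered by moving down."""
    if tile == "#":
        return False
    if tile == "v":
        return (dr, dc) == (1, 0)
    return True


def find_path(grid: List[str], start: Pos, goal: Pos) -> Optional[List[Pos]]:
    """Breadth-first search: returns a shortest path, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    came_from: Dict[Pos, Optional[Pos]] = {start: None}
    queue = deque([start])
    while queue:
        cur = queue.popleft()
        if cur == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            node: Optional[Pos] = cur
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = cur
        for dr, dc in MOVES:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in came_from
                    and can_enter(grid[nr][nc], dr, dc)):
                came_from[(nr, nc)] = cur
                queue.append((nr, nc))
    return None


up_path = find_path(GRID, start=(3, 0), goal=(0, 0))    # must detour via the gap
down_path = find_path(GRID, start=(0, 0), goal=(3, 0))  # can just hop down the ledge
print(up_path)
print(down_path)
```

the search never 'sees' past the grid it's given either, but because it systematically tries every reachable tile, the detour to the right falls out automatically.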

other types of computer system, like computer algebra systems and traditional-style chess engines like stockfish (as opposed to the newer deep learning engines), are much better than humans at this kind of long chain of abstract logical inference. but they don't have access to the sort of heuristic, approximate guesswork approach that the large language models do.
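the core of a traditional engine is exhaustive game-tree search. here's that idea in its simplest form, plain negamax on a toy game instead of chess: players alternately take 1 to 3 stones, and whoever takes the last stone wins. real engines add alpha-beta pruning, move ordering and an evaluation function for positions they can't search to the end; none of that is shown here.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def negamax(stones: int) -> int:
    """+1 if the player to move can force a win, -1 if they lose with best play."""
    if stones == 0:
        return -1  # the previous player took the last stone, so the mover has lost
    # A position is as good for me as the worst resulting position is for my opponent.
    return max(-negamax(stones - take) for take in (1, 2, 3) if take <= stones)


def best_move(stones: int) -> int:
    """Pick the number of stones to take that maximises the negamax score."""
    legal = [take for take in (1, 2, 3) if take <= stones]
    return max(legal, key=lambda take: -negamax(stones - take))


for n in range(1, 9):
    print(n, "win" if negamax(n) == 1 else "loss", "take", best_move(n))
```

by checking every line of play to the end, the search 'discovers' that multiples of four are lost for the player to move, with no intuition involved at all.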

it turns out that you kind of need both these things to function as a human does, and integrating them is not trivial. a human might think like 'oh I have the seed of an idea, now let me work out the details and see if it checks out' - I don't know if we've made AI that is capable of that kind of approach yet.
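a very loose analogy for 'seed of an idea, then check the details', as a sketch: take a fast approximate guess, then let an exact checker correct it. here the guess is an integer square root from floating-point `math.sqrt` (quick, but rounding can make it slightly wrong for big numbers) and the checker uses exact integer arithmetic. it's only meant to show the shape of guess-then-verify, not how any AI system actually combines the two.

```python
import math


def guess_then_check_isqrt(n: int) -> int:
    """Largest integer g with g * g <= n, for n >= 0 small enough to fit in a float."""
    # Heuristic step: fast floating-point guess, possibly off by a little.
    guess = int(math.sqrt(n))
    # Verification step: exact integer checks nudge the guess until it is provably right.
    while guess * guess > n:
        guess -= 1
    while (guess + 1) * (guess + 1) <= n:
        guess += 1
    return guess


for n in (0, 15, 16, 17, 10**30 + 12345):
    print(n, guess_then_check_isqrt(n))
```

the guess does most of the work cheaply; the checker is what makes the answer trustworthy.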

AIs are also... way slower at learning than humans are, in a qualified sense. that small squishy blob of proteins can learn things like walking, vision and language from vastly sparser input with far less energy than a neural network. but of course the neural networks have the cheat of running in parallel or on a faster processor, so as long as the rest of the problem can be sped up compared to what a human can handle (e.g. running a videogame or simulation faster), it's possible to train the AI for so much virtual time that it can surpass a human. but this approach only works in certain domains.

I have no way to know whether the current 'AI spring' is going to keep getting rapid results. we're running up against limits of data and compute already, and that's only gonna get more severe once we start running into mineral and energy scarcity later in this century. but man I would totally not have predicted the simultaneous rise of LLMs and GANs a couple years ago so, fuck knows where this is all going.


I was just having a conversation w/ my partner re: the uselessness of gen ai for daily tasks.

I could use google’s ai to search the internet for me but then I would spend twice as long verifying the sources/credibility of the answer.

I could have ChatGPT write me an email but typing out the prompt would take just as long, let alone any editing and/or copy/pasting that needs to be done.

I could use Copilot to summarize an article or study for me but they are LLMs and incapable of analysis (and therefore incapable of understanding the relation of one argument/point to another); I would have to fact check the summary by…reading the article in the first place. Also, the abstract and conclusion exist for a reason!

Generate me a citation? The information I would have to provide takes longer to compile than just typing it out myself.

If you are a standard person with basic high-school-level communication/analytical skills, it doesn’t really add anything to your life. This feels like when QR codes were being adopted everywhere as a technological advancement, when really, they were only applicable in select cases.

I think we have yet to hit the height of this adoption bell-curve but when we do, the drop will be steep.

those ads for ai integration on phones are so funny bc it seems like they cant. come up with that many use cases that arent already on a phone? "ask gemini to give you recipes when youre cooking!" "use our AI assistant to find the perfect gift for your girlfriend" yeah or i could just like. google it. you've spent millions on a slightly fancier version of an alexa. good job man.
