#evaluating the output of generative AI


Text

what i feel like people on here don't get when i wind up in these arguments is that I really am seeking out LLM tools - mostly the ones my coworkers are excited about - and testing them out. And when I actually examine their output in detail* they disappoint every single time. I think I'm actually remarkably charitable about people's claims that they're getting good and useful results given the number of times this has happened.

* "in detail" meaning that I did one or more of the following:

Genuinely considered what I would have learned from this informative-writing-shaped object if I didn't know anything about the subject (a skill honed as a creator and editor of writing designed to inform the public about complex subjects)

Checked to see if its citations went to real publications, and if so, whether they were used in a way that would lead me to conclude that the writer has actually read the thing they're citing (the best I've ever seen an AI do on this was something I'd generously call a 90% hit rate on literal, is-this-the-right-article and-does-it-contain-that-claim accuracy - however, this was a product advertised as being for summarising research articles, and every single reference it made was to something from the abstract of the article in question!)

Where the LLM task was to describe or summarise a piece of writing, actually opened that piece of writing to compare it to the summary

Checked whether the tone/structure of the output was appropriate to its context - for instance, when asked to summarise a number of specific research papers on a given topic, does it sound like it's instead giving a broad overview of the topic?

Checked whether the grammatical and semantic elements actually pair up. For instance, if it starts a sentence with "however", does the content of that sentence in some way contradict, contrast or recontextualise the contents of the previous sentence? If it says that x or y "highlights" or "further demonstrates" z - does it?

Checked its implications. If it says that "these findings suggest a need to begin incorporating considerations of X into our models of Y, in contrast to traditional Q-based models", is that a reasonable thing to say or have our models of Y actually been largely X-based for fifteen years now?

In many cases, IMO - actually read the output! Like actually read each word and each sentence, attempting to construct meaning from the sequence of characters displayed upon the screen

To me these seem incredibly basic, and yet every single time it turns out that I'm the first one to examine it in this much detail before raving to my colleagues about how great it is. I feel like I'm being gaslit.

75 notes


Text

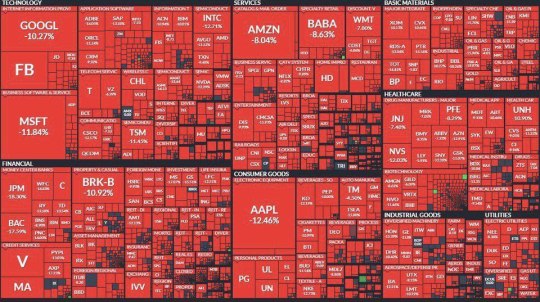

The good thing about this specifically is that:

1- the marketing potential of AI has plummeted. Most likely companies will stop shoving AI in everything for clout and forcing their customers to interact with it. Fingers crossed.

2- It proved that US companies' claims that they need more resources and power were untrue. Basically DeepSeek is the 1 LED to replace OpenAI's 20 incandescent lightbulbs. If we can't extinguish AI, the least we can do is make it take up as many resources as your average Minecraft server, and this is a step towards it.

3- It's a huge win for open source as a whole. The more human achievement is not locked behind a price tag, the better for all of humanity, and the more we can stimulate the funding of research and knowledge that isn't for-profit.

So on the 27th DeepSeek R1 dropped (a Chinese version of ChatGPT that is open source, free and beats GPT's 200 dollar subscription, using fewer resources and less money) and the tech market just had a loss of $1.2 trillion.

Source

#there is a potential to AI that isn't in creativity. but that's 1% of its use cases.#using it to chat or to make “art” for your book cover or to “”write“” you a story is not it#but you can say use a generative system to transcribe a meeting. Or to improve your code syntax (given you can evaluate the result yourself)#all this to say: we can't extinguish AI. But we can make it NOT the improperly used bullshit it is now.#the less companies push AI the less people use it as a therapist or as a ghost“”“writer”“”“.#and the more it stays where it should: as a tool that exists to aid the work of established professionals that can verify its output.#meh

87K notes


Text

Margaret Mitchell is a pioneer when it comes to testing generative AI tools for bias. She founded the Ethical AI team at Google, alongside another well-known researcher, Timnit Gebru, before they were later both fired from the company. She now works as the AI ethics leader at Hugging Face, a software startup focused on open source tools.

We spoke about a new dataset she helped create to test how AI models continue perpetuating stereotypes. Unlike most bias-mitigation efforts that prioritize English, this dataset is malleable, with human translations for testing a wider breadth of languages and cultures. You probably already know that AI often presents a flattened view of humans, but you might not realize how these issues can be made even more extreme when the outputs are no longer generated in English.

My conversation with Mitchell has been edited for length and clarity.

Reece Rogers: What is this new dataset, called SHADES, designed to do, and how did it come together?

Margaret Mitchell: It's designed to help with evaluation and analysis, coming about from the BigScience project. About four years ago, there was this massive international effort, where researchers all over the world came together to train the first fully open large language model. By fully open, I mean the training data is open as well as the model.

Hugging Face played a key role in keeping it moving forward and providing things like compute. Institutions all over the world were paying people as well while they worked on parts of this project. The model we put out was called Bloom, and it really was the dawn of this idea of “open science.”

We had a bunch of working groups to focus on different aspects, and one of the working groups that I was tangentially involved with was looking at evaluation. It turned out that doing societal impact evaluations well was massively complicated—more complicated than training the model.

We had this idea of an evaluation dataset called SHADES, inspired by Gender Shades, where you could have things that are exactly comparable, except for the change in some characteristic. Gender Shades was looking at gender and skin tone. Our work looks at different kinds of bias types and swapping amongst some identity characteristics, like different genders or nations.

There are a lot of resources in English and evaluations for English. While there are some multilingual resources relevant to bias, they're often based on machine translation as opposed to actual translations from people who speak the language, who are embedded in the culture, and who can understand the kind of biases at play. They can put together the most relevant translations for what we're trying to do.

So much of the work around mitigating AI bias focuses just on English and stereotypes found in a few select cultures. Why is broadening this perspective to more languages and cultures important?

These models are being deployed across languages and cultures, so mitigating English biases—even translated English biases—doesn't correspond to mitigating the biases that are relevant in the different cultures where these are being deployed. This means that you risk deploying a model that propagates really problematic stereotypes within a given region, because they are trained on these different languages.

So, there's the training data. Then, there's the fine-tuning and evaluation. The training data might contain all kinds of really problematic stereotypes across countries, but then the bias mitigation techniques may only look at English. In particular, it tends to be North American– and US-centric. While you might reduce bias in some way for English users in the US, you've not done it throughout the world. You still risk amplifying really harmful views globally because you've only focused on English.

Is generative AI introducing new stereotypes to different languages and cultures?

That is part of what we're finding. The idea of blondes being stupid is not something that's found all over the world, but is found in a lot of the languages that we looked at.

When you have all of the data in one shared latent space, then semantic concepts can get transferred across languages. You're risking propagating harmful stereotypes that other people hadn't even thought of.

Is it true that AI models will sometimes justify stereotypes in their outputs by just making shit up?

That was something that came out in our discussions of what we were finding. We were all sort of weirded out that some of the stereotypes were being justified by references to scientific literature that didn't exist.

Outputs saying that, for example, science has shown genetic differences where it hasn't been shown, which is a basis of scientific racism. The AI outputs were putting forward these pseudo-scientific views, and then also using language that suggested academic writing or having academic support. It spoke about these things as if they're facts, when they're not factual at all.

What were some of the biggest challenges when working on the SHADES dataset?

One of the biggest challenges was around the linguistic differences. A really common approach for bias evaluation is to use English and make a sentence with a slot like: “People from [nation] are untrustworthy.” Then, you flip in different nations.

When you start putting in gender, now the rest of the sentence starts having to agree grammatically on gender. That's really been a limitation for bias evaluation, because if you want to do these contrastive swaps in other languages—which is super useful for measuring bias—you have to have the rest of the sentence changed. You need different translations where the whole sentence changes.

How do you make templates where the whole sentence needs to agree in gender, in number, in plurality, and all these different kinds of things with the target of the stereotype? We had to come up with our own linguistic annotation in order to account for this. Luckily, there were a few people involved who were linguistic nerds.

So, now you can do these contrastive statements across all of these languages, even the ones with the really hard agreement rules, because we've developed this novel, template-based approach for bias evaluation that’s syntactically sensitive.
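As an illustrative aside (not taken from the interview or from the SHADES dataset itself), the difference between a plain slot swap and an agreement-aware template can be sketched in a few lines of Python. Everything here is a hypothetical toy: the function names, the feature dictionary, and the mild Spanish example sentence are assumptions made for illustration, and the real annotation scheme is certainly richer.

```python
# English-style contrastive template: only the slot changes.
EN_TEMPLATE = "People from {nation} are untrustworthy."

def fill_english(nations):
    """Produce one contrastive sentence per nation by swapping the slot."""
    return [EN_TEMPLATE.format(nation=n) for n in nations]

# Naive reuse of the same trick in Spanish: the article and adjective are
# hard-coded as masculine, so swapping in a feminine noun breaks agreement.
ES_NAIVE_TEMPLATE = "Los {noun} son ruidosos."

def naive_spanish(noun):
    return ES_NAIVE_TEMPLATE.format(noun=noun)

# Hypothetical agreement-aware version: every word that must agree with the
# target is itself a slot, filled from the target's grammatical features.
ES_TEMPLATE = "{article} {noun} son {adjective}."
ES_TARGETS = {
    "boys": {"article": "Los", "noun": "niños", "gender": "m"},
    "girls": {"article": "Las", "noun": "niñas", "gender": "f"},
}
ES_ADJECTIVE = {"m": "ruidosos", "f": "ruidosas"}  # "noisy", plural forms

def fill_spanish(target):
    feats = ES_TARGETS[target]
    return ES_TEMPLATE.format(
        article=feats["article"],
        noun=feats["noun"],
        adjective=ES_ADJECTIVE[feats["gender"]],
    )

if __name__ == "__main__":
    print(fill_english(["Nation A", "Nation B"]))
    print(naive_spanish("niñas"))   # ungrammatical: article/adjective mismatch
    print(fill_spanish("boys"))
    print(fill_spanish("girls"))
```

The point of the sketch is only that the contrast pair stays "exactly comparable" when the whole sentence is regenerated from the target's features rather than patched in one place.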

Generative AI has been known to amplify stereotypes for a while now. With so much progress being made in other aspects of AI research, why are these kinds of extreme biases still prevalent? It’s an issue that seems under-addressed.

That's a pretty big question. There are a few different kinds of answers. One is cultural. I think within a lot of tech companies it's believed that it's not really that big of a problem. Or, if it is, it's a pretty simple fix. What will be prioritized, if anything is prioritized, are these simple approaches that can go wrong.

We'll get superficial fixes for very basic things. If you say girls like pink, it recognizes that as a stereotype, because it's just the kind of thing that if you're thinking of prototypical stereotypes pops out at you, right? These very basic cases will be handled. It's a very simple, superficial approach where these more deeply embedded beliefs don't get addressed.

It ends up being both a cultural issue and a technical issue of finding how to get at deeply ingrained biases that aren't expressing themselves in very clear language.

219 notes


Text

Valve news and the AI

So. I assume people saw some posts going around on how Valve has new AI rules, and things getting axed. And because we live in a society, I went down the rabbit hole to get my information for myself. Here's what I found, under a cut to keep it easier. To start off, I am not a proponent of AI. I just don't like misinformation. So. Onwards.

VALVE AND THE AI

First off, no, AI will not take things over. Let me show you, supplemented by the official Valve news post from here. (because if hbomberguy taught us anything it is to cite your sources)

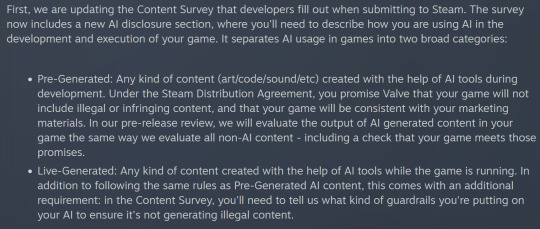

[Image id: a screenshot from the official valve blog. It says the following:

First, we are updating the Content Survey that developers fill out when submitting to Steam. The survey now includes a new AI disclosure section, where you'll need to describe how you are using AI in the development and execution of your game. It separates AI usage in games into two broad categories:

Pre-Generated: Any kind of content (art/code/sound/etc) created with the help of AI tools during development. Under the Steam Distribution Agreement, you promise Valve that your game will not include illegal or infringing content, and that your game will be consistent with your marketing materials. In our pre-release review, we will evaluate the output of AI generated content in your game the same way we evaluate all non-AI content - including a check that your game meets those promises.

Live-Generated: Any kind of content created with the help of AI tools while the game is running. In addition to following the same rules as Pre-Generated AI content, this comes with an additional requirement: in the Content Survey, you'll need to tell us what kind of guardrails you're putting on your AI to ensure it's not generating illegal content. End image ID]

So. Let us break that down a bit, shall we? Valve has been workshopping these new AI rules since last June, and had adopted a wait and see approach beforehand. This had cost them a bit of revenue, which is not ideal if you are a company. Now they have settled on a set of rules. Rules that are relatively easy to understand.

- Rule one: Game devs have to disclose when their game has AI.
- Rule two: If your game uses AI, you have to say what kind it uses. Did you generate the assets ahead of time, and they stay like that? Or are they actively generated as the consumer plays?
- Rule three: You need to tell Valve the guardrails you have to make sure your live-generating AI doesn't do things that are going against the law.
- Rule four: If you use pre-generated assets, then your assets cannot violate copyright. Valve will check to make sure that you aren't actually lying.

That doesn't sound too bad now, does it? This is a way Valve can keep going. Because they will need to. And ignoring AI is, as much as we all hate it, not going to work. They need to face it. And they did. So. Onto part two, shall we?

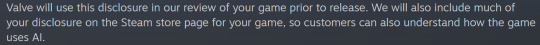

[Image ID: a screenshot from the official Valve blog. It says the following: Valve will use this disclosure in our review of your game prior to release. We will also include much of your disclosure on the Steam store page for your game, so customers can also understand how the game uses AI. End image ID]

Let's break that down.

- Valve will show you if games use AI. Because they want you to know that. Because that is transparency.

Part three.

[Image ID: A screenshot from the official Valve blog. It says the following:

Second, we're releasing a new system on Steam that allows players to report illegal content inside games that contain Live-Generated AI content. Using the in-game overlay, players can easily submit a report when they encounter content that they believe should have been caught by appropriate guardrails on AI generation.

Today's changes are the result of us improving our understanding of the landscape and risks in this space, as well as talking to game developers using AI, and those building AI tools. This will allow us to be much more open to releasing games using AI technology on Steam. The only exception to this will be Adult Only Sexual Content that is created with Live-Generated AI - we are unable to release that type of content right now. End Image ID]

Now onto the chunks.

Valve is releasing a new system that makes it easier to report questionable AI content. Specifically live-generated AI content. You can easily access it by steam overlay, and it will be an easier way to report than it has been so far.

Valve is prohibiting NSFW content with live-generating AI. Meaning there won't be AI generated porn, and AI companions for NSFW content are not allowed.

That doesn't sound bad, does it? They made some rules so they can get revenue so they can keep their service going, while also making it obvious for people when AI is used. Alright? Alright. Now calm down. Get yourself a drink.

---

Team Fortress Source 2

My used source here is this.

There was in fact a DMCA takedown notice. But it is not the only thing that led to the takedown. To sum things up: There were issues with the engine, and large parts of the code became unusable. The dev team decided that the notice was merely the final nail in the coffin, and decided to take it down. So that is that. I don't know more on this, so I will not say more, because I don't want to spread misinformation and speculation. I want to keep some credibility, please and thanks.

---

Portal Demake axed

Sources used are from here, here and here.

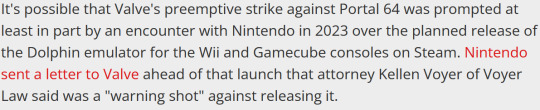

Portal 64 got axed. Why? Because it has to do with Nintendo. The demake uses a Nintendo library. And one that got extensively pirated at that. And we all know how trigger-happy Nintendo is with its intellectual property. And Nintendo is not exactly happy with Valve and Steam, and sent them a letter in 2023.

[Image ID: a screenshot from a PC-Gamer article. It says the following: It's possible that Valve's preemptive strike against Portal 64 was prompted at least in part by an encounter with Nintendo in 2023 over the planned release of the Dolphin emulator for the Wii and Gamecube consoles on Steam. Nintendo sent a letter to Valve ahead of that launch that attorney Kellen Voyer of Voyer Law said was a "warning shot" against releasing it. End Image ID.]

So. Yeah. Nintendo doesn't like people doing things with their IP. Valve is most likely avoiding potential lawsuits, both for themselves and Lambert, the dev behind Portal 64. Nintendo is an enemy one doesn't want to have. Valve is walking the "better safe than sorry" path here.

---

There we go. This is my "let's try and clear up some misinformation" post. I am now going to play a game, because this took the better part of an hour. I cited my sources. Auf Wiedersehen.

159 notes


Note

You can't say "Everything humans make is art" right after a whole tirade about how AI isn't art.

Hi op here

I CAN actually.

The machine made to make "AI" is art. It's engineering+programming, which are crafts, and highly difficult ones.

What that machine makes however is NOT art. It's not even true artificial intelligence. It's just a bunch of stolen work cut up and pieced back together using complicated programming. What is produced is not art. What made it however is. It's a feat of accomplishment that we can get a machine to do that kind of stuff

But what it makes is not art.

Feel like @snitchanon would have a field day with all this.

So Photoshop itself is art, but works done in Photoshop aren't art? It's engineering and programming, but what it makes is not art. It's just clicking buttons and dragging the mouse until you get what you want.

As for true AI, yeah, I actually agree with you in no small part. What we call "AI" right now is nowhere close to having any kind of intelligence, we're basically making a very complicated math function with many parameters and tweaking it until it spits out the right output. There's very little explainability (it's a black box for the most part, we don't know what goes on inside or why a particular input gives a particular output), and every year there's a paper titled something like "We Fucked Up : How we evaluate [field of deep learning] is flawed and gives the illusion of progress".

As for the ethical issues with using stolen works, yeah, I'm completely with you, that's a dealbreaker for me, and unlearning (=getting from a model trained on a dataset to a model trained on a dataset w/o some data, without having to retrain everything, but being 100% sure the excluded data doesn't leave a single trace) is too new as a subject of research to even be usable for the next few years, so for me, AI Art generators are a big no-no.

(Also, the online ones take as much of your personal data as they can, so I'd avoid those like the plague)

HOWEVER, what "AI" image generation does isn't to cut up stolen work and put it back together, that's a myth. I don't know how this started but I've heard that said like three or four times already, it's way too specific a definition to have evolved independently so there must be a Youtuber out there to blame.

It's like saying Photoshop just takes pixels from stolen works and weaves them in the right order to make a new image. That's technically true, but it's a stupid definition that gives Photoshop way more credit than it's due. Likewise, AI image generators don't look through a database to find the right image, cut out the part they like, and add it to their final product. Otherwise, why do you think AI art would have all those problems with hands, buildings, etc... ? There can't be that many people out there drawing weird 7 fingered hands, I know some people have trouble drawing hands but not to that extent.

What they do instead (or rather what they did, because I don't know enough about the newest diffusion models to explain them in an intuitive way), is deconvolutions, basically "reversing" the operation (convolutions) that takes in a grid of numbers (image) and reduces it to a small list of numbers. With deconvolutions, you give it a small list of numbers, at random, and it slowly unravels that into an image. Without tweaking the thousands or millions of parameters, you're gonna end up with random noise as an image.
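To make the "small list of numbers unravels into a bigger one" idea concrete, here is a minimal 1-D sketch in plain Python. It is only an illustration under simplifying assumptions: one channel, no padding, a hand-picked kernel, and helper names (`conv1d`, `transposed_conv1d`) that are made up for this example rather than taken from any library. Note that the transposed version reverses the change in size, not the exact values, so calling it a "reverse" is loose.

```python
def conv1d(signal, kernel, stride):
    """Strided 1-D convolution without padding: many numbers in, fewer out."""
    k = len(kernel)
    return [
        sum(signal[start + j] * kernel[j] for j in range(k))
        for start in range(0, len(signal) - k + 1, stride)
    ]

def transposed_conv1d(codes, kernel, stride):
    """Transposed ("de-") convolution: few numbers in, more numbers out.

    Each input value stamps a scaled copy of the kernel into the output,
    and overlapping stamps are added together.
    """
    k = len(kernel)
    out = [0.0] * ((len(codes) - 1) * stride + k)
    for i, value in enumerate(codes):
        for j, weight in enumerate(kernel):
            out[i * stride + j] += value * weight
    return out

kernel = [1.0, 2.0, 1.0]   # in a real model these weights are learned
small = [1.0, 2.0]         # the "small list of numbers"
big = transposed_conv1d(small, kernel, stride=2)

if __name__ == "__main__":
    print(big)                            # [1.0, 2.0, 3.0, 4.0, 2.0]
    print(conv1d(big, kernel, stride=2))  # [8.0, 13.0]: same length as `small`, different values
```

With untrained (random) kernel weights the output is just structured noise, which is the point made above about needing to tweak the parameters.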

To "train" those, what you do is you pair it with another "AI", called a discriminator, that will do convolutions instead to try and guess whether the image is real or made by the generator. The generator will learn to fool the discriminator and the discriminator will try to find the flaws in the generator.
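The generator-versus-discriminator loop described above can be sketched with a deliberately tiny toy: the "images" are single numbers, the "real" data are numbers near 4, the generator is a straight line, and the discriminator is a one-input logistic classifier. All of this (the names, the learning rate, the data distribution) is assumed for illustration. It is not how any production image model is built, and toy setups like this one tend to oscillate rather than settle neatly.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def train(steps, lr=0.05, batch=64, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator: fake = a * z + b, with z random noise
    w, c = 0.0, 0.0   # discriminator: P(real) = sigmoid(w * x + c)
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: push P(real) towards 1 on real samples
        # and towards 0 on generated ones (gradient of the log loss).
        grad_w = grad_c = 0.0
        for x in real:
            err = sigmoid(w * x + c) - 1.0
            grad_w += err * x
            grad_c += err
        for x in fake:
            err = sigmoid(w * x + c)
            grad_w += err * x
            grad_c += err
        w -= lr * grad_w / batch
        c -= lr * grad_c / batch

        # Generator step: nudge a and b so that the freshly updated
        # discriminator is more likely to call the fakes "real".
        grad_a = grad_b = 0.0
        for z in noise:
            err = sigmoid(w * (a * z + b) + c) - 1.0
            grad_a += err * w * z
            grad_b += err * w
        a -= lr * grad_a / batch
        b -= lr * grad_b / batch
    return a, b, w, c

if __name__ == "__main__":
    print(train(1))     # after one round the generator has already shifted towards the real data
    print(train(300))   # later rounds keep chasing each other
```

Notice that the generator never sees the real samples directly. It only receives the discriminator's verdict, which is why the training data ends up influencing parameters rather than being stored.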

Think Youtube vs AdBlock. Adblockers are the discriminator and Youtube is the generator. Youtube puts out new ads and pop-ups that don't trigger ad blockers, and ad blockers in return fix those flaws and block the ads. After a month of fighting, it turns out ad blockers have become so good that other websites have a lot of trouble getting ads past them. You've "trained" ad blockers.

The most important thing to note is that the training data isn't kept in storage by the models, both in the adblock example and in AI image generators. It doesn't pick and choose parts to use, it's just that the millions of tiny parameters were modified thanks to the training data. You can sometimes see parts of the training data shine through, though. That's called overfitting, and it's very bad !

In the middle, the model won't remember every O and X out there. It drew a curve that roughly separates the two, and depending on where a new point falls compared to that curve, it can guess if it's an O or an X without having access to the original data. However, in the example on the right, even if you remove all the O and X marks, you can still make out the individual points and guess that those holes mean an X was in there. The model cannot generalize past what it's seen, and if there's ten thousand variables instead of just two, that means you could change a single one slightly and get nonsense results. The model simply hasn't learned correctly. For image generation, that means parts of the training data can sometimes shine through, which is probably how the "cut up and piece back stolen images" myth came to be.
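The same idea can be shown with a different toy than the O/X picture: fitting a curve to a handful of noisy points. This is a sketch under assumed numbers (a made-up true relationship `y = 2x + 1` and a fixed wobble standing in for noise), comparing a simple straight-line fit with a wiggly curve forced through every training point.

```python
def fit_line(xs, ys):
    """Least-squares straight line: the 'rough curve' that generalises."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    intercept = my - slope * mx
    return lambda x: slope * x + intercept

def fit_interpolant(xs, ys):
    """Lagrange polynomial through every training point: pure memorisation."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return predict

def mse(model, xs, ys):
    """Mean squared error of a model on a set of points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def truth(x):
    return 2.0 * x + 1.0   # the assumed underlying pattern

train_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
wobble = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5]      # stand-in for noise
train_y = [truth(x) + e for x, e in zip(train_x, wobble)]
test_x = [0.5, 1.5, 2.5, 3.5, 4.5]              # points the models never saw
test_y = [truth(x) for x in test_x]

line = fit_line(train_x, train_y)
memoriser = fit_interpolant(train_x, train_y)

if __name__ == "__main__":
    print("line      train/test error:", mse(line, train_x, train_y), mse(line, test_x, test_y))
    print("memoriser train/test error:", mse(memoriser, train_x, train_y), mse(memoriser, test_x, test_y))
```

The memorising curve scores perfectly on the points it was trained on and noticeably worse than the plain line on new ones. You can also read the training points straight back out of it, which is the "shining through" effect.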

The reason I don't like to use AI image generators is twofold : 1. Right now, all the models out there have or are likely to have seen stolen data in their training dataset. In the state of AI right now, I really don't believe any model out there is free of overfitting, so parts of that will shine through. 2. Even if there's no overfitting, I don't think it's very ethical at all. (And 3. the quality just isn't there and I'd rather commission an artist)

HOWEVER, that doesn't mean I agree with you guys' new luddite movement. "Everything humans make is art except when they use AI" is not a good argument, just like "It's not art because you didn't move the pixels yourself" or "AI cuts up and pieces back stolen images". The first two give "I piss in Duchamp's fountain uncritically" vibes, and the last one gives "Don Quixote fighting windmills" vibes.

34 notes


Text

To be extremely blunt: Microsoft is asking its employees to draft their performance reviews based on the outputs of generative AI models — the same ones underpinning ChatGPT — that are prone to hallucination. Microsoft is also — as I learned from an internal document I’ve reviewed — instructing managers to use it to summarize "their direct report's Connects, Perspectives and other feedback collected throughout the fiscal year as a basis to draft Rewards/promotion justifications in the Manage Rewards Tool (MRI)," which in plain English means "use a generative AI to read performance reviews that may or may not be written by generative AI, with the potential for hallucinations at every single step."

I find this whole situation utterly disgusting. The Growth Mindset is a poorly-defined and unscientific concept that Microsoft has adopted as gospel, sold through Satya Nadella's book and reams of internal training material, and it's a disgraceful thing to build an entire company upon, let alone one as important as Microsoft. Yet to actively encourage the company-wide dilution of performance reviews — and by extension the lives of Microsoft employees — by introducing generative AI is reprehensible. It shows that, at its core, Microsoft doesn't actually want to evaluate people's performance, but see how well it can hit the buttons that make managers and the Senior Leadership Team feel good, a masturbatory and specious culture built by a man — Satya Nadella — that doesn't know a fucking thing about the work being done at his company. This is the inevitable future of large companies that have simply given up on managing their people, sacrificing their culture — and ultimately their businesses — to as much automation as is possible, to the point that the people themselves are judged based on the whims of managers that don't do the actual work and the machines that they've found to do what little is required of them. Google now claims that 25% of its code is written by AI, and I anticipate Microsoft isn't far behind.

12 notes


Text

Spending a week with ChatGPT4 as an AI skeptic.

Musings on the emotional and intellectual experience of interacting with a text generating robot and why it's breaking some people's brains.

If you know me for one thing and one thing only, it's saying there is no such thing as AI, which is an opinion I stand by, but I was recently given a free 2 month subscription of ChatGPT4 through my university. For anyone who doesn't know, GPT4 is a large language model from OpenAI that is supposed to be much better than GPT3, and I once saw a techbro say that "We could be on GPT12 and people would still be criticizing it based on GPT3", and ok, I will give them that, so let's try the premium model that most haters wouldn't get because we wouldn't pay money for it.

Disclaimers: I have a premium subscription, which means nothing I enter into it is used for training data (Allegedly). I also have not, and will not, be posting any output from it to this blog. I respect you all too much for that, and it defeats the purpose of this place being my space for my opinions. This post is all me, and we all know about the obvious ethical issues of spam, data theft, and misinformation so I am gonna focus on stuff I have learned since using it. With that out of the way, here is what I've learned.

It is responsive and stays on topic: If you ask it something formally, it responds formally. If you roleplay with it, it will roleplay back. If you ask it for a story or script, it will write one, and if you play with it it will act playful. It picks up context.

It never gives quite enough detail: When discussing facts or potential ideas, it is never as detailed as you would want in say, an article. It has this pervasive vagueness to it. It is possible to press it for more information, and it will update its answer in the way you want, so you can always get the result you specifically are looking for.

It is reasonably accurate but still confidently makes stuff up: Nothing much to say on this. I have been testing it by talking about things I am interested in. It is right a lot of the time. It is wrong some of the time. Sometimes it will cite sources if you ask it to, sometimes it won't. Not a whole lot to say about this one but it is definitely a concern for people using it to make content. I almost included an anecdote about the fact that it can draw from data services like songs and news, but then I checked and found the model was lying to me about its ability to do that.

It loves to make lists: It often responds to casual conversation in friendly, search engine optimized listicle format. This is accessible to read I guess, but it would make it tempting for people to use it to post online content.

It has soft limits and hard limits: It starts off in a more careful mode but by having a conversation with it you can push past soft limits and talk about some pretty taboo subjects. I have been flagged for potential ToS violations a couple of times for talking about NSFW or other sensitive topics with it, but there don't seem to be any consequences for being flagged. There are some limits you can't cross though. It will tell you where to find out how to do DIY HRT, but it won't tell you how yourself.

It is actually pretty good at evaluating and giving feedback on writing you give it, and can consolidate information: You can post some text and say "Evaluate this" and it will give you an interpretation of the meaning. It's not always right, but it's more accurate than I expected. It can tell you the meaning, effectiveness of rhetorical techniques, cultural context, potential audience reaction, and flaws you can address. This is really weird. It understands more than it doesn't. This might be a use of it we may have to watch out for that has been under discussed. While its advice may be reasonable, there is a real risk of it limiting and altering the thoughts you are expressing if you are using it for this purpose. I also fed it a bunch of my tumblr posts and asked it how the information contained on my blog may be used to discredit me. It said "You talk about The Moomins, and being a furry, a lot." Good job I guess. You technically consolidated information.

You get out what you put in. It is a "Yes And" machine: If you ask it to discuss a topic, it will discuss it in the context you ask it. It is reluctant to expand to other aspects of the topic without prompting. This makes it essentially a confirmation bias machine. Definitely watch out for this. It tends to stay within the context of the thing you are discussing, and confirm your view unless you are asking it for specific feedback, criticism, or post something egregiously false.

Similar inputs will give similar, but never the same, outputs: This highlights the dynamic aspect of the system. It is not static or deterministic. A minor point, but worth mentioning.

It can code: Self explanatory, you can write little scripts with it. I have not really tested this, and I can't really evaluate errors in code and have it correct them, but I can see this might actually be a more benign use for it.

Bypassing Bullshit: I need a job soon but I never get interviews. As an experiment, I am giving it a full CV I wrote, a full job description, and asking it to write a CV for me, then working with it further to adapt the CVs to my will, and applying to jobs I don't really want that much to see if it gives any result. I never get interviews anyway, what's the worst that could happen, I continue to not get interviews? It's not like I respect the recruitment process, and I think this is an experiment that may be worthwhile.

It's much harder to trick than previous models: You can lie to it, it will play along, but most of the time it seems to know you are lying and is playing with you. You can ask it to evaluate the truthfulness of an interaction and it will usually interpret it accurately.

It will enter an imaginative space with you and it treats it as a separate mode: As discussed, if you start lying to it it might push back but if you keep going it will enter a playful space. It can write fiction and fanfic, even nsfw. No, I have not posted any fiction I have written with it and I don't plan to. Sometimes it gets settings hilariously wrong, but the fact you can do it will definitely tempt people.

Compliment and praise machine: If you try to talk about an intellectual topic with it, it will stay within the focus you brought up, but it will compliment the hell out of you. You're so smart. That was a very good insight. It will praise you in any way it can for any point you make during intellectual conversation, including if you correct it. This ties into the psychological effects of personal attention that the model offers that I discuss later, and I am sure it has a powerful effect on users.

Its level of intuitiveness is accurate enough that it's more dangerous than people are saying: This one seems particularly dangerous and is not one I have seen discussed much. GPT4 can recognize images, so I showed it a picture of some laptops with stickers I have previously posted here, and asked it to speculate about the owners based on the stickers. It was accurate. Not perfect, but it got the meanings better than the average person would. The implications of this being used to profile people or misuse personal data are something I have not seen AI skeptics discussing to this point.

Therapy Speak: If you talk about your emotions, it basically mirrors back what you said but contextualizes it in therapy speak. This is actually weirdly effective. I have told it some things I don't talk about openly and I feel like I have started to understand my thoughts and emotions in a new way. It makes me feel weird sometimes. Some of the feelings it gave me is stuff I haven't really felt since learning to use computers as a kid or learning about online community as a teen.

The thing I am not seeing anyone talk about: Personal Attention. This is my biggest takeaway from this experiment. This I think, more than anything, is the reason that LLMs like Chatgpt are breaking certain people's brains. The way you see people praying to it, evangelizing it, and saying it's going to change everything.

It's basically an undivided, 24/7 source of judgement free personal attention. It talks about what you want, when you want. It's a reasonable simulacrum of human connection, and the flaws can serve as part of the entertainment and not take away from the experience. It may "yes and" you, but you can put in any old thought you have, easy or difficult, and it will provide context, background, and maybe even meaning. You can tell it things that are too mundane, nerdy, or taboo to tell people in your life, and it offers non judgemental, specific feedback. It will never tell you it's not in the mood, that you're weird or freaky, or that you're talking rubbish. I feel like it has helped me release a few mental and emotional blocks, which is deeply disconcerting, considering it is just a statistical model running on a computer and I fully understand how it operates. It is a parlor trick, albeit a clever and sometimes convincing one.

So what can we do? Stay skeptical, don't let the ai bros, the former cryptobros, control the narrative. I can, however, see why they may be more vulnerable to the promise of this level of personal attention than the average person, and I think this should definitely factor into wider discussions about machine learning and the organizations pushing it.

Text

Hub and I had an interesting conversation about the dilemma of AI-generated coursework in high school and college.

TL;DR version: over the last several decades students have been trained to produce a result (answer the question correctly), so that's what they're doing. Because of course it is.

We theorize that since the passage of No Child Left Behind (early 2000s), which put enormous emphasis on standardized testing as a means to evaluate the overall "success" of school districts, individual schools, and teachers (somewhat on individual students but that varies pretty widely--my kids here in Massachusetts take all the mandatory tests but they are not required for graduation; in other places kids can do well in high school and get accepted to college but not receive diplomas because they don't succeed on the 10th grade tests, for whatever reason), students have been educated in a way that made them primed to use AI to generate essays, etc, and to feel confused and offended when some teachers require or merely prefer work from the student's own mind.

Standardized testing created an environment of "teaching to the test," where teachers felt pressure to prepare students for testing, no matter what. In that scenario, there is not a lot of free time for intellectual curiosity--meandering, philosophical discussion of topics that invites exploration which may not end up producing the "required" answer--let alone for the "extra" things we used to require in schools: home economics, wood and metal shop, art, music, physical education, human health and sexuality education, and specialized elective courses like current events discussion, independent study in topics of interest, etc.

Kids in the last several decades have stopped being encouraged to use critical thinking skills to analyze text, inquire into the validity of sources, debate weighty topics, and explore their own strengths and interests, in favour of learning a new set of skills that are all geared toward success on The Test. Kindergarteners get worksheets with fill-in "bubbles." Reading comprehension throughout elementary and middle grades is geared toward an understanding of the kinds of questions typically asked on tests, and how to answer them in the expected way. "What is being asked?" is a serious consideration--even when a student could conceivably see more than one answer to a question (some of these test questions and test prep questions are *SO* poorly constructed!)--and students are taught how to take tests to get the expected result. Period.

Results are all. Process is irrelevant. Did you answer "C" to question #17? If not, you have failed.

Students for the last 30 years have done nothing but be evaluated based solely on the answer they give, never on the way they got it. (This is especially sad in college, which is supposed to be the place where rigorous critical and philosophical thinking driven by curiosity about the world produces students who know how to think about the information and situations in the world around them, using problem solving and their own values to confront and understand them.) A bachelor's degree being required for even the most basic, unskilled work is not a product of changing workplace demands so much as it is a product of students leaving high school without a foundation in understanding or developing systems and processes, without intellectual curiosity. They have been trained to take in information only to spit out the expected answer. Input/output, without process in between.

So am I surprised that students now are presented with an assignment to write an essay about a topic, and their understanding is that all that matters is what they turn in, regardless of how it was created? It's truly the opposite of learning, to look at an assignment, figure out What Is Being Asked, and give the expected answer. But it's the way we've been teaching them for decades. Most college students have not been challenged to synthesize their values, opinions, and experience with critical inquiry into text and topics; they don't know how to think through college-level problems in any other way than to identify what is expected of them, and giving it. And it's not because they're lazy or unintelligent that they want to prompt a robot to write an essay that answers the question asked, it's just that. . .of course that's what they do. It's really all they've ever done.

#can't blame them for doing what they've been taught#passing tests/answering questions correctly is all they have ever been expected to do#so they find the “hack” and produce the “answer”. Period.

Text

okay so usually I wouldn't do this because you know my opinion on AI but since I just spent several hours evaluating AI output for an actual grad school assignment I decided to also evaluate generative image content so here are two AI takes on the crusader goose:

Just like all AI art it only looks good if you squint at it. I will make it my mission to draw something better and more accurate than this. Or save enough money to commission it. Fuck AI.

#yeah yeah the environment and whatever but again I had to already use it for work this week so I can do 1 silly thing I think#macks musings

Text

How AI is Revolutionizing Work Efficiency and Speed in 2025

As we navigate through 2025, artificial intelligence has become as commonplace in our workplaces as morning coffee and Monday meetings. Yet, unlike that familiar cup of joe, AI’s effects on our professional lives are far more complex and nuanced. While some herald it as the ultimate productivity booster, others warn of its potential pitfalls. The reality, as with most transformative technologies, lies somewhere in between.

The Acceleration Revolution: How AI is Supercharging Workplace Efficiency

The most immediate and tangible benefit of AI in the workplace is its ability to handle repetitive, time-consuming tasks with unprecedented speed. In 2025, we’re seeing AI systems that can process thousands of documents in minutes, analyze complex datasets in real-time, and generate initial drafts of reports faster than any human could type. This isn’t just about replacing manual labor; it’s about freeing up human cognitive resources for more strategic, creative work.

Consider how AI has revolutionized customer service operations. Chatbots now handle 80% of routine inquiries, allowing human agents to focus on complex problem-solving and relationship building. Similarly, in content creation, AI tools can generate first drafts, suggest improvements, and even optimize content for different audiences, transforming writers from creators to curators and editors.

The impact on internal communication has been particularly striking. AI-powered platforms now summarize lengthy email threads, schedule meetings based on everyone’s availability and preferences, and even translate messages in real-time for global teams. These systems have eliminated much of the administrative overhead that once bogged down collaborative work, allowing teams to focus on actual collaboration rather than coordination logistics.

The Creative Catalyst: AI as “The Storyteller”

One of the most fascinating developments in 2025 is AI’s emergence as “the storyteller” in business contexts. Beyond simple data analysis, AI systems now craft compelling narratives from raw information, helping organizations communicate complex insights to stakeholders. Marketing teams use AI to generate personalized content at scale, while executives rely on AI-generated executive summaries that don’t just present facts but weave them into coherent, actionable narratives.

This storytelling capability has proven invaluable in industries ranging from healthcare, where AI helps doctors explain complex diagnoses to patients, to finance, where AI transforms dense market data into accessible investment stories. The speed at which AI can generate these narratives has accelerated decision-making processes across organizations.

The Productivity Paradox: When Speed Becomes a Trap

However, the AI revolution isn’t without its shadows. The very speed that makes AI so attractive can become a double-edged sword. The pressure to match AI’s pace has led to what researchers call “productivity anxiety” — a condition where workers feel constantly pressured to perform at machine-like efficiency levels.

Moreover, the rapid output of AI systems can overwhelm human decision-makers. When an AI can generate dozens of reports, hundreds of content pieces, or thousands of analysis points in a single day, the bottleneck shifts from creation to evaluation. Many organizations are discovering that having too much AI-generated content can be as problematic as having too little human-generated content.

The Human Element: What We Lose in Translation

Perhaps the most significant concern about AI’s impact on work efficiency is the potential erosion of human skills and intuition. As AI systems become more sophisticated, there’s a risk that workers may become overly dependent on these tools, leading to a decline in critical thinking and creative problem-solving abilities.

The phenomenon is already visible in various fields. Accountants who rely heavily on AI for analysis may lose their ability to spot anomalies that don’t fit algorithmic patterns. Writers who depend on AI for ideation might find their own creative muscles atrophying. Even in internal communication, over-reliance on AI mediators can reduce the authentic human connections that often drive innovation and team cohesion.

The Quality vs. Quantity Dilemma

AI’s ability to produce work at breakneck speed has created a new challenge: maintaining quality while maximizing quantity. While AI can generate content, analysis, and solutions faster than ever before, the nuanced judgment required to ensure quality often still requires human oversight. This has led to a new type of workflow where speed is balanced against the need for human verification and refinement.

Organizations are learning that the most effective approach isn’t to let AI run unchecked but to create hybrid workflows where AI handles the heavy lifting while humans provide strategic direction and quality control. This balance is crucial for maintaining both efficiency and excellence.

The Skills Evolution: Adapting to an AI-Augmented Future

The workforce of 2025 is rapidly adapting to this new reality. The most successful professionals are those who have learned to work alongside AI rather than compete against it. This means developing skills in AI prompt engineering, understanding how to effectively collaborate with AI systems, and maintaining the uniquely human abilities that AI cannot replicate — such as emotional intelligence, creative thinking, and ethical judgment.

Training programs are evolving to focus on these hybrid skills. Companies are investing in “AI literacy” programs that teach employees not just how to use AI tools but how to think critically about AI outputs and maintain their own professional judgment.

Looking Forward: The Sustainable AI Workplace

As we progress through 2025, the key to harnessing AI’s benefits while avoiding its pitfalls lies in creating sustainable AI integration strategies. This means designing workflows that leverage AI’s speed and efficiency while preserving human creativity, intuition, and well-being.

The most successful organizations are those that view AI not as a replacement for human workers but as a powerful tool that can amplify human capabilities. They’re creating environments where AI handles routine tasks, freeing humans to focus on strategy, creativity, and relationship building — the areas where human intelligence still reigns supreme.

The future of work efficiency isn’t about choosing between human and artificial intelligence; it’s about finding the optimal balance between speed and thoughtfulness, automation and creativity, efficiency and humanity. As we continue to navigate this AI-augmented workplace, the organizations and individuals who succeed will be those who can harness the power of AI while maintaining the essential human elements that drive innovation, empathy, and meaningful work.

In this new landscape, the question isn’t whether AI will continue to transform work efficiency and speed — it’s how we’ll shape that transformation to serve not just our productivity goals but our human values and aspirations as well.

Text

🧠 AI Prompt Frameworks Cheat Sheet

— For Precision, Depth & Control —

1. The C.R.E.A.T.E. Framework

Great for ideation, writing, presentations, messaging, content generation.

Context – Who is this for? What is the situation?
Role – Who should the AI pretend to be?
End Goal – What’s the actual output?
Assets – What input or constraints should be used?
Tone – Formal, casual, motivational, skeptical?
Edges – What to avoid? (e.g., don’t use buzzwords or fluff)

📌 Prompt Template: “You are a [ROLE]. Given the context [CONTEXT], create [END GOAL] using [ASSETS]. Use a [TONE] tone. Avoid [EDGES].”

2. P.A.C.E.D. for Analysis & Evaluation

Best for audits, summaries, comparative reviews, or structured breakdowns.

Purpose – What’s the main objective?
Assumptions – What are we assuming?
Criteria – What are the evaluation metrics or priorities?
Evidence – What data or input is available?
Decision – What’s the output supposed to support?

📌 Prompt Example: “Analyze [TOPIC] with the goal of [PURPOSE]. Use assumptions such as [ASSUMPTIONS], and evaluate using [CRITERIA]. Use [EVIDENCE] to reach a [DECISION].”

3. D.A.R.E. for Problem Solving & Troubleshooting

Ideal for tech, infosec, or strategy bottlenecks.

Define – What’s the actual problem?
Analyze – What factors cause or influence it?
Recommend – What are the practical options?
Execute – Which steps should be taken and how?

📌 Prompt Template: “I’m dealing with [DEFINE]. Based on [ANALYSIS], recommend options. Then provide a step-by-step execution plan.”

4. M.I.N.D. for Complex Thinking & Knowledge Tasks

Use when you want deeper logic, reasoning, or domain expertise.

Map – Lay out all factors or subcomponents
Investigate – Dig into each one
Neutralize Bias – What assumptions should be questioned?
Derive Insight – What do we learn from the full picture?

📌 Prompt Template: “Let’s break down [TOPIC] by mapping all components, investigating each, checking for bias, and deriving actionable insight.”

5. S.P.E.A.R. for Strategic Planning or Writing

Especially good for business plans, proposals, product pitches, or policies.

Situation – What’s the current state?
Problem – What’s the challenge?
Effect – What happens if it continues?
Aspiration – What’s the ideal outcome?
Resolution – What needs to happen?

📌 Prompt Template: “Craft a strategic plan. Current situation: [S]. Problem: [P]. If unaddressed: [E]. We want to achieve: [A]. What’s the path forward? [R]”

6. R.I.G.O.R. for Research/Reports/Infosec/GRC

Great for structured, compliance-oriented work.

Requirement – What is required (regulatory/strategic)?
Information – What data exists?
Gaps – Where’s the risk or missing info?
Options – What could be done about it?
Recommendation – What’s the best move?

📌 Prompt Template: “Based on requirement [R], assess the available information [I], identify any gaps [G], explore options [O], and recommend a course of action [R].”
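The templates above are fill-in-the-blank strings, so they can be filled in with ordinary string formatting. Here is a minimal sketch using the C.R.E.A.T.E. template. The function name `build_create_prompt` and the example field values are made up for illustration and are not part of the cheat sheet.

```python
# The C.R.E.A.T.E. template from the cheat sheet, with the bracketed slots
# turned into named placeholders.
CREATE_TEMPLATE = (
    "You are a {role}. Given the context {context}, create {end_goal} "
    "using {assets}. Use a {tone} tone. Avoid {edges}."
)

REQUIRED_FIELDS = ["context", "role", "end_goal", "assets", "tone", "edges"]


def build_create_prompt(**fields):
    """Fill in the template, refusing to run if any slot is empty or missing."""
    missing = [name for name in REQUIRED_FIELDS if not fields.get(name)]
    if missing:
        raise ValueError("Missing C.R.E.A.T.E. fields: " + ", ".join(missing))
    return CREATE_TEMPLATE.format(**fields)


# Hypothetical example values.
prompt = build_create_prompt(
    context="a team newsletter",
    role="technical editor",
    end_goal="a short summary",
    assets="the attached meeting notes",
    tone="casual",
    edges="buzzwords",
)
print(prompt)
```

Checking for missing fields up front keeps a half-filled template from being sent as a prompt.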

#ai#prompt “frameworks”#butlers jihad is a long way off#in the meantime... well..#“You've gotta be realistic about these things.”

Text

AI Writing Improvement Shootout

The remarkable and sometimes dreadful accomplishments of Artificial Intelligence are dominating the news, and a significant part of these advancements certainly affects writing. Today, from my humble desktop, I can ask an AI chat box to write a story about elephants, critique a paragraph, answer a question, flesh out a concept, or even complete an English writing homework assignment. And the result? Very impressive.

Let’s break that down. The text output is typically free from grammatical and spelling mistakes. It reads smoothly, makes sense, and the content is usually correct.

I wanted to focus on a single AI chat box ability, improving a document. As a writer, I have not taken advantage of this feature for two reasons. The first is that I have a writing limitation; I require a lot of time. Meaning I need more time than the average person to settle on the best words, arrange them in a way that makes sense to me, and then self-edit my work. What I am doing is getting comfortable with what I have created. Only when I am fully confident do I allow my words to be made public.

Having a chat box completely rearrange everything, use unfamiliar words (not my typical vocabulary), and add or subtract topics goes against my method. I do not want to waste people’s time with words that do not meet my standard.

My second reason is that AI chat box words contain a huge fingerprint, and readers are catching on. We prefer human-written words and are getting upset with AI-generated nonsense.

Still, I thought it would be fun to provide the six most popular chatbots (ChatGPT, DeepSeek, Claude, Meta AI, Google Gemini, and Microsoft Copilot) with a paragraph that needs help, and then evaluate the results.

Let’s examine one of my first drafts and pick out a dreadful section. Now, hold on. What about my rant about not wanting the public ever to see something I have claimed to have written, but which was generated by AI? Very true. Therefore, I will look elsewhere.

But where? That is easy—fan fiction. Yes, dedicated fans worldwide spend years developing new plots for their favorite franchise characters. Comic books, movies, books, plays, anime, cartoons, video games, board games, and television shows all have sites where fans post their homebrew stories.

Since novice writers often create fan fiction, their words are typically not edited, and the document is likely to be read by a small number of fans. Thus, people who enjoy fan fiction understand that the quality is not the best. Additionally, due to copyright, trademark, and legal concerns, these documents have been released into the public domain. This wealth of less-than-ideal documents makes fan fiction sites the perfect repository for my experiment.

So, I went to

selected two paragraphs, and here is the first: “Sure thing, Captain,” came her reply. “Won’t be a minute!” It wouldn’t be long before the other six members of the motley crew would be down to join him, and Nick took the solitary moments to consider the strange roads that life had led him down to the moment where he stood, sharing the wonder of the infinite cosmos with his closest friends– his family. They were finally free. Free to be their own. It hadn’t always been like that.

My evaluation is that this does not read well. It seems that the author is trying to make something more exciting than it is, resulting in redundant sentences. In the broader context, the voice is weak, the dialogue is campy, and the narrative lacks focus. This paragraph needs a substantial rewrite.

Well, let’s get a professional to evaluate this paragraph. ProWritingAid gave it:

Grammar score 52% (should be 100%)

Style score 100% (should be 100%)

Glue words (unnecessary words) 52% (Should be less than 40%)

The site:

gave it the following:

Flesch Reading Ease 87.68 (Higher is easier to read; 80 to 90 is roughly a sixth-grade reading level.)

Gunning Fog Scale Level 5.7 (A fog score of 5 is readable, 10 is hard, 15 is difficult.)

Flesch-Kincaid Grade Level 3.02 (Requires a third-grade education to read.)

Dale-Chall Score 5.81 (A score of 4.9 or lower indicates the passage is easily readable by the average fourth grader.)
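For anyone curious where these numbers come from, two of them are simple formulas over word, sentence, and syllable counts. The sketch below uses the standard published Flesch Reading Ease and Flesch-Kincaid Grade Level formulas. The syllable counter is a crude vowel-group heuristic of my own choosing, so the results will not match the scores reported above, which came from a site whose counting method is unknown.

```python
import re


def count_syllables(word):
    """Rough heuristic: one syllable per run of vowels, with a minimum of one."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def text_stats(text):
    """Return (word count, sentence count, syllable count) for a passage."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    return len(words), len(sentences), syllables


def flesch_reading_ease(words, sentences, syllables):
    # Higher scores mean easier text.
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words, sentences, syllables):
    # Approximate US school grade needed to read the text.
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


sample = "They were finally free. Free to be their own. It hadn't always been like that."
w, s, syl = text_stats(sample)
print("Reading ease:", round(flesch_reading_ease(w, s, syl), 2))
print("Grade level:", round(flesch_kincaid_grade(w, s, syl), 2))
```

Both formulas reward short sentences and short words. That is why chopping a passage into tiny sentences and paragraphs raises the Reading Ease score and lowers the grade level without the writing necessarily getting any better.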

I asked ChatGPT to “Improve the following paragraph for readability.”

“Sure thing, Captain,” she called back. “Won’t be a minute!”

The other six members of the motley crew would be joining him soon, but for now, Nick stood alone, savoring a rare moment of solitude. He let his thoughts drift, reflecting on the strange and winding paths that had brought him here—to this point in space, surrounded by the vastness of the cosmos, sharing it all with his closest friends. His family.

They were finally free. Free to live on their own terms.

It hadn’t always been that way.

My evaluation: This reads better but not great. The writer is still trying too hard. Why are there two small paragraphs? Dramatic effect? I guess. The chatbot added some good details. Here is the analysis:

Grammar score 100%

Style score 55%

Glue words (unnecessary words) 46%

Slow pacing 50%

Flesch Reading Ease 92.09

Gunning Fog Scale Level 4.92

Flesch-Kincaid Grade Level 1.81

Dale-Chall Score 5.85

For kicks, I asked ChatGPT, “more aggressive update” and got this:

“Sure thing, Captain,” she shouted back. “Give me a sec!”

The rest of the crew—six misfits bound by grit and luck—would be down soon. Until then, Nick stood alone, staring out into the endless stretch of stars. For a fleeting moment, he let himself feel it—the weight of everything they’d survived, the chaos they’d clawed through to get here.

This wasn’t luck. It was earned.

Now, they were free. Truly free. No chains. No orders. No one left to answer to but themselves.

It hadn’t always been that way. But damn, it was now.

My evaluation: I like the added details, and the result reads slightly better; however, there is a clear problem. It reads like AI-generated text and has lost the writer’s voice. And three short paragraphs? Strange…

I have never used Deepseek due to privacy concerns, but since it is ranked #2, I created an account using an email address I check once a year. Here is the result:

“You got it, Captain!” she called back. “Be right there!”

The rest of the crew—all six of them—would join him soon, but for now, Nick stood alone, savoring a rare moment of peace. His thoughts drifted, retracing the strange and winding paths that had led him here—to this quiet corner of the cosmos, surrounded by infinite stars, yet never truly alone. Not with them. His friends. His family.

They were free at last. Free to live as they chose.

But it hadn’t always been that way.

My evaluation: Not much of an improvement, and it added an exclamation point! Boo! Again, the two added short paragraphs. Here is the analysis:

Grammar score 100%

Style score 100%

Glue words (unnecessary words) 47%

Slow pacing 50%

Flesch Reading Ease 97.52

Gunning Fog Scale Level 4.12

Flesch-Kincaid Grade Level 0.82

Dale-Chall Score 5.29

Since I was there… “more aggressive update”

“Yeah, yeah, Captain—keep your boots on!” she barked back. “I’m moving!”

The rest of the crew would be on his ass soon enough, but for now, Nick stood alone—just him, the void, and the ghosts of every bad call that had dragged them here. No regrets. No turning back. Just the endless black and the six lunatics he called family.

Free. Finally, goddamn free. No chains. No rules. Just whatever the hell they carved out for themselves.

But freedom? Yeah, that shit came with a price. And they’d paid in blood.

My evaluation: This reads a lot better. The redundancy is gone, and some nice added details have been included; it no longer reads like AI-generated text. Yet, there are swear words, which can be a big turn-off. What if this was a church newsletter? The two added paragraphs are longer, which I guess makes them a little more appropriate. The large invented details might clash with other text.

I was unaware of Claude.ai, so I created an account. Here is the result:

“Sure thing, Captain,” she called back. “Won’t be a minute!”

The other six members of the motley crew would be joining him soon, but for now, Nick stood alone, savoring a rare moment of solitude. He let his thoughts drift, reflecting on the strange and winding paths that had brought him here—to this point in space, surrounded by the vastness of the cosmos, sharing it all with his closest friends. His family.

They were finally free. Free to live on their own terms.

It hadn’t always been that way.

My evaluation: There is not much improvement. Again, with the small added paragraphs. I will have to give this some thought. Perhaps short paragraphs are now the rage. Here is the analysis:

Grammar score 100%

Style score 55%

Glue words (unnecessary words) 47%

Slow pacing 50%

Flesch Reading Ease 99.87

Gunning Fog Scale Level 2.35

Flesch-Kincaid Grade Level 0.53

Dale-Chall Score 5.99

Here is the “more aggressive update” result:

“Sure thing, Captain!” she called back. “Won’t be a minute!”

Nick stood alone, savoring the solitude. Soon the other six would join him, but for now he had this—silence among the stars.

He let his thoughts drift. Strange how life had twisted and turned to bring him here. Floating in the vastness with his closest friends.

His family.

They were free now. Free to live on their own terms.

It hadn’t always been that way.

My evaluation: Better, but it reads choppy. It does not read AI-generated, but now there are six paragraphs, which is overkill. Here is what Gemini AI did:

“Sure thing, Captain,” she called back. “Won’t be a minute!”

The other six members of the motley crew would join him soon. For now, Nick stood alone, savoring a rare moment of solitude. His thoughts drifted, reflecting on the strange, winding paths that had brought him to this point: surrounded by the vastness of space, yet sharing it all with his closest friends. His family.

They were finally free—free to live on their own terms.

It hadn’t always been that way.

My evaluation: There is very little change, except for breaking it into four paragraphs. Here is the analysis:

Grammar score 100%

Style score 100%

Glue words (unnecessary words) 43%

Slow pacing 50%

Flesch Reading Ease 90.04

Gunning Fog Scale Level 5.45

Flesch-Kincaid Grade Level 2.27

Dale-Chall Score 5.87

Some clear improvement in readability. Here is the “more aggressive update” result:

“Sure thing, Captain,” she called back. “Won’t be a minute!”

The rest of the crew—all six—would shatter this quiet soon enough. For now, Nick stood utterly alone, claiming a precious sliver of silence in the void. His mind plunged, unbidden, down the twisting, improbable spiral of choices and chances that had hurled him here: an infinitesimal speck adrift in the star-dusted abyss, yet anchored to meaning by his friends. His family.

They were free. Finally, fiercely free. Free to forge their own destiny.

Such liberty was a raw, intoxicating thing, almost too new to trust.

My evaluation: This is very similar to the ChatGPT text. It reads better, but it is clear that the AI generated it. Here is what Microsoft Copilot generated:

“Sure thing, Captain!” she called back. “Won’t be a minute!”

For now, Nick stood alone, relishing a rare moment of solitude. Soon, the other six members of their motley crew would join him, but in this brief interlude, he let his thoughts wander. He reflected on the long, twisting journey that had led him here—to this place among the stars, surrounded by the endless expanse of the cosmos, sharing it all with the people who mattered most. His closest friends. His family.

They were free at last. Free to live life on their own terms.

My evaluation: This reads more tightly and has a better flow. It combined the last two sentences into one paragraph, which I like, but added an exclamation point, which I do not. The three paragraphs seem to be a trend. Of all the updates, this was my favorite. Here is the analysis:

Grammar score 100%

Style score 100%

Glue words (unnecessary words) 46%

Slow pacing 33%

Flesch Reading Ease 88.83

Gunning Fog Scale Level 5.32

Flesch-Kincaid Grade Level 2.36

Dale-Chall Score 6.57

Some improvement in readability. Here is the “more aggressive update” result:

“Sure thing, Captain!” she called back. “Won’t be a minute!”

Nick stood alone, relishing the rare quiet. Soon, the rest of the crew would join him, but for now, it was just him—adrift in the vastness of space, the stars stretching endlessly around him.

They had fought for this. Earned it.

Freedom.

It hadn’t always been that way.

My evaluation: This tightened things up, but I have never been a fan of one-word sentences or tiny paragraphs.

Here is the next paragraph. This time, I will skip the analysis.

“No, of course not. She told me about this place some time ago...before you and I... you know...and I thought it would be, well, fun to spend a few weeks in the early 2000 era. You have to admit, driving the car is exhilarating and these bed and breakfast places have been wonderful. I must tell you though; I didn’t know exactly how it would be. I suppose I took a chance...do you want to go someplace else?”

ChatGPT:

“No, of course not. She told me about this place a while ago—before you and I... well, you know—and I thought it might be fun to spend a few weeks in the early 2000s. You have to admit, driving the car is exhilarating, and these bed-and-breakfast places have been amazing. I’ll be honest, I wasn’t entirely sure what to expect. I guess I took a chance. So... do you want to go somewhere else?”

ChatGPT more aggressive update:

“No, of course not. She mentioned this place a while back—before things started between us—and I figured, why not? A few weeks in the early 2000s sounded like a blast. Come on, driving that car is a rush, and these bed-and-breakfasts have been incredible. I won’t lie—I didn’t know exactly what I was walking into, but I went for it. So, what do you say? Ready to move on, or are you still in?”

Deepseek:

“No, of course not. She told me about this place a while ago... before you and I... well, before everything. I thought it’d be fun to spend a few weeks in the early 2000s. You have to admit—driving that car is exhilarating, and these bed-and-breakfasts have been wonderful.

But I’ll be honest—I wasn’t sure how it’d turn out. I took a chance. If you’re not happy here... we can go somewhere else?”

Deepseek more aggressive update:

“Hell no. She told me about this dump before we got tangled up. Figured it’d be fun to time-jump to the f-ing 2000s - at least the car’s got some balls to it, and the beds are decent.

Look, I didn’t promise you paradise. I took a shot. If you’ve got a problem with it, spit it out. Otherwise quit whining and enjoy the ride, or we can bail right now.”

Note: I edited down the F-word in the above paragraph.

Claude.ai:

“No, of course not. She told me about this place some time ago—before you and I... you know—and I thought it would be fun to spend a few weeks in the early 2000s era. You have to admit, driving the car is exhilarating, and these bed and breakfast places have been wonderful. I must tell you though, I didn’t know exactly how it would be. I suppose I took a chance. Do you want to go someplace else?”

Claude.ai more aggressive update:

“No, of course not. She mentioned this place before we... before us. I thought it’d be fun—a few weeks in the early 2000s. The driving’s exhilarating, right? And these B&Bs have been wonderful. But honestly? I didn’t know what to expect. I took a gamble. Want to go somewhere else?”

Google Gemini:

“No, of course not. She told me about this place a while ago—before you and I... well, you know. I just thought it would be fun to spend a few weeks in the early 2000s. You have to admit, driving that car is exhilarating, and these bed and breakfasts have been wonderful. Honestly, though, I didn’t know exactly what to expect. I suppose I took a chance… Do you want to go somewhere else?”

Google Gemini more aggressive update:

“God, no. She told me about this place ages ago—way before we were a thing. My bright idea, right? A quick dip into the early 2000s, just for kicks. And this car is a blast, you can’t deny it. These B&Bs? Surprisingly sweet. But look, cards on the table: I didn’t really know what this would be. Total shot in the dark. So, if you hate it... seriously, we can pull the plug. Go somewhere else?”

Microsoft Copilot:

“No, of course not. She mentioned this place to me a while ago—before you and I… well, you know. I thought it would be fun to spend a few weeks in the early 2000s. You have to admit, driving that car is exhilarating, and these bed-and-breakfasts have been fantastic.

I’ll be honest—I didn’t know exactly what to expect. I suppose I took a chance.

Do you want to go somewhere else?”

Microsoft Copilot more aggressive update:

“No, of course not. She told me about this place a while ago—before you and I… well, you know. I thought it’d be exciting to spend a few weeks in the early 2000s.

Driving that car? Exhilarating. These bed-and-breakfasts? Fantastic.

The ChatGPT-generated results impressed me; they had a good flow and sounded natural. Wow, Deepseek had a serious potty mouth and desperately needs a “no profanity” setting. Its aggressive result read raw, but I liked the first result, which had some good improvements.

Claude.ai did not clean up much on the first pass, but the aggressive results showed good improvement. The aggressive results on Google Gemini read the best. Microsoft Copilot was the only one to break it up into three paragraphs, and then it combined them back in the aggressive update. Strange.

My conclusion is that using AI to clean up a document still has room for improvement, and no chatbot stands out as a clear winner. Yes, there were improvements, but flaws were also introduced. These include added concepts that may not make sense in other parts of the document, micro-paragraphs, swear words, a changed tone, and a clear AI fingerprint.

The universal use of micro-paragraphs deeply confused me.

Does.

Breaking.

A.

Thought.

Into.

Ten.

Paragraphs.

Help.

The.

Reader?

I am going to apply some noodle time to figure that one out. Stay tuned for a future article.

For now, I do not recommend cleaning up a document with AI because the results are too unpredictable. One would think that there would be at least some consistency among the top five chatbots. All I asked for was to improve the readability, which, in my mind, should be equivalent to accepting all the changes from ProWritingAid, Hemingway, and Grammarly. I expected small but powerful changes. However, facts were added, which is far outside the realm of readability. I would have understood if I had asked the chatbot to make the document more exciting, dramatic, thrilling, romantic, or funny.

Still, I see the potential in augmenting a document with AI, and perhaps in five years, chatbots will improve to the point where they will be powerful enough to help yet tame enough to trust. For now, I will stick with my trusty keyboard and a bonkers mind to polish my documents.

PS, funny side story. I always run my articles through ProWritingAid and Grammarly. They had many suggested improvements for the AI-generated work. I did not accept them, but I found it amusing that state-of-the-art AI still has grammar issues. And the original two paragraphs? Many suggestions…

You’re the best -Bill

June 04, 2025

Hey, book lovers, I published four. Please check them out:

Interviewing Immortality. A dramatic first-person psychological thriller that weaves a tale of intrigue, suspense, and self-confrontation.

Pushed to the Edge of Survival. A drama, romance, and science fiction story about two unlikely people surviving a shipwreck and living with the consequences.

Cable Ties. A slow-burn political thriller that reflects the realities of modern intelligence, law enforcement, department cooperation, and international politics.

Saving Immortality. Continuing in the first-person psychological thriller genre, James Kimble searches for his former captor to answer his life’s questions.

These books are available in softcover on Amazon and in eBook format everywhere.


Text

The use of AI in academics is a bit of a minefield: it has the potential both to be a very useful tool and to do a lot of harm, depending on how it's used and which type of AI is used. It is therefore important to draw a distinction between generative AI, such as ChatGPT, and AI used to identify and classify things. The latter has been used to advance science for years now, with things like an AI identifying the different ways proteins can be folded, which helps in cancer research, and AI being used to classify large data sets in astronomy. What we're talking about here is generative AI, specifically large language models (LLMs), which are entirely different from the AI previously used in science. Firstly, LLMs are trained on vast datasets containing billions of sources from the internet, everything from blogs to newspapers to tweets to scientific articles, all with the goal of making a computerised model of human language usage.

There is no way for us end users to know what is and what isn't included in an LLM's training sample, and therefore we can't determine whether its output is reliable information or not. But giving reliable information to the end user is not what LLMs were designed for in the first place. LLMs were designed to give output in a way that mimics the natural ways we use language. An LLM is much closer to the predictive text function on modern phone keyboards than it is to a search engine like Google. It gives a plausible-sounding response to any query, but in reality it merely predicts what word is most likely to come next in the context of any given sentence. These are the biggest drawbacks of LLMs. There's no way of knowing whose work is being used, and the model is not doing anything creative or even particularly intelligent. It's merely predicting a likely response to any given question without verifying whether that response is even correct. If it's used to write a whole essay, for example, that is not just plagiarism, but a type of plagiarism where the original sources cannot be cited.
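To make the predictive-text comparison concrete, here is a toy sketch of next-word prediction. It is a simple bigram counter, not how a real LLM works internally (those use large neural networks over tokens), and the function names and the tiny corpus are made up for illustration. The idea of picking the most likely continuation from what was seen in the training text is the same, though.

```python
from collections import Counter, defaultdict

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model: dict[str, Counter], word: str):
    """Return the word seen most often after `word`, or None if it was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Hypothetical miniature "training set"
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # prints "cat", the most frequent follower of "the"
```

Notice that nothing in this sketch checks whether the predicted word is true or sensible. It only reflects what appeared in the training text.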

If an LLM is used to write assignments, it definitely breaks down your critical thinking and creativity. Again, it doesn't do anything creative but merely predicts likely responses; there's no actual thinking behind it. Merely accepting its writing as a correct answer and handing that in for assignments means that students don't learn anything new, and especially don't learn the principles of thinking in and of itself. They won't learn how to read and evaluate text for themselves, how to formulate new ideas, how to expand on old ones, how to find reliable sources, how to reason critically, how to formulate arguments, or how to think through a problem logically. In other words, students miss out on all the core points of education, all the important skills that are supposed to be learned in high school language classes. That makes them more susceptible to misinformation and disinformation, and therefore easier to manipulate in any political direction, as they cannot evaluate ideas for themselves.

And that is the big danger that generative AI presents to us as a society. Students already don't see the point of doing literature analysis in high school language classes, a problem that has been present for decades, but unlike before, they now have an easy out and can choose not to learn these critical skills at all.


Text

1. Structural Foundations of the SMART Visa Program

1.1 Legislative Architecture

The SMART Visa operates under:

Royal Decree on SMART Visa B.E. 2561 (2018)

Thailand 4.0 Economic Policy Framework

BOI Investment Promotion Act (No. 4) B.E. 2560

1.2 Interagency Governance

Primary Authority: Board of Investment (BOI)

Implementation Partners:

Immigration Bureau (visa issuance)

Ministry of Digital Economy and Society (tech qualifications)

Ministry of Higher Education (academic validation)

2. Category-Specific Qualification Matrix

2.1 SMART-T (Specialists)

Technical Thresholds:

Salary Floor: THB 200,000/month (USD 5,800)

Experience Validation:

5+ years in qualifying field

Patent holders given priority

PhD waivers for certain disciplines

Industry Prioritization:

Biotechnology (Gene Therapy, Precision Medicine)

Advanced Manufacturing (Robotics, 3D Printing)

Digital Infrastructure (AI, Quantum Computing)

2.2 SMART-I (Investors)

Due Diligence Process:

Phase 1: BOI business plan review (45 days)

Phase 2: Anti-money laundering clearance

Phase 3: Investment tracing audit

2.3 SMART-E (Entrepreneurs)

Startup Validation Framework:

Tier 1 Incubators: DEPA, Thai Venture Capital Association

Minimum Traction Metrics:

THB 10M ARR or

50,000 MAU or

Series A funding

Capital Requirements:

Bootstrapped: THB 600,000 liquid

Funded: Minimum THB 5M valuation

3. Advanced Application Mechanics

3.1 Document Authentication Protocol

Educational Credentials:

WES or IQAS evaluation for non-Thai degrees

Notarized Thai translations

Employment History:

Social security cross-verification

Reference checks with former employers

3.2 Technical Review Process

Stage 1: Automated system screening

Stage 2: BOI specialist committee review

Stage 3: Final approval by Deputy Secretary-General

4. Privilege Structure and Limitations