#AutoML

#sub media#gaza#palestine#israel#operation iron sword#hamas#habsora#ai#israel defense forces#idf#google#amazon#youtube#dahiya doctrine#nizoz#storm clouds#iai harpy#project nimbus#automl#elbit systems#israeli occupation#genocide#war crimes

Is AutoML Replacing Data Scientists? Understanding the Evolving Role

In the rapidly accelerating world of artificial intelligence and machine learning, a question frequently echoes through the halls of data science departments and online forums: "Is AutoML going to take my job?" Automated Machine Learning (AutoML) tools, which can automate significant portions of the machine learning pipeline, had become considerably more sophisticated by mid-2025. Yet the prevailing view among practitioners is that AutoML is not replacing data scientists but profoundly evolving their role.

Instead of a threat, AutoML represents a powerful set of tools that augment human capabilities, shifting the focus of data scientists from tedious, repetitive tasks to higher-value, more strategic endeavors.

What is AutoML and Why the Buzz?

AutoML platforms are designed to automate various stages of the machine learning workflow that traditionally required significant manual effort and deep expertise. These stages often include:

Data Preprocessing: Handling missing values, encoding categorical features, scaling data.

Feature Engineering: Automatically generating new features from raw data that might improve model performance.

Algorithm Selection: Testing a wide array of machine learning algorithms (e.g., logistic regression, random forests, neural networks) to find the best fit for a given problem.

Hyperparameter Tuning: Optimizing the configuration settings of chosen algorithms to maximize their performance.

Model Validation & Evaluation: Automatically performing cross-validation and evaluating models using various metrics.

Model Deployment: Streamlining the process of putting trained models into production.
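
As a rough illustration of the first stage in this list, the minimal sketch below performs three common preprocessing steps by hand: mean imputation for missing numeric values, z-score scaling, and one-hot encoding of a categorical column. The `preprocess` function and the toy two-column layout are hypothetical; AutoML tools choose and fit such transforms automatically, usually through established libraries rather than hand-written code.

```python
from statistics import mean, pstdev
from typing import Optional


def preprocess(
    numeric: list[Optional[float]], categories: list[str]
) -> list[list[float]]:
    """Impute and scale one numeric column, one-hot encode one categorical column."""
    # 1. Mean imputation: replace missing values (None) with the observed mean.
    observed = [x for x in numeric if x is not None]
    fill = mean(observed)
    imputed = [fill if x is None else x for x in numeric]

    # 2. Z-score scaling: subtract the mean, divide by the population std dev.
    mu, sigma = mean(imputed), pstdev(imputed)
    scaled = [(x - mu) / sigma if sigma > 0 else 0.0 for x in imputed]

    # 3. One-hot encoding: one 0/1 column per distinct category (sorted for stability).
    levels = sorted(set(categories))
    one_hot = [[1.0 if c == level else 0.0 for level in levels] for c in categories]

    # Combine into one feature row per sample: [scaled value, *one-hot columns].
    return [[s, *oh] for s, oh in zip(scaled, one_hot)]


rows = preprocess([1.0, None, 3.0], ["red", "blue", "red"])
print(rows)
```

In a real pipeline, the imputation mean, scaling statistics, and category levels would be learned on training data only and then reused unchanged on validation and production data.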

The appeal of AutoML is undeniable: it democratizes machine learning, allowing "citizen data scientists" and domain experts with less coding expertise to build basic, functional models quickly. It also significantly accelerates the experimentation phase for experienced data scientists, allowing them to test hundreds of model configurations in a fraction of the time.

Limitations of AutoML: Where Human Expertise Remains Indispensable

While AutoML excels at automating repetitive, computationally intensive tasks, it has inherent limitations that prevent it from fully replacing the nuanced, creative, and critical thinking abilities of a human data scientist:

Problem Formulation and Business Acumen: AutoML doesn't understand the underlying business problem, the strategic goals, or the specific context in which a model will be used. A human data scientist translates a vague business challenge into a well-defined data science problem.

Data Understanding and Quality: AutoML assumes clean, relevant, and unbiased data. It struggles with messy, incomplete, or highly specialized datasets that require deep domain knowledge to clean, transform, and interpret correctly. Identifying and mitigating subtle biases in raw data is still a fundamentally human task.

Feature Engineering for Novelty: While AutoML can automate generic feature engineering, creating truly innovative and insightful features often requires a deep understanding of the domain and a spark of human creativity that algorithms currently lack.

Model Interpretability and Explainability (XAI): AutoML often produces complex, "black-box" models that are difficult to interpret. For high-stakes applications (e.g., healthcare, finance, legal), understanding why a model made a specific prediction is crucial for trust, accountability, and regulatory compliance. Explaining model decisions to non-technical stakeholders requires human communication skills.

Handling Unstructured and Specialized Data: While improving, AutoML tools still face challenges with highly unstructured data (e.g., complex text, audio, video) or specialized data types (e.g., graph data, genomics) that often require custom pre-processing and model architectures.

Ethical AI and Bias Mitigation: AutoML tools are becoming more equipped with bias detection features, but identifying and ethically mitigating bias rooted in societal inequalities or historical data requires human judgment, ethical reasoning, and careful consideration of fairness definitions, which AutoML cannot fully automate.

Deployment and MLOps Complexity: While AutoML can deploy models, setting up robust, scalable, and continuously monitored MLOps pipelines for complex production environments (handling data drift, model decay, infrastructure challenges) still requires significant engineering expertise.
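
One common, model-agnostic way to probe a black-box model is permutation importance: shuffle one feature's column and measure how much a score drops. The sketch below assumes the model is any callable that maps a feature row to a predicted label; `permutation_importance` and the toy `black_box` model are hypothetical names for illustration (scikit-learn ships a production version in `sklearn.inspection`).

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]


def accuracy(model: Callable[[Row], int], X: list[Row], y: list[int]) -> float:
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(
    model: Callable[[Row], int], X: list[Row], y: list[int],
    n_repeats: int = 10, seed: int = 0,
) -> list[float]:
    """Mean accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild rows with only feature j permuted; other features untouched.
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def black_box(x: Row) -> int:
    # Toy "black box" that only looks at feature 0; feature 1 is ignored.
    return int(x[0] > 0.5)


data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [int(x[0] > 0.5) for x in X]
print(permutation_importance(black_box, X, y))
```

Feature 0 shows a large drop while feature 1 shows none, which reveals what the model relies on without opening it up. Explaining *why* that dependence is acceptable to stakeholders remains the human part.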

The Evolving Role of the Data Scientist: From Coder to Strategist

Instead of elimination, AutoML is driving an exciting evolution in the data scientist's role:

Focus on Problem Definition & Strategy: Data scientists will spend more time translating complex business questions into solvable data problems, defining success metrics, and aligning AI solutions with organizational goals.

Data Curation & Governance: Their focus shifts to ensuring data quality, representativeness, and ethical sourcing, becoming custodians of high-integrity data pipelines.

Advanced Feature Engineering: While AutoML handles basic features, data scientists will specialize in creating novel, high-impact features based on deep domain knowledge.

Model Interpretation & Explainability: They will be responsible for unpacking "black-box" models, communicating insights to diverse audiences, and ensuring interpretability for trust and compliance.

Bias Detection & Ethical AI: Data scientists become crucial in identifying, measuring, and mitigating biases, ensuring models are fair, equitable, and adhere to ethical guidelines.

Experimentation & Validation Expert: They leverage AutoML to rapidly prototype and iterate, then dive deeper to validate and fine-tune the most promising models, ensuring their real-world applicability and robustness.

Consultant & Communicator: The ability to communicate complex technical findings to non-technical stakeholders, influence decisions, and lead cross-functional teams becomes paramount.

MLOps Collaboration: While not necessarily becoming MLOps engineers, data scientists will need a strong understanding of deployment, monitoring, and pipeline management to effectively collaborate with engineering teams.
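
Measuring bias usually starts with a concrete fairness metric. As one example among several competing definitions, the sketch below computes the demographic parity difference: the largest gap between groups in the rate of positive predictions. The function name and the loan-approval data are hypothetical; choosing which fairness definition suits an application is exactly the kind of judgment call described above.

```python
from collections import defaultdict


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups."""
    positives: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


# Hypothetical loan-approval predictions (1 = approve) for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(demographic_parity_difference(preds, groups))  # 0.5 (0.75 vs 0.25)
```

A value of 0 means every group receives positive predictions at the same rate; whether closing the gap is the right goal depends on context, base rates, and which other fairness criteria matter.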

Conclusion: Augmentation, Not Replacement

In mid-2025, AutoML is undeniably a powerful force democratizing certain aspects of machine learning. However, it's a tool for augmentation, not replacement. The human data scientist's unique blend of business acumen, domain expertise, critical thinking, problem-solving skills, and ethical reasoning remains irreplaceable.

For aspiring and current data scientists, the key is not to fear automation but to embrace it. By leveraging AutoML for the repetitive, lower-level tasks, data scientists are freed to focus on the truly challenging, creative, and impactful problems that require a human touch. The future of data science is one where human intelligence, amplified by automated tools, drives unprecedented innovation and value.

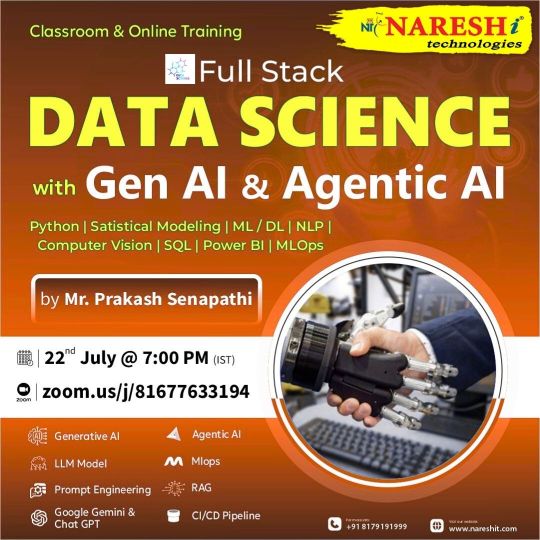

📊 Future-Proof Your Career with Full Stack Data Science & AI! 🤖

The demand for skilled Data Scientists and AI professionals is skyrocketing. Get ahead with the Full Stack Data Science & AI program by Mr. Prakash Senapathi, starting July 22 at 7:00 PM IST — a comprehensive course designed to turn you into an industry-ready professional.

👨‍🏫 Learn directly from an expert with real-world industry experience. This program blends foundational concepts with modern tools and real-time projects to ensure hands-on learning.

🚀 Course Highlights:

Python, NumPy, Pandas, Matplotlib, Seaborn

Advanced Machine Learning Algorithms

Deep Learning using Keras & TensorFlow

AI Concepts: NLP, Computer Vision, Chatbots, and Speech Recognition

SQL, Data Warehousing, Power BI & Tableau Visualizations

Cloud Deployment using AWS, Azure, and GCP basics

Streamlit Dashboards & Flask App APIs

Exploratory Data Analysis (EDA) & Feature Engineering

Model Optimization, Cross-Validation & Hyperparameter Tuning

GitHub Workflow, Version Control & MLOps with CI/CD

Capstone Projects with Resume and LinkedIn Portfolio Review

Time Series Forecasting & Recommender Systems

Data Ethics, Bias Mitigation & Explainable AI (XAI)

🎯 Suitable for:

Graduates, Working Professionals, and Tech Enthusiasts

Anyone aiming to pivot into AI, ML, or Data Analytics

Business Analysts transitioning into tech roles

Developers seeking a transition to ML/AI-focused jobs

Entrepreneurs aiming to use AI in real-world products

💡 Extras:

Real-world datasets from finance, healthcare & e-commerce

Weekly mini-projects and quizzes

Lifetime access to recorded sessions + Certification

Mock technical interviews, resume guidance & job referrals

Supportive learner community for networking & Q&A

Tools walkthrough: Jupyter, Colab, GitHub, Docker Basics

Special module on AI Trends: GenAI, ChatGPT APIs, and AutoML

🔗 Register Now: https://tr.ee/4DF2gi 🎓 All Free Demos: https://linktr.ee/ITcoursesFreeDemos

#DataScienceTraining#AIwithPython#FullStackDS#NareshIT#MachineLearningCourse#AITools#CareerInDataScience#FreeDemo#PowerBI#MLBootcamp#DataAnalytics#PythonAI#MLOps#GitHubProjects#AIForBeginners#TensorFlowTraining#TableauDashboards#ChatbotDevelopment#SpeechAI#AzureAI#StreamlitApps#LinkedInReady#ExplainableAI#AutoML#GenAI#TimeSeriesForecasting#RecommenderSystems#DockerForAI#freshers#jobseekers

#EdgeAI#AutoML#EmbeddedSystems#OpenSourceAI#KenningFramework#AnalogDevices#Antmicro#powerelectronics#powersemiconductor#powermanagement

#AutoML#EdgeAI#EmbeddedSystems#OpenSourceAI#MachineLearning#EdgeImpulse#AIInnovation#IoTDevelopment#Timestech#electronicsnews#technologynews

Project Title: automated hyperparameter tuning for a convolutional neural network on CIFAR-100, using Keras Tuner’s Hyperband and Bayesian Optimization - Keras-Exercise-106

Here’s a far more advanced Keras project focused on automated hyperparameter tuning for a convolutional neural network on CIFAR-100, using Keras Tuner’s Hyperband and Bayesian Optimization. It builds on the previous project by bringing optimization into both the model architecture and the training pipeline, with multi-algorithm search, advanced callbacks, checkpointing, and evaluation. Project…
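
Hyperband is built on successive halving: evaluate many configurations on a small budget, keep the best fraction, and give the survivors more budget. The stdlib-only sketch below shows that core loop on a made-up objective. It is not Keras Tuner's API; `toy_loss` is a hypothetical stand-in for training a CNN for a given number of epochs, and full Hyperband additionally runs several such brackets with different starting budgets.

```python
import math
import random


def toy_loss(learning_rate: float, epochs: int) -> float:
    """Hypothetical validation loss: best near lr=1e-3, lower with more epochs."""
    return (math.log10(learning_rate) + 3) ** 2 + 1.0 / epochs


def successive_halving(
    n_configs: int = 27, min_epochs: int = 1, eta: int = 3, seed: int = 0
) -> tuple[float, int]:
    rng = random.Random(seed)
    # Sample learning rates log-uniformly between 1e-5 and 1e-1.
    configs = [10 ** rng.uniform(-5, -1) for _ in range(n_configs)]
    epochs = min_epochs
    while True:
        # Evaluate every surviving config at the current budget.
        scored = sorted(configs, key=lambda lr: toy_loss(lr, epochs))
        # Keep the best 1/eta of them.
        configs = scored[: max(1, len(configs) // eta)]
        if len(configs) == 1:
            # Return the winner and the budget of the final round.
            return configs[0], epochs
        epochs *= eta  # survivors get eta times more training


best_lr, final_epochs = successive_halving()
print(f"best learning rate ~ {best_lr:.2e}, final round at {final_epochs} epochs")
```

With 27 configurations and `eta=3`, the rounds run 27 configs at 1 epoch, 9 at 3 epochs, and 3 at 9 epochs. Because this toy loss ranks learning rates identically at every budget, early rounds never discard the eventual winner; with real training curves, low-budget rankings can mislead, which is one reason Hyperband hedges across brackets.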

#MachineLearning#AutoML#DataScience#PredictiveAnalytics#ModelInterpretability#BigData#DigitalTransformation#AI

AutoML: Automating the AI Development Process

The world of artificial intelligence (AI) is evolving rapidly, and automating the AI development process has become a game-changer for businesses, developers, and data scientists. AutoML, short for Automated Machine Learning, is a revolutionary approach that simplifies the creation of machine learning models, making AI accessible to a broader audience. By streamlining complex tasks like data preprocessing, model selection, and hyperparameter tuning, AutoML empowers organizations to harness AI's potential without requiring deep expertise in data science. This blog explores how AutoML is transforming the AI landscape, its benefits, challenges, and practical applications.

What is AutoML?

AutoML refers to the process of automating the end-to-end workflow of machine learning, from data preparation to model deployment. Traditionally, building an AI model involves multiple intricate steps, such as feature engineering, algorithm selection, and performance optimization. These tasks demand significant time, expertise, and computational resources. AutoML platforms simplify this by using algorithms to automate repetitive tasks, enabling even non-experts to create high-performing models.

The Core Components of AutoML

AutoML systems typically focus on automating several key stages of the machine learning pipeline:

Data Preprocessing: Cleaning and preparing raw data for analysis.

Feature Engineering: Automatically selecting and transforming variables to improve model performance.

Model Selection: Choosing the best algorithm for a given task, such as decision trees, neural networks, or regression models.

Hyperparameter Tuning: Optimizing the settings fixed before training (hyperparameters), such as learning rate or tree depth, to achieve better accuracy.

Model Evaluation: Assessing model performance using metrics like accuracy, precision, or recall.

By handling these tasks, AutoML reduces the manual effort required, making AI development faster and more efficient.
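
The evaluation metrics named in the list have simple definitions for binary classification. The minimal sketch below computes accuracy, precision, and recall, assuming 0/1 labels with 1 as the positive class; the function name is hypothetical, and returning 0.0 when precision or recall is undefined is one convention among several.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, and recall for binary labels (1 = positive class)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return {
        "accuracy": correct / len(y_true),
        # Of everything predicted positive, how much was actually positive?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything actually positive, how much did the model find?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


print(classification_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
# accuracy 0.6, precision 2/3, recall 2/3
```

Which metric an AutoML run should optimize is a problem-formulation decision: fraud detection, for example, often cares more about recall than raw accuracy.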

Why AutoML Matters

The rise of AutoML is driven by the increasing demand for AI solutions across industries. At the same time, a shortage of skilled data scientists and the complexity of traditional machine learning workflows create barriers for many organizations. AutoML addresses these challenges by democratizing AI, enabling businesses of all sizes to adopt intelligent solutions.

Bridging the Skills Gap

One of the most significant advantages of AutoML is its ability to lower the entry barrier for AI development. Non-technical professionals, such as business analysts or domain experts, can use AutoML platforms to build models without writing complex code. This empowers teams to focus on solving business problems rather than getting bogged down in technical details.

Accelerating Development Timelines

Automating the AI development process can significantly reduce the time required to build and deploy models. Work that once took weeks or months can, in suitable cases, be done in days or even hours. This speed is critical for businesses that need to respond quickly to market changes or customer demands.

Cost Efficiency

By reducing the need for specialized talent and minimizing manual effort, AutoML lowers the cost of AI development. Small and medium-sized enterprises (SMEs) can now access AI tools that were previously reserved for large corporations with substantial budgets.

How AutoML Works

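AutoML platforms use search and optimization algorithms to automate the machine learning pipeline. Common techniques include Bayesian optimization and genetic (evolutionary) algorithms for tuning models and hyperparameters and, for neural networks, neural architecture search (NAS), which can itself be driven by reinforcement learning or evolutionary methods. Together, these techniques explore candidate models to identify the best ones for a given dataset.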
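
To make the idea of a genetic (evolutionary) search concrete, here is a deliberately small sketch: a population of hyperparameter candidates is scored, the better half survives, and mutated copies of the survivors replace the rest. The objective `toy_score` and its optimum are invented for illustration; real platforms score candidates by training and validating actual models, and many also use crossover, which this sketch omits.

```python
import random


def toy_score(depth: int, learning_rate: float) -> float:
    """Hypothetical validation score; peaks at depth=6, learning_rate=0.1."""
    return -((depth - 6) ** 2) - 100 * (learning_rate - 0.1) ** 2


def mutate(cand: tuple[int, float], rng: random.Random) -> tuple[int, float]:
    depth, lr = cand
    # Nudge depth by -1/0/+1 and scale lr by up to +/-30%, clamped to valid ranges.
    depth = min(12, max(1, depth + rng.choice([-1, 0, 1])))
    lr = min(1.0, max(0.001, lr * rng.uniform(0.7, 1.3)))
    return depth, lr


def evolve(generations: int = 30, pop_size: int = 10, seed: int = 0) -> tuple[int, float]:
    rng = random.Random(seed)
    population = [(rng.randint(1, 12), rng.uniform(0.001, 1.0)) for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the better-scoring half unchanged (elitism).
        population.sort(key=lambda c: toy_score(*c), reverse=True)
        survivors = population[: pop_size // 2]
        # Variation: refill the population with mutated copies of survivors.
        children = [mutate(rng.choice(survivors), rng) for _ in range(pop_size - len(survivors))]
        population = survivors + children
    return max(population, key=lambda c: toy_score(*c))


best = evolve()
print(best, toy_score(*best))
```

Because survivors are carried over unchanged, the best score never gets worse from one generation to the next; mutation supplies the exploration.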

Step-by-Step Process

Data Input: Users upload their dataset, which could include structured data (e.g., spreadsheets) or unstructured data (e.g., images or text).

Preprocessing: The platform cleans the data, handles missing values, and normalizes features.

Model Exploration: AutoML tests multiple algorithms and architectures to find the best fit.

Optimization: The system fine-tunes hyperparameters to maximize performance.

Deployment: Once the model is ready, AutoML tools often provide options for deployment, such as APIs or integration with existing systems.
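
The Model Exploration and Optimization steps come down to scoring candidates on held-out data and keeping the winner. Below is a stdlib-only sketch under simplifying assumptions: the candidates are a majority-class baseline and k-nearest-neighbor classifiers with different neighbor counts, scored by 5-fold cross-validation on a tiny synthetic dataset. All names are hypothetical; production platforms search far larger spaces with smarter strategies.

```python
import random
from collections import Counter
from typing import Callable

Point = tuple[float, float]
Model = Callable[[list[Point], list[int], Point], int]


def majority(train_X: list[Point], train_y: list[int], x: Point) -> int:
    # Baseline: always predict the most common training label.
    return Counter(train_y).most_common(1)[0][0]


def make_knn(n_neighbors: int) -> Model:
    def knn(train_X: list[Point], train_y: list[int], x: Point) -> int:
        # Rank training points by squared Euclidean distance to x, then vote.
        order = sorted(range(len(train_X)),
                       key=lambda i: (train_X[i][0] - x[0]) ** 2 + (train_X[i][1] - x[1]) ** 2)
        votes = Counter(train_y[i] for i in order[:n_neighbors])
        return votes.most_common(1)[0][0]
    return knn


def cross_val_accuracy(model: Model, X: list[Point], y: list[int], n_folds: int = 5) -> float:
    correct = 0
    for fold in range(n_folds):
        # Every n_folds-th sample (offset by fold) is held out for testing.
        test_idx = set(range(fold, len(X), n_folds))
        train_X = [X[i] for i in range(len(X)) if i not in test_idx]
        train_y = [y[i] for i in range(len(X)) if i not in test_idx]
        correct += sum(model(train_X, train_y, X[i]) == y[i] for i in test_idx)
    return correct / len(X)


# Two Gaussian blobs: 30 points around (0, 0) labeled 0, 30 around (3, 3) labeled 1.
rng = random.Random(0)
X = [(rng.gauss(c, 1.0), rng.gauss(c, 1.0)) for c in (0.0, 3.0) for _ in range(30)]
y = [0] * 30 + [1] * 30
candidates = {"majority": majority, **{f"knn_{k}": make_knn(k) for k in (1, 5, 15)}}
scores = {name: cross_val_accuracy(m, X, y) for name, m in candidates.items()}
best = max(scores, key=lambda name: scores[name])
print(scores, "->", best)
```

Keeping a trivial baseline among the candidates is a cheap sanity check: any model that cannot beat it is not learning anything useful.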

Popular AutoML Platforms

Several AutoML platforms have gained popularity for their ease of use and powerful features. Examples include:

Google Cloud AutoML (now part of Vertex AI): Offers solutions for vision, natural language, and tabular data.

Microsoft Azure AutoML: Integrates with Azure’s ecosystem for scalable AI development.

H2O.ai: Known for its open-source H2O AutoML (part of the H2O-3 platform) and its commercial AutoML product, Driverless AI.

DataRobot: A comprehensive platform for automated machine learning and predictive modeling.

These platforms cater to different use cases, from startups to enterprises, and provide user-friendly interfaces for automating the AI development process.

Benefits of AutoML

AutoML offers a range of benefits that make it an attractive option for organizations looking to adopt AI.

Accessibility for Non-Experts

With intuitive interfaces and guided workflows, AutoML platforms allow users with minimal technical knowledge to create robust models. This accessibility fosters innovation across industries, as teams can experiment with AI without needing advanced skills.

Consistency and Scalability

AutoML promotes consistent results by reducing human error in tasks like feature selection or model tuning. It also scales well, allowing organizations to apply AI to multiple projects simultaneously.

Enhanced Productivity

By automating repetitive tasks, AutoML frees up data scientists to focus on higher-value activities, such as interpreting results or designing innovative solutions. This leads to greater productivity and faster innovation cycles.

Challenges of AutoML

While AutoML is a powerful tool, it’s not without its challenges. Understanding these limitations is crucial for organizations considering its adoption.

Limited Customization

AutoML platforms are designed for general use cases, which may limit their flexibility for highly specialized tasks. In some cases, custom-built models may outperform AutoML solutions when tailored to specific problems.

Black-Box Nature

Many AutoML systems operate as black boxes, meaning users may not fully understand how the platform makes decisions. This lack of transparency can be a concern in industries like healthcare or finance, where explainability is critical.

Data Dependency

AutoML’s performance heavily relies on the quality and quantity of input data. Poor-quality data or insufficient datasets can lead to suboptimal models, even with automation.

Real-World Applications of AutoML

AutoML is being used across various industries to solve real-world problems. Here are a few examples:

Healthcare

In healthcare, AutoML is used to predict patient outcomes, diagnose diseases, and optimize treatment plans. For instance, hospitals can use AutoML to analyze medical imaging data, identifying patterns that indicate conditions like cancer or heart disease.

Finance

Financial institutions leverage AutoML for fraud detection, credit scoring, and risk assessment. By automating the AI development process, banks can quickly build models to detect suspicious transactions or predict loan defaults.

Retail and E-Commerce

Retailers use AutoML to personalize customer experiences, optimize pricing strategies, and forecast demand. For example, AutoML can analyze customer behavior to recommend products, improving sales and customer satisfaction.

Manufacturing

In manufacturing, AutoML helps optimize supply chains, predict equipment failures, and improve quality control. Automated models can analyze sensor data to detect anomalies, reducing downtime and costs.

The Future of AutoML

As AI continues to advance, AutoML is expected to play an even bigger role in shaping the future of technology. Emerging trends include:

Integration with Cloud Platforms: AutoML is increasingly being integrated into cloud ecosystems, making it easier to scale and deploy models.

Improved Explainability: Future AutoML systems will likely focus on providing more transparency, addressing concerns about black-box models.

Broader Accessibility: As AutoML tools become more user-friendly, they will empower a wider range of professionals, from marketers to educators, to leverage AI.

AutoML and Ethical AI

As AutoML democratizes AI development, ensuring ethical use becomes critical. Organizations must prioritize fairness, transparency, and accountability when deploying automated models. This includes addressing biases in training data and ensuring compliance with regulations like GDPR or CCPA.

Getting Started with AutoML

For organizations looking to adopt AutoML, the first step is identifying the right platform for their needs. Consider factors like ease of use, integration capabilities, and support for specific use cases. Many platforms offer free trials, allowing users to experiment before committing.

Tips for Success

Start Small: Begin with a simple project to understand how AutoML works.

Focus on Data Quality: Ensure your data is clean and representative of the problem you’re solving.

Monitor Performance: Regularly evaluate model performance to ensure it meets your goals.

Combine with Expertise: While AutoML automates many tasks, combining it with domain knowledge can yield better results.
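
Monitoring performance can start as simply as tracking accuracy over a sliding window of recent labeled predictions and flagging when it falls below a target. The class name, window size, and threshold below are hypothetical choices for illustration, not features of any particular AutoML platform.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        self.results: deque[bool] = deque(maxlen=window)
        self.threshold = threshold

    def record(self, prediction: int, actual: int) -> None:
        # The oldest result is dropped automatically once the window is full.
        self.results.append(prediction == actual)

    @property
    def accuracy(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 1.0

    def needs_attention(self) -> bool:
        # Only alert once the window is full, to avoid noisy early readings.
        return len(self.results) == self.results.maxlen and self.accuracy < self.threshold


monitor = RollingAccuracyMonitor(window=10, threshold=0.8)
for _ in range(10):
    monitor.record(prediction=1, actual=1)  # model is right
print(monitor.accuracy, monitor.needs_attention())  # 1.0 False
for _ in range(3):
    monitor.record(prediction=1, actual=0)  # model starts failing
print(monitor.accuracy, monitor.needs_attention())  # 0.7 True
```

This only works when true labels eventually arrive; when they do not, teams typically watch the input data distribution for drift instead.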

AutoML is revolutionizing the way organizations approach AI by automating the AI development process. By making machine learning accessible, efficient, and cost-effective, AutoML empowers businesses to innovate and stay competitive. While challenges like limited customization and data dependency exist, the benefits of speed, scalability, and accessibility make AutoML a powerful tool for the future. Whether you’re a small business owner or a seasoned data scientist, AutoML offers a pathway to harness the power of AI with ease.

Artificial Intelligence: The Engine of the New Era of Data Analysis

Introduction: From Raw Data to Intelligent Decisions Thanks to AI

We live in an era of unprecedented information. Organizations generate and collect massive volumes of data every second. However, this data is only raw potential. The real value lies in the ability to analyze it to extract insights, identify patterns, predict trends and, ultimately…

#Alteryx#Análisis de Datos#Analítica Aumentada#Analítica Predictiva#AutoML#Azure ML#BI#Big Data#Business Intelligence#Ciencia de Datos#Data Mining#Domo#Google#IA#IBM#IBM Watson#inteligencia artificial#Looker#machine learning#Microsoft#MicroStrategy#Oracle#Oracle Analytics#Palantir Technologies#Power BI#Qlik#RapidMiner#SAP#SAP Analytics Cloud#SAS Institute

Project Title: leveraging Keras Tuner for hyperparameter optimization on a ResNet‑based CIFAR‑10 classifier - Keras-Exercise-104

Here’s a far more advanced Keras project: leveraging Keras Tuner for hyperparameter optimization on a ResNet‑based CIFAR‑10 classifier. Most of the content is Python code with type annotations; only the essentials are summarized.

Project Title: ai‑ml‑ds‑HjKqRt9B

File: tuned_resnet_cifar10_with_keras_tuner.py

📌 Short Description

A CIFAR‑10 image classification project using a HyperResNet model…

#AutoML#BayesianOptimization#CIFAR10#ComputerVision#DeepLearning#Hyperband#HyperparameterTuning#KerasTuner#ResNet#TransferLearning
