#OpenSourceAI

Text

CLIMATE JUSTICE NOW: How to End Fossil Fuel Finance While Protecting Indigenous Rights in the Global Cost-of-Living Crisis

CLIMATE JUSTICE ADVOCACY

Explore the forefront of climate justice efforts, featuring influential voices advocating for environmental and Indigenous rights.

#ClimateEmergency#ClimateCrisis#ClimateChange#Sustainability#EnvironmentalRights#HumanRights#Activism#OpenSourceAI#Perplexity#Llewelyn Pritchard#climatejustice#corruption#rightsofnature#civilresistance#ai#education

Link

One of the most fascinating features of oobabooga (text-generation-webui) is its support for a wide range of Large Language Models (LLMs), so you can choose the AI system that fits your needs. Popular choices for their performance include Llama, Mistral, GPT-NeoX, and other open-source models with excellent text-generation capabilities.
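The web UI serves whichever model you have loaded, so client code does not need to change when you swap Llama for Mistral. Below is a minimal sketch. It assumes text-generation-webui was started with its `--api` flag, which exposes an OpenAI-compatible endpoint on port 5000 by default. If your version uses a different port or path, check its documentation.

```python
import json
import urllib.request

# Assumption: text-generation-webui was launched with --api, serving an
# OpenAI-compatible chat endpoint on port 5000. Adjust if your setup differs.
API_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_chat_payload(prompt: str, max_tokens: int = 200,
                       temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat-completions request body."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def chat(prompt: str, url: str = API_URL) -> str:
    """Send a prompt to whichever model is currently loaded in the web UI."""
    body = json.dumps(build_chat_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    # OpenAI-compatible responses carry the text in choices[0].message.content
    return data["choices"][0]["message"]["content"]
```

Calling `chat("Summarize this paragraph")` sends the prompt to the loaded model. `build_chat_payload` is kept separate so you can inspect the request body without a running server.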

Text

Step into the future with Llama 3.1, the latest iteration of Meta AI's open-source large language models. With a flagship model of 405B parameters and multilingual capabilities, it's redefining what's possible in the world of AI.
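To put that parameter count in perspective, here is a back-of-the-envelope estimate: the weights alone need roughly the number of parameters times the bytes per parameter. The sketch assumes uniform precision and ignores the KV cache, activations, and runtime overhead, so real requirements are higher. Llama 3.1 was released in 8B, 70B, and 405B sizes.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "bf16") -> float:
    """Weight footprint in gigabytes (1 GB = 1e9 bytes), weights only."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for size in (8e9, 70e9, 405e9):
    print(f"{size / 1e9:.0f}B params: "
          f"{weight_memory_gb(size, 'bf16'):.0f} GB in bf16, "
          f"{weight_memory_gb(size, 'int4'):.1f} GB in int4")
```

In bf16 (2 bytes per parameter), the 405B model needs about 810 GB for its weights alone. That is why it is usually served across multiple GPUs or in quantized form.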

#Llama3.1#OpenSourceAI#LargeLanguageModel#ArtificialIntelligence#DeepLearning#MachineLearning#NLP#artificial intelligence#open source#machine learning#programming#software engineering#opensource#python

Text

Ready to Dive into the Future of Open-Source AI?

Join our FREE Hands-On Bootcamp and unlock the power of Hugging Face – the industry's leading platform for AI innovation!

Register Now: https://t.ly/FWSAG-27JU

Trainer: Mr. Prakash Senapathi
Date: 27th July 2025
Time: 10:00 AM (IST)
Zoom Link: zoom.us/j/86216138016
Mode: Online

Perfect For: Students | Developers | AI Enthusiasts | Tech Professionals

Limited Seats – Don’t Miss Out! Save the Date & Set Your Reminder NOW!

Text

Unveiling a New Era in Speech Recognition: How Mistral Voxtral Redefines Open-Source Audio AI

The world of voice technology is rapidly evolving, and Mistral Voxtral is at the forefront of this transformation. As an open-weights competitor to industry giants like OpenAI’s Whisper, this innovative model is reshaping how developers and businesses approach automatic speech recognition (ASR). With its blend of affordability, performance, and accessibility, it’s no surprise that this solution is generating buzz in the AI community. This blog explores how this groundbreaking model is setting a new standard for open-source speech recognition, offering a powerful alternative to proprietary systems while empowering developers with unmatched flexibility.

The Rise of Open-Source Speech Recognition

Why Open-Source Matters in AI

Open-source AI models have become a game-changer, offering developers the freedom to customize, deploy, and innovate without the constraints of proprietary systems. Unlike closed APIs, which often come with high costs and limited control, open-source solutions provide transparency and adaptability. This shift is particularly significant in the realm of speech recognition, where businesses need cost-effective, scalable tools to process audio data in real time.

The Legacy of OpenAI’s Whisper

For years, OpenAI’s Whisper has been a cornerstone of open-source speech recognition, praised for its accuracy and accessibility. Trained on vast datasets, it set a high bar for transcribing speech across various languages and environments. However, its limitations, such as the need for additional language models for semantic understanding, have left room for improvement. This is where Mistral Voxtral steps in, offering a more integrated and efficient approach.

What Makes Mistral Voxtral Stand Out?

A Dual-Model Approach for Versatility

Mistral Voxtral comes in two variants, each tailored to specific use cases. The 24-billion-parameter model is designed for production-scale applications, delivering robust performance for complex audio processing tasks. Meanwhile, the 3-billion-parameter Mini variant is optimized for edge and local deployments, making it ideal for mobile devices and resource-constrained environments. This flexibility ensures that developers can choose the right tool for their needs, whether they’re building enterprise-grade solutions or lightweight apps.
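To make the trade-off concrete, here is a hypothetical helper that picks a variant based on available accelerator memory. It assumes bf16 weights (2 bytes per parameter) plus an arbitrary 20% headroom. The Hugging Face repo IDs are assumptions, so verify them on the model hub before use.

```python
# Hypothetical variant table, largest first. Parameter counts come from the
# post; the repo IDs are assumptions to check on Hugging Face.
VARIANTS = [
    ("mistralai/Voxtral-Small-24B-2507", 24e9),
    ("mistralai/Voxtral-Mini-3B-2507", 3e9),
]


def pick_variant(available_gb: float, bytes_per_param: float = 2.0,
                 headroom: float = 1.2) -> str:
    """Return the largest variant whose weights (times headroom) fit in memory."""
    for repo_id, params in VARIANTS:
        needed_gb = params * bytes_per_param * headroom / 1e9
        if needed_gb <= available_gb:
            return repo_id
    raise ValueError(
        f"No variant fits in {available_gb} GB; consider quantization or the hosted API."
    )
```

For example, `pick_variant(80)` selects the 24B model (about 58 GB with headroom), while `pick_variant(24)` falls back to the 3B Mini (about 7 GB).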

Superior Transcription Accuracy

One of the standout features of Mistral Voxtral is its transcription prowess. In Mistral's published benchmarks, it consistently outperforms Whisper large-v3, previously the leading open model for transcription. It achieves lower word error rates on datasets such as LibriSpeech, GigaSpeech, and Mozilla Common Voice, in both English and multilingual settings. This makes it a reliable choice for applications requiring precise speech-to-text conversion, from meeting transcription to podcast summarization.

Multilingual Capabilities for Global Reach

In today’s interconnected world, multilingual support is non-negotiable. Mistral Voxtral excels in this area, automatically detecting and transcribing languages such as English, Spanish, French, Hindi, German, Portuguese, Dutch, and Italian. Its performance on the FLEURS benchmark highlights its strength in European languages, making it a go-to solution for businesses serving diverse audiences. This seamless language detection eliminates the need for manual configuration, streamlining workflows.

Beyond Transcription: Advanced Features

Built-In Semantic Understanding

Unlike traditional ASR systems that focus solely on transcription, Mistral Voxtral integrates semantic understanding. Built on Mistral's own language models (the 24B variant uses Mistral Small 3.1 as its backbone), it can reason about audio content, answer questions, and generate summaries without a separate LLM. For example, users can ask, "What was the main decision in the meeting?" and receive a concise answer drawn from a 30-minute recording. This capability simplifies the development of intelligent voice applications.

Voice-Activated Function Calling

Mistral Voxtral takes interactivity to the next level with voice-activated function calling. Developers can design applications where spoken commands trigger backend actions, such as adding tasks to a to-do list or sending emails. This feature is a boon for creating voice-driven assistants and automation tools, reducing the complexity of traditional intent recognition systems.
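Here is a minimal sketch of the backend side of voice-activated function calling. It assumes the OpenAI-style tool format, which Mistral's chat API also uses: a JSON Schema `parameters` object, with call arguments returned as a JSON string. The `add_task` tool and the in-memory to-do list are hypothetical. In practice, `TOOLS` would be sent with the request, and `dispatch` would run on each tool call the model returns.

```python
import json
from typing import Optional

# Hypothetical in-memory backend the assistant is allowed to modify.
TODO_LIST: list = []


def add_task(title: str, due: Optional[str] = None) -> str:
    """Hypothetical backend action: append a task to the to-do list."""
    entry = title if due is None else f"{title} (due {due})"
    TODO_LIST.append(entry)
    return f"Added: {entry}"


# Tool schema in the OpenAI-compatible style; names and fields are illustrative.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "add_task",
            "description": "Add a task to the user's to-do list.",
            "parameters": {
                "type": "object",
                "properties": {
                    "title": {"type": "string"},
                    "due": {"type": "string", "description": "ISO date"},
                },
                "required": ["title"],
            },
        },
    }
]

# Maps tool names the model may request to local Python functions.
REGISTRY = {"add_task": add_task}


def dispatch(tool_call: dict) -> str:
    """Run the local function named in a model-issued tool call.

    Assumes the shape {"function": {"name": ..., "arguments": "<json string>"}}.
    """
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])
    if name not in REGISTRY:
        raise KeyError(f"Model requested unknown tool: {name}")
    return REGISTRY[name](**args)
```

Keeping an explicit registry means the model can only trigger functions you have chosen to expose.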

Extended Context Window

With a 32,000-token context window, Mistral Voxtral can handle up to 30 minutes of audio for transcription and 40 minutes for understanding tasks. This extended capacity is ideal for processing long-form content like lectures, interviews, or customer service calls, eliminating the need for cumbersome audio segmentation.
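Recordings longer than these limits still need to be split. Below is a hypothetical planning helper that computes window boundaries from the 30- and 40-minute figures above. It supports optional overlap so that words at a boundary are not lost. Actually cutting the audio is left to your audio library of choice.

```python
# Per-request limits quoted in the post, in seconds.
LIMITS_S = {"transcription": 30 * 60, "understanding": 40 * 60}


def plan_windows(duration_s: float, task: str = "transcription",
                 overlap_s: float = 0.0) -> list:
    """Return (start, end) times in seconds covering the whole recording."""
    limit = LIMITS_S[task]
    if not 0 <= overlap_s < limit:
        raise ValueError("overlap must be non-negative and shorter than the window")
    windows = []
    start = 0.0
    while start < duration_s:
        end = min(start + limit, duration_s)
        windows.append((start, end))
        if end >= duration_s:
            break  # this window reaches the end of the recording
        start = end - overlap_s  # step back so consecutive windows overlap
    return windows
```

For instance, a 4,000-second recording yields three transcription windows: 0 to 1800 s, 1800 to 3600 s, and 3600 to 4000 s.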

Cost-Effectiveness and Accessibility

Disruptive Pricing Model

One of the most compelling aspects of Mistral Voxtral is its affordability. API pricing starts at just $0.001 per minute. That is one-sixth the price of OpenAI's Whisper API ($0.006 per minute) and one-third that of GPT-4o mini Transcribe ($0.003 per minute). For cost-sensitive applications, the Voxtral Mini Transcribe variant offers transcription accuracy that rivals Whisper at less than half the cost. This pricing structure makes high-quality speech recognition accessible to startups, researchers, and enterprises alike.
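Here is a quick cost comparison using the per-minute prices quoted above. Prices change, so treat these figures as a snapshot. The GPT-4o mini figure corresponds to OpenAI's GPT-4o mini Transcribe endpoint.

```python
# Per-minute list prices (USD) as quoted in this post; check current pricing pages.
PRICE_PER_MIN = {
    "Voxtral API": 0.001,
    "OpenAI Whisper API": 0.006,
    "GPT-4o mini Transcribe": 0.003,
}


def monthly_cost(minutes: float) -> dict:
    """Cost of transcribing `minutes` of audio with each service, rounded to cents."""
    return {name: round(minutes * price, 2) for name, price in PRICE_PER_MIN.items()}


print(monthly_cost(10_000))
```

At 10,000 minutes of audio per month, that works out to $10 for Voxtral, $60 for Whisper, and $30 for GPT-4o mini Transcribe.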

Open-Source Freedom

Released under the Apache 2.0 license, Mistral Voxtral empowers developers with full control over deployment and customization. Available for download on Hugging Face, it can be run locally or on-premise, making it ideal for privacy-sensitive industries like healthcare and finance. Businesses can also fine-tune the model for domain-specific needs, ensuring optimal performance in niche applications.

Easy Integration with Le Chat

For those looking to test Mistral Voxtral’s capabilities, Mistral’s Le Chat platform offers a user-friendly interface. Users can upload audio, generate transcriptions, ask questions, or create summaries directly within the web or mobile app. This accessibility makes it easy for non-technical users to experience the model’s power firsthand.

Real-World Applications

Enterprise Solutions

Mistral Voxtral is a game-changer for businesses seeking scalable voice solutions. Its private deployment options cater to organizations with strict data privacy requirements, such as those in regulated industries. From transcribing customer support calls to automating meeting summaries, it streamlines workflows while keeping costs low.

Voice Assistants and Automation

The model’s ability to interpret spoken commands and trigger actions makes it ideal for building next-generation voice assistants. Imagine a virtual assistant that not only transcribes your instructions but also executes tasks like scheduling meetings or updating databases—all from a single audio input.

Educational and Media Use Cases

In education, Mistral Voxtral can transcribe lectures and generate summaries, helping students focus on learning rather than note-taking. For media professionals, it offers a cost-effective way to transcribe interviews and podcasts, with built-in summarization to extract key insights quickly.

The Future of Open-Source Voice AI

Bridging the Gap Between Open and Proprietary

Mistral Voxtral addresses a long-standing dilemma in speech recognition: the trade-off between open-source accessibility and proprietary performance. By combining state-of-the-art accuracy, semantic understanding, and affordability, it eliminates the need to choose between cost and quality. This positions it as a direct competitor to closed systems like ElevenLabs Scribe and Google’s Gemini 2.5 Flash.

Upcoming Features

Mistral is already planning enhancements for Voxtral, including speaker segmentation, emotion detection, and word-level timestamps. These additions will further expand its utility, making it a versatile tool for advanced audio analysis and real-time applications.

Why Choose Mistral Voxtral?

Mistral Voxtral is more than just an alternative to Whisper—it’s a leap forward in open-source voice AI. Its combination of transcription accuracy, semantic understanding, and cost-effectiveness makes it a compelling choice for developers and businesses. Whether you’re building a voice-driven app, automating enterprise workflows, or processing multilingual audio, this model delivers unmatched value. By embracing open-source principles, it empowers the AI community to innovate without barriers, setting a new benchmark for what voice technology can achieve.

Get Started Today

Ready to explore Mistral Voxtral? Download the models from Hugging Face, integrate them via the API, or try them on Le Chat. With its open-source accessibility and cutting-edge features, this model is poised to redefine how we interact with voice AI. Join the revolution and discover the future of speech recognition today.

#MistralVoxtral#OpenSourceAI#SpeechRecognition#AudioAI#GenerativeAI#AIInnovation#AIbreakthrough#TechNews#FutureofAI#LLM#AIresearch#MachineLearning

Text

#EdgeAI#AutoML#EmbeddedSystems#OpenSourceAI#KenningFramework#AnalogDevices#Antmicro#powerelectronics#powersemiconductor#powermanagement

Text

#AutoML#EdgeAI#EmbeddedSystems#OpenSourceAI#MachineLearning#EdgeImpulse#AIInnovation#IoTDevelopment#Timestech#electronicsnews#technologynews

Text

#MiraMurati#AIStartup#ThinkingMachinesLab#OpenAI#TechNews#AIRevolution#OpenSourceAI#FutureOfAI#StartupFunding

Text

Botpress Developer

This image represents the expertise of a Botpress developer in crafting intelligent, customizable chatbots on the open-source Botpress platform. From building conversational flows to integrating NLP and third-party APIs, Botpress developers create scalable AI assistants for customer support, HR, and enterprise automation. These assistants are ideal for businesses seeking personalized, real-time interaction at scale.

#BotpressDeveloper#ChatbotDevelopment#ConversationalAI#OpenSourceAI#AIChatbots#BotpressPlatform#AutomationSolutions#BusinessAI

Text

Your AI Doesn’t Sleep. Neither Should Your Monitoring.

We're living in a world run by models, from real-time fraud detection to autonomous systems navigating chaos. But what happens after deployment?

What happens when your model starts drifting, glitching, or breaking… quietly?

That’s the question we asked ourselves while building the AI Inference Monitor, a core module of the Aurora Framework by Auto Bot Solutions.

This isn’t just a dashboard. It’s a watchtower.

It sees every input and output. It knows when your model lags. It learns what “normal” looks like and it flags what doesn’t.
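The idea of learning what "normal" looks like and flagging what doesn't can be illustrated with a rolling z-score on inference latency. This is a generic sketch, not the Aurora module's actual implementation. The window size, threshold, and warm-up length are arbitrary defaults.

```python
import math
from collections import deque


class LatencyAnomalyDetector:
    """Rolling z-score detector for inference latency (generic sketch, not Aurora's API)."""

    def __init__(self, window: int = 100, threshold: float = 3.0, min_samples: int = 10):
        self.samples = deque(maxlen=window)  # recent latencies form the "normal" baseline
        self.threshold = threshold  # |z| above this is flagged as anomalous
        self.min_samples = min_samples  # warm-up period before scoring starts

    def score(self, latency_ms: float) -> float:
        """Z-score of this latency against the current baseline; then add it to the baseline."""
        if len(self.samples) < self.min_samples:
            z = 0.0  # not enough history yet to judge
        else:
            mean = sum(self.samples) / len(self.samples)
            var = sum((x - mean) ** 2 for x in self.samples) / len(self.samples)
            std = math.sqrt(var)
            z = 0.0 if std == 0 else (latency_ms - mean) / std
        self.samples.append(latency_ms)
        return z

    def is_anomaly(self, latency_ms: float) -> bool:
        return abs(self.score(latency_ms)) > self.threshold
```

Each observation is scored against the recent window and then added to it, so the baseline adapts over time. A production monitor might exclude flagged points from the baseline so that a burst of anomalies does not become the new normal.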

Why it matters: You can’t afford to find out two weeks too late that your model’s been hallucinating, misclassifying, or silently underperforming.

That’s why we gave the AI Inference Monitor:

Lightweight Python-based integration

Anomaly scoring and model drift detection

System resource tracking (RAM, CPU, GPU)

Custom alert thresholds

Reproducible logging for full audits
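For the drift-detection item, one widely used metric is the Population Stability Index (PSI). It compares a feature's distribution in production against a reference sample. Below is a minimal, dependency-free sketch (again, not Aurora's code) that uses equal-width bins derived from the reference data.

```python
import math


def population_stability_index(expected: list, actual: list,
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between a reference sample and a production sample of one feature.

    Bin edges span the reference sample's min/max in equal widths; values
    outside that range are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    p_ref = proportions(expected)
    p_cur = proportions(actual)
    # PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)
    return sum((c - r) * math.log(c / r) for r, c in zip(p_ref, p_cur))
```

A common rule of thumb reads PSI below 0.1 as stable, 0.1 to 0.25 as moderate drift, and above 0.25 as significant drift. Thresholds should still be tuned per feature.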

No more guessing. No more “hope it holds.” Just visibility. Control. Insight.

Built for developers, researchers, and engineers who know the job isn't over when the model finishes training; it's just beginning.

Explore it here:

Aurora on GitHub (AI Inference Monitor): https://github.com/AutoBotSolutions/Aurora/blob/Aurora/ai_inference_monitor.py

Aurora Wiki https://autobotsolutions.com/aurora/wiki/doku.php?id=ai_inference_monitor

Get clarity. Get Aurora. Because intelligent systems deserve intelligent oversight.

Subscribe on YouTube: https://www.youtube.com/@autobotsolutions/videos

#OpenSourceAI#PythonAI#AIEngineering#InferenceOptimization#ModelDriftDetection#AIInProduction#DeepLearningTools#AIWorkflow#ModelAudit#AITracking#ScalableAI#HighStakesAI#AICompliance#AIModelMetrics#AIControlCenter#AIStability#AITrust#EdgeAI#AIVisualDashboard#InferenceLatency#AIThroughput#DataDrift#RealtimeMonitoring#PredictiveSystems#AIResilience#NextGenAI#TransparentAI#AIAccountability#AutonomousAI#AIForDevelopers

Text

Meta's Llama 4: The Most Powerful AI Yet!

In this episode of TechTalk, we dive deep into Meta's latest release, Llama 4. What's new in Llama 4, and how does it stand apart from other leading models like GPT-4, Claude, and Gemini?

#llama4#metaai#opensourceai#multimodal#aiinnovation#gpt4alternative#claude3#airesearch#machinelearning#contextwindow#aitools#futureofai#llm#Youtube

Text

Learn the specifics behind GLM-4.5, an open-source AI model engineered to unify reasoning, coding, and agentic work. This deep dive explores how its Mixture-of-Experts (MoE) architecture and its reinforcement learning infrastructure, 'slime', set it apart. The analysis covers key benchmarks where GLM-4.5, which is optimized specifically for agentic tasks, is reported to outperform peers such as GPT-4 on agentic metrics, especially on difficult real-world problems. Discover why its integrated design is a major step forward for autonomous systems.
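For readers new to MoE, the core idea is that a router sends each token to only a few expert sub-networks, so most parameters stay idle for any given token. Below is a toy top-k routing sketch in which softmax gates are renormalized over the selected experts. It illustrates the general technique only; GLM-4.5's actual router, expert count, and load-balancing scheme are not described in this post.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token, experts, gate_weights, k=2):
    """Route one token through the top-k experts of a toy MoE layer.

    token:        list of floats, the token's hidden vector
    experts:      callables, each mapping a vector to a vector of the same size
    gate_weights: one weight vector per expert; router logit = dot(weights, token)
    Returns the gated output vector and the indices of the experts used.
    """
    logits = [sum(w * x for w, x in zip(wv, token)) for wv in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])  # renormalize over chosen experts only
    out = [0.0] * len(token)
    for g, i in zip(gates, top):
        y = experts[i](token)  # only the selected experts are evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top
```

With `k=1`, the layer reduces to picking a single expert. A larger `k` blends several experts' outputs, weighted by their gates.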

#GLM45#OpenSourceAI#AgenticAI#AICoding#AIReasoning#MixtureOfExperts#MoE#ReinforcementLearning#slimeRL#LLM#ArtificialIntelligence#MachineLearning#DeepLearning#ai#artificial intelligence#open source#machine learning#software engineering#opensource#nlp#programming

Text

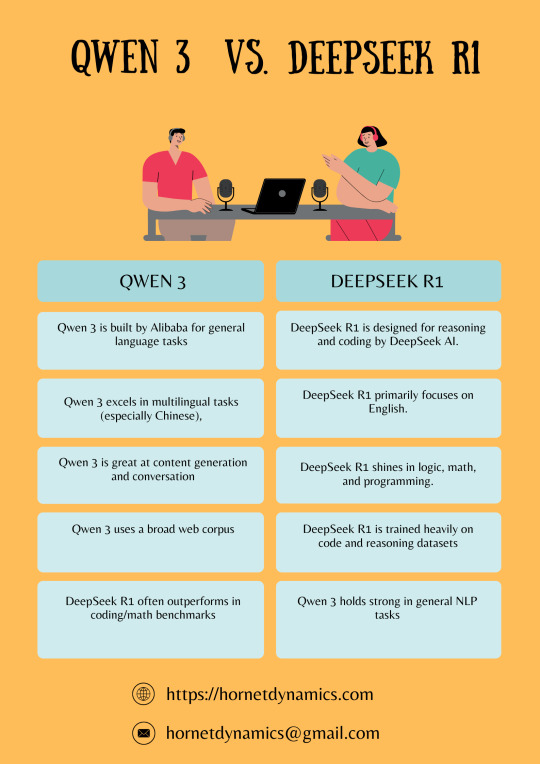

Qwen 3 vs DeepSeek R1: Battle of the Next-Gen AI Models

Discover the key differences between Qwen 3 and DeepSeek R1—two of the most advanced AI models in 2025. From performance benchmarks to coding capabilities, we break down which model leads in innovation, reasoning, and real-world applications. Find out which AI is right for your needs!

#Qwen3#DeepSeekR1#AIModels2025#ArtificialIntelligence#MachineLearning#AIBenchmark#GenerativeAI#TechComparison#AIInnovation#FutureOfAI#OpenSourceAI#AIResearch#NextGenAI#AIDevelopers#LLMComparison

Text

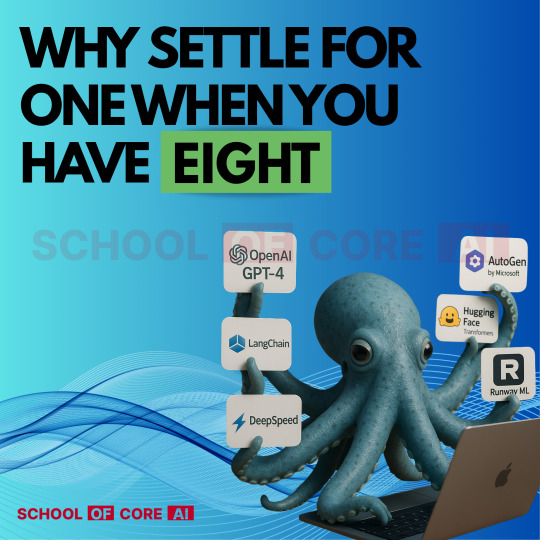

Why stick to one AI tool when today’s innovations demand synergy?

Modern AI development doesn't rely on a single model or library; it thrives on integrated ecosystems.

Here are 8 powerful tools every aspiring AI Engineer should know:

GPT-4 (OpenAI) – Powerful LLM for reasoning, generation, and comprehension

LangChain – Framework to connect LLMs with external data and APIs

DeepSpeed (Microsoft) – Makes training of large models faster and more memory-efficient

AutoGen (Microsoft) – Enables multi-agent conversations and collaborative AI workflows

Hugging Face Transformers – Open-source library of state-of-the-art pretrained models for NLP and beyond

Runway ML – AI for media creators: image, video, and animation generation

LLM Guard – Scans and sanitizes LLM prompts and outputs to reduce risks (e.g., prompt injection, PII leaks)

Gradio – Instantly demo AI models with shareable web interfaces

These tools work best together, not in silos.

At School of Core AI we don’t teach tools in isolation. We teach you how to orchestrate them together to build scalable, real-world GenAI apps.

#GenerativeAI#GPT4#LangChain#DeepSpeed#AutoGen#HuggingFace#RunwayML#Gradio#AItools#MachineLearning#OpenSourceAI#AIFrameworks#MultiAgentAI#LLMengineer#AgenticAI#AIEducation#SchoolOfCoreAI#LearnAI

Text

#Red Hat#OpenSourceAI#EnterpriseAI#GenerativeAI#Collaboration#Innovation#OpenShiftAI#electronicsnews#technologynews
