#Azure DevOps Packer

Text

Unlock innovation and agility with Azure Cloud Computing. Impressico Business Solutions empowers enterprises to scale effortlessly, improve data security, and optimize performance through tailored cloud strategies. Embrace digital transformation with Microsoft Azure's cutting-edge infrastructure backed by their expert guidance and 24/7 support. Make your business future-ready today.

#Azure Cloud Computing#Cloud Computing Platform#Azure Cloud Infrastructure#Azure DevOps Packer#Azure Cloud#Cloud Computing#Cloud Infrastructure

Text

Use Terraform and Jenkins to implement Infrastructure as Code and Pipeline as Code across multi-cloud environments

Key Features

Step-by-step guidelines for managing infrastructure across multiple cloud platforms.

Expert-led coverage on managing Pipeline as Code using Jenkins.

Includes images demonstrating how to manage AWS and Azure resources using Terraform Modules.

Description

This book explains how to quickly learn and use Terraform to incorporate Infrastructure as Code into a continuous integration and continuous delivery pipeline. It gives step-by-step instructions with screenshots and diagrams to make the learning more accessible and fun.

The book discusses the necessity of Infrastructure as Code (IaC) and the many tools available for implementing it. You will learn about resource creation, IAM roles, EC2 instances, elastic load balancers, and building Terraform scripts, among other topics. Next, you will explore projects and use cases for implementing DevOps concepts such as Continuous Integration, Infrastructure as Code, and Continuous Delivery. Finally, you will learn about Terraform Modules and how to establish networks and Kubernetes clusters on various cloud providers. Installing and configuring Jenkins and SonarQube in cloud environments is also discussed.

After reading this book, you will be able to apply Infrastructure as Code and Pipeline as Code principles to major cloud providers such as AWS and Azure.

What you will learn

Create, manage, and maintain AWS and Microsoft Azure infrastructure.

Using Packer, create AMIs and EC2 instances.

Utilize Terraform Modules to create VPC and Kubernetes clusters.

Put the Pipeline and Infrastructure as Code principles into practice.

Utilize Jenkins to automate the application lifecycle management process.

Who this book is for

This book will primarily help DevOps, Cloud Operations, Agile teams, Cloud Native Developers, and Networking Professionals. Being familiar with the fundamentals of Cloud Computing and DevOps will be beneficial.

Table of Contents

1. Setting up Terraform

2. Terraform Basics and Configuration

3. Terraform Provisioners

4. Automating Infrastructure Deployments in the AWS Using Terraform

5. Automating Infrastructure Deployments in Azure Using Terraform

6. Terraform Modules

7. Terraform Cloud

8. Terraform and Jenkins Integration

9. End-to-End Application Management using Terraform

Publisher: Bpb Publications (16 June 2022)

Language: English

Paperback: 468 pages

ISBN-10: 935551090X

ISBN-13: 978-9355510907

Reading age: 18 years and up

Item Weight: 798 g

Dimensions: 19.05 x 2.69 x 23.5 cm

Country of Origin: India

Net Quantity: 1.0 Count

Text

Consultant – Azure Cloud DevOps Engineer

Shell scripting, PowerShell, Ruby, HCL, Python, Packer, JSON, YAML, ARM Templates, DSC, AWS-CLI, AZ-CLI, etc. Extensive… Apply Now

Text

HashiDays conference: 3 cities, 6 keynotes, 24 breakout sessions. Secure your spot today at https://hashicorp.com/conferences/hashidays for the latest in tech innovation. Limited availability.

RoamNook - Bringing New Information to the Table

RoamNook - Fueling Digital Growth

Greetings, fellow enthusiasts of technological advancements! Prepare to have your minds blown as we delve into the world of hard facts, numbers, data, and concrete information that will leave you in awe. In this blog, we will explore various technical, professional, and scientific aspects that will not only broaden your knowledge but also highlight the real-world applications of this information. But first, allow us to introduce ourselves.

Welcome to RoamNook - Innovating the Digital World

RoamNook, an innovative technology company, sets itself apart in the industry by specializing in IT consultation, custom software development, and digital marketing. Our main goal is to fuel digital growth and empower businesses with cutting-edge solutions. With a team of experts that is second to none, we constantly adapt to the evolving landscape of technology, ensuring that our clients stay ahead of the curve.

The Power of Objective and Informative Content

At RoamNook, we firmly believe in the power of objective and informative content. In today's age, where information overload is the norm, it becomes crucial to bring new knowledge to the table. That is precisely what this blog aims to accomplish. We have meticulously collected key facts, hard information, numbers, and concrete data to provide you with an unparalleled learning experience. Prepare to be amazed!

Revolutionary Technological Advancements

As we dive into the world of technological advancements, let's explore some of the groundbreaking products and services that have redefined the industry.

1. HashiCorp Cloud Platform

The HashiCorp Cloud Platform, or HCP, is a game-changer in the world of cloud computing. It offers a unified cloud experience across multiple cloud providers, allowing businesses to seamlessly manage their infrastructure. Whether it's provisioning resources, automating workflows, or securing applications, HCP has got you covered.

2. Terraform - Infrastructure as Code

Terraform, developed by HashiCorp, revolutionizes infrastructure provisioning. With its declarative language, infrastructure as code becomes a reality. Say goodbye to manual configuration and hello to automated infrastructure deployment. Terraform enables organizations to codify their infrastructure requirements, resulting in increased efficiency and scalability.

3. Packer - Automated Machine Image Creation

Packer, another revolutionary product by HashiCorp, automates the creation of machine images for multiple platforms. Whether it's Amazon Web Services, Microsoft Azure, or any other cloud provider, Packer ensures consistent and reproducible machine images. This eliminates the manual and error-prone process of image creation, making it a must-have tool for DevOps teams.

Real-World Applications and Why It Matters

Now that we have explored some of the remarkable technological advancements, let's discuss their real-world applications and why they matter to you, the reader.

RoamNook - Your Partner in Digital Growth

As we wrap up this informative journey, we invite you to reflect on the vast amount of new knowledge you have acquired. We hope that the hard facts, numbers, and concrete data presented in this blog have left you inspired and eager to explore the limitless possibilities of technology.

At RoamNook, we are dedicated to bringing innovative solutions to the table and fueling the digital growth of businesses worldwide. If you are looking to harness the power of technology and achieve unprecedented success, we invite you to reach out to us. Let's embark on a transformative journey together!

Stay connected with RoamNook:

Website: https://www.roamnook.com

Email: [email protected]

Phone: 123-456-7890

We would love to hear your thoughts and feedback. Share your experience with us and let's continue pushing the boundaries of technology together. Now, it's time for you to take an active role.

Reflect and Engage

As we conclude, we leave you with a thought-provoking question: In a rapidly evolving digital landscape, how will you harness the power of technology to fuel your own growth?

Thank you for joining us on this enlightening journey. Keep exploring, keep innovating, and stay tuned for more exciting content from RoamNook!

Source: https://developer.hashicorp.com/terraform/tutorials/aws-get-started/infrastructure-as-code

Photo

HIRING: Technical Program Manager, Cloud Operations / United States - Remote

#devops#cicd#cloud#engineering#career#jobs#jobsearch#recruiting#hiring#TechTalent#Azure#AWS#Docker#Elasticsearch#Git#Kubernetes#Packer#PostgreSQL#RabbitMQ#SQL#Terraform#Bash#Linux#Ruby#Chef#Puppet

Text

We provide DevOps course tutorials. All sessions are real-time practical labs, and all are hands-on.

Course details: Linux, AWS, Azure, Docker, Kubernetes, Ansible, Git, Jenkins, Terraform, Packer, Python, and projects.

For more info, text on WhatsApp: 7898870083

Please watch and subscribe. For a demo, visit our YouTube channel: https://www.youtube.com/watch?v=gXonAZGcmUE&list=PL9JhaNh5QrVnLk4xw2eERLACBcUgw_wEd

How To Become DevOps Engineer in Hindi | WhatsApp No: 8817442344

#DevOPS #Linux #Aws #Docker #Kubernetes #Ansible #Git #GitHUB #Jenkins #Nagios #Maven #CICD #...

Text

IaC - Speeding up Digital Readiness

Historically, manual intervention was the only way of managing computer infrastructure. Servers had to be mounted on racks, operating systems had to be installed, and networks had to be connected and configured. At that time, this wasn't a problem, since development cycles were long and infrastructure changes were infrequent.

But today, businesses expect agility on all fronts to meet dynamic customer needs.

With the rise of DevOps, Virtualization, Cloud and Agile practices, the software development cycles were shortened. As a result, there was a demand for better infrastructure management techniques. Organizations could no longer afford to wait for hours or days for servers to be deployed.

Infrastructure as Code is one way of raising the standard of infrastructure management and shortening time to deployment. By using a combination of tools, languages, protocols, and processes, IaC can safely create and configure infrastructure elements in seconds.

What is Infrastructure as Code?

Infrastructure as Code, or IaC, is a method of writing and deploying machine-readable definition files that generate service components, thereby supporting the delivery of business systems and IT-enabled processes. IaC helps IT operations teams manage and provision IT infrastructure automatically through code without relying on manual processes. IaC is often described as “programmable infrastructure”.

IaC can be applied to the entire IT landscape, but it is especially critical for cloud computing, Infrastructure as a Service (IaaS), and DevOps. IaC is the foundation on which DevOps is built. DevOps requires agile work processes and automated workflows, which can only be achieved when the IT infrastructure needed to run and test the developed code is readily available. This can only happen within an automated workflow.

How Infrastructure as Code Works

At a high level, IaC can be explained in three main steps:

Developers write the infrastructure specification in a domain-specific language.

The resulting files are sent to a master server, a management API, or a code repository.

The platform takes all the necessary steps to create and configure the computer resources.
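The three steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the resource names, the attribute values, and the `plan` and `apply` helpers are hypothetical, and the "platform" is an in-memory dictionary rather than a real management API. It shows the general idea of comparing a written specification with what currently exists and reconciling the difference.

```python
from typing import Dict, List, Tuple

# Hypothetical resource model: resource name -> attributes.
Spec = Dict[str, Dict[str, str]]
Action = Tuple[str, str]  # (verb, resource name)


def plan(desired: Spec, current: Spec) -> List[Action]:
    """Compare the written specification with what exists and list the changes."""
    actions: List[Action] = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != desired[name]:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


def apply(desired: Spec, current: Spec) -> Spec:
    """Carry out the plan against an in-memory stand-in for the platform."""
    state = {name: dict(attrs) for name, attrs in current.items()}
    for verb, name in plan(desired, current):
        if verb == "delete":
            del state[name]
        else:  # create and update both end with the desired attributes
            state[name] = dict(desired[name])
    return state


# Step 1: the specification a developer would write (illustrative values).
desired = {
    "web-vm": {"size": "small", "image": "ubuntu"},
    "app-net": {"cidr": "10.0.0.0/16"},
}
# What the stand-in platform currently runs.
current = {
    "web-vm": {"size": "medium", "image": "ubuntu"},
    "old-db": {"size": "large"},
}

# Steps 2 and 3: hand the specification over and let the tool reconcile.
print(plan(desired, current))
new_state = apply(desired, current)
print(plan(desired, new_state))  # nothing left to do once the states match
```

Running the plan a second time after applying returns an empty list, which is the property that makes this style of tooling safe to re-run.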

Types of Infrastructure as Code

Scripting: Writing scripts is the most direct approach to IaC. Scripts are best for executing simple, short, or one-off tasks.

Configuration Management Tools: These are specialized tools designed to manage software. They are usually used for installing and configuring servers. Examples: Chef, Puppet, and Ansible.

Provisioning Tools: Provisioning tools focus on creating infrastructure. Using these types of tools, developers can define exact infrastructure components. Examples: Terraform, AWS CloudFormation, and OpenStack Heat.

Containers and Templating Tools: These tools generate templates pre-loaded with all the libraries and components required to run an application. Containerized workloads are easy to distribute and have much lower overhead than running a full-size server. Examples are Docker, rkt, Vagrant, and Packer.
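One practical difference between the scripting approach and configuration management tools is what happens when the same step runs twice. The sketch below is an assumption-laden illustration, not how Chef, Puppet, or Ansible are implemented: `script_style` and `ensure_setting` are hypothetical helpers, and the "server configuration" is just a list of `key=value` strings.

```python
from typing import List


def script_style(config: List[str]) -> List[str]:
    """One-off script: blindly appends, so a second run duplicates the line."""
    return config + ["max_connections=100"]


def ensure_setting(config: List[str], key: str, value: str) -> List[str]:
    """Configuration-management style: describe the end state and converge to it."""
    wanted = f"{key}={value}"
    result: List[str] = []
    found = False
    for line in config:
        if line.split("=", 1)[0] == key:
            if not found:
                result.append(wanted)  # replace the first occurrence
                found = True
            # any further duplicates of the same key are dropped
        else:
            result.append(line)
    if not found:
        result.append(wanted)
    return result


start = ["port=8080"]

# Running the script twice leaves two copies of the setting.
twice_scripted = script_style(script_style(start))

# Running the declarative step twice leaves exactly one.
once_ensured = ensure_setting(start, "max_connections", "100")
twice_ensured = ensure_setting(once_ensured, "max_connections", "100")

print(twice_scripted)
print(twice_ensured)
```

The second function can be re-run on every server at any time, which is why scripts suit one-off tasks while declarative tools suit ongoing server configuration.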

Some of the frequently used IaC Tools

AWS CloudFormation

Azure Resource Manager

Google Cloud Deployment Manager

HashiCorp Terraform

RedHat Ansible

Docker

Puppet/Chef

Use Cases of IaC:

Software Development:

If the development environment is uniform across the SDLC, the chances of bugs arising are much lower. Also, the deployment and configuration can be done faster as building, testing, staging and production deployments are mostly repeatable, predictable and error-free.
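When environments are described as data, their uniformity can be checked mechanically. The following is a minimal sketch, assuming each environment is a flat dictionary of settings; the environment names, keys, and values are made up for illustration, and `differing_keys` is a hypothetical helper rather than part of any IaC tool.

```python
from typing import Dict, Set

EnvConfig = Dict[str, str]


def differing_keys(environments: Dict[str, EnvConfig]) -> Set[str]:
    """Return the settings whose values are not identical in every environment."""
    all_keys: Set[str] = set()
    for cfg in environments.values():
        all_keys.update(cfg)
    differing: Set[str] = set()
    for key in all_keys:
        # A missing key shows up as None, which also counts as a difference.
        values = {cfg.get(key) for cfg in environments.values()}
        if len(values) > 1:
            differing.add(key)
    return differing


# Illustrative environment definitions (assumed values).
environments = {
    "testing": {"os": "ubuntu-22.04", "runtime": "python3.11", "replicas": "1"},
    "staging": {"os": "ubuntu-22.04", "runtime": "python3.11", "replicas": "2"},
    "production": {"os": "ubuntu-22.04", "runtime": "python3.10", "replicas": "4"},
}

print(sorted(differing_keys(environments)))
```

Here the replica count differing is probably intentional, while the runtime mismatch in production is the kind of drift that tends to cause environment-specific bugs.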

Cloud Infrastructure Management:

In the case of cloud infrastructure management using IaC, multiple scenarios emerge, where provisioning and configuring the system components with tools like Terraform and Kubernetes helps save time, money and effort. All kinds of tasks, from database backups to new feature releases can be done faster and better.

Cloud Monitoring:

IaC is also useful for cloud monitoring, logging, and alerting tools that run in different environments. Solutions like the ELK stack, FluentD, SumoLogic, Datadog, Prometheus + Grafana, etc. can be quickly provisioned and configured for the project using IaC.

Benefits of Infrastructure as Code

Faster time to production/market

Improved consistency

Agility, more efficient development

Protection against churn

Lower costs and improved ROI

Better Documentation

Increased transparency and accountability

Conclusion:

IaC is an essential part of DevOps transformation, helping the software development and infrastructure management teams work closely together and provide predictable, repeatable, and reliable software delivery services. IaC can simplify and accelerate your infrastructure provisioning process, help you avoid mistakes, keep your environments consistent, and save your company a lot of time and money.

If IaC isn’t something you’re already doing, maybe it’s time to start!

#iac#code#devops#digital#transformation#cloud#aws#azure#automation#kubernetes#agile#scripting#sdlc#network#virtualmachines#loadbalancers

Text

DevOps Lifecycle Management Engineer w/Strong PHP Experience at Dallas, TX REMOTE

5+ years DevOps Lifecycle Management (must have)

5+ years PHP (must have)

2+ years Azure cloud computing environment (must have)

Familiarity with technologies such as Docker, Terraform, Jenkins, git/GitHub, Chef, Ansible and Packer

Writing automation tools

from Job Portal https://ift.tt/2xBWJly

Text

IT - Senior Technology Architect | Cloud Platform | Azure Development & Solution Architecting

Must-have skills (top 3 technical skills only):

Azure DevOps: build CI/CD pipelines for different environments, approval workflows for moving code from dev to test to production, best practices for versioning, etc.

Experience with setup of RabbitMQ, Redis, MongoDB, and Airflow infrastructure in an Azure environment.

Monitoring and alerting for containers in Azure.

Detailed job description (in addition to the skills above):

Good understanding of network infrastructure for Azure: setting up and managing load balancers and network security groups.

Good understanding of storage infrastructure for Azure: securing storage accounts.

Minimum years of experience: 5+

Certifications needed: Yes

Top 3 responsibilities you would expect the Subcon to shoulder and execute:

Azure Service Principal for user management on Virtual Machine

Infrastructure build tools: Terraform and Packer

Develop and manage storage infrastructure for Azure

Interview process (is face to face required?): Yes

Does this position require visa-independent candidates only? No

Reference: IT - Senior Technology Architect | Cloud Platform | Azure Development & Solution Architecting jobs

Source: http://jobrealtime.com/jobs/technology/it-senior-technology-architect-cloud-platform-azure-development-solution-architecting_i9582

Text

Impressico Business Solutions offers expert Packer DevOps services to streamline your deployment processes. Their team leverages Packer to automate the creation of machine images, ensuring consistency and efficiency across your infrastructure. Trust them to enhance your DevOps workflows and accelerate your software delivery.

#Packer DevOps#DevOps Packer#Azure DevOps Packer#DevOps Service Providers#DevOps as a Service Providers

Text

Who Is a Big Data Administrator: Professional Competencies of a Big Data Administrator

In previous installments we covered what a Data Analyst, a Data Scientist, and a Data Engineer do. Concluding our series of articles on the most popular Big Data professions, we turn to the Big Data administrator: job duties, professional competencies, salary, and differences from other specialists. So today's article is Big Data Administrator "for dummies".

What a Big Data Administrator Does

A Big Data administrator builds and maintains cluster solutions (including cloud platforms based on Apache Hadoop), which covers: installing and deploying the cluster; choosing the initial configuration; tuning nodes at the kernel level; managing updates and creating local repositories; configuring replication, authentication, and queue management tools; monitoring performance and balancing server load; ensuring the information security of clusters and systems; backing up data and restoring it after failures. In performing these duties the administrator works with Big Data engineers, but their tasks do not duplicate each other, although they overlap to some extent. Read about what a Data Engineer does here.

The Big Data administrator: the "superman" of the Big Data world

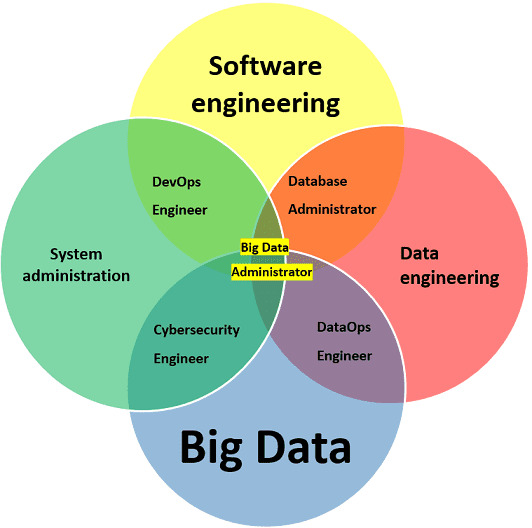

Professional Competencies of a Big Data Administrator

To create, configure, and maintain Big Data clusters, a Big Data administrator needs to know the following disciplines and technologies: the network protocols of the TCP/IP stack, along with tools such as nginx and bash; the Python, Shell, and Go programming languages; the Apache Hadoop ecosystem, as well as the HBase, Kafka, and Spark cluster solutions; the Grafana, Zabbix, ELK, and Prometheus monitoring systems; the task schedulers and load balancers Cloudera Manager, Apache Ambari, and Apache Zookeeper; the cluster security tools Kerberos, Apache Sentry, Cloudera Navigator, Apache Ambari, Apache Ranger, Apache Knox, and Apache Atlas; cloud platforms for Big Data (Amazon Web Services, Google Cloud Platform, Microsoft Azure, and similar offerings from major PaaS/IaaS providers). Some companies also require Big Data administrators to know continuous integration and continuous delivery (CI/CD) tools such as Jenkins, Puppet, Chef, Ansible, Docker, OpenShift, and Kubernetes, as well as configuration management and testing tools (Terraform, Vault, Consul, Packer, Elasticsearch, etc.). However, such tasks fall within the DevOps engineer's area of responsibility, whereas the Big Data Administrator is primarily concerned with configuring cluster infrastructure. We covered the differences between a DevOps engineer, a sysadmin, and a Big Data administrator in more detail here. For more on Docker, Kubernetes, and other containerization technologies, see our new article.

Areas of Professional Knowledge of a Big Data Administrator

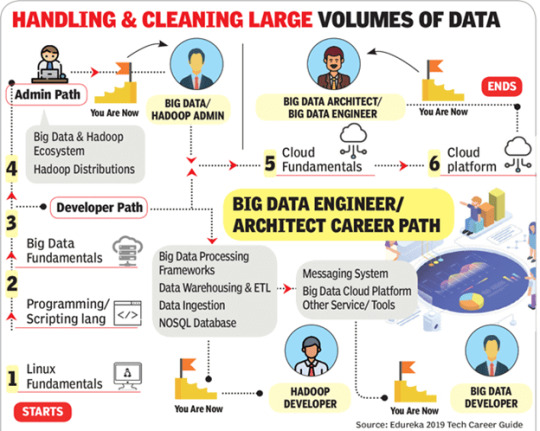

How Data Engineers and Big Data Administrators Differ

Like the Data Engineer, the Big Data administrator is part of Big Data engineering, which prepares the infrastructure for the information analysis carried out by Data Analysts and Data Scientists. Nevertheless, the professional domains of the engineer and the administrator differ substantially: the Data Engineer works at a higher level of abstraction, concentrating on automating the collection and distribution of information flows and interacting with corporate data stores (SQL and NoSQL databases, Data Lake, Data Warehouse); the Big Data administrator configures and maintains the infrastructure for storing data, building clusters and configuring cloud platforms, and also looks after the information security of Big Data. Note that salary levels differ as well: a review of vacancies on the popular recruiting site HeadHunter showed that a Data Engineer's work is valued at 150-250 thousand rubles per month, whereas a Big Data administrator is offered 80-200 thousand rubles per month. At the same time, given the sweeping digitalization and digital transformation of various sectors of the economy, the shortage of experienced professionals persists. We already mentioned this, though, when discussing Big Data professions "for dummies" in the article "Big Data: Where to Start".

The Career Path of a Big Data Administrator

Master the art of administering Big Data infrastructure in our hands-on training and professional development courses for IT specialists at a licensed training center for managers, analysts, architects, engineers, and Big Data researchers in Moscow:

INTR: Hadoop Fundamentals

HADM: Hadoop Cluster Administration

DSEC: Hadoop Data Lake Security

AIRF: Apache AirFlow

NIFI: Apache NiFi Cluster

KAFKA: Kafka Cluster Administration

HIVE: Hadoop SQL Administrator Hive

HBASE: HBase Cluster Administration

SPARK: Apache Spark Cluster Administrator

View the class schedule

Register for the course

Text

Continuous Integration – DevOps

Description

At Smart HC, we are looking to hire several Continuous Integration and DevOps profiles to work in the northwest area of the Community of Madrid in a stable position.

Continuous Integration

Minimum 5 years defining, developing, and configuring systems / parts of infrastructure architectures in complex corporate environments. Knowledge of and experience with most of the following technologies: Solid knowledge of continuous delivery concepts, processes, and methods. Strong knowledge of middleware and messaging systems, ESBs. Platforms such as Apache, Tomcat, JBoss, WSO2, Apigee, TIBCO, RabbitMQ, etc. Broad knowledge of Git, Maven, npm, Java, Jenkins, Kubernetes, AWS, Sonar, JavaScript, testing strategies, Ansible. Knowledge of Agile, teamwork, and leadership skills. Strong knowledge of the major cloud providers: AWS, Azure, Google Cloud. Solid knowledge of Ansible, Puppet, Chef, Terraform.

Nice-to-have knowledge: Packer, GitLab CI/CD, GitLab, Terraform, Cucumber, Spring, Infrastructure as Code

DevOps

Required:

Cloud: AWS and/or Azure. Experience evaluating and operating high-availability systems in the cloud. Infrastructure as code: Terraform. Developing components in a modular way, applying DRY as far as possible. Containers: Docker (docker-compose), Kubernetes and/or its management systems (Rancher, AKS, EKS, GKE, or as a last resort OpenShift). Configuration provisioners: Ansible (Packer) applied to cloud infrastructure. Extensive experience administering Linux-based systems (Ubuntu, RHEL, CentOS): hardening, network configuration, perimeter security, user and scheduled-task management, compiling applications.

Nice to have: IaC testing: Kitchen. Terragrunt. Boto as an infrastructure provisioner. Design and operation of applications for operational, functional, and performance monitoring and traceability in high-availability systems (ELK, EFK, Prometheus). Server-side scripting: bash and/or Python. LPIC/LPIC2

Technologies

Professional Functions

Offer Details

Must reside in: Country of the position

Experience: More than 5 years

Minimum education: FP2/Grado Superior (higher vocational training)

Professional level: Employee

Number of positions: 2

Contract type: Permanent

Working hours: Full time

Salary: Not specified

www.tecnoempleo.com/integracion-continua-devops-madrid/rabbitmq-git-jenkins-aws-ansible-apache/rf-6217z7409a3309da6fe6

The post Continuous Integration – DevOps was first published on Ofertas de Empleo.

from WordPress http://bit.ly/2GE0F5H via IFTTT
