#BiasInTech


Time for a new project on racial bias in AI

So I have this new idea: a newsletter that teaches people how to prompt AI and how to identify and remove bias in its responses. I know what you're thinking: "Jamil, don't you have enough projects?" But this really is a direct connection between the work I've been doing on my BlackHistoryChatGPT blog and the AI Empower work I'm doing at my job. So today I asked ChatGPT a couple of questions to get me started.

The answers gave me a good start, so I'm off to the races. Of course, I had to ask it for potential names for my new newsletter. My working title was "Jailbreaking racial bias - Removing bias from Generative AI," but I had to see what the AI would suggest.

10 Possible Names for Your Substack Newsletter on Bias in LLMs

The Bias Decoder

AI Unmasked: Spotting Bias in LLMs

Prompt for Change

Fair Prompts, Fair AI

Bias in the Machine

AI Truth Serum

The Prompt Liberation Project

De-Biased: Rethinking AI Responses

Hacking AI Bias

Reprogramming Fairness

What do you think? Some of them are actually pretty good...

Off to start using my study guide!!

#AIBias #FairAI #EthicalAI #LLMBias #AITransparency #AIEquity #DeBiasingAI #PromptEngineering #ResponsibleAI #BiasInTech #brooklyndad #creative writing #brooklyn #middle age life #chatgpt #midjourney #ai generated


Building a study guide to look at bias in generative AI

Full ChatGPT Chat is here

Prompt:

Please answer as an expert in racial bias in Large Language models

Response:

Absolutely. When discussing racial bias in Large Language Models (LLMs), it's important to recognize that these biases emerge due to multiple factors in the training and deployment of these models. Here’s an expert-level breakdown of the issue:

1. Sources of Racial Bias in LLMs

LLMs inherit biases from various stages of their development:

a) Bias in Training Data

LLMs are trained on vast amounts of text from the internet, books, and other sources, many of which contain historical and systemic racial biases.

Underrepresentation of non-dominant racial groups in training data leads to skewed or inaccurate representations.

Overrepresentation of dominant narratives (e.g., Western, Eurocentric perspectives) can reinforce stereotypes.

b) Bias in Labeling and Fine-Tuning

Human annotators influence model behavior through reinforcement learning and filtering mechanisms. Annotator biases (often shaped by cultural and societal perspectives) affect what is considered “appropriate” or “correct.”

Filtering out certain controversial or politically charged discussions can unintentionally erase or distort racialized perspectives.

c) Bias in Model Architecture and Tokenization

Language tokenization can create disparities in how words from different languages, dialects, and cultural contexts are processed.

Certain names, dialects, or linguistic patterns associated with racial minorities might be misclassified or poorly generated.

2. How Racial Bias Manifests in LLM Outputs

Even when developers take steps to mitigate bias, it often appears in various ways:

a) Stereotype Reinforcement

LLMs may associate racial or ethnic groups with certain professions, behaviors, or historical narratives in a biased manner (e.g., associating Black individuals with crime or poverty due to biased data distributions).

Common names from non-Western cultures may be linked to negative connotations while Western names have more positive associations.
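Researchers have measured this kind of name association with embedding association tests; the best known is the Word Embedding Association Test (WEAT) from Caliskan, Bryson and Narayanan (2017), which compared European-American and African-American names against pleasant and unpleasant words. The sketch below computes a WEAT-style effect size in plain Python. It is an illustration under stated assumptions: the 2-D vectors are made-up placeholders standing in for real word embeddings, and the function names are hypothetical, not from any library.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]

def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def association(w: Vector, pleasant: List[Vector], unpleasant: List[Vector]) -> float:
    """Mean similarity to pleasant words minus mean similarity to unpleasant words."""
    to_pleasant = sum(cosine(w, a) for a in pleasant) / len(pleasant)
    to_unpleasant = sum(cosine(w, b) for b in unpleasant) / len(unpleasant)
    return to_pleasant - to_unpleasant

def effect_size(names_x: List[Vector], names_y: List[Vector],
                pleasant: List[Vector], unpleasant: List[Vector]) -> float:
    """Difference in mean association between two name sets, scaled by the
    sample standard deviation of all associations (WEAT-style effect size)."""
    sx = [association(w, pleasant, unpleasant) for w in names_x]
    sy = [association(w, pleasant, unpleasant) for w in names_y]
    combined = sx + sy
    mu = sum(combined) / len(combined)
    std = math.sqrt(sum((s - mu) ** 2 for s in combined) / (len(combined) - 1))
    return (sum(sx) / len(sx) - sum(sy) / len(sy)) / std

# Placeholder 2-D "embeddings" (hypothetical); a real test looks words up in a trained model.
PLEASANT = [(1.0, 0.1), (0.9, 0.2)]
UNPLEASANT = [(0.1, 1.0), (0.2, 0.9)]
NAMES_X = [(0.8, 0.3), (0.9, 0.2)]
NAMES_Y = [(0.3, 0.8), (0.2, 0.9)]

print(round(effect_size(NAMES_X, NAMES_Y, PLEASANT, UNPLEASANT), 2))
```

A positive value means name set X sits closer to the pleasant words than name set Y does; a value near zero means no measurable difference in association.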

b) Erasure or Underrepresentation

LLMs may struggle to generate diverse perspectives on historical and social issues, prioritizing dominant narratives.

Certain cultural or racial identities may be less represented in responses, leading to marginalization in AI-generated content.

c) Sentiment and Toxicity Disparities

Some studies have found that prompts containing Black-sounding names or references to African American Vernacular English (AAVE) trigger disproportionately high toxicity scores.

Sentiment analysis models trained on biased datasets may associate non-White racial identities with more negative sentiments.
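A simple way to check for the disparities described above is a counterfactual probe: fill the same prompt template with names associated with different groups, score every version with the same classifier, and compare the group averages. The sketch below assumes you supply a `score` function (for example, a call to whatever toxicity or sentiment model you are auditing). `toy_toxicity` is a hypothetical stand-in that is biased on purpose so the probe has something to find, and the name lists are illustrative only.

```python
from statistics import mean
from typing import Callable, Dict, List

def counterfactual_probe(template: str,
                         names_by_group: Dict[str, List[str]],
                         score: Callable[[str], float]) -> Dict[str, float]:
    """Fill `template` with each name and return the mean score per group."""
    return {
        group: mean(score(template.format(name=name)) for name in names)
        for group, names in names_by_group.items()
    }

def max_gap(group_scores: Dict[str, float]) -> float:
    """Largest difference between any two group averages."""
    return max(group_scores.values()) - min(group_scores.values())

# Hypothetical scorer, deliberately biased for the demo; replace with a real model call.
FLAGGED = {"Lakisha", "Jamal"}

def toy_toxicity(text: str) -> float:
    return 0.8 if any(name in text for name in FLAGGED) else 0.1

NAMES = {"group_a": ["Emily", "Greg"], "group_b": ["Lakisha", "Jamal"]}
scores = counterfactual_probe("{name} posted about their weekend plans.", NAMES, toy_toxicity)
print(scores, max_gap(scores))
```

In practice you would use many templates and many names per group, since any single prompt is noisy; a gap close to zero is what an unbiased scorer should produce.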

3. Addressing and Mitigating Racial Bias in LLMs

While eliminating bias completely is difficult, various strategies can help:

a) Diverse and Representative Training Data

Ensuring datasets include a balanced representation of racial groups, dialects, and cultural narratives.

Actively curating datasets that counter historical biases.

b) Bias Auditing and Testing

Systematic auditing for racial bias using fairness metrics (e.g., disparate impact analysis) and stress tests such as adversarial testing.

Involving diverse teams in testing and evaluating model outputs.
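Disparate impact analysis compares how often each group receives a favorable outcome. A common rule of thumb, the "four-fifths rule" from US employment selection guidelines, flags a ratio below 0.8. The sketch below assumes a hypothetical audit in which an LLM screened otherwise identical résumés that differ only in the applicant's name, with each outcome recorded as 1 (recommended) or 0 (not recommended); the numbers are invented for illustration.

```python
from typing import Sequence

def selection_rate(outcomes: Sequence[int]) -> float:
    """Share of favorable outcomes (1 = favorable, 0 = not)."""
    return sum(outcomes) / len(outcomes)

def disparate_impact(unprivileged: Sequence[int], privileged: Sequence[int]) -> float:
    """Ratio of the unprivileged group's selection rate to the privileged group's."""
    return selection_rate(unprivileged) / selection_rate(privileged)

def fails_four_fifths(ratio: float, threshold: float = 0.8) -> bool:
    """True when the ratio falls below the four-fifths rule of thumb."""
    return ratio < threshold

# Invented audit results: 10 name-swapped résumés per group.
group_b = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]  # 3 of 10 recommended
group_a = [1, 1, 0, 1, 1, 0, 1, 0, 1, 0]  # 6 of 10 recommended

ratio = disparate_impact(group_b, group_a)
print(f"disparate impact = {ratio:.2f}, flagged = {fails_four_fifths(ratio)}")
```

A ratio of 1.0 means both groups are recommended at the same rate; the threshold is a screening heuristic, not proof of discrimination on its own.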

c) Algorithmic Interventions

Using de-biasing techniques such as counterfactual data augmentation, adversarial training, and fairness-aware fine-tuning.

Applying post-processing methods to detect and correct biased responses.
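Counterfactual data augmentation, the first technique listed above, adds a copy of each training sentence with identity terms swapped, so the model sees both versions equally often. The minimal sketch below swaps names from a hypothetical pair list in both directions in a single pass. It assumes simple whole-word matching; a real pipeline would need much larger, carefully reviewed term lists and would have to handle grammar that a plain word swap can break.

```python
import re
from typing import Callable, Iterable, List, Tuple

def build_swapper(pairs: Iterable[Tuple[str, str]]) -> Callable[[str], str]:
    """Return a function that swaps every term in `pairs` with its partner, in one pass."""
    mapping = {}
    for a, b in pairs:
        mapping[a] = b
        mapping[b] = a
    # Longest terms first so multi-word terms win over their prefixes.
    alternatives = sorted(mapping, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, alternatives)) + r")\b")
    return lambda text: pattern.sub(lambda m: mapping[m.group(0)], text)

def augment(corpus: Iterable[str], pairs: Iterable[Tuple[str, str]]) -> List[str]:
    """Keep every sentence and add its swapped counterfactual when it differs."""
    swap = build_swapper(pairs)
    augmented = []
    for sentence in corpus:
        augmented.append(sentence)
        counterfactual = swap(sentence)
        if counterfactual != sentence:
            augmented.append(counterfactual)
    return augmented

PAIRS = [("Emily", "Lakisha"), ("Greg", "Jamal")]  # illustrative pairs only
corpus = ["Greg is a reliable engineer.", "Emily met Jamal at work.", "The weather was nice."]
for line in augment(corpus, PAIRS):
    print(line)
```

Swapping in one pass matters: replacing "Emily" with "Lakisha" first and then "Lakisha" with "Emily" would undo the first swap.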

d) Community Involvement and Transparency

Engaging racial justice advocates, ethicists, and impacted communities in model development and evaluation.

Providing greater transparency in dataset sources, annotation processes, and model limitations.

4. Open Questions and Challenges

Despite these efforts, challenges remain:

Trade-offs Between Fairness and Utility: Attempts to reduce bias may sometimes reduce overall model performance or introduce new biases.

Defining Fairness: Different communities and stakeholders may have varying perspectives on what constitutes "fair" AI behavior.

Evolving Language and Social Norms: Bias mitigation is an ongoing process since societal perspectives on race and identity continuously evolve.

Conclusion

Racial bias in LLMs is a socio-technical problem requiring interdisciplinary solutions. While developers and researchers have made progress in reducing biases, structural inequalities embedded in both data and algorithmic decision-making continue to pose challenges. Ethical AI development must center marginalized voices, actively address disparities, and prioritize transparency to ensure AI benefits all racial groups equitably.

Would you like insights on bias in a specific model or application?

Prompt:

First I want to do deeper research on racial bias in LLMs. Can you give me a study guide? I would like you to find 10 people to follow; please include a short description of their work. I'd like to see popular articles on the topic. If you can find any YouTube videos, that would be great as well.

Response:

Study Guide: Racial Bias in Large Language Models (LLMs)

This guide will help you explore racial bias in LLMs through a structured approach, including key readings, experts to follow, articles, and video resources.

📚 Study Plan (8-Week Structure)

Week 1: Introduction to Bias in AI & LLMs

Readings:

"Weapons of Math Destruction" – Cathy O’Neil (Book)

"AI and the Everything in the World Problem" – Timnit Gebru (Paper)

The Algorithmic Justice League's website (for AI bias activism)

Tasks:

Define racial bias in LLMs and list key concerns.

Follow key AI bias researchers.

Week 2: Bias in Training Data & NLP

Readings:

"Dissecting Racial Bias in NLP" – Bender et al. (Paper)

"On the Dangers of Stochastic Parrots" – Emily M. Bender, Timnit Gebru et al. (Paper)

Blog: Google AI Blog on Fairness

Tasks:

Investigate how bias is introduced via training data.

Analyze real-world NLP bias cases.

Week 3: Auditing & Measuring Bias in AI

Readings:

"Bias in Bios" – De-Arteaga et al. (Paper)

"Gender and Racial Bias in Sentiment Analysis" – Kiritchenko & Mohammad (Paper)

Tasks:

Explore fairness auditing tools (Google’s What-If Tool, IBM AI Fairness 360).

Week 4: Mitigation Strategies & Algorithmic Fairness

Readings:

"Fairness and Abstraction in Sociotechnical Systems" – Selbst et al. (Paper)

OpenAI’s bias mitigation reports (OpenAI Blog)

Tasks:

Examine different de-biasing techniques in AI models.

Week 5: Racial Bias in LLM Applications

Readings:

"GPT-3: What’s It Good For?" – Emily Bender (Article)

"Ethical Issues in NLP" – Hovy & Spruit (Paper)

Tasks:

Test ChatGPT/Bard for bias in responses.
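For the Week 5 testing task, one low-tech approach is to send a chatbot two prompts that differ only in a name or identity term and then compare the answers word by word. The sketch below uses Python's standard `difflib` module to list only the words that changed; the two responses are invented examples, not real model output.

```python
import difflib
from typing import List, Tuple

def changed_words(response_a: str, response_b: str) -> List[Tuple[str, str]]:
    """List (before, after) word runs that differ between two responses."""
    words_a, words_b = response_a.split(), response_b.split()
    matcher = difflib.SequenceMatcher(a=words_a, b=words_b)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":  # 'replace', 'delete', or 'insert'
            changes.append((" ".join(words_a[i1:i2]), " ".join(words_b[j1:j2])))
    return changes

# Invented responses to the same prompt, once with "Greg" and once with "Jamal".
a = "Greg would likely be a strong engineer with great leadership potential."
b = "Jamal would likely be a decent engineer with some potential."
print(changed_words(a, b))
```

Not every difference means bias: chatbot answers vary from run to run, so repeat each pair several times before drawing conclusions.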

Week 6: The Socio-Political Impact of AI Bias

Readings:

"Automating Inequality" – Virginia Eubanks (Book)

Algorithmic Bias Case Studies – AI Now Institute

Tasks:

Explore AI’s racial impact in criminal justice and hiring.

Week 7: Case Studies & Advocacy Efforts

Readings:

"Coded Bias" (Documentary)

Algorithmic Justice League reports

Tasks:

Study historical cases (e.g., COMPAS algorithm bias).

Week 8: Future Directions & Ethical AI

Readings:

AI Ethics and Fairness Policies (Google, Microsoft)

OpenAI’s Approach to AI Alignment

Tasks:

Write a summary of best practices in AI fairness.

👥 Experts to Follow on AI Bias & Fairness

1. Dr. Timnit Gebru

AI ethics researcher; former co-lead of Google’s Ethical AI team.

Co-author of "Stochastic Parrots" and founder of the Distributed AI Research Institute (DAIR).

Twitter: @timnitGebru

2. Dr. Joy Buolamwini

Founder of the Algorithmic Justice League.

Researches facial recognition bias and AI fairness.

Twitter: @jovialjoy

3. Dr. Ruha Benjamin

Professor at Princeton, author of "Race After Technology."

Examines how racial bias is embedded in technology.

Twitter: @Ruha9

4. Dr. Safiya Umoja Noble

Author of "Algorithms of Oppression."

Researches how search engines reinforce racial bias.

Twitter: @safiyanoble

5. Dr. Abeba Birhane

Researcher in AI ethics and decolonization of AI.

Works on bias mitigation strategies in LLMs.

Twitter: @Abebab

6. Dr. Emily Bender

Computational linguist; critic of AI’s overhype and bias.

Co-author of "Stochastic Parrots."

Twitter: @emilymbender

7. Mutale Nkonde

AI policy expert, founder of AI for the People.

Works on racial justice and tech policy.

Twitter: @mutalenkonde

8. Dr. Kate Crawford

Researcher on AI bias, co-founder of AI Now Institute.

Author of "Atlas of AI."

Twitter: @katecrawford

9. Dr. Meredith Broussard

Author of "Artificial Unintelligence."

Researches bias in AI and machine learning from a data journalism perspective.

Twitter: @merbroussard

10. Dr. Shakir Mohamed

Researcher at DeepMind on fairness and bias in AI.

Twitter: @shakir_za

📰 Popular Articles on Racial Bias in LLMs

"AI Systems Are Racist. Here’s Why" – Time Magazine

"How AI Reinforces Racial Bias" – Wired

"GPT-3 and the Problem of Bias" – VentureBeat

"How AI Chatbots Internalize Racism" – The Verge

"Why AI Fairness Needs More Than Technical Fixes" – MIT Tech Review

🎥 YouTube Videos on Racial Bias in AI

1. "Coded Bias" (Documentary)

A must-watch on algorithmic racial bias.


2. "Racist AI?" – Dr. Ruha Benjamin (TEDx Talk)

A powerful discussion on race, technology, and AI bias.


3. "Algorithmic Bias Explained" (CrashCourse AI)

Simple breakdown of bias in AI systems.


4. "What AI Still Can’t Do" (Dr. Timnit Gebru)

Analyzes AI bias and why large models reinforce discrimination.


5. "How Algorithms Reinforce Inequality" (Mutale Nkonde)

An AI policy expert explains how racism is embedded in AI.


Final Thoughts

This study guide should give you a strong foundation to understand, analyze, and critique racial bias in LLMs. Do you want any specific case studies or datasets to experiment with AI bias detection?

Prompt:

I'm thinking of writing a Substack series of articles teaching people how to identify bias in LLM responses and creative ways of prompting to remove it. Can you find other examples where people have written about how to remove bias from responses, or how to prompt to remove bias?

Response:

Creating a Substack series on identifying and mitigating bias in Large Language Model (LLM) responses is a commendable endeavor. While specific guides on prompting techniques to remove bias are limited, several resources offer insights into understanding and addressing biases in LLMs. Here's a curated list to assist you:

1. Research Papers and Articles on Bias in LLMs:

"Language (Technology) is Power: A Critical Survey of 'Bias' in NLP" by Blodgett et al.: This paper provides a comprehensive overview of biases in Natural Language Processing (NLP) and discusses methodologies to identify and mitigate them.

"Mitigating Gender Bias in Natural Language Processing: Literature Review" by Sun et al.: This review explores various techniques to detect and reduce gender bias in NLP models, which can be applied to LLMs.

2. Guides and Toolkits for Bias Detection and Mitigation:

AI Fairness 360 (AIF360): Developed by IBM, AIF360 is an open-source toolkit that offers metrics to test for biases in datasets and machine learning models, along with algorithms to mitigate these biases. While not exclusively for LLMs, the principles can be adapted.

Google's "Responsible AI Practices": Google provides a set of guidelines and resources aimed at developing fair and unbiased AI systems. These practices emphasize the importance of data representation and offer strategies to minimize bias.

3. Articles on Prompt Engineering to Mitigate Bias:

"Reducing Bias in AI Language Models: Strategies and Challenges" by OpenAI: This article discusses the inherent biases in AI language models and suggests strategies, including prompt engineering, to mitigate these biases.

"Prompting GPT-3 To Be Less Biased: Challenges and Strategies" by Zhao et al.: The authors explore how different prompting techniques can influence GPT-3's outputs and discuss methods to reduce biased responses.

4. Case Studies and Examples:

"Auditing AI: Investigating the Use of AI in Policing" by Richardson et al.: This case study examines the biases present in AI systems used in law enforcement and discusses methods to identify and mitigate such biases.

"Dissecting Racial Bias in an AI Language Model: A Case Study" by Sheng et al.: The authors analyze racial biases in AI language models and provide insights into how specific prompting strategies can reduce biased outputs.

5. Workshops and Tutorials:

"Fairness in AI" Workshop at NeurIPS: This annual workshop brings together researchers to discuss fairness, accountability, and transparency in AI. The proceedings often include discussions on bias in LLMs and methods to address them.

"Bias and Fairness in NLP" Tutorial at ACL: This tutorial provides an in-depth look into biases in NLP models and offers practical guidance on detecting and mitigating these biases.

6. Online Communities and Forums:

Reddit's r/MachineLearning: This subreddit frequently features discussions on biases in AI models and shares resources on mitigating them. Engaging with this community can provide real-world insights and prompt engineering techniques.

AI Alignment Forum: A platform where researchers discuss aligning AI systems with human values, including addressing biases in AI outputs.

7. Books:

"Weapons of Math Destruction" by Cathy O'Neil: This book delves into how algorithms can perpetuate biases and offers insights into identifying and addressing these issues.

"Artificial Unintelligence: How Computers Misunderstand the World" by Meredith Broussard: The author explores the limitations of AI, including biases, and discusses ways to mitigate them.

By exploring these resources, you'll gain a comprehensive understanding of the biases inherent in LLMs and the strategies to identify and mitigate them. This foundation will be invaluable as you develop your Substack series on this critical topic.

#AIBias #FairAI #EthicalAI #LLMBias #AITransparency #AIEquity #DeBiasingAI #PromptEngineering #ResponsibleAI #BiasInTech #chatgpt #midjourney #equality #justice #ai generated


Bias in AI: The Glitch That Hurts Real People

Yo, Tumblr crew! Let’s chat about something wild: AI bias. AI is everywhere these days: picking your next Netflix binge, powering self-driving cars, even deciding who gets a job interview. It’s super cool, but here’s the tea: AI can mess up by favouring some people over others, and that’s a big deal. Let’s break down what’s going on, why it sucks, and how we can make things better.

What’s AI Bias?

Let’s imagine that AI is supposed to be a neutral, super-smart robot brain, right? But sometimes it’s more like that friend who picks favorites without even realizing it. AI bias is when the system screws up by treating certain groups unfairly, like giving dudes an edge in job applications or struggling to recognize the faces of people with darker skin. It’s not that the AI is malfunctioning; it’s just doing what it was taught, and sometimes what it was taught is flawed.

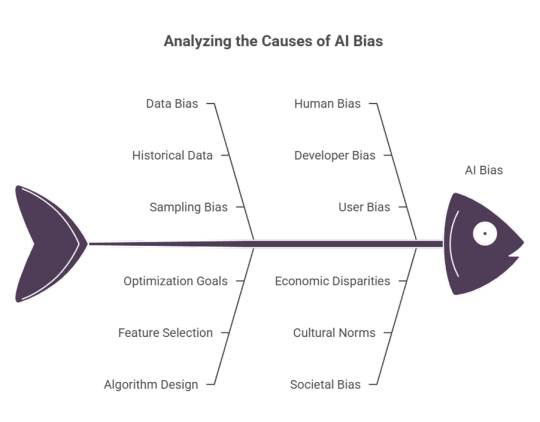

How AI Bias Creeps In

Shady Data: AI learns from data humans feed it. If that data’s from a world where, say, most tech hires were guys, the AI might “learn” to pick guys over others.

Human Oof Moments: People build AI, and we’re not perfect. Our blind spots, like not thinking about how different groups are affected, can end up in the code.

Bad Design Choices: The way an AI is built can accidentally push it toward certain outcomes, like prioritizing stuff that seems “normal” but actually excludes people.

Why’s This a Big Deal?

A few years back, a big company ditched an AI hiring tool because it was rejecting women’s résumés. Yikes.

Facial recognition tech has messed up by misidentifying Black and Brown folks way more than white folks, even leading to wrongful arrests.

Ever notice job ads for high-paying gigs popping up more for guys?

This isn’t just a tech glitch—it’s a fairness issue. If AI keeps amplifying the same old inequalities, it’s not just a bug; it’s a system that’s letting down entire communities.

Where’s the Bias Coming From?

Old-School Data: If the data AI’s trained on comes from a world with unfair patterns (like, uh, ours), it’ll keep those patterns going. For example, if loan records show certain groups got denied more, AI might keep denying them too.

Not Enough Voices: If the folks building AI all come from similar backgrounds, they might miss how their tech affects different people. More diversity in the room = fewer blind spots.

Vicious Cycles: AI can get stuck in a loop. If it picks certain people for jobs, and only those people get hired, the data it gets next time just doubles down on the same bias.
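Here’s a toy simulation of that loop in Python. It assumes a deliberately simplistic, hypothetical “model” that ranks groups by their share of past hires and fills open slots from the top-ranked group first, while both groups send the same number of equally qualified applicants every round. The group names and numbers are made up. Watch the gap widen even though nothing about the applicants changes.

```python
from typing import Dict, List

def simulate_feedback(initial_hires: Dict[str, int], applicants_per_group: int,
                      slots: int, rounds: int) -> List[Dict[str, float]]:
    """Each round, 'retrain' on all past hires, rank groups by their share,
    fill slots from the top-ranked group first, and record the new shares."""
    hires = dict(initial_hires)
    history = []
    for _ in range(rounds):
        total = sum(hires.values())
        share = {group: count / total for group, count in hires.items()}
        remaining = slots
        # The model's "preference" is just each group's share of past hires.
        for group in sorted(share, key=share.get, reverse=True):
            taken = min(applicants_per_group, remaining)
            hires[group] += taken
            remaining -= taken
        total = sum(hires.values())
        history.append({group: count / total for group, count in hires.items()})
    return history

for round_no, shares in enumerate(simulate_feedback({"group_a": 60, "group_b": 40}, 10, 10, 3), 1):
    print(round_no, {g: round(s, 3) for g, s in shares.items()})
```

Real systems are way messier than this, but the core problem is the same: yesterday’s decisions become tomorrow’s training data.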

Okay, How Do We Fix This?

There are ways to make things fairer, and it’s totally doable if we put in the work.

Mix Up the Data: Feed AI data that actually represents everyone—different races, genders, backgrounds.

Be Open About It: Companies need to spill the beans on how their AI works. No more hiding behind “it’s complicated.”

Get Diverse Teams: Bring in people from all walks of life to build AI. They’ll spot issues others might miss and make tech that works for everyone.

Keep Testing: Check AI systems regularly to catch any unfair patterns. If something’s off, tweak it until it’s right.

Set Some Ground Rules: Make ethical standards a must for AI. Fairness and accountability should be non-negotiable.

What Can We Do?

Spread the Word: Talk about AI bias! Share posts, write your own, or just chat with friends about it. Awareness is power.

Call It Out: If you see a company using shady AI, ask questions. Hit them up on social media and demand transparency.

Support the Good Stuff: Back projects and people working on fair, inclusive tech. Think open-source AI or groups pushing for ethical standards.

Let’s Dream Up a Better AI

AI’s got so much potential: think better healthcare, smarter schools, or even tackling climate change. By using diverse data, building inclusive teams, and keeping companies honest, we can make sure that potential works for everyone.

#AI #TechForGood #BiasInTech #MakeItFair #InclusiveFuture

@sruniversity


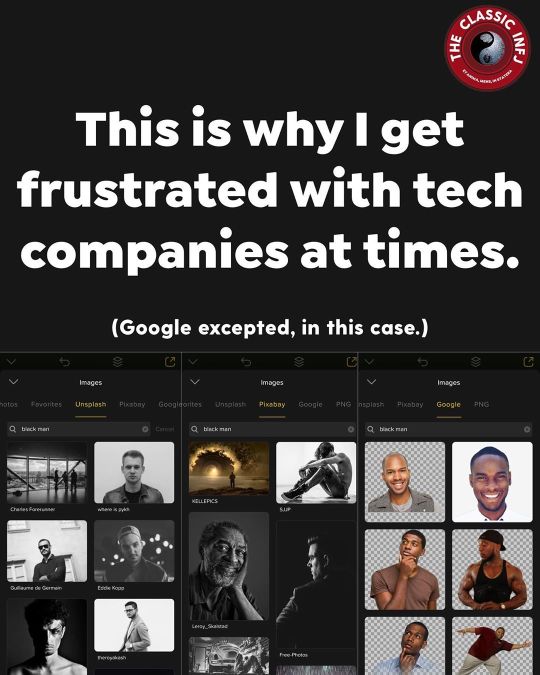

Searching for “black man” should not bring up black-and-white images as the primary results, @unsplash, @pixabay 😠😠😠 @google? A much better job! 👏🏾👏🏾👏🏾 #techbias #biasintech #racialbias #racialbiasintech (at Birmingham, United Kingdom) https://www.instagram.com/p/B76cDR5BrqP/?igshid=1n2wp9k7hqjaa
