#CUDA application programming interface


Nvidia CEO Jensen Huang calls US ban on H20 AI chip ‘deeply painful’

[ASIA] Nvidia CEO Jensen Huang said Washington’s plan to stymie China’s artificial intelligence (AI) capabilities by restricting access to its H20 graphics processing units (GPUs) was “deeply uninformed”, as the US semiconductor giant continues to navigate through a deepening tech rivalry between the world’s two largest economies. In an interview with tech site Stratechery following his keynote…

#AI#AI Diffusion Rule#America#American#Ban#Beijing#Ben Thompson#Biden administration#Blackwell AI graphics processors#calls#CEO#China#Chinese#chip#Computex#CUDA application programming interface#deeply#DeepSeek#Donald Trump#Foxconn#GPUs#H20#Hon Hai Precision Industry#Huang#Huawei Technologies#Jensen#Jensen Huang#mainland China#Nvidia#painful


IQM Resonance Devices For Quantum Software Development

IQM Quantum Computers, a quantum computer manufacturer, has updated its IQM Resonance cloud platform. The update adds new software tools to speed up quantum algorithm development and gives customers a more resilient and expanded quantum system. IQM is known for its on-premises full-stack quantum computers and cloud platform, serving high-performance computing centres, research labs, universities, and enterprises worldwide. The Finnish company employs over 300 people and has a presence on several continents.

Key IQM Resonance platform updates and features include:

Novel 54-Qubit Quantum Computer: An advanced Crystal 54 processor powers the platform's 54-qubit quantum computer. Amazon Web Services' quantum computing service, Amazon Braket, should offer this dependable system by July 16, 2025. Providing the quantum community with high-qubit counts is a major advance.

Qrisp as the default SDK: The default SDK for the IQM Resonance platform is Qrisp, an open-source project started by Fraunhofer FOKUS. Qrisp offers a powerful, easy-to-learn higher-level programming interface for quantum developers and researchers. To give users options, IQM will still support Qiskit, Cirq, CUDA Quantum, and TKET once Qrisp becomes the main SDK. This open approach to quantum development gives beginners and experts a firm foundation. IQM also hired Raphael Seidel, Qrisp's Lead Quantum Software Engineer, to lead its development in October 2025, a major move.

Seidel stressed that Qrisp's programming methodology routinely delivers “serious performance advantages”. He called Qrisp “state-of-the-art in quantum programming” and said its direct and robust integration into IQM's quantum hardware will give it a “strong head-start into the era of fault-tolerant quantum computers.”

Advanced Error Handling: The platform now supports error-reduction techniques. Dynamical decoupling was one of the first features added to protect qubits from noise. The next step is to reduce readout error, which should considerably improve experimental precision. These advances are crucial for users who need more reliable quantum computation data.

QAOA library: A powerful Quantum Approximate Optimisation Algorithm (QAOA) library accelerates research. With its many QAOA flavours, this application library allows speedy iteration on new concepts and the exploration of new quantum algorithms. It also helps researchers develop, test, and adjust quantum circuits for complex optimisation problems.

Expanded IQM Academy Resources: To suit the latest upgrades, materials have been considerably upgraded. In-depth Qrisp examples and tutorials and an error reduction section have been included. The academy will soon offer a Qrisp on IQM hardware lecture series and insights into IQM's upcoming Noise Robust estimation methods.

IQM Resonance now offers pulse-level access for experienced users that need maximum experimental control. Scientists can directly program pulse patterns to create novel quantum operations and precise experiments.

Increased Access and Adoption:

IQM is offering a free "Starter tier" with up to 30 credits per month on some quantum computers to increase access to its top platform. This project aims to boost quantum technology interest by decreasing barriers for new researchers, developers, and students. With the launch of this Starter Tier and the rapid availability of IQM Crystal 54, IQM is also giving all Starter tier customers temporary access to the 54-qubit quantum computer to encourage experimentation and creativity.

Dr. Stefan Seegerer, IQM's Head of Product, Quantum Platform, called these achievements “a new era of utility for quantum computing” and showed the company's commitment to ecosystem enablement. He stressed that IQM is providing researchers and developers with the Crystal 54-qubit system, cutting-edge error reduction techniques, and Qrisp standardisation to push the frontiers of what is possible in the sector. Dr. Seegerer called the free Starter package a “crucial step in our mission to make quantum technology accessible to everyone.”

Over 200 companies are registered on the IQM Resonance quantum cloud platform for research. IQM, a globally leading superconducting quantum computing company, updates its cloud platform and on-premises full-stack quantum computers to fulfil the needs of research labs, high-performance computing centres, academic institutions, and companies.

#IQMResonance#quantumalgorithms#AmazonBraket#IQMResonanceplatform#quantumcircuits#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech


OpenCV Porting on T507 Platform with Linux 4.19: A Deep Comparison Between Standalone Compilation and Buildroot Compilation

OpenCV is an open-source computer vision library that provides a rich set of image processing and computer vision algorithms, supporting multi-platform development. It can be used for various computer vision tasks such as image and video processing, feature extraction, object detection, image segmentation, pose estimation, and object recognition. There are two methods for porting OpenCV: standalone compilation and Buildroot compilation.

Standalone Compilation of OpenCV

Standalone compilation requires CMake, a cross-platform build system that plays a role similar to the commonly used ./configure script. CMake reads the project's CMakeLists.txt file, which defines the build rules, and generates the build files (such as Makefiles) from it.

1. Install CMake

2. Configuration

First, extract opencv-2.4.13.7.tar.gz, enter its directory, and run cmake-gui to open the configuration interface.

(1)Set the source code path and build output path (the output path must be created in advance);

(2)Click Configure and select Unix platform cross-compilation mode;

(3)Configure compiler settings.

(4)Adjust the build options after the initial configuration:

Enable TIFF support.

Disable CUDA (Compiling with CUDA fails; CUDA is for NVIDIA GPUs, which are not available on T507, so disabling it has no impact).

Select Grouped to configure the installation path in cmake.

Disable GTK.

Reconfigure and generate the build files.
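The cmake-gui choices above can also be expressed as a command line. The following is a minimal Python sketch, not the article's own procedure: it only assembles the argument list. The option names WITH_TIFF, WITH_CUDA and WITH_GTK are assumed to correspond to the entries shown in cmake-gui, and the source path, install prefix and toolchain file are hypothetical placeholders.

```python
import shlex


def opencv_cmake_args(source_dir, install_prefix, toolchain_file=None):
    """Build a cmake argument list mirroring the cmake-gui choices.

    All paths are hypothetical; the option names are assumed to match
    the entries shown in cmake-gui for this OpenCV version.
    """
    args = [
        "cmake",
        f"-DCMAKE_INSTALL_PREFIX={install_prefix}",  # installation path
        "-DWITH_TIFF=ON",   # enable TIFF support
        "-DWITH_CUDA=OFF",  # no NVIDIA GPU on the T507
        "-DWITH_GTK=OFF",   # GTK is disabled in the GUI steps
    ]
    if toolchain_file is not None:
        # Cross-compilation settings would normally live in a toolchain file.
        args.append(f"-DCMAKE_TOOLCHAIN_FILE={toolchain_file}")
    args.append(source_dir)  # the source path goes last
    return args


# Intended to be run from the (pre-created) build output directory.
args = opencv_cmake_args("../opencv-2.4.13.7", "/tmp/opencv-install")
print(" ".join(shlex.quote(a) for a in args))
```

Printing the command instead of executing it makes it easy to compare each flag with the GUI settings before running anything.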

3. Compilation

Before compiling, modify the CMakeLists.txt file to configure the linker rules:

-lpthread: links the libpthread.so library, which provides multithreading support

-lrt: links the librt.so library, which provides real-time extension functions

Run make to compile.

4. Installation and Deployment

The installation path needs to be cleared before installation.

Run make install.

Generated test program.

Generated library.

Some of the required files also remain in the build path.

Package the above content and release it to the file system of the board.

5. Test

The test program can call the library and run normally.

However, there are still some errors in the application processing.

Buildroot Compilation of OpenCV

1. Enter the Graphical Interface

2. Select Compilation Parameters

Select opencv3 in the following path

Tick all the parameter items.

3. Save the .config file.

Go to the output path of the source package, /OKT507-linux-sdk/out/t507/okt507/longan/buildroot.

Rename the existing target directory to any other name and create a new, empty target directory.

4. Standalone Compilation

A network connection is required during compilation.

Buildroot outputs the standalone compiled content to the previously created **target** directory. The contents can then be packaged and deployed to the **filesystem**.

5. Test

ZD-Atom provides an OpenCV Qt test program based on **i.MX6UL**, where the **.pro** file defines the application's dependencies.

These libraries are available in the files generated by the compilation.

Both methods have their pros and cons:

Standalone Compilation: Allows trying different source versions and offers more flexible parameter configuration, but the process is complex.

Buildroot Compilation: Enables easy deployment by simply extracting the generated files, but switching versions is inconvenient.


Nvidia Strategy

Nvidia Corporation is an American multinational technology company incorporated in Delaware and based in Santa Clara, California.

It is a software and fabless company that designs graphics processing units, application programming interfaces for data science and high-performance computing as well as system on chip units for the mobile computing and automotive market.

Stock Strategy

Nvidia is a dominant supplier of artificial intelligence hardware and software.

Its professional line of GPUs is used in workstations for applications in such fields as architecture, engineering and construction, media and entertainment, automotive, scientific research, and manufacturing design.

In addition to GPU manufacturing, Nvidia provides an API called CUDA that allows the creation of massively parallel programs that utilize GPUs. They are deployed in supercomputing sites around the world.

More recently, it has moved into the mobile computing market, where it produces Tegra mobile processors for smartphones and tablets as well as vehicle navigation and entertainment systems.

In addition to AMD, its competitors include Intel, Qualcomm and AI-accelerator companies such as Graphcore.

CEO

Jensen Huang

FOUNDED

Apr 1993

HEADQUARTERS

Santa Clara, California

United States

EMPLOYEES

26,196

Stock Strategy

Nvidia's product families include graphics, wireless communication, PC processors, and automotive hardware/software.

Some families

GeForce, consumer-oriented graphics processing products

Nvidia RTX, professional visual computing graphics processing products (replacing GTX)

NVS, multi-display business graphics solution.

Facebook Trading Strategy

Meta Platforms, Inc., formerly named Facebook, Inc., and The Facebook, Inc., is an American multinational technology conglomerate based in Menlo Park, California. The company owns Facebook, Instagram, and WhatsApp, among other products and services.

Meta is one of the world's most valuable companies and among the ten largest publicly traded corporations in the United States. It is considered one of the Big Five American information technology companies, alongside Alphabet, Amazon, Apple, and Microsoft. Meta's products and services include Facebook, Instagram, WhatsApp, Messenger, and Quest 2.

It has acquired Reality Labs, Mapillary, CTRL-Labs, Kustomer, and has a 9.99% stake in Jio Platforms. In 2021, the company generated 97.5% of its revenue from the sale of advertising. On October 28, 2021, the parent company of Facebook changed its name from Facebook, Inc., to Meta Platforms, Inc., to "reflect its focus on building the metaverse".

According to Meta, the "metaverse" refers to the integrated environment that links all of the company's products and services.

CEO

Mark Zuckerberg

FOUNDED

Feb 2004

EMPLOYEES

77,114

Stock Strategy

Offices

Users outside of the US and Canada contract with Meta's Irish subsidiary, Meta Platforms Ireland Limited (formerly Facebook Ireland Limited), allowing Meta to avoid US taxes for all users in Europe, Asia, Australia, Africa and South America. Meta is making use of the Double Irish arrangement, which allows it to pay 2–3% corporation tax on all international revenue.


OPINION: NVIDIA's Anti-Competitive Move: The Restriction on Third-Party "Translation" Software

Opinion posting: In a recent update to its terms of service, NVIDIA has taken a controversial step by restricting third-party use of its CUDA libraries with "translation" software, most notably targeting projects like ZLUDA. This decision by the GPU giant has sparked considerable debate within the tech community, with many voicing concerns over its potential anti-competitive implications.

CUDA, NVIDIA's proprietary parallel computing platform and application programming interface (API), has long been a cornerstone for developers utilizing NVIDIA GPUs for various computational tasks, from scientific simulations to deep learning algorithms. However, with the rise of alternative platforms and software, the tech industry has witnessed a growing demand for compatibility and interoperability across different ecosystems.

One such demand has been for tools that enable the translation of CUDA code to run on non-NVIDIA hardware, effectively breaking down vendor lock-in and fostering a more open and diverse computing environment. Projects like ZLUDA have emerged to address this need, allowing CUDA applications to run on AMD GPUs, for instance, thus providing users with greater flexibility and choice in their hardware selection.

By imposing restrictions on the usage of its CUDA libraries with such translation software, NVIDIA is effectively limiting the accessibility and interoperability of its technology, thereby stifling competition and innovation in the GPU market. This move not only undermines the principles of fair competition but also raises concerns about the dominance of NVIDIA within the industry.

This isn't the first time a tech industry leader has been accused of engaging in anti-competitive behavior. Historical examples abound, with some of the most prominent cases including:

Microsoft vs. Netscape: In the late 1990s, Microsoft faced antitrust scrutiny for bundling its Internet Explorer web browser with the Windows operating system, thus stifling competition from Netscape Navigator and other browsers. This led to a landmark legal battle in which Microsoft was found to have violated antitrust law.

Intel's Anti-Competitive Practices: Intel has faced multiple allegations of anti-competitive behavior over the years, including accusations of offering rebates and incentives to PC manufacturers in exchange for exclusive deals and favoring its own products over competitors' in certain markets. These actions have led to investigations by regulatory authorities in various countries and hefty fines imposed on the company.

Google's Search Dominance: Google has been under scrutiny for leveraging its dominant position in the search engine market to favor its own services and products over competitors' offerings. This has resulted in numerous antitrust investigations and legal challenges, with regulators raising concerns about Google's impact on competition and consumer choice.

In each of these cases, the actions of the industry leader in question were seen as detrimental to competition and innovation, ultimately resulting in regulatory intervention and legal consequences. Similarly, NVIDIA's decision to restrict third-party "translation" software could be seen as a move aimed at preserving its dominance in the GPU market, potentially at the expense of consumer choice and innovation.

In conclusion, NVIDIA's recent update to its terms of service regarding the usage of CUDA libraries with third-party translation software raises serious concerns about anti-competitive behavior within the tech industry. By limiting interoperability and stifling competition, NVIDIA risks undermining the principles of fair competition and innovation that are essential for a healthy and vibrant technology ecosystem. As such, it is imperative for regulatory authorities to closely monitor the situation and take appropriate action to ensure a level playing field for all participants in the GPU market.


Understanding NVIDIA CUDA

NVIDIA CUDA has revolutionized the world of computing by enabling programmers to harness the power of the GPU (Graphics Processing Unit) for general-purpose computing tasks. It has changed the way we think about parallel computing, unlocking immense processing power that was previously untapped. In this article, we will delve into the world of NVIDIA CUDA, exploring its origins, benefits, applications, programming techniques, and the future it holds.

1. Introduction

In the era of big data and complex computational tasks, traditional CPUs alone are no longer sufficient to meet the demands of high-performance computing. This is where NVIDIA CUDA comes into play, providing a parallel computing platform and API that allows developers to utilize the massively parallel architecture of GPUs.

2. What is NVIDIA CUDA?

NVIDIA CUDA is a parallel computing platform and programming model that enables developers to utilize the power of GPUs for general-purpose computations. It provides a unified programming interface that abstracts the complexities of GPU architecture and allows programmers to focus on their computational tasks without worrying about low-level GPU details.

3. History and Evolution of NVIDIA CUDA

NVIDIA CUDA was first introduced by NVIDIA Corporation in 2007 as a technology to enable general-purpose computing on GPUs. Since then, it has evolved significantly, with each iteration bringing more features, optimizations, and performance improvements.

4. Benefits of NVIDIA CUDA

One of the key advantages of NVIDIA CUDA is its ability to significantly accelerate computing tasks by offloading them to GPUs. GPUs are designed to handle massive parallelism, making them ideal for data-intensive and computationally intensive tasks. This results in faster execution times and improved performance compared to traditional CPU-based computing. Additionally, NVIDIA CUDA allows for more efficient utilization of hardware resources, maximizing the overall system throughput. It allows programmers to exploit the full potential of GPUs, enabling them to solve complex problems that were previously impractical or infeasible.

5. Applications and Use Cases of NVIDIA CUDA

NVIDIA CUDA finds applications in a wide range of domains, including scientific research, data analysis, machine learning, computer vision, simulations, graphics rendering, and more. It is particularly useful in tasks that involve massive data parallelism, such as image and video processing, deep learning, cryptography, and computational physics. The ability to perform complex calculations in real time, enabled by NVIDIA CUDA, has accelerated breakthroughs in various fields, pushing the boundaries of what is possible.

6. How NVIDIA CUDA Works

At the heart of NVIDIA CUDA is a parallel architecture built on CUDA cores. These are the individual processing units within a GPU; together they can execute thousands of lightweight threads simultaneously. By efficiently partitioning the workload among these cores, NVIDIA CUDA can achieve massive parallelism and deliver exceptional performance. To harness the power of NVIDIA CUDA, programmers write code using CUDA C/C++ or CUDA Fortran, which is then compiled into GPU executable code. This code is optimized for the GPU architecture, allowing for seamless integration of compute-intensive and graphics-intensive tasks.

7. Programming with NVIDIA CUDA

Programming with NVIDIA CUDA requires an understanding of parallel computing concepts and GPU architecture. Developers need to partition their algorithms into parallelizable tasks that can be executed on multiple cores simultaneously. They also need to carefully manage memory accesses and ensure efficient communication between CPU and GPU. NVIDIA provides a comprehensive set of programming tools, libraries, and frameworks, such as the CUDA Toolkit and cuDNN, to assist developers in writing efficient and optimized CUDA code. These tools help in handling the complexities associated with GPU programming, enabling faster development cycles.

8. Popular NVIDIA CUDA Libraries and Frameworks

To further simplify the development process, NVIDIA CUDA provides a vast ecosystem of libraries and frameworks tailored for various domains. This includes libraries for linear algebra (cuBLAS), signal processing (cuFFT), image and video processing (NPP), deep learning (cuDNN), and many more. These libraries provide pre-optimized functions and algorithms, enabling developers to achieve high-performance results with minimal effort.

9. Performance and Efficiency of NVIDIA CUDA

The performance and efficiency of NVIDIA CUDA are crucial factors when considering its adoption. GPUs equipped with the CUDA architecture have demonstrated exceptional capabilities in terms of both raw computational power and power efficiency. Their ability to handle parallel workloads and massive data sets makes them indispensable for modern high-performance computing. Additionally, the continuous advancements in CUDA architecture, hardware improvements, and software optimizations ensure that NVIDIA CUDA remains at the forefront of parallel computing technology.

10. Future of NVIDIA CUDA

As technology continues to advance, the future of NVIDIA CUDA looks extremely promising. With the rise of artificial intelligence, deep learning, and the need for more efficient computations, the demand for GPU-accelerated computing is only expected to grow. NVIDIA is dedicated to pushing the boundaries of parallel computing, continually refining the CUDA architecture, and providing developers with the tools they need to solve the world's most demanding computational problems.

11. Conclusion

In conclusion, NVIDIA CUDA has revolutionized the computing landscape by providing a powerful platform for harnessing GPU power for general-purpose computations. Through its parallel computing architecture and comprehensive programming ecosystem, it enables developers to unlock the potential of GPUs, delivering faster, more efficient, and highly parallel solutions. With a bright future ahead, NVIDIA CUDA is set to continue reshaping the world of high-performance computing.

FAQs

What are the benefits of using NVIDIA CUDA?

NVIDIA CUDA offers several benefits, including accelerated computing, improved performance, efficient hardware utilization, and the ability to solve complex problems that were previously infeasible.

What are some applications of NVIDIA CUDA?

NVIDIA CUDA finds applications in scientific research, machine learning, computer vision, simulations, graphics rendering, cryptography, and more. It is particularly useful in tasks involving massive data parallelism.

How does NVIDIA CUDA work?

NVIDIA CUDA uses the GPU's CUDA cores to achieve massive parallelism. This allows for the execution of thousands of lightweight threads simultaneously, resulting in faster computations.

What programming languages are used with NVIDIA CUDA?

NVIDIA CUDA supports programming languages such as CUDA C/C++ and CUDA Fortran, which allow developers to write code optimized for the GPU architecture.

What are popular NVIDIA CUDA libraries and frameworks?

NVIDIA CUDA provides a wide range of libraries and frameworks tailored for various domains, including cuBLAS, cuFFT, NPP, cuDNN, and more, which assist developers in achieving high-performance results with minimal effort.
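To make the idea of partitioning a workload across many lightweight threads more concrete, here is a minimal sketch in plain Python rather than real CUDA code. It imitates, sequentially, how a one-dimensional kernel launch numbers its threads (block index times block size plus thread index) and how each thread handles one array element. The function names and the block size of 4 are illustrative assumptions; on a GPU the loop bodies would run in parallel.

```python
def global_thread_ids(grid_dim, block_dim):
    """Yield (block, thread, global_id) as a 1-D launch would number threads."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            # Same formula a kernel uses: blockIdx.x * blockDim.x + threadIdx.x
            yield block_idx, thread_idx, block_idx * block_dim + thread_idx


def simulated_vector_add(a, b, block_dim=4):
    """Sequential stand-in for a vector-add kernel: one 'thread' per element."""
    n = len(a)
    grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover n
    out = [0] * n
    for _, _, i in global_thread_ids(grid_dim, block_dim):
        if i < n:  # bounds guard: the last block may have spare threads
            out[i] = a[i] + b[i]
    return out


result = simulated_vector_add([1, 2, 3, 4, 5], [10, 20, 30, 40, 50])
print(result)  # [11, 22, 33, 44, 55]
```

With five elements and a block size of 4, two blocks are launched and the last three threads of the second block are skipped by the bounds guard, which is the usual pattern when the data size is not a multiple of the block size.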


🖥 Understanding aespa’s ED Hacker Role (1)

A short theory post of me attempting to understand Ningning’s role in aespa’s lore and what she really does in the overall story. This post discusses what happened on Ningning’s side in aespa’s EP. 1 Black Mamba - SMCU video. There could be more posts as the next episode is released.

In aespa’s lore, Ningning has the role of being an ‘ED Hacker’. Though, oddly, she is mostly shown being an artist. Because of that, it’s confusing to see what the connection between an artist and a hacker is. So here’s me attempting to dissect this.

As of now we still don’t know what ED stands for, but for sure, Ningning is a hacker. After rewatching EP 1 Black Mamba SMCU, I found out that her role somehow has something to do with cryptocurrency.

tweet link

I’m using this tweet as my guide... although I may not understand what OP was really talking about. But here’s my understanding.

CUDA (or Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing unit (GPU) for general purpose processing.

We’re only focusing on GPU,

GPU - A graphics processing unit is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. The term was popularized by Nvidia (multinational technology company). GPUs are used in cryptocurrency mining.

GPU mining involves the use of a gaming computer’s graphics processing unit to solve complex math problems to verify electronic transactions on a blockchain. This is how you mine blockchain. Due to a GPU's power potential vs. a CPU, or central processing unit, they have become more useful in blockchain mining due to their speed and efficiency. (source)

On the picture of the tweet, we also have Ethminer (labeled as ‘Ethereum Blockchain’ on pic).

Ethminer is an Ethash GPU mining worker: with ethminer you can mine every coin which relies on an Ethash Proof of Work thus including Ethereum, Ethereum Classic, Metaverse, Musicoin, Ellaism, Pirl, Expanse and others. Ethminer features Nvidia CUDA mining. (source)

Ethash is the proof-of-work algorithm (just search what it looks like, you’ll get it) used by the Ethereum network and other Ethereum-based cryptocurrencies.
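To get a feel for what “solving complex math problems” means in mining, here is a toy proof-of-work sketch in Python. It is not Ethash and has nothing to do with how ethminer is actually implemented: it swaps in plain SHA-256 from the standard library, and the block text and difficulty value are made up for illustration. The idea is just that a miner keeps trying numbers (nonces) until the hash falls below a target, while anyone can check the answer with a single hash.

```python
import hashlib


def pow_hash(block_data, nonce):
    """Hash the block text together with a nonce (SHA-256, not Ethash)."""
    return hashlib.sha256(f"{block_data}:{nonce}".encode()).digest()


def mine(block_data, difficulty_bits, max_nonce=1_000_000):
    """Try nonces until the hash has `difficulty_bits` leading zero bits."""
    target = 1 << (256 - difficulty_bits)
    for nonce in range(max_nonce):
        digest = pow_hash(block_data, nonce)
        if int.from_bytes(digest, "big") < target:
            return nonce, digest.hex()
    return None  # no solution within max_nonce tries


def verify(block_data, nonce, difficulty_bits):
    """Checking a claimed solution takes one hash, unlike finding it."""
    target = 1 << (256 - difficulty_bits)
    return int.from_bytes(pow_hash(block_data, nonce), "big") < target


# Hypothetical block contents; 12 bits keeps the search short on a CPU.
found = mine("example transactions", difficulty_bits=12)
if found is not None:
    nonce, digest_hex = found
    print(nonce, digest_hex, verify("example transactions", nonce, 12))
```

The search is pure trial and error, which is why hardware that can try many nonces at once, like a GPU, is so useful for it.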

Now that I have defined the terms, shouldn’t I be able to patch things up? Honestly, not really... I need someone who is an expert in this. But I’ll give it a shot.

If my translation is correct, Ningning is targeting this lady (art director) because she is under suspicion.

‘“예술가 착취” 미관장, 혐의 의혹’ translates to ‘"Artist exploitation". Director Mi, suspicion.’

Ningning along with the mysterious guy in hoodie, who, if you remember, people speculated to be either Jaehyun or Jungwoo in Sticker era, is in a way crashing her exhibition. Basically to attack her for a good reason (?).

My possible theories:

1. Ningning hacked into her exhibition using Ethminer and replaced (?) the previous art with her art.

Weakness of this theory: To be honest, I don’t know if you can hack using an Ethminer. I don’t think that’s the function either. It’s difficult to get the answers for this too unfortunately. This is my first attempt in patching her hacker role with mining cryptocurrency.

This one is getting further,

2. The art director stole or benefited unfairly from the artwork of someone else and was selling it to people as an NFT(?), Ningning stole (or bought???) the artwork using Ethminer before it fell into the wrong hands. That’s why the article says ‘Artist Exploitation’.

Weakness: I’m unsure of this theory too. Why would Ningning buy it too just to save it?? If only there’s more explanation to that article.

Those are just attempts by the way, I’m not concluding yet. Even the video is still vague.

I’m confused if Ningning is hacking or just successfully mined???? Again, I would need someone who knows this better. What’s for sure is that there is mining involved. Since there is art involved so does this mean there are NFTs?

We still don’t know what ED means either. But it’s a part of the role name, ‘ED Hacker’. So Ningning hacks for it? My biggest guess is that the E stands for Ethereum or Ether, I mean we had Ethash and Ethminer involved. Speaking of which, the word ‘Ether’ isn’t foreign either in SMCU. NCT has their own definition of Ether; sea of unconscious. So does that mean aespa has their own ‘Ether’?

---

I guess that’s it from me. I’ll definitely make another post about this once I get more info.

#aespa#smcu#sm culture universe#SMCU THEORY#ningning#ning yizhuo#ningning aespa#karina aespa#winter aespa#giselle aespa#naevis#kwangya#yoo jimin#kim minjeong#aeri uchinaga#aespa black mamba


Driver Full For Mac Os X

Disclaimer

Driver Mac Os X Wifi

Driver Full For Mac Os X 10 12 Download

Driver Full For Mac Os X 10.10


Paragon NTFS for Mac OS X is an NTFS driver that provides full read/write access to NTFS formatted volume with the same speed as OS X's native HFS+ format. The latest version of the driver features full support for 64-bit Snow Leopard and Lion, but can also be used in 32-bit mode. File Driver is an application for Mac OS X that easily allows you to Locate, Organize, and Archive your files. File Driver utilizes OS X’s built-in metadata capabilities (Spotlight) to uniquely organize your files the way you see fit, making filing and retrieving your data a breeze. File Driver can easily replace your bulky filing cabinet that holds your bills, receipts, and other various files.

All software, programs (including but not limited to drivers), files, documents, manuals, instructions or any other materials (collectively, “Content”) are made available on this site on an 'as is' basis.

Canon Singapore Pte. Ltd. and its affiliate companies (“Canon”) make no guarantee of any kind with regard to the Content, expressly disclaims all warranties, expressed or implied (including, without limitation, implied warranties of merchantability, fitness for a particular purpose and non-infringement) and shall not be responsible for updating, correcting or supporting the Content.

Canon reserves all relevant title, ownership and intellectual property rights in the Content. You may download and use the Content solely for your personal, non-commercial use and at your own risks. Canon shall not be held liable for any damages whatsoever in connection with the Content, (including, without limitation, indirect, consequential, exemplary or incidental damages).

Driver Full For Mac Os X 10 12 Download

You shall not distribute, assign, license, sell, rent, broadcast, transmit, publish or transfer the Content to any other party. You shall also not (and shall not let others) reproduce, modify, reformat or create derivative works from the Content, in whole or in part.

Download Mac software in the Drivers category. Native macOS Gmail client that uses Google's API in order to provide you with the Gmail features you know and love, all in an efficient Swift-based app. In order to run Mac OS X Applications that leverage the CUDA architecture of certain NVIDIA graphics cards, users will need to download and install the 7.0.64 driver for Mac located here. New in Release 346.02.03f01: Graphics driver updated for Mac OS X Yosemite 10.10.5 (14F27).

You agree not to send or bring the Content out of the country/region where you originally obtained it to other countries/regions without any required authorization of the applicable governments and/or in violation of any laws, restrictions and regulations.

By proceeding to download the Content, you agree to be bound by the above as well as all laws and regulations applicable to your download and use of the Content.

When working with Macs and PCs at the same time, you will at some point hit the stumbling block of hard drives with different formats. Paragon NTFS for Mac OS X is an NTFS driver that provides full read/write access to NTFS-formatted volumes with the same speed as OS X's native HFS+ format.

The latest version of the driver features full support for 64-bit Snow Leopard and Lion, but can also be used in 32-bit mode. Using the driver means that shared files can be accessed with ease without the need for potentially expensive hardware.

With the driver installed, existing files on NTFS partitions can be modified and deleted, and you can also create new files. The driver is simple to use: once installed, it mounts NTFS partitions for you.

There are no limits to the size of NTFS partitions that can be accessed, and support is available for non-Roman characters. A Windows version of the driver is also available that enables Windows computers to access HFS+ partitions.

NTFS for Mac 11 ships with these changes:

- Support for the latest OS X 10.9 Mavericks
- Added software update center
- New simplified installer interface
- Improved stability and performance
- Bug fixes


Verdict:

Paragon NTFS for Mac OS X is an essential installation for anyone working with both Macs and PCs, as it helps to break down the barriers that exist between the two operating systems.


How to Find a Perfect Deep Learning Framework

Many courses and tutorials offer to guide you through building a deep learning project. Of course, from the educational point of view, it is worthwhile: try to implement a neural network from scratch, and you’ll understand a lot of things. However, such an approach does not prepare us for real life, where you are not supposed to spare weeks waiting for your new model to build. At this point, you can look for a deep learning framework to help you.

A deep learning framework, like a machine learning framework, is an interface, library or tool that lets you build deep learning models easily and quickly, without getting into the details of the underlying algorithms. Such frameworks provide a clear and concise way of defining models from a collection of pre-built and optimized components.

Briefly speaking, instead of writing hundreds of lines of code, you can choose a suitable framework that will do most of the work for you.

Most popular DL frameworks

The state-of-the-art frameworks are quite new; most of them were released after 2014. They are open-source and are still undergoing active development. They vary in the number of examples available, the frequency of updates and the number of contributors. Besides, though you can build most types of networks in any deep learning framework, each still has a specialization, and they usually differ in the way they expose functionality through their APIs.

Here are the most popular frameworks.

TensorFlow

The framework that we mention all the time, TensorFlow, is a deep learning framework created in 2015 by the Google Brain team. It has a comprehensive and flexible ecosystem of tools, libraries and community resources. TensorFlow has pre-written code for most of the complex deep learning models you’ll come across, such as Recurrent Neural Networks and Convolutional Neural Networks.

The most popular use cases of TensorFlow are the following:

NLP applications, such as language detection, text summarization and other text processing tasks;

Image recognition, including image captioning, face recognition and object detection;

Sound recognition

Time series analysis

Video analysis, and much more.

TensorFlow is extremely popular within the community because it supports multiple languages, such as Python, C++ and R, has extensive documentation and walkthroughs for guidance and updates regularly. Its flexible architecture also lets developers deploy deep learning models on one or more CPUs (as well as GPUs).

For inference, developers can either use TensorFlow-TensorRT integration to optimize models within TensorFlow, or export TensorFlow models, then use NVIDIA TensorRT’s built-in TensorFlow model importer to optimize in TensorRT.

Installing TensorFlow is also a pretty straightforward task.

For CPU-only:

pip install tensorflow

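For CUDA-enabled GPU cards (older releases only; since TensorFlow 2.1 the standard tensorflow package also includes GPU support, and the separate tensorflow-gpu package is deprecated):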

pip install tensorflow-gpu

Learn more:

An Introduction to Implementing Neural Networks using TensorFlow

TensorFlow tutorials

PyTorch


Facebook introduced PyTorch in 2017 as a successor to Torch, a popular deep learning framework released in 2011, based on the programming language Lua. In its essence, PyTorch took Torch features and implemented them in Python. Its flexibility and coverage of multiple tasks have pushed PyTorch to the foreground, making it a competitor to TensorFlow.

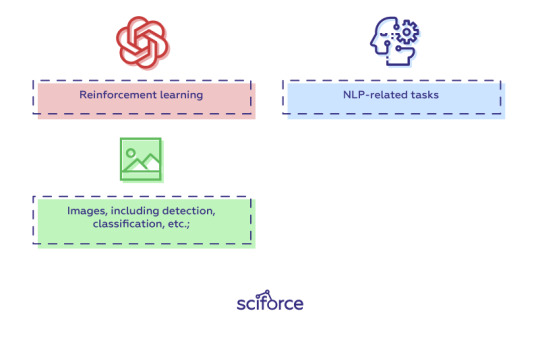

PyTorch covers all sorts of deep learning tasks, including:

Images, including detection, classification, etc.;

NLP-related tasks;

Reinforcement learning.

Instead of predefined graphs with specific functionalities, PyTorch allows developers to build computational graphs on the go, and even change them during runtime. PyTorch provides Tensor computations and uses dynamic computation graphs. Autograd package of PyTorch, for instance, builds computation graphs from tensors and automatically computes gradients.
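To make the idea of a graph that is built on the go more concrete, here is a minimal sketch in plain Python. It is not PyTorch code and does not reflect how Autograd is implemented; the `Value` class and the function `f` are hypothetical names made up for this illustration. Each arithmetic operation records its inputs, so the graph exists only after the code has run, and ordinary Python control flow can change its shape from one call to the next.

```python
class Value:
    """Scalar node that remembers how it was computed (a tiny dynamic graph)."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents          # nodes this value was computed from
        self._local_grads = local_grads  # d(self)/d(parent), one per parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other),
                     (other.data, self.data))

    def backward(self):
        """Order the recorded graph topologically, then apply the chain rule."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad


def f(x, w, use_square):
    # A plain Python "if" decides the graph's shape at run time.
    y = x * w
    if use_square:
        y = y * y
    return y + 1.0
```

Calling `f(Value(3.0), Value(2.0), True)` and then `backward()` on the result fills in `grad` on both inputs; calling it with `False` records a smaller graph and yields different gradients, with no separate graph-definition step in between.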

For inference, developers can export to ONNX, then optimize and deploy with NVIDIA TensorRT.

A drawback of PyTorch is that its installation process depends on several factors: your operating system, the package manager you use to install it, the language you are working with, the CUDA version, and others.

Learn more:

Learn How to Build Quick & Accurate Neural Networks using PyTorch — 4 Awesome Case Studies

PyTorch tutorials

Keras

Keras was created in 2015 by researcher François Chollet with an emphasis on ease of use through a unified and often abstracted API. It is an interface that can run on top of multiple frameworks such as MXNet, TensorFlow, Theano and Microsoft Cognitive Toolkit using a high-level Python API. Unlike TensorFlow, Keras is a high-level API that enables fast experimentation and quick results with minimum user actions.

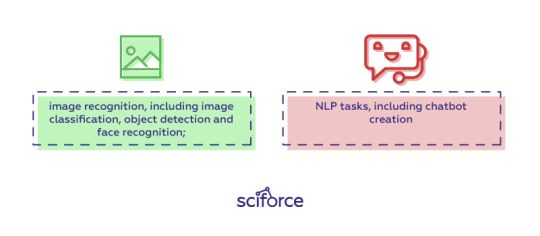

Keras has multiple architectures for solving a wide variety of problems. The most popular are:

image recognition, including image classification, object detection and face recognition;

NLP tasks, including chatbot creation

Keras models can be classified into two categories:

Sequential: The layers of the model are defined in a sequential manner, so when a deep learning model is trained, these layers are implemented sequentially.

Keras functional API: This is used for defining complex models, such as multi-output models or models with shared layers.

Keras is installed easily with just one line of code:

pip install keras

Learn more:

The Ultimate Beginner’s Guide to Deep Learning in Python

Keras Tutorial: Deep Learning in Python

Optimizing Neural Networks using Keras

Caffe

The Caffe deep learning framework was created by Yangqing Jia at the University of California, Berkeley in 2014, and has led to forks like NVCaffe and new frameworks like Facebook’s Caffe2 (which has since been merged into PyTorch). It is geared towards image processing and, unlike the previous frameworks, its support for recurrent networks and language modeling is limited. However, Caffe is very fast at processing and learning from images.

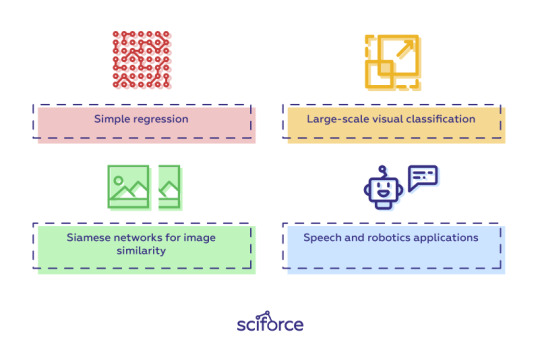

The Caffe Model Zoo collects pre-trained networks, models and weights that can be applied to the following tasks:

Simple regression

Large-scale visual classification

Siamese networks for image similarity

Speech and robotics applications

Besides, Caffe provides solid support for interfaces like C, C++, Python, MATLAB as well as the traditional command line.

To optimize and deploy models for inference, developers can leverage NVIDIA TensorRT’s built-in Caffe model importer.

The installation process for Caffe is rather complicated: it involves a number of steps and requires dependencies such as CUDA, BLAS and Boost. The complete guide for installation of Caffe can be found here.

Learn more:

Caffe Tutorial

Choosing a deep learning framework

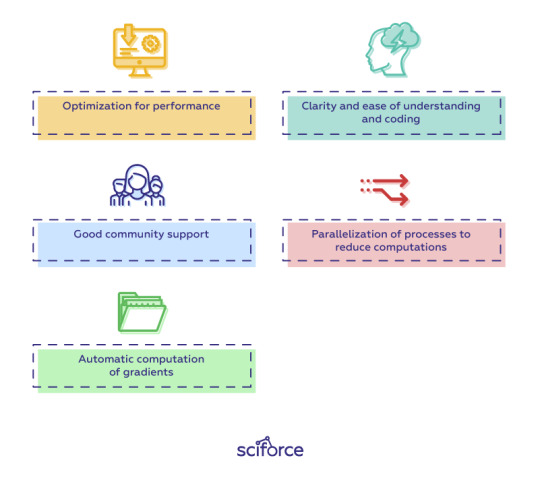

You can choose a framework based on many factors you find important: the task you are going to perform, the language of your project, or your confidence and skillset. However, there are a number of features any good deep learning framework should have:

Optimization for performance

Clarity and ease of understanding and coding

Good community support

Parallelization of processes to reduce computations

Automatic computation of gradients

Model migration between deep learning frameworks

In real life, it sometimes happens that you build and train a model using one framework, then re-train or deploy it for inference using a different framework. Enabling such interoperability makes it possible to get great ideas into production faster.

The Open Neural Network Exchange, or ONNX, is a format for deep learning models that allows developers to move models between frameworks. ONNX models are currently supported in Caffe2, Microsoft Cognitive Toolkit, MXNet, and PyTorch, and there are connectors for many other popular frameworks and libraries.

New deep learning frameworks are being created all the time, a reflection of the widespread adoption of neural networks by developers. It is always tempting to choose one of the most common ones (and we, too, have offered you those that we find the best and the most popular). However, to achieve the best results, it is important to choose what is best for your project and to stay curious and open to new frameworks.


Lloyds Stock Trading Strategy


Lloyds Banking Group is a British financial institution formed through the acquisition of HBOS by Lloyds TSB in 2009. It is one of the UK’s largest financial services organizations, with 30 million customers and 65,000 employees.

Lloyds Bank was founded in 1765 but the wider Group’s heritage extends over 320 years, dating back to the founding of the Bank of Scotland by the Parliament of Scotland in 1695. The Group’s headquarters are located at 25 Gresham Street in the City of London, while its registered office is on The Mound in Edinburgh.

It also operates office sites in Birmingham, Bristol, West Yorkshire and Glasgow. The Group also has extensive overseas operations in the US, Europe, the Middle East and Asia. Its headquarters for business in the European Union is in Berlin, Germany. The business operates under a number of distinct brands, including Lloyds Bank, Halifax, Bank of Scotland and Scottish Widows. Former Chief Executive António Horta-Osório told The Banker, “We will keep the different brands because the customers are very different in terms of attitude”. Lloyds Banking Group is listed on the London Stock Exchange and is a constituent of the FTSE 100 Index.

Best Stock Strategy

FOUNDED

Jan 19, 2009

HEADQUARTERS

London, Greater London

United Kingdom

WEBSITE

lloydsbank.nl

EMPLOYEES

59,354

Bank of America stock strategies

stock strategies for The Bank of America Corporation

Bank of America is an American multinational investment bank and financial services holding company headquartered at the Bank of America Corporate Center in Charlotte, North Carolina, with investment banking and auxiliary headquarters in Manhattan. The bank was founded in San Francisco, California. It is the second-largest banking institution in the United States, after JPMorgan Chase, and the second-largest bank in the world by market capitalization. Bank of America is one of the Big Four banking institutions of the United States. It serves approximately 10.73% of all American bank deposits, in direct competition with JPMorgan Chase, Citigroup, and Wells Fargo. Its primary financial services revolve around commercial banking, wealth management, and investment banking. One branch of its history stretches back to the U.S.-based Bank of Italy, founded by Amadeo Pietro Giannini in 1904, which provided various banking options to Italian immigrants who faced service discrimination. Originally headquartered in San Francisco, California, Giannini acquired Banca d’America e d’Italia in 1922.

stock strategies

CEO

Brian Moynihan

FOUNDED

Sep 30, 1998

WEBSITE

bankofamerica.com

EMPLOYEES

217,000

Nvidia stock strategy

Stock trading Strategy

Nvidia Corporation is an American multinational technology company incorporated in Delaware and based in Santa Clara, California.

It is a software and fabless company which designs graphics processing units (GPUs), application programming interfaces (APIs) for data science and high-performance computing, and system-on-a-chip units (SoCs) for the mobile computing and automotive markets.

Nvidia is a dominant supplier of artificial intelligence hardware and software.

Its professional line of GPUs is used in workstations for applications in such fields as architecture, engineering and construction, media and entertainment, automotive, scientific research, and manufacturing design.

In addition to GPU manufacturing, Nvidia provides an API called CUDA that allows the creation of massively parallel programs which utilize GPUs. They are deployed in supercomputing sites around the world.

More recently, it has moved into the mobile computing market, where it produces Tegra mobile processors for smartphones and tablets as well as vehicle navigation and entertainment systems.

In addition to AMD, its competitors include Intel, Qualcomm and AI-accelerator companies such as Graphcore.

CEO

Jensen Huang

FOUNDED

Apr 1993

HEADQUARTERS

Santa Clara, California

United States

WEBSITE

nvidia.com

EMPLOYEES

26,196

Stock Strategy

Nvidia’s product families include graphics, wireless communication, PC processors, and automotive hardware/software.

Some of these families:

GeForce, consumer-oriented graphics processing products

Nvidia RTX, professional visual computing graphics processing products (replacing Quadro)

NVS, multi-display business graphics solution.


NVIDIA Omniverse Blueprint Used By Rescale For AI Models

NVIDIA Omniverse Blueprint

In collaboration with industry software leaders, NVIDIA has announced Omniverse Real-Time Physics Digital Twins. This blueprint for interactive virtual wind tunnels allows for previously unheard-of computer-aided engineering exploration for Altair, Ansys, Cadence, Siemens, and other companies.

SC24: With the NVIDIA Omniverse Blueprint, which was unveiled today, industry software developers can help their computer-aided engineering (CAE) clients in the manufacturing, energy, automotive, aerospace, and other sectors create digital twins that are interactive in real time.

Software developers like Altair, Ansys, Cadence, and Siemens can use the NVIDIA Omniverse Blueprint for real-time computer-aided engineering digital twins to help their clients reduce development costs and energy consumption while accelerating time to market. The blueprint is a standard approach that combines physics-AI frameworks, NVIDIA acceleration libraries, and interactive physically based rendering to achieve 1,200x faster simulations and real-time visualization.

Omniverse was created to enable the creation of digital twins for everything. Computational fluid dynamics (CFD) simulations, a crucial initial step in digitally exploring, testing, and improving the designs of automobiles, aircraft, ships, and numerous other goods, are among the earliest uses of the blueprint. It might take weeks or even months to finish traditional engineering operations, which include physics simulation, visualization, and design optimization.

A virtual wind tunnel that enables users to simulate and visualize fluid dynamics at real-time, interactive speeds even while altering the vehicle model within the tunnel is being demonstrated by NVIDIA and Luminary Cloud at SC24, marking an industry first.

Unifying Three Pillars of NVIDIA Technology for Developers

Real-time physics solver performance and real-time visualization of large-scale data are the two essential capabilities needed to build a real-time physics digital twin.

In order to accomplish these, the Omniverse Blueprint combines the NVIDIA CUDA-X libraries to speed up the solvers, the NVIDIA Modulus physics-AI framework to train and implement models to create flow fields, and the NVIDIA Omniverse application programming interfaces for real-time RTX-enabled visualization and 3D data interoperability.

The blueprint can be fully or partially integrated into the developers’ current tools.

Ecosystem Uses NVIDIA Blueprint to Advance Simulations

In order to facilitate rapid CFD simulation, Ansys was the first to use the NVIDIA Omniverse Blueprint in their Ansys Fluent fluid simulation program.

At the Texas Advanced Computing Center, Ansys used 320 NVIDIA GH200 Grace Hopper Superchips to run Fluent. After little over six hours, a 2.5-billion-cell car simulation that would have taken over a month to execute on 2,048 x86 CPU cores was finished. This greatly increased the viability of overnight high-fidelity CFD assessments and set a new industry standard.

According to Ansys, the integration of the NVIDIA Omniverse Blueprint with its software lets clients handle more intricate and sophisticated simulations more rapidly and precisely, and the partnership is advancing engineering and design standards in a variety of industries.

Luminary Cloud is also adopting the blueprint. Using training data from its GPU-accelerated CFD solver, the company’s new simulation AI model, which is based on NVIDIA Modulus, learned the relationships between airflow fields and vehicle shape. The model runs simulations orders of magnitude faster than the solver itself, and through the use of Omniverse APIs it makes real-time aerodynamic flow simulation possible.

Siemens, SimScale, Altair, Beyond Math, Cadence, Hexagon, Neural Concept, and Trane Technologies are also investigating the possibility of incorporating the Omniverse Blueprint into their own systems.

All of the top cloud computing systems, such as Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure, are compatible with the Omniverse Blueprint. NVIDIA DGX Cloud offers it as well.

The NVIDIA Omniverse Blueprint is being used by Rescale, a cloud-based platform that helps businesses speed up scientific and engineering discoveries, to make it possible for businesses to train and implement unique AI models with a few clicks.

The Rescale platform may be used with any cloud service provider and automates the whole application-to-hardware stack. Businesses may use any simulation solver to create training data, construct, train, and implement AI models, run inference predictions, and display and optimize models.

NVIDIA Omniverse Blueprint Availability

Businesses may register for early access to the NVIDIA Omniverse Blueprint for real-time digital twins in computer-aided engineering.

Read more on govindhtech.com

#NVIDIAOmniverseBlueprint#AIModels#DigitalTwins#NVIDIA#NVIDIAOmniverse#NVIDIADGX#Blueprint#NVIDIAGH200GraceHopperSuperchips#Ecosystem#technology#technews#news#govindhtech


Amazon dim3 direction

In the not-too-distant past, ENIAC was programmed with switches and a plugboard. Stored-program computers soon followed that allowed one to write a program, load it into the computer memory, and run it. Initially, those programs had to be written in or manually translated into binary machine code, but soon assembly languages and assemblers were developed to simplify the process. Operating systems, multiprogramming, and the concept of an application binary interface (ABI) soon followed. The ABI defines the interface between an application and the operating system, libraries and other components. One aspect of an ABI is to define a calling convention, including how arguments are passed to a function and where the return value can be retrieved. For instance, the x64 ABI defines that the first six integer or pointer arguments are passed in registers (%rdi, %rsi, %rdx, %rcx, %r8, %r9), the first eight floating-point arguments (single or double precision) are passed in SSE registers (%xmm0 to %xmm7), and any remaining arguments are pushed on the stack (in right-to-left order).

The host calls a kernel using a triple chevron, <<< >>>. In the chevrons we place the number of blocks and the number of threads per block.

<<<100, 256>>> would launch 100 blocks of 256 threads each (25,600 threads in total).

<<<50, 1024>>> would launch 50 blocks of 1024 threads each (51,200 threads in total).

Dimensions

As many parallel applications involve multidimensional data, it is convenient to organize thread blocks into 1D, 2D or 3D arrays of threads. The blocks in a grid must be able to be executed independently, as communication or cooperation between blocks in a grid is not possible. When a kernel is launched, the number of threads per thread block and the number of thread blocks are specified; this, in turn, defines the total number of CUDA threads launched. For example, if the maximum x, y and z dimensions of a block are 512, 512 and 64, the block should be allocated such that x × y × z ≤ 512, which is the maximum number of threads per block. The limitation on the number of threads in a block is actually imposed because the number of registers that can be allocated across all threads is limited. Blocks can be organized into one- or two-dimensional grids (say up to 65,535 blocks) in each dimension.

dim3 is a 3D structure or vector type with three integers, x, y, and z. One can initialise as many of the three coordinates as they like:

dim3 threads(256); // Initialise with x as 256, y and z will both be 1

dim3 blocks(100, 100); // Initialise x and y, z will be 1

dim3 anotherOne(10, 54, 32); // Initialises all three values, x will be 10, y gets 54 and z will be 32

Mapping

Every thread in CUDA is associated with a particular index so that it can calculate and access memory locations in an array.

Thread index within the block (zero-based)

Each of the above is a dim3 structure and can be read in the kernel to assign particular workloads to any thread.

Here is an example indexing scheme based on the mapping defined above. The grid (on the left) has size […], that is, it has […] blocks in the x direction, […] blocks in the y direction, and […] block in the z direction.

Each block (on the right) is of size […], with […] threads along the x and y directions, and […] thread along the z direction.

At the grid level (on the left), the tuple for each block is the 3D index, e.g. […], and below the 3D index is the 1D index of each block, e.g. […].

At the block level (on the right), a similar indexing scheme applies, where the tuple is the 3D index of the thread within the block and the number in the square bracket is the 1D index of the thread within the block.

During execution, the CUDA threads are mapped to the problem in an undefined manner. Randomly completed threads and blocks are shown as green to highlight the fact that the order of execution for threads is undefined.

Each block has […] threads for a total of […] threads. Here are the steps to find the indices for a particular thread, say thread […]. This number has to be expressed in terms of the block size. With respect to 0-indexing, the 17th thread of the 13th block is thread […]. From the figure, the 13th block maps to the coordinates […] and the 17th thread maps to the coordinates […].
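The launch and indexing arithmetic in this post can be sketched in plain Python, which makes it easy to check by hand. This is only an illustration under stated assumptions: `Dim3`, `dim3`, `flatten`, `unflatten` and `global_thread_id` are hypothetical helper names rather than CUDA API, x is assumed to vary fastest, and the 4×4 grid and 8×4 block used in the usage note are made-up sizes, not the ones from the original figure.

```python
from collections import namedtuple

# Stand-in for CUDA's dim3: three integers named x, y and z.
Dim3 = namedtuple("Dim3", ["x", "y", "z"])


def dim3(x=1, y=1, z=1):
    """Mimic dim3's defaults: coordinates that are not given become 1."""
    return Dim3(x, y, z)


def total_threads(grid_dim, block_dim):
    """Threads launched by <<<grid_dim, block_dim>>>."""
    blocks = grid_dim.x * grid_dim.y * grid_dim.z
    threads_per_block = block_dim.x * block_dim.y * block_dim.z
    return blocks * threads_per_block


def flatten(idx, dim):
    """3D index -> 1D index, assuming x varies fastest, then y, then z."""
    return idx.x + idx.y * dim.x + idx.z * dim.x * dim.y


def unflatten(linear, dim):
    """1D index -> 3D index (the inverse of flatten)."""
    x = linear % dim.x
    y = (linear // dim.x) % dim.y
    z = linear // (dim.x * dim.y)
    return Dim3(x, y, z)


def global_thread_id(block_idx, thread_idx, grid_dim, block_dim):
    """Unique 1D id of a thread across the whole grid."""
    threads_per_block = block_dim.x * block_dim.y * block_dim.z
    return (flatten(block_idx, grid_dim) * threads_per_block
            + flatten(thread_idx, block_dim))
```

With a hypothetical grid of `dim3(4, 4)` and blocks of `dim3(8, 4)`, the 13th block has zero-based 1D index 12, so `unflatten(12, dim3(4, 4))` gives its 3D index, and the 17th thread (1D index 16) is found the same way with the block dimensions. `total_threads(dim3(100), dim3(256))` reproduces the 25,600 threads of the first launch example.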


Dvdfab 9 registration key


DVDFab Crack 12.0.8.3 Plus Keygen freeload 2022: Meanwhile, DVDFab Free renders the world-class technology of NVIDIA CUDA. In this way, you can work with an unlimited number of files without worrying about their sizes. Also, it enables you to compress large videos to half of their size without losing the quality. It is supported by a powerful feature of H.26, also known as High-Efficiency Video Coding. Overall, this program is one of the most powerful DVD management software.ĭVDFab Torrent Key allows you to move forward with its latest technologies. DVD Fab Crack is for professional media production.


And these modes are Full Disc, Custom, Main Movie, Merge, and Split. DVDFab 12 Crack gives you all these modes for various operations. There are six copy modes available, so you can do whatever you want flexibly. And you can restore lost data from DVDs, Blu-ray, and CDs. This software allows you to convert videos and DVDFab Crack 12.0.8.3 decrypt blurry files. In the interface, all the main functions are on the left side.ĭVDFab 12.0.8.3 Crack With Registration Code freeload 2022:ĭVDFab Crack Registration Code is an excellent professional and powerful tool to easily copy, backup, and burn DVDs. You will also get the DVDFab Lifetime Registration Key 2022 from below. Besides, you will be able to navigate the application easily. As well as, Once the application is installed and launched.


So DVDFab freeload Full Version Crack can work properly. Such as, you can merge, split, and copy DVDs.Īllowing you to transfer DVD content to blank discs while preserving the quality of your original files. Download DVDFab 12.0.8.3 Crack With Keygen and amazing software. It is very simple and fully professional. It is full of professional tools to get better results. They can easily work with them with this amazing software. DVDFab Crack 12.0.8.3 With Lifetime Latest Version freeload:ĭVDFab 12.0.8.3 Lifetime is the best software for DVD and Blu-ray professionals. You can use this program to build disk images that fit various applications and devices. It allows you to perform classified data disks without the risk of settlement. Moreover, you can protect your disk’s password very quickly.


This enables you to draw and cut a disk efficiently.ĭVDFab License Key gives a very stable and secure environment for burning and cutting data without any difficulties. You can also encrypt avenues using proper methods to secure data. You will also get a device for encrypting disk metaphors. Its characteristics execute it so well and are famous worldwide. The software has a full kit of tools for treating various types of disks. This is from the usual complete and dynamic disk method statements. Users can surely raise all kinds of DVD image stability. It also makes it very easy to copy, store, burn, and too open secure DVDs. It has very nice and attractive features.


Fix: Some minor changes and improvements.DVDFab Crack 12.0.8.3 Crack + Full Serial Key freeload HereĭVDFab Crack is a sophisticated and comprehensive tool for imitating, maintenance, and consuming DVDs. Fix: A problem that the subtitle color of the output file is not correct in certain cases. Fix: A failure problem when converting to profile “Xbox 360 (WMV)” in Ripper.


Fix: A problem that the converted mp4 (MPEG4) files are not playable in Windows Media Player. Fix: A failure problem when converting Blu-ray to in certain cases. Fix: An uncompressed problem when copying Blu-ray 3D full disc to BD25 with “Copy as 3D” option selected in certain cases. (NOTE: Please change the display method at Common Settings -> General -> Click “A” icon if you are experiencing some GUI character display problems.) Fix: A problem that the GUI character is messed up in certain cases. (The option will be used when converting interlaced source in Ripper and Video Converter.) New: Add support to set “Deinterlacing Method” at Common Settings -> Conversion -> Convert. (The option will be used when the output resolution is different from the source resolution.) New: Added support to set “Scale Method” at Common Settings -> Conversion -> Convert. New: Added support to preserve file chapters in Video Converter. New: Added support to merge multiple video files in Video Converter. New: Added video adjust feature (Brightness, Contrast and Saturation) in Ripper and Video Converter. New: Improved support for IQS (Intel Quick Sync) GPU conversion. New: Improved support for DVD copy protections.


Understanding NVIDIA CUDA

NVIDIA CUDA has revolutionized the world of computing by enabling programmers to harness the power of GPU (Graphics Processing Unit) for general-purpose computing tasks. It has changed the way we think about parallel computing, unlocking immense processing power that was previously untapped. In this article, we will delve into the world of NVIDIA CUDA, exploring its origins, benefits, applications, programming techniques, and the future it holds. 1. Introduction In the era of big data and complex computational tasks, traditional CPUs alone are no longer sufficient to meet the demands for high-performance computing. This is where NVIDIA CUDA comes into play, providing a parallel computing platform and API that allows developers to utilize the massively parallel architecture of GPUs. 2. What is NVIDIA CUDA? NVIDIA CUDA is a parallel computing platform and programming model that enables developers to utilize the power of GPUs for general-purpose computations. It provides a unified programming interface that abstracts the complexities of GPU architecture and allows programmers to focus on their computational tasks without worrying about low-level GPU details. 3. History and Evolution of NVIDIA CUDA NVIDIA CUDA was first introduced by NVIDIA Corporation in 2007 as a technology to enable general-purpose computing on GPUs. Since then, it has evolved significantly, with each iteration bringing more features, optimizations, and performance improvements. 4. Benefits of NVIDIA CUDA One of the key advantages of NVIDIA CUDA is its ability to significantly accelerate computing tasks by offloading them to GPUs. GPUs are designed to handle massive parallelism, making them ideal for data-intensive and computationally intensive tasks. This results in faster execution times and improved performance compared to traditional CPU-based computing. Additionally, NVIDIA CUDA allows for more efficient utilization of hardware resources, maximizing the overall system throughput. 
It allows programmers to exploit the full potential of GPUs, enabling them to solve complex problems that were previously impractical or infeasible. 5. Applications and Use Cases of NVIDIA CUDA NVIDIA CUDA finds applications in a wide range of domains, including scientific research, data analysis, machine learning, computer vision, simulations, graphics rendering, and more. It is particularly useful in tasks that involve massive data parallelism, such as image and video processing, deep learning, cryptography, and computational physics.The ability to perform complex calculations in real-time, enabled by NVIDIA CUDA, has accelerated breakthroughs in various fields, pushing the boundaries of what is possible. 6. How NVIDIA CUDA Works At the heart of NVIDIA CUDA is a parallel computing architecture known as CUDA Cores. These are the individual processing units within a GPU and are capable of executing thousands of lightweight threads simultaneously. By efficiently partitioning the workload among these cores, NVIDIA CUDA can achieve massive parallelism and deliver exceptional performance.To harness the power of NVIDIA CUDA, programmers write code using CUDA C/C++ or CUDA Fortran, which is then compiled into GPU executable code. This code is optimized for the GPU architecture, allowing for seamless integration of compute-intensive and graphics-intensive tasks. 7. Programming with NVIDIA CUDA Programming with NVIDIA CUDA requires an understanding of parallel computing concepts and GPU architecture. Developers need to creatively partition their algorithms into parallelizable tasks that can be executed on multiple cores simultaneously. They also need to carefully manage memory accesses and ensure efficient communication between CPU and GPU.NVIDIA provides a comprehensive set of programming tools, libraries, and frameworks, such as CUDA Toolkit and cuDNN, to assist developers in writing efficient and optimized CUDA code. 
The CUDA Toolkit and its companion libraries absorb much of the complexity of GPU programming, which shortens development cycles.

8. Popular NVIDIA CUDA Libraries and Frameworks

To simplify development further, the CUDA ecosystem includes libraries and frameworks tailored to specific domains: linear algebra (cuBLAS), signal processing (cuFFT), image and video processing (NPP), deep learning (cuDNN), and many more. These libraries provide pre-optimized functions and algorithms, so developers can reach high performance without hand-writing every kernel.

9. Performance and Efficiency of NVIDIA CUDA

Performance and efficiency are crucial factors when considering the adoption of NVIDIA CUDA. CUDA-capable GPUs have demonstrated strong results in both raw computational power and power efficiency. Their ability to handle parallel workloads and massive data sets makes them indispensable for modern high-performance computing.

Continued advances in GPU architecture, hardware, and software optimization keep NVIDIA CUDA at the forefront of parallel computing technology.

10. Future of NVIDIA CUDA

As technology continues to advance, the future of NVIDIA CUDA looks promising. With the rise of artificial intelligence and deep learning, and the need for more efficient computation, demand for GPU-accelerated computing is expected to keep growing. NVIDIA continues to refine the CUDA architecture and to provide developers with the tools they need to solve the world's most demanding computational problems.

11. Conclusion

In conclusion, NVIDIA CUDA has reshaped the computing landscape by providing a powerful platform for using GPUs in general-purpose computation.
Through its parallel computing architecture and comprehensive programming ecosystem, CUDA enables developers to unlock the potential of GPUs and build faster, more efficient, highly parallel solutions. NVIDIA CUDA is set to continue reshaping the world of high-performance computing.

FAQs

What are the benefits of using NVIDIA CUDA?
NVIDIA CUDA offers several benefits, including accelerated computing, improved performance, efficient hardware utilization, and the ability to solve complex problems that were previously infeasible.

What are some applications of NVIDIA CUDA?
NVIDIA CUDA is used in scientific research, machine learning, computer vision, simulations, graphics rendering, cryptography, and more. It is particularly useful in tasks involving massive data parallelism.

How does NVIDIA CUDA work?
NVIDIA CUDA spreads work across the GPU's CUDA cores to achieve massive parallelism. This allows thousands of lightweight threads to execute simultaneously, resulting in faster computations.

What programming languages are used with NVIDIA CUDA?
NVIDIA CUDA supports programming languages such as CUDA C/C++ and CUDA Fortran, which allow developers to write code optimized for the GPU architecture.

What are popular NVIDIA CUDA libraries and frameworks?
The CUDA ecosystem provides a wide range of libraries and frameworks tailored for various domains, including cuBLAS, cuFFT, NPP, cuDNN, and more, which help developers achieve high-performance results without writing everything from scratch.
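Some algorithms need more restructuring than a one-thread-per-element split. As a rough illustration of the partitioning the article says developers must do, the sketch below emulates in plain Python a tree-style sum within one thread block, a pattern commonly used for reductions on GPUs. It is not CUDA code, and `block_sum` is a hypothetical name chosen for this example; on a GPU each inner-loop iteration would be a separate thread, with a barrier such as `__syncthreads()` between rounds.

```python
def block_sum(values):
    """Emulate a tree reduction over one block's scratch buffer."""
    shared = list(values)

    # Pad to a power of two so that every round halves the active range.
    size = 1
    while size < len(shared):
        size *= 2
    shared += [0] * (size - len(shared))

    stride = size // 2
    while stride > 0:
        # In each round, "thread" t adds the element `stride` slots away.
        # All additions within a round are independent of one another,
        # which is what would let a GPU perform them in parallel.
        for t in range(stride):
            shared[t] += shared[t + stride]
        stride //= 2

    return shared[0]


total = block_sum(range(1, 9))
print(total)
```

Eight inputs are summed in three rounds (strides 4, 2, 1) rather than seven sequential additions, which is the kind of reshaping that lets a sum benefit from many threads.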

0 notes

Text

Adobe Premiere Pro 2021 review

#Adobe premiere pro 2021 review upgrade

#Adobe premiere pro 2021 review full

#Adobe premiere pro 2021 review pro

#Adobe premiere pro 2021 review Offline

Everyone has been talking about Adobe Premiere Pro CS6 recently. It was a hot topic at NAB 2012, certainly, and Adobe’s booth was full of people taking in demos and asking questions.

The entire Adobe Creative Suite has also had impressive upgrades, which will be good news to Adobe fans who are getting used to using a number of Adobe applications that complement and interact with each other. People are still talking about Premiere Pro CS6, which I'll focus on for this review. This new version is a very nice upgrade that makes significant improvements over the last version in several key areas.

Native Camera Support and Mercury Playback

Two of the biggest selling points of Premiere Pro have been its native camera support (and its ability to handle many of those different camera formats) and the Mercury Playback Engine. Native file support is still very strong in version 6, and the Mercury Engine has been retooled to work with some non-NVIDIA video cards that lack CUDA. As you may recall, Mercury originally harnessed only NVIDIA’s CUDA technology to offer up some unprecedented realtime playback.

Dynamic Linking is Adobe’s way of easily interchanging media and materials between different applications in the Adobe suite. Select some clips in a Premiere Pro CS6 timeline, Dynamic Link them to After Effects and you have a new clip in the PPro timeline that updates as the AE project is tweaked. If you need to get your PPro sequence to DVD, then just import that sequence right into Adobe Encore. Tight integration is also a huge selling point for the Production Premium, which includes After Effects, Photoshop Extended, Audition, SpeedGrade and much more.

Media management in CS6 is still pretty much the same as it has always been and that’s a shame. The way it works is it pretty much just looks at a directory path that points to a clip. Moving footage around or having a different drive name (or anything that alters that original path) results in media offline. Reconnection options pretty much come down to pointing the offline file to the original. That’s not a huge deal if all your media resides in one or two directories, but since PPro offers no real assistance, other than giving you a file name to look for, it can be a real pain if your media is scattered or your multicamera shoot has cams generating the same file name. I put media management as priority number one to be addressed in the next version of PPro.

Gone is the button clutter of the Premieres of yore. All the wasted space around the Source and Program monitors has been removed. The useless jog/shuttle wheel and scrubber bar have also been axed. You can now customize what buttons are there and tailor a second row of them if you're so inclined. Best of all, you can turn off the buttons altogether for an even cleaner look. And you really don’t need those buttons, since you can map them all to the keyboard.

This view isn’t the default interface but it shows the Program monitor with all the buttons and info turned off. You can see how clean and uncluttered the interface can be.

Being able to achieve detailed, dynamic trimming operations in the NLE timeline is something that Avid editors know well. Final Cut Pro (7 and earlier) editors know clicking and dragging to trim in the timeline. Premiere Pro CS6 has attempted to bridge the best of both worlds by keeping mouse-based, tool-based timeline trimming fast and easy while also letting users set up rather complex ripple and roll trims. Trimming can be achieved by realtime JKL playback, with edits adjusted all while playback continues.

Avid editors might miss slip-and-slide dynamic trimming but they’ll enjoy the instant response here when working with the mouse and trimming in the timeline. I never realized that the ever-so-brief pause that happens when trimming with the Media Composer Smart Tool (which lets it enter trim mode) can seem like an eternity when compared to Premiere Pro CS6’s “smart tool.” Premiere Pro CS6 doesn’t have a smart tool, per se, but the mouse cursor will change to different trim tools depending on where it is in the timeline, just like Avid’s Smart Tool. It’s obvious the Adobe product designers looked very closely at Avid Media Composer when designing these new trim tools. If you’re going to steal, then steal from the best. Are the overall trimming functions of Premiere Pro CS6 better than Media Composer? No.

0 notes