#Computer Vision

Explore tagged Tumblr posts

Text

Termovision HUD from The Terminator (1984). A head-up display (HUD) is a transparent display that presents data in the viewer's line of sight. Termovision refers to the HUD used by Terminators to display analyses and decision options.

#terminator#computer vision#pattern recognition#red#sci fi#hud#OCR#termovision#natural language processing

63 notes

Text

Amanda Wasielewski, Computational Formalism: Art History and Machine Learning

8 notes

Text

Flexible circuit boards manufacturing (JLCPCB, 2023)

The inkjet print head uses a fiducial camera to register an FPC panel; after alignment, it prints graphics with UV-curable epoxy in two passes.
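As a rough illustration of what fiducial registration involves (this is not the factory's actual software), the sketch below assumes two fiducial marks with known design coordinates and the positions at which a camera measured them. From those it derives the rotation, scale, and offset that map design coordinates onto the panel. All function names and coordinates are hypothetical.

```python
import cmath
import math


def fit_two_point_transform(design, measured):
    """Return (s, t) such that measured = s * design + t.

    Points are complex numbers (x + y*1j). `s` encodes rotation and
    uniform scale, `t` the translation. Two distinct fiducials fix
    this similarity transform exactly.
    """
    (a1, a2), (b1, b2) = design, measured
    if a1 == a2:
        raise ValueError("fiducials must be distinct points")
    s = (b2 - b1) / (a2 - a1)
    t = b1 - s * a1
    return s, t


def to_panel(point, s, t):
    """Map a design-space point into measured panel coordinates."""
    return s * point + t


# Hypothetical values: the panel sits rotated by 90 degrees and shifted.
design_fiducials = (complex(0, 0), complex(100, 0))
measured_fiducials = (complex(2, 1), complex(2, 101))

s, t = fit_two_point_transform(design_fiducials, measured_fiducials)
rotation_deg = math.degrees(cmath.phase(s))
scale = abs(s)
print_target = to_panel(complex(50, 10), s, t)
```

A real system would likely use more than two fiducials and a least-squares fit to average out measurement noise; two points are just the smallest case that determines the transform.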

#jlcpcb#pcb#fpc#manufacturing#manufacture#factory#electronics#fiducial camera#camera#cv#opencv#computer vision

137 notes

Text

ordered an xbox kinect to play with hehe

4 notes

Text

New vision-based system helps machines develop body awareness

- By Nuadox Crew -

Researchers at MIT have developed a new vision-based system that allows machines to model their own physical structure—essentially enabling robots to “understand” their bodies without external sensors.

Described in a paper published in Nature, the system uses a camera mounted on a robot’s hand to watch its own movements. From this visual data, a neural network learns a self-model that can be used to simulate physical interactions, navigate space, and plan movements more effectively.

This approach bypasses the need for predefined physics models or manual calibration. The result is a more adaptive, resilient robot that can function in dynamic or unfamiliar environments—potentially accelerating development in autonomous systems and soft robotics.

The team says this method could lead to robots that are easier to train and more capable of adjusting to physical wear, damage, or manufacturing variability.

Image: Qualitative results demonstrating robustness in out-of-distribution scenarios. Credit: Nature (2025). DOI: 10.1038/s41586-025-09170-0.

Header image: A 3D-printed robotic arm grips a pencil while training through random motions and a single camera, using a system called Neural Jacobian Fields (NJF). Instead of depending on sensors or manually coded models, NJF enables robots to learn how their bodies respond to motor commands through visual observation alone—paving the way for more adaptable, cost-effective, and self-aware machines. Credit: Image courtesy of the researchers.

Scientific paper: Sizhe Lester Li et al, Controlling diverse robots by inferring Jacobian fields with deep networks, Nature (2025). DOI: 10.1038/s41586-025-09170-0

Read more at MIT News


4 notes

Text

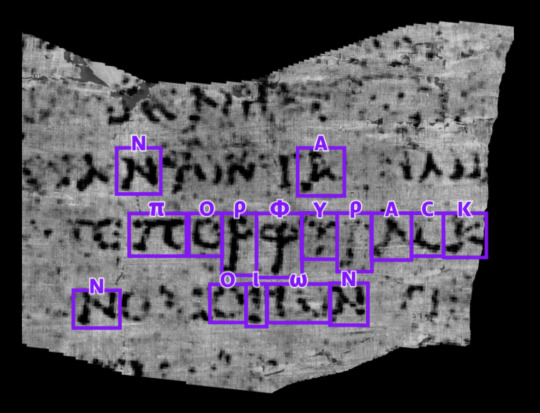

How do you read a scroll you can’t open?

With lasers!

In 79 AD, Mount Vesuvius erupts and buries the library of the Villa of the Papyri in hot mud and ash.

The scrolls are carbonized by the heat of the volcanic debris. But they are also preserved. For centuries, as virtually every ancient text exposed to the air decays and disappears, the library of the Villa of the Papyri waits underground, intact.

Then, in 1750, our story continues:

While digging a well, an Italian farm worker encounters a marble pavement. Excavations unearth beautiful statues and frescoes – and hundreds of scrolls. Carbonized and ashen, they are extremely fragile. But the temptation to open them is great; if read, they would more than double the corpus of literature we have from antiquity.

Early attempts to open the scrolls unfortunately destroy many of them. A few are painstakingly unrolled by an Italian monk over several decades, and they are found to contain philosophical texts written in Greek. More than six hundred remain unopened and unreadable.

Using X-ray tomography and computer vision, a team led by Dr. Brent Seales at the University of Kentucky reads the En-Gedi scroll without opening it. Discovered in the Dead Sea region of Israel, the scroll is found to contain text from the book of Leviticus.

This achievement shows that a carbonized scroll can be digitally unrolled and read without physically opening it.

But the Herculaneum Papyri prove more challenging: unlike the denser inks used in the En-Gedi scroll, the Herculaneum ink is carbon-based, affording no X-ray contrast against the underlying carbon-based papyrus.

To get X-rays at the highest possible resolution, the team uses a particle accelerator to scan two full scrolls and several fragments. At 4-8 µm resolution, with 16 bits of density data per voxel, they believe machine learning models can pick up subtle surface patterns in the papyrus that indicate the presence of carbon-based ink.

In early 2023, Dr. Seales’s lab achieves a breakthrough: their machine learning model successfully recognizes ink from the X-ray scans, demonstrating that it is possible to apply virtual unwrapping to the Herculaneum scrolls using the scans obtained in 2019, and even uncovering some characters in hidden layers of papyrus.

On October 12th, the first words are officially discovered in an unopened Herculaneum scroll! The computer scientists win $40,000 for their work and give hope that the $700,000 grand prize, for reading an entire scroll, is within reach!

The grand prize: first team to read a scroll by December 31, 2023

#vesuvius#Vesuvius challenge#papyrus#ancient rome#ancient greece#x ray tomography#machine learning#computer vision

62 notes

Text

2023.08.31

i have no idea what i'm doing!

learning computer vision concepts on your own is overwhelming, and it's even more overwhelming to figure out how to apply those concepts to train a model and prepare your own data from scratch.

context: the public university i go to expects the students to self-study topics like AI, machine learning, and data science, without the professors teaching anything TT

i am losing my mind

based on what i've watched on youtube and understood from articles i've read, i think i have to do the following:

- data collection (in my case, images)

- data annotation (to label the features)

- image augmentation (to increase the diversity of my dataset)

- image manipulation (to normalize the images in my dataset)

- split the training, validation, and test sets

- choose a model for object detection (YOLOv4?)

- train the model using my custom dataset

- evaluate the trained model's performance
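For the splitting step in the list above, here is a minimal sketch in plain Python. It assumes the dataset is just a list of image file names; the 70/15/15 ratio, the seed, and the `split_dataset` helper are illustrative choices, not a requirement of any particular framework.

```python
import random


def split_dataset(items, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle items reproducibly and return (train, val, test) lists."""
    if not 0 <= val_fraction + test_fraction < 1:
        raise ValueError("val and test fractions must sum to less than 1")
    shuffled = list(items)
    # A seeded generator makes the split repeatable between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


# Hypothetical file names standing in for a collected image set.
image_paths = [f"img_{i:03d}.jpg" for i in range(100)]
train, val, test = split_dataset(image_paths)
```

One common recommendation is to split before augmenting and to augment only the training set, so that altered copies of the same photo do not end up in both the training and evaluation data.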

so far, i've collected enough images to start annotation. i might use labelbox for that. i'm still not sure if i'm doing things right 🥹

if anyone has any tips for me or if you can suggest references (textbooks or articles) that i can use, that would be very helpful!

56 notes

Text

Bayesian Active Exploration: A New Frontier in Artificial Intelligence

The field of artificial intelligence has advanced rapidly in recent years, with various techniques and paradigms emerging to tackle complex problems in machine learning, computer vision, and natural language processing. Two concepts that have attracted a lot of attention are active inference and Bayesian mechanics. Although the two have largely been researched separately, their synergy has the potential to revolutionize AI by creating more efficient and accurate systems.

Traditional machine learning algorithms rely on a passive approach, where the system receives data and updates its parameters without actively influencing the data collection process. However, this approach can have limitations, especially in complex and dynamic environments. Active inference, on the other hand, allows AI systems to take an active role in selecting the most informative data points or actions to collect more relevant information. In this way, active inference allows systems to adapt to changing environments, reducing the need for labeled data and improving the efficiency of learning and decision-making.

One early milestone was the introduction of "uncertainty sampling" by Lewis and Gale in 1994, which selects the data points the model is most uncertain about in order to capture more information. Another was the 1997 analysis by Freund et al. of the "query by committee" algorithm (first proposed by Seung et al. in 1992), which uses a committee of models to determine the most informative data points to label, laying the foundation for later active learning techniques.
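To make uncertainty sampling concrete, here is a small sketch that ranks unlabeled samples by the entropy of a model's predicted class probabilities and picks the most ambiguous ones to label next. Entropy is only one of several possible uncertainty measures, and the sample names and probabilities are made up for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def most_uncertain(predictions, k=1):
    """Return the ids of the k samples with the highest predictive entropy.

    `predictions` maps a sample id to its list of class probabilities.
    """
    ranked = sorted(
        predictions,
        key=lambda sample_id: entropy(predictions[sample_id]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical model outputs for three unlabeled images (two classes).
unlabeled = {
    "img_a": [0.98, 0.02],  # confident
    "img_b": [0.55, 0.45],  # nearly a coin flip
    "img_c": [0.80, 0.20],  # somewhat unsure
}
query = most_uncertain(unlabeled, k=2)
```

Here `query` lists `img_b` first and `img_c` second, since those are the predictions closest to an even split.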

Bayesian mechanics, on the other hand, provides a probabilistic framework for reasoning and decision-making under uncertainty. By modeling complex systems using probability distributions, Bayesian mechanics enables AI systems to quantify uncertainty and ambiguity, thereby making more informed decisions when faced with incomplete or noisy data. Bayesian inference, the process of updating the prior distribution using new data, is a powerful tool for learning and decision-making.
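As a small worked example of updating a prior with new data, the sketch below uses the Beta-Bernoulli model, one of the simplest cases where the posterior has a closed form. The scenario (estimating a detector's success rate from ten trials) and all numbers are assumptions chosen for illustration.

```python
def update_beta(alpha, beta, successes, failures):
    """Posterior Beta parameters after observing Bernoulli outcomes.

    With a Beta(alpha, beta) prior on a success probability, the
    posterior is Beta(alpha + successes, beta + failures).
    """
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Uniform prior: no initial opinion about the success rate.
prior = (1.0, 1.0)

# Hypothetical observations: 7 correct detections, 3 misses.
posterior = update_beta(*prior, successes=7, failures=3)

prior_estimate = beta_mean(*prior)          # 0.5
posterior_estimate = beta_mean(*posterior)  # 8 / 12
```

The estimate moves from 0.5 toward the observed rate of 0.7 without jumping all the way there, because the prior still carries some weight after only ten observations.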

One of the first milestones in Bayesian mechanics was Bayes' theorem, formulated by Thomas Bayes and published posthumously in 1763. This theorem provided a mathematical framework for updating the probability of a hypothesis based on new evidence. Another important milestone was the introduction of Bayesian networks by Pearl in 1988, which provided a structured approach to modeling complex systems using probability distributions.
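In modern notation, the theorem is usually written as follows, where the symbols $H$ (a hypothesis) and $E$ (the observed evidence) are labels introduced here for illustration:

$$
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)}
$$

Here $P(H)$ is the prior, $P(E \mid H)$ the likelihood of the evidence under the hypothesis, and $P(H \mid E)$ the updated (posterior) probability.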

While active inference and Bayesian mechanics each have their strengths, combining them has the potential to create a new generation of AI systems that can actively collect informative data and update their probabilistic models to make more informed decisions. The combination of active inference and Bayesian mechanics has numerous applications in AI, including robotics, computer vision, and natural language processing. In robotics, for example, active inference can be used to actively explore the environment, collect more informative data, and improve navigation and decision-making. In computer vision, active inference can be used to actively select the most informative images or viewpoints, improving object recognition or scene understanding.

Timeline:

1763: Bayes' theorem

1988: Bayesian networks

1994: Uncertainty Sampling

1997: Query by Committee algorithm

2017: Deep Bayesian Active Learning

2019: Bayesian Active Exploration

2020: Active Bayesian Inference for Deep Learning

2020: Bayesian Active Learning for Computer Vision

The synergy of active inference and Bayesian mechanics is expected to play a crucial role in shaping the next generation of AI systems. Some possible future developments in this area include:

- Combining active inference and Bayesian mechanics with other AI techniques, such as reinforcement learning and transfer learning, to create more powerful and flexible AI systems.

- Applying the synergy of active inference and Bayesian mechanics to new areas, such as healthcare, finance, and education, to improve decision-making and outcomes.

- Developing new algorithms and techniques that integrate active inference and Bayesian mechanics, such as Bayesian active learning for deep learning and Bayesian active exploration for robotics.

Dr. Sanjeev Namjoshi: The Hidden Math Behind All Living Systems - On Active Inference, the Free Energy Principle, and Bayesian Mechanics (Machine Learning Street Talk, October 2024)

Saturday, October 26, 2024

#artificial intelligence#active learning#bayesian mechanics#machine learning#deep learning#robotics#computer vision#natural language processing#uncertainty quantification#decision making#probabilistic modeling#bayesian inference#active interference#ai research#intelligent systems#interview#ai assisted writing#machine art#Youtube

6 notes

Text

sub-15 ms latency in-flight CV pipeline

37 notes

Text

Tom and Robotic Mouse | @futuretiative

Tom's job security takes a hit with the arrival of a new, robotic mouse catcher.

#TomAndJerry #AIJobLoss #CartoonHumor #ClassicAnimation #RobotMouse #ArtificialIntelligence #CatAndMouse #TechTakesOver #FunnyCartoons #TomTheCat

Keywords: Tom and Jerry, cartoon, animation, cat, mouse, robot, artificial intelligence, job loss, humor, classic, Machine Learning, Deep Learning, Natural Language Processing (NLP), Generative AI, AI Chatbots, AI Ethics, Computer Vision, Robotics, AI Applications, Neural Networks

Tom was the first guy who lost his job because of AI

(and what you can do instead)

⤵

"AI took my job" isn't a story anymore.

It's reality.

But here's the plot twist:

While Tom was complaining,

others were adapting.

The math is simple:

➝ AI isn't slowing down

➝ Skills gap is widening

➝ Opportunities are multiplying

Here's the truth:

The future doesn't care about your comfort zone.

It rewards those who embrace change and innovate.

Stop viewing AI as your replacement.

Start seeing it as your rocket fuel.

Because in 2025:

➝ Learners will lead

➝ Adapters will advance

➝ Complainers will vanish

The choice?

It's always been yours.

It goes even further - now AI has been trained to create consistent.

//

Repost this ⇄

//

Follow me for daily posts on emerging tech and growth

#ai#artificialintelligence#innovation#tech#technology#aitools#machinelearning#automation#techreview#education#meme#Tom and Jerry#cartoon#animation#cat#mouse#robot#artificial intelligence#job loss#humor#classic#Machine Learning#Deep Learning#Natural Language Processing (NLP)#Generative AI#AI Chatbots#AI Ethics#Computer Vision#Robotics#AI Applications

4 notes

Text

Shaping a Sustainable Future by Advancing ESG Goals

The U3Core application from DigitalU3 redefines how organizations approach Environmental, Social, and Governance (ESG) strategies by combining real-time data, advanced analytics, and AI-powered automation.

#Smart Cities#Green Future#Sustainable operations#Technology for Sustainability#Asset Management#Digital Transformation#Computer Vision#AI for Sustainability#IoT for Sustainability#Youtube#intel

3 notes

Text

Day 19/100 days of productivity | Fri 8 Mar, 2024

Visited University of Connecticut, very pretty campus

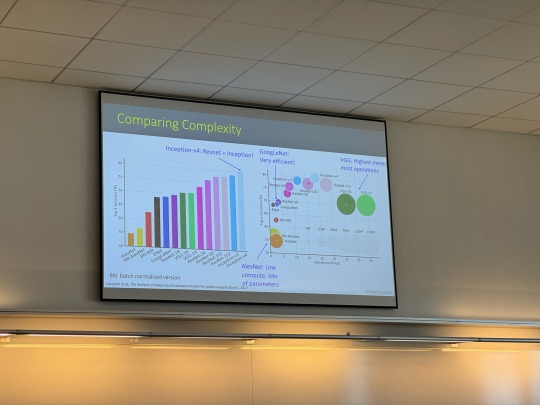

Attended a class on Computer Vision, learned about ResNet, a residual neural network architecture (originally from Microsoft Research) used for image recognition

Learned more about the grad program and networked

Journaled about my experience

Y’all, UConn is so cool! I was blown away by the gigantic stadium they have in the middle of campus (forgot to take a picture) for their basketball games, because apparently they have the best women's collegiate basketball team in the US?!? I did not know this, but they call themselves the Huskies, and the branding everywhere on campus is on point.

#100 days of productivity#grad school#computer vision#google resnet#neural network#deep learning#UConn#uconn huskies#uconn women’s basketball#university of Connecticut

11 notes

Text

Heart Cell Line-up

A combination of microscopy and computer vision produces the first 3D reconstruction of mouse heart muscle cell orientation at micrometre scale, furthering understanding of heart wall mechanics and electrical conduction.

Read the published research paper here

Image from work by Drisya Dileep and Tabish A Syed, and colleagues

Centre for Cardiovascular Biology & Disease, Institute for Stem Cell Science & Regenerative Medicine, Bengaluru, India; School of Computer Science & Centre for Intelligent Machines, McGill University, & MILA– Québec AI Institute, Montréal, QC, Canada

Video originally published under a Creative Commons Attribution 4.0 International (CC BY 4.0) licence

Published in The EMBO Journal, September 2023

You can also follow BPoD on Instagram, Twitter and Facebook

20 notes
