#Electrical Engineering & Computer Science (eecs)


J-WAFS awards over $1.3 million in fourth round of seed grant funding

Today, the Abdul Latif Jameel World Water and Food Security Lab (J-WAFS) at MIT announced the award of over $1.3 million in research funding through its seed grant program, now in its fourth year.


New transistor’s superlative properties could have broad electronics applications

New Post has been published on https://thedigitalinsider.com/new-transistors-superlative-properties-could-have-broad-electronics-applications/

In 2021, a team led by MIT physicists reported creating a new ultrathin ferroelectric material, or one where positive and negative charges separate into different layers. At the time they noted the material’s potential for applications in computer memory and much more. Now the same core team and colleagues — including two from the lab next door — have built a transistor with that material and shown that its properties are so useful that it could change the world of electronics.

Although the team’s results are based on a single transistor in the lab, “in several aspects its properties already meet or exceed industry standards” for the ferroelectric transistors produced today, says Pablo Jarillo-Herrero, the Cecil and Ida Green Professor of Physics, who led the work with professor of physics Raymond Ashoori. Both are also affiliated with the Materials Research Laboratory.

“In my lab we primarily do fundamental physics. This is one of the first, and perhaps most dramatic, examples of how very basic science has led to something that could have a major impact on applications,” Jarillo-Herrero says.

Says Ashoori, “When I think of my whole career in physics, this is the work that I think 10 to 20 years from now could change the world.”

Among the new transistor’s superlative properties:

- It can switch between positive and negative charges — essentially the ones and zeros of digital information — at very high speeds, on nanosecond time scales. (A nanosecond is a billionth of a second.)
- It is extremely tough. After 100 billion switches it still worked with no signs of degradation.
- The material behind the magic is only billionths of a meter thick, one of the thinnest of its kind in the world. That, in turn, could allow for much denser computer memory storage. It could also lead to much more energy-efficient transistors because the voltage required for switching scales with material thickness. (Ultrathin equals ultralow voltages.)

The work is reported in a recent issue of Science. The co-first authors of the paper are Kenji Yasuda, now an assistant professor at Cornell University, and Evan Zalys-Geller, now at Atom Computing. Additional authors are Xirui Wang, an MIT graduate student in physics; Daniel Bennett and Efthimios Kaxiras of Harvard University; Suraj S. Cheema, an assistant professor in MIT’s Department of Electrical Engineering and Computer Science and an affiliate of the Research Laboratory of Electronics; and Kenji Watanabe and Takashi Taniguchi of the National Institute for Materials Science in Japan.

What they did

In a ferroelectric material, positive and negative charges spontaneously head to different sides, or poles. Upon the application of an external electric field, those charges switch sides, reversing the polarization. Switching the polarization can be used to encode digital information, and that information will be nonvolatile, or stable over time. It won’t change unless an electric field is applied. For a ferroelectric to have broad application to electronics, all of this needs to happen at room temperature.

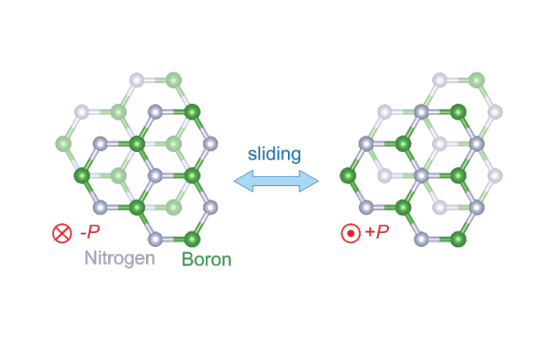

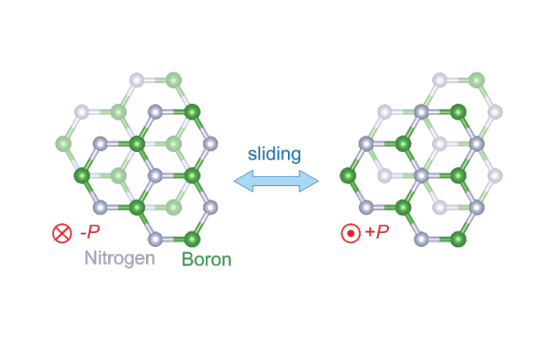

The new ferroelectric material reported in Science in 2021 is based on atomically thin sheets of boron nitride that are stacked parallel to each other, a configuration that doesn’t exist in nature. In bulk boron nitride, the individual layers of boron nitride are instead rotated by 180 degrees.

It turns out that when an electric field is applied to this parallel stacked configuration, one layer of the new boron nitride material slides over the other, slightly changing the positions of the boron and nitrogen atoms. For example, imagine that each of your hands is composed of only one layer of cells. The new phenomenon is akin to pressing your hands together then slightly shifting one above the other.

“So the miracle is that by sliding the two layers a few angstroms, you end up with radically different electronics,” says Ashoori. The diameter of an atom is about 1 angstrom.

Another miracle: “nothing wears out in the sliding,” Ashoori continues. That’s why the new transistor could be switched 100 billion times without degrading. Compare that to the memory in a flash drive made with conventional materials. “Each time you write and erase a flash memory, you get some degradation,” says Ashoori. “Over time, it wears out, which means that you have to use some very sophisticated methods for distributing where you’re reading and writing on the chip.” The new material could make those steps obsolete.
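The "sophisticated methods" Ashoori mentions for conventional flash are generally known as wear leveling: because every erase cycle wears a flash block, controllers spread writes across the chip. The sketch below is a deliberately simplified, hypothetical illustration of that general idea (the class name and the "least-erased free block" policy are assumptions, not any specific controller's algorithm). Each rewrite of a logical address is sent to the free physical block with the lowest erase count, so hammering one address wears all blocks evenly instead of burning out one.

```python
class WearLevelingAllocator:
    """Toy dynamic wear leveling (hypothetical): send each write to the
    free physical block that has been erased the fewest times."""

    def __init__(self, num_blocks: int) -> None:
        self.erase_counts = [0] * num_blocks
        self.free_blocks = set(range(num_blocks))
        self.mapping: dict[int, int] = {}  # logical address -> physical block

    def write(self, logical_address: int) -> int:
        # Flash cannot overwrite in place: the block holding the old copy
        # is erased (one unit of wear) and returned to the free pool.
        old_block = self.mapping.pop(logical_address, None)
        if old_block is not None:
            self.erase_counts[old_block] += 1
            self.free_blocks.add(old_block)
        # Pick the least-worn free block; ties are broken by block index.
        target = min(self.free_blocks, key=lambda b: (self.erase_counts[b], b))
        self.free_blocks.remove(target)
        self.mapping[logical_address] = target
        return target


if __name__ == "__main__":
    flash = WearLevelingAllocator(num_blocks=4)
    for _ in range(100):          # rewrite the same logical address 100 times
        flash.write(0)
    print(flash.erase_counts)     # [25, 25, 25, 24]: wear is spread evenly
```

A material that, as reported, shows no degradation after 100 billion switches would make this kind of bookkeeping unnecessary, which is the point of the comparison in the text.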

A collaborative effort

Yasuda, the co-first author of the current Science paper, applauds the collaborations involved in the work. Among them, “we [Jarillo-Herrero’s team] made the material and, together with Ray [Ashoori] and [co-first author] Evan [Zalys-Geller], we measured its characteristics in detail. That was very exciting.” Says Ashoori, “many of the techniques in my lab just naturally applied to work that was going on in the lab next door. It’s been a lot of fun.”

Ashoori notes that “there’s a lot of interesting physics behind this” that could be explored. For example, “if you think about the two layers sliding past each other, where does that sliding start?” In addition, says Yasuda, could the ferroelectricity be triggered with something other than electricity, like an optical pulse? And is there a fundamental limit to the number of switches the material can make?

Challenges remain. For example, the current way of producing the new ferroelectrics is difficult and not conducive to mass manufacturing. “We made a single transistor as a demonstration. If people could grow these materials on the wafer scale, we could create many, many more,” says Yasuda. He notes that different groups are already working to that end.

Concludes Ashoori, “There are a few problems. But if you solve them, this material fits in so many ways into potential future electronics. It’s very exciting.”

This work was supported by the U.S. Army Research Office, the MIT/Microsystems Technology Laboratories Samsung Semiconductor Research Fund, the U.S. National Science Foundation, the Gordon and Betty Moore Foundation, the Ramon Areces Foundation, the Basic Energy Sciences program of the U.S. Department of Energy, the Japan Society for the Promotion of Science, and the Ministry of Education, Culture, Sports, Science and Technology (MEXT) of Japan.


Toward a code-breaking quantum computer

New Post has been published on https://thedigitalinsider.com/toward-a-code-breaking-quantum-computer/

The most recent email you sent was likely encrypted using a tried-and-true method that relies on the idea that even the fastest computer would be unable to efficiently break a gigantic number into factors.

Quantum computers, on the other hand, promise to rapidly crack complex cryptographic systems that a classical computer might never be able to unravel. This promise is based on a quantum factoring algorithm proposed in 1994 by Peter Shor, who is now a professor at MIT.

But while researchers have taken great strides in the last 30 years, scientists have yet to build a quantum computer powerful enough to run Shor’s algorithm.

As some researchers work to build larger quantum computers, others have been trying to improve Shor’s algorithm so it could run on a smaller quantum circuit. About a year ago, New York University computer scientist Oded Regev proposed a major theoretical improvement. His algorithm could run faster, but the circuit would require more memory.

Building off those results, MIT researchers have proposed a best-of-both-worlds approach that combines the speed of Regev’s algorithm with the memory-efficiency of Shor’s. This new algorithm is as fast as Regev’s, requires fewer quantum building blocks known as qubits, and has a higher tolerance to quantum noise, which could make it more feasible to implement in practice.

In the long run, this new algorithm could inform the development of novel encryption methods that can withstand the code-breaking power of quantum computers.

“If large-scale quantum computers ever get built, then factoring is toast and we have to find something else to use for cryptography. But how real is this threat? Can we make quantum factoring practical? Our work could potentially bring us one step closer to a practical implementation,” says Vinod Vaikuntanathan, the Ford Foundation Professor of Engineering, a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL), and senior author of a paper describing the algorithm.

The paper’s lead author is Seyoon Ragavan, a graduate student in the MIT Department of Electrical Engineering and Computer Science. The research will be presented at the 2024 International Cryptology Conference.

Cracking cryptography

To securely transmit messages over the internet, service providers like email clients and messaging apps typically rely on RSA, an encryption scheme invented by MIT researchers Ron Rivest, Adi Shamir, and Leonard Adleman in the 1970s (hence the name “RSA”). The system is based on the idea that factoring a 2,048-bit integer (a number with 617 digits) is too hard for a computer to do in a reasonable amount of time.
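To make the "factoring is hard" assumption concrete, here is textbook RSA with the tiny primes 61 and 53, a classic teaching example. Real deployments use primes hundreds of digits long plus padding schemes that this sketch omits. It shows that anyone who can factor the public modulus n can recompute the private exponent d, and it checks the 617-digit figure for a 2,048-bit integer. Trial division stands in for the factoring step only because n is tiny; it is hopeless for real key sizes, which is exactly the gap a quantum factoring algorithm would close.

```python
def make_toy_keypair(p: int, q: int, e: int) -> tuple[int, int]:
    """Return (n, d) for textbook RSA; p and q must be distinct primes."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)          # modular inverse of e (Python 3.8+)
    return n, d


def factor_by_trial_division(n: int) -> tuple[int, int]:
    """Find a factor pair of n by brute force; feasible only for tiny n."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n has no nontrivial factors")


e = 17                                   # public exponent
n, d = make_toy_keypair(61, 53, e)       # public key (n, e), private key d
ciphertext = pow(65, e, n)               # encrypt the message m = 65

# An attacker who knows only (n, e) factors n and rebuilds d.
p, q = factor_by_trial_division(n)
d_recovered = pow(e, -1, (p - 1) * (q - 1))
print(n, ciphertext, pow(ciphertext, d_recovered, n))   # 3233 2790 65

# A 2,048-bit integer has 617 decimal digits.
print(len(str(2 ** 2048)))                              # 617
```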

That idea was flipped on its head in 1994 when Shor, then working at Bell Labs, introduced an algorithm which proved that a quantum computer could factor quickly enough to break RSA cryptography.

“That was a turning point. But in 1994, nobody knew how to build a large enough quantum computer. And we’re still pretty far from there. Some people wonder if they will ever be built,” says Vaikuntanathan.

It is estimated that a quantum computer would need about 20 million qubits to run Shor’s algorithm. Right now, the largest quantum computers have around 1,100 qubits.

A quantum computer performs computations using quantum circuits, just like a classical computer uses classical circuits. Each quantum circuit is composed of a series of operations known as quantum gates. These quantum gates utilize qubits, which are the smallest building blocks of a quantum computer, to perform calculations.

But quantum gates introduce noise, so having fewer gates would improve a machine’s performance. Researchers have been striving to enhance Shor’s algorithm so it could be run on a smaller circuit with fewer quantum gates.

That is precisely what Regev did with the circuit he proposed a year ago.

“That was big news because it was the first real improvement to Shor’s circuit from 1994,” Vaikuntanathan says.

The quantum circuit Shor proposed has a size roughly proportional to the square of the number of bits in the number being factored. That means if one were to factor a 2,048-bit integer, the circuit would need millions of gates.

Regev’s circuit requires significantly fewer quantum gates, but it needs many more qubits to provide enough memory. This presents a new problem.
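The gap between the two circuits can be sized with back-of-the-envelope arithmetic. The scaling laws used here go beyond this article and are assumptions of the sketch: roughly n^2 gates for Shor's circuit and roughly n^(3/2) gates per run for Regev's (whose algorithm also repeats its circuit about √n times), with constant and logarithmic factors dropped. Because those constants are dropped, the ratio says nothing about which circuit is actually cheaper at 2,048 bits; as Ragavan notes later, the improvements currently kick in only for much larger integers.

```python
import math


def shor_gates(n_bits: int) -> float:
    """Approximate gate count of Shor's circuit, ~n^2 (constants and logs dropped)."""
    return float(n_bits ** 2)


def regev_gates_per_run(n_bits: int) -> float:
    """Approximate gate count of one run of Regev's circuit, ~n^(3/2)."""
    return n_bits ** 1.5


n = 2048
print(f"Shor  ~ {shor_gates(n):,.0f} gates")               # about 4.2 million
print(f"Regev ~ {regev_gates_per_run(n):,.0f} gates per run")
print(f"ratio ~ {shor_gates(n) / regev_gates_per_run(n):.1f}, sqrt(n) = {math.sqrt(n):.1f}")
```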

“In a sense, some types of qubits are like apples or oranges. If you keep them around, they decay over time. You want to minimize the number of qubits you need to keep around,” explains Vaikuntanathan.

He heard Regev speak about his results at a workshop last August. At the end of his talk, Regev posed a question: Could someone improve his circuit so it needs fewer qubits? Vaikuntanathan and Ragavan took up that question.

Quantum ping-pong

To factor a very large number, a quantum circuit would need to run many times, performing operations that involve computing powers, like 2 to the power of 100.

But computing such large powers is costly and difficult to perform on a quantum computer, since quantum computers can only perform reversible operations. Squaring a number is not a reversible operation, so each time a number is squared, more quantum memory must be added to compute the next square.

The MIT researchers found a clever way to compute exponents using a series of Fibonacci numbers that requires simple multiplication, which is reversible, rather than squaring. Their method needs just two quantum memory units to compute any exponent.

“It is kind of like a ping-pong game, where we start with a number and then bounce back and forth, multiplying between two quantum memory registers,” Vaikuntanathan adds.
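The ping-pong idea can be simulated classically. In this sketch (an illustration under stated assumptions, not the authors' circuit), two registers start at x^F(0) = 1 and x^F(1) = x modulo n, where F is the Fibonacci sequence, and each step multiplies one register into the other, alternating targets. The exponents therefore advance along the Fibonacci sequence without any squaring. Each step can be undone by multiplying by the modular inverse of the untouched register, which is what makes it reversible; that inverse exists only when x shares no factor with n, an assumption the code relies on. The function names are hypothetical, and the sketch only reaches Fibonacci exponents; how the researchers build arbitrary exponents from this is not reproduced here.

```python
def pingpong_step(regs: list[int], step: int, n: int) -> None:
    """Multiply one register into the other (mod n), alternating the target each step."""
    target = step % 2
    regs[target] = (regs[target] * regs[1 - target]) % n


def undo_pingpong_step(regs: list[int], step: int, n: int) -> None:
    """Invert pingpong_step by multiplying by the inverse of the untouched register."""
    target = step % 2
    inverse = pow(regs[1 - target], -1, n)   # requires gcd(register, n) == 1
    regs[target] = (regs[target] * inverse) % n


def fibonacci_power(x: int, k: int, n: int) -> tuple[int, int]:
    """Return (x**F(k) % n, x**F(k+1) % n) using k multiplications and no squaring."""
    regs = [1, x % n]                        # x**F(0) and x**F(1)
    for step in range(k):
        pingpong_step(regs, step, n)
    newest = (k - 1) % 2 if k > 0 else 1     # index of the register written last
    return regs[1 - newest], regs[newest]


if __name__ == "__main__":
    n, x = 1_000_003, 3
    # F(10) = 55 and F(11) = 89
    print(fibonacci_power(x, 10, n) == (pow(x, 55, n), pow(x, 89, n)))

    regs = [1, x]
    for step in range(10):                   # run forward ...
        pingpong_step(regs, step, n)
    for step in reversed(range(10)):         # ... then undo every step in reverse order
        undo_pingpong_step(regs, step, n)
    print(regs == [1, x])                    # registers are back to their initial state
```

Only two registers are ever used, and no step discards information, which mirrors the memory argument in the text; the reverse loop shows why a squaring step, which has no such inverse, would instead require fresh memory.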

They also tackled the challenge of error correction. The circuits proposed by Shor and Regev require every quantum operation to be correct for their algorithm to work, Vaikuntanathan says. But error-free quantum gates would be infeasible on a real machine.

They overcame this problem using a technique to filter out corrupt results and only process the right ones.

The end result is a circuit that is significantly more memory-efficient. Plus, their error correction technique would make the algorithm more practical to deploy.

“The authors resolve the two most important bottlenecks in the earlier quantum factoring algorithm. Although still not immediately practical, their work brings quantum factoring algorithms closer to reality,” adds Regev.

In the future, the researchers hope to make their algorithm even more efficient and, someday, use it to test factoring on a real quantum circuit.

“The elephant-in-the-room question after this work is: Does it actually bring us closer to breaking RSA cryptography? That is not clear just yet; these improvements currently only kick in when the integers are much larger than 2,048 bits. Can we push this algorithm and make it more feasible than Shor’s even for 2,048-bit integers?” says Ragavan.

This work is funded by an Akamai Presidential Fellowship, the U.S. Defense Advanced Research Projects Agency, the National Science Foundation, the MIT-IBM Watson AI Lab, a Thornton Family Faculty Research Innovation Fellowship, and a Simons Investigator Award.


Elaine Liu: Charging ahead

New Post has been published on https://thedigitalinsider.com/elaine-liu-charging-ahead/

MIT senior Elaine Siyu Liu doesn’t own an electric car, or any car. But she sees the impact of electric vehicles (EVs) and renewables on the grid as two pieces of an energy puzzle she wants to solve.

The U.S. Department of Energy reports that the number of public and private EV charging ports nearly doubled in the past three years, and many more are in the works. Users expect to plug in at their convenience, charge up, and drive away. But what if the grid can’t handle it?

Electricity demand, long stagnant in the United States, has spiked due to EVs, data centers that drive artificial intelligence, and industry. Grid planners forecast an increase of 2.6 percent to 4.7 percent in electricity demand over the next five years, according to data reported to federal regulators. Everyone from EV charging-station operators to utility-system operators needs help navigating a system in flux.

That’s where Liu’s work comes in.

Liu, who is studying mathematics and electrical engineering and computer science (EECS), is interested in distribution — how to get electricity from a centralized location to consumers. “I see power systems as a good venue for theoretical research as an application tool,” she says. “I’m interested in it because I’m familiar with the optimization and probability techniques used to map this level of problem.”

Liu grew up in Beijing, then after middle school moved with her parents to Canada and enrolled in a prep school in Oakville, Ontario, 30 miles outside Toronto.

Liu stumbled upon an opportunity to take part in a regional math competition and eventually started a math club, but at the time, the school’s culture surrounding math surprised her. Being exposed to what seemed to be some students’ aversion to math, she says, “I don’t think my feelings about math changed. I think my feelings about how people feel about math changed.”

Liu brought her passion for math to MIT. The summer after her sophomore year, she took on the first of the two Undergraduate Research Opportunity Program projects she completed with electric power system expert Marija Ilić, a joint adjunct professor in EECS and a senior research scientist at the MIT Laboratory for Information and Decision Systems.

Predicting the grid

Since 2022, with the help of funding from the MIT Energy Initiative (MITEI), Liu has been working with Ilić on identifying ways in which the grid is challenged.

One factor is the addition of renewables to the energy pipeline. A gap in wind or sun might cause a lag in power generation. If this lag occurs during peak demand, it could mean trouble for a grid already taxed by extreme weather and other unforeseen events.

If you think of the grid as a network of dozens of interconnected parts, once an element in the network fails — say, a tree downs a transmission line — the electricity that used to go through that line needs to be rerouted. This may overload other lines, creating what’s known as a cascade failure.

“This all happens really quickly and has very large downstream effects,” Liu says. “Millions of people will have instant blackouts.”
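A toy model makes the rerouting mechanism concrete. The sketch below is a hypothetical simplification, not Liu's model: it assumes a set of parallel lines that share a fixed total flow equally, trips any line whose share exceeds its capacity, and repeats until the remaining lines hold or none are left. Real grids route power according to network physics (power-flow equations), so the equal-sharing rule is only there to show how a single failure can overload the survivors one round after another.

```python
def simulate_cascade(capacities: list[float], total_flow: float,
                     first_failure: int) -> tuple[list[int], list[list[int]]]:
    """Toy cascade (hypothetical): parallel lines share total_flow equally.

    Returns the lines still in service at the end and the surviving set
    after each round of tripping.
    """
    in_service = set(range(len(capacities))) - {first_failure}
    history = [sorted(in_service)]
    while in_service:
        share = total_flow / len(in_service)           # flow rerouted onto survivors
        overloaded = {i for i in in_service if share > capacities[i]}
        if not overloaded:                              # the system settles
            break
        in_service -= overloaded                        # overloaded lines trip
        history.append(sorted(in_service))
    return sorted(in_service), history


if __name__ == "__main__":
    caps = [30.0, 30.0, 40.0, 60.0]    # made-up line capacities (MW)
    # Four lines carrying 100 MW (25 MW each) are fine until line 3 fails:
    print(simulate_cascade(caps, 100.0, first_failure=3))  # ([], [[0, 1, 2], [2], []])
    # With 80 MW, the three survivors each carry about 26.7 MW and hold:
    print(simulate_cascade(caps, 80.0, first_failure=3))   # ([0, 1, 2], [[0, 1, 2]])
```

The same starting failure leads to a total blackout or to no further damage depending on how much flow the network was carrying, which is why a sudden drop in generation or a spike in demand makes cascades harder to contain.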

Even if the system can handle a single downed line, Liu notes that “the nuance is that there are now a lot of renewables, and renewables are less predictable. You can’t predict a gap in wind or sun. When such things happen, there’s suddenly not enough generation and too much demand. So the same kind of failure would happen, but on a larger and more uncontrollable scale.”

Renewables’ varying output has the added complication of causing voltage fluctuations. “We plug in our devices expecting a voltage of 110, but because of oscillations, you will never get exactly 110,” Liu says. “So even when you can deliver enough electricity, if you can’t deliver it at the specific voltage level that is required, that’s a problem.”

Liu and Ilić are building a model to predict how and when the grid might fail. Lacking access to privatized data, Liu runs her models with European industry data and test cases made available to universities. “I have a fake power grid that I run my experiments on,” she says. “You can take the same tool and run it on the real power grid.”

Liu’s model predicts cascade failures as they evolve. Supply from a wind generator, for example, might drop precipitously over the course of an hour. The model analyzes which substations and which households will be affected. “After we know we need to do something, this prediction tool can enable system operators to strategically intervene ahead of time,” Liu says.

Dictating price and power

Last year, Liu turned her attention to EVs, which provide a different kind of challenge than renewables.

In 2022, S&P Global reported that lawmakers argued that the U.S. Federal Energy Regulatory Commission’s (FERC) wholesale power rate structure was unfair for EV charging station operators.

In addition to operators paying by the kilowatt-hour, some also pay more for electricity during peak demand hours. Only a few EVs charging up during those hours could result in higher costs for the operator even if their overall energy use is low.
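The cost effect can be illustrated with a hypothetical tariff. The sketch assumes the peak-hour premium takes the form of a demand charge, one common structure in which a customer pays per kilowatt-hour of energy plus a fee per kilowatt of its highest hourly draw in the billing period. The rates and load profiles are made up. Two stations that deliver the same 300 kWh end up with very different bills depending on whether their charging is bunched into one hour.

```python
def station_bill(hourly_kw: list[float], energy_rate: float, demand_rate: float) -> float:
    """Hypothetical tariff: energy charge on total kWh plus a demand charge
    on the highest hourly average draw (kW) in the billing period."""
    energy_kwh = sum(hourly_kw)        # one hour at P kW uses P kWh
    peak_kw = max(hourly_kw)
    return energy_kwh * energy_rate + peak_kw * demand_rate


ENERGY_RATE = 0.25    # $ per kWh (made-up value)
DEMAND_RATE = 15.0    # $ per kW of peak demand (made-up value)

bunched = [0.0] * 23 + [300.0]   # a few fast chargers all running in one peak hour
spread = [12.5] * 24             # the same 300 kWh spread evenly across the day

print(station_bill(bunched, ENERGY_RATE, DEMAND_RATE))   # 4575.0
print(station_bill(spread, ENERGY_RATE, DEMAND_RATE))    # 262.5
```

Under this assumed structure, the energy portion is identical ($75) and the entire difference comes from the peak, which is how a handful of EVs can raise an operator's costs even when total energy use is low.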

Anticipating how much power EVs will need is more complex than predicting energy needed for, say, heating and cooling. Unlike buildings, EVs move around, making it difficult to predict energy consumption at any given time. “If users don’t like the price at one charging station or how long the line is, they’ll go somewhere else,” Liu says. “Where to allocate EV chargers is a problem that a lot of people are dealing with right now.”

One approach would be for FERC to dictate to EV users when and where to charge and what price they’ll pay. To Liu, this isn’t an attractive option. “No one likes to be told what to do,” she says.

Liu is looking at optimizing a market-based solution that would be acceptable to top-level energy producers — wind and solar farms and nuclear plants — all the way down to the municipal aggregators that secure electricity at competitive rates and oversee distribution to the consumer.

Analyzing the location, movement, and behavior patterns of all the EVs driven daily in Boston and other major energy hubs, she notes, could help demand aggregators determine where to place EV chargers and how much to charge consumers, akin to Walmart deciding how much to mark up wholesale eggs in different markets.

Last year, Liu presented the work at MITEI’s annual research conference. This spring, Liu and Ilić are submitting a paper on the market optimization analysis to a journal of the Institute of Electrical and Electronics Engineers.

Liu has come to terms with her early introduction to attitudes toward STEM that struck her as markedly different from those in China. She says, “I think the (prep) school had a very strong ‘math is for nerds’ vibe, especially for girls. There was a ‘why are you giving yourself more work?’ kind of mentality. But over time, I just learned to disregard that.”

After graduation, Liu, the only undergraduate researcher in Ilić’s MIT Electric Energy Systems Group, plans to apply to fellowships and graduate programs in EECS, applied math, and operations research.

Based on her analysis, Liu says that the market could effectively determine the price and availability of charging stations. Offering incentives for EV owners to charge during the day instead of at night when demand is high could help avoid grid overload and prevent extra costs to operators. “People would still retain the ability to go to a different charging station if they chose to,” she says. “I’m arguing that this works.”


Scientists use generative AI to answer complex questions in physics

New Post has been published on https://thedigitalinsider.com/scientists-use-generative-ai-to-answer-complex-questions-in-physics/

When water freezes, it transitions from a liquid phase to a solid phase, resulting in a drastic change in properties like density and volume. Phase transitions in water are so common most of us probably don’t even think about them, but phase transitions in novel materials or complex physical systems are an important area of study.

To fully understand these systems, scientists must be able to recognize phases and detect the transitions between them. But how to quantify phase changes in an unknown system is often unclear, especially when data are scarce.

Researchers from MIT and the University of Basel in Switzerland applied generative artificial intelligence models to this problem, developing a new machine-learning framework that can automatically map out phase diagrams for novel physical systems.

Their physics-informed machine-learning approach is more efficient than laborious, manual techniques that rely on theoretical expertise. Importantly, because their approach leverages generative models, it does not require the huge, labeled training datasets used in other machine-learning techniques.

Such a framework could help scientists investigate the thermodynamic properties of novel materials or detect entanglement in quantum systems, for instance. Ultimately, this technique could make it possible for scientists to discover unknown phases of matter autonomously.

“If you have a new system with fully unknown properties, how would you choose which observable quantity to study? The hope, at least with data-driven tools, is that you could scan large new systems in an automated way, and it will point you to important changes in the system. This might be a tool in the pipeline of automated scientific discovery of new, exotic properties of phases,” says Frank Schäfer, a postdoc in the Julia Lab in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and co-author of a paper on this approach.

Joining Schäfer on the paper are first author Julian Arnold, a graduate student at the University of Basel; Alan Edelman, applied mathematics professor in the Department of Mathematics and leader of the Julia Lab; and senior author Christoph Bruder, professor in the Department of Physics at the University of Basel. The research is published today in Physical Review Letters.

Detecting phase transitions using AI

While water transitioning to ice might be among the most obvious examples of a phase change, more exotic phase changes, like when a material transitions from being a normal conductor to a superconductor, are of keen interest to scientists.

These transitions can be detected by identifying an “order parameter,” a quantity that is important and expected to change. For instance, water freezes and transitions to a solid phase (ice) when its temperature drops below 0 degrees Celsius. In this case, an appropriate order parameter could be defined in terms of the proportion of water molecules that are part of the crystalline lattice versus those that remain in a disordered state.

In the past, researchers have relied on physics expertise to build phase diagrams manually, drawing on theoretical understanding to know which order parameters are important. Not only is this tedious for complex systems, and perhaps impossible for unknown systems with new behaviors, but it also introduces human bias into the solution.

More recently, researchers have begun using machine learning to build discriminative classifiers that can solve this task by learning to classify a measurement statistic as coming from a particular phase of the physical system, the same way such models classify an image as a cat or dog.

The MIT researchers demonstrated how generative models can be used to solve this classification task much more efficiently, and in a physics-informed manner.

The Julia Programming Language, a popular language for scientific computing that is also used in MIT’s introductory linear algebra classes, offers many tools that make it invaluable for constructing such generative models, Schäfer adds.

Generative models, like those that underlie ChatGPT and Dall-E, typically work by estimating the probability distribution of some data, which they use to generate new data points that fit the distribution (such as new cat images that are similar to existing cat images).

However, when simulations of a physical system using tried-and-true scientific techniques are available, researchers get a model of its probability distribution for free. This distribution describes the measurement statistics of the physical system.

A more knowledgeable model

The MIT team’s insight is that this probability distribution also defines a generative model upon which a classifier can be constructed. They plug the generative model into standard statistical formulas to directly construct a classifier instead of learning it from samples, as was done with discriminative approaches.

“This is a really nice way of incorporating something you know about your physical system deep inside your machine-learning scheme. It goes far beyond just performing feature engineering on your data samples or simple inductive biases,” Schäfer says.

This generative classifier can determine what phase the system is in given some parameter, like temperature or pressure. And because the researchers directly approximate the probability distributions underlying measurements from the physical system, the classifier has built-in knowledge of the system.

This enables their method to perform better than other machine-learning techniques. And because it can work automatically without the need for extensive training, their approach significantly enhances the computational efficiency of identifying phase transitions.

At the end of the day, similar to how one might ask ChatGPT to solve a math problem, the researchers can ask the generative classifier questions like “does this sample belong to phase I or phase II?” or “was this sample generated at high temperature or low temperature?”
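The idea of building a classifier directly from known probability distributions, rather than training one on labeled samples, can be illustrated with a toy system. The sketch below is not the researchers' implementation or one of their physical systems. It assumes N independent spins in a magnetic field h, for which statistical mechanics gives each spin a probability 1/(1 + e^(-2h/T)) of pointing along the field at temperature T (units with Boltzmann's constant set to 1). The measurement is the number of aligned spins, which is binomially distributed, and Bayes' rule turns the two known likelihoods into an answer to "was this sample generated at high temperature or low temperature?" with no training data.

```python
import math


def p_up(temperature: float, field: float = 1.0) -> float:
    """Boltzmann probability that a single spin aligns with the field (k_B = 1)."""
    return 1.0 / (1.0 + math.exp(-2.0 * field / temperature))


def log_likelihood(k_up: int, n_spins: int, temperature: float) -> float:
    """log P(k_up | T) for n_spins independent spins (binomial distribution)."""
    p = p_up(temperature)
    log_binom = (math.lgamma(n_spins + 1) - math.lgamma(k_up + 1)
                 - math.lgamma(n_spins - k_up + 1))
    return log_binom + k_up * math.log(p) + (n_spins - k_up) * math.log(1.0 - p)


def prob_low_temperature(k_up: int, n_spins: int, t_low: float, t_high: float,
                         prior_low: float = 0.5) -> float:
    """Generative classifier: Bayes' rule applied to the two known likelihoods."""
    log_low = log_likelihood(k_up, n_spins, t_low) + math.log(prior_low)
    log_high = log_likelihood(k_up, n_spins, t_high) + math.log(1.0 - prior_low)
    m = max(log_low, log_high)               # subtract the max for numerical stability
    w_low, w_high = math.exp(log_low - m), math.exp(log_high - m)
    return w_low / (w_low + w_high)


if __name__ == "__main__":
    for k in (98, 80, 60):   # number of aligned spins out of 100
        print(k, prob_low_temperature(k, 100, t_low=0.5, t_high=5.0))
```

Nothing is learned from samples here: the classifier follows entirely from the assumed distribution, which is the sense in which a generative classifier carries knowledge of the system.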

Scientists could also use this approach to solve different binary classification tasks in physical systems, possibly to detect entanglement in quantum systems (Is the state entangled or not?) or determine whether theory A or B is best suited to solve a particular problem. They could also use this approach to better understand and improve large language models like ChatGPT by identifying how certain parameters should be tuned so the chatbot gives the best outputs.

In the future, the researchers also want to study theoretical guarantees regarding how many measurements they would need to effectively detect phase transitions, and to estimate how much computation that would require.

This work was funded, in part, by the Swiss National Science Foundation, the MIT-Switzerland Lockheed Martin Seed Fund, and MIT International Science and Technology Initiatives.


Francis Fan Lee, former professor and interdisciplinary speech processing inventor, dies at 96

New Post has been published on https://thedigitalinsider.com/francis-fan-lee-former-professor-and-interdisciplinary-speech-processing-inventor-dies-at-96/

Francis Fan Lee ’50, SM ’51, PhD ’66, a former professor of MIT’s Department of Electrical Engineering and Computer Science, died on Jan. 12, some two weeks shy of his 97th birthday.

Born in 1927 in Nanjing, China, to professors Li Rumian and Zhou Huizhan, Lee learned English from his father, a faculty member in the Department of English at the University of Wuhan. Lee’s mastery of the language led to an interpreter position at the U.S. Office of Strategic Services, and eventually a passport and permission from the Chinese government to study in the United States.

Lee left China via steamship in 1948 to pursue his undergraduate education at MIT. He earned his bachelor’s and master’s degrees in electrical engineering in 1950 and 1951, respectively, before going into industry. Around this time, he became reacquainted with a friend he’d known in China, who had since emigrated; he married Teresa Jen Lee, and the two welcomed children Franklin, Elizabeth, Gloria, and Roberta over the next decade.

During his 10-year industrial career, Lee distinguished himself in roles at Ultrasonic (where he worked on instrument-type servomechanisms, circuit design, and a missile simulator), RCA Camden (where he worked on an experimental time-shared digital processor for department store point-of-sale interactions), and UNIVAC Corp. (where he held a variety of roles, culminating in a stint in Philadelphia planning next-generation computing systems).

Lee returned to MIT to earn his PhD in 1966, after which he joined the then-Department of Electrical Engineering as an associate professor with tenure, affiliated with the Research Laboratory of Electronics (RLE). There, he pursued the subject of his doctoral research: the development of a machine that would read printed text out loud — a tremendously ambitious and complex goal for the time.

Work on the “RLE reading machine,” as it was called, was inherently interdisciplinary, and Lee drew upon the influences of multiple contemporaries, including linguists Morris Halle and Noam Chomsky, and engineer Kenneth Stevens, whose quantal theory of speech production and recognition broke down human speech into discrete, and limited, combinations of sound. One of Lee’s greatest contributions to the machine, which he co-built with Donald Troxel, was a clever and efficient storage system that used root words, prefixes, and suffixes to make the real-time synthesis of half a million English words possible while requiring only about 32,000 words’ worth of storage. The solution was emblematic of Lee’s creative approach to solving complex research problems, an approach that earned him respect and admiration from his colleagues and contemporaries.
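The article does not describe the RLE storage scheme in detail, so what follows is only a toy Python sketch of the general idea, assuming a lexicon of root words plus separate prefix and suffix lists. The entries and the `decompose` helper are hypothetical, not the reading machine's actual data or code. Storing each piece once and recognizing words by decomposition lets a small table cover many more word forms than it holds.

```python
# Toy morph lexicon (hypothetical entries, not the RLE machine's data).
ROOTS = {"read", "play", "print"}
PREFIXES = {"", "re", "un"}                      # "" means "no prefix"
SUFFIXES = {"", "s", "ed", "ing", "er", "able"}  # "" means "no suffix"


def decompose(word):
    """Return (prefix, root, suffix) if word = prefix + root + suffix, else None.

    Longer affixes are tried first so that, e.g., "ing" wins over "".
    """
    for prefix in sorted(PREFIXES, key=len, reverse=True):
        if not word.startswith(prefix):
            continue
        rest = word[len(prefix):]
        for suffix in sorted(SUFFIXES, key=len, reverse=True):
            if not rest.endswith(suffix):
                continue
            root = rest[: len(rest) - len(suffix)]
            if root in ROOTS:
                return prefix, root, suffix
    return None


# Entries actually stored vs. prefix-root-suffix combinations they cover.
stored = len(ROOTS) + len(PREFIXES - {""}) + len(SUFFIXES - {""})
covered = len(PREFIXES) * len(ROOTS) * len(SUFFIXES)
print(stored, covered)  # 10 54
```

Here 10 stored entries cover 54 prefix-root-suffix combinations (for example, `decompose("rereading")` returns `("re", "read", "ing")`). The same multiplicative effect, at much larger scale, is the intuition behind covering half a million words with about 32,000 words’ worth of storage.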

In recognition of Lee’s remarkable accomplishments in both industry and building the reading machine, he was promoted to full professor in 1969, just three years after he earned his PhD. Many awards and other recognition followed, including the IEEE Fellowship in 1971 and the Audio Engineering Society Best Paper Award in 1972. Additionally, Lee occupied several important roles within the department, including over a decade spent as the undergraduate advisor. He consistently supported and advocated for more funding to go to ongoing professional education for faculty members, especially those who were no longer junior faculty, identifying ongoing development as an important, but often-overlooked, priority.

Lee’s research work continued to straddle both novel inquiry and practical, commercial application — in 1969, together with Charles Bagnaschi, he founded American Data Sciences, later changing the company’s name to Lexicon Inc. The company specialized in producing devices that expanded on Lee’s work in digital signal compression and expansion: for example, the first commercially available speech compressor and pitch shifter, which was marketed as an educational tool for blind students and those with speech processing disorders. The device, called Varispeech, allowed students to speed up recorded speech without changing its pitch — much as modern audiobook listeners speed up their chapters to absorb books at their preferred rate. Later innovations of Lee’s included the Time Compressor Model 1200, which added a film and video component to the speeding-up process, allowing television producers to subtly speed up a movie, sitcom, or advertisement to precisely fill a limited time slot without having to resort to making cuts. For this work, he received an Emmy Award for technical contributions to editing.
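The article does not say how Varispeech worked internally. One textbook way to shorten audio without changing its pitch is overlap-add (OLA) time-scale modification: short windowed frames are read from the input at a larger stride than they are written to the output, so duration shrinks while each frame's waveform, and hence its pitch, is copied unchanged. The Python sketch below shows that generic technique, not Lexicon's design; the function names, the 256-sample frame, and the Hann window are assumptions made for illustration.

```python
import math


def hann(n):
    """Periodic Hann window; copies spaced n/2 apart sum to a constant."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def ola_time_compress(x, rate, frame=256):
    """Shorten signal x by `rate` (> 1 speeds it up) with overlap-add.

    Frames are read every hop * rate input samples but written every hop
    output samples, so duration shrinks by about `rate` while each frame's
    waveform (and therefore its pitch) is copied as-is.
    """
    if len(x) < frame:
        raise ValueError("signal shorter than one frame")
    hop = frame // 2
    w = hann(frame)
    n_frames = int((len(x) - frame) / (hop * rate)) + 1
    out_len = hop * (n_frames - 1) + frame
    y = [0.0] * out_len
    wsum = [0.0] * out_len  # accumulated window weight, used to normalize
    for k in range(n_frames):
        src = int(round(k * hop * rate))  # read position in the input
        dst = k * hop                     # write position in the output
        for i in range(frame):
            y[dst + i] += x[src + i] * w[i]
            wsum[dst + i] += w[i]
    return [v / s if s > 1e-8 else 0.0 for v, s in zip(y, wsum)]


# Usage: compress one second of a 250 Hz tone (8 kHz sampling) to about half a second.
sr = 8000
tone = [math.sin(2 * math.pi * 250 * n / sr + 0.3) for n in range(sr)]
fast = ola_time_compress(tone, rate=2.0)
print(len(tone), len(fast))  # 8000 4096
```

Plain OLA can produce phase-mismatch artifacts when frame boundaries fall mid-cycle; refinements such as SOLA or WSOLA shift each frame to line up with the output before adding it.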

In the mid-to-late 1980s, Lee’s influential academic career was brought to a close by a series of deeply personal tragedies, including the 1984 murder of his daughter Roberta, and the subsequent and sudden deaths of his wife, Teresa, and his son, Franklin. Reeling from his losses, Lee ultimately decided to take an early retirement, dedicating his energy to healing. For the next two decades, he would explore the world extensively, a nomadic second chapter that included multiple road trips across the United States in a Volkswagen camper van. He eventually settled in California, where he met his second wife, Ellen, and where his lively intellectual life persisted despite diagnoses of deafness and dementia; as his family recalled, he enjoyed playing games of Scrabble until his final weeks.

He is survived by his wife Ellen Li; his daughters Elizabeth Lee (David Goya) and Gloria Lee (Matthew Lynaugh); his grandsons Alex, Benjamin, Mason, and Sam; his sister Li Zhong (Lei Tongshen); and family friend Angelique Agbigay. His family have asked that gifts honoring Francis Fan Lee’s life be directed to the Hertz Foundation.

#000#1980s#Alumni/ae#approach#audio#birthday#Books#Born#Building#career#Children#China#compression#compressor#computer#Computer Science#computing#computing systems#data#dementia#Design#development#devices#disorders#Editing#education#Electrical Engineering&Computer Science (eecs)#Electronics#energy#Engineer


Karl Berggren named faculty head of electrical engineering in EECS

New Post has been published on https://thedigitalinsider.com/karl-berggren-named-faculty-head-of-electrical-engineering-in-eecs/

Karl K. Berggren, the Joseph F. and Nancy P. Keithley Professor of Electrical Engineering at MIT, has been named the new faculty head of electrical engineering in the Department of Electrical Engineering and Computer Science (EECS), effective Jan. 15.

“Karl’s exceptional interdisciplinary research combining electrical engineering, physics, and materials science, coupled with his experience working with industry and government organizations, makes him an ideal fit to head electrical engineering. I’m confident electrical engineering will continue to grow under his leadership,” says Anantha Chandrakasan, chief innovation and strategy officer, dean of engineering, and Vannevar Bush Professor of Electrical Engineering and Computer Science.

“Karl has made an incredible impact as a researcher and educator over his two decades in EECS. Students and faculty colleagues praise his thoughtful approach to teaching, and the care with which he oversaw the teaching labs in his prior role as undergraduate lab officer for the department. He will undoubtedly be an excellent leader, bringing his passion for education and collaborative spirit to this new role,” adds Daniel Huttenlocher, dean of the MIT Schwarzman College of Computing and the Henry Ellis Warren Professor of Electrical Engineering and Computer Science.

Berggren joins the leadership of EECS, which jointly reports to the MIT Schwarzman College of Computing and the School of Engineering. The largest academic department at MIT, EECS was reorganized in 2019 as part of the formation of the college into three overlapping sub-units in electrical engineering, computer science, and artificial intelligence and decision-making. The restructuring has enabled each of the three sub-units to concentrate on faculty recruitment, mentoring, promotion, academic programs, and community building in coordination with the others.

A member of the EECS faculty since 2003, Berggren has taught a range of subjects in the department, including Digital Communications, Circuits and Electronics, Fundamentals of Programming, Applied Quantum and Statistical Physics, Introduction to EECS via Interconnected Embedded Systems, Introduction to Quantum Systems Engineering, and Introduction to Nanofabrication. Before joining EECS, Berggren worked as a staff member at MIT Lincoln Laboratory for seven years. Berggren also maintains an active consulting practice and has experience working with industrial and government organizations.

Berggren’s current research focuses on superconductive circuits, electronic devices, single-photon detectors for quantum applications, and electron-optical systems. He heads the Quantum Nanostructures and Nanofabrication Group, which develops nanofabrication technology at the few-nanometer length scale. The group uses these technologies to push the envelope of what is possible with photonic and electrical devices, focusing on superconductive and free-electron devices.

Berggren has received numerous prestigious awards and honors throughout his career. Most recently, he was named an MIT MacVicar Fellow in 2024. Berggren is also a fellow of the AAAS, IEEE, and the Kavli Foundation, and a recipient of the 2015 Paul T. Forman Team Engineering Award from the Optical Society of America (now Optica). In 2016, he received a Bose Fellowship and was also a recipient of the EECS department’s Frank Quick Innovation Fellowship and the Burgess (’52) & Elizabeth Jamieson Award for Excellence in Teaching.

Berggren succeeds Joel Voldman, who has served as the inaugural electrical engineering faculty head since January 2020.

“Joel has been in leadership roles since 2018, when he was named associate department head of EECS. I am deeply grateful to him for his invaluable contributions to EECS since that time,” says Asu Ozdaglar, MathWorks Professor and head of EECS, who also serves as the deputy dean of the MIT Schwarzman College of Computing. “I look forward to working with Karl now and continuing along the amazing path we embarked on in 2019.”

#2024#amazing#America#amp#applications#approach#artificial#Artificial Intelligence#Building#career#collaborative#college#communications#Community#computer#Computer Science#Computer science and technology#computing#consulting#devices#education#Electrical engineering and computer science (EECS)#electron#electronic#electronic devices#Electronics#engineering#Faculty#Foundation#Government


Accelerating particle size distribution estimation

New Post has been published on https://thedigitalinsider.com/accelerating-particle-size-distribution-estimation/

The pharmaceutical manufacturing industry has long struggled with the issue of monitoring the characteristics of a drying mixture, a critical step in producing medication and chemical compounds. At present, there are two noninvasive characterization approaches that are typically used: A sample is either imaged and individual particles are counted, or researchers use scattered light to estimate the particle size distribution (PSD). The former is time-intensive and leads to increased waste, making the latter a more attractive option.

In recent years, MIT engineers and researchers developed a physics- and machine-learning-based scattered-light approach that has been shown to improve manufacturing processes for pharmaceutical pills and powders, increasing efficiency and accuracy and resulting in fewer failed batches of products. A new open-access paper, “Non-invasive estimation of the powder size distribution from a single speckle image,” available in the journal Light: Science & Applications, expands on this work, introducing an even faster approach.

“Understanding the behavior of scattered light is one of the most important topics in optics,” says Qihang Zhang PhD ’23, an associate researcher at Tsinghua University. “By making progress in analyzing scattered light, we also invented a useful tool for the pharmaceutical industry. Locating the pain point and solving it by investigating the fundamental rule is the most exciting thing to the research team.”

The paper proposes a new PSD estimation method, based on pupil engineering, that reduces the number of frames needed for analysis. “Our learning-based model can estimate the powder size distribution from a single snapshot speckle image, consequently reducing the reconstruction time from 15 seconds to a mere 0.25 seconds,” the researchers explain.

“Our main contribution in this work is accelerating a particle size detection method by 60 times, with a collective optimization of both algorithm and hardware,” says Zhang. “This high-speed probe is capable of detecting the size evolution in fast dynamical systems, providing a platform to study models of processes in the pharmaceutical industry, including drying, mixing, and blending.”
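The 60-fold figure is simply the ratio of the two reconstruction times the researchers report (15 seconds before, 0.25 seconds with the single-snapshot model); the symbols $t_{\text{before}}$ and $t_{\text{after}}$ are labels introduced here for illustration:

```latex
\[
\text{speed-up} = \frac{t_{\text{before}}}{t_{\text{after}}}
               = \frac{15\ \text{s}}{0.25\ \text{s}} = 60
\]
```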

The technique offers a low-cost, noninvasive particle size probe by collecting back-scattered light from powder surfaces. The compact and portable prototype is compatible with most drying systems on the market, as long as there is an observation window. This online measurement approach may help control manufacturing processes, improving efficiency and product quality. Further, the previous lack of online monitoring prevented systematic study of dynamical models in manufacturing processes. This probe could provide a new platform for systematic research on, and modeling of, particle size evolution.

This work, a successful collaboration between physicists and engineers, emerged from the MIT-Takeda program. Collaborators are affiliated with three MIT departments: Mechanical Engineering, Chemical Engineering, and Electrical Engineering and Computer Science. George Barbastathis, professor of mechanical engineering at MIT, is the article’s senior author.

#ai#algorithm#Algorithms#amp#Analysis#approach#Article#Artificial Intelligence#author#Behavior#chemical#chemical compounds#Chemical engineering#Collaboration#Collective#computer#Computer Science#detection#efficiency#Electrical engineering and computer science (EECS)#engineering#engineers#Evolution#Fundamental#Hardware#Industry#Invention#it#learning#Light


Sam Madden named faculty head of computer science in EECS

New Post has been published on https://thedigitalinsider.com/sam-madden-named-faculty-head-of-computer-science-in-eecs/

Sam Madden, the College of Computing Distinguished Professor of Computing at MIT, has been named the new faculty head of computer science in the MIT Department of Electrical Engineering and Computer Science (EECS), effective Aug. 1.

Madden succeeds Arvind, a longtime MIT professor and prolific computer scientist, who passed away in June.

“Sam’s research leadership and commitment to excellence, along with his thoughtful and supportive approach, make him a natural fit to help lead the department going forward. In light of Arvind’s passing, we are particularly grateful that Sam has agreed to take on this role on such short notice,” says Daniel Huttenlocher, dean of the MIT Schwarzman College of Computing and the Henry Ellis Warren Professor of Electrical Engineering and Computer Science.

“Sam’s exceptional research contributions in database management systems, coupled with his deep understanding of both academia and industry, make him an excellent fit for faculty head of computer science. The EECS department and broader School of Engineering will greatly benefit from his expertise and passion,” adds Anantha Chandrakasan, chief innovation and strategy officer, dean of engineering, and Vannevar Bush Professor of Electrical Engineering and Computer Science.

Madden joins the leadership of EECS, which jointly reports to the MIT Schwarzman College of Computing and the School of Engineering. The largest academic department at MIT, EECS was reorganized in 2019 as part of the formation of the college into three overlapping sub-units in electrical engineering (EE), computer science (CS), and artificial intelligence and decision-making (AI+D). The restructuring has enabled each of the three sub-units to concentrate on faculty recruitment, mentoring, promotion, academic programs, and community building in coordination with the others.

“I am delighted that Sam has agreed to step up to take on this important leadership role. His unique combination of academic excellence and forward-looking focus will be invaluable for us,” says Asu Ozdaglar, MathWorks Professor and head of EECS, who also serves as the deputy dean of the MIT Schwarzman College of Computing. “I am confident that he will offer exceptional leadership in his new role and further strengthen EECS for our students and the MIT community.”

A member of the MIT faculty since 2004, Madden is a professor in EECS and a principal investigator in the Computer Science and Artificial Intelligence Laboratory. He was recognized as the inaugural College of Computing Distinguished Professor of Computing in 2020 for being an outstanding faculty member, leader, and innovator.

Madden’s research interest is in database systems, focusing on database analytics and query processing, ranging from clouds to sensors to modern high-performance server architectures. He co-directs the Data Systems for AI Lab initiative and the Data Systems Group, which investigate systems and algorithms for data, with a focus on new methodologies for processing data, including applying machine learning methods to data systems and engineering data systems that apply machine learning at scale.

He was named one of MIT Technology Review’s “Top 35 Under 35” in 2005 and an ACM Fellow in 2020. He is the recipient of several awards, including an NSF CAREER award, a Sloan Foundation Fellowship, the ACM SIGMOD Edgar F. Codd Innovations Award, and “test of time” awards from VLDB, SIGMOD, SIGMOBILE, and SenSys. He is also the co-founder and chief scientist at Cambridge Mobile Telematics, which develops technology to make roads safer and drivers better.

#Administration#ai#Algorithms#Analytics#approach#artificial#Artificial Intelligence#Building#career#clouds#college#Community#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#computing#data#Database#database management#Electrical Engineering&Computer Science (eecs)#engineering#Faculty#focus#Foundation#Industry#Innovation#innovations#intelligence#issues


For developing designers, there’s magic in 2.737 (Mechatronics)

New Post has been published on https://thedigitalinsider.com/for-developing-designers-theres-magic-in-2-737-mechatronics/

The field of mechatronics is multidisciplinary and interdisciplinary, occupying the intersection of mechanical systems, electronics, controls, and computer science. Mechatronics engineers work in a variety of industries — from space exploration to semiconductor manufacturing to product design — and specialize in the integrated design and development of intelligent systems. For students wanting to learn mechatronics, it might come as a surprise that one of the most powerful teaching tools available for the subject matter is simply a pen and a piece of paper.

“Students have to be able to work out things on a piece of paper, and make sketches, and write down key calculations in order to be creative,” says MIT professor of mechanical engineering David Trumper, who has been teaching class 2.737 (Mechatronics) since he joined the Institute faculty in the early 1990s. The subject is electrical and mechanical engineering combined, he says, but more than anything else, it’s design.

“If you just do electronics, but have no idea how to make the mechanical parts work, you can’t find really creative solutions. You have to see ways to solve problems across different domains,” says Trumper. “MIT students tend to have seen lots of math and lots of theory. The hands-on part is really critical to build that skill set; with hands-on experiences they’ll be more able to imagine how other things might work when they’re designing them.”

Video: A lot like magic (Department of Mechanical Engineering)

Audrey Cui ’24, now a graduate student in electrical engineering and computer science, confirms that Trumper “really emphasizes being able to do back-of-the-napkin calculations.” This simplicity is by design, and the critical thinking it promotes is essential for budding designers.

“Sitting behind a computer terminal, you’re using some existing tool in the menu system and not thinking creatively,” says Trumper. “To see the trade-offs, and get the clutter out of your thinking, it helps to work with a really simple tool — a piece of paper and, hopefully, multicolored pens to code things — you can design so much more creatively than if you’re stuck behind a screen. The ability to sketch things is so important.”

Trumper studies precision mechatronics, broadly, with a particular interest in mechatronic systems for demanding resolutions. Examples include projects that employ magnetic levitation, linear motors for driving precision manufacturing for semiconductors, and spacecraft attitude control. His work also explores lathes, milling applications, and even bioengineering platforms.

Class 2.737, which is offered every two years, is lab-based. Sketches and concepts come to life in focused experiences designed to expose students to key principles in a hands-on way and are very much informed by what Trumper has found important in his research. The two-week-long lab explorations range from controlling a motor to evaluating electronic scales to vibration isolation systems built on a speaker. One year, students constructed a working atomic force microscope.

“The touch and sense of how things actually work is really important,” Trumper says. “As a designer, you have to be able to imagine. If you think of some new configuration of a motor, you need to imagine how it would work and see it working, so you can do design iterations in your imagined space — to make that real requires that you’ve had experience with the actual thing.”

He says his late colleague, Woodie Flowers SM ’68, MEng ’71, PhD ’73, used to call it “running the movie.” Trumper explains, “once you have the image in your mind, you can more easily picture what’s going on with the problem — what’s getting hot, where’s the stress, what do I like and not like about this design. If you can do that with a piece of paper and your imagination, now you can design new things pretty creatively.”

Flowers had been the Pappalardo Professor Emeritus of Mechanical Engineering at the time of his passing in October 2019. He is remembered for pioneering approaches to education, and was instrumental in shaping MIT’s hands-on approach to engineering design education.

Class 2.737 tends to attract students who like to design and build their own things. “I want people who are heading toward being hardware geeks,” says Trumper, laughing. “And I mean that lovingly.” He says his most important objective for this class is that students learn real tools that they will find useful years from now in their own engineering research or practice.

“Being able to see how multiple pieces fit in together and create one whole working system is just really empowering to me as an aspiring engineer,” says Cui.

For fellow 2.737 student Zach Francis, the course offered foundations for the future along with a meaningful tie to the past. “This class reminded me about what I enjoy about engineering. You look at it when you’re a young kid and you’re like ‘that looks like magic!’ and then as an adult you can now make that. It’s the closest thing I’ve been to a wizard, and I like that a lot.”

#applications#approach#atomic#bioengineering#Classes and programs#code#computer#Computer Science#Computer science and technology#course#Design#designers#development#domains#driving#education#Electrical Engineering&Computer Science (eecs)#electronic#Electronics#Engineer#engineering#engineers#Faculty#flowers#Future#hands-on#Hardware#how#how to#Industries


Duane Boning named vice provost for international activities

New Post has been published on https://thedigitalinsider.com/duane-boning-named-vice-provost-for-international-activities/

Duane Boning ’84, SM ’86, PhD ’91 has been named the next MIT vice provost for international activities (VPIA), effective Sept. 1. Boning, the Clarence J. LeBel Professor in Electrical Engineering and Computer Science (EECS) at MIT, succeeds Japan Steel Industry Professor Richard Lester, who has served as VPIA since 2015.

The VPIA provides intellectual leadership, guidance, and oversight of MIT’s international policies and engagements. In this role, Boning will conduct strategic reviews of the portfolio of international activities, advise the administration on global strategic priorities, and work with academic unit leaders and researchers to develop major new global programs and projects. Boning will also help coordinate faculty and administrative reviews of certain international projects to identify and manage U.S. national security, human rights, and economic and other risks.

“Duane has an exceptional record of accomplishment and will provide the forward-looking and collaborative leadership needed to guide the Institute’s international engagements and policies,” says Provost Cynthia Barnhart. “I am thrilled to welcome him to the role.”

Boning’s ties to MIT are long and lasting: he received his SB, SM, and PhD degrees in EECS at the Institute in 1984, 1986, and 1991, respectively. His tenure includes several campus leadership positions, including as associate department head of EECS from 2004 to 2011 and associate chair of the faculty from 2019 to 2021. He is the associate director for computation and CAD for the Microsystems Technology Laboratories, where he leads the MTL Statistical Metrology Group.

In 2016, Boning became the engineering faculty co-director of the MIT Leaders for Global Operations (LGO) program. With LGO Sloan faculty co-director Retsef Levi, Boning led the formation of MIT’s Machine Intelligence for Manufacturing & Operations (MIMO), which extends LGO activities in machine intelligence through additional industrial research projects, seminars, and workshops.

His experiences as a researcher and an educator have helped him appreciate the benefits of MIT’s international collaboration efforts, Boning says. “Taking on the VPIA role is about me wanting to continue and amplify that appreciation into the future, where I think it’s going to become even more important for MIT to remain and be engaged in the world.”

Among his previous leadership roles in international collaborations, Boning served as faculty director of the MIT/Masdar Institute Cooperative Program from 2011 to 2018, and director/faculty lead of the MIT Skoltech Initiative from 2011 to 2013.

Boning says the office of the VPIA can act as a driver and initiator of international engagement, but he looks forward to being “a facilitator or convener, a coalescing point to find out where there are international opportunities and to bring people to them.”

“Finding ways to support higher MIT institutional priorities through international activities will be important,” he adds, citing as an example of these priorities the Climate Project at MIT launched by President Sally Kornbluth in 2023. “We will be puzzling out how our international components can best contribute to that and other initiatives.”

Lester will step into the role of interim vice president of climate (VPC), reporting to Kornbluth, while the search for a permanent VPC continues. Lester expects to complete his interim role and return to his MIT research activities at the end of the calendar year.

Formative experiences

Boning’s participation in the Cambridge-MIT Institute was one of his first experiences in international research and education. “It was eye-opening, seeing, ‘oh, you mean they don’t have weekly problem sets here?’” he jokes. “It showed me very different approaches to education that can also work, and how I might try some of those ideas in my own context.”

He looks back on the Cambridge experience and later work in manufacturing research with the Singapore-MIT Alliance for Research and Technology “with fondness in my heart,” he says. “It enabled me to see how international activities can benefit my own research and the research of my colleagues around me.”

His leadership in larger programs such as LGO and the MIT/Masdar program taught him the importance of creating and recruiting for MIT’s international collaborations, “by finding appropriate ways to connect with the passions of MIT faculty,” Boning says.

Boning says he will also draw on his experiences in departmental and faculty-level governance to guide him in his new role. “I recognize how broad MIT is and how widespread the different practices and cultures are in different schools and departments and programs across MIT,” he explains. “It’s given me a broader appreciation of faculty, staff, administration — everybody across all corners of the Institute and how they contribute to MIT’s mission.”

Future goals

Barnhart praised Lester, the outgoing VPIA, saying that “Richard’s body of work as vice provost for international activities is impressive and impactful. He has applied his commendable leadership skills, sharp intellect, and broad vision to transforming the ways MIT engages and collaborates with partners across the globe.”

She noted that Lester had expanded the reach of MIT’s research and education missions through numerous international collaborations, especially in Africa and Asia. As convenor and co-chair of the MIT China Strategy Group, Lester led the preparation and implementation of an influential November 2022 report on how MIT should approach its interactions and collaborations with China.

Boning cites the China report as an excellent example of how the VPIA can identify best practices and address head-on the values and complexities of international collaboration. “We have to live up to the reputation of the mission of MIT in intellectual development and freedom, while also recognizing that there are risks that need to be managed and choices that need to be made,” he says.

Boning’s field of expertise — semiconductor and photonics manufacturing and design — has become a topic of intense interest and attention in innovation and economic circles, and he intends to stay engaged fully in research as a result. As VPIA, he may have to step back from some of his teaching, however, “and that is the piece I will miss the most. I will miss any semester when I am not in the classroom with students,” he says.

“But I’m curious about what the future is going to bring — boundless new opportunities, new technologies, AI — and how MIT can best facilitate the wise application of these for the world’s problems,” Boning adds. “I’m looking forward to lots of conversations with faculty colleagues and the whole community around what MIT can be doing, what we should be doing, and how we can best do it to support MIT’s mission through international activities.”

#2022#2023#Administration#Africa#ai#amp#approach#Asia#attention#Calendar#China#circles#climate#Collaboration#collaborative#Community#computation#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#curiosity#Design#development#economic#education#Electrical Engineering&Computer Science (eecs)#engineering#eye#Faculty#Future


3 Questions: How to prove humanity online

New Post has been published on https://thedigitalinsider.com/3-questions-how-to-prove-humanity-online/

As artificial intelligence agents become more advanced, it could become increasingly difficult to distinguish between AI-powered users and real humans on the internet. In a new white paper, researchers from MIT, OpenAI, Microsoft, and other tech companies and academic institutions propose the use of personhood credentials, a verification technique that enables someone to prove they are a real human online, while preserving their privacy.

MIT News spoke with two co-authors of the paper, Nouran Soliman, an electrical engineering and computer science graduate student, and Tobin South, a graduate student in the Media Lab, about the need for such credentials, the risks associated with them, and how they could be implemented in a safe and equitable way.

Q: Why do we need personhood credentials?

Tobin South: AI capabilities are rapidly improving. While a lot of the public discourse has been about how chatbots keep getting better, sophisticated AI enables far more capabilities than just a better ChatGPT, like the ability of AI to interact online autonomously. AI could have the ability to create accounts, post content, generate fake content, pretend to be human online, or algorithmically amplify content at a massive scale. This unlocks a lot of risks. You can think of this as a “digital imposter” problem, where it is getting harder to distinguish between sophisticated AI and humans. Personhood credentials are one potential solution to that problem.

Nouran Soliman: Such advanced AI capabilities could help bad actors run large-scale attacks or spread misinformation. The internet could be filled with AIs that are resharing content from real humans to run disinformation campaigns. It is going to become harder to navigate the internet, and social media specifically. You could imagine using personhood credentials to filter out certain content and moderate content on your social media feed or determine the trust level of information you receive online.

Q: What is a personhood credential, and how can you ensure such a credential is secure?

South: Personhood credentials allow you to prove you are human without revealing anything else about your identity. These credentials let you take information from an entity like the government, who can guarantee you are human, and then through privacy technology, allow you to prove that fact without sharing any sensitive information about your identity. To get a personhood credential, you are going to have to show up in person or have a relationship with the government, like a tax ID number. There is an offline component. You are going to have to do something that only humans can do. AIs can’t turn up at the DMV, for instance. And even the most sophisticated AIs can’t fake or break cryptography. So, we combine two ideas — the security that we have through cryptography and the fact that humans still have some capabilities that AIs don’t have — to make really robust guarantees that you are human.

Soliman: But personhood credentials can be optional. Service providers can let people choose whether they want to use one or not. Right now, if people only want to interact with real, verified people online, there is no reasonable way to do it. And beyond just creating content and talking to people, at some point AI agents are also going to take actions on behalf of people. If I am going to buy something online, or negotiate a deal, then maybe in that case I want to be certain I am interacting with entities that have personhood credentials to ensure they are trustworthy.

South: Personhood credentials build on top of an infrastructure and a set of security technologies we’ve had for decades, such as the use of identifiers like an email account to sign into online services, and they can complement those existing methods.
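
One well-known privacy technology that matches South's description (an issuer that vouches for you without learning which credential it vouched for) is the blind signature. The following is a minimal sketch of a textbook RSA blind signature, assuming a deliberately tiny key and a simplified SHA-256-mod-N hash; it is not the scheme proposed in the white paper, the helper names (`hash_to_int`, `blind`, `issuer_sign`, `unblind`, `verify`) are hypothetical, and the construction would be unsafe in production.

```python
import hashlib
import math
import secrets

# Toy issuer key built from two Mersenne primes (2**31 - 1 and 2**61 - 1).
# Illustrative only: real deployments use 2048-bit+ keys and padded hashing.
P = 2**31 - 1
Q = 2**61 - 1
N = P * Q
E = 65537                          # public exponent, coprime to (P-1)*(Q-1)
D = pow(E, -1, (P - 1) * (Q - 1))  # issuer's private exponent


def hash_to_int(token: bytes) -> int:
    """Map a token to an integer mod N (simplified full-domain hash)."""
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % N


def blind(token: bytes) -> tuple[int, int]:
    """User: hide the token behind a random factor r before issuance."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:      # r must be invertible mod N
            break
    return (hash_to_int(token) * pow(r, E, N)) % N, r


def issuer_sign(blinded: int) -> int:
    """Issuer: called only after the offline human check (e.g. an in-person
    visit). It signs the blinded value and never sees the token itself."""
    return pow(blinded, D, N)


def unblind(blind_sig: int, r: int) -> int:
    """User: remove r, leaving an ordinary signature on the token."""
    return (blind_sig * pow(r, -1, N)) % N


def verify(token: bytes, sig: int) -> bool:
    """Any service: check the signature with the public key (N, E) alone."""
    return pow(sig, E, N) == hash_to_int(token)


if __name__ == "__main__":
    token = secrets.token_bytes(16)   # random bytes; no identity data inside
    blinded, r = blind(token)
    sig = unblind(issuer_sign(blinded), r)
    print(verify(token, sig))         # True
```

The issuer's signing step only ever receives `blinded`, which is masked by the random factor `r`, so when the token is later shown to a service the issuer cannot link it to the visit where the holder proved they were human. A real system would also need safeguards this sketch leaves out, such as limiting how many credentials one person can obtain.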

Q: What are some of the risks associated with personhood credentials, and how could you reduce those risks?

Soliman: One risk comes from how personhood credentials could be implemented. There is a concern about concentration of power. Let’s say one specific entity is the only issuer, or the system is designed in such a way that all the power is given to one entity. This could raise a lot of concerns for a part of the population — maybe they don’t trust that entity and don’t feel it is safe to engage with them. We need to implement personhood credentials in such a way that people trust the issuers and ensure that people’s identities remain completely isolated from their personhood credentials to preserve privacy.

South: If the only way to get a personhood credential is to physically go somewhere to prove you are human, then that could be scary if you are in a sociopolitical environment where it is difficult or dangerous to go to that physical location. That could prevent some people from having the ability to share their messages online in an unfettered way, possibly stifling free expression. That’s why it is important to have a variety of issuers of personhood credentials, and an open protocol to make sure that freedom of expression is maintained.

Soliman: Our paper is trying to encourage governments, policymakers, leaders, and researchers to invest more resources in personhood credentials. We are suggesting that researchers study different implementation directions and explore the broader impacts personhood credentials could have on the community. We need to make sure we create the right policies and rules about how personhood credentials should be implemented.

South: AI is moving very fast, certainly much faster than the speed at which governments adapt. It is time for governments and big companies to start thinking about how they can adapt their digital systems to be ready to prove that someone is human, but in a way that is privacy-preserving and safe, so we can be ready when we reach a future where AI has these advanced capabilities.

#Accounts#agents#ai#AI AGENTS#AI-powered#Algorithms#artificial#Artificial Intelligence#chatbots#chatGPT#Community#Companies#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#content#credential#credentials#cryptography#cybersecurity#deal#disinformation#Electrical Engineering & Computer Science (eecs)#email#engineering#Environment#filter#Future#Government#how

A new approach to fine-tuning quantum materials

New Post has been published on https://thedigitalinsider.com/a-new-approach-to-fine-tuning-quantum-materials/

Quantum materials (those with electronic properties that are governed by the principles of quantum mechanics, such as correlation and entanglement) can exhibit exotic behaviors under certain conditions, such as the ability to transmit electricity without resistance, known as superconductivity. However, to get the best performance out of these materials, they must be properly tuned, much as race cars are. A team led by Mingda Li, an associate professor in MIT’s Department of Nuclear Science and Engineering (NSE), has demonstrated a new, ultra-precise way to tweak the characteristics of quantum materials, using a particular class of these materials, Weyl semimetals, as an example.

The new technique is not limited to Weyl semimetals. “We can use this method for any inorganic bulk material, and for thin films as well,” maintains NSE postdoc Manasi Mandal, one of two lead authors of an open-access paper — published recently in Applied Physics Reviews — that reported on the group’s findings.

The experiment described in the paper focused on a specific type of Weyl semimetal, a tantalum phosphide (TaP) crystal. Materials can be classified by their electrical properties: metals conduct electricity readily, whereas insulators impede the free flow of electrons. A semimetal lies somewhere in between: it can conduct electricity, but it has far fewer mobile charge carriers than a metal because its conduction and valence bands only barely overlap or touch. Weyl semimetals are part of a wider category of so-called topological materials that have certain distinctive features. For instance, they possess curious electronic structures: kinks or “singularities” called Weyl nodes, which are swirling patterns around a single point (configured in either a clockwise or counterclockwise direction) that resemble hair whorls or, more generally, vortices. The presence of Weyl nodes confers unusual, as well as useful, electrical properties. And a key advantage of topological materials is that their sought-after qualities can be preserved, or “topologically protected,” even when the material is disturbed.

“That’s a nice feature to have,” explains Abhijatmedhi Chotrattanapituk, a PhD student in MIT’s Department of Electrical Engineering and Computer Science and the other lead author of the paper. “When you try to fabricate this kind of material, you don’t have to be exact. You can tolerate some imperfections, some level of uncertainty, and the material will still behave as expected.”

Like water in a dam

The “tuning” that needs to happen relates primarily to the Fermi level, which is the highest energy level occupied by electrons in a given physical system or material (strictly speaking, at absolute zero). Mandal and Chotrattanapituk suggest the following analogy: Consider a reservoir behind a dam that can be filled to varying levels. One can raise the water level by adding water or lower it by removing water. In the same way, one can adjust the Fermi level of a given material simply by adding or subtracting electrons.
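
The “highest occupied level” definition can be made concrete with a toy calculation at absolute zero: fill a list of energy levels from the bottom, two electrons per level (one per spin direction, an assumption of this sketch), and report the last level that receives an electron. The level energies and the helper name `fermi_level` are hypothetical; the point is only that adding electrons raises the result and removing them lowers it, like water in the reservoir.

```python
def fermi_level(levels: list[float], n_electrons: int, per_level: int = 2) -> float:
    """Return the highest occupied energy (eV) at T = 0.

    Levels are filled from the lowest energy upward, `per_level` electrons
    each (2 = spin up + spin down)."""
    if n_electrons < 1:
        raise ValueError("need at least one electron")
    capacity = 0
    for energy in sorted(levels):
        capacity += per_level
        if capacity >= n_electrons:
            return energy
    raise ValueError("not enough levels to hold all electrons")


levels_ev = [0.0, 0.1, 0.2, 0.3]        # hypothetical level energies in eV
print(fermi_level(levels_ev, 3))        # 0.1
print(fermi_level(levels_ev, 5))        # 0.2 (two extra electrons raise the level)
print(fermi_level(levels_ev, 1))        # 0.0 (removing electrons lowers it)
```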

To fine-tune the Fermi level of the Weyl semimetal, Li’s team did something similar, but instead of adding actual electrons, they added negative hydrogen ions (each consisting of a proton and two electrons) to the sample. The process of introducing a foreign particle, or defect, into the TaP crystal — in this case by substituting a hydrogen ion for a tantalum atom — is called doping. And when optimal doping is achieved, the Fermi level will coincide with the energy level of the Weyl nodes. That’s when the material’s desired quantum properties will be most fully realized.

For Weyl semimetals, the Fermi level is especially sensitive to doping. Unless that level is set close to the Weyl nodes, the material’s properties can diverge significantly from the ideal. This extreme sensitivity stems from the peculiar geometry of the Weyl node. If one were to think of the Fermi level as the water level in a reservoir, the reservoir in a Weyl semimetal is not shaped like a cylinder; it’s shaped like an hourglass, and the Weyl node is located at the narrowest point, or neck, of that hourglass. Because the neck is so narrow, even a small amount of water moves the level a long way there, so adding slightly too much or too little would miss the neck entirely, just as adding too many or too few electrons to the semimetal would miss the node altogether.
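
The hourglass picture has a standard quantitative counterpart. Near an idealized, isotropic Weyl node the electron energy grows linearly with momentum, E = ħ·v_F·k, so each node holds k_F³/(6π²) states per unit volume below the Fermi level, and the density of states shrinks to zero at the node (the narrow neck). The sketch below assumes every node is an identical cone at the same energy, which real TaP is not, and the Fermi velocity `V_F` and node count `N_NODES` are illustrative values rather than numbers from the paper. It compares how far the same dose of added electrons moves the Fermi level when it starts at the node versus well away from it.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
EV = 1.602176634e-19     # joules per electron volt
V_F = 3.0e5              # assumed Fermi velocity, m/s (illustrative)
N_NODES = 24             # assumed number of identical Weyl nodes (illustrative)


def weyl_density(e_f_ev: float) -> float:
    """Net electron density (m^-3) that puts the Fermi level at e_f_ev,
    measured from the nodes; negative values mean electrons were removed."""
    k_f = abs(e_f_ev) * EV / (HBAR * V_F)          # Fermi wavevector, 1/m
    return math.copysign(N_NODES * k_f**3 / (6 * math.pi**2), e_f_ev)


def weyl_fermi_level(n: float) -> float:
    """Inverse of weyl_density: Fermi level in eV relative to the nodes."""
    k_f = (6 * math.pi**2 * abs(n) / N_NODES) ** (1 / 3)
    return math.copysign(HBAR * V_F * k_f / EV, n)


dn = 1e22                                   # the same added electron dose, m^-3
near = weyl_fermi_level(dn) - weyl_fermi_level(0.0)
far = weyl_fermi_level(1e24 + dn) - weyl_fermi_level(1e24)
print(f"shift starting at the node:    {near * 1e3:.2f} meV")
print(f"shift starting 1e24 m^-3 away: {far * 1e3:.3f} meV")
```

Because the electron density grows as the cube of the Fermi energy in this model, the Fermi level moves by 1/g(E_F) per added electron per unit volume, and that quantity grows without bound as E_F approaches the node where g vanishes. This is why the dose has to be controlled finely enough to land within a few milli-electron volts.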

Fire up the hydrogen

To reach the necessary precision, the researchers used MIT’s two-stage “Tandem” ion accelerator, located at the Center for Science and Technology with Accelerators and Radiation (CSTAR), and bombarded the TaP sample with high-energy ions from the powerful 1.7-million-volt accelerator. Hydrogen ions were chosen for this purpose because they are the smallest negative ions available and thus alter the material less than a much larger dopant would. “The use of advanced accelerator techniques allows for greater precision than was ever before possible, setting the Fermi level to milli-electron volt [thousandths of an electron volt] accuracy,” says Kevin Woller, the principal research scientist who leads the CSTAR lab. “Additionally, high-energy beams allow for the doping of bulk crystals beyond the limitations of thin films only a few tens of nanometers thick.”

The procedure, in other words, involves bombarding the sample with hydrogen ions until the material has taken in enough electrons to put the Fermi level in just the right place. The question is: how long do you run the accelerator, and how do you know when enough is enough? The goal is to tune the material until the Fermi level is neither too low nor too high.

“The longer you run the machine, the higher the Fermi level gets,” Chotrattanapituk says. “The difficulty is that we cannot measure the Fermi level while the sample is in the accelerator chamber.” The normal way to handle that would be to irradiate the sample for a certain amount of time, take it out, measure it, and then put it back in if the Fermi level is not high enough. “That can be practically impossible,” Mandal adds.

To streamline the protocol, the team has devised a theoretical model that first predicts how many electrons are needed to increase the Fermi level to the preferred level and translates that to the number of negative hydrogen ions that must be added to the sample. The model can then tell them how long the sample ought to be kept in the accelerator chamber.

The good news, Chotrattanapituk says, is that their simple model agrees within a factor of 2 with trusted conventional models that are much more computationally intensive and may require access to a supercomputer. The group’s main contributions are two-fold, he notes: offering a new, accelerator-based technique for precision doping and providing a theoretical model that can guide the experiment, telling researchers how much hydrogen should be added to the sample depending on the energy of the ion beam, the exposure time, and the size and thickness of the sample.
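
The chain of reasoning the model follows (target Fermi level, then electrons, then ions, then time in the beam) can be sketched as a back-of-envelope calculation. This is not the published model: it counts electrons with an idealized linear Weyl cone (density proportional to the cube of the Fermi level measured from the nodes), assumes each implanted ion contributes `electrons_per_ion` electrons, folds the beam energy (which in practice sets how deep the ions stop) into an assumed `stopped_fraction`, and every number in the example call is hypothetical.

```python
import math

HBAR = 1.054571817e-34       # reduced Planck constant, J*s
E_CHARGE = 1.602176634e-19   # elementary charge, C (also J per eV)


def net_density(e_ev: float, v_f: float, n_nodes: int) -> float:
    """Idealized Weyl-cone electron density (m^-3) for a Fermi level e_ev
    measured from the nodes (negative below the nodes)."""
    k = abs(e_ev) * E_CHARGE / (HBAR * v_f)
    return math.copysign(n_nodes * k**3 / (6 * math.pi**2), e_ev)


def irradiation_time_s(e_start_ev: float, e_target_ev: float, volume_m3: float,
                       beam_current_a: float, v_f: float, n_nodes: int,
                       electrons_per_ion: float = 1.0,
                       stopped_fraction: float = 1.0) -> float:
    """Hypothetical estimate of beam time needed to raise the Fermi level."""
    # 1. Electrons needed to move the Fermi level from start to target.
    electrons = (net_density(e_target_ev, v_f, n_nodes)
                 - net_density(e_start_ev, v_f, n_nodes)) * volume_m3
    if electrons <= 0:
        raise ValueError("target must lie above the starting Fermi level")
    # 2. Ions that must end up in the sample.
    ions = electrons / electrons_per_ion
    # 3. Singly charged ions delivered per second, times the assumed
    #    fraction that actually stops inside the sample.
    ions_per_s = beam_current_a / E_CHARGE * stopped_fraction
    return ions / ions_per_s


# Hypothetical sample: 1 mm x 1 mm x 0.1 mm, Fermi level 20 meV below the nodes.
t = irradiation_time_s(e_start_ev=-0.020, e_target_ev=0.0,
                       volume_m3=1e-3 * 1e-3 * 1e-4, beam_current_a=10e-9,
                       v_f=3.0e5, n_nodes=24)
print(f"estimated beam time: {t / 60:.1f} min")
```

In this simplified form the time scales linearly with sample volume and inversely with beam current; the real model's dependence on ion energy and sample thickness enters through how many ions actually come to rest in the crystal.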

Fine things to come with fine-tuning

This could pave the way to a major practical advance, Mandal notes, because their approach can potentially bring the Fermi level of a sample to the requisite value in a matter of minutes — a task that, by conventional methods, has sometimes taken weeks without ever reaching the required degree of milli-eV precision.

Li believes that an accurate and convenient method for fine-tuning the Fermi level could have broad applicability. “When it comes to quantum materials, the Fermi level is practically everything,” he says. “Many of the effects and behaviors that we seek only manifest themselves when the Fermi level is at the right location.” With a well-adjusted Fermi level, for example, one could raise the critical temperature at which materials become superconducting. Thermoelectric materials, which convert temperature differences into an electrical voltage, similarly become more efficient when the Fermi level is set just right. Precision tuning might also play a helpful role in quantum computing.