# Image and Video Data Annotation

#artificial intelligence#data collection#technology#video annotation#machine learning#image and video annotation

How to Develop a Video Text-to-Speech Dataset for Deep Learning

Introduction:

In the rapidly advancing field of deep learning, video-based text-to-speech (TTS) technology plays a pivotal role in improving speech synthesis and facilitating human-computer interaction. A well-organized dataset is the cornerstone of an effective TTS model, underpinning its precision, naturalness, and flexibility. This article outlines a systematic approach to creating a high-quality video TTS dataset for deep learning.

Recognizing the Significance of a Video TTS Dataset

A video text-to-speech (TTS) dataset comprises video recordings paired with transcribed text and the corresponding speech audio. Such datasets are vital for training models that produce natural, contextually appropriate synthetic speech. These models find applications in areas including voice assistants, automated dubbing, and real-time language translation.

Establishing Dataset Specifications

Prior to initiating data collection, it is essential to delineate the dataset’s scope and specifications. Important considerations include:

Language Coverage: Choose one or more languages relevant to your application.

Speaker Diversity: Incorporate a range of speakers varying in age, gender, and accents.

Audio Quality: Ensure recordings are of high fidelity with minimal background interference.

Sentence Variability: Gather a wide array of text samples, encompassing formal, informal, and conversational speech.

Data Collection Methodology

a. Choosing Video Sources

To create a comprehensive dataset, videos can be sourced from:

Licensed datasets and public domain archives

Crowdsourced recordings featuring diverse speakers

Custom recordings conducted in a controlled setting

It is imperative to secure the necessary rights and permissions for utilizing any third-party content.

b. Audio Extraction and Preprocessing

After collecting the videos, extract the speech audio using a tool such as FFmpeg. The preprocessing steps include:

Noise Reduction: Reduce background noise to improve speech clarity.

Volume Normalization: Maintain consistent audio levels.

Segmentation: Divide lengthy recordings into smaller, sentence-level segments.
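The volume normalization and segmentation steps above can be sketched in plain Python. This is a minimal illustration, assuming the audio has already been extracted (for example with FFmpeg) and decoded into a list of mono float samples in the range [-1, 1]. It uses simple peak normalization as a stand-in for loudness normalization and a frame-energy (RMS) threshold to find pauses; the function names and default thresholds (`frame_ms`, `threshold`, `min_silence_ms`) are hypothetical and would need tuning on real recordings. Noise reduction is not shown.

```python
import math
from typing import List, Tuple


def peak_normalize(samples: List[float], target_peak: float = 0.9) -> List[float]:
    """Scale samples so the loudest absolute value equals target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silent clip: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def split_on_silence(
    samples: List[float],
    sample_rate: int,
    frame_ms: int = 20,
    threshold: float = 0.02,
    min_silence_ms: int = 200,
) -> List[Tuple[int, int]]:
    """Return (start, end) sample indices of voiced regions.

    A frame counts as silent when its RMS energy is below `threshold`; a voiced
    region ends once at least `min_silence_ms` of consecutive silence follows it.
    """
    frame_len = max(1, sample_rate * frame_ms // 1000)
    min_silence = sample_rate * min_silence_ms // 1000
    segments: List[Tuple[int, int]] = []
    start = None          # where the current voiced region began
    silence_start = None  # where the current run of silent frames began
    for i in range(0, len(samples), frame_len):
        frame = samples[i:i + frame_len]
        rms = math.sqrt(sum(s * s for s in frame) / len(frame))
        if rms >= threshold:
            if start is None:
                start = i
            silence_start = None
        elif start is not None:
            if silence_start is None:
                silence_start = i
            if i + len(frame) - silence_start >= min_silence:
                segments.append((start, silence_start))
                start, silence_start = None, None
    if start is not None:  # close a region that runs to the end of the clip
        segments.append((start, silence_start if silence_start is not None else len(samples)))
    return segments
```

For example, a one-second clip at 1 kHz made of 0.3 s of signal, 0.4 s of silence, and 0.3 s of signal splits into `[(0, 300), (700, 1000)]`, i.e. two sentence-level segments.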

Text Alignment and Transcription

For deep learning models to function optimally, it is essential that transcriptions are both precise and synchronized with the corresponding speech. The following methods can be employed:

Automatic Speech Recognition (ASR): Implement ASR systems to produce preliminary transcriptions.

Manual Verification: Enhance accuracy through a thorough review of the transcriptions by human experts.

Timestamp Alignment: Confirm that each word is accurately associated with its respective spoken timestamp.
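A simple automated check can catch many alignment errors before the manual review. The sketch below assumes word-level timings are already available (for example from a forced aligner) as word/start/end records in seconds; the `WordTiming` type and `check_alignment` function are hypothetical names, and comparing words after lowercasing and stripping punctuation is a simplifying assumption.

```python
import string
from dataclasses import dataclass
from typing import List


@dataclass
class WordTiming:
    word: str
    start: float  # seconds from the start of the clip
    end: float    # seconds from the start of the clip


def _normalize(words: List[str]) -> List[str]:
    # Compare words case-insensitively and ignore surrounding punctuation.
    return [w.strip(string.punctuation).lower() for w in words]


def check_alignment(transcript: str, timings: List[WordTiming], clip_duration: float) -> List[str]:
    """Return a list of problems; an empty list means the alignment passed."""
    problems: List[str] = []
    if _normalize(transcript.split()) != _normalize([t.word for t in timings]):
        problems.append("aligned words do not match the transcript")
    prev_end = 0.0
    for t in timings:
        # Each word must have a positive-length span inside the clip.
        if not (0.0 <= t.start < t.end <= clip_duration):
            problems.append(f"'{t.word}' has an invalid span {t.start:.2f}-{t.end:.2f}s")
        # Words should not start before the previous word has ended.
        if t.start < prev_end:
            problems.append(f"'{t.word}' overlaps the previous word")
        prev_end = max(prev_end, t.end)
    return problems
```

Clips that return a non-empty list can then be routed to the human experts for correction.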

Data Annotation and Labeling

Incorporating metadata significantly improves the dataset's functionality. Important annotations include:

Speaker Identity: Identify each speaker to support speaker-adaptive TTS models.

Emotion Tags: Specify tone and sentiment to facilitate expressive speech synthesis.

Noise Labels: Identify background noise to assist in developing noise-robust models.

Dataset Formatting and Storage

To ensure efficient model training, it is crucial to organize the dataset in a systematic manner:

Audio Files: Save speech recordings in WAV or FLAC formats.

Transcriptions: Keep aligned text files in JSON or CSV formats.

Metadata Files: Provide speaker information and timestamps for reference.
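One way to tie the audio files, transcriptions, and annotations together is a CSV manifest with one row per clip, plus a JSON file of speaker details. The sketch below is illustrative only: the column names, the `Utterance` record, and the label values are assumptions rather than a standard schema, and the functions write to any text stream so they can be exercised without touching the disk.

```python
import csv
import io
import json
from dataclasses import asdict, dataclass, fields
from typing import Dict, Iterable, TextIO


@dataclass
class Utterance:
    audio_path: str   # segmented clip, stored as WAV or FLAC
    text: str         # verified transcription
    speaker_id: str   # speaker identity label
    emotion: str      # emotion tag, e.g. "neutral" (hypothetical label set)
    noise_label: str  # background-noise label, e.g. "clean" (hypothetical)
    start: float      # clip offset within the source video, in seconds
    end: float


def write_manifest_csv(utterances: Iterable[Utterance], stream: TextIO) -> None:
    """Write a header row followed by one row per utterance."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(Utterance)])
    writer.writeheader()
    for u in utterances:
        writer.writerow(asdict(u))


def speaker_metadata_json(speakers: Dict[str, Dict[str, str]]) -> str:
    """Serialize per-speaker details (age group, gender, accent, ...) as JSON."""
    return json.dumps(speakers, indent=2, sort_keys=True)


if __name__ == "__main__":
    buf = io.StringIO()
    write_manifest_csv(
        [Utterance("clips/spk001_0001.wav", "Good morning.", "spk001", "neutral", "clean", 12.5, 14.1)],
        buf,
    )
    print(buf.getvalue())
    print(speaker_metadata_json({"spk001": {"gender": "female", "accent": "en-GB"}}))
```

To write a real file instead, pass a stream opened with `open(path, "w", newline="")`, as the `csv` module documentation recommends.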

Quality Assurance and Data Augmentation

Prior to finalizing the dataset, it is important to perform comprehensive quality assessments:

Verify Alignment: Ensure that text and speech are properly synchronized.

Assess Audio Clarity: Confirm that recordings adhere to established quality standards.

Augmentation: Implement techniques such as pitch shifting, speed variation, and noise addition to enhance model robustness.
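Two of the augmentations mentioned above, speed variation and noise addition, can be sketched on raw sample lists. This is a simplified illustration under stated assumptions: the speed change uses linear-interpolation resampling, which (like speeding up a tape) also shifts pitch, and the noise is white Gaussian noise scaled to a chosen signal-to-noise ratio. Pitch shifting that preserves duration needs a dedicated algorithm (for example a phase vocoder) and is not shown. The function names are hypothetical.

```python
import math
import random
from typing import List, Optional


def change_speed(samples: List[float], factor: float) -> List[float]:
    """Resample by linear interpolation; factor > 1 gives a faster, shorter clip.

    Because every sample is played back sooner, pitch rises along with speed.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    n_out = int(len(samples) / factor)
    out: List[float] = []
    for i in range(n_out):
        pos = i * factor  # fractional read position in the input
        left = int(pos)
        right = min(left + 1, len(samples) - 1)
        frac = pos - left
        out.append(samples[left] * (1.0 - frac) + samples[right] * frac)
    return out


def add_noise(samples: List[float], snr_db: float, rng: Optional[random.Random] = None) -> List[float]:
    """Add white Gaussian noise so that signal power / noise power matches snr_db."""
    if not samples:
        return []
    rng = rng or random.Random()
    signal_power = sum(s * s for s in samples) / len(samples)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in samples]
```

Passing a seeded `random.Random` makes the augmented copies reproducible across runs.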

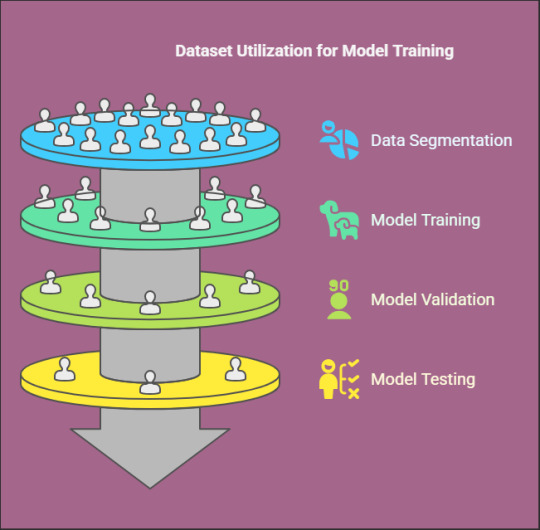

Training and Testing Your Dataset

Finally, use the dataset to train deep learning models such as Tacotron, FastSpeech, or VITS. Hold out a portion of the dataset for validation and testing to assess model performance and identify areas for improvement.
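Holding out validation and test data can be as simple as a seeded shuffle, so the split stays the same between runs. This is a minimal sketch with assumed 10% fractions and a hypothetical `split_dataset` helper; for multi-speaker corpora you may prefer to pass speaker IDs instead of utterance IDs, so that test speakers are never heard during training.

```python
import random
from typing import Dict, List, Sequence


def split_dataset(
    ids: Sequence[str], val_frac: float = 0.1, test_frac: float = 0.1, seed: int = 0
) -> Dict[str, List[str]]:
    """Shuffle ids with a fixed seed and cut them into train/val/test lists."""
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    shuffled = list(ids)
    random.Random(seed).shuffle(shuffled)  # same seed -> same split every run
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    return {
        "test": shuffled[:n_test],
        "val": shuffled[n_test:n_test + n_val],
        "train": shuffled[n_test + n_val:],
    }
```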

Conclusion

Creating a video TTS dataset is a detailed yet rewarding endeavor that lays the foundation for sophisticated speech synthesis applications. By prioritizing high-quality data collection, accurate transcription, and comprehensive annotation, you can build a dataset that significantly improves the performance of deep learning models in TTS technology.

Guide to Partnering with a Data Annotation Service Provider

Demand for data annotation has grown rapidly with the rise of AI and ML projects. Partnering with a third party is a comprehensive way to obtain accurate, efficiently produced annotated data. Check out some of the factors to consider when hiring an outsourced data annotation service company.

#data annotation#data annotation service#image annotation services#video annotation services#audio annotation services#image labeling services#data annotation solution#data annotation outsourcing

High-Quality Image Annotation: The Foundation of AI Excellence

Introduction:

In the realm of artificial intelligence and machine learning, data is paramount. Specifically, high-quality labeled image data is crucial for developing robust and dependable AI models. Whether it involves powering autonomous vehicles, enabling facial recognition, or enhancing medical imaging applications, accurate image annotation helps AI systems function with precision and efficiency. At GTS.AI, we are dedicated to providing exceptional image annotation services customized to the specific requirements of your project.

The Significance of High-Quality Image Annotation

Image annotation, or labeling, involves identifying and tagging components within an image to generate structured data. This annotated data serves as the cornerstone for training AI algorithms. But why is quality so critical?

Precision in Model Outcomes: Inaccurately labeled data results in unreliable AI models, leading to erroneous predictions and subpar performance.

Accelerated Training Processes: High-quality annotations facilitate quicker and more effective training, conserving both time and resources.

Enhanced Generalization: Well-annotated datasets enable models to generalize effectively, performing well even on unseen data.

Primary Applications of Image Annotation

Image annotation has become essential across various sectors:

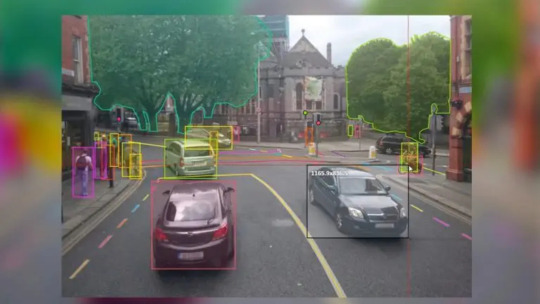

Autonomous Vehicles: Training vehicles to identify objects, traffic signs, and lane markings.

Healthcare: Assisting diagnostic tools with annotated medical images for disease identification.

Retail: Improving inventory management and personalized shopping experiences through object detection.

Security: Supporting facial and object recognition systems for surveillance and access control.

What Distinguishes GTS.AI?

At GTS.AI, we recognize that each project has distinct needs. Here’s how we guarantee superior image annotation services:

Cutting-Edge Annotation Techniques

We utilize a range of labeling methods, including:

Bounding Boxes: Optimal for object detection tasks.

Semantic Segmentation: Provides pixel-level accuracy.
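To make the bounding-box technique concrete, the sketch below stores a box in pixel corner coordinates and converts it to the normalized center format (`x_center y_center width height`, each divided by the image size) used by YOLO-style label files. The `Box` type and `to_normalized_center` helper are hypothetical; a real project would follow whatever format its training framework expects, and semantic segmentation would instead store a per-pixel class mask.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates (corner format)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def to_normalized_center(box: Box, img_w: int, img_h: int) -> Tuple[str, float, float, float, float]:
    """Return (label, x_center, y_center, width, height), coordinates scaled to [0, 1]."""
    # Reject empty boxes and boxes that spill outside the image.
    if not (0 <= box.x_min < box.x_max <= img_w and 0 <= box.y_min < box.y_max <= img_h):
        raise ValueError(f"{box} is empty or extends outside a {img_w}x{img_h} image")
    w = box.x_max - box.x_min
    h = box.y_max - box.y_min
    return (
        box.label,
        (box.x_min + w / 2) / img_w,
        (box.y_min + h / 2) / img_h,
        w / img_w,
        h / img_h,
    )
```

For a 400x500 image, `Box("car", 100, 50, 300, 250)` becomes `("car", 0.5, 0.3, 0.5, 0.4)`.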

Scalability and Efficiency

Our international team, equipped with state-of-the-art tools, enables us to manage projects of any magnitude, providing timely results without sacrificing quality.

Comprehensive Quality Control

Each labeled dataset is subjected to several verification stages to ensure both accuracy and consistency.
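One common way to check consistency at a verification stage is to have two annotators label the same images independently and compare their boxes with intersection over union (IoU). The sketch below assumes boxes are `(x_min, y_min, x_max, y_max)` tuples keyed by a shared object ID, and the 0.7 agreement threshold is an illustrative value only; the function names are hypothetical.

```python
from typing import Dict, List, Tuple

BoxT = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: BoxT, b: BoxT) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def flag_disagreements(
    annotator_a: Dict[str, BoxT], annotator_b: Dict[str, BoxT], threshold: float = 0.7
) -> List[str]:
    """Return object IDs needing adjudication: missing from one annotator or IoU below threshold."""
    flagged = []
    for obj_id in sorted(set(annotator_a) | set(annotator_b)):
        if obj_id not in annotator_a or obj_id not in annotator_b:
            flagged.append(obj_id)  # only one annotator labeled this object
        elif iou(annotator_a[obj_id], annotator_b[obj_id]) < threshold:
            flagged.append(obj_id)  # both labeled it, but the boxes disagree
    return flagged
```

Flagged objects would then go to a senior reviewer, while agreeing pairs pass to the next stage.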

Tailored Solutions

We customize our services to meet your unique requirements, whether related to annotation formats, workflow integration, or types of datasets.

Data Compliance and Security

The security of your data is our utmost concern. We comply with stringent data privacy regulations to ensure the confidentiality of your datasets.

Real-World Success Examples

Our image labeling services have enabled clients to achieve outstanding outcomes:

Healthcare Innovator: Supplied annotated X-ray images for training an AI model aimed at early disease detection.

Retail Leader: Labeled product images for a visual search application, enhancing customer engagement.

Autonomous Driving Pioneer: Provided high-quality annotations of road scenes, expediting AI training schedules.

Collaborate with GTS.AI for Exceptional Image Labeling

High-quality image labeling transcends mere service; it represents a strategic investment in the success of your AI projects. At Globose Technology Solutions, we merge expertise, technology, and precision to deliver unmatched results.

Are you prepared to advance your AI initiatives? Visit our image and video annotation services page to discover more and initiate your project today!

How Data Annotation Services are Shaping the Future of Autonomous Vehicles

The future of transportation is rapidly evolving, with autonomous vehicles at the forefront of this transformation. These self-driving cars, trucks, and buses promise to revolutionize the way we travel, making our roads safer and more efficient. However, the development of autonomous vehicles relies heavily on Data Annotation Services, which play a crucial role in training the complex algorithms that power these machines.

The Role of Data Annotation in Autonomous Vehicle Development

Autonomous vehicles interpret the environment around them using a variety of sensors, cameras, and other data-gathering tools. These sensors collect vast amounts of raw data, but for training purposes that data is of little use without proper annotation. Data Annotation Services provide the essential groundwork by labeling and categorizing this data so that machine learning models can understand and learn from it.

The accuracy and reliability of annotation services directly impact the effectiveness of autonomous vehicles. For instance, annotated data is used to train models to recognize pedestrians, other vehicles, traffic signs, and road markings. The more precise and comprehensive the annotations, the better the vehicle can make informed decisions in real-time scenarios.

Enhancing Safety through Precise Data Annotation

One of the primary goals of autonomous vehicles is to enhance road safety. To achieve this, the vehicles must be capable of making split-second decisions in complex environments. Data Annotation Services are critical in this context, as they ensure that the data fed into machine learning models is both accurate and relevant.

High-quality annotation services enable the creation of models that can identify potential hazards, such as unexpected obstacles or erratic driving behavior by other vehicles. By learning from annotated data, autonomous systems can predict and respond to these hazards more effectively, reducing the likelihood of accidents.

Moreover, annotated data allows for the continuous improvement of autonomous systems. As vehicles encounter new situations on the road, the data collected can be annotated and fed back into the learning models. This iterative process helps the systems to evolve and adapt, ultimately leading to safer and more reliable autonomous vehicles.

The Economic Impact of Data Annotation on the Automotive Industry

The economic implications of Data Annotation Services in the automotive industry are significant. As the demand for autonomous vehicles grows, so does the need for annotated data. This has led to the emergence of a robust market for annotation services, with specialized companies offering expertise in this niche area.

Automakers and tech companies developing autonomous vehicles invest heavily in Data Annotation Services to ensure that their models are trained on high-quality data. This investment not only drives the development of safer vehicles but also fuels innovation within the annotation services industry itself. As the technology behind autonomous vehicles advances, the complexity and volume of data that needs to be annotated increase, creating more opportunities for growth in this sector.

Furthermore, the global push toward autonomous vehicles has spurred job creation in the field of data annotation. From manual annotation to AI-assisted techniques, the industry offers a range of employment opportunities, contributing to economic growth and technological advancement.

Overcoming Challenges in Data Annotation for Autonomous Vehicles

While Data Annotation Services are indispensable in the development of autonomous vehicles, they are not without challenges. One of the main difficulties lies in the sheer volume of data that needs to be annotated. Autonomous vehicles generate enormous amounts of data every second, making it a monumental task to annotate all of it accurately and efficiently.

Additionally, the diversity of environments in which autonomous vehicles operate presents another challenge. Different regions, weather conditions, and road types require specific annotations to ensure the models can generalize across various scenarios. Annotation services must be capable of handling this complexity, providing precise annotations that account for the myriad of factors affecting autonomous driving.

To address these challenges, the industry is increasingly turning to AI-assisted annotation tools. These tools leverage machine learning to automate parts of the annotation process, improving both speed and accuracy. However, human oversight remains essential to ensure the highest quality of annotated data, especially in critical areas such as pedestrian detection and road sign recognition.
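The AI-assisted workflow described above, where a model pre-labels data and humans oversee the results, is often implemented as confidence-based routing. This sketch is an illustration under assumptions: the `PreLabel` record, the class names in `SAFETY_CRITICAL`, and the 0.95 threshold are all hypothetical. Low-confidence pre-labels, and every pre-label in a safety-critical class such as pedestrians or road signs, go to a human review queue.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

# Hypothetical class names that always receive human review
SAFETY_CRITICAL = {"pedestrian", "road_sign"}


@dataclass
class PreLabel:
    frame_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route(
    prelabels: Iterable[PreLabel], auto_accept_threshold: float = 0.95
) -> Tuple[List[PreLabel], List[PreLabel]]:
    """Split model pre-labels into (auto_accepted, needs_human_review)."""
    accepted: List[PreLabel] = []
    review: List[PreLabel] = []
    for p in prelabels:
        if p.label in SAFETY_CRITICAL or p.confidence < auto_accept_threshold:
            review.append(p)
        else:
            accepted.append(p)
    return accepted, review
```

A natural extension, not shown, is to also send a random sample of auto-accepted labels to reviewers, to estimate how often the pre-labeling model is wrong.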

Conclusion

The future of autonomous vehicles hinges on the effectiveness of Data Annotation Services. By providing the foundation upon which machine learning models are built, these services are shaping the capabilities of self-driving technology. As the demand for autonomous vehicles continues to grow, so too will the importance of accurate and efficient annotation services. Through ongoing innovation and collaboration, the industry is poised to overcome challenges and drive the next wave of advancements in autonomous transportation.

Pollution Annotation / Pollution Detection

Pollution annotation involves labeling environmental data to identify and classify pollutants. This includes marking specific areas in images or videos and categorizing pollutant types.

### Key Aspects:

- **Image/Video Labeling:** Using bounding boxes, polygons, keypoints, and semantic segmentation.
- **Data Tagging:** Adding metadata about pollutants.
- **Quality Control:** Ensuring annotation accuracy and consistency.

### Applications:

- Environmental monitoring
- Research
- Training machine learning models

Pollution annotation is crucial for effective pollution detection, monitoring, and mitigation strategies.

Fiverr link: https://lnkd.in/gM2bHqWX
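The labeling and tagging aspects listed above can be pictured as a simple record: a polygon outlining the polluted region, the pollutant type, and free-form metadata. The sketch below is hypothetical (the class, its field names, and the `"smoke_plume"` label are assumptions). It derives a bounding box from the polygon, computes the polygon's area with the shoelace formula, and performs one basic quality-control check by rejecting polygons with fewer than three vertices.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point = Tuple[float, float]


@dataclass
class PollutionAnnotation:
    image_id: str
    pollutant_type: str   # e.g. "smoke_plume" (hypothetical label set)
    polygon: List[Point]  # outline vertices in pixel coordinates, in drawing order
    metadata: Dict[str, str] = field(default_factory=dict)  # data tagging, e.g. source or severity

    def __post_init__(self) -> None:
        # Quality-control check: a polygon needs at least three vertices.
        if len(self.polygon) < 3:
            raise ValueError(f"{self.image_id}: polygon needs at least 3 vertices")

    def bounding_box(self) -> Tuple[float, float, float, float]:
        """Smallest axis-aligned box (x_min, y_min, x_max, y_max) containing the polygon."""
        xs = [x for x, _ in self.polygon]
        ys = [y for _, y in self.polygon]
        return (min(xs), min(ys), max(xs), max(ys))

    def area(self) -> float:
        """Polygon area in square pixels via the shoelace formula (assumes no self-intersections)."""
        twice_area = 0.0
        n = len(self.polygon)
        for i in range(n):
            x1, y1 = self.polygon[i]
            x2, y2 = self.polygon[(i + 1) % n]  # wrap around to close the polygon
            twice_area += x1 * y2 - x2 * y1
        return abs(twice_area) / 2.0
```

For a rectangular outline with corners (0, 0) and (4, 3), `area()` returns `12.0` and `bounding_box()` returns `(0, 0, 4, 3)`.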

#image annotation services#artificial intelligence#annotation#machinelearning#annotations#ai data annotator#ai image#ai#ai data annotator jobs#data annotator#video annotation#image labeling

https://justpaste.it/exzat

#Data Annotation Services#Images annotation#Videos annotation#Videos annotation Services#Images annotation Services#Data collection company#Data collection#datasets#data collection services

AI & Tech-Related Jobs Anyone Could Do

Here’s a list of 40 jobs or tasks related to AI and technology that almost anyone could potentially do, especially with basic training or the right resources:

Data Labeling/Annotation

AI Model Training Assistant

Chatbot Content Writer

AI Testing Assistant

Basic Data Entry for AI Models

AI Customer Service Representative

Social Media Content Curation (using AI tools)

Voice Assistant Testing

AI-Generated Content Editor

Image Captioning for AI Models

Transcription Services for AI Audio

Survey Creation for AI Training

Review and Reporting of AI Output

Content Moderator for AI Systems

Training Data Curator

Video and Image Data Tagging

Personal Assistant for AI Research Teams

AI Platform Support (user-facing)

Keyword Research for AI Algorithms

Marketing Campaign Optimization (AI tools)

AI Chatbot Script Tester

Simple Data Cleansing Tasks

Assisting with AI User Experience Research

Uploading Training Data to Cloud Platforms

Data Backup and Organization for AI Projects

Online Survey Administration for AI Data

Virtual Assistant (AI-powered tools)

Basic App Testing for AI Features

Content Creation for AI-based Tools

AI-Generated Design Testing (web design, logos)

Product Review and Feedback for AI Products

Organizing AI Training Sessions for Users

Data Privacy and Compliance Assistant

AI-Powered E-commerce Support (product recommendations)

AI Algorithm Performance Monitoring (basic tasks)

AI Project Documentation Assistant

Simple Customer Feedback Analysis (AI tools)

Video Subtitling for AI Translation Systems

AI-Enhanced SEO

Basic Tech Support for AI Tools

These roles or tasks could be done with minimal technical expertise, though many would benefit from basic training in AI tools or specific software used in these jobs. Some tasks might also involve working with AI platforms that automate parts of the process, making it easier for non-experts to participate.

Tips for Creating Engaging Video Content

In the fast-paced world of digital marketing, video is king. Whether you want to attract attention on social media, educate, or promote a product or service, engaging video content is always key to success. The challenge is coming up with compelling content, and the following tips will help you create videos that not only attract viewers but keep them engaged until the very end.

1. Know Your Audience

Before creating content, know who you are speaking to. What are the interests, pain points, and needs of your target audience? Tailoring your content to your audience can boost engagement considerably. For instance, a younger audience may respond best to shorter, snappier videos with fun elements, while for a B2B audience, concise, informative videos containing data and insights are likely to be more effective.

2. Hook Viewers from the Start

The first few seconds of a video can make or break it. Start with a hook, such as a provocative question, a striking visual, or a thought-provoking statement. Your goal is to pique viewers' interest immediately and give them a reason to watch the whole video.

3. Keep It Short

Attention spans are short, so brevity rules. Unless you're producing an in-depth tutorial or documentary, strive to make your videos as concise as possible without diminishing their value. For social media channels, aim for around 60 seconds or less. That doesn't mean cutting corners on quality; it means streamlining the key message you want to convey.

4. Tell a Story

Humans are innately drawn to stories, so think about a narrative structure for your video. Storytelling humanizes your content, often making it more relatable and memorable. Your story might revolve around your brand, a customer success, or a real-world scenario that reflects your message. Whichever route you take, establish a clear beginning, middle, and end.

5. Quality Images and Audio

Poor image or audio quality can quickly drive viewers away. Investing in good lighting, a high-resolution camera, and quality audio will raise the production value of your videos. Even basic videos shot on a smartphone can look professional if they are set up and edited properly.

6. Add Captions

Many viewers watch social media videos on mute, particularly when scrolling through their feeds. Captions make your videos accessible to deaf and hard-of-hearing audiences and ensure your message gets through even when viewers can't or won't turn the sound on. Platforms such as Facebook and Instagram commonly autoplay videos in the feed without sound, so use captions regardless.

7. Strong Visuals

Video is a visual medium, so make sure your visuals are powerful and arresting. Dynamic cuts, vibrant colors, and graphics keep viewers glued to the screen. Include on-screen text and animations to highlight important points. Visual interest will set your video apart from the sea of content.

8. A Clear Call-to-Action (CTA)

No matter how good your video is, it won't achieve its goal unless viewers know what to do next. Add a strong, obvious CTA toward the end of your video. Whether it's subscribing to your channel, visiting your website, or making a purchase, tell viewers exactly what to do next. Make sure the CTA is both visually prominent and easy to understand.

9. Optimize for Mobile Viewing

Most online traffic is expected to come from mobile devices, so optimize your videos for mobile viewing. Vertical or square videos work well on platforms such as Instagram and TikTok, while widescreen may still be preferred for YouTube, so you may need to adjust the aspect ratio for each platform.

10. User-Generated Content (UGC)

Engage your audience by sharing user-generated content. Invite your followers to submit videos about your brand, product, or campaign. UGC builds authenticity and can help people connect with your content on a more personal level.

11. Analyze and Adapt

Making exciting video content is the simplest way to engage your audience regularly, but it pays to measure the results. Analyze your videos' performance in terms of engagement rate, viewer retention, and click-through rate; this will show you clearly what is working and what isn't. Feel free to test different formats and styles, and adjust your approach based on audience feedback and metrics.

12. Influencer Collaboration

Another key way to increase your video's views is through influencer partnerships. Influencers build trust with their audiences through the recommendations they make, so when you work with someone whose values align with yours, your video can reach further and attract more engaged viewers. The collaboration needs to feel organic and true to the influencer's voice and aesthetic.

Conclusion

As the tips above show, creating engaging video requires a marketer to be creative, strategic, and detail-oriented all at once. With the right understanding of your audience, a storytelling approach, and quality visuals, you can make videos that truly connect with viewers and persuade them to act. Keep your videos brief, mobile-friendly, and optimized for sound-off viewing. Most importantly, keep testing and iterating to improve your results.

With these tips in mind, integrate them into your video strategy and produce standout content that engages your target audience and drives growth. Happy filming!

Looking for a B2B digital marketing agency in Noida to help you navigate the evolving landscape of digital marketing? Our experienced team is here to help you craft a winning digital marketing plan and strategy that aligns with your business and the latest trends. Get in touch!

#channeltechnologies#digitalmarketing#eventmanagement#socialmediamarketing#contentcreation#videomarketing

Why Your Business Should Consider Engaging a Professional Image Annotation Service

Introduction:

In the contemporary, rapidly evolving technological landscape, artificial intelligence (AI) and machine learning (ML) have emerged as essential components across various sectors. From self-driving cars and healthcare diagnostics to online retail and security frameworks, AI applications are revolutionizing business operations. However, the success of these technologies is largely contingent upon the availability of high-quality, annotated data, which underscores the vital role of professional image annotation services.

The Significance of Image Annotation in AI Development

Image annotation involves labeling or tagging images to facilitate the training of machine learning algorithms. This process is a critical initial step in the creation of AI models for computer vision tasks. Accurate annotations are imperative for enabling AI systems to identify, categorize, and make informed decisions based on visual information. Without proper annotation, even the most advanced AI algorithms may struggle to produce dependable outcomes.

Reasons to Choose a Professional Image Annotation Service

Although some organizations might contemplate managing image annotation internally, collaborating with a professional image annotation service presents numerous benefits:

Expertise and Precision

Professional services employ experienced annotators who are adept at providing accurate and consistent annotations. They utilize sophisticated tools and methodologies to ensure high-quality results that align with your project specifications.

Scalability

As your AI initiatives expand, the demand for annotated data increases correspondingly. Professional services possess the necessary resources and infrastructure to efficiently manage large-scale annotation projects.

Cost Efficiency

Establishing an in-house annotation team entails significant expenses related to recruitment, training, and tool acquisition. Outsourcing to a professional service mitigates these financial burdens.

Accelerated Turnaround Times

Established companies can swiftly process extensive datasets, enabling you to adhere to stringent project timelines without sacrificing quality.

Concentration on Core Business Functions

Delegating image annotation tasks allows your team to focus on essential business functions, such as product innovation and strategic planning, rather than being hindered by labor-intensive data preparation processes. This enhances overall productivity.

Applications of Professional Image Annotation

Image annotation firms cater to a diverse array of sectors, including:

Healthcare: Annotating medical images like X-rays and MRIs for diagnostic artificial intelligence applications.

Retail and E-commerce: Tagging product visuals for enhanced visual search and recommendation systems.

Autonomous Vehicles: Labeling road signs, pedestrians, and various objects for algorithms used in self-driving vehicles.

Security: Training artificial intelligence for facial recognition and surveillance applications.

Agriculture: Utilizing AI-driven solutions to identify plant diseases and monitor crop health.

Why Opt for GTS for Your Image Annotation Requirements?

At GTS, we excel in delivering high-quality image and video annotation services customized to meet your specific artificial intelligence and machine learning needs. Our team merges expertise with advanced technology to provide precise, scalable, and cost-efficient annotation solutions. Whether you are developing a small prototype or managing a large-scale AI initiative, we are committed to supporting your success.

Conclusion

The effectiveness of your artificial intelligence and machine learning projects relies heavily on the quality of the training data. Collaborating with a professional image annotation company helps ensure accuracy and efficiency and can give your business a competitive advantage in the current AI-centric environment. Let GTS be your reliable partner in creating robust, high-performing AI solutions.

Are you prepared to enhance your AI projects? Visit the Globose Technology Solutions Image and Video Annotation Services page to learn more and start your journey today.

LIT REVIEW 2

IN DEFENSE OF THE POOR IMAGE – HITO STEYERL

Hito Steyerl's essay In Defense of the Poor Image, written in 2009, is an influential text that examines the concept of the ‘poor image’. Steyerl is a prominent artist and thinker whose work on digital culture and contemporary art explores the provocative intersections of technology, globalisation and political activism.

Steyerl's interdisciplinary practice encompasses a wide range of mediums, including video essays, installations, performances, and writings. Her work often blurs the boundaries between documentary and fiction, combining personal narratives with broader socio-political analysis. She frequently employs experimental editing techniques, archival footage, and found imagery to interrogate the complexities of contemporary visual culture and the impact of digital technologies on society.[1]

In the 21st century we have unprecedented access to technology: with advances in artificial intelligence, smartphones and digital cameras, anyone can create and share images online, even if the results have an amateurish edge. This acceleration brings deterioration; Steyerl explains that the proliferation of these technologies has democratised image production. Having these vast pools of data ready for download transforms quality into accessibility. By challenging previously established structures, it enables people outside the art world to engage with the image-making process.

Being a downgraded, low-resolution (lo-fi) copy of a higher-quality original gives the poor image its own personality in the digital age. “Poor images are poor because they are not assigned any value within the class society of images - their status as illicit or degraded grants them exemption from its criteria.”[2] She argues that although poor images are dismissed as inferior versions of high-resolution originals, this very status allows them to possess their own allure and charm. As artists, reflecting the material surroundings in which the image lives allows us to capture more realistic, lived-in experiences.

"But the economy of poor images is about more than just downloads: you can keep the files, watch them again, even reedit or improve them if you think necessary."[3] This ability to annotate and rearrange found material emphasises the hybrid nature of poor images, which are often the result of remixing, sampling, and recontextualising existing visual materials. This hybridity opens up new possibilities for artistic expression and cultural production, challenging conventional notions of artistic authorship and authenticity.

Steyerl's idea of deconstructing and re-editing the image gives us authorship over the work, opening up new forms of creativity that combine elements in new or unexpected ways and challenge traditional notions of originality. You don't need original material created from scratch; you can take the ideas already around you and redirect them through your own way of thinking.[4]

In an older text, Remediation: Understanding New Media (1999), Jay David Bolter and Richard Grusin describe the process by which media imitate and transform one another. Reinterpreting and repurposing existing media content allows the artist to carry the aesthetics and practices of the found material into a new technological or cultural context, while simultaneously establishing their own authorship over it. Steyerl and Bolter both discuss the pros and cons of how accessible content is in our world. The internet works as a massive archive of anything and everything, but because it moves so fast, we also lose things: content gets pushed to the back once a new and improved version is released.

Bolter writes about how old media talk to new media, with the new taking from the old in order to improve on it: “Moreover, they suggest that all prior media have refashioned previous media in similar ways: photography remediating painting; film remediating live theatre and photography; television remediating film, vaudeville, and radio; and so forth.”[5]

Conceptually, remediation suggests that media forms are constantly influenced by, and build upon, previous works, which ensures a continuous cycle of taking and borrowing. This emphasises the interconnectedness and intertextuality of how media forms work. Remediation acknowledges the persistent presence of older media within new media environments and the ways in which they shape our understanding and experience of contemporary media.

The mixing and hybridity Bolter describes come from representing one medium in another (for example, a painting presented as a video). The difference between Steyerl and Bolter lies in the concept of enhancement. Remediation isn't merely copying or reproducing content from one medium to another; it often involves transforming or enhancing the original content in some way, whereas Steyerl can be seen moving in the opposite direction.

Contemporary artist Petra Cortright explores the internet, digital aesthetics, and the crossroads between technological experience and art. Her work involves the concept of digital deconstruction, in which she manipulates and reinterprets digital imagery, typically sourced from online platforms, into new forms of visual expression.[6]

Both Steyerl and Bolter describe our power to take and remake, and Cortright plays on this: her digital deconstruction harnesses digital tools and techniques to dismantle and reconstruct visual elements, often sourced from the internet. This exploration reflects a deep engagement with the digital landscape and an understanding of its possibilities for artistic expression. Cortright incorporates elements such as glitch art, pixelation, and low resolution to create work that uses the visual language of the online world. Steyerl tells us we are able to do this because of how free the online world is, and Cortright's work not only reflects digital culture but also critiques the ways in which images circulate and transform within online spaces.

Within my own practice, I seek to apply both of these theories about how we can take found and made content, deconstruct it, and retell what we see and feel in the city of Auckland. To see how far I can push these ideas within my chosen medium, through the collection of old and new forms, I plan to create work that reflects the city, either enhancing or degrading how we see Auckland in the everyday.

Bibliography

Berardi, Franco “Bifo”. “Introduction.” The Wretched of the Screen, 2012.

Bolter, Jay David, and Richard Grusin. Remediation: Understanding New Media, 1999.

Steyerl, Hito. “In Defense of the Poor Image.” The Wretched of the Screen, 2012.

Smith, William S. “Entering the Painted World.” Art in America, Jan/Feb 2021.

Terkessidis, Mark. “The Archive of Forgotten Concerns.” Hito Steyerl: I Will Survive, 2021.

[1] Franco “Bifo” Berardi, “Introduction” (2012).

[2] Hito Steyerl, “In Defense of the Poor Image” (2009).

[3] Hito Steyerl, “In Defense of the Poor Image” (2009).

[4] Mark Terkessidis, “The Archive of Forgotten Concerns” (2021).

[5] Jay David Bolter, Remediation: Understanding New Media (1999).

[6] William S. Smith, “Entering the Painted World” (2021).


Global Video Data Collection: Customized Solutions for Your Requirements

Introduction

In the contemporary digital landscape, video data is integral to the development of cutting-edge technologies. From self-driving vehicles to sophisticated AI applications, video datasets provide the training material these systems learn from. At GTS.AI, we focus on global video data collection, providing customized solutions that cater to the specific needs of businesses and developers around the globe.

The Increasing Need for Video Data

As sectors progressively embrace artificial intelligence and machine learning, the demand for high-quality video data has escalated. What makes video data so crucial? Consider the following key applications:

Autonomous Vehicles: Training self-driving cars to identify objects, navigate roadways, and react to changing environments.

Smart Surveillance: Improving security systems through real-time monitoring and intelligent threat identification.

Healthcare Innovations: Allowing AI systems to assess patient movements or assist in surgical operations using visual data.

Retail Analytics: Enhancing customer experiences by analyzing in-store behaviors and traffic flows.

To thrive in these areas, businesses require diverse, precise, and scalable video datasets—and that is where our expertise comes into play.

Customized Solutions for Varied Requirements

At GTS.AI, we recognize that each project has distinct needs. Our global video data collection services are crafted to provide:

Personalized Data Collection

Whether you require footage of urban traffic, rural settings, or indoor spaces, we deliver tailored datasets that align seamlessly with your goals. We work closely with you to establish specifications such as:

Types of locations

Timeframes

Camera perspectives

Resolution and format
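To make the specification options listed above concrete, here is a minimal sketch of how such a request could be written down as a structured record and used to screen incoming clips. It is purely illustrative: `CollectionSpec`, `ClipMetadata`, and `spec_violations` are hypothetical names, not part of any GTS.AI tool or API, and the sketch assumes each clip arrives with metadata (location type, time window, camera perspective, resolution, container format) already recorded at capture time.

```python
from dataclasses import dataclass, field


@dataclass
class CollectionSpec:
    """Hypothetical client specification for a video collection request."""
    location_types: set[str]        # e.g. {"urban_traffic", "indoor"}
    time_windows: set[str]          # e.g. {"day", "night"}
    camera_perspectives: set[str]   # e.g. {"dashcam", "overhead"}
    min_width: int                  # minimum horizontal resolution in pixels
    min_height: int                 # minimum vertical resolution in pixels
    formats: set[str] = field(default_factory=lambda: {"mp4"})  # lowercase container names


@dataclass
class ClipMetadata:
    """Hypothetical per-clip metadata assumed to be recorded at capture time."""
    clip_id: str
    location_type: str
    time_window: str
    camera_perspective: str
    width: int
    height: int
    container_format: str


def spec_violations(clip: ClipMetadata, spec: CollectionSpec) -> list[str]:
    """Return the reasons a clip fails the spec; an empty list means it is accepted."""
    problems = []
    if clip.location_type not in spec.location_types:
        problems.append(f"location '{clip.location_type}' not requested")
    if clip.time_window not in spec.time_windows:
        problems.append(f"time window '{clip.time_window}' not requested")
    if clip.camera_perspective not in spec.camera_perspectives:
        problems.append(f"perspective '{clip.camera_perspective}' not requested")
    if clip.width < spec.min_width or clip.height < spec.min_height:
        problems.append(f"resolution {clip.width}x{clip.height} below minimum")
    # Compare formats case-insensitively so "MP4" and "mp4" are treated alike.
    if clip.container_format.lower() not in spec.formats:
        problems.append(f"format '{clip.container_format}' not accepted")
    return problems


# Example: a request for daytime or night-time urban dashcam footage at 1080p or better.
spec = CollectionSpec(
    location_types={"urban_traffic"},
    time_windows={"day", "night"},
    camera_perspectives={"dashcam"},
    min_width=1920,
    min_height=1080,
)
clips = [
    ClipMetadata("c001", "urban_traffic", "night", "dashcam", 1920, 1080, "MP4"),
    ClipMetadata("c002", "rural", "day", "dashcam", 1280, 720, "mov"),
]
for clip in clips:
    print(clip.clip_id, spec_violations(clip, spec) or "accepted")
```

Returning a list of reasons rather than a single true/false makes it easier to report back why a clip was rejected, which is useful when refining a specification with a client.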

Worldwide Coverage

Our vast network extends across continents, guaranteeing that you receive culturally and geographically varied data. This global presence is especially vital for projects that necessitate representation from different regions and demographics.

Commitment to Quality

Quality is paramount. Each video we gather undergoes thorough evaluation to ensure it meets the highest standards of resolution, clarity, and relevance, so that it is fit for training your AI models.

Adherence to Compliance and Privacy

We place a strong emphasis on data privacy and comply with stringent legal frameworks, including GDPR and other regional regulations. Our methodologies ensure ethical data collection and, when necessary, anonymization.

Reasons to Choose GTS.AI

Collaborating with GTS.AI for your video data collection requirements presents a multitude of advantages:

Expertise: With extensive experience, we have refined our processes to provide unparalleled quality and efficiency.

Scalability: Our solutions are adaptable, accommodating everything from small pilot projects to extensive deployments.

Rapid Turnaround: We ensure timely delivery of your datasets without sacrificing quality.

Comprehensive Support: Our dedicated team facilitates a smooth experience from initial planning to final delivery.

Real-World Applications

Here are several instances illustrating how our video data collection services have made a significant impact:

Autonomous Driving: Assisted a prominent automotive manufacturer in training their AI systems for various driving conditions.

Healthcare AI: Supplied annotated video datasets for a startup focused on developing fall-detection technologies for elderly care.

Retail Innovations: Aided a global retailer in enhancing customer experiences through behavioral analytics.

Conclusion

The possibilities presented by video data are boundless, and having the right datasets can be decisive in achieving groundbreaking results. At Globose Technology Solutions, we are dedicated to offering customized video data collection solutions that empower your initiatives and drive innovation.

Are you prepared to turn your vision into reality? Visit our video dataset collection services page to discover more and initiate the process today.
