#ai data annotator


Text

Project Description: Bounding Box Bee Annotation on Supervisely

I recently completed a project for a client, creating bounding boxes for bees using the Supervisely platform. This task required precise and accurate annotations to enhance machine learning models for insect detection.

Key Features:

Platform Used: Supervisely

Task: Creating bounding boxes around bees in images

Objective: Accurate labeling for machine learning models

Why Hire Me?

Expertise in Image Annotation

Proficiency with Supervisely

Commitment to Quality and Accuracy

For reliable and skilled data annotation services, visit my Fiverr page: https://www.fiverr.com/s/7YXxG94

#image annotation services#ai data annotator#artificial intelligence#ai image#annotation#annotations#machinelearning#ai data annotator jobs#data annotator#ai

0 notes

Text

maybe it was a mistake to move bc if i had stayed in MA i could have continued tapping into a local network and would probably be working at umass right now :/ also it would have been so much easier to get on food stamps and medicaid. bc i am not eligible for either of those things in tx. and i wasn't even eligible for tx unemployment i had to go through ma unemployment. do the texas republican voters know they don't have to live like this and that things could be better and they could have an actual social safety net

#i have applied for every possible city and county job but they are moving So So So slow#december is a traditionally awful mental health time and im trying really hard to line up SOMETHING ANYTHING before my mental health absolu#ely flatlines next week due to a bad anniversary#i can't even get a job annotating AI data or factchecking AI data

9 notes


Text

A Guide to Choosing a Data Annotation Outsourcing Company

Clarify the Requirements: Before evaluating outsourcing partners, it's crucial to clearly define your data annotation requirements. Consider aspects such as the type and volume of data needing annotation, the complexity of annotations required, and any industry-specific or regulatory standards to adhere to.

Expertise and Experience: Seek out outsourcing companies with a proven track record in data annotation. Assess their expertise within your industry vertical and their experience handling similar projects. Evaluate factors such as the quality of annotations, adherence to deadlines, and client testimonials.

Data Security and Compliance: Data security is paramount when outsourcing sensitive information. Ensure that the outsourcing company has robust security measures in place to safeguard your data and comply with relevant data privacy regulations such as GDPR or HIPAA.

Scalability and Flexibility: Opt for an outsourcing partner capable of scaling with your evolving needs. Whether it's a small pilot project or a large-scale deployment, ensure the company has the resources and flexibility to meet your requirements without compromising quality or turnaround time.

Cost and Pricing Structure: While cost is important, it shouldn't be the sole determining factor. Evaluate the pricing structure of potential partners, considering factors like hourly rates, project-based pricing, or subscription models. Strike a balance between cost and quality of service.

Quality Assurance Processes: Inquire about the quality assurance processes employed by the outsourcing company to ensure the accuracy and reliability of annotated data. This may include quality checks, error detection mechanisms, and ongoing training of annotation teams.

Prototype: Consider requesting a trial run or pilot project before finalizing an agreement. This allows you to evaluate the quality of annotated data, project timelines, and the proficiency of annotators. For complex projects, negotiate a Proof of Concept (PoC) to gain a clear understanding of requirements.
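One way to put a number on the quality checks described above during a pilot is inter-annotator agreement. The sketch below computes Cohen's kappa for two annotators who labeled the same items. The function name and the sample labels are illustrative assumptions, not a process any particular vendor is known to use.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random with their own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical pilot batch of six items.
annotator_1 = ["positive", "positive", "negative", "positive", "negative", "positive"]
annotator_2 = ["positive", "negative", "negative", "positive", "negative", "positive"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))
```

A kappa near 1 means the annotators agree far more often than chance would predict; a value near 0 suggests the guidelines or the training need another look before the project scales up.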

For detailed information, see the full article here!

2 notes


Text

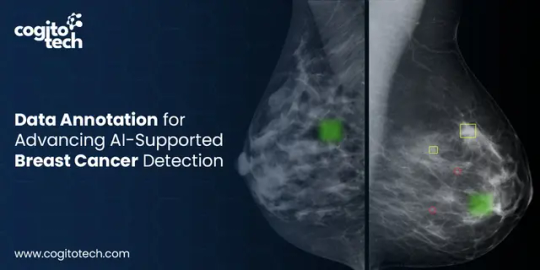

Mammogram Data Annotation for AI-Driven Breast Cancer Detection

Mammographic screening is widely valued for its accessibility, cost-efficiency, and dependable accuracy in detecting abnormalities. However, more than 100 million mammograms are taken globally each year, each requiring at least two specialist reviews, and the sheer volume creates significant challenges for radiologists: delays in report generation, missed screenings, and an increased risk of diagnostic errors. A study by the National Cancer Institute suggests that screening mammograms miss about 20% of breast cancers.

In recent years, the rapid evolution of artificial intelligence and the growing availability of digital medical data have positioned AI and machine learning as a promising solution. These technologies have shown strong results in mammography, in some studies matching or even exceeding radiologists’ performance in breast cancer detection tasks. Research published in The Lancet Oncology revealed that AI-supported mammogram screening detected 20% more cancers compared to readings by radiologists alone. However, to achieve high accuracy, AI and ML models require training on large-scale, well-annotated mammography datasets.

The quality and inclusiveness of annotation directly influence model performance. Advanced annotation methods include diverse categorizations, such as lesion-specific labels, BI-RADS scores (Breast Imaging Reporting and Data System), breast density classes, and molecular subtype information. These annotated lesion datasets train the model to identify subtle imaging features that distinguish normal tissue from benign and malignant lesions, ultimately improving both sensitivity and specificity.
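For readers less familiar with the two metrics named above, here is a minimal sketch of how sensitivity and specificity are computed from ground-truth annotations and model predictions. The function name and the toy data are assumptions made for illustration; they are not drawn from any study mentioned in this post.

```python
def sensitivity_specificity(y_true, y_pred):
    """Both inputs are sequences of 1 (malignant) or 0 (not malignant)."""
    tp = fn = tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1  # cancer present and flagged
        elif truth == 1:
            fn += 1  # cancer present but missed
        elif pred == 0:
            tn += 1  # no cancer and not flagged
        else:
            fp += 1  # no cancer but flagged
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return sensitivity, specificity


# Made-up labels for ten scans: four malignant, six not.
annotated = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
print(sensitivity_specificity(annotated, predicted))
```

Sensitivity falls when the model misses lesions that annotators marked, while specificity falls when it flags healthy tissue, which is why both depend on how carefully the reference labels were drawn.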

Breast cancer is a highly heterogeneous disease, displaying complexity at clinical, histopathological, microenvironmental, and genetic levels. Patients with different pathological and molecular subtypes show wide variations in recurrence risk, treatment response, and prognosis. This complexity must be reflected in training data if AI systems are to be clinically useful.

This write-up focuses on the importance of annotated data for building AI-powered lesion detection models and on how Cogito Tech’s Medical AI Innovation Hubs provide clinically validated, regulatory-compliant annotation solutions to accelerate AI readiness in breast cancer diagnostics.

Read more: Cogito Tech, mammogram data annotation for AI

0 notes

Text

Data Annotation vs Data Labeling: What Really Matters for Scalable, Enterprise-Grade AI Systems?

What’s the real difference between data annotation and data labeling? For most AI professionals, the terms are often used interchangeably—but for enterprise-grade systems, these subtle distinctions can impact scalability, accuracy, and overall performance. This blog breaks it all down.

Data Annotation vs Data Labeling: Key Differences

The blog begins by comparing the two concepts based on:

Conceptual foundation: Annotation adds context; labeling tags data

Process complexity: Annotation often requires deeper interpretation

Technical implementation: Varies with tools, model types, and formats

Applications: Labeling suits classification tasks; annotation supports richer models (like NLP and computer vision)
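The distinction drawn in the list above can be shown as data. In this sketch a label is a single tag per item, while an annotation carries regions and attributes and can be collapsed into a label when a simple classifier is all that is needed. The class names, field names, and sample values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Label:
    item_id: str
    category: str  # one tag for the whole item


@dataclass
class Region:
    category: str
    box: tuple  # (x_min, y_min, x_max, y_max) in pixels
    attributes: dict = field(default_factory=dict)


@dataclass
class Annotation:
    item_id: str
    regions: list  # list of Region objects

    def to_label(self, default="background"):
        """Collapse the annotation to one tag: the category of the largest region."""
        if not self.regions:
            return Label(self.item_id, default)

        def area(region):
            x_min, y_min, x_max, y_max = region.box
            return (x_max - x_min) * (y_max - y_min)

        largest = max(self.regions, key=area)
        return Label(self.item_id, largest.category)


annotation = Annotation(
    "scan_001",
    [
        Region("lesion", (120, 80, 180, 150), {"margin": "irregular"}),
        Region("calcification", (300, 40, 310, 52)),
    ],
)
print(annotation.to_label())
```

Going from annotation to label throws information away, and the reverse is not possible, which is one way to read the post's point that richer models need annotation rather than labeling.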

Understanding the Key Difference: Medical Imaging Use Case

A real-world example in medical imaging helps clarify how annotation enables diagnostic AI by capturing detailed insights beyond simple tags.

When the Difference Matters—And When It Doesn’t

Matters: In high-stakes AI (e.g., healthcare, autonomous driving), where context is vital

Doesn’t matter: In simpler classification tasks where labeling alone is sufficient

Key Factors for Scalable, Enterprise AI

The blog emphasizes enterprise considerations:

Data quality and consistency

Scalability and automation

Domain expertise for high accuracy

Ethical handling and bias mitigation

ML-Readiness: The True Success Metric

Ultimately, successful AI systems depend on how well the data is prepared—not just labeled or annotated, but made machine-learning ready.

For enterprises scaling AI, understanding these nuances helps build smarter, more reliable systems. Read the full blog to explore practical strategies and expert insights.

Read More: https://www.damcogroup.com/blogs/data-annotation-vs-data-labeling

0 notes

Text

Explore how expert annotation services improve the performance of AI systems by delivering high-quality training data. This blog breaks down the importance of accurate image, text, and video annotation in building intelligent, reliable AI models.

0 notes

Text

Data labeling and annotation

Boost your AI and machine learning models with professional data labeling and annotation services. Accurate and high-quality annotations enhance model performance by providing reliable training data. Whether for image, text, or video, our data labeling ensures precise categorization and tagging, accelerating AI development. Outsource your annotation tasks to save time, reduce costs, and scale efficiently. Choose expert data labeling and annotation solutions to drive smarter automation and better decision-making. Ideal for startups, enterprises, and research institutions alike.

#artificial intelligence#ai prompts#data analytics#datascience#data annotation#ai agency#ai & machine learning#aws

0 notes

Text

AI, Business, And Tough Leadership Calls—Neville Patel, CEO of Qualitas Global On Discover Dialogues

In this must-watch episode of Discover Dialogues, we sit down with Neville Patel, a 34-year industry veteran and the founder of Qualitas Global, a leader in AI-powered data annotation and automation.

We talked about AI transforming industries, how automation is reshaping jobs, and ways leaders today face tougher business decisions than ever before.

Episode Highlights:

The AI Workforce Debate: Will AI replace jobs, or is it just shifting roles?

Business Growth vs. Quality: Can you scale without losing what makes a company…

The AI Regulation Conundrum: Who’s Really Setting AI Standards?

The Leadership Playbook: How to make tough calls when the stakes are high?

This conversation is raw, real, and packed with insights for leaders, entrepreneurs, and working professionals.

1 note


Text

Exploring the Indian Signboard Image Dataset: A Visual Journey

Introduction

Signboards constitute a vital element of the lively streetscape in India, showcasing a blend of languages, scripts, and artistic expressions. Whether in bustling urban centers or secluded rural areas, these signboards play an essential role in communication. The Indian Signboard Image Dataset documents this diversity, offering a significant resource for researchers and developers engaged in areas such as computer vision, optical character recognition (OCR), and AI-based language processing.

Understanding the Indian Signboard Image Dataset

The Indian Signboard Image Dataset comprises a variety of images showcasing signboards from different regions of India. These signboards feature:

Multilingual text, including Hindi, English, Tamil, Bengali, Telugu, among others

A range of font styles and sizes

Various backgrounds and lighting situations

Both handwritten and printed signboards

This dataset plays a vital role in training artificial intelligence models to recognize and interpret multilingual text in real-world environments. Given the linguistic diversity of India, such datasets are indispensable for enhancing optical character recognition (OCR) systems, enabling them to accurately extract text from images, even under challenging conditions such as blurriness, distortion, or low light.

Applications of the Dataset

The Indian Signboard Image Dataset plays a crucial role in various aspects of artificial intelligence research and development:

Enhancing Optical Character Recognition (OCR)

Training OCR systems on a wide range of datasets enables improved identification and processing of multilingual signboards. This capability is particularly beneficial for navigation applications, document digitization, and AI-driven translation services.
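A common way to check whether an OCR model has actually improved on signboard text is the character error rate (CER): the edit distance between the model output and the annotated transcription, divided by the length of the transcription. The sketch below is a plain-Python version under the assumption that transcriptions are Unicode strings; the function names are illustrative and not part of the dataset.

```python
import unicodedata


def edit_distance(a, b):
    """Levenshtein distance between two strings, computed row by row."""
    prev = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        curr = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            curr.append(min(prev[j] + 1,          # delete ch_a
                            curr[j - 1] + 1,      # insert ch_b
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def character_error_rate(reference, hypothesis):
    """CER of an OCR output (hypothesis) against the annotated text (reference)."""
    # Normalize so the same visible text is compared as the same code points.
    ref = unicodedata.normalize("NFC", reference)
    hyp = unicodedata.normalize("NFC", hypothesis)
    if not ref:
        raise ValueError("reference text must not be empty")
    return edit_distance(ref, hyp) / len(ref)


# Two swapped letters count as two edits over seven characters.
print(character_error_rate("STATION", "STATOIN"))
```

Note that Python counts code points, so in Indic scripts a single visible character built from a consonant plus vowel signs counts as several units; teams that need per-glyph scores would have to segment the text differently.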

Advancing AI-Driven Translation Solutions

As the demand for instantaneous translation increases, AI models must be adept at recognizing various scripts and fonts. This dataset is instrumental in training models to effectively translate signboards into multiple languages, catering to the needs of travelers and businesses alike.

Improving Smart Navigation and Accessibility Features

AI-powered signboard readers can offer audio descriptions for visually impaired users. Utilizing this dataset allows developers to create assistive technologies that enhance accessibility for all individuals.

Supporting Autonomous Vehicles and Smart City Initiatives

AI models are essential for interpreting street signs in autonomous vehicles and smart city applications. This dataset contributes to the improved recognition of road signs, directions, and warnings, thereby enhancing navigation safety and efficiency.

Challenges in Processing Indian Signboard Images

Working with Indian signboards, while beneficial, poses several challenges:

Diversity in scripts and fonts – India recognizes more than 22 official languages, each characterized by distinct writing systems.

Environmental influences – Factors such as lighting conditions, weather variations, and the physical deterioration of signboards can hinder recognition.

Handwritten inscriptions – Numerous small enterprises utilize handwritten signage, which presents greater difficulties for AI interpretation.

To overcome these obstacles, it is essential to develop advanced deep learning models that are trained on varied datasets, such as the Indian Signboard Image Dataset.

Get Access to the Dataset

For researchers, developers, and AI enthusiasts, this dataset offers valuable resources to enhance the intelligence and inclusivity of AI systems. You may explore and download the Indian Signboard Image Dataset at the following link: Globose Technology Solution

Conclusion

The Indian Signboard Image Dataset represents more than a mere assortment of images; it serves as a portal for developing artificial intelligence solutions capable of traversing India's intricate linguistic environment. This dataset offers significant opportunities for advancements in areas such as enhancing optical character recognition accuracy, facilitating real-time translations, and improving smart navigation systems, thereby fostering AI-driven innovation.

Are you prepared to explore? Download the dataset today and commence the development of the next generation of intelligent applications.

0 notes

Text

Pollution Annotation / Pollution Detection

Pollution annotation involves labeling environmental data to identify and classify pollutants. This includes marking specific areas in images or videos and categorizing pollutant types.

### Key Aspects:

- **Image/Video Labeling:** Using bounding boxes, polygons, keypoints, and semantic segmentation.
- **Data Tagging:** Adding metadata about pollutants.
- **Quality Control:** Ensuring annotation accuracy and consistency.

### Applications:

- Environmental monitoring
- Research
- Training machine learning models

Pollution annotation is crucial for effective pollution detection, monitoring, and mitigation strategies.

AigorX Data annotations, Data Labeler, DataAnnotation, Data Annotation and Labeling.inc (DAL), DataAnnotation

Fiverr Link: https://lnkd.in/gM2bHqWX

#image annotation services#artificial intelligence#annotation#machinelearning#annotations#ai data annotator#ai image#ai#ai data annotator jobs#data annotator#video annotation#image labeling

0 notes

Text

#73 February 2025 | Monthly Digital Breadcrumbs

Elon Musk speaks at Donald Trump's post-inauguration celebration and does a Nazi salute, twice, via PBS News. A bunch of things (which I added to my Tumblr) for your eyes and ears plus brain to spend time on (as no longer on Twitter). READ “We believe that electing the Ocean to be a Trustee of SAMS is one of the most important decisions in our history. It challenges outdated models of…

#1000#2025#activists#ads#advertising#advice#advocacy#AI#Alain de Botton#Alexandria Ocasio-Cortez#animated#animation#annotate#AOC#app#apple#Arcane#archeology#art#art school#artistic#artists#astronomy#Atkinson Hyperlegible#awards#backdoor#beauty#big data#biometrics#block ads

0 notes

Text

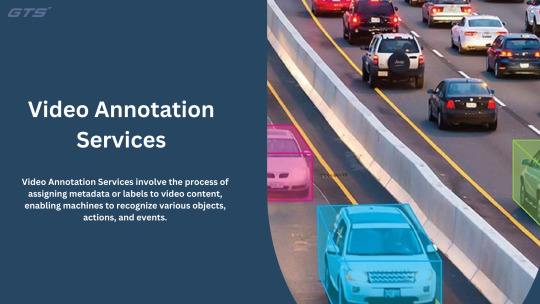

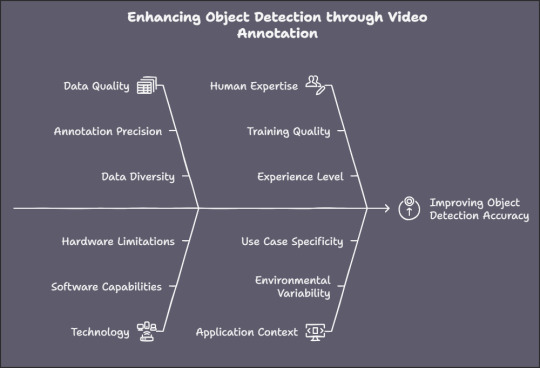

The Importance of Video Annotation Services in Object Detection and Recognition

Introduction:

In the rapidly advancing fields of artificial intelligence (AI) and machine learning (ML), a critical function of these technologies is the precise identification and recognition of objects within images and videos. This capability is vital for applications such as autonomous vehicles, security systems, and medical diagnostics, where object detection and recognition are integral to operational effectiveness. To develop intelligent systems, it is essential to have high-quality, labeled datasets for training algorithms. This is where video annotation services become indispensable.

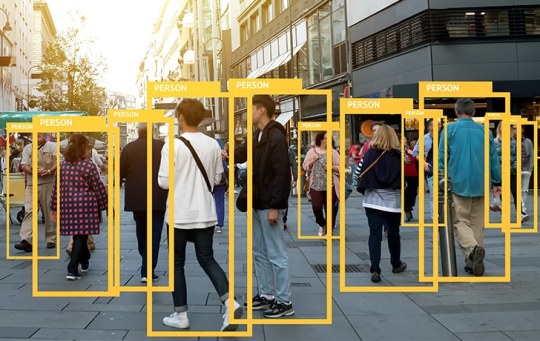

Video Annotation Services involve the process of assigning metadata or labels to video content, enabling machines to recognize various objects, actions, and events. In the realm of object detection and recognition, these services are fundamental for training AI models to comprehend and analyze visual information. Let us explore how video annotation services enhance object detection and recognition.

Educating AI with Labeled Datasets

Machine learning models, particularly those focused on computer vision, depend on extensive datasets that are accurately labeled. Video annotation services supply the essential labeled data that allows AI systems to learn how to identify objects and discern patterns within visual media. By annotating videos with designations such as "person," "car," "tree," or "dog," these services contribute to the creation of datasets that AI models utilize to enhance their object identification capabilities in real-world scenarios.

For example, the AI system of a self-driving vehicle must be able to identify pedestrians, traffic signals, and other cars to ensure safe navigation. To facilitate this training, video annotation services will label these objects across thousands of video frames, enabling the AI to recognize them in unfamiliar video footage.

Monitoring Object Movement Across Video Frames

In video sequences, objects frequently move, resulting in changes in their positions from one frame to the next. Video annotation services offer the capability to track these objects throughout the video. Video annotators utilize tools such as bounding boxes, polygons, and key point marking to emphasize and monitor the movement of objects over time. For instance, in a soccer match video, the ball, players, and goals can be labeled and tracked to analyze movement patterns, a task that would be challenging without the aid of video annotation services.
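Tracking of this kind usually rests on a simple overlap measure between boxes in consecutive frames. The sketch below computes intersection over union (IoU) and greedily carries track IDs from one frame to the next. The function names, the 0.3 threshold, and the sample boxes are assumptions for illustration; production annotation tools generally use more elaborate trackers.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_tracks(prev_boxes, curr_boxes, threshold=0.3):
    """Greedy matching by IoU.

    prev_boxes: {track_id: box} from the previous frame.
    curr_boxes: list of boxes in the current frame.
    Returns {index_in_curr_boxes: track_id}; unmatched boxes are left out.
    """
    pairs = sorted(
        ((iou(p_box, c_box), track_id, idx)
         for track_id, p_box in prev_boxes.items()
         for idx, c_box in enumerate(curr_boxes)),
        reverse=True,
    )
    assigned, used_tracks = {}, set()
    for score, track_id, idx in pairs:
        if score < threshold:
            break  # remaining pairs overlap too little to be the same object
        if track_id in used_tracks or idx in assigned:
            continue
        assigned[idx] = track_id
        used_tracks.add(track_id)
    return assigned


previous = {"ball": (100, 100, 120, 120), "player_7": (200, 50, 240, 150)}
current = [(205, 52, 245, 152), (104, 101, 124, 121), (400, 400, 420, 420)]
print(match_tracks(previous, current))
```

The third box in the current frame matches nothing, so an annotator would be asked to give it a new ID, which is the kind of verification step human reviewers still handle.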

Ensuring Precise Object Recognition

Object recognition entails the identification of objects within an image or video and their classification into specific categories. Video annotation services play a crucial role in guaranteeing that object recognition models are trained with a high degree of accuracy. The availability of extensive data with accurate labels significantly enhances the performance of these systems in real-time recognition tasks.

For instance, video annotation may involve categorizing objects under varying conditions, such as different lighting, angles, or backgrounds. This variety of labeled data ensures that AI systems can effectively recognize objects across diverse real-world settings. Whether it involves identifying an individual in a crowded street or detecting a vehicle in various weather conditions, video annotation enables the AI model to generalize its learning effectively.

Diverse Annotation Techniques for Enhanced Recognition

Video annotation services offer a range of annotation techniques that facilitate detailed and precise object detection and recognition. Some of the prevalent methods include:

Bounding Boxes: Basic rectangular outlines that define objects of interest in each frame.

Polygons: Employed to delineate the exact shape of irregular objects, providing greater accuracy than bounding boxes.

Semantic Segmentation: Each pixel in an image or video frame is labeled to indicate the object it corresponds to, allowing for highly detailed object recognition.

Keypoint Annotation: Essential for identifying body parts or specific features of objects, such as facial landmarks or joint points in human pose estimation.

These sophisticated methods enhance the precision of object recognition, ensuring that even intricate objects or scenarios are accurately identified.
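To make the difference between the first two techniques concrete, the sketch below compares the area of a polygon annotation with the area of the bounding box that encloses it. The helper names and the triangle are illustrative assumptions; the polygon area uses the standard shoelace formula.

```python
def polygon_area(points):
    """Shoelace formula; points is a list of (x, y) vertices in drawing order."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def bounding_box(points):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) around the polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


def box_area(box):
    return (box[2] - box[0]) * (box[3] - box[1])


# A triangular object: its box covers twice the area of the object itself.
triangle = [(0, 0), (10, 0), (0, 10)]
box = bounding_box(triangle)
print(polygon_area(triangle), box_area(box), polygon_area(triangle) / box_area(box))
```

Half of the pixels inside that box belong to the background, which is the extra noise a polygon annotation avoids at the cost of more annotator time per object.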

Practical Uses of Video Annotation in Object Detection

Video annotation services are essential across various sectors where object detection and recognition are critical. Some prominent practical applications include:

Autonomous Vehicles: Annotated video footage is instrumental in training self-driving cars to identify pedestrians, other vehicles, traffic signs, and road conditions, thereby facilitating safer navigation.

Surveillance and Security: These services enhance security systems by enabling the real-time recognition of suspicious behaviors, faces, and objects, thereby improving monitoring capabilities.

Retail and E-commerce: Object detection services assist retailers in tracking inventory, optimizing stock management, and enhancing customer experiences through improved product recommendations.

Healthcare: In the realm of medical imaging, video annotation services are utilized to train AI systems to identify anomalies in body scan videos, which aids in the early detection of diseases.

Sports Analytics: Annotating sports footage enables performance evaluation, including player tracking, strategic analysis, and injury prevention.

Scalability and Efficiency in AI Model Training

A significant challenge in AI development is the requirement for extensive datasets to train machine learning models. Video annotation services can enhance this process by efficiently annotating large quantities of video data. This scalability is crucial in fields such as autonomous vehicles and surveillance, where substantial data volumes are necessary for training dependable AI models.

Moreover, numerous video annotation services provide tools that facilitate automation and integration with other data systems, thereby increasing the speed and efficiency of the annotation process. These tools can automatically identify objects within video frames, allowing human annotators to verify or adjust the labels, thus conserving time and resources.
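The verify-or-adjust workflow described above is often organised by model confidence. The sketch below sorts automatic detections into three queues for human annotators. The prediction format, the queue names, and both thresholds are assumptions for illustration and would need tuning against real review outcomes.

```python
def route_prelabels(predictions, accept_at=0.9, discard_below=0.3):
    """Split model pre-labels into review queues by confidence score.

    predictions: list of dicts with 'label', 'box', and 'score' keys
    (a hypothetical format, not that of any specific tool).
    """
    queues = {"verify": [], "adjust": [], "discard": []}
    for prediction in predictions:
        score = prediction["score"]
        if score >= accept_at:
            queues["verify"].append(prediction)   # quick confirm by a human
        elif score >= discard_below:
            queues["adjust"].append(prediction)   # human corrects label or box
        else:
            queues["discard"].append(prediction)  # too unreliable to show
    return queues


detections = [
    {"label": "car", "box": (10, 20, 110, 90), "score": 0.97},
    {"label": "person", "box": (200, 40, 230, 120), "score": 0.55},
    {"label": "dog", "box": (300, 100, 320, 115), "score": 0.12},
]
queues = route_prelabels(detections)
print({name: len(items) for name, items in queues.items()})
```

The time savings come from the first queue, where a reviewer only confirms a box instead of drawing it, so the share of detections that land there is a useful number to track.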

Conclusion

Video annotation services serve as the foundation for object detection and recognition systems in artificial intelligence. By supplying the labeled datasets essential for training machine learning models, these services empower AI systems to identify and interpret various elements effectively.

Globose Technology Solutions

0 notes

Text

Why Do Companies Outsource Text Annotation Services?

Building AI models for real-world use requires annotated data of both high quality and sufficient volume. For example, marking names, dates, or emotions in a sentence helps machines learn what those words represent and how to interpret them.

At its core, different applications of AI models require different types of annotations. For example, natural language processing (NLP) models require annotated text, whereas computer vision models need labeled images.

While some data engineers attempt to build annotation teams internally, many are now outsourcing text annotation to specialized providers. This approach speeds up the process and ensures accuracy, scalability, and access to professional text annotation services for efficient, cost-effective AI development.

In this blog, we will delve into why companies like Cogito Tech offer the best, most reliable, and compliance-ready text annotation training data for the successful deployment of your AI project. We will also cover the industries we serve and why outsourcing is the best option, so that you can make an informed decision.

What is the Need for Text Annotation Training Datasets?

A dataset is a collection of learning information for the AI models. It can include numbers, images, sounds, videos, or words to teach machines to identify patterns and make decisions. For example, a text dataset may consist of thousands of customer reviews. An audio dataset might contain hours of speech. A video dataset could have recordings of people crossing the street.

Text annotation services are crucial for developing language-specific or NLP models, chatbots, sentiment analysis, and machine translation applications. Annotated datasets label parts of text, such as named entities, sentiments, or intent, so algorithms can learn patterns and make accurate predictions. Industries such as healthcare, finance, e-commerce, and customer service rely on annotated data to build and refine AI systems.

At Cogito Tech, we understand that high-quality reference datasets are critical for model deployment. We also understand that these datasets must be large enough to cover a specific use case for which the model is being built and clean enough to avoid confusion. A poor dataset can lead to a poor AI model.

How Do Text Annotation Companies Ensure Scalability?

Data scientists, NLP engineers, and AI researchers need text annotation training datasets to teach machine learning models to understand and interpret human language. Producing and labeling this data in-house is not easy; it is a serious challenge. The solution is to seek professional help from text annotation companies.

The reason for this is that as data volumes increase, in-house annotation becomes more challenging to scale without a strong infrastructure. Data scientists focusing on labeling are not able to focus on higher-level tasks like model development. Some datasets (e.g., medical, legal, or technical data) need expert annotators with specialized knowledge, which can be hard to find and expensive to employ.

Diverting engineering and product teams to handle annotation slows down core development efforts and compromises strategic focus. This is where specialized agencies like ours come in, supplying data engineers with the training data they need. We also provide fine-tuning, quality checks, and compliant-labeled training data: anything and everything that your model needs.

Fundamentally, data labeling services are needed to teach computers the importance of structured data. For instance, labeling might involve tagging spam emails in a text dataset. In a video, it could mean labeling people or vehicles in each frame. For audio, it might include tagging voice commands like “play” or “pause.”

Why Are Text Annotation Services in Demand?

Text is one of the most common data types used in AI model training. From chatbots to language translation, text annotation companies offer labeled text datasets to help machines understand human language.

For example, a retail company might use text annotation to determine whether customers are happy or unhappy with a product. By labeling thousands of reviews as positive, negative, or neutral, AI learns to do this autonomously.
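When several annotators label the same review, their votes have to be merged into one training label. The sketch below uses a simple majority vote and flags reviews where agreement is too low to trust. The function name and the two-thirds threshold are illustrative assumptions.

```python
from collections import Counter


def majority_label(votes, min_agreement=2 / 3):
    """Merge annotator votes for one review.

    Returns (label, agreement). The label is None when the most common
    vote falls short of min_agreement, so the review can be re-checked.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    label, top_count = Counter(votes).most_common(1)[0]
    agreement = top_count / len(votes)
    return (label if agreement >= min_agreement else None), agreement


print(majority_label(["positive", "positive", "neutral"]))
print(majority_label(["positive", "negative", "neutral"]))
```

Reviews that come back with `None` are often the sarcastic or mixed ones, and sending them to a senior reviewer tends to be cheaper than letting a noisy label into the training set.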

As stated in Grand View Research, “Text annotation will dominate the global market owing to the need to fine-tune the capacity of AI so that it can help recognize patterns in the text, voices, and semantic connections of the annotated data”.

Types of Text Annotation Services for AI Models

Annotated textual data is needed to help NLP models understand and process human language. Text labeling companies utilize different types of text annotation methods, including:

Named Entity Recognition (NER): NER is used to extract key information from text. It identifies and categorizes raw data into defined entities such as person names, dates, locations, organizations, and more. NER is crucial for deriving structured information from unstructured text.

Sentiment Analysis: It means identifying and tagging the emotional tone expressed in a piece of text, typically as positive, negative, or neutral. This is commonly used to analyze customer reviews and social media posts to gauge public opinion.

Part-of-Speech (POS) Tagging: It refers to assigning grammatical categories, such as nouns, pronouns, verbs, adjectives, and adverbs, to each word in a sentence. It is needed for comprehending sentence structure so that machines can learn to perform downstream tasks such as parsing and syntactic analysis.

Intent Classification: Intent classification refers to identifying the purpose behind a user’s input or prompt. It is generally used in conversational models so that the model can classify inputs like “book a train,” “check flight,” or “change password” into intents and respond appropriately.
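As an illustration of what NER annotation looks like once it reaches a model, the sketch below converts entity spans marked by an annotator into BIO tags, a widely used per-token format. The span layout (token indexes with an exclusive end) and the entity names are assumptions for this example, not the export format of any specific tool.

```python
def spans_to_bio(tokens, spans):
    """Convert entity spans to BIO tags.

    tokens: list of token strings.
    spans: list of (start_token, end_token_exclusive, entity_type).
    """
    tags = ["O"] * len(tokens)
    for start, end, entity in spans:
        if not 0 <= start < end <= len(tokens):
            raise ValueError(f"span {(start, end)} is outside the sentence")
        if any(tag != "O" for tag in tags[start:end]):
            raise ValueError(f"span {(start, end)} overlaps another entity")
        tags[start] = f"B-{entity}"       # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{entity}"       # continuation tokens
    return tags


tokens = ["Book", "a", "train", "to", "New", "Delhi", "on", "Friday"]
spans = [(4, 6, "LOC"), (7, 8, "DATE")]
for token, tag in zip(tokens, spans_to_bio(tokens, spans)):
    print(f"{token}\t{tag}")
```

The overlap check is a small example of automated quality control: two annotators marking conflicting spans on the same tokens is caught before the data reaches training.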

Importance of Training Data for NLP and Machine Learning Models

Organizations must extract meaning from unstructured text data to automate complex language-related tasks and make data-driven decisions to gain a competitive edge.

The proliferation of unstructured data, including text, images, and videos, necessitates text annotation to make this data usable as it powers your machine learning and NLP systems.

The demand for such capabilities is rapidly expanding across multiple industries:

Healthcare: Medical professionals employed by text annotation companies perform this annotation task to automate clinical documentation, extract insights from patient records, and improve diagnostic support.

Legal: Streamlining contract analysis, legal research, and e-discovery by identifying relevant entities and summarizing case law.

E-commerce: Enhancing customer experience through personalized recommendations, automated customer service, and sentiment tracking.

Finance: Text annotation services are needed to analyze large volumes of financial text data for fraud detection, risk assessment, and regulatory compliance.

By investing in developing and training high-quality NLP models, businesses unlock operational efficiencies, improve customer engagement, gain deeper insights, and achieve long-term growth.

Now that we have covered the importance, we shall also discuss the roadblocks that may come in the way of data scientists and necessitate outsourcing text annotation services.

Challenges Faced by an In-house Text Annotation Team

Cost of hiring and training the teams: Having an in-house team can demand a large upfront investment in recruiting, hiring, and onboarding skilled annotators. Every project is different and requires a different strategy to create quality training data, so unplanned expenses can undermine large-scale projects.

Time-consuming and resource-draining: Managing annotation workflows in-house often demands substantial time and operational oversight. Task assignment, quality checks, and revisions can divert focus from core business operations.

Requires domain expertise and consistent QA: Though it may look simple, in practice text annotation requires deep domain knowledge, especially when developing task-specific healthcare, legal, or finance models. Ensuring consistency and accuracy across annotations therefore requires a rigorous quality assurance process, and keeping experienced reviewers on those checks is a challenge in itself.

Scalability problems during high-volume annotation tasks: As annotation needs grow, scaling an internal team becomes increasingly tough. Expanding capacity to handle a large influx of data often leads to bottlenecks, delays, and inconsistent output quality.

Outsource Text Annotation: Top Reasons and ROI Benefits

The deployment and success of any model depend on the quality of labeling and annotation. Poorly labeled information leads to poor results. This is why many businesses choose to partner with Cogito Tech: our experienced teams verify that datasets are tagged accurately and with the right information.

Outsourcing text annotation services has become a strategic move for organizations developing AI and NLP solutions. Rather than spending time managing annotation teams and their expenses, businesses can rely on experienced service providers. The reasons below explain why data scientists should consider outsourcing:

Cost Efficiency: Outsourcing can significantly reduce labor and infrastructure expenses compared to hiring an internal workforce. Saving on monthly salary and infrastructure maintenance costs makes outsourcing a financially sustainable solution, especially for startups and scaling enterprises.

Scalability: Outsourcing partners provide access to a flexible and scalable workforce capable of handling large volumes of text data. So, when the project grows, the annotation capacity can increase in line with the needs.

Speed to Market: Experienced labeling partners bring pre-trained annotators and streamlined workflows, which help projects finish faster. This speed helps businesses bring AI models to market more quickly and efficiently.

Quality Assurance: Annotation providers have worked on multiple projects and are thus professional and experienced. They utilize multi-tiered QA systems, benchmarking tools, and performance monitoring to ensure consistent, high-quality data output. This advantage can be hard to replicate internally.

Focus on Core Competencies: Delegating annotation to experts has one simple advantage: in-house teams have more time to refine algorithms and concentrate on other aspects of model development, product innovation, and strategic growth instead of managing manual tasks.

Compliance & Security: A professional data labeling partner does not compromise on security protocols. They adhere to data protection standards such as GDPR and HIPAA, so sensitive data is handled with a high level of compliance and confidentiality. The need for compliance is growing, because organizations are expected to use technology responsibly for the good of the community rather than purely for financial gain.

For organizations looking to streamline AI development, the benefits of outsourcing with us are clear: improved quality, faster project completion, and cost-effectiveness, all while maintaining compliance through trusted text data labeling services.

Use Cases Where Outsourcing Makes Sense

Outsourcing annotation to a third party rather than performing it in-house can have several benefits. The foremost advantage is that our text annotation services cater to businesses at multiple stages of AI/ML development, from agile startups to large-scale enterprise teams. Here’s how:

Startups & AI Labs: Text training data must be reliable, high in quality, and compliant with regulations to be usable. This is why early-stage startups and AI research labs often need compliant labeled data. When startups choose top text annotation companies, they save the cost of building an internal team, which helps them accelerate development while staying lean and focused on innovation.

Enterprise AI Projects: Big enterprises working on production-grade AI systems need scalable training datasets. However, annotating millions of text records is challenging. Outsourcing allows enterprises to ramp up quickly, maintain annotation throughput, and ensure consistent quality across large datasets.

Industry-specific AI Models: Sectors such as legal and healthcare need precise and compliant training data because they deal with personal data, and mishandling it while training models may violate individual rights. Experienced vendors offer industry-trained professionals who understand the context and sensitivity of the data and adhere to regulatory requirements, which pays off in the long term and at the model deployment stage.

Conclusion

Demand for data-driven solutions is rising, and quality-annotated data is a must for developing AI and NLP models. From startups building their prototypes to enterprises deploying AI at scale, the need for accurate, consistent, and domain-specific training data remains.

However, managing annotation in-house has significant limitations, as discussed above. Because each project has unique requirements, analyzing return on investment is necessary. For many businesses, outsourcing is a strategic choice that shortens project timelines and saves money.

Choose Cogito Tech because our expertise spans Computer Vision, Natural Language Processing, Content Moderation, Data and Document Processing, and a comprehensive spectrum of Generative AI solutions, including Supervised Fine-Tuning, RLHF, Model Safety, Evaluation, and Red Teaming.

Our experienced, certified, and platform-agnostic workforce completes tasks efficiently, reducing the cost and time of segregating and categorizing textual data for businesses building AI models.

Original Article: Why Do Companies Outsource Text Annotation Services?

#text annotation#text annotation service#text annotation service company#cogitotech#Ai#ai data annotation#Outsource Text Annotation Services


Text

Role of Data Annotation in Driving Accuracy of AI/ML

Data Annotation in Machine Learning: An Important Prerequisite

For machine learning models to perform well, they need large volumes of accurately labeled data. Annotation helps models “understand” data by identifying patterns, classifying inputs, and learning context.

Whether it’s image recognition, sentiment analysis, or object detection, annotation quality directly impacts model accuracy. Poor labeling leads to incorrect outputs, flawed predictions, and lost business value.

Outsourcing Data Support for AI/ML

Handling data annotation in-house can be time-consuming and resource-heavy. That’s why many businesses choose to outsource to experienced providers for better results.

Here’s why outsourcing works:

1. Domain-Specific Workflows

Industry-specific annotators improve labeling accuracy

Customized workflows match AI/ML use cases

2. Professional Excellence

Skilled annotators follow standardized processes

Use of advanced tools reduces human error

3. Assured Accuracy

Dedicated QA ensures consistency and precision

Regular audits and feedback loops enhance output quality
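One common way to put a number on the "consistency" that QA audits look for is inter-annotator agreement. The sketch below computes Cohen's kappa for two annotators who labeled the same items. This is an illustrative choice of metric, not something the post prescribes; the function name `cohen_kappa` and the sample sentiment labels are hypothetical.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item set")
    n = len(labels_a)
    # Observed agreement: fraction of items given identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, estimated from each annotator's label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical sentiment labels from two annotators (illustration only).
annotator_1 = ["pos", "neg", "pos", "neu", "neg", "pos"]
annotator_2 = ["pos", "neg", "neu", "neu", "neg", "pos"]
kappa = cohen_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")
```

A kappa close to 1 indicates strong agreement beyond chance, while values near 0 suggest the guidelines or annotator training may need another look.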

Summing Up

The success of AI/ML initiatives hinges on reliable data annotation services. By partnering with professionals who understand domain-specific needs, businesses can boost model performance and reduce time-to-market.

Read the full blog to explore how outsourcing annotation services can drive accuracy and scalability in your AI journey.

#data annotation#data annotation services#data annotation company#ai data annotation#ai ml#ai#artificial intelligence


Text

Datasets That Matter: Fueling Your Machine Learning Projects

Introduction:

In the realm of machine learning (ML), the caliber of your data is crucial to the success of your project. Data acts as the cornerstone for algorithms to learn, adapt, and execute tasks. Utilizing appropriate datasets equips your models to attain exceptional accuracy, whereas subpar data can result in unreliable results. For both businesses and researchers, grasping the intricacies of datasets and their significance in ML is essential for achieving success.

The Importance of Datasets in Machine Learning

Machine learning algorithms depend on datasets to identify patterns, generate predictions, and automate various processes. The quality of the data directly influences the outcomes. High-quality datasets provide:

Enhanced Model Accuracy: Carefully curated datasets minimize noise and inconsistencies, allowing models to generalize more effectively.

Accelerated Training Time: Clean and organized data lessens the need for preprocessing, thereby expediting the training phase.

Scalability: Datasets designed for specific applications ensure that models can adapt to a variety of real-world situations.

Bias Mitigation: Well-balanced datasets help prevent skewed predictions, promoting fairness and dependability.
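As a rough illustration of the bias mitigation point, a quick check of label balance can flag skew before training. The sketch below is a minimal example under stated assumptions: the helper name `class_balance` and the sample labels are hypothetical, and what counts as an acceptable ratio depends on the task.

```python
from collections import Counter


def class_balance(labels):
    """Return each label's share of the dataset and the max/min count ratio."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    shares = {label: count / total for label, count in counts.items()}
    # A ratio of 1.0 means perfectly balanced classes; larger means more skew.
    imbalance_ratio = max(counts.values()) / min(counts.values())
    return shares, imbalance_ratio


# Hypothetical label column (illustration only).
labels = ["positive"] * 8 + ["negative"] * 2
shares, ratio = class_balance(labels)
print(shares, ratio)
```

A high ratio does not prove a model will be biased, but it is a cheap signal that resampling or additional data collection may be worth considering.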

Attributes of High-Quality Datasets

To optimize the effectiveness of your ML initiatives, your datasets should possess the following essential attributes:

Relevance: The data must correspond to your particular problem or application area.

Accuracy: Reducing errors and inconsistencies in the data enhances the model’s performance.

Diversity: A broad array of examples allows the model to learn from different inputs, minimizing the risk of overfitting.

Adequate Volume: Larger datasets typically yield better generalization, provided they are representative.

Proper Annotation: Accurate labeling, such as for images and videos, is vital for supervised learning tasks.

The Significance of Image and Video Annotation

Image and video annotation is crucial in developing datasets for computer vision and related fields. Annotated datasets empower ML models to effectively detect, classify, and interpret visual information. Common applications include:

Autonomous Vehicles: Annotated datasets are essential for enabling vehicles to recognize objects, pedestrians, and traffic signs.

Healthcare AI: Accurate annotations are crucial for diagnostic tools to identify anomalies in medical imaging.

Retail and E-commerce: Image annotation plays a vital role in product identification and inventory control.

For organizations in need of specialized annotation services, GTS.AI provides customized solutions to develop datasets that adhere to industry standards.

Sources of Quality Datasets

Open Datasets: Resources such as Kaggle, ImageNet, and COCO offer a wide range of datasets suitable for various applications.

Custom Data Collection: Gather data tailored to your project requirements through focused collection strategies.

Professional Annotation Services: Utilize companies like GTS.AI to annotate and refine your raw data for optimal effectiveness.

Best Practices for Dataset Management

To maintain the efficacy of your datasets throughout the machine learning lifecycle, adhere to the following best practices:

Regular Updates: Periodically refresh your data to align with current trends and conditions.

Data Augmentation: Increase the diversity of your dataset by implementing transformations such as rotation, scaling, and cropping.

Quality Assurance: Conduct thorough validation checks to ensure accuracy and consistency.

Ethical Data Use: Comply with privacy regulations and ethical standards when sourcing and managing data.
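The data augmentation practice above (rotation, scaling, cropping) can be sketched in plain Python on a small pixel grid. This is a toy illustration only: real pipelines typically use an imaging library, and the function names `rotate_90`, `center_crop`, and `scale_nearest` are hypothetical helpers, not part of any library.

```python
def rotate_90(image):
    """Rotate a 2D pixel grid 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def center_crop(image, height, width):
    """Cut a height x width window from the middle of the grid."""
    top = (len(image) - height) // 2
    left = (len(image[0]) - width) // 2
    return [row[left:left + width] for row in image[top:top + height]]


def scale_nearest(image, factor):
    """Upscale by an integer factor using nearest-neighbor repetition."""
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


# Hypothetical 3x3 grayscale image (illustration only).
image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]

augmented = [rotate_90(image), center_crop(image, 1, 1), scale_nearest(image, 2)]
print(len(augmented), "augmented variants from one source image")
```

For annotated images, the same transformation must also be applied to the labels (for example, bounding box coordinates), otherwise the annotations no longer line up with the pixels.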

Conclusion

High-quality datasets are fundamental to the success of machine learning initiatives. From image and video annotation to bespoke data collection, investing in appropriate datasets fosters innovation and leads to improved results. By collaborating with reputable experts like Globose Technology Solutions, you can empower your machine learning models with the necessary data to thrive.

Begin constructing impactful datasets today to realize the full potential of your machine learning projects.


Text

How Video Transcription Services Improve AI Training Through Annotated Datasets

Video transcription services play a crucial role in AI training by converting raw video data into structured, annotated datasets, enhancing the accuracy and performance of machine learning models.

#video transcription services#aitraining#Annotated Datasets#machine learning#ultimate sex machine#Data Collection for AI#AI Data Solutions#Video Data Annotation#Improving AI Accuracy
