#LiDAR applications

Text

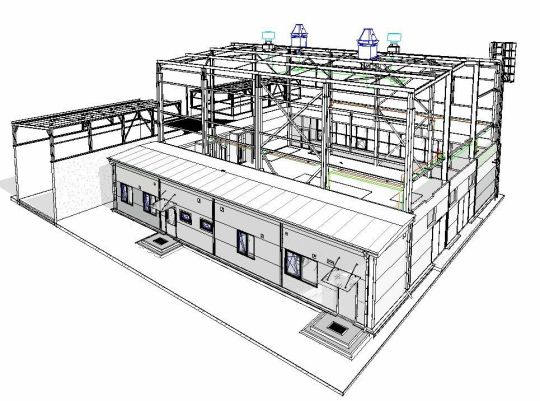

Exploring the Diverse Landscape of BIM Software in Construction: A Comprehensive Guide

Introduction: In the ever-evolving field of construction, Building Information Modeling (BIM) has emerged as a transformative technology that revolutionizes the way buildings are designed, constructed, and managed. BIM software plays a pivotal role in enhancing collaboration, improving efficiency, and minimizing errors throughout the construction process. This article delves into the various…

#architectural design software#as-built documentation#BIM model accuracy#BIM software#Building Information Modeling#collaboration platforms#construction industry advancements#construction management software#construction project efficiency#Construction Technology#cost estimation tools#facility maintenance optimization#facility management solutions#laser scanning technology#LiDAR applications#MEP systems modeling#point cloud integration#project stakeholders collaboration#real-time coordination#structural engineering tools#sustainable building practices

0 notes

Text

#lidar#lidar technology#lidar ld06 module#innovation#iot#technology#raspberry pi#time of flight technology#tof#Autonomous Vehicles#robotics#drone mapping#industrial automation#360 degree scanning#iot applications#projects#agriculture drone#agriculture products

0 notes

Text

LiDAR Market will be around US$ 7.25 billion by 2030

The global lipid market is predicted to be worth US$ 21.71 Billion by 2030, as per Renub Research. Lipids exhibit amphoteric properties, featuring polar alcohol heads and a nonpolar fatty acid backbone. Triglycerides, a type of lipid, dissolve in nonpolar solvents like diethyl ether, benzene, and chloroform. Understanding the solubility behavior of lipids is crucial for experimental procedures.…

#global LiDAR market#LiDAR market#LiDAR market by application#LiDAR market by technology#LiDAR market by types#LiDAR market growth#LiDAR market report#LiDAR market share#LiDAR market size

0 notes

Text

The Science of Discovering the Past: Geophysical Archaeology

By Glab310 - Own work, CC BY 4.0, https://commons.wikimedia.org/w/index.php?curid=113524155

While much of archaeology involves unearthing artifacts, knowing where to dig requires research at the surface, as does mapping the site. Geophysical surveys show archaeologists where to focus their efforts, helping them avoid fruitless digs where no artifacts or structures remain below the surface and avoid destroying culturally sensitive sites, such as cemeteries.

By see above - http://www.archaeophysics.com/3030/index.html. Transferred from en.wikipedia by SreeBot, Public Domain, https://commons.wikimedia.org/w/index.php?curid=17210746

There are many methods used to map below the surface. Some can be done with little training, while others meld multiple methods for a more complete map of what's under the surface. The more specialized techniques were adapted from those used to explore for minerals, although mineral surveys look deep beneath the surface, whereas archaeological sites lie relatively near it. These surveys also focus on larger structures that would take a long time to unearth.

Source: https://sha.org/the-montpelier-minelab-experiment/2012/03/

Different methods of performing geophysical surveys reveal different information. Metal detectors can be used to find caches of metal, but they don't give detailed information about what is below the surface. They can be used to discover new places to focus studies on. They work by inducing eddy currents, looping currents in the metal in the ground, which change how the current flows in the detector, resulting in a signal being reported to the user through either sound or visual output. Many locations have regulations or laws that dictate how metal detectors can be used and who owns the items found.

Public Domain, https://commons.wikimedia.org/w/index.php?curid=109641426

A more precise form of this kind of survey falls into two types: electrical resistance meters and electromagnetic conductivity meters. Electrical resistance meters work by inserting probes into the soil and passing electrical currents through them to measure the resistance of the surrounding ground, revealing the structures beneath because materials like stone have a different resistance than the soil around them. Electromagnetic conductivity is similar to metal detection in that a magnetic field is created by an electric current of a known frequency while detectors pick up the change. These detectors and currents are stronger than those of metal detectors, with a related increase in the size of the detector.

By Archaeo-Physics LLC - http://www.archaeophysics.com, Public Domain, https://commons.wikimedia.org/w/index.php?curid=36404337

Ground-penetrating radar uses electromagnetic pulses to detect what is under the surface in a way similar to how radar works in other applications. The pulses are reflected off items under the surface and recorded by the detector. It's possible to discover how things are layered beneath the surface because of the differences in reflections.
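As a rough illustration of how a reflection's timing maps to depth, the sketch below uses the standard relation depth = v·t/2, where v is the pulse speed in the ground (the speed of light divided by the square root of the soil's relative permittivity). The function name and the permittivity value in the usage line are assumptions chosen for illustration, not values from any particular survey.

```python
import math

SPEED_OF_LIGHT_M_PER_NS = 0.299792458  # metres per nanosecond in vacuum


def reflector_depth_m(two_way_time_ns: float, relative_permittivity: float) -> float:
    """Estimate the depth of a reflector from a radar echo.

    two_way_time_ns: time for the pulse to travel down and back, in nanoseconds.
    relative_permittivity: assumed dielectric constant of the ground (>= 1).
    """
    if two_way_time_ns < 0:
        raise ValueError("travel time must be non-negative")
    if relative_permittivity < 1:
        raise ValueError("relative permittivity must be at least 1")
    # The pulse travels slower in soil than in vacuum.
    velocity_m_per_ns = SPEED_OF_LIGHT_M_PER_NS / math.sqrt(relative_permittivity)
    # Halve the travel time because the pulse goes down and comes back.
    return velocity_m_per_ns * two_way_time_ns / 2.0


# Hypothetical echo: 20 ns round trip in ground with an assumed permittivity of 9.
print(round(reflector_depth_m(20.0, 9.0), 3))
```

Because different buried materials return echoes at different times and strengths, repeating this calculation along a survey line is what lets the layering be reconstructed.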

By Cargyrak - Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=48685015

Lidar is an optical technique that uses light, usually lasers, to map the land. It can penetrate gaps in foliage, such as forest canopies, allowing features on the ground beneath to be distinguished. It also allows features that are too large to be recognized from the ground to be mapped. Lidar has the additional benefit of being easily integrated into Geographic Information Systems (GIS), integrated computer hardware and software systems used to analyze and visualize geographic data.

24 notes

Text

Infrared heavy-metal-free quantum dots deliver sensitive and fast sensors for eye-safe LIDAR applications

The shortwave infrared (SWIR) frequency regime has unique properties, such as being less affected by atmospheric scattering and being "eye-safe," that make it ideal for several applications. These include Light Detection and Ranging (LIDAR), a method for determining ranges and distances using lasers, space localization and mapping, adverse weather imaging for surveillance and automotive safety, environmental monitoring, and many others. However, SWIR light is currently confined to niche areas, like scientific instrumentation and military use, mainly because SWIR photodetectors rely on expensive and difficult-to-manufacture materials. In the past few years, colloidal quantum dots (solution-processed semiconducting nanocrystals) have emerged as an alternative for mainstream consumer electronics.

10 notes

Text

X950 New Lightweight Industrial Drone Platform

Streamlined Structure, Ingenious Design. Spacious flight control bay, supporting installation of various FC systems. Multiple payload mounts for flexible expansion. Helps you DIY your drone for diverse industrial applications.

✅ Minimalist industrial design — streamlined and compact

✅ Tool-free, quick-detach spiral arms — lightweight and portable

✅ Spacious flight control bay for LiDAR, cameras & more

✅ Large battery bay supports multiple battery types

✅ Multiple mount points for gimbals, lights, speakers & more

2 notes

Text

NASA tests new ways to stick the landing in challenging terrain

Advancing new hazard detection and precision landing technologies to help future space missions successfully achieve safe and soft landings is a critical area of space research and development, particularly for future crewed missions.

To support this, NASA's Space Technology Mission Directorate (STMD) is pursuing a regular cadence of flight testing on a variety of vehicles, helping researchers rapidly advance these critical systems for missions to the moon, Mars, and beyond.

"These flight tests directly address some of NASA's highest-ranked technology needs, or shortfalls, ranging from advanced guidance algorithms and terrain-relative navigation to lidar- and optical-based hazard detection and mapping," said Dr. John M. Carson III, STMD technical integration manager for precision landing, who is based at NASA's Johnson Space Center in Houston.

Since the beginning of this year, STMD has supported flight testing of four precision landing and hazard detection technologies from many sectors, including NASA, universities, and commercial industry. These cutting-edge solutions have flown aboard a suborbital rocket system, a high-speed jet, a helicopter, and a rocket-powered lander testbed. That's four precision landing technologies tested on four different flight vehicles in four months.

"By flight testing these technologies on Earth in spaceflight-relevant trajectories and velocities, we're demonstrating their capabilities and validating them with real data for transitioning technologies from the lab into mission applications," said Dr. Carson. "This work also signals to industry and other partners that these capabilities are ready to push beyond NASA and academia and into the next generation of moon and Mars landers."

The following NASA-supported flight tests took place between February and May:

Identifying landmarks to calculate accurate navigation solutions is a key function of Draper's Multi-Environment Navigator (DMEN), a vision-based navigation and hazard detection technology designed to improve safety and precision of lunar landings.

Aboard Blue Origin's New Shepard reusable suborbital rocket system, DMEN collected real-world data and validated its algorithms to advance it for use during the delivery of three NASA payloads as part of NASA's Commercial Lunar Payload Services (CLPS) initiative. On Feb. 4, DMEN performed the latest in a series of tests supported by NASA's Flight Opportunities program, which is managed at NASA's Armstrong Flight Research Center in Edwards, California.

During the February flight, which enabled testing at rocket speeds on ascent and descent, DMEN scanned the Earth below, identifying landmarks to calculate an accurate navigation solution. The technology achieved accuracy levels that helped Draper advance it for use in terrain-relative navigation, which is a key element of landing on other planets.

Several highly dynamic maneuvers and flight paths put Psionic's Space Navigation Doppler Lidar (PSNDL) to the test while it collected navigation data at various altitudes, velocities, and orientations.

Psionic licensed NASA's Navigation Doppler Lidar technology developed at Langley Research Center in Hampton, Virginia, and created its own miniaturized system with improved functionality and component redundancies, making it more rugged for spaceflight.

In February, PSNDL along with a full navigation sensor suite was mounted aboard an F/A-18 Hornet aircraft and underwent flight testing at NASA Armstrong.

The aircraft followed a variety of flight paths over several days, including a large figure-eight loop and several highly dynamic maneuvers over Death Valley, California. During these flights, PSNDL collected navigation data relevant for lunar and Mars entry and descent.

The high-speed flight tests demonstrated the sensor's accuracy and navigation precision in challenging conditions, helping prepare the technology to land robots and astronauts on the moon and Mars. These recent tests complemented previous Flight Opportunities-supported testing aboard a lander testbed to advance earlier versions of their PSNDL prototypes.

Researchers at NASA's Goddard Space Flight Center in Greenbelt, Maryland, developed a state-of-the-art Hazard Detection Lidar (HDL) sensor system to quickly map the surface from a vehicle descending at high speed to find safe landing sites in challenging locations, such as Europa (one of Jupiter's moons), our own moon, Mars, and other planetary bodies throughout the solar system. The HDL-scanning lidar generates three-dimensional digital elevation maps in real time, processing approximately 15 million laser measurements and mapping two football fields' worth of terrain in only two seconds.

In mid-March, researchers tested the HDL from a helicopter at NASA's Kennedy Space Center in Florida, with flights over a lunar-like test field with rocks and craters. The HDL collected numerous scans from several different altitudes and view angles to simulate a range of landing scenarios, generating real-time maps. Preliminary reviews of the data show excellent performance of the HDL system.

The HDL is a component of NASA's Safe and Precise Landing—Integrated Capabilities Evolution (SPLICE) technology suite. The SPLICE descent and landing system integrates multiple component technologies, such as avionics, sensors, and algorithms, to enable landing in hard-to-reach areas of high scientific interest. The HDL team is also continuing to test and further improve the sensor for future flight opportunities and commercial applications.

Providing pinpoint landing guidance capability with minimum propellant usage, the San Diego State University (SDSU) powered-descent guidance algorithms seek to improve autonomous spacecraft precision landing and hazard avoidance.

During a series of flight tests in April and May, supported by NASA's Flight Opportunities program, the university's software was integrated into Astrobotic's Xodiac suborbital rocket-powered lander via hardware developed by Falcon ExoDynamics as part of NASA TechLeap Prize's Nighttime Precision Landing Challenge.

The SDSU algorithms aim to improve landing capabilities by expanding the flexibility and trajectory-shaping ability and enhancing the propellant efficiency of powered-descent guidance systems. They have the potential for infusion into human and robotic missions to the moon as well as high-mass Mars missions.

By advancing these and other important navigation, precision landing, and hazard detection technologies with frequent flight tests, NASA's Space Technology Mission Directorate is prioritizing safe and successful touchdowns in challenging planetary environments for future space missions.

IMAGE: New Shepard booster lands during the flight test on February 4, 2025. Credit: Blue Origin

2 notes

Text

If you've been wondering when you’ll be able to order the flame-throwing robot that Ohio-based Throwflame first announced last summer, that day has finally arrived. The Thermonator, what Throwflame bills as “the first-ever flamethrower-wielding robot dog” is now available for purchase. The price? $9,420.

Thermonator is a quadruped robot with an ARC flamethrower mounted to its back, fueled by gasoline or napalm. It features a one-hour battery, a 30-foot flame-throwing range, and Wi-Fi and Bluetooth connectivity for remote control through a smartphone.

It also includes a Lidar sensor for mapping and obstacle avoidance, laser sighting, and first-person-view navigation through an onboard camera. The product appears to integrate a version of the Unitree Go2 robot quadruped that retails alone for $1,600 in its base configuration.

The company lists possible applications of the new robot as "wildfire control and prevention," "agricultural management," "ecological conservation," "snow and ice removal," and "entertainment and SFX." But most of all, it sets things on fire in a variety of real-world scenarios.

Back in 2018, Elon Musk made the news for offering an official Boring Company flamethrower that reportedly sold 10,000 units in 48 hours. It sparked some controversy, because flamethrowers can also double as weapons or potentially start wildfires.

Flamethrowers are not specifically regulated in 48 US states, although general product liability and criminal laws may still apply to their use and sale. They are not considered firearms by federal agencies. Specific restrictions exist in Maryland, where flamethrowers require a Federal Firearms License to own, and California, where the range of flamethrowers cannot exceed 10 feet.

Even so, to state the obvious, flamethrowers can easily burn both things and people, starting fires and wreaking havoc if not used safely. Accordingly, the Thermonator might be one Christmas present you should skip for little Johnny this year.

12 notes

Text

3D Laser Scanning – Types | Benefits | Applications

3D Laser Scanning

3D laser scanning techniques have been developed since the end of the 1990s for 3D digital measurement, documentation, and visualization in several fields, including 3D design in the process industry and documentation and surveying in architecture and infrastructure. Using a 3D laser scanner, a tunnel or underground construction can be digitized in 3D at high scanning speed and with resolution down to the millimeter level.

The scanning data consists not only of XYZ coordinates but also of high-resolution images, either grayscale (with reflected-intensity data) or color (with RGB data), and can be transformed into a global coordinate system by a control survey. Any rock engineering object can therefore be quickly recorded in its as-built state, in 3D digital and visual form in a real coordinate system, which opens up applications in 3D measurement, documentation, and visualization with high resolution and accuracy.

In modern engineering, the term ‘laser scanning’ means the controlled steering of laser beams, with a distance measurement taken in each direction. This method, often called 3D object scanning or 3D laser scanning, is used to rapidly capture the shapes of objects, buildings, and landscapes.

What is 3D Laser Scanning?

3D laser scanning is a non-destructive, non-contact method of capturing data that can be used for rapid and accurate creation of three-dimensional files for archiving and digital manipulation. A 3D laser scanner emits a narrow laser beam that hits a target object, gathering millions of closely spaced measurements in a matter of minutes. These measurements are grouped into compressed point cloud databases, which can be processed to generate a dense 3D representation of the object.

3D Scanners Bridging Physical and Digital Worlds

3D scanners are tri-dimensional measurement devices used to capture real-world objects or environments so that they can be remodeled or analyzed in the digital world. The latest generation of 3D scanners do not require contact with the physical object being captured.

3D scanners can be used to get complete or partial 3D measurements of any physical object. The majority of these devices generate points or measures of extremely high density when compared to traditional “point-by-point” measurement devices.

How Does 3D Scanning Work?

Scanning results are represented using free-form, unstructured three-dimensional data, usually in the form of a point cloud or a triangle mesh. Certain types of scanners also acquire color information for applications where this is important. Images/scans are brought into a common reference system, where data is merged into a complete model. This process — called alignment or registration — can be performed during the scan itself or as a post-processing step.
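The alignment step can be sketched as applying a rigid transform to each scan so that all points share one reference system, then concatenating them. This is a minimal sketch that assumes the transform (a rotation about the vertical axis plus a translation) is already known; real registration software estimates it, for example from survey targets or by iterative matching. The names `register_scan` and `merge_scans` are illustrative, not from any scanner vendor's API.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


def register_scan(points: Iterable[Point], yaw_deg: float,
                  translation: Point) -> List[Point]:
    """Rotate points about the Z axis by yaw_deg, then shift by translation."""
    yaw = math.radians(yaw_deg)
    cos_yaw, sin_yaw = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    return [
        (cos_yaw * x - sin_yaw * y + tx,
         sin_yaw * x + cos_yaw * y + ty,
         z + tz)
        for x, y, z in points
    ]


def merge_scans(scans: Iterable[List[Point]]) -> List[Point]:
    """Concatenate already-registered scans into one point cloud."""
    merged: List[Point] = []
    for scan in scans:
        merged.extend(scan)
    return merged


# Two hypothetical scans: the second was taken 10 m east, rotated 90 degrees.
scan_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
scan_b_local = [(1.0, 0.0, 0.0)]
scan_b = register_scan(scan_b_local, yaw_deg=90.0, translation=(10.0, 0.0, 2.0))
cloud = merge_scans([scan_a, scan_b])
print(len(cloud))
```

A full six-degree-of-freedom registration would use a 3×3 rotation matrix instead of a single yaw angle; the single-axis case is used here only to keep the example short.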

Computer software can be used to clean up the scan data, filling holes, correcting errors and improving data quality. The resulting triangle mesh is typically exported as an STL (STereoLithography or Standard Tessellation Language) file or converted to Non-uniform Rational B-Spline (NURBS) surfaces for CAD and BIM modeling.

Types of Laser Scanning

1. Airborne Laser Scanning (LiDAR)

Airborne laser scanning (LiDAR, an acronym for ‘Light Detection and Ranging’, also written LIDAR) is a scanning technique for capturing data on the features of, and objects on, the surface of the earth. It is an important data source in environmental studies, since it can map topographic height and the height of objects on the surface with high vertical and horizontal accuracy over large areas. Airborne laser scanning is an active remote sensing technology able to rapidly collect data from vast areas.

2. Terrestrial Laser Scanning

Terrestrial Laser Scanners (TLS) are positioned directly on the ground, or on a platform placed on the ground, and are normally mounted on a tripod. TLS is, in essence, an improved version of the laser tacheometric measurement toolkit (the so-called total station), which is based on the combination of distances and angles measured from a fixed point. Tacheometric laser scanners digitize objects of interest with a frequency of 1000 Hz or higher. Each point is measured by one slope distance and two orthogonal angles. Most TLS are long-range devices. Nowadays, a great variety of TLS is available with different ranges and pulse frequencies.
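The conversion from one measured distance and two angles to an XYZ point is ordinary spherical-to-Cartesian trigonometry. The sketch below assumes a particular convention (horizontal angle counterclockwise from the X axis, vertical angle as elevation above the horizontal plane, scanner at the origin); actual instruments may define their angles differently, so treat the convention and the function name as assumptions.

```python
import math
from typing import Tuple


def polar_to_xyz(slope_distance_m: float, horizontal_deg: float,
                 vertical_deg: float) -> Tuple[float, float, float]:
    """Convert one scanner measurement to Cartesian coordinates.

    slope_distance_m: straight-line distance from scanner to target.
    horizontal_deg: angle in the horizontal plane, from the X axis.
    vertical_deg: elevation angle above the horizontal plane.
    """
    h = math.radians(horizontal_deg)
    v = math.radians(vertical_deg)
    # Project the slope distance onto the horizontal plane first.
    horizontal_distance = slope_distance_m * math.cos(v)
    x = horizontal_distance * math.cos(h)
    y = horizontal_distance * math.sin(h)
    z = slope_distance_m * math.sin(v)
    return (x, y, z)


# Hypothetical measurement: 10 m away, 90 degrees around, level with the scanner.
print(tuple(round(c, 6) for c in polar_to_xyz(10.0, 90.0, 0.0)))
```

Running this conversion for every measurement in a sweep is what turns the raw distance-and-angle stream into a point cloud.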

3. Handheld (portable) Laser Scanning

There has recently been an increase in the application of handheld scanners. Their basic advantage is their portability. Scanners that are attached to light portable stands fall into this category as well, even though they are not ‘handheld’ in the true sense of the word. Primarily used in reverse engineering, they are now also very often employed in the digital documentation of moveable cultural heritage objects.

4. Long- and Short-Range Laser Scanning

Long-range laser scanning is tailored for surveying and monitoring expansive areas or structures. Using high-powered lasers and advanced optics, it covers distances from meters to kilometers (yards to miles). Employing time-of-flight or phase-based technologies, it finds applications in geological surveys, urban planning, infrastructure monitoring, and archaeological site mapping. Short-range laser scanning focuses on high-precision tasks within confined spaces. Covering distances from centimeters to meters (feet to yards), it utilizes structured light or laser triangulation. Widely used in industrial metrology, 3D scanning, quality control, and cultural heritage preservation, it excels in capturing fine details with accuracy.
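The two long-range ranging principles mentioned above reduce to short formulas. A minimal sketch, assuming propagation at the vacuum speed of light and ignoring atmospheric and instrument corrections: time-of-flight uses d = c·t/2, and phase-based ranging uses d = (c / 2f)·(Δφ / 2π), which is only unambiguous within one half-wavelength of the modulation. The function names and sample values are illustrative.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_range_m(round_trip_time_s: float) -> float:
    """Range from the round-trip time of a laser pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def phase_range_m(phase_shift_rad: float, modulation_hz: float) -> float:
    """Range from the phase shift of an amplitude-modulated beam.

    Only valid within the unambiguous interval c / (2 * modulation_hz).
    """
    if modulation_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    if not 0.0 <= phase_shift_rad < 2.0 * math.pi:
        raise ValueError("phase shift must be in [0, 2*pi)")
    unambiguous_range = SPEED_OF_LIGHT_M_PER_S / (2.0 * modulation_hz)
    return unambiguous_range * phase_shift_rad / (2.0 * math.pi)


# Hypothetical readings: a 1 microsecond echo, and a half-cycle shift at 10 MHz.
print(round(tof_range_m(1e-6), 3))
print(round(phase_range_m(math.pi, 10e6), 3))
```

The trade-off this hints at is that phase-based systems resolve small distances finely but wrap around beyond their unambiguous interval, which is one reason time-of-flight is common at the longest ranges.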

3D Scanning File Formats

TZF: This format is a Trimble scan file in a zipped format. The software exports the current project as a folder with:

• One TZF format file per station

• One TCF format file per station if the station has been acquired with images

E57: This is a file format specified by ASTM (American Society for Testing and Materials), an international standards organization. The E57 format supports two types of data: Gridded Data and Non-Gridded Data. Gridded Data is data aligned in regular arrays.

E57 Gridded Files: The software exports the current project as one E57 format file.

E57 Non-Gridded Files: The software exports the current project as one E57 format file.

PTX: This is an ASCII-based scan file format. The software exports the current project as one PTX format file.

LAS, Non-Gridded: This is a public file format for interchanging 3-dimensional point cloud data between users. It is binary-based and has several versions: 1.0, 1.1, 1.2, 1.3 and 1.4. The application exports the current project as one LAS 1.2 format file.

POD, Non-Gridded: The POD (Point Database) file format is Bentley Pointools’ native point cloud format. The software exports the current project as one POD format file. Points, color, intensity and normal (if available) information are exported.

RCP: This format is a project file for ReCap from Autodesk. The software exports the current project as one RCP format file.

TDX: TDX is Trimble Data eXchange file format, commonly used in some Trimble software applications like TBC (Trimble Business Center) or RealWorks. The software exports the information listed below:

• Stations with registration sets

• Created panorama(s)

• Measured points

• Leveling information
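Since LAS exists in several versions, it can be useful to check which one an exported file actually uses. The sketch below reads the version from the start of the LAS public header block, where the file begins with the signature `LASF` and the major and minor version numbers are single bytes at offsets 24 and 25. The function name is illustrative and the file path in the usage note is hypothetical.

```python
import struct
from typing import Tuple


def las_version(header: bytes) -> Tuple[int, int]:
    """Return (major, minor) from the first bytes of a LAS file.

    Expects at least the first 26 bytes of the public header block.
    """
    if len(header) < 26:
        raise ValueError("need at least the first 26 bytes of the file")
    if header[:4] != b"LASF":
        raise ValueError("not a LAS file: missing 'LASF' signature")
    # Version major and minor are unsigned bytes at offsets 24 and 25.
    major, minor = struct.unpack_from("<BB", header, 24)
    return major, minor


# Build a fake 26-byte header for demonstration instead of reading a real file.
fake_header = b"LASF" + bytes(20) + bytes([1, 2])
print(las_version(fake_header))
```

With a real export, the same function could be called as `las_version(open("project.las", "rb").read(26))`, where `project.las` stands in for whatever file the software produced.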

Benefits of 3D Laser Scanning

3D laser scanning has become an indispensable tool across many industries due to its ability to capture highly detailed and accurate 3D data. Here are some of the key benefits of 3D laser scanning:

High Accuracy

Laser scanning provides extremely accurate measurements, making it effective for applications where precision is critical, such as engineering, construction, and product manufacturing.

Rapid Data Capture

Laser scanners can quickly collect a large amount of data, reducing the risk associated with scanning high buildings and improving field staff safety.

Non-contact Technology

Laser scanning is noninvasive and doesn’t require physical contact with the object or environment being scanned, making it ideal for fragile, hazardous, or hard-to-reach locations.

Comprehensive Documentation

Laser scanning creates detailed and comprehensive digital records of objects, buildings, or landscapes, which are invaluable for preservation and historical archiving.

Visualization

Data from 3D laser scans can be used to create highly realistic visualizations, aiding in the design, analysis, and communication of complex structures and spaces.

Clash Detection

When integrated with building information modeling (BIM), laser scanning helps identify clashes between design plans and existing structures, reducing costly construction errors.

Applications of 3D Laser Scanning

3D laser scanning is used in numerous applications: industrial, architectural, civil surveying, urban topography, reverse engineering, and mechanical dimensional inspection are just a few. The technology allows for high-resolution and dramatically faster 3D digitizing than other conventional metrology technologies and techniques. Some very exciting applications are in animation and virtual reality.

1. Construction Industry and Civil Engineering

a. As-built drawings of bridges, industrial plants and monuments

b. Documentation of historical sites

c. Site modelling and layout

d. Quality control

e. Quantity surveys

f. Freeway redesign

g. Establishing a benchmark of pre-existing shape/state in order to detect structural changes resulting from exposure to extreme loadings such as earthquake, vessel/truck impact or fire

h. Creating GIS (Geographic Information System) maps and geomatics

2. Reverse Engineering

Reverse engineering refers to the ability to reproduce the shape of an existing object. It is based on creating a digitized version of objects or surfaces, which can later be turned into molds or dies. It is a very common procedure with diverse applications in various industries. Non-contact 3D laser scanning allows even malleable objects to be scanned in a matter of minutes without compression, which could change their dimensions or damage their surfaces. Parts and models of all sizes and shapes can be quickly and accurately captured.

3D laser scanning for reverse engineering provides excellent accuracy and helps get products to market more quickly, with lower development and engineering costs. It provides a fast, accurate, and automated way to acquire 3D digital data and a CAD and BIM model of a part’s geometry when none is available. New features and updates can also be integrated into old parts once the modeling is complete.

A practical mechanical and civil engineering application is assisting in the production of "as-built" data and documentation. Currently, many manufacturing or construction activities are documented by a designer or engineering professional after the actual assembly of a machine or civil project. 3D laser scanners could expedite this activity, reducing the man-hours required to fully document an installation for legacy purposes.

3. Mechanical Applications

Reverse engineering of a mechanical component requires a precise digital model of the object to be reproduced. Rather than a set of points, a precise digital model can be represented by a polygon mesh, a set of flat or curved NURBS surfaces, or, ideally for mechanical components, a CAD solid model. A 3D scanner can be used to digitize free-form or gradually changing shapes as well as prismatic geometries, whereas a coordinate measuring machine is usually used only to determine simple dimensions of a highly prismatic model. The scanned data points are then processed to create a usable digital model, usually using specialized reverse engineering software.

4. Civil Applications

Civil applications include periodic roadway inspection. The digitized roadway data can be compared with previous 3D scans of the roadway to predict the rate of deterioration. This data can be very helpful in estimating roadway repair or replacement costs. When personnel accessibility and/or safety concerns prevent a standard survey, 3D laser scanning can provide an excellent alternative. 3D laser scanning has been used to perform accurate and efficient as-built surveys, before-and-after construction surveys, and leveling surveys.
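One simple way to turn two roadway scans into a deterioration rate is to average the surface drop at matching points and divide by the elapsed time. This is a minimal sketch that assumes both scans are already registered in the same coordinate system and sampled at the same locations, and that wear is roughly linear over time; the function name and sample values are hypothetical.

```python
from datetime import date
from typing import Sequence


def deterioration_rate_mm_per_year(earlier_mm: Sequence[float],
                                   later_mm: Sequence[float],
                                   earlier_date: date,
                                   later_date: date) -> float:
    """Mean surface drop per year between two scans of the same points.

    earlier_mm, later_mm: surface elevations (mm) at matching sample points.
    """
    if len(earlier_mm) != len(later_mm) or not earlier_mm:
        raise ValueError("scans must be non-empty and the same length")
    years = (later_date - earlier_date).days / 365.25
    if years <= 0:
        raise ValueError("later_date must be after earlier_date")
    # A positive drop means the surface is lower in the later scan.
    mean_drop_mm = sum(a - b for a, b in zip(earlier_mm, later_mm)) / len(earlier_mm)
    return mean_drop_mm / years


# Hypothetical elevations at two sample points, scanned two years apart.
rate = deterioration_rate_mm_per_year(
    [100.0, 100.0], [98.0, 96.0], date(2020, 1, 1), date(2022, 1, 1)
)
print(round(rate, 2))
```

Multiplying the rate by a planning horizon gives a first-order estimate of future wear, which is the kind of input a repair or replacement cost estimate would start from.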

5. Design Process

Uses in the design process include:

a. Increasing accuracy when working with complex parts and shapes

b. Coordinating product design using parts from multiple sources

c. Updating old CD scans with those from more current technology

d. Replacing missing or older parts

e. Creating cost savings by allowing as-built design services, for example in automotive manufacturing plants

f. “Bringing the plant to the engineers” with web-shared scans, saving travel costs

Conclusion

3D laser scanning equipment senses the shape of an object and collects data that defines the location of the object’s outer surface. This distinct technology has found applications in many industries, including discrete and process manufacturing, utilities, and construction. Laser scanning technology has matured over the past two decades to become a leading surveying technology for the acquisition of spatial information.

The high-quality data produced by laser scanners are now used in many of surveying’s specialty fields, including topographic, environmental, and industrial. These data include raw, processed, and edited dense point clouds; digital terrain and surface models; 3D city models; railroad and power line models; and 3D documentation of cultural and historical landmarks. 3D laser scanners have a wide range of applications, from very small objects to wide areas.

#3DLaserScanning#ScantoBIMservices#BIMModelingServices#AirborneLaserScanning#TerrestrialLaserScanning#LongRangeLaserScanning#ShortRangeLaserScanning#BIMServices#Benefitsof3DLaserScanning#Applicationsof3DLaserScanning

1 note

Text

Exploring Photonics and the Role of Photonics Simulation

Photonics is a cutting-edge field of science and engineering focused on the generation, manipulation, and detection of light (photons). From powering high-speed internet connections to enabling precision medical diagnostics, photonics drives innovation across industries. With advancements in photonics simulation, engineers and researchers can now design and optimize complex photonic systems with unparalleled accuracy, paving the way for transformative technologies.

What Is Photonics?

Photonics involves the study and application of photons, the fundamental particles of light. It encompasses the behavior of light across various wavelengths, including visible, infrared, and ultraviolet spectrums. Unlike electronics, which manipulates electrons, photonics harnesses light to transmit, process, and store information.

The applications of photonics span diverse fields, such as telecommunications, healthcare, manufacturing, and even entertainment. Technologies like lasers, optical fibers, and sensors all rely on principles of photonics to function effectively.

Why Is Photonics Important?

Photonics is integral to the modern world for several reasons:

Speed and Efficiency: Optical signals travel at close to the speed of light and carry far more data than electrical signals in copper, making photonics-based systems ideal for high-speed data transmission. Fiber-optic networks, for instance, enable lightning-fast internet and communication.

Miniaturization: Photonics enables the development of compact and efficient systems, such as integrated photonic circuits, which are smaller and more energy-efficient than traditional electronic circuits.

Precision Applications: From laser surgery in healthcare to high-resolution imaging in astronomy, photonics offers unparalleled precision in diverse applications.

The Role of Photonics Simulation

As photonic systems become more complex, designing and optimizing them manually is increasingly challenging. This is where photonics simulation comes into play.

Photonics simulation involves using advanced computational tools to model the behavior of light in photonic systems. It allows engineers to predict system performance, identify potential issues, and fine-tune designs without the need for costly and time-consuming physical prototypes.
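To make the idea of modeling light behavior concrete, here is a minimal sketch of two of the simplest calculations an optical model performs: how much power reflects at a flat interface (the normal-incidence Fresnel formula) and how a ray bends when it crosses that interface (Snell's law). The function names and the example refractive indices (air at 1.0, glass at 1.5) are illustrative assumptions, and real photonics simulation tools solve far richer problems than this.

```python
import math


def normal_incidence_reflectance(n1: float, n2: float) -> float:
    """Fraction of optical power reflected at a flat interface between
    media with refractive indices n1 and n2, for light arriving head-on."""
    return ((n1 - n2) / (n1 + n2)) ** 2


def refraction_angle_deg(n1: float, n2: float, incidence_deg: float):
    """Angle of the transmitted ray from Snell's law, in degrees.

    Returns None when the ray undergoes total internal reflection
    (no transmitted ray exists).
    """
    sine = n1 * math.sin(math.radians(incidence_deg)) / n2
    if abs(sine) > 1.0:
        return None
    return math.degrees(math.asin(sine))


if __name__ == "__main__":
    # Assumed example values: air (n = 1.0) into glass (n = 1.5).
    print(normal_incidence_reflectance(1.0, 1.5))
    print(refraction_angle_deg(1.0, 1.5, 30.0))
    # Glass into air at a steep angle: total internal reflection.
    print(refraction_angle_deg(1.5, 1.0, 60.0))
```

Even this toy model shows why simulation helps before prototyping: changing a material only means changing one number and re-running the calculation.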

Key Applications of Photonics Simulation

Telecommunications: Photonics simulation is crucial for designing optical fibers, waveguides, and integrated photonic circuits that power high-speed data networks. Simulations help optimize signal strength, reduce loss, and enhance overall system efficiency.

Healthcare: In the medical field, photonics simulation aids in the development of imaging systems, laser-based surgical tools, and diagnostic devices. For instance, simulation tools are used to design systems for optical coherence tomography (OCT), a non-invasive imaging technique for detailed internal body scans. Medical device consulting provides expert guidance on the design, development, and regulatory compliance of innovative medical technologies.

Semiconductors and Electronics: Photonics simulation supports the creation of photonic integrated circuits (PICs) that combine optical and electronic components. These circuits are essential for applications in computing, sensing, and communication.

Aerospace and Defense: Photonics simulation enables the design of systems like lidar (Light Detection and Ranging), which is used for navigation and mapping. Simulations ensure these systems are accurate, reliable, and robust for real-world applications. Aerospace consulting offers specialized expertise in designing, analyzing, and optimizing aerospace systems for performance, safety, and innovation.

Energy and Sustainability: Photonics plays a vital role in renewable energy technologies, such as solar cells. Simulation tools help optimize light capture and energy conversion efficiency, making renewable energy more viable and cost-effective. Clean energy consulting provides expert guidance on implementing sustainable energy solutions, optimizing efficiency, and reducing environmental impact.

Benefits of Photonics Simulation

Cost-Efficiency: By identifying potential issues early in the design phase, simulation reduces the need for multiple physical prototypes, saving time and resources.

Precision and Accuracy: Advanced algorithms model light behavior with high accuracy, ensuring designs meet specific performance criteria.

Flexibility: Simulations can model a wide range of photonic phenomena, from simple lenses to complex integrated circuits.

Innovation: Engineers can experiment with new materials, configurations, and designs in a virtual environment, fostering innovation without risk.

Challenges in Photonics Simulation

Despite its advantages, photonics simulation comes with its own set of challenges:

Complexity of Light Behavior: Modeling light interactions with materials and components at nanoscales requires sophisticated algorithms and powerful computational resources.

Integration with Electronics: Photonics systems often need to work seamlessly with electronic components, adding layers of complexity to the simulation process.

Material Limitations: Accurately simulating new or unconventional materials can be challenging due to limited data or untested behavior.

The Future of Photonics and Photonics Simulation

Photonics is at the forefront of technological innovation, with emerging trends that promise to reshape industries. Some of these trends include:

Quantum Photonics: Leveraging quantum properties of light for applications in secure communication, advanced sensing, and quantum computing.

Silicon Photonics: Integrating photonics with silicon-based technologies for cost-effective and scalable solutions in telecommunications and computing.

Artificial Intelligence (AI) in Photonics: Using AI algorithms to enhance photonics simulation, enabling faster and more accurate designs.

Biophotonics: Exploring the interaction of light with biological systems to advance healthcare and life sciences.

As photonics continues to evolve, the role of simulation will only grow in importance. Advanced simulation tools will empower engineers to push the boundaries of what is possible, enabling innovations that improve lives and drive progress.

Conclusion

Photonics and photonics simulation are shaping the future of technology, offering solutions that are faster, more efficient, and precise. By harnessing the power of light, photonics is revolutionizing industries, from healthcare to telecommunications and beyond. With the aid of simulation tools, engineers can design and optimize photonic systems to meet the challenges of today and tomorrow. As this exciting field continues to advance, its impact on society will be nothing short of transformative.

2 notes

Text

Video as a Sensor Market Report: Opportunities, Challenges & Projections

Accelerating Intelligence: The Rise of Video as a Sensor Technology

We are witnessing a transformation in how machines perceive the world. The global Video as a Sensor (VaaS) market is advancing rapidly, driven by breakthroughs in edge computing, machine learning, and real-time video analytics. VaaS is no longer confined to traditional video surveillance; it now serves as a dynamic, sensor-based system for intelligent decision-making across diverse industries. From optimizing urban traffic to enabling autonomous navigation, VaaS is a foundational layer of next-generation intelligent infrastructure.

By 2031, the Video as a Sensor market is projected to soar to USD 101.91 billion, growing at a robust CAGR of 8%, up from USD 69.72 billion in 2023. This trajectory is fueled by the demand for automation, real-time analytics, and safer environments.
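The growth figures above can be sanity-checked with the standard compound annual growth rate formula. The sketch below is only an arithmetic check, with hypothetical function names; it assumes the 2023 and 2031 values are the endpoints of an eight-year window. Under that assumption the two endpoint values imply a rate of roughly 4.9% per year, while compounding 8% from the 2023 base would give a noticeably larger 2031 figure, so the report may be using a different base year or forecast window.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, annual_rate: float, years: int) -> float:
    """Value reached after compounding annual_rate for the given years."""
    return start_value * (1.0 + annual_rate) ** years


if __name__ == "__main__":
    start_2023 = 69.72   # USD billion, as quoted in the text
    end_2031 = 101.91    # USD billion, as quoted in the text
    years = 2031 - 2023  # assumed forecast window

    print(f"Implied CAGR: {implied_cagr(start_2023, end_2031, years):.2%}")
    print(f"2031 value at 8% CAGR: {project(start_2023, 0.08, years):.2f}")
```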

Request Sample Report PDF (including TOC, Graphs & Tables): https://www.statsandresearch.com/request-sample/40562-global-video-as-a-sensor-market

Intelligent Video Analytics: Enabling Real-Time Situational Awareness

VaaS leverages video streams as rich data sources. Through embedded AI algorithms, these systems detect and analyze objects, behaviors, and environments—eliminating the need for additional sensor hardware. This shift towards intelligent visual perception is enhancing operations in mission-critical industries such as:

Public Safety: Automated threat recognition and proactive alert systems.

Retail: Heat mapping, customer journey tracking, and loss prevention.

Healthcare: Patient monitoring, anomaly detection, and contactless diagnostics.

Transportation: Traffic flow optimization, vehicle classification, and pedestrian safety.

Manufacturing: Equipment monitoring, quality inspection, and workplace safety.
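As a rough illustration of how a video stream can act as a sensor without extra hardware, the sketch below flags motion by comparing two consecutive grayscale frames pixel by pixel. This is classical frame differencing, a far simpler technique than the embedded AI algorithms described above, and it is shown only to convey the idea. The frame representation (lists of rows of 0-255 intensities), the function names, and the threshold values are all assumptions.

```python
def changed_fraction(prev_frame, curr_frame, pixel_threshold=25):
    """Fraction of pixels whose intensity changed by more than
    pixel_threshold between two equally sized grayscale frames."""
    total = 0
    changed = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for before, after in zip(prev_row, curr_row):
            total += 1
            if abs(before - after) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def motion_detected(prev_frame, curr_frame,
                    pixel_threshold=25, min_fraction=0.05):
    """True when enough pixels changed to count as motion."""
    fraction = changed_fraction(prev_frame, curr_frame, pixel_threshold)
    return fraction >= min_fraction


if __name__ == "__main__":
    # Two tiny 4x4 example frames: an empty scene, then a bright object.
    empty = [[0] * 4 for _ in range(4)]
    with_object = [row[:] for row in empty]
    with_object[1][1] = 200
    with_object[1][2] = 200

    print(changed_fraction(empty, with_object))
    print(motion_detected(empty, with_object))
    print(motion_detected(empty, empty))
```

A production system would replace the thresholding step with a trained detector, but the input and output have the same shape: frames go in, an event signal comes out.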

Get up to 30%-40% Discount: https://www.statsandresearch.com/check-discount/40562-global-video-as-a-sensor-market

Video as a Sensor Market Segmentation and Strategic Insights

By Type: Standalone vs. Integrated Video Sensors

Standalone Video Sensors offer edge-based intelligence, allowing immediate processing without dependence on centralized systems. Their advantages include:

Reduced latency

Lower bandwidth usage

Enhanced privacy

Ideal for retail stores, small-scale surveillance, and localized analytics

Integrated Video Sensors incorporate multi-modal data inputs. They combine visual data with thermal, motion, acoustic, and even LiDAR sensors to provide a more comprehensive picture. Applications include:

Autonomous Vehicles: Real-time fusion of vision and radar data

Industrial Automation: Hazard detection and predictive maintenance

Smart Cities: Integrated environment and crowd monitoring

By Material: Components Driving Performance and Durability

High-performance materials play a pivotal role in ensuring the reliability and longevity of video sensors. The key components include:

Camera Lenses: Engineered from precision optical glass or polycarbonate for clarity and high zoom capabilities.

Semiconductor Materials: CMOS sensors dominate due to their power efficiency and speed, supporting high-frame-rate video and integration with AI accelerators.

Plastic & Metal Casings: Rugged enclosures designed for outdoor and industrial environments, supporting IP67/IP68 ratings and thermal regulation.

These innovations not only enhance video quality but also reduce device footprint and operational costs.

By End-User: Sector-Wide Transformation Through Video as a Sensor

1. Security and Surveillance

Automatic license plate recognition (ALPR)

Biometric identification (face, gait, posture)

Perimeter breach detection

Crowd density analysis

2. Retail

Queue management systems

Shopper intent prediction

Stock-out alerts and planogram compliance

Behavioral segmentation

3. Automotive

Adaptive cruise control and lane-keeping

360-degree situational awareness

Driver monitoring systems (DMS)

Smart parking automation

4. Healthcare

Non-intrusive patient surveillance

Elderly fall detection

Remote surgery and diagnostic imaging

Infection control via contact tracing

5. Smart Cities

Traffic light optimization

Illegal dumping and graffiti detection

Air quality monitoring via visual indicators

Emergency response coordination

Regional Video as a Sensor Market Outlook: A Global Wave of Adoption

North America

With a mature tech ecosystem and strong surveillance infrastructure, North America remains a leader in adopting advanced VaaS systems, especially for homeland security, smart policing, and industrial automation.

Asia-Pacific

Rapid urbanization and significant investments in smart city projects across China, Japan, and India position this region as the fastest-growing VaaS market. Automotive and manufacturing sectors serve as major adoption verticals.

Europe

Driven by stringent GDPR compliance and sustainability goals, Europe emphasizes privacy-focused AI video analytics. Intelligent transportation systems (ITS) and energy-efficient smart buildings are driving demand.

Middle East and Africa

Massive infrastructure initiatives and security upgrades are propelling demand. VaaS is gaining traction in oil facilities, public safety, and tourism hubs.

South America

Emerging VaaS applications in agriculture (precision farming), logistics, and crime detection are gaining momentum as governments and enterprises modernize legacy systems.

Competitive Landscape: Leaders in Video Intelligence

The competitive environment is shaped by innovation in AI chips, edge processors, and scalable cloud architectures. Major players include:

Hikvision – AI-powered surveillance and edge computing

Bosch Security Systems – Integrated security platforms

Axis Communications – Smart network cameras with in-built analytics

Honeywell International – Industrial-grade video intelligence

FLIR Systems – Thermal and multi-sensor fusion

Sony & Samsung Electronics – High-resolution CMOS sensors

Qualcomm, Intel, NVIDIA – AI chipsets and embedded vision

Google Cloud & AWS – VaaS via scalable, cloud-native platforms

Smaller innovators and startups are also disrupting the field with niche capabilities in facial recognition, retail analytics, and edge-AI chipsets.

Future Outlook: Pathways to Intelligent Automation

The future of the Video as a Sensor market is shaped by convergence and miniaturization. We anticipate:

Edge-AI Proliferation: Microprocessors integrated directly into cameras

5G-Enabled Real-Time Processing: Enabling ultra-low latency video transmission

Privacy-Preserving AI: Federated learning and on-device encryption

Autonomous Monitoring: Drones and mobile robots using vision as their primary sense

These trends position video as not just a sensor but as a strategic tool for perception, prediction, and control in an increasingly automated world.

Purchase Exclusive Report: https://www.statsandresearch.com/enquire-before/40562-global-video-as-a-sensor-market

Conclusion

The evolution of Video as a Sensor technology is redefining how industries sense, interpret, and act. As AI-driven video analysis becomes a core enabler of digital transformation, the Video as a Sensor market is poised to be one of the most impactful sectors of the coming decade. Enterprises and governments that invest early in scalable, intelligent video infrastructure will gain unprecedented advantages in efficiency, security, and operational agility.

Our Services:

On-Demand Reports: https://www.statsandresearch.com/on-demand-reports

Subscription Plans: https://www.statsandresearch.com/subscription-plans

Consulting Services: https://www.statsandresearch.com/consulting-services

ESG Solutions: https://www.statsandresearch.com/esg-solutions

Contact Us:

Stats and Research

Email: [email protected]

Phone: +91 8530698844

Website: https://www.statsandresearch.com

1 note

Text

UAVISUALS: Leading Australia’s Drone Inspection and Data Solutions with Precision and Innovation

Industries across Australia are transforming through the power of drone technology, and UAVISUALS stands at the forefront of this revolution. UAVISUALS offers advanced drone inspections and data solutions to enhance safety, efficiency, and data accuracy across multiple sectors. From construction and energy to environmental monitoring, UAVISUALS empowers industries to leverage aerial data insights that drive better decision-making and operational excellence.

Rethinking Inspections with Drone Technology

Traditional inspection methods are often labor-intensive, costly, and risky, requiring personnel to access difficult or dangerous locations. UAVISUALS redefines this process with drone technology, providing safe, efficient, and detailed inspections without the need for scaffolding, cranes, or extensive downtime. Licensed by the Civil Aviation Safety Authority (CASA), UAVISUALS brings a high standard of safety and regulatory compliance to every mission.

With drones equipped with high-resolution cameras, UAVISUALS captures precise visuals of assets such as power lines, towers, bridges, and rooftops, identifying potential issues before they escalate into costly repairs or operational interruptions. This proactive approach enables companies to conduct timely maintenance, reduce costs, and extend the life of their assets.

Comprehensive Data Solutions Across Industries

UAVISUALS is not just an inspection provider; it’s a full-spectrum data solutions partner. The company offers specialized services tailored to meet the diverse needs of Australia’s industries, including:

Asset and Infrastructure Inspections: UAVISUALS conducts thorough inspections on a wide range of industrial assets, capturing data from hard-to-reach places with exceptional clarity. Their drones can identify issues like corrosion, structural defects, and equipment wear, helping clients address maintenance needs efficiently.

3D Mapping and Topographic Surveys: Using photogrammetry and LiDAR, UAVISUALS generates accurate 3D models and maps, ideal for construction planning, urban development, and land management. These models provide essential measurements and an overall view that enhances precision for project managers, architects, and engineers.

Thermal Imaging and Fault Detection: UAVISUALS’ thermal drones detect temperature anomalies that signal equipment malfunctions or energy inefficiencies, making them invaluable for industries such as renewable energy, utilities, and manufacturing. By spotting issues early, clients can implement corrective actions, reducing risks and avoiding potential failures.

Environmental Monitoring: For agricultural and environmental applications, UAVISUALS offers drone solutions that monitor vegetation health, water quality, and land use. These insights are crucial for sustainable farming practices, conservation efforts, and ecological studies.

Empowering Australian Businesses with Actionable Insights

At UAVISUALS, the goal is not just to collect data but to provide clients with insights they can act on. The UAVISUALS team consists of experienced drone operators, data analysts, and industry experts who turn raw data into meaningful reports and recommendations. Whether it’s helping clients plan maintenance, optimize resource usage, or monitor environmental impacts, UAVISUALS delivers actionable insights that drive smarter, more informed decisions.

Their services streamline processes, save time, and enhance safety, allowing clients to focus on core operations while UAVISUALS handles data capture and analysis. From routine inspections to complex surveys, UAVISUALS’ solutions make data accessible, understandable, and highly relevant to each client’s objectives.

Pioneering the Future of Drone Technology in Australia

As demand for drone technology grows, UAVISUALS remains committed to staying at the cutting edge. The company continually invests in the latest drone models, advanced imaging technology, and data processing tools to ensure clients receive the best solutions available. This dedication to innovation positions UAVISUALS as a leader in the evolving drone services industry, ready to meet the changing needs of Australian businesses.

For companies looking to integrate advanced data solutions, UAVISUALS offers a partnership that combines technological excellence with practical expertise. By transforming how businesses approach inspections, mapping, and monitoring, UAVISUALS enables a safer, smarter, and more sustainable future across Australia.

2 notes

Text

LiDAR vs. Photogrammetry: Best Survey Tech for Projects

Introduction: Choosing between LiDAR and photogrammetry for a land survey is a difficult decision in many industries. Selecting the wrong aerial survey technique can lead to project failure, expensive delays, and erroneous data. Aerial surveying, the acquisition of accurate data about the Earth's surface from an overhead viewpoint, is a crucial procedure in domains such as environmental science, forestry, urban planning, and mapping. The field is dominated by two key technologies: photogrammetry and light detection and ranging (LiDAR). Each technique has distinct advantages and disadvantages that make it better suited to some applications than others.

Understanding LiDAR and Photogrammetry

LiDAR stands for Light Detection and Ranging. It is a remote sensing technique that measures variable distances to the Earth using light in the form of a pulsed laser. Combined with other data recorded by the airborne system, these light pulses produce precise three-dimensional information about the Earth's shape and surface characteristics.

Photogrammetry is the art and science of measuring, recording, and interpreting photographic images, patterns of recorded electromagnetic radiant energy, and other phenomena to obtain reliable information about physical objects and the surrounding environment.

1. The challenge lies in balancing accuracy and resolution

LiDAR: Generates high-resolution, highly accurate 3D models of the target region. It can map ground features with vertical accuracy as fine as 5 cm and horizontal accuracy of roughly 10 cm. It is especially good at penetrating foliage.

Photogrammetry: Offers somewhat lower accuracy and resolution than LiDAR. Ambient conditions during the survey and camera quality have a substantial impact on accuracy. Vertical accuracy typically ranges from 15 to 30 cm, and horizontal accuracy from 20 to 40 cm.
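The accuracy figures quoted above can be turned into a quick screening check for a project's tolerance. The sketch below is only illustrative: the table copies the numbers from this post, the function and variable names are hypothetical, and it conservatively uses the coarse end of each range. Real-world accuracy depends on the sensor, flight altitude, and ground control, so treat the output as a first filter rather than a specification.

```python
# Accuracy ranges in centimetres (best, worst), copied from the text above.
# LiDAR is quoted as a single figure, so best and worst are the same here.
TYPICAL_ACCURACY_CM = {
    "LiDAR": {"vertical": (5.0, 5.0), "horizontal": (10.0, 10.0)},
    "Photogrammetry": {"vertical": (15.0, 30.0), "horizontal": (20.0, 40.0)},
}


def meets_tolerance(method: str, vertical_cm: float, horizontal_cm: float) -> bool:
    """True if the method's worst quoted accuracy is within both tolerances."""
    accuracy = TYPICAL_ACCURACY_CM[method]
    worst_vertical = accuracy["vertical"][1]
    worst_horizontal = accuracy["horizontal"][1]
    return worst_vertical <= vertical_cm and worst_horizontal <= horizontal_cm


def candidate_methods(vertical_cm: float, horizontal_cm: float) -> list:
    """Methods whose quoted accuracy satisfies the required tolerances."""
    return [
        method
        for method in TYPICAL_ACCURACY_CM
        if meets_tolerance(method, vertical_cm, horizontal_cm)
    ]


if __name__ == "__main__":
    # A tight tolerance leaves only LiDAR; a looser one admits both.
    print(candidate_methods(vertical_cm=20.0, horizontal_cm=25.0))
    print(candidate_methods(vertical_cm=30.0, horizontal_cm=40.0))
```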

2. Issues revolving around cost-effectiveness and the availability of suitable equipment

LiDAR: Typically more costly because of the advanced hardware and technology needed. Compared to photogrammetry, a LiDAR system may require a much larger upfront investment.

Photogrammetry: More economical, particularly for simpler or smaller-scale tasks. It can be carried out with less expensive equipment and standard cameras installed on drones or airplanes.

3. Challenges related to time optimization

LiDAR: LiDAR is highly effective at quickly covering large areas, particularly in regions with dense vegetation, as it can penetrate canopy cover and deliver accurate ground data.

Photogrammetry: Surveying time varies based on the project's size and the level of detail needed in the images. It can be slower than LiDAR, especially in areas with complex topographies or dense vegetation.

Read our blog for more details: https://www.gsourcedata.com/blog/lidar-vs-photogrammetry

#gsourcetechnologies#architecturedesign#engineeringdesign#lidarservices#photogrammetry#photogrammetryservices#engineeringservices#lidar technology#land survey

2 notes

Text

samli drones

Precision Agriculture: Drones offer real-time crop monitoring and efficient use of water, fertilizers, and pesticides.

Advanced Drone Capabilities: High-resolution cameras, LiDAR, and AI-driven automation make farming smarter.

Government Support: Global incentives and grants are making drones more accessible to farmers.

Sustainable Practices: Drones reduce environmental impact and boost yields while conserving resources.

Expanding Applications: From crop spraying to livestock monitoring and irrigation management, drones are transforming farming.

Store link: https://samliglobal.en.alibaba.com/

Zhongshan Samli Drones Co. Ltd

CONTACT US

WhatsApp: +86 152 1870 3002

WeChat: Samli 2022

Email: [email protected]

#agriculturedrone#technology#agriculture#sprayingdrone#agritech#agropecuaria#precisionagriculture#hybrid drone#uav#battery

1 note

Link

A new, higher-resolution infrared camera outfitted with a variety of lightweight filters could probe sunlight reflected off Earth’s upper atmosphere and surface, improve forest fire warnings, and reveal the molecular composition of other planets.

The cameras use sensitive, high-resolution strained-layer superlattice sensors, initially developed at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, using IRAD, Internal Research and Development funding. Their compact construction, low mass, and adaptability enable engineers like Tilak Hewagama to adapt them to the needs of a variety of sciences.

Image caption: Goddard engineer Murzy Jhabvala holds the heart of his Compact Thermal Imager camera technology, a high-resolution, high-spectral range infrared sensor suitable for small satellites and missions to other solar-system objects.

“Attaching filters directly to the detector eliminates the substantial mass of traditional lens and filter systems,” Hewagama said. “This allows a low-mass instrument with a compact focal plane which can now be chilled for infrared detection using smaller, more efficient coolers. Smaller satellites and missions can benefit from their resolution and accuracy.”

Engineer Murzy Jhabvala led the initial sensor development at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, as well as leading today’s filter integration efforts. Jhabvala also led the Compact Thermal Imager experiment on the International Space Station that demonstrated how the new sensor technology could survive in space while proving a major success for Earth science. More than 15 million images captured in two infrared bands earned the inventors, Jhabvala and NASA Goddard colleagues Don Jennings and Compton Tucker, an agency Invention of the Year award for 2021.

Image caption: The Compact Thermal Imager captured unusually severe fires in Australia from its perch on the International Space Station in 2019 and 2020. With its high resolution, it detected the shape and location of fire fronts and how far they were from settled areas, information critically important to first responders. Credit: NASA

Data from the test provided detailed information about wildfires and a better understanding of the vertical structure of Earth’s clouds and atmosphere, and captured an updraft caused by wind lifting off Earth’s land features, called a gravity wave.

The groundbreaking infrared sensors use layers of repeating molecular structures to interact with individual photons, or units of light. The sensors resolve more wavelengths of infrared at a higher resolution: 260 feet (80 meters) per pixel from orbit compared to 1,000 to 3,000 feet (375 to 1,000 meters) possible with current thermal cameras.

The success of these heat-measuring cameras has drawn investments from NASA’s Earth Science Technology Office (ESTO), Small Business Innovation and Research, and other programs to further customize their reach and applications.

Jhabvala and NASA’s Advanced Land Imaging Thermal IR Sensor (ALTIRS) team are developing a six-band version for this year’s LiDAR, Hyperspectral, & Thermal Imager (G-LiHT) airborne project. This first-of-its-kind camera will measure surface heat and enable pollution monitoring and fire observations at high frame rates, he said.

NASA Goddard Earth scientist Doug Morton leads an ESTO project developing a Compact Fire Imager (CFI) for wildfire detection and prediction.

“We’re not going to see fewer fires, so we’re trying to understand how fires release energy over their life cycle,” Morton said. “This will help us better understand the new nature of fires in an increasingly flammable world.”

CFI will monitor both the hottest fires, which release more greenhouse gases, and cooler, smoldering coals and ashes, which produce more carbon monoxide and airborne particles like smoke and ash.

“Those are key ingredients when it comes to safety and understanding the greenhouse gases released by burning,” Morton said.

After they test the fire imager on airborne campaigns, Morton’s team envisions outfitting a fleet of 10 small satellites to provide global information about fires with more images per day.

Combined with next generation computer models, he said, “this information can help the forest service and other firefighting agencies prevent fires, improve safety for firefighters on the front lines, and protect the life and property of those living in the path of fires.”

Probing Clouds on Earth and Beyond

Outfitted with polarization filters, the sensor could measure how ice particles in Earth’s upper atmosphere clouds scatter and polarize light, NASA Goddard Earth scientist Dong Wu said.

This application would complement NASA’s PACE (Plankton, Aerosol, Cloud, ocean Ecosystem) mission, Wu said, which revealed its first light images earlier this month. Both measure the polarization of light (the orientation of the light wave relative to its direction of travel) from different parts of the infrared spectrum.

“The PACE polarimeters monitor visible and shortwave-infrared light,” he explained. “The mission will focus on aerosol and ocean color sciences from daytime observations. At mid- and long-infrared wavelengths, the new Infrared polarimeter would capture cloud and surface properties from both day and night observations.”

In another effort, Hewagama is working with Jhabvala and Jennings to incorporate linear variable filters, which provide even greater detail within the infrared spectrum. The filters reveal atmospheric molecules’ rotation and vibration as well as Earth’s surface composition.

That technology could also benefit missions to rocky planets, comets, and asteroids, planetary scientist Carrie Anderson said. She said they could identify ice and volatile compounds emitted in enormous plumes from Saturn’s moon Enceladus.

“They are essentially geysers of ice,” she said, “which of course are cold, but emit light within the new infrared sensor’s detection limits. Looking at the plumes against the backdrop of the Sun would allow us to identify their composition and vertical distribution very clearly.”

By Karl B. Hille, NASA’s Goddard Space Flight Center, Greenbelt, Md.

Last Updated May 22, 2024

2 notes

Text

Chemists develop highly reflective black paint to make objects more visible to autonomous cars

Driving at night might be a scary challenge for a new driver, but with hours of practice it soon becomes second nature. For self-driving cars, however, practice may not be enough because the lidar sensors that often act as these vehicles' "eyes" have difficulty detecting dark-colored objects. New research published in ACS Applied Materials & Interfaces describes a highly reflective black paint that could help these cars see dark objects and make autonomous driving safer. Lidar, short for light detection and ranging, is a system used in a variety of applications, including geologic mapping and self-driving vehicles. The system works like echolocation, but instead of emitting sound waves, lidar emits tiny pulses of near-infrared light. The light pulses bounce off objects and back to the sensor, allowing the system to map the 3D environment it's in.
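The echolocation analogy above corresponds to a simple time-of-flight calculation: the sensor times how long a pulse takes to return and halves the round trip to get the distance. The sketch below is a minimal illustration with hypothetical function names; it assumes the pulse travels at the vacuum speed of light and ignores the small correction for air, detector latency, and signal noise.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # vacuum value; air is slightly slower


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the reflecting object, in metres.

    The pulse travels out and back, so the one-way distance is half
    of the total path covered during the round-trip time.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Round-trip time, in seconds, for an object at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A pulse that returns after 200 nanoseconds (assumed example value).
    print(f"{range_from_round_trip(200e-9):.3f} m")
    # Time budget for detecting an object 30 m ahead.
    print(f"{round_trip_for_range(30.0) * 1e9:.1f} ns")
```

The tiny time scales involved are part of why weak returns matter: a dark object that reflects little near-infrared light may not produce a pulse strong enough to be timed at all.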

Read more.

12 notes
