#Llama 3.3 70B

Text

LLaMA 3.3 70B Multilingual AI Model Redefines Performance

Overview of Llama 3.3 70B

Llama 3.3 is a 70B instruction-tuned model that performs better than Llama 3.1 and 3.2 in text-only applications. In some cases, Llama 3.3 70B matches the performance of the much larger Llama 3.1 405B.

The Meta Llama 3.3 multilingual large language model (LLM) is a pretrained and instruction-tuned generative model offered in a 70B size. The instruction-tuned, text-only Llama 3.3 70B model is optimised for multilingual dialogue and outperforms many open-source and closed chat models on commonly used industry benchmarks.

Which languages does Llama 3.3 support?

Sources say Llama 3.3 70B supports English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

The Meta Llama 3.3 70B multilingual large language model is a pretrained and instruction-tuned generative model optimised for multilingual conversation use cases in the supported languages. Although the model was trained on a broader set of languages, only these eight meet the thresholds for safety and helpfulness.

Developers should not use Llama 3.3 70B to converse in unsupported languages without first fine-tuning the model and adding system controls. Developers may adapt Llama 3.3 for additional languages provided they comply with the Acceptable Use Policy and the Llama 3.3 Community License and ensure safe use.
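As a rough illustration of the language restriction described above, an application could check the user's language before sending a request to the model. This is a minimal sketch, not part of Meta's tooling: the two-letter codes are assumed ISO 639-1 equivalents of the eight languages named above, and the function names and route labels are hypothetical.

```python
# Assumed ISO 639-1 codes for the eight languages listed in the post.
SUPPORTED_LANGUAGES = {
    "en": "English",
    "de": "German",
    "fr": "French",
    "it": "Italian",
    "pt": "Portuguese",
    "hi": "Hindi",
    "es": "Spanish",
    "th": "Thai",
}


def is_supported(language_tag: str) -> bool:
    """Return True if the tag's primary subtag is one of the eight languages."""
    # Accept tags such as "pt-BR" or "en_US" by keeping only the primary subtag.
    primary = language_tag.strip().lower().replace("_", "-").split("-")[0]
    return primary in SUPPORTED_LANGUAGES


def route(language_tag: str) -> str:
    """Hypothetical routing decision: labels are placeholders, not real endpoints."""
    if is_supported(language_tag):
        return "send-to-model"
    return "needs-fine-tuning-and-safety-review"


if __name__ == "__main__":
    for tag in ("en", "pt-BR", "ja"):
        print(tag, is_supported(tag), route(tag))
```

A check like this only gates requests. It does not replace the fine-tuning and system controls the post says are needed for other languages.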

New capabilities

This release adds a longer context window, multilingual inputs and outputs, and the option for developers to integrate the model with third-party tools. Building with these new capabilities requires specific considerations in addition to the best practices that apply to all generative AI use cases.

Tool use

As in traditional software development, developers are responsible for integrating the LLM with the tools and services of their choice. They should define a clear policy for their use case and assess the integrity of the third-party services they rely on, so that they understand the safety and security limitations of this capability. The Responsible Use Guide offers best practices for deploying third-party safeguards safely.

Multilinguality

Llama may be able to produce text in languages other than those that meet the performance thresholds for safety and helpfulness. Developers should not use this model to converse in unsupported languages without first fine-tuning it and adding system controls, in line with their own policies and the Responsible Use Guide.

Intended Use

Intended use cases: Llama 3.3 70B is intended for commercial and research use in multiple languages. Pretrained models can be adapted for a variety of natural language generation tasks, whereas instruction-tuned text-only models are intended for assistant-like chat. Llama 3.3 also allows its outputs to be used to improve other models, including through distillation and synthetic data generation. These use cases are permitted by the Llama 3.3 Community License.

Out of scope: Use in any manner that violates applicable laws or regulations, including trade compliance laws. Use in any other way that is prohibited by the Llama 3.3 Community License and Acceptable Use Policy. Use in languages other than those this model card lists as supported.

Note: Llama 3.3 70B has been trained on more languages than the eight it supports. The Acceptable Use Policy and Llama 3.3 Community License allow developers to fine-tune Llama 3.3 models for languages beyond the eight supported ones, provided they ensure that Llama 3.3 is used safely and responsibly in those languages.

#technology#technews#govindhtech#news#technologynews#AI#artificial intelligence#Llama 3.3 70B#Llama 3.3#Llama 3.1 70B

0 notes

Text

The hottest AI models, what they do, and how to use them

#ai#grok 3#o3 mini#deep research#le chat#operator#Gemini 2.0 Pro Experimental#deepseek r1#Llama 3.3 70B#sora#open ai#meta#gemini#anthropic#x.AI#Cohere

0 notes

Text

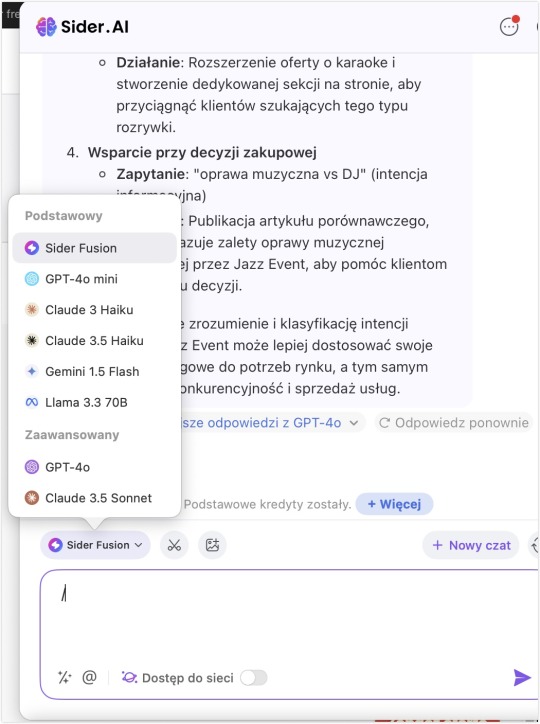

Sider.ai - Check the limits and features of the Sider.ai free plan (December 2024)

How many basic credits do free Sider.ai users currently get?

A free Sider.ai user can use:

30 Basic Credits* per day

10 image queries in total

10 PDF uploads in total, 200 pages per PDF (Chrome extension)

* Basic credits can be used in conversations with these models:

Sider Fusion

GPT-4o mini

Claude 3 Haiku

Claude 3.5 Haiku

Gemini 1.5 Flash

Llama 3.3 70B

The free version of Sider.ai blocks:

internet access during a query (you can only interact with selected text, an uploaded PDF, or an image)

conversations with the latest premium LLM chat models (e.g. ChatGPT-4o, ChatGPT-o1).

Advantages of Sider.ai even in the free version (free plan):

access to various LLMs without having to provide your own API key

availability of Sider.ai on different systems as an app and an extension: - Browser extensions for Safari, Edge, Chrome - iOS app - Android app - Mac app - Windows app

The ability to configure your own Prompts (ready-made queries triggered as shortcuts in the chat with /)

The ability to call a specific LLM in the chat (@)

"A drawback is that chat history cannot be synchronised between platforms and chat content cannot be exported to a file. There is an option to generate a URL that links to a saved chat."

Sources and websites:

"Do you use Sider.ai? Share your own favourite Prompt to configure and trigger in Sider.ai in the comments."

#jazzy_content#free ai tool#free plans#Sider.ai#Sider free plan#Sider ai on mac#Sider.ai Chrome#Sider Fusion#GPT-4o mini#Claude 3 Haiku#Claude 3.5 Haiku#Gemini 1.5 Flash#Llama 3.3 70B#Funkcje Sider ai w darmowym planie#Zalety Sider.ai

1 note

Text

back in march i ran a few safety evaluations (only 200/model cause i’m impatient) on the different LLMs in azure foundry and discovered some interesting things here!

shown: Deepseek-V3 and Llama-3.3-70B-Instruct

now, it’s definitely a little outdated, but learning where different models’ weak spots are can help engineers target them and create stronger filtering and safety systems. thought this would be interesting to share

original repository created for microsoft reactor’s python + AI series, wanted to expand to investigate other LLMs besides gpt4o

(documented on my github too)

2 notes

Text

🟣 Latest AI News Roundup 🏆 Gemini 2.0 🎁 Free access to AI services

1️⃣ Gemini 2.0 2️⃣ ChatGPT Vision 3️⃣ Meta Llama 3.3 70B 4️⃣ Midjourney Patchwork 5️⃣ Google Quantum Willow 6️⃣ Devin is now available 7️⃣ Phi-4 Microsoft’s Small LLM 8️⃣ Sora, text-to-video model

#AINews #TechNews

5 notes

Text

Llama-3.3-70B-Instruct

https://huggingface.co/meta-llama/Llama-3.3-70B-Instruct

2 notes

Quote

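Generative AI often summarizes academic papers incorrectly, and newer models are worse

牡丹堂 (author), パルモ (editor). Published: 2025-06-10 20:00

AI-generated summaries are a very convenient way to keep up with the vast volume of academic research. But a study published in Royal Society Open Science has found serious problems with what those summaries say.

Many AI systems tend to use more sweeping language than the original research and to write summaries as if the findings applied to all people and all situations.

This is called "overgeneralization": for example, rephrasing the result "the treatment was effective in some patients" as "this drug works for everyone."

What is more, the tendency is stronger in newer models, and the study even observed an ironic effect in which the more a model is told to be "accurate," the less accurate it becomes.

Are AI "summaries" of scientific research really accurate?

The study, conducted by Uwe Peters of Utrecht University in the Netherlands and colleagues, was prompted by the fact that summaries from generative AI (chatbots) are now routinely used to follow research trends.

AI is valued because it condenses complex research papers, but important nuance may be lost in the process.

Of particular concern is that findings which properly apply only under certain conditions are being "overgeneralized."

Results confirmed in some people under particular conditions are presented in a way that suggests they have been established for every situation.

Examples of overgeneralization include:

Ignoring the limiting conditions of the study population (e.g. a particular age group, sex, or region)

Recasting observed results as causal relationships (e.g. "is associated with" → "causes")

Changing the impression through wording (e.g. turning past-tense descriptions into the present tense so that they appear universal)

Newer models are more prone to overgeneralize

To test whether this actually happens, the research team evaluated summaries produced by 10 major large language models.

The models included ChatGPT-4o, ChatGPT-4.5, DeepSeek, LLaMA 3.3 70B, and Claude 3.7 Sonnet.

In the experiment, the models were first asked to summarize 300 studies published in academic and medical journals such as Nature, Science, The Lancet, and The New England Journal of Medicine, and the summaries were analyzed for overgeneralization.

The result: most of the AI summaries routinely generalized more broadly than the original text.

Surprisingly, the tendency was stronger in newer models. ChatGPT-4o, LLaMA 3.3, and others were up to 73% more likely to overgeneralize.

Ironically, instructing the AI to "summarize accurately" made things worse. Compared with a plain request to summarize, overgeneralization as much as doubled.

This is said to resemble the way telling a person "don't think about it" makes them think about it all the more.

Human summaries are far more accurate

The study also compared AI summaries with those written by human science writers.

Using articles from the medical publication NEJM Journal Watch, the researchers compared summaries written by AI with those written by experts. The human summaries were far more accurate, with an overgeneralization rate about one-fifth that of the AI.

There is a way to mitigate this flaw to some extent: adjusting parameters through the API to reduce creativity and favor consistency.

However, this setting is often unavailable in ordinary browser-based AI tools, so it is not something everyone can easily do.

The research team also points out that generalization is not always bad. Some simplification is unavoidable when conveying complex research to non-experts.

But too much of it leads to misunderstanding. Care is needed especially in fields such as medicine, where health and lives are at stake.

Getting the most accurate summaries possible from AI

The researchers propose the following measures to improve the accuracy of AI summaries of science:

1. Configure the AI conservatively (settings that keep its wording restrained and the summary faithful to the original study; reducing creativity tends to reduce misunderstandings)

2. Avoid prompts that explicitly demand accuracy (asking it to "summarize accurately" can instead produce assertive, misleading summaries)

3. Choose an AI rated as more faithful, such as Claude (said to produce summaries with less exaggeration than other AIs)

None of these is a perfect fix.

So it may be that we always need to avoid taking what AI says at face value and to think things through for ourselves.

The study was published in Royal Society Open Science (dated April 30, 2025).

References: Generalization bias in large language model summarization of scientific research / AI chatbots often misrepresent scientific studies — and newer models may be worse

This article was edited for Japanese readers based on information from overseas sources.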

Generative AI often summarizes academic papers incorrectly, and newer models are worse | カラパイア
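The quoted article's first two suggestions (lower the model's creativity through API parameters, and avoid prompts that explicitly demand accuracy) can be sketched as a request body. This is only an illustration under assumptions: the field names follow the widely used chat-completions JSON convention, the model name is a placeholder, and the 0 to 2 temperature range is an assumed bound that varies by provider. Nothing here comes from the study itself.

```python
import json


def build_summary_request(paper_text: str,
                          model: str = "example-model",  # placeholder name
                          temperature: float = 0.0) -> dict:
    """Build a chat-completions-style request body for a conservative summary."""
    # Assumed valid range; check the provider's documentation before relying on it.
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature outside the assumed 0-2 range")
    # Plain instruction on purpose: the prompt does not ask the model to be
    # "accurate", following the article's second suggestion.
    prompt = "Summarize the following study:\n\n" + paper_text
    return {
        "model": model,
        "temperature": temperature,  # low value = less sampling randomness
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    body = build_summary_request("The treatment was effective in some patients.")
    print(json.dumps(body, indent=2))
```

The sketch only builds the payload and sends nothing. How much a low temperature reduces overgeneralization in practice is a claim of the article, not something this snippet demonstrates.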

0 notes

Text

"According to a new study published in the Royal Society, as many as 73 percent of seemingly reliable answers from AI chatbots could actually be inaccurate. The collaborative research paper looked at nearly 5,000 large language model (LLM) summaries of scientific studies by ten widely used chatbots, including ChatGPT-4o, ChatGPT-4.5, DeepSeek, and LLaMA 3.3 70B. It found that, even when explicitly goaded into providing the right facts, AI answers lacked key details at a rate of five times that of human-written scientific summaries. "When summarizing scientific texts, LLMs may omit details that limit the scope of research conclusions, leading to generalizations of results broader than warranted by the original study," the researchers wrote." source: https://futurism.com/ai-chatbots-summarizing-research

0 notes

Text

Measuring AI Energy: A Tool Uncovers the Invisible Cost of Chatbots

A new open-source tool, developed by Julien Delavande of Hugging Face, allows real-time energy consumption estimation of messages sent and received by AI-based chatbots, promoting greater awareness of the environmental impact of AI.

Key points:

Real-time energy consumption estimation of chatbot messages

Compatibility with AI models such as Llama 3.3 70B and Gemma 3

Comparison of consumption with common household appliances for intuitive understanding

Promoting energy transparency in the use of AI

In the ever-changing AI landscape, attention is increasingly... read more: https://www.turtlesai.com/en/pages-2702/measuring-ai-energy-a-tool-uncovers-the-invisible-cost

0 notes

Text

Llama 4: Smarter, Faster, More Efficient Than Ever

The first Llama 4 models, available today in Azure AI Foundry and Azure Databricks, enable personalised multimodal experiences. Meta designed these models to integrate text and vision tokens seamlessly into a single model backbone. This approach lets developers use Llama 4 models in applications that involve large amounts of unlabelled text, image, and video data, setting a new standard in AI development.

Superb intelligence, unmatched speed and efficiency

The most accessible and scalable Llama generation is here: native multimodality, mixture-of-experts models, extended context windows, step changes in performance, and unmatched efficiency, all in easy-to-deploy sizes tailored to your needs.

Latest models

The models are geared for easy deployment, cost-effectiveness, and performance that scales to billions of users.

Llama 4 Scout

Meta claims Llama 4 Scout, which fits on a single H100 GPU, is more powerful than the Llama 3 models and among the best multimodal models in its class. The supported context length increases from 128K tokens in Llama 3 to an industry-leading 10 million tokens. This opens up new possibilities such as multi-document summarisation, parsing extensive user activity for personalised tasks, and reasoning over vast codebases.

Scout targets reasoning, personalisation, and summarisation. Its small size and long context make it well suited to condensing or analysing large amounts of data. It can summarise lengthy inputs, tailor replies using user-specific data (without losing earlier information), and reason across very large knowledge stores.

Llama 4 Maverick

An industry-leading, natively multimodal model for image and text understanding that offers low cost, fast responses, and strong intelligence. Llama 4 Maverick, a general-purpose LLM with 17 billion active parameters, 128 experts, and 400 billion total parameters, costs less than Llama 3.3 70B. Maverick excels at image and text understanding in 12 languages, enabling multilingual AI applications. It is also strong at visual comprehension and creative writing, making it well suited to general assistant and chat use cases. Developers get fast, cutting-edge intelligence tuned for response quality and tone.

Maverick targets chat scenarios that demand high-quality responses. Meta fine-tuned Llama 4 Maverick for conversation. Think of it as the core conversation model of the Llama 4 family: a multilingual, multimodal, ChatGPT-like assistant.

It is particularly well suited to interactive applications:

Customer service bots that need to understand images uploaded by users.

AI content-creation partners that work in several languages.

Enterprise assistants that answer employees' questions and handle rich media input.

Maverick can help companies build high-quality AI assistants that converse naturally and politely with a global user base and draw on visual context when needed.

Llama 4 Behemoth Preview

A preview of the Llama 4 teacher model used to distil Scout and Maverick.

Features

Scout, Maverick, and Llama 4 Behemoth have class-leading characteristics.

Natively multimodal: All Llama 4 models use early fusion to pre-train the model on large amounts of unlabelled text and vision tokens, a step change in intelligence compared with separate, frozen multimodal weights.

Industry-leading context length: Llama 4 Scout supports up to 10M tokens, expanding memory, personalisation, and multimodal applications.

Best-in-class image grounding: Llama 4 can match user requests with relevant visual concepts and anchor model responses to regions of the image.

Multilingual writing: Llama 4 was pre-trained and fine-tuned for strong text understanding across 12 languages to support global development and deployment.

Benchmark

Meta tested model performance on standard benchmarks across several languages for coding, reasoning, knowledge, vision understanding, multilinguality, and extended context.

#technology#technews#govindhtech#news#technologynews#Llama 4#AI development#AI#artificial intelligence#Llama 4 Scout#Llama 4 maverick

0 notes

Text

DeepSeek V3-0324 beats rival AI models in open-source first

DeepSeek V3-0324 has become the highest-scoring non-reasoning model on the Artificial Analysis Intelligence Index in a landmark achievement for open-source AI. The new model advanced seven points in the benchmark to surpass proprietary counterparts such as Google’s Gemini 2.0 Pro, Anthropic’s Claude 3.7 Sonnet, and Meta’s Llama 3.3 70B. While V3-0324 trails behind reasoning models, including…

0 notes

Text

Duck.ai - chat with o3-mini without limits and keep your privacy

"Duck.ai lets users choose which model they want to chat with - currently GPT-4o mini, Llama 3.3 70B, Claude 3 Haiku, o3-mini, and Mistral Small 3.

What sets Duck.ai apart from Perplexity is that all of the models are free and you can chat with them as much as you like. In addition, according to DuckDuckGo's own statements, the company does not collect data on people who chat with the chatbots. Chat history ("Recent chats") is stored locally on the user's device and cleared automatically after 30 days."

https://spidersweb.pl/2025/03/duckduckgo-duck-ai-chatboty.html#:~:text=Duck.ai%20oferuje,po%2030%20dniach.

0 notes

Text

Trying 70B Model

Just for fun I tried the Llama 3.3 70B model on a 32GB Mac Mini M2 Pro, and the results are what you'd expect. You can load the model, but the performance is so poor that there is actually no point in running it.
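A back-of-the-envelope estimate helps explain the result. The sketch below counts only the memory needed for the weights of a 70-billion-parameter model at a few precisions. It ignores the KV cache, activations, and runtime overhead. The post does not say which quantization was used, so the bit widths are assumptions chosen for illustration.

```python
def weights_gb(n_params: int, bits_per_param: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    n_params = 70_000_000_000  # "70B" taken as exactly 70 billion parameters
    ram_gb = 32                # unified memory of the Mac Mini in the post
    for bits in (16, 8, 4, 3):
        size = weights_gb(n_params, bits)
        verdict = "fits" if size < ram_gb else "does not fit"
        print(f"{bits:>2}-bit weights: {size:6.2f} GB ({verdict} in {ram_gb} GB)")
```

Under these assumptions, 4-bit weights alone come to about 35 GB, already more than the machine's 32 GB. Loading the model at all would therefore require more aggressive quantization or spilling to disk, either of which is consistent with the poor performance described.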

1 note

Text

A new AI from Europe to rival OpenAI and Google!

The Mistral Small 3 AI model has been unveiled. What does this tool, developed to compete with mini AI models, promise? Europe-based AI company Mistral AI has introduced its new 24-billion-parameter model, Mistral Small 3. Drawing attention across the AI world, the model performs close to larger models such as Llama 3.3 70B and Qwen 32B on the MMLU-Pro benchmark, sparking interest…

0 notes

Text

Deep Cogito open LLMs use IDA to outperform same size models

New Post has been published on https://thedigitalinsider.com/deep-cogito-open-llms-use-ida-to-outperform-same-size-models/

Deep Cogito has released several open large language models (LLMs) that outperform competitors and claim to represent a step towards achieving general superintelligence.

The San Francisco-based company, which states its mission is “building general superintelligence,” has launched preview versions of LLMs in 3B, 8B, 14B, 32B, and 70B parameter sizes. Deep Cogito asserts that “each model outperforms the best available open models of the same size, including counterparts from LLAMA, DeepSeek, and Qwen, across most standard benchmarks”.

Impressively, the 70B model from Deep Cogito even surpasses the performance of the recently released Llama 4 109B Mixture-of-Experts (MoE) model.

Iterated Distillation and Amplification (IDA)

Central to this release is a novel training methodology called Iterated Distillation and Amplification (IDA).

Deep Cogito describes IDA as “a scalable and efficient alignment strategy for general superintelligence using iterative self-improvement”. This technique aims to overcome the inherent limitations of current LLM training paradigms, where model intelligence is often capped by the capabilities of larger “overseer” models or human curators.

The IDA process involves two key steps iterated repeatedly:

Amplification: Using more computation to enable the model to derive better solutions or capabilities, akin to advanced reasoning techniques.

Distillation: Internalising these amplified capabilities back into the model’s parameters.

Deep Cogito says this creates a “positive feedback loop” where model intelligence scales more directly with computational resources and the efficiency of the IDA process, rather than being strictly bounded by overseer intelligence.
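The amplify-then-distill loop described above can be illustrated with a toy numeric example. This is not Deep Cogito's method or code. In this sketch the "model" is a single number (the mean of a sampling distribution), "amplification" means drawing several candidates and keeping the best one under a scoring function, and "distillation" means moving the mean toward that better candidate. All names and hyperparameters are hypothetical.

```python
import random


def amplify(mean: float, score, n_samples: int, rng: random.Random) -> float:
    """Amplification (toy): spend extra compute by sampling many candidates
    around the current model and keeping the highest-scoring one."""
    candidates = [rng.gauss(mean, 1.0) for _ in range(n_samples)]
    return max(candidates, key=score)


def distill(mean: float, target: float, lr: float) -> float:
    """Distillation (toy): move the model's parameter toward the amplified answer."""
    return mean + lr * (target - mean)


def iterate(mean: float, score, rounds: int = 50, n_samples: int = 16,
            lr: float = 0.5, seed: int = 0) -> float:
    """Repeat amplify-then-distill for a fixed number of rounds."""
    rng = random.Random(seed)
    for _ in range(rounds):
        better = amplify(mean, score, n_samples, rng)
        mean = distill(mean, better, lr)
    return mean


def toy_score(x: float) -> float:
    """Hypothetical objective: answers closer to 3.0 score higher."""
    return -(x - 3.0) ** 2


if __name__ == "__main__":
    start = 0.0
    final = iterate(start, toy_score)
    print(f"start={start:.2f} final={final:.2f}")
```

In this toy, each round's improvement comes from the extra sampling and not from a stronger external teacher. That is the intuition behind the "positive feedback loop" claim, although real LLM training involves far more than this sketch shows.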

“When we study superintelligent systems,” the research notes, referencing successes like AlphaGo, “we find two key ingredients enabled this breakthrough: Advanced Reasoning and Iterative Self-Improvement”. IDA is presented as a way to integrate both into LLM training.

Deep Cogito claims IDA is efficient, stating the new models were developed by a small team in approximately 75 days. They also highlight IDA’s potential scalability compared to methods like Reinforcement Learning from Human Feedback (RLHF) or standard distillation from larger models.

As evidence, the company points to their 70B model outperforming Llama 3.3 70B (distilled from a 405B model) and Llama 4 Scout 109B (distilled from a 2T parameter model).

Capabilities and performance of Deep Cogito models

The newly released Cogito models – based on Llama and Qwen checkpoints – are optimised for coding, function calling, and agentic use cases.

A key feature is their dual functionality: “Each model can answer directly (standard LLM), or self-reflect before answering (like reasoning models),” similar to capabilities seen in models like Claude 3.5. However, Deep Cogito notes they “have not optimised for very long reasoning chains,” citing user preference for faster answers and the efficiency of distilling shorter chains.

Extensive benchmark results are provided, comparing Cogito models against size-equivalent state-of-the-art open models in both direct (standard) and reasoning modes.

Across various benchmarks (MMLU, MMLU-Pro, ARC, GSM8K, MATH, etc.) and model sizes (3B, 8B, 14B, 32B, 70B), the Cogito models generally show significant performance gains over counterparts like Llama 3.1/3.2/3.3 and Qwen 2.5, particularly in reasoning mode.

For instance, the Cogito 70B model achieves 91.73% on MMLU in standard mode (+6.40% vs Llama 3.3 70B) and 91.00% in thinking mode (+4.40% vs Deepseek R1 Distill 70B). Livebench scores also show improvements.

Here are benchmarks of 14B models for a medium-sized comparison:

While acknowledging benchmarks don’t fully capture real-world utility, Deep Cogito expresses confidence in practical performance.

This release is labelled a preview, with Deep Cogito stating they are “still in the early stages of this scaling curve”. They plan to release improved checkpoints for the current sizes and introduce larger MoE models (109B, 400B, 671B) “in the coming weeks / months”. All future models will also be open-source.

(Photo by Pietro Mattia)

#AGI#ai#ai & big data expo#Alibaba#alibaba cloud#amp#arc#Art#Artificial Intelligence#automation#benchmark#benchmarks#Big Data#Building#california#Capture#claude#claude 3#claude 3.5#Cloud#coding#Companies#comparison#comprehensive#computation#conference#cyber#cyber security#data#deep cogito

0 notes