#MLOps and AI Governance

AI Consulting Services: Transforming Business Intelligence into Applied Innovation

In today’s enterprise landscape, Artificial Intelligence (AI) is no longer a differentiator — it’s the new standard. But AI’s real-world impact depends less on which algorithm is chosen and more on how it is implemented, integrated, and scaled. This is where AI consulting services become indispensable.

For companies navigating fragmented data ecosystems, unpredictable market shifts, and evolving customer expectations, the guidance of an AI consulting firm transforms confusion into clarity — and abstract potential into measurable ROI.

Let’s peel back the layers of AI consulting to understand what happens behind the scenes — and why it often marks the difference between failure and transformation.

1. AI Consulting is Not About Technology. It’s About Problem Framing.

Before a single model is trained or data point cleaned, AI consultants begin with a deceptively complex task: asking better questions.

Unlike product vendors or software developers who start with “what can we build?”, AI consultants start with “what are we solving?”

This involves:

Contextual Discovery Sessions: Business users, not developers, are the primary source of insight. Through targeted interviews, consultants extract operational pain points, inefficiencies, and recurring bottlenecks.

Functional to Technical Mapping: Statements like “our forecasting is always off” translate into time-series modeling challenges. “Too much manual reconciliation” suggests robotic process automation or NLP-based document parsing.

Value Chain Assessment: Consultants analyze where AI can reduce cost, increase throughput, or improve decision accuracy — and where it shouldn’t be applied. Not every problem is an AI problem.

This early-stage rigor ensures the roadmap is rooted in real needs, not in technological fascination.

2. Data Infrastructure Isn’t a Precondition — It’s a Design Layer

The misconception that AI begins with data is widespread. In reality, AI begins with intent and matures with design.

AI Consultants Assess:

Data Gravity: Where does the data live? How fragmented is it across systems like ERPs, CRMs, and third-party vendors?

Latency & Freshness: How real-time does the AI need to be? Fraud detection requires milliseconds. Demand forecasting can run nightly.

Data Lineage: Can we track how data transforms through the pipeline? This is critical for debugging, auditing, and model interpretability.

Compliance Zones: GDPR, CCPA, HIPAA — each imposes constraints on what data can be collected, retained, and processed.

Rather than forcing AI into brittle legacy systems, consultants often design parallel data lakes, add streaming layers (Kafka for event transport, Flink for stream processing), and build bridges with ETL/ELT pipelines using Airflow for orchestration, Fivetran for managed ingestion, or custom Python logic.
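A minimal sketch of the extract-transform-load pattern described above, written with only the Python standard library. The CSV export, the `orders` table, and its columns are hypothetical stand-ins for an ERP or CRM extract. In practice an orchestrator such as Airflow would schedule these steps, and a managed connector might replace `extract`.

```python
import csv
import io
import sqlite3

# Hypothetical CSV export from a source system (e.g. an ERP); in practice this
# would come from a file, an API, or a managed connector.
RAW_EXPORT = """order_id,customer,amount,currency
1001,ACME Corp, 250.00 ,usd
1002,Globex,,usd
1003,Initech,99.5,USD
"""

def extract(raw: str) -> list[dict]:
    """Extract: parse the raw export into row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop rows with missing amounts, normalize types and casing."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records instead of guessing a value
        cleaned.append((int(row["order_id"]), row["customer"].strip(),
                        float(amount), row["currency"].strip().upper()))
    return cleaned

def load(rows: list[tuple], conn: sqlite3.Connection) -> int:
    """Load: upsert rows into the target table and return the table's row count."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)")
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

Running `load(transform(extract(RAW_EXPORT)), sqlite3.connect(":memory:"))` loads the two complete rows and skips the one with a missing amount. Dropping incomplete records is only one design choice. Quarantining them for review is a common alternative.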

3. Model Selection Isn’t Magic. It’s Engineering + Intuition

The AI world is infatuated with model names — GPT, BERT, XGBoost, etc. But consulting work doesn’t start with what’s popular. It starts with what fits.

Real AI Consulting Looks Like:

Feature Engineering Workshops: Often where much of a project’s success is decided. Domain knowledge surfaces the variables that matter: seasonality, transaction types, sensor noise, and so on.

Model Comparisons: Consultants run experiments across classical ML models (Random Forest, Logistic Regression), deep learning (CNNs, LSTMs), or foundation models (transformers) depending on the task.

Cost-Performance Tradeoffs: A 2% gain in precision might not justify a 3x increase in GPU costs. Consultants quantify tradeoffs and model robustness.

Explainability Frameworks: Shapley values, LIME, and counterfactuals are often used to explain black-box outputs to non-technical stakeholders — especially in regulated industries.
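To make the Shapley idea concrete: when there are only a few features, Shapley values can be computed exactly by averaging each feature's marginal contribution over every order in which features could be "added". This sketch assumes a toy scoring function and treats a baseline input as "feature absent". Libraries such as SHAP approximate the same quantity for real models with many features, because the exact computation grows factorially.

```python
import itertools
import math
from typing import Callable, Sequence

def exact_shapley(model: Callable[[Sequence[float]], float],
                  x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley values: average marginal contribution over all feature orderings.

    Features not yet added are held at their baseline value, an assumed
    convention for representing a missing feature.
    """
    n = len(x)
    phi = [0.0] * n
    for order in itertools.permutations(range(n)):
        current = list(baseline)
        prev = model(current)
        for i in order:
            current[i] = x[i]          # add feature i to the coalition
            value = model(current)
            phi[i] += value - prev     # its marginal contribution in this ordering
            prev = value
    return [p / math.factorial(n) for p in phi]

# Hypothetical risk score with an interaction between features 0 and 2.
def toy_model(f: Sequence[float]) -> float:
    return 0.5 * f[0] + 2.0 * f[1] - 1.0 * f[2] + 0.25 * f[0] * f[2]

x = [4.0, 1.0, 2.0]
baseline = [0.0, 0.0, 0.0]
phi = exact_shapley(toy_model, x, baseline)
# Efficiency property: contributions sum to model(x) - model(baseline).
print(phi, sum(phi), toy_model(x) - toy_model(baseline))  # [3.0, 2.0, -1.0] 4.0 4.0
```

The interaction term is split evenly between features 0 and 2. That is the kind of behavior a non-technical stakeholder can be walked through line by line.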

Models are chosen, tested, and deployed based on impact, not novelty.

4. AI Systems Must Think — and Also Talk

One of the most undervalued aspects of AI consulting is integration and interface design.

A forecasting model is useless if its output is stuck in a Jupyter notebook.

Consultants Engineer:

APIs and Microservices: Wrapping models in RESTful interfaces that plug into CRM, ERP, or mobile apps.

BI Dashboards: Using tools like Power BI, Tableau, or custom front-ends in React/Angular, integrated with prediction layers.

Decision Hooks: Embedding AI outputs into real-world decision points — e.g., auto-approving invoices under a threshold, triggering alerts on anomaly scores.

Human-in-the-Loop Systems: Creating feedback loops where human corrections refine AI over time — especially critical in NLP and vision applications.
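Decision hooks and human-in-the-loop feedback can be very small pieces of code. This sketch assumes a hypothetical `Invoice` record and an anomaly score in [0, 1] produced by some upstream model. The thresholds are illustrative only; real ones would come from the business and be tuned over time.

```python
from dataclasses import dataclass

@dataclass
class Invoice:
    invoice_id: str
    amount: float
    anomaly_score: float  # assumed output of an upstream model, range [0, 1]

AUTO_APPROVE_LIMIT = 1_000.0  # illustrative threshold, not a recommendation
ALERT_SCORE = 0.8             # illustrative anomaly cut-off

def route_invoice(inv: Invoice) -> str:
    """Turn a model output into an action at a real decision point."""
    if inv.anomaly_score >= ALERT_SCORE:
        return "alert"           # suspicious regardless of amount
    if inv.amount < AUTO_APPROVE_LIMIT:
        return "auto_approve"    # low value and low anomaly score
    return "human_review"        # everything else goes to a person

# Reviewer overrides, kept so they can feed later retraining.
corrections: list[tuple[str, str, str]] = []

def record_correction(inv: Invoice, model_action: str, human_action: str) -> None:
    """Human-in-the-loop: store cases where a reviewer disagreed with the system."""
    if model_action != human_action:
        corrections.append((inv.invoice_id, model_action, human_action))
```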

Consultants don’t just deliver models. They deliver systems — living, usable, and explainable.

5. Deployment Is a Process, Not a Moment

Too often, AI projects die in what’s called the “deployment gap” — the chasm between a working prototype and a production-ready tool.

Consulting teams close that gap by:

Setting up MLOps Pipelines: Versioning data and models using DVC, managing environments via Docker/Kubernetes, scheduling retraining cycles.

Failover Mechanisms: Designing fallbacks for when APIs are unavailable, model confidence is low, or inputs are incomplete.

A/B Testing and Shadow Deployments: Evaluating new models against current workflows without interrupting operations.

Observability Systems: Integrating tools like MLflow, Prometheus, and custom loggers to monitor drift, latency, and prediction quality.
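The failover and shadow-deployment ideas can be combined in a single prediction wrapper. In this sketch, `primary`, `shadow`, and `fallback_rule` are hypothetical callables, and the 0.6 confidence floor is an assumption. The shadow model's answer is only logged for offline comparison and is never returned to the caller.

```python
import logging
from typing import Callable, Optional, Tuple

logger = logging.getLogger("serving")

Prediction = Tuple[str, float]  # (label, confidence) returned by a model

def predict_with_failover(
    features: dict,
    primary: Callable[[dict], Prediction],
    fallback_rule: Callable[[dict], str],
    shadow: Optional[Callable[[dict], Prediction]] = None,
    min_confidence: float = 0.6,
) -> Tuple[str, str]:
    """Return (label, source); use the rule-based fallback when the model is unusable."""
    # Incomplete input: do not let the model guess.
    if any(value is None for value in features.values()):
        return fallback_rule(features), "fallback:incomplete_input"
    try:
        label, confidence = primary(features)
    except Exception:  # e.g. model endpoint down or timed out
        logger.exception("primary model failed")
        return fallback_rule(features), "fallback:error"
    # Shadow deployment: the candidate sees live traffic, but its answer is only logged.
    if shadow is not None:
        try:
            shadow_label, _ = shadow(features)
            logger.info("shadow=%s primary=%s", shadow_label, label)
        except Exception:
            logger.warning("shadow model failed; ignoring")
    if confidence < min_confidence:
        return fallback_rule(features), "fallback:low_confidence"
    return label, "model"
```

Returning the source alongside the label lets dashboards track how often the fallback fires. A sudden rise is itself a useful alert.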

Deployment is iterative. Consultants treat production systems as adaptive organisms, not static software.

6. Risk Mitigation: The Hidden Backbone of AI Consulting

AI done wrong isn't just ineffective — it’s dangerous.

Good Consultants Guard Against:

Bias and Discrimination: Proactively auditing datasets for demographic imbalances and using bias-detection tools.

Model Drift: Setting thresholds and alerts for when models no longer reflect current behavior due to market changes or user shifts.

Data Leakage: Enforcing strict train-test separation so that no future information contaminates training.

Overfitting Traps: Using proper cross-validation strategies and regularization methods.

Regulatory Missteps: Ensuring documentation, audit trails, and explainability meet industry and legal standards.
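One common way to implement the drift thresholds mentioned above is the Population Stability Index (PSI). It compares the binned distribution of a feature or model score at training time with what the model sees in production. The bin edges, the small epsilon, and the 0.2 alert level are assumptions. The 0.2 cut-off is a widely used rule of thumb, not a standard.

```python
import math
from bisect import bisect_right
from typing import Sequence

def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two samples over shared bin edges."""
    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        total = len(values)
        # eps avoids log(0) when a bin is empty in one of the samples
        return [max(c / total, eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

DRIFT_ALERT = 0.2  # rule-of-thumb threshold; tune per model

def drift_alert(expected: Sequence[float], actual: Sequence[float],
                edges: Sequence[float]) -> bool:
    """True when the production distribution has shifted past the threshold."""
    return psi(expected, actual, edges) > DRIFT_ALERT
```

In a scheduled monitoring job, `expected` would be a stored sample from training data and `actual` a recent window of production inputs.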

Risk isn’t eliminated. But it’s systematically reduced, transparently tracked, and proactively addressed.

7. Industry-Specific AI Consulting: One Size Never Fits All

Generic AI doesn’t work. Business rules, data structures, and risk tolerance vary widely between sectors.

In Healthcare, AI must be:

Explainable

Compliant with HIPAA

Integrated with EHR systems

In Finance, it must be:

High-speed (low latency)

Auditable and traceable

Resistant to adversarial fraud inputs

In Retail, it must be:

Personalized at scale

Seasonality-aware

Integrated with pricing, promotions, and inventory systems

The best AI consulting firms embed vertical knowledge into every layer — from preprocessing to post-deployment feedback.

8. Why the Right AI Consulting Partner Changes Everything

Let’s be candid: many AI projects fail — not because the models are wrong, but because the implementation is shallow.

The right consulting partner brings:

Strategic Maturity: They don’t just know the tech; they understand the boardroom.

Architectural Rigor: Cloud-native, modular, secure-by-design systems.

Cross-Functional Teams: Data scientists, cloud engineers, domain experts, compliance officers — all under one roof.

Commitment to Outcome: Not just delivering models but improving metrics you care about — revenue, margin, throughput, satisfaction.

If you’re navigating the AI landscape, don’t go it alone. Firms like ours are built to lead this transition with precision, partnership, and purpose.

9. AI Consulting as a Competitive Lever

At a time when AI is reshaping every industry — from law to logistics — early adopters backed by the right consulting expertise enjoy a flywheel effect:

More automation → faster execution

Better forecasts → optimized inventory and cash flow

Smarter personalization → higher customer lifetime value

Real-time insights → faster, more confident decisions

This isn’t just about saving costs. It’s about creating a new operating model — one where machines amplify human judgment, not replace it.

AI consultants are the architects of that model — helping you build it, scale it, and own it.

Final Thoughts: AI Isn’t a Buzzword. It’s an Engineering Discipline.

In the coming years, the divide won’t be between companies that use AI and those that don’t, but between those that use it well and those that rushed in without guidance.

AI consulting is what makes the difference.

It’s not about flashy tools or press releases. It’s about deep analysis, strategic alignment, rigorous testing, and building systems that actually work in production, at scale, and under pressure.

If you're ready to unlock AI’s real potential in your business, not just experiment with it — talk to an AI consulting partner who can help you make it real.

#AI Consulting Services#Artificial Intelligence Consulting#AI Strategy and Implementation#Business AI Solutions#Enterprise AI Consulting#Machine Learning Consulting#Custom AI Development#AI Integration Experts#AI for Business Growth#MLOps and AI Governance#AI Model Deployment#Scalable AI Systems#AI Transformation Journey#AI Use Cases in Business#AI Automation Solutions

From Firewall to Encryption: The Full Spectrum of Data Security Solutions

In today’s digitally driven world, data is one of the most valuable assets any business owns. From customer information to proprietary corporate strategies, the protection of data is crucial not only for maintaining competitive advantage but also for ensuring regulatory compliance and customer trust. As cyber threats grow more sophisticated, companies must deploy a full spectrum of data security solutions — from traditional firewalls to advanced encryption technologies — to safeguard their sensitive information.

This article explores the comprehensive range of data security solutions available today and explains how they work together to create a robust defense against cyber risks.

Why Data Security Matters More Than Ever

Before diving into the tools and technologies, it’s essential to understand why data security is a top priority for organizations worldwide.

The Growing Threat Landscape

Cyberattacks have become increasingly complex and frequent. Data breaches can come from many angles: ransomware that locks down entire systems until a ransom is paid, phishing campaigns that target employees, and insider threats from negligent or malicious actors. According to recent studies, millions of data records are exposed daily, costing businesses billions in damages, legal penalties, and lost customer trust.

Regulatory and Compliance Demands

Governments and regulatory bodies worldwide have enacted stringent laws to protect personal and sensitive data. Regulations such as GDPR (General Data Protection Regulation), HIPAA (Health Insurance Portability and Accountability Act), and CCPA (California Consumer Privacy Act) enforce strict rules on how companies must safeguard data. Failure to comply can result in hefty fines and reputational damage.

Protecting Brand Reputation and Customer Trust

A breach can irreparably damage a brand’s reputation. Customers and partners expect businesses to handle their data responsibly. Data security is not just a technical requirement but a critical component of customer relationship management.

The Data Security Spectrum: Key Solutions Explained

Data security is not a single tool or tactic but a layered approach. The best defense employs multiple technologies working together — often referred to as a “defense-in-depth” strategy. Below are the essential components of the full spectrum of data security solutions.

1. Firewalls: The First Line of Defense

A firewall acts like a security gatekeeper between a trusted internal network and untrusted external networks such as the Internet. It monitors incoming and outgoing traffic based on pre-established security rules and blocks unauthorized access.

Types of Firewalls:

Network firewalls monitor data packets traveling between networks.

Host-based firewalls operate on individual devices.

Next-generation firewalls (NGFW) integrate traditional firewall features with deep packet inspection, intrusion prevention, and application awareness.

Firewalls are fundamental for preventing unauthorized access and blocking malicious traffic before it reaches critical systems.
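Conceptually, a packet-filtering firewall evaluates an ordered rule list, applies the first rule that matches, and falls back to a default-deny policy when nothing matches. The sketch below models that logic with Python's `ipaddress` module. The rules and networks are hypothetical. Real firewalls (nftables, cloud security groups, NGFWs) have their own rule syntax and many more match criteria.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Rule:
    action: str                 # "allow" or "deny"
    source: str                 # CIDR block, e.g. "10.0.0.0/8"
    port: Optional[int] = None  # None matches any destination port
    protocol: str = "tcp"

# Hypothetical rule set, evaluated top to bottom.
RULES = [
    Rule("deny", "203.0.113.0/24"),          # known-bad range (documentation prefix)
    Rule("allow", "10.0.0.0/8", port=22),    # SSH only from the internal network
    Rule("allow", "0.0.0.0/0", port=443),    # HTTPS from anywhere
]

def evaluate(src_ip: str, port: int, protocol: str = "tcp", rules=RULES) -> str:
    """Return the action of the first matching rule, or 'deny' by default."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if (addr in ipaddress.ip_network(rule.source)
                and rule.protocol == protocol
                and (rule.port is None or rule.port == port)):
            return rule.action
    return "deny"  # default-deny: anything not explicitly allowed is blocked
```

Rule order matters: moving the HTTPS rule above the deny rule would let the known-bad range through on port 443.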

2. Intrusion Detection and Prevention Systems (IDS/IPS)

While firewalls filter traffic, IDS and IPS detect and respond to suspicious activity.

Intrusion Detection Systems (IDS) monitor network or system activities for malicious actions and send alerts.

Intrusion Prevention Systems (IPS) not only detect but also block or mitigate threats in real-time.

Together, IDS/IPS adds an extra layer of vigilance, helping security teams quickly identify and neutralize potential breaches.

3. Endpoint Security: Protecting Devices

Every device connected to a network represents a potential entry point for attackers. Endpoint security solutions protect laptops, mobile devices, desktops, and servers.

Antivirus and Anti-malware: Detect and remove malicious software.

Endpoint Detection and Response (EDR): Provides continuous monitoring and automated response capabilities.

Device Control: Manages USB drives and other peripherals to prevent data leaks.

Comprehensive endpoint security ensures threats don’t infiltrate through vulnerable devices.

4. Data Encryption: Securing Data at Rest and in Transit

Encryption is a critical pillar of data security: it converts data into ciphertext that unauthorized users cannot read without the correct key.

Encryption at Rest: Protects stored data on servers, databases, and storage devices.

Encryption in Transit: Safeguards data traveling across networks using protocols such as TLS, the successor to the now-deprecated SSL.

End-to-End Encryption: Ensures data remains encrypted from the sender to the recipient without exposure in between.

With strong encryption algorithms in place, data that is intercepted or stolen remains useless without the decryption key.
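For encryption in transit, most languages expose TLS through a standard library. In Python, `ssl.create_default_context()` already enables certificate and hostname verification. The sketch below additionally refuses protocol versions older than TLS 1.2. The host name is a placeholder, and the network call is left commented out so the snippet runs offline.

```python
import socket
import ssl

def make_client_context() -> ssl.SSLContext:
    """Client-side TLS context: verify certificates and hostnames, require TLS 1.2+."""
    ctx = ssl.create_default_context()            # loads the system CA certificates
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # reject SSLv3, TLS 1.0 and TLS 1.1
    return ctx

def fetch_peer_certificate(host: str, port: int = 443) -> dict:
    """Open a verified TLS connection and return the server certificate details."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=5) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()

# Example (needs network access; "example.com" is only a placeholder):
# print(fetch_peer_certificate("example.com")["subject"])
```

If the server's certificate does not chain to a trusted CA or does not match the host name, `wrap_socket` raises an error instead of silently sending data over an unauthenticated channel.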

5. Identity and Access Management (IAM)

Controlling who has access to data and systems is vital.

Authentication: Verifying user identities through passwords, biometrics, or multi-factor authentication (MFA).

Authorization: Granting permissions based on roles and responsibilities.

Single Sign-On (SSO): Simplifies user access while maintaining security.

IAM solutions ensure that only authorized personnel can access sensitive information, reducing insider threats and accidental breaches.
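Many MFA setups use time-based one-time passwords (TOTP, RFC 6238), which build on HOTP (RFC 4226). The sketch below implements both with the standard library so the mechanism is visible. The secret in the usage example is the RFC test secret, not something to deploy. Production systems would normally rely on a vetted library and store secrets securely.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)

def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock skew."""
    now = time.time() if at is None else at
    counter = int(now // step)
    return any(
        hmac.compare_digest(hotp(secret, c, digits), submitted)  # constant-time compare
        for c in range(max(counter - window, 0), counter + window + 1)
    )
```

With the RFC test secret `b"12345678901234567890"`, `hotp(secret, 0)` returns `"755224"` and `totp(secret, at=59, digits=8)` returns `"94287082"`, matching the published test vectors.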

6. Data Loss Prevention (DLP)

DLP technologies monitor and control data transfers to prevent sensitive information from leaving the organization.

Content Inspection: Identifies sensitive data in emails, file transfers, and uploads.

Policy Enforcement: Blocks unauthorized transmission of protected data.

Endpoint DLP: Controls data movement on endpoint devices.

DLP helps maintain data privacy and regulatory compliance by preventing accidental or malicious data leaks.
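Content inspection in DLP tools combines many detectors. This sketch shows just one of them, assumed for illustration: a regular expression that finds card-number-like digit runs, plus a Luhn checksum to cut false positives. Matches are masked before they are reported, and the policy blocks any text that contains a valid card number.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")

def luhn_valid(number: str) -> bool:
    """Luhn checksum, used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def find_card_numbers(text: str) -> list[str]:
    """Content inspection: return masked card numbers found in the text."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            hits.append("*" * (len(digits) - 4) + digits[-4:])
    return hits

def enforce_policy(text: str) -> bool:
    """Policy enforcement: allow the transfer only if no card numbers are found."""
    return not find_card_numbers(text)
```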

7. Cloud Security Solutions

With increasing cloud adoption, protecting data in cloud environments is paramount.

Cloud Access Security Brokers (CASB): Provide visibility and control over cloud application usage.

Cloud Encryption and Key Management: Secures data stored in public or hybrid clouds.

Secure Configuration and Monitoring: Ensures cloud services are configured securely and continuously monitored.

Cloud security tools help organizations safely leverage cloud benefits without exposing data to risk.

8. Backup and Disaster Recovery

Even with the best preventive controls, breaches and data loss can occur. Reliable backup and disaster recovery plans ensure business continuity.

Regular Backups: Scheduled copies of critical data stored securely.

Recovery Testing: Regular drills to validate recovery procedures.

Ransomware Protection: Immutable backups protect against tampering.

Robust backup solutions ensure data can be restored quickly, minimizing downtime and damage.

9. Security Information and Event Management (SIEM)

SIEM systems collect and analyze security event data in real time from multiple sources to detect threats.

Centralized Monitoring: Aggregates logs and alerts.

Correlation and Analysis: Identifies patterns that indicate security incidents.

Automated Responses: Enables swift threat mitigation.

SIEM provides comprehensive visibility into the security posture, allowing proactive threat management.
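A typical SIEM correlation rule links events that look harmless on their own. This sketch flags a successful login that follows several failed attempts from the same IP address within a time window. The event fields, the threshold of 5, and the 300-second window are hypothetical choices. Real SIEMs express such rules in their own query or rule languages.

```python
from collections import defaultdict, deque
from dataclasses import dataclass

@dataclass
class Event:
    timestamp: float  # seconds since epoch
    source_ip: str
    user: str
    outcome: str      # "failure" or "success"

def correlate_bruteforce(events, threshold: int = 5,
                         window: float = 300.0) -> list[tuple[str, str]]:
    """Alert on a success preceded by >= threshold failures from one IP within the window."""
    recent_failures = defaultdict(deque)
    alerts = []
    for ev in sorted(events, key=lambda e: e.timestamp):
        q = recent_failures[ev.source_ip]
        while q and ev.timestamp - q[0] > window:
            q.popleft()  # forget failures that fall outside the correlation window
        if ev.outcome == "failure":
            q.append(ev.timestamp)
        elif ev.outcome == "success" and len(q) >= threshold:
            alerts.append((ev.source_ip, ev.user))
            q.clear()
    return alerts
```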

10. User Education and Awareness

Technology alone can’t stop every attack. Human error remains one of the biggest vulnerabilities.

Phishing Simulations: Train users to recognize suspicious emails.

Security Best Practices: Ongoing training on password hygiene, device security, and data handling.

Incident Reporting: Encourage quick reporting of suspected threats.

Educated employees act as a crucial line of defense against social engineering and insider threats.

Integrating Solutions for Maximum Protection

No single data security solution is sufficient to protect against today’s cyber threats. The most effective strategy combines multiple layers:

Firewalls and IDS/IPS to prevent and detect intrusions.

Endpoint security and IAM to safeguard devices and control access.

Encryption to protect data confidentiality.

DLP and cloud security to prevent leaks.

Backup and SIEM to ensure resilience and rapid response.

Continuous user training to reduce risk from human error.

By integrating these tools into a cohesive security framework, businesses can build a resilient defense posture.

Choosing the Right Data Security Solutions for Your Business

Selecting the right mix of solutions depends on your organization's unique risks, compliance requirements, and IT environment.

Risk Assessment: Identify critical data assets and potential threats.

Regulatory Compliance: Understand applicable data protection laws.

Budget and Resources: Balance costs with expected benefits.

Scalability and Flexibility: Ensure solutions grow with your business.

Vendor Reputation and Support: Choose trusted partners with proven expertise.

Working with experienced data security consultants or managed security service providers (MSSPs) can help tailor and implement an effective strategy.

The Future of Data Security: Emerging Trends

As cyber threats evolve, data security technologies continue to advance.

Zero Trust Architecture: Assumes no implicit trust and continuously verifies every access request.

Artificial Intelligence and Machine Learning: Automated threat detection and response.

Post-Quantum Cryptography: Next-generation algorithms designed to resist attacks from quantum computers.

Behavioral Analytics: Identifying anomalies in user behavior for early threat detection.

Staying ahead means continuously evaluating and adopting innovative solutions aligned with evolving risks.

Conclusion

From the traditional firewall guarding your network perimeter to sophisticated encryption safeguarding data confidentiality, the full spectrum of data security solutions forms an essential bulwark against cyber threats. In a world where data breaches can cripple businesses overnight, deploying a layered, integrated approach is not optional — it is a business imperative.

Investing in comprehensive data security protects your assets, ensures compliance, and most importantly, builds trust with customers and partners. Whether you are a small business or a large enterprise, understanding and embracing this full spectrum of data protection measures is the key to thriving securely in the digital age.

#azure data science#azure data scientist#microsoft azure data science#microsoft certified azure data scientist#azure databricks#azure cognitive services#azure synapse analytics#data integration services#cloud based ai services#mlops solution#mlops services#data governance#data security services#Azure Databricks services

Exciting developments in MLOps await in 2024! 🚀 DevOps-MLOps integration, AutoML acceleration, Edge Computing rise – shaping a dynamic future. Stay ahead of the curve! #MLOps #TechTrends2024 🤖✨

#MLOps#Machine Learning Operations#DevOps#AutoML#Automated Pipelines#Explainable AI#Edge Computing#Model Monitoring#Governance#Hybrid Cloud#Multi-Cloud Deployments#Security#Forecast#2024

𝐘𝐨𝐮𝐫 𝐁𝐚𝐜𝐤𝐞𝐧𝐝 𝐈𝐬𝐧’𝐭 𝐚 𝐖𝐚𝐫𝐞𝐡𝐨𝐮𝐬𝐞 𝐀𝐧𝐲𝐦𝐨𝐫𝐞. 𝐈𝐭’𝐬 𝐭𝐡𝐞 𝐁𝐫𝐚𝐢𝐧.

Once upon a time, backends served code. Today, they serve context. Gone are the days when AI was bolted on like a plugin. Now it’s infused, woven through the very infrastructure that powers your product.

𝐖𝐞'𝐫𝐞 𝐰𝐚𝐭𝐜𝐡𝐢𝐧𝐠 𝐧𝐞𝐱𝐭-𝐠𝐞𝐧 𝐭𝐞𝐚𝐦𝐬 𝐟𝐥𝐢𝐩 𝐭𝐡𝐞 𝐬𝐜𝐫𝐢𝐩𝐭:
• Moving from monoliths to modular services, so AI runs where it works best
• Deploying models with FastAPI & TensorFlow to cut latency by 40%+
• Using event-driven backends to make real-time decisions feel native
• Automating pipelines with MLOps, turning weeks into minutes
• Building with governance-first thinking, not governance-later panic

And what’s the payoff? AI that adapts. Infra that scales. Experiences that feel like magic but run on precision.

𝐀 𝐛𝐞𝐭𝐭𝐞𝐫 𝐛𝐚𝐜𝐤𝐞𝐧𝐝 𝐝𝐨𝐞𝐬𝐧’𝐭 𝐣𝐮𝐬𝐭 𝐥𝐨𝐚𝐝 𝐟𝐚𝐬𝐭; 𝐢𝐭 𝐭𝐡𝐢𝐧𝐤𝐬 𝐟𝐚𝐬𝐭.

Building with AI in mind? 𝐋𝐞𝐭’𝐬 𝐭𝐚𝐥𝐤 𝐛𝐚𝐜𝐤𝐞𝐧𝐝 𝐝𝐞𝐬𝐢𝐠𝐧 𝐭𝐡𝐚𝐭 𝐥𝐞𝐚𝐫𝐧𝐬 𝐰𝐢𝐭𝐡 𝐲𝐨𝐮. 🔗https://meraakidesigns.com/

#BackendIntelligence#AIArchitecture#FastAPI#MLOps#EngineeringForAI#DigitalTransformation#ComposableTech#MeraakiDesigns#TechLeaders#AIatScale#FutureStack

End-to-End Guide to AI Software Development Services for Enterprises

Artificial Intelligence (AI) has rapidly evolved from a niche innovation to an enterprise-essential technology. Today, businesses are leveraging AI software development services to automate workflows, improve decision-making, personalize user experiences, and reduce costs. But how do enterprises approach AI development effectively—from strategy to deployment?

In this end-to-end guide, we’ll walk you through the entire lifecycle of AI software development and show how Prologic Technologies empowers global enterprises with cutting-edge AI solutions.

📌 Step 1: Understanding Enterprise Needs and AI Readiness

Before diving into development, enterprises need a clear understanding of:

Their core business challenges

Current data infrastructure

AI feasibility and ROI potential

Prologic Technologies begins each engagement with a comprehensive AI readiness assessment, helping clients identify high-impact areas like:

Customer service automation

Predictive analytics for operations

Intelligent document processing

Recommendation engines

🧠 Step 2: AI Strategy & Roadmap

An AI project is only as strong as the strategy behind it. Prologic collaborates with enterprise stakeholders to:

Define measurable AI goals

Select relevant technologies (ML, NLP, CV, etc.)

Choose optimal cloud infrastructure

Establish governance and compliance models

This strategic alignment ensures scalable, future-proof AI integration.

💾 Step 3: Data Collection & Preprocessing

AI models rely heavily on clean, labeled, and structured data. At this stage, Prologic Technologies assists with:

Data collection from CRMs, ERPs, and IoT systems

Data cleansing and transformation

Creating training datasets

Ensuring GDPR and HIPAA compliance

Organizing high-quality data pipelines sets the foundation for effective AI.
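A small sketch of what cleansing and transformation can look like for CRM-style records. The field names and the salted-hash step are assumptions for illustration. Hashing emails is pseudonymization: it reduces exposure, but under GDPR pseudonymized data is generally still personal data, so it does not replace a proper compliance review.

```python
import hashlib
from typing import Iterable

REQUIRED = ("customer_id", "email", "country")  # hypothetical required fields

def clean_records(records: Iterable[dict], salt: bytes) -> list[dict]:
    """Normalize, validate, deduplicate and pseudonymize raw CRM-style records."""
    seen = set()
    cleaned = []
    for rec in records:
        # Trim stray whitespace from every string field.
        rec = {k: (v.strip() if isinstance(v, str) else v) for k, v in rec.items()}
        if any(not rec.get(field) for field in REQUIRED):
            continue  # incomplete record: in practice, route it to a data-quality queue
        email = rec["email"].lower()
        if email in seen:
            continue  # duplicate customer record
        seen.add(email)
        cleaned.append({
            "customer_id": rec["customer_id"],
            "country": rec["country"].upper(),
            # Salted hash keeps records joinable without storing the raw email.
            "email_hash": hashlib.sha256(salt + email.encode()).hexdigest(),
        })
    return cleaned
```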

🧪 Step 4: Model Development and Training

Now comes the core of AI development. Prologic’s AI engineers use frameworks like TensorFlow, PyTorch, scikit-learn, and OpenAI APIs to:

Build custom ML and deep learning models

Train models with labeled datasets

Apply techniques like reinforcement learning or transfer learning

Continuously evaluate performance metrics (accuracy, F1-score, recall)

All models are tailored to business needs—whether it's fraud detection, NLP-based search, or predictive maintenance.
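Accuracy alone can mislead on imbalanced problems such as fraud detection, which is why precision, recall, and F1 are tracked as well. A minimal sketch of how those metrics are computed from labels and predictions (libraries such as scikit-learn provide equivalent, more complete functions):

```python
def classification_metrics(y_true, y_pred, positive=1) -> dict[str, float]:
    """Accuracy, precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false alarms
    fn = sum(t == positive and p != positive for t, p in pairs)  # missed positives
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}
```

For example, with `y_true = [1, 1, 1, 0, 0, 1]` and `y_pred = [1, 0, 1, 0, 1, 1]` there are 3 true positives, 1 false positive and 1 false negative, so precision, recall and F1 are all 0.75 while accuracy is 4/6.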

🧩 Step 5: Integration With Existing Systems

Prologic Technologies ensures seamless integration of AI models into enterprise environments, including:

ERP and CRM systems (Salesforce, SAP, etc.)

Cloud platforms (AWS, GCP, Azure)

Mobile and web applications

Real-time dashboards and APIs

This ensures your AI system doesn’t function in isolation but enhances your core platforms.

🛡️ Step 6: Testing, Compliance, and Security

Before deployment, AI applications undergo:

Unit testing and model validation

Bias detection and explainability audits

Security testing (for adversarial inputs or model theft)

Documentation for regulatory compliance

Prologic adheres to strict data security protocols and industry standards (SOC 2, ISO 27001) for enterprise confidence.

🚀 Step 7: Deployment & Optimization

Once the AI solution is validated, it’s deployed at scale. Prologic supports:

CI/CD pipelines for ML models (MLOps)

Auto-scaling infrastructure

On-premise, cloud, or hybrid deployment

Real-time monitoring and feedback loops

After deployment, Prologic Technologies provides continuous model tuning, retraining, and analytics to keep your AI solution accurate and impactful.
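In a CI/CD pipeline for ML, one common step is an automated promotion gate that compares a newly trained model against the one in production. This is a sketch under assumed conventions: metrics arrive as dictionaries, F1 is the primary metric, recall is a guardrail, and 0.01 is an illustrative tolerance.

```python
def should_promote(candidate: dict, production: dict, primary: str = "f1",
                   guardrails=("recall",), tolerance: float = 0.01) -> tuple[bool, str]:
    """Promote only if the primary metric improves and no guardrail regresses too far."""
    if candidate[primary] <= production[primary]:
        return False, f"{primary} did not improve"
    for metric in guardrails:
        if candidate[metric] < production[metric] - tolerance:
            return False, f"{metric} regressed beyond tolerance"
    return True, "promote"
```

In a pipeline job, a `False` result would typically end the run with a non-zero exit code (for example via `sys.exit(1)`), so the deployment stage never starts.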

🔄 Step 8: Support, Maintenance, and Evolution

AI is not a one-time project. As business needs grow, so should your AI capabilities. Prologic offers:

SLA-backed support services

Regular updates to adapt to new data

Addition of GenAI and LLMs (e.g., ChatGPT, Claude, Gemini)

Upskilling for internal teams

This approach ensures enterprises get long-term value from their AI investment.

💡 Why Choose Prologic Technologies?

At Prologic Technologies, we bring a deep-tech mindset, proven enterprise experience, and end-to-end AI capabilities. Whether you're an enterprise just starting with AI or scaling your existing models, we deliver:

Bespoke AI development

Enterprise-grade scalability

Domain-specific solutions in healthcare, fintech, retail, legal, and logistics

Transparent pricing and flexible engagement models

🔎 Conclusion

AI is no longer optional—it's a competitive advantage. But realizing its full potential requires more than just algorithms. With a robust strategy, skilled engineering, and an experienced partner like Prologic Technologies, your enterprise can unlock the true power of AI.

Navigating the AI Skills Gap: What Data Scientists Need to Learn Next

The field of Artificial Intelligence is experiencing an unprecedented acceleration. What was cutting-edge last year is commonplace today, and what's emerging now will be essential tomorrow. For data scientists, this rapid evolution presents both immense opportunities and a pressing need for continuous upskilling. By mid-2025, merely possessing traditional machine learning skills is no longer enough to stay at the forefront; navigating the evolving AI skills gap requires strategic learning and a proactive approach to mastering the next wave of capabilities.

While your foundational skills in statistics, programming, and core machine learning remain crucial, the landscape demands a broader, more nuanced expertise. Here's what data scientists need to learn next to thrive in this dynamic environment:

The Ever-Evolving AI Landscape (Mid-2025 Context)

Several key trends are defining the AI landscape and, consequently, the demand for new skills:

Generative AI Maturity: Beyond Large Language Models (LLMs), diffusion models and multimodal generative AI are becoming more sophisticated, impacting everything from content creation to synthetic data generation.

MLOps Ubiquity: The focus has firmly shifted from building models in isolation to operationalizing them in robust, scalable production environments.

Increased Focus on Ethical AI & Governance: As AI pervades critical domains, regulations and societal expectations demand fairness, transparency, and accountability.

Specialized Hardware & Cloud Integration: Reliance on cloud-native AI services is growing, and so is the need to understand specialized hardware (GPUs, TPUs, NPUs).

Real-time & Edge AI: The need for instantaneous insights and processing data closer to its source is driving innovation.

These shifts mean data scientists are increasingly expected to be T-shaped professionals – deep in their core analytical skills, but broad in their understanding of engineering, ethics, and deployment.

Key Skill Gaps & What to Learn Next

Here are the critical areas where data scientists should focus their learning:

1. Deep Dive into Generative AI

It's no longer sufficient to just know about LLMs; data scientists need to understand how to leverage them effectively.

Beyond Prompt Engineering: While prompt engineering is a starting point, delve into advanced techniques like Retrieval Augmented Generation (RAG), fine-tuning smaller open-source LLMs for specific tasks, and understanding the nuances of different model architectures (e.g., Transformer variants, diffusion models).

Synthetic Data Generation: Learn how to use generative models to create synthetic datasets for privacy-preserving research, data augmentation, and testing, especially when real data is scarce or sensitive.

Multimodal Generative AI: Explore how models combine text, image, audio, and video for richer content generation and analysis.

Ethical Implications of GenAI: Understand the risks of bias, misinformation, and intellectual property in generative models and how to mitigate them.
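The retrieval half of Retrieval Augmented Generation can be sketched without any ML library. In this example, a bag-of-words cosine similarity stands in for the embedding model and vector store a real system would use. The LLM call itself is omitted, and the prompt wording is an assumption.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]

def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Ground the model: put retrieved passages in the prompt and restrict it to them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return ("Answer using only the context below. If the answer is not there, say so.\n"
            f"Context:\n{context}\n\nQuestion: {query}")
```

The design point is that the model answers from retrieved, up-to-date text rather than from whatever it memorized during training. Swapping in a better retriever improves answers without retraining the LLM.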

2. MLOps Mastery and Productionization

The ability to deploy, monitor, and maintain models in production is now a core competency, blurring the lines between data scientists and ML engineers.

Containerization (Docker) & Orchestration (Kubernetes): Learn to package your models and dependencies, and understand how to manage and scale them in production environments.

CI/CD for ML: Implement continuous integration and continuous deployment pipelines for automated testing, versioning, and deployment of ML models.

Model Monitoring & Observability: Set up systems to track model performance and detect data drift, concept drift, and anomalies, so models remain relevant and accurate over time. Tools like MLflow, Prometheus, and Grafana are commonly used here.

Model Serving Frameworks: Gain proficiency in exposing models as APIs using frameworks like FastAPI, Flask, or cloud-native serving solutions (e.g., AWS SageMaker Endpoints, Vertex AI Endpoints).
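As one concrete data-drift check, the two-sample Kolmogorov-Smirnov (KS) statistic measures the largest gap between the empirical distributions of a feature in training data and in production. This sketch computes only the statistic. `scipy.stats.ks_2samp` also returns a p-value, and any alert threshold on top of it is an assumption to tune per feature.

```python
from bisect import bisect_right
from typing import Sequence

def ks_statistic(reference: Sequence[float], current: Sequence[float]) -> float:
    """Two-sample KS statistic: maximum distance between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    points = sorted(set(ref) | set(cur))  # the ECDF gap can only peak at observed values
    return max(abs(bisect_right(ref, x) / len(ref) - bisect_right(cur, x) / len(cur))
               for x in points)
```

A value near 0 means the distributions look alike. A value near 1 means they barely overlap.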

3. Advanced Data Engineering for Scalability and Reliability

AI models are only as good as the data they consume. A deeper understanding of data infrastructure is paramount.

Distributed Computing: Move beyond local Pandas. Master frameworks like Apache Spark or Dask for processing massive datasets across clusters.

Data Pipeline Orchestration: Learn to build robust and automated data pipelines using tools like Apache Airflow, Prefect, or Dagster, ensuring data quality and timely delivery for models.

Streaming Data: Understand concepts and technologies for real-time data processing (e.g., Apache Kafka, Flink) for applications requiring immediate insights.

Data Governance & Observability: Focus on ensuring data quality, lineage, and compliance throughout the data lifecycle.
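At their core, orchestrators such as Airflow, Prefect, and Dagster run tasks in dependency order. They add scheduling, retries, and monitoring on top. The sketch below shows only the dependency-ordering part, using Python's `graphlib` (3.9+). The pipeline tasks are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical daily pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_crm": set(),
    "extract_erp": set(),
    "validate": {"extract_crm", "extract_erp"},
    "build_features": {"validate"},
    "train_model": {"build_features"},
    "publish_report": {"validate"},
}

def run_pipeline(dag: dict[str, set[str]], tasks: dict) -> list[str]:
    """Run tasks in dependency order and return the order they completed in."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the DAG has a cycle
    completed = []
    while sorter.is_active():
        for name in sorter.get_ready():  # tasks in one batch could run in parallel
            tasks.get(name, lambda: None)()  # no-op when no callable is registered
            completed.append(name)
            sorter.done(name)
    return completed
```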

4. Ethical AI, Fairness, and Explainability (XAI)

With increasing regulation and societal scrutiny, building responsible AI is no longer optional.

Bias Detection & Mitigation: Learn to identify and quantify bias in data and models using various fairness metrics, and implement techniques to mitigate it (e.g., re-weighing, adversarial debiasing, post-processing adjustments).

Explainable AI (XAI) Tools: Gain proficiency with tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) to interpret model predictions and explain them to non-technical stakeholders.

AI Governance & Regulations: Understand the principles behind emerging AI regulations (like the EU AI Act) and how to apply ethical frameworks to your AI projects from design to deployment.
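Two simple fairness metrics illustrate what "quantify bias" means in practice. Demographic parity difference is the gap in positive-prediction rates between groups, and the disparate impact ratio divides the lowest rate by the highest. This is a sketch with hypothetical group labels. Which metric is appropriate depends on the use case, and the commonly cited "four-fifths" (0.8) ratio is a heuristic, not a universal legal test.

```python
from collections import defaultdict

def selection_rates(predictions, groups) -> dict:
    """Share of positive predictions (1s) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def fairness_report(predictions, groups) -> dict[str, float]:
    """Demographic parity difference and disparate impact ratio across groups."""
    rates = selection_rates(predictions, groups)
    lo, hi = min(rates.values()), max(rates.values())
    return {
        "demographic_parity_difference": hi - lo,
        "disparate_impact_ratio": lo / hi if hi else 1.0,
    }
```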

5. Domain Specialization and Business Acumen

Technical skills are powerful, but their impact is amplified by deep industry knowledge.

Causal Inference: Move beyond correlation to understand cause-and-effect relationships, enabling more robust decision-making and A/B testing designs.
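A/B testing is where causal reasoning most often meets day-to-day practice: because users are randomly assigned to variants, a difference in conversion rates can be read as the causal effect of the change. A minimal sketch of the standard pooled two-proportion z-test, using only the standard library (`math.erfc` for the normal tail probability). The counts in the example are invented for illustration.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference in conversion rates (pooled variance).

    Returns (lift, z, p_value), where lift = rate_b - rate_a.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # For standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = erfc(abs(z) / sqrt(2))
    return p_b - p_a, z, p_value
```

For example, 200 of 2,000 conversions in control versus 260 of 2,000 in the variant gives a lift of 3 percentage points, z ≈ 2.97, and p ≈ 0.003. The test is only trustworthy if the sample size was fixed in advance rather than chosen by stopping as soon as the result looks significant.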

Product Thinking: Understand the end-user and business context of your AI solution. How will it integrate into existing products? What user experience will it create?

Communication & Storytelling: Effectively translate complex technical insights into clear, actionable business recommendations for diverse audiences.

6. Cloud Computing & Specialized Hardware Awareness

Most advanced AI development happens in the cloud.

Cloud ML Services: Deepen your expertise in the AI/ML offerings of major cloud providers (AWS SageMaker, Azure Machine Learning, Google Cloud Vertex AI).

GPU/TPU Optimization: Understand the basics of how specialized hardware accelerates AI training and inference, and how to optimize your models for these environments.

Strategies for Upskilling

Hands-on Projects: The best way to learn is by doing. Build end-to-end projects that incorporate these new skills, from data ingestion to model deployment and monitoring.

Online Courses & Certifications: Platforms like Coursera, edX, and Udacity, along with cloud provider certifications, offer structured learning paths.

Open-Source Contributions: Contribute to relevant open-source projects to gain practical experience and build your network.

Networking & Mentorship: Connect with experts in these emerging fields; learn from their experiences.

Read & Research: Stay updated with academic papers, industry blogs, and conferences.

Conclusion

The "AI Skills Gap" isn't a deficit in core data science talent, but rather a dynamic shift in the required competencies. For data scientists, it's an exciting call to action. By proactively embracing these new areas – from the nuances of Generative AI and the rigor of MLOps to the critical importance of ethical AI and deep domain understanding – you won't just keep your job; you'll elevate your impact, becoming an indispensable architect of the intelligent future. Continuous learning isn't just a buzzword; it's the survival guide for the modern data scientist.

Accelerate Your Career with a Post Graduate Certificate in Artificial Intelligence and Machine Learning

In today’s fast-paced digital world, Artificial Intelligence (AI) and Machine Learning (ML) are not just buzzwords — they are at the heart of technological transformation across industries. From healthcare and finance to manufacturing and e-commerce, businesses are leveraging AI/ML to streamline operations, enhance customer experience, and make data-driven decisions. For working professionals looking to stay competitive, investing in a credible and industry-aligned program can make all the difference.

That’s where the Post Graduate Certificate In Artificial Intelligence And Machine Learning from BITS Pilani’s Work Integrated Learning Programmes (WILP) comes in.

Why Choose BITS Pilani WILP for AI and ML?

BITS Pilani is one of India’s most prestigious institutions, known for delivering academic excellence with a focus on industry relevance. The AI and ML certificate programme offered through WILP is uniquely designed for working professionals who want to advance their careers without taking a career break.

Here are some compelling reasons to consider this program:

1. Reputation and Credibility

The program is delivered by BITS Pilani, which has been granted “Institution of Eminence” status by the Government of India. With decades of academic excellence, BITS is trusted by top-tier companies and working professionals alike.

2. Designed for Working Professionals

The coursework is flexible and can be completed alongside a full-time job. It offers a balanced mix of live online classes, recorded lectures, assignments, and hands-on projects, making it perfect for busy professionals.

3. Industry-Relevant Curriculum

The program includes cutting-edge modules such as:

Deep Learning

Natural Language Processing (NLP)

Computer Vision

Reinforcement Learning

AI for Decision Making

ML Operations (MLOps)

4. Hands-On Experience

Students work on real-world projects and case studies that simulate industry challenges. This helps them build a portfolio that showcases their practical understanding of AI/ML concepts.

5. Faculty with Industry and Academic Expertise

The courses are taught by faculty members with significant academic and industry experience, ensuring a robust blend of theory and application.

Who Should Enroll?

This certificate program is ideal for:

Software Engineers and Developers

Data Analysts and Scientists

IT and Software Professionals looking to switch to AI/ML roles

Product Managers and Tech Leads who want to integrate AI into their solutions

Whether you’re a beginner in the AI/ML space or a tech-savvy professional looking to upskill, this course offers a structured and comprehensive learning path.

Career Opportunities After the Program

After completing the Post Graduate Certificate In Artificial Intelligence And Machine Learning, professionals can explore roles such as:

Machine Learning Engineer

AI Developer

Data Scientist

NLP Engineer

Computer Vision Specialist

AI Product Manager

With the AI/ML job market booming globally, certified professionals are in high demand across various sectors.

Final Thoughts

In an era where technological disruption is the norm, the demand for skilled AI and ML professionals is higher than ever. By enrolling in a top-tier program like the Post Graduate Certificate In Artificial Intelligence And Machine Learning from BITS Pilani WILP, you not only gain in-depth technical knowledge but also open the door to exciting new career opportunities.

If you’re ready to future-proof your career and become a leader in the AI revolution, this program might just be the perfect next step.

The Impact of AI on Software Development

Artificial Intelligence is significantly transforming the way software is developed—shifting it from manual coding to intelligent, automated, and adaptive systems. The impact of AI on software development can be seen in increased efficiency, improved accuracy, faster delivery, and a redefined role for developers.

1. Streamlining Repetitive Work

AI automates time-consuming and repetitive development tasks. Modern tools like GitHub Copilot, IBM watsonx, and ChatGPT support:

Code generation and smart autocomplete: Developers can generate code snippets from natural language prompts, reducing routine coding tasks.

Error detection and debugging: AI tools can proactively scan codebases for bugs and vulnerabilities, speeding up resolution.

Automated testing: AI helps create and execute test cases, improving test coverage and reducing human effort.

2. Enhancing Code Quality

AI-driven analysis of large code repositories helps identify common issues before deployment. These tools can also simulate a wide range of user scenarios during testing, helping teams ship robust, production-ready applications with fewer defects.

3. Speeding Up Development

The impact of AI on software development is evident in faster project delivery. By reducing manual intervention in coding, testing, and deployment, AI allows teams to:

Focus on core functionalities

Optimize continuous integration and delivery pipelines

Detect potential issues before they delay development

This leads to accelerated releases and a more responsive development cycle.

4. Unlocking Developer Creativity

By handling routine tasks, AI gives developers more room to focus on innovation and system design. It becomes a creativity enabler by allowing:

Faster prototyping

Informed decision-making through comparative analysis

Automation of technical assessments, such as code optimization and compliance checks

This empowers developers to build smarter solutions, not just faster ones.

5. Enabling Smarter Decision-Making

AI supports developers and project managers by providing intelligent recommendations throughout the development lifecycle. It enhances:

Planning and task prioritization

Resource allocation

Transparent, data-driven decisions backed by predictive analytics

These capabilities help reduce project risks and improve stakeholder alignment.

6. Redefining Developer Roles

AI is not here to replace developers but to enhance their capabilities. The developer’s role is evolving into one of strategic oversight:

Guiding and refining AI-generated outputs

Collaborating with machines through hybrid workflows

Taking on new roles such as AI ethics specialists, MLOps engineers, and prompt designers

This evolution marks a shift toward high-impact, human-in-the-loop development.

7. Navigating Challenges and Ethical Concerns

With opportunity comes responsibility. The integration of AI into development raises several challenges:

Data privacy and security: AI systems must protect sensitive information

Job uncertainty: While automation reduces some tasks, it also creates demand for higher-skilled roles

AI ethics: Ensuring fairness, transparency, and accountability in AI outputs is critical

Responsible AI use demands strong governance and continuous human oversight.

8. Adopting AI in Development: What’s Next?

To fully harness the impact of AI on software development, organizations should focus on:

Upskilling teams in AI tools and practices

Implementing governance frameworks to address bias, compliance, and transparency

Integrating AI seamlessly into existing pipelines for testing, monitoring, and CI/CD

Balancing automation with human judgment to achieve optimal outcomes

Emerging trends include:

Predictive code maintenance to prevent bugs

Generative AI tools for faster ideation and prototyping

Automated security and compliance validations

These advancements point to a smarter, more resilient development future.

Conclusion: Embracing the Impact of AI on Software Development

The impact of AI on software development is transformative and far-reaching. It improves efficiency, reduces human error, accelerates timelines, and empowers developers to focus on strategy and innovation. Rather than replacing the human element, AI complements it—serving as a collaborative force that enhances creativity and decision-making.

To thrive in this evolving landscape, companies must:

Invest in AI training and talent development

Create ethical and responsible AI frameworks

Design hybrid systems that blend automation with human expertise

Prepare for future roles that support AI-driven workflows

Ultimately, AI is not just changing how software is built—it’s redefining what’s possible. Organizations that embrace this shift today will be better positioned for long-term innovation, agility, and growth.

Johnny Santiago Valdez Calderon Breaks Down AI Model Deployment in 2025

As artificial intelligence continues to evolve at lightning speed, deployment strategies have become more critical than ever. In 2025, AI is no longer just a buzzword — it’s the engine behind automation, real-time analytics, predictive maintenance, smart assistants, and much more.

Recently, tech visionary Johnny Santiago Valdez Calderon offered a deep dive into the evolving landscape of AI model deployment in 2025. With years of hands-on experience in machine learning, DevOps, and scalable cloud solutions, Johnny’s insights are a beacon for professionals looking to master this complex terrain.

From Prototype to Production: The Shift in 2025

One of the key highlights of Johnny’s analysis is the shifting pipeline from research to deployment. Gone are the days when ML engineers could build a model in a Jupyter notebook and hand it off to developers for production. In 2025, end-to-end AI deployment pipelines are tightly integrated, seamless, and require multi-disciplinary collaboration.

Johnny emphasized the importance of ModelOps — the new evolution of MLOps — that brings continuous integration, continuous delivery (CI/CD), and continuous training (CT) under one umbrella. According to him:

“Model deployment is no longer the final step. It’s a continuous lifecycle that requires monitoring, retraining, and governance.”

Top Deployment Architectures to Know in 2025

Johnny Santiago Valdez Calderon outlined the most relevant and high-performing deployment architectures of 2025:

1. Serverless AI Deployment

AI is now being deployed on serverless platforms such as AWS Lambda, Azure Functions, and Google Cloud Run. These platforms scale models automatically and consume resources only when a request triggers them, which makes this approach cost-effective and efficient, particularly for intermittent workloads.

2. Edge AI with Microservices

In industries like automotive, manufacturing, and healthcare, Edge AI has taken center stage. Models are now deployed directly to edge devices with real-time inferencing capabilities, all orchestrated via lightweight microservices running in Kubernetes environments.

3. Multi-Cloud & Hybrid Deployments

In 2025, data sovereignty and latency concerns have pushed enterprises to adopt hybrid cloud and multi-cloud strategies. Johnny recommends using Kubernetes-based solutions like Kubeflow and MLRun for flexible, portable deployment across cloud environments.

Challenges Facing AI Model Deployment in 2025

Despite the advancements, Johnny is candid about the challenges that persist:

Model Drift: Models degrade over time due to changes in data. Monitoring pipelines with real-time feedback loops are a must.

Regulatory Compliance: With AI regulations tightening globally, auditability and explainability are now non-negotiable.

Latency vs. Accuracy Trade-offs: Choosing between faster inferencing and higher accuracy remains a difficult balance, especially in consumer-facing applications.
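One simple way to make the latency vs. accuracy trade-off explicit is to agree on a latency budget (here, a 95th-percentile target) and choose the most accurate candidate model that meets it. The sketch below assumes latency samples have already been measured for each candidate; the nearest-rank percentile is one of several percentile definitions, and a real evaluation would also weigh throughput and cost.

```python
import math
from typing import Dict, List, Optional, Tuple


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def pick_model(candidates: Dict[str, Tuple[float, List[float]]],
               p95_budget_ms: float) -> Optional[str]:
    """Return the most accurate model whose p95 latency fits the budget.

    candidates maps model name -> (accuracy, latency samples in ms).
    Returns None if no candidate meets the budget.
    """
    eligible = {
        name: acc for name, (acc, latencies) in candidates.items()
        if percentile(latencies, 95) <= p95_budget_ms
    }
    return max(eligible, key=eligible.get) if eligible else None
```

Using a tail percentile rather than the average matters for consumer-facing applications, because the slowest few percent of requests are the ones users notice.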

Best Practices Shared by Johnny Santiago Valdez Calderon

Here are Johnny’s top 5 recommendations for successful AI deployment in 2025:

Automate Everything: From data ingestion to model versioning and deployment — automation is critical.

Focus on Observability: Track model performance in production with tools like Prometheus, Grafana, and OpenTelemetry.

Data-Centric Development: Models are only as good as the data. Prioritize data pipelines as much as model architecture.

Use Feature Stores: Feature consistency between training and production environments is essential.
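The core problem a feature store addresses is training/serving skew, where the same feature ends up computed two different ways. The sketch below illustrates the principle with one shared, versioned feature definition used by both the offline (batch) path and the online (serving) path. The in-memory `ONLINE_STORE` dictionary, the version tag, and the feature names are hypothetical stand-ins, not any product's API; open-source tools such as Feast provide real offline and online stores.

```python
from datetime import datetime
from typing import Dict, List

FEATURE_VERSION = "v1"  # hypothetical version tag for the feature definition


def compute_features(txn_amounts: List[float], signup: datetime,
                     now: datetime) -> Dict[str, float]:
    """Single source of truth for feature logic, shared by training and serving."""
    count = len(txn_amounts)
    return {
        "txn_count": float(count),
        "avg_txn_amount": sum(txn_amounts) / count if count else 0.0,
        "account_age_days": (now - signup).total_seconds() / 86400,
    }


# Hypothetical in-memory online store: entity id -> (version, features).
ONLINE_STORE: Dict[str, tuple] = {}


def materialize(user_id, txn_amounts, signup, now):
    """Offline/batch path: compute features and push them to the online store."""
    feats = compute_features(txn_amounts, signup, now)
    ONLINE_STORE[user_id] = (FEATURE_VERSION, feats)
    return feats


def get_online_features(user_id):
    """Serving path: read precomputed features, rejecting stale definitions."""
    version, feats = ONLINE_STORE[user_id]
    if version != FEATURE_VERSION:
        raise RuntimeError("feature definition changed; re-materialize")
    return feats
```

Because training data and live requests both go through `compute_features`, a change to the feature logic cannot silently affect one path and not the other, and the version check makes stale online values fail loudly.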

Invest in Cross-Functional Teams: AI deployment requires collaboration between data scientists, ML engineers, software developers, and compliance teams.

Looking Ahead: The Future of AI Deployment

Johnny predicts that by 2030, zero-touch AI deployment will be the norm, where systems self-monitor, retrain, and adapt without human intervention. But to get there, enterprises must invest now in strong deployment frameworks, scalable architecture, and governance.

His closing remark sums it up perfectly:

“AI model deployment in 2025 is not just about putting a model into production — it’s about building resilient, scalable, and intelligent systems that evolve with time.”

Conclusion

As AI adoption accelerates, insights from leaders like Johnny Santiago Valdez Calderon provide a clear path forward. Whether you're an ML engineer, DevOps professional, or product leader, understanding the nuances of AI model deployment in 2025 is essential for staying ahead of the curve.

Microsoft Azure Data Science in Brisbane

Unlock insights with Azure AI Data Science solutions in Brisbane. Expert Azure Data Scientists deliver scalable, AI-driven analytics for your business growth.

AI Conferences in San Diego 2025

San Diego is shaping up to be one of the hottest destinations for artificial intelligence events in 2025.

With a stunning coastline, a vibrant tech ecosystem, and world-class venues, the city is hosting a diverse lineup of AI conferences that cater to researchers, developers, business leaders, and educators alike.

If you’re planning to stay ahead in the world of AI, San Diego’s 2025 conference calendar offers something for everyone—from practical tools and enterprise use cases to academic research and generative breakthroughs.

Early Year Momentum: Education & Enterprise AI

The year kicks off with a strong focus on AI in education. February brings together scholars and educators for a deep dive into how AI is reshaping learning, assessment, and student engagement. This is a must-attend for anyone working at the intersection of pedagogy and technology.

Following that, in March, the AI Copilot Summit will attract professionals interested in how AI tools like Microsoft Copilot are streamlining workflows, powering productivity, and transforming business operations.

With a mix of technical sessions and strategy-focused keynotes, this event is perfect for CIOs, product teams, and solution architects looking to integrate AI at scale.

Spring Focus: ML Engineers and Builders

In May, the spotlight turns to ML engineers and MLOps specialists with one of the season’s most practical events. MLcon San Diego is designed for those building and maintaining machine learning pipelines.

Attendees can expect workshops on real-time ML systems, productionizing generative models, and exploring the latest in MLOps tooling.

This is a hands-on experience for practitioners eager to move beyond theory and into applied AI at scale.

Summer Surge: Geospatial AI Innovation

By mid-year, AI meets geospatial intelligence at the Esri User Conference’s dedicated AI Summit.

Held in July, this event showcases how AI and machine learning are enhancing ArcGIS tools, enabling smarter location-based decision-making, and unlocking insights across urban planning, sustainability, and emergency response.

This summit is especially valuable for professionals working in government, environment, logistics, and infrastructure development.

Fall Highlights: Leadership, Governance, and Creativity

October is a power-packed month featuring the AI World Conference—one of the year’s most comprehensive gatherings for executives and decision-makers.

This conference dives into AI governance, risk management, cross-sector implementations, and scaling responsibly.

With sessions led by tech giants and industry veterans, it’s an essential event for enterprise leaders shaping their organization’s AI roadmap.

At the same time, TwitchCon North America returns to San Diego with a creative twist.

While not a traditional AI event, TwitchCon will feature next-gen tools for content creators—many powered by AI—including voice synthesis, real-time overlays, and content automation.

Year-End Prestige: AI Research at Its Peak

Closing out the year, December is expected to welcome NeurIPS—arguably the world’s most prestigious AI and machine learning research conference.

Known for groundbreaking papers, peer-reviewed workshops, and high-level academic discourse, NeurIPS brings the global research community together to explore the cutting edge of AI theory and practice.

If you’re passionate about deep learning, optimization, or experimental AI models, this event is unmatched.

Why Attend AI Conferences in San Diego?

San Diego isn’t just a beautiful location—it’s a rising force in the AI and tech ecosystem.

With a strong presence in biotech, defense, healthcare, and academia, the city offers the perfect environment for meaningful conversations and strategic collaborations.

The bonus?

Ocean views and sunshine after your sessions wrap up.

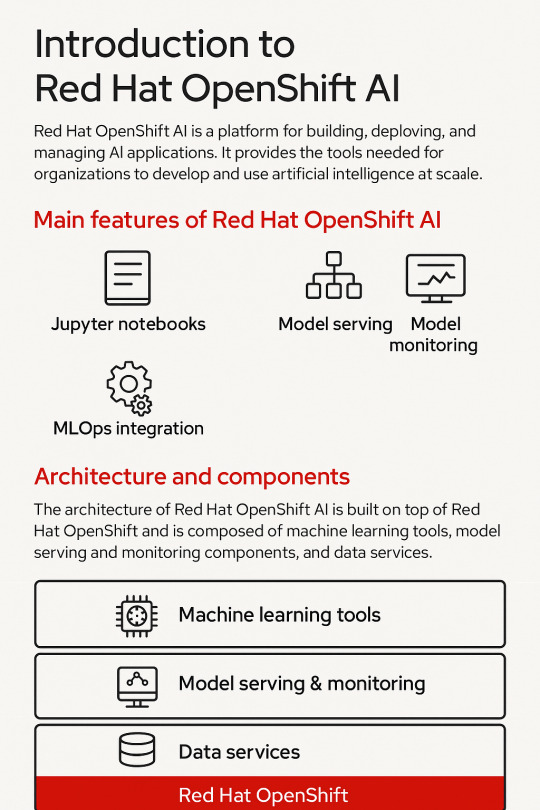

Introduction to Red Hat OpenShift AI: Features, Architecture & Components

In today’s data-driven world, organizations need a scalable, secure, and flexible platform to build, deploy, and manage artificial intelligence (AI) and machine learning (ML) models. Red Hat OpenShift AI is built precisely for that. It provides a consistent, Kubernetes-native platform for MLOps, integrating open-source tools, enterprise-grade support, and cloud-native flexibility.

Let’s break down the key features, architecture, and components that make OpenShift AI a powerful platform for AI innovation.

🔍 What is Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly known as OpenShift Data Science) is a fully supported, enterprise-ready platform that brings together tools for data scientists, ML engineers, and DevOps teams. It enables rapid model development, training, and deployment on the Red Hat OpenShift Container Platform.

🚀 Key Features of OpenShift AI

1. Built for MLOps

OpenShift AI supports the entire ML lifecycle—from experimentation to deployment—within a consistent, containerized environment.

2. Integrated Jupyter Notebooks

Data scientists can use Jupyter notebooks pre-integrated into the platform, allowing quick experimentation with data and models.

3. Model Training and Serving

Use Kubernetes to scale model training jobs and deploy inference services using tools like KServe and Seldon Core.

4. Security and Governance

OpenShift AI integrates enterprise-grade security, role-based access controls (RBAC), and policy enforcement using OpenShift’s built-in features.

5. Support for Open Source Tools

Seamless integration with open-source frameworks like TensorFlow, PyTorch, Scikit-learn, and ONNX for maximum flexibility.

6. Hybrid and Multicloud Ready

You can run OpenShift AI on any OpenShift cluster, whether on-premises or across cloud providers like AWS, Azure, and GCP.

🧠 OpenShift AI Architecture Overview

Red Hat OpenShift AI builds upon OpenShift’s robust Kubernetes platform, adding specific components to support the AI/ML workflows. The architecture broadly consists of:

1. User Interface Layer

JupyterHub: Multi-user Jupyter notebook support.

Dashboard: UI for managing projects, models, and pipelines.

2. Model Development Layer

Notebooks: Containerized environments with GPU/CPU options.

Data Connectors: Access to S3, Ceph, or other object storage for datasets.

3. Training and Pipeline Layer

Open Data Hub and Kubeflow Pipelines: Automate ML workflows.

Ray, MPI, and Horovod: For distributed training jobs.

4. Inference Layer

KServe/Seldon: Model serving at scale with REST and gRPC endpoints.

Model Monitoring: Metrics and performance tracking for live models.

5. Storage and Resource Management

Ceph / OpenShift Data Foundation: Persistent storage for model artifacts and datasets.

GPU Scheduling and Node Management: Leverages OpenShift for optimized hardware utilization.
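To give a feel for what calling the inference layer looks like, the sketch below builds a prediction request in the shape of KServe's V1 protocol (`POST /v1/models/<name>:predict` with an `{"instances": [...]}` body, returning `{"predictions": [...]}`) using only Python's standard library. The host and model name are hypothetical. On OpenShift AI, the actual URL depends on how the InferenceService is exposed, and some serving runtimes use the V2 (Open Inference Protocol) format instead, so check the runtime in use.

```python
import json
import urllib.request
from typing import List


def build_v1_predict_request(base_url: str, model_name: str,
                             instances: List[List[float]]) -> urllib.request.Request:
    """Build (but do not send) a V1-protocol prediction request."""
    url = f"{base_url.rstrip('/')}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def parse_v1_response(raw: bytes) -> list:
    """V1 responses carry results under the "predictions" key."""
    return json.loads(raw)["predictions"]


def send_predict(base_url, model_name, instances, timeout=10.0):
    """Send the request; requires a reachable inference endpoint."""
    req = build_v1_predict_request(base_url, model_name, instances)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_v1_response(resp.read())
```

Separating request construction from sending keeps the payload format testable without a live cluster; authentication headers (for example, a bearer token for a protected route) would be added in `build_v1_predict_request`.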

🧩 Core Components of OpenShift AI

| Component | Description |
|---|---|
| JupyterHub | Web-based development interface for notebooks |
| KServe/Seldon | Inference serving engines with auto-scaling |
| Open Data Hub | ML platform tools including Kafka, Spark, and more |
| Kubeflow Pipelines | Workflow orchestration for training pipelines |
| ModelMesh | Scalable, multi-model serving |
| Prometheus + Grafana | Monitoring and dashboarding for models and infrastructure |
| OpenShift Pipelines | CI/CD for ML workflows using Tekton |

🌎 Use Cases

Financial Services: Fraud detection using real-time ML models

Healthcare: Predictive diagnostics and patient risk models

Retail: Personalized recommendations powered by AI

Manufacturing: Predictive maintenance and quality control

🏁 Final Thoughts

Red Hat OpenShift AI brings together the best of Kubernetes, open-source innovation, and enterprise-level security to enable real-world AI at scale. Whether you’re building a simple classifier or deploying a complex deep learning pipeline, OpenShift AI provides a unified, scalable, and production-grade platform.

For more information, follow Hawkstack Technologies.

Empowering AI Operations with Red Hat OpenShift AI (DO316)

In the rapidly evolving landscape of Artificial Intelligence and Machine Learning (AI/ML), enterprises face growing challenges in operationalizing, scaling, and managing AI workloads across hybrid cloud environments. Red Hat’s DO316 course – Operating and Managing Red Hat OpenShift AI – is designed to bridge the gap between data science innovation and enterprise-grade infrastructure management.

🚀 Course Overview

DO316 is a hands-on, lab-driven course tailored for DevOps engineers, platform engineers, and site reliability engineers (SREs) who are responsible for managing AI/ML infrastructure. It focuses on deploying, operating, and managing the Red Hat OpenShift AI platform (formerly OpenShift Data Science), which provides a Kubernetes-native environment to support the entire AI/ML lifecycle.

🛠️ What You’ll Learn

Participants gain practical experience in:

✅ Deploying and configuring Red Hat OpenShift AI components

✅ Managing user access and compute resource quotas

✅ Monitoring and optimizing infrastructure for ML workloads

✅ Managing Jupyter notebooks, GPU resources, and pipelines

✅ Integrating storage solutions for persistent AI data

✅ Automating operations using GitOps and MLOps patterns

📦 Real-World Relevance

Organizations are increasingly adopting MLOps practices to ensure reproducibility, scalability, and governance of AI workflows. DO316 equips engineers with the operational expertise to support:

Large language models (LLMs)

Generative AI pipelines

Real-time inferencing on the edge

Multi-tenant AI environments

With tools like OpenShift Pipelines, ModelMesh Serving, and KServe, this course covers how to scale and serve AI models securely and efficiently.

🧑‍💻 Who Should Enroll?

This course is ideal for:

DevOps engineers supporting AI platforms

Infrastructure teams enabling data science workloads

SREs ensuring uptime and scalability of AI applications

Platform engineers building self-service AI/ML environments

Prerequisite: familiarity with OpenShift (DO180 or equivalent experience recommended)

🎓 Certification Pathway

DO316 is a part of the Red Hat Certified Specialist in OpenShift AI pathway, which strengthens your credibility in managing AI/ML workloads in production.

Final Thoughts

As AI moves from experimentation to production, the ability to operate and manage scalable, secure AI platforms is no longer optional—it's essential. Red Hat DO316 prepares professionals to be the backbone of enterprise AI success, enabling collaboration between data scientists and IT operations.

Interested in mastering AI infrastructure with OpenShift?

Start your journey with DO316 and become the enabler of next-gen intelligent applications. Visit our website www.hawkstack.com

What You’ll Learn in an Artificial Intelligence Course in Dubai: Topics, Tools & Projects

Artificial Intelligence (AI) is at the heart of global innovation—from self-driving cars and voice assistants to personalized healthcare and financial forecasting. As the UAE pushes forward with its Artificial Intelligence Strategy 2031, Dubai has emerged as a major hub for AI education and adoption. If you’re planning to join an Artificial Intelligence course in Dubai, understanding what you’ll actually learn can help you make the most of your investment.

In this detailed guide, we’ll explore the core topics, tools, and hands-on projects that form the foundation of a quality AI course in Dubai. Whether you're a student, IT professional, or business leader, this blog will give you a clear picture of what to expect and how this training can shape your future.

Why Study Artificial Intelligence in Dubai?

Dubai has positioned itself as a leader in emerging technologies. The city is home to:

Dubai Future Foundation and Dubai AI Lab

Smart city initiatives driven by AI

Multinational companies like Microsoft, IBM, and Oracle investing heavily in AI

A government-backed mission to make AI part of every industry by 2031

Pursuing an Artificial Intelligence course in Dubai offers:

Access to world-class instructors and global curricula

Networking opportunities with tech professionals and companies

A blend of theoretical knowledge and practical experience

Placement support and industry-recognized certification

Core Topics Covered in an AI Course in Dubai

A comprehensive Artificial Intelligence program in Dubai typically follows a structured curriculum designed to take learners from beginner to advanced levels.

1. Introduction to Artificial Intelligence

What is AI and its real-world applications

AI vs. Machine Learning vs. Deep Learning

AI use-cases in healthcare, banking, marketing, and smart cities

2. Mathematics and Statistics for AI

Linear algebra and matrices

Probability and statistics

Calculus concepts used in neural networks

3. Python Programming for AI

Python basics and intermediate syntax

Libraries: NumPy, Pandas, Matplotlib

Data visualization and manipulation techniques

4. Machine Learning

Supervised learning: Linear regression, Logistic regression, Decision Trees

Unsupervised learning: Clustering and dimensionality reduction (e.g., PCA)

Model evaluation metrics and performance tuning

5. Deep Learning

Basics of neural networks (ANN)

Convolutional Neural Networks (CNN) for image analysis

Recurrent Neural Networks (RNN) and LSTM for time-series and NLP

6. Natural Language Processing (NLP)

Text preprocessing, tokenization, lemmatization

Sentiment analysis, named entity recognition

Word embeddings (Word2Vec, GloVe) and contextual embeddings from Transformer models

7. Generative AI and Large Language Models

Overview of GPT, BERT, and modern transformer models

Prompt engineering and text generation

Ethics and risks in generative AI

8. Computer Vision

Image classification and object detection

Use of OpenCV and deep learning for image processing

Real-time video analysis and face recognition

9. AI Deployment & MLOps

Building REST APIs using Flask or FastAPI

Deploying models using Streamlit, Docker, or cloud platforms

Basics of CI/CD and version control (Git, GitHub)

10. AI Ethics & Responsible AI

Bias in data and algorithms

AI governance and transparency

Global and UAE-specific ethical frameworks

Career Opportunities After an AI Course in Dubai

Upon completion of an Artificial Intelligence course in Dubai, learners can explore multiple in-demand roles:

AI Engineer

Machine Learning Engineer

Data Scientist

AI Analyst

Computer Vision Engineer

NLP Specialist

AI Product Manager

Top Companies Hiring in Dubai:

Emirates NBD (AI in banking)

Dubai Electricity and Water Authority (DEWA)

Microsoft Gulf

IBM Middle East

Oracle

Noon.com and Careem

Dubai Smart Government

Why Choose Boston Institute of Analytics (BIA) for AI Training in Dubai?

If you're looking for quality AI education with global recognition, Boston Institute of Analytics (BIA) stands out as a top choice.

Why BIA?

🌍 Globally Recognized Certification

👩‍🏫 Industry Experts as Instructors

🧪 Hands-On Projects and Practical Learning

💼 Placement Assistance and Career Coaching

📊 Updated Curriculum Aligned with Industry Demands

Whether you're a student, IT professional, or entrepreneur, BIA’s Artificial Intelligence Course in Dubai is designed to make you job-ready and industry-competent.

Final Thoughts

Enrolling in an Artificial Intelligence Course in Dubai is an excellent way to gain future-ready skills in one of the fastest-growing fields globally. From learning Python and machine learning to working with deep learning and generative AI, the curriculum is designed to give you practical knowledge and technical confidence.

Dubai’s focus on AI innovation, combined with international training standards, ensures you’ll be positioned to succeed in a global job market.

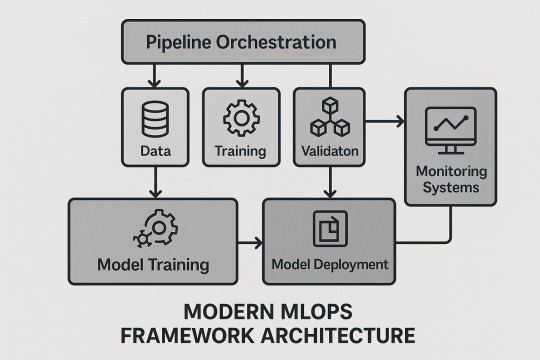

Scaling Machine Learning Operations with Modern MLOps Frameworks

The rise of business-critical AI demands sophisticated operational frameworks. Modern end-to-end machine learning pipeline frameworks combine ML best practices with DevOps, enabling scalable, reliable, and collaborative operations.

MLOps Framework Architecture

Experiment management and artifact tracking

Model registry and approval workflows

Pipeline orchestration and workflow management

Advanced Automation Strategies

Continuous integration and testing for ML

Automated retraining and rollback capabilities

Multi-stage validation and environment consistency
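Automated retraining is only safe when it is paired with a promotion gate and a rollback path. The sketch below shows one simple policy under assumed conventions: a retrained "challenger" is promoted only if it beats the current "champion" on a held-out metric by a minimum margin, and the registry keeps earlier versions so production can be rolled back. The in-memory `Registry` class, the AUC metric, and the 0.01 margin are hypothetical; real model registries such as MLflow's manage this state for you.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelVersion:
    version: int
    auc: float  # held-out validation metric (higher is better)


@dataclass
class Registry:
    """Hypothetical in-memory registry tracking the production history."""
    history: List[ModelVersion] = field(default_factory=list)

    @property
    def champion(self) -> Optional[ModelVersion]:
        return self.history[-1] if self.history else None

    def promote_if_better(self, challenger: ModelVersion,
                          min_gain: float = 0.01) -> bool:
        """Promote the challenger only if it beats the champion by min_gain."""
        champ = self.champion
        if champ is None or challenger.auc >= champ.auc + min_gain:
            self.history.append(challenger)
            return True
        return False

    def rollback(self) -> ModelVersion:
        """Revert to the previous production version, e.g. after a live regression."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.history[-1]
```

Requiring a minimum gain, rather than any improvement at all, keeps scheduled retraining from churning production models over differences that are within evaluation noise.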

Enterprise-Scale Infrastructure

Kubernetes-based and serverless ML platforms

Distributed training and inference systems

Multi-cloud and hybrid cloud orchestration

Monitoring and Observability

Multi-dimensional monitoring and predictive alerting

Root cause analysis and distributed tracing

Advanced drift and business impact analytics

Collaboration and Governance

Role-based collaboration and cross-functional workflows

Automated compliance and audit trails

Policy enforcement and risk management

Technology Stack Integration

Kubeflow, MLflow, Weights & Biases, Apache Airflow

API-first and microservices architectures

AutoML, edge computing, federated learning

Conclusion

Comprehensive end-to-end machine learning pipeline frameworks are the foundation for sustainable, scalable AI. Investing in MLOps capabilities helps your organization innovate, deploy, and scale machine learning with confidence and agility.
