#OSS Fuzz


Text

How did this backdoor come to be?

It would appear that this backdoor was years in the making. In 2021, someone with the username JiaT75 made their first known commit to an open source project. In retrospect, the change to the libarchive project is suspicious, because it replaced the safe_fprintf function with a variant that has long been recognized as less secure. No one noticed at the time.

The following year, JiaT75 submitted a patch over the xz Utils mailing list, and, almost immediately, a never-before-seen participant named Jigar Kumar joined the discussion and argued that Lasse Collin, the longtime maintainer of xz Utils, hadn’t been updating the software often or fast enough. Kumar, with the support of Dennis Ens and several other people who had never had a presence on the list, pressured Collin to bring on an additional developer to maintain the project.

In January 2023, JiaT75 made their first commit to xz Utils. In the months following, JiaT75, who used the name Jia Tan, became increasingly involved in xz Utils affairs. For instance, Tan replaced Collin's contact information with their own on oss-fuzz, a project that scans open source software for vulnerabilities that can be exploited. Tan also requested that oss-fuzz disable the ifunc function during testing, a change that prevented it from detecting the malicious changes Tan would soon make to xz Utils.

#consensus seems to be that 'jia tan' is an intelligence agency#as opposed to a ransomware group or similar


Text

Google's AI-Powered OSS-Fuzz Tool Finds 26 Vulnerabilities in Open-Source Projects

Source: https://thehackernews.com/2024/11/googles-ai-powered-oss-fuzz-tool-finds.html

More info: https://security.googleblog.com/2024/11/leveling-up-fuzzing-finding-more.html


Text

Fuzz Testing: Strengthening Software Security with Keploy

In today’s fast-paced digital world, ensuring software security is a top priority. One of the most effective ways to uncover hidden vulnerabilities is through fuzz testing (or fuzzing). This powerful software testing technique involves injecting random, malformed, or unexpected inputs into an application to identify security weaknesses. Fuzz testing is widely used to detect crashes, memory leaks, and security exploits that traditional testing methods might miss.

With the increasing complexity of modern software, AI-driven testing tools like Keploy can automate fuzz testing and improve the overall security and stability of applications. In this blog, we’ll explore fuzz testing, its importance, different types, tools, best practices, and how Keploy enhances fuzz testing.

What is Fuzz Testing?

Fuzz testing is an automated testing approach designed to test how software handles unexpected or incorrect input data. By flooding an application with random or malformed data, fuzzing helps identify security vulnerabilities, crashes, and stability issues that attackers could exploit.

Unlike traditional testing, which uses predefined test cases, fuzz testing is designed to break the system by simulating real-world attack scenarios. It plays a crucial role in penetration testing, security auditing, and robustness testing.

Why is Fuzz Testing Important?

Fuzz testing is one of the most effective ways to uncover security flaws in software applications. Here’s why it matters:

Identifies Hidden Vulnerabilities – Helps find security loopholes that traditional testing might miss.

Enhances Software Stability – Prevents crashes and unexpected behavior under extreme conditions.

Automates Security Testing – Saves time by automatically generating unexpected test cases.

Simulates Real-World Attacks – Identifies weaknesses before malicious attackers do.

Real-World Example

Fuzz testing has played a crucial role in identifying vulnerabilities in operating systems, web applications, and network protocols. Major software vendors like Google, Microsoft, and Apple use fuzzing techniques to detect security flaws before deployment.

Types of Fuzz Testing

There are different fuzzing techniques based on how inputs are generated and applied:

1. Mutation-Based Fuzzing

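This method modifies existing valid inputs by introducing small changes or random errors. It is simple to set up, but because the mutated inputs stay close to the original samples, it can miss code paths that only structurally different inputs would reach.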
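To make the idea concrete, here is a minimal sketch of a byte-level mutator in Python. The function name mutate, the three mutation operators, and the sample seed are assumptions chosen for illustration; real fuzzers use a much richer set of operators.

```python
import random


def mutate(seed: bytes, rng: random.Random, max_mutations: int = 4) -> bytes:
    """Return a copy of `seed` with a few random byte-level changes."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, max_mutations)):
        choice = rng.choice(("flip", "insert", "delete"))
        if choice == "flip" and data:
            # Flip a single bit in a randomly chosen byte.
            pos = rng.randrange(len(data))
            data[pos] ^= 1 << rng.randrange(8)
        elif choice == "insert":
            # Insert a random byte at a random position.
            data.insert(rng.randint(0, len(data)), rng.randrange(256))
        elif choice == "delete" and data:
            # Remove one randomly chosen byte.
            del data[rng.randrange(len(data))]
    return bytes(data)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    valid_input = b'{"user": "alice", "age": 30}'  # hypothetical valid sample
    for _ in range(3):
        print(mutate(valid_input, rng))
```

Each output is a near-miss of the valid sample, which is why this style is good at shaking out parser bugs yet tends to stay close to the structure of its seeds.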

2. Generation-Based Fuzzing

Instead of modifying existing inputs, this approach creates test inputs from scratch based on predefined rules. It is more structured and effective for testing complex applications.
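As a rough illustration, the sketch below builds inputs from a small grammar rather than from existing samples. The GRAMMAR table (a tiny arithmetic language) and the generate function are hypothetical and exist only for this example.

```python
import random

# Hypothetical grammar for a tiny arithmetic language. Keys are
# non-terminals; each maps to a list of alternative expansions.
GRAMMAR = {
    "<expr>": [["<term>", "+", "<expr>"], ["<term>", "-", "<expr>"], ["<term>"]],
    "<term>": [["<num>"], ["(", "<expr>", ")"]],
    "<num>": [["0"], ["7"], ["42"], ["-1"], ["99999999999999999999"]],
}


def generate(symbol: str, rng: random.Random, depth: int = 0, max_depth: int = 6) -> str:
    """Expand `symbol` into a string by applying randomly chosen grammar rules."""
    if symbol not in GRAMMAR:
        return symbol  # terminal: emit as-is
    rules = GRAMMAR[symbol]
    if depth >= max_depth:
        # Past the depth limit, take the shortest rule so expansion terminates.
        rule = min(rules, key=len)
    else:
        rule = rng.choice(rules)
    return "".join(generate(part, rng, depth + 1, max_depth) for part in rule)


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(5):
        print(generate("<expr>", rng))
```

Because every output follows the grammar, the inputs get past the target's basic syntax checks and exercise deeper logic. Boundary values such as the very large number are placed in the grammar on purpose.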

3. Coverage-Guided Fuzzing

This technique monitors code coverage and adjusts test inputs dynamically to reach more parts of the application: an input that exercises code no earlier input has reached is kept and mutated further. Tools like AFL (American Fuzzy Lop) and libFuzzer use this approach.
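The feedback loop can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how AFL or libFuzzer are implemented: coverage is approximated by the set of executed line numbers collected with sys.settrace, and target, run_with_coverage, mutate and fuzz are hypothetical names.

```python
import random
import sys


def target(data: bytes) -> None:
    """Hypothetical target: fails only on inputs that start with b'FUZ'."""
    if len(data) > 0 and data[0] == ord("F"):
        if len(data) > 1 and data[1] == ord("U"):
            if len(data) > 2 and data[2] == ord("Z"):
                raise ValueError("bug reached")


def run_with_coverage(func, data):
    """Run func(data); return (executed line numbers, exception or None)."""
    lines = set()
    code = func.__code__

    def tracer(frame, event, arg):
        if frame.f_code is code:
            if event == "line":
                lines.add(frame.f_lineno)
            return tracer  # keep tracing this frame
        return None  # ignore every other frame

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(data)
        error = None
    except Exception as exc:
        error = exc
    finally:
        sys.settrace(previous)
    return lines, error


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Overwrite one random byte, or insert one random byte."""
    buf = bytearray(data)
    if buf and rng.random() < 0.7:
        buf[rng.randrange(len(buf))] = rng.randrange(256)
    else:
        buf.insert(rng.randint(0, len(buf)), rng.randrange(256))
    return bytes(buf)


def fuzz(func, seeds, iterations, rng):
    """Keep inputs that reach new lines; stop at the first failure."""
    corpus = list(seeds)
    seen = set()
    for seed in corpus:
        seen |= run_with_coverage(func, seed)[0]
    for _ in range(iterations):
        candidate = mutate(rng.choice(corpus), rng)
        lines, error = run_with_coverage(func, candidate)
        if error is not None:
            return candidate, error
        if not lines <= seen:  # candidate reached at least one new line
            seen |= lines
            corpus.append(candidate)
    return None, None


if __name__ == "__main__":
    crash, error = fuzz(target, [b"AAAA"], 100_000, random.Random(0))
    print(crash, error)
```

Purely random input would need to guess three specific bytes at once. With coverage feedback, each correct byte is rewarded separately, so the search proceeds one branch at a time.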

How Fuzz Testing Works

The fuzz testing process typically follows these steps:

Input Generation – The fuzzer creates random, malformed, or unexpected inputs.

Test Execution – The inputs are injected into the application to observe how it reacts.

Behavior Monitoring – The system is monitored for crashes, memory leaks, or unexpected outputs.

Bug Analysis – Any failures are logged and analyzed for potential security threats.

This approach automates the testing process and quickly identifies vulnerabilities.
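The four steps above can be sketched as a small Python loop. Everything here is illustrative: parse_length_prefixed is a hypothetical target with a planted bug, and treating ValueError as an expected rejection rather than a failure is an assumption made for this example.

```python
import random
import traceback


def parse_length_prefixed(data: bytes) -> bytes:
    """Hypothetical target: the first byte gives the payload length."""
    if not data:
        raise ValueError("empty input")  # documented, expected rejection
    length = data[0]
    payload = data[1:1 + length]
    _checksum = data[1 + length]  # planted bug: IndexError on short input
    return payload


def fuzz(target, iterations: int, rng: random.Random) -> dict:
    """Return {(exception name, function, line): shortest reproducer}."""
    findings = {}
    for _ in range(iterations):
        # 1. Input generation: random bytes of random length.
        data = bytes(rng.randrange(256) for _ in range(rng.randint(0, 8)))
        try:
            # 2. Test execution.
            target(data)
        except ValueError:
            continue  # expected rejection, not a bug
        except Exception as exc:
            # 3. Behavior monitoring: any other exception counts as a failure.
            frame = traceback.extract_tb(exc.__traceback__)[-1]
            key = (type(exc).__name__, frame.name, frame.lineno)
            # 4. Bug analysis: bucket by failure site, keep the shortest input.
            if key not in findings or len(data) < len(findings[key]):
                findings[key] = data
    return findings


if __name__ == "__main__":
    for key, reproducer in fuzz(parse_length_prefixed, 500, random.Random(0)).items():
        print(key, reproducer)
```

Grouping failures by exception type and location keeps hundreds of crashing inputs from showing up as hundreds of separate bugs, and the shortest reproducer is usually the easiest one to debug.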

Fuzz Testing vs. Other Testing Techniques

How does fuzz testing compare to traditional testing methods?

While unit testing and functional testing ensure correct behavior, fuzz testing goes beyond correctness to test robustness against unexpected conditions.

Tools for Fuzz Testing

Several tools automate fuzz testing:

AFL (American Fuzzy Lop): A powerful, coverage-guided fuzzer used for security testing.

libFuzzer: An in-process, coverage-guided fuzzer for C/C++ programs that helps find memory issues.

Google OSS-Fuzz: A large-scale fuzzing service that continuously tests open-source projects.

Keploy: An AI-powered testing platform that automates test case generation and fuzz testing for APIs and integration testing.

How Keploy Enhances Fuzz Testing

Keploy is an AI-driven testing tool that generates test cases automatically by recording real-world traffic and responses. It enhances fuzz testing by:

Generating unexpected test cases for API security testing.

Simulating real-world edge cases without manual effort.

Improving test coverage and reliability for software applications.

Automating bug detection in production-like environments.

With Keploy, developers can integrate fuzz testing into their continuous testing strategy to ensure secure and robust software deployments.

Best Practices for Fuzz Testing

To maximize fuzz testing effectiveness, follow these best practices:

Integrate Early in the Development Cycle – Detect vulnerabilities before release.

Use a Combination of Testing Approaches – Combine fuzz testing with unit and integration testing.

Monitor and Log Failures – Keep detailed logs to analyze bugs effectively.

Automate with AI-powered Tools – Use Keploy and other automated fuzz testing tools to enhance efficiency.

Conclusion

Fuzz testing is a critical security testing technique that helps identify vulnerabilities by injecting unexpected inputs into software applications. It ensures software stability, prevents crashes, and enhances security. By leveraging AI-driven tools like Keploy, developers can automate fuzz testing, generate more realistic test cases, and improve software reliability. As cyber threats continue to evolve, integrating fuzz testing into your testing strategy is essential for building secure, robust, and high-performing applications.


Text

Google OSS-Fuzz Harnesses AI to Expose 26 Hidden Security Vulnerabilities

http://i.securitythinkingcap.com/TGJjxP


Text

AIxCC, To Protect Nation’s Most Important Software

DARPA AI cyber challenge

One of the clearest benefits of artificial intelligence (AI) is its potential to improve cybersecurity for businesses and internet users worldwide. This matters all the more because malicious actors continue to target and exploit critical software and systems. These dynamics will change as AI develops, and if used properly, AI may help move the sector towards a more secure architecture for the digital age.

Experience also shows that getting there requires strong public-private partnerships and opportunities for innovation such as DARPA’s AI Cyber Challenge (AIxCC) Semifinal Competition, which takes place August 8–11 at DEF CON 32 in Las Vegas. The two-year challenge brings together cybersecurity and AI researchers to build new AI tools that help secure major open-source projects.

This competition is the first stage of the challenge that DARPA unveiled at Black Hat last year. The Semifinal Competition follows the White House’s voluntary AI commitments, under which industry and government came together to promote responsible practices in AI development and use. Here is how Google is supporting AIxCC competitors:

Google Cloud resources: Google will award up to $1 million in Google Cloud credits to each qualifying AIxCC team to help set competitors up for success. Participants can redeem the credits for Gemini and other eligible Google Cloud services. As a result, competitors will have access to Google Cloud AI and machine learning models throughout the challenge, which they can use and build on for a variety of purposes. Google also encourages participants to take advantage of its incentive programs, such as the Google for Startups Cloud Program, which covers up to $350,000 in Google Cloud costs for AI startups.

Experts in cybersecurity: For years, Google has been at the forefront of using AI in security, whether for sophisticated threat detection or for shielding Gmail users from phishing scams. Having seen the promise of AI for security, Google is excited to share that knowledge with AIxCC by making its security specialists available throughout the challenge. They will offer guidance on infrastructure and projects and help create the criteria by which competitors will be judged. In particular, Google discusses recommended approaches to AIxCC in its tutorial on how AI can improve Google’s open-source OSS-Fuzz technology.

Experience AIxCC at DEF CON: Google will showcase its AI technology next week at the AIxCC Semifinal Experience at DEF CON, with an AI-focused hacker demo and interactive demonstrations. The Google exhibit will also have Chromebooks on hand to demonstrate AI technologies, including Google Security Operations and SecLM. Google security specialists will be available to talk through technical questions with visitors and share what they know.

AI Cyber Challenge DARPA

At Black Hat USA 2023, DARPA invited top computer scientists, AI experts, software engineers, and others to take part in the AI Cyber Challenge (AIxCC). The two-year challenge encourages innovation at the intersection of AI and cybersecurity to create new tools.

Software powers everything in our ever-connected society, from public utilities to financial institutions. Software increases productivity and makes life easier, but it also gives bad actors a larger attack surface.

This surface includes vital infrastructure, which is particularly susceptible to cyberattacks, according to DARPA specialists, because there aren’t enough technologies to adequately secure systems on a large scale. Cyber defenders face a formidable attack surface, which has been evident in recent years as malevolent cyber actors take advantage of this state of affairs to pose risks to society. Notwithstanding these weaknesses, contemporary technological advancements might offer a way around them.

AIxCC DARPA

According to Perri Adams, DARPA’s AIxCC program manager, “AIxCC represents a first-of-its-kind collaboration between top AI companies, led by DARPA to create AI-driven systems to help address one of society’s biggest issues: cybersecurity.” Over the past decade, exciting AI-enabled capabilities have emerged. DARPA sees a lot of potential for this technology to be applied to important cybersecurity challenges when handled appropriately. By automatically safeguarding essential software at scale, DARPA can have the greatest impact on cybersecurity in the nation and the world.

Participation at AIxCC will be available on two tracks: the Funded Track and the Open Track. Competitors in the Funded Track will be chosen from submissions made in response to a request for proposals for Small Business Innovation Research. Funding for up to seven small enterprises to take part will be provided. Through the competition website, Open Track contestants will register with DARPA; they will not receive funds from DARPA.

During the semifinal phase, teams on all tracks will compete in a qualifying event where the best scoring teams (up to 20) will be invited to the semifinal tournament. The top five scoring teams from these will advance to the competition’s final stage and win cash prizes. Additional cash awards will be given to the top three contestants in the final competition.

DARPA AIxCC

Leading AI companies come together at DARPA AIxCC, where they will collaborate with DARPA to make their state-of-the-art technology and expertise available to competitors. DARPA will work with Anthropic, Google, Microsoft, and OpenAI to enable competitors to create cutting-edge cybersecurity solutions.

The Open Source Security Foundation (OpenSSF), part of the Linux Foundation, will act as a challenge advisor to help teams develop AI systems that can tackle important cybersecurity problems, such as the security of critical infrastructure and software supply chains. The majority of software, and therefore the majority of the code that requires security, is open-source and frequently created by volunteers in the community. According to the Linux Foundation, approximately 80% of contemporary software stacks, which run everything from phones and cars to electricity grids and manufacturing facilities, use open-source software.

Lastly, there will be AIxCC competitions at DEF CON and additional events at Black Hat USA. These two globally renowned cybersecurity conferences bring tens of thousands of practitioners, experts, and fans to Las Vegas every August. AIxCC has two stages: the semifinal, held at DEF CON 2024, and the final, held at DEF CON 2025, both in Las Vegas.

DEF CON 32’s AI Village

In addition to its work with AIxCC, Google will keep supporting the AI Village at DEF CON, which informs attendees about security and privacy concerns related to AI. Besides giving the Village a $10,000 donation this year, Google will provide workshop participants with Pixelbooks so they can get hands-on experience at the intersection of cybersecurity and AI. The AI Village returns this year after a successful red teaming event in which Google took part and security experts worked on AI security concerns.

Google is excited to see what fresh ideas emerge from the AIxCC Semifinal Competition and to discuss how they may be used to safeguard the software that we all use. Meanwhile, the Secure AI Framework and the recently established Coalition for Secure AI provide additional information about Google’s efforts in the field of AI security.

Read more on govindhtech.com

#AIxCC#googlecloud#Cybersecurity#AI#meachinelearning#supplychain#aisecurity#aitools#news#technews#technology#technologynews#technologytrends#govindhtech


Text

Google offers free access to fuzzing framework

Fuzzing can be a valuable tool for ferreting out zero-day vulnerabilities in software. In hopes of encouraging its use by developers and researchers, Google announced Wednesday it’s now offering free access to its fuzzing framework, OSS-Fuzz. https://jpmellojr.blogspot.com/2024/02/google-offers-free-access-to-fuzzing.html


Text

This Week in Rust 525

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on Twitter or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

Announcing Rust 1.74.1

Cargo cache cleaning

Newsletters

This Month in Rust OSDev: November 2023

Project/Tooling Updates

rust-analyzer changelog #211

PC Music Generator

Announcing mfio - Completion I/O for Everyone

Watchexec Library 3.0 and CLI 1.24

[series] Spotify-Inspired: Elevating Meilisearch with Hybrid Search and Rust

Observations/Thoughts

Rust Is Beyond Object-Oriented, Part 3: Inheritance

Being Rusty: Discovering Rust's design axioms

Non-Send Futures When?

for await and the battle of buffered streams

poll_progress

Rust and ThreadX - experiments with an RTOS written in C, a former certified software component

Nine Rules for SIMD Acceleration of Your Rust Code (Part 1): General Lessons from Boosting Data Ingestion in the range-set-blaze Crate by 7x

Contributing to Rust as a novice

[audio] Exploring Rust's impact on efficiency and cost-savings, with Stefan Baumgartner

Rust Walkthroughs

Common Mistakes with Rust Async

Embassy on ESP: UART Transmitter

Writing a CLI Tool in Rust with Clap

Memory and Iteration

Getting Started with Axum - Rust's Most Popular Web Framework

Exploring the AWS Lambda SDK in Rust

Practical Client-side Rust for Android, iOS, and Web

[video] Advent of Code 2023

Miscellaneous

Turbofish ::<>

Rust Meetup and user groups

Adopting Rust: the missing playbook for managers and CTOs

SemanticDiff 0.8.8 adds support for Rust

Crate of the Week

This week's crate is io-adapters, a crate that lets you convert between different writeable APIs (io vs. fmt).

Thanks to Alex Saveau for the self-suggestion!

Please submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

greptimedb - API improvement for pretty print sql query result in http output 1

greptimedb - Unify builders' patterns

tokio - Run loom tests in oss-fuzz 4

Ockam - Library - Validate CBOR structs according to the cddl schema for kafka/protocol_aware and nodes/services

Ockam - Command - refactor to use typed interfaces to implement commands for relays

Ockam - Make install.sh not fail when the latest version is already installed

zerocopy - Use cargo-semver-checks to make sure derive feature doesn't change API surface

zerocopy - Verify that all-jobs-succeeded CI job depends on all other jobs

Hyperswitch - [REFACTOR]: [Nuvei] MCA metadata validation

Hyperswitch - [Feature] : [Noon] Sync with Hyperswitch Reference

Hyperswitch - [BUG] : MCA metadata deserialization failures should be 4xx

Hyperswitch - [Feature] : [Zen] Sync with Hyperswitch Reference

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from the Rust Project

391 pull requests were merged in the last week

introduce support for async gen blocks

implement 2024-edition lifetime capture rules RFC

riscv32 platform support

add teeos std impl

never_patterns: Parse match arms with no body

rustc_symbol_mangling,rustc_interface,rustc_driver_impl: Enforce rustc::potential_query_instability lint

add ADT variant infomation to StableMIR and finish implementing TyKind::internal()

add deeply_normalize_for_diagnostics, use it in coherence

add comment about keeping flags in sync between bootstrap.py and bootstrap.rs

add emulated TLS support

add instance evaluation and methods to read an allocation in StableMIR

add lint against ambiguous wide pointer comparisons

add method to get type of an Rvalue in StableMIR

add more SIMD platform-intrinsics

add safe compilation options

add support for gen fn

add support for making lib features internal

added shadowed hint for overlapping associated types

avoid adding builtin functions to symbols.o

avoid instantiating infer vars with infer

change prefetch to avoid deadlock

compile-time evaluation: detect writes through immutable pointers

coverage: be more strict about what counts as a "visible macro"

coverage: merge refined spans in a separate final pass

coverage: simplify the heuristic for ignoring async fn return spans

coverage: use SpanMarker to improve coverage spans for if ! expressions

dedup for duplicate suggestions

discard invalid spans in external blocks

do not parenthesize exterior struct lit inside match guards

don't include destruction scopes in THIR

don't print host effect param in pretty path_generic_args

don't warn an empty pattern unreachable if we're not sure the data is valid

enforce must_use on associated types and RPITITs that have a must-use trait in bounds

explicitly implement DynSync and DynSend for TyCtxt

fix is_foreign_item for StableMIR instance

fix const drop checking

fix in-place collect not reallocating when necessary

fix parser ICE when recovering dyn/impl after for<...>

fix: correct the arg for 'suggest to use associated function syntax' diagnostic

generalize LLD usage in bootstrap

generalize: handle occurs check failure in aliases

implement --env compiler flag (without tracked_env support)

implement repr(packed) for repr(simd)

improve print_tts

interpret: make numeric_intrinsic accessible from Miri

make async generators fused by default

make sure panic_nounwind_fmt can still be fully inlined (e.g. for panic_immediate_abort)

only check principal trait ref for object safety

pretty print Fn<(..., ...)> trait refs with parentheses (almost) always

privacy: visit trait def id of projections

provide context when ? can't be called because of Result<_, E>

rearrange default_configuration and CheckCfg::fill_well_known

recurse into refs when comparing tys for diagnostics

remove PolyGenSig since it's always a dummy binder

remove the precise_pointer_size_matching feature gate

resolve associated item bindings by namespace

streamline MIR dataflow cursors

structured use suggestion on privacy error

tell MirUsedCollector that the pointer alignment checks calls its panic symbol

tip for define macro name after macro_rules!

tweak .clone() suggestion to work in more cases

tweak unclosed generics errors

unescaping cleanups

uplift the (new solver) canonicalizer into rustc_next_trait_solver

use immediate_backend_type when reading from a const alloc

use default params until effects in desugaring

miri: fix promising a very large alignment

miri: fix x86 SSE4.1 ptestnzc

miri: move some x86 intrinsics code to helper functions in shims::x86

miri: return MAP_FAILED when mmap fails

stablize arc_unwrap_or_clone

add LinkedList::{retain,retain_mut}

simplify Default for tuples

restore const PartialEq

split Vec::dedup_by into 2 cycles

futures: fillBuf: do not call poll_fill_buf twice

futures: FuturesOrdered: use 64-bit index

futures: FuturesUnordered: fix clear implementation

futures: use cfg(target_has_atomic) on no-std targets

cargo: spec: Extend PackageIdSpec with source kind + git ref for unambiguous specs

cargo toml: disallow inheriting of dependency public status

cargo toml: disallow [lints] in virtual workspaces

cargo: schema: Remove reliance on cargo types

cargo: schemas: Pull out mod for proposed schemas package

cargo: trim-paths: assert OSO and SO cannot be trimmed

cargo: avoid writing CACHEDIR.TAG if it already exists

cargo: fix bash completion in directory with spaces

cargo: explicitly remap current dir by using .

cargo: print rustc messages colored on wincon

cargo: limit exported-private-dependencies lints to libraries

rustdoc-search: do not treat associated type names as types

rustdoc: Don't generate the "Fields" heading if there is no field displayed

rustdoc: Fix display of features

rustdoc: do not escape quotes in body text

rustdoc: remove unused parameter reversed from onEach(Lazy)

bindgen: support float16

rustfmt: add StyleEdition enum and StyleEditionDefault trait

clippy: fix(ptr_as_ptr): handle std::ptr::null{_mut}

clippy: needless_borrows_for_generic_args: Handle when field operand impl Drop

clippy: uninhabited_reference: new lint

clippy: add a function to check whether binary oprands are nontrivial

clippy: fix is_from_proc_macro patterns

rust-analyzer: check if lhs is also a binexpr and use its rhs in flip binexpr assist

rust-analyzer: fallback to method resolution on unresolved field access with matching method name

rust-analyzer: add trait_impl_reduntant_assoc_item diagnostic

rust-analyzer: allow navigation targets to be duplicated when the focus range lies in the macro definition site

rust-analyzer: implicit format args support (hooray!)

rust-analyzer: prioritize import suggestions based on the expected type

rust-analyzer: fix WideChar offsets calculation in line-index

rust-analyzer: fix panic with closure inside array len

rust-analyzer: bug in extract_function.rs

rust-analyzer: don't emit "missing items" diagnostic for negative impls

rust-analyzer: don't print proc-macro panic backtraces in the logs

rust-analyzer: fix concat_bytes! expansion emitting an identifier

rust-analyzer: fix completion failing in format_args! with invalid template

rust-analyzer: fix diagnostics panicking when resolving to different files due to macros

rust-analyzer: fix item tree lowering pub(self) to pub()

rust-analyzer: fix runnable cwd on Windows

rust-analyzer: fix token downmapping being quadratic

rust-analyzer: fix view mir, hir and eval function not working when cursor is inside macros

rust-analyzer: insert fn call parens only if the parens inserted around field name

rust-analyzer: make drop inlay hint more readable

rust-analyzer: resolve Self type references in delegate method assist

rust-analyzer: smaller spans for unresolved field and method diagnostics

rust-analyzer: make ParamLoweringMode accessible

rust-analyzer: query for nearest parent block around the hint to resolve

rust-analyzer: replace doc_comments_and_attrs with collect_attrs

rust-analyzer: show placeholder while run command gets runnables from server

rustc-perf: add support for benchmarking Cranelift codegen backend

Rust Compiler Performance Triage

A quiet week overall. A few smaller crate (e.g., helloworld) benchmarks saw significant improvements in #118568, but this merely restores performance regressed earlier.

Triage done by @simulacrum. Revision range: 9358642..5701093

5 Regressions, 2 Improvements, 3 Mixed; 2 of them in rollups

69 artifact comparisons made in total

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Merge RFC 3531: "Macro fragment specifiers edition policy"

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No RFCs entered Final Comment Period this week.

Tracking Issues & PRs

[disposition: merge] Tracking Issue for Bound::map

[disposition: merge] Stabilize THIR unsafeck

[disposition: merge] Exhaustiveness: reveal opaque types properly

[disposition: merge] Properly reject default on free const items

[disposition: merge] Make inductive cycles in coherence ambiguous always

Language Reference

No Language Reference RFCs entered Final Comment Period this week.

Unsafe Code Guidelines

No Unsafe Code Guideline RFCs entered Final Comment Period this week.

New and Updated RFCs

Async Drop

Closure PartialEq and Eq

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

Cargo cache cleaning

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Upcoming Events

Rusty Events between 2023-12-13 - 2024-01-10 🦀

Virtual

2023-12-14 | Virtual (Charlottesville, NC, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2023-12-14 | Virtual (Nuremberg, DE) | Rust Nuremberg

Rust Nürnberg online

2023-12-17 | Virtual (Tel Aviv, IL) | Code Mavens

Don't panic! - Our journey to error handling in Rust

2023-12-18 | Virtual (Munich, DE) | Rust Munich

Rust Munich 2023 / 5 - hybrid

2023-12-19 | Virtual (Berlin, DE) | OpenTechSchool Berlin

Rust Hack and Learn | Mirror

2023-12-19 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful

2023-12-19 | Virtual (Linz, AT) | Rust Linz

Rust Meetup Linz - 35th Edition

2023-12-20 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Adventures in egui app dev

2023-12-26 | Virtual (Dallas, TX, US) | Dallas Rust

Last Tuesday

2023-12-28 | Virtual (Charlottesville, NC, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-01-03 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

Asia

2023-12-16 | Delhi, IN | Rust Delhi

Meetup #4

Europe

2023-12-14 | Augsburg, DE | Rust - Modern Systems Programming in Leipzig

Augsburg Rust Meetup #4

2023-12-14 | Basel, CH | Rust Basel

Testing: Learn from the pros

2023-12-18 | Munich, DE + Virtual | Rust Munich

Rust Munich 2023 / 5 - hybrid

2023-12-19 | Heidelberg, DE | Nix Your Bugs & Rust Your Engines

Nix Your Bugs & Rust Your Engines #1

2023-12-19 | Leipzig, DE | Rust - Modern Systems Programming in Leipzig

Tauri, an Electron-alternative

2023-12-27 | Copenhagen, DK | Copenhagen Rust Community

Rust hacknight #1: CLIs, TUIs and plushies

North America

2023-12-13 | Chicago, IL, US | Deep Dish Rust

Rust Hack Night

2023-12-14 | Lehi, UT, US | Utah Rust

Sending Data with Channels w/Herbert Wolverson

2023-12-14 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2023-12-15 | Somerville, MA, US | Boston Rust Meetup

Ball Square Rust Lunch

2023-12-19 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2023-12-27 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2024-01-09 | Minneapolis, MN, US | Minneapolis Rust Meetup

Minneapolis Rust Meetup Happy Hour

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

Sadly, the week went by without a nominated quote.

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust


Text

🎙 Les Heures Supp' #1 - Le Portrait Filmois d'Alix 🎙

It's summer, and La Feuille de Service is switching to holiday mode with a special series: Les Heures Supp'!

For this first episode of the HS, it's finally my turn to be in the hot seat and answer the dreaded portrait filmois. Fair warning: I have very well-defined tastes and, in the end, I always talk about the same thing... 😬

🎬 OEUVRES CITÉES :

Huit et Demi, Federico Fellini (1963)

Le Guépard, Luchino Visconti (1963)

La La Land, Damien Chazelle (2016)

Barbie, Greta Gerwig (2023)

Frances Ha, Noah Baumbach (2012)

Lady Bird, Greta Gerwig (2017)

Little Women, Greta Gerwig (2019)

The Narnia saga

The A24 Podcast

Girls On Top

Inside Llewyn Davis, Joel and Ethan Coen (2013)

The Artist, Michel Hazanavicius (2011)

Chantons Sous la Pluie, Stanley Donen & Gene Kelly (1952)

Disney Channel Original Movies

Astérix et Obélix : Mission Cléopâtre, Alain Chabat (2002)

The OSS 117 saga

Edgar Wright's Cornetto Trilogy: Shaun of the Dead (2004), Hot Fuzz (2007), Le Dernier Pub avant la fin du Monde (2013)

Titanic, James Cameron (1997)

Le Tombeau des Lucioles, Isao Takahata (1988)

L'Incompris, Luigi Comencini (1966)

The Shining, Stanley Kubrick (1980)

Toy Story, John Lasseter (1995)

Alice au Pays des Merveilles, Clyde Geronimi, Wilfred Jackson & Hamilton Luske (1951)

Peter Pan, Clyde Geronimi, Wilfred Jackson & Hamilton Luske (1953)

The Greatest Showman, Michael Gracey (2017)

Once Upon a Time... in Hollywood, Quentin Tarantino (2019)

Sunset Boulevard, Billy Wilder (1950)

La Nuit du Chasseur, Charles Laughton (1955)

📝 TRANSCRIPT

1 note

·

View note

Text

Google Introduces Open Source Tech to Help Its Interns Safely Work From Home

Image for representation.

This is the first year in which Google's summer internship programme will be virtual, and several technical internships will focus on open source projects.

IANS

Last Updated: June 13, 2020, 11:39 AM IST

Thanks to the open-source technology, thousands of young people will join Google from their homes in 43 countries for the summer internship as…

View On WordPress

#Apache Beam#chromium#Coronavirus#coronavirus e-classes#coronavirus stay home#Coronavirus WFH#Covid-19 Severity Project#Google#Google covid-19 efforts#Google latest news#Google Open Source tech#Google summer interns#Home#Interns#Introduces#Istio#Kubernetes#open#OSS-Fuzz#Safely#Sourch#Tech#TensorFlow#virtual classes#work#world after coronavirus

0 notes

Text

Autoharness - A Tool That Automatically Creates Fuzzing Harnesses Based On A Library

AutoHarness is a tool that automatically generates fuzzing harnesses for you. The idea stems from a current problem in fuzzing codebases: large codebases have thousands of functions and pieces of code that can be embedded fairly deep in the library. It is very hard, and sometimes impossible, for smart fuzzers to reach those code paths. Even for large fuzzing projects such as oss-fuzz,…

View On WordPress

50 notes

·

View notes

Text

Google boosts bounties for open source flaws found via fuzzing

Source: https://www.theregister.com/2023/02/01/google_fuzz_rewards/

More info:

https://bughunters.google.com/about/rules/5097259337383936/oss-fuzz-reward-program-rules

https://google.github.io/fuzzbench/

3 notes

·

View notes

Text

Fuzz Testing: A Comprehensive Guide to Uncovering Hidden Vulnerabilities

In the realm of software testing, fuzz testing, or fuzzing, has emerged as a powerful technique to uncover vulnerabilities and improve system robustness. By introducing random and unexpected input into systems, fuzz testing helps identify weaknesses that traditional testing methods might overlook.

What Is Fuzz Testing?

The Concept Behind Fuzz Testing

Fuzz testing is a software testing method that involves feeding random, unexpected, or malformed data into a program to identify potential vulnerabilities or crashes. The idea is to simulate unpredictable user input or external data that could expose hidden bugs in the software.

History of Fuzz Testing

Fuzz testing originated in the late 1980s when researchers began exploring ways to stress-test systems under random input conditions. Over time, it has evolved into a sophisticated and indispensable tool for ensuring software security and reliability.

How Does Fuzz Testing Work?

Types of Fuzzers

There are two main types of fuzzers, each suited to different testing needs:

Mutation-Based Fuzzers: Modify existing input samples to generate test cases.

Generation-Based Fuzzers: Create test inputs from scratch, based on predefined rules or formats.
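To make the distinction concrete, here is a minimal Python sketch of both styles. It is illustrative only: the function names `mutate` and `generate` and the toy `key=value;key=value` input format are assumptions made for this example, not part of any particular fuzzer.

```python
import random


def mutate(seed: bytes, rng: random.Random, max_changes: int = 3) -> bytes:
    """Mutation-based: start from an existing sample and corrupt it slightly."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, max_changes)):
        choice = rng.choice(("flip", "insert", "delete"))
        if choice == "flip" and data:
            pos = rng.randrange(len(data))
            data[pos] ^= 1 << rng.randrange(8)  # flip a single bit
        elif choice == "insert":
            data.insert(rng.randint(0, len(data)), rng.randrange(256))
        elif choice == "delete" and data:
            del data[rng.randrange(len(data))]
    return bytes(data)


def generate(rng: random.Random, max_pairs: int = 4) -> bytes:
    """Generation-based: build an input from scratch using a format rule.

    Hypothetical format for this example: 'key=value' pairs joined by ';'.
    """
    letters = "abcxyz"
    pairs = []
    for _ in range(rng.randint(1, max_pairs)):
        key = "".join(rng.choice(letters) for _ in range(rng.randint(1, 5)))
        value = str(rng.randint(-1000, 1000))
        pairs.append(f"{key}={value}")
    return ";".join(pairs).encode("ascii")
```

A mutation-based fuzzer would call `mutate(b"name=42", rng)` repeatedly on a corpus of known-good samples, while a generation-based fuzzer would call `generate(rng)` and needs no samples but does need knowledge of the format.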

Fuzzing Process

The fuzzing process typically involves three key steps:

Input Generation: Randomized or malformed input is generated.

Test Execution: The input is fed into the target system.

Monitoring: The system is observed for crashes, memory leaks, or unexpected behavior.
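The three steps above can be sketched as a small loop. This is a simplified illustration, not how production fuzzers are built: `parse_record` is a hypothetical target with a planted bug, and treating `ValueError` as an expected rejection is an assumption made for the example.

```python
import random


def parse_record(data: bytes) -> int:
    """Hypothetical target: first byte is a length, the rest is the payload."""
    if not data:
        raise ValueError("empty input")  # documented, expected rejection
    length = data[0]
    payload = data[1:]
    # Planted bug: the length byte is trusted without a bounds check.
    return payload[length - 1] if length else 0


def fuzz(target, iterations: int = 2000, seed: int = 0):
    """Run the target on random inputs and collect unexpected failures."""
    rng = random.Random(seed)
    crashes = []
    for _ in range(iterations):
        # 1. Input generation: random bytes of random length.
        data = bytes(rng.randrange(256) for _ in range(rng.randint(0, 8)))
        try:
            # 2. Test execution: feed the input into the target.
            target(data)
        except ValueError:
            pass  # expected rejection of invalid input, not a bug
        except Exception as exc:
            # 3. Monitoring: record anything the target did not anticipate.
            crashes.append((data, repr(exc)))
    return crashes
```

Calling `fuzz(parse_record)` returns a list of `(input, error)` pairs; each input can be saved and replayed later to reproduce the failure.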

Benefits of Fuzz Testing

Identifying Vulnerabilities

Fuzz testing excels at uncovering security vulnerabilities, such as buffer overflows, SQL injection points, and memory leaks, that may not surface during manual or automated scripted testing.

Improving System Robustness

By exposing systems to unexpected inputs, fuzz testing helps improve their resilience to real-world scenarios. This helps software handle edge cases and unforeseen data gracefully.

Challenges in Fuzz Testing

High Computational Overhead

Generating and processing large volumes of random input can consume significant computational resources, making it challenging to integrate fuzz testing into resource-constrained environments.

Difficulty in Analyzing Results

Determining whether a system failure is meaningful or spurious requires careful analysis. False positives can complicate debugging and slow down the development process.
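One common way to reduce this analysis burden is to group failures that likely share a root cause before anyone looks at them. The sketch below assumes a Python target and buckets crashes by exception type and innermost stack frame; `toy_target`, `crash_signature`, and `bucket_crashes` are hypothetical names for this example.

```python
import traceback
from collections import defaultdict


def toy_target(data: bytes) -> int:
    """Hypothetical target with two distinct bugs."""
    if data.startswith(b"A"):
        return data[5]          # IndexError on short inputs
    return 10 // len(data)      # ZeroDivisionError on empty input


def crash_signature(exc: BaseException) -> tuple:
    """Bucket key: exception type plus the innermost frame (function, line)."""
    frames = traceback.extract_tb(exc.__traceback__)
    if frames:
        where = (frames[-1].name, frames[-1].lineno)
    else:
        where = ("<unknown>", 0)
    return (type(exc).__name__,) + where


def bucket_crashes(target, inputs):
    """Group crashing inputs so each bucket is triaged once, not per input."""
    buckets = defaultdict(list)
    for data in inputs:
        try:
            target(data)
        except Exception as exc:
            buckets[crash_signature(exc)].append(data)
    return dict(buckets)
```

With the inputs `[b"A", b"AB", b"", b"ok"]`, the two short `A...` inputs land in one bucket and the empty input in another, so three crashing inputs become two items to investigate.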

Popular Tools for Fuzz Testing

AFL (American Fuzzy Lop)

AFL is a widely used fuzzer that applies coverage-guided, mutation-based fuzzing to identify bugs efficiently. Its lightweight design and high performance make it a favorite among developers.

LibFuzzer

LibFuzzer is an in-process, coverage-guided fuzzing library that is part of the LLVM project and is linked directly with the code under test. It allows for precise, efficient testing.

Other Notable Tools

Other notable options include the fuzzers Peach and Honggfuzz, as well as OSS-Fuzz, a service that continuously fuzzes open source software.

When Should You Use Fuzz Testing?

Applications in Security-Critical Systems

Fuzz testing is essential for systems that handle sensitive data, such as payment gateways, healthcare systems, and financial software, as it helps harden them against potential threats.

Ensuring Protocol Compliance

Fuzz testing is particularly useful for testing communication protocols to verify adherence to standards and uncover weaknesses in data handling.

Best Practices for Effective Fuzz Testing

Combine Fuzz Testing with Other Methods

Fuzz testing should complement, not replace, unit and integration testing for comprehensive coverage. By combining testing methods, you can achieve better results and uncover a wider range of issues.

Monitor and Analyze Results Effectively

Employ tools and techniques to track crashes and interpret logs for actionable insights. This helps prioritize fixes and minimizes false positives.

Regularly Update and Customize Fuzzers

Tailoring fuzzers to your application’s specific needs and updating them as your software evolves improves their effectiveness.

Future of Fuzz Testing

AI-Driven Fuzzing

Artificial intelligence is enabling smarter fuzzers that generate more meaningful test cases. These AI-powered tools can better simulate real-world scenarios and uncover vulnerabilities faster.

Increased Automation in CI/CD Pipelines

Fuzz testing is becoming an integral part of continuous testing in DevOps pipelines, so that vulnerabilities can be identified and fixed early in the software development lifecycle.

Conclusion

Fuzz testing is a powerful technique that can uncover hidden vulnerabilities and strengthen software systems. By feeding systems random, malformed inputs, fuzz testing helps build resilience against unpredictable scenarios. Whether you're working on security-critical applications or verifying protocol compliance, fuzz testing deserves a place in your testing strategy. As technology advances, integrating fuzz testing into your software development lifecycle can both boost reliability and help safeguard your applications against evolving security threats.

0 notes

Text

Google OSS-Fuzz Harnesses AI to Expose 26 Hidden Security Vulnerabilities

http://securitytc.com/TGJjxq

0 notes

Text

Cloud Virtual CISO: 3 Intriguing AI Cybersecurity Use Cases

Three intriguing AI cybersecurity use cases from a Cloud Virtual CISO

For years, Google Cloud has argued that artificial intelligence could transform cybersecurity and help defenders. According to Google Cloud, AI can speed up defences by automating work that formerly required manual effort from security experts.

While full automation is still a long way off, AI in cybersecurity is already providing assistive capabilities. Today’s security operations teams can benefit from malware analysis, summarization, and natural-language searches, and AI can speed up patching.

AI malware analysis

Malware is one of the oldest threats, yet attackers keep creating new variants at an astonishing rate. Defenders and malware analysts have more variants to examine, which increases their workload. Automation helps here.

Google tested Gemini 1.5 Pro for malware analysis. The team gave it a simple prompt and code to analyse and asked it to determine whether the file was malicious. It was also asked to list the file's activities and indicators of compromise.

Gemini 1.5 Pro’s 1 million token context window allowed it to parse malware code in a single pass, normally in 30 to 40 seconds, and with greater accuracy than previous foundation models. Decompiled WannaCry malware code was one of the samples tested. The model identified the killswitch in a single pass that took 34 seconds.

The team tested Gemini 1.5 Pro on both decompiled and disassembled code from multiple malware files. In each case it was reported to be accurate and produced human-readable summaries.

The experiment report by Google and Mandiant experts stated that Gemini 1.5 Pro was able to accurately identify code that was obtaining zero detections on VirusTotal. As Google works to improve defensive outcomes, Gemini 1.5 Pro is expected to offer a 2 million token context window to support malware analysis at scale.

Boosting SecOps with AI

Security operations teams rely on a lot of manual labour. AI can reduce that labour, train new team members faster, and speed up process-intensive work such as threat intelligence analysis and summarising noisy case investigations. That also requires models that understand the nuances of security. Google's security-focused AI API, SecLM, integrates models, business logic, retrieval, and grounding into a holistic solution. It draws on Google DeepMind’s cutting-edge AI as well as threat intelligence and security data.

Onboarding new team members is one of AI’s greatest SecOps benefits. Instead of requiring analysts to memorise a proprietary SecOps platform query language, AI can construct reliable search queries from natural language.

Natural-language queries using Gemini in Security Operations are helping Pfizer and Fiserv onboard new team members faster, help analysts locate answers faster, and increase the efficiency of their security operations programmes.

Additionally, AI-generated summaries can save time by integrating threat research and explaining difficult facts in natural language. The director of information security at a leading multinational professional services organisation told Google Cloud that Gemini Threat Intelligence AI summaries can help write an overview of the threat actor, including relevant and associated entities and targeted regions.

The customer remarked that the information flows well and helps the team obtain intelligence quickly. AI can also generate investigation summaries. As security operations centre teams manage more data, they must detect, validate, and respond to events faster. Natural-language searches and investigation summaries help teams locate high-risk signals and act on them.

Scaling security solutions with AI

In January, Google’s Machine Learning for Security team published a free, open-source fuzzing platform to help researchers and developers improve vulnerability-finding. The team prompted AI foundation models to write project-specific code to boost fuzzing coverage and uncover additional vulnerabilities. This was added to OSS-Fuzz, a free service that runs open-source fuzzers and privately alerts developers of vulnerabilities.

The experiment succeeded: with AI-generated, extended fuzzing coverage, OSS-Fuzz covered over 300 projects and uncovered new vulnerabilities in two projects that had been fuzzed for years.

The team noted, “Without the completely LLM-generated code, these two vulnerabilities could have remained undiscovered and unfixed indefinitely.” The team also used AI to patch vulnerabilities, creating an automated pipeline in which foundation models analyse software for vulnerabilities, develop patches, and test them before the best candidates are picked for human review.

The potential for AI to find and patch vulnerabilities is expanding. By stacking small advances, well-crafted AI solutions can revolutionise security and boost productivity. Google's view is that AI foundation models should be governed by its Secure AI Framework or a similar risk-management framework to maximise effect and minimise risk.

To learn more, contact Ask Office of the CISO or attend Google Cloud's security leader events. Attend the June 26 Security Talks event to learn more about Google Cloud's AI-powered security product vision.

In case you missed it

Recent Google Cloud Security Talks on AI and cybersecurity: Google Cloud and Google security professionals will provide insights, best practices, and concrete ways to improve your security on June 26.

Quick decision-making: How AI improves OODA loop cybersecurity: The OODA loop, employed in boardrooms, helps executives make better, faster decisions. AI enhances OODA loops.

Google rated a Leader in Q2 2024 Forrester Wave: Cybersecurity Incident Response Services Report.

From always on to on demand access with Privileged Access Manager: Google Cloud has introduced its built-in Privileged Access Manager to reduce the dangers of excessive privileges and elevated access misuse.

A FedRAMP high compliant network with Assured Workloads: Delivering FedRAMP High-compliant network design securely.

Google Sovereign Cloud gives European clients choice today: Collaboration among customers, local sovereign partners, governments, and regulators has grown at Google Sovereign Cloud.

Threat Intel news

A financially motivated threat operation targets Snowflake customer database instances for data theft and extortion, according to Mandiant.

Brazilian cyber hazards to people and businesses: Threat actors with various motivations will seek opportunities to exploit Brazil’s digital infrastructure, which Brazilians use in all sectors of society, as its economic and geopolitical role grows.

Gold-phishing: Paris 2024 Olympics cyberthreats: The Paris Olympics are at high risk of cyber espionage, disruptive and destructive operations, financially driven behaviour, hacktivism, and information operations, according to Mandiant.

Return of ransomware: Compared to 2022, data leak site posts and Mandiant-led ransomware investigations increased in 2023.

Read more on Govindhtech.com

#CloudVirtualCISO#CloudVirtual#ai#cybersecurity#CISO#llm#MachineLearning#Gemini#GeminiPro#technology#googlecloud#technews#news#govindhtech

0 notes

Text

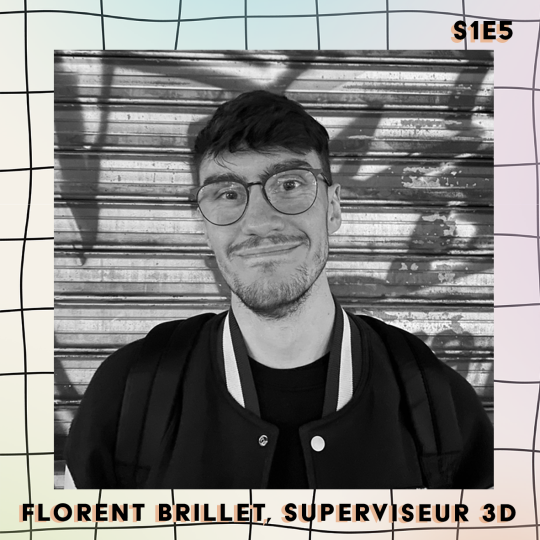

🎙 S1E5 / Florent Brillet, 3D supervisor 🎙

In this episode, I have the pleasure of welcoming Florent Brillet, a 3D artist working in corporate audiovisual production. Florent shares his background and his experience in the field, and tells us about the specifics of his job, which demands great technical and artistic skill. And yes, it's not enough to know how to tinker with two or three things in software with obscure names; when you do 3D creation, you need a good dose of imagination and flexibility, and you have to be a jack-of-all-trades, because you can wear many hats on a single project.

Fair warning, though: we use some terms that are a bit technical if you don't know this field's vocabulary at all. We tried to explain recurring terms as much as possible over the course of the conversation, and I hope the less seasoned among us on these vast subjects will find as many answers to their questions as possible!

🎬 WORKS CITED

Le Seigneur des Anneaux : La Communauté de l'Anneau, Peter Jackson (2001)

Scott Pilgrim, Edgar Wright (2010)

Baby Driver, Edgar Wright (2017)

Last Night in Soho, Edgar Wright (2021)

Shaun of the Dead, Edgar Wright (2004)

Hot Fuzz, Edgar Wright (2007)

Le Dernier Pub avant la fin du monde, Edgar Wright (2013)

The OSS saga, Michel Hazanavicius

Calmos YouTube channel, Rigolo video series

Pitch Perfect, Jason Moore (2012)

Santa et Cie, Alain Chabat (2017)

Maman, j'ai raté l'avion !, Chris Columbus (1990)

Hallmark movies

SuperGrave, Greg Mottola (2007)

Alabama Monroe, Felix Van Groeningen (2012)

Les Goonies, Richard Donner (1985)

Hook ou la Revanche du capitaine Crochet, Steven Spielberg (1991)

Jurassic Park, Steven Spielberg (1993)

The Mask, Chuck Russell (1994)

Madame Doubtfire, Chris Columbus (1993)

Toy Story, John Lasseter (1995)

Song to Song, Terrence Malick (2017)

Batman et Robin, Joel Schumacher (1997)

Forrest Gump, Robert Zemeckis (1994)

Daisy Jones & The Six, Prime Video (2023)

Peak TV, podcast by Marie Telling and Anaïs Bordages (Slate.fr)

Friends, NBC (1994-2004)

Twin Peaks, ABC (1990-1991)

Conjuring : les Dossiers Warren, James Wan (2013)

🧡 RECOMMENDATIONS

Vinyl, HBO (2016)

Amies, podcast by Marie Telling and Anaïs Bordages (Slate.fr)

📝 TRANSCRIPT:

0 notes

Text

OSS-Fuzz - Continuous Fuzzing Of Open Source Software


Fuzz testing is a well-known technique for uncovering programming errors in software. Many of these detectable errors, like buffer overflow, can have serious security implications. Google has found thousands of security vulnerabilities and stability bugs by deploying guided in-process fuzzing of Chrome components, and we now want to share that service with the open source…

View On WordPress

#AFL#Continuous#Fuzzer#Fuzzing#LibFuzzer#Open#Open Source#OSS Fuzz#OSSFuzz#reporting#software#Source#Stability#vulnerabilities

0 notes