#Outsource Content Moderation


Text

👉 How Agile Content Moderation Process Improves a Brand’s Online Visibility

🤷♀️ Agile content moderation enhances a brand’s online presence by swiftly addressing and adapting to evolving content challenges. This dynamic approach ensures a safer and more positive digital environment, boosting visibility and trust. 🔊 Read the blog: https://www.sitepronews.com/2022/12/20/how-agile-content-moderation-process-improves-a-brands-online-visibility/


#content moderation #content moderation solution #Outsource Content Moderation #Outsource content moderation services #social media content moderation

0 notes

Text

i know AI isn't going anywhere and i also know there's nothing i can do to prevent someone from stealing my work; that's an accepted risk with sharing it publicly. i'm not going to stop - my issue with AI has always been with the surveillance, the environmental impact, and the exploitation of workers.

if you really want to have a conversation about AI, these are the things we need to be educating ourselves on and talking about. Emily Bender wrote it best in one of her #AIHype take-down threads:

When automated systems are being used, who is being left without recourse to challenge decisions? Whose data is being stolen? Whose labor is being exploited? How is mass surveillance being extended and normalized? What are the impacts to the natural environment and information ecosystem?

#openAI/chatgpt specifically is known for outsourcing labor and horrific working conditions #there are multiple articles on the abuse of kenyan workers for content moderation #so. just something to think about

161 notes


Note

what did you mean by "... produces ptsd on an industrial scale"? just trying to understand, thank u!

content moderation for platforms like facebook and tiktok employs thousands of people, sometimes in the usa but more commonly in the global south (so they can be paid less), to sit at computers and view hundreds of flagged posts a day, including graphic violence and csem, for awful wages, under ridiculously stringent conditions. this results in many, many of the people who work in this field developing PTSD -- and of course they are not given adequate treatment or support. one article cites facebook giving its moderators nine minutes of 'wellness time' to recover if they see something traumatic.

here's some articles on the topic that can give you a good overview of what working conditions in this field are like, but warning, there's pretty graphic descriptions of violence, animal abuse, and child sexual abuse in these articles, as well as frank discussion of suicidal ideation:

Nearby, in a shopping mall, I meet a young woman who I'll call Maria. She's on her lunch break from an outsourcing firm, where she works on a team that moderates photos and videos for the cloud storage service of a major US technology company. Maria is a quality-assurance representative, which means her duties include double-checking the work of the dozens of agents on her team to make sure they catch everything. This requires her to view many videos that have been flagged by moderators. “I get really affected by bestiality with children,” she says. “I have to stop. I have to stop for a moment and loosen up, maybe go to Starbucks and have a coffee.” She laughs at the absurd juxtaposition of a horrific sex crime and an overpriced latte.

For Carlos, a former TikTok moderator, it was a video of child sexual abuse that gave him nightmares. The video showed a girl of five or six years old, he said [...] It hit him particularly hard, he said, because he’s a father himself. He hit pause, went outside for a cigarette, then returned to the queue of videos a few minutes later.

Randy also left after about a year. Like Chloe, he had been traumatized by a video of a stabbing. The victim had been about his age, and he remembers hearing the man crying for his mother as he died. “Every day I see that,” Randy says, “I have a genuine fear over knives. I like cooking — getting back into the kitchen and being around the knives is really hard for me.”

1K notes


Text

The Antivan Mafia

The Antivan Crows operate like the mob, especially when it comes to recruiting desperate members of the public in a system they uphold. They're also... a genuinely legitimate power with close connections to the King of Antiva. These things are both true.

Antiva appears to operate according to and be ruled by a system of merchant houses (including the Crows) and merchant princes (arguably including the Talons).

I posit, veering into headcanon territory, that if we're thinking of the Crows as the mob, we should extend that thinking across all of the merchant houses across all of the Antivan city states. (Of course, other houses often outsource their murder to professionals.)

In terms of understanding what the Crows are, and especially what the Crows we see in Treviso are, @ravioliage brought up the great example of the Yakuza being the first to send aid in the 2011 tsunami, and further, that the largest organized crime networks throughout history "donate food in bad neighborhoods" so that people think kindly of them and become fodder for recruitment. Meanwhile, that gang or mafia or whatever is actually responsible for the fact that the neighbourhood is a bad neighbourhood, and that the people there "can't afford to eat."

They do this because this is how you maintain power over people. You keep them moderately content in a system that's designed to feed on them, or you at least keep them blaming something other than you. The problem from here, as we the audience try to establish what is justifiable for our viewpoint characters according to our own morality, is that the Crows are also established within a system of merchant houses that have very little reason to operate in any other way.

By which I mean, the other merchant houses also operate like the mob, feeding on the people at the bottom to keep their entire operation spinning. The Crows are contract killers, with lives that very much seem dedicated to the job, in a set of rotating institutions that stab each other in the back all the time. The other merchant houses create silk and wine and the basic necessities of life, perhaps with more ordinary lives, but in a system where hiring a killer is a normal way to do business.

I think the reason that Lucanis sees "his" kind of commerce as not all that different than the other houses in Antiva is because, as far as he can tell, it's not. He gets hired by someone on the inside to kill the head of the family so that they can take power (in some of the extended media), which he likely considers evidence that they're not so different from the Crows themselves.

There's no reason to think that the other houses in Antiva operate according to a more optimistic model than the Crows do. If the merchant princes don't run their own "mobs" (with their own guards and men-at-arms, and their own ability to hire mercenaries), then at their best, they're still playing a Game of Thrones.

Is there an escape from that kind of system? If you care at all, do you leave or try to make it better? If you're Teia, you fight—and you might be wrong to do so, because your default response is to aim to slit a hundred throats by nightfall. If tomorrow there are a hundred more, then you have more knives.

The problem with systems is that you take the ones that shaped you with you... wherever you go.

#antivan crows #the antivan crows #lucanis dellamorte #dragon age meta #veilguard meta #my meta #crow thoughts #teia cantori #empire in dragon age #thedas #antiva #treviso

154 notes


Text

Being a content moderator on Facebook can give you severe PTSD.

Let's take time from our holiday festivities to commiserate with those who have to moderate social media. They witness some of the absolute worst of humanity.

More than 140 Facebook content moderators have been diagnosed with severe post-traumatic stress disorder caused by exposure to graphic social media content including murders, suicides, child sexual abuse and terrorism. The moderators worked eight- to 10-hour days at a facility in Kenya for a company contracted by the social media firm and were found to have PTSD, generalised anxiety disorder (GAD) and major depressive disorder (MDD), by Dr Ian Kanyanya, the head of mental health services at Kenyatta National hospital in Nairobi. The mass diagnoses have been made as part of a lawsuit being brought against Facebook’s parent company, Meta, and Samasource Kenya, an outsourcing company that carried out content moderation for Meta using workers from across Africa. The images and videos including necrophilia, bestiality and self-harm caused some moderators to faint, vomit, scream and run away from their desks, the filings allege.

You can imagine what now gets circulated on Elon Musk's Twitter/X which has ditched most of its moderation.

According to the filings in the Nairobi case, Kanyanya concluded that the primary cause of the mental health conditions among the 144 people was their work as Facebook content moderators as they “encountered extremely graphic content on a daily basis, which included videos of gruesome murders, self-harm, suicides, attempted suicides, sexual violence, explicit sexual content, child physical and sexual abuse, horrific violent actions just to name a few”. Four of the moderators suffered trypophobia, an aversion to or fear of repetitive patterns of small holes or bumps that can cause intense anxiety. For some, the condition developed from seeing holes on decomposing bodies while working on Facebook content.

Being a social media moderator may sound easy, but you will never be able to unsee the horrors which the dregs of society wish to share with others.

To make matters worse, the moderators in Kenya were paid just one-eighth what moderators in the US are paid.

Social media platform owners have vast wealth similar to the GDPs of some countries. They are among the greediest leeches in the history of money.

#social media #social media owners #greed #facebook #meta #twitter/x #content moderation #moderators #ptsd #gad #mdd #kenya #samasource kenya #low wages #foxglove #get off of facebook #boycott meta #get off of twitter

37 notes


Text

on another note, that post from yesterday seems to be unintentionally sparking discourse about content moderation. i don't really want to go into it too hard, so all i'll say is that it's a much more nuanced topic than i think a lot of us understand (myself included) and it's not just as easy as "let's get rid of automated moderation by hiring real people to do it!" for a multitude of reasons, e.g. outsourcing, emotional/psychological toll, the demand always outweighing the supply, etc.

7 notes


Text

AI projects like OpenAI’s ChatGPT get part of their savvy from some of the lowest-paid workers in the tech industry—contractors often in poor countries paid small sums to correct chatbots and label images. On Wednesday, 97 African workers who do AI training work or online content moderation for companies like Meta and OpenAI published an open letter to President Biden, demanding that US tech companies stop “systemically abusing and exploiting African workers.”

Most of the letter’s signatories are from Kenya, a hub for tech outsourcing, whose president, William Ruto, is visiting the US this week. The workers allege that the practices of companies like Meta, OpenAI, and data provider Scale AI “amount to modern day slavery.” The companies did not immediately respond to a request for comment.

A typical workday for African tech contractors, the letter says, involves “watching murder and beheadings, child abuse and rape, pornography and bestiality, often for more than 8 hours a day.” Pay is often less than $2 per hour, it says, and workers frequently end up with post-traumatic stress disorder, a well-documented issue among content moderators around the world.

The letter’s signatories say their work includes reviewing content on platforms like Facebook, TikTok, and Instagram, as well as labeling images and training chatbot responses for companies like OpenAI that are developing generative-AI technology. The workers are affiliated with the African Content Moderators Union, the first content moderators union on the continent, and a group founded by laid-off workers who previously trained AI technology for companies such as Scale AI, which sells datasets and data-labeling services to clients including OpenAI, Meta, and the US military. The letter was published on the site of the UK-based activist group Foxglove, which promotes tech-worker unions and equitable tech.

In March, the letter and news reports say, Scale AI abruptly banned people based in Kenya, Nigeria, and Pakistan from working on Remotasks, Scale AI’s platform for contract work. The letter says that these workers were cut off without notice and are “owed significant sums of unpaid wages.”

“When Remotasks shut down, it took our livelihoods out of our hands, the food out of our kitchens,” says Joan Kinyua, a member of the group of former Remotasks workers, in a statement to WIRED. “But Scale AI, the big company that ran the platform, gets away with it, because it’s based in San Francisco.”

Though the Biden administration has frequently described its approach to labor policy as “worker-centered,” the African workers’ letter argues that this has not extended to them, saying “we are treated as disposable.”

“You have the power to stop our exploitation by US companies, clean up this work and give us dignity and fair working conditions,” the letter says. “You can make sure there are good jobs for Kenyans too, not just Americans.”

Tech contractors in Kenya have filed lawsuits in recent years alleging that tech-outsourcing companies and their US clients such as Meta have treated workers illegally. Wednesday’s letter demands that Biden make sure that US tech companies engage with overseas tech workers, comply with local laws, and stop union-busting practices. It also suggests that tech companies “be held accountable in the US courts for their unlawful operations abroad, in particular for their human rights and labor violations.”

The letter comes just over a year after 150 workers formed the African Content Moderators Union. Meta promptly laid off all of its nearly 300 Kenya-based content moderators, workers say, effectively busting the fledgling union. The company is currently facing three lawsuits from more than 180 Kenyan workers, demanding more humane working conditions, freedom to organize, and payment of unpaid wages.

“Everyone wants to see more jobs in Kenya,” Kauna Malgwi, a member of the African Content Moderators Union steering committee, says. “But not at any cost. All we are asking for is dignified, fairly paid work that is safe and secure.”

35 notes



Text

Content moderation is largely outsourced to the third world lol its the fucking worst

9 notes


Text

A friendly-as-I-can-manage reminder that Tumblr management and employees (former and current) have said the majority of people who do content monitoring on this site are outsourced. If you don't want to begin to engage with the trials and tribulations of content moderation at all, at least direct your unhelpful fury slightly askew and stop making me a thousand conspiracy posts about how big tumblr is part of the terf mafia

10 notes


Text

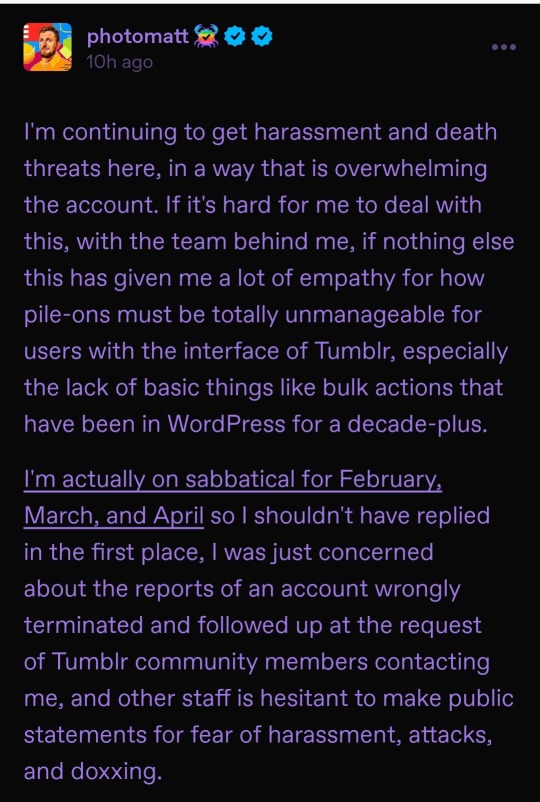

Looks like Photomatt has now deleted several of his posts regarding this whole incident, and edited the original one with further context. The edits to the original are 1) admitting that the hammer part of the "threat" seems silly but that he's apparently "almost died by car accident twice" (which, even if its true, are we taking into account personal triggers for what qualifies as harassment? And if so, why is misgendering people not considered one of those triggers?) And 2) adding that apparently Avery/Rita had "over 20 different blogs" which have names "so sexual to list them here would require a mature tag". (Which, he is perfectly capable of doing, and also it should be noted, there's not really rules against mature content so long as its tagged. There's been no proof of any untagged content. And third here, this is a *sex worker* we are talking about of COURSE she's got horny blogs!)

The posts which were deleted were largely the post-blowup tantrum replies, nothing too major there but overall trying to sweep away the tantrum. One post of note which was deleted is the one where he said the oh you know what i screenshotted it so lemme just post it

This is the one i'm most concerned about. This is the one where he mentions *pulling out investment* as well as saying "oh gosh I suddenly have empathy for those dealing with harassment campaigns now that I'm the victim of one".

Why do I think he deleted this one? He's scared of legal action. See, Tumblr was previously sued for bigoted moderation during the post-porn-ban era, and part of the stipulations is that they must make efforts to fix that. Then, we got in his other major post about this the tidbit that they *outsourced their moderation* and that they *had a known transphobic moderator in that company* LITERALLY last year. And now THIS post says that there (probably) werent plans to fix moderation before his sabbatical began, and even adds on that only *now* does he have empathy for how tumblr's poor moderation tools have made harassment a nightmare. Combine with multiple trustworthy testimonies that queer staff previously pointed this out to him...it sounds like the threat of legal action over moderation problems just led him to utterly ignore that problem until *JUST NOW*.

Now I am no legal expert. I have no clue whether this was culpable enough to show that he didnt make reasonable effort to fix biased moderation. Its entirely possible that this only sounds culpable to my untrained eye, and that he actually used secret language which dodges that culpability. But considering there's actual threat of lawsuit again now, and he posted all of this?

Well. Yeah, I'd delete some shit too. Too bad its probably too late. Anyways if you do nuke the site Matt I hope this follows you til you die. Or you could actually man up, and ask your lawyers whats the cheapest way you can apologize for this fuckup. It wouldnt erase this problem, but I think all of us would stop mocking you relentlessly if you at least had the guts to do that.

6 notes


Text

I think the biggest takeaway of this video is how, when technology's ambitions exceed its grasp, it goes back to using human beings to pretend to be machines to make it seem that the technology works.

so the mechanical turk chess machine and the current outsourcing of, for example, social media content moderation, are basically the same thing. a human sits in a little box and does the work the owner of the machine is pretending the machine does

the video is worth watching in its entirety though, as an understanding of how machines and their concepts are much older than we think, just as 'globalism' is much older than we think

9 notes


Note

Unsolicited unprompted funfact of the week (sorry I know it hasn't been a week yet technically but I don't care):

Many platforms utilize image recognition software outsourced from third-party companies for content moderation, especially for detecting sexual content.

These models are often trained by humans who tell the software what is and isn't sexual content. This often leads to bias and the propagation of discrimination as it results in women and LGBTQ+ people getting marked more frequently and unfairly due to these systemic issues being projected onto the image recognition.

This is generally an issue with any form of machine learning models, including the likes of ChatGPT and other generative models. (often referred to as "AI" but that's just a buzzword that techbros use to make investors foam at the mouth and make it seem better than it actually is)

A video by "Answer in Progress" called "I taught an AI to stan BTS" (bear with me here) is a good example of this subject in how biases in these fields can lead to harm.

This is only scratching the surface of the problems with "AI" and if you want to learn more in more broad strokes I recommend the video "The AI Revolution is Rotten To The Core" by Jimmy McGee


2 notes


Text

ftr i'm not foolish enough to think that staff is like, a small team of like 10-20 people and that's it, i'm sure they've probably either got a bot running the moderation requests or otherwise some form of outsourcing for content moderation like with facebook. but still, their wording does imply a degree of control over avoiding this in the future, so let's press on that.

5 notes


Text

Last month, a court in Kenya issued a landmark ruling against Meta, owner of Facebook and Instagram. The US tech giant was, the court ruled, the “true employer” of the hundreds of people employed in Nairobi as moderators on its platforms, trawling through posts and images to filter out violence, hate speech and other shocking content. That means Meta can be sued in Kenya for labor rights violations, even though moderators are technically employed by a third party contractor.

Social media giant TikTok was watching the case closely. The company also uses outsourced moderators in Kenya, and in other countries in the global south, through a contract with Luxembourg-based Majorel. Leaked documents obtained by the NGO Foxglove Legal, seen by WIRED, show that TikTok is concerned it could be next in line for possible litigation.

“TikTok will likely face reputational and regulatory risks for its contractual arrangement with Majorel in Kenya,” the memo says. If the Kenyan courts rule in the moderators’ favor, the memo warns “TikTok and its competitors could face scrutiny for real or perceived labor rights violations.”

The ruling against Meta came after the tech company tried to get the court to dismiss a case brought against it and its outsourcing partner, Sama, by the South African moderator, Daniel Motaung, who was fired after trying to form a union in 2019.

Motaung said the work, which meant watching hours of violent, graphic, or otherwise traumatizing content daily, left him with post-traumatic stress disorder. He also alleged that he hadn’t been fully informed about the nature of the work before he’d relocated from South Africa to Kenya to start the job. Motaung accuses Meta and Sama of several abuses of Kenyan labor law, including human trafficking and union busting. Should Motaung’s case succeed, it could allow other large tech companies that outsource to Kenya to be held accountable for the way staff there are treated, and provide a framework for similar cases in other countries.

“[TikTok] reads it as a reputational threat,” says Cori Crider, director of Foxglove Legal. “The fact that they are exploiting people is the reputational threat.”

TikTok did not respond to a request for comment.

In January, as Motaung’s lawsuit progressed, Meta attempted to cut ties with Sama and move its outsourcing operations to Majorel—TikTok’s partner.

In the process, 260 Sama moderators were expected to lose their jobs. In March, a judge issued an injunction preventing Meta from terminating its contract with Sama and moving it to Majorel until the court was able to determine whether the layoffs violated Kenyan labor laws. In a separate lawsuit, Sama moderators, some of whom spoke to WIRED earlier this year, alleged that Majorel had blacklisted them from applying to the new Meta moderator jobs, in retaliation for trying to push for better working conditions at Sama. In May, 150 outsourced moderators working for TikTok, ChatGPT, and Meta via third-party companies voted to form and register the African Content Moderators Union.

Majorel declined to comment.

The TikTok documents show that the company is considering an independent audit of Majorel’s site in Kenya. Majorel has sites around the world, including in Morocco, where its moderators work for both Meta and TikTok. Such an exercise, which often involves hiring an outside law firm or consultancy to conduct interviews and deliver a formal assessment against criteria like local labor laws or international human rights standards, “may mitigate additional scrutiny from union representatives and news media,” the memo said.

Paul Barrett, deputy director of the Center for Business and Human Rights at New York University, says that these audits can be a way for companies to look like they’re taking action to improve conditions in their supply chain, without having to make the drastic changes they need.

“There have been instances in a number of industries where audits have been largely performative, just a little bit of theater to give to a global company a gold star so that they can say they're complying with all relevant standards,” he says, noting that it’s difficult to tell in advance whether a potential audit of TikTok’s moderation operations would be similarly cosmetic.

Meta has conducted multiple audits, including in 2018, contracting consultants Business for Social Responsibility to assess its human rights impact in Myanmar in the wake of a genocide that UN investigators alleged was partially fueled by hate speech on Facebook. Last year, Meta released its first human rights report. The company has, however, repeatedly delayed the release of the full, unredacted copy of its human rights impact report on India, commissioned in 2019 following pressure from rights groups who accused it of contributing to the erosion of civil liberties in the country.

The TikTok memo says nothing about how the company might use such an assessment to help guide improvements in the material conditions of its outsourced workers. “You'll note, the recommendations are not suddenly saying, they should just give people access to psychiatrists, or allow them to opt out of the toxic content and prescreen them in a very careful way, or to pay them in a more equal way that acknowledges the inherent hazards of the job,” says Crider. “I think it's about the performance of doing something.”

Barrett says that there is an opportunity for TikTok to approach the issue in a more proactive way than its predecessors have. “I think it would be very unfortunate if TikTok said, ‘We’re going to try to minimize liability, minimize our responsibility, and not only outsource this work, but outsource our responsibility for making sure the work that's being done on behalf of our platform is done in an appropriate and humane way.’”

5 notes


Text

Trusted Partner for Data Entry & Outsourcing Projects

Today's world is increasingly intertwined with the world of bits and bytes, and businesses can use non-voice BPO services to streamline work, lower costs, and increase efficiency. From data entry and email support to content moderation and backend processing, non-voice processes quietly deliver high output. Many leading brands in this field have named Zoetic BPO Services as one of the most trusted players in the market, offering high value and dependability.

Reviews from Zoetic BPO Services clients point to timely delivery, accurate output, and a transparent work process. Zoetic's low-cost focus does not mean a breach of data confidentiality or a sacrifice in service quality. Whether you are a start-up or an established business, Zoetic provides scalable non-voice solutions that meet your specifications.

Zoetic guarantees an outsourcing process supported by trained staff and strong infrastructure. Its work model gives businesses the flexibility to reduce their in-house burden without losing control over operations. Positive reviews of Zoetic BPO Services further indicate that clients are satisfied with the onboarding system and that the support team is highly responsive.

FAQs

What non-voice BPO services does Zoetic offer?

Data entry, form filling, data processing, email management and backend tasks.

Are the projects secure?

Yes, Zoetic applies strong security measures to its data.

How can I check that Zoetic is credible?

Simply review the testimonials about Zoetic BPO Services posted online by actual customers.

Conclusion

Zoetic BPO Services is a reliable partner if you are interested in low-cost, dependable non-voice outsourcing. With multiple good reviews of Zoetic BPO Services, you can depend on genuine service and adequate help in the long run.

0 notes