#PowerShell Deployment Extension



So we have a bunch of new Development boxes at work. They're all high-end Lenovo Legion gaming PCs with 14th Gen i9 processors and RTX 4080 video cards. From our vendor, these are $6,000 each. We have 12 of them.

Now, I was not actually engaged in the deployment process until very late in the game. So these did not get deployed correctly by org standards. They're using the pre-deployed image, complete with all the bloatware. They were not on-boarded into Intune correctly. And the app deployments were not done correctly, so the apps were mostly user-installed.

I spent the better part of three days designing and refining PowerShell scripts to perform the bulk of the actions required. Uninstalling bloatware, creating a local admin account, bypassing the OOBE, updating to Win11 Enterprise, joining the domain, installing CCM, removing bloatware (again) etc. Then configuring all the deployments in our DEV instance of SCCM so they can be deployed correctly.

So I did my absolute level best with the 16 hour time frame that I was given. The thing that nobody actually understands is that software developed for developers is ... shit. It's absolutely garbage. It is not meant to be installed at the Enterprise level. So I wrote scripts to deploy this nonsense as best as possible. Some scripts just dropped the installer on the workstation in a central location. Some required the software to be deployed by the user from Software Center. Some simply ... didn't work the first time and required extensive time to fix.

Now, my other problem with Developers themselves is ... they're not the brightest. They don't think outside the box, they don't consider asking for help, if the thing doesn't do what they think it is supposed to do the first time, surely running the same thing another 14 times will make it work yes?

So I'm on vacation now because of this fucking project. And before I left one of the IT Engineers engaged me about one of the deployments. And I told him I did the best I could with that software, but it requires user intervention so I designed the script as such, and if he has any challenges with it he's welcome to take a swing at it but I am tired of it. To which he replied:

"No bud, the scripts are great, the developers are just fucking stupid" and let me tell you, that made my whole fuckin' week.



Reliable Microsoft 365 Migration Software for Large-Scale Enterprise Deployments

Enterprise-level Microsoft 365 migrations demand more than just mailbox transfers. The complexity increases with scale, data volume, legacy dependencies, compliance mandates, and tight downtime windows. Without a reliable migration solution, organizations risk email interruptions, data loss, and productivity setbacks. Choosing the right software is critical to ensure a smooth and secure transition.

Enterprise Migration Challenges That Demand Robust Solutions

Large-scale deployments typically involve thousands of mailboxes and terabytes of data. Migrating this data is not just about speed. It’s about integrity, continuity, and control. Enterprises often face issues such as throttling by Microsoft servers, broken mailbox permissions, calendar inconsistencies, and bandwidth limitations during migration windows.

Handling multiple domains, public folders, archive mailboxes, and hybrid configurations further complicates the process. Standard migration tools fail to handle these requirements efficiently. A purpose-built migration platform is essential for structured execution.

Features That Define Reliable Microsoft 365 Migration Software

A reliable enterprise migration solution must address the full lifecycle of the migration. It should support planning, execution, monitoring, and validation. It should also provide flexibility in terms of migration types and scenarios.

Support for tenant-to-tenant migration, Exchange to Microsoft 365, and PST import is a baseline. But what truly defines enterprise-grade software is its ability to handle granular mailbox filtering, role-based access control, auto-mapping, and impersonation-based migration.

Bandwidth throttling by Microsoft 365 is another concern. The right software must manage these limitations intelligently. It should use multithreaded connections and automatic retry mechanisms to maintain performance without violating Microsoft’s service boundaries.
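As a rough illustration of the automatic retry idea, here is a minimal Python sketch of retrying with exponential backoff. The ThrottledError exception, the with_retries helper, and the delay values are hypothetical names and numbers chosen for this example; they are not part of any Microsoft 365 or vendor API.

```python
import time


class ThrottledError(Exception):
    """Hypothetical error raised when the remote service asks the client to slow down."""


def with_retries(operation, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call operation(); on ThrottledError wait base_delay * 2**attempt, then try again."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error to the caller
            sleep(base_delay * (2 ** attempt))


# Simulated mailbox copy that is throttled on its first two calls.
calls = {"count": 0}


def flaky_copy():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ThrottledError("slow down")
    return "copied"


print(with_retries(flaky_copy, base_delay=0.01))
```

Doubling the wait after each throttled attempt gives the service time to recover instead of hitting it again at the same rate. A production tool would typically also honor any retry hint the service returns.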

Audit trails and reporting are equally important. Enterprises require a complete overview of what data moved, what failed, and what requires attention. Detailed logs and reports ensure transparency and make post-migration validation easier.

EdbMails: A Purpose-Built Tool for Enterprise Migrations

EdbMails Microsoft 365 Migration Software is built to address the challenges of enterprise deployments. It simplifies complex migration scenarios by providing automated features that reduce manual intervention. The software handles large user volumes efficiently without compromising speed or accuracy.

It supports tenant-to-tenant migrations with automatic mailbox mapping. This eliminates the need for extensive scripting or manual assignment. Its impersonation mode ensures that all mailbox items including contacts, calendar entries, notes, and permissions are transferred without requiring individual user credentials.

EdbMails also includes advanced filtering options. You can choose to migrate only specific date ranges, folders, or item types. This level of control helps in managing data volume and compliance boundaries.

Its incremental migration feature ensures that only new or changed items are migrated during subsequent syncs. This reduces server load and prevents duplicate content. Built-in throttling management ensures optimal data transfer rates even during peak usage times.
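To make the incremental idea concrete, here is a small Python sketch under simplifying assumptions: each item has an ID and a last-modified value, and the tool remembers what it copied last time. The function names and data shapes are hypothetical and do not describe how EdbMails works internally.

```python
def items_to_sync(source_items, synced_state):
    """Return IDs of items that are new or changed since the last pass.

    source_items: dict mapping item ID -> last-modified value
    synced_state: dict mapping item ID -> value recorded at the previous sync
    """
    return sorted(
        item_id
        for item_id, modified in source_items.items()
        if synced_state.get(item_id) != modified
    )


def run_sync(source_items, synced_state):
    """Process only the pending items and record them so a rerun skips them."""
    pending = items_to_sync(source_items, synced_state)
    for item_id in pending:
        # A real tool would transfer the item here; this sketch only records it.
        synced_state[item_id] = source_items[item_id]
    return pending


state = {}
mailbox = {"msg-1": "2024-01-01", "msg-2": "2024-01-02"}
print(run_sync(mailbox, state))   # first pass: everything is new
mailbox["msg-2"] = "2024-02-01"   # one item changes
mailbox["msg-3"] = "2024-02-02"   # one item is added
print(run_sync(mailbox, state))   # second pass: only the changed and new items
```

Because unchanged items are skipped by comparing against recorded state, running the sync again does not create duplicates.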

The software generates real-time progress dashboards and post-migration reports. These reports help IT administrators validate the outcome and identify any gaps immediately. The logs also serve as compliance evidence during audits.

Deployment and Usability at Scale

One of the key factors in enterprise environments is usability. EdbMails offers a clean, GUI-based interface that minimizes the learning curve. Admins can initiate and manage large-scale batch migrations from a centralized dashboard. There’s no need for extensive PowerShell scripting or third-party integration.

It supports secure modern authentication, including OAuth 2.0, ensuring that enterprise security policies remain intact. The software does not store any login credentials and operates under the secure authorization flow.

Another benefit is the ability to pause and resume migrations. In case of planned maintenance or network downtime, the migration process can be continued from where it left off. This reduces the risk of data loss and eliminates the need to restart from scratch.
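One common way to get pause-and-resume behavior is a checkpoint file. The Python sketch below assumes progress is tracked per mailbox in a JSON file. The migrate_with_checkpoint function, the file layout, and the migrate_one callback are hypothetical and only illustrate the general technique, not the product's implementation.

```python
import json
import os


def migrate_with_checkpoint(mailboxes, checkpoint_path, migrate_one):
    """Migrate mailboxes in order, skipping those already recorded in the checkpoint file."""
    done = []
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path, encoding="utf-8") as handle:
            done = json.load(handle)

    for mailbox in mailboxes:
        if mailbox in done:
            continue  # finished in an earlier run
        migrate_one(mailbox)
        done.append(mailbox)
        # Persist progress after every mailbox so an interruption loses at most one.
        with open(checkpoint_path, "w", encoding="utf-8") as handle:
            json.dump(done, handle)
    return done
```

If the process stops partway through, calling the function again with the same checkpoint path picks up at the first mailbox that was not recorded as finished.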

Final Thoughts

Large-scale Microsoft 365 migrations require a solution that can handle complexity with accuracy and consistency. EdbMails stands out by offering an enterprise-ready platform that simplifies the migration journey. It reduces risks, accelerates deployment timelines, and ensures a seamless transition to the cloud.

For IT teams managing enterprise workloads, reliability is non-negotiable. EdbMails delivers that reliability with the technical depth and automation required for large-scale success.


Revolutionize Your Business with Exchange Server 2019

Empowering Enterprises: Unlocking the Full Potential of Exchange Server 2019

In today's fast-paced digital world, organizations require robust, secure, and scalable email solutions to maintain seamless communication and operational efficiency. Exchange Server 2019 stands out as the ultimate on-premise solution, empowering enterprises to scale their communication infrastructure confidently. This article explores how Exchange Server 2019 can revolutionize your enterprise, offering unmatched features, high availability, and security to meet the demands of large organizations.

One of the most significant advantages of Exchange Server 2019 is its ability to provide a high availability email server. Ensuring continuous access to email services is critical for business operations. Exchange 2019 offers advanced clustering and database availability groups (DAGs) that enable organizations to minimize downtime and maximize uptime, even during maintenance or unexpected failures. This high availability architecture guarantees that your enterprise communication remains uninterrupted, fostering productivity and customer satisfaction.

Security is paramount in today's cyber landscape, and Exchange Server 2019 excels in providing secure communication channels for large organizations. With built-in security features such as enhanced encryption and data loss prevention, plus support for multi-factor authentication through AD FS or hybrid modern authentication, it helps keep sensitive information protected. Furthermore, Exchange 2019's compliance tools help organizations adhere to industry regulations, reducing legal risks and safeguarding corporate reputation.

Scalability is another vital feature of Exchange Server 2019. As your enterprise grows, so do your communication needs. Exchange 2019 is designed to handle large mailboxes and increased user loads efficiently. Its improved performance and storage management capabilities allow organizations to scale seamlessly without compromising on speed or reliability. This scalability ensures that your email infrastructure can evolve alongside your business, supporting future growth and innovation.

Deployment flexibility is also a key benefit of Exchange Server 2019. Whether opting for on-premise hardware or hybrid configurations, businesses can tailor their email environment to suit their specific needs. On-premise deployment grants organizations complete control over their data, security policies, and customization options. This level of control is especially crucial for sectors with strict data sovereignty requirements or sensitive information handling.

Managing Exchange Server 2019 is streamlined with modern tools and features. The integration of PowerShell management, hybrid deployment options, and improved administrative interfaces simplifies routine maintenance and troubleshooting. This efficiency reduces operational costs and empowers IT teams to focus on strategic initiatives rather than routine tasks.

Investing in Exchange Server 2019 is a strategic move that enhances your enterprise's communication backbone. The cost of acquiring an enterprise license is justified by the extensive features, security, and scalability it offers. To learn more about the investment, visit exchange server 2019 enterprise license cost.

In conclusion, Exchange Server 2019 elevates your organization's communication capabilities, ensuring high availability, security, and scalability. It is the ultimate on-premise solution for enterprises aiming to maintain control, optimize performance, and foster seamless collaboration. Embrace the future of enterprise messaging with Exchange Server 2019 and unlock new levels of operational excellence.

#buy exchange 2019 enterprise key#high availability email server#secure communication for large organizations#exchange server 2019 features and benefits#on-premise mail server management


Is DevOps All About Coding? Exploring the Role of Programming and Essential Skills in DevOps

As technology continues to advance, DevOps has emerged as a key practice for modern software development and IT operations. A frequently asked question among those exploring this field is: "Is DevOps full of coding?" The answer isn’t black and white. While coding is undoubtedly an important part of DevOps, it’s only one piece of a much larger puzzle.

This article unpacks the role coding plays in DevOps, highlights the essential skills needed to succeed, and explains how DevOps professionals contribute to smoother software delivery. Additionally, we’ll introduce the Boston Institute of Analytics’ Best DevOps Training program, which equips learners with the knowledge and tools to excel in this dynamic field.

What Exactly is DevOps?

DevOps is a cultural and technical approach that bridges the gap between development (Dev) and operations (Ops) teams. Its main goal is to streamline the software delivery process by fostering collaboration, automating workflows, and enabling continuous integration and delivery (CI/CD). DevOps professionals focus on:

Enhancing communication between teams.

Automating repetitive tasks to improve efficiency.

Ensuring systems are scalable, secure, and reliable.

The Role of Coding in DevOps

1. Coding is Important, But Not Everything

While coding is a key component of DevOps, it’s not the sole focus. Here are some areas where coding is essential:

Automation: Writing scripts to automate processes like deployments, testing, and monitoring.

Infrastructure as Code (IaC): Using tools such as Terraform or AWS CloudFormation to manage infrastructure programmatically.

Custom Tool Development: Building tailored tools to address specific organizational needs.

2. Scripting Takes Center Stage

In DevOps, scripting often takes precedence over traditional software development. Popular scripting languages include:

Python: Ideal for automation, orchestration, and data processing tasks.

Bash: Widely used for shell scripting in Linux environments.

PowerShell: Commonly utilized for automating tasks in Windows ecosystems.

3. Mastering DevOps Tools

DevOps professionals frequently work with tools that minimize the need for extensive coding. These include:

CI/CD Platforms: Jenkins, GitLab CI/CD, and CircleCI.

Configuration Management Tools: Ansible, Chef, and Puppet.

Monitoring Tools: Grafana, Prometheus, and Nagios.

4. Collaboration is Key

DevOps isn’t just about writing code—it’s about fostering teamwork. DevOps engineers serve as a bridge between development and operations, ensuring workflows are efficient and aligned with business objectives. This requires strong communication and problem-solving skills in addition to technical expertise.

Skills You Need Beyond Coding

DevOps demands a wide range of skills that extend beyond programming. Key areas include:

1. System Administration

Understanding operating systems, networking, and server management is crucial. Proficiency in both Linux and Windows environments is a major asset.

2. Cloud Computing

As cloud platforms like AWS, Azure, and Google Cloud dominate the tech landscape, knowledge of cloud infrastructure is essential. This includes:

Managing virtual machines and containers.

Using cloud-native tools like Kubernetes and Docker.

Designing scalable and secure cloud solutions.

3. Automation and Orchestration

Automation lies at the heart of DevOps. Skills include:

Writing scripts to automate deployments and system configurations.

Utilizing orchestration tools to manage complex workflows efficiently.

4. Monitoring and Incident Response

Ensuring system reliability is a critical aspect of DevOps. This involves:

Setting up monitoring dashboards for real-time insights.

Responding to incidents swiftly to minimize downtime.

Analyzing logs and metrics to identify and resolve root causes.
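As a small example of the log analysis step, here is a Python sketch that counts log lines per severity level. It assumes a hypothetical log format of "date time LEVEL message"; real formats vary, so the field position would need adjusting.

```python
from collections import Counter


def count_levels(log_lines):
    """Count lines per level, assuming the level is the third whitespace-separated field."""
    counts = Counter()
    for line in log_lines:
        parts = line.split(maxsplit=3)
        if len(parts) >= 3:
            counts[parts[2]] += 1
    return counts


sample = [
    "2024-05-01 10:00:00 INFO service started",
    "2024-05-01 10:00:05 ERROR database timeout",
    "2024-05-01 10:00:09 ERROR database timeout",
]
print(count_levels(sample))
```

A sudden jump in the ERROR count is often the first signal worth investigating before digging into individual messages.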

Is DevOps Right for You?

DevOps is a versatile field that welcomes professionals from various backgrounds, including:

Developers: Looking to expand their skill set into operations and automation.

System Administrators: Interested in learning coding and modern practices like IaC.

IT Professionals: Seeking to enhance their expertise in cloud computing and automation.

Even if you don’t have extensive coding experience, there are pathways into DevOps. With the right training and a willingness to learn, you can build a rewarding career in this field.

Boston Institute of Analytics: Your Path to DevOps Excellence

The Boston Institute of Analytics (BIA) offers a comprehensive DevOps training program designed to help professionals at all levels succeed. Their curriculum covers everything from foundational concepts to advanced techniques, making it one of the best DevOps training programs available.

What Sets BIA’s DevOps Training Apart?

Hands-On Experience:

Work with industry-standard tools like Docker, Kubernetes, Jenkins, and AWS.

Gain practical knowledge through real-world projects.

Expert Instructors:

Learn from seasoned professionals with extensive industry experience.

Comprehensive Curriculum:

Dive deep into topics like CI/CD, IaC, cloud computing, and monitoring.

Develop both technical and soft skills essential for DevOps roles.

Career Support:

Benefit from personalized resume reviews, interview preparation, and job placement assistance.

By enrolling in BIA’s Best DevOps Training, you’ll gain the skills and confidence to excel in this ever-evolving field.

Final Thoughts

So, is DevOps full of coding? While coding plays an important role in DevOps, it’s far from the whole picture. DevOps is about collaboration, automation, and ensuring reliable software delivery. Coding is just one of the many skills you’ll need to succeed in this field.

If you’re inspired to start a career in DevOps, the Boston Institute of Analytics is here to guide you. Their training program provides everything you need to thrive in this exciting domain. Take the first step today and unlock your potential in the world of DevOps!

#cloud training institute#best devops training#Cloud Computing Course#cloud computing#devops#devops course


Microsoft 365 Administrator Essentials Training: Expertise in Modern Management

In today's digital age, the effective management of Microsoft 365 environments is crucial for organizational success. The Microsoft 365 Administrator Essentials (MS-102) training by Multisoft Systems offers comprehensive insights and hands-on skills necessary for professionals aiming to excel in modern workplace management. This course not only equips learners with technical expertise but also prepares them to navigate the complexities of cloud-based collaboration and productivity tools provided by Microsoft.

Course Overview

The MS-102 training covers a wide array of topics essential for Microsoft 365 administrators:

Introduction to Microsoft 365 Administration

Understanding the core components of Microsoft 365

Exploring the administrative interfaces and tools

Managing identities and administrative roles effectively

Microsoft 365 Deployment and Updates

Planning and executing Microsoft 365 deployments

Configuring and managing updates across the environment

Ensuring compliance and security during deployment processes

Managing Microsoft 365 Applications and Services

Administering Exchange Online for messaging and collaboration

Configuring SharePoint Online for content management and collaboration

Leveraging Microsoft Teams for modern teamwork and communication

Implementing Security and Compliance in Microsoft 365

Configuring security policies and settings

Managing compliance and data governance

Implementing threat protection solutions

Monitoring and Troubleshooting Microsoft 365

Monitoring service health and performance

Troubleshooting common issues and outages

Utilizing Microsoft 365 reporting and analytics tools

Automation and PowerShell Scripting for Microsoft 365

Automating administrative tasks using PowerShell

Creating scripts for managing user accounts, licenses, and permissions

Integrating automation workflows with other Microsoft services

Why Choose MS-102 Training?

The MS-102 training program stands out for several compelling reasons:

Expert Guidance: Learn from certified trainers with extensive industry experience who provide practical insights and best practices.

Hands-on Experience: Gain practical, real-world experience through interactive labs and simulations that mirror Microsoft 365 environments.

Certification Preparation: Prepare effectively for the MS-102 exam, which counts toward the Microsoft 365 Certified: Administrator Expert certification, to validate your skills and boost your career prospects.

Flexible Learning Options: Access training materials and participate in sessions online, accommodating diverse learning needs and schedules.

Career Benefits

Upon completion of the MS-102 training, participants can expect to:

Advance Their Career: Acquire the skills demanded by organizations worldwide for managing modern workplace environments effectively.

Enhance Productivity: Streamline operations and enhance user productivity by mastering Microsoft 365 administrative tasks.

Ensure Data Security: Implement robust security measures and compliance protocols to protect organizational data and assets.

Optimize IT Operations: Improve IT efficiency through automation and effective management practices.

Who Should Attend?

The MS-102 training is ideal for:

IT Professionals: System administrators, network administrators, and IT support specialists looking to specialize in Microsoft 365 administration.

Enterprise Administrators: IT professionals responsible for managing Microsoft 365 tenant environments within large organizations.

Aspiring Microsoft Certified Professionals: Individuals preparing for the MS-102 exam and the Microsoft 365 Certified: Administrator Expert certification.

Conclusion

The Microsoft 365 Administrator Essentials (MS-102) training by Multisoft Systems equips professionals with the skills and knowledge needed to excel in managing Microsoft 365 environments. Whether you are starting your career in IT administration or aiming to enhance your existing skills, this course provides a solid foundation in modern workplace management. Enroll today to take your career to the next level and become a proficient Microsoft 365 administrator.

#Microsoft 365 Administrator Essentials Training#MS-102 Online Training#Microsoft 365 Administrator Essentials Course


Enterprise Automation Expert Track: Unlocking Efficiency and Scalability

In today's fast-paced business environment, the demand for automation has skyrocketed as organizations strive to increase efficiency, reduce operational costs, and maintain a competitive edge. For professionals looking to stay ahead of the curve, mastering enterprise automation is essential. The Enterprise Automation Expert Track is designed to equip you with the skills and knowledge needed to lead automation initiatives within your organization.

Why Enterprise Automation?

Enterprise automation involves the use of technology to perform repetitive tasks, streamline processes, and enhance overall productivity. It spans across various domains including IT operations, business processes, and even customer service. By automating routine tasks, companies can focus on innovation, strategic planning, and delivering value to customers.

Key benefits of enterprise automation include:

Increased Efficiency: Automation reduces the time and effort required to complete tasks, leading to faster execution and improved efficiency.

Cost Savings: By automating manual processes, organizations can significantly cut down on labor costs and minimize errors.

Scalability: Automated systems can easily scale to accommodate business growth, allowing organizations to expand without the need for proportional increases in resources.

Improved Accuracy: Automation eliminates the risk of human error, ensuring consistent and accurate results.

What You’ll Learn in the Enterprise Automation Expert Track

This track is designed for IT professionals, system administrators, DevOps engineers, and anyone looking to specialize in enterprise automation. Here’s a glimpse of what you’ll learn:

Introduction to Automation Tools:

Gain a solid understanding of the most popular automation tools in the industry, including Ansible, Puppet, Chef, and Jenkins.

Learn how to choose the right tool for your organization's specific needs.

Advanced Scripting and Orchestration:

Master scripting languages like Python and PowerShell for automating tasks.

Explore orchestration techniques to manage complex workflows across multiple systems.

Infrastructure as Code (IaC):

Learn the principles of Infrastructure as Code and how to apply them using tools like Terraform.

Understand the importance of version control and automation in managing infrastructure at scale.

Continuous Integration/Continuous Deployment (CI/CD):

Dive into the world of CI/CD pipelines and learn how to automate the entire software delivery process.

Explore best practices for integrating testing and deployment into your CI/CD pipelines.

Security Automation:

Understand the critical role of automation in enhancing security.

Learn how to implement automated security measures, including vulnerability scanning and compliance checks.

Monitoring and Analytics:

Discover how to set up automated monitoring systems to track performance, identify issues, and optimize operations.

Explore the use of analytics in automation to drive data-driven decision-making.

Why Choose the Enterprise Automation Expert Track?

Hands-on Learning: The track offers practical, hands-on labs and real-world scenarios to help you apply what you've learned.

Expert Instructors: Learn from industry experts with extensive experience in enterprise automation.

Certification: Upon completion, earn a certification that validates your expertise and enhances your career prospects.

Conclusion

The Enterprise Automation Expert Track is more than just a learning path—it's your gateway to becoming a leader in automation. As businesses continue to embrace digital transformation, the demand for automation experts will only grow. By enrolling in this track, you'll gain the skills and confidence to drive automation initiatives and lead your organization into the future.

Ready to take the next step in your career? Enroll in the Enterprise Automation Expert Track today and start your journey towards becoming an automation leader!

For more details, visit www.hawkstack.com

#redhatcourses#docker#information technology#containerorchestration#container#kubernetes#containersecurity#linux#dockerswarm#aws#hawkstack#hawkstacktechnologies


Launch Your DevOps Engineering Career in Two Months: A Step-by-Step Guide

Starting out in DevOps engineering might seem challenging due to the extensive skill set required. However, with a well-structured and dedicated plan, you can become job-ready in just two months. This guide outlines a comprehensive roadmap to help you develop the essential skills and knowledge for a successful DevOps career.

Month 1: Laying the Groundwork

Week 1: Grasping DevOps Fundamentals

Begin by understanding the fundamentals of DevOps and its core principles. Use online courses, books, and articles to get acquainted with key concepts like continuous integration, continuous delivery, and infrastructure as code.

Week 2-3: Mastering Version Control Systems (VCS)

Version control systems such as Git are crucial for collaboration and managing code changes. Invest time in learning Git basics, including branching strategies, merging, and conflict resolution.

Week 4-5: Scripting and Automation

Start learning scripting languages like Bash, Python, or PowerShell. Focus on automating routine tasks such as file operations, system configuration, and deployment processes. Enhance your skills with hands-on practice through small projects or challenges.
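A first practice project for file operations could look like the Python sketch below, which lists files older than a given number of days so they can be archived or deleted. The stale_files name and the seven-day threshold in the usage line are just examples.

```python
import os
import time


def stale_files(directory, max_age_days, now=None):
    """Return sorted paths of regular files in directory older than max_age_days."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400  # 86400 seconds per day
    result = []
    for entry in os.scandir(directory):
        if entry.is_file() and entry.stat().st_mtime < cutoff:
            result.append(entry.path)
    return sorted(result)


# List files in the current directory not modified in the last 7 days.
print(stale_files(".", 7))
```

Reporting first and deleting later is a safe habit for cleanup scripts: review the list before wiring in any removal step.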

Week 6: Docker and Containerization

Docker is essential in DevOps for packaging applications and dependencies into lightweight containers. Study Docker concepts, container orchestration, and best practices for Dockerfile creation. Practice building and running containers to deepen your understanding.

For further learning, consider the DevOps online training, which offers certifications and job placement opportunities both online and offline.

Week 7: Continuous Integration (CI) Tools

Explore popular CI tools like Jenkins, GitLab CI, or CircleCI. Learn to set up CI pipelines to automate the build, test, and deployment processes. Experiment with different configurations and workflows to understand their practical applications.
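To see what a CI pipeline does conceptually, here is a toy Python sketch of the core behavior: stages run in order and the pipeline stops at the first failure. Real CI tools such as Jenkins or GitLab CI define pipelines in their own configuration formats; the run_pipeline function and the stage names here are hypothetical.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order; stop at the first one that returns False."""
    results = []
    for name, step in stages:
        ok = bool(step())
        results.append((name, ok))
        if not ok:
            break  # later stages (for example deploy) never run after a failure
    return results


stages = [
    ("build", lambda: True),
    ("test", lambda: False),   # simulated failing test stage
    ("deploy", lambda: True),
]
print(run_pipeline(stages))
```

The key property is that a failed test stage blocks deployment, which is the main safety benefit of automating build, test, and deploy as one sequence.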

Week 8: Infrastructure as Code (IaC)

Dive into infrastructure as code principles using tools like Terraform or AWS CloudFormation. Learn to define and provision infrastructure resources programmatically for reproducibility and scalability. Practice creating infrastructure templates and deploying cloud resources.
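The core idea behind these tools is comparing a declared desired state with what actually exists and working out the difference. The Python sketch below shows that comparison in miniature. The plan function and the resource dictionaries are hypothetical and far simpler than what Terraform or CloudFormation actually do.

```python
def plan(desired, actual):
    """Compare desired and actual resource maps and list the actions needed to converge."""
    actions = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
    return actions


desired = {"web-vm": {"size": "medium"}, "db-vm": {"size": "large"}}
actual = {"web-vm": {"size": "small"}, "old-vm": {"size": "small"}}
print(plan(desired, actual))
```

Because the plan is computed from declared state, applying the same definition twice produces no further changes, which is what makes infrastructure reproducible.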

Month 2: Advanced Skills and Practical Projects

Week 1-2: Configuration Management

Learn about configuration management tools like Ansible, Chef, or Puppet. Understand their role in automating server configuration and application deployment. Practice writing playbooks or manifests to manage system configurations effectively.
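Configuration management tools are built around idempotent operations: running the same step twice leaves the system in the same state. Here is a minimal Python sketch of that idea for a single setting in a text file. The ensure_line helper is hypothetical and only loosely inspired by what such tools offer.

```python
def ensure_line(path, line):
    """Append line to the file at path unless already present. Return True if changed."""
    try:
        with open(path, encoding="utf-8") as handle:
            content = handle.read()
    except FileNotFoundError:
        content = ""
    if line in content.splitlines():
        return False  # already configured, nothing to do
    # Start on a fresh line if the file does not end with a newline.
    prefix = "" if content == "" or content.endswith("\n") else "\n"
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(prefix + line + "\n")
    return True
```

The return value tells the caller whether anything changed, which is how a playbook or manifest run can report "changed" versus "ok" for each task.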

Week 3-4: Cloud Services and Deployment

Gain proficiency in cloud platforms such as AWS, Azure, or Google Cloud. Explore key services related to computing, storage, networking, and deployment. Learn to leverage cloud-native tools for building scalable and resilient architectures.

Week 5-6: Monitoring and Logging

Understand the importance of monitoring and logging in maintaining system health and diagnosing issues. Explore tools like Prometheus, Grafana, ELK stack, or Splunk for collecting and visualizing metrics and logs. Set up monitoring dashboards and alerts for practical experience.

Week 7-8: Security and Compliance

Familiarize yourself with DevSecOps principles and practices for integrating security into the DevOps lifecycle. Learn about security best practices, vulnerability scanning, and compliance standards relevant to your industry. Experiment with security tools and techniques to secure infrastructure and applications.

Conclusion

By following this two-month roadmap, you can build a solid foundation and acquire the necessary skills to become a job-ready DevOps engineer. Continuous learning and hands-on practice are crucial for mastering DevOps concepts and tools. Stay curious, engage with the DevOps community, and remain open to exploring new technologies and methodologies. With determination and perseverance, you’ll be well on your way to a successful DevOps engineering career.


Azure Development Demystified: Navigating the AZ-204 Certification

In today's rapidly changing technological landscape, cloud computing has become the foundation for businesses seeking scalability, flexibility, and efficiency. Among the various cloud service providers, Microsoft Azure stands out as a leading platform with a diverse set of services and solutions. For developers looking to demonstrate their expertise in Azure development, the Microsoft Azure Developer Associate Certification (AZ-204) is a critical milestone. This comprehensive guide delves into the complexities of AZ-204, including its significance, exam details, and preparation strategies.

Understanding AZ-204 Certification

The AZ-204 certification is intended for developers who specialize in designing, developing, testing, and maintaining cloud applications and services on Microsoft Azure. It validates the skills and knowledge needed to create solutions with Azure technologies such as compute, storage, security, and communication services.

Key Concepts Covered in AZ-204

1. Azure Development Tools and Technologies

AZ-204 evaluates candidates' ability to use various Azure development tools and technologies, including Azure SDKs, Azure CLI, Azure PowerShell, Azure Portal, and Azure DevOps. Understanding these tools is critical for the effective development, deployment, and management of Azure-based solutions.

2. Azure Compute Solutions

Candidates must demonstrate their ability to create Azure compute solutions using virtual machines, Azure App Service, Azure Functions, Azure Kubernetes Service (AKS), and Azure Batch. Mastery of these services enables developers to create scalable, resilient, and cost-effective Azure applications.

3. Azure Storage Solutions

Azure offers a variety of storage solutions that are tailored to specific application requirements. AZ-204 assesses candidates' understanding of Azure Blob storage, Azure Files, Azure Table storage, Azure Queue storage, and Azure Cosmos DB. Designing data-intensive applications requires proficiency in the use of these storage services.

4. Azure Security Implementation

Security is critical in cloud computing, and AZ-204 evaluates developers' abilities to implement secure solutions on Azure. Authentication, authorization, encryption, Azure Key Vault, Azure Active Directory (AAD), and Azure Role-Based Access Control (RBAC) are among the topics discussed.

5. Integration and Communication Services

Effective communication and integration are critical components of modern app development. AZ-204 addresses Azure services such as Azure Event Grid, Azure Service Bus, Azure Event Hubs, Azure Notification Hubs, and Azure Logic Apps. Competence in integrating these services enables developers to create seamless and interconnected applications.

AZ-204 Exam Details

The AZ-204 exam contains a variety of question types, including multiple-choice, scenario-based, and interactive items, and typically lasts about 150 minutes. Candidates must register for the exam through the Microsoft certification website and prepare adequately to ensure success.

Preparation Strategies for AZ-204

1. Understand the Exam Objectives

Familiarize yourself with Microsoft's official exam objectives. This will serve as a road map for your preparation, ensuring that you cover all of the necessary topics thoroughly.

2. Hands-on Experience

Practical experience is invaluable when preparing for AZ-204. Create Azure accounts, experiment with different services, and work on real-world projects to gain practical experience with Azure development.

3. Utilize Official Microsoft Documentation and Resources

Microsoft provides extensive documentation, tutorials, and learning paths specifically designed for AZ-204 preparation. Take advantage of these resources to improve your understanding of Azure services and development methodologies.

4. Enroll in Azure Training Courses

Consider enrolling in instructor-led training courses or online tutorials provided by Microsoft or its authorized partners. These courses combine structured learning experiences with expert guidance to help you master Azure development concepts.

5. Practice with Sample Questions and Mock Exams

Practice is essential for success in any certification exam. Use sample questions, practice tests, and mock exams to evaluate your knowledge, identify areas for improvement, and become familiar with the exam format.

Conclusion

The Microsoft Azure Developer Associate Certification (AZ-204) demonstrates an individual's ability to develop cloud applications and services on the Azure platform. Developers can improve their career prospects and make significant contributions to organizations that use Azure technologies by mastering the key concepts covered in AZ-204 and implementing effective preparation strategies. Embrace the Azure development journey and let AZ-204 certification serve as your badge of expertise in the cloud computing realm.

#AzureDevelopment#AZ204Certification#CloudComputing#MicrosoftAzure#DeveloperSkills#CertificationPreparation#CloudServices#AzureMastery#ITCertification#CareerDevelopment

0 notes

Text

Launch LLM Chatbot and Boost Gen AI Inference with Intel AMX

LLM Chatbot Development

Hi There, Developers! We are back and prepared to "turn the volume up" by using Intel Optimized Cloud Modules to demonstrate how to use our 4th Gen Intel Xeon Scalable CPUs for GenAI inferencing.

Boost Your Generative AI Inferencing Speed

Did you know that our newest Intel Xeon Scalable CPU, the 4th Generation model, includes AI accelerators? That's right: the CPU has a built-in AI accelerator that enables high-throughput generative AI inference and training without the need for specialized GPUs. This lets you use CPUs for both conventional workloads and AI, lowering your overall TCO.

For applications including natural language processing (NLP), image generation, recommendation systems, and image recognition, Intel Advanced Matrix Extensions (Intel AMX), a new built-in accelerator, provides better deep learning training and inference performance on the CPU. It focuses on the int8 and bfloat16 data types.

Setting up LLM Chatbot

In case you weren't aware, the 4th Gen Intel Xeon CPU is currently generally available on GCP (C3, H3 instances) and AWS (m7i, m7i-flex, c7i, and r7iz instances). Rather than merely talking about it, let's get ready to deploy your FastChat GenAI LLM chatbot on the 4th Gen Intel Xeon processor. Let's move along!

Intel Optimized Cloud Modules and Intel Optimized Cloud Recipes

Here are a few updates before we go into the code. At Intel, we invest a lot of effort to make it simple for DevOps teams and developers to use our products. The creation of the Intel Optimized Cloud Modules was a step in that direction. The Intel Optimized Cloud Recipes, or OCRs, are the modules' companions, which I'd like to introduce to you today.

Intel Optimized Cloud Recipes: What are they?

The Intel Optimized Cloud Recipes (OCRs), which use Red Hat Ansible and Microsoft PowerShell to optimize operating systems and software, are integrated with our cloud modules.

Here's How We Go About It

Enough reading; let's turn our attention to using the FastChat OCR and the GCP Virtual Machine Module. You will install your own generative AI LLM chatbot system on the 4th Gen Intel Xeon processor using the modules and the OCR. We will then demonstrate the power of the integrated Intel AMX accelerator for inferencing without the need for a discrete GPU. To provision VMs on GCP or AWS, you need a cloud account with access and permissions.

Implementation: GCP Steps

The steps below are outlined in the Module README.md; see the example below for more information.

Usage

Log on to the GCP portal

Enter the GCP Cloud Shell (click the terminal button on the top right of the portal page)

Run the following commands in order:

git clone https://github.com/intel/terraform-intel-gcp-vm.git
cd terraform-intel-gcp-vm/examples/gcp-linux-fastchat-simple
terraform init
terraform apply

Enter your GCP Project ID and "yes" to confirm
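The same sequence could be scripted. What follows is a minimal Python sketch, not part of the Intel module: the helper names `build_steps` and `run_steps` are hypothetical, and it assumes `git` and `terraform` are already on the PATH (as they normally are in Cloud Shell). Because `terraform apply` is called without extra flags, it still prompts for the project ID and for the "yes" confirmation.

```python
import subprocess
from pathlib import Path

# Repository and example folder taken from the commands above.
REPO_URL = "https://github.com/intel/terraform-intel-gcp-vm.git"
EXAMPLE_DIR = Path("terraform-intel-gcp-vm/examples/gcp-linux-fastchat-simple")


def build_steps(workdir="."):
    """Return (command, cwd) pairs mirroring the manual steps (hypothetical helper)."""
    base = Path(workdir)
    example = base / EXAMPLE_DIR
    return [
        (["git", "clone", REPO_URL], base),
        (["terraform", "init"], example),
        (["terraform", "apply"], example),
    ]


def run_steps(steps):
    """Run each command in order, stopping at the first failure."""
    for command, cwd in steps:
        # check=True raises CalledProcessError on a non-zero exit code.
        subprocess.run(command, cwd=cwd, check=True)


# Usage (not executed here): run_steps(build_steps())
```

Keeping the command list separate from the code that executes it makes it easy to print or review the steps before anything is provisioned.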

Running the Demo

1. Wait approximately 10 minutes for the recipe to download and install FastChat and the LLM model before continuing.

2. SSH into the newly created GCP VM.

3. Run: source /usr/local/bin/run_demo.sh

4. On your local computer, open a browser and navigate to http://:7860 . Get your public IP from the "Compute Engine" section of the VM in the GCP console. Alternatively, use the https://xxxxxxx.gradio.live URL that is generated during the demo startup (see the on-screen logs).
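While connected to the VM over SSH, it can be useful to confirm that the CPU actually reports Intel AMX support. The sketch below is an illustration rather than part of the recipe: it assumes a Linux guest whose kernel lists the `amx_tile`, `amx_int8`, and `amx_bf16` flags in `/proc/cpuinfo`, and the function names are hypothetical.

```python
# CPU feature flags that the Linux kernel uses for Intel AMX (assumed naming).
AMX_FLAGS = {"amx_tile", "amx_int8", "amx_bf16"}


def amx_flags(cpuinfo_text):
    """Return the AMX-related flags found in /proc/cpuinfo-style text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # Each "flags" line is a space-separated list of feature names.
            found |= AMX_FLAGS & set(value.split())
    return found


def read_cpuinfo(path="/proc/cpuinfo"):
    """Read the kernel's CPU description (Linux only)."""
    with open(path, encoding="utf-8") as handle:
        return handle.read()


# Usage on the VM (not executed here): print(sorted(amx_flags(read_cpuinfo())))
```

An empty result suggests the instance type or kernel does not expose AMX, in which case the demo would still run but without the acceleration described in this post.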

After launching the demo (Step 3) and navigating to the application (Step 4), "chat" with the model and observe Intel AMX in operation.

Using the Intel Developer Cloud instead of GCP or AWS for deployment

A virtual machine powered by a 4th Generation Intel Xeon Scalable Processor can also be created using the Intel Developer Cloud. For details on how to provision the virtual machine, see Intel Developer Cloud. After the virtual machine has been set up:

As directed by the Intel Developer Cloud, SSH into the virtual machine.

To run the automated recipe and launch the LLM chatbot, follow the AI Intel Optimized Cloud Recipe Instructions.

GenAI Inferencing: Intel AMX and 4th Gen Xeon Scalable Processors

I hope you have a chance to try generative AI inference! You can speed up your AI workloads and create the next wave of AI apps with the help of the 4th Gen Intel Xeon Scalable Processors with Intel AMX. By using our modules and recipes, you can quickly enable generative AI inferencing and start enjoying its advantages. Data scientists, researchers, and developers can all advance generative AI.

0 notes

Text

Planning and Administering Microsoft Azure for SAP Workloads AZ-120

This course teaches IT professionals with experience in SAP solutions how to take advantage of Azure resources, including the deployment and configuration of virtual machines, virtual networks, storage accounts, and Azure AD, including the implementation and management of hybrid identities. Students in this course will learn through concepts, scenarios, procedures, and hands-on labs how to better plan and implement the migration and operation of an SAP solution on Azure. You will receive guidance on subscriptions and learn to create and scale virtual machines, implement storage solutions, configure virtual networks, back up and share data, connect Azure and on-premises sites, manage network traffic, implement Azure Active Directory, protect identities, and monitor the solution.

This course is intended for Azure administrators migrating and managing SAP solutions on Azure. Azure administrators manage cloud services spanning storage, networking, and cloud computing functions, with a deep understanding of each service throughout the IT lifecycle. They take end-user requests for new cloud applications and make recommendations on which services to use for optimal performance and scale, and provision, scale, monitor, and tune as appropriate. This role requires communicating and coordinating with vendors. Azure administrators use the Azure portal and, as they become more proficient, PowerShell and the command line interface.

Azure for SAP Workloads Specialty

Module 1: Explore Azure for SAP workloads

Contains lessons covering Azure for SAP workloads, common SAP and Azure terms and definitions, SAP certified configurations, and architectures for SAP NetWeaver with AnyDB and SAP S/4HANA on Azure VMs.

Module 2: Exploring the foundation of infrastructure as a service (IaaS) for SAP on Azure

Contains lessons on Azure compute, Azure storage, Azure networking, and Azure databases.

Lab : Implementing Linux Clustering for SAP on Azure VMs

Lab : Implementing Windows Clustering for SAP on Azure VMs

Module 3: Fundamentals of Identity and Governance for SAP on Azure

Contains lessons on identity services, Azure remote management and manageability, and governance in Azure.

Module 4: Implementation of SAP on Azure

Contains lessons on implementing single instance (2-tier and 3-tier) deployments and implementing high availability in SAP NetWeaver with AnyDB on Azure VMs.

Lab : Implementing SAP architecture on Azure VMs running Windows

Lab : Implementing SAP architecture on Azure VMs running Linux

Module 5: Ensuring Business Continuity and Implementing Disaster Recovery for SAP Solutions on Azure

Contains lessons on implementing high availability for SAP workloads on Azure, disaster recovery for SAP workloads on Azure, and backup and restore.

Module 6: Migrating SAP workloads to Azure

Contains lessons on using the SAP Workload Planning and Implementation Checklist, migration options, including the Database Migration Option (DMO) methodology and cloud migration options, and how to migrate large databases (VLDB) to Azure.

Module 7: Monitoring and troubleshooting Azure for SAP workloads

Contains lessons on Azure monitoring requirements for SAP workloads, configuring the Azure Enhanced Monitoring extension for SAP, and Azure virtual machine licensing, pricing, and support.

1 note

·

View note

Text

How to deploy MBAM Client to Computers as Part of a Windows Deployment

#deployment#Deployment Image Servicing and Management#Invoke-MbamClientDeployment.ps1#MBAM#MDT#Microsoft BitLocker Administration and Monitoring#Microsoft Deployment Tool kit#Microsoft Windows#PowerShell Deployment Extension#WDS#Windows#Windows 10#Windows 11#Windows Deployment#Windows Deployment Services#Windows Server#Windows Server 2012#Windows Server 2019#Windows Server 2022

0 notes

Text

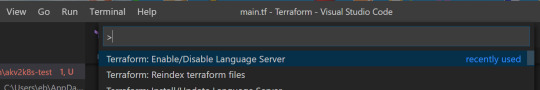

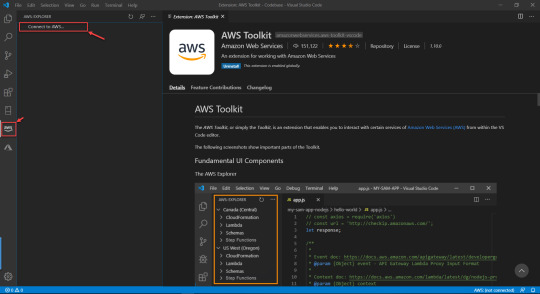

Terraform Visual Studio Code

Terraform is an open-source tool created by HashiCorp for building, changing, and versioning infrastructure safely and efficiently. It follows the 'Infrastructure as Code' approach, which lets users define and provision infrastructure using a high-level configuration language.

The Terraform extension for Visual Studio Code adds syntax support for the Terraform and Terragrunt configuration languages.

I’ve been doing more work with Terraform and Azure recently, particularly around deployments that need to deploy both Azure and non-Azure resources at the same time. In particular, this has focussed on creating AKS clusters and then using Terraform to set these up and deploy all the standard services on top of the cluster. I’ve also been live streaming some of this work on Twitch, so if you’re interested in watching how this is done, or want to ask a question, please do join me.

When I started this work, I stumbled across the Azure Terraform extension for VS Code. This is a Microsoft extension, but I hadn’t seen it before, and I’ve found it very useful during the development process. What this extension does is provide integration between VS Code and Terraform running in Azure Cloud Shell. It provides commands for all the standard Terraform functions, but where it is different is it runs all these commands in Cloud shell, not on my local machine. My Terraform files are on my machine (in this case checked out from Git), but when I run “Azure Terraform Plan”, the extension takes care of syncing the latest copy of the file up to cloud shell, and then running the “Plan” command directly in Cloud Shell.

By utilising Cloud Shell, I get several benefits:

I don’t have to care about authenticating to Azure in my Terraform files; this is taken care of for me by Cloud Shell. No need to create service principals or set up a provider with credentials. I can get up and running with Terraform very quickly

My commands execute in Cloud Shell, so if I have any connectivity issues from my local machine, they should not impact things

Cloud Shell is kept up to date with the latest version of Terraform and any dependencies

All my Terraform files are synced with my storage in Cloud Shell

One thing to bear in mind, however, is that this is very much designed as a development tool, to help you create your Terraform scripts in the first place. This is not generally the approach you would use to run deployments in production, particularly if you need governance around your process. Moving to production would usually involve checking the files you created using VS Code and this extension into version control and building a proper pipeline. Given all that, let’s take a look at how to get set up.

Pre-Requisites

There are a few things you need to have installed to use this extension:

Visual Studio Code (obviously)

Node.js 6.0 or higher - this is needed to run Cloud Shell in VS Code

You might also want to add some optional components that make life better:

Terraform - all commands run in Cloud Shell, so you don’t strictly need this, but having it installed locally is useful if you want the option to run some commands (like validate) on your own machine

Terraform VS Code Plugin - The standard VS Code plugin that gives you auto-completion, syntax highlighting, linting etc.

GraphViz - This is entirely optional, but if you want to use the visualisation feature you will need it

Extension

Once all the pre-requisites are installed, we can install the extension by searching for “Azure Terraform” in the VS Code plugin gallery, or going to this page.

Once the plugin is installed, open up an existing Terraform project, or create a new one. Make sure you open the containing folder in VS Code, as it is this folder that gets created on your Cloud Drive.

When you are ready to start running your project, open the VS Code command palette (Ctrl+Shift+P on Windows) and look for “Azure Terraform Init”. Run this, and VS Code will ask you if you want to open Cloud Shell; click OK.

If your account is connected to more than one Azure AD tenant, VS Code will ask you which tenant to connect to; pick the appropriate one.

You will see in the Shell that it tries to CD into your project directory in Cloud Shell but fails as it does not exist. It will then create the directory, and you will see a message indicating “Terraform initialized in an empty directory!”.

At this point, the plugin has created your directory in Cloud Shell, but to initialise Terraform we need to rerun Init. So open the VS Code command palette again and go to “Azure Terraform Init”. This time you will see it CD into your directory and then run init, which installs all the required Terraform modules. You now have Terraform initialised and all your files in Cloud Shell.

The Cloud Shell console will remain open, so you can run manual commands to check your files if you wish.

That was all the setup there is. Now, whenever you run one of the Azure Terraform commands VS Code will sync the latest version of your files to Cloud Shell and then run the requested Terraform Command. The extension supports the following standard Terraform commands:

Init

Plan

Apply

Validate

Refresh

Destroy
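For reference, each of these extension commands corresponds to a Terraform CLI subcommand of the same name. The sketch below shows that mapping in Python; it is only an illustration of what an equivalent local invocation might look like, not how the extension is implemented (the extension runs the commands in Cloud Shell). The helper name `terraform_command` is hypothetical, and the `-chdir` global option assumes a reasonably recent Terraform release.

```python
# The six standard commands listed above, as Terraform CLI subcommands.
STANDARD_COMMANDS = ("init", "plan", "apply", "validate", "refresh", "destroy")


def terraform_command(name, working_dir=None):
    """Return the argv list for one of the standard commands (hypothetical helper)."""
    if name not in STANDARD_COMMANDS:
        raise ValueError(f"unsupported command: {name}")
    argv = ["terraform"]
    if working_dir is not None:
        # -chdir is a global option, so it goes before the subcommand.
        argv.append(f"-chdir={working_dir}")
    argv.append(name)
    return argv


# Usage (not executed here):
#   import subprocess
#   subprocess.run(terraform_command("validate", "infra"), check=True)
```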

Additional Commands

As well as the 6 commands listed above, this plugin also provides some extra commands:

Azure Terraform Visualize

If you installed the GraphViz pre-requisite, you can use this command to generate a visual representation of your templates. This command runs locally, not in Cloud Shell. Once you run it, it will seem to not do anything for a while, but eventually, it will create a png with a graph of your deployment.

I’m not yet sure how useful this feature is, but it is a helpful tool to have.
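A similar picture can be produced by hand with the Terraform CLI and GraphViz: `terraform graph` prints the dependency graph in DOT format, and `dot -Tpng` renders it. The Python sketch below pipes one into the other. It is an assumption about an equivalent manual workflow, not a description of what the extension does internally, and the function names are hypothetical.

```python
import subprocess


def graph_commands(output_png="graph.png"):
    """Return the two argv lists: produce DOT text, then render it to a PNG."""
    return ["terraform", "graph"], ["dot", "-Tpng", "-o", output_png]


def render_graph(output_png="graph.png", working_dir="."):
    """Pipe `terraform graph` output through GraphViz `dot` (both must be installed)."""
    produce, render = graph_commands(output_png)
    dot_source = subprocess.run(
        produce, cwd=working_dir, check=True, capture_output=True
    ).stdout
    # Feed the DOT bytes to `dot` on standard input.
    subprocess.run(render, input=dot_source, cwd=working_dir, check=True)
    return output_png


# Usage (not executed here): render_graph("deployment.png", "path/to/project")
```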

Azure Terraform Push

This command pushes the latest versions of your files up to Cloud Shell, for when you want to trigger a sync outside of the other commands.

Azure Terraform Execute Test

This command allows you to run any tests you have set up against your Terraform code. It has two values:

lint - this will run tests to check the formatting of the code

e2e - this will take your Terraform code, and the settings in your variables file and actually run a deployment, test everything works, and then destroy the resources, so a full end to end test

1 note

·

View note

Photo

hydralisk98′s web projects tracker:

Core principles=

Fail faster

‘Learn, Tweak, Make’ loop

This is meant to be a quick reference for tracking progress made over my various projects, organized by their “ultimate target” goal:

(START)

(Website)=

Install Firefox

Install Chrome

Install Microsoft newest browser

Install Lynx

Learn about contemporary web browsers

Install a very basic text editor

Install Notepad++

Install Nano

Install Powershell

Install Bash

Install Git

Learn HTML

Elements and attributes

Commenting (single line comment, multi-line comment)

Head (title, meta, charset, language, link, style, description, keywords, author, viewport, script, base, url-encode, )

Hyperlinks (local, external, link titles, relative filepaths, absolute filepaths)

Headings (h1-h6, horizontal rules)

Paragraphs (pre, line breaks)

Text formatting (bold, italic, deleted, inserted, subscript, superscript, marked)

Quotations (quote, blockquote, abbreviations, address, cite, bidirectional override)

Entities & symbols (&entity_name;, &#entity_number;, &nbsp;, useful HTML character entities, diacritical marks, mathematical symbols, greek letters, currency symbols)

Id (bookmarks)

Classes (select elements, multiple classes, different tags can share same class, )

Blocks & Inlines (div, span)

Computercode (kbd, samp, code, var)

Lists (ordered, unordered, description lists, control list counting, nesting)

Tables (colspan, rowspan, caption, colgroup, thead, tbody, tfoot, th)

Images (src, alt, width, height, animated, link, map, area, usemap, picture, picture for format support)

old fashioned audio

old fashioned video

Iframes (URL src, name, target)

Forms (input types, action, method, GET, POST, name, fieldset, accept-charset, autocomplete, enctype, novalidate, target, form elements, input attributes)

URL encode (scheme, prefix, domain, port, path, filename, ascii-encodings)

Learn about oldest web browsers onwards

Learn early HTML versions (doctypes & permitted elements for each version)

Make a 90s-like web page compatible with as much early web formats as possible, earliest web browsers’ compatibility is best here

Learn how to teach HTML5 features to most if not all older browsers

Install Adobe XD

Register an account at Figma

Learn Adobe XD basics

Learn Figma basics

Install Microsoft’s VS Code

Install my Microsoft’s VS Code favorite extensions

Learn HTML5

Semantic elements

Layouts

Graphics (SVG, canvas)

Track

Audio

Video

Embed

APIs (geolocation, drag and drop, local storage, application cache, web workers, server-sent events, )

HTML5 Shiv for teaching older browsers HTML5

HTML5 style guide and coding conventions (doctype, clean tidy well-formed code, lower case element names, close all html elements, close empty html elements, quote attribute values, image attributes, space and equal signs, avoid long code lines, blank lines, indentation, keep html, keep head, keep body, meta data, viewport, comments, stylesheets, loading JS into html, accessing HTML elements with JS, use lowercase file names, file extensions, index/default)

Learn CSS

Selections

Colors

Fonts

Positioning

Box model

Grid

Flexbox

Custom properties

Transitions

Animate

Make a simple modern static site

Learn responsive design

Viewport

Media queries

Fluid widths

rem units over px

Mobile first

Learn SASS

Variables

Nesting

Conditionals

Functions

Learn about CSS frameworks

Learn Bootstrap

Learn Tailwind CSS

Learn JS

Fundamentals

Document Object Model / DOM

JavaScript Object Notation / JSON

Fetch API

Modern JS (ES6+)

Learn Git

Learn Browser Dev Tools

Learn your VS Code extensions

Learn Emmet

Learn NPM

Learn Yarn

Learn Axios

Learn Webpack

Learn Parcel

Learn basic deployment

Domain registration (Namecheap)

Managed hosting (InMotion, Hostgator, Bluehost)

Static hosting (Netlify, Github Pages)

SSL certificate

FTP

SFTP

SSH

CLI

Make a fancy front end website about

Make a few Tumblr themes

===You are now a basic front end developer!

Learn about XML dialects

Learn XML

Learn about JS frameworks

Learn jQuery

Learn React

Context API with Hooks

Next.js

Learn Vue.js

Vuex

NUXT

Learn Svelte

NUXT (Vue)

Learn Gatsby

Learn Gridsome

Learn Typescript

Make an epic front end website about

===You are now a front-end wizard!

Learn Node.js

Express

Nest.js

Koa

Learn Python

Django

Flask

Learn GoLang

Revel

Learn PHP

Laravel

Slim

Symfony

Learn Ruby

Ruby on Rails

Sinatra

Learn SQL

PostgreSQL

MySQL

Learn ORM

Learn ODM

Learn NoSQL

MongoDB

RethinkDB

CouchDB

Learn a cloud database

Firebase, Azure Cloud DB, AWS

Learn a lightweight & cache variant

Redis

SQLite

NeDB

Learn GraphQL

Learn about CMSes

Learn Wordpress

Learn Drupal

Learn Keystone

Learn Enduro

Learn Contentful

Learn Sanity

Learn Jekyll

Learn about DevOps

Learn NGINX

Learn Apache

Learn Linode

Learn Heroku

Learn Azure

Learn Docker

Learn testing

Learn load balancing

===You are now a good full stack developer

Learn about mobile development

Learn Dart

Learn Flutter

Learn React Native

Learn Nativescript

Learn Ionic

Learn progressive web apps

Learn Electron

Learn JAMstack

Learn serverless architecture

Learn API-first design

Learn data science

Learn machine learning

Learn deep learning

Learn speech recognition

Learn web assembly

===You are now an epic full stack developer

Make a web browser

Make a web server

===You are now a legendary full stack developer

[...]

(Computer system)=

Learn to execute and test your code in a command line interface

Learn to use breakpoints and debuggers

Learn Bash

Learn fish

Learn Zsh

Learn Vim

Learn nano

Learn Notepad++

Learn VS Code

Learn Brackets

Learn Atom

Learn Geany

Learn Neovim

Learn Python

Learn Java?

Learn R

Learn Swift?

Learn Go-lang?

Learn Common Lisp

Learn Clojure (& ClojureScript)

Learn Scheme

Learn C++

Learn C

Learn B

Learn Mesa

Learn Brainfuck

Learn Assembly

Learn Machine Code

Learn how to manage I/O

Make a keypad

Make a keyboard

Make a mouse

Make a light pen

Make a small LCD display

Make a small LED display

Make a teleprinter terminal

Make a medium raster CRT display

Make a small vector CRT display

Make larger LED displays

Make a few CRT displays

Learn how to manage computer memory

Make datasettes

Make a datasette deck

Make floppy disks

Make a floppy drive

Learn how to control data

Learn binary base

Learn hexadecimal base

Learn octal base

Learn registers

Learn timing information

Learn assembly common mnemonics

Learn arithmetic operations

Learn logic operations (AND, OR, XOR, NOT, NAND, NOR, NXOR, IMPLY)

Learn masking

Learn assembly language basics

Learn stack construct’s operations

Learn calling conventions

Learn to use Application Binary Interface or ABI

Learn to make your own ABIs

Learn to use memory maps

Learn to make memory maps

Make a clock

Make a front panel

Make a calculator

Learn about existing instruction sets (Intel, ARM, RISC-V, PIC, AVR, SPARC, MIPS, Intersil 6120, Z80...)

Design an instruction set

Compose an assembler

Compose a disassembler

Compose an emulator

Write a B-derivative programming language (somewhat similar to C)

Write an IPL-derivative programming language (somewhat similar to Lisp and Scheme)

Write a general markup language (like GML, SGML, HTML, XML...)

Write a Turing tarpit (like Brainfuck)

Write a scripting language (like Bash)

Write a database system (like VisiCalc or SQL)

Write a CLI shell (basic operating system like Unix or CP/M)

Write a single-user GUI operating system (like Xerox Star’s Pilot)

Write a multi-user GUI operating system (like Linux)

Write various software utilities for my various OSes

Write various games for my various OSes

Write various niche applications for my various OSes

Implement an awesome model in very large scale integration, like the Commodore CBM-II

Implement an epic model in integrated circuits, like the DEC PDP-15

Implement a modest model in transistor-transistor logic, similar to the DEC PDP-12

Implement a simple model in diode-transistor logic, like the original DEC PDP-8

Implement a simpler model in later vacuum tubes, like the IBM 700 series

Implement simplest model in early vacuum tubes, like the EDSAC

[...]

(Conlang)=

Choose sounds

Choose phonotactics

[...]

(Animation ‘movie’)=

[...]

(Exploration top-down ’racing game’)=

[...]

(Video dictionary)=

[...]

(Grand strategy game)=

[...]

(Telex system)=

[...]

(Pen&paper tabletop game)=

[...]

(Search engine)=

[...]

(Microlearning system)=

[...]

(Alternate planet)=

[...]

(END)

4 notes

·

View notes

Text

IT / OT SPECIALIZED APPLICATIONS LEAD - 202302-104997

Location: Oceanside, California

Industry: Pharmaceutical / Biotech

Job Category: Information Technology - Other IT

Are you passionate about Information (IT) and Automation Technology (OT) and aspiring to make a meaningful impact? Are you curious to shape a digital manufacturing architecture, ready to advance competitiveness on the market?

We Make Medicines! Behind every product sold by the company is Pharma Global Technical Operations (PT). Starting with Phase I of the development process and continuing through to product maturity, PT makes lifesaving medicines at 11 locations, with the support of partners from around the world. Information (IT) and Automation Technology (OT) teams are key in producing and delivering medicine to patients. The organization is currently transforming towards digitalization, advancing fundamental elements to meet future needs, such as using new technologies.

We are looking for a highly motivated engineer to take on the role of IT / OT Specialized Application Lead as part of the Site IT / OT Organization in a 24x7 Good Manufacturing Practice (GMP) environment.

The successful candidate will be:

Site Owner of “Customized Applications” that interface with Manufacturing System Platforms such as OSI PI (Plant Historian), Syncade (MES), DeltaV (Distributed Control System), PLCs, and Lab Data Systems (Smartline Data Cockpit) using OPC or other middleware. Examples of custom applications include: reports/reporting tools, dashboards

Skilled at scripting and programming, preferably in a manufacturing systems environment (Java, C++, Python, PowerShell)

Support Lead for custom applications but also knowledgeable in Operational Technology Systems

May eventually expand the role to become a Regional role for multiple sites supporting IT / OT operating model evolution

What you will be working on:

Development and Lifecycle management of the customized applications and related systems

Support the implementation of OT standards and best practices across all sites

Support commissioning and startup activity of new process control systems and manufacturing systems (Syncade, DeltaV, PLCs, OSI PI historian, etc.)

Support the execution of computer system validation and control system lifecycle management

Develop and maintain GMP/Non-GMP design documentation and engineering diagrams

Execute testing and installation of system/database patches, upgrades, and new releases

Troubleshoot and resolve incidents and problems associated with the system/databases and applications

Execute implementation and delivery of projects on-site IT OT Product Portfolio

Operate and execute change control process for system deployment and release management of system/database software across Good Manufacturing Practice (GMP) validated and non-GMP environments

Requirements / Qualifications:

Bachelor’s degree in Engineering, Computer Science, or equivalent experience

A minimum of 5 years systems engineer experience involved in the design, implementation, and/or support of automation systems, preferably in a regulated (Pharmaceutical) industry. Candidates with 8-10 years of relevant experience are preferred.

24x7 support: participate in an on-call environment to meet business continuity requirements, including weekends and holidays as required

Extensive experience with scripting and programming in various languages (Java, C++, Python, PowerShell), preferably in a Manufacturing Systems environment

Experience with Microsoft Windows Server operating system, Microsoft SQL Server, and development tools

Experience with Emerson DCS DeltaV, MES Syncade, OSIsoft PI, Rockwell Automation AssetCentre, and various OPC architectures

Onsite at Oceanside, CA, required (no remote)

Relocation assistance will be considered for exceptional candidates

Security Clearance Required: No

Visa Candidate Considered: No

0 notes

Text

No.1 Training institute for MS Azure DevOps in India 2023 NareshIT

About the Microsoft Azure DevOps Online Training :

Azure DevOps Online Training makes you skilled in DevOps concepts like Continuous Integration (CI), Continuous Deployment or Continuous Delivery (CD), and Continuous Monitoring, using Azure DevOps. It covers training on Sprint Planning and Tracking, Azure Repos, Azure Pipelines, Azure Test Plans, Azure Artifacts, and Extensions for Azure DevOps. The Azure DevOps Training will help you learn how to design and plan tooling for collaboration and source code management.

Pre-requisites for learning Microsoft Azure DevOps Course:

Foundational knowledge on Cloud Technologies.

Basic understanding of Databases, Networking Concepts.

Basic knowledge of Continuous Integration and Continuous Deployment (CI/CD).

Fundamentals of PowerShell.

Demo Details :

Enroll Now: https://bit.ly/3KrO6eD

Attend Free Online Demo On MS-Azure+DevOps by Real Time Expert.

Demo on: 13th April @ 09:00 AM (IST)

0 notes

Text

Ark Core Desktop Wallet