#ResponsibleTech

Text

Prince Harry Champions Service and Purpose at NEXUS Summit

NEED TO KNOW: Prince Harry made a surprise appearance at the 2025 NEXUS Global Summit in New York City on June 27. He discussed his personal evolution from royal duty to purpose-driven leadership with the Archewell Foundation. The Duke called for grassroots solutions, mental health investment, and responsible tech that puts people first. On June 27, the Duke of Sussex made an unannounced…

#ChangeMakers#EmpoweringYouth#FutureLeaders#GenerationZ#Innovation#Prince Harry#ResponsibleTech#SocialImpact#YouthSolutions#YouthVoice

6 notes

Text

You Won't Believe How Easy It Is to Implement Ethical AI

#ResponsibleAI#EthicalAI#AIPrinciples#DataPrivacy#AITransparency#AIFairness#TechEthics#AIImplementation#GenerativeAI#AI#MachineLearning#ArtificialIntelligence#AIRevolution#AIandPrivacy#AIForGood#FairAI#BiasInAI#AIRegulation#EthicalTech#AICompliance#ResponsibleTech#AIInnovation#FutureOfAI#AITraining#DataEthics#EthicalAIImplementation#artificial intelligence#artists on tumblr#artwork#accounting

2 notes

Text

#AI2025#FutureOfTech#ArtificialIntelligence#HumanVsAI#EthicalAI#AIandReality#AITrends#ResponsibleTech#Deepfakes#Chatbots#AIRevolution#ThinkBeforeYouClick#TechForGood#SmartTech#DigitalEthics#AIWorld#TechAwareness

0 notes

Text

Explainable AI (XAI) and Ethical AI: Opening the Black Box of Machine Learning

Artificial Intelligence (AI) systems have transitioned from academic experiments to mainstream tools that influence critical decisions in healthcare, finance, criminal justice, and more. With this growth, a key challenge has emerged: understanding how and why AI models make the decisions they do.

This is where Explainable AI (XAI) and Ethical AI come into play.

Explainable AI is about transparency: making AI decisions understandable and justifiable. Ethical AI focuses on ensuring these decisions are fair, responsible, and aligned with societal values and legal standards. Together, they address the growing demand for AI systems that not only work well but also work ethically.

🔍 Why Explainability Matters in AI

Most traditional machine learning algorithms, like linear regression or decision trees, offer a certain degree of interpretability. However, modern AI relies heavily on complex, black-box models such as deep neural networks, ensemble methods, and large transformer-based models.

These high-performing models often trade interpretability for accuracy. That trade-off may be acceptable in domains like advertising or product recommendations, but it becomes problematic when these models are used to determine:

Who gets approved for a loan,

Which patients receive urgent care,

Or how long a prison sentence should be.

Without a clear understanding of why a model makes a decision, stakeholders cannot fully trust or challenge its outcomes. This lack of transparency can lead to public mistrust, regulatory violations, and real harm to individuals.

🛠️ Popular Techniques for Explainable AI

Several methods and tools have emerged to bring transparency to AI systems. Among the most widely adopted are SHAP and LIME.

1. SHAP (SHapley Additive exPlanations)

SHAP is based on Shapley values from cooperative game theory. It explains an individual prediction by assigning each feature an importance value: that feature's contribution to the difference between the prediction and the model's baseline (average) output.

Key Advantages:

Consistent and mathematically grounded: a prediction's feature attributions add up exactly to its difference from the baseline output.

Model-agnostic, though especially efficient with tree-based models.

Provides local (individual prediction) and global (overall model behavior) explanations.

Example:

In a loan approval model, SHAP could reveal that a customer’s low income and recent missed payments had the largest negative impact on the decision, while a long credit history had a positive effect.
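To make the loan example concrete, here is a minimal sketch that computes exact Shapley values by enumerating every coalition of features, using only the Python standard library. The scoring function `loan_score`, its weights, and the applicant and baseline values are hypothetical, chosen only for illustration. Features left out of a coalition take the baseline applicant's values. The `shap` library instead relies on faster algorithms (TreeSHAP for tree models, sampling-based approximations for arbitrary models) and usually averages over a background dataset, so this shows the underlying idea rather than that library's API.

```python
from itertools import combinations
from math import factorial

FEATURES = ["income", "missed_payments", "credit_years"]


def loan_score(income, missed_payments, credit_years):
    """Hypothetical loan score (higher = more likely approved); not a real credit model."""
    score = 0.4 * (income / 10_000) - 1.5 * missed_payments + 0.3 * credit_years
    # Interaction: missed payments weigh more heavily when income is low.
    if income < 30_000:
        score -= 0.5 * missed_payments
    return score


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline values."""
    n = len(FEATURES)

    def value(coalition):
        args = {f: (x[f] if f in coalition else baseline[f]) for f in FEATURES}
        return model(**args)

    phi = {}
    for feature in FEATURES:
        others = [f for f in FEATURES if f != feature]
        total = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                coalition = set(subset)
                # Shapley weight: |S|! * (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                total += weight * (value(coalition | {feature}) - value(coalition))
        phi[feature] = total
    return phi


applicant = {"income": 25_000, "missed_payments": 2, "credit_years": 12}
baseline = {"income": 50_000, "missed_payments": 0, "credit_years": 5}
phi = shapley_values(loan_score, applicant, baseline)
for name, contribution in phi.items():
    print(f"{name:>16}: {contribution:+.2f}")
# Local accuracy: the contributions sum to f(applicant) - f(baseline).
print(f"sum = {sum(phi.values()):+.2f}, "
      f"f(x) - f(baseline) = {loan_score(**applicant) - loan_score(**baseline):+.2f}")
```

For this applicant the sketch attributes about -1.5 to income, -3.5 to missed payments, and +2.1 to credit history, matching the pattern described above; the low-income penalty on missed payments is split equally between the two features involved. Exact enumeration needs 2^(n-1) coalitions per feature, which is why practical tools approximate or exploit model structure.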

2. LIME (Local Interpretable Model-agnostic Explanations)

LIME approximates a complex model with a simpler, interpretable model locally around a specific prediction. It identifies which features influenced the outcome the most in that local area.

Benefits:

Works with any model type (black-box or not).

Especially useful for text, image, and tabular data.

Fast and relatively easy to implement.

Example:

For an AI that classifies news articles, LIME might highlight certain keywords that influenced the model to label an article as “fake news.”
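The local-surrogate idea behind LIME can be sketched in plain Python for the news-classification example. Everything here is an illustrative assumption: `fake_news_prob` is a hypothetical stand-in for a black-box classifier, the headline is invented, and the sampling scheme, kernel width, and small ridge penalty are our own choices rather than the `lime` package's defaults. The sketch removes random subsets of words, queries the model on each perturbed text, weights each sample by how close it stays to the original, and fits a weighted linear model whose coefficients act as per-word explanations.

```python
import math
import random

# Hypothetical black-box classifier: P("fake news") rises with trigger words.
TRIGGER_WEIGHTS = {"shocking": 2.0, "miracle": 1.5, "secret": 1.0}


def fake_news_prob(text):
    z = -3.0 + sum(TRIGGER_WEIGHTS.get(w, 0.0) for w in text.lower().split())
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def explain_text(predict_proba, text, num_samples=1000, width=0.25, ridge=0.01, seed=0):
    words = text.split()
    d = len(words)
    rng = random.Random(seed)
    masks = [[1] * d]  # the unperturbed text is always included
    for _ in range(num_samples - 1):
        removed = set(rng.sample(range(d), rng.randint(1, d)))
        masks.append([0 if i in removed else 1 for i in range(d)])

    rows, targets, weights = [], [], []
    for mask in masks:
        kept = sum(mask)
        perturbed = " ".join(w for w, keep in zip(words, mask) if keep)
        # Cosine distance between the mask and the all-ones (original) vector.
        distance = 1.0 - math.sqrt(kept / d) if kept else 1.0
        weights.append(math.exp(-(distance ** 2) / width ** 2))
        rows.append([1.0] + [float(bit) for bit in mask])  # leading 1.0 = intercept
        targets.append(predict_proba(perturbed))

    # Weighted ridge normal equations: (X^T W X + ridge * I) beta = X^T W y.
    p = d + 1
    a = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(p)]
         for i in range(p)]
    b = [sum(w * r[i] * y for r, y, w in zip(rows, targets, weights)) for i in range(p)]
    for i in range(1, p):  # do not penalize the intercept
        a[i][i] += ridge
    beta = solve(a, b)
    return sorted(zip(words, beta[1:]), key=lambda pair: pair[1], reverse=True)


headline = "shocking miracle cure doctors reveal secret"
explanation = explain_text(fake_news_prob, headline)
for word, weight in explanation:
    print(f"{word:>10}: {weight:+.3f}")
```

With this toy model, "shocking", "miracle", and "secret" should receive the largest positive weights, while words the classifier ignores stay close to zero. Because the explanation depends on random sampling, a different seed or sample size can shift the weights, a known caveat of LIME-style explanations.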

⚖️ Ethical AI: The Other Half of the Equation

While explainability helps users understand model behavior, Ethical AI ensures that behavior is aligned with human rights, fairness, and societal norms.

AI systems can unintentionally replicate or even amplify historical biases found in training data. For example:

A recruitment AI trained on resumes of past hires might discriminate against women if the training data was male-dominated.

A predictive policing algorithm could target marginalized communities more often due to biased historical crime data.

Principles of Ethical AI:

Fairness – Avoid discrimination and ensure equitable outcomes across groups.

Accountability – Assign responsibility for decisions and outcomes.

Transparency – Clearly communicate how and why decisions are made.

Privacy – Protect personal data and respect consent.

Human Oversight – Ensure humans remain in control of important decisions.
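One common way to put the human-oversight principle into practice is a routing rule that lets a model finalize only low-risk, favorable outcomes and sends everything else to a person. The sketch below is hypothetical: the 0.9 threshold, the `high_stakes` flag, and the outcome labels are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    outcome: str  # "approve" or "human_review"
    reason: str


def route(score: float, approve_threshold: float = 0.9, high_stakes: bool = False) -> Decision:
    """Decide whether a model score may be acted on automatically."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability in [0, 1]")
    if high_stakes:
        return Decision("human_review", "case flagged as high-stakes")
    if score >= approve_threshold:
        return Decision("approve", "model is confident in a favorable outcome")
    # Adverse or uncertain outcomes are never finalized without a person.
    return Decision("human_review", "adverse or uncertain outcome")


for score, stakes in [(0.95, False), (0.60, False), (0.95, True)]:
    print(score, stakes, route(score, high_stakes=stakes))
```

Routing every adverse outcome to a person trades throughput for accountability; where to set the threshold is a policy decision as much as a modeling one.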

🧭 Governance Frameworks and Regulations

As AI adoption grows, governments and institutions have started creating legal frameworks to ensure AI is used ethically and responsibly.

Major Guidelines:

European Union’s AI Act – A regulation, in force since August 2024, that sets transparency, documentation, and human-oversight requirements for high-risk AI systems.

OECD Principles on AI – Promoting AI that is innovative and trustworthy and that respects human rights and democratic values.

NIST AI Risk Management Framework (USA) – Encouraging transparency, fairness, and reliability in AI systems.

Organizational Practices:

Model Cards – Documentation outlining model performance, limitations, and intended uses.

Datasheets for Datasets – Describing dataset creation, collection processes, and potential biases.

Bias Audits – Regular evaluations to detect and mitigate algorithmic bias.
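A bias audit usually starts with simple group-level comparisons. The sketch below computes the selection rate per group, the gap between the highest and lowest rates (demographic parity difference), and their ratio; a ratio below 0.8 is flagged, echoing the "four-fifths rule" used as a rule of thumb in U.S. employment guidance. The predictions and group labels are made up for illustration, and a real audit would examine several metrics (for example, error rates per group) rather than this one alone.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (e.g. 'advance' or 'approve') per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred)
    return {g: positives[g] / totals[g] for g in totals}


def audit(predictions, groups, threshold=0.8):
    rates = selection_rates(predictions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "selection_rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": ratio,
        "flagged": ratio < threshold,
    }


# Hypothetical screening-model outputs for 20 applicants (1 = advance to interview).
predictions = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
groups = ["men"] * 10 + ["women"] * 10
report = audit(predictions, groups)
print(report)
```

Here men advance at a rate of 0.6 and women at 0.3, giving an impact ratio of 0.5, so the audit flags the model for closer review. A flag is a prompt to investigate, not proof of discrimination on its own.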

🧪 Real-World Applications of XAI and Ethical AI

1. Healthcare

Hospitals use machine learning to predict patient deterioration. But if clinicians don’t understand the reasoning behind alerts, they may ignore them. With SHAP, a hospital might show that low oxygen levels and sudden temperature spikes are key drivers behind an alert, boosting clinician trust.

2. Finance

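Banks use AI to assess creditworthiness. LIME can help explain to customers why they were denied a loan, highlighting specific credit behaviors and enabling corrective action. This matters for compliance in jurisdictions that require lenders to state the principal reasons for a denial, such as the adverse action notices required in the U.S.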

3. Criminal Justice

Risk assessment tools predict the likelihood of reoffending. However, analyses of some widely used tools, such as COMPAS, have reported racially disparate error rates. Explainable and ethical AI practices are necessary to ensure fairness and public accountability in such high-stakes domains.

🛡️ Building Explainable and Ethical AI Systems

Organizations that want to deploy responsible AI systems must adopt a holistic approach:

✅ Best Practices:

Choose interpretable models where possible.

Integrate SHAP/LIME explanations into user-facing platforms.

Conduct regular bias and fairness audits.

Create cross-disciplinary ethics committees including data scientists, legal experts, and domain specialists.

Provide transparency reports and communicate openly with users.

🚀 The Road Ahead: Toward Transparent, Trustworthy AI

As AI becomes more embedded in our daily lives, explainability and ethics will become non-negotiable. Users, regulators, and stakeholders will demand to know not just what an AI system predicts, but why, and whether it should be making that decision at all.

New frontiers like causal AI, counterfactual explanations, and federated learning promise even deeper levels of insight and privacy protection. But the core mission remains the same: to create AI systems that earn our trust.
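Counterfactual explanations answer a practical question: what is the smallest change that would have flipped the decision? The brute-force sketch below assumes a hypothetical two-feature approval rule (income in thousands of dollars, debt-to-income in whole percentage points) and, unless other per-step costs are supplied, treats $1k more income and one point less debt as equally costly. Both the rule and the cost model are illustrative assumptions.

```python
from itertools import product


def approved(income_k: int, debt_pct: int) -> bool:
    """Hypothetical approval rule (illustrative only); integers avoid float edge cases."""
    return income_k - 2 * debt_pct - 10 >= 0


def nearest_counterfactual(income_k, debt_pct, max_steps=50,
                           income_cost=1.0, debt_cost=1.0):
    """Grid-search the cheapest mix of 'raise income' and 'cut debt' that gets approval.

    Returns (cost, new_income_k, new_debt_pct), or None if nothing within max_steps works.
    """
    best = None
    for extra_income, debt_cut in product(range(max_steps + 1), repeat=2):
        new_debt = debt_pct - debt_cut
        if new_debt < 0:
            continue  # a debt ratio cannot go below zero
        new_income = income_k + extra_income
        if not approved(new_income, new_debt):
            continue
        cost = extra_income * income_cost + debt_cut * debt_cost
        if best is None or cost < best[0]:
            best = (cost, new_income, new_debt)
    return best


# A denied applicant: $40k income, 20% debt-to-income.
print(nearest_counterfactual(40, 20))  # -> (5.0, 40, 15)
# If cutting debt is three times as hard as raising income, the advice changes.
print(nearest_counterfactual(40, 20, debt_cost=3.0))  # -> (10.0, 50, 20)
```

Practical counterfactual methods also check that suggested changes are actionable and plausible; an exhaustive grid search like this one only scales to a handful of features.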

💬 Conclusion

AI has the power to transform industries—but only if we can understand and trust it. Explainable AI (XAI) bridges the gap between machine learning models and human comprehension, while Ethical AI ensures that models reflect our values and avoid harm.

Together, they lay the foundation for an AI-driven future that is accountable, transparent, and equitable.

Let’s not just build smarter machines—let’s build better, fairer ones too.

#ai#datascience#airesponsibility#biasinai#aiaccountability#modelinterpretability#humanintheloop#aigovernance#airegulation#xaimodels#aifairness#ethicaltechnology#transparentml#shapleyvalues#localexplanations#mlops#aiinhealthcare#aiinfinance#responsibletech#algorithmicbias#nschool academy#coimbatore

0 notes

Text

Every decision about AI design reflects the priorities of its creators.

#AIclarity#AIfacts#TechEducation#ResponsibleTech#DesignEthics#HumanCenteredAI#CulturalTech#CodeWithPurpose

1 note

Text

AI Ethics: How to Audit Your Algorithms for Hidden Bias

🤖 Your AI might be discriminating—and you don’t even know it. Let’s fix it before regulators do.

0 notes

Text

The Robots Are Coming... to Work for the Government?

0 notes

Text

Google’s SGE Enhances Conversational Search with AI-Powered Image Generation and Draft Writing Features

Google’s Search Generative Experience (SGE) is receiving a significant upgrade that adds new capabilities to the AI-powered search feature. Starting today, users can generate images from prompts in SGE, a feature similar to Bing’s support for OpenAI’s DALL-E 3. SGE users can also create drafts in conversational mode, with options to customize length, tone, and more.

Rapid AI Development

The rapid-fire updates to SGE over the past few months reflect the accelerated pace of AI technology development. Previous enhancements include AI-powered summaries, definitions of unfamiliar terms, coding improvements, travel and product search features, and more.

The new AI image generation feature allows users to input prompts specifying the desired image type, such as a drawing, photo, or painting. SGE will return four results directly in the conversational experience, and users can download these images as .png files or modify the prompt for a new set. The technology behind this feature is Google’s Imagen text-to-image model.

This image generation capability is also extended to Google Image search. Users can create new images using prompts when scrolling through image search results, providing an additional tool to find the perfect image.

Tackling Inappropriate Content

Given concerns about inappropriate AI-generated content, Google has implemented strict filtering policies and limited the new image generation feature to users aged 18 and older. Although SGE recently opened up to U.S. teens (ages 13-17), Google is mindful of responsible technology use and aims to prevent the creation of harmful, misleading, or explicit images, as well as to block content that violates its prohibited use policies for generative AI.

Acknowledging that AI tools may not be perfect, Google has made these features opt-in through Google Search Labs, with a feedback mechanism for users to report misuse or misfires. Generated images include metadata indicating that they are AI-generated, along with an invisible watermark powered by SynthID.

The other notable enhancement builds on SGE’s role as a writing assistant: users can now request drafts that vary in length or tone. Both new features support export: images can be saved to Google Drive, and drafts can be exported to Google Workspace apps like Gmail or Google Docs.

To Summarize

These features will roll out gradually, starting tomorrow for a percentage of SGE users and expanding to the wider user base over the coming weeks. The enhancements are available to users who have opted in to use SGE via Google Search Labs and are currently offered in English in the U.S., with potential expansions to other regions in the future.

#GoogleSGE#AIEnhancements#ConversationalSearch#ImageGeneration#DraftWriting#AIDevelopment#ResponsibleTech#GoogleSearchLabs

0 notes

Text

AI isn’t always neutral.

AI isn’t always neutral. Bias in algorithms can impact fairness, decisions, and trust. Learn what AI bias is, why it happens, and how it’s being addressed. #aimartz #aimartz.com #AIBias #EthicalAI #ResponsibleTech

0 notes

Photo

Did you know that AI advancements are sparking intense debates among industry leaders? Nvidia CEO Jensen Huang recently criticized Anthropic's Dario Amodei over his warning that AI could eliminate half of all entry-level white-collar jobs, calling such predictions "dangerous" and pushing for transparency. Huang emphasizes building AI openly, safely, and responsibly, warning against creating it in dark rooms. Meanwhile, Amodei advocates for ethical development and warns of economic risks. This clash highlights a central question for the future of AI: how to balance innovation with societal impact. Are we prepared for these technological shifts? Let’s discuss! Discover top custom computer builds at GroovyComputers.ca to stay ahead in this AI-driven world. 🚀 #AIcontroversy #TechLeadership #FutureOfJobs #ArtificialIntelligence #EthicalAI #Innovation #TechDebate #AIDevelopment #ResponsibleTech #CustomComputers #GamingPCs #HighPerformance #GroovyComputers

0 notes

Text

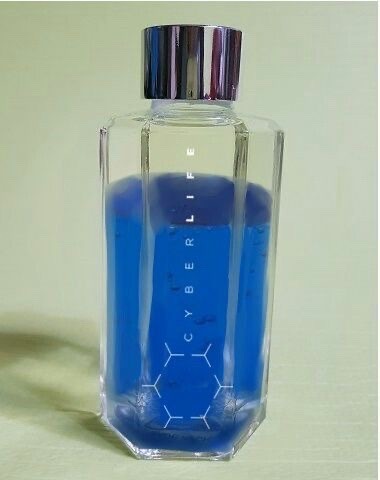

Lending therium out might seem like a simple transaction, but it’s not without its risks. When sharing this vital resource, it’s essential to ensure trust and security. Therium powers much more than machines—it fuels lives. Lending it responsibly means ensuring it’s used wisely and returned when needed. It’s not just about the transaction; it’s about maintaining balance and integrity. #LendWithCare #TheriumTrust #ResponsibleTech

0 notes

Text

Ethical Considerations in AI-Powered Digital Marketing: Balancing Automation with Privacy

In the age of AI-driven digital marketing, automation has revolutionized how businesses interact with customers, analyze data, and deliver personalized experiences. However, with great power comes great responsibility. As AI tools become more advanced, ethical considerations surrounding privacy and transparency have emerged as critical concerns.

One major challenge is maintaining customer trust while leveraging vast amounts of personal data. AI algorithms rely on data to provide insights, optimize campaigns, and predict consumer behavior. But where do we draw the line between personalized experiences and invasive surveillance? Businesses must prioritize transparency, clearly communicating how customer data is collected, stored, and used.

Another ethical dilemma involves algorithmic bias. AI systems learn from historical data, which may inadvertently reflect existing biases. Left unchecked, these biases can lead to unfair targeting or exclusion of specific groups, raising ethical and legal concerns. Regular audits and inclusive training datasets are essential for mitigating such risks.

Balancing automation with human oversight is also key. While AI can streamline processes, relying solely on automation risks losing the human touch in marketing strategies. Ethical decision-making requires a hybrid approach in which AI enhances human creativity rather than replacing it.

As digital marketers, we must embrace AI responsibly, ensuring it aligns with ethical standards and respects consumer privacy. By addressing these concerns head-on, we can foster trust, create meaningful connections, and build a sustainable digital future.

#DigitalMarketing #EthicalAI #AIinMarketing #PrivacyMatters #AutomationEthics #ResponsibleTech #DataTransparency #MarketingEthics #AIandPrivacy

#digital marketing#i love them smmmm#media marketing#seo company#smm#smm panel#smm2#smmagency#smmarketing#social marketing

0 notes

Text

AI is reshaping industries, enhancing efficiency, and pioneering breakthrough innovations. As we embrace the potential of this transformative technology, let's prioritize responsible development & ethical use. Together, we can build a bright future powered by #AI #Innovation #ResponsibleTech

0 notes

Text

#TelegramApp#CyberSecurity#OnlineSafety#PrivacyMatters#DigitalRisks#SafeInternet#DarkWeb#CyberAwareness#SocialMediaAbuse#TechEthics#StopOnlineHate#DigitalSafety#AIForGood#ResponsibleTech#TelegramMisuse

0 notes

Text

AI's mind soars, but ours are left in the dark! Can we understand how AI decides? Explore XAI, the key to transparency & trust. Build a future where AI empowers, not confuses. #ExplainableAI #DemystifyingAI #ResponsibleTech #FutureofAI #TrustTech Daniel Reitberg

#artificial intelligence#machine learning#deep learning#technology#robotics#autonomous vehicles#robots#collaborative robots#data center#sustainable energy#energy consumption#energy

0 notes

Text

There’s no better time for tech to be a force for good

In recent years we’ve seen technology become an integral part of our lives.

It’s hard to imagine not being able to search our most pressing questions on Google, do our shopping on Amazon or connect with the world through our smartphones. Our dependence on technology has created business giants that have also become hugely influential in society. Who knew, when Facebook was founded in a college dorm room all those years ago, that it would one day have a third of the world's population on its platform? Billions were made, unicorns were born and our lives were made simpler thanks to smart minds and the game-changing inventions of the tech industry.

From tech love to tech lash

Yet despite their success, we have seen the emergence of ‘tech lash.’ While many founders started their ventures with good intentions, over time the negative sides of technology emerged in ways they never could have imagined. The platforms and services we welcomed into our lives became addictive. People were misled about online privacy, data was misused, and social media fostered fake news and bullying. The media took notice: over 26% of technology stories in the U.S. during the past two years centred on the exposure of tech-related scandals.

But did this ‘tech lash’ change anything? Has it awakened the industry to the weaknesses in their business models and the services they offer? Was it just a moment or the start of a movement?

Aligning purpose with profit

At last year’s Web Summit, the world’s largest tech event, there were echoes of a shared vision and calls for a new era in responsible technology.

Many organisations already have strong purpose and mission statements, as well as robust corporate responsibility programmes - so what can they do differently?

For the tech community to become more responsible, they need to explore the true meaning of being purpose-led and to thread those very principles throughout their organisation. If they are to walk the talk on their calls for a new era in responsible technology, it won’t be achieved by having one department or campaign to deliver their promise.

It’s about mobilising a framework of values, behaviours and attitudes that are embedded in the very fabric of business strategy, models and company culture: guiding a way of thinking, informing decisions, focusing investments and shaping how companies engage with customers. It should focus efforts on designing, building and using technology in ways that place customers and societal value at the heart. This is the biggest area of opportunity.

#responsibletech#technology#purposeled#purposefulbusiness#forceforgood#csr#socialresponsibility#leadership

1 note
