#modelinterpretability


Explainable AI (XAI) and Ethical AI: Opening the Black Box of Machine Learning

Artificial Intelligence (AI) systems have transitioned from academic experiments to mainstream tools that influence critical decisions in healthcare, finance, criminal justice, and more. With this growth, a key challenge has emerged: understanding how and why AI models make the decisions they do.

This is where Explainable AI (XAI) and Ethical AI come into play.

Explainable AI is about transparency—making AI decisions understandable and justifiable. Ethical AI focuses on ensuring these decisions are fair, responsible, and align with societal values and legal standards. Together, they address the growing demand for AI systems that not only work well but also work ethically.

🔍 Why Explainability Matters in AI

Traditional machine learning models such as linear regression and shallow decision trees are relatively interpretable: their coefficients or split rules can be inspected directly. Modern AI, however, relies heavily on complex, black-box models such as deep neural networks, ensemble methods (e.g., gradient-boosted trees), and large transformer-based models.

These high-performing models often trade interpretability for accuracy. That trade-off may be acceptable in domains like advertising or product recommendations, but it becomes problematic when the models are used to determine:

Who gets approved for a loan,

Which patients receive urgent care,

Or how long a prison sentence should be.

Without a clear understanding of why a model makes a decision, stakeholders cannot fully trust or challenge its outcomes. This lack of transparency can lead to public mistrust, regulatory violations, and real harm to individuals.

🛠️ Popular Techniques for Explainable AI

Several methods and tools have emerged to bring transparency to AI systems. Among the most widely adopted are SHAP and LIME.

1. SHAP (SHapley Additive exPlanations)

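SHAP is based on Shapley values from cooperative game theory. It explains an individual prediction by assigning each feature a value that represents how much that feature pushed the prediction above or below a baseline (the model's average output). The values for all features add up to the difference between the prediction and that baseline.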
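For reference, the textbook Shapley value that SHAP builds on is written below, where F is the set of all features, f(x) is the model's output for the instance being explained, and v(S) is the expected model output when only the features in S are known. How v(S) is estimated (for example, by averaging over a background dataset) differs between SHAP implementations, so treat this as the general definition rather than any particular library's computation.

```latex
\phi_i = \sum_{S \subseteq F \setminus \{i\}}
  \frac{|S|!\,(|F| - |S| - 1)!}{|F|!}
  \bigl[ v(S \cup \{i\}) - v(S) \bigr],
\qquad
\sum_{i \in F} \phi_i = v(F) - v(\varnothing) = f(x) - \mathbb{E}[f(X)]
```

The second identity (local accuracy) is what makes the attributions additive: together they account exactly for the gap between this prediction and the average prediction.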

Key Advantages:

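Mathematically grounded: Shapley values are the unique attributions satisfying properties such as local accuracy, missingness, and consistency.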

Model-agnostic (e.g., KernelSHAP), with a fast, exact algorithm (TreeSHAP) for tree-based models.

Provides local (individual prediction) and global (overall model behavior) explanations.

Example:

In a loan approval model, SHAP could reveal that a customer’s low income and recent missed payments had the largest negative impact on the decision, while a long credit history had a positive effect.
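To make the loan example concrete, here is a minimal sketch that computes exact Shapley values by enumerating every coalition of features. Everything in it is hypothetical: `loan_score` is a made-up additive scoring rule, and the applicant and baseline values are invented. It also simplifies SHAP by comparing against a single baseline applicant instead of averaging over a background dataset, and it is not the `shap` library's implementation.

```python
from itertools import combinations
from math import factorial

FEATURES = ["income_k", "missed_payments", "history_years"]


def loan_score(income_k, missed_payments, history_years):
    # Hypothetical additive scoring rule (not a real credit model); higher = more likely approved.
    return 0.02 * income_k - 0.5 * missed_payments + 0.05 * history_years


def coalition_value(subset, x, baseline):
    # v(S): score with features in S taken from the applicant, all others from the baseline.
    mixed = {f: (x[f] if f in subset else baseline[f]) for f in FEATURES}
    return loan_score(**mixed)


def shapley_values(x, baseline):
    """Exact Shapley value of each feature, enumerating all coalitions of the other features."""
    n = len(FEATURES)
    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Weighted marginal contribution of adding feature f to coalition s.
                total += weight * (coalition_value(s | {f}, x, baseline)
                                   - coalition_value(s, x, baseline))
        phi[f] = total
    return phi


# Hypothetical applicant and reference ("average") applicant.
APPLICANT = {"income_k": 30, "missed_payments": 3, "history_years": 12}
BASELINE = {"income_k": 50, "missed_payments": 0, "history_years": 5}

if __name__ == "__main__":
    for feature, value in shapley_values(APPLICANT, BASELINE).items():
        print(f"{feature}: {value:+.2f}")
```

Running it gives negative attributions for income and missed payments and a positive one for credit history, mirroring the example above, and the three values sum to the score gap between applicant and baseline. Because the enumeration visits 2^(n-1) coalitions per feature, exact computation only scales to a handful of features, which is why practical SHAP tools rely on approximations or model-specific algorithms.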

2. LIME (Local Interpretable Model-agnostic Explanations)

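LIME explains a single prediction by approximating the complex model locally: it perturbs the input around that instance, queries the model on the perturbed samples, and fits a simple interpretable model (typically a sparse linear model) weighted by each sample's proximity to the original. The surrogate's weights indicate which features influenced the outcome most in that neighborhood.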

Benefits:

Works with any model type (black-box or not).

Has variants for text, image, and tabular data.

Fast and relatively easy to implement.

Example:

For an AI that classifies news articles, LIME might highlight certain keywords that influenced the model to label an article as “fake news.”
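The LIME procedure can be sketched in plain Python for tabular inputs. This is a simplified illustration under stated assumptions, not the `lime` package: `black_box` is a hypothetical model, perturbations are Gaussian noise around the instance, proximity weights use an exponential kernel, and the surrogate is an ordinary weighted linear regression (no feature selection or sparsity).

```python
import math
import random


def black_box(x):
    # Hypothetical nonlinear model standing in for an opaque one.
    return x[0] ** 2 + 3.0 * x[1]


def solve(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def lime_explain(model, x, num_samples=2000, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x; return one weight per feature."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the normal equations (X^T W X) w = X^T W y, with an intercept column.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]  # perturbed sample near x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        row = [1.0] + [zi - xi for zi, xi in zip(z, x)]  # centered on x
        y = model(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coef = solve(xtwx, xtwy)
    return coef[1:]  # drop the intercept


if __name__ == "__main__":
    print(lime_explain(black_box, [1.0, 2.0]))
```

Near x = (1, 2) the fitted local weights should land close to 2 and 3, the model's local slopes, even though the model itself is nonlinear. That is the kind of local, linear account LIME gives of a black box.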

⚖️ Ethical AI: The Other Half of the Equation

While explainability helps users understand model behavior, Ethical AI ensures that behavior is aligned with human rights, fairness, and societal norms.

AI systems can unintentionally replicate or even amplify historical biases found in training data. For example:

A recruitment AI trained on resumes of past hires might discriminate against women if the training data was male-dominated.

A predictive policing algorithm could target marginalized communities more often due to biased historical crime data.
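A common first check for the recruitment example is to compare selection rates across groups. The sketch below computes each group's selection rate and the ratio of the lowest rate to the highest; in US employment settings, a ratio below 0.8 (the "four-fifths rule") is often treated as a sign of possible adverse impact. The outcome data is made up for illustration, and a low ratio is a signal to investigate rather than proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs -> selection rate per group."""
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        chosen[group] += int(was_selected)
    return {g: chosen[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 if nobody was selected)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up screening outcomes: 40 of 100 men and 20 of 100 women advanced.
decisions = [("men", i < 40) for i in range(100)] + [("women", i < 20) for i in range(100)]
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2), "flag for review" if ratio < 0.8 else "within threshold")
```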

Principles of Ethical AI:

Fairness – Avoid discrimination and ensure equitable outcomes across groups.

Accountability – Assign responsibility for decisions and outcomes.

Transparency – Clearly communicate how and why decisions are made.

Privacy – Protect personal data and respect consent.

Human Oversight – Ensure humans remain in control of important decisions.

🧭 Governance Frameworks and Regulations

As AI adoption grows, governments and institutions have started creating legal frameworks to ensure AI is used ethically and responsibly.

Major Guidelines:

European Union’s AI Act – A regulation, adopted in 2024, that imposes transparency, documentation, and human-oversight requirements on high-risk AI systems.

OECD Principles on AI – Promoting AI that is innovative and trustworthy.

NIST AI Risk Management Framework (USA) – A voluntary framework for managing AI risks, emphasizing characteristics such as transparency, fairness, and reliability.

Organizational Practices:

Model Cards – Documentation outlining model performance, limitations, and intended uses.

Datasheets for Datasets – Describing dataset creation, collection processes, and potential biases.

Bias Audits – Regular evaluations to detect and mitigate algorithmic bias.
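A model card is structured documentation, so it can be kept alongside the code as data. The dataclass below is a hypothetical, pared-down layout (published model-card templates have more sections, such as training data and ethical considerations); the model name, uses, limitations, and metric values are placeholders, not real results.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """A minimal, hypothetical subset of model-card fields."""
    name: str
    intended_uses: list[str]
    out_of_scope_uses: list[str]
    # Metrics reported separately per group, e.g. {"women": {"recall": 0.71}}.
    metrics_by_group: dict[str, dict[str, float]]
    limitations: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        """Render the card as a short Markdown document."""
        lines = [f"# Model card: {self.name}", "", "## Intended uses"]
        lines += [f"- {u}" for u in self.intended_uses]
        lines += ["", "## Out-of-scope uses"]
        lines += [f"- {u}" for u in self.out_of_scope_uses]
        lines += ["", "## Performance by group"]
        for group, metrics in self.metrics_by_group.items():
            shown = ", ".join(f"{k}={v:.2f}" for k, v in metrics.items())
            lines.append(f"- {group}: {shown}")
        lines += ["", "## Limitations"]
        lines += [f"- {item}" for item in self.limitations]
        return "\n".join(lines)


# Placeholder content for illustration only.
card = ModelCard(
    name="resume-screener-v0 (hypothetical)",
    intended_uses=["Rank applications for human review"],
    out_of_scope_uses=["Automatic rejection without human review"],
    metrics_by_group={"men": {"recall": 0.78}, "women": {"recall": 0.71}},
    limitations=["Trained mostly on resumes of past hires, who were mostly men"],
)

if __name__ == "__main__":
    print(card.to_markdown())
```

Reporting metrics per group, rather than one aggregate score, is what lets a later bias audit spot performance gaps.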

🧪 Real-World Applications of XAI and Ethical AI

1. Healthcare

Hospitals use machine learning to predict patient deterioration. But if clinicians don’t understand the reasoning behind alerts, they may ignore them. With SHAP, a hospital might show that low oxygen levels and sudden temperature spikes are key drivers behind an alert, boosting clinician trust.

2. Finance

Banks use AI to assess creditworthiness. LIME can help explain to customers why they were denied a loan by highlighting the specific credit behaviors that drove the decision. This enables corrective action and helps lenders comply where regulations require them to state the reasons for a denial.

3. Criminal Justice

Risk assessment tools predict the likelihood of reoffending. However, some of these tools have been found to be racially biased, for example, by producing higher false positive rates for Black defendants than for white defendants. Explainable and ethical AI practices are necessary to ensure fairness and public accountability in such high-stakes domains.
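One way such disparities are measured is by comparing false positive rates across groups: among people who did not reoffend, what share did the tool label high risk? The sketch below assumes records of `(group, predicted_high_risk, reoffended)`; the groups and counts are invented purely for illustration.

```python
from collections import Counter


def false_positive_rates(records):
    """records: iterable of (group, predicted_high_risk, reoffended) -> FPR per group."""
    false_pos = Counter()
    negatives = Counter()
    for group, predicted, actual in records:
        if not actual:  # only people who did NOT reoffend can be false positives
            negatives[group] += 1
            false_pos[group] += int(predicted)
    return {g: false_pos[g] / negatives[g] for g in negatives}


# Invented data: 100 non-reoffenders and 50 reoffenders per group.
records = (
    [("A", i < 40, False) for i in range(100)]   # 40 of 100 wrongly flagged
    + [("A", True, True) for _ in range(50)]
    + [("B", i < 20, False) for i in range(100)]  # 20 of 100 wrongly flagged
    + [("B", True, True) for _ in range(50)]
)

if __name__ == "__main__":
    print(false_positive_rates(records))
```

Here group A's false positive rate is twice group B's, so people in group A who would not reoffend are flagged far more often. Different fairness metrics (error-rate parity versus calibration, for example) can conflict with one another, so audits usually report several.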

🛡️ Building Explainable and Ethical AI Systems

Organizations that want to deploy responsible AI systems must adopt a holistic approach:

✅ Best Practices:

Choose interpretable models where possible.

Integrate SHAP/LIME explanations into user-facing platforms.

Conduct regular bias and fairness audits.

Create cross-disciplinary ethics committees including data scientists, legal experts, and domain specialists.

Provide transparency reports and communicate openly with users.

🚀 The Road Ahead: Toward Transparent, Trustworthy AI

As AI becomes more embedded in our daily lives, explainability and ethics will become non-negotiable. Users, regulators, and stakeholders will demand to know not just what an AI predicts, but why and whether it should.

Emerging approaches such as causal AI and counterfactual explanations promise deeper insight into model behavior, while federated learning, which trains models without centralizing raw data, strengthens privacy protection. But the core mission remains the same: to create AI systems that earn our trust.
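Counterfactual explanations answer the question "what is the smallest change that would have changed the decision?" The sketch below searches one feature at a time for a hypothetical loan rule. The scoring weights, the `THRESHOLD` value, and the non-negativity check are all assumptions for illustration; real counterfactual methods typically optimize over many features at once with richer plausibility constraints.

```python
THRESHOLD = 0.45  # hypothetical approval cut-off


def approve(applicant):
    # Hypothetical linear scoring rule; not a real credit model.
    score = (0.02 * applicant["income_k"]
             - 0.5 * applicant["missed_payments"]
             + 0.05 * applicant["history_years"])
    return score >= THRESHOLD


def counterfactual(applicant, feature, step, max_steps=1000):
    """Change one feature in increments of `step` until a denial flips to approval.

    Returns the first (smallest-change) modified applicant, or None if none is found.
    """
    candidate = dict(applicant)
    for k in range(1, max_steps + 1):
        candidate[feature] = applicant[feature] + k * step
        if candidate[feature] < 0:  # simple plausibility constraint: no negative values
            return None
        if approve(candidate):
            return candidate
    return None


applicant = {"income_k": 30, "missed_payments": 1, "history_years": 4}

if __name__ == "__main__":
    print(approve(applicant))
    print(counterfactual(applicant, "income_k", 1))
    print(counterfactual(applicant, "missed_payments", -1))
```

For this sample applicant, raising income from 30k to 38k, or cutting missed payments from 1 to 0, would each flip the denial to an approval, which gives the customer an explanation they can act on.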

💬 Conclusion

AI has the power to transform industries—but only if we can understand and trust it. Explainable AI (XAI) bridges the gap between machine learning models and human comprehension, while Ethical AI ensures that models reflect our values and avoid harm.

Together, they lay the foundation for an AI-driven future that is accountable, transparent, and equitable.

Let’s not just build smarter machines—let’s build better, fairer ones too.

#ai #datascience #airesponsibility #biasinai #aiaccountability #modelinterpretability #humanintheloop #aigovernance #airegulation #xaimodels #aifairness #ethicaltechnology #transparentml #shapleyvalues #localexplanations #mlops #aiinhealthcare #aiinfinance #responsibletech #algorithmicbias #nschool academy #coimbatore


#MachineLearning #AutoML #DataScience #PredictiveAnalytics #ModelInterpretability #BigData #DigitalTransformation #AI


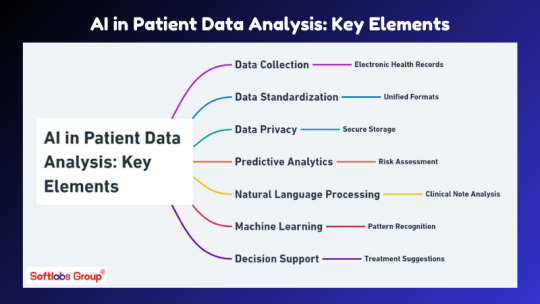

Delve into the world of AI-powered patient data analysis! Learn about the essential elements driving this transformative technology, including predictive analytics, natural language processing, and deep learning algorithms. Stay informed with Softlabs Group for the latest AI advancements and insights.


#Eli5Python #PythonExplainItLikeIm5 #MachineLearning #DataScience #AI #ExplainableAI #InterpretableML #MLModel #Python #Programming #Code #DataAnalysis #DataVisualization #DeepLearning #ML #AIExplainability #ModelInterpretation #Transparency #TrustworthyAI


Session 12 : What is Regression Models for Predictions | Core Concept | Overview in Machine Learning

In Session 12 of our Machine Learning series, we delve into the fundamental concept of Regression Models for Predictions. Join us for a comprehensive overview as we demystify the core concepts behind regression in machine learning. Whether you're a beginner or looking to deepen your understanding, this session covers the essential principles of regression analysis.

Subscribe to "Learn And Grow Community"
YouTube: https://www.youtube.com/@LearnAndGrowCommunity
LinkedIn Group: https://linkedin.com/company/LearnAndGrowCommunity

Follow #learnandgrowcommunity

#MachineLearning #RegressionModels #PredictiveAnalytics #DataScience #CoreConcepts #DataAnalysis #AI #MLTutorial #FeatureEngineering #DataPredictions #ModelInterpretation #LearnAI #DataInsights #TechEducation #AlgorithmExplained #Session12 #TechTalks #DataDrivenDecisions #DataMining #PythonML #ArtificialIntelligence #TechLearning #AnalyticsInsights #RegressionAnalysis #ModelBuilding #SkillDevelopment #AIExplained #DataDrivenSolutions #learnandgrowcommunity #Youtube
