#SQL Failover Cluster


Choosing Between Failover Cluster Instances and Availability Groups in SQL Server

In SQL Server, high availability and disaster recovery are crucial aspects of database management. Two popular options for achieving these goals are Failover Cluster Instances (FCIs) and Availability Groups (AGs). While both technologies aim to minimize downtime and ensure data integrity, they have distinct use cases and benefits. In this article, we’ll explore scenarios where Failover Cluster…


#automatic failover#Availability Groups#disaster recovery#Failover Cluster Instances#SQL Server high availability


Harness the Power of SQL Server Standard 2017 for Data Success

Transform Your Data Strategy with SQL Server Standard 2017

In today's data-driven world, organizations are constantly seeking robust solutions to unlock valuable insights from their vast data repositories. SQL Server Standard 2017 emerges as a powerhouse that combines performance, security, and scalability to meet these demands. By leveraging its advanced features, businesses can turn raw data into actionable intelligence, driving growth and innovation.

SQL Server Standard 2017 offers an impressive array of tools designed to streamline data management and enhance analytical capabilities. Its in-memory technology ensures faster query processing, enabling real-time insights that are critical for timely decision-making. With built-in machine learning capabilities, users can develop predictive models directly within the database environment, simplifying workflows and reducing dependency on external analytics tools.

One of the standout features of SQL Server 2017 is its support for Linux and Docker containers, providing flexibility and cost-efficiency for diverse IT environments. This cross-platform compatibility allows organizations to deploy their database solutions on preferred operating systems without compromising performance. Additionally, the integration of Python and R languages empowers data scientists to build complex analytics and models seamlessly within the database ecosystem.

Security is paramount in data management, and SQL Server 2017 addresses this with encryption and auditing features. Transparent data encryption (TDE) is part of the product, although in the 2017 release it is limited to Enterprise edition. These measures help keep sensitive information protected against unauthorized access, helping organizations comply with industry regulations and maintain customer trust.

Performance and scalability are crucial for growing enterprises. SQL Server Standard 2017 supports high availability configurations, including Always On Failover Cluster Instances, to minimize downtime and maintain continuous operations. Its ability to handle data warehousing workloads makes it a practical choice for enterprises seeking to optimize their data infrastructure.

Furthermore, SQL Server's integration with Power BI allows for dynamic and interactive dashboards, transforming complex data sets into visually compelling reports. This synergy enables stakeholders at all levels to make informed decisions quickly and confidently.

To explore how SQL Server Standard 2017 can revolutionize your data management and analytics, visit this detailed guide: Unlock Data Insights: The Proven Performance of SQL Server Standard 2017. Harnessing its capabilities can unlock unprecedented value from your data assets.

In conclusion, SQL Server Standard 2017 stands out as a reliable, versatile, and high-performing database solution. Its comprehensive features empower organizations to unlock the full potential of their data, turning insights into strategic advantages. Embracing this technology today can pave the way for a smarter, more informed tomorrow.

#SQL Server 2017#Data Analytics#Business Intelligence#Data Management#Data Warehousing#Database Performance#Microsoft SQL


Building a multi-zone and multi-region SQL Server Failover Cluster Instance in Azure

Much has been written about SQL Server Always On Availability Groups, but the topic of SQL Server Failover Cluster Instances (FCI) that span both availability zones and regions is far less discussed. However, for organizations that require SQL Server high availability (HA) and disaster recovery (DR) without the added licensing costs of Enterprise Edition, SQL Server FCI remains a powerful and…


A new SAP BASIS consultant faces several challenges when starting in the role. Here are the most common ones:

1. Complex Learning Curve

SAP BASIS covers a broad range of topics, including system administration, database management, performance tuning, and security.

Understanding how different SAP components (ERP, S/4HANA, BW, Solution Manager) interact can be overwhelming.

2. System Installations & Migrations

Setting up and configuring an SAP landscape requires deep knowledge of operating systems (Windows, Linux) and databases (HANA, Oracle, SQL Server).

Migration projects, such as moving from on-premises systems to SAP BTP or HANA, involve risks like downtime and data loss.

3. Performance Tuning & Troubleshooting

Identifying bottlenecks in SAP system performance can be challenging due to the complexity of memory management, work processes, and database indexing.

Log analysis and troubleshooting unexpected errors demand experience and knowledge of SAP Notes.

4. Security & User Management

Setting up user roles and authorizations correctly in SAP is critical to avoid security breaches.

Managing Single Sign-On (SSO) and integration with external authentication tools can be tricky.

5. Handling System Upgrades & Patching

Applying support packs, kernel upgrades, and enhancement packages requires careful planning to avoid system downtime or conflicts.

Ensuring compatibility with custom developments (Z programs) and third-party integrations is essential.

6. High Availability & Disaster Recovery

Understanding failover mechanisms, system clustering, and backup/restore procedures is crucial for minimizing downtime.

Ensuring business continuity in case of server crashes or database failures requires strong disaster recovery planning.

7. Communication & Coordination

Working with functional consultants, developers, and business users to resolve issues can be challenging if there’s a lack of clear communication.

Managing stakeholder expectations during system outages or performance issues is critical.

8. Monitoring & Proactive Maintenance

New BASIS consultants may struggle with configuring SAP Solution Manager for system monitoring and proactive alerts.

Setting up background jobs, spool management, and RFC connections efficiently takes practice.

9. Managing Transport Requests

Transporting changes across SAP environments (DEV → QA → PROD) without errors requires an understanding of transport logs and dependencies.

Incorrect transport sequences can cause system inconsistencies.

10. Staying Updated with SAP Evolution

SAP is rapidly evolving, especially with the shift to SAP S/4HANA and cloud solutions.

Continuous learning is required to stay up-to-date with new technologies like SAP BTP, Cloud ALM, and AI-driven automation.

Mail us on [email protected]

Website: Anubhav Online Trainings | UI5, Fiori, S/4HANA Trainings


Having spent time as both developer and DBA, I’ve been able to identify a few bits of advice for developers who are working closely with SQL Server. Applying these suggestions can help in several aspects of your work, from writing more manageable source code to strengthening cross-functional relationships. Note that this isn’t a countdown; all of these are equally useful. Apply them as they make sense to your development efforts.

1. Review and Understand Connection Options

In most cases, we connect to SQL Server using a “connection string.” The connection string tells the OLEDB framework where the server is, the database we intend to use, and how we intend to authenticate.

Example connection string: Server=;Database=;User Id=;Password=;

The common connection string options are all that is needed to work with the database server, but there are several additional options that you may need later on. Designing a way to include them easily without having to recode, rebuild, and redeploy could land you on the “nice list” for your DBAs. Here are some of those options:

ApplicationIntent: Used when you want to connect to an Always On Availability Group replica that is available in read-only mode for reporting and analytic purposes.

MultiSubnetFailover: Used when Always On Availability Groups or Failover Clusters are defined across different subnets. You’ll generally use a listener as your server address and set this to “true.” In the event of a failover, this triggers more efficient and aggressive attempts to connect to the failover partner, greatly reducing the downtime associated with failover.

Encrypt: Specifies that database communication is to be encrypted. This type of protection is very important in many applications. It can be used along with another connection string option to help in test and development environments.

TrustServerCertificate: When set to true, this allows certificate mismatches. Don’t use it in production, as it leaves you more vulnerable to attack. Use this resource from Microsoft to understand more about encrypting SQL Server connections.

2. When Using an ORM, Look at the T-SQL Emitted

There are lots of great options for ORM frameworks these days:

Microsoft Entity Framework

NHibernate

AutoMapper

Dapper (my current favorite)

I’ve only listed a few, but they all have something in common. Among many other things, they abstract away a lot of in-line writing of T-SQL commands, as well as many of the often onerous tasks associated with ensuring the optimal path of execution for those commands. Abstracting these things away can be a great timesaver. It can also remove unintended syntax errors that often result from in-lining non-native code.

At the same time, it can create a new problem that has plagued DBAs since the first ORMs came into style. ORMs tend to generate commands procedurally, and those commands are sometimes inefficient for the specific task at hand. They can also be difficult to format and read on the database end and tend to be overly complex, which leads them to perform poorly under load and as systems grow over time. For these reasons, it is a great idea to learn how to review the T-SQL that ORMs generate, along with some techniques that will help shape it into something that performs better when tuning is needed.

3. Always be Prepared to “Undeploy” (aka Rollback)

There aren’t many times I recall as terrible from when I served as a DBA. In fact, only one stands out as particularly difficult. I needed to be present for the deployment of an application update. This update contained quite a few database changes. There were changes to data, security, and schema. The deployment was going fine until changes to data had to be applied. Something had gone wrong, and the scripts were running into constraint issues. We tried to work through it, but in the end, a call was made to postpone and roll back the deployment. That is when the nightmare started.

The developers involved were so confident in their work that they never provided a clean rollback procedure. Luckily, we had a copy-only full backup from just before we started (always take a backup!). Even in the current age of DevOps and DataOps, it is important to consider the full scope of deployments. If you’ve created scripts to deploy, then you should also provide a way to reverse the deployment. It will strengthen DBA/Developer relations simply by having it, even if you never have to use it.

Summary

These 3 tips may not be the most common, but they come directly from experiences I’ve had myself. I imagine some of you have had similar situations. I hope this will be a reminder to provide more connection string options in your applications, learn more about what is going on inside your ORM frameworks, and put in a little extra effort to provide rollback options for deployments.

Jason Hall has worked in technology for over 20 years. He joined SentryOne in 2006 having held positions in network administration, database administration, and software engineering. During his tenure at SentryOne, Jason has served as a senior software developer and founded both Client Services and Product Management. His diverse background with relevant technologies made him the perfect choice to build out both of these functions. As SentryOne experienced explosive growth, Jason returned to lead SentryOne Client Services, where he ensures that SentryOne customers receive the best possible end-to-end experience in the ever-changing world of database performance and productivity.
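As a rough illustration of the first tip, the sketch below builds a connection string from required settings plus optional ones read from configuration, so that options such as ApplicationIntent or MultiSubnetFailover can be switched on without a code change. The helper name, the server address, the database name, and the credentials are all hypothetical; only the four option keywords come from the post.

```python
# Hypothetical helper: the function name and all sample values are assumptions.
# Only the four optional keywords below are taken from the post.
OPTIONAL_KEYS = (
    "ApplicationIntent",
    "MultiSubnetFailover",
    "Encrypt",
    "TrustServerCertificate",
)


def build_connection_string(required, optional=None):
    """Join required settings and any configured optional settings as key=value; pairs."""
    parts = dict(required)
    for key, value in (optional or {}).items():
        if key not in OPTIONAL_KEYS:
            raise ValueError(f"unsupported option: {key}")
        parts[key] = value
    return "".join(f"{key}={value};" for key, value in parts.items())


# Placeholder values; in a real application these would come from configuration.
base = {
    "Server": "sql-listener.example.local",
    "Database": "Sales",
    "User Id": "app_user",
    "Password": "change-me",
}

# A reporting workload pointed at a read-only replica through a listener.
reporting = build_connection_string(
    base,
    {"ApplicationIntent": "ReadOnly", "MultiSubnetFailover": "True", "Encrypt": "True"},
)
print(reporting)
```

Keeping the optional settings in a separate mapping means a DBA can ask for a new option in a configuration file rather than waiting for a rebuild and redeploy.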


Mastering Database Administration: Your Path to Expert DB Management

In the age of data-driven businesses, managing and securing databases has never been more crucial. A database administrator (DBA) is responsible for ensuring that databases are well-structured, secure, and perform optimally. Whether you're dealing with a small-scale application or a large enterprise system, the role of a database administrator is key to maintaining data integrity, availability, and security.

If you're looking to build a career in database administration or enhance your existing skills, Jazinfotech’s Database Administration course offers comprehensive training that equips you with the knowledge and hands-on experience to manage databases efficiently and effectively.

In this blog, we’ll explore what database administration entails, why it's an essential skill in today's tech industry, and how Jazinfotech can help you become an expert in managing and maintaining databases for various platforms.

1. What is Database Administration (DBA)?

Database Administration refers to the practice of managing, configuring, securing, and maintaining databases to ensure their optimal performance. Database administrators are responsible for the overall health of the database environment, including aspects such as:

Data Security: Ensuring data is protected from unauthorized access and data breaches.

Database Performance: Monitoring and optimizing the performance of database systems to ensure fast and efficient data retrieval.

Backup and Recovery: Implementing robust backup strategies and ensuring databases can be restored in case of failures.

High Availability: Ensuring that databases are always available and accessible, even in the event of system failures.

Data Integrity: Ensuring that data remains consistent, accurate, and reliable across all operations.

Database administrators work with various types of databases (SQL, NoSQL, cloud databases, etc.), and they often specialize in specific database management systems (DBMS) such as MySQL, PostgreSQL, Oracle, Microsoft SQL Server, and MongoDB.

2. Why is Database Administration Important?

Database administration is a critical aspect of managing the infrastructure of modern organizations. Here are some reasons why database administration is vital:

a. Ensures Data Security and Compliance

In today’s world, where data breaches and cyber threats are prevalent, ensuring that your databases are secure is essential. A skilled DBA implements robust security measures such as encryption, access control, and monitoring to safeguard sensitive information. Moreover, DBAs are responsible for ensuring that databases comply with various industry regulations and data privacy laws.

b. Optimizes Performance and Scalability

As organizations grow, so does the volume of data. A good DBA ensures that databases are scalable, can handle large data loads, and perform efficiently even during peak usage. Performance optimization techniques like indexing, query optimization, and database tuning are essential to maintaining smooth database operations.

c. Prevents Data Loss

Data is often the most valuable asset for businesses. DBAs implement comprehensive backup and disaster recovery strategies to prevent data loss due to system crashes, human error, or cyber-attacks. Regular backups and recovery drills ensure that data can be restored quickly and accurately.

d. Ensures High Availability

Downtime can have significant business impacts, including loss of revenue, user dissatisfaction, and brand damage. DBAs design high-availability solutions such as replication, clustering, and failover mechanisms to ensure that the database is always accessible, even during maintenance or in case of failures.

e. Supports Database Innovation

With the evolution of cloud platforms, machine learning, and big data technologies, DBAs are also involved in helping organizations adopt new database technologies. They assist with migration to the cloud, implement data warehousing solutions, and work on database automation to support agile development practices.

3. Jazinfotech’s Database Administration Course: What You’ll Learn

At Jazinfotech, our Database Administration (DBA) course is designed to give you a thorough understanding of the core concepts and techniques needed to become an expert in database management. Our course covers various DBMS technologies, including SQL and NoSQL databases, and teaches you the necessary skills to manage databases effectively and efficiently.

Here’s a breakdown of the core topics you’ll cover in Jazinfotech’s DBA course:

a. Introduction to Database Management Systems

Understanding the role of DBMS in modern IT environments.

Types of databases: Relational, NoSQL, NewSQL, etc.

Key database concepts like tables, schemas, queries, and relationships.

Overview of popular DBMS technologies: MySQL, Oracle, SQL Server, PostgreSQL, MongoDB, and more.

b. SQL and Query Optimization

Mastering SQL queries to interact with relational databases.

Writing complex SQL queries: Joins, subqueries, aggregations, etc.

Optimizing SQL queries for performance: Indexing, query execution plans, and normalization.

Data integrity and constraints: Primary keys, foreign keys, and unique constraints.
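To make the constraint types in this topic concrete, here is a minimal sketch using Python's built-in sqlite3 module as a stand-in for a server DBMS. The table and column names are invented for illustration, and the exact error text differs between database engines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is enabled.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,          -- primary key
    email TEXT NOT NULL UNIQUE          -- unique constraint
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id)  -- foreign key
);
""")
conn.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")


def try_insert(sql):
    """Run one statement; return the constraint error message, or None on success."""
    try:
        conn.execute(sql)
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)


# Same email twice violates the unique constraint.
duplicate_email = try_insert("INSERT INTO customers (id, email) VALUES (2, 'a@example.com')")
# An order for a customer that does not exist violates the foreign key.
orphan_order = try_insert("INSERT INTO orders (id, customer_id) VALUES (1, 99)")
# An order for an existing customer is accepted.
valid_order = try_insert("INSERT INTO orders (id, customer_id) VALUES (2, 1)")

print(duplicate_email, orphan_order, valid_order, sep="\n")
```

The database rejects the bad rows itself, so integrity does not depend on every application remembering to check first.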

c. Database Security and User Management

Implementing user authentication and access control.

Configuring database roles and permissions to ensure secure access.

Encryption techniques for securing sensitive data.

Auditing database activity and monitoring for unauthorized access.

d. Backup, Recovery, and Disaster Recovery

Designing a robust backup strategy (full, incremental, differential backups).

Automating backup processes to ensure regular and secure backups.

Recovering data from backups in the event of system failure or data corruption.

Implementing disaster recovery plans for business continuity.
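One way to see how full, differential, and incremental backups fit together is to work out which files a restore would need. The sketch below assumes a differential holds all changes since the last full backup and an incremental holds changes since the most recent backup of any kind; products define these terms differently, so treat the rules, the class, and the timestamps as illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backup:
    kind: str      # "full", "differential", or "incremental"
    taken_at: int  # hypothetical timestamp, e.g. hours since the start of the week


def restore_chain(backups, target_time):
    """Return the backups to restore, in order, to reach target_time."""
    usable = sorted(
        (b for b in backups if b.taken_at <= target_time),
        key=lambda b: b.taken_at,
    )
    fulls = [b for b in usable if b.kind == "full"]
    if not fulls:
        raise ValueError("no full backup available before the target time")
    base = fulls[-1]                     # start from the latest full backup
    chain = [base]
    later = [b for b in usable if b.taken_at > base.taken_at]
    diffs = [b for b in later if b.kind == "differential"]
    if diffs:
        chain.append(diffs[-1])          # only the newest differential is needed
    last = chain[-1].taken_at
    # Every incremental taken after the last full/differential must be applied.
    chain.extend(b for b in later if b.kind == "incremental" and b.taken_at > last)
    return chain


backups = [
    Backup("full", 0),
    Backup("incremental", 24),
    Backup("differential", 48),
    Backup("incremental", 72),
    Backup("incremental", 96),
    Backup("full", 168),
]
for step in restore_chain(backups, target_time=100):
    print(step.kind, step.taken_at)
```

Running the same function during recovery drills is a cheap way to confirm that every file in the chain actually exists before it is needed.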

e. Database Performance Tuning

Monitoring and analyzing database performance.

Identifying performance bottlenecks and implementing solutions.

Optimizing queries, indexing, and database configuration.

Using tools like EXPLAIN (for query analysis) and performance_schema to improve DB performance.
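The effect of an index on a query plan can be tried out locally. The sketch below uses SQLite's EXPLAIN QUERY PLAN through Python's sqlite3 module as a stand-in for the EXPLAIN facilities of server databases; the table, the index name, and the data are made up, and the plan wording varies by SQLite version.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"


def plan(sql, params):
    """Return the human-readable plan steps for a query (last column of each row)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


before = plan(QUERY, (42,))   # no index yet: expect a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY, (42,))    # with the index: expect an index search

print("before:", before)
print("after: ", after)
```

Comparing the plan before and after a change is the basic loop of query tuning: the first plan reads every row, while the second goes through the new index.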

f. High Availability and Replication

Setting up database replication (master-slave, master-master) to ensure data availability.

Designing high-availability database clusters to prevent downtime.

Load balancing to distribute database requests and reduce the load on individual servers.

Failover mechanisms to automatically switch to backup systems in case of a failure.

g. Cloud Database Administration

Introduction to cloud-based database management systems (DBaaS) like AWS RDS, Azure SQL, and Google Cloud SQL.

Migrating on-premises databases to the cloud.

Managing database instances in the cloud, including scaling and cost management.

Cloud-native database architecture for high scalability and resilience.

h. NoSQL Database Administration

Introduction to NoSQL databases (MongoDB, Cassandra, Redis, etc.).

Managing and scaling NoSQL databases.

Differences between relational and NoSQL data models.

Querying and optimizing performance for NoSQL databases.

i. Database Automation and Scripting

Automating routine database maintenance tasks using scripts.

Scheduling automated backups, cleanup jobs, and index maintenance.

Using Bash, PowerShell, or other scripting languages for database automation.

4. Why Choose Jazinfotech for Your Database Administration Course?

At Jazinfotech, we provide high-quality, practical training in database administration. Our comprehensive DBA course covers all aspects of database management, from installation and configuration to performance tuning and troubleshooting.

Here’s why you should choose Jazinfotech for your DBA training:

a. Experienced Trainers

Our instructors are seasoned database professionals with years of hands-on experience in managing and optimizing databases for enterprises. They bring real-world knowledge and industry insights to the classroom, ensuring that you learn not just theory, but practical skills.

b. Hands-On Training

Our course offers plenty of hands-on labs and practical exercises, allowing you to apply the concepts learned in real-life scenarios. You will work on projects that simulate actual DBA tasks, including performance tuning, backup and recovery, and database security.

c. Industry-Standard Tools and Technologies

We teach you how to work with the latest database tools and technologies, including both relational and NoSQL databases. Whether you're working with Oracle, SQL Server, MySQL, MongoDB, or cloud-based databases like AWS RDS, you'll gain the skills needed to manage any database environment.

d. Flexible Learning Options

We offer both online and in-person training options, making it easier for you to learn at your own pace and according to your schedule. Whether you prefer classroom-based learning or virtual classes, we have the right solution for you.

e. Career Support and Placement Assistance

At Jazinfotech, we understand the importance of securing a job after completing the course. That’s why we offer career support and placement assistance to help you find your next role as a Database Administrator. We provide resume-building tips, mock interviews, and help you connect with potential employers.

5. Conclusion

Database administration is a critical skill that ensures your organization’s data is secure, accessible, and performant. With the right training and experience, you can become a highly skilled database administrator and take your career to new heights.

Jazinfotech’s Database Administration course provides the comprehensive knowledge, hands-on experience, and industry insights needed to excel in the field of database management. Whether you’re a beginner looking to start your career in database administration or an experienced professional aiming to deepen your skills, our course will help you become a proficient DBA capable of managing complex database environments.

Ready to kickstart your career as a Database Administrator? Enroll in Jazinfotech’s DBA course today and gain the expertise to manage and optimize databases for businesses of all sizes!


Things You Need to Know on Why Your Business Needs SQL Server DBA Services NOW

With data-driven solutions now central to most businesses, timely SQL Server database management is essential for business continuity, data security, and scalability. SQL Server Database Administration (DBA) services keep these databases running smoothly while improving the performance and security of critical data. In this post, we look at the main advantages of using SQL Server support services and how Datapatrol Technologies can meet the needs of your organization.

Six types of organizations benefit most from these services, and here is why:

Businesses with Large Data Volumes:

Companies managing extensive amounts of data require SQL Server DBA services to ensure their databases are optimized for performance, reliability, and scalability. Efficient data handling helps in maintaining seamless business operations and enhances decision-making capabilities.

High Availability Demands:

Organizations that cannot afford downtime need SQL Server DBA services to implement and manage high-availability solutions. These services help set up failover clustering, database mirroring, and Always On Availability Groups to ensure continuous database availability.

Data Security and Compliance:

Businesses dealing with sensitive information, such as financial institutions and healthcare providers, need SQL Server DBA services to safeguard their data. DBAs implement robust security measures and ensure compliance with industry regulations like GDPR, HIPAA, and SOX.

Performance Optimization:

Companies experiencing slow database performance can benefit from SQL Server DBA services. DBAs analyze and optimize query performance, index management, and resource allocation to enhance the overall efficiency of database operations.

Disaster Recovery Planning:

SQL Server DBA services are essential for organizations that require comprehensive disaster recovery plans. DBAs develop, test, and maintain backup and recovery strategies to ensure data integrity and availability in the event of a system failure.

Complex Database Environments:

Enterprises with complex, multi-database environments need SQL Server DBA services to manage database architecture, perform upgrades, and handle migrations seamlessly. DBAs ensure that the systems are integrated and function smoothly, minimizing disruptions and maximizing productivity.

Top Benefits of Utilizing SQL Server DBA Services:

Enhanced Database Performance and Optimization:

SQL Server DBA services focus on optimizing database performance, ensuring that systems operate at peak efficiency. DBAs analyze query performance, manage indexes, and fine-tune database configurations to minimize latency and enhance responsiveness. This proactive approach not only improves user experience but also supports business-critical applications that rely on swift data retrieval and processing.

Improved Data Security and Compliance:

Data security is paramount in today's regulatory environment. SQL Server DBA services implement robust security measures, including access controls, encryption, and regular audits, to protect sensitive information. These measures substantially reduce the risk of data breaches and help the organization meet its legal requirements.

24/7 Monitoring and Support:

SQL Server DBA services provide round-the-clock monitoring and support, ensuring immediate detection and resolution of potential issues. This proactive monitoring minimizes downtime, safeguards data integrity, and maintains continuous availability of critical business applications. Datapatrol Technologies excels in delivering comprehensive monitoring solutions, leveraging advanced tools and expertise to ensure seamless operations and rapid incident response.

Cost Efficiency and Resource Optimization:

Outsourcing SQL Server DBA services offers significant cost advantages compared to maintaining an in-house team. Organizations benefit from reduced overhead costs, including salaries, training, and infrastructure expenses. Datapatrol Technologies provides flexible service packages tailored to specific business needs, allowing organizations to scale resources up or down as required without compromising on service quality.

Expertise and Scalability:

Partnering with SQL Server DBA experts like Datapatrol Technologies provides access to specialized skills and extensive experience. Our team of certified DBAs possesses deep knowledge of SQL Server environments, capable of managing complex database architectures and handling diverse challenges. This expertise enables seamless scalability, supporting business growth and adapting to evolving technological landscapes.

Disaster Recovery and Business Continuity:

SQL Server DBA services include robust disaster recovery planning, ensuring data continuity in the event of system failures or natural disasters. Datapatrol Technologies designs and implements comprehensive backup strategies, conducts regular testing, and implements failover mechanisms to minimize downtime and mitigate risks. This proactive approach safeguards business operations and enhances resilience against unforeseen disruptions.

How Datapatrol Technologies Can Support Your Needs:

Datapatrol Technologies stands out as a leader in SQL Server DBA services, offering tailored solutions designed to meet diverse organizational requirements. With a proven track record spanning 15+ years of expertise, Datapatrol Technologies ensures:

Customizable Service Packages:

Tailored service packages to meet specific business objectives and budgetary constraints.

24/7 Support and Monitoring:

Continuous monitoring and proactive support to maintain optimal database performance and minimize downtime.

Expert Guidance and Consultation:

Strategic advice and consultation on database optimization, scalability, and compliance to enhance operational efficiency.

Cloud Migration and Management:

Expertise in migrating SQL Server databases to cloud platforms like Amazon AWS and Oracle Cloud, ensuring seamless integration and enhanced scalability.

Comprehensive Security Measures:

Implementation of stringent security protocols and regular audits to protect against cyber threats and ensure regulatory compliance.

Scalable Solutions:

Flexibility to scale resources up or down based on business growth and evolving requirements, ensuring cost-effective and efficient service delivery.

CONCLUSION:

In conclusion, leveraging SQL Server DBA services provided by Datapatrol Technologies empowers organizations to optimize database performance, enhance data security, and achieve operational excellence. By outsourcing DBA responsibilities, businesses can focus on core competencies while benefiting from expert support, proactive monitoring, and scalable solutions tailored to their unique needs. Partnering with Datapatrol Technologies ensures reliable, efficient, and cost-effective management of SQL Server databases, driving business success in a competitive digital landscape.

For more details connect with Data Patrol Technologies at [email protected] or call us on +91 848 4839 896.


Horizontal Scaling: Harnessing the Potential of NoSQL Databases

In the contemporary landscape of big data and high-traffic applications, traditional relational databases frequently find it challenging to meet the requirements of modern enterprises. NoSQL databases are a newer category of database management systems, engineered to address the scalability, performance, and flexibility issues that are pivotal in the era of digital transformation. This blog post looks at scalable NoSQL databases, explaining their principal features and the advantages they offer businesses aiming to excel in a data-centric environment.

Exploring NoSQL Databases

NoSQL databases, also known as "Not Only SQL" databases, signify a shift from the rigidly structured, schema-bound nature of conventional relational databases. Unlike their SQL-based counterparts, NoSQL databases are either schemaless or use a flexible schema, which makes it easier to store and manage unstructured or semi-structured data.

A hallmark of NoSQL databases is their capability for horizontal scalability. Contrary to the vertical scaling approach of traditional relational databases—where an increase in data volume and workload necessitates the procurement of more powerful hardware—NoSQL databases achieve scalability through the distribution of data across an array of nodes or servers, thereby efficiently managing heightened traffic and storage demands.
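As a minimal sketch of what distributing data across nodes can look like, the example below assigns each key to a node by hashing it. The node names and key format are invented, and simple modulo hashing is used only for brevity: it moves most keys whenever the node count changes, which is why production systems usually rely on consistent hashing or range partitioning instead.

```python
import hashlib
from collections import Counter


def shard_for(key, nodes):
    """Pick a node for a key from a stable hash of the key (simple modulo placement)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


# Hypothetical three-node cluster and synthetic keys.
nodes = ["node-a", "node-b", "node-c"]
keys = [f"user:{i}" for i in range(9000)]

# Count how many keys land on each node.
placement = Counter(shard_for(key, nodes) for key in keys)
print(placement)

# Adding a node spreads the same keys over four machines instead of three.
bigger = Counter(shard_for(key, nodes + ["node-d"]) for key in keys)
print(bigger)
```

Each node ends up holding roughly an equal share of the keys, so adding a machine lowers the load on every existing one rather than requiring a larger single server.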

Key Characteristics of Scalable NoSQL Databases

Horizontal Scalability: Scalable NoSQL databases excel in horizontal scalability, enabling organizations to augment their database cluster with additional nodes or servers in response to increasing demand. This distributed architecture facilitates seamless scaling without disruption, guaranteeing superior performance and reliability, even under significant loads.

Flexible Data Models: NoSQL databases offer support for a variety of flexible data models, including key-value stores, document databases, column-family stores, and graph databases. Such flexibility allows organizations to select the data model that best aligns with their specific requirements, be it for storing unstructured documents, hierarchical data, or intricately connected data.

High Performance: Designed for high throughput and low latency, NoSQL databases are well suited for real-time applications and data-intensive tasks. Through data distribution across multiple nodes and the utilization of parallel processing, NoSQL databases are capable of managing substantial volumes of transactions and queries while keeping response times low.

Fault Tolerance: Inherently fault-tolerant, scalable NoSQL databases incorporate mechanisms for data replication, partitioning, and failover. Should a node failure or network disruption occur, these databases automatically redirect traffic to operational nodes, ensuring continuous service and data accessibility.
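The characteristics above (distributing data across nodes, replicating it, and redirecting traffic on failure) can be sketched in a few lines of Python. This is a toy illustration, not how any particular NoSQL product works: the node names, the `shard_for`, `replicas_for`, and `read_node` helpers, and the "next node in the list" replica rule are all assumptions made for the example.

```python
import hashlib

def shard_for(key: str, nodes: list[str]) -> str:
    """Map a key to one node by hashing the key modulo the node count."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return nodes[int(digest, 16) % len(nodes)]

def replicas_for(key: str, nodes: list[str], copies: int = 2) -> list[str]:
    """Return the primary node followed by the next nodes in list order."""
    start = nodes.index(shard_for(key, nodes))
    return [nodes[(start + i) % len(nodes)] for i in range(min(copies, len(nodes)))]

def read_node(key: str, nodes: list[str], down: set[str]) -> str:
    """Pick the first replica that is not marked as down (a toy failover)."""
    for node in replicas_for(key, nodes):
        if node not in down:
            return node
    raise RuntimeError("no replica available for " + key)

# Hypothetical three-node cluster.
nodes = ["node-a", "node-b", "node-c"]
placement = {k: shard_for(k, nodes) for k in ("user:1", "user:2", "user:3")}
```

Plain modulo hashing moves most keys when a node is added, which is why production systems usually rely on consistent hashing or range-based partitioning instead; the sketch only shows the basic idea of routing by key.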

Advantages of Scalable NoSQL Databases

Flexibility and Agility: NoSQL databases provide unparalleled flexibility and agility, which enables enterprises to swiftly adapt to evolving requirements and market dynamics. Their support for various data models and the ability to dynamically modify schemas allow organizations to iterate quickly and innovate with greater certainty.

Cost Efficiency: The horizontal scalability characteristic of NoSQL databases permits enterprises to incrementally scale their infrastructure based on demand, circumventing the initial expenses and complexities associated with vertical scaling strategies. Utilizing commodity hardware and cloud services enables cost-efficient scalability while maintaining high levels of performance and reliability.

Scalability on Demand: Scalable NoSQL databases afford enterprises the capacity to scale resources on demand, dynamically adjusting to fluctuations in workload in real time. This capability ensures that enterprises can manage periods of peak demand without the need for over-provisioning, thereby optimizing cost efficiency and resource utilization.

Future-Proofing: In the context of a rapidly changing digital environment, the ability to future-proof operations is crucial for sustained success. Scalable NoSQL databases provide the necessary flexibility and scalability for enterprises to navigate emerging technologies and shifting business demands, securing their competitive edge and relevance in future landscapes.

Conclusion

In conclusion, scalable NoSQL databases embody a significant shift in the landscape of database management, presenting enterprises with the scalability, flexibility, and performance necessary to excel in the age of big data and digital transformation. Through the adoption of horizontal scalability, adaptable data models, and high-performance architectures, enterprises are equipped to unlock unprecedented opportunities for innovation, agility, and expansion within a data-centric universe. As the adoption of NoSQL databases by businesses progresses, the potential for scalability and innovation becomes boundless, fundamentally altering the methodologies employed in data storage, management, and analysis in the digital era.


Text

Empowering Businesses With Expert MySQL Development Solutions By Associative

In today’s data-driven world, effective management and utilization of databases play a crucial role in driving business growth and innovation. As businesses strive to harness the power of data to gain insights, optimize processes, and enhance decision-making, the role of MySQL development companies becomes increasingly vital. Among these, Pune-based software development and consulting company Associative stands out as a trusted partner, offering comprehensive MySQL development solutions tailored to meet the diverse needs of businesses worldwide.

Unveiling The Essence Of MySQL Development

MySQL, an open-source relational database management system, is renowned for its scalability, reliability, and performance, making it a preferred choice for businesses of all sizes across various industries. MySQL development involves designing, implementing, and optimizing databases to store, retrieve, and manage data efficiently, enabling businesses to unlock the full potential of their data assets.

Leveraging Associative’s MySQL Development Services

Associative’s MySQL development services encompass a wide range of capabilities aimed at helping businesses harness the power of MySQL databases:

Database Design and Architecture: Associative specializes in designing robust, scalable database architectures tailored to meet the specific needs and requirements of each client. Whether it’s designing a new database from scratch or optimizing an existing database structure, Associative’s team of experienced database architects ensures optimal performance, reliability, and scalability.

Database Development and Optimization: Associative offers end-to-end database development services, from schema design and query optimization to stored procedure development and performance tuning. By leveraging best practices and industry standards, Associative ensures that every MySQL database is finely tuned for maximum efficiency and reliability.

Migration and Integration: Associative assists businesses in seamlessly migrating from legacy database systems to MySQL or integrating MySQL databases with existing systems and applications. Whether it’s migrating data from Oracle, SQL Server, or another database platform, Associative ensures a smooth transition with minimal disruption to business operations.

Replication and High Availability: Ensuring high availability and data redundancy is essential for mission-critical applications. Associative implements MySQL replication and clustering solutions to provide fault tolerance, data redundancy, and automatic failover, ensuring continuous availability and reliability even in the event of hardware failures or network issues.

Performance Monitoring and Maintenance: Associative provides proactive monitoring and maintenance services to optimize the performance and reliability of MySQL databases. By monitoring key performance metrics, identifying bottlenecks, and implementing proactive maintenance strategies, Associative helps businesses maximize the performance and efficiency of their MySQL databases.

Why Choose Associative For Your MySQL Development Needs?

Expertise and Experience: With years of experience in MySQL development and a team of seasoned database specialists, Associative brings a wealth of expertise to every project.

Customized Solutions: Associative understands that every business is unique, and therefore, offers customized MySQL development solutions tailored to meet the specific needs and objectives of each client.

Reliability and Scalability: Associative ensures that every MySQL database is designed and optimized for reliability, scalability, and performance, enabling businesses to scale seamlessly as their data requirements grow.

Customer-Centric Approach: Associative places a strong emphasis on understanding the unique challenges and goals of its clients, taking a collaborative approach to MySQL development to ensure that every project delivers maximum value and ROI.

Conclusion

As businesses continue to recognize the strategic importance of effective database management in driving business success, partnering with a trusted MySQL development company like Associative becomes essential. With its expertise, experience, and customer-centric approach, Associative empowers businesses to unlock the full potential of their data assets and drive innovation and growth.

Embrace the power of MySQL development with Associative and embark on a journey of data-driven success. Whether you’re a startup looking to establish a robust database infrastructure or an enterprise seeking to optimize your existing MySQL environment, Associative is your trusted partner for all your MySQL development needs.


Text

Supporting Clusterless Availability Groups in SQL Server

Introduction

Hey there, fellow database administrators! If you’re like me, you’re always looking for ways to improve the availability and resilience of your SQL Server databases. One powerful tool in our arsenal is the Availability Group (AG) feature. But what if you want to use AGs without the complexity of a Windows Server Failover Cluster (WSFC)? Enter clusterless AGs, introduced in SQL…


Text

Accessible: Azure Elastic SAN simply moves SAN to the cloud

What is Azure Elastic SAN?

Azure is pleased to announce the general availability (GA) of Azure Elastic SAN, the first fully managed and cloud-native storage area network (SAN) that simplifies cloud SAN deployment, scaling, management, and configuration. Azure Elastic SAN streamlines the migration of large SAN environments to the cloud, improving efficiency and ease.

A SAN-like resource hierarchy, appliance-level provisioning, and dynamic resource distribution to meet the needs of diverse workloads across databases, VDIs, and business applications distinguish this enterprise-class offering. It also offers scale-on-demand, policy-based service management, cloud-native encryption, and network access security. This clever solution combines cloud storage’s flexibility with on-premises SAN systems’ scale.

Since announcing Elastic SAN’s preview, they have added many features to make it enterprise-class:

Multi-session connectivity boosts Elastic SAN performance to 80,000 IOPS and 1,280 MBps per volume, even higher on the entire SAN.

SQL Failover Cluster Instances can be easily migrated to Elastic SAN volumes with shared volume support.

Server-side encryption with Customer-managed Keys and private endpoint support lets you restrict data consumption and volume access.

Snapshot support lets you run critical workloads safely.

Elastic SAN GA will add features and expand to more regions:

Use Azure Monitor Metrics to analyze performance and capacity.

Azure Policy prevents misconfiguration incidents.

This release also makes snapshot export public by eliminating the sign-up process.

When to use Azure Elastic SAN

Elastic SAN uses iSCSI to carry storage traffic over the VM's compute network bandwidth, which raises the storage throughput available to throughput- and IOPS-intensive workloads. SQL Server workloads are a good fit: SQL Server deployments on Azure VMs sometimes require overprovisioning the VM just to reach disk throughput targets.

“Azure SQL Server data warehouse workloads needed a solution to eliminate VM and managed data disk IO bottlenecks. The Azure Elastic SAN solution removes the VM bandwidth bottleneck and boosts IO throughput. They reduced VM size and implemented constrained cores to save money on SQL Server core licensing thanks to Elastic SAN performance.”

Moving your on-premises SAN to the Cloud

Elastic SAN uses a resource hierarchy similar to on-premises SANs and allows provisioning of input/output operations per second (IOPS) and throughput at the resource level, dynamically sharing performance across workloads, and workload-level security policy management. This makes migrating from on-premises SANs to the cloud easier than right-sizing hundreds or thousands of disks to serve your SAN’s many workloads.

The Azure-Sponsored migration tool by Cirrus Data Solutions in the Azure Marketplace simplifies data migration planning and execution. The cost optimization wizard in Cirrus Migrate Cloud makes migrating and saving even easier:

Cirrus Data Solutions is excited about Azure Elastic SAN’s launch and sees a real opportunity for companies to lower storage TCO. Over the last 18 months, it has worked with Azure to improve Cirrus Migrate Cloud so enterprises can move live workloads to Azure Elastic SAN with a click. Offering Cirrus Migrate Cloud to accelerate Elastic SAN adoption, analyze the enterprise’s storage performance, and accurately recommend the best Azure storage is an expansion of its partnership with Microsoft and extends its vision of real-time block data mobility to Azure and Elastic SAN, said Cirrus Data Solutions Chairman and CEO Wayne Lam.

Azure works with Cirrus Data Solutions to ensure the wizard's recommendations cover all Azure Block Storage offerings (Disks and Elastic SAN) and your storage needs. For example, the wizard will recommend Ultra Disk, which has the lowest sub-ms latency on Azure, for single queue depth workloads like OLTP.

Consolidate storage and achieve cost efficiency at scale

Elastic SAN lets you dynamically share provisioned performance across volumes for high performance at scale. A shared performance pool can handle IO spikes, so you don’t have to overprovision for workload peak traffic. You can right-size to meet your storage needs with Elastic SAN because capacity scales independently of performance. If your workload’s performance requirements are met but you need more storage capacity, you can buy additional capacity on its own, at 25% less cost than buying more performance.

Get the lowest Azure VMware Solution GiB storage cost

This recently announced preview integration lets you expose an Elastic SAN volume as an external datastore to your Azure VMware Solution (AVS) cluster to increase storage capacity without adding vSAN storage nodes. Elastic SAN provides 1 TiB of storage for 6 to 8 cents per GiB per month, the lowest AVS storage cost per GiB. Its native Azure Storage experience lets you deploy and connect an Elastic SAN datastore through the Azure Portal in minutes as a first-party service.

Azure Container Storage, the first platform-managed container-native storage service in the public cloud, offers highly scalable, cost-effective persistent volumes built natively for containers. Elastic SAN can serve as backing storage for Azure Container Storage, which lets it take advantage of iSCSI’s fast attach and detach. Elastic SAN’s dynamic resource sharing lowers storage costs, and since your data persists on volumes, you can spin down your cluster for more savings. Containerized applications running general-purpose database workloads, streaming and messaging services, or CI/CD environments benefit from its storage.

Price and performance of Azure Elastic SAN

Most throughput and IOPS-intensive workloads, like databases, suit Elastic SAN. To support more demanding use cases, Azure raised several performance limits.

Azure saw great results with SQL Server during the preview. SQL Server deployments on Azure VMs sometimes require overprovisioning the VM to reach disk throughput targets. Since Elastic SAN uses iSCSI to carry storage traffic over the VM's compute network bandwidth, this is avoided.

With dynamic performance sharing, you can cut your monthly bill significantly. Another customer wrote data to multiple databases during the preview. If you provision for the maximum IOPS per database, these databases require 100,000 IOPS in total for peak performance. In real life, some database instances spike during business hours for inquiries and others outside business hours for reporting. The combined peak IOPS of these workloads was only 50,000.

Elastic SAN lets its volumes share the total performance provisioned at the SAN level, so you can account for the combined maximum performance required by your workloads rather than the sum of the individual requirements, which often means you can provision (and pay for) less performance. In the example, you would provision 50% fewer IOPS at 50,000 IOPS than if you served individual workloads, reducing costs.
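The difference between "sum of the individual peaks" and "peak of the combined load" is easy to check with a short calculation. The sketch below uses made-up per-database numbers (four databases, two time windows) chosen only so the totals match the 100,000 and 50,000 IOPS figures in the example; the database names and profiles are assumptions, not customer data.

```python
# Hypothetical peak IOPS per database for two time windows:
# (business hours, off hours). Values are illustrative only.
profiles = {
    "inquiries_1": (25_000, 0),
    "inquiries_2": (25_000, 0),
    "reporting_1": (0, 25_000),
    "reporting_2": (0, 25_000),
}

# Per-volume provisioning: every database is sized for its own peak.
sum_of_peaks = sum(max(windows) for windows in profiles.values())

# Shared pool: size for the busiest window across all databases together.
combined_per_window = [sum(window) for window in zip(*profiles.values())]
peak_of_sum = max(combined_per_window)

savings = 1 - peak_of_sum / sum_of_peaks
print(sum_of_peaks, peak_of_sum, f"{savings:.0%}")  # 100000 50000 50%
```

The saving depends entirely on how much the workloads' peaks overlap; if every database peaked in the same window, the two figures would be equal and pooling would save nothing.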

Start Elastic SAN today

Follow Azure start-up instructions or consult their documentation to deploy an Elastic SAN. Azure Elastic SAN pricing has not changed for general availability. Their pricing page has the latest prices.

Read more on Govindhtech.com


Text

Data is the foundation of successful businesses in today's linked digital landscape, driving everything from decision-making procedures to consumer interactions. Organizations heavily rely on powerful database management systems to fully utilize the value of data. Microsoft SQL Server, MySQL, and MongoDB stand out among the many solutions available as three well-known names. These database systems are essential for organizing, retrieving, and storing data; yet, they each go about this in a different way and have different advantages and disadvantages.

This blog aims to uncover the complexities of these three database juggernauts, explaining what makes each of them special and how they cater to various needs.

Microsoft SQL Server

A game-changer in relational databases. Robust, reliable, and feature-packed, it drives apps from small projects to enterprise solutions. Join us to unveil its magic—data storage, retrieval, performance, and security.

Hardware:

Microsoft SQL Server is a dependable relational database management system (RDBMS) that has traditionally run on Windows-based hardware and, since SQL Server 2017, also runs on Linux. It can be installed on a variety of hardware arrangements, from single-server systems to clusters of powerful servers. For the best speed when processing big datasets and difficult queries, it makes use of multi-core processors, plenty of RAM, and fast storage systems.

Software:

Microsoft SQL Server comes in a number of editions, each suited to a particular purpose, including the Express, Standard, and Enterprise editions. It offers a range of tools for database management, including SQL Server Management Studio (SSMS), Integration Services, Analysis Services, and Reporting Services. These tools support database creation, upkeep, and business intelligence.

Procedure:

For data administration and manipulation, Microsoft SQL Server uses Structured Query Language (SQL) through its Transact-SQL (T-SQL) dialect. Users issue SQL commands to create, update, and retrieve data. To improve data security and integrity, it offers stored procedures, triggers, and functions for procedural logic. To maintain data availability and reliability, SQL Server also provides tools including replication, backup and recovery, and failover clustering.

Data:

SQL Server maintains the consistency and integrity of the data by storing it in relational tables with established schemas. It supports ACID transactions, which ensure that database operations are carried out reliably. ACID stands for Atomicity, Consistency, Isolation, and Durability. Additionally, SQL Server makes it possible to define relationships between tables, which makes it easier to retrieve and analyze related data quickly.
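To make the "A" in ACID concrete, here is a minimal sketch of an atomic transfer between two rows. It uses Python's built-in `sqlite3` module as a stand-in, not SQL Server, so it runs without a database server; the `accounts` table, the names, and the `transfer` helper are assumptions made for the example. The same idea applies to any transactional relational engine: either both updates commit or neither does.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, "
    "balance INTEGER CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()

def transfer(conn, src, dst, amount):
    """Move money in one transaction; undo both updates if either fails."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False

ok = transfer(conn, "alice", "bob", 30)        # both updates are committed
failed = transfer(conn, "alice", "bob", 500)   # CHECK fails, the credit to bob is undone
balances = dict(conn.execute("SELECT name, balance FROM accounts"))
```

The second call credits bob first and only then fails on the debit, so without the rollback bob would keep money that never left alice's account.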

MySQL

MySQL, an open-source relational database management system, consists of key components that drive its functionality:

Hardware:

Popular open-source relational database system MySQL is compatible with Windows, Linux, and macOS in addition to other hardware systems. It is a versatile option for both small-scale applications and large-scale systems because it is designed to operate effectively even on basic hardware configurations.

Software:

The Community Edition and the Enterprise Edition are the two primary editions of MySQL's core system. While the Enterprise Edition offers extra tools and assistance, the Community Edition is open-source and delivers necessary features. A graphical tool called MySQL Workbench makes it easier to create, manage, and develop queries for databases.

Procedure:

Similar to Microsoft SQL Server, MySQL too employs SQL to manipulate data. Using SQL commands, users can create, change, and query databases, among other things. MySQL offers user-defined functions, stored procedures, and triggers, enabling programmers to incorporate unique logic inside the database. To improve data availability and fault tolerance, it provides replication and clustering functions.

Data:

Structured tables with predefined schemas are used by MySQL to store data. It is well known for performing well in read-intensive situations, making it appropriate for applications that call for quick data retrieval. MySQL supports several storage engines, each tailored for a particular use case. InnoDB, the default engine, supports ACID transactions, which provide data consistency and durability; MyISAM does not support transactions.

MongoDB

MongoDB, a popular NoSQL database, operates through essential components that define its functionality:

Hardware:

Leading NoSQL database system MongoDB was created to manage unstructured or partially organized data. It can be installed on a variety of hardware setups, including cloud-based settings and common hardware. The architecture of MongoDB is adaptable and can extend horizontally to handle expanding datasets.

Software:

MongoDB has both a community edition and an enterprise version, and it functions as a distributed database. MongoDB is accessed by developers through drivers tailored to their chosen programming languages. A graphical tool called MongoDB Compass facilitates query exploration and database management, making it simpler to work with the document-oriented approach.

Procedure:

The flexible schema-less method used by MongoDB differs from that of conventional RDBMS systems. Data is kept as groups of documents that resemble JSON. Users work with MongoDB by performing CRUD (Create, Read, Update, Delete) actions on documents using the MongoDB Query Language (MQL). Due to its nature, MongoDB is a good choice for applications that need quick and agile development cycles.

Data:

MongoDB stores data as documents, which can have different structures inside the same collection. As data evolves, this allows greater flexibility and agility. To enable horizontal scaling, MongoDB offers sharding, which distributes data across different servers. By default, reads and writes go to the primary of a replica set, so reads are consistent; reads directed to secondaries are eventually consistent.
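The document model and CRUD operations described above can be illustrated without a running database. The sketch below models a collection as a plain Python list of dicts; it does not use MongoDB or its drivers, and the `find` and `update_one` helpers are simplified stand-ins written for this example, not the real driver API.

```python
# A "collection" modelled as a list of dicts; no MongoDB server or driver is involved.
products = [
    {"_id": 1, "name": "laptop", "specs": {"ram_gb": 16}},
    {"_id": 2, "name": "t-shirt", "sizes": ["S", "M", "L"]},  # different fields, same collection
]

def find(collection, query):
    """Return documents whose top-level fields equal every key/value in query."""
    return [doc for doc in collection if all(doc.get(k) == v for k, v in query.items())]

def update_one(collection, query, changes):
    """Apply changes to the first matching document; return True if one matched."""
    for doc in collection:
        if all(doc.get(k) == v for k, v in query.items()):
            doc.update(changes)
            return True
    return False

products.append({"_id": 3, "name": "ebook", "pages": 250})      # create
update_one(products, {"name": "laptop"}, {"price": 999})        # update
laptops = find(products, {"name": "laptop"})                    # read
products[:] = [doc for doc in products if doc["_id"] != 2]      # delete
```

Note that adding the `price` field to one document required no schema change and left the other documents untouched, which is the flexibility the section refers to.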

Blog by: Clarence Manulat


Text

Setting up an Always On SQL Clustering Group with Windows Server 2016 and VMware

Setting up clusters in Windows Server 2016 has become very easy. However, integrating it with other environments, like VMware and Always On SQL clustering, can get quite tricky. First we want to set up our environment in vSphere. Next we will set up Windows Server 2016 with Failover Clustering. Then we’ll make some adjustments to DNS. Finally, we will set up AlwaysOn SQL clustering.

Please make sure you have .NET Framework 3.5.1 or later on the servers. Then create two VMs with three drives each, and make sure that the drives are online and available from other locations. One main aspect that I had overlooked is that the virtual disks have to be created as Eager Zero Thick, not Lazy Zero Thick. I made the mistake of using Lazy Zero Thick and then could not understand why I was having so many problems.

Note: Creating virtual disks with Eager Zero Thick takes longer than the faster Lazy Zero Thick option. An Eager Zero Thick disk allocates the space for the virtual disk and zeros all of it at creation time, whereas a Lazy Zero Thick disk only allocates the space and zeros each block when it is first written.

You also generally wouldn’t use Eager Zero Thick except for Microsoft clustering and Oracle programs. Once the disks are created, we are ready to install Windows Server 2016.

Install either Datacenter edition or Standard edition. For this example we’ll use the Standard edition. Install all the Microsoft Windows updates and verify that you have already allocated all the resources needed. Check that the additional virtual disks are available, and make sure you install the Failover Clustering feature. You may want to reboot after the feature is installed, if you have not done so. Once you have installed the feature, go to Failover Cluster Manager and prepare to create the cluster. If this is a two-node cluster, be sure to add a witness server or desktop. Once the cluster is created and validated, go to Computer Management and verify that the virtual disks are online and initialized. Next, you will want to configure the cluster quorum settings. I created a separate server for this quorum and configured a file share witness.
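The reason a two-node cluster needs a witness comes down to simple vote counting. The sketch below is a simplified model of majority quorum: the `has_quorum` helper is hypothetical, and it ignores features such as dynamic quorum that let a real Windows Server Failover Cluster adjust votes at run time.

```python
def has_quorum(votes_online: int, total_votes: int) -> bool:
    """A cluster keeps quorum while more than half of all votes are online."""
    return votes_online > total_votes // 2

# Two nodes plus one file share witness gives three votes in total.
TOTAL_VOTES = 3
all_up = has_quorum(3, TOTAL_VOTES)
one_node_lost = has_quorum(2, TOTAL_VOTES)          # surviving node + witness
node_and_witness_lost = has_quorum(1, TOTAL_VOTES)  # a single vote is not a majority

# Two nodes and no witness: losing either node leaves one vote out of two.
two_nodes_no_witness = has_quorum(1, 2)
```

With the witness, the cluster survives the loss of any single voter; without it, a two-node cluster loses quorum as soon as one node goes down.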

Now make sure you can access the nodes from another computer on the secured network. You will have to set up round-robin DNS with Host (A) records, where one name is given the IP addresses of the two failover cluster nodes. Example: if the nodes had IP addresses of 192.168.1.43 and 192.168.1.44, then the two Host records you would need to create are AOSqlServer -> 192.168.1.43 and AOSqlServer -> 192.168.1.44.
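As a rough illustration of what round-robin A records do, the sketch below models one name with two addresses and a resolver that hands them out in turn. It is a simplification written for this post: `make_resolver` is a hypothetical helper, and a real DNS server returns the full record set in rotated order rather than a single address per query.

```python
from itertools import cycle

# Hypothetical zone data: one name with two A records (the two cluster nodes).
a_records = {"AOSqlServer": ["192.168.1.43", "192.168.1.44"]}

def make_resolver(records):
    """Return a lookup function that hands out each name's addresses in turn."""
    rotations = {name: cycle(addresses) for name, addresses in records.items()}
    return lambda name: next(rotations[name])

resolve = make_resolver(a_records)
answers = [resolve("AOSqlServer") for _ in range(4)]
```

Successive lookups alternate between the two node addresses, which is why clients using the shared name can reach either node.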

Finally, We will enable AlwaysOn Availability Groups on SQL Server 2016.

Install SQL Server 2012 or 2014 Enterprise edition on all the replicas as a stand-alone instance, and then configure SQL Server. In SQL Server Configuration Manager, expand the SQL Server Network Configuration node and click Protocols for MSSQLSERVER; the TCP/IP entry appears in the right panel. Right-click the TCP/IP entry and select Enable.

Still in SQL Server Configuration Manager, click SQL Server Services, right-click the SQL Server service, and open the Properties dialog box. Navigate to the AlwaysOn High Availability tab and select the “Enable AlwaysOn Availability Groups” check box. Restart the SQL Server service for the change to take effect.

Now we must configure the login accounts and the replicas that will need read write privileges.

First verify that your SQL Service account exists and is a domain account, not a local machine account. Now log in through SQL Server Management Studio (SSMS). Add your SQL Service account to the Administrators group on each replica (via Computer Management). Then grant connect permissions to the SQL Service account through SSMS: right-click the SQL Service login to open the Properties dialog box. On each replica, navigate to the Securables page, make sure the Grant box for Connect SQL is checked, and allow remote connections. You can allow remote connections in SSMS through the instance properties or by using sp_configure.

-- Turn on the 'remote access' server option, then apply the change.
EXEC sp_configure 'remote access', 1;

GO

RECONFIGURE;

GO

Now we will create a file share through Server Manager that the SQL Service account and the replicas can access. The file share is used for the initial backup/restore process that happens to the databases when you join the AlwaysOn group during setup.

The last thing is to create the AlwaysOn Availability Group. Once you’ve ensured that full backups have been created and all databases are in the Full recovery model, remove these databases from the transaction log backup maintenance while AlwaysOn is being set up (you can always add them back). Log backups running while the group is being created could cause errors.

On your primary, open SSMS and expand the AlwaysOn High Availability folder. Right-click Availability Groups and select New Availability Group Wizard.

Select only the databases you want to include in the AlwaysOn group.

Next to the databases you will see the status as a blue link. If it says “Meets Prerequisites”, the database can be included in your group. If it does not say “Meets Prerequisites”, click the link to see more details on what needs to be corrected.

Now, you will specify and Add the Replicas. You will need to specify if you want Automatic or Manual Failover, Synchronous or Asynchronous Data Replication, and the type of Connections you are allowing to the end users.

Be sure to view the troubleshooting page if you have any issues:

http://blogs.msdn.com/b/alwaysonpro/archive/2013/12/09/trouble-shoot-error.aspx

The backup preferences tab will assist in choosing the type of backup and to prioritize the replica backups.

In the Listener tab, select the option to create an availability group listener, enter the listener DNS name, enter port 1433, and enter the IP address for your listener, which should be an unused IP address on the network.

Next, on the Select Initial Data Synchronization page, join the databases to the AlwaysOn group and verify that the Full option, which uses the file share, is selected. For large databases, select Join only or Skip to restore the databases to the secondary replicas yourself. We will use Full for now. The last thing to do here is to make sure that the SQL Service accounts and all replicas have read/write permissions to the file share, or it will not work.

Run the validation checks and make sure the results are successful.

That is it, once you get that done you should have High availability and AlwaysOn SQL Server. I hope you’ve enjoyed this instructional blog. Please come back and visit us to see other projects.

#Always On SQL Server#High Availability SQL#Failover Cluster#SQL Failover Cluster#VMWare Failover Cluster#VMWare with MS Failover Cluster#VMWare with MSFC#AlwaysOn SQL


Text

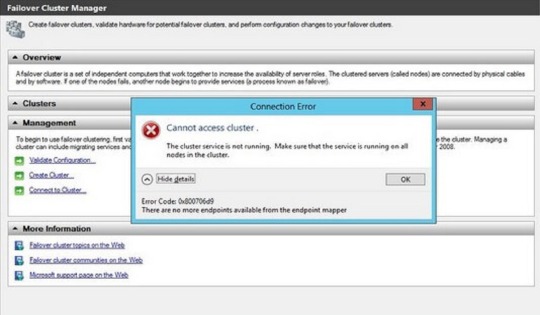

Force Start a Windows Server Failover Cluster without a Quorum to bring a SQL Server Failover Clustered Instance Online

Problem

The 2-node Windows Server Failover Cluster (WSFC) running my SQL Server failover clustered instance suddenly went offline. It turns out that my quorum disk and the standby node in the cluster both went offline at the same time. I could not connect to the WSFC nor to my SQL Server failover clustered instance. What do I need to do to bring my SQL Server failover clustered instance back…


Text

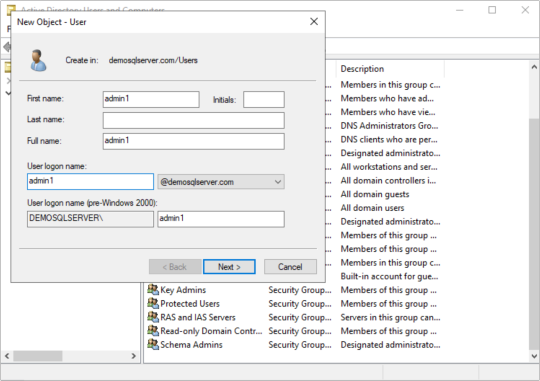

Configure Always On for SQL Server Instances on a Windows Failover Cluster

Create a domain account to access the SQL Server database. During installation you can configure this account for the services, or later on you can configure the services to start with this account and create a login in SQL Server with sysadmin rights. Open dsa.msc (Active Directory Users and Computers) and right-click Users to add a new user. Start services with new account…
