#ScalableAI


VADY drives business growth by embedding AI-powered business intelligence directly into your workflows. From day-to-day operations to long-term strategy, VADY accelerates results with real-time, automated data insights software built for scale and speed. Experience a smarter path to growth with enterprise AI solutions that move as fast as your market changes.

#VADY#VADYAIanalytics#VADYBusinessIntelligence#VADYDataAnalyticsSolutions#VADYSmartDecisionMaking#VADYNewFangledAI#AIpoweredBusinessIntelligence#DataAnalyticsForBusiness#EnterpriseAISolutions#SmartDecisionMakingTools#AutomatedDataInsightsSoftware#AIpoweredDataVisualization#AIDrivenCompetitiveAdvantage#EnterpriseLevelDataAutomation#ContextAwareAIAnalytics#AIAnalyticsGrowth#BusinessAI#RealtimeAIInsights#ScalableAI#GrowthWithAI#BusinessAutomationAI


How the Top AI Agency in India Delivers Industry-Specific Intelligence at Scale

The rise of artificial intelligence (AI) is transforming how businesses operate across the world. From predicting customer behavior to automating complex workflows, AI is becoming essential for companies seeking efficiency, speed, and innovation. In India, the AI industry is expanding rapidly, with several companies competing to deliver intelligent, scalable solutions to meet industry needs. Among the top AI companies in India, WebSenor stands out for its commitment to delivering industry-specific intelligence at scale. As a trusted artificial intelligence agency, WebSenor is helping businesses across sectors—from healthcare to logistics—unlock new levels of performance through custom AI solutions.

Why Industry-Specific AI Matters Today

The Shift from Generic to Tailored AI Solutions

Many AI implementations fail not because of poor technology, but because they lack relevance to the business context. Off-the-shelf AI systems may offer basic functionality, but they often miss the nuance required for industry-specific applications.

For AI to deliver real value, it must understand the industry it serves—be it predicting patient outcomes in healthcare or optimizing inventory in retail. This is why top AI technology firms in India are moving toward specialized, tailored solutions.

Key Industries Driving Demand for Specialized Intelligence

The artificial intelligence industry in India is seeing strong adoption across several sectors:

Healthcare: AI supports diagnostics, patient monitoring, and predictive analytics.

Fintech: Fraud detection, credit scoring, and customer service chatbots rely heavily on AI.

Manufacturing: AI improves quality control, predictive maintenance, and supply chain efficiency.

Retail: Customer personalization, demand forecasting, and dynamic pricing are AI-driven.

Education (EdTech): Adaptive learning systems and automated grading are key use cases.

Logistics: Route optimization and warehouse automation benefit from AI implementation.

WebSenor’s Approach: Building Intelligence That Understands Your Industry

Deep Domain Experience and Cross-Sector Expertise

WebSenor isn’t just an AI solutions provider; it is a strategic AI service provider in India with deep roots across industries. The company’s team of AI engineers, data scientists, and domain experts works together to craft solutions that reflect the challenges and goals of each sector.

Whether developing AI-powered software for remote diagnostics in healthcare or building ML-driven recommender engines for e-commerce, WebSenor brings years of real-world experience to every engagement.

Collaboration with Industry Experts

To ensure relevance and accuracy, WebSenor collaborates closely with subject matter experts (SMEs) from each sector. This partnership model ensures that every AI solution is informed by current industry practices, regulations, and customer expectations.

By aligning with experts in healthcare, finance, logistics, and other fields, WebSenor builds trust and ensures compliance—both critical for business adoption.

Use of Proprietary AI Frameworks and Data Models

WebSenor’s strength lies in its ability to design and deploy custom AI models trained on industry-specific data. Unlike many machine learning companies in India that rely solely on third-party tools, WebSenor develops proprietary AI frameworks to solve complex business problems with precision. These frameworks are designed to be adaptable, allowing for continuous learning and refinement as more data becomes available.

Scalable Solutions That Evolve with Your Business

Modular AI Architecture

Scalability is one of the biggest challenges in AI adoption. Many businesses struggle to move from pilot projects to full-scale deployment. WebSenor addresses this through a modular architecture approach.

Each component—data ingestion, model training, inference, and monitoring—can scale independently based on business needs. This allows for flexible deployment across multiple business units and geographies.
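As a rough illustration of stages that scale independently, the sketch below gives each pipeline stage its own worker pool whose size can be tuned without touching the others. It is a minimal sketch under stated assumptions: `Stage` and `run_pipeline` are hypothetical names, not part of any WebSenor framework, and threads inside one process stand in for separately deployed services.

```python
# Hypothetical sketch: per-stage worker pools stand in for independently scaled services.
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List


@dataclass
class Stage:
    """One pipeline component with its own, independently tuned concurrency."""
    name: str
    fn: Callable[[Any], Any]
    workers: int  # scale this stage without changing any other stage


def run_pipeline(stages: List[Stage], items: Iterable[Any]) -> List[Any]:
    """Push every item through each stage in order; each stage uses its own pool."""
    data = list(items)
    for stage in stages:
        with ThreadPoolExecutor(max_workers=stage.workers) as pool:
            # pool.map preserves input order, so outputs line up with inputs
            data = list(pool.map(stage.fn, data))
    return data


if __name__ == "__main__":
    pipeline = [
        Stage("ingest", str.strip, workers=2),  # light cleanup step
        Stage("inference", len, workers=8),     # hypothetical "model": string length
    ]
    print(run_pipeline(pipeline, ["  hello ", "ai "]))  # [5, 2]
```

In a real deployment each stage would typically be its own service with its own autoscaling rule; the point here is only that capacity is a per-stage setting.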

Cloud-Native and Edge-Compatible AI

WebSenor’s AI solutions are designed to work seamlessly across cloud and edge environments. Whether running models in centralized data centers or on edge devices in remote locations, businesses can rely on consistent performance, speed, and security.

Compliance with industry standards, encryption protocols, and privacy regulations ensures that WebSenor's solutions are ready for even the most sensitive applications.

Ongoing Optimization Through MLOps and AIOps

Deployment is just the beginning. Through robust MLOps (Machine Learning Operations) and AIOps (AI for IT Operations), WebSenor ensures that its models stay accurate and effective.

Continuous monitoring, automated retraining, and performance dashboards allow clients to measure ROI and adapt quickly to changes in their business or market conditions.
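One common way to implement automated retraining is to track live accuracy over a sliding window once ground-truth labels arrive, and to raise a flag when it falls below the accuracy measured at deployment. The sketch below assumes labels eventually become available; `RetrainTrigger` and its parameters are hypothetical, and the retraining job itself is left to whatever scheduler you use.

```python
# Hypothetical sketch: windowed-accuracy trigger for scheduling retraining.
from collections import deque
from typing import Hashable


class RetrainTrigger:
    """Flags when windowed accuracy drops below baseline minus a tolerance."""

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05, window: int = 500):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # True = correct prediction

    def record(self, prediction: Hashable, label: Hashable) -> bool:
        """Log one labeled prediction; return True if retraining should be scheduled."""
        self.outcomes.append(prediction == label)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.baseline - self.tolerance


if __name__ == "__main__":
    trigger = RetrainTrigger(baseline_accuracy=0.92, tolerance=0.05, window=10)
    stream = [("cat", "cat")] * 9 + [("dog", "cat")] * 3
    for pred, label in stream:
        if trigger.record(pred, label):
            print("accuracy degraded: schedule retraining")
            break
```

Waiting for a full window avoids firing on the first few noisy outcomes; the tolerance keeps normal fluctuation from triggering constant retraining.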

Case Studies: Industry-Specific Intelligence in Action

AI in Healthcare: Predictive Patient Analytics

A leading hospital chain partnered with WebSenor to develop an AI system that predicts patient readmissions based on historical data.

Results:

27% improvement in readmission predictions

Reduction in average length of stay by 12%

Enhanced patient care planning and resource management

AI in Retail: Hyper-Personalized Customer Journeys

WebSenor helped a major fashion retailer implement a recommendation engine based on user behavior, purchase history, and real-time inventory levels.

Results:

30% increase in average order value

22% boost in repeat purchases

Faster stock turnover and reduced overstocking

AI in Logistics: Route Optimization at Scale

A logistics startup in India collaborated with WebSenor to streamline delivery operations using AI-based route optimization and traffic prediction.

Results:

18% reduction in fuel costs

25% improvement in delivery time accuracy

Enhanced customer satisfaction and operational savings

These examples show why WebSenor ranks among the best AI companies in India today—delivering real business value through specialized, scalable AI.

Why WebSenor Is the Trusted AI Partner for Scalable Intelligence

Certified Teams with Deep Technical Expertise

WebSenor’s team includes certified professionals in deep learning, data science, and AI ethics. Their blend of technical mastery and domain knowledge positions them ahead of many AI startups in India in 2025.

Transparent Processes & Client-Centric Engagement

From project scoping to deployment, WebSenor follows a transparent, milestone-driven engagement model. Clients receive regular updates, performance reports, and technical documentation.

This approach builds confidence and ensures that business leaders are fully informed at every step.

Commitment to Ethical AI and Data Privacy

As one of the top artificial intelligence companies in India, WebSenor adheres to ethical AI principles. Their models are explainable, auditable, and built to minimize bias.

Strict adherence to data privacy laws—such as GDPR and India’s Digital Personal Data Protection Act—further strengthens their trustworthiness in sensitive sectors like finance and healthcare.

Final Thoughts: Investing in AI That Understands Your Business

The real power of AI lies not in algorithms alone but in their ability to solve real business problems with intelligence, scale, and industry insight. As one of the top AI companies in India, WebSenor delivers AI solutions that are not just advanced, but aligned—with your industry, your operations, and your growth plans. Whether you're a healthcare provider seeking predictive insights or a logistics firm aiming to optimize routes, WebSenor brings the right combination of experience, expertise, and ethical commitment.

Looking to explore how industry-specific AI can accelerate your business? Visit WebSenor’s AI Services to book a consultation or demo today.

#TopAICompaniesInIndia#ArtificialIntelligenceIndia#AICompaniesIndia#BestAICompanies#MachineLearningIndia#DeepLearningServices#AIStartupsIndia2025#DataScienceIndia#AITechnologyFirms#AISolutions#ScalableAI#WebSenorAI#IndustrySpecificAI#AIForBusiness#AIServiceProvidersIndia#ArtificialIntelligenceAgency


Your AI Doesn’t Sleep. Neither Should Your Monitoring.

We’re living in a world run by models, from real-time fraud detection to autonomous systems navigating chaos. But what happens after deployment?

What happens when your model starts drifting, glitching, or breaking… quietly?

That’s the question we asked ourselves while building the AI Inference Monitor, a core module of the Aurora Framework by Auto Bot Solutions.

This isn’t just a dashboard. It’s a watchtower.

It sees every input and output. It knows when your model lags. It learns what “normal” looks like and it flags what doesn’t.

Why it matters: You can’t afford to find out two weeks too late that your model’s been hallucinating, misclassifying, or silently underperforming.

That’s why we gave the AI Inference Monitor:

Lightweight Python-based integration

Anomaly scoring and model drift detection

System resource tracking (RAM, CPU, GPU)

Custom alert thresholds

Reproducible logging for full audits
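To make two of those features concrete, latency anomaly scoring with a custom alert threshold and reproducible JSON logging, here is a minimal sketch using only the Python standard library. It is not the Aurora module's code or API: `InferenceMonitor`, `z_threshold`, and `warmup` are hypothetical names, and a z-score against past latencies is just one simple choice of anomaly score.

```python
# Hypothetical sketch, not Aurora's API: latency z-score alerts plus JSON-line audit logging.
import json
import statistics
import time
from typing import Any, Callable, List


class InferenceMonitor:
    """Wraps a model callable, times each call, and logs a latency anomaly score."""

    def __init__(self, model: Callable[[Any], Any], z_threshold: float = 3.0, warmup: int = 20):
        self.model = model
        self.z_threshold = z_threshold  # custom alert threshold
        self.warmup = warmup            # calls to observe before scoring
        self.latencies: List[float] = []
        self.log: List[str] = []        # one JSON line per call, for audits

    def anomaly_score(self, latency: float) -> float:
        """Absolute z-score of a latency against the latencies seen so far."""
        if len(self.latencies) < self.warmup:
            return 0.0
        mean = statistics.fmean(self.latencies)
        stdev = statistics.pstdev(self.latencies)
        return 0.0 if stdev == 0 else abs(latency - mean) / stdev

    def __call__(self, x: Any) -> Any:
        start = time.perf_counter()
        y = self.model(x)
        latency = time.perf_counter() - start
        score = self.anomaly_score(latency)
        self.latencies.append(latency)
        record = {
            "input": repr(x),
            "output": repr(y),
            "latency_s": latency,
            "anomaly_score": score,
            "alert": score > self.z_threshold,
        }
        self.log.append(json.dumps(record, sort_keys=True))
        return y
```

In use you would wrap an existing predict function, for example `monitored = InferenceMonitor(model.predict)`, and ship `monitored.log` to durable storage. Resource tracking (RAM, CPU, GPU) would need an extra library such as `psutil` and is omitted here.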

No more guessing. No more “hope it holds.” Just visibility. Control. Insight.

Built for developers, researchers, and engineers who know the job isn’t over when the model finishes training; it’s just beginning.

Explore it here: Aurora on GitHub, AI Inference Monitor: https://github.com/AutoBotSolutions/Aurora/blob/Aurora/ai_inference_monitor.py

Aurora Wiki: https://autobotsolutions.com/aurora/wiki/doku.php?id=ai_inference_monitor

Get clarity. Get Aurora. Because intelligent systems deserve intelligent oversight.

Subscribe on YouTube: https://www.youtube.com/@autobotsolutions/videos

#OpenSourceAI#PythonAI#AIEngineering#InferenceOptimization#ModelDriftDetection#AIInProduction#DeepLearningTools#AIWorkflow#ModelAudit#AITracking#ScalableAI#HighStakesAI#AICompliance#AIModelMetrics#AIControlCenter#AIStability#AITrust#EdgeAI#AIVisualDashboard#InferenceLatency#AIThroughput#DataDrift#RealtimeMonitoring#PredictiveSystems#AIResilience#NextGenAI#TransparentAI#AIAccountability#AutonomousAI#AIForDevelopers


#GenerativeAI#EnterpriseAI#AIInfrastructure#DataAnalytics#RealTimeSearch#ScalableAI#TechInnovation#Partnership#TimesTech#DigitalTransformation#AshKulkarni#AIFactories#FutureOfData#electronicsnews#technologynews


Ready to transform your business? Osiz Technologies offers cutting-edge AI Integration Services that automate workflows, enhance customer experiences, and drive growth.

#AIIntegrationServices#OsizTechnologies#ArtificialIntelligence#AIForBusiness#DigitalTransformation#IntelligentAutomation#BusinessAutomation#AITechnology#SmartSolutions#AIConsulting#ScalableAI#FutureOfWork#TechInnovation#AIinBusiness#MachineLearningSolutions


Top AI Agent Developers for Custom AI Solutions | Bluebash

Partner with Bluebash, the leading AI Agent Developers, to build intelligent, scalable, and tailored AI agents that drive business innovation and efficiency across industries.

#AIAgentDevelopers#AIExperts#ArtificialIntelligence#CustomAISolutions#AIInnovation#TechSolutions#BusinessAutomation#ScalableAI#Bluebash#USA


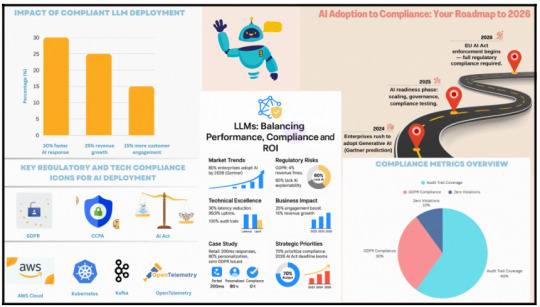

How to Compare LLMs for Compliance and Performance to Boost Revenue in 60 Days

Choosing the right Large Language Model (LLM) is no longer just a tech decision; it’s a business imperative. With the 2026 EU AI Act enforcement on the horizon and GDPR fines reaching up to €20 million or 4% of worldwide annual turnover, whichever is higher, enterprises must act fast. A well-architected, compliant LLM deployment can deliver results in just 60 days, improving customer engagement, reducing latency, and driving revenue.

Modern solution architectures combine hybrid cloud stacks, open-source or proprietary LLMs (like LLaMA or GPT-4), and tools like Kafka and OpenTelemetry for real-time processing and full compliance logging. Performance benchmarks show up to 30% latency reduction and 15% revenue growth from better personalization and uptime. Testing tools like Locust, Chaos Monkey, and OWASP ZAP ensure robustness, resilience, and security.
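As a starting point for the latency side of an LLM comparison, the sketch below times each candidate on the same prompt set and reports median and 95th-percentile latency. It is a hedged sketch: the `benchmark` helper is hypothetical, each model is assumed to be wrapped as a plain Python callable (an API client or a local pipeline), and it measures latency only, not output quality, compliance, or cost.

```python
# Hypothetical sketch: compare candidate models on p50/p95 latency over one prompt set.
import statistics
import time
from typing import Callable, Dict, List


def benchmark(models: Dict[str, Callable[[str], str]], prompts: List[str], runs: int = 3) -> Dict[str, dict]:
    """Time every model on every prompt `runs` times; report p50/p95 latency in seconds."""
    results = {}
    for name, generate in models.items():
        latencies = []
        for _ in range(runs):
            for prompt in prompts:
                start = time.perf_counter()
                generate(prompt)
                latencies.append(time.perf_counter() - start)
        # n=100 gives 99 cut points; index 94 is the 95th percentile. The inclusive
        # method interpolates within the observed range. Older Pythons need >= 2 samples.
        p95 = statistics.quantiles(latencies, n=100, method="inclusive")[94]
        results[name] = {"p50_s": statistics.median(latencies), "p95_s": p95, "samples": len(latencies)}
    return results


if __name__ == "__main__":
    def fast_model(prompt: str) -> str:  # stand-in for a small local model
        return prompt.upper()

    def slow_model(prompt: str) -> str:  # stand-in for a larger hosted model
        time.sleep(0.02)
        return prompt.upper()

    report = benchmark({"fast": fast_model, "slow": slow_model}, ["refund policy?", "track my order"])
    for name, stats in report.items():
        print(f"{name}: p50={stats['p50_s']:.4f}s p95={stats['p95_s']:.4f}s n={stats['samples']}")
```

Tail latency (p95) usually matters more than the median for user-facing services, which is why both are reported.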

Whether you're in retail, finance, or healthcare, deploying the right LLM can transform your operations while protecting your reputation.

👉 Read the full breakdown and see real-world examples, code snippets, and compliance checklists: https://jaiinfoway.com/blog/exploring-and-comparing-different-llms-scalable-ai-gdpr-compliance-revenue-growth.


The New DNA of Finance: From Digital to AI-Native

Highlights from my keynote at the 12th Digital Banking Forum in Athens. 🇬🇷

#fintech#AI#banking#innovation#transformation#aineconomy#ainativebusiness#aistartups#digitalstrategy#futureofwork#businessinnovation#generativeai#techdisruption#intelligentautomation#aiforbusiness#aitransformation#scalableai#customerexperience#enterpriseai#ecosystemthinking#Youtube


Custom AI Prototype Development for Your Business

Struggling with resources? Our custom AI prototype development services at AI n Dot Net are here to help. From MVPs to production systems, we deliver tailored solutions for your unique needs. Let us handle the complexities of AI integration while you focus on scaling your business effortlessly.


VADY Smart Insights for a Changing Business

Your business is evolving; your analytics should evolve too. VADY’s adaptive AI models grow with your organization, delivering tailored insights that keep pace with your goals, your market, and your mission.

#VADY#VADYAIanalytics#VADYSmartDecisionMaking#VADYNewFangledAI#AIpoweredBusinessIntelligence#EnterpriseAISolutions#ContextAwareAIanalytics#VADYDataAnalyticsSolutions#VADYBusinessIntelligence#AIpoweredDataVisualization#DataAnalyticsForBusiness#AIforGrowth#AIcompetitiveAdvantage#AIinsights#AIforBusinessLeaders#EnterpriseLevelDataAutomation#AutomatedDataInsightsSoftware#ConversationalAnalyticsPlatform#ScalableAI#BusinessIntelligenceTools#AgileEnterprise#FutureReadyAnalytics


AI ML Enablement: Accelerating Business Transformation with Machine Learning

In the rapidly evolving digital world, businesses seek strategies to maintain a competitive edge. AI ML enablement is revolutionizing industries by accelerating business transformation. EnFuse Solutions India empowers organizations to integrate AI and ML, unlocking new opportunities and ensuring they stay ahead in the digital era.

#AIMLEnablement#BusinessTransformation#MachineLearningSolutions#DigitalTransformation#DecisionAutomation#ScalableAI#AIforBusiness#TechnologyEnablement#DataAnalyticsSolutions#AIMLConsulting#AIMLServicesIndia#AIMLEnablementServices#EnFuseAIML#EnFuseSolutions#EnFuseSolutionsIndia


Choosing the right Generative AI solution involves evaluating business needs, AI capabilities, scalability, security, and integration. A well-suited AI model enhances automation, content creation, and efficiency. RW Infotech, the Best Generative AI Development Company in India, specializes in custom AI solutions powered by GPT, DALL·E, and Stable Diffusion, ensuring seamless integration and business growth. Partnering with the right AI provider can drive innovation and long-term success.

#GenerativeAI#AIForBusiness#AIDevelopment#BusinessAutomation#ScalableAI#AIIntegration#MachineLearning#ArtificialIntelligence#TechInnovation#RWInfotech


The Ultimate Guide to Data Annotation: How to Scale Your AI Projects Efficiently

In the fast-paced world of artificial intelligence (AI) and machine learning (ML), data is the foundation upon which successful models are built. However, raw data alone is not enough. To train AI models effectively, this data must be accurately labeled—a process known as data annotation. In this guide, we'll explore the essentials of data annotation, its challenges, and how to streamline your data annotation process to boost your AI projects. Plus, we’ll introduce you to a valuable resource: a Free Data Annotation Guide that can help you scale with ease.

What is Data Annotation?

Data annotation is the process of labeling data—such as images, videos, text, or audio—to make it recognizable to AI models. This labeled data acts as a training set, enabling machine learning algorithms to learn patterns and make predictions. Whether it’s identifying objects in an image, transcribing audio, or categorizing text, data annotation is crucial for teaching AI models how to interpret and respond to data accurately.

Why is Data Annotation Important for AI Success?

Improves Model Accuracy: Labeled data ensures that AI models learn correctly, reducing errors in predictions.

Speeds Up Development: High-quality annotations reduce the need for repetitive training cycles.

Enhances Data Quality: Accurate labeling minimizes biases and improves the reliability of AI outputs.

Supports Diverse Use Cases: From computer vision to natural language processing (NLP), data annotation is vital for supervised learning across AI domains.

Challenges in Data Annotation

While data annotation is critical, it is not without challenges:

Time-Consuming: Manual labeling can be labor-intensive, especially with large datasets.

Costly: High-quality annotations often require skilled annotators or advanced tools.

Scalability Issues: As projects grow, managing data annotation efficiently can become difficult.

Maintaining Consistency: Ensuring all data is labeled uniformly is crucial for model performance.

To overcome these challenges, many AI teams turn to automated data annotation tools and platforms. Our Free Data Annotation Guide provides insights into choosing the right tools and techniques to streamline your process.

Types of Data Annotation

Image Annotation: Used in computer vision applications, such as object detection and image segmentation.

Text Annotation: Essential for NLP tasks like sentiment analysis and entity recognition.

Audio Annotation: Needed for voice recognition and transcription services.

Video Annotation: Useful for motion tracking, autonomous vehicles, and video analysis.

Best Practices for Effective Data Annotation

To achieve high-quality annotations, follow these best practices:

1. Define Clear Guidelines

Before starting the annotation process, create clear guidelines for annotators. These guidelines should include:

Annotation rules and requirements

Labeling instructions

Examples of correctly and incorrectly labeled data

2. Automate Where Possible

Leverage automated tools to speed up the annotation process. Tools with features like pre-labeling, AI-assisted labeling, and workflow automation can significantly reduce manual effort.
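Pre-labeling usually pairs a model's suggested label with a confidence score so that only uncertain items reach a human. The sketch below shows that routing step under assumptions: the `route_prelabels` name and the 0.9 default threshold are hypothetical, and in practice the threshold would be tuned by auditing a sample of auto-accepted labels.

```python
# Hypothetical sketch: confidence-based routing of model pre-labels.
from typing import Iterable, List, Tuple

Prediction = Tuple[str, str, float]  # (item_id, suggested_label, model_confidence)


def route_prelabels(predictions: Iterable[Prediction], threshold: float = 0.9) -> Tuple[List[Tuple[str, str]], List[str]]:
    """Split model pre-labels into auto-accepted labels and items needing human review."""
    auto_accepted: List[Tuple[str, str]] = []
    needs_review: List[str] = []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            auto_accepted.append((item_id, label))
        else:
            needs_review.append(item_id)  # send to a human annotator
    return auto_accepted, needs_review


if __name__ == "__main__":
    preds = [("img-001", "car", 0.97), ("img-002", "truck", 0.58), ("img-003", "bus", 0.91)]
    accepted, review = route_prelabels(preds)
    print(accepted)  # [('img-001', 'car'), ('img-003', 'bus')]
    print(review)    # ['img-002']
```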

3. Regularly Review and Validate Annotations

Quality control is crucial. Regularly review annotated data to identify and correct errors. Validation techniques, such as using a secondary reviewer or implementing a consensus approach, can enhance accuracy.
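A consensus approach needs a way to measure how often annotators agree beyond what chance alone would produce. Cohen's kappa is a standard statistic for two annotators labeling the same items; the function below is a minimal implementation of it (for three or more annotators, Fleiss' kappa is a common choice). The function name is illustrative.

```python
# Illustrative implementation of Cohen's kappa for two annotators.
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n  # raw agreement rate
    counts_a, counts_b = Counter(a), Counter(b)
    # Agreement expected by chance if each annotator labeled at random with their own label frequencies
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical label for every item; agreement is trivially perfect
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    a = ["spam", "spam", "ham", "ham"]
    b = ["spam", "ham", "ham", "ham"]
    print(round(cohens_kappa(a, b), 3))  # 0.5
```

Values near 1 indicate strong agreement; values near 0 mean agreement is no better than chance, which is a signal to tighten the guidelines or retrain annotators.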

4. Ensure Annotator Training

If you use a team of annotators, provide them with proper training to maintain labeling consistency. This training should cover your project’s specific needs and the annotation guidelines.

5. Use Scalable Tools and Platforms

To handle large-scale projects, use a data annotation platform that offers scalability, supports multiple data types, and integrates seamlessly with your AI development workflow.

For a more detailed look at these strategies, our Free Data Annotation Guide offers actionable insights and expert advice.

How to Scale Your Data Annotation Efforts

Scaling your data annotation process is essential as your AI projects grow. Here are some tips:

Batch Processing: Divide large datasets into manageable batches.

Outsource Annotations: When needed, collaborate with third-party annotation services to handle high volumes.

Implement Automation: Automated tools can accelerate repetitive tasks.

Monitor Performance: Use analytics and reporting to track progress and maintain quality.
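Batch processing and performance monitoring from the list above can be combined in a few lines. This is a sketch under assumptions: `annotate` stands in for whatever produces a label (a human task queue, a vendor API, or a model) and returns `None` when an item could not be labeled, and both function names are hypothetical.

```python
# Hypothetical sketch: batch-wise annotation with a per-batch progress/quality report.
from typing import Callable, Dict, Iterator, List, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")


def make_batches(items: Sequence[T], batch_size: int) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of at most batch_size items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def annotate_in_batches(
    items: Sequence[T], batch_size: int, annotate: Callable[[T], Optional[str]]
) -> Tuple[List[Optional[str]], List[Dict[str, float]]]:
    """Label items batch by batch and record progress and unlabeled counts per batch."""
    labels: List[Optional[str]] = []
    report: List[Dict[str, float]] = []
    for batch in make_batches(items, batch_size):
        batch_labels = [annotate(item) for item in batch]
        labels.extend(batch_labels)
        report.append({
            "completed": len(labels),
            "progress": len(labels) / len(items),
            "unlabeled_in_batch": sum(label is None for label in batch_labels),
        })
    return labels, report
```

A rising `unlabeled_in_batch` count is an early warning that a batch contains data the guidelines do not cover.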

Benefits of Downloading Our Free Data Annotation Guide

If you're looking to improve your data annotation process, our Free Data Annotation Guide is a must-have resource. It offers:

Proven strategies to boost data quality and annotation speed

Tips on choosing the right annotation tools

Best practices for managing annotation projects at scale

Insights into reducing costs while maintaining quality

Conclusion

Data annotation is a critical step in building effective AI models. While it can be challenging, following best practices and leveraging the right tools can help you scale efficiently. By downloading our Free Data Annotation Guide, you’ll gain access to expert insights that will help you optimize your data annotation process and accelerate your AI model development.

Start your journey toward efficient and scalable data annotation today!

#DataAnnotation#MachineLearning#AIProjects#ArtificialIntelligence#DataLabeling#AIDevelopment#ComputerVision#ScalableAI#Automation#AITools


Scaling AI Workloads with Auto Bot Solutions Distributed Training Module

As artificial intelligence models grow in complexity and size, the demand for scalable and efficient training infrastructures becomes paramount. Auto Bot Solutions addresses this need with its AI Distributed Training Module, a pivotal component of the Generalized Omni-dimensional Development (G.O.D.) Framework. This module empowers developers to train complex AI models efficiently across multiple compute nodes, ensuring high performance and optimal resource utilization.

Key Features

Scalable Model Training: Seamlessly distribute training workloads across multiple nodes for faster and more efficient results.

Resource Optimization: Effectively utilize computational resources by balancing workloads across nodes.

Operational Simplicity: An easy-to-use interface for simulating training scenarios and monitoring progress with intuitive logging.

Adaptability: Supports various data sizes and node configurations, suitable for small to large-scale workflows.

Robust Architecture: Implements a master-worker setup with support for frameworks like PyTorch and TensorFlow.

Dynamic Scaling: Allows on-demand scaling of nodes to match computational needs.

Checkpointing: Enables saving intermediate states for recovery in case of failures.
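The master-worker and checkpointing ideas can be illustrated without a cluster. The sketch below simulates synchronous data-parallel training of a toy linear model: the master shards the data, each worker computes a gradient on its shard, the master averages them, and state is checkpointed after every step so a restarted run resumes where it stopped. It is not the G.O.D. Framework's code; the function names, the JSON checkpoint format, and the sequential loop standing in for parallel workers are all assumptions.

```python
# Hypothetical sketch, not the G.O.D. Framework: simulated data-parallel SGD with checkpoints.
import json
import os
import tempfile
from typing import List, Tuple

Point = Tuple[float, float]


def worker_gradient(w: float, b: float, shard: List[Point]) -> Tuple[float, float, int]:
    """Summed MSE gradient for y = w*x + b over one worker's shard, plus the shard size."""
    gw = gb = 0.0
    for x, y in shard:
        err = (w * x + b) - y
        gw += 2 * err * x
        gb += 2 * err
    return gw, gb, len(shard)


def train(data: List[Point], num_workers: int, steps: int, lr: float, ckpt_path: str) -> Tuple[float, float]:
    """Synchronous data-parallel gradient descent with a checkpoint after each step."""
    shards = [data[i::num_workers] for i in range(num_workers)]  # master: round-robin split
    w, b, start = 0.0, 0.0, 0
    if os.path.exists(ckpt_path):  # resume after a failure
        with open(ckpt_path) as f:
            state = json.load(f)
        w, b, start = state["w"], state["b"], state["step"]
    for step in range(start, steps):
        grads = [worker_gradient(w, b, shard) for shard in shards]  # parallel on real nodes
        n = sum(g[2] for g in grads)
        w -= lr * sum(g[0] for g in grads) / n  # master averages the workers' gradients
        b -= lr * sum(g[1] for g in grads) / n
        with open(ckpt_path, "w") as f:
            json.dump({"w": w, "b": b, "step": step + 1}, f)
    return w, b


if __name__ == "__main__":
    data = [(x / 4, 2 * (x / 4) + 1) for x in range(9)]  # points on y = 2x + 1
    with tempfile.TemporaryDirectory() as tmp:
        w, b = train(data, num_workers=3, steps=500, lr=0.1, ckpt_path=os.path.join(tmp, "ckpt.json"))
        print(f"w={w:.3f}, b={b:.3f}")  # w=2.000, b=1.000
```

Because each worker returns a summed gradient and the master divides by the total sample count, the update equals the full-batch gradient even when shards have unequal sizes.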

Integration with the G.O.D. Framework

The G.O.D. Framework, inspired by the Hindu Trimurti, comprises three core components: Generator, Operator, and Destroyer. The AI Distributed Training Module aligns with the Operator aspect, executing tasks efficiently and autonomously. This integration ensures a balanced approach to building autonomous AI systems, addressing challenges such as biases, ethical considerations, transparency, security, and control.

Explore the Module

Overview & Features

Module Documentation

Technical Wiki & Usage Examples

Source Code on GitHub

By integrating the AI Distributed Training Module into your machine learning workflows, you can achieve scalability, efficiency, and robustness, essential for developing cutting-edge AI solutions.

#AI#MachineLearning#DistributedTraining#ScalableAI#AutoBotSolutions#GODFramework#DeepLearning#AIInfrastructure#PyTorch#TensorFlow#ModelTraining#AIDevelopment#ArtificialIntelligence#EdgeComputing#DataScience#AIEngineering#TechInnovation#Automation


Developing scalable AI models for global enterprises

In today’s fast-paced digital landscape, developing scalable AI models has become essential for global enterprises. With companies expanding across countries and handling diverse data streams, scalability in AI ensures that models are effective at the local level and across varied business environments. Here’s an in-depth look at how scalable AI models empower global enterprises and key considerations in their development.

Why Scalability Matters for AI in Global Enterprises

Scalability in AI is not just a technological goal but a business imperative. For global enterprises, AI models must be adaptable across numerous regions and functions, handling a vast range of languages, customer behaviors, regulatory requirements, and industry-specific needs. When AI models are scalable:

Consistency Across Locations: Scalable AI allows for consistent quality and functionality across regions, providing a unified customer experience and streamlined operations.

Cost-Effectiveness: Reusable models reduce the need for location-specific customizations, saving time and resources.

Adaptability: By building AI models that scale, organizations can quickly adapt to market changes, roll out new features globally, and maintain competitive agility.

Key Elements in Developing Scalable AI Models

1. Unified Data Infrastructure

To develop scalable AI models, enterprises need a unified data architecture that supports data collection, storage, and processing across geographies. A hybrid or multi-cloud infrastructure is often ideal, as it allows organizations to manage data according to local compliance rules while maintaining access to a centralized data repository.

2. Transfer Learning for Localization

Transfer learning can be a valuable technique for global enterprises. By training AI models on a foundational dataset and then refining them with region-specific data, businesses can create models that retain a global standard while being locally relevant. For instance, a customer support chatbot can be initially trained on general support topics and then fine-tuned to handle language and cultural nuances.
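The pretrain-then-refine pattern can be shown with a deliberately tiny model. In the sketch below a one-feature linear model stands in for a real network (an assumption made to keep it dependency-free): it is trained on pooled "global" data, and its learned weights then serve as the starting point for a short fine-tuning run on a few regional examples. All data are synthetic and the function names are hypothetical.

```python
# Hypothetical sketch: transfer learning as warm-start fine-tuning of a toy linear model.
from typing import List, Tuple

Point = Tuple[float, float]


def fit(data: List[Point], w: float = 0.0, b: float = 0.0, steps: int = 500, lr: float = 0.1) -> Tuple[float, float]:
    """Full-batch gradient descent on mean squared error for y = w*x + b, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        gw = sum(2 * ((w * x + b) - y) * x for x, y in data) / n
        gb = sum(2 * ((w * x + b) - y) for x, y in data) / n
        w, b = w - lr * gw, b - lr * gb
    return w, b


def mse(data: List[Point], w: float, b: float) -> float:
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)


# 1) "Global" model trained on a large pooled dataset (synthetic: y = 2x + 1).
global_data = [(k / 10, 2.0 * (k / 10) + 1.0) for k in range(21)]
w_global, b_global = fit(global_data)

# 2) Regional refinement: only three local examples with a slightly shifted offset,
#    starting from the global weights and running far fewer steps.
regional_data = [(0.0, 1.5), (1.0, 3.5), (2.0, 5.5)]
w_local, b_local = fit(regional_data, w=w_global, b=b_global, steps=50)

print(f"global model on regional data: MSE={mse(regional_data, w_global, b_global):.4f}")
print(f"fine-tuned model on regional data: MSE={mse(regional_data, w_local, b_local):.4f}")
```

With a real network, the same idea usually means loading pretrained weights and training briefly, often with a lower learning rate or with early layers frozen, on the regional data.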

3. Regulatory Compliance and Ethical AI

Scalable AI models must account for various regulatory standards, including GDPR in Europe and CCPA in California. Building models that incorporate privacy-by-design principles helps enterprises remain compliant and responsive to legal changes globally.
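One concrete privacy-by-design step is to pseudonymize direct identifiers before records enter a training pipeline. The sketch below replaces assumed PII fields with a keyed HMAC-SHA256 token, so the same person maps to the same token (joins still work) while the raw value never reaches the model. The field names and key handling are assumptions; the key belongs in a secrets manager, and pseudonymized data generally still counts as personal data under GDPR, so this complements other controls rather than replacing them.

```python
# Hypothetical sketch: keyed pseudonymization of assumed PII fields with HMAC-SHA256.
import hashlib
import hmac
from typing import Dict, Iterable

DEFAULT_PII_FIELDS = frozenset({"name", "email", "phone"})  # hypothetical schema


def pseudonymize(record: Dict[str, str], key: bytes, pii_fields: Iterable[str] = DEFAULT_PII_FIELDS) -> Dict[str, str]:
    """Return a copy of record with PII fields replaced by truncated HMAC-SHA256 tokens."""
    pii = set(pii_fields)
    out: Dict[str, str] = {}
    for field, value in record.items():
        if field in pii:
            digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
            out[field] = digest[:16]  # 64-bit token; deterministic for a given key
        else:
            out[field] = value
    return out


if __name__ == "__main__":
    key = b"load-me-from-a-secrets-manager"  # placeholder; never hard-code in production
    row = {"email": "asha@example.com", "country": "IN", "plan": "pro"}
    print(pseudonymize(row, key))
```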

4. Modular and Microservices Architecture

Implementing a modular AI framework enables enterprises to deploy and upgrade AI components independently, which is critical for maintaining scalable AI solutions. A microservices architecture allows individual parts of the system, whether data ingestion, processing, or storage, to be scaled as needed without affecting other components.

5. Performance Monitoring and Feedback Loops

Continuous monitoring ensures that AI models maintain high performance across different environments. Establishing feedback loops allows data scientists to capture discrepancies in model accuracy between regions and make real-time adjustments.
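A feedback loop that catches regional discrepancies can start with something as simple as comparing each region's accuracy on labeled feedback against the global figure. The helper below is a hypothetical sketch; the five-point default gap is an arbitrary assumption to tune per use case.

```python
# Hypothetical sketch: flag regions whose accuracy trails the global accuracy.
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple

Feedback = Tuple[str, Hashable, Hashable]  # (region, prediction, true_label)


def regional_accuracy_gaps(records: Iterable[Feedback], max_gap: float = 0.05) -> Dict[str, float]:
    """Return {region: gap} for regions whose accuracy trails global accuracy by more than max_gap."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for region, prediction, label in records:
        totals[region] += 1
        hits[region] += int(prediction == label)
    if not totals:
        return {}
    global_acc = sum(hits.values()) / sum(totals.values())
    gaps = {region: global_acc - hits[region] / totals[region] for region in totals}
    return {region: gap for region, gap in gaps.items() if gap > max_gap}


if __name__ == "__main__":
    feedback = [("EU", "yes", "yes")] * 4 + [("APAC", "yes", "yes")] * 2 + [("APAC", "no", "yes")] * 2
    print(regional_accuracy_gaps(feedback))  # {'APAC': 0.25}
```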

Challenges and Solutions in Scaling AI

Challenge 1: Data Variability

Data inconsistency across regions can impact model accuracy. Leveraging data normalization and augmentation techniques can help address this.
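As an example of normalization, scaling a feature within each region removes level differences, such as prices quoted in different currencies, before the data are pooled. The sketch below applies a per-region z-score; the row format and function name are hypothetical, and in production the per-region mean and standard deviation should be computed on training data and reused at inference time.

```python
# Hypothetical sketch: per-region z-score normalization.
import statistics
from collections import defaultdict
from typing import Dict, List, Tuple


def normalize_by_region(rows: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Replace each value with its z-score computed within its own region."""
    by_region: Dict[str, List[float]] = defaultdict(list)
    for region, value in rows:
        by_region[region].append(value)
    stats = {r: (statistics.fmean(v), statistics.pstdev(v)) for r, v in by_region.items()}
    normalized = []
    for region, value in rows:
        mean, stdev = stats[region]
        normalized.append((region, 0.0 if stdev == 0 else (value - mean) / stdev))
    return normalized


if __name__ == "__main__":
    # Hypothetical basket values: INR in India, USD in the US.
    rows = [("IN", 1500.0), ("IN", 4500.0), ("US", 20.0), ("US", 60.0)]
    print(normalize_by_region(rows))  # [('IN', -1.0), ('IN', 1.0), ('US', -1.0), ('US', 1.0)]
```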

Challenge 2: Computational Resource Requirements

Scaling AI requires significant computational power, especially when handling vast datasets and performing deep learning tasks. Cloud computing solutions like Cloudtopiaa, AWS, Google Cloud, or Azure enable on-demand scaling of resources to handle these needs.

Challenge 3: Interoperability

AI models should be designed to integrate with existing enterprise systems. Using standardized APIs and data formats can improve interoperability, ensuring models work seamlessly with legacy systems across different regions.

Conclusion: The Path Forward for Scalable AI in Global Enterprises

As global enterprises continue to leverage AI to streamline operations and improve decision-making, the ability to scale AI models effectively is increasingly critical. Scalable AI empowers businesses to deliver consistent experiences, adapt quickly to market changes, and stay compliant with varying regulatory landscapes. Key practices such as building unified data infrastructures, utilizing transfer learning, and adopting modular architectures enable organizations to implement AI that is both adaptable and resilient across regions. By prioritizing these elements, companies can harness AI’s full potential, paving the way for sustained innovation and growth in an interconnected world.


NVIDIA NIM: Improving Scalable AI Inference with Microservices

NVIDIA NIM Deployment

The usage of generative AI has increased dramatically. The 2022 debut of OpenAI’s ChatGPT led to over 100M users in months and a boom in development across practically every sector.

By 2023, developers had begun building proofs of concept (POCs) with open-source community models and APIs from Meta, Mistral, Stability, and others.

In 2024, companies are turning their attention to full-scale production deployments, which involve integrating AI models with existing enterprise infrastructure as well as handling logging, monitoring, and security. This path to production is complex and time-consuming; it requires specialized skills, tools, and processes, especially at scale.

What is NVIDIA NIM?

NVIDIA NIM is a containerized inference microservice that includes industry-standard APIs, domain-specific code, optimized inference engines, and an enterprise runtime.

As part of NVIDIA AI Enterprise, NIM offers a streamlined path to building AI-powered enterprise applications and deploying AI models in production.

NIM is a set of cloud-native microservices designed to shorten time-to-market and simplify the deployment of generative AI models on GPU-accelerated workstations, in cloud environments, and in data centers. By abstracting away the complexity of AI model development and packaging models for production behind industry-standard APIs, it expands the pool of developers who can build AI applications.

NVIDIA NIM for AI inference optimization

With NVIDIA NIM, 10-100X more business application developers will be able to contribute to their organizations’ AI transformations, because NIM bridges the gap between the complex world of AI development and the operational needs of enterprise environments.

Image credit: NVIDIA

Figure: Industry-standard APIs, domain-specific code, efficient inference engines, and enterprise runtime are all included in NVIDIA NIM, a containerized inference microservice.

The following are a few of the main advantages of NIM.

Deploy anywhere

NIM’s controllable, portable architecture enables model deployment across a range of infrastructure, including local workstations, cloud, and on-premises data centers. Supported platforms include NVIDIA RTX workstations and PCs, NVIDIA-Certified Systems, NVIDIA DGX, and NVIDIA DGX Cloud.

Prebuilt containers and Helm charts packaged with optimized models are rigorously validated and benchmarked across NVIDIA hardware platforms, cloud service providers, and Kubernetes distributions. This provides support across all NVIDIA-powered environments and lets enterprises deploy their generative AI applications anywhere while retaining full control over their applications and the data they process.

Develop with industry-standard APIs

Accessing AI models through APIs that follow industry standards for each domain makes AI applications easier to build. Because these APIs are compatible with standard deployment processes in the ecosystem, developers can update their AI applications with as few as three lines of code. This seamless integration and ease of use let enterprises implement and scale AI solutions quickly.
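To illustrate the "few lines of code" point: NIM microservices for LLMs expose an OpenAI-compatible HTTP API, so a client is mostly a standard chat-completions request. The sketch below uses only the Python standard library. The endpoint URL assumes a NIM container running locally on port 8000, and the model name is an example; replace both with the values your deployment reports. `build_request` and `chat` are hypothetical helper names.

```python
# Hypothetical sketch: calling a self-hosted NIM LLM through its OpenAI-compatible endpoint.
import json
import urllib.request

NIM_URL = "http://localhost:8000/v1/chat/completions"  # assumption: local NIM container
MODEL = "meta/llama3-8b-instruct"                      # assumption: the model your NIM serves


def build_request(prompt: str, url: str = NIM_URL, model: str = MODEL, max_tokens: int = 128) -> urllib.request.Request:
    """Build a POST request in the OpenAI chat-completions format."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(build_request(prompt), timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(chat("Summarize our refund policy in one sentence."))
```

Because the request format is OpenAI-compatible, existing OpenAI client code can usually be pointed at the NIM base URL instead of rewriting application logic.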

Leverage domain-specific models

NVIDIA NIM also addresses the need for domain-specific solutions and optimal performance through several key features. It bundles specialized code and NVIDIA CUDA libraries for a range of domains, including language, speech, video processing, and healthcare. This approach helps ensure that applications are accurate and relevant to their specific use case.

Run on optimized inference engines

NIM delivers optimal latency and performance on accelerated infrastructure by using inference engines tuned for each model and hardware configuration. This improves the end-user experience while lowering the cost of running inference workloads as they scale. Beyond offering optimized community models, NIM lets developers achieve even greater accuracy and efficiency by aligning and fine-tuning models with proprietary data sources that stay within their data center.
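The idea of "engines tuned for each model and hardware configuration" can be pictured as a lookup: detect the GPU, pick a pre-built profile for that model and GPU if one exists, and otherwise fall back to a portable engine. The sketch below illustrates only that selection pattern. The model names, profile entries, and fallback rule are hypothetical and do not describe NIM's actual profile catalog or selection logic.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class EngineProfile:
    engine: str           # hypothetical label: "tuned" engine vs "generic" runtime
    precision: str        # numeric format the engine was built for
    tensor_parallel: int  # number of GPUs the model is sharded across


# Hypothetical catalog of pre-tuned profiles, keyed by (model, GPU type).
# Real containers ship their own profile lists; these entries are made up.
TUNED_PROFILES: Dict[Tuple[str, str], EngineProfile] = {
    ("example-llm-8b", "H100"): EngineProfile("tuned", "fp8", 1),
    ("example-llm-8b", "A100"): EngineProfile("tuned", "bf16", 1),
    ("example-llm-70b", "H100"): EngineProfile("tuned", "fp8", 4),
}


def select_profile(model: str, gpu: str, gpu_count: int) -> EngineProfile:
    """Return the tuned profile for this model/GPU pair if the machine can run it."""
    profile = TUNED_PROFILES.get((model, gpu))
    if profile is not None and profile.tensor_parallel <= gpu_count:
        return profile
    # No tuned match, or too few GPUs for its layout: use a generic engine
    # sharded across whatever GPUs are available.
    return EngineProfile("generic", "fp16", max(1, gpu_count))
```

The design point is that tuning work happens ahead of time, per hardware target, so the runtime decision is a cheap lookup with a slower but portable fallback.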

Enterprise-grade AI support

NIM, part of NVIDIA AI Enterprise, is built on an enterprise-grade base container that provides a solid foundation for enterprise AI applications through feature branches, rigorous validation, enterprise support backed by service-level agreements, and regular security updates for CVEs. This extensive support network and set of optimization tools make NIM a key component in deploying scalable, efficient, and customized AI systems in production.

Ready-to-use accelerated AI models

NIM supports AI use cases across many domains with a large number of AI models, including community models, NVIDIA AI Foundation models, and custom models provided by NVIDIA partners. These include large language models (LLMs), vision language models (VLMs), and models for speech, image, video, 3D, drug discovery, medical imaging, and more.

Developers can try the latest generative AI models through NVIDIA-hosted cloud APIs available in the NVIDIA API catalog. Alternatively, they can download NIM and self-host the models, deploying them quickly with Kubernetes on-premises or on major cloud providers, which reduces development time, complexity, and cost.
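Since the hosted API catalog and a self-hosted container speak the same API, switching between them can come down to configuration. The sketch below picks a base URL and headers for either mode. The hosted URL shown is the one NVIDIA's API catalog examples have used, and the NVIDIA_API_KEY variable name and local port 8000 are assumptions; verify all three before relying on them. `resolve_endpoint` is a hypothetical helper.

```python
import os
from dataclasses import dataclass
from typing import Dict, Optional

# Assumptions: verify against current NVIDIA documentation.
HOSTED_BASE_URL = "https://integrate.api.nvidia.com/v1"  # API catalog endpoint
LOCAL_BASE_URL = "http://localhost:8000/v1"              # self-hosted container


@dataclass
class Endpoint:
    base_url: str
    headers: Dict[str, str]


def resolve_endpoint(
    self_hosted: bool,
    api_key: Optional[str] = None,
    local_url: str = LOCAL_BASE_URL,
) -> Endpoint:
    """Choose between the hosted API catalog and a self-hosted NIM."""
    headers = {"Content-Type": "application/json"}
    if self_hosted:
        # Traffic stays inside your own infrastructure; no hosted key needed here.
        return Endpoint(local_url, headers)
    key = api_key or os.environ.get("NVIDIA_API_KEY")
    if not key:
        raise ValueError("hosted endpoint needs an API key (pass api_key or set NVIDIA_API_KEY)")
    return Endpoint(HOSTED_BASE_URL, {**headers, "Authorization": f"Bearer {key}"})
```

A team might prototype with `resolve_endpoint(self_hosted=False)` and later flip the flag once the container is running in its own cluster, leaving the rest of the application code unchanged.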

By providing industry-standard APIs and bundling algorithmic, system, and runtime optimizations, NIM microservices streamline AI model deployment. This lets developers integrate NIM into their existing applications and infrastructure without extensive customization or specialized expertise.

Businesses can use NIM to tune their AI infrastructure for performance and cost-effectiveness without worrying about containerization or the intricacies of AI model development. Running on accelerated AI infrastructure, NIM improves performance and scalability while lowering hardware and operating costs.

For companies that want to customize models for enterprise applications, NVIDIA offers microservices for model customization across domains. NVIDIA NeMo supports fine-tuning LLMs, speech AI, and multimodal models on proprietary data. NVIDIA BioNeMo accelerates drug discovery with a growing library of models for generative biology, chemistry, and molecular prediction. NVIDIA Picasso accelerates creative workflows with Edify models, which are trained on licensed libraries from visual content providers so that enterprises can deploy customized generative AI models for visual content creation.

Read more on govindhtech.com

#nvidianim#scalableai#microservices#openai#chatgpt#nvidiaai#generativeai#aimodel#technology#technews#news#govindhtech
