# Setting locale with the API


how to save 101

so i recently had a poll asking what you'd do if you had $10,000, and over half of the respondents said they'd save it for something big

if you're saving for something big — like college, a car, starting a side hustle, or even financial freedom — here's some unexpected advice that actually does something. not cute. not tiny. real.

open a HYSA (high-yield savings account) at a credit union or online bank. no, not your regular bank: big banks usually pay literal cents in interest. but online banks like Ally or SoFi (or your local credit union) offer around 4–5% APY as of now. if you’re saving over time, that compound interest adds up and can outpace inflation. it’s not glamorous, but it works. set it. forget it. grow.
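To see what "compound interest adds up" means in actual numbers, here's a minimal Python sketch. It assumes a constant APY (real HYSA rates move around) and no extra deposits. The 4.5% APY and 3% inflation figures are made-up examples, not quotes from any bank.

```python
def grow(balance: float, apy: float, years: int) -> float:
    """Balance after `years` at a constant APY.

    APY already bakes in the bank's compounding schedule,
    so one multiplication per year is enough.
    """
    return balance * (1 + apy) ** years


def real_rate(apy: float, inflation: float) -> float:
    """Inflation-adjusted yearly return; above zero means your savings outpace inflation."""
    return (1 + apy) / (1 + inflation) - 1


if __name__ == "__main__":
    # hypothetical example: $10,000 left alone for 5 years at 4.5% APY
    print(f"after 5 years: ${grow(10_000, 0.045, 5):,.2f}")
    # hypothetical example: 4.5% APY vs. 3% inflation
    print(f"real return: {real_rate(0.045, 0.03):.2%} per year")
```

With those example numbers, $10,000 grows to roughly $12,460. The real (after-inflation) return is a bit under 1.5% a year: small, but positive.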

invest in an I-Bond. you heard right: a government savings bond. it’s basically a super-safe investment you can buy with as little as $25. I-Bonds adjust with inflation and earn interest over time (you have to hold them for at least a year, and cashing out before five years costs you the last three months of interest). teens can buy them through a parent or guardian's TreasuryDirect account. way better than letting your money rot in checking.
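The way I-Bonds "adjust with inflation" comes down to one formula published by TreasuryDirect. The composite rate combines a fixed rate (locked in when you buy) with a semiannual inflation rate (reset every six months). Here's a minimal Python sketch using hypothetical example rates, not any actual announced ones. It rounds to the nearest hundredth of a percent, the way published rates are shown.

```python
def ibond_composite_rate(fixed: float, semiannual_inflation: float) -> float:
    """Annual composite I-Bond rate as a decimal (0.0311 == 3.11%).

    composite = fixed + 2 * inflation + fixed * inflation
    The composite rate can't go below zero.
    """
    rate = fixed + 2 * semiannual_inflation + fixed * semiannual_inflation
    return max(round(rate, 4), 0.0)


if __name__ == "__main__":
    # hypothetical rates: 1.20% fixed, 0.95% semiannual inflation
    print(f"{ibond_composite_rate(0.012, 0.0095):.2%}")
```

With those example inputs the formula gives 3.11%. If inflation turned negative enough to push the result below zero, you'd earn 0% for that period instead of losing money.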

don’t save — prepay. saving up for something long-term? like a course, a trip, or even SAT tutoring? instead of stashing cash, prepay now if there’s a discount or price lock. a lot of services let you pay in advance, especially if they’re small businesses. this saves you from price hikes — and yourself.

build credit (yes, really). if you’re 18 or close to it, set aside a small slice of that $10,000 as the deposit for a secured credit card. this builds your credit history early, which is a big deal for apartments, student loans, and future jobs. make one small charge monthly (like Spotify), pay it off, never miss. boring? yes. life-changing? also yes.

micro-fund a revenue-generating skill. take part of that $10,000 and turn it into more money. examples:

buy a domain + hosting for a blog you monetize

invest in a course that teaches design, data entry, or UX

get supplies for a hyper-niche Etsy shop (e.g. enamel pin display boards or zines)

buy an external mic and start voiceover freelancing

$10,000 won’t change your life. but how you use it might.

#explorepage#fyp#goals#tumblr tips#saving money#helpful#poll results#useful#useful information#resources#viral#relatable content#budgeting#money#spending money#teen blog#financial advice#advice blog#real talk#self improvement#investinyourself#moneyforstudents#saveitforsomethingbig#mintconditioned

64 notes


using LLMs to control a game character's dialogue seems an obvious use for the technology. and indeed people have tried: for example, NVIDIA made a demo where the player interacts with AI-voiced NPCs:


this looks bad, right? like idk about you but I am not raring to play a game with LLM bots instead of human-scripted characters. they don't seem to have anything interesting to say that a normal NPC wouldn't, and the acting is super wooden.

so, the attempts to do this so far that I've seen have some pretty obvious faults:

1. relying on external API calls to process the data (expensive!)

2. presumably relying on generic 'you are xyz' prompt engineering to try to get a model to respond 'in character', resulting in bland, flavourless output

3. limited connection between game state and model state (you would need to translate the relevant game state into a text prompt)

4. when responding to freeform input, models may not be very good at staying 'in character', with the default 'chatbot' persona emerging unexpectedly. or they might just make uncreative choices in general.

5. AI voice generation, while it's moved very fast in the last couple of years, is still very poor at 'acting', producing very flat, emotionless performances, or uncanny mismatches of tone, inflection, etc.

6. although the model may generate contextually appropriate dialogue, it is difficult to link that back to the behaviour of characters in game

so how could we do better?

the first one could be solved by running LLMs locally on the user's hardware. that has some obvious drawbacks: running on the user's GPU means the LLM is competing with the game's graphics, so both must be more limited. ideally you would spread the LLM processing over multiple frames, but you are still limited by available VRAM, which is contested by the game's texture data and so on, and LLMs are very thirsty for VRAM. still, imo this is way more promising than having to talk to the internet and pay for compute time to get your NPC's dialogue lmao
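Spreading LLM processing over multiple frames could look something like this minimal Python sketch. The token stream is a hypothetical stand-in: a real local model would yield tokens from its own generation loop. The 2 ms budget is an arbitrary example. The budget only controls how many tokens run per frame, and a single token step on real hardware can take longer than the whole budget. In practice this would likely need tuning per model, or a worker thread.

```python
import time
from typing import Iterator


def fake_token_stream(text: str) -> Iterator[str]:
    """Hypothetical stand-in for a local LLM: yields one 'token' (word) at a time."""
    for word in text.split():
        yield word + " "


class DialogueJob:
    """Advances text generation a little each frame, within a per-frame time budget."""

    def __init__(self, tokens: Iterator[str], budget_ms: float = 2.0) -> None:
        self.tokens = tokens
        self.budget_s = budget_ms / 1000.0
        self.text = ""
        self.done = False

    def step(self) -> None:
        """Call once per frame; pulls tokens until the budget is spent or output ends."""
        if self.done:
            return
        start = time.perf_counter()
        # always takes at least one token per frame, so generation can't stall
        while True:
            try:
                self.text += next(self.tokens)
            except StopIteration:
                self.done = True
                return
            if time.perf_counter() - start >= self.budget_s:
                return


if __name__ == "__main__":
    job = DialogueJob(fake_token_stream("well met, traveller. the road north is closed."))
    frames = 0
    while not job.done:
        job.step()  # the game's update/render work would run between calls
        frames += 1
    print(job.text.strip(), f"({frames} frame(s))")
```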

second one might be improved by using a tool like control vectors to more granularly and consistently shape the tone of the output. I heard about this technique today (thanks @cherrvak)

third one is an interesting challenge - but perhaps a control-vector approach could also be relevant here? if you could figure out how a description of some relevant piece of game state affects the processing of the model, you could then apply that as a control vector when generating output. so the bridge between the game state and the LLM would be a set of weights for control vectors that are applied during generation.
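Here's a minimal sketch of that bridge in plain Python, using toy 2-dimensional "activations" instead of a real model's hidden states. It builds each control vector as a simple mean difference between activations from contrasting prompts. That is one common way to build them; real tools may use other methods, such as PCA. It then adds a weighted sum of those vectors to a hidden state. The `weights_from_game_state` mapping, the trait names, and the scale factors are all hypothetical.

```python
from typing import Dict, List

Vector = List[float]


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def control_vector(positive: List[Vector], negative: List[Vector]) -> Vector:
    """Direction = mean activation on e.g. 'angry' prompts minus mean on 'calm' prompts."""
    return [p - n for p, n in zip(mean(positive), mean(negative))]


def weights_from_game_state(state: Dict[str, float]) -> Dict[str, float]:
    """Hypothetical bridge: normalised game variables become steering strengths."""
    return {
        "angry": 2.0 * state.get("hostility", 0.0),
        "afraid": 1.5 * state.get("danger", 0.0),
    }


def steer(hidden: Vector, controls: Dict[str, Vector], weights: Dict[str, float]) -> Vector:
    """Add the weighted control vectors to one layer's hidden state during generation."""
    out = list(hidden)
    for name, weight in weights.items():
        for i, component in enumerate(controls[name]):
            out[i] += weight * component
    return out


if __name__ == "__main__":
    controls = {
        "angry": control_vector(positive=[[1.0, 0.0], [3.0, 0.0]], negative=[[0.0, 0.0], [0.0, 2.0]]),
        "afraid": [0.0, 1.0],
    }
    weights = weights_from_game_state({"hostility": 0.25, "danger": 0.0})
    print(steer([0.5, 0.5], controls, weights))
```

In a real model, the addition would happen inside a hook on a chosen layer during the forward pass. The per-trait scale factors would need tuning by experiment, since too strong a push tends to degrade the output.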

the fourth one (staying in character) is probably something where finetuning the model, and using control vectors to maintain a consistent 'pressure' to act a certain way even as the context window gets longer, could help a lot.

probably the vocal performance problem will improve in the next generation of voice generators, I'm certainly not solving it. a purely text-based game would avoid the problem entirely of course.

the last one (linking dialogue back to behaviour) is tricky. perhaps the model could be taught to generate a description of a plan or intention, but linking that back to commands to perform by traditional agentic game 'AI' is not trivial. ideally, if there are various high-level commands that a game character might want to perform (like 'navigate to a specific location' or 'target an enemy') that are usually selected using some other kind of algorithm like weighted utilities, you could train the model to generate tokens that correspond to those actions and then feed them back into the 'bot' side? I'm sure people have tried this kind of thing in robotics. you could just have the LLM stuff go 'one way', and rely on traditional game AI for everything besides dialogue, but it would be interesting to complete that feedback loop.
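One way to picture that feedback loop: the model is finetuned to emit action tags alongside its dialogue, and the game splits them out and hands them to the traditional game AI. Here's a minimal Python sketch. The `<act:verb target="...">` tag format and the set of allowed verbs are hypothetical. Anything the model emits outside that whitelist is dropped, so it can't trigger arbitrary behaviour.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple

# hypothetical tag format the model would be trained to emit
ACTION_TAG = re.compile(r'<act:(\w+)(?:\s+target="([^"]*)")?>')
ALLOWED_VERBS = {"navigate", "target", "flee", "idle"}


@dataclass
class Command:
    verb: str
    target: Optional[str] = None


def split_output(text: str) -> Tuple[str, List[Command]]:
    """Separate spoken dialogue from action tags; unknown verbs are ignored."""
    commands = [
        Command(verb, target or None)
        for verb, target in ACTION_TAG.findall(text)
        if verb in ALLOWED_VERBS
    ]
    dialogue = re.sub(r"\s+", " ", ACTION_TAG.sub("", text)).strip()
    return dialogue, commands


if __name__ == "__main__":
    line, cmds = split_output('Follow me. <act:navigate target="well"> Quickly!')
    print(line)   # dialogue for the text box / voice
    print(cmds)   # commands for the game AI's planner
```

The game AI still decides how to carry out each command (pathfinding and so on), so the LLM only proposes intentions, which keeps the existing utility-based systems in charge.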

I doubt I'll be using this anytime soon (models are just too demanding to run on anything but a high-end PC, which is too niche, and I'll need to spend time playing with these models to determine if these ideas are even feasible), but maybe something to come back to in the future. first step is to figure out how to drive the control-vector thing locally.

48 notes


I hate making posts like this, but I've been told someone is using one of my artworks without credit in their Telegram sticker pack. I know the majority of the pictures in it were found ON Tumblr, so maybe there's a chance this person will see this (or maybe some local Telegram users have seen this particular sticker pack "in the wild" or could recognize it somehow).

There are lots of artworks from so many fandom artists in there, and I honestly doubt anyone was asked for permission at all.

I tried some of the built-in APIs to reach out and ask them to take my artwork down from that sticker set (obviously, since I never consented to it being put there in the first place), and as a result I got their profile ID. I don't currently know how to do anything with it directly; all I know is that it should be tied to a username somehow. This post is simply a warning for my fellow ghartists... and for that person, if they end up seeing it.

It's not nice to scrape others' work like that, buddy. It's one of the reasons people put insanely big watermarks on their art.

#kers ramblings#the band ghost#swiss ghoul#this post obviously isn't about what jt did(and i already stated that i do not support the person) but about the artworks that has been used#I'm very sensitive when it comes to my art. doesnt matter if it's fully rendered pieces or sketches#and like i said this is simply a warning to other artists about this situation.#im honestly disappointed i had to wake up to that and I'm very stubborn when it comes to such kind of situations#i do know people do that all the time and despite any rules but it felt kinda personal to me#considering upgrading my watermarks to a whole different level rn

31 notes


How to play RPGMaker Games in foreign languages with Machine Translation

This is in part a rewrite of a friend's tutorial, and in part a more streamlined version of it based on what steps I found important, to make it a bit easier to understand!

Please note that, as with any Machine Translation, there will be errors and issues. You will not get the same experience as someone fluent in the language, and you will not get the same experience as playing a translation done by a real person.

If anyone has questions, please feel free to ask!

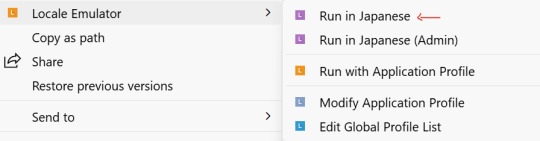

1. Download and extract the Locale Emulator

Linked here!

2. Download and set up your Textractor!

Here's a Textractor tutorial, including how to use it with DeepL. The browser extension tools are broken, so you will need to use the built-in Textractor API (this has a usage limit, so be careful), or copy-paste the extracted text directly into your translation software of choice. Note that Textractor DOES NOT WORK on every game! It works well with RPGMaker, but I've had issues with visual novels. The password for extraction is visual_novel_lover

3. Make sure your region settings are right before downloading your game.

In your region/language (administrative) settings, change 'Language for non-Unicode programs' to the language of your choice. This ensures that file names are extracted correctly in that language. MAKE SURE you download AND extract the game with the right settings! DO NOT CHECK THE 'Beta: Use Unicode UTF-8 for worldwide language support' BOX. This ONLY needs to be done during the initial download and extraction; once everything is downloaded, extracted, and installed, you can set your region back to your previous settings. Test that the text still displays properly after you return to your original language settings, though, since there have been issues before. Helpful tutorials are here and here!
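Why the locale matters: Japanese games are often packed with file names in Shift-JIS (Windows code page 932). An archive tool running under a different code page reads those same bytes as the wrong characters. The minimal Python sketch below is not part of the method above; it just illustrates the mismatch, plus one alternative for ZIP archives specifically. It assumes Python 3.11+ for `zipfile`'s `metadata_encoding` argument. `repair_name` and `extract_japanese_zip` are illustrative helpers, not part of any tool in this guide. It won't help with other archive formats or with text inside the game itself, so the locale steps still apply.

```python
import zipfile
from pathlib import Path


def repair_name(garbled: str, codepage: str = "cp932") -> str:
    """Undo the classic mojibake: Python's zipfile decodes legacy names as cp437 by default."""
    return garbled.encode("cp437").decode(codepage)


def extract_japanese_zip(zip_path: str, dest: str, codepage: str = "cp932") -> list:
    """Extract a ZIP whose member names were stored in Shift-JIS (needs Python 3.11+)."""
    # metadata_encoding only applies to entries that don't have the UTF-8 flag set
    with zipfile.ZipFile(zip_path, metadata_encoding=codepage) as archive:
        archive.extractall(Path(dest))
        return archive.namelist()


if __name__ == "__main__":
    original = "セーブ.rvdata2"
    raw = original.encode("cp932")   # bytes as stored by a Japanese-locale tool
    garbled = raw.decode("cp437")    # roughly what a non-Japanese reader shows
    print(garbled, "->", repair_name(garbled))
```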

4. Download your desired game and, if necessary, relevant RTP

The RTP files MUST be downloaded and extracted in the game's language. For Japanese games, they are here. Make sure you are still in the right locale for non-Unicode programs!

5. Run through the Locale Emulator

YES, this is a necessary step, EVEN IF YOUR ADMIN-REGION/LANGUAGE SETTINGS ARE CORRECT. Some games will not display the correct text unless you also RUN them in the right locale. You should be able to right-click the game and see the Locale Emulator as an option like this. Run in Japanese (or whatever language is needed). You don't need to run as Admin if you don't want to; it should work either way.

6. Attach the Textractor and follow previously linked tutorials on how to set up the tools and the MTL.

Other notes:

There are also built-in Machine Translation extensions, but those have a usage limit due to restrictions on the API. The Chrome/Firefox add-ons in the walkthrough linked in step 2 get around this by using the website itself, which doesn't have the same restrictions as the API does (though, as noted in step 2, those add-ons may currently be broken).
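Since the API-based options have usage limits, one way to stretch them is to never pay twice for the same line and to stop before hitting the cap. Here's a minimal Python sketch. `send` stands in for whatever actually calls the translation service (hypothetical here). The character budget is an example number, not any service's real limit.

```python
from typing import Callable, Dict


class BudgetedTranslator:
    """Caches translations and refuses new requests once a character budget is spent."""

    def __init__(self, send: Callable[[str], str], char_budget: int) -> None:
        self.send = send                  # hypothetical call to the MT service
        self.remaining = char_budget
        self.cache: Dict[str, str] = {}

    def translate(self, line: str) -> str:
        line = line.strip()
        if not line:
            return ""
        if line in self.cache:            # repeated dialogue (menus, catchphrases) is free
            return self.cache[line]
        if len(line) > self.remaining:
            raise RuntimeError("budget used up: copy-paste into the website instead")
        self.remaining -= len(line)
        self.cache[line] = self.send(line)
        return self.cache[line]


if __name__ == "__main__":
    fake_service = lambda text: f"[EN] {text}"  # placeholder instead of a real API call
    mt = BudgetedTranslator(fake_service, char_budget=20)
    print(mt.translate("はい"), mt.translate("はい"), mt.remaining)
```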

This will work best for RPGMaker games. For VNs, the textractor can have difficulties hooking in to extract the text, and may take some finagling.

#rpgmaker#tutorial#rpgmaker games#aria rambles#been meaning to make a proper version of this for a while#i have another version of this but it's specifically about coe#it was time to make a more generalized version

112 notes


Hellenic Paganism FAQs

I see a lot of the same questions asked on r/Hellenism and other pagan spaces online, so here are my answers to them:

Is [god] mad at me for [thing]?

No.

Versions of this question are asked almost every day, about all kinds of situations, and the answer is always no. Your gods are not mad at you. Contrary to popular belief, gods do not anger easily. I think there are two main reasons why people assume this: The first is that the Greek gods are often perceived as being quick-tempered, petty, and vindictive in mythology, but this isn't an accurate or fair perception (see below). The other is that a majority of new converts are ex-Christians, and in many sects of Christianity, God is constantly breathing down your neck to catch you in a sin. If you grew up in that kind of culture, it can be very difficult to break out of that mindset.

In truth, Greek gods are kind and very forgiving, and it's nearly impossible to offend them by accident. You'd have to actively try to piss them off, and even then, you're more likely to get an "I'm very disappointed in you" than a show of divine wrath. Even in mythology, the things that anger them tend to be big things like kinslaying (murdering your family), desecration (intentional — as in not accidental — destruction of temples and holy objects), crimes against their worshippers, and disruption of the natural order. You can't do any of that by accident. Gods also aren't constantly looking over your shoulder for reasons to punish you. Believe me, they've got better things to do, and they don't have any reason to alienate their own worshippers over petty shit.

Can I worship multiple gods?

Yes! This is a polytheistic religion. Worshipping multiple gods is kind of the point. Gods do not get jealous of each other or possessive of their worshippers. Even if you have a patron deity, it is not going to prevent you from branching out to other gods. There's technically no limit to the number of gods you can worship; you're only limited by the amount of time and resources you have to devote to each one. Historically, people often had a handful of gods associated with their city, their profession, their local natural features, etc. that they worshipped regularly. They would cycle through the other ones as-needed or on their respective sacred days.

You also don't have to worry about putting different gods on separate altars, asking permission before working with a new god, or whether the gods you're working with will like each other or not. They expect to be worshipped alongside each other.

Can I mix Hellenism with Christianity or another religion?

Yes! Mixing religions is called "syncretism," and it's normal. It's how religion is supposed to work. All pagan religions are intercompatible to some extent; Ancient Greeks interpreted everyone else's gods as versions of their own with different names. (This is called interpretatio graeca.) There are lots of weird Greco-Egyptian hybrid gods, like Hermes Trismegistus (Hermes + Thoth), Hermanubis, Harpocrates, Isis-Aphrodite, Osiris-Dionysus, Zeus Ammon, and Serapis (Zeus + Hades + Dionysus + Apis + Osiris). There are lots of other examples of syncretism within and around Greece, and the Romans made syncretism their whole thing. Again, gods will not get mad if you choose to syncretize. But it is a good idea to be mindful of cultural appropriation when approaching syncretism.

Why do gods do such bad things in the myths? / How should I interpret the myths?

The majority of modern Hellenists don't take myths literally. We definitely don't treat them like the Bible. It's important to remember that Greek myths are at least two thousand years old! Nothing ages well after that long. Ancient Greeks had a very different value set from people today. So, for example, Zeus has disturbing SA myths because he's portrayed as an Ancient Greek king, and that's how Ancient Greek kings were expected to behave. Anyone who worships Zeus can tell you that Zeus, the entity, is not like that at all! He's very gentle and fatherly. What's actually important in those ancient myths is that Zeus is supposed to be the ultimate embodiment of power, and in those days, that was one way of showing how powerful and virile Zeus is. It's important to read between the lines and see what myths are actually trying to say, instead of taking them at face value. It takes time to learn how to interpret myths, but they can teach us a lot about the gods in this symbolic, indirect way if we know how to look at them.

Exactly how Ancient Greeks interpreted myths is a whole other discussion that I don't have space for here. The short version is that they didn't treat them like we treat the Bible or modern media. Myths are not literal or allegorical, it's a secret third thing. Myth straight-up didn't play the same role in society that our stories do today. So, until you learn more about that, I recommend taking the myths with a grain of salt. Enjoy them as stories, learn whatever you can from them, but please don't base your opinions about who the gods are as entities purely on myths. See below for other kinds of sources!

Do I need to pray every day?

Nope. Don't drive yourself crazy thinking you have to maintain a regular practice forever. That's asking a lot of yourself. Life gets in the way, and you don’t always have the time, energy, or emotional bandwidth to practice. (I tried to do regular rituals in August. I lasted about five days out of what was supposed to be a week-long series of rituals.) It’s important to remember that, in Ancient Greece, religious activity was just built into people’s routines. That’s no longer the case — we have to go out of our way to do even the most basic devotional activities, and that makes practicing much harder than it’s supposed to be. The gods understand that we’re human, and they understand the limitations of the way our lives are structured. Regular practice is, frankly, unrealistic.

Do I need to wait for a god to reach out to me before I worship/work with them?

Nope. This is a common misconception based on the way modern paganism is often presented. It's perfectly okay to seek the gods out based on what you need from them, and you don't need permission to begin working with a new one. (Gods want your worship the way corporations want your money. They're not going to turn you away.) It's possible that a god might "reach out" to you, but you can't control whether that happens or not, and you don't need to wait around for that to happen.

Do I need a patron deity? / How do I tell who my patron deity is?

You do not need a patron deity. Historically, your patron deity was the god that rules your profession. (So, the patron of doctors was Apollo, of merchants was Hermes, of agricultural workers was Demeter, of artisans was Athena, of politicians was Zeus, etc.) Nowadays, a patron deity is a god that takes a personal interest in you and your spiritual development, and whom you have a special connection to. I'm lucky enough to have one, but not everyone does, and you don't need a patron in order to practice or to have close relationships with gods. If you do have one, you don't need to only worship that one god.

If you have a patron deity, you will know. Chances are, it will not be subtle about getting your attention. I knew my patron deity because I became inexplicably obsessed with him more than once, and when I started doing research into him, everything about him resonated. Please do not ask if random symbols you're seeing are signs, or which god a tarot spread is pointing to. Part of what makes a sign a sign is that you think of the god when you see it! If you want gods to reach out through signs, I recommend familiarizing yourself with their iconography (symbols and attributes). Tarot doesn't have a one-to-one relationship with any group of gods, so it's unlikely that tarot will point you towards any specific god, unless you're already really familiar with the gods and your cards.

How do I talk to gods?

That's what divination is for. There are lots of divination methods: tarot and oracle cards, dice, pendulums, scrying, etc. Personally, I'm partial to automatic writing, which is writing a question, and then writing whatever comes to mind as the answer. I get answers in full sentences. (No, I don't know for sure that I'm talking to gods and not just to myself, but I recognize the gods' "voices," and I experience very intense waves of emotion and insight when I speak to them.) If you're a more visual person, scrying is also a great tool to receive messages from gods in the form of images. Simply meditating is also a good way to interact with gods, and something you should probably practice anyway.

Divination takes time to master. If you're not getting clear answers right away, take some time to familiarize yourself with your tool. Try using it to ask about your life, not just to talk to gods. Don't take it too seriously. Some methods are more reliable than others, and you may be better suited to some than others. I advise against yes/no divination, because it tends to be too vague and can be easily influenced by what you want to hear.

And please, for the love of Zeus, do not use candle flames! I know candle divination is the trendy thing on TikTok right now, but it's almost completely ineffective, because candle flames are easily affected by external factors: the length of the wick, the quality of the wax, the humidity of the air, drafts, you breathing on it wrong, etc. And any answers you might get from a candle flame will be vague, anyway! 90% of the time, it's not a message from a god, it's just the way fire works. Please don't read into it.

What can I give as offerings?

Standard offerings for all gods include bread, meat, milk, honey, cakes, olive oil, barley meal, flowers, fruit, wine, and incense. Some gods have more specific offerings consistent with their domains or personalities (like, for example, offering sun water or bay leaves to Apollo). You can also offer creative works like songs, poems, dances, art, etc., and devotional activities. Gods will appreciate almost anything you do for them.

As for how to dispose of offerings, I usually just eat them if it's food. I don't give food offerings often, because I'm uncomfortable with "wasting" food, so I'm not really the right person to ask about that.

Which historical texts should I read?

We usually recommend that you start with the Homeric epics (The Iliad and Odyssey) and Hesiod's Theogony and Works and Days. But mythology is not the only resource we have to learn about the gods! There are the Homeric and Orphic Hymns, poems dedicated to the gods that you can recite for them at their altars. There are texts on theology like De Natura Deorum by Cicero, On the Gods and the World by Sallustius, and On Images by Porphyry. There's also Description of Greece by Pausanias, a travel guide (of a sort) that describes the everyday religious life of ordinary Ancient Greeks. Reading Plato is a tall order for some, but I recommend familiarizing yourself with his ideas at least a little bit. Most of these are available on theoi.com, perseus.tufts.edu, or the Internet Classics Archive.

If you're interested in magic, definitely take a look at the Greek Magical Papyri (PGM) as well.

Can I be a Hellenist and a witch?

Yes, but keep in mind, paganism and witchcraft are not interchangeable. You do not have to practice witchcraft to be a pagan, and vice-versa. Modern witchcraft is (long story short) an outgrowth of the popularity of Wicca, a neopagan religion founded in 1951. It doesn't bear much resemblance to ancient pagan religions, and most of the modern witchcraft content that you see on the internet isn't directly relevant to Hellenism (even if it concerns Greek gods). Witchcraft did exist in Ancient Greek religion, but it's different from the modern stuff. You can definitely combine modern witchcraft or Wicca with Hellenism, but I recommend studying them separately. Treat it like any other kind of syncretism.

Okay, that's all for now! Let me know if there's anything I missed or should add.

#hallenic paganism#hellenism#hellenic polytheism#hellenic pagan#paganism#greek paganism#paganblr#neopagan#pagan community#witchblr#faqs

20 notes


Black Diamond Pool just erupted (05/30)

For anybody who was following the news last summer, you may remember this unexpected event on July 23rd. In the aftermath, the park service shut down the boardwalk area (which was completely destroyed). Park Service, USGS, and University of Utah geologists set up monitoring instruments immediately after.

In the time since the event, there hasn't been much news (at least that i've heard). Until today.

Last week, USGS went public with a webcam for monitoring Black Diamond Pool. It takes a snapshot every 15 minutes - you can see the most recent snapshot here (links to previous snapshots can be found on the USGS AshCam API).

Today (05/30/2025), we noticed the first signs of activity. A disturbance in the left side of the pool (relative to the webcam) starting at 13:45 (local time):

Followed by periods of increased convection, and finally...

At 20:45, we see the water level drop dramatically, indicating the end of an "eruption." (At the time of posting, it is not clear whether this activity meets the definition of an actual eruption. Will update.) The event had enough force to move those rocks all the way on the right. Here's a before and after:

Safe to say they probably aren't opening this thing up anytime soon. But, for anybody interested in this kind of stuff, this is a landmark event, signalling continued (and possibly regular) activity at Black Diamond. This is a long-awaited continuation of what is probably the biggest national-park related news in recent history.

Happy pride month.

7 notes


Apis mellifera, the honey bee: a series of pictures of bees from Phil Frank, local Maryland beekeeper and author. This set includes males (drones) and females (workers). Check out the eyes on the males that meet atop the head, the long hairs coming out of the eyes, and the modified hind legs of the females, which are one of many things special about this genus of bees. Phil also took the photos, after struggling to put together our new Canon R and the various gizmos that make stacking work. We're now working with some thirty-something megapixels of detail. Winter is here, so expect more picture uploads.

97 notes


Your All-in-One AI Web Agent: Save $200+ a Month, Unleash Limitless Possibilities!

Imagine having an AI agent that costs you nothing monthly, runs directly on your computer, and is unrestricted in its capabilities. OpenAI's Operator comes with a $200/month ChatGPT Pro subscription and restricts access to many tasks, like visiting thousands of websites. With DeepSeek-R1 and Browser-Use, you:

• Save money while keeping everything local and private.

• Automate visiting 100,000+ websites, gathering data, filling forms, and navigating like a human.

• Gain total freedom to explore, scrape, and interact with the web like never before.

You may have heard about Operator from OpenAI, which runs on a computer in their cloud, so you have to pass private information to their AI for it to do anything useful. AND you pay for the privilege. It is not paranoid to not want your passwords, logins, and personal details shared. OpenAI, of course, charges a substantial amount of money for something that limits which sites you can visit, like YouTube, for example. With this method, you simply tell an AI exactly what you want it to do, in plain language, and watch it navigate the web, gather information, and make decisions, all without writing a single line of code.

In this guide, we’ll show you how to build an AI agent that performs tasks like scraping news, analyzing social media mentions, and making predictions using DeepSeek-R1 and Browser-Use, but instead of writing a Python script, you’ll interact with the AI directly using prompts.

These instructions are under constant revision, as DeepSeek-R1 is only days old; Browser Use has been around for a while. This method is meant for people who are new to AI and programming. It may seem technical at first, but by the end of this guide, you’ll feel confident using your AI agent to perform a variety of tasks, all by talking to it. However, if you look at these instructions and they seem too overwhelming, wait: we will have a single-download app soon. It is in testing now.

This is version 3.0 of these instructions, dated January 26th, 2025.

This guide will walk you through setting up DeepSeek-R1 8B (4-bit) and Browser-Use Web UI, ensuring even the most novice users succeed.

What You’ll Achieve

By following this guide, you’ll:

1. Set up DeepSeek-R1, a reasoning AI that works privately on your computer.

2. Configure Browser-Use Web UI, a tool to automate web scraping, form-filling, and real-time interaction.

3. Create an AI agent capable of finding stock news, gathering Reddit mentions, and predicting stock trends—all while operating without cloud restrictions.

A Deep Dive At ReadMultiplex.com Soon

We will have a deep dive into how you can use this platform for very advanced AI use cases that few have thought of, let alone seen before. Join us at ReadMultiplex.com and become a member who not only sees the future earlier but also gets practical and pragmatic ways to profit from it.

System Requirements

Hardware

• RAM: 8 GB minimum (16 GB recommended).

• Processor: Quad-core (Intel i5/AMD Ryzen 5 or higher).

• Storage: 5 GB free space.

• Graphics: GPU optional for faster processing.

Software

• Operating System: macOS, Windows 10+, or Linux.

• Python: Version 3.11 or higher (required by current Browser-Use releases).

• Git: Installed.

Step 1: Get Your Tools Ready

We’ll need Python, Git, and a terminal/command prompt to proceed. Follow these instructions carefully.

Install Python

1. Check Python Installation:

• Open your terminal/command prompt and type:

python3 --version

• If Python is installed, you’ll see a version like:

Python 3.9.7

2. If Python Is Not Installed:

• Download Python from python.org.

• During installation, ensure you check “Add Python to PATH” on Windows.

3. Verify Installation:

python3 --version

Install Git

1. Check Git Installation:

• Run:

git --version

• If installed, you’ll see:

git version 2.34.1

2. If Git Is Not Installed:

• Windows: Download Git from git-scm.com and follow the instructions.

• Mac/Linux: Install via terminal:

sudo apt install git -y # For Ubuntu/Debian

brew install git # For macOS
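If you'd rather check everything at once, a small Python script can confirm that both tools are visible from your terminal. This is a minimal sketch. The minimum Python version is a parameter: the default of 3.11 reflects what current Browser-Use releases ask for, so adjust it if that changes. It also looks for CMake, which is used to build llama.cpp in the next step.

```python
import shutil
import sys


def check_tools(min_python=(3, 11)) -> dict:
    """Report whether this Python is new enough and whether git/cmake are on PATH."""
    return {
        "python": ".".join(str(part) for part in sys.version_info[:3]),
        "python_ok": sys.version_info[:2] >= tuple(min_python),
        "git": shutil.which("git") is not None,
        "cmake": shutil.which("cmake") is not None,
    }


if __name__ == "__main__":
    for tool, status in check_tools().items():
        print(f"{tool}: {status}")
```

Save it as, say, check_setup.py and run python3 check_setup.py. Any False value tells you which install step to revisit.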

Step 2: Download and Build llama.cpp

We’ll use llama.cpp to run the DeepSeek-R1 model locally.

1. Open your terminal/command prompt.

2. Navigate to a clear location for your project files:

mkdir ~/AI_Project

cd ~/AI_Project

3. Clone the llama.cpp repository:

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

4. Build the project:

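• Mac/Linux (llama.cpp has deprecated its old make build in favour of CMake, so install CMake first if needed):

cmake -B build

cmake --build build --config Release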

• Windows:

• Install a C++ compiler (e.g., MSVC or MinGW).

• Run:

mkdir build

cd build

cmake ..

cmake --build . --config Release

Step 3: Download DeepSeek-R1 8B 4-bit Model

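1. Visit a GGUF build of DeepSeek-R1-Distill-Llama-8B on Hugging Face. (DeepSeek's official repository hosts the full-precision weights; 4-bit GGUF files are published by community quantizers.)

2. Download the 4-bit quantized model file:

• Example: DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf.

3. Move the model to your llama.cpp folder:

mv ~/Downloads/DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf ~/AI_Project/llama.cpp

Step 4: Start DeepSeek-R1

1. Navigate to your llama.cpp folder:

cd ~/AI_Project/llama.cpp

2. Run the model with a sample prompt. The old main binary has been renamed llama-cli; after a CMake build it lives in build/bin/ (build\bin\Release\ on Windows):

./build/bin/llama-cli -m DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf -p "What is the capital of France?"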

3. Expected output (after the reasoning section that DeepSeek-R1 prints first, ending in a </think> tag):

The capital of France is Paris.
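To use the model from your own scripts rather than typing prompts at the command line, here's a minimal Python sketch that uses only the standard library. It assumes you've started llama.cpp's bundled server, llama-server, which is built alongside llama-cli. You would start it with something like ./build/bin/llama-server -m <your-model>.gguf --port 8080, where <your-model> is a placeholder for the file you downloaded. The sketch then posts to the server's OpenAI-compatible /v1/chat/completions endpoint. DeepSeek-R1 writes its reasoning before a closing </think> tag, so the helper keeps only the text after it. Depending on your llama.cpp version, the server may already separate the reasoning out, in which case the helper just returns the text unchanged.

```python
import json
import urllib.request

SERVER_URL = "http://127.0.0.1:8080/v1/chat/completions"  # assumes llama-server on port 8080


def final_answer(text: str) -> str:
    """Keep only what DeepSeek-R1 says after its reasoning (after '</think>', if present)."""
    return text.split("</think>", 1)[-1].strip()


def build_request(prompt: str, url: str = SERVER_URL) -> urllib.request.Request:
    """OpenAI-style chat request with a single user message."""
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def ask(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the model's final answer (needs the server running)."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as response:
        reply = json.load(response)
    return final_answer(reply["choices"][0]["message"]["content"])


if __name__ == "__main__":
    print(ask("What is the capital of France?"))
```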

Step 5: Set Up Browser-Use Web UI

1. Go back to your project folder:

cd ~/AI_Project

2. Clone the Browser-Use repository:

git clone https://github.com/browser-use/browser-use.git

cd browser-use

3. Create a virtual environment:

python3 -m venv env

4. Activate the virtual environment:

• Mac/Linux:

source env/bin/activate

• Windows:

env\Scripts\activate

5. Install dependencies:

pip install -r requirements.txt

6. Start the Web UI:

python examples/gradio_demo.py

7. Open the local URL in your browser:

http://127.0.0.1:7860

Step 6: Configure the Web UI for DeepSeek-R1

1. Go to the Settings panel in the Web UI.

2. Specify the DeepSeek model path:

~/AI_Project/llama.cpp/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf

3. Adjust Timeout Settings:

• Increase the timeout to 120 seconds for larger models.

4. Enable Memory-Saving Mode if your system has less than 16 GB of RAM.

Step 7: Run an Example Task

Let’s create an agent that:

1. Searches for Tesla stock news.

2. Gathers Reddit mentions.

3. Predicts the stock trend.

Example Prompt:

Search for "Tesla stock news" on Google News and summarize the top 3 headlines. Then, check Reddit for the latest mentions of "Tesla stock" and predict whether the stock will rise based on the news and discussions.

--

Congratulations! You’ve built a powerful, private AI agent capable of automating the web and reasoning in real time. Unlike costly, restricted tools like OpenAI Operator, you’ve spent nothing beyond your time. Unleash your AI agent on tasks that were once impossible and imagine the possibilities for personal projects, research, and business. You’re not limited anymore. You own the web—your AI agent just unlocked it! 🚀

Stay tuned for a FREE, simple-to-use single app that will do all this and more.


Text

Elon Musk’s so-called Department of Government Efficiency (DOGE) used artificial intelligence from Meta’s Llama model to comb through and analyze emails from federal workers.

Materials viewed by WIRED show that DOGE affiliates within the Office of Personnel Management (OPM) tested and used Meta’s Llama 2 model to review and classify responses from federal workers to the infamous “Fork in the Road” email that was sent across the government in late January.

The email offered deferred resignation to anyone opposed to changes the Trump administration was making to its federal workforce, including an enforced return-to-office policy, downsizing, and a requirement to be “loyal.” To leave their position, recipients merely needed to reply with the word “resign.” This email closely mirrored one that Musk sent to Twitter employees shortly after he took over the company in 2022.

Records show that Llama was deployed to sort through email responses from federal workers to determine how many accepted the offer. The model appears to have run locally, according to materials viewed by WIRED, meaning it’s unlikely to have sent data over the internet.

Meta and OPM did not respond to requests for comment from WIRED.

Meta CEO Mark Zuckerberg appeared alongside other Silicon Valley tech leaders like Musk and Amazon founder Jeff Bezos at Trump’s inauguration in January, but little has been publicly known about his company’s tech being used in government. Because of Llama’s open-source nature, the tool can easily be used by the government to support Musk’s goals without the company’s explicit consent.

Soon after Trump took office in January, DOGE operatives burrowed into OPM, an independent agency that essentially serves as the human resources department for the federal government. The new administration’s first big goal for the agency was to create a government-wide email service, according to current and former OPM employees. Riccardo Biasini, a former Tesla engineer, was involved in building the infrastructure for the service that would send out the original “Fork in the Road” email, according to material viewed by WIRED and reviewed by two government tech workers.

In late February, weeks after the Fork email, OPM sent out another request to all government workers and asked them to submit five bullet points outlining what they accomplished each week. These emails threw a number of agencies into chaos, with workers unsure how to manage email responses that had to be mindful of security clearances and sensitive information. (Adding to the confusion, some workers who turned on read receipts reportedly found that their responses weren’t actually being opened.) In February, NBC News reported that these emails were expected to go into an AI system for analysis. While the materials seen by WIRED do not explicitly show DOGE affiliates analyzing these weekly “five points” emails with Meta’s Llama models, the way they did with the Fork emails, it wouldn’t be difficult for them to do so, two federal workers tell WIRED.

“We don’t know for sure,” says one federal worker on whether DOGE used Meta’s Llama to review the “five points” emails. “Though if they were smart they’d reuse their code.”

DOGE did not appear to use Musk’s own AI model, Grok, when it set out to build the government-wide email system in the first few weeks of the Trump administration. At the time, Grok was a proprietary model belonging to xAI, and access to its API was limited. But earlier this week, Microsoft announced that it would begin hosting xAI’s Grok 3 models as options in its Azure AI Foundry, making the xAI models more accessible in Microsoft environments like the one used at OPM. Should DOGE want it, this could make Grok an available AI option going forward. In February, Palantir struck a deal to include Grok as an AI option in the company’s software, which is frequently used in government.

Over the past few months, DOGE has rolled out and used a variety of AI-based tools at government agencies. In March, WIRED reported that the US Army was using a tool called CamoGPT to remove DEI-related language from training materials. The General Services Administration rolled out “GSAi” earlier this year, a chatbot aimed at boosting overall agency productivity. OPM has also accessed software called AutoRIF that could assist in the mass firing of federal workers.


Text

hey wanna hear about the crescent stack structure because i don't really have anyone to tell this to

YAYYYY!! THANK YOU :3

small note: threads are also referred to internally as lstates (local states), because a new thread isn't made by calling crescent_open again; instead, every thread is connected to a single shared gstate (global state)

the stack is the main data structure in a crescent thread, containing all of the locals and call information.

the stack is divided into frames, and each time a function is called, a new frame is created. each frame has two parts: base and top. a frame's base is where the first local object is pushed to, and the top is the total number of stack indexes reserved after the base. the stack also holds two numbers, calls and cCalls. calls keeps track of the number of function calls in the thread, whether they're to a c or a crescent function. cCalls keeps track of the number of calls to c functions. c functions use the actual machine stack rather than the dynamically allocated crescent stack, so they could overflow the real stack and crash the program in a way crescent is unable to catch. so we want to limit how many of these can be nested, so that this (hopefully) doesn't happen.

calls also keeps track of something else with the same number: the stack level. the stack level is a number starting from 0 that increments with each function call and decrements on each return from a function. stack level 0 is the c api, where the user called crescent_open (or whatever other function creates a new thread; that's just the only one implemented right now), and it's zero because no functions have been called before this frame.
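here's a rough python model of those two counters (python rather than c, to match the other examples on this page). it's a sketch under assumptions, not crescent's actual code: `CallCounters`, `CStackOverflow`, and the `MAX_C_CALLS` limit (and its value of 200) are all made up, since the post doesn't say what the real limit is called or how big it is.

```python
class CStackOverflow(Exception):
    """Hypothetical error raised when C calls nest too deeply."""


# Assumed limit on nested C calls; crescent's real constant and value aren't given in the post.
MAX_C_CALLS = 200


class CallCounters:
    """Tracks `calls` and `cCalls`; `calls` doubles as the current stack level."""

    def __init__(self) -> None:
        self.calls = 0    # active calls of any kind; level 0 is the C API
        self.c_calls = 0  # active calls into C functions only

    @property
    def level(self) -> int:
        return self.calls

    def enter(self, is_c: bool) -> None:
        # C functions run on the real machine stack, so refuse to nest them too deeply
        if is_c and self.c_calls >= MAX_C_CALLS:
            raise CStackOverflow("too many nested C calls")
        self.calls += 1
        if is_c:
            self.c_calls += 1

    def leave(self, is_c: bool) -> None:
        # returning from a function drops the stack level by one
        self.calls -= 1
        if is_c:
            self.c_calls -= 1


if __name__ == "__main__":
    counters = CallCounters()
    counters.enter(is_c=False)  # crescent function: level 1
    counters.enter(is_c=True)   # C function: level 2
    print(counters.level, counters.c_calls)  # 2 1
```

the check happens before the counters are bumped, so a refused c call leaves both numbers unchanged.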

before the next part, i should probably explain some terms:

- stack base: the address of the first object on the entire stack, also the base of frame 0

- stack top: address of the object immediately after the last object pushed onto the stack. if there are no objects on the stack, this is the stack base.

- frame base: the address of the first object in a frame, also the stack top upon calling a function (explained later)

- frame top: the number of stack indexes reserved for this frame after the base address

when a function is called, rather than setting the new frame's base directly after the reserved space of the previous frame, we simply set it at the stack top, so the first object in the new frame comes immediately after the last object in the previous frame. (except not really. it's basically that, but when pushing arguments to a function we subtract the number of arguments from the base, so the top objects of the previous frame end up in the new frame.) this does make the stack structure a bit more complicated, and maybe a bit messy to visualize, but it uses (maybe slightly) less memory and makes some other stuff related to protected calls easier.
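a minimal python model of that placement, assuming the stack is a plain list and each frame stores an absolute base index plus a reserved top. `Thread.call`, `Thread.local`, and the choice to leave the function object in the caller's frame are assumptions for illustration, and the sketch doesn't enforce the reserved space.

```python
from dataclasses import dataclass, field
from typing import Any, List


@dataclass
class Frame:
    base: int  # index of the frame's first object
    top: int   # slots reserved after the base (not enforced in this sketch)


@dataclass
class Thread:
    stack: List[Any] = field(default_factory=list)
    # frame 0 belongs to the C API and starts at the stack base (index 0)
    frames: List[Frame] = field(default_factory=lambda: [Frame(base=0, top=0)])

    @property
    def stack_top(self) -> int:
        # index just past the last pushed object; equals the stack base when empty
        return len(self.stack)

    def push(self, obj: Any) -> None:
        self.stack.append(obj)

    def call(self, nargs: int, reserve: int) -> Frame:
        # Start the new frame at the stack top, pulled back by nargs so the
        # arguments the caller just pushed become the callee's first locals.
        frame = Frame(base=self.stack_top - nargs, top=reserve)
        self.frames.append(frame)
        return frame

    def local(self, i: int) -> Any:
        # locals are addressed relative to the current frame's base
        return self.stack[self.frames[-1].base + i]


if __name__ == "__main__":
    t = Thread()
    t.push("callee")  # in this sketch the function object stays in the caller's frame
    t.push(10)
    t.push(20)
    frame = t.call(nargs=2, reserve=8)
    print(frame.base, t.local(0), t.local(1))  # 1 10 20
```

no copying happens on a call: the arguments are already sitting where the callee expects its first locals.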

as we push objects onto the stack and call functions, we're eventually going to run out of space, so we need to dynamically resize the stack. first, though, we need to know how much memory the stack needs. we go through all of the frames and calculate how much each frame needs by taking the frame base's offset from the stack base (framebase - stackbase) and adding the frame top (or the new top, if we're resizing the current frame when using crescent_checkTop in the c api). the largest amount any frame needs is the amount the entire stack needs. if the needed size is less than or equal to a third of the current stack size, the stack shrinks. if the needed size is greater than the current stack size, it grows. when shrinking or growing, the new size is always calculated as needed * 1.5 (needed + needed / 2 in the code).
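the sizing pass could look something like this in python. it's a sketch assuming frames are stored as (base, top) pairs with absolute base indexes; `stack_needed` and `resize_action` are hypothetical names, and `new_top` plays the role of the top requested through crescent_checkTop.

```python
from typing import NamedTuple, Optional, Sequence


class Frame(NamedTuple):
    base: int  # absolute index of the frame's first object
    top: int   # slots reserved after the base


def stack_needed(frames: Sequence[Frame], stack_base: int = 0,
                 new_top: Optional[int] = None) -> int:
    """Largest (frame base offset + frame top) over all frames.

    new_top, if given, replaces the top of the current (last) frame, as when
    crescent_checkTop asks for more room in the running frame.
    """
    needed = 0
    last = len(frames) - 1
    for i, frame in enumerate(frames):
        top = new_top if (new_top is not None and i == last) else frame.top
        needed = max(needed, (frame.base - stack_base) + top)
    return needed


def resize_action(current_size: int, needed: int) -> str:
    if needed > current_size:
        return "grow"
    if needed * 3 <= current_size:  # needed <= current_size / 3, without float division
        return "shrink"
    return "keep"


if __name__ == "__main__":
    frames = [Frame(base=0, top=10), Frame(base=8, top=20)]
    needed = stack_needed(frames)  # frame 1 needs 8 + 20 = 28
    print(needed, resize_action(64, needed), resize_action(90, needed),
          resize_action(20, needed))  # 28 keep shrink grow
```

because frames overlap (a callee's base can sit inside its caller's reserved space), the deepest frame isn't always the one that needs the most, which is why every frame gets checked.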

when shrinking, the stack can only shrink to a minimum of CRESCENT_MIN_STACK (64 objects by default). even if the resize fails, it doesn't throw an error, since the current stack is still big enough. when growing, if the new stack size is greater than CRESCENT_MAX_STACK, it's lowered to just the required amount. if that's still over CRESCENT_MAX_STACK, it either returns 1 or throws a stack overflow error, depending on the throw argument. if reallocating the stack fails, it either throws an out of memory error or returns 1, again depending on the throw argument. unlike shrinking, growing the stack can throw an error, because we may not have the memory we need.
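those limits and failure modes, sketched in python. this is an illustration under assumptions, not crescent's c code: `CRESCENT_MAX_STACK` is given a made-up value (the post doesn't state the default), the error classes are stand-ins, and `try_alloc` is a hypothetical callback standing in for realloc that just reports success or failure.

```python
from typing import Callable, Tuple

CRESCENT_MIN_STACK = 64         # default from the post
CRESCENT_MAX_STACK = 1_000_000  # hypothetical value; the post doesn't give the default


class StackOverflowError(Exception):
    pass


class OutOfMemoryError(Exception):
    pass


def resize_stack(size: int, needed: int, throw: bool,
                 try_alloc: Callable[[int], bool] = lambda new_size: True) -> Tuple[int, int]:
    """Return (new_size, status): status 0 on success, 1 on a non-throwing failure."""
    if needed * 3 <= size:  # shrink
        new_size = max(needed + needed // 2, CRESCENT_MIN_STACK)
        if new_size < size and try_alloc(new_size):
            return new_size, 0
        # a failed (or pointless) shrink is harmless: the old stack is still big enough
        return size, 0
    if needed > size:  # grow
        new_size = needed + needed // 2
        if new_size > CRESCENT_MAX_STACK:
            new_size = needed  # fall back to exactly what's required
            if new_size > CRESCENT_MAX_STACK:
                if throw:
                    raise StackOverflowError("stack overflow")
                return size, 1
        if not try_alloc(new_size):
            if throw:
                raise OutOfMemoryError("not enough memory")
            return size, 1
        return new_size, 0
    return size, 0  # already the right size


if __name__ == "__main__":
    print(resize_stack(300, 100, throw=True))         # (150, 0): shrink
    print(resize_stack(100, 101, throw=True))         # (151, 0): grow
    print(resize_stack(100, 2_000_000, throw=False))  # (100, 1): over the max
```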

and now the bit about how the way a frame's base is set makes protected calls easier. when handling an error, we want to go back to the most recent protected call. we do this (using a jmp_buf) by saving the stack level in the handler (the structure that holds information while handling an error and returning to the protected call) and reverting the stack back to that level. but we're not keeping track of the number of objects in a stack frame! so how do we restore the stack top? because a frame's base is immediately after the last object in the previous frame, and the stack top is immediately after the last object pushed onto the stack, we just set the top to the base of the frame one level above the saved level.
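a python sketch of that recovery trick, assuming a python exception stands in for the jmp_buf/longjmp jump and each frame is reduced to its base index. `Thread`, `pcall`, and `CrescentError` are hypothetical names, and return values are ignored to keep it short.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


class CrescentError(Exception):
    """Stand-in for a runtime error that longjmp()s back to the protected call."""


@dataclass
class Thread:
    stack: List[Any] = field(default_factory=list)
    bases: List[int] = field(default_factory=lambda: [0])  # bases[level] = that frame's base

    @property
    def level(self) -> int:
        return len(self.bases) - 1

    def call(self, nargs: int, fn: Callable[["Thread"], None]) -> None:
        # the new frame starts at the stack top, minus the arguments already pushed
        self.bases.append(len(self.stack) - nargs)
        fn(self)
        # normal return: pop the frame and every object in it
        del self.stack[self.bases.pop():]

    def pcall(self, nargs: int, fn: Callable[["Thread"], None]) -> bool:
        saved_level = self.level  # all the handler needs to remember
        try:
            self.call(nargs, fn)
            return True
        except CrescentError:
            # No per-frame object count is stored, but the base of the frame one
            # level above the saved one is exactly where the saved frame's objects end.
            del self.stack[self.bases[saved_level + 1]:]
            del self.bases[saved_level + 1:]
            return False


if __name__ == "__main__":
    def inner(th: Thread) -> None:
        th.stack.extend(["a", "b"])
        raise CrescentError("boom")

    def outer(th: Thread) -> None:
        th.stack.append("tmp")
        th.call(0, inner)

    t = Thread()
    t.stack.extend(["keep", "arg"])
    print(t.pcall(1, outer), t.stack, t.level)  # False ['keep'] 0
```

the error skips the normal cleanup in both nested calls, but one slice using a single saved base still puts the stack back exactly where it was before the argument was pushed.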


Text

Stardew Valley Mods!

Below is a list of mods (organized as best as I can) that I use when I play Stardew Valley! Here are instructions on how to install them on PC/Mac:

☆ Install SMAPI! This is important for running the actual mods with the game later. When you install and run SMAPI, it will give step-by-step instructions.

☆ Download your desired mods! Make sure you check which other mods the ones you download require. Without them, they will not run!

☆ Unzip the downloaded mods! Unfortunately, a zipped file will not run...

☆ Open the Stardew Valley Mods folder. You can do this by selecting the game (without launching it) in your Steam library, clicking the cog on the right-hand side of the screen, hovering over Manage, and clicking 'Browse local files'. From here you should see a folder labeled 'Mods'.

☆ Transfer your downloaded and unzipped mods into the folder!

☆ From here you should be able to run the game with mods! It may take some tweaking if mods aren't updated; this website shows whether or not the mods run and their latest versions!

if i missed anything please let me know! the SDV mod guide is much more coherent than me so be sure to use your resources!
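If you grab a lot of mods at once, the unzip-and-move steps can be scripted. This is a small Python sketch, assuming you've put only your mod zips in one folder; the function name is made up, and the folder paths are yours to fill in. It extracts each zip into its own subfolder of Mods, and SMAPI should generally still find mods nested one level deeper. If one doesn't load, move its folder (the one containing manifest.json) directly into Mods.

```python
import zipfile
from pathlib import Path
from typing import List


def install_mod_zips(downloads: Path, mods_dir: Path) -> List[Path]:
    """Unzip every .zip in `downloads` into its own folder inside `mods_dir`.

    Point `downloads` at a folder that holds only mod zips.
    """
    mods_dir.mkdir(parents=True, exist_ok=True)
    installed = []
    for archive in sorted(downloads.glob("*.zip")):
        target = mods_dir / archive.stem  # e.g. Mods/<zip name without .zip>
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(target)
        installed.append(target)
    return installed
```

For example, `install_mod_zips(Path.home() / "Downloads" / "sdv-mods", Path("path/to/Stardew Valley/Mods"))`, using the Mods folder you found through 'Browse local files'.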

Required for most: ☆ SMAPI ☆ SpaceCore ☆ Json Assets ☆ Generic Mod Config Menu ☆ Farm Type Manager (FTM) ☆ Content Patcher ☆ Fashion Sense ☆ Shop Tile Framework ☆ Event Repeater ☆ Non Destructive NPCs ☆ Mail Framework Mod ☆ Anti-Social NPCs ☆ SAAT - Audio API and Toolkit ☆ Expanded Preconditions Utility

Expansions: ☆ Stardew Valley Expanded ☆ Ridgeside Village

Gameplay altering: ☆ Skip Fishing Minigame ☆ Skull Cavern Elevator ☆ Chests Anywhere ☆ Destroyable Bushes ☆ Automate ☆ UI Info Suite ☆ Show Birthdays ☆ Friends Forever ☆ Customize Anywhere ☆ Ellie's Ideal Greenhouse

Add-ons: ☆ Tractor Mod ☆ New Years Eve ☆ Pregnancy and Birth Events ☆ Date Night ☆ Spouses in Ginger Island

Texture/Recolor: ☆ DaisyNiko's Earthy Interface ☆ DaisyNiko's Earthy Recolour ☆ Medieval buildings & Medieval SV Expanded ☆ Elle's New Coop Animals ☆ Elle's New Barn Animals ☆ Rustic Country Town Interiors

Item Replacements: ☆ Firefly Torch ☆ Fox - Pet Replacer ☆ Gwen's Lamps for CP and AT

Appearance: ☆ Seasonal Outfits - Slightly Cuter Aesthetic for SVE ☆ Seasonal Outfits for Ridgeside Village ☆ Coii's All Hats Pack ☆ Coii's Girls Sets Pack ☆ Hats Won't Mess Up Hair ☆ Babies Take After Spouse ☆ Sugarmaples' Cafe Clothes

Other: ☆ Canon-Friendly Dialogue Expansion ☆ BusLocations (Required for Desert mod) ☆ Sprites in Detail


Text

What if I just become an annoying ADHD money blogger sometimes

#adhd adult money liveblogging

If you have problems saving money (especially emergency savings money) because you always spend it on too many impulse purchases, or take money out of your savings to cover your fun money:

you need to open a savings account with a new bank. The more impulsive you are, the more I recommend a small credit union, an online-only bank, or a really local bank: ideally one whose online transfers to other banks take three whole business days, so you literally can't instantly move money from savings to your checking account to buy things on the spur of the moment. If you're afraid this defeats the point of an emergency savings fund in the case of, well, an emergency, set up a small checking account with a minimal balance at this bank too, and put its debit card somewhere you won't be tempted to use it. It won't have much money on it anyway until you move some out of emergency savings and into that checking account.

Look for one with a decent APY (mine is like 3%) and no minimum deposit or monthly fees. The APY (annual percentage yield) is basically the bank paying you money for not spending money. It will be like, cents at first. Change in the sofa cushions. But over time, it will be more. Don't worry about it. It's just surprise money for later. Not a lot, mind you. But you're a competitive winner and every cent they give you FREE is a success to zap your brain with dopamine. (Eventually if you have enough money you can do this by like, investing in shit or buying CDs and they just give you MORE MONEY. BUT!!! BABY STEPS.)
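If you want to see the "cents at first, more over time" thing in actual numbers, here's a rough Python calculator. It assumes you deposit the same amount at the start of every month, the APY never changes (it usually does), and interest is credited monthly at the rate that adds up to the APY over a year. Real banks often compound daily, so treat the result as a ballpark. The function name is just made up for this example.

```python
def savings_balance(monthly_deposit: float, apy: float, months: int, start: float = 0.0) -> float:
    """Rough balance after `months` months of equal deposits at a fixed APY.

    Assumes each deposit lands at the start of the month and interest is
    credited monthly at the rate that compounds to the stated APY over a year.
    """
    monthly_rate = (1 + apy) ** (1 / 12) - 1  # APY already includes compounding
    balance = start
    for _ in range(months):
        balance += monthly_deposit
        balance *= 1 + monthly_rate
    return balance


if __name__ == "__main__":
    # $25 a month for two years at 3% APY, vs. the $600 you actually put in
    print(round(savings_balance(25, 0.03, 24), 2))
```

Whatever it prints above $600 is the free surprise money.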

This is crucial: if you have some kind of direct deposit paycheck set up, see if you can SPLIT the direct deposit between multiple accounts. The company my job uses to pay people allows us to choose between depositing a fixed dollar amount to certain accounts (with "remainder of paycheck balance" being automatic for one account), OR depositing a percentage of my paycheck to certain accounts. (Percents of a paycheck tend to be higher to start). If you don't get paid this way, figure out a good date to set a recurring transfer from your checking to your savings for an amount so it won't sit in your spendy account long. The goal is to pretend like you just actually never had the savings money in that paycheck. Poof. Gone. Disappeared. It got saved before you became aware of the money.

Feel free to start with a small amount. It can be $5 or whatever. Once you start doing this for a few paychecks look at your money. If you're not genuinely struggling to stay afloat after 2-3 months and are still comfortable, try increasing the number a little. Repeat as needed.

Now you've saved money. 🎉

This is genuinely how I managed to save money more consistently than anything else I've ever tried. Savings money goes in the secret money account. 🤷🏽♀️ Incredibly silly but it works.


Note

Hello! I know the template has been out for a while, so this may be a bit of an outdated ask; I've been trying to set up your OC Directory template on my Neocities, but I've been having issues. (I believe with some of the links breaking and css specifically; it all works when I run the project on the local server, but the build files don't seem to port correctly to Neocities, or something...)

Is there any way you could make some sort of tutorial on some of the related steps after initial setup of the template? Even just screenshots of how the files might look on Neocities could help for troubleshooting, or links to any similar setup tutorials (though I've followed a few so far that haven't really solved my issues...)

I'm not new to programming but am new to Eleventy, so I feel a little silly asking about it. Please ignore this ask if it's not possible for any reason :) Thank you!

sure thing! when you run "npm run build", it should create a "build" folder with the final files for your comic site. the contents of the "build" folder should effectively be 1-to-1 with the file structure of your neocities website, unless you add other files to your neocities site outside of the comic template.

for this example i'm starting fresh, by:

downloading the git repository for the comic template,

installing dependencies with npm install,

building everything with npm run build.

i didn't change anything else about my setup, so the files are unedited.

if i throw a local server up, it looks something like this, alongside the file list:

if i go to the actual neocities file list, you'll notice the folder structure is the same:

including the folders that just have an html file in them:

when you push the website to neocities, you should only push the contents of the "build" folder, and nothing else. don't put it in a subdirectory, think of the build folder itself as the "root".

i generally sync my website changes to neocities by using the command line API and running neocities push build, which "pushes" the contents of the build folder as your website.
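if links or css still break after pushing, one quick check is to list every file inside the build folder as a path relative to build/ itself (since that folder is the site root) and compare it with the neocities file list. here's a small python sketch of that, assuming the default "build" output folder from npm run build; the function name is made up.

```python
from pathlib import Path
from typing import List


def site_paths(build_dir: str = "build") -> List[str]:
    """Every file under build_dir, as a path relative to the site root (build_dir itself)."""
    root = Path(build_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"{root} not found; run npm run build first")
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file())
```

run it from your project folder and print each entry of site_paths(). every path should show up at exactly the same place on neocities. if they all appear under an extra folder there, the build folder was uploaded as a subdirectory instead of as the root.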


Text

AI Code Generators: Revolutionizing Software Development

The way we write code is evolving. Thanks to advancements in artificial intelligence, developers now have tools that can generate entire code snippets, functions, or even applications. These tools are known as AI code generators, and they’re transforming how software is built, tested, and deployed.

In this article, we’ll explore AI code generators, how they work, their benefits and limitations, and the best tools available today.

What Are AI Code Generators?

AI code generators are tools powered by machine learning models (like OpenAI's GPT, Meta’s Code Llama, or Google’s Gemini) that can automatically write, complete, or refactor code based on natural language instructions or existing code context.

Instead of manually writing every line, developers can describe what they want in plain English, and the AI tool translates that into functional code.

How AI Code Generators Work

These generators are built on large language models (LLMs) trained on massive datasets of public code from platforms like GitHub, Stack Overflow, and documentation. The AI learns:

Programming syntax

Common patterns

Best practices

Contextual meaning of user input

By processing this data, the generator can predict and output relevant code based on your prompt.

Benefits of AI Code Generators

1. Faster Development

Developers can skip repetitive tasks and boilerplate code, allowing them to focus on core logic and architecture.

2. Increased Productivity

With AI handling suggestions and autocompletions, teams can ship code faster and meet tight deadlines.

3. Fewer Errors

Many generators follow common conventions and best practices, which can help reduce syntax errors and improve code quality.

4. Learning Support

AI tools can help junior developers understand new languages, patterns, and libraries.

5. Cross-language Support

Most tools support multiple programming languages like Python, JavaScript, Go, Java, and TypeScript.

Popular AI Code Generators

| Tool | Highlights |
|---|---|
| GitHub Copilot | Built on OpenAI models (originally Codex), integrates with VSCode and JetBrains IDEs |
| Amazon CodeWhisperer | AWS-native tool for generating and securing code |
| Tabnine | Predictive coding with local + cloud support |
| Replit Ghostwriter | Ideal for building full-stack web apps in the browser |
| Codeium | Free and fast with multi-language support |
| Keploy | AI-powered test case and stub generator for APIs and microservices |

Use Cases for AI Code Generators

Writing functions or modules quickly

Auto-generating unit and integration tests

Refactoring legacy code

Building MVPs with minimal manual effort

Converting code between languages

Documenting code automatically

Example: Generate a Function in Python

Prompt: "Write a function to check if a number is prime"

AI Output:

```python
def is_prime(n):
    if n <= 1:
        return False
    for i in range(2, int(n**0.5) + 1):
        if n % i == 0:
            return False
    return True
```

In seconds, the generator creates a clean, functional block of code that can be tested and deployed.

Challenges and Limitations

Security Risks: Generated code may include unsafe patterns or vulnerabilities.

Bias in Training Data: AI can replicate errors or outdated practices present in its training set.

Over-reliance: Developers might accept code without fully understanding it.

Limited Context: Tools may struggle with highly complex or domain-specific tasks.

AI Code Generators vs Human Developers

AI is not here to replace developers—it’s here to empower them. Think of these tools as intelligent assistants that handle the grunt work, while you focus on decision-making, optimization, and architecture.

Human oversight is still critical for:

Validating output

Ensuring maintainability

Writing business logic

Securing and testing code
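One cheap way to validate output is to check generated code against a slow but obviously correct reference. Here's a sketch that does this for the generated is_prime from the example (copied here unchanged so the block runs on its own). The brute-force reference and the checker function are made up for this illustration, not part of any tool mentioned above.

```python
def is_prime(n):
    # The AI-generated function from the example, unchanged.
    if n <= 1:
        return False
    for i in range(2, int(n**0.5) + 1):
        if n % i == 0:
            return False
    return True


def is_prime_reference(n):
    """Slow but obviously correct: n is prime if n >= 2 and no d in 2..n-1 divides it."""
    return n >= 2 and all(n % d for d in range(2, n))


def mismatches(limit=1000):
    # Every input (including negatives, 0 and 1) where the two disagree.
    return [n for n in range(-5, limit) if is_prime(n) != is_prime_reference(n)]


if __name__ == "__main__":
    print(mismatches() or "no mismatches")
```

An empty mismatch list doesn't prove the function correct for every input. It does catch the usual slips, like off-by-one loop bounds or mishandled edge cases such as 0, 1, and 2.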

AI for Test Case Generation

Tools like Keploy go beyond code generation. Keploy can:

Auto-generate test cases and mocks from real API traffic

Help reach high test coverage (Keploy advertises over 90%)

Speed up testing for microservices, saving hours of QA time

Keploy bridges the gap between coding and testing—making your CI/CD pipeline faster and more reliable.

Final Thoughts

AI code generators are changing how modern development works. They help save time, reduce bugs, and boost developer efficiency. While not a replacement for skilled engineers, they are powerful tools in any dev toolkit.

The future of software development will be a blend of human creativity and AI-powered automation. If you're not already using AI tools in your workflow, now is the time to explore. Want to test your APIs using AI-generated test cases? Try Keploy and accelerate your development process with confidence.


Text

Blog 10: Overpriced Jackets and Underrated Poems

London Markets and street culture

The London markets are not exactly what I was expecting…though, to be fair, I’m not sure what I was expecting. It felt like a huge, permanent flea market. Shops and eateries lined the streets, with even some street performers sprinkled in for atmosphere.

The shops were fascinating because they ranged from antiques to retro clothing to books to brand-new items, and even some handmade originals. The Notting Hill shops (Portobello Road) ended up being some of my favorites. Our API guide offered to take anyone interested there on one of our free days, and a group of us took her up on it; partly because she knew the way, and partly because phone data wasn’t always reliable.

When we got there, I realized the shops were only a small part of the experience. The buildings, the homes, even the graffiti on the walls of the market— all of it seemed to be part of a larger story. My favorite was a mural of eyes above a shop. I couldn’t stop thinking about the detail that went into those eyes. Whose eyes were they? They looked old and wise, almost like they had seen the entire history of the market. And maybe I’m reading too much into it, but they reminded me of my grandfather. Like my own guardian, who watches over me and protects me. Maybe these were the eyes of the market’s grandfather, silently guarding the place. Or maybe I’m just getting sentimental and overanalyzing everything… but then again, I wouldn’t be an English major if I didn’t.

I quickly came to understand, though, that not all markets are created equal. Some, like Camden Market and Borough Market, were… not as original. Sure, they had shops and food, but the small stalls that lined the streets were full of knockoffs. Bad ones, at that. And the prices? Let’s just say they didn’t exactly scream “street vendor”; they were closer to a high-end boutique.

While walking through Camden, a friend of mine, Justin, was checking out jackets. He found one he really liked and was about to buy it, that is until the vendor told him it would set him back nearly seventy pounds (about $95). For a fake jacket! It blew my mind. I thought these markets were supposed to be cheap. Maybe they’re just cleverly disguised tourist traps where locals make their living.

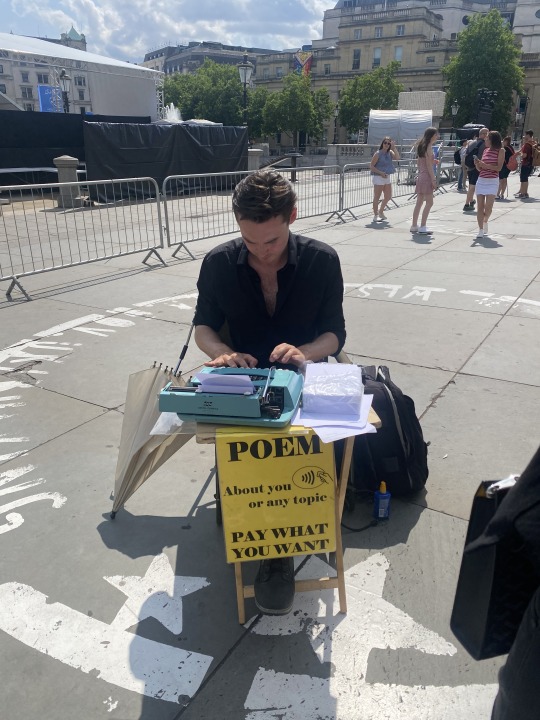

Still, the street life all around London was just as lively as the marketplaces. One day, while walking in front of the National Gallery, I came across a man sitting with an old typewriter and a small cardboard sign that read: “Pay What You Want for a Poem.” Naturally, I had to take him up on it. I was broke, I love poetry, and it felt like a once-in-a-very-London opportunity.

When I approached him, he smiled and asked what kind of poem I wanted. I’d never had a poem written about me before, so I asked for one based on his first impression of me. Without missing a beat, he started typing, occasionally glancing up at me. At one point, he even asked me to take off my glasses so he could get a better look at my eyes (a bit intense, but we’re leaning into the poetic, right?).

When he finished, I gave him what I could and walked back to meet my group. We read the poem together, and I couldn’t help but smile, not because it was the best thing ever written, but because it was something I never expected to experience. It was spontaneous, human, and full of imperfect charm.

At the end of my trip, as I was going through everything I’d bought, I came back to that piece of paper. The poem. And I realized it was my favorite thing I’d picked up in London (yes, even counting the Harry Potter Studio Tour).

#shakespeare#william shakespeare#literary criticism#literary analysis#literary fiction#shakespeare memes#rhode island college#books#drama#london#england#notting hill#camden market#borough market#united kingdom#UK#poetry#street culture#street poems#graffiti#graffittiart#eyes#electric avenue#portobello road#street markets#markets#flea market


Text

Apis mellifera, the honey bee: a series of pictures of bees from Phil Frank, a local Maryland beekeeper and author. This set includes males (drones) and females (workers). Check out the eyes on the males that meet atop the head, the long hairs coming out of the eyes, and the modified hind legs of the females, one of many things that make this genus of bees special. Phil also took the photos, after struggling to put together our new Canon R and the various gizmos that make focus stacking work. We're now working with thirty-something megapixels of detail. Winter is here, so expect more picture uploads.
