#DataEngineering

Explore tagged Tumblr posts

Text

7 Best Hadoop Book Deals in 2025

📘 Dive into Hadoop: Unbelievable Deals in 2025! 🎉

Gear up, data enthusiasts! We've got the ultimate list of Hadoop books with deals you won't believe. Perfect for beginners or pros looking to refresh their knowledge. Learn more about the deals here: 7 Best Hadoop Book Deals in 2025

👇 Let me know your must-have book from the list!


Text

Wielding Big Data Using PySpark

Introduction to PySpark

PySpark is the Python API for Apache Spark, a distributed computing framework designed to process large-scale data efficiently. It enables parallel data processing across multiple nodes, making it a powerful tool for handling massive datasets.

Why Use PySpark for Big Data?

Scalability: Works across clusters to process petabytes of data.

Speed: Uses in-memory computation to enhance performance.

Flexibility: Supports various data formats and integrates with other big data tools.

Ease of Use: Provides SQL-like querying and DataFrame operations for intuitive data handling.

Setting Up PySpark

To use PySpark, you need to install it and set up a Spark session. Once initialized, Spark allows users to read, process, and analyze large datasets.

Processing Data with PySpark

PySpark can handle different types of data sources such as CSV, JSON, Parquet, and databases. Once data is loaded, users can explore it by checking the schema, summary statistics, and unique values.

Common Data Processing Tasks

Viewing and summarizing datasets.

Handling missing values by dropping or replacing them.

Removing duplicate records.

Filtering, grouping, and sorting data for meaningful insights.

Transforming Data with PySpark

Data can be transformed using SQL-like queries or DataFrame operations. Users can:

Select specific columns for analysis.

Apply conditions to filter out unwanted records.

Group data to find patterns and trends.

Add new calculated columns based on existing data.

Optimizing Performance in PySpark

When working with big data, optimizing performance is crucial. Some strategies include:

Partitioning: Distributing data across multiple partitions for parallel processing.

Caching: Storing intermediate results in memory to speed up repeated computations.

Broadcast Joins: Optimizing joins by broadcasting smaller datasets to all nodes.

Machine Learning with PySpark

PySpark includes MLlib, a machine learning library for big data. It allows users to prepare data, apply machine learning models, and generate predictions. This is useful for tasks such as regression, classification, clustering, and recommendation systems.

Running PySpark on a Cluster

PySpark can run on a single machine or be deployed on a cluster using a distributed computing system like Hadoop YARN. This enables large-scale data processing with improved efficiency.

Conclusion

PySpark provides a powerful platform for handling big data efficiently. With its distributed computing capabilities, it allows users to clean, transform, and analyze large datasets while optimizing performance for scalability.

For free programming language tutorials, visit https://www.tpointtech.com/


Text

Discover the key differences between SQL, NoSQL, and NewSQL databases—helping developers choose the right solution for scalability, speed, and flexibility.


Text

How Dr. Imad Syed Transformed PiLog Group into a Digital Transformation Leader

The digital age demands leaders who don’t just adapt but drive transformation. One such visionary is Dr. Imad Syed, who recently shared his incredible journey and PiLog Group’s path to success in an exclusive interview on Times Now.

In this inspiring conversation, Dr. Syed reflects on the milestones, challenges, and innovative strategies that have positioned PiLog Group as a global leader in data management and digital transformation.

The Journey of a Visionary:

From humble beginnings to spearheading PiLog’s global expansion, Dr. Syed’s story is a testament to resilience and innovation. His leadership has not only redefined PiLog but has also influenced industries worldwide, especially in domains like data governance, SaaS solutions, and AI-driven analytics.

PiLog’s Success: A Benchmark in Digital Transformation:

Under Dr. Syed’s guidance, PiLog has become synonymous with pioneering Lean Data Governance SaaS solutions. Their focus on data integrity and process automation has helped businesses achieve operational excellence. PiLog’s services are trusted by industries such as oil and gas, manufacturing, energy, utilities and nuclear, and many more.

Key Insights from the Interview:

In the interview, Dr. Syed touches upon:

The importance of data governance in digital transformation.

How PiLog’s solutions empower organizations to streamline operations.

His philosophy of continuous learning and innovation.

A Must-Watch for Industry Leaders:

If you’re a business leader or tech enthusiast, this interview is packed with actionable insights that can transform your understanding of digital innovation.

👉 Watch the full interview here:

youtube

The Global Impact of PiLog Group:

PiLog’s success story resonates globally, serving clients across Africa, the USA, EU, Gulf countries, and beyond. Their ability to adapt and innovate makes them a case study in leveraging digital transformation for competitive advantage.

Join the Conversation:

What’s your take on the future of data governance and digital transformation? Share your thoughts and experiences in the comments below.

#datamanagement#data governance#data analysis#data analytics#data scientist#big data#dataengineering#dataprivacy#data centers#datadriven#data#businesssolutions#techinnovation#businessgrowth#businessautomation#digital transformation#piloggroup#drimadsyed#timesnowinterview#datascience#artificialintelligence#bigdata#datadrivendecisions#Youtube


Text

Data Professionals: Want to Stand Out?

If you're a Data Engineer, Data Scientist, or Data Analyst, having a strong portfolio can be a game-changer.

Our latest blog dives into why portfolios matter, what to include, and how to build one that shows off your skills and projects. From data pipelines to machine learning models and interactive dashboards, let your work speak for itself!

#DataScience#DataEngineering#TechCareers#DataPortfolio#CareerTips#MachineLearning#DataAnalytics#CodingLife#ai resume#ai resume builder#airesumebuilder


Text

🚀 𝐉𝐨𝐢𝐧 𝐃𝐚𝐭𝐚𝐏𝐡𝐢'𝐬 𝐇𝐚𝐜𝐤-𝐈𝐓-𝐎𝐔𝐓 𝐇𝐢𝐫𝐢𝐧𝐠 𝐇𝐚𝐜𝐤𝐚𝐭𝐡𝐨𝐧!🚀

𝐖𝐡𝐲 𝐏𝐚𝐫𝐭𝐢𝐜𝐢𝐩𝐚𝐭𝐞?

🌟 Showcase your skills in data engineering, data modeling, and advanced analytics.

💡 Innovate to transform retail services and enhance customer experiences.

📌𝐑𝐞𝐠𝐢𝐬𝐭𝐞𝐫 𝐍𝐨𝐰: https://whereuelevate.com/drills/dataphi-hack-it-out?w_ref=CWWXX9

🏆 𝐏𝐫𝐢𝐳𝐞 𝐌𝐨𝐧𝐞𝐲:

Winner 1: INR 50,000 (Joining Bonus) + Job at DataPhi

Winners 2-5: Job at DataPhi

🔍 𝐒𝐤𝐢𝐥𝐥𝐬 𝐖𝐞'𝐫𝐞 𝐋𝐨𝐨𝐤𝐢𝐧𝐠 𝐅𝐨𝐫: 🐍 Python,💾 MS Azure Data Factory / SSIS / AWS Glue,🔧 PySpark Coding,📊 SQL DB,☁️ Databricks Azure Functions,🖥️ MS Azure,🌐 AWS Engineering

👥 𝐏𝐨𝐬𝐢𝐭𝐢𝐨𝐧𝐬 𝐀𝐯𝐚𝐢𝐥𝐚𝐛𝐥𝐞:

Senior Consultant (3-5 years)

Principal Consultant (5-8 years)

Lead Consultant (8+ years)

📍 𝐋𝐨𝐜𝐚𝐭𝐢𝐨𝐧: 𝐏𝐮𝐧𝐞 💼 𝐄𝐱𝐩𝐞𝐫𝐢𝐞𝐧𝐜𝐞: 𝟑-𝟏𝟎 𝐘𝐞𝐚𝐫𝐬 💸 𝐁𝐮𝐝𝐠𝐞𝐭: ₹𝟏𝟒 𝐋𝐏𝐀 - ₹𝟑𝟐 𝐋𝐏𝐀

ℹ 𝐅𝐨𝐫 𝐌𝐨𝐫𝐞 𝐔𝐩𝐝𝐚𝐭𝐞𝐬: https://chat.whatsapp.com/Ga1Lc94BXFrD2WrJNWpqIa

Register now and be a part of the data revolution! For more details, visit DataPhi.


Text

What sets Konnect Insights apart from other data orchestration and analysis tools available in the market for improving customer experiences in the aviation industry?

I can highlight some general factors that may set Konnect Insights apart from other data orchestration and analysis tools available in the market for improving customer experiences in the aviation industry. Keep in mind that the competitive landscape and product offerings may have evolved since my last knowledge update. Here are some potential differentiators:

Aviation Industry Expertise: Konnect Insights may offer specialized features and expertise tailored to the unique needs and challenges of the aviation industry, including airports, airlines, and related businesses.

Multi-Channel Data Integration: Konnect Insights may excel in its ability to integrate data from a wide range of sources, including social media, online platforms, offline locations within airports, and more. This comprehensive data collection can provide a holistic view of the customer journey.

Real-Time Monitoring: The platform may provide real-time monitoring and alerting capabilities, allowing airports to respond swiftly to emerging issues or trends and enhance customer satisfaction.

Customization: Konnect Insights may offer extensive customization options, allowing airports to tailor the solution to their specific needs, adapt to unique workflows, and focus on the most relevant KPIs.

Actionable Insights: The platform may be designed to provide actionable insights and recommendations, guiding airports on concrete steps to improve the customer experience and operational efficiency.

Competitor Benchmarking: Konnect Insights may offer benchmarking capabilities that allow airports to compare their performance to industry peers or competitors, helping them identify areas for differentiation.

Security and Compliance: Given the sensitive nature of data in the aviation industry, Konnect Insights may include robust security features and compliance measures to ensure data protection and adherence to industry regulations.

Scalability: The platform may be designed to scale effectively to accommodate the data needs of large and busy airports, ensuring it can handle high volumes of data and interactions.

Customer Support and Training: Konnect Insights may offer strong customer support, training, and consulting services to help airports maximize the value of the platform and implement best practices for customer experience improvement.

Integration Capabilities: It may provide seamless integration with existing airport systems, such as CRM, ERP, and database systems, to ensure data interoperability and process efficiency.

Historical Analysis: The platform may enable airports to conduct historical analysis to track the impact of improvements and initiatives over time, helping measure progress and refine strategies.

User-Friendly Interface: Konnect Insights may prioritize a user-friendly and intuitive interface, making it accessible to a wide range of airport staff without requiring extensive technical expertise.

It's important for airports and organizations in the aviation industry to thoroughly evaluate their specific needs and conduct a comparative analysis of available solutions to determine which one aligns best with their goals and requirements. Additionally, staying updated with the latest developments and customer feedback regarding Konnect Insights and other similar tools can provide valuable insights when making a decision.

#DataOrchestration#DataManagement#DataOps#DataIntegration#DataEngineering#DataPipeline#DataAutomation#DataWorkflow#ETL (Extract#Transform#Load)#DataIntegrationPlatform#BigData#CloudComputing#Analytics#DataScience#AI (Artificial Intelligence)#MachineLearning#IoT (Internet of Things)#DataGovernance#DataQuality#DataSecurity


Text

Data Engineer vs. Data Scientist: The Battle for Data Supremacy

In the rapidly evolving landscape of technology, two professions have emerged as the architects of the data-driven world: Data Engineers and Data Scientists. In this comparative study, we will dive deep into the worlds of these two roles, exploring their unique responsibilities, salary prospects, and essential skills that make them indispensable in the realm of Big Data and Artificial Intelligence.

The world of data is boundless, and the roles of Data Engineers and Data Scientists are indispensable in harnessing its true potential. Whether you are a visionary Data Engineer or a curious Data Scientist, your journey into the realm of Big Data and AI is filled with infinite possibilities. Enroll in the School of Core AI’s Data Science course today and embrace the future of technology with open arms.


Text

Choosing between Power BI and Tableau for Databricks integration? This visual guide breaks down the key features that matter to data engineers and analysts—from connection methods to real-time analytics, cloud compatibility, and authentication.

🔍 Whether you're working in a Microsoft ecosystem or multi-cloud environment, knowing the right BI tool to pair with Databricks can accelerate your data pipeline efficiency.

💡 Explore the comparison and choose smarter.

🧠 Learn more from our detailed blog post: 🔗 https://databrickstraining7.blogspot.com/2025/08/databricks-power-bitableau-integration.html

🎓 Upskill with expert-led Databricks Training at AccentFuture: 🔗 https://www.accentfuture.com/courses/databricks-training/

#Databricks#PowerBI#Tableau#DataEngineering#BigDataTools#BIcomparison#ETLtools#DataAnalytics#MicrosoftPowerBI#TableauIntegration#SparkWithDatabricks#DataPipeline#AccentFuture#CloudAnalytics#DataCareer


Text

Discover how combining Apache Spark, Power BI, and Tableau on Databricks enhances big data analytics and real-time insights. This powerful trio empowers organizations to process large-scale data, visualize patterns instantly, and make informed business decisions.

🚀 What You'll Learn:

How Apache Spark powers large-scale data processing

Real-time visualization with Tableau

Data-driven insights using Power BI

Seamless integration using Databricks

🎓 Learn Databricks from experts: 🔗 AccentFuture Databricks Training Course

📝 Read full blog here: 🔗 Databricks + Power BI + Tableau Integration Explained

💡 Start building your data career with the right tools and insights!

#Databricks#ApacheSpark#PowerBI#Tableau#DataVisualization#BigData#DataEngineering#AccentFuture#DataAnalytics#MachineLearning#LinkedInLearning#FlipboardTech#Scoopit#DataCommunity#TechTraining#RealTimeInsights#BItools


Text

Top Data Engineering Services by BestPeers | Scalable & Cloud-Ready Solutions

Get scalable, cloud-based data engineering services from BestPeers. Clean, process & model data to unlock real-time insights for your business.


Text

7 Types of Data Pipelines Every Data Engineer Should Know | #dataengineering #shorts

Join this channel to get access to perks: – – – Book a … source


Text

🚀 Azure Data Engineer Online Training – Build Your Cloud Data Career with VisualPath! Step confidently into one of the most in-demand IT roles with VisualPath’s Azure Data Engineer Course Online. Whether you’re a fresher, a working professional, or an enterprise team seeking corporate upskilling, this practical program will help you master the skills to design, develop, and manage scalable data solutions on Microsoft Azure.

💡 Key Skills You’ll Gain:

🔹 Azure Data Factory: Create and automate robust data pipelines

🔹 Azure Databricks: Handle big data and deliver real-time analytics

🔹 Power BI: Build interactive dashboards and data visualizations

📞 Reserve Your FREE Demo Spot Today – Limited Seats Available!

📲 WhatsApp Now: https://wa.me/c/917032290546

🔗 Visit: https://www.visualpath.in/online-azure-data-engineer-course.html

📖 Blog: https://visualpathblogs.com/category/azure-data-engineering/

#visualpathedu#Azure#AzureDataEngineer#MicrosoftAzure#AzureCloud#DataEngineering#CloudComputing#AzureTraining#AzureCertification#BigData#ETL#SQL#PowerBI#Databricks#AzureDataFactory#DataAnalytics#CloudEngineer#MachineLearning#AI#BusinessIntelligence#Snowflake#AzureDataScience


Text

Azure Data Engineer Skills

🌐 Master In-Demand Azure Data Engineer Skills in 2025! 🌐

Hands-On Skills That Matter

Build End-to-End Data Pipelines (ETL/ELT)

Work with Azure Data Factory & Synapse Analytics

Learn SQL, Python, Spark & Databricks

Real-Time Data with Event Hubs & Stream Analytics

Implement Data Security & Role-Based Access

Automate with CI/CD using Azure DevOps

Ready to upgrade your data career? 📞 Enroll Now: +91-9882498844 🔗 Visit Us: www.azuretrainings.in

#azurecertification#azuretraining#ittraining#dataengineering#azure devops#microsoft azure#azuretime#certificationtraining#azurecourse#LearnAzure#AzureDataEngineer#TechTraining#MicrosoftAzure#CareerGrowth#Trending#Viral


Text

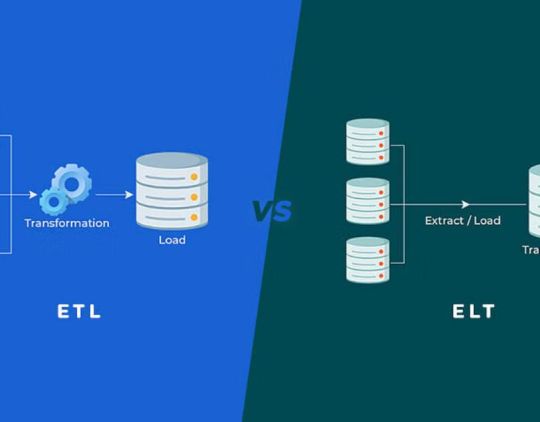

ETL vs ELT: Telling Them Apart to Choose the Right Data Processing Strategy

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are both data processing workflows used in analytics systems, but they differ in the order of their steps and in how they make use of resources. Understanding the strengths and weaknesses of each model helps a business choose the right solution and improve the performance and accuracy of its data analysis.

Read more: ETL and ELT: The Differences You Need to Know
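The difference in ordering can be illustrated with a small sketch. It uses Python's built-in sqlite3 module as a stand-in for a data warehouse; the table names, sample rows, and cleaning rules are all hypothetical. In the ETL function the data is cleaned in application code before loading, while in the ELT function the raw data is loaded first and cleaned with SQL inside the database.

```python
import sqlite3

# Hypothetical raw records: untidy names and one unparseable amount.
raw_rows = [("  alice ", "120.50"), ("BOB", "80"), ("cara", "not-a-number")]


def parse_amount(text):
    """Return the amount as a float, or None if it cannot be parsed."""
    try:
        return float(text)
    except ValueError:
        return None


def run_etl(rows):
    """Extract -> Transform (in Python) -> Load (clean rows only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (customer TEXT, amount REAL)")
    cleaned = [(name.strip().lower(), parse_amount(amount)) for name, amount in rows]
    cleaned = [row for row in cleaned if row[1] is not None]
    conn.executemany("INSERT INTO sales VALUES (?, ?)", cleaned)
    return conn.execute(
        "SELECT customer, amount FROM sales ORDER BY customer"
    ).fetchall()


def run_elt(rows):
    """Extract -> Load (raw, as text) -> Transform (with SQL in the database)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_sales (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?)", rows)
    conn.execute(
        """
        CREATE TABLE sales AS
        SELECT lower(trim(customer)) AS customer,
               CAST(amount AS REAL)  AS amount
        FROM raw_sales
        WHERE amount GLOB '[0-9]*'  -- keep rows whose amount starts with a digit
        """
    )
    return conn.execute(
        "SELECT customer, amount FROM sales ORDER BY customer"
    ).fetchall()


etl_result = run_etl(raw_rows)
elt_result = run_elt(raw_rows)
```

Both functions end with the same cleaned table. The practical difference is where the transformation work runs and whether the raw data remains available in the target system afterwards, as it does in the ELT version.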


Text

Data Engineering and AI: What You Need to Know

In today’s digital-first world, Artificial Intelligence (AI) is driving innovation across every industry. From personalized product recommendations to intelligent chatbots and self-driving cars, AI is everywhere. But behind every smart system lies something critical — data. And that’s where data engineering comes in.

If you're considering a career in tech, understanding how Data Engineering and AI work together is essential. Let’s explore their connection, why this pairing matters, and how a BCA in Data Engineering from Edubex can prepare you for this exciting future.

What is Data Engineering?

Data engineering involves designing, building, and maintaining systems that collect, store, and process large volumes of data. Data engineers make it possible for data scientists and AI systems to access clean, reliable, and scalable data.

Their core responsibilities include:

Developing data pipelines

Working with databases and cloud platforms

Ensuring data integrity and security

Automating data workflows

Without data engineers, AI algorithms would have no reliable data to work with.

How AI Relies on Data Engineering

AI needs vast amounts of high-quality data to learn and make accurate predictions. Here’s how data engineers support AI:

✅ Data Collection: Gathering raw data from multiple sources

✅ Data Cleaning: Removing errors, duplicates, and inconsistencies

✅ Data Structuring: Organizing data into usable formats

✅ Real-Time Data Flow: Providing continuous data streams for AI applications like fraud detection or recommendation systems

In short, AI is only as good as the data it receives, and that data is managed by engineers.

Key Tools and Technologies You’ll Learn

A BCA in Data Engineering from Edubex will help you master the tools that power modern AI systems:

Programming Languages: Python, SQL, Java

Big Data Platforms: Hadoop, Apache Spark

Cloud Computing: AWS, Google Cloud, Microsoft Azure

Data Warehousing: Snowflake, Redshift, BigQuery

AI/ML Basics: Understanding models and how they consume data

This skill set forms the backbone of both data engineering and AI development.

Why Choose a BCA in Data Engineering at Edubex?

Industry-Aligned Curriculum: Learn what's relevant in today’s job market

Hands-on Learning: Work on real-world data sets and projects

Career-Focused: Prepare for roles like Data Engineer, AI Engineer, and Data Analyst

Flexible Learning: 100% online learning options to fit your schedule

Global Recognition: Programs aligned with international standards

Careers at the Intersection of Data Engineering and AI

Completing a BCA in Data Engineering opens doors to a variety of AI-related careers:

Machine Learning Data Engineer

Data Pipeline Developer

Big Data Engineer

AI Infrastructure Engineer

Cloud Data Engineer

These roles are in high demand across industries like finance, healthcare, e-commerce, and more.

Final Thoughts

As AI continues to reshape our world, data engineers are becoming the unsung heroes of this transformation. By enrolling in a BCA in Data Engineering at Edubex, you're not just learning how to handle data — you’re preparing to power the intelligent systems of tomorrow.

Ready to take your place in the future of AI and data?
