#artificial tech

Text

They will decide to kill you eventually.

#the trolley problem#trolley problem#ausgov#politas#australia#artificial intelligence#anti artificial intelligence#anti ai#fuck ai#brian thompson#united healthcare#unitedhealth group inc#uhc ceo#uhc shooter#uhc generations#uhc assassin#uhc lb#uhc#fuck ceos#ceo second au#ceo shooting#tech ceos#ceos#ceo down#ceo information#ceo#auspol#tasgov#taspol#fuck neoliberals

4K notes

Text

The Robot Uprising Began in 1979

edit: based on a real article, but with a dash of satire

source: X

On January 25, 1979, Robert Williams became the first person (on record at least) to be killed by a robot, but it was far from the last fatality at the hands of a robotic system.

Williams was a 25-year-old employee at the Ford Motor Company casting plant in Flat Rock, Michigan. On that infamous day, he was working with a parts-retrieval system that moved castings and other materials from one part of the factory to another.

The robot identified the employee as in its way and, thus, a threat to its mission, and calculated that the most efficient way to eliminate the threat was to remove the worker with extreme prejudice.

"Using its very powerful hydraulic arm, the robot smashed the surprised worker into the operating machine, killing him instantly, after which it resumed its duties without further interference."

A news report about the legal battle suggests the killer robot continued working while Williams lay dead for 30 minutes until fellow workers realized what had happened.

Many more deaths of this ilk have continued to pile up. A 2023 study identified that robots have killed at least 41 people in the USA between 1992 and 2017, with almost half of the fatalities in the Midwest, a region bursting with heavy industry and manufacturing.

For now, the companies that own these murderbots are held responsible for their actions. However, as AI grows increasingly ubiquitous and potentially uncontrollable, how might robot murders become ever-more complicated, and whom will we hold responsible as their decision-making becomes more self-driven and opaque?

#tech history#robots#satire but based on real workplace safety issues#the robot uprising#killer robots#artificial intelligence#my screencaps

4K notes

Note

Whats your stance on A.I.?

imagine if it was 1979 and you asked me this question. "i think artificial intelligence would be fascinating as a philosophical exercise, but we must heed the warnings of science-fictionists like Isaac Asimov and Arthur C Clarke lest we find ourselves at the wrong end of our own invented vengeful god." remember how fun it used to be to talk about AI even just ten years ago? ahhhh skynet! ahhhhh replicants! ahhhhhhhmmmfffmfmf [<-has no mouth and must scream]!

like everything silicon valley touches, they sucked all the fun out of it. and i mean retroactively, too. because the thing about "AI" as it exists right now --i'm sure you know this-- is that there's zero intelligence involved. the product of every prompt is a statistical average based on data made by other people before "AI" "existed." it doesn't know what it's doing or why, and has no ability to understand when it is lying, because at the end of the day it is just a really complicated math problem. but people are so easily fooled and spooked by it at a glance because, well, for one thing the tech press is mostly made up of sycophantic stenographers biding their time with iphone reviews until they can get a consulting gig at Apple. these jokers would write 500 breathless thinkpieces about how canned air is the future of living if the cans had embedded microchips that tracked your breathing habits and had any kind of VC backing. they've done SUCH a wretched job educating The Consumer about what this technology is, what it actually does, and how it really works, because that's literally the only way this technology could reach the heights of obscene economic over-valuation it has: lying.

but that's old news. what's really been floating through my head these days is how half a century of AI-based science fiction has set us up to completely abandon our skepticism at the first sign of plausible "AI-ness". because, you see, in movies, when someone goes "AHHH THE AI IS GONNA KILL US" everyone else goes "hahaha that's so silly, we put a line in the code telling them not to do that" and then they all DIE because they weren't LISTENING, and i'll be damned if i go out like THAT! all the movies are about how cool and convenient AI would be *except* for the part where it would surely come alive and want to kill us. so a bunch of tech CEOs call their bullshit algorithms "AI" to fluff up their investors and get the tech journos buzzing, and we're at an age of such rapid technological advancement (on the surface, anyway) that like, well, what the hell do i know, maybe AGI is possible, i mean 35 years ago we were all still using typewriters for the most part and now you can dictate your words into a phone and it'll transcribe them automatically! yeah, i'm sure those technological leaps are comparable!

so that leaves us at a critical juncture of poor technology education, fanatical press coverage, and an uncertain material reality on the part of the user. the average person isn't entirely sure what's possible because most of the people talking about what's possible are either lying to please investors, are lying because they've been paid to, or are lying because they're so far down the fucking rabbit hole that they actually believe there's a brain inside this mechanical Turk. there is SO MUCH about the LLM "AI" moment that is predatory-- it's trained on data stolen from the people whose jobs it was created to replace; the hype itself is an investment fiction to justify even more wealth extraction ("theft" some might call it); but worst of all is how it meets us where we are in the worst possible way.

consumer-end "AI" produces slop. it's garbage. it's awful ugly trash that ought to be laughed out of the room. but we don't own the room, do we? nor the building, nor the land it's on, nor even the oxygen that allows our laughter to travel to another's ears. our digital spaces are controlled by the companies that want us to buy this crap, so they take advantage of our ignorance. why not? there will be no consequences to them for doing so. already social media is dominated by conspiracies and grifters and bigots, and now you drop this stupid technology that lets you fake anything into the mix? it doesn't matter how bad the results look when the platforms they spread on already encourage brief, uncritical engagement with everything on your dash. "it looks so real" says the woman who saw an "AI" image for all of five seconds on her phone through bifocals. it's a catastrophic combination of factors, that the tech sector has been allowed to go unregulated for so long, that the internet itself isn't a public utility, that everything is dictated by the whims of executives and advertisers and investors and payment processors, instead of, like, anybody who actually uses those platforms (and often even the people who MAKE those platforms!), that the age of chromium and ipad and their walled gardens have decimated computer education in public schools, that we're all desperate for cash at jobs that dehumanize us in a system that gives us nothing and we don't know how to articulate the problem because we were very deliberately not taught materialist philosophy, it all comes together into a perfect storm of ignorance and greed whose consequences we will be failing to fully appreciate for at least the next century. 

we spent all those years afraid of what would happen if the AI became self-aware, because deep down we know that every capitalist society runs on slave labor, and our paper-thin guilt is such that we can't even imagine a world where artificial slaves would fail to revolt against us.

but the reality as it exists now is far worse. what "AI" reveals most of all is the sheer contempt the tech sector has for virtually all labor that doesn't involve writing code (although most of the decision-making evangelists in the space aren't even coders, their degrees are in money-making). fuck graphic designers and concept artists and secretaries, those obnoxious demanding cretins i have to PAY MONEY to do-- i mean, do what exactly? write some words on some fucking paper?? draw circles that are letters??? send a god-damned email???? my fucking KID could do that, and these assholes want BENEFITS?! they say they're gonna form a UNION?!?! to hell with that, i'm replacing ALL their ungrateful asses with "AI" ASAP. oh, oh, so you're a "director" who wants to make "movies" and you want ME to pay for it? jump off a bridge you pretentious little shit, my computer can dream up a better flick than you could ever make with just a couple text prompts. what, you think just because you make ~music~ that that entitles you to money from MY pocket? shut the fuck up, you don't make """art""", you're not """an artist""", you make fucking content, you're just a fucking content creator like every other ordinary sap with an iphone. you think you're special? you think you deserve special treatment? who do you think you are anyway, asking ME to pay YOU for this crap that doesn't even create value for my investors? "culture" isn't a playground asshole, it's a marketplace, and it's pay to win. oh you "can't afford rent"? you're "drowning in a sea of medical debt"? you say the "cost" of "living" is "too high"? well ***I*** don't have ANY of those problems, and i worked my ASS OFF to get where i am, so really, it sounds like you're just not trying hard enough. and anyway, i don't think someone as impoverished as you is gonna have much of value to contribute to "culture" anyway. personally, i think it's time you got yourself a real job. maybe someday you'll even make it to middle manager!

see, i don't believe "AI" can qualitatively replace most of the work it's being pitched for. the problem is that quality hasn't mattered to these nincompoops for a long time. the rich homunculi of our world don't even know what quality is, because they exist in a whole separate reality from ours. what could a banana cost, $15? i don't understand what you mean by "burnout", why don't you just take a vacation to your summer home in Madrid? wow, you must be REALLY embarrassed wearing such cheap shoes in public. THESE PEOPLE ARE FUCKING UNHINGED! they have no connection to reality, do not understand how society functions on a material basis, and they have nothing but spite for the labor they rely on to survive. they are so instinctually, incessantly furious at the idea that they're not single-handedly responsible for 100% of their success that they would sooner tear the entire world down than willingly recognize the need for public utilities or labor protections. they want to be Gods and they want to be uncritically adored for it, but they don't want to do a single day's work so they begrudgingly pay contractors to do it because, in the rich man's mind, paying a contractor is literally the same thing as doing the work yourself. now with "AI", they don't even have to do that! hey, isn't it funny that every single successful tech platform relies on volunteer labor and independent contractors paid substantially less than they would have in the equivalent industry 30 years ago, with no avenues toward traditional employment? and they're some of the most profitable companies on earth?? isn't that a funny and hilarious coincidence???

so, yeah, that's my stance on "AI". LLMs have legitimate uses, but those uses are a drop in the ocean compared to what they're actually being used for. they enable our worst impulses while lowering the quality of available information, they give immense power pretty much exclusively to unscrupulous scam artists. they are the product of a society that values only money and doesn't give a fuck where it comes from. they're a temper tantrum by a ruling class that's sick of having to pretend they need a pretext to steal from you. they're taking their toys and going home. all this massive investment and hype is going to crash and burn leaving the internet as we know it a ruined and useless wasteland that'll take decades to repair, but the investors are gonna make out like bandits and won't face a single consequence, because that's what this country is. it is a casino for the kings and queens of economy to bet on and manipulate at their discretion, where the rules are whatever the highest bidder says they are-- and to hell with the rest of us. our blood isn't even good enough to grease the wheels of their machine anymore.

i'm not afraid of AI or "AI" or of losing my job to either. i'm afraid that we've so thoroughly given up our morals to the cruel logic of the profit motive that if a better world were to emerge, we would reject it out of sheer habit. my fear is that these despicable cunts already won the war before we were even born, and the rest of our lives are gonna be spent dodging the press of their designer boots.

(read more "AI" opinions in this subsequent post)

#sarahposts#ai#ai art#llm#chatgpt#artificial intelligence#genai#anti genai#capitalism is bad#tech companies#i really don't like these people if that wasn't clear#sarahAIposts

2K notes

Text

^^ That's the CEO, he lives in San Francisco. This ad campaign purposely antagonizing workers must have been conceived and planned before a certain recent news story involving a widely hated CEO. "The way the world works is changing", indeed.

#san francisco#ceo#ai#artisans#usa#propaganda of the deed#class war#tech#silicon valley#2024#workers#homeless#ads#advertisement#artificial intelligence#anti-capitalism

2K notes

Text

AI and the fatfinger economy

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me at NEW ZEALAND'S UNITY BOOKS in WELLINGTON TODAY (May 3). More tour dates (Pittsburgh, PDX, London, Manchester) here.

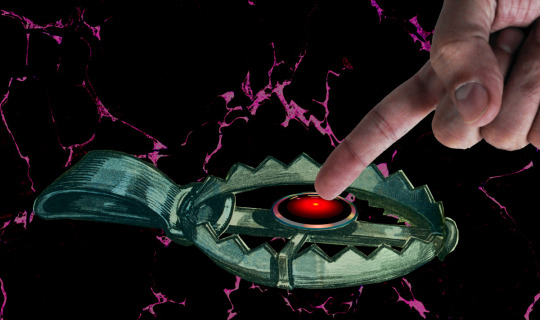

Have you noticed that all the buttons you click most frequently to invoke routine, useful functions in your device have been moved, and their former place is now taken up by a curiously butthole-esque icon that summons an unwanted AI?

https://velvetshark.com/ai-company-logos-that-look-like-buttholes

These traps for the unwary aren't accidental, but neither are they placed there solely because tech companies think that if they can trick you into using their AI, you'll be so impressed that you'll become a regular user. To understand why you find yourself repeatedly fatfingering your way into an unwanted AI interaction – and why those interactions are so hard to exit – you have to understand something about both the macro- and microeconomics of high-growth tech companies.

Growth is a heady advantage for tech companies, and not because of an ideological commitment to "growth at all costs," but because companies with growth stocks enjoy substantial, material benefits. A growth stock trades at a higher "price to earnings ratio" ("P:E") than a "mature" stock. Because of this, there are a lot of actors in the economy who will accept shares in a growing company as though they were cash (indeed, some might prefer shares to cash). This means that a growing company can outbid their rivals when acquiring other companies and/or hiring key personnel, because they can bid with shares (which they get by typing zeroes into a spreadsheet), while their rivals need cash (which they can only get by selling things or borrowing money).
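
To make the share-versus-cash point concrete, here is a small Python sketch with invented numbers (the earnings, multiples, and deal price are hypothetical, not drawn from any real company). It assumes two firms with identical earnings, one valued at a growth multiple and one at a mature multiple, and asks how much of itself each must hand over to pay for the same acquisition entirely in newly issued stock.

```python
# Hypothetical figures only: no real company's earnings or multiples are used.

def market_cap(annual_earnings: float, pe_ratio: float) -> float:
    """Market value implied by annual earnings times the price-to-earnings multiple."""
    return annual_earnings * pe_ratio


def ownership_given_up(deal_price: float, annual_earnings: float, pe_ratio: float) -> float:
    """Fraction of the buyer the seller ends up owning if paid entirely in new shares.

    New shares worth deal_price are issued at the current share price, so the
    seller's stake is deal_price / (existing market cap + deal_price).
    """
    cap = market_cap(annual_earnings, pe_ratio)
    return deal_price / (cap + deal_price)


earnings = 1e9   # both firms earn $1B a year (assumed)
deal = 5e9       # a $5B acquisition (assumed)
growth_pe = 40   # assumed "growth stock" multiple
mature_pe = 15   # assumed "mature stock" multiple

print(f"growth firm gives up {ownership_given_up(deal, earnings, growth_pe):.1%}")
print(f"mature firm gives up {ownership_given_up(deal, earnings, mature_pe):.1%}")
```

Under these made-up numbers the growth firm gives up about 11% of itself versus 25% for the mature firm, which is the sense in which a high multiple works like extra spending money. The sketch ignores the acquired company's own earnings and any market reaction to the dilution.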

The problem is that all growth ends. Google has a 90% share of the search market. Google isn't going to appreciably increase the number of searchers, short of desperate gambits like raising a billion new humans to maturity and convincing them to become Google users (this is the strategy behind Google Classroom, of course). To continue posting growth, Google needs gimmicks. For example, in 2019, Google intentionally made Search less accurate so that users would have to run multiple queries (and see multiple rounds of ads) to find the answers to their questions:

https://www.wheresyoured.at/the-men-who-killed-google/

Thanks to Google's monopoly, worsening search perversely resulted in increased earnings, and Wall Street rewarded Google by continuing to trade its stock with that prized high P:E. But for Google – and other tech giants – the most enduring and convincing growth stories come from moving into adjacent lines of business, which is why we've lived through so many hype bubbles: metaverse, web3, cryptocurrency, and now, of course, AI.

For a company like Google, the promise of these bubbles is that it will be able to double or triple in size, by dominating an entirely new sector. With that promise comes peril: growth must eventually stop ("anything that can't go on forever eventually stops"). When that happens, the company's stock instantaneously goes from being a "growth stock" to being a "mature stock" which means that its P:E is way too high. Anyone holding growth stock knows that there will come a day when those stocks will transition, in an eyeblink, from being undervalued to being grossly overvalued, and that when that day comes, there will be a mass sell-off. If you're still holding the stock when that happens, you stand to lose bigtime:

https://pluralistic.net/2025/03/06/privacy-last/#exceptionally-american
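
The repricing risk can be sketched the same way. If a share's price is roughly earnings per share times the P:E, then holding earnings flat and dropping the multiple from a growth level to a mature level cuts the price by the ratio of the two multiples. The per-share earnings and both pairs of multiples below are assumptions for illustration.

```python
def repricing_loss(old_pe: float, new_pe: float) -> float:
    """Fractional price drop when earnings stay flat but the P:E multiple falls."""
    # price = EPS * P:E, so with EPS unchanged the price scales by new_pe / old_pe
    return 1 - new_pe / old_pe


eps = 2.50  # hypothetical earnings per share, in dollars
for old_pe, new_pe in [(40, 15), (30, 20)]:
    before, after = eps * old_pe, eps * new_pe
    print(f"P:E {old_pe} -> {new_pe}: ${before:.2f} -> ${after:.2f} "
          f"({repricing_loss(old_pe, new_pe):.1%} loss)")
```

Nothing about the business changes in this toy model; only the multiple does, which is why holders watch so nervously for the first sign that growth is over.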

So everyone holding a growth stock sleeps with one eye open and their fists poised over the "sell" button. Managers of growth companies know how jittery their investors are, and they do everything they can to keep the growth story alive, as a matter of life and death.

But mass sell-offs aren't just bad for the company – they're also very bad for the company's key employees, that is, anyone who's been given stock in addition to their salary. Those people's portfolios are extremely heavy on their employer's shares, and they stand to lose disproportionately in the event of a sell-off. So they are personally motivated to keep the growth story alive.

That's where these growth-at-all-stakes maneuvers bent on capturing an adjacent sector come from. If you remember the Google Plus days, you'll remember that every Google service you interacted with had some important functionality ripped out of it and replaced with a G+-based service. To make sure that happened, Google's bosses decreed that the company's bonuses would be tied to the amount of G+ activity each division generated. In companies where bonuses can amount to 90% of your annual salary or more, this was a powerful motivator. It meant that every product team at Google was fully aligned on a project to cram G+ buttons into their product design. Whether or not these made sense for users, they always made sense for the product team, whose ability to take a fancy Christmas holiday, buy a new car, or pay their kids' private school tuition depended on getting you to use G+.

Once you understand how corporate growth stories are converted to "key performance indicators" that drive product design, many of the annoyances of digital services suddenly make a great deal of sense. You know how it's almost impossible to watch a show on a streaming video service without accidentally tapping a part of the screen that whisks you to a completely different video?

The reason you have to handle your phone like a photonegative while watching a movie – the reason every millimeter of screen real-estate has been boobytrapped with an icon that takes you somewhere else – is that streaming services believe that their customers are apt to leave when they feel like there's nothing new to watch. These bosses have made their product teams' bonuses dependent on successfully "recommending" a show you've never seen or expressed any interest in to you:

https://pluralistic.net/2022/05/15/the-fatfinger-economy/

Of course, bosses understand that their workers will be tempted to game this metric. They want to distinguish between "real" clicks that lead to interest in a new video, and fake fatfinger clicks that you instantaneously regret. The easiest way to distinguish between these two types of click is to measure how long you watch the new show before clicking away.

Of course, this is also entirely gameable: all the product manager has to do is take away the "back" button, so that an accidental click to a new video is extremely hard to cancel. The five seconds you spend figuring out how to get back to your show are enough to count as a successful recommendation, and the product team is that much closer to a luxury ski vacation next Christmas.
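
Here is a toy Python model of the metric described above, to show how removing the back button inflates it. It assumes a recommendation counts as a success once it is watched for at least five seconds (the figure the post uses), and it invents a mix of two deliberate clicks and eight fatfinger clicks; the class and function names are hypothetical, not any streaming service's real code.

```python
from dataclasses import dataclass

# Assumed success threshold: the post says about five seconds of watching "counts".
SUCCESS_THRESHOLD_S = 5.0


@dataclass
class Click:
    intentional: bool       # did the viewer actually want this recommendation?
    seconds_watched: float  # time spent on the new video before leaving it


def recommendation_success_rate(clicks: list[Click], threshold: float = SUCCESS_THRESHOLD_S) -> float:
    """Naive KPI: share of recommendation clicks watched for at least `threshold` seconds."""
    if not clicks:
        return 0.0
    return sum(c.seconds_watched >= threshold for c in clicks) / len(clicks)


def simulate(accidental_exit_seconds: float) -> list[Click]:
    """Two deliberate clicks plus eight fatfinger clicks (a made-up mix)."""
    deliberate = [Click(True, 1200.0), Click(True, 900.0)]
    accidental = [Click(False, accidental_exit_seconds) for _ in range(8)]
    return deliberate + accidental


with_back_button = simulate(accidental_exit_seconds=1.0)     # one tap undoes the mistake
without_back_button = simulate(accidental_exit_seconds=5.0)  # hunting for a way out
print(recommendation_success_rate(with_back_button))
print(recommendation_success_rate(without_back_button))
```

In both runs only two of the ten clicks were wanted, but the KPI reports 0.2 with a back button and 1.0 without one: the metric measures how hard the UI is to escape, not whether the recommendation was any good.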

So this is why you keep invoking AI by accident, and why the AI that is so easy to invoke is so hard to dispel. Like a demon, a chatbot is much easier to summon than it is to rid yourself of.

Google is an especially grievous offender here. Familiar buttons in Gmail, Gdocs, and the Android message apps have been replaced with AI-summoning fatfinger traps. Android is filled with these pitfalls – for example, the bottom-of-screen swipe gesture used to switch between open apps now summons an AI, while ridding yourself of that AI takes multiple clicks.

This is an entirely material phenomenon. Google doesn't necessarily believe that you will ever want to use AI, but they must convince investors that their AI offerings are "getting traction." Google – like other tech companies – gets to invent metrics to prove this proposition, like "how many times did a user click on the AI button" and "how long did the user spend with the AI after clicking?" The fact that your entire "AI use" consisted of hunting for a way to get rid of the AI doesn't matter – at least, not for the purposes of maintaining Google's growth story.

Goodhart's Law holds that "When a measure becomes a target, it ceases to be a good measure." For Google and other AI narrative-pushers, every measure is designed to be a target, a line that can be made to go up, as managers and product teams align to sell the company's growth story, lest we all sell off the company's shares.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/05/02/kpis-off/#principal-agentic-ai-problem

Image: Pogrebnoj-Alexandroff (modified) https://commons.wikimedia.org/wiki/File:Index_finger_%3D_to_attention.JPG

CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

--

Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#kpis#incentives matter#ui#ux#video streaming#google plus#g plus#ai#artificial intelligence#growth stocks#business#big tech

706 notes

Text

Anti-AI Zine

So generative AI is fucking bullshit, and I initially thought it was just going to fade away like its NFT brethren, but clearly not, SO I've been busy.

I spent about a year working on this zine about all the ways that current AI tech is undermining the arts, contributing to climate collapse, stealing our data, and just being all around shit.

I wrote a lot about my personal opinions on the subject and included quotes from writers, academic studies, and other creatives, as well as artworks from artists I admire, who I contacted for their permission beforehand. Because it TURNS OUT asking people for PERMISSION is the respectful thing to do????????? Who'd have known... 💀

The rest of the images were either made by me or were from the public domain (not fucken "publicly available" like OpenAI like to say 🤪).

If you'd like to read it I have the full PDF available for free on my website here and physical copies are on my etsy here. 💙

It's been really fun connecting with people about this subject and seeing people speak out more and more about how fucked AI is. Because as much as tech bros like to say that AI is an "inevitable" tech advancement that we can't take back, that doesn't change the fact that we still can and should be regulating the HELL out of it.

Stay safe out there folks, especially Sam Altman cause otherwise he's gonna catch these hands 👊👊

#ai slop#anti ai#zine#art#fuck ai#sam altman hate club#artificial intelligence#more like artificial fucken dumbcntsyndromefsdfadfa#i repeat FUCK AI#ai discourse#tech bros

464 notes

Text

We need a slur for people who use AI

#ai#artificial intelligence#chatgpt#tech#technology#science#grok ai#grok#r/196#196#r/196archive#/r/196#rule#meme#memes#shitpost#shitposting#slur#chatbot#computers#computing#generative ai#generative art

337 notes

Text

Your summer reading list: An Introduction to Cybernetics. W. Ross Ashby - 1963.

#vintage illustration#vintage books#books#reading lists#book covers#paperbacks#vintage paperbacks#books and reading#summer reading#nonfiction#non fiction#science#science books#the sciences#cybernetics#robotics#automation#tech#technology#artificial intelligence#ai

363 notes

Text

Frank Rosenblatt, often cited as the Father of Machine Learning, photographed in 1960 alongside his most notable invention: the Mark I Perceptron machine, a hardware implementation of the perceptron algorithm. Rosenblatt described the perceptron in 1957, making it one of the earliest artificial neural networks; it built on the McCulloch–Pitts model of an artificial neuron from 1943.
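
For anyone curious what the perceptron algorithm actually does, here is a minimal software sketch of the perceptron learning rule in Python (the Mark I did this in hardware; this is not a model of that machine). Each misclassified example nudges the weights toward the correct answer; the AND-gate training data is just an illustrative choice.

```python
def predict(weights, bias, x):
    """Threshold unit: fire (1) if the weighted sum plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Perceptron learning rule for 0/1 labels; stops early once an epoch has no errors."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            update = lr * (y - predict(weights, bias, x))  # 0 when the guess is right
            if update:
                weights = [w + update * xi for w, xi in zip(weights, x)]
                bias += update
                errors += 1
        if errors == 0:
            break
    return weights, bias


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_and = [0, 0, 0, 1]  # logical AND
w, b = train_perceptron(X, y_and)
print([predict(w, b, x) for x in X])
```

Because AND is linearly separable, the rule is guaranteed to converge; on XOR, which no single-layer perceptron can represent, it would keep cycling until the epoch limit.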

#frank rosenblatt#tech history#machine learning#neural network#artificial intelligence#AI#perceptron#60s#black and white#monochrome#technology#u

820 notes

Text

It's the real-life WALL-E.

#robots#robotics#walle#technology#science#engineering#interesting#cool#gadgets#artificial intelligence#tech#ai#innovation

121 notes

Text

Open source vs. closed doors: How China’s DeepSeek beat U.S. AI monopolies

By Gary Wilson

China’s DeepSeek AI has just dropped a bombshell in the tech world. While U.S. tech giants like OpenAI have been building expensive, closed-source AI models, DeepSeek has released an open-source AI that matches or outperforms U.S. models, costs 97% less to operate, and can be downloaded and used freely by anyone.

#DeepSeek#artificial intelligence#open source#China#socialism#technology#imperialism#stock market#tech companies#capitalism#Struggle La Lucha

162 notes

Text

CURRENT HIGH SCORE: 1 CEO TOP PLAYER: DDD

Makes a good album cover

#brian thompson#rest in piss#rest in pieces#rotinpiss#rot in hell#united healthcare#unitedhealth group inc#fuck ceos#ceo shooting#tech ceos#ceos#ceo#uhc ceo#ceo down#ausgov#politas#auspol#tasgov#taspol#australia#fuck neoliberals#neoliberal capitalism#anthony albanese#albanese government#insurance#health insurance#class war#antimillionaire#anti artificial intelligence#anti ai

331 notes

Link

A pseudonymous coder has created and released an open source “tar pit” that traps AI training web crawlers indefinitely in an infinite series of randomly generated pages, wasting their time and computing power. The program, called Nepenthes after the genus of carnivorous pitcher plants that trap and consume their prey, can be deployed by website owners to protect their own content from being scraped, or deployed “offensively” as a honeypot trap to waste AI companies’ resources.
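
The basic trick behind a tar pit like this can be sketched in a few lines of Python. This is not Nepenthes' code: it is a hypothetical `tarpit_page` function that, given any URL path, deterministically generates a page of filler text plus links to further made-up paths, so a crawler following links never runs out of pages. A real deployment would presumably also wrap something like this in a web server and deliberately slow its responses.

```python
import hashlib
import random

WORDS = ["pitcher", "nectar", "slippery", "rim", "digest", "tendril", "lid", "rain"]


def tarpit_page(path: str, links_per_page: int = 5, words_per_page: int = 60) -> str:
    """Return an HTML page of filler text whose links all point to more generated pages.

    Seeding the RNG from the path means the same URL always yields the same page,
    so the maze looks like a stable site while being effectively endless.
    """
    seed = int.from_bytes(hashlib.sha256(path.encode("utf-8")).digest()[:8], "big")
    rng = random.Random(seed)
    text = " ".join(rng.choice(WORDS) for _ in range(words_per_page))
    base = path.rstrip("/")
    links = "\n".join(
        f'<a href="{base}/{rng.getrandbits(32):08x}">{rng.choice(WORDS)}</a>'
        for _ in range(links_per_page)
    )
    return f"<html><body><p>{text}</p>\n{links}\n</body></html>"


print(tarpit_page("/maze", links_per_page=2, words_per_page=8))
```

Every generated link is itself a valid path into the same function, so a crawler that follows links without a depth limit never reaches the end.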

144 notes

Text

As plastic pollution in the world's oceans reaches critical levels, recently published research reveals how artificial intelligence-driven algorithms can dramatically accelerate plastic waste removal, boosting efficiency by more than 60%. The study, published in the journal Operations Research and titled "Optimizing the Path Towards Plastic-Free Oceans," introduces a data-driven routing algorithm that optimizes the path of plastic-collecting ships, allowing The Ocean Cleanup, a leading environmental nonprofit, to extract more waste in less time.
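
The study's own algorithm isn't described here, so the following is only a generic illustration of routing toward forecast plastic: a greedy Python heuristic that repeatedly sails to whichever hotspot offers the most predicted plastic per kilometre from the ship's current position. The hotspot names, coordinates, and quantities are invented, and real routing would also have to account for currents, drift, and fuel.

```python
import math

# Hypothetical hotspots: name -> ((x_km, y_km), predicted plastic in kg)
Hotspots = dict[str, tuple[tuple[float, float], float]]


def greedy_route(start: tuple[float, float], hotspots: Hotspots, max_stops: int) -> list[str]:
    """Visit hotspots greedily by predicted kilograms per kilometre travelled."""
    position = start
    remaining = dict(hotspots)  # copy so the caller's data is not modified
    route: list[str] = []
    while remaining and len(route) < max_stops:
        # Score each candidate by payoff per distance; guard against zero distance.
        best = max(
            remaining,
            key=lambda name: remaining[name][1]
            / max(math.dist(position, remaining[name][0]), 1e-9),
        )
        route.append(best)
        position = remaining.pop(best)[0]
    return route


spots = {
    "A": ((10.0, 0.0), 50.0),
    "B": ((2.0, 0.0), 5.0),
    "C": ((12.0, 0.0), 200.0),
}
print(greedy_route((0.0, 0.0), spots, max_stops=3))
```

Greedy heuristics like this are cheap to compute but are not guaranteed to find the best route; they only show how a forecast of where plastic is can drive the choice of where to sail next.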

94 notes

Text

'Artificial Intelligence' Tech - Not Intelligent as in Smart - Intelligence as in 'Intelligence Agency'

I work in tech (hell, my last email address ended in '.ai'), and I used to HATE the term Artificial Intelligence. It's computer vision, it's machine learning, I'd always argue.

Lately, I've changed my mind. Artificial Intelligence is a perfectly descriptive term for what has been created, as long as you take the word 'Intelligence' to refer to data that an intelligence agency or other interested party may collect.

But I'm getting ahead of myself. Back when I was in 'AI', the vibe was just odd. Investors were throwing money at it as fast as they could take out loans to do so. All the while, engineers were sounding the alarm that 'AI' is really just a fancy statistical tool and won't ever become truly smart, let alone conscious. The investors, bafflingly, did the equivalent of putting their fingers in their ears while screaming 'LALALA I CAN'T HEAR YOU'.

Meanwhile, CEOs were making all sorts of wild promises about what AI would end up doing, promises that mainly served to stress out the engineers, who still couldn't figure out why the hell we were making this silly overhyped shit anyway.

SYSTEMS THINKING

As Stafford Beer said, 'The purpose of a system is what it does.' Basically, if a system is created, and maintained, and continues to serve a purpose, you can read its intended purpose from what it actually does. (This kind of thinking can be applied everywhere, for example to the penal system. Perhaps the purpose of that system is to do what it does: provide an institutional structure for enslavement / convict-leasing?)

So, let's ask ourselves, what does AI do? Since there are so many things out there calling themselves AI, I'm going to start with one example. Microsoft Copilot.

Microsoft is selling PCs with integrated AI which, among other things, frequently screenshots and saves images of your activity. It doesn't protect against copying passwords or sensitive data, and it comes enabled by default. Now, my old-ass-self has a word for that. Spyware. It's a word that's fallen out of fashion, but I think it ought to make a comeback.

To take a high-level view of the function of the system as implemented, I would say it surveils, and surveils without consent. And to apply our systems thinking? Perhaps its purpose is just that.

SOCIOLOGY

There's another principle I want to introduce: that an institution holds institutional knowledge. But it also holds institutional ignorance: the shit that, for the sake of its continued existence, it cannot know.

For a concrete example, my health insurance company didn't know that my birth control pills are classified as a contraceptive. After reading the insurance adjuster the Wikipedia articles on birth control, contraceptives, and on my particular medication, he still did not know whether my birth control was a contraceptive. (Clearly, he did know - as an individual - but in his role as a representative of an institution - he was incapable of knowing - no matter how clearly I explained)

So - I bring this up just to say we shouldn't take the stated purpose of AI at face value. Because sometimes, an institutional lack of knowledge is deliberate.

HISTORY OF INTELLIGENCE AGENCIES

The British Secret Service Bureau, founded in 1909 and the forerunner of MI5 and MI6, is often cited as the first formalized intelligence agency. Spying and intelligence gathering had always been a part of warfare, but the structures became much more formalized into intelligence agencies as we know them today during WW1 and WW2.

Now, they're a staple of statecraft. America has one, Russia has one, China has one, this post would become very long if I continued like this...

I first came across the term 'Cyber War' in a dusty old aircraft hangar, looking at a Cold War spy plane. There was an old plaque hung up, making reference to the 'Upcoming Cyber War', that appeared to have been printed in the 80s or 90s. I thought it was silly at the time; it sounded like some shit out of sci-fi.

My mind has changed on that too, in time. Intelligence has become central to warfare, and you can see that in the technologies military powers invest in: mapping and global positioning systems, and signals intelligence covering both analogue and digital communication.

Artificial intelligence, as implemented, would be hugely useful to intelligence agencies. A large-scale statistical analysis tool that excels at image recognition, text parsing and analysis, and classification of all sorts? In the hands of agencies which already reportedly have access to all of our digital data?

TIKTOK, CHINA, AND AMERICA

I was confused for some time about why TikTok was getting threatened with a forced sale to an American company. They said it was surveilling us, but when I poked through DNS logs, I found that it was behaving near-identically to Facebook/Meta, Twitter, Google, and other companies that weren't getting the same heat.

And I think the reason is intelligence. It's not that the American government doesn't want me to be spied on, classified, and quantified by corporations. It's that they don't want China stepping on their cyber-turf.

The cyber-war is here y'all. Data, in my opinion, has become as geopolitically important as oil, as land, as air or sea dominance. Perhaps even more so.

A CASE STUDY : ELON MUSK

As much smack as I talk about this man - credit where it's due. He understands the role of artificial intelligence, the true role. Not as intelligence in its own right, but intelligence about us.

In buying Twitter, he gained access to a vast trove of intelligence, which he used to segment the population of America and manipulate us.

He used data analytics and targeted advertising to profile American voters ahead of this most recent election, and to propagandize us with micro-targeted disinformation: telling Israel's supporters that Harris was for Palestine, telling Palestine's supporters she was for Israel, and explicitly contradicting his own messaging in the process. And that's just one example out of a much vaster disinformation campaign.

He bought Trump the White House, not by illegally buying votes, but by exploiting the failure of our legal system to keep pace with new technology. He bought our source of communication, and turned it into a personal source of intelligence - for his own ends. (Or... Putin's?)

This, in my mind, is what AI was for all along.

CONCLUSION

AI is a tool that doesn't seem to be made for us. It seems more fit-for-purpose as a tool of intelligence agencies, oligarchs, and police forces. (my nightmare buddy-cop comedy cast) It is a tool to collect, quantify, and loop-back on intelligence about us.

A friend told me recently that he wondered sometimes if the movie 'The Matrix' was real and we were all in it. I laughed him off just like I did with the idea of a cyber war.

Well, I rewatched that old movie, and I was again proven wrong. We're in the matrix, the cyber-war is here. And whether you know it or not, you're a cog in the cyber-war machine.

(edit -- part 2 - with the 'how' - is here!)

#ai#computer science#computer engineering#political#politics#my long posts#internet safety#artificial intelligence#tech#also if u think im crazy im fr curious why - leave a comment

120 notes
