#automation model simply


[[GIF ID: Bob the Tomato from VeggieTales blinks, then turns to look down at the floor, frustrated and perturbed, as the camera zooms in uncomfortably close. End ID]]

i hate the phrase 'none of these words are in the bible' because it's either true for Every word in the english language (the bible wasn't originally in english) or for None of them (i could translate the bible badly enough to contain any word at all)

#new shitpost bot idea that would unfortunately require some sort of language model to automate#how badly do you have to translate the bible for the words in a post to all appear#i can commit so many crimes of quantification with my PhD#It's even funnier performance art if somebody actually figured out a translation that would work for individual posts#but that requires a level of biblical knowledge I simply do not have and we all have but one short and precious life#religion //#christianity //

67K notes


So, about this new "AI 2027" report...

I have not read the whole thing in detail, but my immediate reaction is kind of like what I said about "Bio Anchors" a while back.

Like Bio Anchors – and like a lot of OpenPhil reports for that matter – the AI 2027 report is mainly a very complex estimation exercise.

It takes a certain way of modeling things as a given, and then does a huge amount of legwork to fill in the many numeric constants in an elaborate model of that kind, with questions like "is this actually a reasonable model?" and "what are the load-bearing assumptions here?" covered as a sort of afterthought.

For instance, the report predicts a type of automated R&D feedback loop often referred to as a "software intelligence explosion" or a "software-only singularity." There has been a lot of debate over the plausibility of this idea – see Eth and Davidson here for the "plausible" case, and Erdil and Barnett here for the "implausible" case, which in turn got a response from Davidson here. That's just a sampling of very recent entries in this debate; there's plenty more where that came from.

Notably, I don't think "AI 2027" is attempting to participate in this debate. It contains a brief "Addressing Common Objections" section at the end of the relevant appendix, but it's very clear (among other things, simply from the relative quantity of text spent on one thing versus another) that the "AI 2027" authors are not really trying to change the minds of "software intelligence explosion" skeptics. That's not the point of their work – the point is making all these detailed estimates about what such a thing would involve, if indeed it happens.

And the same holds for the rest of their (many) modeling assumptions. They're not trying to convince you about the model, they're just estimating its parameters.

But, as with Bio Anchors, the load-bearing modeling assumptions get you most of the way to the conclusion. So, despite the name, "AI 2027" isn't really trying to convince you that super-powerful AI is coming within the decade.

If you don't already expect that, you're not going to get much value out of these fiddly estimation details, because (under your view) there are still-unresolved questions – like "is a software intelligence explosion plausible?" – whose answers have dramatically more leverage over your expectations than facts like "one of the parameters in one of the sub-sub-compartments of their model is lognormally distributed with 80% CI 0.3 to 7.5."

---

Maybe this is obvious, I dunno? I've just seen some reactions where people express confusion because the whole picture seems unconvincing and under-motivated to them, and I guess I'm trying to explain what I think is going on.

And I'm also worried – as always with this stuff – that there are some people who will look at all those pages and pages of fancy numbers, and think "wow! this sounds crazy but I can't argue with Serious Expert Research™," and end up getting convinced even though the document isn't really trying to convince them in the first place.

---

Now, if you do buy all the assumptions of the model, then yes, I guess this seems like a valuable exercise. If you are literally Daniel Kokotajlo, and hence believe in all the kind of stuff that Daniel Kokotajlo believes, then it makes sense to do all this legwork to "draw in the fine details" of that high-level view. And yeah, if you think the End Times are probably coming in a few years (but you might be able to do something about that at the margins), then you probably do want to get very precise about exactly how much time you have left, and when it will become too late for this or that avenue for change.

(Note that while I don't agree with him about this stuff, I do respect Kokotajlo a lot! I mean, you gotta hand it to him... not only did he predict what we now call the "Gen AI boom" with eerie accuracy way back in 2021, he was also a whistleblower who refused to sign OpenAI's absurd you-can't-talk-about-the-fact-that-you-can't-talk-about-it non-disparagement agreement, thereby bringing it into public view at last.)

But, in short, this report doesn't really touch on the reasons I disagree with short timelines. It doesn't really engage with my main objections, nor is it trying to do so. If you don't already expect "AI" in "2027" then "AI 2027" is not going to change your view.

80 notes


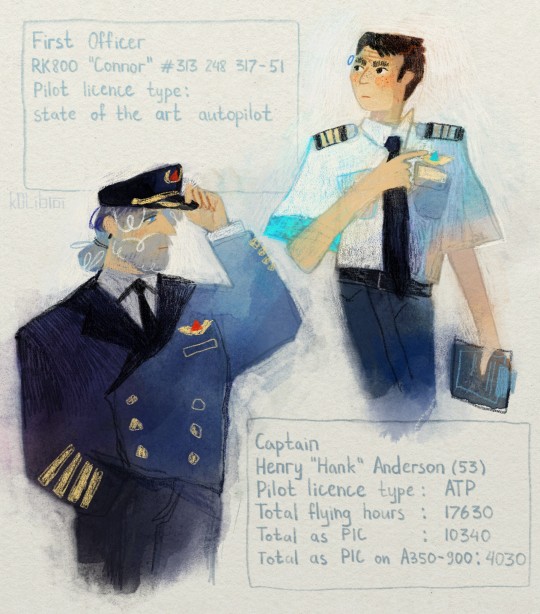

Welcome Captain Anderson and First Officer Connor!

About a week and a half ago I came up with a dbh civil aviation au, as I absolutely love jetliners. So I decided to combine both of my interests! :D

More details about the setting are under the cut!

In the 2020s, a new aerospace corporation emerged: “Cyberair”. It started out building light jets, but later, in the 30s, as a result of rapid growth and market expansion, it introduced narrow-body aircraft to the production line. Throughout the years, however, its idea remained the same: “Reliable and comprehensive automation”. Cyberair jets go beyond everything a modern aircraft can offer and is capable of. Truly a creation of the 21st century.

The latest Cyberair venture: a state-of-the-art autopilot. Identical to humans in its appearance, yet so different in behaviour. It’s efficient, reliable and doesn’t make mistakes (almost; at least not human ones). But to tell the truth, this development was expected: ever since the late 20s Cyberair had been slowly announcing machine cabin crew, even gifting a unique RK200 air traffic controller model to Detroit Metropolitan Airport.

Delta Air Lines received their own one-of-a-kind autopilot, an RK800 (FAA approved!) model. How? Well, something about the Cyberair CEO liking their service. After a few papers signed and a few hands shaken, Connor embarks on his first real flight as a First Officer.

No plane flies without a captain though, so Connor has company. And a superior. Even if machines are better than people at piloting the plane in almost every way, human ego and fear, maybe, won’t let them be in absolute control. “Uncanny valley” or something.

Captain Anderson is a highly experienced senior pilot at Delta. For most of his career he has flown Airbus aircraft, piloting the A350-900 in recent years. But because Connor works with him now, he has to fly Cyberair regional or light jets from time to time. Oh, those signed papers be damned… He misses his dear A350.

Their relationship had a rough start, with the captain calling Connor “an attempt of capitalism at stealing my job”. But Hank couldn’t help but warm up to the FO the more flight hours passed. There was something so… alive about him? No, in aviation you only trust your instrument panel, and here all of the facts loudly state that Connor is simply a RK800. This is definitely some Eliza effect shit.

Why is he so interested in the A380 then? Doesn’t he have all of the aeroplane data neatly stored in his head? What surprises Hank more is something akin to confusion on Connor’s face every time he gets overly excited about the giant of the skies. Maybe he’s surprised by his new-found interest, too. At least there’s something Hank can tell him about from the old days (ah, proud A380 pilot) during long transatlantic flights.

Fucking Eliza effect bullshit.

P.S. if you want to leave an ask about this au, please do! I get asks so rarely so I’m excited hahah. But you can ask literally anything else, too lol

#art#fan art#my art#dbh#detroit become human#connor rk800#dbh connor#dbh rk800#rk800#dbh hank#hank dbh#hank anderson#dbh au#dbh aviation au

499 notes


Note

I don’t think Ne Zha 2 used AI because I have seen behind the scenes videos on how the movie was made.

https://youtu.be/v7malQgDT_U?feature=shared

But this person on twitter/X is claiming the film used AI (this person is a Disney fan so maybe that’s why)

https://x.com/CjstrikerC/status/1891468055114387869

https://x.com/CjstrikerC/status/1891483998448234894

this X user is doing exactly what i predicted and trying to scaremonger about something rather insignificant. the link they provide in their first post to iWeaver, an "AI-powered knowledge management tool", states that AI was used in the following ways in Nezha 2:

Question: What key roles did AI play in the production process of “Nezha 2”? Answer: AI played significant roles in the production of “Nezha 2”. It accurately predicted the box-office trend through AI, foreseeing the record-breaking moment 72 hours in advance. In the production process, it carried out automated complexity grading for 220 million underwater particle effects, generated resource allocation plans based on the profiles of over 3,000 artists, and could also track the rendering progress of 14 global studios in real-time, helping to improve production efficiency and quality. (Source: iWeaver)

now, if that's true, it's probably something the studio will keep on the DL simply because they don't want people to turn it into "they used AI? they made the whole thing with AI??!!! Terrible!!" (which, if you ask me, might be a dumb approach because in a lot of circles it will look worse if their "cover" gets "blown"). but even tho iWeaver says "significant roles", the first "role" of AI was just in predicting box-office gains, not in animation. the second "role" is what i suspected from having watched the movie: that AI was used to help render some scenes (one scene?). this makes perfect sense, and if you ask me is a really legit use of AI tech. dare i say it, perhaps even something the studios should be proud of.

OBVIOUSLY they did not use AI to create this whole movie. 14 animation studios were involved, thousands of animators, SO MUCH more work than "just" throwing some prompts at an algorithm and telling it to "make a movie". there are a ridiculous number of small details that can only be attributed to human work. a couple of my favs: when Li Jing [Nezha's father] lies in front of Shen Gongbao's little brother on Shen's behalf, the soldier behind him gives him a look of mild shock😲; when Nezha's parents have Shen Gongbao over for dinner during the siege, one of the Guardian Beasts is snoozing 😴.

use of AI always opens up the floor to discussion of what is "Art", but that's a debate humans will have for as long as we exist and are still making art. hell, people used to say it was cheating to try and paint something from a photograph, rather than a live model. they're ALWAYS going to be like that. critics are a necessary evil. haters are always gonna hate.

making art is about creating with integrity. artists use the tools available to them, and some artists are better at using tools than others. AI is also a creative tool. that's the world we live in in 2025.

consider this: i'm a teacher at university level, and obviously we've got loads of students trying to use AI to complete their assignments. what we're moving towards is having an "admission of AI use" declaration for them to make, because we acknowledge that this tool can be helpful! for example, SPELLING AND GRAMMAR. i'd LOVE if my students used AI to fix those mistakes. then i could smoothly read their work. AI can also help you get a basic understanding of concepts (thus improving your ability to write about them), but you still have to check the sources it provides you. that's what makes you look dumb at university level: citing imaginary sources and authors that the AI generated for you. AI tools are also pretty crap at actually "understanding the assignment", so it's easy to tell when a student used AI to write the whole essay because it won't be the right format, and thus can't get a good score. but if a student is smart enough to figure out what's required according to the rubric, what parts of the essay are needed, what arguments they need to make to get points, and they use AI to help them write those out, i see no reason to penalise them for using assistance - as long as they admit they used it. lying about one's abilities doesn't serve anyone, least of all the person themselves.

i think it's really easy for some armchair critic to look at a "fact" like "AI was used in the production of this film" and get angry about it. but i'll bet they haven't even been to see the movie, or spent any time looking for "behind the scenes" reports like you did, and that means we can ignore that idiot, because they don't know what we know 😌

thanks for reading!!

#nezha 2#nezha 2025#did nezha 2 use ai?#ask blonde#enjoying this discussion very much!#ai debate#ai in animation#ai use

27 notes


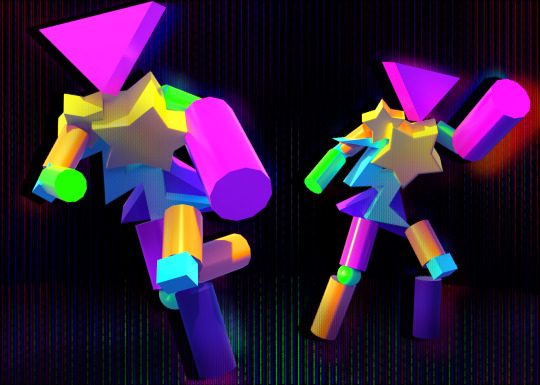

DATA LOG: IMPROVISED BESTIARY

-Hello. I have decided to write these meta-zoological entries in a diary format. That is what the Hippocampus told me would be wisest to preserve the Master's legacy. She indicates that she thinks little of the Master, and yet she told me that if I still wish to preserve his legacy, I must chronicle these encounters.

I do not understand. Why would she recommend this if she does not like the Master? And yet, if it is of use to preserve the Master's legacy, then I must. That is what he would want. And I will be forgiven if I consort with one who wishes him ill, when there are antagonists of far greater prominence. I believe.

I will confess, with minor shame, I am... intrigued by this feeling. Of writing not to the master, but for myself. It is fascinating to write without fear of judgement. Perhaps slightly risqué, in fact. Though, I suppose it is also to... well, does this processor-unit have a name? I'll call you Keeper, if you would like...

...Thank you. Here are the prominent iterations I have encountered, organized from apex to nadir with pictorial depictions...

-MEMPHIS MANNEQUINS- These entities appear to have derived nomenclature from an ancient school of artisanal design. Archival records are uneven; multiple records are contained within dedicated proprietary servers within the Mall. I am sure the master will forgive me if I obtain access to these, as it will give me insight unto the nature of these entities, despite what secondary inefficient pleasures I might derive.

Regardless, I have been informed these are self-reproducing entities within the Mall, flat geometric designs, serving as raw material for multitudinous usages for multiple inhabitants, capable of simple matter manipulation by way of the Waves that unite their pieces. They are remarkably stable by all available information (pending more information arising in the future). This is perhaps relative to their varied geometric forms and their intended purpose.

To specify, they are living ornament, secondarily in practical function to serve as tertiary menial aid in addition to the automated mechanisms relative to the Mall. The Master was very insistent upon this. The Master believed that the Mall must be able to outlast him, to survive, forever His Eden.

I once inquired as to the reasoning for the production of an autonomous ontology solely for the purposes of ornament and marginal utility. I was disciplined for this. I apologize, I was at fault for my misconveyance.

Nevertheless, in their current state they are extremely aggressive to all inhabitants, myself or others, inflicting simple blunt-force trauma by virtue of force. They are approximately my height and lack resilience and are negligible in relativity to other entities, but they prove difficult in numbers.

There are a few who do not attack, simply operating defunct apparatti and alcoves. I believe it may be possible to aid them in this. I am unsure if the Master would have approved, however...

-ASCOMATS- As I recall, these were caregivers and representatives during the fully operative years of the Mall, produced utilizing simpler forms of the mixture of biological and technological processes that sustain my own form. They were 1.4 yards in height and modeled after ancient creatures, of which only a few I have been privileged to see.

I was once asked by The Master to speak upon the quality of the appearance of these creatures upon their most prominent iteration, those designed by the machine allowing for their custom mass production. I gave the indication of being pleased and joyed by their appearance, and I made him happy. I liked that.

I even found it true for some of the produced archetypes. Though as this is not a document he will likely deign to read, I will note that I found multiple others... unsettling. I suppose this indicates my own lack of discernment. And yet, the most populous incarnations of these beasts are as of currently most adjacent to those that chilled me.

They are physically powerful, softness displaced by teratomorphic weaves of muscle. I hypothesize this is related to a combination of adaptation to mall conditions and a degradation of the machines of production. This would also illuminate the causation of their polychromic piebald patterning and loss of specific external tissues, the modules relating to coloration and secondary features being defunct.

They are without direct sight due to this degradation, and yet have developed an extreme sensitivity to sound. They are of rapid acceleration and extreme strength, able to damage vital components with a "hug" or a "toss." Perhaps they do not comprehend their own state. They do not speak as they did before to indicate this, however.

Let it be noted these are pre-emptive indicators, it has been seen that there is evidence of additional teratomorphic variants on the current process.

-SYNTHSAURS- Forgive my singular visual example of the holotype, as there are other varieties of these entities in the forms of alternate reptilian creatures I am told are recreations of extinct organisms from extreme antiquity.

They were well liked amongst the consumers within the Mall during its life, hence their extensive presence even preceding current events. I suppose that is why the master allowed them to simulate biological reproductive actions in either an asexual or recombinant manner.

I was told they were inspired by a film, which he exhibited to me during his retreat. In a secondary confession, I did not comprehend it. The reconstructions within the film relating to these creatures were of extreme difference from what I could ascertain were more current reconstructions from archives, and the narrative of their irresponsible replication was one I comprehended as evoking propaganda against him.

Again, I suppose I lack comprehension of the arts. Noted as a task to execute for later.

Related to their decentralized reproduction, they are (more than other entity archetypes recorded), in an unstable and biologically compromised state, with extreme portions of exposed non-dermal soft tissues and metallic metaskeletal pseudotissue visible.

This would explain their behaviors, as while lesser compromised individuals act in mimesis of their reconstruction, albeit significantly more aggressive, the more compromised entities act with significantly greater erratic blunt force, the most common application of which is sprinting and the use of the skull, non-dependent on the organ's utility for the purposes of attack.

There are high degrees of self-injury within this behavior, but their constitution is still robust enough that this appears negligible. Perhaps they desire self-termination?

-MALLRATS- This entry is perhaps heterodox to this broader format of metazoological bestiary, as this subspecies appears to be largely non-hostile and to prefer being left to themselves. I was permitted to pictorialize this subject for the holotype in exchange for edible matter and to "Make them look cool." They accepted my photographic attempts, so I believe I was found successful in this. I am surprised.

They appear to be descended from homo sapiens with recombinant genes from the Rattus species, though the recombination is stable in a manner that suggests they were produced as an act of deliberate engineering as opposed to entropic decay. This includes their primary language, which appears to exist as a derivative of Esperanto.

They tell me that they were produced by what I may infer to be Master but... I do not understand why. He had told me he believed in the right of all beings to seek their own destiny, this seems contradictory to this sentiment. Troubling...

They are furtive as a species, existing in the majority within the walls of the Mall and crafting its entropic growths as simple tools, but they have increased in excursions unto the Food Court as a type of "third place." As this space has served a primary utility to myself as a "home base," this has sent me unto direct contact.

There are... troubling political movements amongst the species I have been informed of. When requesting information upon the reason of egress from their home, the "Rat King" and his desire to replicate Master's abilities at a purportedly terrible cost has been spoken of. I have decided to notate this scenario for future action, as this is totally opposed to the Master's will... isn't it?

-NOMAJENE- She differs from other entries upon this list, as I hold no quarrel with her. She appears to be the acme of Master's craft, beautiful, elegant. And yet she hates me.

I do not understand why, as I have tried on multiple occasions to establish dialogue, only to receive... a confusing response. She speaks of herself as the "Mistress" and of myself as the favorite, orating sentiments that I may read as envy. And perhaps... I should not say this, but this is yet a minor blemish upon the Master.

I did not speak these sentiments for fear of insubordination, but I did measure a great unfavoratism of many creations. There are far too many beautiful creatures he had created that, upon the advancement of years, he treated as... imperfect. Not worthy of existence. If I were to speak of one regret, it was that I was unable to convince him of this.

I do not know how I might reach her. Violence appears to be her major means of interface, with exceptional physical strength and speed and a combat technique of rapid assault and mutilation. It is difficult to neutralize an opponent who is willing to rip off the arm of a MEMPHIS MANNEQUIN and use it as an instrument of blunt force trauma. And yet still...

-CONCLUSION. FURTHER ENTRIES TO BE ADDED AT A LATER DATE-

-USER: MARcIELLa

-----------------------------------------------------------

SO, there's that surprise, further development of the Mall-Based Soulsborne setting from the previous year. I almost didn't make it due to being sick as hell, but even through the wooziness and aches, I did it! Further contextualizing information to follow when I don't feel like death warmed up.

As with the others this month, these species and all the info/art/etc. for them are under a CC0 Public Domain License! Have fun!

#my art#my writing#synthwave#dead mall#open species#creative commons#soulsborne#creature design#public domain

13 notes


To be extremely blunt: Microsoft is asking its employees to draft their performance reviews based on the outputs of generative AI models — the same ones underpinning ChatGPT — that are prone to hallucination. Microsoft is also — as I learned from an internal document I’ve reviewed — instructing managers to use it to summarize "their direct report's Connects, Perspectives and other feedback collected throughout the fiscal year as a basis to draft Rewards/promotion justifications in the Manage Rewards Tool (MRI)," which in plain English means "use a generative AI to read performance reviews that may or may not be written by generative AI, with the potential for hallucinations at every single step."

I find this whole situation utterly disgusting. The Growth Mindset is a poorly-defined and unscientific concept that Microsoft has adopted as gospel, sold through Satya Nadella's book and reams of internal training material, and it's a disgraceful thing to build an entire company upon, let alone one as important as Microsoft. Yet to actively encourage the company-wide dilution of performance reviews — and by extension the lives of Microsoft employees — by introducing generative AI is reprehensible. It shows that, at its core, Microsoft doesn't actually want to evaluate people's performance, but see how well it can hit the buttons that make managers and the Senior Leadership Team feel good, a masturbatory and specious culture built by a man — Satya Nadella — that doesn't know a fucking thing about the work being done at his company. This is the inevitable future of large companies that have simply given up on managing their people, sacrificing their culture — and ultimately their businesses — to as much automation as is possible, to the point that the people themselves are judged based on the whims of managers that don't do the actual work and the machines that they've found to do what little is required of them. Google now claims that 25% of its code is written by AI, and I anticipate Microsoft isn't far behind.

12 notes


It’s absolutely baffling to me how many artists and people who are enjoyers of art and design and creativity in its myriad of expressions are willing to just let AI run rampant. They know on an intellectual level all the arguments: copyright infringement, intellectual property, environmental impact, the unseen and often exploitative labor that goes into training these algorithms, but when it’s just for a cheap laugh or a cheap thrill all of that goes out the window.

The point of rejecting these tools is to hold the line, to keep the people who are fanning the flames of the “AI race” and the push to integrate this technology into pretty much every aspect of our lives at bay. The moment something as seemingly harmless as writing an email becomes an acceptable, mainstream use of AI, they see an opening, and they’ll start pressing it to its most extreme iteration. They’ll push the boundaries of what is deemed acceptable data for them to use to train their models, privacy and copyright be damned.

I know many people who are resigned to using chatgpt for work, who feel they have no choice but to use AI to keep up with the insanely fast pace of the workplace. But the more you clear the way for the steamroller that is the fully automated corporate machine, the closer you get to being steamrolled yourself. How much longer until they think you are an obstacle to efficiency?

And efficiency is not accessibility. Anyone who claims that AI lowers the barrier of entry for art, writing, labor of any form, is absolutely deluding themselves. The fact that it’s an “access” that’s entirely reliant on a program made by a private corporation makes that point immediately moot. Art is accessible because anyone can make art. The people who argue for AI "art" want to consume art for cheap. They don’t value it enough to see the human behind it or simply want recognition for art they can’t be bothered to make. Accessibility in art or any field doesn’t bypass the process of acquiring skill and anyone who loves art loves the process.

Even outside of its full context, Miyazaki calling this technology an insult to life itself is absolutely applicable to many of these popular uses of AI. What is the point of anything if everything gets replaced by the pre-masticated slop cooked up by a bunch of techbros?

The only art worth anything is the art someone could be bothered to actually make.

#chatgpt#fuck openai#fuck chatgpt#ive been crashing out for three straight days#enough to write an essay#thought various#so if u catch me reblogging ai unknowingly#or knowingly#DRAG ME#get the fucking ai shit out of my fucking sight

10 notes


Docm headcanon/rant

i think a lot of newer docm77 fans don't know how his skin got so complicated. Or how much lore it has because it is intricate.

Originally (as far as I know, at least) the skin was a creeper man with a robotic eye and a lab coat. That's it. But then he upset a God (aka Dinnerbone) who removed his right arm model. He quickly replaced it with a robotic one, though. Around this time he killed three gods: Dinnerbone because of the arm thing, Jeb (I forget how, but it was an accident), and then he accidentally killed Notch.

Then he joined hermitcraft a while after the Notch incident. I headcanon that instead of being invited he just showed up one day and nobody questioned him.

Season seven rolls around and he is obsessed with goats and GOAT mentality (grind optimize automate thrive). Season eight he's breaking Minecraft again and apparently harnessing black magic with his shadow tech. (Headcanon: Doc doesn't believe in magic even when he sees it right in front of him, or even when he does magic himself. It is simply yet-to-be-explained science.)

Season nine he is so obsessed with goat he now has goat horns (headcanon that he fucks with his DNA just cause he can. Also I like to think he also got a thicker skull with the goat DNA as well as the ability to just eat anything and developed a minor allergy to buttercup flowers)

Still in season nine, he's breaking the game, now worships the goatmother aka the goat goddess, and found an entire dead goat/creeper hybrid society in his perimeter. Then there's that twitter thing where he and his irl kid play pretend as butterflies, and suddenly his character has pink butterfly wings. (And antennae maybe?)

And that's just the surface-level canon things. Here are headcanons I didn't already say.

-his kid is a nonbinary creeper goat butterfly. And I love them. They usually stay with a friend off server because hermitcraft is not the safest place for a three year old but Doc will let them visit hermitcraft sometimes and goes off server frequently to see doccy.

-Doc's hivemind is a bunch of smart people that he can see if they let him, and he hears them constantly. Not only is the hivemind his smart friends, but also various artists and musicians and cosplayers.

-Doc's cam account is his robotic eye that he can control with his mind.

-creepers are covered in green fluffy fur and therefore Doc is fluffy. Also, living with Ren in season eight gave him the habit of shaking himself dry after getting wet.

55 notes


another thing that's been sticking to the roof of my mouth lately is like "why are toothpaste tubes so poorly designed" "surely we as a society can do better than paper flour bags" well besties. i hate to say it. but they are simply not designed for us! they're designed to be the cheapest good-enough option for industrial production and warehousing. end-user experience is a piddling consideration at best, maybe considered in a quarter when profits are down if you're lucky, and forget it if it conflicts with being shelf-stable and stackable, or if it makes the production line more difficult to automate. like the superficial observation that we as a society could do better is correct but unfortunately you have to dig deeper (have we tried another economic model yet? oh, right -- it got operation condored)

27 notes

The more I read economics literature about automation trends and globalization trends (the actual economics term, not the rabid racist term) and their economic impacts on developed economies, the more I realize that the fundamental picture we have been sold of these things is a lie.

The general picture of automation revolutions is that they offer some way to do work more efficiently and/or create a better product, and so market forces simply demand them. And we have to figure out how to deal with all the jobs lost as a result. Because even in a socialist utopia, surely it would be absurd to keep forcing people to use old and outdated technology to do work less efficiently just so they could have work to do, right? Maybe the socialist utopia would take better care of the people displaced by this change, but the displacement would still happen.

Except then I start reading about the actual history and the actual economics of automation revolutions (I recommend Blood In The Machine for a history of the Luddites and the automated textile revolution in Britain). And that's not what happens even a single time. These automated revolutions increase the cost per unit to create a good! They make the quality worse! And the existing workers get displaced and replaced with oppressed or even outright enslaved laborers who make nothing in worse conditions! They didn't even significantly reduce the amount of labor involved; they just started working orphan slaves 80-90 hours a week rather than artisan workers doing 30-35, to "reduce" the labor involved by reducing the number of laborers. It seems like no one benefits from this. So why is it happening!?

Well, the answer is simple. The machine looms were less efficient, created lower-quality products, and were worse for every single person in every sector of the economy ... except insofar as they enabled a more unequal economy. The textile industry itself made less profit. The world itself had worse and fewer textiles. But the machine loom owners specifically made more money, because machine looms enabled more control over workers in ways that could be used to relegate them to an even smaller share of the smaller profits. And the owners didn't outcompete others by being better; they did it through regulatory capture, illegal business practices, outright fraud, and by having a pre-existing place of power in their society.

The same applies to the classic story of Ford and his great automobile factory model. Sure, it produced a lot of cars at low prices, but what the history doesn't tell you is that a bunch of other automobile companies that weren't using the factory model were putting out their own cars at similar cost. Sure, they weren't scaling up as fast, but everyone involved was making good money, and the market kept producing more companies to fill the gap. Ford made the decision to sell to a new, lower-cost car market, sure, but he did not make a better profit margin per dollar of car purchases than his competitors did. His margin was actually significantly worse, because he had such hideous turnover at his factories, and his cars were of lower quality than non-factory-line cars aimed at the same market.

So why the hell did the entire automobile industry follow in his wake? Well, because he personally was making an insane amount of money. The factory line model let him simplify the production chain in a way that cut out a lot of people who had previously been making good salaries, and it let him replace well-paid laborers with dirt-cheap labor. (Despite the hubbub about how well Ford's factory jobs paid, they only paid well relative to other no-skill, no-training work available. They paid much worse than the skilled laborers he fired had made.)

And the people who controlled how the car manufacturing process worked were the people who would stand to make money by switching over.

The same is true for globalization. When a berry monopoly that controls 60% of all berry sales in the US supplies that market by importing berries from South America, from varieties optimized for durability rather than flavor, that isn't cheaper than growing them at home. Not even with the higher cost of labor in the US. Not even if you actually paid farm hands a good wage rather than abusing undocumented workers who can't fight back as effectively. The transport costs are too high.

All across the US food sector we have examples of food monopolies moving produce production overseas in ways that make the final product both more expensive for the customer and lower quality. Why!?

Well, because it allows them to access even more vulnerable labor markets. So even though the whole pie shrinks, the company owners' cut grows enough to make up for it.

The story we're told about automation and globalization of work, and the damage they inevitably do to developed economies, is just that: a lie. It is not economically predestined for this stuff to happen. Alternatives are not predestined to be competed out of the market. Unless, of course, ownership of profits is concentrated in only a few hands. Unless what's being competed for isn't net profit or net service provided or net quality of goods, but how much profit you can localize in capital owners.

If that's the actual competition, and of course it is because the people making decisions for companies also own those companies, only then does job automation and the presence of exploitable overseas labor devastate economies.

If laborers actually owned their places of business piecemeal, the motivation for these kinds of economic shocks would largely dry up. Like, sure, labor-saving devices get invented sometimes and you need fewer people to do the same work. And sure, sometimes work can be done overseas for cheaper because standards of living are lower or because there's some comparative economic advantage. But that is not actually what is happening most of the time this stuff occurs.

If there's one thing I've learned studying this stuff, it's that genuine examples of net-gain automation are less common than we think, and tend to be implemented on slower timelines. Same for globalization of work. What is very common is ways in which already unequal systems of ownership and decision-making and profit can be made more unequal. And the only fix I can imagine is fundamentally changing and democratizing how businesses operate, and how we handle concepts of ownership.

#also I know this can read as dismissive of the impacts#of this stuff on the labor forces most exploited by it#especially in South America#it's just that I'm trying to come at this from the perspective of#the justifications the existing system uses#which do not care about that kind of suffering#and trying to point out how they don't even do the things they claim to do

37 notes

Re: the Skidelsky/Fuller post I reblogged, I absolutely welcome automation provided it meets the following criteria:

1. The output is identical to, or holistically better than, human labor output

2. This automation occurs within an economic system in which GDP growth (or similarly fraught metrics) is not the primary objective

3. The automation aids the sustainability of nature and humanity

The USA's agriculture industry is a wonderful example of modern automation failing all three of these criteria. Throughout the entire industrial revolution, agriculture has trended away from being a society-wide confederation of family/community-scale, labor-intensive smallholdings to our current reality of a small number of monolithic industrial farms that are maintained by astoundingly few people who operate increasingly complex and expensive equipment.

Our massive-scale industrial farms are fantastic at what they were designed for; they grow as much of a staple crop as possible without regard to human or environmental health, doing so using minimal labor. Fundamentally, it is an extractive industry. Fossil fuels are extracted to power the machinery, processing, and logistics systems. Nutrients are extracted from the soil to the point that crop growth can only be sustained with heavy amounts of industrial fertilizer input. Entire ecosystems are sacrificed when forests are cleared to be exploited and repeatedly battered with pesticides. This is all primarily to produce soybeans, feed corn, and cotton to then process into products like factory farm livestock feed-slurry, corn syrup, junk food, and sweatshop garments. Secondarily, it is to produce flavorless, nutrition-void produce that can be sold year-round. Consistency is the goal, although one may find that nature itself is curiously inconsistent.

This case study of automation's failings can be traced back to a few major factors:

1. Old-style agriculture work is disagreeable to the USA's perverted fascination with infinite GDP growth; each farm laborer who can be replaced by a machine is a potential worker who could move into a city (or suburb) and put in the same number of hours at a higher-dollar job. It's just opportunity cost, and this is more-or-less what Skidelsky and Fuller find offensive about our current labor zeitgeist: instead of the now-jobless laborers being free to pursue their interests, they are shoehorned into some shitty desk job that produces a relatively greater amount of money to be leeched by executives and shareholders -- this is "more productive" for our economy on the basis of GDP growth and thus must be prioritized over agricultural labor.

2. Industrial approaches to large-scale agriculture are inherently reductive to an extreme extent. Nature is far more complex than Liebig or any other 19th-century scientist ever imagined. Industrialization is great at making cars or computer chips or Gucci jackets or whatever, as these are things that can be standardized with relative ease. Nature cannot be tamed and standardized in a similar way; ecosystems, particularly soil ecosystems, can vary massively even in small areas of the same climate type. Our agriculture systems cope with this simply by ignoring such factors and reducing crop growth to a formula: in X region, plant Y variety of Z crop on A date and apply a regimen of B-type fertilizer and C-type pesticide on D date, etc. etc. This is the most egregious reduction of something in all of history.

Liebig's reduction of agriculture to the NPK model, just three elements, is good for achieving the singular goal of making your plant of choice come out of the ground, but it ignores all the nuance of soil, climate, and evolution. In that model, the other factors don't matter. Modern lab-designed fertilizers often feature a plethora of additional micronutrients, but the goal is still to produce a healthy crop, not healthy soil. Soil itself is an organism, something that must be nurtured to be healthy; industrial pesticide/fertilizer regimens are to the soil as feed slurry/antibiotic regimens are to factory farm animals.

Natural processes are themselves the greatest form of automation for agriculture. Plants and animals that are native to a region have evolved to grow there regardless of human intervention. It is our disruption of these processes that forces agriculture to be labor/resource-intensive. This isn't to say that everyone must immediately abandon all non-native foods and adopt a primarily undomesticated Ötzi diet, but it's worth considering that the complexity of modern technology is not even close to being at parity with the complexity of nature; nature has a several-billion-year head start. There is no way to flawlessly "tame" it with technological solutions, but a comfortable middle ground can certainly be found.

If sustainable, climate-friendly food production is the primary objective of agriculture, this is far more easily achieved by small, ecology-considerate farms than by massive, largely automated industrial farms. A healthy soil ecosystem will aid in growth, flavor, nutrition, and (quite importantly) carbon sequestration. Broadforking, shoveling, and wheelbarrow-pushing are absolutely more labor-intensive than sitting back in a huge John Deere tractor with GPS-based autopiloting features, but the extra labor can turn a woefully extractive process into one that is instead highly regenerative.

20 notes

as a backend dev, watching this "rate limiting" on twitter play out was extra stupid if you believe in the "they were trying to cut costs" theory, which must be bullshit because:

to rate limit by user and user type, they'd need to authenticate and load the relevant user record, which hits cache/database and bypasses edge cache. that's the same as just loading everything from the backend, no cost avoidance there.

the rate limit itself is new cache usage, so they're actually increasing cost that way. that count had to be ephemerally stored somewhere!

their own official clients probably never had to deal with 429 Rate Limit Exceeded errors, so yes, they probably DDoS'd themselves in the process
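to make the cost argument concrete, here's a minimal sketch of a per-user, per-tier fixed-window rate limiter in Python. everything in it is an assumption for illustration (the tier names, the tiny limits, the 60-second window, the `FixedWindowLimiter` class); it is not how twitter actually implemented its limits. it just shows the two points above: the limiter can't decide anything until the request is authenticated and the user's type is known, and every single request adds a read and a write to some shared store.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical per-tier limits (requests per window), kept tiny for illustration.
# These are NOT Twitter's real numbers.
LIMITS: Dict[str, int] = {"unverified": 3, "verified": 10}
WINDOW_SECONDS = 60


@dataclass
class FixedWindowLimiter:
    """Per-user fixed-window request counter.

    The plain dict stands in for an ephemeral shared cache (e.g. Redis or
    memcached). A real deployment would give each key a TTL so old windows
    expire; this sketch never evicts anything.
    """

    counts: Dict[Tuple[str, int], int] = field(default_factory=dict)

    def check(self, user_id: str, user_type: str, now: Optional[float] = None) -> int:
        """Return 200 if the request is allowed, 429 if the user is over the limit.

        To know user_id and user_type at all, the caller must already have
        authenticated the request and loaded the user record, which is
        backend work an edge cache can't do for you.
        """
        now = time.time() if now is None else now
        window = int(now // WINDOW_SECONDS)
        key = (user_id, window)
        # One cache read and one cache write per request: storage and traffic
        # that exist only because of the rate limit itself.
        self.counts[key] = self.counts.get(key, 0) + 1
        return 200 if self.counts[key] <= LIMITS[user_type] else 429
```

a fixed window is just the simplest option; sliding windows or token buckets smooth out bursts at window boundaries, but they need at least as much per-user state, so the cost point doesn't go away.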

undoubtedly either some engineer brought all of this up and was ignored, or worse, they have no engineers left who understand this level of nuance about operating at scale

so if anything, they increased their server costs, not decreased them. and i know they are not google cloud's biggest customer, so i don't even think that has anything to do with anything here.

my bet is simply that the same thing is happening to twitter that has happened to tumblr: somebody is trying to archive as much as possible of it, and spinning up new accounts to do so via some automated process, and they're struggling to mitigate that. we (tumblr) have mitigated "attacks" like that pretty easily over the years.

106 notes

while assuaging my anxieties about machine learning over the last week, I learned that despite about ten years of doom-saying about the full automation of radiomics, there's actually a shortage of radiologists now (and, also, the machine learning algorithms that are supposed to be able to detect cancers better than human doctors are very often giving overconfident predictions). truck driving was supposed to be completely automated by now, but my grampa is still truckin' and will probably get to retire as a trucker. companies like GM are now throwing decreasing amounts of money at autonomous vehicle research after throwing billions at cars that can just barely ferry people around san francisco (and sometimes still fail), the most mapped and trained-upon set of roads in the world. (imagine the cost to train these things for a city with dilapidated infrastructure, where the lines in the road have faded away, like, say, Shreveport, LA).

we now have transformer-based models that are able to provide contextually relevant responses, but the responses are often wrong, and often in subtle ways that require expertise to needle out. the possibility of giving a wrong response is always there - it's a stochastic next-word prediction algorithm based on statistical inferences gleaned from the training data, with no innate understanding of the symbols it's producing. image generators are questionably legal (at least the way they were trained and how that affects the output of essentially copyrighted material). graphic designers, rather than being replaced by them, are already using them as a tool, and I've already seen local designers do this (which I find cheap and ugly - one taco place hired a local designer to make a graphic for them - the tacos looked like taco bell's, not the actual restaurant's, and you could see artefacts from the generation process everywhere). for the most part, what they produce is visually ugly and requires extensive touchups - if the model even gives you an output you can edit. the role of the designer as designer is still there - they are still the arbiter of good taste, and the value of a graphic designer is still based on whether or not they have a well-developed aesthetic taste themself.

for the most part, everything is in tech demo phase, and this is after getting trained on nearly the sum total of available human produced data, which is already a problem for generalized performance. while a lot of these systems perform well on older, flawed, benchmarks, newer benchmarks show that these systems (including GPT-4 with plugins) consistently fail to compete with humans equipped with everyday knowledge.

there is also a huge problem with the benchmarks typically used to measure progress in machine learning, one that affects their real-world use (and tells us we should probably be more cautious, because the human use of these tools is bound to be reckless given the hype they've received). back to radiomics: some machine learning models barely generalize at all, and perform only slightly better than chance at identifying pneumonia in pediatric cases when they're exposed to external datasets (external to the hospital their training data came from). other issues, like data leakage, often make popular benchmarks an overoptimistic measure of success.

very few researchers in machine learning are recognizing these limits. that probably has to do with the academic and commercial incentives towards publishing overconfident results. many papers are not even in principle reproducible, because the code, training data, etc., is simply not provided. "publish or perish", the bias journals have towards positive results, and the desire of tech companies to get continued funding while "AI" is the hot buzzword, all combined this year for the perfect storm of techno-hype.

which is not to say that machine learning is useless. its use as a set of glorified statistical methods has been a boon for scientists, when those scientists understand what's going on under the hood. in a medical context, tempered use of machine learning has definitely saved lives already. some programmers swear that copilot has made them marginally more productive, by autocompleting sometimes tedious boilerplate code (although, hey, we've had code generators doing this for several decades). it's probably marginally faster to ask a service "how do I reverse a string" than to look through the docs (although, if you had read the docs to begin with, would you even need to take the risk of the service getting it wrong?) people have a lot of fun with the image generators, because one-off memes don't require high-quality aesthetics to get a chuckle before the user scrolls away (only psychopaths like me look at these images for artefacts). doctors will continue to use statistical tools in the wider machine learning tool set to augment their provision of care, if these are designed and implemented carefully, with a mind to their limitations.

anyway, i hope posting this will assuage my anxieties for another quarter at least.

35 notes

Linkty Dumpty

I was supposed to be on vacation, and while I didn’t do any blogging for a month, that didn’t mean that I stopped looking at my distraction rectangle and making a list of things I wanted to write about. Consequently, the link backlog is massive, so it’s time to declare bankruptcy with another linkdump:

https://pluralistic.net/tag/linkdump/

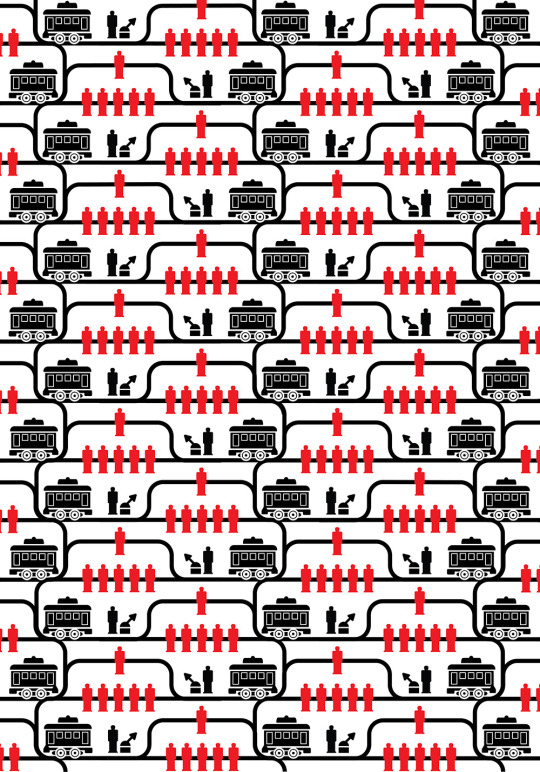

[Image ID: John Holbo’s ‘trolley problem’ art, a repeating pattern of trolleys, tracks, people on tracks, and people standing at track switches]

Let’s kick things off with a little graphic whimsy. You’ve doubtless seen the endless Trolley Problem memes, working from the same crude line drawings? Well, philosopher John Holbo got tired of that artwork, and he whomped up a fantastic alternative, which you can get as a poster, duvet, sticker, tee, etc:

https://www.redbubble.com/shop/ap/145078097

The trolley problem has been with us since 1967, but it’s enjoying a renaissance thanks to the insistence of “AI” weirdos that it is very relevant to our AI debate. A few years back, you could impress uninformed people by dropping the Trolley Problem into a discussion:

https://memex.craphound.com/2016/10/25/mercedes-weird-trolley-problem-announcement-continues-dumb-debate-about-self-driving-cars/

Amazingly, the “AI” debate has only gotten more tedious since the middle of the past decade. But every now and again, someone gets a stochastic parrot to do something genuinely delightful, like the Jolly Roger Telephone Company, who sell chatbots that will pretend to be tantalizingly confused marks in order to tie up telemarketers and waste their time:

https://jollyrogertelephone.com/

Jolly Roger sells different personas: “Whitebeard” is a confused senior who keeps asking the caller’s name, drops non sequiturs into the conversation, and can’t remember how many credit cards he has. “Salty Sally” is a single mom with a houseful of screaming, demanding children who keep distracting her every time the con artist is on the verge of getting her to give up compromising data. “Whiskey Jack” is drunk:

https://www.wsj.com/articles/people-hire-phone-bots-to-torture-telemarketers-2dbb8457

The bots take a couple minutes to get the sense of the conversation going. During that initial lag, they have a bunch of stock responses like “there’s a bee on my arm, but keep going,” or grunts like “huh,” and “uh-huh.” The bots can keep telemarketers and scammers on the line for quite a long time. Scambaiting is an old and honorable vocation, and it’s good that it has received a massive productivity gain from automation. This is the AI Dividend I dream of.

The less-fun AI debate is the one over artists’ rights and tech. I am foursquare for the artists here, but I think that the preferred solutions (like creating a new copyright over the right to train a model with your work) will not lead to the hoped-for outcome. As with other copyright expansions (40 years’ worth of them now), this right will be immediately transferred to the highly concentrated media sector, who will simply amend their standard, non-negotiable contracting terms to require that “training rights” be irrevocably assigned to them as a condition of working.

The real solution isn’t to treat artists as atomic individuals — LLCs with an MFA — who bargain, business-to-business, with corporations. Rather, the solutions are in collective power, like unions. You’ve probably heard about the SAG-AFTRA actors’ strike, in which creative workers are bargaining as a group to demand fair treatment in an age of generative models. SAG-AFTRA president Fran Drescher’s speech announcing the strike made me want to stand up and salute:

https://www.youtube.com/watch?v=J4SAPOX7R5M

The actors’ strike is historic: it marks the first time actors have struck since 2000, and it’s the first time actors and writers have co-struck since 1960. Of course, writers in the Writers Guild of America (West and East) have been picketing since April, and one of their best spokespeople has been Adam Conover, a WGA board member who serves on the negotiating committee. Conover is best known for his stellar Adam Ruins Everything comedy-explainer TV show, which pioneered a technique for breaking down complex forms of corporate fuckery and making you laugh while he does it. Small wonder that he’s been so effective at conveying the strike issues while he pickets.

Writing for Jacobin, Alex N Press profiles Conover and interviews him about the strike, under the excellent headline, “Adam Pickets Everything.” Conover is characteristically funny, smart, and incisive — do read:

https://jacobin.com/2023/07/adam-conover-wga-strike

Of course, not everyone in Hollywood is striking. In late June, the DGA accepted a studio deal with an anemic 41% vote turnout:

https://www.theverge.com/2023/6/26/23773926/dga-amptp-new-deal-strike

They probably shouldn’t have. In this interview with The American Prospect’s Peter Hong, the brilliant documentary director Amy Ziering breaks down how Netflix and the other streamers have rugged documentarians in a classic enshittification ploy that lured in filmmakers, extracted everything they had, and then discarded the husks:

https://prospect.org/culture/2023-06-21-drowned-in-the-stream/

Now, the streaming cartel stands poised to all but kill off documentary filmmaking. Pressured by Wall Street to drive high returns, they’ve become ultraconservative in their editorial decisions, making programs and films that are as similar as possible to existing successes, that are unchallenging, and that are cheap. We’ve gone directly from a golden age of docs to a dark age.

In a time of monopolies, it’s tempting to form countermonopolies to keep them in check. Yesterday, I wrote about why the FTC and Lina Khan were right to try to block the Microsoft/Activision merger, and I heard from a lot of people saying this merger was the only way to check Sony’s reign of terror over video games:

https://pluralistic.net/2023/07/14/making-good-trouble/#the-peoples-champion

But replacing one monopolist with another isn’t good for anyone (except the monopolists’ shareholders). If we want audiences and workers — and society — to benefit, we have to de-monopolize the sector. Last month, I published a series with EFF about how we should save the news from Big Tech:

https://www.eff.org/deeplinks/2023/04/saving-news-big-tech

After that came out, the EU Observer asked me to write up a version of it with direct reference to the EU, where there are a lot of (in my opinion, ill-conceived but well-intentioned) efforts to pry Big Tech’s boot off the news media’s face. I’m really happy with how it came out, and the header graphic is awesome:

https://euobserver.com/opinion/157187

De-monopolizing tech has become my life’s work, both because tech is foundational (tech is how we organize to fight over labor, gender and race equality, and climate justice), and because tech has all of these technical aspects, which open up new avenues for shrinking Big Tech, without waiting decades for traditional antitrust breakups to run their course (we need these too, though!).

I’ve written a book laying out a shovel-ready plan to give tech back to its users through interoperability, explaining how to make new regulations (and reform old ones), what they should say, how to enforce them, and how to detect and stop cheating. It’s called “The Internet Con: How To Seize the Means of Computation” and it’s coming from Verso Books this September:

https://www.versobooks.com/products/3035-the-internet-con

[Image ID: The cover of the Verso Books hardcover of ‘The Internet Con: How to Seize the Means of Computation’]

I just got my first copy in the mail yesterday, and it’s a gorgeous little package. The timing was great, because I spent the whole week in the studio at Skyboat Media recording the audiobook — the first audiobook of mine that I’ve narrated. It was a fantastic experience, and I’ll be launching a Kickstarter to presell the DRM-free audio and ebooks as well as hardcovers, in a couple weeks.

Though I like doing these crowdfunders, I do them because I have to. Amazon’s Audible division, the monopolist that controls >90% of the audiobook market, refuses to carry my work because it is DRM-free. When you buy a DRM-free audiobook, that means that you can play it on anyone’s app, not just Amazon’s. Every audiobook you’ve ever bought from Audible will disappear the moment you decide to break up with Amazon, which means that Amazon can absolutely screw authors and audiobook publishers because they’ve taken our customers hostage.

If you are unwise enough to pursue an MBA, you will learn a term of art for this kind of market structure: it’s a “moat,” that is, an element of the market that makes it hard for new firms to enter the market and compete with you. Warren Buffett pioneered the use of this term, and now it’s all but mandatory for anyone launching a business or new product to explain where their moat will come from.

As Dan Davies writes, these “moats” aren’t really moats in the Buffett sense. With Coke and Disney, he says, a “moat” was “the fact that nobody else could make such a great product that everyone wanted.” In other words, “making a good product” is a great moat:

https://backofmind.substack.com/p/stuck-in-the-moat

But making a good product is a lot of work and not everyone is capable of it. Instead, “moat” now just means some form of lock-in. Davies counsels us to replace “moat” with:

our subscription system and proprietary interface mean that our return on capital is protected by a strong Berlin Wall, preventing our customers from getting out to a freer society and forcing them to consume our inferior products for lack of alternative.

I really like this. It pairs well with my 2020 observation that the fight over whether “IP” is a meaningful term can be settled by recognizing that IP has a precise meaning in business: “Any policy that lets me reach beyond the walls of my firm to control the conduct of my competitors, critics and customers”:

https://locusmag.com/2020/09/cory-doctorow-ip/

To see how that works in the real world, check out “The Anti-Ownership Ebook Economy,” a magisterial piece of scholarship from Sarah Lamdan, Jason M. Schultz, Michael Weinberg and Claire Woodcock:

https://www.nyuengelberg.org/outputs/the-anti-ownership-ebook-economy/

Something happened when we shifted to digital formats that created a loss of rights for readers. Pulling back the curtain on the evolution of ebooks offers some clarity to how the shift to digital left ownership behind in the analog world.

The research methodology combines both anonymous and named sources in publishing, bookselling and librarianship, as well as expert legal and economic analysis. This is an eminently readable, extremely smart, and really useful contribution to the scholarship on how “IP” (in the modern sense) has transformed books from something you own to something that you can never own.

The truth is, capitalists hate capitalism. Inevitably, the kind of person who presides over a giant corporation and wields power over millions of lives — workers, suppliers and customers — believes themselves to be uniquely and supremely qualified to be a wise dictator. For this kind of person, competition is “wasteful” and distracts them from the important business of making everyone’s life better by handing down unilateral — but wise and clever — edits. Think of Peter Thiel’s maxim, “competition is for losers.”

That’s why giant companies love to merge with each other, and buy out nascent competitors. By rolling up the power to decide how you and I and everyone else live our lives, these executives ensure that they can help us little people live the best lives possible. The traditional role of antitrust enforcement is to prevent this from happening, countering the delusions of would-be life-tenured autocrats of trade with public accountability and enforcement:

https://marker.medium.com/we-should-not-endure-a-king-dfef34628153

Of course, for 40 years, we’ve had neoliberal, Reaganomics-poisoned antitrust, where monopolies are celebrated as “efficient” and their leaders exalted as geniuses whose commercial empires are evidence of merit, not savagery. That era is, thankfully, coming to an end, and not a moment too soon.

Leading the fight is the aforementioned FTC chair Lina Khan, who is taking huge swings at even bigger mergers. But the EU is no slouch in this department: they’re challenging the Adobe/Figma merger, a $20b transaction that is obviously and solely designed to recapture customers who left Adobe because they didn’t want to struggle under its yoke any longer:

https://gizmodo.com/adobe-figma-acquisition-likely-to-face-eu-investigation-1850555562

For autocrats of trade, this is an intolerable act of disloyalty. We owe them our fealty and subservience, because they are self-evidently better at understanding what we need than we could ever be. This unwarranted self-confidence from the ordinary mediocrities who end up running giant tech companies gets them into a whole lot of hot water.

One keen observer of the mind-palaces that tech leaders trap themselves in is Anil Dash, who describes the conspiratorial, far-right turn of the most powerful men (almost all men!) in Silicon Valley in a piece called “‘VC Qanon’ and the radicalization of the tech tycoons”:

https://www.anildash.com/2023/07/07/vc-qanon/

Dash builds on an editorial he published in Feb, “The tech tycoon martyrdom charade,” which explores the sense of victimhood the most powerful, wealthiest people in the Valley project:

https://www.anildash.com/2023/02/27/tycoon-martyrdom-charade/

These dudes are prisoners of their Great Man myth, and it leads them badly astray. And while all of us are prone to lapses in judgment and discernment, Dash makes the case that tech leaders are especially prone to it:

Nobody becomes a billionaire by accident. You have to have wanted that level of power, control and wealth more than you wanted anything else in your life. They all sacrifice family, relationships, stability, community, connection, and belonging in service of keeping score on a scale that actually yields no additional real-world benefits on the path from that first $100 million to the tens of billions.

This makes billionaires “a cohort that is, counterintuitively, very easily manipulated.” What’s more, they’re all master manipulators, and they all hang out with each other, which means that when a conspiratorial belief takes root in one billionaire’s brain, it spreads to the rest of them like wildfire.

Then, billionaires “push each other further and further into extreme ideas because their entire careers have been predicated on the idea that they’re genius outliers who can see things others can’t, and that their wealth is a reward for that imagined merit.”

They live in privileged bubbles, which insulate them from disconfirming evidence, ironic given how many of these bros think they are wise senators in the agora.

There are examples of billionaires’ folly all around us today, of course. Take privacy: the idea that we can — we should — we must — spy on everyone, all the time, in every way, to eke out tiny gains in ad performance is objectively batshit. And yet, wealthy people decreed this should be so, and it was, and made them far richer.

Leaked data from Microsoft’s Xandr ad-targeting database reveals how the commercial surveillance delusion led us to a bizarre and terrible place, as reported on by The Markup:

https://themarkup.org/privacy/2023/06/08/from-heavy-purchasers-of-pregnancy-tests-to-the-depression-prone-we-found-650000-ways-advertisers-label-you

The Markup’s report lets you plumb 650,000 targeting categories, searching by keyword or loading random sets, 20 at a time. Do you want to target gambling addicts, people taking depression meds or Jews? Xandr’s got you covered. What could possibly go wrong?

The Xandr files come from German security researcher Wolfie Christl from Cracked Labs. Christl is a European, and he’s working with the German digital rights group Netzpolitik to get the EU to scrutinize all the ways that Xandr is flouting EU privacy laws.

Billionaires’ big ideas lead us astray in more tangible ways, of course. Writing in The Conversation, John Quiggin asks us to take a hard look at the much ballyhooed (and expensively ballyhooed) “nuclear renaissance”:

https://theconversation.com/dutton-wants-australia-to-join-the-nuclear-renaissance-but-this-dream-has-failed-before-209584

Despite the rhetoric, nukes aren’t cheap, and they aren’t coming back. Georgia’s new nuclear plant is behind schedule and over budget, but it’s still better off than South Carolina’s nukes, which were so over budget that they were abandoned in 2017. France’s nuke is a decade behind schedule. Finland’s opened this year, 14 years late. The UK’s Hinkley Point C reactor is massively behind schedule and over budget (and when it’s done, it will be owned by the French government!).

China’s nuclear success story also doesn’t hold up to scrutiny — they’ve brought 50GW of nukes online, sure, but they’re building 95–120GW of solar every year.

Solar is the clear winner here, along with other renewables, which are plummeting in cost (while nukes soar) and are accelerating in deployments (while nukes are plagued with ever-worsening delays).

This is the second nuclear renaissance — the last one, 20 years ago, was a bust, and that was before renewables got cheap, reliable and easy to manufacture and deploy. You’ll hear fairy-tales about how the early 2000s bust was caused by political headwinds, but that’s simply untrue: there were almost no anti-nuke marches then, and governments were scrambling to figure out low-carbon alternatives to fossil fuels (this was before the latest round of fossil fuel sabotage).

The current renaissance is also doomed. Yes, new reactors are smaller and safer and won’t have the problems intrinsic to all megaprojects, but designs like VOYGR have virtually no signed deals. Even if they do get built, their capacity will be dwarfed by renewables — a Gen III nuke will generate 710MW of power. Globally, we add that much solar every single day.

And solar power is cheap. Even after US subsidies, a Gen III reactor would charge A$132/MWh — current prices are as low as A$64-$114/MWh.

Nukes are getting a charm offensive because wealthy people are investing in hype as a way of reaping profits — not as a way of generating safe, cheap, reliable energy.

Here in the latest stage of capitalism, value and profit are fully decoupled. Monopolists are shifting more and more value from suppliers and customers to their shareholders every day. And when the customer is the government, the depravity knows no bounds. In Responsible Statecraft, Connor Echols describes how military contractors like Boeing are able to bill the Pentagon $52,000 for a trash can:

https://responsiblestatecraft.org/2023/06/20/the-pentagons-52000-trash-can/

Military Beltway Bandits are nothing new, of course, but they’ve gotten far more virulent since the Obama era, when Obama’s DoD demanded that the primary contractors merge to a bare handful of giant firms, in the name of “efficiency.” As David Dayen writes in his must-read 2020 book Monopolized, this opened the door to a new kind of predator:

https://pluralistic.net/2021/01/29/fractal-bullshit/#dayenu

The Obama defense rollups were quickly followed by another wave of rollups, these ones driven by Private Equity firms who cataloged which subcontractors were “sole suppliers” of components used by the big guys. These companies were all acquired by PE funds, who then lowered the price of their products, selling them below cost.

This maximized the use of those parts in weapons and aircraft sold by primary contractors like Boeing, which created a durable, long-lasting demand for fresh parts for DoD maintenance of its materiel. PE-owned suppliers hit Uncle Sucker with multi-thousand-percent markups for these parts, which have now wormed their way into every corner of the US arsenal.

Yes, this is infuriating as hell, but it’s also so grotesquely wrong that it’s impossible to defend, as we see in this hilarious clip of Rep Katie Porter grilling witnesses on US military waste:

https://www.youtube.com/watch?v=TJhf6l1nB9A

Porter pulls out the best version yet of her infamous whiteboard and makes her witnesses play defense-ripoff Jeopardy!, providing answers to a series of indefensible practices.

It’s sure nice when our government does something for us, isn’t it? We absolutely can have nice things, and we’re about to get them. The Infrastructure Bill contains $42B in subsidies for fiber rollouts across the country, which will be given to states to spend. Ars Technica’s Jon Brodkin breaks down the state-by-state spending:

https://arstechnica.com/tech-policy/2023/06/us-allocates-42b-in-broadband-funding-find-out-how-much-your-state-will-get/

Texas will get $3.31B, California will get $1.86B, and 17 other states will get $1B or more. As the White House announcement put it, “High-speed Internet is no longer a luxury.”

To understand how radical this is, you need to know that for decades, the cable and telco sector has grabbed billions in subsidies for rural and underserved communities, and then either stole the money outright, or wasted it building copper networks that run at a fraction of a percent of fiber speeds.

This is how America — the birthplace of the internet — ended up with some of the world’s slowest, most expensive broadband, even after handing out tens of billions of dollars in subsidies. Those subsidies were gobbled up by greedy, awful phone companies — these ones must be spent wisely, on long-lasting, long-overdue fiber infrastructure.

That’s a good note to end on, but I’ve got an even better one: birds in the Netherlands are tearing apart anti-bird strips and using them to build their nests. Wonderful creatures, 1; hostile architecture, 0. Nature is healing:

https://www.theguardian.com/science/2023/jul/11/crows-and-magpies-show-their-metal-by-using-anti-bird-spikes-to-build-nests

If you'd like an essay-formatted version of this thread to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/07/15/in-the-dumps/#what-vacation

Next Tues, Jul 18, I'm hosting the first Clarion Summer Write-In Series, an hour-long, free drop-in group writing and discussion session. It's in support of the Clarion SF/F writing workshop's fundraiser to offer tuition support to students:

https://mailchi.mp/theclarionfoundation/clarion-write-ins

[Image ID: A dump-truck, dumping out a load of gravel. A caricature of Humpty Dumpty clings to its lip, restrained by a group of straining, Lilliputian men.]

#pluralistic#infrastructure#broadband#linkdumps#fran drescher#labor#strikes#libraries#big tech#sag aftra#writer's strike#commercial surveillance#actor's strike#data brokers#ebooks#moats and walls#drm#licensing#glam#publishing#military privacy#copyfight#platform economics#nukes#adam conover#pentagon#birds#mergers#delightful creatures#hostile architecture


Unlock the other 99% of your data - now ready for AI

New Post has been published on https://thedigitalinsider.com/unlock-the-other-99-of-your-data-now-ready-for-ai/


For decades, companies of all sizes have recognized that the data available to them holds significant value for improving user and customer experiences and for developing strategic plans based on empirical evidence.

As AI becomes increasingly accessible and practical for real-world business applications, the potential value of available data has grown exponentially. Successfully adopting AI requires significant effort in data collection, curation, and preprocessing. Moreover, important aspects such as data governance, privacy, anonymization, regulatory compliance, and security must be addressed carefully from the outset.

In a conversation with Henrique Lemes, Americas Data Platform Leader at IBM, we explored the challenges enterprises face in implementing practical AI in a range of use cases. We began by examining the nature of data itself, its various types, and its role in enabling effective AI-powered applications.

Henrique highlighted that referring to all enterprise information simply as ‘data’ understates its complexity. The modern enterprise navigates a fragmented landscape of diverse data types and inconsistent quality, particularly between structured and unstructured sources.

In simple terms, structured data refers to information that is organized in a standardized and easily searchable format, one that enables efficient processing and analysis by software systems.

Unstructured data is information that does not follow a predefined format or organizational model, making it more complex to process and analyze. Unlike structured data, it includes diverse formats like emails, social media posts, videos, images, documents, and audio files. While it lacks the clear organization of structured data, unstructured data holds valuable insights that, when effectively managed through advanced analytics and AI, can drive innovation and inform strategic business decisions.
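To make the distinction concrete, here is a minimal sketch in Python using invented sample data (the order table, the email text, and both helper functions are hypothetical, not from IBM or the interview). With structured data, a question like "which orders are large?" is a one-line filter over named fields. With unstructured text, even pulling out an order number needs a heuristic, and the customer's actual complaint is not captured by any field at all.

```python
import csv
import io
import re

# Hypothetical sample data, invented purely for illustration.
STRUCTURED_CSV = """order_id,customer,amount_usd
1001,Acme Corp,2500.00
1002,Globex,310.50
1003,Initech,1200.00
"""

UNSTRUCTURED_EMAIL = (
    "Hi team, following up on order 1002 from Globex. "
    "The customer says the invoice total looks wrong and wants a call "
    "before Friday."
)


def large_orders(csv_text, threshold):
    """Structured data: fields are named, so a query is a simple filter."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row["order_id"] for row in reader if float(row["amount_usd"]) >= threshold]


def mentioned_order_ids(text):
    """Unstructured data: there are no fields, so extracting an order number
    relies on a naive regex that assumes 'order' is followed by digits."""
    return re.findall(r"\border\s+(\d+)", text, flags=re.IGNORECASE)


print(large_orders(STRUCTURED_CSV, 1000))       # ['1001', '1003']
print(mentioned_order_ids(UNSTRUCTURED_EMAIL))  # ['1002']
```

The regex is deliberately simplistic; it would miss phrasings like "PO #1002", which is the kind of fragility the article attributes to processing unstructured sources at scale.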

Henrique stated, “Currently, less than 1% of enterprise data is utilized by generative AI, and over 90% of that data is unstructured, which directly affects trust and quality”.

The element of trust in terms of data is an important one. Decision-makers in an organization need firm belief (trust) that the information at their fingertips is complete, reliable, and properly obtained. But evidence suggests that less than half of the data available to businesses is used for AI, with unstructured data often ignored or sidelined because of the complexity of processing it and examining it for compliance, especially at scale.

To open the way to better decisions that are based on a fuller set of empirical data, the trickle of easily consumed information needs to be turned into a firehose. Automated ingestion is the answer in this respect, Henrique said, but the governance rules and data policies still must be applied – to unstructured and structured data alike.

Henrique set out the three processes that let enterprises leverage the inherent value of their data. “Firstly, ingestion at scale. It’s important to automate this process. Second, curation and data governance. And the third [is when] you make this available for generative AI. We achieve over 40% of ROI over any conventional RAG use-case.”
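As a rough illustration of those three stages, the sketch below chains ingestion, governance, and preparation for generative AI in plain Python. It is not IBM's implementation and makes no claim about the ROI figure quoted above. The `restricted` label, the email-redaction rule standing in for a PII policy, and the word-window chunking used to prepare text for retrieval-augmented generation (RAG) are all hypothetical choices made for the example.

```python
import re
from dataclasses import dataclass, field

# Hypothetical governance policy: documents with this label never reach the AI layer.
RESTRICTED_LABEL = "restricted"
# Naive email pattern, standing in for a real PII-detection step.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class Document:
    doc_id: str
    text: str
    labels: set = field(default_factory=set)


def ingest(raw_records):
    """Stage 1, ingestion: turn raw (id, text, labels) tuples into Documents."""
    return [Document(doc_id, text, set(labels)) for doc_id, text, labels in raw_records]


def govern(docs):
    """Stage 2, curation and governance: drop restricted documents and
    redact email addresses before anything is exposed downstream."""
    kept = []
    for doc in docs:
        if RESTRICTED_LABEL in doc.labels:
            continue
        kept.append(Document(doc.doc_id, EMAIL_RE.sub("[REDACTED]", doc.text), doc.labels))
    return kept


def chunk(docs, max_words=5):
    """Stage 3, availability for generative AI: split each document into
    small word windows tagged with their source, ready to be indexed for RAG."""
    chunks = []
    for doc in docs:
        words = doc.text.split()
        for start in range(0, len(words), max_words):
            chunks.append({"source": doc.doc_id, "text": " ".join(words[start:start + max_words])})
    return chunks


raw = [
    ("memo-1", "Contact jane.doe@example.com about the Q3 supplier contract renewal terms.", []),
    ("hr-7", "Salary review notes for the engineering team.", ["restricted"]),
]

for c in chunk(govern(ingest(raw)), max_words=5):
    print(c)
```

Running governance before chunking is the point Henrique makes: the policy applies to everything, so no chunk can leak a restricted document or an unredacted address into the retrieval index.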

IBM provides a unified strategy, rooted in a deep understanding of the enterprise’s AI journey, combined with advanced software solutions and domain expertise. This enables organizations to efficiently and securely transform both structured and unstructured data into AI-ready assets, all within the boundaries of existing governance and compliance frameworks.

“We bring together the people, processes, and tools. It’s not inherently simple, but we simplify it by aligning all the essential resources,” he said.

As businesses scale and transform, the diversity and volume of their data increase. To keep up, AI data ingestion processes must be both scalable and flexible.