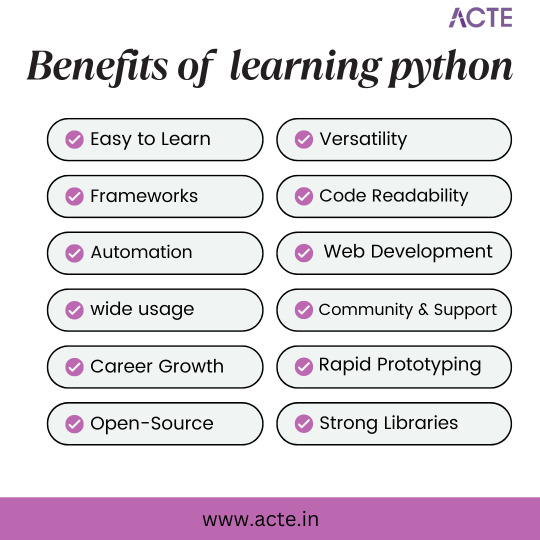

#benefits of python development services


Text

Python development can bring various benefits to your business. It saves many businesses time and money by improving developer productivity.

#Python app development#benefits of Python app development#Python app development services#Python app development USA#Hire Python app developers#best Python app development USA#Best Python app development Services


Text

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation through tools like Ansible (itself written in Python) and Boto3, and it can drive Terraform through the CDK for Terraform.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Python Enables Scalable Cloud Solutions

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.
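As a minimal sketch of this kind of automation, the snippet below launches one tagged EC2 instance through Boto3. The AMI ID, region, instance type, and tag value are placeholder assumptions, and calling `launch_instance` requires the `boto3` package plus valid AWS credentials. The request parameters are built in a separate pure function so they can be inspected without touching AWS.

```python
def build_run_instances_params(ami_id, instance_type="t3.micro", name="demo-web"):
    """Build keyword arguments for EC2 run_instances (pure function, no AWS call)."""
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        "MinCount": 1,
        "MaxCount": 1,
        "TagSpecifications": [
            {"ResourceType": "instance", "Tags": [{"Key": "Name", "Value": name}]}
        ],
    }


def launch_instance(ami_id, region="us-east-1"):
    """Launch one instance and return its ID. Needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**build_run_instances_params(ami_id))
    return response["Instances"][0]["InstanceId"]


# "ami-0123456789abcdef0" is a made-up placeholder, not a real image ID.
params = build_run_instances_params("ami-0123456789abcdef0")
```

Keeping the parameter builder separate from the API call makes the provisioning logic easy to review and unit-test before any resources are created.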

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
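To make the serverless model concrete, here is a small sketch of an AWS Lambda handler written in Python. It assumes the function sits behind an API Gateway proxy integration, and the greeting logic is purely illustrative. The platform calls the handler once per request and runs as many copies as demand requires.

```python
import json


def lambda_handler(event, context):
    """Entry point that AWS Lambda calls once per invocation."""
    # With an API Gateway proxy integration, query parameters arrive under
    # "queryStringParameters", which is None when no parameters were sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is an ordinary function, it can be exercised locally by passing a dictionary as the event, for example `lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)`.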

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies can reduce cloud costs, because you pay only for the resources your application actually uses.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python is an interpreted language, so CPU-bound Python code typically runs slower than equivalent code in compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and pyOpenSSL (a Python wrapper around OpenSSL), enabling encryption, authentication, and cloud monitoring for secure cloud applications.
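As a small illustration of the authentication side, the sketch below signs and verifies a message with HMAC-SHA256. It deliberately uses only the standard library (`hmac`, `hashlib`, `secrets`) rather than the third-party libraries named above, and the message contents are made up for the example.

```python
import hashlib
import hmac
import secrets


def sign(message: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, key: bytes, tag: str) -> bool:
    """Check a tag using a constant-time comparison."""
    return hmac.compare_digest(sign(message, key), tag)


key = secrets.token_bytes(32)  # in practice, load the key from a secrets manager
tag = sign(b'{"job": "backup"}', key)
```

A receiver holding the same key can call `verify` to confirm that a message was not altered in transit. A changed message produces a different tag and fails the check.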

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark (through PySpark), Pandas, and NumPy, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments


Text

Why You Should Learn Web Scraping with Python

Web scraping is a valuable skill for Python developers, offering numerous benefits and applications. Here’s why you should consider learning and using web scraping with Python:

1. Automate Data Collection

Web scraping allows you to automate the tedious task of manually collecting data from websites. This can save significant time and effort when dealing with large amounts of data.

2. Gain Access to Real-World Data

Most real-world data exists on websites, often in formats that are not readily available for analysis (e.g., displayed in tables or charts). Web scraping helps extract this data for use in projects like:

Data analysis

Machine learning models

Business intelligence

3. Competitive Edge in Business

Businesses often need to gather insights about:

Competitor pricing

Market trends

Customer reviews

Web scraping can help automate these tasks, providing timely and actionable insights.

4. Versatility and Scalability

Python’s ecosystem offers a range of tools and libraries that make web scraping highly adaptable:

BeautifulSoup: For simple HTML parsing.

Scrapy: For building scalable scraping solutions.

Selenium: For handling dynamic, JavaScript-rendered content.

This versatility allows you to scrape a wide variety of websites, from static pages to complex web applications.
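To show what simple HTML parsing looks like, here is a minimal sketch that extracts link targets from a page. It uses the standard library's `html.parser` as a stand-in for BeautifulSoup so that it runs without extra installs, and the sample markup is invented for the example.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


# Sample markup stands in for a downloaded page.
html = (
    '<ul><li><a href="/jobs/1">Data Engineer</a></li>'
    '<li><a href="/jobs/2">Analyst</a></li></ul>'
)
collector = LinkCollector()
collector.feed(html)
print(collector.links)  # ['/jobs/1', '/jobs/2']
```

Libraries like BeautifulSoup wrap this kind of parsing in a friendlier search API, which matters once pages become larger or less regular.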

5. Academic and Research Applications

Researchers can use web scraping to gather datasets from online sources, such as:

Social media platforms

News websites

Scientific publications

This facilitates research in areas like sentiment analysis, trend tracking, and bibliometric studies.

6. Enhance Your Python Skills

Learning web scraping deepens your understanding of Python and related concepts:

HTML and web structures

Data cleaning and processing

API integration

Error handling and debugging

These skills are transferable to other domains, such as data engineering and backend development.

7. Open Opportunities in Data Science

Many data science and machine learning projects require datasets that are not readily available in public repositories. Web scraping empowers you to create custom datasets tailored to specific problems.

8. Real-World Problem Solving

Web scraping enables you to solve real-world problems, such as:

Aggregating product prices for an e-commerce platform.

Monitoring stock market data in real-time.

Collecting job postings to analyze industry demand.

9. Low Barrier to Entry

Python's libraries make web scraping relatively easy to learn. Even beginners can quickly build effective scrapers, making it an excellent entry point into programming or data science.

10. Cost-Effective Data Gathering

Instead of purchasing expensive data services, web scraping allows you to gather the exact data you need at little to no cost, apart from the time and computational resources.

11. Creative Use Cases

Web scraping supports creative projects like:

Building a news aggregator.

Monitoring trends on social media.

Creating a chatbot with up-to-date information.

Caution

While web scraping offers many benefits, it’s essential to use it ethically and responsibly:

Respect websites' terms of service and robots.txt.

Avoid overloading servers with excessive requests.

Ensure compliance with data privacy laws like GDPR or CCPA.
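The first two points can be sketched with the standard library. The example below checks URLs against robots.txt rules and pauses between requests. The robots.txt content, the `example-bot` user agent, and the `fetch` callback are all assumptions made for illustration.

```python
import time
from urllib.robotparser import RobotFileParser

# Sample robots.txt content; a real scraper would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def allowed_urls(urls, user_agent="example-bot"):
    """Keep only the URLs that the robots.txt rules permit for this user agent."""
    return [url for url in urls if robots.can_fetch(user_agent, url)]


def crawl(urls, fetch, user_agent="example-bot"):
    """Call fetch(url) for each permitted URL, pausing between requests."""
    delay = robots.crawl_delay(user_agent) or 1  # default to 1 second if unspecified
    for url in allowed_urls(urls, user_agent):
        fetch(url)
        time.sleep(delay)


candidates = ["https://example.com/products", "https://example.com/private/admin"]
print(allowed_urls(candidates))
```

Only the `/products` URL passes the filter here, because the sample rules disallow everything under `/private/`.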

If you'd like guidance on getting started or exploring specific use cases, let me know!


Text

Best AI Training in Electronic City, Bangalore – Become an AI Expert & Launch a Future-Proof Career!


Want to break into the world of Artificial Intelligence? Join eMexo Technologies for the Best AI Training in Electronic City, Bangalore – your gateway to mastering AI and building a high-paying, future-ready career in one of the fastest-growing tech domains.

Our job-oriented AI Certification Course in Electronic City Bangalore is designed for both beginners and experienced professionals. With hands-on projects, live case studies, and expert guidance, you’ll gain the practical skills and confidence needed to become job-ready and certified.

🌟Who Should Enroll in this AI Course in Electronic City Bangalore?

Whether you're a student, fresher, software developer, data analyst, or IT professional aiming to transition into AI, this comprehensive AI Course in Electronic City Bangalore is tailored for all experience levels.

📘What You’ll Learn in Our AI Certification Course:

Foundations of AI: Core AI concepts, machine learning, deep learning, and neural networks

Python for AI: Programming essentials with real-time examples and exercises

Machine Learning Models: Supervised, unsupervised, and reinforcement learning techniques

Deep Learning Tools: TensorFlow, Keras, OpenCV, and other key libraries

Natural Language Processing (NLP): Chatbots, sentiment analysis, and text analytics

Live AI Projects: Real-time case studies like image recognition and recommendation systems

🚀Why Choose eMexo Technologies for AI Training in Electronic City Bangalore?

We are more than just an AI training center in Electronic City Bangalore – we are your AI career partner. Our mission is to provide top-quality, job-oriented learning through certified trainers, personalized mentorship, and AI training placement in Electronic City Bangalore.

What Makes Us the Best AI Training Institute in Electronic City Bangalore:

✅ Expert-led sessions by certified AI and ML professionals

✅ Fully-equipped labs and real-time hands-on learning

✅ Career services including resume building & mock interviews

✅ Individual learning paths tailored to your career goals

✅ 100% placement assistance with dedicated support

📅Upcoming AI Training Batch Details:

Start Date: June 1, 2025

Time: 10:00 AM IST

Location: eMexo Technologies, Electronic City, Bangalore

Mode: Available in both Classroom & Online formats

👥Who Can Benefit from This AI Training in Electronic City Bangalore?

Students and freshers aiming to launch their AI careers

IT professionals and software developers looking to upskill

Data analysts and system engineers moving into AI and ML

Anyone preparing for a career in Artificial Intelligence

🎯Secure Your AI Career with Confidence

Enroll now in the top-rated AI Training Institute in Electronic City Bangalore and unlock global opportunities in Artificial Intelligence, Machine Learning, and Data Science.

📞 Call or WhatsApp: +91-9513216462

📧 Email: [email protected]

🌐 Website: https://www.emexotechnologies.com/courses/artificial-intelligence-certification-training-course/

Limited Seats Available – Enroll Today and Begin Your AI Journey with eMexoTechnologies!

#AITrainingInElectronicCityBangalore#AICertificationCourseInElectronicCityBangalore#AICourseInElectronicCityBangalore#AITrainingCenterInElectronicCityBangalore#AITrainingInstituteInElectronicCityBangalore#BestAITrainingInstituteInElectronicCityBangalore#AITrainingPlacementInElectronicCityBangalore#AICertificationCourseBangalore#MachineLearning#DeepLearning#LearnAI#AIJobs#AIWithPython#AIProjects#ArtificialIntelligenceTraining#eMexoTechnologies#FutureTechSkills#ITTrainingBangalore#Youtube


Text

Gemini Code Assist Enterprise: AI App Development Tool

Introducing Gemini Code Assist Enterprise’s AI-powered app development tool that allows for code customisation.

The modern economy is driven by software development. Unfortunately, due to a lack of skilled developers, a growing number of integrations, vendors, and abstraction levels, developing effective apps across the tech stack is difficult.

To expedite application delivery and stay competitive, IT leaders must provide their teams with AI-powered solutions that assist developers in navigating complexity.

Google Cloud thinks that the best way to address development challenges is to offer an AI-powered application development solution that works across the tech stack, along with enterprise-grade security guarantees, better contextual suggestions, and cloud integrations that let developers work more quickly and flexibly with a wider range of services.

Google Cloud is presenting Gemini Code Assist Enterprise, the next generation of application development capabilities.

Gemini Code Assist Enterprise goes beyond AI-powered coding assistance in the IDE. This is application development support at the enterprise level. Gemini’s large token context window supports deep local codebase awareness: it can take into account the details of your local codebase and ongoing development session, allowing you to generate or transform code that is better suited to your application.

With code customization, Code Assist Enterprise not only understands your local codebase but also provides code suggestions based on your company's internal libraries and best practices. As a result, Code Assist can produce personalized code suggestions that are more precise and relevant to your company. Developers can complete difficult tasks, such as updating the Java version across a whole repository, stay in the flow state for longer, and bring more insights directly into their IDEs. This lets developers concentrate on finding creative solutions to problems, which increases job satisfaction and gives your company a competitive advantage. You can also get to market more quickly.

GitLab.com and GitHub.com repos can be indexed by Gemini Code Assist Enterprise code customisation; support for self-hosted, on-premise repos and other source control systems will be added in early 2025.

Yet IDEs are not the only tool used to build apps. Code Assist Enterprise integrates coding support across Google Cloud’s services to help specialist coders become more adaptable builders. A code assistant significantly decreases the time required to transition to new technologies, and it also builds the subtleties of an organization’s coding standards into its recommendations. The more services it covers, the faster your builders can create and deliver applications. To meet developers where they are, Code Assist Enterprise provides coding assistance in Firebase, Databases, BigQuery, Colab Enterprise, Apigee, and Application Integration. Furthermore, each Gemini Code Assist Enterprise user can access these products’ features; they are not separate purchases.

In BigQuery, Code Assist Enterprise users can benefit from SQL and Python code assistance. They can also go beyond editor-based code assistance and speed up their analytics workflows with pre-validated, ready-to-run queries (data insights) and a natural language-based interface for data exploration, curation, wrangling, analysis, and visualization (data canvas).

Furthermore, because security and privacy are of utmost importance to any business, Code Assist Enterprise does not use your firm's proprietary data to train the Gemini model. Source code indexed for code customization is stored in a Google Cloud-managed project and kept isolated for each customer organization. Customers are in complete control of which source repositories to use for customization, and they can delete all data at any time.

Your company and data are safeguarded by Google Cloud’s commitment to enterprise readiness, data governance, and security. This is demonstrated by efforts like software supply chain security, Mandiant research, and purpose-built infrastructure, as well as by generative AI indemnification.

Google Cloud provides you with the greatest tools for AI coding support so that your engineers may work happily and effectively. The market is also paying attention. Because of its ability to execute and completeness of vision, Google Cloud has been ranked as a Leader in the Gartner Magic Quadrant for AI Code Assistants for 2024.

Gemini Code Assist Enterprise Costs

In general, Gemini Code Assist Enterprise costs $45 per month per user; however, under a promotion available until March 31, 2025, a one-year subscription costs only $19 per month per user.

Read more on Govindhtech.com

#Gemini#GeminiCodeAssist#AIApp#AI#AICodeAssistants#CodeAssistEnterprise#BigQuery#Geminimodel#News#Technews#TechnologyNews#Technologytrends#Govindhtech#technology


Text

Mastering Power BI: A Comprehensive Online Course for Data Professionals

In the era of big data, the ability to visualize and interpret data effectively has become a vital skill for businesses and professionals alike. Power BI, a robust business analytics tool from Microsoft, empowers users to transform raw data into actionable insights. This article explores the benefits of learning Power BI through an online course, outlines the essential skills covered, and highlights how this training can elevate your data analysis capabilities.

Why Choose Power BI?

Power BI is known for its ability to integrate seamlessly with various data sources and to offer intuitive, interactive visualizations. Its user-friendly interface and powerful features make it a favorite among data professionals. Here are some key reasons to choose Power BI:

Interactive Dashboards: Power BI allows users to create visually engaging, interactive dashboards that give a comprehensive view of business metrics.

Data Connectivity: With the ability to connect to a wide range of data sources, including databases, cloud services, and Excel spreadsheets, Power BI makes data integration effortless.

Advanced Analytics: Power BI supports advanced analytics with features like DAX (Data Analysis Expressions) for custom calculations and predictive modeling.

Collaboration and Sharing: Power BI enables easy sharing of reports and dashboards, fostering collaboration across teams and departments.

Benefits of an Online Power BI Course:

Flexible Learning Environment

Online courses offer the flexibility to learn at your own pace, making them ideal for professionals balancing work commitments.

Access to Expert Instruction

Learn from industry experts who share insights and practical knowledge, ensuring you gain a comprehensive understanding of Power BI.

Practical Application

Hands-on exercises and real-world projects help you apply what you learn, solidifying your skills and building your confidence in using Power BI.

Cost-Effective Learning

Online courses generally offer a cost-effective alternative to traditional classroom training, with savings on travel and accommodation expenses.

Core Topics Covered in the Course:

A well-rounded Power BI online course generally includes the following key areas.

Introduction to Power BI

Understanding the Power BI ecosystem.

Setting up Power BI Desktop and navigating the interface.

Connecting to different data sources.

Data Preparation and Transformation

Using Power Query for data cleaning and transformation.

Creating relationships between data tables.

Understanding and applying DAX for data analysis.

Data Visualization and Reporting

Designing compelling visualizations and interactive reports.

Customizing dashboards and using themes.

Implementing drill-through and drill-down functionality.

Advanced Features and Best Practices

Using AI visuals and integrating R and Python scripts.

Performance optimization techniques for large datasets.

Best practices for report design and data storytelling.

Collaboration and Sharing

Publishing reports to Power BI Service.

Sharing and collaborating with team members.

Setting up data refresh schedules and alerts.

Career Advancement with Power BI Skills

Acquiring Power BI skills can significantly enhance your career prospects in various roles, including:

Data Analyst: Transform data into insights to support business decision-making.

Business Intelligence Developer: Develop and maintain BI solutions that drive strategic initiatives.

Data Scientist: Leverage Power BI for data visualization and communication of complex findings.

IT Professional: Enhance data management and reporting capabilities within organizations.

Conclusion:

Mastering Power BI through an online course offers a valuable opportunity to develop in-demand data analytics skills. With the ability to create impactful visualizations, perform advanced data analysis, and collaborate effectively, Power BI proficiency positions you as a vital asset in today’s data-centric world. Start your journey with a comprehensive Power BI online course and unlock the full potential of your data analysis capabilities.

#powerbi#darascience#dataanalytics#BusinessIntelligence#data#DataInsights#python#DataDrivenDecisions#tableau#DataTools#sql#dashboard#DataReporting#onlinelearning#analytics#courses#dynamics#bi#software#nareshit


Text

Eko API Integration: A Comprehensive Solution for Money Transfer, AePS, BBPS, and Money Collection

The financial services industry is undergoing a rapid transformation, driven by the need for seamless digital solutions that cater to a diverse customer base. Eko, a prominent fintech platform in India, offers a suite of APIs designed to simplify the integration of various financial services, including Money Transfer, Aadhaar-enabled Payment System (AePS), Bharat Bill Payment System (BBPS), and Money Collection. This article covers the process and benefits of integrating Eko’s APIs to offer these services, transforming how businesses interact with and serve their customers.

Understanding Eko's API Offerings

Eko provides a powerful set of APIs that enable businesses to integrate essential financial services into their digital platforms. These services include:

Money Transfer (DMT)

Aadhaar-enabled Payment System (AePS)

Bharat Bill Payment System (BBPS)

Money Collection

Each of these services caters to different needs but together they form a comprehensive financial toolkit that can significantly enhance a business's offerings.

1. Money Transfer API Integration

Eko’s Money Transfer API allows businesses to offer domestic money transfer services directly from their platforms. This API is crucial for facilitating quick, secure, and reliable fund transfers across different banks and accounts.

Key Features:

Multiple Transfer Modes: Support for IMPS (Immediate Payment Service), NEFT (National Electronic Funds Transfer), and RTGS (Real Time Gross Settlement), ensuring flexibility for various transaction needs.

Instant Transactions: Enables real-time money transfers, which is crucial for businesses that need to provide immediate service.

Security: Strong encryption and authentication protocols to ensure that every transaction is secure and compliant with regulatory standards.

Integration Steps:

API Key Acquisition: Start by signing up on the Eko platform to obtain API keys for authentication.

Development Environment Setup: Use the language of your choice (e.g., Python, Java, Node.js) and integrate the API according to the provided documentation.

Testing and Deployment: Utilize Eko's sandbox environment for testing before moving to the production environment.
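The integration steps above can be sketched in Python as follows. This is a generic illustration of building an authenticated JSON request, not Eko's actual API: the base URL, the `/transfers` path, the `X-Api-Key` header, and the payload field names are all hypothetical placeholders, so consult Eko's documentation for the real endpoints and authentication scheme.

```python
import json
import urllib.request

# Placeholder values: none of these come from Eko's documentation.
SANDBOX_BASE_URL = "https://sandbox.example.invalid/v1"
API_KEY = "replace-with-your-key"


def build_transfer_request(account, ifsc, amount_rupees, mode="IMPS"):
    """Build (but do not send) a JSON POST request for a money transfer."""
    payload = {
        "account": account,
        "ifsc": ifsc,
        "amount": amount_rupees,
        "mode": mode,  # the source lists IMPS, NEFT, and RTGS as transfer modes
    }
    return urllib.request.Request(
        url=f"{SANDBOX_BASE_URL}/transfers",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-Api-Key": API_KEY},
        method="POST",
    )


# Account number and IFSC code below are made-up sample values.
request = build_transfer_request("1234567890", "ABCD0123456", 500)
# urllib.request.urlopen(request, timeout=10) would send it once real values are in place.
```

Building the request separately from sending it makes it straightforward to point the same code at a sandbox first and at production later by changing only the base URL and key.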

2. Aadhaar-enabled Payment System (AePS) API Integration

The AePS API enables businesses to provide banking services using Aadhaar authentication. This is particularly valuable in rural and semi-urban areas where banking infrastructure is limited.

Key Features:

Biometric Authentication: Allows users to perform transactions using their Aadhaar number and biometric data.

Core Banking Services: Supports cash withdrawals, balance inquiries, and mini statements, making it a versatile tool for financial inclusion.

Secure Transactions: Ensures that all transactions are securely processed with end-to-end encryption and compliance with UIDAI guidelines.

Integration Steps:

Biometric Device Integration: Ensure compatibility with biometric devices required for Aadhaar authentication.

API Setup: Follow Eko's documentation to integrate the AePS functionalities into your platform.

User Interface Design: Work closely with UI/UX designers to create an intuitive interface for AePS transactions.

3. Bharat Bill Payment System (BBPS) API Integration

The BBPS API allows businesses to offer bill payment services, supporting a wide range of utility bills, such as electricity, water, gas, and telecom.

Key Features:

Wide Coverage: Supports bill payments for a vast network of billers across India, providing users with a one-stop solution.

Real-time Payment Confirmation: Provides instant confirmation of bill payments, improving user trust and satisfaction.

Secure Processing: Adheres to strict security protocols, ensuring that user data and payment information are protected.

Integration Steps:

API Key and Biller Setup: Obtain the necessary API keys and configure the billers that will be available through your platform.

Interface Development: Develop a user-friendly interface that allows customers to easily select and pay their bills.

Testing: Use Eko’s sandbox environment to ensure all bill payment functionalities work as expected before going live.

4. Money Collection API Integration

The Money Collection API is designed for businesses that need to collect payments from customers efficiently, whether it’s for e-commerce, loans, or subscriptions.

Key Features:

Versatile Collection Methods: Supports various payment methods including UPI, bank transfers, and debit/credit cards.

Real-time Tracking: Allows businesses to track payment statuses in real-time, ensuring transparency and efficiency.

Automated Reconciliation: Facilitates automatic reconciliation of payments, reducing manual errors and operational overhead.

Integration Steps:

API Configuration: Set up the Money Collection API using the detailed documentation provided by Eko.

Payment Gateway Integration: Integrate with preferred payment gateways to offer a variety of payment methods.

Testing and Monitoring: Conduct thorough testing and set up monitoring tools to track the performance of the money collection service.
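To illustrate what automated reconciliation involves, here is a minimal sketch that matches expected payments against received ones by reference ID. The data shapes, reference IDs, and amounts are assumptions for the example and are not taken from Eko's API.

```python
def reconcile(expected, received):
    """Match expected payments to received ones by reference ID.

    Both arguments map a reference ID to an amount in paise (integers avoid
    floating-point rounding). Returns (matched, mismatched, missing, unexpected).
    """
    matched, mismatched, missing = [], [], []
    for ref, amount in expected.items():
        if ref not in received:
            missing.append(ref)       # invoiced but never paid
        elif received[ref] == amount:
            matched.append(ref)       # paid in full
        else:
            mismatched.append(ref)    # paid, but with a different amount
    unexpected = [ref for ref in received if ref not in expected]
    return matched, mismatched, missing, unexpected


expected = {"INV-1": 50000, "INV-2": 120000, "INV-3": 9900}
received = {"INV-1": 50000, "INV-2": 100000, "TXN-9": 2500}
result = reconcile(expected, received)
print(result)  # (['INV-1'], ['INV-2'], ['INV-3'], ['TXN-9'])
```

Only the mismatched, missing, and unexpected entries need human review, which is where the reduction in manual effort comes from.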

The Role of an Eko API Integration Developer

Integrating these APIs requires a developer who not only understands the technical aspects of API integration but also the regulatory and security requirements specific to financial services.

Skills Required:

Proficiency in API Integration: Expertise in working with RESTful APIs, including handling JSON data, HTTP requests, and authentication mechanisms.

Security Knowledge: Strong understanding of encryption methods, secure transmission protocols, and compliance with local financial regulations.

UI/UX Collaboration: Ability to work with designers to create user-friendly interfaces that enhance the customer experience.

Problem-Solving Skills: Proficiency in debugging, testing, and ensuring that the integration meets the business’s needs without compromising on security or performance.

Benefits of Integrating Eko’s APIs

For businesses, integrating Eko’s APIs offers a multitude of benefits:

Enhanced Service Portfolio: By offering services like money transfer, AePS, BBPS, and money collection, businesses can attract a broader customer base and improve customer retention.

Operational Efficiency: Automated processes for payments and collections reduce manual intervention, thereby lowering operational costs and errors.

Increased Financial Inclusion: AePS and BBPS services help businesses reach underserved populations, contributing to financial inclusion goals.

Security and Compliance: Eko’s APIs are designed with robust security measures, ensuring compliance with Indian financial regulations, which is critical for maintaining trust and avoiding legal issues.

Conclusion

Eko’s API suite for Money Transfer, AePS, BBPS, and Money Collection is a powerful tool for businesses looking to expand their financial service offerings. By integrating these APIs, developers can create robust, secure, and user-friendly applications that meet the diverse needs of today’s customers. As digital financial services continue to grow, Eko’s APIs will play a vital role in shaping the future of fintech in India and beyond.

Contact Details:

Mobile: +91 9711090237

E-mail: [email protected]

#Eko India#Eko API Integration#api integration developer#api integration#aeps#Money transfer#BBPS#Money transfer Api Integration Developer#AePS API Integration#BBPS API Integration

Exploring Python and Its Incredible Benefits

Python, a versatile programming language known for its simplicity and adaptability, holds a prominent position in the technological landscape. Conceived in the late 1980s and first released in 1991, Python has garnered substantial attention due to its user-friendly syntax, making it an accessible choice for individuals at all levels of programming expertise. Notably, Python's design principles prioritize code clarity, empowering developers to articulate their ideas effectively and devise elegant solutions.

Python's applicability spans a multitude of domains, encompassing web development, data analysis, artificial intelligence, and scientific computing, among others. Its rich array of libraries and frameworks enhances efficiency in diverse tasks, including crafting dynamic websites, automating routine processes, processing and interpreting data, and constructing intricate applications.

The confluence of Python's flexibility and robust community support has driven its widespread adoption across varied industries. Whether one is a newcomer or an accomplished programmer, Python constitutes a potent toolset for software development and systematic problem-solving.

The ensuing enumeration underscores the merits of acquainting oneself with Python:

Accessible Learning: Python's straightforward syntax shortens the learning curve, letting learners focus on logical problem-solving rather than on language intricacies.

Versatility in Application: Python's versatility finds expression in applications spanning web development, data analysis, AI, and more, cultivating diverse avenues for career exploration.

Data Insight and Analysis: Python's specialized libraries, such as NumPy and Pandas, empower adept data analysis and visualization, enhancing data-driven decision-making.

AI and Machine Learning Proficiency: Python's repository of libraries, including Scikit-Learn, empowers the creation of sophisticated algorithms and AI models.

Web Development Prowess: Python's frameworks, notably Django, facilitate the swift development of dynamic, secure web applications, underscoring its relevance in modern web environments.

Efficient Prototyping: Python's agile development capabilities facilitate the rapid creation of prototypes and experimental models, fostering innovation.

Community Collaboration: The dynamic Python community serves as a wellspring of resources and support, nurturing an environment of continuous learning and problem resolution.

Varied Career Prospects: Proficiency in Python translates to an array of roles across diverse sectors, reflecting the expanding demand for skilled practitioners.

Cross-Disciplinary Impact: Python's adaptability transcends industries, permeating sectors such as finance, healthcare, e-commerce, and scientific research.

Open-Source Advantage: Python's open-source nature encourages collaboration, fostering ongoing refinement and communal contribution.

Robust Toolset: Python's toolkit simplifies complex tasks and accelerates development, enhancing productivity.

Code Elegance: Python's elegant syntax fosters code legibility, promoting teamwork and fostering shared comprehension.

Professional Advancement: Proficiency in Python translates into promising career advancement opportunities and the potential for competitive compensation.

Future-Proofed Skills: Python's enduring prevalence and versatile utility ensure that acquired skills remain pertinent within evolving technological landscapes.

In summation, Python's stature as a versatile, user-friendly programming language stands as a testament to its enduring relevance. Its impact is palpable across industries, driving innovation and technological progress.

If you want to learn more about Python, feel free to contact ACTE Institution because they offer certifications and job opportunities. Experienced teachers can help you learn better. You can find these services both online and offline. Take things step by step and consider enrolling in a course if you’re interested.

This Week in Rust 542

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on Twitter or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

Announcing Rust 1.77.2

Security advisory for the standard library (CVE-2024-24576)

Changes to Rust's WASI targets

Rust Nation UK

Hannah Aubrey - A Web of Rust: The Future of the Internet Depends on Trust

JD Nose - Rust Infrastructure: What it takes to keep Rust running

Amanieu D'Antras - The path to a stable ABI for Rust

Luca Palmieri - Pavex: re-imagining API development in Rust

Lachezar Lechev - Typed for Safety

Marco Concetto Rudilosso - Building a profiler for web assembly

Jon Gjengset - Towards Impeccable Rust

Nicholas Yang - Porting Turborepo From Go To Rust

David Haig - What’s that behind your ear? An open source hearing aid in Rust.

Frédéric Ameye - Renault want to sell cars with rust!

Nikita Lapkov - Type-safe and fault-tolerant mesh services with Rust

Andre Bogus - Easy Mode Rust

Lars Bergstrom - Beyond Safety and Speed: How Rust Fuels Team Productivity

Tim McNamara - Unwrapping unsafe

Nicholas Matsakis - Rust 2024 and beyond

Project/Tooling Updates

Shipping Jco 1.0, WASI 0.2

This month in Pavex, #10

"Containerize" individual functions in Rust with extrasafe

rust-analyzer changelog #228

Rerun 0.15.0 - Blueprints from Python · rerun-io/rerun

Bevy 0.13.2, Curves, Gizmos, and Games

What's new in SeaORM 1.0-rc.x

Observations/Thoughts

Improve performance of your Rust functions by const currying

Ownership in Rust

Thoughts on the xz backdoor: an lzma-rs perspective

hyper HTTP/2 Continuation Flood

Leaky Abstractions and a Rusty Pin

[audio] Launching RustRover: JetBrains' Investment in Rust

[audio] Pavex with Luca Palmieri

[video] Decrusting the tokio crate

[video] Rust 1.77.0: 70 highlights in 30 minutes

[video] Simulate the three body problem in #rustlang

[video] Exploring Fiberplane's 3-Year Rust Journey - with Benno van den Berg

Rust Walkthroughs

Working with OpenAPI using Rust

Zed Decoded: Async Rust

Writing a Unix-like OS in Rust

Fivefold Slower Compared to Go? Optimizing Rust's Protobuf Decoding Performance

Write Cleaner, More Maintainable Rust Code with PhantomData

[video] Extreme Clippy for an existing Rust Crate

[video] developerlife.com - Build a color gradient animation for a spinner component, for CLI, in Rust

[video] developerlife.com - Build a spinner component, for CLI, in Rust

[video] developerlife.com - Build an async readline, and spinner in Rust, for interactive CLI

Research

"Against the Void": An Interview and Survey Study on How Rust Developers Use Unsafe Code

Sound Borrow-Checking for Rust via Symbolic Semantics

Miscellaneous

Rust indexed - Rust mdbooks search

March 2024 Rust Jobs Report

Rust Meetup and user groups (updated)

Embedding the Servo Web Engine in Qt

A memory model for Rust code in the kernel

Building Stock Market Engine from scratch in Rust (II)

Ratatui Received Funding: What's Next?

Crate of the Week

This week's crate is archspec-rs, a library to track system architecture aspects.

Thanks to Orhun Parmaksız for the suggestion!

Please submit your suggestions and votes for next week!

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

No calls for testing were issued this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

CFP - Speakers

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

If you are an event organizer hoping to expand the reach of your event, please submit a link to the submission website through a PR to TWiR.

Updates from the Rust Project

431 pull requests were merged in the last week

CFI: change type transformation to use TypeFolder

CFI: fix ICE in KCFI non-associated function pointers

CFI: restore typeid_for_instance default behavior

CFI: support function pointers for trait methods

CFI: support non-general coroutines

MSVC targets should use COFF as their archive format

actually use the inferred ClosureKind from signature inference in coroutine-closures

add Ord::cmp for primitives as a BinOp in MIR

add a debug asserts call to match_projection_projections to ensure invariant

add aarch64-apple-visionos and aarch64-apple-visionos-sim tier 3 targets

add consistency with phrases "meantime" and "mean time"

assert FnDef kind

assert that args are actually compatible with their generics, rather than just their count

avoid ICEing without the pattern_types feature gate

avoid expanding to unstable internal method

avoid panicking unnecessarily on startup

better reporting on generic argument mismatchs

cleanup: rename HAS_PROJECTIONS to HAS_ALIASES etc

do not ICE in fn forced_ambiguity if we get an error

do not ICE on field access check on expr with ty::Error

do not ICE when calling incorrectly defined transmute intrinsic

fix ByMove coroutine-closure shim (for 2021 precise closure capturing behavior)

fix capture analysis for by-move closure bodies

fix diagnostic for qualifier in extern block

hir: use ItemLocalId::ZERO in a couple more places

impl get_mut_or_init and get_mut_or_try_init for OnceCell and OnceLock

implement T-types suggested logic for perfect non-local impl detection

implement minimal, internal-only pattern types in the type system

instantiate higher ranked goals outside of candidate selection

link against libc++abi and libunwind as well when building LLVM wrappers on AIX

make inductive cycles always ambiguous

make sure to insert Sized bound first into clauses list

match ergonomics: implement "&pat everywhere"

match lowering: make false edges more precise

more postfix match fixes

move check for error in impl header outside of reporting

only allow compiler_builtins to call LLVM intrinsics, not any link_name function

only inspect user-written predicates for privacy concerns

pass list of defineable opaque types into canonical queries

pattern analysis: fix union handling

postfix match fixes

privacy: stabilize lint unnameable_types

put checks that detect UB under their own flag below debug_assertions

revert removing miri jobserver workaround

safe Transmute: Compute transmutability from rustc_target::abi::Layout

sanitizers: create the rustc_sanitizers crate

split hir ty lowerer's error reporting code in check functions to mod errors

teach MIR inliner query cycle avoidance about const_eval_select

transforms match into an assignment statement

use the more informative generic type inference failure error on method calls on raw pointers

add missing ?Sized bounds for HasInterner impls

introduce Lifetime::Error

perf: cache type info for ParamEnv

encode dep graph edges directly from the previous graph when promoting

remove debuginfo from rustc-demangle too

stabilize const_caller_location and const_location_fields

stabilize proc_macro_byte_character and proc_macro_c_str_literals

stabilize const Atomic*::into_inner

de-LLVM the unchecked shifts

rename expose_addr to expose_provenance

rename ptr::from_exposed_addr → ptr::with_exposed_provenance

remove rt::init allocation for thread name

use unchecked_sub in str indexing

don't emit divide-by-zero panic paths in StepBy::len

add fn const BuildHasherDefault::new

add invariant to VecDeque::pop_* that len < cap if pop successful

add Context::ext

provide cabi_realloc on wasm32-wasip2 by default

vendor rustc_codegen_gcc

cargo: Build script not rerun when target rustflags change

cargo add: Stabilize MSRV-aware version req selection

cargo toml: Decouple target discovery from Target creation

cargo toml: Split out an explicit step to resolve Cargo.toml

cargo metadata: Show behavior with TOML-specific types

cargo: don't depend on ? affecting type inference in weird ways

cargo: fix github fast path redirect

cargo: maintain sorting of dependency features

cargo: switch to using gitoxide by default for listing files

rustdoc-search: shard the search result descriptions

rustdoc: default to light theme if JS is enabled but not working

rustdoc: heavily simplify the synthesis of auto trait impls

rustdoc: synthetic auto trait impls: accept unresolved region vars for now

clippy: manual_swap auto fix

clippy: manual_unwrap_or_default: check for Default trait implementation in initial condition when linting and use IfLetOrMatch

clippy: allow cast lints in macros

clippy: avoid an ICE in ptr_as_ptr when getting the def_id of a local

clippy: correct parentheses for needless_borrow suggestion

clippy: do not suggest assigning_clones in Clone impl

clippy: fix ice reporting in lintcheck

clippy: fix incorrect suggestion for !(a as type >= b)

clippy: reword arc_with_non_send_sync note and help messages

clippy: type certainty: clear DefId when an expression's type changes to non-adt

rust-analyzer: apply cargo flags in test explorer

rust-analyzer: fix off-by-one error converting to LSP UTF8 offsets with multi-byte char

rust-analyzer: consider exported_name="main" functions in test modules as tests

rust-analyzer: fix patch_cfg_if not applying with stitched sysroot

rust-analyzer: set the right postfix snippets competion source range

Rust Compiler Performance Triage

A quiet week; all the outright regressions were already triaged (the one biggish one was #122077, which is justified as an important bug fix). There was a very nice set of improvements from PR #122070, which cleverly avoids a lot of unnecessary allocator calls when building an incremental dep graph by reusing the old edges from the previous graph.

Triage done by @pnkfelix. Revision range: 3d5528c2..86b603cd

3 Regressions, 3 Improvements, 7 Mixed; 1 of them in rollups

78 artifact comparisons made in total

See full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Merge RFC 3513: Add gen blocks

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

[disposition: merge] RFC: Drop temporaries in tail expressions before local variables

[disposition: merge] RFC: Reserve unprefixed guarded string literals in Edition 2024

Tracking Issues & PRs

Rust

[disposition: merge] Always display stability version even if it's the same as the containing item

[disposition: merge] Tracking Issue for cstr_count_bytes

[disposition: merge] rustdoc-search: single result for items with multiple paths

[disposition: merge] Tracking Issue for #![feature(const_io_structs)]

[disposition: merge] Tracking Issue for alloc::collections::BinaryHeap::as_slice

[disposition: merge] Tracking Issue for fs_try_exists

[disposition: merge] stabilize -Znext-solver=coherence

[disposition: merge] Document overrides of clone_from() in core/std

[disposition: merge] Stabilise inline_const

[disposition: merge] Tracking Issue for RFC 3013: Checking conditional compilation at compile time

[disposition: merge] sess: stabilize -Zrelro-level as -Crelro-level

[disposition: merge] Implement FromIterator for (impl Default + Extend, impl Default + Extend)

[disposition: close] Return the delimiter from slice::split_once

[disposition: merge] Support type '/' to search

[disposition: merge] Tracking Issue for Seek::seek_relative

[disposition: merge] Tracking Issue for generic NonZero

New and Updated RFCs

[new] Add an expression for direct access to an enum's discriminant

[new] RFC: Drop temporaries in tail expressions before local variables

Upcoming Events

Rusty Events between 2024-04-10 - 2024-05-08 🦀

Virtual

2024-04-11 | Virtual + In Person (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-04-11 | Virtual (Nürnberg, DE) | Rust Nüremberg

Rust Nürnberg online

2024-04-11 | Virtual (San Diego, CA, US) | San Diego Rust

San Diego Rust April 2024 Tele-Meetup

2024-04-15 & 2024-04-16 | Virtual | Mainmatter

Remote Workshop: Testing for Rust projects – going beyond the basics

2024-04-16 | Virtual (Dublin, IE) | Rust Dublin

A reverse proxy with Tower and Hyperv1

2024-04-16 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful—forensic parsing via Artemis

2024-04-17 | Virtual | Rust for Lunch

April 2024 Rust for Lunch

2024-04-17 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

Reflections on RustNation UK 2024

2024-04-17 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2024-04-18 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-04-21 | Virtual (Israel) | Rust in Israel

Using AstroNvim for Rust development (in Hebrew)

2024-04-25 | Virtual + In Person (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-04-30 | Virtual (Dallas, TX, US) | Dallas Rust

Last Tuesday

2024-05-01 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

Rust for Rustaceans Book Club: Chapter 5 - Project Structure

2024-05-01 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2024-05-02 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-05-07 | Virtual (Buffalo, NY) | Buffalo Rust Meetup

Buffalo Rust User Group

Africa

2024-05-04 | Kampala, UG | Rust Circle Kampala

Rust Circle Meetup

Asia

2024-04-16 | Tokyo, JP | Tokyo Rust Meetup

The Good, the Bad, and the Async (RSVP by 15 Apr)

Europe

2024-04-10 | Cambridge, UK | Cambridge Rust Meetup

Rust Meetup Reboot 3

2024-04-10 | Cologne/Köln, DE | Rust Cologne

This Month in Rust, April

2024-04-10 | Manchester, UK | Rust Manchester

Rust Manchester April 2024

2024-04-10 | Oslo, NO | Rust Oslo

Rust Hack'n'Learn at Kampen Bistro

2024-04-11 | Bordeaux, FR | Rust Bordeaux

Rust Bordeaux #2 : Présentations

2024-04-11 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup at Browns

2024-04-15 | Zagreb, HR | impl Zagreb for Rust

Rust Meetup 2024/04: Building cargo projects with NIX

2024-04-16 | Bratislava, SK | Bratislava Rust Meetup Group

Rust Meetup by Sonalake #5

2024-04-16 | Leipzig, DE | Rust - Modern Systems Programming in Leipzig

winnow/nom

2024-04-16 | Munich, DE + Virtual | Rust Munich

Rust Munich 2024 / 1 - hybrid

2024-04-17 | Bergen, NO | Hubbel kodeklubb

Lær Rust med Conways Game of Life

2024-04-17 | Ostrava, CZ | TechMeetup Ostrava

TechMeetup: RUST

2024-04-20 | Augsburg, DE | Augsburger Linux-Infotag 2024

Augsburger Linux-Infotag 2024: Workshop Einstieg in Embedded Rust mit dem Raspberry Pico WH

2024-04-23 | Berlin, DE | Rust Berlin

Rust'n'Tell - Rust for the Web

2024-04-23 | Paris, FR | Rust Paris

Paris Rust Meetup #67

2024-04-25 | Aarhus, DK | Rust Aarhus

Talk Night at MFT Energy

2024-04-25 | Berlin, DE | Rust Berlin

Rust and Tell - TBD

2024-04-27 | Basel, CH | Rust Basel

Fullstack Rust - Workshop #2 (Register by 23 April)

2024-04-30 | Budapest, HU | Budapest Rust Meetup Group

Rust Meetup Budapest 2

2024-04-30 | Salzburg, AT | Rust Salzburg

[Rust Salzburg meetup]: 6:30pm - CCC Salzburg, 1. OG, ArgeKultur, Ulrike-Gschwandtner-Straße 5, 5020 Salzburg

2024-05-01 | Utrecht, NL | NL-RSE Community

NL-RSE RUST meetup

2024-05-06 | Delft, NL | GOSIM

GOSIM Europe 2024

2024-05-07 & 2024-05-08 | Delft, NL | RustNL

RustNL 2024

North America

2024-04-10 | Boulder, CO, US | Boulder Rust Meetup

Rust Meetup: Better Builds w/ Flox + Hangs

2024-04-11 | Lehi, UT, US | Utah Rust

Interactive Storytelling using Yarn Spinner with Rex Magana

2024-04-11 | Seattle, WA, US | Seattle Rust User Group

Seattle Rust User Group Meetup

2024-04-11 | Spokane, WA, US | Spokane Rust

Monthly Meetup: The Rust Full-Stack Experience

2024-04-15 | Minneapolis, MN, US | Minneapolis Rust Meetup

Minneapolis Rust: Getting started with Rust! #2

2024-04-15 | Somerville, MA, US | Boston Rust Meetup

Davis Square Rust Lunch, Apr 15

2024-04-16 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2024-04-16 | Seattle, WA, US | Seattle Rust User Group

Seattle Rust User Group: Meet Servo and Robius Open Source Projects

2024-04-18 | Chicago, IL, US | Deep Dish Rust

Rust Talk: What Are Panics?

2024-04-18 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2024-04-24 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2024-04-25 | Nashville, TN, US | Music City Rust Developers

Music City Rust Developers - Async Rust on Embedded

2024-04-26 | Boston, MA, US | Boston Rust Meetup

North End Rust Lunch, Apr 26

Oceania

2024-04-15 | Melbourne, VIC, AU | Rust Melbourne

April 2024 Rust Melbourne Meetup

2024-04-17 | Sydney, NSW, AU | Rust Sydney

WMaTIR 2024 Gala & Talks

2024-04-30 | Auckland, NZ | Rust AKL

Rust AKL: Why Rust? Convince Me!

2024-04-30 | Canberra, ACT, AU | Canberra Rust User Group

CRUG April Meetup: Generics and Traits

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

As a former JavaScript plebeian who has only been semi-recently illuminated by the suspiciously pastel pink, white and blue radiance of Rust developers, NOT having to sit in my web console debugger for hours pushing some lovingly crafted [object Object] or undefined is a blessing.

– Julien Robert rage-blogging against bevy

Thanks to scottmcm for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust

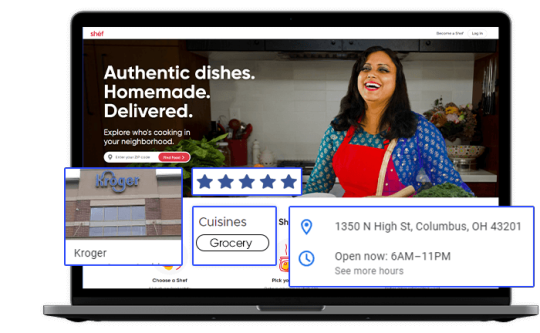

Tapping into Fresh Insights: Kroger Grocery Data Scraping

In today's data-driven world, the retail grocery industry is no exception when it comes to leveraging data for strategic decision-making. Kroger, one of the largest supermarket chains in the United States, offers a wealth of valuable data related to grocery products, pricing, customer preferences, and more. Extracting and harnessing this data through Kroger grocery data scraping can provide businesses and individuals with a competitive edge and valuable insights. This article explores the significance of grocery data extraction from Kroger, its benefits, and the methodologies involved.

The Power of Kroger Grocery Data

Kroger's extensive presence in the grocery market, both online and in physical stores, positions it as a significant source of data in the industry. This data is invaluable for a variety of stakeholders:

Kroger: The company can gain insights into customer buying patterns, product popularity, inventory management, and pricing strategies. This information empowers Kroger to optimize its product offerings and enhance the shopping experience.

Grocery Brands: Food manufacturers and brands can use Kroger's data to track product performance, assess market trends, and make informed decisions about product development and marketing strategies.

Consumers: Shoppers can benefit from Kroger's data by accessing information on product availability, pricing, and customer reviews, aiding in making informed purchasing decisions.

Benefits of Grocery Data Extraction from Kroger

Market Understanding: Extracted grocery data provides a deep understanding of the grocery retail market. Businesses can identify trends, competition, and areas for growth or diversification.

Product Optimization: Kroger and other retailers can optimize their product offerings by analyzing customer preferences, demand patterns, and pricing strategies. This data helps enhance inventory management and product selection.

Pricing Strategies: Monitoring pricing data from Kroger allows businesses to adjust their pricing strategies in response to market dynamics and competitor moves.

Inventory Management: Kroger grocery data extraction aids in managing inventory effectively, reducing waste, and improving supply chain operations.

Methodologies for Grocery Data Extraction from Kroger

To extract grocery data from Kroger, individuals and businesses can follow these methodologies:

Authorization: Ensure compliance with Kroger's terms of service and legal regulations. Authorization may be required for data extraction activities, and respecting privacy and copyright laws is essential.

Data Sources: Identify the specific data sources you wish to extract. Kroger's data encompasses product listings, pricing, customer reviews, and more.

Web Scraping Tools: Utilize web scraping tools, libraries, or custom scripts to extract data from Kroger's website. Common tools include Python libraries like BeautifulSoup and Scrapy.

Data Cleansing: Cleanse and structure the scraped data to make it usable for analysis. This may involve removing HTML tags, formatting data, and handling missing or inconsistent information.

Data Storage: Determine where and how to store the scraped data. Options include databases, spreadsheets, or cloud-based storage.

Data Analysis: Leverage data analysis tools and techniques to derive actionable insights from the scraped data. Visualization tools can help present findings effectively.

Ethical and Legal Compliance: Scrutinize ethical and legal considerations, including data privacy and copyright. Engage in responsible data extraction that aligns with ethical standards and regulations.

Scraping Frequency: Exercise caution regarding the frequency of scraping activities to prevent overloading Kroger's servers or causing disruptions.
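
The extraction, cleansing, and storage steps above can be sketched with Python's standard library alone. This is an illustration under stated assumptions: the HTML snippet, its class names, and the table layout are hypothetical and do not reflect Kroger's actual pages, and `html.parser` stands in here for BeautifulSoup or Scrapy to keep the example dependency-free.

```python
import sqlite3
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical markup; real product pages use their own structure.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name"> Whole Milk 1 gal </span><span class="price">$3.49</span></li>
  <li class="product"><span class="name">Brown Eggs, dozen</span><span class="price"> $4.99 </span></li>
  <li class="product"><span class="name">Sourdough Loaf</span><span class="price">N/A</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect the text of 'name' and 'price' spans, one dict per <li>."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None
        self._current = {}

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = self._current.get(self._field, "") + data

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.rows.append(self._current)
            self._current = {}


def clean_price(raw):
    """Convert '$3.49' to 3.49; return None for missing or malformed prices."""
    try:
        return float(raw.strip().lstrip("$"))
    except ValueError:
        return None


def parse_products(html):
    """Extract and cleanse (name, price) pairs from an HTML string."""
    parser = ProductParser()
    parser.feed(html)
    return [(r.get("name", "").strip(), clean_price(r.get("price", "")))
            for r in parser.rows]


def store(rows, conn):
    """Persist cleansed rows in a SQLite table."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", rows)
    conn.commit()


def allowed(robots_txt, user_agent, url):
    """Check a URL against the text of a robots.txt file before fetching it."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules.can_fetch(user_agent, url)
```

A real scraper would call `allowed` before each fetch and pause between requests (for example with `time.sleep`) so that scraping frequency stays modest. Malformed prices are stored as NULL rather than dropped, so gaps remain visible during analysis.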

Conclusion

Kroger grocery data scraping opens the door to fresh insights for businesses, brands, and consumers in the grocery retail industry. By harnessing Kroger's data, retailers can optimize their product offerings and pricing strategies, while consumers can make more informed shopping decisions. However, it is crucial to prioritize ethical and legal considerations, including compliance with Kroger's terms of service and data privacy regulations. In the dynamic landscape of grocery retail, data is the key to unlocking opportunities and staying competitive. Grocery data extraction from Kroger promises to deliver fresh perspectives and strategic advantages in this ever-evolving industry.

#grocerydatascraping#restaurant data scraping#food data scraping services#food data scraping#fooddatascrapingservices#zomato api#web scraping services#grocerydatascrapingapi#restaurantdataextraction

Django-Based Laundry Management System for Online Booking & Delivery | Pythoncodeverse

In today's fast-paced world, convenience and time-saving services have become a necessity. Laundry, being a daily chore, often becomes a time-consuming task for working professionals, students, and families. To address this problem, the idea of a Laundry Management System using Django comes into play. This system allows users to schedule laundry pick-ups, track their orders, and make payments online, all from the comfort of their home. Using Python Django, a powerful web framework, we can build a robust, scalable, and secure portal for managing laundry services efficiently.

Why Choose Django?

Django is a high-level Python web framework that promotes rapid development and clean, pragmatic design. It is known for its:

Built-in admin panel

ORM (Object-Relational Mapping)

Security features

Scalability

Well-documented structure

These features make Django an ideal choice for developing an Online Laundry Portal System.

Key Features of the System

User Registration and Login:

Customers can sign up and log in securely using email and password.

Django's authentication system ensures password hashing and session security.

Service Selection:

Users can choose different laundry services like washing, dry cleaning, ironing, etc.

Options for selecting clothes quantity, fabric type, and special instructions.

Order Scheduling:

Customers can schedule pick-up and delivery times as per their convenience.

Delivery staff can view their assigned pick-ups and deliveries.

Real-time Order Tracking:

Users can track their laundry status (e.g., Picked Up, In Process, Ready, Delivered).

Payment Integration:

Online payment through Razorpay, Stripe, or Cash on Delivery options.

Secure checkout process integrated using Django views and APIs.

Admin Dashboard:

Admins can view, update, and manage orders, users, and staff details.

Analytical insights like revenue, most used services, and customer feedback.

Email/SMS Notifications:

Automatic email or SMS updates about order status, delays, or offers.
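
To illustrate what password hashing in the registration and login feature involves, here is a small standard-library sketch of salted PBKDF2-SHA256 hashing, the algorithm family Django's default hasher uses. It is not Django's own code: the function names and the iteration count are assumptions for illustration, and a Django project would normally rely on `make_password` and `check_password` from `django.contrib.auth.hashers` instead.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # illustrative work factor, not Django's configured value


def hash_password(password, salt=None):
    """Return (salt, digest) for a password using PBKDF2-HMAC-SHA256."""
    if salt is None:
        salt = os.urandom(16)  # a fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Recompute the digest and compare it in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)
```

Only the salt and digest are stored, never the password itself, so a leaked database does not directly reveal user credentials.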

Technical Architecture

Frontend:

HTML, CSS, Bootstrap, and JavaScript for designing responsive UI.

Django Templates used to dynamically display data from the backend.

Backend:

Python with Django framework.

Models for Users, Orders, Services, Payments, and Feedback.

Django’s in-built ORM to interact with the database.

Database:

SQLite (development) or PostgreSQL/MySQL (production).

Secure and efficient data storage for user details, orders, and transactions.

Security:

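Django provides CSRF protection, guards against SQL injection through parameterized ORM queries, and mitigates XSS by auto-escaping template output.

Built-in password validators enforce password strength; login throttling is not part of Django itself and is typically added with a third-party package or custom code.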
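
As a rough picture of the backend entities listed above, the following framework-agnostic sketch uses plain dataclasses in place of Django models. The class and field names are hypothetical; in an actual Django project each class would be a `models.Model` subclass with the relationships expressed as foreign keys.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass(frozen=True)
class Service:
    """A laundry service such as washing or ironing (hypothetical fields)."""
    name: str
    price_per_item: Decimal


@dataclass
class OrderLine:
    """One service and the number of items it applies to."""
    service: Service
    quantity: int

    def subtotal(self) -> Decimal:
        return self.service.price_per_item * self.quantity


@dataclass
class Order:
    """A customer's order made up of one or more lines."""
    customer_email: str
    lines: list = field(default_factory=list)
    paid: bool = False

    def total(self) -> Decimal:
        # Start from Decimal zero so an empty order totals 0, not int 0.
        return sum((line.subtotal() for line in self.lines), Decimal("0"))
```

Using `Decimal` rather than `float` for money avoids rounding surprises when totals feed into payments and revenue reports.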

Workflow of the System

A new user signs up and logs into the portal.

The user selects the laundry service and schedules a pickup.

The order is assigned to a delivery agent by the admin.

Pickup is completed and updated in the system.

Clothes are processed and status is updated in real-time.

After completion, the delivery is scheduled and completed.

The user pays online and can rate the service.
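
The workflow above is essentially a linear sequence of order statuses, which could be modeled as a small state machine. The sketch below is one possible shape: the status labels follow the tracking stages named earlier, while the initial `SCHEDULED` status and the `advance` helper are assumptions added for illustration.

```python
from enum import Enum


class Status(Enum):
    SCHEDULED = "Scheduled"    # assumed initial state, before pickup
    PICKED_UP = "Picked Up"
    IN_PROCESS = "In Process"
    READY = "Ready"
    DELIVERED = "Delivered"


# Each status may only move forward to the next step of the workflow.
NEXT_STATUS = {
    Status.SCHEDULED: Status.PICKED_UP,
    Status.PICKED_UP: Status.IN_PROCESS,
    Status.IN_PROCESS: Status.READY,
    Status.READY: Status.DELIVERED,
}


def advance(current):
    """Return the status that follows current, or raise if the order is done."""
    if current not in NEXT_STATUS:
        raise ValueError(f"'{current.value}' is a final status")
    return NEXT_STATUS[current]
```

Restricting transitions to a lookup table keeps invalid jumps, such as marking an order delivered before it was picked up, out of the system.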

Benefits for Users and Businesses

Users: Time-saving, convenience, contactless service, real-time updates.

Laundry Owners: Better order management, customer retention, and revenue growth.

Staff: Clear delivery schedules, route planning, and performance tracking.

Future Enhancements

Mobile app integration (using an API built with Django REST framework).

Machine Learning for demand prediction and dynamic pricing.

Loyalty programs and coupon systems.

GPS tracking for real-time delivery agent location.

Multi-vendor platform for supporting various local laundries.

Conclusion

The Online Laundry Portal System using Python Django is a modern solution to an age-old problem. It brings digital transformation to the traditional laundry business, enhancing customer experience and operational efficiency. With its scalable and modular architecture, Django makes it easy to add features and handle high traffic. Whether you’re a developer building a portfolio project or a startup founder looking for a product idea, this system offers an excellent opportunity to make a real-world impact.

Contact us now or visit our website to get a quote!

Website: https://pythoncodeverse.com

#Online Laundry Portal System#Laundry Management System using Django#Laundry Service Management System#Online Laundry Service Platform in Python#Python Django Laundry Project#Laundry System Python Project#Online Laundry System using Django

How Students Benefit from Computer Assignment Help UK for Java and C++

Java and C++ are standard programming languages widely used in software development, game programming, and system applications. However, students often find assignments in these languages challenging because of complex syntax, difficult debugging, and short deadlines. This is where computer assignment help in the UK becomes necessary. At Locus Assignment, we provide professional services that help students complete their assignments correctly and on time. Whether you need help with a Java assignment in the UK or C++ coding assistance, our professional services make learning simpler and more efficient.

Why C++ and Java Are Necessary for Students

Java and C++ are vital to building a strong computer science foundation for the following reasons:

Java for Enterprise Applications: Businesses rely on Java for enterprise software, web application development, and mobile apps. Knowledge of Java improves job prospects at leading technology companies.

C++ for High-Performance Computing: C++ is used extensively in system programming, game programming, and embedded systems. Learning C++ enables students to create efficient and scalable software.

Object-Oriented Programming Concepts: Both languages implement OOP concepts, which strengthen problem-solving abilities and transfer to languages such as C# and Python.

Career Opportunities: Top organisations look for Java and C++ professionals, which leads to better job opportunities and professional growth in the IT field.

Common Issues Faced by Students in C++ and Java Projects

Students typically find C++ and Java assignments challenging because of:

Advanced Syntax and Concepts: Data structures, object-oriented programming, and memory management are hard concepts to learn.

Debugging: Writing code is one thing, but debugging requires patience and technical skill.

Algorithm Implementation: Most assignments involve complicated algorithm designs that call for professional guidance.

Tight Deadlines: Juggling multiple assignments alongside other academic work can be stressful.

Lack of Practical Experience: Most students struggle to apply theoretical knowledge to real-life situations.

How Locus Assignment Delivers the Best Computer Assignment Help in UK

We at Locus Assignment ensure academic success with our tailor-made services:

Professional Mentoring: Our professional coders provide one-on-one mentorship, explaining concepts in simple terms.

Tailored Solutions: We prepare assignments that fit university requirements and specific learning needs.

Original Work: All assignments are original and thoroughly checked for plagiarism.

On-Time Delivery: We prioritise deadlines to help students submit their work on time.

Reasonable Prices: We deliver quality work at affordable rates.

Our C++ and Java Homework Help UK includes:

We provide the top programming assignment help UK with focused services on C++ and Java:

Java Project Assistance: Covers object-oriented programming, GUI programs, multithreading, and database connectivity.

C++ Support: Assistance with the STL, file operations, memory management, and complex algorithms.

Live Coding Sessions: Real-time learning opportunities where students work with our experts.

Code Optimisation and Debugging: We help students optimise their code for better efficiency and performance.

Code Documentation and Explanation: Well-documented assignments for easier understanding and future modifications.

Conclusion

Java and C++ assignments are challenging, but with the right guidance, students can excel in these programming languages. Locus Assignment delivers professional computer assignment help in the UK, ensuring that students submit high-quality, well-structured assignments on time. Whether you need help with a Java assignment, C++ project work, or other coding assignments, we are ready to guide you towards academic success. Get the best programming assignment help in the UK today and enhance your programming skills with professional guidance!

How to Select the Right Web Development Company in Ireland for Your Business

In today’s digital-first world, a professional, functional, and scalable website is not a luxury; it’s a necessity. Whether you’re starting a new business or overhauling an aging website, choosing the right web development partner can be the difference between online success and failure. But with hundreds of agencies offering similar services, how do you find the right fit?

Why Choosing the Right Web Development Partner Matters

Your site is the online presence of your brand. It’s usually the first point of contact with your future customers. If your site is poorly designed or slow, you can lose leads, conversions, and credibility. A professionally built site, however, can:

Enhance user experience

Improve search engine visibility

Increase brand credibility

Facilitate long-term growth through scalable solutions

That’s why selecting the appropriate development partner is more than a business deal; it’s an investment.

Step 1: Determine Your Goals and Needs

Take the time to establish clearly defined goals for your project prior to contacting an Irish web development company. Consider the following questions:

What is your website’s main purpose? (e.g., e-commerce, lead generation, portfolio)

What functionality do you require? (e.g., content management system, booking forms, SEO tools)

Who is your target market?

What is your budget, and what is your timeline for completing the project?

Having a distinct idea of what you require will enable you to effectively communicate with likely development partners.

Step 2: Opt for Local Expertise. Why Ireland?

While there is no shortage of web developers globally, doing business with a web development firm in Ireland has various benefits:

Local Market Insight: Irish agencies have local market awareness and consumer behavior insights.

Time Zone Compatibility: Sharing the same time zone allows for better communication and quicker response times.

On-Site Collaboration: Should it be necessary, face-to-face meetings with a web development firm in Dublin or elsewhere can facilitate the coordination of projects.

When your customer base is regional or local, an Irish-based team can provide an understanding that international companies just can’t replicate.

Step 3: Review Their Portfolio

The best measure of a web development business in Dublin or any other part of Ireland is its track record. A good portfolio should include:

Various sectors

Various types of websites (e-commerce, corporate, portfolio, etc.)

Mobile-responsive design

Performance optimization

Designing with UX/UI in mind

Be sure to also look closely at sites that are specific to your sector or mission.

Request live examples and test the websites for load time, usability, and user experience.

Step 4: Verify Client Testimonials and Reviews

Honesty and integrity are essential in contracting a web development partner. Verify:

Client testimonials on the website of the company

Google reviews and ratings

Clutch or Trustpilot profiles

Case studies

You want to hire a web development firm in Ireland with a history of producing high-quality output on schedule and within the budget.

Step 5: Assess Technical Competence

Web development is a broad term. It’s not design alone; it also includes backend infrastructure, security, performance, and scalability.

Your partner of choice should provide:

Front-end development: HTML, CSS, JavaScript frameworks (React, Vue, etc.)

Back-end development: PHP, Python, Node.js, or other modern technologies

CMS expertise: WordPress, Joomla, Magento, or custom CMS solutions

E-commerce development: WooCommerce, Shopify, or custom platforms

Mobile responsiveness and optimization

SEO basics built into development

When you engage a professional web development firm in Dublin, you’re not simply getting a designer; you’re getting a full-stack digital partner.

Step 6: Inquire About Their Development Process

A quality web development partner should have a defined, clear process that keeps you aware at each stage. Ask questions such as:

What’s your average project duration?

Do you provide wireframes or a prototype?

How do you integrate feedback and revisions?

Will I have a specific project manager assigned to me?

Do you adopt agile development methods?

Effective communication, frequent updates, and teamwork enable the project to be on track and meet your expectations.

Step 7: Post-Launch Support

Your collaboration with an Irish web development company shouldn’t end once the website is launched. Post-launch support is just as vital as development.

Seek out companies providing:

Regular maintenance and updates

Security monitoring and backups

Optimization of performance

Technical support and troubleshooting

SEO improvements and content updates

Several of the best web development firms in Dublin provide website care packages to keep your site secure, fast, and current.

Step 8: Discuss Budget and Pricing Transparency

While cost shouldn’t be a sole determining factor in your choice, it is essential to make sure there are no surprises or unclear terms. A good Irish web development firm will give you:

Clear estimates with itemized breakdowns

Clear timelines and deliverables

Flexible pricing models (fixed price, hourly, milestone-based)

Avoid companies that are evasive about pricing or offer “too-good-to-be-true” rates; good web development comes at a fair price.

Step 9: Assess Cultural Fit and Communication Style

Beyond skills and experience, you need a partner who understands your brand vision and communicates effectively. When choosing a web development company in Dublin, ask:

Do they listen to your ideas and provide feedback?

Are they proactive when proposing solutions?

Is their staff responsive and available?