#but like… we need cloud computing and cloud storage now


Text

just like with machine learning, we can and should demystify “the cloud” without demonizing it in the process. cloud computing and cloud storage architecture are extremely useful tools; it’s how they get deployed by big tech companies that can be a problem.

#ray.txt#i’m making this one unrebloggable because i do not have the bandwidth (lol) for managing this conversation with strangers#tl;dr i’m not a programmer and i’m not in enterprise architecture anymore#but like… we need cloud computing and cloud storage now#we would have to re-engineer and re-design a lot of our infrastructure to achieve that#idk i am just leery of seeing cloud storage and cloud computing getting lumped in with generative AI in the cultural discourse#okay i’m going back to bed now

30 notes


Text

I would like to address something that has come up several times since I relaunched my computer recommendation blog two weeks ago. Part of the reason that I started @okay-computer and that I continue to host my computer-buying-guide is that it is part of my job to buy computers every day.

I am extremely conversant with pricing trends and specification norms for computers, because literally I quoted seven different laptops with different specs at different price-points *today* and I will do more of the same on Monday.

Now, I am holding your face in my hands. I am breathing in sync with you. We are communicating. We are on the same page. Listen.

Computer manufacturers don't expect users to store things locally so it is no longer standard to get a terabyte of storage in a regular desktop or laptop. You're lucky if you can find one with a 512gb ssd that doesn't have an obnoxious markup because of it.

If you think that the norm is for computers to come with 1tb of storage as a matter of course, you are seeing things from a narrow perspective that is out of step with most of the hardware out there.

I went from a standard expectation of a 1tb hdd five years ago, to expecting to get a computer with a 1tb hdd that we would pull and replace with a 1tb ssd, to expecting to get a computer that came with a 256gb ssd that we would pull and replace with a 1tb ssd, to just having the 256gb ssd come standard and only seeking out more storage if the customer specifically requested it, because otherwise they don't want to pay for more storage.

Computer manufacturers consider any storage above 256gb to be a premium feature these days.

Look, here's a search for Lenovo Laptops with 16GB RAM (what I would consider the minimum in today's market) and a Win11 home license (not because I prefer that, but to exclude chromebooks and business machines). Here are the storage options that come up for those specs:

You will see that the majority of the options come with less than a terabyte of storage. You CAN get plenty of options with 1tb, but the point of Okay-Computer is to get computers with reasonable specs in an affordable price range. These days, that mostly means half a terabyte of storage (because I can't bring myself to *recommend* less than that, but since most people carry stuff in their personal cloud these days, it's overkill for a lot of people).

All things being equal, 500gb more increases the price of this laptop by $150:

It brings this one up by $130:

This one costs $80 more to go from 256 to 512 and there isn't an option for 1TB.

For the last three decades storage has been getting cheaper and cheaper and cheaper, to the point that storage was basically a negligible cost when HDDs were still the standard. With the change to SSDs that cost increased significantly and, while it has come down, we have not reached the cheap, large storage as-a-standard on laptops stage; this is partially because storage is now SO cheap that people want to entice you into paying a few dollars a month to use huge amounts of THEIR storage instead of carrying everything you own in your laptop.

You will note that a 1tb ssd on its own costs a lot less than the markup manufacturers charge to give you a 1tb ssd instead of a 500gb ssd.

In fact it can be LESS EXPENSIVE to get a 1tb ssd than a 500gb ssd.

This is because computer manufacturers are, generally speaking, kind of shitty and do not care about you.

I stridently recommend getting as much storage as you can on your computer. If you can't get the storage you want up front, I recommend upgrading your storage.
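To put those markups on a per-gigabyte basis, here's a small Python sketch. The three dollar figures are the ones quoted above; the $130 one is assumed to also be a +500GB upgrade, and the retail SSD price in the example is a hypothetical placeholder, not a quote, so plug in whatever a bare drive actually costs when you shop.

```python
# Factory storage-upgrade markups quoted above: (extra cost in USD, extra capacity in GB).
FACTORY_UPGRADES = [
    (150, 500),       # "500gb more increases the price of this laptop by $150"
    (130, 500),       # "It brings this one up by $130" (assumed to also be +500 GB)
    (80, 512 - 256),  # "$80 more to go from 256 to 512"
]


def markup_per_gb(extra_cost_usd: float, extra_gb: float) -> float:
    """Dollars the manufacturer charges for each additional gigabyte."""
    return extra_cost_usd / extra_gb


def diy_saves_money(factory_markup_usd: float, retail_ssd_usd: float) -> bool:
    """True if buying a bare SSD and swapping it in yourself beats the factory upgrade.

    Ignores your time, any tools, and whatever the pulled drive might be worth.
    """
    return retail_ssd_usd < factory_markup_usd


if __name__ == "__main__":
    for cost, gb in FACTORY_UPGRADES:
        print(f"+{gb} GB for ${cost}: ${markup_per_gb(cost, gb):.2f} per GB")

    HYPOTHETICAL_RETAIL_1TB_SSD = 70.0  # placeholder price, check real listings
    print("DIY cheaper than the $150 upgrade?",
          diy_saves_money(150, HYPOTHETICAL_RETAIL_1TB_SSD))
```

All three factory upgrades land around 26 to 31 cents per gigabyte, which is the number to compare against a bare drive's price per gigabyte.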

But also: in the current market (December 2024), you should not expect to find desktops or laptops in the low-mid range pricing tier with more than 512gb of storage. Sometimes you'll get lucky, but you shouldn't be expecting it - if you need more storage and you need an inexpensive computer, you need to expect to upgrade that component yourself.

So, if you're looking at a computer I linked and saying "32GB of RAM and an i7 processor but only 500GB of storage? What kind of nonsense is that?" Then I would like to present you with one of the computers I had to quote today:

A three thousand dollar macbook with the most recent apple silicon (the m4 released like three weeks ago) and 48 FUCKING GIGABYTES OF RAM with a 512gb ssd.

You can't even upgrade that SSD! That's an Apple; that drive isn't going fucking anywhere! (don't buy apple, apple is shit)

The norms have shifted! It sucks, but you have to be aware of these kinds of things if you want to pay a decent price for a computer and know what you're getting into.

5K notes


Text

So, let me try and put everything together here, because I really do think it needs to be talked about.

Today, Unity announced that it intends to apply a fee to use its software. Then it got worse.

For those not in the know, Unity is the most popular free-to-use video game development tool, offering a basic version for individuals who want to learn how to create games or create independently, alongside paid versions for corporations or people who want more features. It's decent enough at this job; it has issues, but for the price point I can't complain, and it's the ideal entry point into creating in this medium. It's a very important piece of software.

But speaking of tools, the CEO is a massive one. When he was the COO of EA, he advocated using what sounds like out-and-out emotional manipulation to coerce players into microtransactions.

"A consumer gets engaged in a property, they might spend 10, 20, 30, 50 hours on the game and then when they're deep into the game they're well invested in it. We're not gouging, but we're charging and at that point in time the commitment can be pretty high."

He also called game developers who don't discuss monetization early in the planning stages of development, quote, "fucking idiots".

So that sets the stage for what might be one of the most bald-faced greediest moves I've seen from a corporation in a minute. Most at least have the sense of self-preservation to hide it.

A few hours ago, Unity posted this announcement on the official blog.

Effective January 1, 2024, we will introduce a new Unity Runtime Fee that’s based on game installs. We will also add cloud-based asset storage, Unity DevOps tools, and AI at runtime at no extra cost to Unity subscription plans this November. We are introducing a Unity Runtime Fee that is based upon each time a qualifying game is downloaded by an end user. We chose this because each time a game is downloaded, the Unity Runtime is also installed. Also we believe that an initial install-based fee allows creators to keep the ongoing financial gains from player engagement, unlike a revenue share.

Now there are a few red flags to note in this pitch immediately.

Unity is planning on charging a fee on all games which use its engine.

This is a flat fee per number of installs.

They are using an always online runtime function to determine whether a game is downloaded.

There are just so many things wrong with this that it's hard to know where to start, not helped by this FAQ, which doubled down on a lot of the major issues people had.

I guess let's start with what people noticed first. Because it's using a system baked into the software itself, Unity would not be differentiating between a "purchase" and a "download". If someone uninstalls and reinstalls a game, that's two downloads. If someone gets a new computer or a new console and downloads a game already purchased from their account, that's two downloads. If someone pirates the game, the studio will be asked to pay for that download.

Q: How are you going to collect installs?
A: We leverage our own proprietary data model. We believe it gives an accurate determination of the number of times the runtime is distributed for a given project.

Q: Is software made in unity going to be calling home to unity whenever it's ran, even for enterprice licenses?
A: We use a composite model for counting runtime installs that collects data from numerous sources. The Unity Runtime Fee will use data in compliance with GDPR and CCPA. The data being requested is aggregated and is being used for billing purposes.

Q: If a user reinstalls/redownloads a game / changes their hardware, will that count as multiple installs?
A: Yes. The creator will need to pay for all future installs. The reason is that Unity doesn’t receive end-player information, just aggregate data.

Q: What's going to stop us being charged for pirated copies of our games?
A: We do already have fraud detection practices in our Ads technology which is solving a similar problem, so we will leverage that know-how as a starting point. We recognize that users will have concerns about this and we will make available a process for them to submit their concerns to our fraud compliance team.

This is potentially related to a new system that will require Unity Personal developers to go online at least once every three days.

Starting in November, Unity Personal users will get a new sign-in and online user experience. Users will need to be signed into the Hub with their Unity ID and connect to the internet to use Unity. If the internet connection is lost, users can continue using Unity for up to 3 days while offline. More details to come, when this change takes effect.

It's unclear whether this requirement will be attached to any and all Unity games, though it would explain how they're theoretically able to track "the number of installs", and why the methodology for tracking these installs is so shit, as we'll discuss later.

Unity claims that it will only apply this fee to games which surpass a certain threshold of downloads and yearly revenue.

Only games that meet the following thresholds qualify for the Unity Runtime Fee: Unity Personal and Unity Plus: Those that have made $200,000 USD or more in the last 12 months AND have at least 200,000 lifetime game installs. Unity Pro and Unity Enterprise: Those that have made $1,000,000 USD or more in the last 12 months AND have at least 1,000,000 lifetime game installs.
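Those thresholds boil down to a two-part check. Here's a minimal Python sketch using only the numbers quoted above; the function and dictionary names are made up for illustration, and it says nothing about how Unity itself counts installs or measures revenue (which, as noted, they don't explain).

```python
# Thresholds as quoted from Unity's announcement:
# (minimum revenue in the last 12 months in USD, minimum lifetime installs)
RUNTIME_FEE_THRESHOLDS = {
    "personal": (200_000, 200_000),
    "plus": (200_000, 200_000),
    "pro": (1_000_000, 1_000_000),
    "enterprise": (1_000_000, 1_000_000),
}


def qualifies_for_runtime_fee(plan: str, revenue_last_12_months_usd: float,
                              lifetime_installs: int) -> bool:
    """Both conditions must hold: the announcement joins them with AND."""
    min_revenue, min_installs = RUNTIME_FEE_THRESHOLDS[plan.lower()]
    return (revenue_last_12_months_usd >= min_revenue
            and lifetime_installs >= min_installs)


if __name__ == "__main__":
    # Hypothetical small studio on Unity Personal with lots of installs but modest revenue.
    print(qualifies_for_runtime_fee("Personal", 210_000, 1_000_000))  # True
    print(qualifies_for_runtime_fee("Personal", 150_000, 1_000_000))  # False
```

Install counts are easy to inflate (reinstalls, new hardware, subscription services), so in practice the revenue half of the check is doing most of the gatekeeping for small studios.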

They don't say how they're going to collect information on a game's revenue. Likely this is just to say that they're only interested in squeezing larger products (games like Genshin Impact and Honkai: Star Rail, Fate Grand Order, Among Us, and Fall Guys) and not every 2 dollar puzzle platformer that drops on Steam. But these larger products also have the easiest time porting off of Unity and the most incentive to, meaning that realistically the most heavily impacted are going to be the ones who just barely meet this threshold, most of them indie developers.

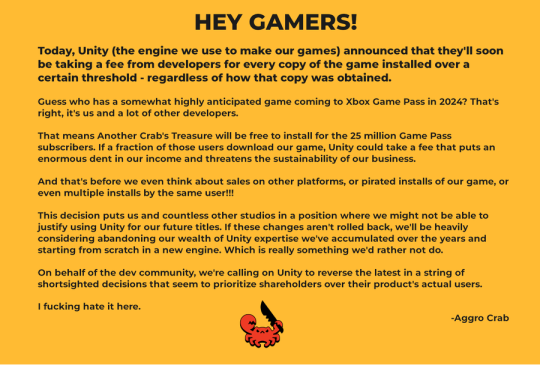

Aggro Crab Games, one of the first to properly break this story, points out that systems like Xbox Game Pass, which is already pretty predatory towards smaller developers, will quickly inflate their "lifetime game installs", meaning that even a studio just skimming that $200k revenue threshold will be asked to pay a fee per install, not a percentage of said revenue.

[IMAGE DESCRIPTION: Hey Gamers!

Today, Unity (the engine we use to make our games) announced that they'll soon be taking a fee from developers for every copy of the game installed over a certain threshold - regardless of how that copy was obtained.

Guess who has a somewhat highly anticipated game coming to Xbox Game Pass in 2024? That's right, it's us and a lot of other developers.

That means Another Crab's Treasure will be free to install for the 25 million Game Pass subscribers. If a fraction of those users download our game, Unity could take a fee that puts an enormous dent in our income and threatens the sustainability of our business.

And that's before we even think about sales on other platforms, or pirated installs of our game, or even multiple installs by the same user!!!

This decision puts us and countless other studios in a position where we might not be able to justify using Unity for our future titles. If these changes aren't rolled back, we'll be heavily considering abandoning our wealth of Unity expertise we've accumulated over the years and starting from scratch in a new engine. Which is really something we'd rather not do.

On behalf of the dev community, we're calling on Unity to reverse the latest in a string of shortsighted decisions that seem to prioritize shareholders over their product's actual users.

I fucking hate it here.

-Aggro Crab - END DESCRIPTION]

That fee, by the way, is a flat fee. Not a percentage, not a royalty. This means that any games made in Unity expecting any kind of success are heavily incentivized to cost as much as possible.

[IMAGE DESCRIPTION: A table listing the various fees by number of Installs over the Install Threshold vs. version of Unity used, ranging from $0.01 to $0.20 per install. END DESCRIPTION]

Basic elementary school math tells us that if a game comes out for $1.99, the studio will be paying, at maximum, 10% of its revenue to Unity, whereas jacking the price up to $59.99 lowers that percentage to something closer to 0.3%. Obviously any company, especially one in the kind of financial desperation that a sudden anchor on all its revenue is going to create, is going to choose the latter.
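Here's that arithmetic as a quick Python sketch. It assumes the $0.20 top of the fee range from the table, one install per sale, and the sticker price as revenue (ignoring store cuts and taxes, which would only push the percentage higher). Since reinstalls count as installs too, real shares could be worse.

```python
MAX_FEE_PER_INSTALL_USD = 0.20  # top of the per-install range in Unity's fee table


def fee_share_of_price(price_usd: float,
                       fee_per_install_usd: float = MAX_FEE_PER_INSTALL_USD) -> float:
    """Fraction of one sale's sticker price eaten by a single install fee."""
    return fee_per_install_usd / price_usd


if __name__ == "__main__":
    for price in (1.99, 59.99):
        print(f"${price:.2f} game: {fee_share_of_price(price):.2%} of the price per install")
```

That prints 10.05% for the $1.99 game and 0.33% for the $59.99 one. A flat fee hits cheap games roughly 30 times harder than full-price ones.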

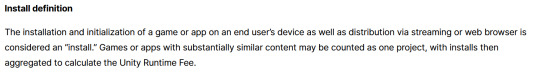

Furthermore, and following the trend of "fuck anyone who doesn't ask for money", Unity helpfully defines what an install is on their main site.

As I'm looking at this page right now, it says:

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

However, I saw a screenshot saying something different, and using the Wayback Machine we can see that this phrasing was changed at some point in the few hours since the announcement went up. The earlier version read:

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming or web browser is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

Screenshot for posterity:

That would mean web browser games made in Unity would count towards this install threshold. You could legitimately drive the count up simply by continuously refreshing the page. The FAQ, again, doubles down.

Q: Does this affect WebGL and streamed games? A: Games on all platforms are eligible for the fee but will only incur costs if both the install and revenue thresholds are crossed. Installs - which involves initialization of the runtime on a client device - are counted on all platforms the same way (WebGL and streaming included).

And, what I personally consider to be the most suspect claim in this entire debacle, they claim that "lifetime installs" includes installs prior to this change going into effect.

Will this fee apply to games using Unity Runtime that are already on the market on January 1, 2024? Yes, the fee applies to eligible games currently in market that continue to distribute the runtime. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

Again, again, doubled down in the FAQ.

Q: Are these fees going to apply to games which have been out for years already? If you met the threshold 2 years ago, you'll start owing for any installs monthly from January, no? (in theory). It says they'll use previous installs to determine threshold eligibility & then you'll start owing them for the new ones. A: Yes, assuming the game is eligible and distributing the Unity Runtime then runtime fees will apply. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

That would mean billing companies based on their use of the software before they were ever told a bill existed. Holding them to a contract for actions they performed before the contract existed!

Okay. I think that's everything. So far.

There is one thing that I want to mention before ending this post. Unfortunately it's a little conspiratorial, but it's so hard to believe that anyone genuinely thought this was a good idea that it's stuck in my brain as a significant possibility.

A few days ago it was reported that Unity's CEO sold 2,000 shares of his own company.

On September 6, 2023, John Riccitiello, President and CEO of Unity Software Inc (NYSE:U), sold 2,000 shares of the company. This move is part of a larger trend for the insider, who over the past year has sold a total of 50,610 shares and purchased none.

I would not be surprised if this decision gets reversed tomorrow, that it was literally only made for the CEO to short his own goddamn company, because I would sooner believe that this whole thing is some idiotic attempt at committing fraud than a real monetization strategy, even knowing how unfathomably greedy these people can be.

So, with all that said, what do we do now?

Well, in all likelihood you won't need to do anything. As I said, some of the biggest names in the industry would be directly affected by this change, and you can bet your bottom dollar that they're not just going to take it lying down. After all, the only way to stop a greedy CEO is with a greedier CEO, right?

(I fucking hate it here.)

And that's not mentioning the indie devs who are already talking about abandoning the engine.

[Links display tweets from the lead developer of Among Us saying it'd be less costly to hire people to move the game off of Unity and Cult of the Lamb's official twitter saying the game won't be available after January 1st in response to the news.]

That being said, I'm still shaken by all this. The fact that Unity is openly willing to go back and punish its developers for ever having used the engine in the past makes me question my relationship to it.

The news has given rise to the visibility of free, open source alternative Godot, which, if you're interested, is likely a better option than Unity at this point. Mostly, though, I just hope we can get out of this whole, fucking, environment where creatives are treated as an endless mill of free profits that's going to be continuously ratcheted up and up to drive unsustainable infinite corporate growth that our entire economy is based on for some fuckin reason.

Anyways, that's that, I find having these big posts that break everything down to be helpful.

#Unity#Unity3D#Video Games#Game Development#Game Developers#fuckshit#I don't know what to tag news like this

6K notes


Text

TECH RANT I THINK EVERYONE SHOULD KNOW. (and a lot of people probably already know but WE DIGRESS)

As a non-tech savvy person I learn something new every day from my boyfriend.

Today I learned; Stores don't provide the largest storage options for gadgets bcs "most people don't need that."

Which is nonsense. Bcs I'd rather have like 2 2TB memory sticks and not use them all the way up than like 15 20gb ones.

There are 2TB memory sticks for cheap online. And in stores the 32gb ones legit cost more (6 vs 15). (It's very much this illusion of the best there is for people who don't know tech.)

I tend to prefer memory cards. They don't last as long and they never have 2TB of storage, BUT I can use them for my camera and 3DS and they're tiny, so they're more convenient for a lot of things (and now I have multiple copies of my 3DS with different downloaded games so I can have more on me at all times).

HOWEVER: I download my DVDs and TV shows. AND I DIDN'T REALISE EACH EPISODE COULD BE A GB. That's only 32 episodes on a 32gb stick!! (Which is a lot but often not even a whole show.)
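Quick math for building a digital library, as a tiny Python sketch. It assumes roughly 1GB per episode (like above) and uses the capacity printed on the package; a formatted drive shows a bit less usable space, so treat the result as a best case.

```python
def episodes_that_fit(capacity_gb: float, episode_gb: float = 1.0) -> int:
    """Whole episodes that fit on a drive of the advertised capacity (best case)."""
    return int(capacity_gb // episode_gb)


if __name__ == "__main__":
    for capacity in (32, 2000):  # a 32GB stick vs a 2TB stick
        print(f"{capacity} GB -> {episodes_that_fit(capacity)} episodes")
```

Bigger files (HD rips can easily be more than 1GB an episode) just mean passing a larger `episode_gb`.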

So if you're like me and building a digital library (and even if not and you genuinely don't need that much storage) GET THE 2TB MEMORY STICKS FOR CHEAP ONLINE. Better to have more than ya need on storage than not enough.

ALSO YOU CAN NOW BASICALLY BACK UP YOUR WHOLE COMPUTER OR HAVE 10 TIMES AS MANY GAMES ON THERE: YOU ARE SO WELCOME ❤️

Bro i love having a tech boyfriend. and i love reddit. YEAH!

(I am an indoor person with no spare money to give to things like Amazon Prime or Netflix, and I plan to go back to uni; I need a lot, A LOT of tech storage.)

I FORGOT TO MENTION:

Here is the issue: not a great lifespan. Memory sticks need maintenance, and depending on quality and care, their lifespan can range from incredibly short to wonderfully long. This is why my father (a data man to the moon and therefore chock-full of memory sticks and collected info) recommends the cloud. I don't like the cloud, so I will just keep multiple backups and open my files frequently-ish.
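If you'd rather not open every file to check on your backups, one common approach is comparing checksums: if a file's SHA-256 hash matches between the original and the backup, the copy is intact. Here's a minimal Python sketch using only the standard library. The folder paths are hypothetical, and this only catches copies that are missing or already differ from the original; it can't predict a stick that fails later.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large videos don't have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_bad_copies(original_dir: Path, backup_dir: Path) -> list[str]:
    """Relative paths of files whose backup copy is missing or has different contents."""
    bad = []
    for src in original_dir.rglob("*"):
        if not src.is_file():
            continue
        rel = src.relative_to(original_dir)
        copy = backup_dir / rel
        if not copy.is_file() or sha256_of(src) != sha256_of(copy):
            bad.append(rel.as_posix())
    return sorted(bad)


if __name__ == "__main__":
    original = Path("library")             # hypothetical: your main library folder
    backup = Path("backup_stick/library")  # hypothetical: one backup copy
    if original.is_dir():
        for name in find_bad_copies(original, backup):
            print("re-copy:", name)
```

Run it against each backup in turn; anything it lists is worth copying over again from a good copy.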

Memory and Storage are two different things in tech language. But I am talking about storage. When i say "memory stick" or "memory card" i am simply referring to the item not the ram or memory on the item itself.

#tech#memory sticks#rant#random tip#random#computer#technology#chronic pain#disabled#actually disabled#disabled community#chronic fatigue#spoonie

23 notes


Text

Tron Ares looks good in that new trailer and I like that there’s a bit referencing 1982 but it still sucks that Jared Leto is in it lmao (I really don’t care how bad it may be I’m still watching it so Disney stops doing their own franchise dirty)

Regarding posts I read about how Tron has turned into the typical evil AI story (heck the new Ares trailer literally mentions AI and most likely is a critique of irl AI practices, kinda like Split Fiction (mild game spoilers) but more so “Terminator” problem instead of generative AI problem from what I can tell) it’s interesting to see (and I will try to read into it more in depth once my darn summer college class ends :,) ) and it’s just really sad to see how AI and technology in general has been tainted by generative AI and techbros and bigots, especially robots (I say this as someone who loves robots, drawing them and grew up wanting a robot friend, loving Wall-E etc) and now they’re just painful reminders of generative AI being a plague AND irl robots are always either plagiarism machines or uncanny valley looking freaks (instead of a cute screen face etc they do the fake skin realistic face).

Frutiger aero is one of my favorite aesthetics and I miss the era of that aesthetic because it was the last time technology felt hopeful and optimistic, and now it’s all the same flavorless corporate flatness, generative AI now plagues everything and I’m so sick and tired of it (I have had people irl tell me multiple times to use ChatGPT, one instance being to “come up with a more original OC story title” like??? No that’s not even original anymore BUZZ OFF)

Going back to Tron I will always love the franchise for its anti fascism themes, which so far have been in all of its iterations (I’ve only seen the two movies and Uprising but plan to consume everything, resources would be much appreciated) and I find it insane how well everything has aged, from 1982’s “The computers will start thinking, and the people will stop” to Legacy’s Quorra being a program who loves humanity and human culture like books (seriously we need more robots in fiction like her and not Terminators or humanity haters) and even Uprising delves into that aspect in some artistic ways (mild spoilers) and ohhh Beck you will always be my favorite protagonist who never stopped fighting back against fascism <3 (my husband-)

There are criticisms to be said about Tron Legacy feeling so disconnected from 1982 (and I’m saying this as someone who used to only be into Legacy and didn’t know about either 1982 or Uprising, you can blame my beasties (best friends) fumbling it but it was probably a blessing in disguise for reasons I won’t elaborate on lmao) but at the same time considering the time difference between the two movies inevitably aesthetics change and evolve and what is considered futuristic has changed, and in a way it did predict technology becoming sleeker but also more corporate and predatory (paywalling stuff like cloud storage makes me think of how in the Legacy and Uprising grid identity discs seem to be required for all programs and if you lose it you can’t function properly for long, mild Uprising spoilers again, while Yori in 1982 didn’t even have an identity disc nor did she need one, and I literally kept this idea for my original cyber fantasy OC story that is now mainly inspired by Tron, especially Uprising lmao) but yeah it’s still sad to see it just be rewritten as “oooo evil AI” instead of “mechanical/electronic lifeform that thinks and feels just like a human is organic” (more of the latter or heck both in the same story is much appreciated) and regardless generative AI has further ruined the idea of robots and what I previously mentioned.

Now do I prefer 1982 or Legacy? Honestly even now I’m not sure (I really need to rewatch Legacy it’s been years) but I prefer the aesthetics of Legacy mostly out of nostalgia and my love for futuristic sleek aesthetics (Legacy and Uprising feel dark aero and Uprising specifically does feel sort of frutiger aero with the colorful suit designs (Beck’s blue accents, Mara’s blue hair and yellow accents etc) but my favorite of the franchise is still Uprising lol (the two movies are tied, both have pros and cons), and looking at the concept art for Uprising you can definitely see the 1982 inspirations and references (forever mourning Beck’s bootsies </3 went from having work boots to looking like he’s wearing footie pajamas /lh) and it’s kind of awkward trying to connect Uprising to Legacy with how the plot goes but yeah we keep on going lol

(Also sorry Sam but Beck won me over because he’s got so much more character, action and flavor, charm and personality LMAO also sorry Quorra you can have Sam I wanna be with Beck, Paige, Mara and Zed)

This post is really rambly and all over the place but I figured I’d let everyone know my thoughts and opinions, feel free to disagree respectfully (just don’t be a jerk please :/ ) and if people want to discuss this or talk I’m open to it :}

9 notes


Text

HOW TO SCREENCAP & POST YOUR CAPS : A MOSTLY COMPREHENSIVE GUIDE

i got an ask about this, and it felt like it was too long of an explanation to answer in an ask, so i made this guide. i am definitely not the expert (as proven by the fact that my VLC tutorial is two links to better tutorials than i could ever make), but i hope this is helpful!

TABLE OF CONTENTS
- finding stuff to cap
- capping 101
- storage

FINDING STUFF TO CAP

the less "crime" you do while doing this the better honestly. make someone else do it for you, and if you absolutely must sail the seven seas 🏴‍☠️, for the love of god use a good vpn and anti-virus.

the safest way to find downloads is to find pages who post them for you to use. on tumblr that is hdsources ! we love hdsources here. there are also pages on instagram (and apparently the site formerly known as twitter, but i don't use those) who post downloads of stuff. my favorites on insta are megaawrld_ , logolessfiles, djatsscenes, sadisticscenes and elyse.logoless . to get into these pages you do have to have an instagram account, but once you get in you can get links to them posting shows and movies. this is significantly safer than p*racy.

the next step if these pages don't have what you want is to get them yourself through other ways. if you have to do that, GET A FUCKING VPN.

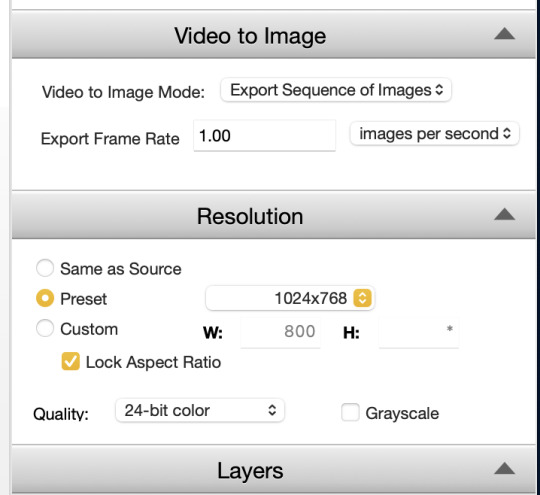

CAPPING 101

now that you have something to cap, it's time to actually make screencaps. you're gonna wanna download a program to do that. most people use VLC; i use adapter for the most part, but it can be fickle, so i'm learning to use VLC too.

adapter doesn't require much in-depth explanation, so here's a quick tutorial:
- drag your file and drop it into the window (it can read mp4 and mkv files)
- select where you want your screencaps to end up; i make a folder for them
- select your frame rate (how many images you want to generate per second of video. i tend to do 1, and anything over 5 creates so many pictures that it's too much to deal with, but if you're making gifs you want more pictures)
- select your file size and image quality.
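to see why a high frame rate gets out of hand so fast: the number of caps is just minutes × 60 × caps per second. a tiny Python sketch, using a hypothetical 45-minute episode:

```python
def screencap_count(video_minutes: float, caps_per_second: float) -> int:
    """How many images a capping tool generates for one video."""
    return round(video_minutes * 60 * caps_per_second)


if __name__ == "__main__":
    for rate in (1, 5):
        print(f"{rate} cap(s)/sec on a 45-minute episode -> {screencap_count(45, rate)} images")
```

that works out to 2,700 images at 1 per second and 13,500 at 5 per second, for a single episode.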

i could not explain VLC to you if i tried, i am still figuring out how to use it. this tutorial & this tutorial have been very helpful though !

STORAGE

honestly this should have probably come first, but i didn't want to scare people. there are two types of storage, physical and cloud storage. to run a resource blog you need both.

physical storage comes in the form of space on a hard drive. your computer has a limited amount of space and i truly do not suggest keeping every screencap you've ever made on your computer's hard drive. screencaps take up A LOT of space. get an external hard drive and get the beefiest one you can afford. ssds (solid state drives) are fast as fuck. depending on how much content you make (and how much you can afford) get at LEAST 2 TB, but maybe get more. i like this guy cause it's fast and small!
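rough storage math for caps, as a Python sketch. the average image size here is a hypothetical placeholder (it depends a lot on resolution, png vs jpg, and the quality setting you pick), so check a folder of your own caps and plug that number in instead.

```python
def caps_size_gb(num_images: int, avg_image_mb: float) -> float:
    """Total size of a batch of caps in (decimal) gigabytes."""
    return num_images * avg_image_mb / 1000


def batches_per_drive(drive_tb: float, num_images: int, avg_image_mb: float) -> int:
    """How many same-sized batches (e.g. episodes) fit on a drive, best case."""
    return int(drive_tb * 1000 // caps_size_gb(num_images, avg_image_mb))


if __name__ == "__main__":
    ASSUMED_AVG_MB = 2.0     # hypothetical average cap size
    CAPS_PER_EPISODE = 2700  # e.g. 1 cap per second of a 45-minute episode
    print(f"{caps_size_gb(CAPS_PER_EPISODE, ASSUMED_AVG_MB):.1f} GB per episode")
    print(batches_per_drive(2, CAPS_PER_EPISODE, ASSUMED_AVG_MB), "episodes on a 2 TB drive")
```

with those made-up numbers it's about 5.4 GB an episode and 370 episodes on 2 TB; a show or two with a few seasons each eats that quicker than you'd think.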

if you just came here to learn how to screencap you can stop here unless you want to learn how to back up your files because that's really what cloud storage is for.

cloud storage is storage that is not on your actual computer. you cannot touch it but it's important if you want to make your screencaps available for other people to use.

i'm a big fan of dropbox, mega, mediafire and if you absolutely must use it google drive. (my preferences are in that order) unfortunately, cloud storage gets really expensive really fast and there's kind of no way to avoid it. compress your files when you upload them so they take up less space in whatever form of cloud storage you do get, and pray.
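if you want to bundle a folder of caps into one archive before uploading, Python's standard library can do it in one call with `shutil.make_archive`. a minimal sketch, with hypothetical folder names. one caveat: png and jpg files are already compressed, so zipping them mostly gets you a single tidy file to upload rather than big space savings.

```python
import shutil
from pathlib import Path


def zip_caps_folder(caps_dir: str, archive_base: str) -> str:
    """Write archive_base + '.zip' containing everything in caps_dir; return its path."""
    return shutil.make_archive(archive_base, "zip", root_dir=caps_dir)


if __name__ == "__main__":
    caps = Path("caps/episode_01")  # hypothetical folder of screencaps
    if caps.is_dir():
        print("upload this:", zip_caps_folder(str(caps), "uploads/episode_01"))
```

one zip per episode (or per movie) keeps uploads and download links manageable for the people using your caps.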

that's what i got for ya! if you have any questions feel free to send an ask or join my discord server!

15 notes


Text

By Madge Waggy MadgeWaggy.blogspot.com

December 30, 2024

According to Steven Overly at www.politico.com, the freakout moment that set journalist Byron Tau on a five-year quest to expose the sprawling U.S. data surveillance state occurred over a “wine-soaked dinner” back in 2018 with a source he cannot name.

The tipster told Tau the government was buying up reams of consumer data — information scraped from cellphones, social media profiles, internet ad exchanges and other open sources — and deploying it for often-clandestine purposes like law enforcement and national security in the U.S. and abroad. The places you go, the websites you visit, the opinions you post — all collected and legally sold to federal agencies.

I’m going to alert you to what many are considering to be one of the worst doomsday scenarios for free American patriots, one that apparently not many are prepping for, or even seem to care about.

By now everybody knows that the government ‘alphabet agencies’, mainly the NSA, have been methodically collecting data on us. Everything we do, say, buy and search on the internet will be in a permanent database file by next year. All phone calls are now computer-monitored, automatically recorded and stored with certain flag/trigger words (in all languages).

As technology improves, every single phone call will be entirely recorded at meta-data bases in government computer cloud storage, when ‘They’ finish the huge NSA super spy center in Utah. Which means they will be available anytime authorities want to look them up and personally listen for any information reference to any future investigation. Super computer algorithms will pin point search extrapolations of ANY relationship to the target point.

You can rest uneasily, but assured, that in the very near future when a cop stops you and scans your driver license into his computer, he will know anything even remotely ’suspicious’ or ’questionable’ about ALL the recent activities and behavior in your life he chooses to focus upon!

This is the ‘privacy apocalypse’ coming upon us. And you need to know these five devices that you can run to protect your privacy, but you can’t hide from.

When Security Overlaps Freedom


Text

Excerpt from this story from Inside Climate News:

Illinois is already a top destination for data centers, and more are coming. One small Chicago suburb alone has approved one large complex and has proposals for two more.

Once they’re online, data centers require a lot of electricity, which is helping drive rates up around the country and grabbing headlines. What gets less attention is how much water they need, both to generate that electricity and dissipate the heat from the servers powering cloud computing, storage and artificial intelligence.

A high-volume “hyperscale” data center uses the same amount of water in a year as 12,000 to 60,000 people, said Helena Volzer, a senior source water policy manager for the environmental nonprofit Alliance for the Great Lakes.

Increasingly, residents, legislators and freshwater advocacy groups are calling for municipalities to more carefully consider where the water that supplies these data centers will come from and how it will be managed. Even in the water-rich Great Lakes region, those are important questions as erratic weather patterns fueled by climate change affect water resources.

Illinois already has more than 220 data centers, and a growing number of communities interested in the attendant tax revenue are trying to entice companies to build even more. Many states in the Great Lakes region—Illinois, Indiana, Michigan and Minnesota among them—are offering tax credits and incentives for data center developments. The Illinois Department of Commerce and Economic Opportunity has approved tax breaks for more than 20 data centers since 2020.

“Hyperscale data centers are the really large data centers that are being built now for [generative] AI, which is really driving a lot of the growth in this sector because it requires vast data processing capabilities,” said Volzer. “The trend is larger and bigger centers to feed this demand for AI.”

Much of the water used in data centers never gets back into the watershed, particularly if the data center uses a method called evaporative cooling. Even if that water does go back into the ecosystem, deep bedrock aquifers, like the Mahomet in central Illinois, can take centuries to recharge. In the Great Lakes, just 1 percent of the water is renewed each year from rain, runoff and groundwater.

In Illinois, 40 percent of the population gets its water from aquifers. In some places, like Chicago’s southwest suburbs in Will and Kendall counties, the amount of water in those aquifers is dwindling.

To ensure that they can supply citizens with safe drinking water, officials from six suburbs southwest of Chicago—Joliet, Channahon, Crest Hill, Minooka, Romeoville and Shorewood—made an agreement with the city two years ago to buy millions of gallons of water a day from Lake Michigan. They are currently building a $1.5 billion pipeline to transport the water, which is expected to be completed by 2030.

Illinois is unique among the Great Lakes states when it comes to water. The Great Lakes Compact each state signed in 2008 bans diversions of water from the lakes to communities outside the basin, but it makes an exception for Illinois thanks to a 1967 Supreme Court ruling allowing Chicago to sell water to farther-flung municipalities.

“We are concerned about the planning of the explosion of data centers, and if these far-out suburbs are actually accounting for that,” said Iyana Simba, city government affairs director for the Illinois Environmental Council. “How much of that was taken into account when they did their initial planning to purchase water from the city of Chicago? This isn’t reused wastewater. This is drinking water.”


Text

Your All-in-One AI Web Agent: Save $200+ a Month, Unleash Limitless Possibilities!

Imagine having an AI agent that costs you nothing monthly, runs directly on your computer, and is unrestricted in its capabilities. OpenAI's Operator costs up to $200/month for limited access and restricts many tasks, like visiting thousands of websites. With DeepSeek-R1 and Browser-Use, you:

• Save money while keeping everything local and private.

• Automate visiting 100,000+ websites, gathering data, filling forms, and navigating like a human.

• Gain total freedom to explore, scrape, and interact with the web like never before.

You may have heard about OpenAI's Operator, which runs on their computers in the cloud and requires you to pass private information to their AI to do anything useful. AND you pay for the privilege. It is not paranoid to not want your passwords, logins and personal details shared. OpenAI, of course, charges a substantial amount of money for something that limits exactly which sites you can visit, YouTube for example. With this method, you simply tell an AI exactly what you want it to do, in plain language, and watch it navigate the web, gather information, and make decisions, all without writing a single line of code.

In this guide, we’ll show you how to build an AI agent that performs tasks like scraping news, analyzing social media mentions, and making predictions using DeepSeek-R1 and Browser-Use, but instead of writing a Python script, you’ll interact with the AI directly using prompts.

These instructions are under constant revision, as DeepSeek R1 is only days old. Browser Use has been a standard for quite a while. This method is suitable for people who are new to AI and programming. It may seem technical at first, but by the end of this guide you’ll feel confident using your AI agent to perform a variety of tasks, all by talking to it. However, if you look at these instructions and they seem too overwhelming, wait: we will have a single-download app soon. It is in testing now.

This is version 3.0 of these instructions, dated January 26th, 2025.

This guide will walk you through setting up DeepSeek-R1 8B (4-bit) and Browser-Use Web UI, ensuring even the most novice users succeed.

What You’ll Achieve

By following this guide, you’ll:

1. Set up DeepSeek-R1, a reasoning AI that works privately on your computer.

2. Configure Browser-Use Web UI, a tool to automate web scraping, form-filling, and real-time interaction.

3. Create an AI agent capable of finding stock news, gathering Reddit mentions, and predicting stock trends—all while operating without cloud restrictions.

A Deep Dive At ReadMultiplex.com Soon

We will have a deep dive into how you can use this platform for very advanced AI use cases that few have thought of, let alone seen before. Join us at ReadMultiplex.com and become a member who not only sees the future earlier but also gets practical and pragmatic ways to profit from it.

System Requirements

Hardware

• RAM: 8 GB minimum (16 GB recommended).

• Processor: Quad-core (Intel i5/AMD Ryzen 5 or higher).

• Storage: 5 GB free space.

• Graphics: GPU optional for faster processing.

Software

• Operating System: macOS, Windows 10+, or Linux.

• Python: Version 3.11 or higher (Browser-Use's documentation asks for 3.11+).

• Git: Installed.
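If you'd like to check the requirements above in one go, here's a minimal Python sketch using only the standard library. It checks the Python version, whether `git` is on your PATH, and free disk space. The 3.11 and 5 GB thresholds are assumptions taken from the requirement lists; change them if your version of this guide says otherwise. RAM and CPU are not checked, because the standard library doesn't report total RAM portably.

```python
import shutil
import sys


def check_requirements(min_python=(3, 11), min_free_gb=5.0, path="."):
    """Return simple pass/fail checks for the setup steps in this guide.

    min_python: Browser-Use's documentation asks for 3.11+ (assumption;
    lower it if your setup differs). min_free_gb: free space wanted on the
    drive that contains `path`.
    """
    free_gb = shutil.disk_usage(path).free / 1e9  # bytes -> decimal gigabytes
    return {
        "python": sys.version_info[:2] >= tuple(min_python),
        "git": shutil.which("git") is not None,
        "disk": free_gb >= min_free_gb,
    }


if __name__ == "__main__":
    for name, ok in check_requirements().items():
        print(f"{name:>6}: {'OK' if ok else 'MISSING / TOO LOW'}")
```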

Step 1: Get Your Tools Ready

We’ll need Python, Git, and a terminal/command prompt to proceed. Follow these instructions carefully.

Install Python

1. Check Python Installation:

• Open your terminal/command prompt and type (on Windows, the command may be python or py instead of python3):

python3 --version

• If Python is installed, you’ll see a version like:

Python 3.9.7

2. If Python Is Not Installed:

• Download Python from python.org.

• During installation, ensure you check “Add Python to PATH” on Windows.

3. Verify Installation:

python3 --version

Install Git

1. Check Git Installation:

• Run:

git --version

• If installed, you’ll see:

git version 2.34.1

2. If Git Is Not Installed:

• Windows: Download Git from git-scm.com and follow the instructions.

• Mac/Linux: Install via terminal:

sudo apt install git -y # For Ubuntu/Debian

brew install git # For macOS

Step 2: Download and Build llama.cpp

We’ll use llama.cpp to run the DeepSeek-R1 model locally.

1. Open your terminal/command prompt.

2. Navigate to a clear location for your project files:

mkdir ~/AI_Project

cd ~/AI_Project

3. Clone the llama.cpp repository:

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

4. Build the project:

• Mac/Linux:

make

• Windows:

• Install a C++ compiler (e.g., MSVC or MinGW) and CMake.

• Run:

mkdir build

cd build

cmake ..

cmake --build . --config Release

Step 3: Download DeepSeek-R1 8B 4-bit Model

1. Visit the DeepSeek-R1 8B Model Page on Hugging Face.

2. Download the 4-bit quantized model file:

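• Example: DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf. (The exact filename depends on who published the quantized file; use your file's actual name wherever this one appears below.)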

3. Move the model to your llama.cpp folder:

mv ~/Downloads/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf ~/AI_Project/llama.cpp

Step 4: Start DeepSeek-R1

1. Navigate to your llama.cpp folder:

cd ~/AI_Project/llama.cpp

2. Run the model with a sample prompt:

./llama-cli -m DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf -p "What is the capital of France?"

(Older llama.cpp builds named this program main; CMake builds place it in build/bin/.)

3. Expected output (R1 models usually print their reasoning, wrapped in <think> tags, before the final answer):

The capital of France is Paris.
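Because R1-style models print their reasoning before the answer, it can help to strip that part when you save or reuse the output. Here's a minimal Python sketch, assuming the reasoning is wrapped in `<think>` ... `</think>` tags. Some chat templates put the opening tag into the prompt, so the sketch also handles output that contains only the closing tag.

```python
import re

# DeepSeek-R1 models write their reasoning between <think> and </think>
# before the final answer. This pattern removes complete blocks.
_THINK_BLOCK = re.compile(r"<think>.*?</think>", flags=re.DOTALL)


def final_answer(raw: str) -> str:
    """Return only the answer part of an R1-style response."""
    text = _THINK_BLOCK.sub("", raw)
    # Some chat templates put the opening <think> into the prompt, so only
    # the closing tag appears in the output: keep what follows the last one.
    if "</think>" in text:
        text = text.rsplit("</think>", 1)[1]
    return text.strip()


if __name__ == "__main__":
    sample = "<think>\nFrance's capital... Paris.\n</think>\n\nThe capital of France is Paris."
    print(final_answer(sample))
```

You could redirect the model's output to a text file and pass the file's contents to `final_answer`.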

Step 5: Set Up Browser-Use Web UI

1. Go back to your project folder:

cd ~/AI_Project

2. Clone the Browser-Use repository:

git clone https://github.com/browser-use/browser-use.git

cd browser-use

3. Create a virtual environment:

python3 -m venv env

4. Activate the virtual environment:

• Mac/Linux:

source env/bin/activate

• Windows:

env\Scripts\activate

5. Install dependencies:

pip install -r requirements.txt

6. Start the Web UI:

python examples/gradio_demo.py

7. Open the local URL in your browser:

http://127.0.0.1:7860
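If the page doesn't load, the Web UI may still be starting up. This minimal Python sketch checks whether anything is listening on the local address before opening it in your default browser. Port 7860 is taken from the URL above; `port_is_open` and `open_web_ui` are hypothetical helper names, not part of Browser-Use.

```python
import socket
import webbrowser


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def open_web_ui(host: str = "127.0.0.1", port: int = 7860) -> bool:
    """Open the Web UI in the default browser once the server is listening."""
    if not port_is_open(host, port):
        print(f"Nothing is listening on {host}:{port} yet; is the UI still starting?")
        return False
    webbrowser.open(f"http://{host}:{port}")
    return True


if __name__ == "__main__":
    open_web_ui()
```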

Step 6: Configure the Web UI for DeepSeek-R1

1. Go to the Settings panel in the Web UI.

2. Specify the DeepSeek model path:

~/AI_Project/llama.cpp/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf

3. Adjust Timeout Settings:

• Increase the timeout to 120 seconds for larger models.

4. Enable Memory-Saving Mode if your system has less than 16 GB of RAM.

Step 7: Run an Example Task

Let’s create an agent that:

1. Searches for Tesla stock news.

2. Gathers Reddit mentions.

3. Predicts the stock trend.

Example Prompt:

Search for "Tesla stock news" on Google News and summarize the top 3 headlines. Then, check Reddit for the latest mentions of "Tesla stock" and predict whether the stock will rise based on the news and discussions.

--

Congratulations! You’ve built a powerful, private AI agent capable of automating the web and reasoning in real time. Unlike costly, restricted tools like OpenAI Operator, you’ve spent nothing beyond your time. Unleash your AI agent on tasks that were once impossible and imagine the possibilities for personal projects, research, and business. You’re not limited anymore. You own the web—your AI agent just unlocked it! 🚀

Stay tuned for a FREE, simple-to-use single app that will do all this and more.


Text

HOW TO SWITCH TO LINUX

So, we're going to go through this step by step.

Before we begin, let's keep a few things clear:

Linux is not Windows, it is its own system, with its own culture, history and way of doing things.

There are many "distributions", "distros" or "flavors" of Linux. What works for you may be different from what people recommend.

You'll want to read up on how to use the terminal; the basics an absolute beginner needs are short, but important. They're not hard to learn; they just take a bit of time and effort.

ADOBE DOES NOT WORK ON LINUX.

WINE is not a Windows Emulator, it should not be treated as such.

Proton is a compatibility tool built on WINE by Valve, which has its own compatibility database, called ProtonDB. It still isn't an emulator and can have quirks.

Not everything will work on Linux. Dead by Daylight, for example, actively blocks Linux players from joining a game. The Windows (Bedrock) Edition of Minecraft is another. (But the Java Edition does work!)

There are many FOSS alternatives to popular programs, but they may lack maturity and features compared to their commercial counterparts.

You might want to invest in an external drive. It'll keep your files safe and you'll be able to move all your files to a

Step 1: Why do you want to switch? Are you concerned about privacy? Are you wanting to boycott Windows? Is 11 not an option for your hardware? Want to try something new? Be honest with yourself about what you want to do. Write down your hardware specs: you'll want to know what kind of processor, RAM, video card and storage you're working with.
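If you happen to have Python installed, this minimal sketch collects part of the Step 1 list using only the standard library: operating system, processor, number of CPU threads, and disk size. It's an illustration, not a full inventory. The standard library doesn't report RAM size or the video card, so look those up in your system settings.

```python
import os
import platform
import shutil


def hardware_summary(path=None) -> dict:
    """Gather the Step 1 specs that Python's standard library can see.

    RAM size and the video card are not exposed by the standard library,
    so look those up in your system settings.
    """
    # Root of the system drive: "/" on Linux/macOS, e.g. C:\ on Windows.
    path = path or os.path.abspath(os.sep)
    total, _used, free = shutil.disk_usage(path)
    return {
        "os": f"{platform.system()} {platform.release()}",
        "cpu": platform.processor() or platform.machine(),
        "cpu_threads": os.cpu_count(),
        "disk_total_gb": round(total / 1e9, 1),
        "disk_free_gb": round(free / 1e9, 1),
    }


if __name__ == "__main__":
    for key, value in hardware_summary().items():
        print(f"{key:>14}: {value}")
```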

Step 2: Make three lists: programs you need for work, programs you use at home (that aren't games), and games you like to play. Check whether each of these already has a Linux port. For games, you can check whether they're Steam Deck compatible! For anything where you can't find a port or it's not clear, look it up on WineHQ (or ProtonDB, for games). Not all of them will be compatible!

There might be Linux-based alternatives for several things, but keep in mind that Adobe does NOT support Linux and does NOT work on WINE! Sea of Thieves and LibreOffice work; Dead by Daylight and Scrivener do not.

Step 3: Get a GOOD QUALITY USB stick! I recommend one that's at least 30 GB. That sounds like a lot, but operating systems these days are huge, and there's some fun stuff you can get. It's really important that you get a good quality one, not just a random stick off a reseller like Wish.

Step 4: Remember when I asked you why you were switching? Time to pick a Linux version. There is no "one, true Linux" version: the operating system is open, and groups make their own versions and put them out into the world. If you're confused, check out Distrowatch. Download an option; if you have a few sticks around, try multiple ones.

Step 5: Plug in your USB and use either UNetbootin or Rufus to create your boot device. Rufus might be easier if you're not super computer savvy. When looking over the options, check for a persistent storage setting and set it to most of the space that's left. Take out your boot stick for now.

Step 6: Find out how to get into your BIOS (on newer machines, the UEFI setup). Every computer has one, but the key that opens it depends on the model, so look up your laptop or motherboard model to find out which key it is. Set the boot order so that your USB gets checked first.

Step 7: If you have an external drive, move all your personal files, game saves, etc. to it, or buy cloud storage for them. Always back up your files, using multiple methods.
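As one of those backup methods, this minimal Python sketch copies whole folders onto an external drive. The folder names and the `E:/Backup` destination are hypothetical; game save locations vary by game, so add those folders yourself. Re-running it refreshes the copy, but it never deletes files from the backup that you've since removed from your computer.

```python
import shutil
from pathlib import Path


def back_up(sources, destination):
    """Copy each source folder into `destination`, keeping its folder name.

    Re-running it refreshes the copy: files already at the destination are
    overwritten (dirs_exist_ok requires Python 3.8+). Returns the targets.
    """
    dest_root = Path(destination)
    targets = []
    for src in map(Path, sources):
        target = dest_root / src.name
        shutil.copytree(src, target, dirs_exist_ok=True)
        targets.append(target)
    return targets


if __name__ == "__main__":
    # Hypothetical paths: adjust to your own folders and external drive.
    home = Path.home()
    back_up([home / "Documents", home / "Pictures"], "E:/Backup")
```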

Step 8: You aren't going to be installing Linux quite yet; instead, boot it up from USB. Note, it'll be a bit slow on USB 2.0, though a USB-3 device and slot should make matters easier. Test each version you're considering for a week. It is super important that you test! Sometimes problems crop up or you turn out to not like it!

Step 9: Once you find a distro you like and have tested it, decide whether you want to dual boot or completely wipe Windows. Some programs for work might require Windows, or you might have a few games that ONLY work on Windows, and that's perfectly fine! Just keep in mind that as of Windows 11, this option is not recommended: if you want to dual boot, you'll want to keep Windows 10 and NOT update. There are great tutorials on how to make it happen; search engines should be able to point you to one.

Step 10: Fully install Linux and immediately update. Even the latest installers will not have the current security patches. Just let it update and install whatever programs you want to use.

Step 11: If you're a gamer, enable compatibility tools in Steam (Steam > Settings > Compatibility). Also, check the software store in your OS for open source re-implementations of your favorite older games!

You're now a Penguin!


Text

It seems to me that the trouble with digital is it's all still physical objects. Maybe there can be copies of things and they can travel very fast and be in multiple places and be compressed down and stored in unthinkably tinier places and accessed cleverly and take forms we could barely conceive of before we met computers.

But it's still an SSD card, a big server, a powerplant, physical equipment to read a bunch of information inscribed into a very high tech substrate (stuff!)

Old film negatives or plates aren't nearly as useful until you use a bunch of old processes, materials and skills to pull prints from them. Even digitisation requires both image capture tools (objects) and software (runs on objects) to replicate a process that was originally made with objects, and which is therefore modelled on data from having those objects.

Lots of people say 'oh get rid of manuals and physical books and photo albums and go digital' and sure. Tactility aside, it's a great option for space. Fold your two hundred book library into a flash drive the size of a postage stamp like an old man folding the broadsheet down to a postcard. Saves on a lot of dusting, and apartments are pretty small. (First person to use this as an excuse to pile on konmari is getting hit with a saucepan btw)

But putting it all in the digital space is treated like using a magic hammerspace where it's safe, retrievable and doesn't rely on the physical world at all. Just your passwords, which are now tied to your specific phone, your accounts, which run on servers which are just computers you don't own. Your evergreen file formats. Your hard drives which will not fail of course, and will always be backed up to other infallible hard drives and other people's computers, and Google searches which definitely find that blog (dead) which had a link (dead) to a file on a Google drive (deleted) that one time. Electricity which will always be on, and legacy software that will definitely still run on the new hardware because the old one's long gone, and WiFi which is a basic utility so will always be flowing, and so so much water in the big server farms run by the monopolies bigger than nations.

It just seems. Like a grown-up, acceptable equivalent to stuffing a bunch of stuff under your bed so you don't have to think about it. Because even if you ignore the monopolies and the exploitation for water and minerals, and the planned obsolescence. Isn't it all still depending on stuff? Physical, very dense stuff. That needs to be kept dry and cool and powered on and connected and very Not Near big magnets? But it's still objects to look after.

It feels like when we're selling each other the idea of keeping everything 'digital' there's an undercurrent of 'because it loopholes having to think about the inherent ephemerality, storage requirements and maintenance needs of physical objects'. But just as computing can transcend the forms of the physical, so is it dependent on it. I'm worried what's happening given that as a broader culture we pretend that it removes thinking about objects, rather than adding a whole new bunch of objects to look after.

And at some point that file in that file format on that cloud server or storage device will be as inaccessible to you as an undeveloped glass plate negative is now. That day is coming sooner than we think. And pretending it's not still made of physical stuff is contributing to the narratives that let it get pushed here faster.

(Also my computer harddrive died last week. Pah.)

#talking freds#sorry i said flash drive im old. what do they even call them now. thumb drives?#sorry i said 200 book library I'm dyscalculic not saying ANYTHING in particular about your big or small library i dont even know which it i#better people than me have said this already#but im insomniac and i saw an article about glass negatives and got thinking#long post#my entire teenage music library is on a semi corrupted ipod classic and half of it disappeared from the itunes Library at some point#i just. dont listen to lot of music now. i do listen. but not close. its not the same.


Text

VPS vs. Dedicated vs. cloud hosting: How to choose?

A company's website is likely to be one of the most significant components of the business, and selecting a hosting platform is one of the first steps in developing a new one. Whether you are starting an online store, building a blog, or making a landing page for a service, you need to decide which kind of server best suits your organization.

The first part of this tutorial explains what web hosting is; it then examines the main types of hosting servers available today, comparing VPS, dedicated, and cloud hosting, with shared hosting as a point of reference. With terms such as "dedicated," "VPS," and "cloud" swirling around, how can you evaluate which approach best fits your specific requirements?

Each type of hosting has advantages and disadvantages and suits particular use cases and budgets. We will finish with some advice on how to select the web hosting option that best fits your requirements. Let's jump right in.

Web hosting—how does it work?

Web hosting providers offer companies the storage and network access needed to put their websites online. A website is made up of HTML, CSS, images, videos, and other files, all of which must be stored on a server with a strong Internet connection. You also need a domain name to make your website public, and many people buy one together with their hosting. When someone enters your domain in their browser, the Domain Name System (DNS) translates it into an IP address that leads the browser to the server holding your website's files.

Web hosting providers supply the infrastructure and services needed to make your website accessible to Internet users. They run servers that store your website's files and deliver them when someone types in your domain name or clicks a link. When a visitor requests a page, your web server sends back the requested file along with any related files (stylesheets, images, scripts), and the visitor's browser then renders your website.
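To make the request/response flow above concrete, here's a minimal Python sketch of a web server: it serves the files in one folder over HTTP on your own machine, and a client then fetches a page from it, just as a browser would after DNS has resolved your domain. It uses only the standard library and is an illustration, not a production host. The `my_site` folder is hypothetical.

```python
import functools
import http.server
import threading
import urllib.request


def serve_folder(folder: str):
    """Start a tiny static file server for `folder` on a free local port.

    Returns (server, base_url). Call server.shutdown() when finished.
    """
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=folder)
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    return server, f"http://{host}:{port}"


if __name__ == "__main__":
    # Hypothetical folder "my_site" containing an index.html file.
    server, url = serve_folder("my_site")
    try:
        # The "visitor": request a page and print what the server sent back.
        with urllib.request.urlopen(url + "/index.html", timeout=5) as resp:
            print(resp.status, resp.read()[:80])
    finally:
        server.shutdown()
        server.server_close()
```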

VPS hosting: What is it?

VPS hosting, which stands for virtual private server hosting, sits between shared hosting and dedicated hosting. Several virtual private server instances are hosted on one physical server, sometimes called the "parent." Each instance may use only its allotted portion of the parent server's hardware resources, and each functions as a separate server environment that is rented to a customer. In other words, you are renting an isolated slice of a physical server.

Pricing for these plans varies, and compared with shared hosting they offer better performance, protection, and room to grow. Virtualization technology partitions a single physical server into multiple virtual instances, each functioning as its own independent, private server environment. With VPS hosting, a company can get much of the control of a dedicated server at a significantly lower cost.

There are clear differences between virtual private servers (VPS) and dedicated servers, yet neither is inherently superior to the other. Which hosting environment is most suitable depends on the requirements of your company and team.

Dedicated hosting: What is it?

What exactly is dedicated hosting? With a dedicated server, as the name suggests, an entire physical computer belongs to you, and you control every piece of hardware in it. These machines usually share a data center's network with neighboring dedicated servers, but not any hardware. Although these plans are usually more expensive than shared or VPS hosting, they can offer better speed, security, and flexibility.

This is especially true if you need custom settings or specific hardware. A business that uses dedicated hosting has its own physical server and uses that server's hardware and software exclusively, without sharing them with any other business. From the user's perspective, dedicated servers and VPS work in much the same way; the main difference is that a VPS is a virtual server instance carved out of shared hardware, while a dedicated server is an entire physical machine. That physical separation is what gives business owners more control, performance, and security.

Cloud Hosting: What is it?

The term "cloud hosting" refers to a web hosting solution, offered as either shared or dedicated services, that runs websites and applications on a pool of virtual servers instead of a single VPS or dedicated server. Resources are distributed among many virtual servers, which are typically spread across several data centers around the world. As with shared hosting, multiple customers draw on pooled physical resources. Both environments can be used safely, although sharing hardware offers less isolation than a dedicated server.

In effect, cloud hosting runs your site on small slices of many servers at once. This pays off when a server becomes unavailable. With dedicated hosting, an outage is much more damaging, because it takes the entire system offline; with cloud servers, your system can switch to another server if one of them fails.
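The switch-over idea can be sketched in a few lines. Real cloud platforms do this at the infrastructure level with load balancers and health checks; this minimal Python sketch only shows the underlying logic from a client's side: try each server in turn and use the first one that answers. The server names and the `fetch` function are hypothetical stand-ins.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def with_failover(servers: Sequence[str], fetch: Callable[[str], T]) -> T:
    """Try each server in order and return the first successful result.

    `fetch` is any function that talks to one server and raises an
    exception when that server is unavailable.
    """
    errors = []
    for server in servers:
        try:
            return fetch(server)
        except Exception as exc:  # a real system would catch narrower errors
            errors.append(f"{server}: {exc}")
    raise RuntimeError("all servers failed: " + "; ".join(errors))


if __name__ == "__main__":
    def fake_fetch(server: str) -> str:
        # Pretend the first (hypothetical) server is down.
        if server == "eu-1":
            raise ConnectionError("connection refused")
        return f"page served by {server}"

    print(with_failover(["eu-1", "us-1"], fake_fetch))
```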

Cloud servers are virtual: the provider runs them on its own physical hardware, which you never manage directly. Cloud web hosting can become expensive. Because it is billed by usage, costs rise as you consume more storage, random access memory (RAM), and central processing unit (CPU) time, and higher-priced plans typically include larger allocations of each.

The ability to scale resources up or down as user traffic changes benefits startups and technology firms launching new web apps. Cloud hosting provides rapid scalability, which helps applications that may face unexpected growth or sudden traffic spikes. It also offers a dependable environment for backups: data can be restored quickly from a cloud backup after a disaster, reducing the time the system is offline.
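Usage-based billing is easiest to see with numbers. This minimal Python sketch compares a pay-per-use cloud bill with a flat monthly plan. All rates are hypothetical, chosen for round arithmetic, and are not any provider's real prices: in a quiet month the cloud bill comes in under the flat plan, while a traffic spike pushes it above.

```python
HOURS_PER_MONTH = 730  # 8,760 hours in a year divided by 12

# Hypothetical rates for illustration only, not any provider's real prices.
RATE_PER_INSTANCE_HOUR = 0.02  # dollars per server-hour
RATE_PER_GB_MONTH = 0.10       # dollars per GB stored per month
FLAT_VPS_PER_MONTH = 20.00     # a fixed-price plan to compare against


def monthly_cloud_cost(instance_hours: float, storage_gb: float) -> float:
    """Pay-per-use bill: server-hours plus storage, rounded to cents."""
    return round(instance_hours * RATE_PER_INSTANCE_HOUR
                 + storage_gb * RATE_PER_GB_MONTH, 2)


# A quiet month: one server running all month, 50 GB stored.
quiet = monthly_cloud_cost(HOURS_PER_MONTH, 50)
# A month with a traffic spike: three extra servers for 100 hours each.
busy = monthly_cloud_cost(HOURS_PER_MONTH + 3 * 100, 50)

if __name__ == "__main__":
    print(f"quiet month: ${quiet:.2f} vs flat ${FLAT_VPS_PER_MONTH:.2f}")
    print(f"busy month:  ${busy:.2f} vs flat ${FLAT_VPS_PER_MONTH:.2f}")
```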

How to choose the best web hosting?

When deciding between a dedicated, virtual private server (VPS), and cloud hosting, it is vital to understand your specific requirements and evaluate them in relation to your financial constraints. Making a list of the things that are non-negotiable and items on your wish list is a simple approach to getting started. From there, you should do some calculations to determine how much money you can afford on a monthly or annual basis.
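The list-then-calculate approach above can be written down as a small filter. In this minimal Python sketch, a plan must include every non-negotiable feature and fit the monthly budget; the survivors are ranked by how many wish-list items they cover, then by price. The plans, features, and prices are hypothetical. For an annual budget, divide it by 12 first.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    name: str
    kind: str            # "shared", "vps", "dedicated" or "cloud"
    monthly_usd: float
    features: set = field(default_factory=set)


def shortlist(plans, must_have, wish_list, monthly_budget):
    """Keep plans with every must-have feature that fit the budget.

    Ranked by wish-list matches (most first), then by price (cheapest first).
    """
    fits = [p for p in plans
            if must_have <= p.features and p.monthly_usd <= monthly_budget]
    return sorted(fits, key=lambda p: (-len(wish_list & p.features), p.monthly_usd))


if __name__ == "__main__":
    # Hypothetical plans and prices.
    plans = [
        Plan("Starter", "shared", 5, {"ssl"}),
        Plan("Small VPS", "vps", 20, {"ssl", "root", "backups"}),
        Plan("Metal", "dedicated", 120, {"ssl", "root", "backups"}),
        Plan("Elastic", "cloud", 25, {"ssl", "root", "autoscale"}),
    ]
    for p in shortlist(plans, {"ssl", "root"}, {"backups"}, monthly_budget=50):
        print(p.name, p.kind, p.monthly_usd)
```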

Finally, look for a solution that provides what you need at a price you can accept. A dedicated web server, for instance, may be worthwhile if you can afford it and need extra security and reliability. If you are just starting out and your website does not collect sensitive information, shared hosting is a good choice to consider. Whatever the type, a host that provides reliable support, thorough documentation, and a knowledge base that answers most of your questions is worth a great deal.

Conclusion

Dedicated, shared, virtual private server (VPS) and cloud hosting are all excellent choices for a variety of use cases. When it comes to aspiring business owners, bloggers, or developers, the decision frequently comes down to striking a balance between the limits of their budget and the requirements of performance and scalability. Because of its low cost, shared hosting can be the best option for individuals who are just beginning their journey into the realm of digital technology.

Nevertheless, when your online presence expands, you might find that the sturdiness of dedicated servers or the adaptability of virtual private servers (VPS) are more enticing to you. Cloud hosting, on the other hand, is distinguished by its scalability and agility, making it suitable for meeting the requirements of enterprises that are expanding rapidly or applications that have variable traffic.

When it comes down to it, your hosting option needs to be influenced by your particular objectives, your level of technical knowledge, and the growth trajectory that you anticipate. If you take the time to evaluate your specific needs, you will not only ensure that your website functions without any problems, but you will also position yourself for sustained success.

Dollar2host (Dollar2host.com): We provide expert web hosting services for your needs.


Text

Allow me to translate some bits from an interview with a data journalist upon the release of DeepSeek:

What did you talk about? I've read that DeepSeek doesn't like it much when you ask it sensitive questions about Chinese history.

Before we get into the censorship issues, let me point out one thing I think is very important. People tend to evaluate large language models by treating them as some sort of knowledge base. They ask it when Jan Hus was burned, or when the Battle of White Mountain was, and evaluate it to see if they get the correct school answer. But large language models are not knowledge bases. That is, evaluating them by factual queries doesn't quite make sense, and I would strongly discourage people from using large language models as a source of factual information.

And then, over and over again when I ask people about a source for whatever misguided information they insist on, they provide me with a chatGPT screenshot. Now can I blame them if the AI is forced down their throat?

What's the use of...

Exactly, we're still missing really compelling use cases. It's not that it can't be used for anything, that's not true, these things have their uses, but we're missing some compelling use cases that we can say, yes, this justifies all the extreme costs and the extreme concentration of the whole tech sector.

We use that in medicine, we use that here in the legal field, we just don't have that.

There are these ideas out there, it's going to help here in the legal area, it's going to do those things here in medicine, but the longer we have the technology here and the longer people try to deploy it here in those areas, the more often we see that there are some problems, that it's just not seamless deployment and that maybe in some of those cases it doesn't really justify the cost that deploying those tools here implies.

This is basically the most annoying thing. Yes, maybe it can be useful. But so far I myself haven’t seen a use that would justify the resources burned on this. Do we really need to burn icebergs to “search with AI”? Was that picture of “a horse with Elon Musk’s head”, which took you twenty prompts to generate, worth it when you could have pasted his head onto a horse in a bad five-minute Photoshop job and it would have been just as funny? Did you really need to ask ChatGPT for a factually wrong recap of Great Expectations when SparkNotes exists and is correct? There’s really no compelling use case for this. I just watched a friend spend twenty hours trying to get ChatGPT to write a Python script that never worked. In the time she spent rephrasing the task, she could have researched it herself, asked on Stack Overflow why it wasn’t working, and actually… learned Python. But the tech companies invested heavily in this AI bullshit and keep forcing it down our throats, hoping that something sticks.

So how do you explain the fact that big American technology companies want to invest tens of billions of dollars in the next few years in the development of artificial intelligence?

We have to say that these big Silicon Valley technology companies have brought some major innovations in past decades: social networks, for example, or cloud computing and storage. Cloud computing and storage really pushed the envelope; that innovation moved IT significantly forward. There is some debate about the other innovations, how enduring and how valuable they are. And the whole sector is under a lot of pressure to bring more innovation because, as I said, a large share of the stock market is concentrated in these companies. In fact, we can start to ask ourselves today, and investors can start to ask themselves, whether that concentration is really justified, specifically by this type of technology. So it's logical that these companies are rushing after every promising-looking technology. But again, what we see is a really big concentration of capital, of human brains, of development and labour in this one place, namely generative artificial intelligence. And even with all that, even over these few years, we don't really see this technology bringing absolutely fundamental social shifts. That's why I think we should slowly start asking whether, as a society, we should be looking at other technologies that we might need more.

Meaning which ones?

Energy production and storage, something sustainable, or transporting energy. These are issues we are dealing with as a society, and they may have existential implications, namely in the form of the climate crisis. And we're actually putting those technologies on the back burner a little and replacing them with generative models in particular, for which we're still looking for the really fundamental use they should bring.

This is basically it: the stock market, and investment flowing into the wrong, less needed places…

The full interview in the Czech original is linked below. No AI was used in my translation of the bits I wanted to comment on.

"edit images with AI-- search with AI-- control your life with AI--"

60K notes

Text

Explainer: Everything You Need to Know About DePIN — The Future of Decentralized Physical Infrastructure

#DePIN #Decentralized #RWA

I still remember the first time I heard the word “DePIN.” My initial reaction was: “Yet another fancy Web3 acronym?” The first question that popped into my head was — how is this different from DeFi?

Only later did I realize that DePIN — short for Decentralized Physical Infrastructure Networks — might be far more important than we thought. Unlike some concepts that feel abstract or intangible, DePIN connects directly with the physical world we live in: wireless networks, cars, map data, even the idle hard drive in your home.

If DeFi changed the rules of the financial game, then DePIN is here to tell us: the real-world infrastructure can also be rebuilt in a Web3-native way. Imagine this — you’re not just a user of a network, but also a builder. You can contribute physical resources and actually earn from doing so.

This article aims to explain DePIN in the simplest terms: what it is, how it evolved, how hot it is right now, and where it might go. Don’t worry — by the end of this read, you’ll no longer feel like DePIN is just some buzzword only VCs understand.

What Is DePIN and Why Did It Suddenly Go Viral?

DePIN stands for Decentralized Physical Infrastructure Networks. As the name suggests, it refers to reimagining traditional infrastructure — historically built and controlled by centralized corporations or governments — into open networks where anyone can contribute, and everyone can share the rewards, powered by blockchain and token incentives.

Here are a few examples to help make sense of it:

Helium: The world’s first wireless DePIN project. Ordinary users can set up Helium hotspot devices to provide LoRaWAN or 5G network coverage for others — and earn tokens as a reward.

Filecoin + DePIN storage projects: Allow people to share unused hard drive space to form a decentralized global cloud storage network, pitched as cheaper and more secure than AWS.

WeatherXM: Gathers weather data via decentralized weather stations, rewarding contributors with tokens.

DIMO: Uses connected car devices to collect vehicle data — letting car owners monetize their own data.

What do these projects have in common?

They all turn idle physical resources into Web3-compatible assets, using token incentives to attract participants and build infrastructure from the bottom up.

Why is DePIN suddenly on fire? I see three main reasons:

Strong real-world demand: Deploying things like 5G or edge computing is expensive. Traditional companies move slowly, but decentralized methods allow everyone to chip in.

A new narrative for Web3: DeFi and NFTs have already run their storytelling course. DePIN combines crypto and the physical world — fresh and exciting.

Token-driven incentives: Users aren’t just consumers — they’re builders and earners. That’s much more appealing to everyday people.

DePIN’s Development: From Fringe Experiment to Hot Sector

If we draw DePIN’s evolution on a timeline, we can divide it into three key stages:

1. The Seed Stage (2015–2018)

This was the concept exploration phase. Around 2015, the crypto world was still focused on Bitcoin and Ethereum. DePIN didn’t even have a name yet — but some projects were already trying out the idea.

A prime example is Filecoin, which proposed using blockchain to incentivize distributed storage nodes, turning idle hard drives into a global decentralized cloud. While Filecoin didn’t launch its mainnet until 2020, it laid the groundwork for the token-incentivized physical network model.

Another early pioneer was Helium (founded in 2013). Initially an IoT startup, Helium started integrating blockchain and tokens in 2018 to boost its LoRaWAN and 5G network efforts.

2. The Growth Stage (2019–2022)

This was when the DePIN ecosystem began taking shape.

Helium’s network exploded in popularity, with thousands of users voluntarily buying hotspot miners to extend wireless coverage in cities.

Filecoin launched its mainnet in 2020, becoming one of the largest decentralized storage networks in crypto history.

Other projects like Pollen Mobile, DIMO, and HiveMapper emerged, turning car data sharing, map data, and edge computing into real use cases.

You could call this the “from 0 to 1” phase. The term DePIN started gaining traction and was eventually included in yearly reports by research firms like Messari.

3. The Boom Stage (2023–2025)

By 2023, a shift began: VCs stopped focusing only on DeFi, and started calling DePIN the next 10x opportunity.

Messari’s 2023 Crypto Theses listed DePIN as one of Web3’s biggest potential directions.

Helium modularized its 5G offering, partnering with traditional telcos like T-Mobile.

Render Network gained prominence, offering decentralized GPU networks for AI and Web3 compute.

WeatherXM and Geodnet began building decentralized weather and geo-location services.

At this point, DePIN is no longer “a geeky toy” — it’s a full-blown industry trend. Some VCs are calling it the next trillion-dollar application layer opportunity.

Why DePIN Matters: Strengths and Potential

If you ask me why DePIN could be the next big thing in Web3, I’d summarize it in three core value propositions:

1. Decentralizing Infrastructure Development

Traditional infrastructure — cell towers, cloud servers, map data collection — requires massive upfront capital. That’s always been a centralized giant’s game. But DePIN flips that: by using tokens as incentives, anyone can contribute. Even sharing a bit of bandwidth or spare disk space can earn you rewards.

2. Solving Resource Waste

Globally, we have an enormous amount of idle computing power, bandwidth, storage, and vehicle data. DePIN organizes this untapped supply into a sustainable marketplace via smart contracts.

3. Merging Web3 with the Real Economy

DePIN isn’t just a financial product or a digital collectible — it’s tied to real-world infrastructure. This means a broader market and greater potential to scale beyond crypto-native audiences.
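To make the token-incentive idea from point 1 a little more concrete, here is a minimal sketch, assuming a hypothetical network that mints a fixed number of tokens per epoch and splits them pro rata by each node's verified contribution (for example, GB-hours of storage served). The names `Contribution`, `verified_units`, `epoch_emission` and `split_epoch_rewards` are made up for illustration; real projects like Helium or Filecoin use their own, far more involved reward rules and proofs, and nothing here reflects their actual formulas.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Contribution:
    """One node's verified contribution for a single epoch (hypothetical model)."""
    node_id: str
    verified_units: float  # e.g. GB-hours stored or hours of coverage, assumed already verified


def split_epoch_rewards(contributions: list[Contribution], epoch_emission: float) -> dict[str, float]:
    """Split a fixed per-epoch token emission pro rata to verified contribution.

    Nodes with zero (or negative, treated as zero) verified units get nothing,
    so merely registering a device without providing service earns no tokens.
    """
    total = sum(max(c.verified_units, 0.0) for c in contributions)
    if total == 0:
        # Nobody provided verifiable service this epoch: no one is paid.
        return {c.node_id: 0.0 for c in contributions}
    rewards: dict[str, float] = {}
    for c in contributions:
        share = max(c.verified_units, 0.0) / total
        # Accumulate in case the same node reports more than once in an epoch.
        rewards[c.node_id] = rewards.get(c.node_id, 0.0) + share * epoch_emission
    return rewards


if __name__ == "__main__":
    epoch = [
        Contribution("home-nas", 300.0),
        Contribution("office-server", 700.0),
        Contribution("idle-hotspot", 0.0),
    ]
    print(split_epoch_rewards(epoch, epoch_emission=1000.0))
```

Paying strictly for verified service, rather than per registered device, is one simple way to avoid the "mining for arbitrage" problem mentioned among the risks; the hard part in practice is the verification itself, which this sketch assumes away.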

But It’s Not All Sunshine: The Risks of DePIN

Let’s be real — DePIN is not a silver bullet. It comes with real challenges:

High hardware costs: Unlike DeFi, which only needs code and capital, DePIN involves physical devices — which slows growth and adoption.

Hard-to-achieve network effects: Many DePIN projects need to “fake it till they make it” early on — because without participants, no one wants to join.

Token economy complexity: Poorly designed incentives can lead to mining for arbitrage, not actual network building.

Regulatory grey areas: Physical infrastructure and data collection could cross legal or privacy boundaries.

Final Thoughts: DePIN Is Web3’s Bridge to the Real World

People often say Web3 is too abstract — mostly speculation, with no real-world use cases. But DePIN shows a different possibility: using blockchain incentives to decentralize infrastructure building, letting anyone join the network and share in the rewards.

Over the next decade, DePIN might follow the same arc DeFi once did, starting as a niche concept and becoming widely understood. If you’re looking for the “next breakout sector,” DePIN is worth your time and research.

0 notes

Text

Great Online Tools: Your Gateway to Smart Digital Workflows

The internet has completely transformed how we work, learn, and create. From startups and freelancers to educators and large enterprises, everyone now relies on digital solutions to streamline operations and boost productivity. In this landscape, the tools you use can define your efficiency, creativity, and competitiveness. That’s where great online tools come in—they make complex tasks easier, save time, and reduce the need for manual effort.

Whether you’re managing projects, building websites, designing graphics, or analyzing data, there’s an online tool designed to do it faster and better. These tools aren’t just helpful—they’ve become essential.

What Makes Online Tools Truly Great?

While thousands of web-based platforms exist, only a few earn the label of great online tools. What sets them apart is their ability to provide real value while being easy to use, highly accessible, and scalable. The best tools seamlessly integrate into your existing workflow, minimize learning curves, and offer a consistent user experience across devices.

Online tools also empower users with automation, real-time collaboration, and advanced features, without requiring high-end equipment or technical expertise. Whether you're creating a professional logo, managing a distributed team, or optimizing SEO strategies, these tools help users achieve big results with minimal friction.

A Cloud-Based World with Fewer Limits

Moreover, these tools reduce the dependency on physical infrastructure. For instance, cloud storage platforms like Google Drive and Dropbox eliminate the need for external hard drives. Similarly, design platforms like Canva and Figma allow non-designers and professionals alike to create impactful visuals without needing high-end computers or expensive software. These great online tools make it possible to work from anywhere, using just a browser, while still maintaining professional standards and performance.

Categories of Tools That Power the Web

Let’s break down some of the most valuable categories of online tools:

Communication and Meetings: Platforms like Zoom, Slack, and Google Meet facilitate real-time communication, virtual meetings, and collaboration across continents.

Project Management: Trello, Notion, and Asana help individuals and teams track progress, set priorities, and organize tasks visually.

Design and Creativity: Canva, Figma, and Adobe Express empower users to create high-quality visual content, even without a design background.