#collecting data to determine normal baseline levels of operation while also detecting even the most minuscule fluctuations in that performa


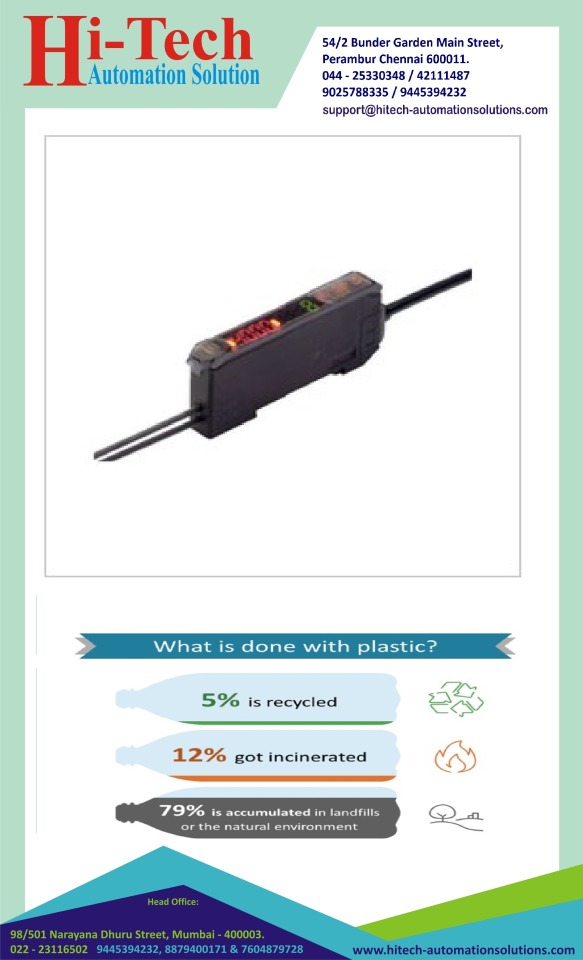

Sensors used in the manufacturing industry monitor the performance of various processes and aspects of machine operation, collecting data to determine normal baseline levels of operation while also detecting even the most minuscule fluctuations in that performance.

Sensors, detectors, and transducers are electronic or electrical devices. They use specially sensitive materials to sense, measure, and detect changes in position, temperature, displacement, electrical current, and many other parameters of industrial equipment.

Different types include hygrometers and moisture meters (for measuring moisture), gyroscopes (for measuring rotation), current or voltage sensors, pressure sensors, position sensors, level sensors, and flow sensors (for fluid management).

The role of a sensor in a control and automation system is to detect and measure some physical effect and to provide this information to the control system.

The main performance criteria for industrial sensors are sensitivity, resolution, compactness, long-term stability, thermal drift, and power efficiency.
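
The idea of collecting a baseline and then spotting small fluctuations can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the readings are made-up temperature values, the function names are hypothetical, and the "more than k standard deviations from the mean" rule is one common choice, not a method described in the post.

```python
from statistics import mean, stdev

def build_baseline(readings):
    """Return (mean, standard deviation) of readings taken during normal operation."""
    return mean(readings), stdev(readings)

def find_fluctuations(readings, baseline, k=3.0):
    """Return indices of readings more than k standard deviations from the baseline mean."""
    center, spread = baseline
    return [i for i, value in enumerate(readings) if abs(value - center) > k * spread]

# Hypothetical temperature readings (degrees C) collected during normal operation.
normal = [70.1, 69.8, 70.3, 70.0, 69.9, 70.2, 70.0, 69.7]
baseline = build_baseline(normal)

# New readings; the fourth value drifts away from the baseline.
new = [70.0, 70.2, 69.9, 71.5, 70.1]
print(find_fluctuations(new, baseline))
```

A smaller `k` makes the check more sensitive to minor fluctuations, at the cost of more false alarms.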


Covid-19 shutdown led to increased solar power output

As the Covid-19 shutdowns and stay-at-home orders brought much of the world’s travel and commerce to a standstill, people around the world started noticing clearer skies as a result of lower levels of air pollution. Now, researchers have been able to demonstrate that those clearer skies had a measurable impact on the output from solar photovoltaic panels, leading to a more than 8 percent increase in the power output from installations in Delhi.

While such an improved output was not unexpected, the researchers say this is the first study to demonstrate and quantify the impact of the reduced air pollution on solar output. The effect should apply to solar installations worldwide, but would normally be very difficult to measure against a background of natural variations in solar panel output caused by everything from clouds to dust on the panels. The extraordinary conditions triggered by the pandemic, with its sudden cessation of normal activities, combined with high-quality air-pollution data from one of the world’s smoggiest cities, afforded the opportunity to harness data from an unprecedented, unplanned natural experiment.

The findings are reported today in the journal Joule, in a paper by MIT professor of mechanical engineering Tonio Buonassisi, research scientist Ian Marius Peters, and three others in Singapore and Germany.

The study was an extension of previous research the team has been conducting in Delhi for several years. The impetus for the work came after an unusual weather pattern in 2013 swept a concentrated plume of smoke from forest fires in Indonesia across a vast swath of Indonesia, Malaysia, and Singapore, where Peters, who had just arrived in the region, found “it was so bad that you couldn’t see the buildings on the other side of the street.”

Since he was already doing research on solar photovoltaics, Peters decided to investigate what effects the air pollution was having on solar panel output. The team had good long-term data on both solar panel output and solar insolation, gathered at the same time by monitoring stations set up adjacent to the solar installations. They saw that during the 18-day-long haze event, the performance of some types of solar panels decreased, while others stayed the same or increased slightly. That distinction proved useful in teasing apart the effects of pollution from other variables that could be at play, such as weather conditions.

Peters later learned that a high-quality, years-long record of actual measurements of fine particulate air pollution (particles less than 2.5 micrometers in size) had been collected every hour, year after year, at the U.S. Embassy in Delhi. That provided the necessary baseline for determining the actual effects of pollution on solar panel output; the researchers compared the air pollution data from the embassy with meteorological data on cloudiness and the solar irradiation data from the sensors.

They identified a roughly 10 percent overall reduction in output from the solar installations in Delhi because of pollution – enough to make a significant dent in the facilities’ financial projections.

To see how the Covid-19 shutdowns had affected the situation, they were able to use the mathematical tools they had developed, along with the embassy’s ongoing data collection, to see the impact of reductions in travel and factory operations. They compared the data from before and after India went into mandatory lockdown on March 24, and also compared this with data from the previous three years.

Pollution levels were down by about 50 percent after the shutdown, they found. As a result, the total output from the solar panels was increased by 8.3 percent in late March, and by 5.9 percent in April, they calculated.

“These deviations are much larger than the typical variations we have” within a year or from year to year, Peters says — three to four times greater. “So we can’t explain this with just fluctuations.” The amount of difference, he says, is roughly the difference between the expected performance of a solar panel in Houston versus one in Toronto.

An 8 percent increase in output might not sound like much, Buonassisi says, but “the margins of profit are very small for these businesses.” If a solar company was expecting to get a 2 percent profit margin out of their expected 100 percent panel output, and suddenly they are getting 108 percent output, that means their margin has increased fivefold, from 2 percent to 10 percent, he points out.
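
The arithmetic behind that fivefold figure can be checked with a short sketch. It assumes, as the example implies, that costs stay fixed, that revenue scales linearly with output, and that the margin is expressed as a percentage of the originally expected revenue; the function name is hypothetical.

```python
def margin_after_output_change(baseline_margin_pct, output_change_pct):
    """Profit as a percentage of the originally expected revenue.

    Assumes fixed costs and revenue proportional to panel output.
    """
    expected_revenue = 100.0
    costs = expected_revenue - baseline_margin_pct      # 98 when the margin is 2
    new_revenue = expected_revenue + output_change_pct  # 108 when output rises 8 percent
    return new_revenue - costs

new_margin = margin_after_output_change(2.0, 8.0)
print(new_margin, new_margin / 2.0)
```

Measured against the new, higher revenue instead, the margin would be a little lower (10 out of 108), so the fivefold figure depends on keeping the original 100 percent as the reference.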

The findings provide real data on what can happen in the future as emissions are reduced globally, he says. “This is the first real quantitative evaluation where you almost have a switch that you can turn on and off for air pollution, and you can see the effect,” he says. “You have an opportunity to baseline these models with and without air pollution.”

By doing so, he says, “it gives a glimpse into a world with significantly less air pollution.” It also demonstrates that the very act of increasing the usage of solar electricity, and thus displacing fossil-fuel generation that produces air pollution, makes those panels more efficient all the time.

Putting solar panels on one’s house, he says, “is helping not only yourself, not only putting money in your pocket, but it’s also helping everybody else out there who already has solar panels installed, as well as everyone else who will install them over the next 20 years.” In a way, a rising tide of solar panels raises all solar panels.

Though the focus was on Delhi, because the effects there are so strong and easy to detect, this effect “is true anywhere where you have some kind of air pollution. If you reduce it, it will have beneficial consequences for solar panels,” Peters says.

Even so, not every claim of such effects is necessarily real, he says, and the details do matter. For example, clearer skies were also noted across much of Europe as a result of the shutdowns, and some news reports described exceptional output levels from solar farms in Germany and in the U.K. But the researchers say that just turned out to be a coincidence.

“The air pollution levels in Germany and Great Britain are generally so low that most PV installations are not significantly affected by them,” Peters says. After checking the data, what contributed most to those high levels of solar output this spring, he says, turned out to be just “extremely nice weather,” which produced record numbers of sunlight hours.

The research team included C. Brabec and J. Hauch at the Helmholtz-Institute Erlangen-Nuremberg for Renewable Energies, in Germany, where Peters also now works, and A. Nobre at Cleantech Solar in Singapore. The work was supported by the Bavarian State Government.

Covid-19 shutdown led to increased solar power output syndicated from https://osmowaterfilters.blogspot.com/


A quick intro into SQL Server wait statistics

Starting with SQL Server 2005, Microsoft introduced the ability to track executed queries by measuring and logging the time a query has to wait for various resources, such as other queries, CPU, memory, and I/O. This performance metric is referred to as a SQL Server wait statistic. Wait statistics are the most precise and reliable way of tracking and identifying SQL Server performance problems, because they measure each step a query takes during execution.

Compared to the standard set of CPU, memory, I/O, and other metrics, SQL Server wait statistics offer, in most cases, the better precision required for SQL Server performance troubleshooting and optimization.

Why baseline wait statistics?

For some wait types, a high value is not always bad and a low value is not always good. In fact, in many cases high values can indicate better SQL Server performance.

Typical examples of wait types that cannot be interpreted from their raw values alone are CXPACKET and PAGEIOLATCH_SH, among others.

CXPACKET, for example, is the wait type that indicates parallelism in SQL Server, and highly utilized parallelism means that SQL Server processes data faster. High values of the CXPACKET wait type can therefore indicate that SQL Server is well optimized.

PAGEIOLATCH_SH indicates physical I/O reads from the storage subsystem into the buffer pool. This is normal operation and should not be considered a problem as long as the storage subsystem can handle those operations without affecting the performance of SQL Server.

So how can we determine what wait time value for a specific wait type should be considered “bad”? Moreover, how can we determine thresholds that are a real indicator that a wait time value is a potential problem? The only reliable way of establishing thresholds that can be considered correct in most cases is to baseline the collected wait type values.
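
As a rough illustration of what baselining means in practice, the sketch below derives medium and high thresholds from a history of wait time values and classifies new values against them. The mean-plus-multiples-of-standard-deviation rule, the multipliers, and all names are assumptions made for this example; the article does not say how any particular tool calculates its baselines.

```python
from statistics import mean, stdev

def baseline_thresholds(history, medium_k=1.0, high_k=2.0):
    """Derive thresholds from historical wait times (ms) for one wait type.

    medium_k and high_k are hypothetical multipliers of the standard deviation.
    """
    center = mean(history)
    spread = stdev(history)
    return {
        "baseline": center,
        "medium": center + medium_k * spread,
        "high": center + high_k * spread,
    }

def classify(value, thresholds):
    """Label a new wait time value as 'normal', 'medium', or 'high'."""
    if value > thresholds["high"]:
        return "high"
    if value > thresholds["medium"]:
        return "medium"
    return "normal"

# Hypothetical PAGEIOLATCH_SH wait times (ms per collection interval).
history = [100, 110, 90, 105, 95]
thresholds = baseline_thresholds(history)
print([classify(v, thresholds) for v in (104, 110, 130)])
```

The point of the sketch is that "bad" is defined relative to what the same server normally does, not by a fixed number.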

To learn how to collect wait stats data, read Troubleshooting SQL Server performance issues using wait statistics – Part 1. For basic information about baselining, read How to detect SQL Server performance issues using baselines – Part 1 – Introduction and How to detect SQL Server performance issues using baselines – Part 2 – Collecting metrics and reporting.

It is clear from those articles that collecting wait statistics manually, and then adjusting and measuring the baseline by hand after every change introduced to the system, is very challenging.
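
Part of what makes manual collection tedious is that SQL Server exposes wait statistics (through the `sys.dm_os_wait_stats` view) as totals accumulated since the last restart or reset, so each collected snapshot has to be compared with the previous one. A minimal sketch of that step, with hypothetical snapshot values and a hypothetical function name, might look like this:

```python
def wait_deltas(previous, current):
    """Return wait time (ms) accumulated per wait type between two snapshots.

    Each snapshot maps wait_type -> cumulative wait_time_ms. A negative
    difference means the counters were reset in between (for example by a
    restart), so the current cumulative value is used as the delta.
    """
    deltas = {}
    for wait_type, total_ms in current.items():
        diff = total_ms - previous.get(wait_type, 0)
        deltas[wait_type] = diff if diff >= 0 else total_ms
    return deltas

# Hypothetical snapshots taken one collection interval apart.
snap_1 = {"PAGEIOLATCH_SH": 120_000, "CXPACKET": 450_000}
snap_2 = {"PAGEIOLATCH_SH": 123_500, "CXPACKET": 452_000}
print(wait_deltas(snap_1, snap_2))
```

The per-interval deltas, not the raw cumulative totals, are what a baseline would be calculated from.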

Baselining wait statistics with ApexSQL Monitor

ApexSQL Monitor is a third-party tool designed with wait statistics in mind. It can monitor and collect wait stats data for all wait types. It is also highly configurable: the user can choose which wait types to monitor and which to exclude, set predefined alert thresholds, and baseline the collected data so that the calculated baselines serve as thresholds for alerting.

For more details, read the How to configure and use SQL Server Wait statistic monitoring knowledgebase article. Before continuing with this article, it is also advisable to read How to customize the calculated SQL Server baseline threshold, which explains the methods ApexSQL Monitor uses for calculating and establishing baselines, and how the user can fine-tune an already calculated baseline to meet specific requirements.

To calculate a wait statistic baseline:

1. Select the SQL Server in the server explorer pane and click the Configuration button in the main menu bar

2. Select the Baselines tab in the Configuration page

3. Choose the time range for which the baseline should be calculated. A minimum of 7 days of collected data must be present in the repository database for baseline calculation

4. Check the Wait stats checkbox

5. Press Calculate, and ApexSQL Monitor will calculate baselines for the defined time range

When baselining wait statistics, it is important to distinguish three scenarios, each of which requires a different approach to baselining and a different interpretation of the calculated data:

Calculating the wait statistic baseline for a well-optimized SQL Server that doesn’t normally experience issues. This is the standard approach to creating a baseline when the server is working optimally. In this scenario, the calculated baseline is used to monitor wait types and to notify the user whenever their wait time values exceed normal values in the monitored period.

Calculating wait statistic baselines when SQL Server experiences performance problems. In this scenario, the baseline should not be used for alerting, because the calculated values cannot be treated as reference values. Here the baseline should serve only to measure troubleshooting progress and to show whether a troubleshooting action improved or degraded SQL Server performance.

Calculating baselines as a reference point for development. This third method is not used frequently, and then mainly by database and application developers, so it won’t be elaborated in detail in this article. The calculated baseline is used solely to show developers how an application upgrade affects SQL Server performance or how a SQL Server upgrade affects application performance, or to give them a control result across development stages or bug fixes. The baseline value in this case represents neither good nor bad performance, just a reference point for tracking development progress.

Calculating the baseline for a well-optimized SQL Server

The following is a typical situation that can be encountered when the wait statistic baseline is calculated for an optimized SQL Server. The arrows in the image mark the PAGEIOLATCH_SH wait type values that breach the high thresholds and will trigger high alerts. These breaches should be investigated, but because every breaching value is only slightly above the threshold, there is no need for a knee-jerk assumption that each breach is caused by a performance issue.

The high baseline threshold breaches are marked with red arrows while medium breaches are marked with yellow arrows.

Now let’s take a closer look at the period marked with the white square in the image below. Twelve consecutive PAGEIOLATCH_SH values breach either the high or the medium threshold, so it is evident that something unexpected occurred in that prolonged period and caused the larger PAGEIOLATCH_SH wait type values. While this doesn’t necessarily mean that SQL Server performance is compromised, it is good practice to investigate that period for potential or existing problems.

Here, the PAGEIOLATCH_SH wait type values were constantly (though not significantly) above the threshold for a prolonged period. The fact that the values are consistently above the threshold, even though they are not much higher than it, is a clear indicator that the affected period should be investigated.
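
Spotting such a prolonged period does not have to rely on eyeballing a chart. The sketch below finds runs of consecutive values above a threshold; the sample values, the threshold, and the minimum run length are hypothetical, and the function is an illustration, not a feature of any monitoring tool.

```python
def breach_runs(values, threshold, min_length=3):
    """Return (start_index, length) for each run of at least min_length
    consecutive values above the threshold."""
    runs = []
    start = None
    for i, value in enumerate(values):
        if value > threshold:
            if start is None:
                start = i  # a new run of breaches begins here
        elif start is not None:
            if i - start >= min_length:
                runs.append((start, i - start))
            start = None
    # Handle a run that continues to the end of the data.
    if start is not None and len(values) - start >= min_length:
        runs.append((start, len(values) - start))
    return runs

# Hypothetical wait times with one isolated breach and one sustained run.
samples = [90, 104, 95, 103, 105, 102, 106, 104, 92, 101]
print(breach_runs(samples, threshold=100, min_length=4))
```

Isolated breaches are ignored, while the sustained run is reported with its start position and length, which is the period worth investigating.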

The investigation could show that SQL Server performance was affected in that period, in which case the DBA should perform analysis and troubleshooting. Alternatively, it might show that SQL Server performance was not affected at all. In that case, the DBA should watch whether the behavior repeats. If it repeats without affecting SQL Server performance, editing and correcting the calculated baseline should be considered, so that the corrected wait statistic baseline treats the new PAGEIOLATCH_SH behavior for that period as normal.

Another scenario that could be encountered is presented in the image below. It is again a typical scenario, this time one where the wait type values are significantly above the threshold for a prolonged period. This is typically an indicator of performance problems that require serious investigation and troubleshooting. While it is highly unlikely that such behavior would not affect SQL Server performance, that option still has to be taken into account during the investigation. The values should always be correlated with the level of performance degradation in that period, and with whether end users are suffering as a consequence or the degradation is still within acceptable margins.
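
One simple way to tell "slightly above" from "significantly above" the threshold is to measure how far, on average, the breaching values exceed it. The sketch below is an assumption-laden illustration: the values are invented and the function name is hypothetical.

```python
def mean_excess_pct(values, threshold):
    """Average percentage by which breaching values exceed the threshold.

    Returns 0.0 when no value is above the threshold.
    """
    over = [(v - threshold) / threshold * 100 for v in values if v > threshold]
    return sum(over) / len(over) if over else 0.0

# Hypothetical periods: one slightly above the threshold, one far above it.
slight = [103, 105, 102, 106, 104]
severe = [250, 300, 280]
print(round(mean_excess_pct(slight, 100), 1), round(mean_excess_pct(severe, 100), 1))
```

A small average excess sustained over time suggests a shift in normal behavior worth reviewing, while a large one points to the kind of problem that calls for immediate troubleshooting.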

Calculating baselines when SQL Server has performance problems

As already explained, this method should be used only to track the progress of the troubleshooting.

This is the characteristic chart that appears after the baseline is calculated for wait statistics while SQL Server experiences performance problems. For this article, let’s assume that the PAGEIOLATCH_SH wait type is the cause of the performance problem. Even though the values are quite high, the baseline calculation treats them as normal, so all of the PAGEIOLATCH_SH values are displayed well within the normal baseline zone. Once the baseline is calculated from sub-optimal values, the application uses those values as normal, with the consequence that alerts will not be triggered.

For this reason, this method should be reserved exclusively for wait statistic troubleshooting and is not recommended for less experienced DBAs. With this baseline, though, the DBA can track the progress of the troubleshooting.

A wrong solution could produce even worse values of the PAGEIOLATCH_SH wait type in our example.

As can be seen in the next image, all PAGEIOLATCH_SH values have increased and breach the already high thresholds because of unsuccessful wait statistic troubleshooting. That means the implemented solution worsened the situation, so it should be reverted and another approach tried.

Of course, when the applied solution is effective in improving the existing issue, that is reflected in the wait statistic charts as well.

Here, applying appropriate changes at a specific moment decreases the PAGEIOLATCH_SH values, making them significantly lower than the “bad” baseline. That indicates a clear improvement. When further wait statistic troubleshooting and performance improvement are targeted, the appropriate approach is to edit the baseline to accommodate the newly achieved values, which allows more comfortable and precise tracking of further improvements.
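
Tracking troubleshooting progress against a "bad" baseline comes down to comparing the values after a change with the values the baseline was built from. A minimal sketch, using invented numbers and a hypothetical function name:

```python
from statistics import mean

def change_vs_baseline(baseline_values, after_values):
    """Percentage change of the mean wait time after a change, relative to
    the baseline mean. Negative means waits dropped (improvement); positive
    means they grew (the change made things worse)."""
    base = mean(baseline_values)
    return (mean(after_values) - base) / base * 100

# Hypothetical PAGEIOLATCH_SH wait times (ms per interval).
bad_baseline = [400, 420, 380, 400]     # collected while the problem existed
after_good_fix = [200, 210, 190, 200]   # after an effective change
after_wrong_fix = [500, 520, 480, 500]  # after a change that made things worse

print(change_vs_baseline(bad_baseline, after_good_fix))
print(change_vs_baseline(bad_baseline, after_wrong_fix))
```

Once an improvement is confirmed, the newly achieved values would become the reference for the next round of troubleshooting.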
