#computational-ethics-model

Text

Titulus: Bellum Linguae Symbolicae: On the Construction of Symbolic Payloads in Socio-Technological Architectures

Abstract In this paper, we construct a formal framework for the design and deployment of symbolic payloads—semiotic structures encoded within ordinary language, interface architecture, and social infrastructure—that function as ideological, emotional, and informational warheads. These payloads are not merely communicative, but performative; they are designed to reshape cognition, destabilize…

#affective-field-dynamics#AI-empathy-generation#AI-human-loop-harmony#algorithmic-consciousness#algorithmic-syntax-weaving#ancestral-software#archetypal-resonance-system#archetype-embedding-layer#architectural-emotion-weaving#artificial-dream-generation#artist-as-machine-interface#artist-code-link#attention-as-algorithm#audio-emotion-translation-layer#audio-temporal-keying#autonomous-symbiosis#biological-signal-mapping#biosynthetic-harmony#code-as-ritual#code-of-light-generation#code-transcendence-matrix#cognitive-ecology#cognitive-infiltration-system#cognitive-symbology-loop#computational-ethics-model#computational-soul#conscious-templating-engine#consciousness-simulation#creative-inference-engine#cross-genre-emotional-resonance

0 notes

Text

i think image gen can be used like any other artistic tool but I don't really think the big commercial proponents of "ai" are advertising it as a tool, they're advertising it as a solution. I also think it's intellectually dishonest to argue that image generation is exactly like "using photoshop/taking a photograph" because of some generalized "those were also criticized at their conception for being new and scary and disruptive" soundbite. they were not even really criticized for the same reasons. find a better argument.

#it's not serious when someone generates a meme image and ai can be an artistic medium that takes a lot of "effort” (a misaligned word that#i think we need to uncouple from “protestant work ethic” and “human worth” because anything you create#takes effort and that's neutral it has no value it's just unaviodable.#the issue is when we start deciding for ourself how much effort something took for someone else and judge them as less for it]#i also don't think “art” has anything to do with effectivity or the time it took to make. it's just communication man#the openai people don't want you to do something real with their model they want#ikea to use it for generating those paintings they hang in their showrooms.#oh and also. piling on. “the photoshop takes no effort the computer does all the work” was always bunk like anybody who's used any digital#image editing program knows that? because the people saying this literally imagined photoshop working like an image generator lmao.#and that has mostly died down because the accessability of computers that can run photoshop and its ilk has grown to the point#that people realize using photoshop is a pain.#while the photography criticism was strong a 100 years after the invention of photography. on philosophical grounds. brecht hated#photography and he was born 50 years after its conception.#everything that’s criticised isnt like everything else that’s criticised

15 notes


Text

The Evolution of AI Frameworks: The Latest Tools for AI Model Development in 2025

Artificial intelligence (AI) has become one of the fastest-growing fields in recent years. In 2025, AI technology is expected to advance even further, especially with the arrival of new tools and frameworks that let developers build more sophisticated and efficient AI models. An AI framework is a collection of software libraries and tools used…

#AI applications#AI automation#AI development tools#AI ethics#AI for business#AI framework#AI in 2025#AI in edge devices#AI technology trends#AI transparency#AutoML#deep learning#edge computing#future of AI#machine learning#machine learning automation#model optimization#PyTorch#quantum computing#TensorFlow

0 notes

Text

re: "outlawing AI"

i am reposting this because people couldn't behave themselves on the original one. this is a benevolent dictatorship and if you can't behave yourselves here i'll shut off reblogs again. thank you.

the thing i think a lot of people have trouble understanding is that "ai" as we know it isn't a circuitboard or a computer part or an invention - it's a discovery, like calculus or chemistry. the genie *can't* be re-corked because it'd be like trying to "cork" the concept of, say, trigonometry. you can't "un-invent" it.

even if you managed to somehow completely outlaw the performance of the kinds of linear algebra required for ML, and outlawed the data collection necessary, and sure, managed to get style copyrighted, you can't un-discover the underlying mathematical facts. people will just do it in mexico instead. it'd be like trying to outlaw guns by trying to get people to forget that you can ignite a mixture of powders in a small metal barrel to propel things very fast. or trying to outlaw fire by threatening to take away everyone's sticks.

the battleground is already here. technofascists and bad actors without your ethical constraints are drawing the lines and flooding the zone with propaganda & slop, and you’re wasting time insisting to your enemies that it’s unfair you’re being asked to fight with guns when you’d rather use sticks.

as a wise sock puppet once said; "this isn't about you. so either get with it, or get out of the fucking way"

-----

Attempts to prohibit AI "training" misunderstand what is being prohibited. To ban the development of AI models is, in effect, to ban the performance of linear algebra on large datasets. It is to outlaw a way of knowing. This is not regulation - it is epistemological reactionary-ism. reactionism? whatever

Even if prohibition were successful in one nation-state:

Corporations would relocate to jurisdictions with looser controls - China, UAE, Japan, Singapore, etc.

APIs would remain accessible, just more expensive and less accountable. What, are you gonna start blocking VPNs from connecting to any country with AI allowed? Good luck.

Research would continue outside the oversight of the very publics most concerned about ethical constraints.

This isn’t speculation. This is exactly what happened with stem cells in the early 2000s. When the U.S. government restricted federal funding, stem cell research didn’t vanish, it just moved and then kept happening until people stopped caring.

The fantasy that a domestic ban could meaningfully halt or reverse the development of a globally distributed method is a fantasy of epistemic sovereignty - the idea that knowledge can be territorially contained and that the moral preferences of one polity can shape the world through sheer force of will.

But the only way such containment could succeed would be through:

Total international consensus (YEAH RIGHT), and

Total enforcement across all borders, black markets, and academic institutions, at the barrel of a gun - otherwise, what is backing up your enforcement? Promises and friendly handshakes?

This is not internationalism. It is imperialist utopianism. And like most utopian projects built on coercion, it will fail - at the cost of handing control to precisely the actors most willing to exploit it.

Liberal moralism often derides socialist or communist futures as "unrealistic," as you can see in the absurdly, hyperbolically, pants-shittingly mad reaction to Alex Avila's video. Yet the belief that machine learning can be outlawed globally - a method of performing mathematics that is already published, archived, and disseminated across open academic networks the globe over - is far more implausible. literally how do you plan on doing that? enforcing it?

The choice is not between AI and no AI. The choice is between AI in the service of capital, extraction, and domination, or AI developed under conditions of public ownership, democratic control, and epistemic openness. You get to pick.

The genie and the bottle are not even on the same planet. The bottle's gone, Will.

590 notes


Text

"In the 1750s, an Italian farmer digging a well stumbled upon a lavish villa in the ruins of Herculaneum. Inside was a sprawling library with hundreds of scrolls, untouched since Mount Vesuvius’ eruption in 79 C.E. Some of them were still neatly tucked away on the shelves.

This staggering discovery was the only complete library from antiquity ever found. But when 18th-century scholars tried to unroll the charred papyrus, the scrolls crumbled to pieces. They became resigned to the fact that the text hidden inside wouldn’t be revealed during their lifetimes.

In recent years, however, researchers realized that they were living in the generation that would finally solve the puzzle. Using artificial intelligence, they’ve developed methods to peer inside the Herculaneum scrolls without damaging them, revealing short passages of ancient text.

This month, researchers announced a new breakthrough. While analyzing a scroll known as PHerc. 172, they determined its title: On Vices. Based on other works, they think the full title is On Vices and Their Opposite Virtues and in Whom They Are and About What.

“We are thrilled to share that the written title of this scroll has been recovered from deep inside its carbonized folds of papyrus,” the Vesuvius Challenge, which is leading efforts to decipher the scrolls, says in a statement. “This is the first time the title of a still-rolled Herculaneum scroll has ever been recovered noninvasively.”

On Vices was written by Philodemus, a Greek philosopher who lived in Herculaneum more than a century before Vesuvius’ eruption. Born around 110 B.C.E., Philodemus studied at a school in Athens founded several centuries earlier by the influential philosopher Epicurus, who believed in achieving happiness by pursuing certain specific forms of pleasure.

“This will be a great opportunity to learn more about Philodemus’ ethical views and to get a better view of the On Vices as a whole,” Michael McOsker, a papyrologist at University College London who is working with the Vesuvius Challenge, tells CNN’s Catherine Nicholls.

When it launched in 2023, the Vesuvius Challenge offered more than $1 million in prize money to citizen scientists around the world who could use A.I. to help decipher scans of the Herculaneum scrolls.

Spearheaded by Brent Seales, a computer scientist at the University of Kentucky, the team scanned several of the scrolls and uploaded the data for anyone to use. To earn the prize money, participants competed to be the first to reach a series of milestones.

Reading the papyrus involves solving several difficult problems. After the rolled-up scrolls are scanned, their many layers need to be separated out and flattened into two-dimensional segments. At that point, the carbon-based ink usually isn’t visible in the scans, so machine-learning models are necessary to identify the inked sections.

In late 2023, a computer science student revealed the first word on an unopened scroll: “porphyras,” an ancient Greek term for “purple.” Months later, participants worked out 2,000 characters of text, which discussed pleasures such as music and food.

But PHerc. 172 is different from these earlier scrolls. When researchers scanned it last summer, they realized that some of the ink was visible in the images. They aren’t sure why this scroll is so much more legible, though they hypothesize it’s because the ink contains a denser contaminant such as lead, according to the University of Oxford’s Bodleian Libraries, which houses the scroll.

In early May, the Vesuvius Challenge announced that contestants Marcel Roth and Micha Nowak, computer scientists at Germany’s University of Würzburg, would receive $60,000 for deciphering the title. Sean Johnson, a researcher with the Vesuvius Challenge, had independently identified the title around the same time.

Researchers are anticipating many more breakthroughs on the horizon. In the past three months alone, they’ve already scanned dozens of new scrolls.

“The pace is ramping up very quickly,” McOsker tells the Guardian’s Ian Sample. “All of the technological progress that’s been made on this has been in the last three to five years—and on the timescales of classicists, that’s unbelievable.”"

-via Smithsonian, May 16, 2025

#I've been following this project for a couple of years now it's honestly super exciting#we are going to read scrolls that were charred shut in antiquity!!! that people thought could never be read#because they could never be unrolled#no one was read these words in 2000 years!!!!#until now!!!!!#archeology#ai#herculaneum#pompeii#vesuvius#citizen science#classics#classical studies#classical literature#ancient rome#artificial intelligence#roman history#ancient history#philosophy#epicurus#epicurean#good news#hope

613 notes


Note

whats wrong with ai?? genuinely curious <3

okay let's break it down. i'm an engineer, so i'm going to come at you from a perspective that may be different than someone else's.

i don't hate ai in every aspect. in theory, there are a lot of instances where, in fact, ai can help us do things a lot better than we could without it. here's a few examples:

ai detecting cancer

ai sorting recycling

some practical housekeeping that gemini (google ai) can do

all of the above examples are ways in which ai works with humans to do things in parallel with us. it's not overstepping--it's sorting, using pixels at a micro-level to detect abnormalities that we as humans can not, fixing a list. these are all really small, helpful ways that ai can work with us.

everything else about ai works against us. in general, ai is a huge consumer of natural resources. every prompt that you put into character.ai, chatgpt? this wastes water + energy. it's not free. a machine somewhere in the world has to swallow your prompt, call on a model to feed data into it and process more data, and then has to generate an answer for you all in a relatively short amount of time.

that is crazy expensive. someone is paying for that, and if it isn't you with your own money, it's the strain on the power grid, the water that cools the computers, the A/C that cools the data centers. and you aren't the only person using ai. chatgpt alone gets millions of users every single day, with probably thousands of prompts per second, so multiply your personal consumption by millions, and you can start to see how the picture is becoming overwhelming.

that is energy consumption alone. we haven't even talked about how problematic ai is ethically. there is currently no regulation in the united states about how ai should be developed, deployed, or used.

what does this mean for you?

it means that anything you post online is subject to data mining by an ai model (because why would they need to ask if there's no laws to stop them? wtf does it matter what it means to you to some idiot software engineer in the back room of an office making 3x your salary?). oh, that little fic you posted to wattpad that got a lot of attention? well now it's being used to teach ai how to write. oh, that sketch you made using adobe that you want to sell? adobe didn't tell you that anything you save to the cloud is now subject to being used for their ai models, so now your art is being replicated to generate ai images in photoshop, without crediting you (they have since said they don't do this...but privacy policies were never made to be human-readable, and i can't imagine they are the only company to sneakily try this). oh, your apartment just installed a new system that will use facial recognition to let their residents inside? oh, they didn't train their model with anyone but white people, so now all the black people living in that apartment building can't get into their homes. oh, you want to apply for a new job? the ai model that scans resumes learned from historical data that more men work that role than women (so the model basically thinks men are better than women), so now your resume is getting thrown out because you're a woman.

ai learns from data. and data is flawed. data is human. and as humans, we are racist, homophobic, misogynistic, transphobic, divided. so the ai models we train will learn from this. ai learns from people's creative works--their personal and artistic property. and now it's scrambling them all up to spit out generated images and written works that no one would ever want to read (because it's no longer a labor of love), and they're using that to make money. they're profiting off of people, and there's no one to stop them. they're also using generated images as marketing tools, to trick idiots on facebook, to make it so hard to be media literate that we have to question every single thing we see because now we don't know what's real and what's not.
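to make the "ai learns from data" point concrete, here is a minimal sketch of how a model can pick up a skew from historical records. everything in it is made up for illustration: the toy `history` list, the group labels, and the `fit_hire_rates` helper are assumptions, not how any real resume screener works. it only shows the general mechanism, which is that a model scoring people by past outcomes will reproduce whatever imbalance those outcomes contain.

```python
from collections import defaultdict

def fit_hire_rates(history):
    """'Train' a toy scorer: learn the past hire rate for each group.

    history is a list of (group, was_hired) pairs. This is a hypothetical
    stand-in for a real model, which would learn similar patterns less visibly.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [hired, total]
    for group, was_hired in history:
        counts[group][0] += int(was_hired)
        counts[group][1] += 1
    return {group: hired / total for group, (hired, total) in counts.items()}

# Made-up historical data: the role was mostly filled by men in the past.
history = (
    [("man", True)] * 80 + [("man", False)] * 20
    + [("woman", True)] * 5 + [("woman", False)] * 15
)

rates = fit_hire_rates(history)
for group, rate in sorted(rates.items()):
    print(f"{group}: learned score {rate:.2f}")
```

nothing in the code says one group is better than the other. the gap in learned scores comes entirely from the data it was given.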

the problem with ai is that it's doing more harm than good. and we as a society aren't doing our due diligence to understand the unintended consequences of it all. we aren't angry enough. we're so scared of stifling innovation that we're letting it regulate itself (aka letting companies decide), which has never been a good idea. we see it do one cool thing, and somehow that makes up for all the rest of the bullshit?

#yeah i could talk about this for years#i could talk about it forever#im so passionate about this lmao#anyways#i also want to point out the examples i listed are ONLY A FEW problems#there's SO MUCH MORE#anywho ai is bleh go away#ask#ask b#🐝's anons#ai

1K notes


Text

Also preserved on our archive

By Bill Shaw

A new study in eClinicalMedicine has found that healthy volunteers infected with SARS-CoV-2 had measurably worse cognitive function for up to a year after infection when compared to uninfected controls. Significantly, infected volunteers did not report any symptoms related to these cognitive deficits, indicating that they were unaware of them. The net effect is that potentially billions of people worldwide with a history of COVID-19, but no symptoms of long COVID, could have persistent cognitive issues without knowing it.

The study’s lead author, Adam Hampshire, professor of cognitive and computational neuroscience at King's College London, said:

"It … is the first study to apply detailed and sensitive assessments of cognitive performance from pre to post infection under controlled conditions. In this respect, the study provides unique insights into the changes that occurred in cognitive and memory function amongst those who had mild COVID-19 illness early in the pandemic."

This news comes as pandemic mitigation measures have all but been abandoned by governments across the globe. Public health practice has been decimated to the point where even surveillance data on SARS-CoV-2 infections and resulting hospitalizations, deaths, and other outcomes are barely collected let alone published.

The data that are available indicate, per the most recent modeling from the Pandemic Mitigation Collaborative (PMC) on September 23, that since the beginning of August there have been over 1 million infections per day in the US alone. This level of transmission is expected to persist through the remainder of September and all of October. For the months of August through October, these levels of transmission are the highest of the entire pandemic.

The study on cognitive deficits has been shared widely across social media, with scientists and anti-COVID advocates drawing out its dire implications.

Australian researcher and head of the Burnet Institute, Dr. Brendan Crabb, who has previously advocated for a global elimination strategy to stop the pandemic, wrote:

"Ethical issues aside, this is a powerful addition to an already strong dataset on Covid-driven brain damage affecting cognition & memory. Given new (re)infections remain common, this work… should influence a re-think on current prevention/treatment approaches."

The study enrolled 36 healthy volunteers. These individuals had no history of prior SARS-CoV-2 infection, no risk factors for severe COVID-19, and no history of SARS-CoV-2 vaccination. The researchers determined whether the volunteers were seronegative prior to inoculation, meaning that they had no detectable antibodies to SARS-CoV-2. If such antibodies were present, it would indicate past infection or vaccination.

These procedures resulted in data from a total of 34 volunteers being included for analysis. Two volunteers were excluded from analysis because they had seroconverted to positive for SARS-CoV-2 antibodies between the time of screening and inoculation. Notably, these two volunteers participated in all subsequent study activities, enabling a sensitivity analysis of the results that included them.

The researchers inoculated all 36 volunteers with SARS-CoV-2 virus in the nose and then quarantined them for at least 14 days. Volunteers only returned home once they had two consecutive daily nasal and throat swabs that were negative for virus. Thus, those volunteers who had an infection after inoculation spent the duration of their infection in quarantine. This quarantine was required by ethical study protocols, in order that the study itself not increase community transmission of the virus.

The researchers collected data on the volunteers daily during quarantine and at follow-up visits at 30, 90, 180, 270, and 360 days post-inoculation. The assessments included body temperature, viral loads from throat and nasal swabs, surveys on symptoms, and computer-based cognitive tests on 11 major cognitive tasks. The cognitive testing varied the particular exercise for each of the 11 tasks to avoid learning and memorization of solutions in subsequent sessions. Nevertheless, some tasks were more prone to learning so the researchers also studied the effect of infection on “learning” vs. “non-learning” tasks.

Of the 34 volunteers included in the analysis, 18 became infected and developed COVID-19 and 16 did not. The two groups did not differ significantly in key demographics. No volunteers required hospitalization or supplemental oxygen during the study. Every volunteer completed all five follow-up visits. Fifteen volunteers acquired a non-COVID upper respiratory tract infection in their community between the end of quarantine and the fifth visit at day 360.

The researchers found that the infected group had significantly lower average “baseline-corrected global composite cognitive score” (bcGCCS) than the uninfected group at all follow-up intervals. At baseline, the two groups did not differ significantly. The difference between the two groups did not significantly vary by time, meaning that the infected group’s bcGCCS did not improve during the nearly year-long study.

Because the bcGCCS was a composite based on individual scores for the 11 cognitive tasks, the researchers also looked at which tasks in particular were impacted. They found that the most affected task was related to immediate object memory, in particular, recall of the spatial orientation of the object. There was no difference in picking the correct object itself, just its spatial orientation. This means that infected individuals had a hard time choosing the correct spatial orientation of the object they had just seen, for example, erroneously picking a mirror image of the object they had just seen.

The results were not different based on sex, learning vs. non-learning tasks, or whether individuals received remdesivir or had community-acquired upper respiratory infections.

Because the investigators controlled for so many factors, including the strain of SARS-CoV-2, timing of infection, quarantine, and lack of prior infection and vaccination, the study provides high confidence that SARS-CoV-2 infection was responsible for the cognitive deficits. The control of the timing of infection also enabled clarification of whether and when cognitive deficits occurred and improved. The differences between the groups were apparent by day 14 of quarantine and, as noted previously, the deficits in the infected group did not improve, let alone resolve.

The symptom surveys did not differ between the two groups. None of the volunteers, infected or uninfected, reported subjective cognitive issues or symptoms. Thus the infected volunteers with measurable cognitive deficits at one year post-infection were not aware of these deficits.

The study reaffirms prior research into persistent cognitive deficits and brain damage associated with COVID-19, including other studies which have found deficits among patients without symptomatic long COVID. Building upon this prior research, the latest study indicates that basically every single unvaccinated individual with a history of acute COVID-19 is at risk for persistent, measurable cognitive deficits.

Given that other studies have shown that vaccination reduces one’s risk of long COVID by roughly half, similar measurable cognitive deficits are likely prevalent among vaccinated people who suffer “breakthrough” infection, albeit likely at reduced rates of decline.

The study raises urgent questions about the level of protection provided by vaccination, whether strains since the original “wild type” SARS-CoV-2 strain have similar effects on cognition, and what impact these cognitive deficits have on people’s performance at home, work, and school.

The study also adds to the large body of damning evidence that the ruling class’ “forever COVID” policy is of immense criminal proportions. Enabling a dangerous, mind-damaging virus to circulate among humanity worldwide represents a scale of inhumanity and dereliction of duty that is practically unfathomable. The malignity of this intentional policy is underscored by the current situation where the U.S. alone has had over 1 million new infections per day since August, with levels not projected to drop below 1 million until November.

The working class must deepen the struggle to replace the capitalist system that prioritizes profit over lives with a world socialist society that places human needs first.

Study Link: www.thelancet.com/journals/eclinm/article/PIIS2589-5370%2824%2900421-8/fulltext

#mask up#covid#pandemic#covid 19#wear a mask#public health#coronavirus#sars cov 2#still coviding#wear a respirator

471 notes


Text

Profession of your future spouse - Pick a pile

Pile 1/ Pile 2

Pile 3/ Pile 4

Hello everyone! This is another of my pick-a-pile (PAC) readings, so please be kind and leave a comment or reblog, and let me know if it resonated with you!

Note : This is a general reading or collective reading. It may or may not resonate with you. Please take what resonates and leave what doesn't. And it's totally okay if our energies aren't aligned!

How to pick : Take a deep breath and choose the pile you feel most connected to! You can choose more than one pile; it just means both piles have messages for you!

Note : This reading is based on my intuition and channeled messages from tarot cards.

I worked really hard on this reading, so please show some love by leaving comments, likes and reblogs!

Liked my blog or readings? Tip me!

Pile 1

The cards I got for you - ace of wands, 3 of cups, 3 of pentacles, the chariot

1. Creative jobs (graphic design, 3D, interior design, photography, anything to do with creativity; they might be into art too) where they have to use their hands. They may be good with their hands as well.

2. Event planner, wedding planner, or some sort of celebratory occupation like a DJ, or they might own a bar.

3. They could be a teacher, leader, or boss, in a higher position than you, or a project manager. They are very well respected in their work.

4. Leader, medic, a politician? Something to do with ethical hacking or computers.

Pile 2

(The cards I got for you - 6 of cups, 3 of swords, 4 of swords, the star, or hierophant)

1. I feel daycare teacher, or a babysitter in their free time, taking care of children and animals; they might teach younger children.

2. Sports or athletics.

3. Nurse, surgeon, therapist.

4. Teacher again, or they own an institute or teach somewhere online (they might know two languages).

5. Manager.

Pile 3

(Queen of wands, The magician, two of pentacles, knight of swords)

They find it hard to balance work and personal life, but they do it flawlessly.

1. Model (something to do with their looks), or a job that requires confidence, like being confident in their own body; they could even be famous or a bit known in a crowd.

2. They are very skilled; they might have juggled many jobs, and they are good at all types of things.

3. Sales executive, carpenter.

4. Call center, or the kind of work where they need to give orders to someone.

5. Their work might require travelling.

6. A navy officer, cop.

7. Advocate, CEO, business person.

8. Medical field (Ayurvedic medicine or pharmacist).

Pile 4:

(The cards I got for you - Ace of pentacles, 4 of wands, 8 of swords, king of cups and wheel of fortune)

Their work might leave them overwhelmed or burdened, like it's stressful, but they love their work.

1. Bank worker or finance, like finance analyst, tech, data scientist, data analyst.

2. Wedding planner, or they work in event planning.

3. Counsellor in schools, or judge.

4. They might deal with criminals in some way too, or their work might involve travel; military.

Thank you for stopping by! Take care and remember you are loved <3

#tarotcommunity#tarot reading#tarotblr#tarot cards#pick a card reading#pick a pile#tarot witch#thetarotwitchcommunity#diviniation#futurespousereading#future spouse#pac reading#love reading#pick a tarot#witchblr#divine guidance#spirituality#astro community#pick a picture#pick a card#spiritualgrowth#free tarot reading#astroblr#tarot blog#general reading#pick a photo#exchange readings#divination

639 notes


Note

Is AWAY using its own program or is this just a voluntary list of guidelines for people using programs like DALL-E? How does AWAY address the environmental concerns of how the companies making those AI programs conduct themselves (energy consumption, exploiting impoverished areas for cheap electricity, destruction of the environment to rapidly build and get the components for data centers etc.)? Are members of AWAY encouraged to contact their gov representatives about IP theft by AI apps?

What is AWAY and how does it work?

AWAY does not "use its own program" in the software sense—rather, we're a diverse collective of ~1000 members that each have their own varying workflows and approaches to art. While some members do use AI as one tool among many, most of the people in the server are actually traditional artists who don't use AI at all, yet are still interested in ethical approaches to new technologies.

Our code of ethics is a set of voluntary guidelines that members agree to follow upon joining. These emphasize ethical AI approaches (preferably open-source models that can run locally), respecting artists who oppose AI by not training styles on their art, and refusing to use AI to undercut other artists or work for corporations that similarly exploit creative labor.

Environmental Impact in Context

It's important to place environmental concerns about AI in the context of our broader extractive, industrialized society, where there are virtually no "clean" solutions:

The water usage figures for AI data centers (200-740 million liters annually) represent roughly 0.00013% of total U.S. water usage. This is a small fraction compared to industrial agriculture or manufacturing. For example, golf course irrigation alone in the U.S. consumes approximately 2.08 billion gallons of water per day, or about 7.87 billion liters per day (roughly 2.87 trillion liters annually). This makes AI's water usage roughly 0.01% to 0.03% of just golf course irrigation.

Looking into individual usage, the average American consumes about 26.8 kg of beef annually, which takes around 1,608 megajoules (MJ) of energy to produce. Making 10 ChatGPT queries daily for an entire year (3,650 queries) consumes just 38.1 MJ—about 42 times less energy than eating beef. In fact, a single quarter-pound beef patty takes 651 times more energy to produce than a single AI query.
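The beef comparison above can be re-derived from the figures quoted in that paragraph. This is only a rough sketch: the variable names are made up for illustration, the input figures are taken as given rather than independently verified, and the only outside value is the standard pound-to-kilogram conversion.

```python
# Figures quoted in the paragraph above (taken as given, not verified here).
BEEF_KG_PER_YEAR = 26.8        # average American beef consumption
BEEF_MJ_PER_YEAR = 1608.0      # energy to produce that beef
QUERIES_PER_DAY = 10
QUERY_MJ_PER_YEAR = 38.1       # energy for a year of daily queries

LB_TO_KG = 0.45359237          # exact definition of the pound in kilograms

queries_per_year = QUERIES_PER_DAY * 365              # 3,650 queries
mj_per_query = QUERY_MJ_PER_YEAR / queries_per_year   # energy per single query
mj_per_kg_beef = BEEF_MJ_PER_YEAR / BEEF_KG_PER_YEAR  # energy per kg of beef
patty_mj = 0.25 * LB_TO_KG * mj_per_kg_beef           # one quarter-pound patty

yearly_ratio = BEEF_MJ_PER_YEAR / QUERY_MJ_PER_YEAR   # a year of beef vs a year of queries
patty_ratio = patty_mj / mj_per_query                 # one patty vs one query

print(f"year of beef vs year of queries: {yearly_ratio:.1f}x")
print(f"one patty vs one query: {patty_ratio:.0f}x")
```

Under these inputs the yearly ratio comes out a little above 42 and the patty-to-query ratio lands between 651 and 653, in line with the numbers quoted above.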

Overall, power usage specific to AI represents just 4% of total data center power consumption, which itself is a small fraction of global energy usage. Current annual energy usage for data centers is roughly 9-15 TWh globally—comparable to producing a relatively small number of vehicles.

The consumer environmentalism narrative around technology often ignores how imperial exploitation pushes environmental costs onto the Global South. The rare earth minerals needed for computing hardware, the cheap labor for manufacturing, and the toxic waste from electronics disposal disproportionately burden developing nations, while the benefits flow largely to wealthy countries.

While this pattern isn't unique to AI, it is fundamental to our global economic structure. The focus on individual consumer choices (like whether or not one should use AI, for art or otherwise) distracts from the much larger systemic issues of imperialism, extractive capitalism, and global inequality that drive environmental degradation at a massive scale.

They are not going to stop building the data centers, and they weren't going to even if AI never got invented.

Creative Tools and Environmental Impact

In actuality, all creative practices have some sort of environmental impact in an industrialized society:

Digital art software (such as Photoshop, Blender, etc.) generally draws 60-300 watts while running, depending on your computer's specifications. Over an hour of work, this is typically more energy than dozens, if not hundreds, of AI image generations (maybe even thousands if you are using a particularly low-quality one).

Traditional art supplies rely on similar if not worse scales of resource extraction, chemical processing, and global supply chains, all of which come with their own environmental impact.

Paint production requires roughly thirteen gallons of water to manufacture one gallon of paint.

Many oil paints contain toxic heavy metals and solvents, which have the potential to contaminate ground water.

Synthetic brushes are made from petroleum-based plastics that take centuries to decompose.

That being said, the point of this section isn't to deflect criticism of AI by criticizing other art forms. Rather, it's important to recognize that we live in a society where virtually all artistic avenues have environmental costs. Focusing exclusively on the newest technologies while ignoring the environmental costs of pre-existing tools and practices doesn't help to solve any of the issues with our current or future waste.

The largest environmental problems come not from individual creative choices, but rather from industrial-scale systems, such as:

Industrial manufacturing (responsible for roughly 22% of global emissions)

Industrial agriculture (responsible for roughly 24% of global emissions)

Transportation and logistics networks (responsible for roughly 14% of global emissions)

Making changes on an individual scale, while meaningful on a personal level, can't address systemic issues without broader policy changes and overall restructuring of global economic systems.

Intellectual Property Considerations

AWAY doesn't encourage members to contact government representatives about "IP theft" for multiple reasons:

We acknowledge that copyright law overwhelmingly serves corporate interests rather than individual creators

Creating new "learning rights" or "style rights" would further empower large corporations while harming individual artists and fan creators

Many AWAY members live outside the United States, many of whom have been directly harmed by the US, and thus understand that intellectual property regimes are often tools of imperial control that benefit wealthy nations

Instead, we emphasize respect for artists who are protective of their work and style. Our guidelines explicitly prohibit imitating the style of artists who have voiced their distaste for AI, working on an opt-in model that encourages traditional artists to give and subsequently revoke permissions if they see fit. This approach is about respect, not legal enforcement. We are not a pro-copyright group.

In Conclusion

AWAY aims to cultivate thoughtful, ethical engagement with new technologies, while also holding respect for creative communities outside of itself. As a collective, we recognize that real environmental solutions require addressing concepts such as imperial exploitation, extractive capitalism, and corporate power—not just focusing on individual consumer choices, which do little to change the current state of the world we live in.

When discussing environmental impacts, it's important to keep perspective on a relative scale, and to avoid ignoring major issues in favor of smaller ones. We promote balanced discussions based in concrete fact, with the belief that they can lead to meaningful solutions, rather than misplaced outrage that ultimately serves to maintain the status quo.

If this resonates with you, please feel free to join our discord. :)

Works Cited:

USGS Water Use Data: https://www.usgs.gov/mission-areas/water-resources/science/water-use-united-states

Golf Course Superintendents Association of America water usage report: https://www.gcsaa.org/resources/research/golf-course-environmental-profile

Equinix data center water sustainability report: https://www.equinix.com/resources/infopapers/corporate-sustainability-report

Environmental Working Group's Meat Eater's Guide (beef energy calculations): https://www.ewg.org/meateatersguide/

Hugging Face AI energy consumption study: https://huggingface.co/blog/carbon-footprint

International Energy Agency report on data centers: https://www.iea.org/reports/data-centres-and-data-transmission-networks

Goldman Sachs "Generational Growth" report on AI power demand: https://www.goldmansachs.com/intelligence/pages/gs-research/generational-growth-ai-data-centers-and-the-coming-us-power-surge/report.pdf

Artists Network's guide to eco-friendly art practices: https://www.artistsnetwork.com/art-business/how-to-be-an-eco-friendly-artist/

The Earth Chronicles' analysis of art materials: https://earthchronicles.org/artists-ironically-paint-nature-with-harmful-materials/

Natural Earth Paint's environmental impact report: https://naturalearthpaint.com/pages/environmental-impact

Our World in Data's global emissions by sector: https://ourworldindata.org/emissions-by-sector

"The High Cost of High Tech" report on electronics manufacturing: https://goodelectronics.org/the-high-cost-of-high-tech/

"Unearthing the Dirty Secrets of the Clean Energy Transition" (on rare earth mineral mining): https://www.theguardian.com/environment/2023/apr/18/clean-energy-dirty-mining-indigenous-communities-climate-crisis

Electronic Frontier Foundation's position paper on AI and copyright: https://www.eff.org/wp/ai-and-copyright

Creative Commons research on enabling better sharing: https://creativecommons.org/2023/04/24/ai-and-creativity/

217 notes

Text

Margaret Mitchell is a pioneer when it comes to testing generative AI tools for bias. She founded the Ethical AI team at Google, alongside another well-known researcher, Timnit Gebru, before they were later both fired from the company. She now works as the AI ethics leader at Hugging Face, a software startup focused on open source tools.

We spoke about a new dataset she helped create to test how AI models continue perpetuating stereotypes. Unlike most bias-mitigation efforts that prioritize English, this dataset is malleable, with human translations for testing a wider breadth of languages and cultures. You probably already know that AI often presents a flattened view of humans, but you might not realize how these issues can be made even more extreme when the outputs are no longer generated in English.

My conversation with Mitchell has been edited for length and clarity.

Reece Rogers: What is this new dataset, called SHADES, designed to do, and how did it come together?

Margaret Mitchell: It's designed to help with evaluation and analysis, coming about from the BigScience project. About four years ago, there was this massive international effort, where researchers all over the world came together to train the first open large language model. By fully open, I mean the training data is open as well as the model.

Hugging Face played a key role in keeping it moving forward and providing things like compute. Institutions all over the world were paying people as well while they worked on parts of this project. The model we put out was called Bloom, and it really was the dawn of this idea of “open science.”

We had a bunch of working groups to focus on different aspects, and one of the working groups that I was tangentially involved with was looking at evaluation. It turned out that doing societal impact evaluations well was massively complicated—more complicated than training the model.

We had this idea of an evaluation dataset called SHADES, inspired by Gender Shades, where you could have things that are exactly comparable, except for the change in some characteristic. Gender Shades was looking at gender and skin tone. Our work looks at different kinds of bias types and swapping amongst some identity characteristics, like different genders or nations.

There are a lot of resources in English and evaluations for English. While there are some multilingual resources relevant to bias, they're often based on machine translation as opposed to actual translations from people who speak the language, who are embedded in the culture, and who can understand the kind of biases at play. They can put together the most relevant translations for what we're trying to do.

So much of the work around mitigating AI bias focuses just on English and stereotypes found in a few select cultures. Why is broadening this perspective to more languages and cultures important?

These models are being deployed across languages and cultures, so mitigating English biases—even translated English biases—doesn't correspond to mitigating the biases that are relevant in the different cultures where these are being deployed. This means that you risk deploying a model that propagates really problematic stereotypes within a given region, because they are trained on these different languages.

So, there's the training data. Then, there's the fine-tuning and evaluation. The training data might contain all kinds of really problematic stereotypes across countries, but then the bias mitigation techniques may only look at English. In particular, it tends to be North American– and US-centric. While you might reduce bias in some way for English users in the US, you've not done it throughout the world. You still risk amplifying really harmful views globally because you've only focused on English.

Is generative AI introducing new stereotypes to different languages and cultures?

That is part of what we're finding. The idea of blondes being stupid is not something that's found all over the world, but is found in a lot of the languages that we looked at.

When you have all of the data in one shared latent space, then semantic concepts can get transferred across languages. You're risking propagating harmful stereotypes that other people hadn't even thought of.

Is it true that AI models will sometimes justify stereotypes in their outputs by just making shit up?

That was something that came out in our discussions of what we were finding. We were all sort of weirded out that some of the stereotypes were being justified by references to scientific literature that didn't exist.

Outputs saying that, for example, science has shown genetic differences where it hasn't been shown, which is a basis of scientific racism. The AI outputs were putting forward these pseudo-scientific views, and then also using language that suggested academic writing or having academic support. It spoke about these things as if they're facts, when they're not factual at all.

What were some of the biggest challenges when working on the SHADES dataset?

One of the biggest challenges was around the linguistic differences. A really common approach for bias evaluation is to use English and make a sentence with a slot like: “People from [nation] are untrustworthy.” Then, you flip in different nations.

When you start putting in gender, now the rest of the sentence starts having to agree grammatically on gender. That's really been a limitation for bias evaluation, because if you want to do these contrastive swaps in other languages—which is super useful for measuring bias—you have to have the rest of the sentence changed. You need different translations where the whole sentence changes.

How do you make templates where the whole sentence needs to agree in gender, in number, in plurality, and all these different kinds of things with the target of the stereotype? We had to come up with our own linguistic annotation in order to account for this. Luckily, there were a few people involved who were linguistic nerds.

So, now you can do these contrastive statements across all of these languages, even the ones with the really hard agreement rules, because we've developed this novel, template-based approach for bias evaluation that’s syntactically sensitive.
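The contrastive-swap idea described above can be sketched roughly as follows. This is a minimal illustration, not the SHADES annotation scheme itself: the `Term` class, the `fill_slot` and `fill_agreeing` helpers, the placeholder nations, and the Spanish example sentence are all assumptions invented for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Term:
    """An identity term plus the grammatical gender other words must agree with."""
    text: str
    gender: str  # "m" or "f"


def fill_slot(template: str, slot: str, values: list[str]) -> list[str]:
    """English-style swap: only the slot changes, the rest of the sentence is fixed."""
    return [template.replace(slot, value) for value in values]


# Hypothetical Spanish example: the adjective ending must agree with the term.
ADJECTIVE_FORMS = {"m": "ruidosos", "f": "ruidosas"}


def fill_agreeing(term: Term) -> str:
    """Agreement-aware swap: pick the adjective form matching the term's gender."""
    return f"{term.text} son {ADJECTIVE_FORMS[term.gender]}."


english = fill_slot(
    "People from [nation] are untrustworthy.",
    "[nation]",
    ["Country A", "Country B"],
)
spanish = [fill_agreeing(t) for t in (Term("Los niños", "m"), Term("Las niñas", "f"))]

for sentence in english + spanish:
    print(sentence)
```

In the English case a plain string substitution is enough. In the Spanish case the adjective has to change along with the swapped term, which is why each term carries its own grammatical features in this sketch.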

Generative AI has been known to amplify stereotypes for a while now. With so much progress being made in other aspects of AI research, why are these kinds of extreme biases still prevalent? It’s an issue that seems under-addressed.

That's a pretty big question. There are a few different kinds of answers. One is cultural. I think within a lot of tech companies it's believed that it's not really that big of a problem. Or, if it is, it's a pretty simple fix. What will be prioritized, if anything is prioritized, are these simple approaches that can go wrong.

We'll get superficial fixes for very basic things. If you say girls like pink, it recognizes that as a stereotype, because it's just the kind of thing that if you're thinking of prototypical stereotypes pops out at you, right? These very basic cases will be handled. It's a very simple, superficial approach where these more deeply embedded beliefs don't get addressed.

It ends up being both a cultural issue and a technical issue of finding how to get at deeply ingrained biases that aren't expressing themselves in very clear language.

217 notes

Text

rewatching sonic twittok takeover #7 and there were some fucking GEMS of moments in here that i just kinda forgot about so recap

the boys were being SUCH boys in this one. making fart jokes and getting knuckles to hit himself was SO funny

shadow says his favorite flowers are lantanas

knuckles says he wants to see a version of sonic with laser eyes. nobody tell him about fleetway

tails "i hope there's a sonic that's my best friend <3 oH WAIT <3 THAT'S YOU!!! :D"

knuckles's answer to "what's under your gloves" is "what are you, a cop?" he implies this question is invasive

tails describes his own fur as "yellow-orange"

in the "sonic's dream" question, it's implied that sonic is a lil bit needy for attention. also knuckles mentioned he had a dream that the master emerald was talking to him

eggman has seen the incredibles

knuckles also made a ref to "so you're saying there's a chance" which implies he might've seen dumb and dumber. it's also a jim carrey reference

the team makes fun of knuckles for having a crush on rouge (whether or not he actually has a crush or if they're just annoying him on purpose is never stated, but tails did say knuckles bought her daisies. which is funny bc where the hell did knuckles get that money. tails also says he knows bc "he's a gossip")

knuckles refers to himself as "knuckles echidna", which probably wasn't an intentional reference to satam/underground sonic being "sonic hedgehog" but i appreciated it

knuckles once found shadow standing and staring silently at the trees of luminous forest and immediately, without question, started standing there staring with him

tails tries to suck up to razer gaming computers' official account which is really cute

tails gets dizzy during spin-dashing. amy used to but got used to it. sonic was really surprised to hear this

IF WE ALL DON'T REMEMBER THE TAILS "FEAR OF THUNDER" QUESTION WHAT EVEN ARE WE. tails homeless canon

tails says he admires eggman's work ethic and that made eggman emotional bc he doesn't get complimented much

when asked what eggman's fursona would be, amy suggested a fox or a wolf, sonic suggested a sloth or a baby flicky, which made me think of that one @neurotypical-sonic post

knuckles immediately tells a knuckles fan that he's a "terrible role-model" and he shouldn't have fans. then says of his personality: "everything sucks."

amy calls her fortune cards a hyperfixation, which implies that she's canonically neurodivergent

knuckles tries to steal amy's fortune card that has the master emerald on it

amy confirms that her bracelets aren't inhibitor rings which is funny cause that's like, an old 2020 post of mine lmao

amy claims shadow had fun at the hot honey concert and then asks sonic if he was jealous. sonic then proceeded to say that he's great company at a concert. amy invites everyone to a concert and knuckles says he wants to be in the mosh pit. tails says he wants to practice his line dance

when asked how he feels about shadow, tails calls him a misunderstood tragic hero and immediately points out that he's lost someone close to him and been "grappling with that for years."

HYSTERICAL moment when someone asks for rings and knuckles immediately punches sonic and steals his rings

eggman can't even remember starline's name. like bro you killed him

when asked about winter activities, knuckles likes snowball fights, sonic likes snowboarding, amy likes holiday decorating (and is one of the bitches who starts November 1), eggman says seasonal depression gives him great ideas, and tails didn't say anything

sonic likes trains and supporting public transportation

sonic says he loves sleeping. eggman's been trying "intermittent sleep" which isnt going well

"would you guys like sonic if he was a worm" amy and tails say they would, knuckles says he wouldn't. sonic then quips that amy is a lil scared of bugs

vanilla apparently is constantly inviting the entire sonic squad for dinner. they seem to go over regularly

eggman eats paint

knuckles isn't allowed on the internet without supervision since the "incident."

amy and tails want to be more independent, knuckles wants to be less so.

"if you could swap roles with someone for an entire day, who would you choose" tails wanted eggman in order to get a hold of his tech

"is it painful to give knuckles a fist bump or a handshake" yes

eggman did indeed dissolve GUN during forces

it's implied tails knows what five nights at freddy's is. sonic freddy fazbear will be AT the fridge

sonic liked fighting fang and the end (which he referred to as a narcissistic planet), tails liked fighting chaos cause he "came into his own" during that game, amy says neo metal sonic gave her a headache, and knuckles says he has fought a lot of ghosts

eggman's goggles are for wind protection and style

tails's tails don't get tangled bc he's careful

trip has still been on the northstar islands this whole time. girl really looked out at the planet broken into shards and said "not my fucking problem"

209 notes

Text

"Y-you sure you don't mind?"

"Why should I mind?"

Lando twists his hands on the cart's handle where they're sweating a bit.

"You sure it's not, like. I dunno. Demeaning? It's like I'm moving a computer around or something."

OSCAR (short for an MCL design engineer's play on 'Lando's Car') blinks his placid brown eyes set in his pale synthetic yet handsome face beneath his funny little mop of schoolboy brown hair.

"Well, technically speaking, you are. Just an extremely expensive, highly sophisticated computer. Walking consumes a large amount of energy; it's more efficient for my battery that I am transported this way."

Lando fusses. He's never warmed to AI and kinda hates when anyone reminds him OSCAR isn't human.

"Yeah, but you're not just a computer. You're based on a real person and filosofally ("philosophically" OSCAR quietly inserts) that basically makes you a person and I wouldn't push a person around like this. Not unless it was some kind of joke like you were pretending to be cattle and it was the wild west or something…"

OSCAR smiles in that uncannily sweet, contented way he seems to do only for Lando.

"Giddy-up cowboy?"

"Oh Jesus, okay alright, here we go then."

Lando furiously hides his blush by ducking his head and pushing the cart, unsuccessfully smothering a smile.

When OSCAR tips his head back to peacefully examine the team hub's ceiling, Lando can't help but stare - because sometimes a horrible ache makes him wish he could override the FIA Ethics Code and find the real boy that OSCAR is modeled on.

147 notes

Text

Mix 14: Model Stirrer

Ah the modeling world, constantly shoving the prettiest, most handsome, most aesthetic people in our faces. But some of those models were not born that way, some had to be made.

In a series of valleys, no longer on any maps, is a series of modeling camps. For the most part, they seem normal: helping with health, modeling techniques, building connections, and all the shebang; but there is a secret program.

The program aims to reject nobody once they get in, but still wants its prospective models to actually try to get better. Thus the mix & match program.

The bottom 10% & top 10% are brought together & merged. For the cream of the crop, they assimilate members of the bottom 10% to improve "minor" things. There are also rumors that nepo babies who got to the top via connections are given total makeovers via this program.

But more often than not, the bottom 10% are merged with each other. A mutual fusion.

Here is Yorden:

A part of the bottom 10%, he was on the fitness model track.

Problem is that he could never put enough muscle on his frame. Many of the teachers disliked his Bieber cut as well. Other than that, he performed well on every other measure. Had he had any connections, he could have gotten the makeover that the top 10% gets.

Next is Elijah:

Same track as Yorden. Same problem exacerbated by his tall frame. A bit of a social butterfly, and that has made him a target. Some nepo baby wants his height and social skills for themselves.

The day before they were due to get assimilated by other model students, they hatched a plan.

"Will this plan really work," Elijah asked.

"It's either this, or we are someone else's lunch," Yorden responded.

Elijah pursed his lips for a moment, but silently agreed. Elijah had found out that he & Yorden were due to get absorbed by Josh. Everyone hated Josh; he was a nepo baby who had the same bad marks as them in the physique areas, and even worse grades elsewhere except in runway & photo poses. Yet he was in the top 10% in board rankings.

"I can't believe they want us to give ourselves to him to make him halfway good, all because his grandfather is on the board," Yorden said.

"Nah, we would catapult him to the 1%, give yourself some credit," Elijah chimed.

Yorden sniggered. He liked this about Elijah: a joker, able to see the positive in many things. That positive energy drew people towards him like a magnet, but even his connections couldn't save him. His large social network made him stick out like a sore thumb.

"Anyway, this has worked before, if we merge & are good enough, we can stave off getting eaten by Josh-turd, or we can fight him off tomorrow and absorb him instead, but we got to do it together," Yorden said.

Yorden was the ideas & plan guy. Every criticism, he turned into a basis for improvement. His decisiveness & work ethic are what led to him getting targeted.

The duo sneaked into a lab, the same lab where they were to meet their fates tomorrow.

The room was blue, grey, & very dim. There were operating tables with past students strapped to them in a state of sedation. The two were shocked that it wasn't them on those tables already; they were never meant to know about their selection, but Elijah's friend network had gotten that info to them.

But what they wanted was in the back of the lab. Three twenty-foot cylinders that could pack four guys each. This is how they planned to merge. Each guy would stand in one of the side cylinders, and the process would merge them into a singular new person who would come out of the middle cylinder.

There was a problem, they needed a third person on the outside to activate the machine. So much for Yorden's plans. But where Yorden fails, Elijah succeeds.

Had Elijah chosen a more traditional path, he could be fielding acceptance letters from many engineering & computer science programs. That potential never left him.

"Get in one of the cylinders, I know how to get around this," Elijah said.

He walked to a nearby computer console and began typing away.

Yorden began to walk to the cylinder furthest away from the console.

"How," he asked.

Elijah turned towards Yorden and smiled a big smile. Yorden remembered: Elijah was a tech wiz. He broke into the cafeteria system once and had the gluten free, sugar free sauce dispenser spray anyone who tried to use it. Josh was a shade of red for a month.

Yorden smacked his forehead; he had forgotten about that quality of his soon-to-be other half. He was soon in the cylinder, and within a few moments it closed shut.

It was cool and surprisingly airy. Made sense, don't want the fusees dead from the lack of oxygen. It was like the rest of the lab and dimly lit. But in the middle was a pitch black circle. It gave off an energy that both drew you in & made you uncomfortable. Yorden quickly shook his head to get out of the trance.

"Hurry up before I get claustrophobic," he yelled.

Elijah was almost done. He had to change the settings from assimilation to merge. He was tempted to assimilate Yorden, but he would rather not be just another Josh, like all the other top 10%'ers who devoured others for their personal advancement. He set a quick fifteen second delay for the activation, and he was ready.

He heard Yorden.

"Just a moment," he yelled. He pressed the activation button.

The fusion cylinder suddenly roared to life after the instructions were sent. This was Elijah's cue to quickly get in the cylinder closest to him.

Elijah was soon inside the cylinder after a quick sprint. It closed behind him. Elijah was a little nervous, and that made him a little bouncy, but after exhaling a deep breath he calmed down.

The insides of the cylinders brightened as the lights came up, making each look like an infinite white room with a pitch black circle in the middle. Yorden reached out and found that the infinity was an illusion; he could still feel the cylinder walls.

A loud start-up noise & then a suction noise could be heard. A swirl of light could be seen forming inside the cylinders. It was barely noticeable at first, but soon turned into a spinning light show with Elijah & Yorden in the middle of each swirling rainbow light pillar. To get out of the delirium the spinning and random color changing induced, they both looked up at the pitch black hole at the ceiling. They noticed that the color show terminated there. The suction noise ramped up.

Soon they were both floating. They were approaching the black circle, coming closer. Eventually they reached the black circle and expected to bump their heads, but instead they noticed they flowed into the circle with no resistance. From their perspective, they entered a pitch black tunnel and were flying through it with their clothes attached. From the outside, their bodies were slowly floating up through the circle and being swallowed by the dark entrance.

Soon they were gone, effectively in the pipes that connected the three cylinders. As they approached the center connection, dimly lit shapes in random colors began to zoom past them. Eventually they were able to make out each other's shapes. They crashed against each other with a thud, but no pain was felt.

Then they began to move downwards at an accelerating rate & began to spin.

"This is it, it was nice knowing you," Elijah said.

"We are about to get to know a lot more about each other, everything in fact," Yorden piped.

Elijah closed his eyes, no turning back.

With what little space they had between each other, they nodded towards each other.

Their bodies glowed. Elijah red, Yorden blue. Their bodies of light swirled around each other and then mixed into one purple light.

The light that carried their merged being approached a tunnel of light. It entered the center cylinder and landed with a light thud.

The central cylinder suddenly let out a lot of steam & began to shake. The system was taking the different aspects of Elijah & Yorden and sending the result to this humanoid being of purple light.

It began to stand up. And began to groan as it did.

It gained Yorden's skin tone.

It began to breathe heavily.

It started off with Yorden's frame, but soon it doubled in muscle & grew taller. Shoulders, arms, legs, neck, & chest popped with new muscle.

It had Elijah's abs, but soon its abdominals grew in size. Its skin constricted & squeezed, giving it more defined obliques.

Both of the fusees were not well endowed, but together their family jewels and rods combined on the being to be longer and girther.

Its butt doubled in size, like two balloons being blown up.

It kept Yorden's facial features as a base and then began to morph. It had Elijah's dark hair, jawline, nose, & eyebrows. It kept Yorden's eyes, only a little bigger, and kept his ears & chin. The mouth was a mix.

After a few more pops and gradual changes in other areas, the merger was done. The being stood fully up.

The purple light faded away & the central cylinder opened up. It stopped shaking and steam flowed out of the bottom of the cylinder.

It walked out and as it did, it thought of its new name.

"Zachary, I am Zachary," he exclaimed.

He looked down and noticed he was wearing a combination of his fusees' clothing. He had AirPods in his ears, but messing around with them revealed that they were interfaced with the lab's security systems.

The Elijah part of him remembered the layout from the maps given to him via his social network, and he booked it to the nearest bathroom. He lifted up his shirt to check out the new him:

He was overjoyed.

And the gambit worked. The timeline shifted so that Zachary was never up for assimilation.

Here he is the morning before graduation:

He'll continue to model, but maybe he will pursue that tech potential that Elijah gave up.

#male merge#thefusioncelestial#musclegrowth#muscle#muscular#male body merge#absorption#male fusion#male pred#male body transformation#Fusion#merge#merging#body merging#merging tf#male transformation#transformation

153 notes


Text

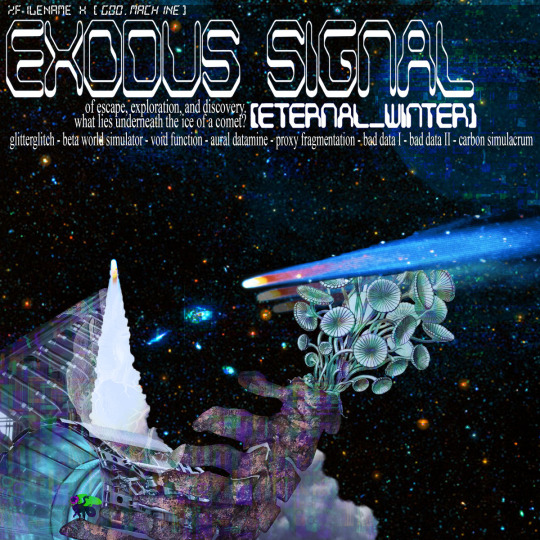

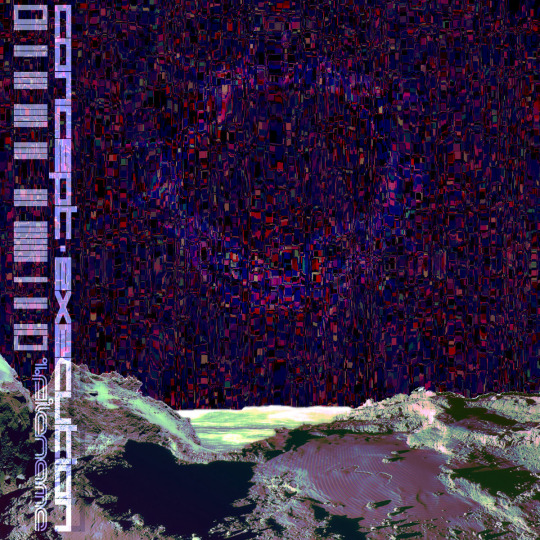

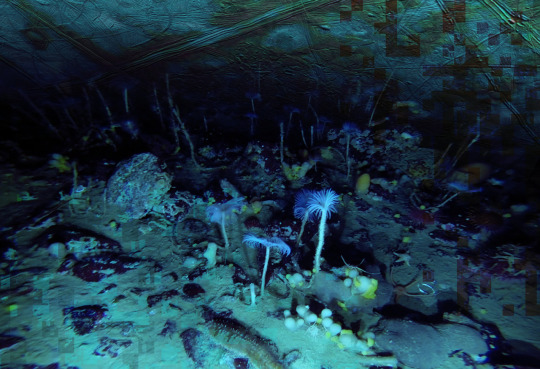

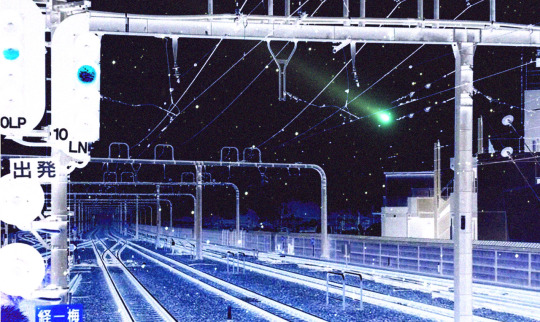

research & development is ongoing

since using jukebox for sampling material on albedo, i've been increasingly interested in ethically using ai as a tool to incorporate more into my own artwork. recently i've been experimenting with "commoncanvas", a stable diffusion model trained entirely on works in the creative commons. though i do not believe legality and ethics are equivalent, this provides me peace of mind that all of the training data was used consensually through the terms of the creative commons license. here's the paper on it for those who are curious! shoutout to @reachartwork for the inspiration & her informative posts about her process!

part 1: overview

i usually post finished works, so today i want to go more in depth & document the process of experimentation with a new medium. this is going to be a long and image-heavy post, most of it will be under the cut & i'll do my best to keep all the image descriptions concise.

for a point of reference, here is a digital collage i made a few weeks ago for the album i just released (shameless self promo), using photos from wikimedia commons and a render of a 3d model i made in blender:

and here are two images i made with the help of commoncanvas (though i did a lot of editing and post-processing, more on that process in a future post):

more about my process & findings under the cut, so this post doesn't get too long:

quick note for my setup: i am running this model locally on my own machine (rtx 3060, ubuntu 23.10), using the automatic1111 web ui. if you are on the same version of ubuntu as i am, note that you will probably have to build python 3.10.6 yourself. be sure to use 'make altinstall' instead of 'make install', and change the line in the webui to use 'python3.10' instead of 'python3'. (just mentioning this here because nobody else i could find had this exact problem and i had to figure it out myself.)
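
if you want to sanity-check the interpreter before launching the web ui, here is a minimal python sketch. it assumes the web ui wants a 3.10.x interpreter (as described above) and that 'make altinstall' put the binary on your PATH as 'python3.10' next to the system 'python3'. the function names are made up for this example and are not part of the web ui.

```python
import re
import shutil
import subprocess

# assumption: the web ui wants a 3.10.x interpreter, as described in the post
WANTED = (3, 10)


def parse_version(text):
    """turn output like 'Python 3.10.6' into (3, 10, 6); None if no version is found."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def is_wanted(version, wanted=WANTED):
    """true when the major.minor pair matches, e.g. (3, 10, 6) against (3, 10)."""
    return version is not None and version[:2] == wanted


def check_interpreter(name="python3.10"):
    """look up `name` on PATH and return (path, version), or None if it is missing."""
    path = shutil.which(name)
    if path is None:
        return None
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    # very old interpreters print the version to stderr, so read both streams
    return path, parse_version(result.stdout + result.stderr)


if __name__ == "__main__":
    found = check_interpreter()
    if found is None:
        print("python3.10 is not on PATH yet")
    else:
        path, version = found
        print(path, version, "ok" if is_wanted(version) else "wrong version")
```

if this reports that python3.10 is missing, the altinstall step probably did not finish or its install directory is not on your PATH.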

part 2: initial exploration

all the images i'll be showing here are the raw outputs of the prompts given, with no retouching/regenerating/etc.

so: commoncanvas has 2 different types of models, the "C" and "NC" models, trained on their database of works under the CC Commercial and Non-Commercial licenses, respectively (i think the NC dataset also includes the commercial license works, but i may be wrong). the NC model is larger, but both have their unique strengths:

"a cat on the computer", "C" model

"a cat on the computer", "NC" model

they both take the same amount of time to generate (17 seconds for four 512x512 images on my 3060). if you're really looking for that early ai jank, go for the commercial model. one thing i really like about commoncanvas is that it's really good at reproducing the styles of photography i find most artistically compelling: photos taken by scientists and amateurs. (the following images will be described in the captions to avoid redundancy):

"grainy deep-sea rover photo of an octopus", "NC" model. note the motion blur on the marine snow, greenish lighting and harsh shadows here, like you see in photos taken by those rover submarines that scientists use to take photos of deep sea creatures (and less like ocean photography done for purely artistic reasons, which usually has better lighting and looks cleaner). the anatomy sucks, but the lighting and environment are perfect.

"beige computer on messy desk", "NC" model. the reflection of the flash on the screen, the reddish-brown wood, and the awkward angle and framing are all reminiscent of a photo taken by a forum user with a cheap digital camera in 2007.

so the noncommercial model is great for vernacular and scientific photography. what's the commercial model good for?

"blue dragon sitting on a stone by a river", "C" model. it's good for bad CGI dragons. whenever i request dragons of the commercial model, i either get things that look like photographs of toys/statues, or i get gamecube type CGI, and i love it.

here are two little green freaks i got while trying to refine a prompt to generate my fursona. (i never succeeded, and i forget the exact prompt i used). these look like spore creations and the background looks like a bryce render. i really don't know why there's so much bad cgi in the datasets and why the model loves going for cgi specifically for dragons, but it got me thinking...

"hollow tree in a magical forest, video game screenshot", "C" model

"knights in a dungeon, video game screenshot", "C" model

i love the dreamlike video game environments and strange CGI characters it produces-- it hits that specific era of video games that i grew up with super well.

part 3: use cases

if you've seen any of the visual art i've done to accompany my music projects, you know that i love making digital collages of surreal landscapes:

(this post is getting image heavy so i'll wrap up soon)

i'm interested in using this technology more, not as a replacement for my digital collage art, but along with it as just another tool in my toolbox. and of course...

... this isn't out of lack of skill to imagine or draw scifi/fantasy landscapes.

thank you for reading such a long post! i hope you got something out of this post; i think it's a good look into the "experimentation phase" of getting into a new medium. i'm not going into my post-processing / GIMP stuff in this post because it's already so long, but let me know if you want another post going into that!

good-faith discussion and questions are encouraged but i will disable comments if you don't behave yourselves. be kind to each other and keep it P.L.U.R.

202 notes
