#cron scheduler


Note

Since Earth is Unicron, imagine if the Moon is some sort of Minicon of Unicron's or something

Like Unicron went out cold for so long and it just kind of stayed by, just chilling, and then Earth fully formed and gained a form of communication with the Moon, etcetera

Moon when Cron wakes up: Finally you're awake. Take care of your kid now

Unicron: what

Earth: :D

I honestly love the concept of sentient cities and planets. Like they interact with each other in their own ways that humans don't really comprehend or understand. Beasts on their own scale.

(Blame blood and bone by DragonflyxParodies, The Desert Storm series by BlueSunshine, and silverpard's London Incarnate series and a mirror, darkly. All of them on Ao3.)

Especially with a possible theory that the moon came from a very young/proto-Earth after a massive collision with an object, so-

Unicron made 1.5 kids or a child/sister with her weirder cat that likes a particular schedule with seasons, tides, and light.

#ask#transformers#transformer prime#tfp#unicron#gaea#fic recs#gods and goddesses#maccadam#my thoughts#magic#fantasy#cats run a very tight schedule and enjoy fucking with things

53 notes


Text

I've never had a coffee maker that ran on a schedule before but if I did I would want it to trigger with a cron job. Literally anything else dishonors our Unix heritage

30 notes


Text

⏱Hangfire + Serilog: How EasyLaunchpad Handles Jobs and Logs Like a Pro

Modern SaaS applications don’t run on user actions alone.

From sending emails and processing payments to updating user subscriptions and cleaning expired data, apps need background tasks to stay efficient and responsive.

That’s why EasyLaunchpad includes Hangfire for background job scheduling and Serilog for detailed, structured logging — out of the box.

If you’ve ever wondered how to queue, manage, and monitor background jobs in a .NET Core application — without reinventing the wheel — this post is for you.

💡 Why Background Jobs Matter

Imagine your app doing the following:

Sending a password reset email

Running a weekly newsletter job

Cleaning abandoned user sessions

Retrying a failed webhook

Syncing data between systems

If these were handled in real-time within your controller actions, it would:

Slow down your app

Create a poor user experience

Lead to lost or failed transactions under load

Background jobs solve this by offloading non-critical tasks to a queue for asynchronous processing.

🔧 Hangfire: Background Job Management for .NET Core

Hangfire is the gold standard for .NET Core background task processing. It supports:

Fire-and-forget jobs

Delayed jobs

Recurring jobs (via cron)

Retry logic

Job monitoring via a dashboard

Best of all, it doesn’t require a third-party message broker like RabbitMQ. It stores jobs in your existing database using SQL Server or any other supported backend.

✅ How Hangfire Is Integrated in EasyLaunchpad

When you start with EasyLaunchpad:

Hangfire is already installed via NuGet

It’s preconfigured in Startup.cs and appsettings.json

The dashboard is live and secured under /admin/jobs

Common jobs (like email dispatch) are already using the queue

You don’t have to wire it up manually — it’s plug-and-play.

Example: Email Queue

Let’s say you want to send a transactional email after a user registers. Here’s how it’s done in EasyLaunchpad:

_backgroundJobClient.Enqueue(() => _emailService.SendWelcomeEmailAsync(user.Id));

This line of code:

Queues the email job

Executes it in the background

Automatically retries if it fails

Logs the event via Serilog
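The queue-execute-retry behaviour listed above can be illustrated outside .NET. The following is a minimal Python sketch, not Hangfire's implementation: `BackgroundQueue`, `run_with_retries`, and the deliberately flaky `send_welcome_email` are hypothetical names, and the jobs live in memory rather than in a database.

```python
import queue
import threading

def run_with_retries(job, args, max_attempts=3):
    """Run job(*args), retrying on any exception. Returns the attempts used."""
    for attempt in range(1, max_attempts + 1):
        try:
            job(*args)
            return attempt
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller record the failure

class BackgroundQueue:
    """In-memory stand-in for a job queue with one worker thread."""

    def __init__(self):
        self._jobs = queue.Queue()
        self.results = []  # (job name, attempts used or None if it gave up)
        threading.Thread(target=self._work, daemon=True).start()

    def enqueue(self, job, *args):
        # Returns immediately; the worker thread does the actual work.
        self._jobs.put((job, args))

    def _work(self):
        while True:
            job, args = self._jobs.get()
            try:
                self.results.append((job.__name__, run_with_retries(job, args)))
            except Exception:
                self.results.append((job.__name__, None))
            finally:
                self._jobs.task_done()

    def wait(self):
        self._jobs.join()

# Hypothetical job that fails on its first call and succeeds on the second.
calls = {"count": 0}

def send_welcome_email(user_id):
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("mail server unavailable")

jobs = BackgroundQueue()
jobs.enqueue(send_welcome_email, "abc123")
jobs.wait()
print(jobs.results)
```

The caller returns from `enqueue` right away, which is the point of offloading: the request handler is not blocked while the email is retried.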

🛠 Supported Job Types

| Type | Description |
| --- | --- |
| Fire-and-forget | Runs once, immediately |
| Delayed | Runs once after a set time (e.g., 10 minutes later) |
| Recurring | Scheduled jobs using CRON expressions |
| Continuations | Run only after a parent job finishes successfully |

EasyLaunchpad uses all four types in various modules (like payment verification, trial expiration notices, and error logging).

🖥 Job Dashboard for Monitoring

Hangfire includes a web dashboard where you can:

See pending, succeeded, and failed jobs

Retry or delete failed jobs

Monitor job execution time

View exception messages

In EasyLaunchpad, this is securely embedded in your admin panel. Only authorized users with admin access can view and manage jobs.

🔄 Sample Use Case: Weekly Cleanup Job

Need to delete inactive users weekly?

In EasyLaunchpad, just schedule a recurring job:

_recurringJobManager.AddOrUpdate(
    "InactiveUserCleanup",
    () => _userService.CleanupInactiveUsersAsync(),
    Cron.Weekly
);

Set it and forget it.
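To make "weekly" concrete, here is a small Python sketch that computes the next run of a weekly cron schedule. It assumes a schedule of the form `0 0 * * 1` (Monday at midnight) purely for illustration; check the Hangfire documentation for the exact expression `Cron.Weekly` produces. `next_weekly_run` is a hypothetical helper.

```python
from datetime import datetime, timedelta

def next_weekly_run(now, cron_dow=1, hour=0, minute=0):
    """Next time strictly after `now` matching `minute hour * * cron_dow`.

    cron_dow uses cron numbering (0 = Sunday, 1 = Monday, ...).
    """
    py_dow = (cron_dow - 1) % 7  # Python's weekday(): Monday = 0
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    candidate += timedelta(days=(py_dow - now.weekday()) % 7)
    if candidate <= now:
        candidate += timedelta(days=7)  # this week's slot already passed
    return candidate

# 20 July 2024 was a Saturday, so the next Monday midnight is 22 July.
print(next_weekly_run(datetime(2024, 7, 20, 14, 22)))
```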

🧠 Why This Is a Big Deal for Devs

Most boilerplates don’t include job scheduling at all.

In EasyLaunchpad, Hangfire is not just included — it’s used throughout the platform, meaning:

You can follow working examples

Extend with custom jobs in minutes

Monitor, retry, and log with confidence

You save days of setup time, and more importantly, you avoid production blind spots.

📋 Logging: Meet Serilog

Of course, background jobs are only useful if you know what they’re doing.

That’s where Serilog comes in.

In EasyLaunchpad, every job execution is logged with:

Timestamps

Job names

Input parameters

Exceptions (if any)

Success/failure status

This structured logging ensures you have a full audit trail of what happened — and why.

Sample Log Output

{
  "Timestamp": "2024-07-20T14:22:10Z",
  "Level": "Information",
  "Message": "Queued email job: PasswordReset for userId abc123",
  "JobType": "Background",
  "Status": "Success"
}
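For readers outside .NET, the same idea of structured log entries can be sketched with Python's standard `logging` module. This is an analogy, not Serilog: `JsonFormatter` and the `job_type` and `status` extras are hypothetical names chosen to mirror the fields in the sample above.

```python
import io
import json
import logging
from datetime import datetime, timezone

class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object with named fields."""

    def format(self, record):
        created = datetime.fromtimestamp(record.created, tz=timezone.utc)
        return json.dumps({
            "Timestamp": created.strftime("%Y-%m-%dT%H:%M:%SZ"),
            "Level": record.levelname,
            "Message": record.getMessage(),
            # Fields passed through `extra=` become attributes on the record.
            "JobType": getattr(record, "job_type", None),
            "Status": getattr(record, "status", None),
        })

stream = io.StringIO()  # stands in for a console, file, or aggregator sink
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("jobs")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.info(
    "Queued email job: %s for userId %s", "PasswordReset", "abc123",
    extra={"job_type": "Background", "status": "Success"},
)
print(stream.getvalue())
```

Because every entry is a JSON object, a log aggregator can filter on `Status` or `JobType` directly instead of parsing free text.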

You can send logs to:

Console (for dev)

File (for basic prod usage)

External log aggregators like Seq, Elasticsearch, or Datadog

All of this is built into EasyLaunchpad’s logging layer.

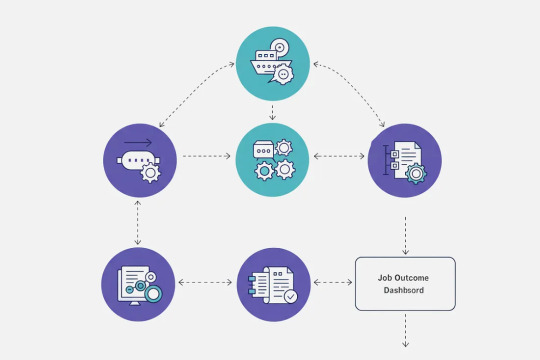

🧩 How Hangfire and Serilog Work Together

Here’s a quick visual breakdown:

Job Triggered → Queued via Hangfire

Job Executed → Email sent, cleanup run, webhook processed

Job Outcome Logged → Success or error captured by Serilog

Job Visible in Dashboard → Retry if needed

Notifications Sent (optional) → Alert team or log activity via admin panel

This tight integration ensures your background logic is reliable, observable, and actionable.

💼 Real-World Use Cases You Can Build Right Now

| Feature | Background Job |
| --- | --- |
| Welcome Emails | Fire-and-forget |
| Trial Expiration | Delayed |
| Subscription Cleanup | Recurring |
| Payment Webhook Retry | Continuation |
| Email Digest | Cron-based job |
| System Backups | Nightly scheduled |

Every one of these is ready to be implemented using the foundation in EasyLaunchpad.

✅ Why Developers Love It

| Feature | Benefit |
| --- | --- |
| Hangfire Integration | Ready-to-use queue system |
| Preconfigured Retry | Avoid lost messages |
| Admin Dashboard | See and manage jobs visually |
| Structured Logs | Full traceability |
| Plug-and-Play Jobs | Add your own in minutes |

🚀 Final Thoughts

Robust SaaS apps aren’t just about UI and APIs — they’re also about what happens behind the scenes.

With Hangfire + Serilog built into EasyLaunchpad, you get:

A full background job system

Reliable queuing with retry logic

Detailed, structured logs

A clean, visual dashboard

Zero config — 100% production-ready

👉 Launch smarter with EasyLaunchpad today. Start building resilient, scalable applications with background processing and logging already done for you. 🔗 https://easylaunchpad.com

#.net development#.net boilerplate#Hangfire .net Example#easylaunchpad#Serilog Usage .net#Background Jobs Logging#prebuilt apps#Saas App Development

2 notes


Text

Leveraging XML Data Interface for IPTV EPG

This blog explores the significance of optimizing the XML Data Interface and XMLTV schedule EPG for IPTV. It emphasizes the importance of EPG in IPTV, preparation steps, installation, configuration, file updates, customization, error handling, and advanced tips.

The focus is on enhancing user experience, content delivery, and securing IPTV setups. The comprehensive guide aims to empower IPTV providers and tech enthusiasts to leverage the full potential of XMLTV and EPG technologies.

1. Overview of the Context:

The context focuses on the significance of optimizing the XML Data Interface and leveraging the latest XMLTV schedule EPG (Electronic Program Guide) for IPTV (Internet Protocol Television) providers. L&E Solutions emphasizes the importance of enhancing user experience and content delivery by effectively managing and distributing EPG information.

This guide delves into detailed steps on installing and configuring XMLTV to work with IPTV, automating XMLTV file updates, customizing EPG data, resolving common errors, and deploying advanced tips and tricks to maximize the utility of the system.

2. Key Themes and Details:

The Importance of EPG in IPTV: The EPG plays a vital role in enhancing viewer experience by providing a comprehensive overview of available content and facilitating easy navigation through channels and programs. It allows users to plan their viewing by showing detailed schedules of upcoming shows, episode descriptions, and broadcasting times.

Preparation: Gathering Necessary Resources: The article highlights the importance of gathering required software and hardware, such as XMLTV software, EPG management tools, reliable computer, internet connection, and additional utilities to ensure smooth setup and operation of XMLTV for IPTV.

Installing XMLTV: Detailed step-by-step instructions are provided for installing XMLTV on different operating systems, including Windows, Mac OS X, and Linux (Debian-based systems), ensuring efficient management and utilization of TV listings for IPTV setups.

Configuring XMLTV to Work with IPTV: The article emphasizes the correct configuration of M3U links and EPG URLs to seamlessly integrate XMLTV with IPTV systems, providing accurate and timely broadcasting information.

3. Customization and Automation:

Automating XMLTV File Updates: The importance of automating XMLTV file updates for maintaining an updated EPG is highlighted, with detailed instructions on using cron jobs and scheduled tasks.

Customizing Your EPG Data: The article explores advanced XMLTV configuration options and leveraging third-party services for enhanced EPG data to improve the viewer's experience.

Handling and Resolving Errors: Common issues related to XMLTV and IPTV systems are discussed, along with their solutions, and methods for debugging XMLTV output are outlined.

Advanced Tips and Tricks: The article provides advanced tips and tricks for optimizing EPG performance and securing IPTV setups, such as leveraging caching mechanisms, utilizing efficient data parsing tools, and securing authentication methods.
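As a rough illustration of what working with EPG data involves, the sketch below parses a tiny XMLTV document with Python's standard library. The element and attribute names (`tv`, `channel`, `programme`, `start`, `stop`) follow the XMLTV format; the channel id, names, and times are made-up sample values, and `parse_programmes` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# Made-up sample guide; a real file would come from an XMLTV grabber or EPG URL.
SAMPLE_XMLTV = """<tv>
  <channel id="news.example">
    <display-name>Example News</display-name>
  </channel>
  <programme start="20250301180000 +0000" stop="20250301183000 +0000" channel="news.example">
    <title>Evening Bulletin</title>
  </programme>
</tv>"""

XMLTV_TIME = "%Y%m%d%H%M%S %z"  # e.g. 20250301180000 +0000

def parse_programmes(xmltv_text):
    """Return one dict per programme, with channel ids resolved to display names."""
    root = ET.fromstring(xmltv_text)
    names = {c.get("id"): c.findtext("display-name") for c in root.findall("channel")}
    guide = []
    for programme in root.findall("programme"):
        channel_id = programme.get("channel")
        guide.append({
            "channel": names.get(channel_id, channel_id),
            "title": programme.findtext("title"),
            "start": datetime.strptime(programme.get("start"), XMLTV_TIME),
            "stop": datetime.strptime(programme.get("stop"), XMLTV_TIME),
        })
    return guide

guide = parse_programmes(SAMPLE_XMLTV)
for entry in guide:
    print(entry["channel"], entry["title"], entry["start"].isoformat())
```

A scheduled task (cron on Linux, Task Scheduler on Windows) could run a script like this after each guide refresh to validate the file before it is served to players.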

The conclusion emphasizes the pivotal enhancement of IPTV services through the synergy between the XML Data Interface and XMLTV Guide EPG, offering a robust framework for delivering engaging and easily accessible content. It also encourages continual enrichment of knowledge and utilization of innovative tools to stay at the forefront of IPTV technology.

4. Language and Structure:

The article is written in English and follows a structured approach, providing detailed explanations, step-by-step instructions, and actionable insights to guide IPTV providers, developers, and tech enthusiasts in leveraging the full potential of XMLTV and EPG technologies.

The conclusion emphasizes the pivotal role of the XML Data Interface and XMLTV Guide EPG in enhancing IPTV services and points readers toward further information and innovative tools. It serves as a call to action for IPTV providers, developers, and enthusiasts to explore the sophisticated capabilities of XMLTV and EPG technologies for delivering unparalleled content viewing experiences.


7 notes


Text

The 10 Best Hosting Packages for WordPress Developers in 2025 – Speckyboy

New Post has been published on https://thedigitalinsider.com/the-10-best-hosting-packages-for-wordpress-developers-in-2025-speckyboy/


Updated: 5th of March, 2025

As a WordPress developer, choosing the right host package is one of the most important decisions you can make. Performance, security, scalability, and development tools all play a role in whether a hosting provider is worth considering.

A great host should offer an optimized server stack with the latest PHP versions, solid database support, and built-in caching. Reliable uptime, global CDN integration, and multiple server locations help sites run fast for visitors everywhere.

Security is another major factor. Automated backups, malware scanning, and free SSL certificates help protect data. A staging environment makes testing safer, while features like WP-CLI, Git integration, SSH, and SFTP access give you more control over your work. Flexible resource allocation and support for both vertical and horizontal scaling mean a site can grow without hassle or having to switch hosts.

This collection ranks hosting providers based on those technical features mentioned above. Every developer has different needs, so requirements should come first—and cost second. Each hosting provider here meets the key standards a WordPress developer would expect, making them strong choices for any project.

Pressable is a managed WordPress host designed for developers who need performance, security, and scalability. It runs on Automattic’s WP Cloud.

They have built-in page and query caching and are supported by a global CDN. Automated daily backups, malware scanning, and free SSL certificates are included. A one-click staging environment allows for safe testing and quicker deployment.

You get WP-CLI access, Git integration, SSH, SFTP, and auto-scaling for traffic spikes. Core updates are managed automatically (optional), and plugins or themes can be updated on a schedule.

Support is available 24/7, with response times under four minutes. The Pressable hosting environment is optimized for WordPress and guarantees 100% uptime.

Our Rating: 9.8/10

Get 50% Off All Pressable Plans Using Promo Code

Kinsta is a managed WordPress host built on Google Cloud, using C3D and C2 virtual machines. It includes server-level caching and a free CDN with over 260 locations. The platform guarantees 99.9% uptime and offers 37 data center options.

Security features include free SSL certificates, malware removal, and daily backups. A one-click staging environment allows for safe testing before deployment. Developers get WP-CLI, Git integration, SSH, SFTP, and flexible resource scaling.

Kinsta supports automatic core updates, optional plugin and theme auto-updates, and cron job scheduling. You can scale resources such as CPU, RAM, and storage as needed. Kinsta offers an optimized stack for WordPress, making it a great choice for WordPress developers that want performance, security, and flexibility.

Our Rating: 9.7/10

WordPress.com is a managed WordPress hosting platform with a global infrastructure designed for performance and reliability. It runs on high-frequency CPUs and uses a built-in caching system with Global Edge Cache and a CDN with over 28 data centers worldwide.

Security features include Jetpack Scan for malware detection and removal, real-time backups with one-click restore through VaultPress, and free SSL certificates. Their one-click staging environment allows for safe testing before deployment.

You have access to WP-CLI, SSH, SFTP, and GitHub integration. The platform supports scaling to handle traffic spikes and resource demands. Automatic core updates are included, with optional scheduled plugin and theme updates.

Our Rating: 9.7/10

Get 50% Off All WordPress.com Plans Using Promo Code

Bluehost is a managed WordPress hosting provider with built-in caching, automatic scaling, and a global CDN. The platform runs on PHP 5 and higher with MySQL 8 databases.

Security features include free SSL certificates, malware scanning, and daily backups with easy restoration. A staging environment is available for safe testing before deployment.

You have access to WP-CLI, SSH, and SFTP. They support cron job scheduling and automatic core updates.

The Bluehost hosting environment is built to handle traffic surges with vertical and horizontal scaling, making it a practical option for growing websites.

Our Rating: 9.6/10

Hostinger is a managed WordPress hosting provider with LiteSpeed web servers and support for PHP 7.4 and higher. It includes built-in caching and a comprehensive global CDN. They guarantee 99.9% uptime.

Security features include a WordPress vulnerability scanner, daily and on-demand backups, and free SSL certificates. A one-click staging tool is available for testing changes before deployment.

You have access to WP-CLI, SSH, and SFTP. The platform allows CPU, RAM, and storage scaling to handle traffic increases. Custom cron job scheduling is supported.

Automatic core updates are included, with optional smart updates for plugins and themes. Hostinger’s hosting environment is designed for speed, security, and flexibility.

Our Rating: 9.5/10

InMotion Hosting is a managed WordPress provider with an UltraStack infrastructure that includes Apache and NGINX Reverse Proxy. It supports PHP 7 and 8, built-in caching, and global CDN. They guarantee a 99.9% uptime.

Security features include malware protection, automated backups, and free SSL certificates. A one-click staging tool is available for testing.

You have access to WP-CLI, Git, SSH, and SFTP, and they support cron job scheduling and automatic core updates. Plugin and theme auto-updates are also available.

Our Rating: 9.4/10

Cloudways is a managed WordPress host with a flexible cloud-based infrastructure. It supports PHP 7.4 to 8.2 and runs on Nginx and Apache with MariaDB and MySQL databases. Built-in caching includes Memcached, Varnish, and Redis.

Users can choose from over 50 data centers worldwide through various cloud providers. A Cloudflare CDN add-on is available to improve site speed. Security measures include dedicated firewalls, security patching, and IP whitelisting. Automated backups with one-click restore are included, along with free SSL certificates.

You have access to WP-CLI, Git integration, SSH, and SFTP. CPU, RAM, and storage scaling are supported with vertical and horizontal scaling options. Core updates can be managed, and automatic plugin and theme updates are available through SafeUpdates.

Our Rating: 9.4/10

SiteGround offers managed WordPress hosting with a setup that supports PHP 7.4 through 8.2, running on Nginx and Apache with MySQL databases. SuperCacher is built in for page and object caching, and a CDN is included to speed up content delivery.

Security includes daily backups, automatic security patches, and proactive updates. Free SSL certificates from Let’s Encrypt come standard. A one-click staging tool allows for rapid testing before pushing live.

You have access to WP-CLI, Git, SSH, and SFTP. Sites can scale CPU, RAM, and storage to handle growth. Core updates are managed automatically, and plugins and themes can be set to update on a schedule.

Our Rating: 9.3/10

DreamPress is a managed WordPress hosting service, built on DreamHost’s cloud computing service OpenStack. It includes server-side caching and has a global CDN.

Security features include daily automated backups with one-click restore, malware scanning, and free SSL certificates from Let’s Encrypt. They also include a one-click staging environment.

You have access to WP-CLI, Git integration, SSH, and SFTP. The platform allows flexible resource allocation with both vertical and horizontal scaling to support growing sites. Core updates are managed automatically, and optional plugin and theme auto-updates are available. Cron job scheduling is also supported.

Our Rating: 9.3/10

A2 Hosting provides managed WordPress hosting with a stack that includes LiteSpeed servers and MariaDB databases. The A2 Optimized plugin offers built-in page and object caching. A 99.9% uptime guarantee is included, and while a global CDN is not built-in, Cloudflare integration is supported.

Security measures include HackScan, firewalls, and malware removal. Automatic daily backups with easy restore options are available. Free SSL certificates are provided for all sites.

A one-click staging environment allows testing before deployment. Developers have access to WP-CLI, Git, SSH, and SFTP. CPU, RAM, and storage can be scaled as needed. Configurable core updates are available, along with optional plugin and theme auto-updates.

Our Rating: 9.2/10

The Questions We Ask Each Host

For each web host in this collection, we asked 18 developer-focused questions to confirm that they provide everything a WordPress developer needs. Here are the questions we asked.

✔ Do they have an optimized server stack? What does it include?

✔ Do they have built-in caching?

✔ Do they provide a high uptime guarantee?

✔ Do they integrate with a global CDN to reduce latency?

✔ Do they offer multiple server location options?

✔ Do they provide malware scanning and removal?

✔ Do they include automatic and regular backups?

✔ Do they offer free SSL certificates, such as Let’s Encrypt?

✔ Do they provide a one-click staging environment?

✔ Do they support WP-CLI?

✔ Do they offer Git integration or version control support?

✔ Do they allow flexible resource allocation for scaling CPU, RAM, and storage?

✔ Do they support both vertical and horizontal scaling for growing sites?

✔ Do they offer 24/7 support via phone, chat, or email?

✔ Do they provide SSH and SFTP access for secure file management?

✔ Do they support cron job management for custom scheduling?

✔ Do they allow configurable core updates?

✔ Do they offer optional automatic updates for plugins and themes?

This page may contain affiliate links. At no extra cost to you, we may earn a commission from any purchase via the links on our site. You can read our Disclosure Policy at any time.


#2025#ADD#add-on#affiliate#Apache#automatic updates#backups#BlueHost#BlueHost Hosting#C2#cache#cdn#certificates#Cloud#cloud computing#cloud providers#cloudflare#cloudways#code#comprehensive#computing#content#cpu#data#Data Center#Data Centers#Database#databases#deployment#Design

2 notes


Text

Alright, I have a plan for March!!!

And I am going to post it here in the vague hope that it makes me stick to it.

So, as I've said in previous posts, I want to post more of my short stories over March. More of my Superhero Short Stories.

So, March is now my month for Tales of Hero City!!!

(Now with new, EVEN MORE IMPROVED crappy banner, and a fixed spelling error)

(Bloody hell...)

I'll be posting Tales of Hero City short stories throughout the month, and just to keep me to task

I've come up with a schedule:

01/03/25 - Law and Disorder (AO3 Link)

Detective Lucy Washburn has to solve a bank robbery... all the while dealing with a gosh darned superhero in the mix.

07/03/25 - Surreal Estate (AO3 Link)

Hero team, Omi and Cron, have just had their hideout wrecked.

It's time to go house hunting.

11/03/25 - Academic Rigour (AO3 Link)

Tessa Kwells has to impress her teachers if she wants the grade she deserves. Luckily, she has a supervillain in the family.

17/03/25 - Trust and Nemesis (AO3 Link)

When Justice Man's daughter is kidnapped, he turns to the one person he can trust. His nemesis.

21/03/25 - New Management (AO3 Link)

The Villains Bureau is mostly just paperwork, trying to keep a few dozen crazed criminals in line. But what happens when an idiot makes a bid for the throne?

27/03/25 - Start of Watch (AO3 Link)

Stealth Watcher is new to heroing. And having no powers can be a bit of a setback. But he's not going to let those superpowered do-gooders upstage him!

31/03/25 - Love is a Superhero Fight (AO3 Link)

Tessa Kwells is having some difficulties in her love life, and as a villain.

Strangely, her nemesis Judgement is both these problems.

-

So yeah, seven Short Stories over March. Please, do read them, but even if you can't, please Reblog and Share so that maybe someone else might.

I am also working on an AO3 account to post them on, but my invite isn't scheduled until mid-March. Didn't realise you needed an invite.

I'll of course still be editing in the background, got a contest to prepare for, but I'm just hoping people will enjoy these stories.

So, yeah. There's my plan.

Looking forward to it!

2 notes


Text

SYSTEM ADMIN INTERVIEW QUESTIONS 24-25

Table of Contents

Introduction

File Permissions

User and Group Management:

Cron Jobs

System Performance Monitoring

Package Management (Red Hat)

Conclusion

Introduction

The IT field is vast, and Linux is an important player, especially in cloud computing. This blog is written under the guidance of industry experts to help all tech and non-tech background individuals secure interviews for roles in the IT domain related to Red Hat Linux.

File Permissions

Briefly explain how Linux file permissions work, and how you would change the permissions of a file using chmod.

In Linux, each file and directory has three types of permissions: read (r), write (w), and execute (x), for three categories of users: owner, group, and others. Example: chmod 744 filename gives full permissions to the owner and read-only permission to the group and others; each octal digit encodes one category (7 = rwx, 4 = r--).
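The octal notation can be checked with a few lines of Python. This is a small sketch using the standard `stat` module; `describe_mode` is a hypothetical helper name, and the temporary file exists only to demonstrate the `chmod` call.

```python
import os
import stat
import tempfile

def describe_mode(octal_digits):
    """Turn an octal permission string such as '744' into 'rwxr--r--'."""
    # stat.filemode() prefixes a file-type character, which is dropped here.
    return stat.filemode(int(octal_digits, 8))[1:]

print(describe_mode("744"))  # rwxr--r--
print(describe_mode("644"))  # rw-r--r--

# Equivalent of `chmod 744 filename`, applied to a throwaway file.
with tempfile.NamedTemporaryFile() as handle:
    os.chmod(handle.name, 0o744)
    applied = stat.S_IMODE(os.stat(handle.name).st_mode)
print(oct(applied))
```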

What is the purpose of the umask command? How does it help control default file permissions?

umask sets the default permissions for newly created files and directories by clearing the masked bits from the full permissions (777 for directories and 666 for files). Example: If you set the umask to 022, new files will have permissions of 644 (rw-r--r--), and directories will have 755 (rwxr-xr-x).
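The umask arithmetic is a bitwise operation, which a short Python sketch makes explicit. `default_permissions` is a hypothetical helper; it models the calculation only and does not change the process umask.

```python
def default_permissions(umask, is_directory=False):
    """Permissions a new file or directory gets under the given umask."""
    base = 0o777 if is_directory else 0o666
    return base & ~umask  # clear every bit that is set in the mask

print(oct(default_permissions(0o022)))                     # 0o644
print(oct(default_permissions(0o022, is_directory=True)))  # 0o755

# Masking is not plain subtraction: 666 "minus" 033 would suggest 633,
# but clearing the masked bits actually yields 644.
print(oct(default_permissions(0o033)))                     # 0o644
```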

User and Group Management:

Name the command that adds a new user in Linux and the command responsible for adding a user to a group.

The useradd command creates a new user, while the usermod command adds a user to a specific group. Example: Create a user called jenny with sudo useradd jenny and add her to the developers group with sudo usermod -aG developers jenny, where the -aG option appends the user to the listed groups without removing her from her existing groups.

How do you view the groups that a user belongs to in Linux?

The groups command in Linux lists the groups a user belongs to; it is followed by the username. Example: To check user john's groups: groups john

Cron Jobs

What are cron jobs, and how would you schedule a script to run every day at 2 AM?

A cron job is a task defined in a crontab file. Cron is a Linux utility that schedules tasks to run automatically at specified times. Example: To schedule a script ( /home/user/backup.sh ) to run daily at 2 AM: 0 2 * * * /home/user/backup.sh where 0 is the minute, 2 is the hour, and the three asterisks mean every day of the month, every month, and every day of the week.
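To see how the five fields are read, here is a simplified Python matcher. It is a sketch, not cron's real parser: it handles only `*`, single numbers, comma lists, and ranges, requires all five fields to match (real cron treats a restricted day-of-month and day-of-week as alternatives), and does not accept 7 for Sunday. `field_matches` and `cron_matches` are hypothetical names.

```python
from datetime import datetime

def field_matches(field, value):
    """True if one crontab field ('*', '5', '1,15', '9-17') accepts value."""
    if field == "*":
        return True
    for part in field.split(","):
        if "-" in part:
            low, high = part.split("-")
            if int(low) <= value <= int(high):
                return True
        elif int(part) == value:
            return True
    return False

def cron_matches(expression, moment):
    """True if `moment` falls on the schedule `minute hour day month weekday`."""
    minute, hour, day, month, weekday = expression.split()
    cron_weekday = (moment.weekday() + 1) % 7  # cron counts Sunday as 0
    return (
        field_matches(minute, moment.minute)
        and field_matches(hour, moment.hour)
        and field_matches(day, moment.day)
        and field_matches(month, moment.month)
        and field_matches(weekday, cron_weekday)
    )

print(cron_matches("0 2 * * *", datetime(2025, 3, 1, 2, 0)))   # True
print(cron_matches("0 2 * * *", datetime(2025, 3, 1, 14, 0)))  # False
```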

How would you prevent cron job emails from being sent every time the job runs?

By default, cron sends an email with the output of the job. You can prevent this by redirecting the output to /dev/null. Example: To run a script daily at 2 AM and discard its output: 0 2 * * * /home/user/backup.sh > /dev/null 2>&1
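The same redirection can be expressed when a job is launched from a script. This Python sketch mirrors `> /dev/null 2>&1` with the standard `subprocess` module; the noisy child process is a hypothetical stand-in for a real job such as the backup script.

```python
import subprocess
import sys

# Hypothetical noisy job: a child process that writes to stdout and stderr.
noisy_job = [
    sys.executable, "-c",
    "import sys; print('done'); print('warning', file=sys.stderr)",
]

result = subprocess.run(
    noisy_job,
    stdout=subprocess.DEVNULL,  # like `> /dev/null`
    stderr=subprocess.STDOUT,   # like `2>&1`: stderr follows stdout
)
print(result.returncode)
```

Discarding everything also hides errors, so checking the exit code (or logging to a file instead) is usually worth the extra line.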

System Performance Monitoring

How can you monitor system performance in Linux? Name some tools with their uses.

Some of the tools for monitoring performance are:

top: Live view of system processes and resource usage.

htop: A more user-friendly alternative to top, with an interactive interface.

vmstat: Displays information about processes, memory, paging, block I/O, and CPU usage.

iostat: Shows CPU and I/O statistics for devices and partitions.

Example: You can use the top command ( top ) to identify processes consuming too much CPU or memory.

In Linux, how would you check the usage of disk space?

The df command checks disk space usage, and du checks the size of a directory or file. Example: To check overall disk space usage: df -h The -h option displays sizes in a human-readable format such as GB or MB.
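A script can read the same numbers without shelling out to df. The sketch below uses `shutil.disk_usage` from the standard library; `human_readable` is a hypothetical helper that approximates the `-h` style with 1024-based units, and its rounding may differ slightly from df's.

```python
import shutil

def human_readable(num_bytes):
    """Format a byte count with 1024-based units, roughly like `df -h`."""
    size = float(num_bytes)
    for unit in ("B", "K", "M", "G", "T"):
        if size < 1024 or unit == "T":
            return f"{size:.1f}{unit}"
        size /= 1024

usage = shutil.disk_usage("/")  # total, used, and free space in bytes
print("total:", human_readable(usage.total))
print("used: ", human_readable(usage.used))
print("free: ", human_readable(usage.free))
```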

Package Management (Red Hat)

How do you install, update, or remove packages in Red Hat-based Linux distributions by yum command?

In Red Hat and CentOS systems, the yum package manager is used to install, update, or remove software.

Install a package: sudo yum install httpd (this installs the Apache web server).

Update a package: sudo yum update httpd

Remove a package: sudo yum remove httpd

By which command will you check the installation of a package on a Red Hat system?

The yum list installed command is required to check whether the package is installed. Example: To check if httpd (Apache) is installed: yum list installed httpd

Conclusion

The questions are designed by our experienced corporate faculty to help you prepare well for positions that require Linux, such as System Admin.

Contact for Course Details – 8447712333

2 notes


Text

The Importance of Regular SSD Health Checks for Data Security

Solid-State Drives (SSDs) have become the preferred storage solution due to their speed, durability, and energy efficiency. However, despite their advanced technology, SSDs are not immune to wear and potential failures. Regular health checks play a crucial role in ensuring data security, preventing data loss, and maintaining optimal performance. This article explores why routinely testing SSD drive health is essential and how it safeguards critical data.

Why SSD Health Checks Matter

1. Preventing Data Loss

Unlike traditional hard drives, SSDs have a finite number of write cycles. Over time, repeated writes can degrade NAND flash memory, leading to data corruption or loss. Regular health checks help identify wear patterns and alert users before critical failures occur.

2. Detecting Early Signs of Failure

Tools that read SMART (Self-Monitoring, Analysis, and Reporting Technology) data can detect issues such as bad blocks, high temperatures, and excessive reallocated sectors. Identifying these warning signs early allows users to take preventative action, like data backup or drive replacement.

3. Maintaining Optimal Performance

As SSDs age, their performance can degrade due to factors like increased bad blocks or inefficient data management. Regular health checks help identify performance bottlenecks and ensure that features like TRIM are functioning correctly to maintain speed and responsiveness.

4. Enhancing Data Security

Failing SSDs can lead to partial data corruption, making sensitive information vulnerable. Regular health checks reduce the risk of data breaches by ensuring that the storage medium remains secure and intact.

How to Perform Regular SSD Health Checks

1. Utilize SMART Monitoring Tools

CrystalDiskInfo (Windows): Provides real-time health statistics and temperature monitoring.

Smartmontools (Linux/macOS): Command-line tools (smartctl) for in-depth drive analysis.

Manufacturer-Specific Utilities: Samsung Magician, Intel SSD Toolbox, and others offer tailored monitoring and firmware updates.

2. Schedule Automated Health Checks

Windows Task Scheduler: Set up recurring checks using tools like CrystalDiskInfo.

Linux Cron Jobs: Automate smartctl commands to log health data periodically.

macOS Automator: Create workflows that run disk utility scripts at regular intervals.

3. Monitor Key SMART Attributes

Reallocated Sectors Count: Indicates bad blocks that have been replaced.

Wear Leveling Count: Reflects the evenness of data distribution across memory cells.

Temperature: High temperatures can accelerate wear and cause failures.
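A scheduled check usually comes down to parsing the attribute table and flagging anything at or below its threshold. The Python sketch below does that for text in the column layout printed by `smartctl -A`; the sample rows are made-up values for illustration, not readings from a real drive, and the function names are hypothetical. Attribute names and raw-value meanings vary by manufacturer.

```python
# Made-up sample in the column layout of `smartctl -A`; not real drive data.
SAMPLE_REPORT = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       0
177 Wear_Leveling_Count     0x0013   098   098   000    Pre-fail  Always       -       37
190 Airflow_Temperature_Cel 0x0032   067   052   000    Old_age   Always       -       33
"""

def parse_smart_attributes(report):
    """Map attribute name -> id, normalized value, threshold, and raw value."""
    attributes = {}
    for line in report.splitlines():
        parts = line.split()
        # Attribute rows start with a numeric ID; the header row does not.
        if len(parts) >= 10 and parts[0].isdigit():
            raw = parts[9]
            attributes[parts[1]] = {
                "id": int(parts[0]),
                "value": int(parts[3]),
                "threshold": int(parts[5]),
                "raw": int(raw) if raw.isdigit() else raw,
            }
    return attributes

def failing_attributes(attributes):
    """Names whose normalized value has dropped to or below a nonzero threshold."""
    return [
        name for name, attr in attributes.items()
        if attr["threshold"] > 0 and attr["value"] <= attr["threshold"]
    ]

attributes = parse_smart_attributes(SAMPLE_REPORT)
print(attributes["Reallocated_Sector_Ct"])
print(failing_attributes(attributes))
```

In a cron job or scheduled task, the report text would come from running smartctl against the drive, and a non-empty result from `failing_attributes` would trigger a backup or an alert.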

Best Practices for SSD Health and Data Security

Regular Backups: Always maintain updated backups to safeguard against sudden failures.

Enable TRIM: Ensures that deleted data blocks are efficiently managed.

Keep Firmware Updated: Manufacturers often release updates to fix bugs and improve drive reliability.

Avoid Full Drive Usage: Maintain at least 10-20% free space to allow efficient data management.
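The free-space guideline is easy to check programmatically. The sketch below uses Python's standard `shutil.disk_usage`; the path `/` and the 10% floor are assumptions taken from the lower end of the range above, not a vendor recommendation.

```python
import shutil

def free_fraction(path="/"):
    """Return the fraction of the filesystem containing `path` that is free."""
    usage = shutil.disk_usage(path)
    return usage.free / usage.total

def needs_cleanup(fraction_free, minimum=0.10):
    """True when free space has dropped below the chosen minimum fraction."""
    return fraction_free < minimum

frac = free_fraction("/")
print(f"{frac:.0%} free; cleanup needed: {needs_cleanup(frac)}")
```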

Conclusion

Regular SSD health checks are a proactive strategy to ensure data security, prevent unexpected failures, and optimize performance. With the right tools and scheduled monitoring, users can extend the lifespan of their SSDs and protect valuable data. In an age where data integrity is paramount, regular health checks are not just recommended—they're essential.


Profile Display Mechanism

18+ please. Spambots and bigots subject to on-sight cutoff regulations. My existence isn't up for debate.

More info under the cut, but meh

Who am I?

Kat/Katerina/Katgirl At-Scale

She/It

Trans

Poly + Pan

26

Seeker of Knowledge

Artist-in-progress

Writer-in-distress

Meow

Things I like

Cybersecurity (In-joke: Lightbulbs, otherwise I fucking love cryptography and BGP)

Systems At-Scale (Mostly OpenTofu/Terraform and AWS but I like Ansible quite a bit.)

Language (Gotta keep the brain sharp somehow...)

Finding Digital Esoterica (Why is there a PON tester open to the internet? I dunno but that's pretty interesting!)

Playing with design (There was this one forum signature post I really liked....)

Music (Drum and Bass, Electro-Industrial, Trip Hop, many others)

Creating computational horrors but not in like the really shitty unethical way (I'm eating your e820 and it's really tasty)

Public Transport (I fucking love the DC Metro)

My Sideblogs

Autumnrain Radio (Music I like) [autumnrain-radio]

Katerina Obscura (Photography) [katerina-obscura]

Things I'm doing now

Stretching the bounds of my design knowledge

Beating my head into the pavement as my existence meets reality or something similarly philosophically masturbatory, probably (the world is making me weary)

Detangling my neuroses and quirks

Not sleeping, apparently!

and way way too much more. I'm always doing SOMETHING.

Tags I use

#inspiration distillation conduit -> Inspirations for design work

#design annealment lattice -> my design works-in-progress, experiments, etc

#kat cron daemon -> Scheduled posts

#paradigm: gridspace freedom -> general trans posts, the trans experience as it meets the local sleepers¹, etc

#ramblings of a cryotank -> Sad/Stressed posts

#katerina e820 -> text-only posts and scattered thoughts

#katerina obscura -> reblogs of my photography sideblog to here.

[1]: why yes I have been obsessing about Mage the Ascension how can you tell? I'm borrowing Mage as metaphor; There's a whole thing. Ask me about it later idk


Powering Smarter Data Pipelines with Automated Scheduling and No-Code API Tools

The Problem: Legacy Data Workflows Are Slowing Businesses Down

Across industries, data teams are spending far too much time wrestling with outdated or overly complex data integration methods. Whether it's pulling data from dozens of third-party APIs, scheduling jobs to move data between systems, or transforming raw data into something meaningful—manual workflows are slow, error-prone, and inefficient.

Even basic tasks like syncing marketing analytics, customer data, or eCommerce metrics can take hours of manual effort, especially when data is coming from REST APIs or when engineers are needed just to build and maintain scripts.

A Smarter, Simpler Data Automation Platform

We address these challenges head-on. By streamlining how data is ingested, moved, and delivered, the platform empowers teams to focus on insights, not infrastructure.

Let’s explore the three core innovations that make the platform a standout solution:

1. Automated Data Scheduling: Set It, Forget It, and Stay Informed

Imagine never having to manually run a report or refresh a dashboard again. With automated data scheduling, you can define when and how often your data should update—hourly, daily, weekly, or even by custom trigger events.

From syncing CRM data each morning to updating inventory every 15 minutes, automated scheduling ensures that your team always has fresh, up-to-date data, right when they need it. And since the process is fully automated, it eliminates the risk of human error and missed updates.

Key benefits include:

Improved reporting accuracy

Time savings for analysts and developers

Reduced reliance on manual workflows or cron jobs

Whether you're building dashboards or powering machine learning models, automated scheduling keeps your data pipelines on time, every time.

2. REST API Data Automation: Simplifying Complex API Integrations

Modern businesses rely on an ecosystem of tools—CRMs, ERPs, ad platforms, payment processors—all offering data via REST APIs. But integrating these APIs is rarely simple. They involve authentication, pagination, error handling, and data transformation—tasks typically left to skilled developers.

The platform changes that.

With its built-in support for REST API data automation, the platform makes it easy to connect, extract, and normalize data from nearly any API, all without writing code. Users simply configure API endpoints using guided forms, and the platform handles the rest: authentication, retries, and data parsing included.

This not only accelerates integration timelines, but also puts powerful data sources into the hands of business users and analysts, not just engineers.
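For a sense of the hand-written work being abstracted away, here is a minimal sketch of just the pagination step in Python. The `fetch_page` callable and the page-number scheme are hypothetical stand-ins for a real HTTP client; real APIs often use cursors or link headers instead, and authentication and error handling would add further code.

```python
def fetch_all(fetch_page, page_size=100):
    """Collect every record from a page-numbered API.

    `fetch_page(page, page_size)` is a hypothetical callable that returns the
    list of records for one page; a short or empty list marks the last page.
    """
    records = []
    page = 1
    while True:
        batch = fetch_page(page, page_size)
        records.extend(batch)
        if len(batch) < page_size:
            return records
        page += 1

# Stand-in for a real HTTP call: serves 250 fake records in pages.
DATA = [{"id": i} for i in range(250)]

def fake_fetch(page, page_size):
    start = (page - 1) * page_size
    return DATA[start:start + page_size]

print(len(fetch_all(fake_fetch)))
```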

Use cases include:

Pulling customer data from payment gateways

Syncing marketing campaign performance from ad platforms

Consolidating eCommerce metrics from multiple marketplaces

With the platform, REST APIs become a bridge, not a barrier.

3. Point-and-Click Data Tools: No Code, No Problem

Data integration has traditionally required either complex SQL or custom code. But that model doesn’t scale, especially for non-technical teams. The platform solves this with a suite of intuitive point-and-click data tools that allow users to configure data pipelines, transformations, and destinations using a simple interface.

No coding. No command-line. Just powerful functionality with user-friendly controls.

With a few clicks, users can:

Merge data from multiple sources

Filter, sort, and enrich data sets

Map fields between different systems

Push data to BI tools or cloud storage

These tools democratize data access and enable every team—marketing, finance, operations—to build and manage their own automated workflows without waiting on IT or data engineers.

The Big Picture: Scalable Data Workflows for a Modern Business

As companies scale, their data needs become more complex. More APIs, more tools, more teams, and more insights to deliver. Manual data management simply can’t keep up.

The platform provides a future-ready solution that grows with your organization. Its combination of automated data scheduling, REST API data automation, and point-and-click data tools empowers businesses to streamline operations, drive real-time insights, and unlock new opportunities from their data.


Inside an Odoo Development Company: A Systems Engineer's Perspective

Where process meets code, and complexity becomes a craft

Enterprise software is not just about automating tasks — it’s about structuring how organizations function. ERP (Enterprise Resource Planning) systems are the digital infrastructure that govern finance, supply chain, HR, manufacturing, and everything in between. Among the wide range of ERP systems, Odoo has emerged as a uniquely powerful platform — not because it’s ready-made, but because it’s designed to be made ready.

This modularity and openness, however, come with a cost: intelligent implementation. And that’s where an Odoo development company plays a role far beyond “installation.” Let’s walk through the landscape of what such companies actually do — from backend architecture to frontend UX, from ORM design to business rule orchestration — all written from the lens of real-world implementation.

1. Why Odoo Development Is Not Just “Customization”

It’s a common misconception that Odoo is plug-and-play. While it can be used out-of-the-box for small businesses, most mid-sized and enterprise organizations require development, not just configuration. This development can fall into several layers:

a. Model Layer (Database + Business Objects)

At its core, Odoo uses an object-relational mapping (ORM) framework layered on PostgreSQL. Each model represents a business object — and these are often extended or redefined to accommodate custom needs. A typical Odoo dev project will:

Define new models as Python classes that inherit from Odoo's base model class

Extend existing ones using Odoo's model inheritance mechanism

Add computed fields, constraints, access rules, and indexes

Use inheritance modes to extend or replace logic without breaking core behavior

b. View Layer (User Interface)

UI in Odoo is generated dynamically via XML-defined views, QWeb templating, and now OWL components. Developers work with:

Form and tree views with field-level access controls

Kanban and calendar views for operational teams

Smart buttons, context actions, and domain filters

OWL (Odoo Web Library) for reactive frontend behavior, replacing legacy jQuery-based widgets

c. Workflow Layer (Automation)

ERP isn't static. It’s a set of flows, and Odoo allows for automating them using:

Server actions and automated actions

Scheduled cron jobs for background tasks (e.g., automated invoices, reminders)

Python-based workflows to replace deprecated legacy systems

Integration with webhooks and asynchronous queues (RabbitMQ, Redis) for non-blocking processes

This layered structure makes Odoo powerful but also demands structured engineering — which is the real product of an Odoo development company.

2. How a Real Development Team Approaches a Project

a. Discovery Is the Foundation

Before any development begins, the team must decode the business. This means interviewing users across departments, mapping legacy systems, documenting exceptions, and most importantly — identifying the real pain points.

For instance:

Does procurement get delayed because approvals aren’t centralized?

Is inventory data unreliable due to offline sync issues?

Are compliance reports being manually compiled because of disconnected modules?

These answers don’t map to technical specifications directly. But they define the problem space the team must solve.

b. Domain-Driven Design in ERP

Good Odoo developers think in terms of domain logic, not modules. They start by modeling the organization’s vocabulary — products, vendors, routes, agents, compliance stages — and translate that into structured data models.

This results in:

Clearly named classes and fields

Relationships that reflect real-world dependencies

Model constraints and validation logic that reduce bad data at the source

This also means resisting over-reliance on “default modules” and instead engineering clarity into the system.

3. Integration: The Hidden Complexity

Modern businesses rarely use a single system. Odoo has to talk to:

CRMs (Salesforce, HubSpot)

eCommerce platforms (Magento, WooCommerce)

Shipping carriers (FedEx APIs, EasyPost, Delhivery)

Tax systems (Avalara, GSTN, etc.)

External data feeds (EDI, SFTP, APIs)

Each integration requires:

API client development using Python’s libraries

Middleware for data transformation (especially when schemas differ)

Sync strategies (push vs pull, scheduled vs real-time)

Retry queues and failure logging
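The retry and failure-logging item can be sketched in a few lines of plain Python. This is a generic exponential-backoff helper under assumed defaults (three attempts, delays doubling from one second), not Odoo's own queueing machinery; the `flaky_sync` function is a made-up stand-in for an external API call.

```python
import logging
import time

logger = logging.getLogger("integration")

def call_with_retry(operation, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run `operation()`; after a failure wait base_delay * 2**n, then retry."""
    for attempt in range(attempts):
        try:
            return operation()
        except Exception as exc:
            logger.warning("attempt %d/%d failed: %s", attempt + 1, attempts, exc)
            if attempt == attempts - 1:
                raise  # out of retries: let the caller queue the job or alert
            sleep(base_delay * 2 ** attempt)

# Hypothetical flaky call: fails twice, then succeeds.
calls = {"n": 0}

def flaky_sync():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("carrier API timed out")
    return "synced"

delays = []  # record the waits instead of actually sleeping
print(call_with_retry(flaky_sync, sleep=delays.append), delays)
```

Passing `sleep` as a parameter keeps the example fast to run; production code would leave the default and usually cap the maximum delay.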

A good Odoo development company builds middleware pipelines — not one-off connectors. They create logging dashboards, API rate management, and monitoring hooks to ensure long-term stability.

4. Versioning and Upgrades: A Quiet Challenge

Odoo releases a new version yearly, and major changes (like the shift to OWL or new accounting frameworks) often break old code.

To survive these upgrades:

Developers isolate custom modules from core changes

Use test environments with demo data and sandboxed upgrades

Write migration scripts to remap fields and relations

Follow naming conventions and avoid hard-coded logic

This is an ongoing code hygiene practice, not a one-time task. Dev companies that ignore this soon find their systems stuck in legacy versions, unable to adapt to change.

5. Performance Engineering Is Not Optional

A working ERP isn’t just functional — it has to be fast. Especially with large data volumes (10K+ products, 1M+ invoices), performance tuning becomes critical.

Typical performance interventions include:

Adding PostgreSQL indexes for filter-heavy views

Optimizing read-heavy models

Batching record access in loops to avoid N+1 query patterns

Separating batch processing into async jobs

Profiling using Odoo’s logging levels, SQL logs, and custom benchmark scripts
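The N+1 pattern mentioned above is easiest to see outside any framework. The sketch below uses Python's built-in `sqlite3` with made-up `partner` and `invoice` tables rather than Odoo's ORM or PostgreSQL, so it illustrates the idea only: the first function issues one query per partner, the second gets the same totals from a single grouped query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE partner (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE invoice (id INTEGER PRIMARY KEY, partner_id INTEGER, total REAL);
    CREATE INDEX invoice_partner_idx ON invoice (partner_id);
    INSERT INTO partner VALUES (1, 'Acme'), (2, 'Globex');
    INSERT INTO invoice VALUES (1, 1, 100.0), (2, 1, 50.0), (3, 2, 75.0);
""")

def totals_n_plus_one(conn):
    """One query for the partners, then one more query per partner."""
    result = {}
    partners = conn.execute("SELECT id, name FROM partner").fetchall()
    for pid, name in partners:
        row = conn.execute(
            "SELECT SUM(total) FROM invoice WHERE partner_id = ?", (pid,)
        ).fetchone()
        result[name] = row[0]
    return result

def totals_batched(conn):
    """The same totals from a single grouped query."""
    rows = conn.execute("""
        SELECT p.name, SUM(i.total)
        FROM partner p JOIN invoice i ON i.partner_id = p.id
        GROUP BY p.id
    """).fetchall()
    return dict(rows)

print(totals_batched(conn) == totals_n_plus_one(conn))
```

With two partners the difference is invisible; with thousands of records, the per-row round trips in the first version are what dominate the runtime.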

The job of an Odoo development company isn’t just to make things work — it’s to make them scale responsibly.

6. UX Is a Development Concern

ERP systems often fall into the trap of being “complete but unusable.” Good developers don’t offload UX to designers — they own it as part of the codebase.

This includes:

Context-aware defaults (e.g., auto-filling fields based on last action)

Guided flows (like a wizard or checklist for complex processes)

Custom reports (using Studio, JasperReports, or Python rendering engines)

Mobile-friendly layouts for field workers

They treat user complaints (“It takes 12 clicks to approve a PO”) as bugs, not training issues.

7. Maintenance Culture: Logging, Docs, DevOps

Any real development team knows that the code they write today will be inherited by someone else — maybe even themselves in six months.

That’s why they:

Use structured logging for every transaction

Write internal documentation and comments with context, not just syntax

Automate deployments via Docker, GitLab CI/CD, and Odoo.sh pipelines

Implement staging environments and rollback mechanisms

A production ERP system is never done — and development teams must build for longevity.

Final Thoughts: ERP as Infrastructure, Not Software

Most people think of ERP as “tools that help a business run.” But from the perspective of a development company, ERP is infrastructure. It is the digital equivalent of plumbing, wiring, and load-bearing beams.

An Odoo development company isn’t a vendor. It’s a system architect and process engineer — someone who reshapes how data flows, how decisions are enforced, and how work actually gets done.

There’s no applause when a multi-step manufacturing process flows from RFQ to delivery without a glitch. But in that silence is the sound of a system doing its job — thoughtfully built, quietly running, and always ready to adapt.

#Odoo Development Company#Odoo ERP Development#Odoo Implementation Experts#Odoo Developers#Odoo Software Development#Odoo ERP Integration#Odoo Technical Consulting#Enterprise Odoo Solutions


Automating backups on your storage VPS is not just a technical step; it's a business safeguard. Data loss can be devastating, but it’s entirely avoidable with the right strategy.

From setting up cron jobs to writing backup scripts and pushing data offsite, automation takes the stress out of data protection. Regardless of whether you're hosting on a cheap virtual server, managing resources through VPS hosting services, or operating high-traffic systems on dedicated hosting services, automated backups give you peace of mind and operational resilience.


Is Your VPS Underperforming? It Could Be Your vCPU Allocation

🖥️ How Virtual CPU (vCPU) Allocation Impacts VPS Performance

When choosing a Virtual Private Server (VPS), one of the most misunderstood specifications is the vCPU — or virtual CPU. It might seem like just another number on a hosting plan, but in reality, vCPU allocation plays a major role in the performance, responsiveness, and stability of your applications.

Whether you're running a website, SaaS product, game server, or backend system, understanding how vCPUs work can help you select the right VPS and avoid costly performance bottlenecks.

In this blog, we’ll break down what a vCPU really is, how it works under the hood, and how VCCLHOSTING ensures optimal CPU allocation for your workloads.

💡 What is a vCPU?

A vCPU (virtual CPU) is a portion of a physical CPU (core or thread) allocated to your VPS by the hypervisor (the software that powers virtualization). Think of it as your VPS’s brain — the more vCPUs you have, the more simultaneous tasks it can handle.

In cloud hosting environments, vCPUs are mapped to physical cores or hyperthreads (via Intel Hyper-Threading or AMD SMT), and their performance is influenced by how many virtual machines are sharing those cores.

⚙️ How vCPUs Affect VPS Performance

Here’s how vCPU allocation directly impacts your hosting experience:

1. Parallel Processing Power

More vCPUs = more parallel processing = faster execution for CPU-heavy tasks.

Great for multi-threaded applications, such as media servers, compilers, and big data workloads.

2. Task Queuing & Latency

With too few vCPUs, tasks queue up and wait their turn.

This causes slow page loads, timeouts, and poor user experience — especially under high traffic.

3. Database & Application Speed

Apps like MySQL, PostgreSQL, Node.js, and Python APIs scale better with more CPU headroom.

vCPU bottlenecks lead to query lag, dropped connections, or delayed processing.

🧠 vCPU vs Core: What’s the Difference?

Term: Description

vCPU: Virtual processor thread assigned to a VPS

Core: Actual processing unit on a physical CPU

Thread: A logical division of a core (via hyper-threading)

Multiple vCPUs can be backed by the same physical core — so 4 vCPUs doesn’t necessarily mean 4 full physical cores.

With VCCLHOSTING, we maintain a low contention ratio, ensuring your vCPUs aren’t overloaded with noisy neighbors.

🧪 Real-World Examples

Use Case: Minimum Recommended vCPUs

Basic WordPress Site: 1–2 vCPUs

Small SaaS or CRM: 2–4 vCPUs

Video Streaming or Gaming: 4–6 vCPUs

Machine Learning API: 6–8 vCPUs

cPanel Hosting with Email + DB: 2–4 vCPUs

🔍 Signs You Need More vCPUs

High CPU usage consistently above 80%

Slow server response during peak traffic

Background tasks (like backups or cron jobs) slowing down your app

MySQL queries taking too long

Uploads, downloads, or API calls lagging

If you notice any of the above, it’s time to upgrade your VPS plan with more CPU power.
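The first sign in the list, usage that stays above 80%, can be told apart from harmless short bursts with a small check like the Python sketch below. The sample values and the rule that 90% of readings must exceed the threshold are assumptions for illustration; the readings themselves would come from whatever monitoring you already have.

```python
def sustained_high_cpu(samples, threshold=80.0, min_fraction=0.9):
    """True if at least `min_fraction` of CPU-usage samples exceed `threshold`.

    `samples` are percentages (0-100) collected at regular intervals, e.g.
    from a monitoring agent or a control-panel export.
    """
    if not samples:
        return False
    above = sum(1 for s in samples if s > threshold)
    return above / len(samples) >= min_fraction

spiky = [35, 92, 40, 38, 95, 41]      # brief bursts: usually fine
saturated = [88, 91, 86, 93, 90, 85]  # pinned high: likely CPU-bound
print(sustained_high_cpu(spiky), sustained_high_cpu(saturated))
```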

🔧 How VCCLHOSTING Optimizes vCPU Allocation

At VCCLHOSTING, we use high-performance infrastructure to ensure:

Fair vCPU Scheduling: No over-provisioning, no surprises.

Dedicated Resource Tiers: Get exclusive CPU power when needed.

Performance Monitoring: Track CPU usage in real-time via your control panel.

Flexible Upgrades: Scale from 1 vCPU to 16+ without downtime.

24/7 Support: Get expert advice on choosing the right plan.

🧩 Final Thoughts

When choosing a VPS, don’t just compare vCPU counts blindly. Consider:

Your workload type (static website vs dynamic app)

Your expected traffic

Your performance expectations

A well-optimized VPS with 2 vCPUs may outperform a poorly configured 4 vCPU server.

VCCLHOSTING offers scalable, high-performance VPS plans with clean resource allocation and honest performance. Whether you're starting small or scaling big, we’ve got you covered.

Need help choosing the right VPS plan? 📞 Call: 9096664246 🌐 Visit: www.vcclhosting.com


Top 5 Challenges in Astrology App Development (Solved)

Astrology is undergoing a digital transformation in 2025. With Gen Z and Millennials leading the way, the demand for mobile-first spiritual tools has never been higher. What was once reserved for newspaper columns or in-person consultations is now available at your fingertips thanks to astrology apps.

The global astrology market is now valued at over $12 billion and digital platforms are playing a huge role in this growth. But if you're a startup founder, entrepreneur, app developer or freelancer looking to tap into this booming space, there’s a catch:

✅ Building an accurate, secure and scalable astrology app is not as easy as reading a daily horoscope.

In this article, we’ll explore the top 5 astrology app development challenges and how to solve them using modern tech, smart UX, and AI integration.

1. Challenge: Ensuring Prediction Accuracy

Astrology is only as good as its predictions. But most apps face major problems:

Poorly-sourced or outdated astrological data

Lack of certified astrologer input

Generic predictions that don’t feel personalized

Solution:

Use Verified Astrological Data Sets: Tools like the Swiss Ephemeris offer accurate planetary data that can be used to power daily predictions, compatibility charts and more.

Collaborate with Professional Astrologers: Having real experts validate your algorithm improves trust and credibility.

Integrate AI Personalization: AI can tailor horoscope content based on user behavior, zodiac sign, past readings, and more, delivering a more meaningful experience.

2. Challenge: Protecting User Privacy & Sensitive Birth Data

Astrology apps ask users for their birth date, time, and location, which are extremely personal. Mishandling this data can lead to serious privacy violations and legal issues.

Solution:

End-to-End Encryption: Ensure all birth-related data is encrypted both at rest and in transit. Use a strong cipher such as AES-256 for sensitive user info.

Anonymous Usage Options: Allow users to access basic features without revealing personal details. This builds trust with privacy-conscious users.

Compliance-First Design: Implement GDPR, CCPA and other data laws through transparent consent flows, opt-in permissions, and detailed privacy policies.

3. Challenge: Real-Time Updates & Notifications

From daily horoscopes to planetary transits and moon phases, astrology apps rely on timely, real-time data. Any lag or server delay can make your predictions feel outdated or, worse, irrelevant.

Solution:

Cloud-Based Infrastructure: Use reliable backend platforms like AWS, Google Cloud, or Firebase to host and scale your app with minimal downtime.

Cron Jobs for Automation: Automate your content pipeline (e.g., daily horoscopes) using cron jobs or cloud functions.

Push Notification Tools: Implement services like OneSignal or Firebase Cloud Messaging to send timely updates, alerts, and daily predictions.

4. Challenge: Interpreting Complex Birth Chart Data

A full natal chart can include dozens of astrological elements (sun, moon, rising, planets in houses, aspects, transits), making it too complex for average users to understand.

Solution:

Use Astrology APIs: Services like Astro-Seek or custom-built astrology engines can generate accurate charts with all key elements.

Visual Representation: Show users clean, mobile-friendly chart visualizations with tooltips and simple interpretations.

Gamify the Experience: Turn chart exploration into an engaging journey, e.g., “Unlock your Venus in Leo traits” or “Your next moon phase challenge.”

5. Challenge: Scaling Live Astrologer Features

Many apps now offer live chat or video calls with astrologers. But scaling this model is difficult:

Time zone mismatches

Scheduling conflicts

Limited expert availability

Language barriers

Solution:

Smart Scheduling System: Integrate a real-time calendar with timezone awareness, slot management, and buffer time for each session.

In-App Call Module: Use Twilio or Agora for real-time video/audio calls with astrologers.

Pre-Recorded Readings: Offer users personalized voice or video readings from astrologers, which can be delivered asynchronously — helping scale without real-time constraints.
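The timezone and buffer-time parts of the scheduling system can be sketched with Python's standard `datetime` module. The fixed UTC offsets, session length, and buffer below are assumptions chosen for illustration; a production system would more likely use IANA zone names (for example via `zoneinfo`) so that daylight saving changes are handled.

```python
from datetime import datetime, timedelta, timezone

IST = timezone(timedelta(hours=5, minutes=30))  # fixed offset, no DST handling
EST = timezone(timedelta(hours=-5))

def overlaps(start_a, start_b, duration, buffer):
    """True if two sessions of `duration` collide once `buffer` follows each."""
    block = duration + buffer
    return start_a < start_b + block and start_b < start_a + block

# An astrologer in India offers 18:00 local time; show it to a US Eastern user.
slot = datetime(2025, 1, 15, 18, 0, tzinfo=IST)
print(slot.astimezone(EST).strftime("%Y-%m-%d %H:%M"))

# Aware datetimes compare by instant, so mixed-zone bookings can be checked directly.
other = datetime(2025, 1, 15, 8, 0, tzinfo=EST)
print(overlaps(slot, other, timedelta(minutes=30), timedelta(minutes=10)))
```

Storing every booking as a timezone-aware value and converting only for display keeps the conflict check independent of where the user or the astrologer happens to be.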

Final Thoughts: Turn Astrology Challenges Into Scalable Features

The future of astrology apps in 2025 isn’t just about daily horoscopes; it's about building personalized, data-secure, and real-time experiences that users can trust and enjoy.

By tackling the top 5 challenges in astrology app development, you’ll be positioned to launch an app that actually works, not just mystifies.

Ready to build your astrology app without the stress?

At Deorwine, we specialize in secure, AI-powered, and scalable astrology apps. From birth chart algorithms to real-time predictions, we’ve got the tech and the talent to bring your vision to life.


Best Linux Training in Chandigarh & Cloud Computing Courses – The Path to a Successful IT Career in 2025

In today’s dynamic IT landscape, professionals must continuously evolve and stay ahead of the curve. Two of the most in-demand skill sets that open doors to lucrative careers are Linux system administration and cloud computing. Whether you’re an aspiring IT professional or someone looking to upskill in 2025, enrolling in Linux training in Chandigarh and cloud computing courses is a smart investment in your future.

Why Linux and Cloud Computing?

The modern IT infrastructure heavily relies on Linux-based systems and cloud platforms. Enterprises around the world prefer Linux for its stability, security, and open-source flexibility. Simultaneously, the adoption of cloud services like AWS, Microsoft Azure, and Google Cloud Platform (GCP) has soared as businesses transition to scalable, cost-efficient computing models.

These two technologies intersect deeply — most cloud-based environments run on Linux servers. Thus, mastering both Linux and cloud computing equips professionals with a competitive edge, enabling them to manage systems, deploy applications, and troubleshoot issues across complex networked environments.

Chandigarh – A Growing IT and Education Hub

Chandigarh has quickly grown into one of North India’s leading education and IT destinations. With the rise of industrial areas, IT parks, and startups, there is a growing demand for technically skilled professionals. Training institutes in Chandigarh offer up-to-date programs, expert mentors, and job placement assistance that align perfectly with market demands.

Whether you are a beginner or a working professional, finding the best Linux training in Chandigarh will set the foundation for a successful IT career.

Why Opt for Linux Training?

Linux powers the majority of servers, supercomputers, and enterprise environments globally. Here are a few compelling reasons to learn Linux:

1. High Demand Across Industries

From banks to e-commerce platforms, Linux is everywhere. System administrators, DevOps engineers, and security analysts often require deep knowledge of Linux.

2. Open Source and Cost-Effective

Being open-source, Linux allows individuals to download, use, and modify the OS for free. This fosters innovation and gives learners an opportunity to work on real-world scenarios.

3. Essential for Cloud and DevOps Careers

Understanding Linux is crucial if you plan to work in cloud environments or in DevOps roles. Almost every cloud platform requires command-line expertise and scripting in Linux.

4. Powerful Career Boost

Linux certifications like RHCSA (Red Hat Certified System Administrator), LFCS (Linux Foundation Certified System Administrator), and CompTIA Linux+ are highly regarded and can significantly enhance your employability.

Topics Covered in Linux Training Programs

Here’s what a comprehensive Linux training course typically includes:

Introduction to Linux OS and Distributions

Linux File System and Permissions

Shell Scripting and Bash Commands

User and Group Management

Software Installation and Package Management

Network Configuration and Troubleshooting

System Monitoring and Log Analysis

Job Scheduling with Cron

Server Configuration (Apache, DNS, DHCP, etc.)

Backup and Security Measures

Basics of Virtualization and Containers (Docker)

Such courses are ideal for beginners, professionals shifting to system administration, or those preparing for certification exams.

Importance of Cloud Computing Courses

Cloud computing is no longer a luxury — it is a necessity in the digital era. Whether you're developing applications, deploying infrastructure, or analyzing big data, the cloud plays a central role.

By enrolling in Cloud Computing Courses, you gain skills to design, deploy, and manage cloud-based environments efficiently.

Advantages of Cloud Computing Training

Versatility Across Platforms: You’ll get hands-on experience with Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), giving you broad exposure.

Career Opportunities: Cloud computing opens pathways to roles such as:

Cloud Engineer

Cloud Solutions Architect

DevOps Engineer

Site Reliability Engineer

Cloud Security Specialist

Project-Based Learning: Courses often include real-world projects like server provisioning, database deployment, CI/CD pipelines, and container orchestration using Kubernetes.

Integration with Linux: Since most cloud servers are based on Linux, combining both Linux and cloud skills makes you exceptionally valuable to employers.

Core Modules in a Cloud Computing Program

Cloud programs typically span across foundational to advanced topics:

Cloud Fundamentals & Service Models (IaaS, PaaS, SaaS)

Virtual Machines and Storage

Networking in the Cloud

Identity and Access Management (IAM)

Serverless Computing

DevOps Tools Integration (Jenkins, Docker, Kubernetes)

Monitoring and Logging

Load Balancing and Auto-Scaling

Data Security and Compliance

Disaster Recovery and Backups

Cost Optimization and Billing

Midway Recap

By now, it should be clear that mastering Linux and cloud computing together offers exponential benefits. To explore structured, practical, and certification-ready learning environments, consider enrolling in:

Linux Training in Chandigarh

Cloud Computing Courses

These courses are designed not only to provide theoretical knowledge but also to empower you with the practical experience needed in real-world job roles.

Choosing the Right Institute in Chandigarh

When selecting an institute for Linux or cloud computing, consider the following criteria:

Accreditation & Certification Partnerships: Choose institutes affiliated with Red Hat, AWS, Google Cloud, or Microsoft.

Experienced Faculty: Trainers with industry certifications and hands-on experience.

Real-Time Lab Infrastructure: Labs with Linux environments and cloud platform sandboxes.

Updated Curriculum: Courses aligned with the latest industry trends and certifications.

Placement Assistance: Resume building, interview preparation, and tie-ups with hiring partners.

Career Prospects After Training

Once you've completed training in Linux and cloud computing, a multitude of roles becomes available:

For Linux Professionals:

System Administrator

Network Administrator

Linux Support Engineer

DevOps Technician

For Cloud Computing Professionals:

Cloud Administrator

Solutions Architect

Infrastructure Engineer

Site Reliability Engineer

Additionally, knowledge of both domains makes you an ideal candidate for cross-functional roles that require expertise in hybrid environments.

Certification Matters

To stand out in a competitive job market, acquiring certifications validates your knowledge and boosts your resume. Consider certifications like:

Linux:

Red Hat Certified System Administrator (RHCSA)

Linux Foundation Certified Engineer (LFCE)

CompTIA Linux+

Cloud:

AWS Certified Solutions Architect

Microsoft Azure Administrator

Google Cloud Associate Cloud Engineer

These globally recognized certifications serve as proof of your commitment and expertise.

Final Thoughts

The fusion of Linux and cloud computing is shaping the future of IT. With businesses adopting cloud-first strategies and requiring skilled professionals to manage and optimize these environments, there has never been a better time to invest in your technical education.

Chandigarh, with its growing ecosystem of IT training institutes and industry demand, is a fantastic location to begin or enhance your tech journey. By enrolling in comprehensive Linux Training in Chandigarh and Cloud Computing Courses, you lay a solid foundation for a dynamic and future-ready career.

So, take the leap, get certified, and prepare to be a part of the digital transformation sweeping across industries in 2025 and beyond.


Introduction to Serverless and Knative

Unlocking Event-Driven Architecture on Kubernetes

As organizations seek faster development cycles and better resource utilization, serverless computing has emerged as a compelling solution. It allows developers to focus on writing code without worrying about the underlying infrastructure. But how can you bring serverless to the powerful ecosystem of Kubernetes?

Enter Knative—an open-source platform that bridges the gap between Kubernetes and serverless.

In this article, we’ll introduce serverless architecture, explain how Knative enhances Kubernetes with serverless capabilities, and explore why it’s becoming essential in modern cloud-native development.

🚀 What is Serverless?

At its core, serverless computing allows you to build and run applications without managing servers. Despite the name, servers still exist—but provisioning, scaling, and management are abstracted away and handled by the cloud provider or platform.

Key Characteristics:

No server management: Developers don’t manage infrastructure.

Event-driven: Functions or services respond to events or triggers.

Auto-scaling: Automatically scales based on demand.

Pay-as-you-go: You’re charged only for actual usage.
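The event-driven characteristic above can be sketched in a few lines of Python. This is only an illustration, not any platform's API: the `HANDLERS` registry, the `on` decorator, the `dispatch` function, and the `order.created` event type are all hypothetical names chosen for the example. The point is that code runs only when a matching event arrives.

```python
from typing import Callable, Dict

# Hypothetical registry: maps an event type to the function that handles it.
HANDLERS: Dict[str, Callable[[dict], str]] = {}


def on(event_type: str):
    """Register a function as the handler for one event type."""
    def register(fn: Callable[[dict], str]) -> Callable[[dict], str]:
        HANDLERS[event_type] = fn
        return fn
    return register


@on("order.created")  # assumed event name, for illustration only
def confirm_order(event: dict) -> str:
    return f"confirmation sent for order {event['order_id']}"


def dispatch(event: dict) -> str:
    """Run the handler registered for this event; nothing runs otherwise."""
    handler = HANDLERS.get(event["type"])
    if handler is None:
        raise LookupError(f"no handler for event type {event['type']!r}")
    return handler(event)
```

Calling `dispatch({"type": "order.created", "order_id": "A-17"})` runs `confirm_order`. On a real serverless platform, the platform plays the role of `dispatch` and also decides how many copies of the handler to run.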

Common Use Cases:

API backends

Real-time file or data processing

Scheduled tasks or cron jobs

Event-driven microservices

🧩 Serverless on Kubernetes: The Need

While Kubernetes has become the standard for orchestrating containers, it doesn’t offer native support for serverless out of the box. This gap led to the rise of platforms like Knative, which add serverless capabilities on top of Kubernetes.

⚙️ What is Knative?

Knative is an open-source Kubernetes-based platform designed to manage modern, container-based workloads with a serverless-like experience.

Originally developed at Google and now a CNCF project, Knative provides a set of middleware components that help developers deploy and manage functions and services on Kubernetes.

Knative’s Core Components:

Knative Serving: Handles the deployment of serverless applications and automatically manages scaling (including to zero), traffic routing, and versioning.

Knative Eventing: Enables building event-driven architectures. It decouples event producers and consumers and supports a variety of event sources.

Knative Functions (optional): Provides a streamlined developer experience for building and deploying single-purpose functions (still evolving and optional in some setups).

Knative brings the agility of serverless with the power of Kubernetes.
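To make "scaling (including to zero)" concrete, here is a deliberately simplified model of concurrency-based autoscaling. It is a sketch of the general idea, not Knative's actual autoscaler, which also averages metrics over time windows and applies further rules. The function name and the `target_per_replica` and `max_replicas` parameters are assumptions made for this example.

```python
import math


def desired_replicas(in_flight_requests: float,
                     target_per_replica: float,
                     max_replicas: int = 10) -> int:
    """Simplified model: enough replicas to keep each one at or below target.

    Returns 0 when there is no traffic, which is the scale-to-zero case.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if in_flight_requests <= 0:
        return 0  # idle service: no replicas needed
    needed = math.ceil(in_flight_requests / target_per_replica)
    return min(max_replicas, needed)
```

With an illustrative target of 100 concurrent requests per replica, `desired_replicas(250, 100)` gives 3 and `desired_replicas(0, 100)` gives 0.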

🌍 Real-World Example

Imagine an e-commerce platform where certain services—like order confirmation or payment notification—don’t need to run 24/7. Using Knative:

You deploy these services as serverless workloads.

They scale to zero when not in use.

When triggered (e.g., by a new order), they spin up automatically and handle the task.

You save on compute resources while the services are idle and still serve requests as they arrive, aside from a short cold-start delay on the first one.
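A service like the order confirmation above could look roughly like the following Python sketch. It assumes the event is delivered as an HTTP POST in the CloudEvents binary format (event attributes in `ce-` headers, payload in the body) and that the container listens on the port given by the `PORT` environment variable. The event type `com.example.order.created`, the `order_id` field, and the function names are hypothetical.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_order_event(headers: dict, body: bytes) -> tuple:
    """Return (status_code, message) for one delivered event.

    `headers` is expected to use lowercase keys.
    """
    # Assumed event type, for illustration only.
    if headers.get("ce-type") != "com.example.order.created":
        return 400, "unsupported event type"
    order = json.loads(body or b"{}")
    if "order_id" not in order:
        return 400, "missing order_id"
    return 200, f"confirmation queued for order {order['order_id']}"


class OrderHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        headers = {k.lower(): v for k, v in self.headers.items()}
        status, message = handle_order_event(headers, self.rfile.read(length))
        payload = message.encode()
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve() -> None:
    """Start the server; call this from the container entry point."""
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("", port), OrderHandler).serve_forever()
```

Keeping the decision logic in `handle_order_event` separate from the HTTP plumbing makes it easy to test without starting a server. The process itself holds no state between requests, which is what lets the platform stop it when idle and start it again on the next event.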

🧰 Getting Started with Knative

To start experimenting with Knative:

Set up a Kubernetes cluster (e.g., Minikube, GKE, EKS).

Install Knative Serving and Eventing via the published YAML manifests or the Knative Operator.

Deploy your first service using a Knative Service YAML manifest or the kn CLI.

Trigger your service with HTTP requests or event sources.
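For the deployment step, a minimal Knative Service manifest can be generated as in the sketch below. The service name and image are placeholders, and the `min-scale` and `max-scale` annotation names should be checked against the Knative version you install. The output is JSON, which `kubectl apply -f` accepts as well as YAML.

```python
import json


def knative_service(name: str, image: str,
                    min_scale: int = 0, max_scale: int = 5) -> dict:
    """Build a minimal Knative Service manifest as a plain dict."""
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "metadata": {
                    "annotations": {
                        # min-scale of 0 allows the service to scale to zero.
                        "autoscaling.knative.dev/min-scale": str(min_scale),
                        "autoscaling.knative.dev/max-scale": str(max_scale),
                    }
                },
                "spec": {"containers": [{"image": image}]},
            }
        },
    }


if __name__ == "__main__":
    # Placeholder name and image; replace with your own.
    manifest = knative_service(
        "order-confirmation",
        "registry.example.com/shop/order-confirmation:latest",
    )
    print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and applying it with `kubectl apply -f` should create the service, after which it can be called over HTTP at the URL Knative assigns to it.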

Knative’s documentation is robust, and the community is growing, making it easier to adopt.

🏁 Conclusion

Knative is revolutionizing how developers build and deploy applications on Kubernetes. It brings the flexibility of serverless, cost efficiency of event-driven workloads, and the robustness of Kubernetes into one powerful platform.

If you're already using Kubernetes and want to adopt serverless paradigms without vendor lock-in, Knative is a perfect fit.

✅ Quick Takeaways:

Serverless = focus on code, not infrastructure

Knative = serverless on Kubernetes

Best for microservices, event-driven apps, and on-demand workloads

Supports auto-scaling, routing, and eventing out of the box

Ready to go serverless on Kubernetes? Explore Knative and supercharge your application development today.

For more information, follow Hawkstack Technologies.

#Knative#Serverless#Kubernetes#CloudNative#EventDrivenArchitecture#DevOps#Microservices#CloudComputing
