#data engineering training


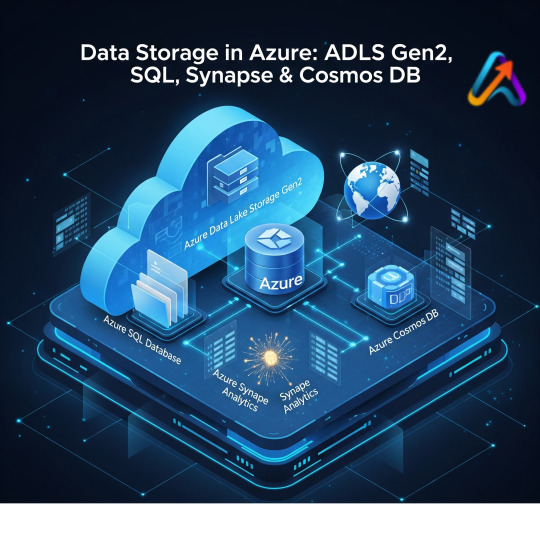

Azure Data Engineering Tools For Data Engineers

Azure is Microsoft’s cloud computing platform, and it offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that can be tailored to the unique requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully managed, serverless data integration service designed to handle data at scale. It enables data engineers to ingest, transform, and orchestrate large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple database APIs, including MongoDB, Apache Cassandra, and PostgreSQL. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure Database for PostgreSQL is a fully managed open-source database service that lets teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers built-in security, AI-assisted performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering

Classes: 200 hours of live classes

Lectures: 199 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 70% scholarship on this course

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps

Classes: 180+ hours of live classes

Lectures: 300 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 67% scholarship on this course

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training

3 notes


AWS Data Engineering Training Online Certification course in Ahmedabad

Enhance your skills with the AWS Data Engineering Online Certification course in Ahmedabad by Multisoft Systems. Learn to design and manage data pipelines, handle big data analytics, and optimize cloud data solutions. This course offers hands-on training and expert insights to boost your data engineering career. Enroll today and become a certified AWS data engineer!

#AWS Data Engineering Training in Ahmedabad#AWS Data Engineering Certification#AWS Data Engineering course#Data Engineering Training

0 notes


Projects and Challenges in a Data Engineering Bootcamp

Participants in a data engineering bootcamp can expect to work on real-world projects involving data pipelines, ETL processes, data warehousing, and big data technologies. Challenges may include designing and implementing scalable data architectures, optimizing workflows, and integrating various data sources. Additionally, participants may tackle issues related to data quality, data governance, and using cloud-based data engineering tools to solve practical data-related problems.

For more information visit: https://www.webagesolutions.com/courses/data-engineer-training

#Data Engineering Bootcamp#Data Engineering#Data Engineering course#Data Engineering training#Data Engineering certification

0 notes


Bullshit engines.

I mean, I don’t suppose many people following this blog strongly disagree.

#they are not search engines#they are not the new improved version! they are something else!#they are probabilistic text generators#they produce something plausible based on the data set they were trained on#there is no knowledge or understanding involved

13 notes


Learning C for work, and, even having learnt C++ syntax before, it is truly a humbling experience after having gotten so used to Python over the past half year 💀

#coding#freshly trained data engineer (me) learns why we use python for data#bc we'd lose our minds if we tried doing it with lower level languages holy heck#oh yeah I started my new job this week!!! I work for tram now

2 notes


Protecting Your AI Investment: Why Cooling Strategy Matters More Than Ever

New Post has been published on https://thedigitalinsider.com/protecting-your-ai-investment-why-cooling-strategy-matters-more-than-ever/


Data center operators are gambling millions on outdated cooling technology. The conversation around data center cooling isn’t just changing—it’s being completely redefined by the economics of AI. The stakes have never been higher.

The rapid advancement of AI has transformed data center economics in ways few predicted. When a single rack of AI servers costs around $3 million—as much as a luxury home—the risk calculation fundamentally changes. As Andreessen Horowitz co-founder Ben Horowitz recently cautioned, data centers financing these massive hardware investments “could get upside down very fast” if they don’t carefully manage their infrastructure strategy.

This new reality demands a fundamental rethinking of cooling approaches. While traditional metrics like PUE and operating costs are still important, they are secondary to protecting these multi-million-dollar hardware investments. The real question data center operators should be asking is: How do we best protect our AI infrastructure investment?

The Hidden Risks of Traditional Cooling

The industry’s historic reliance on single-phase, water-based cooling solutions carries increasingly unacceptable risks in the AI era. While it has served data centers well for years, the thermal demands of AI workloads have pushed this technology beyond its practical limits. The reason is simple physics: single-phase systems require higher flow rates to manage today’s thermal loads, increasing the risk of leaks and catastrophic failures.

This isn’t a hypothetical risk. A single water leak can instantly destroy millions in AI hardware—hardware that often has months-long replacement lead times in today’s supply-constrained market. The cost of even a single catastrophic failure can exceed a data center’s cooling infrastructure budget for an entire year. Yet many operators continue to rely on these systems, effectively gambling their AI investment on aging technology.

At Data Center World 2024, Dr. Mohammad Tradat, NVIDIA’s Manager of Data Center Mechanical Engineering, asked, “How long will single-phase cooling live? It’ll be phased out very soon…and then the need will be for two-phase, refrigerant-based cooling.” This isn’t just a growing opinion—it’s becoming an industry consensus backed by physics and financial reality.

A New Approach to Investment Protection

Two-phase cooling technology, which uses dielectric refrigerants instead of water, fundamentally changes this risk equation. The cost of implementing a two-phase cooling system—typically around $200,000 per rack—should be viewed as insurance for protecting a $5 million AI hardware investment. To put this in perspective, that’s a 4% premium to protect your asset—considerably lower than insurance rates for other multi-million dollar business investments. The business case becomes even clearer when you factor in the potential costs of AI training disruption and idle infrastructure during unplanned downtime.
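The premium quoted above is simple division, and it is sensitive to which hardware value is plugged in. As a rough sketch in Python (the function name `premium_rate` is only illustrative, not something from the article), the snippet below reproduces the 4% figure from the $200,000 and $5 million numbers and repeats the calculation against the roughly $3 million rack cost cited earlier in the piece:

```python
def premium_rate(cooling_cost: float, hardware_value: float) -> float:
    """Return the cooling cost as a fraction of the hardware value it protects."""
    return cooling_cost / hardware_value


# Figures quoted in the article: $200,000 of cooling per rack.
rate_at_5m = premium_rate(200_000, 5_000_000)  # $5 million hardware investment
rate_at_3m = premium_rate(200_000, 3_000_000)  # ~$3 million rack cost cited earlier

print(f"{rate_at_5m:.1%}")  # 4.0%
print(f"{rate_at_3m:.1%}")  # 6.7%
```

Either way the cooling spend stays a single-digit percentage of the hardware value, which is the point the comparison to insurance relies on.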

For data center operators and financial stakeholders, the decision to invest in two-phase cooling should be evaluated through the lens of risk management and investment protection. The relevant metrics should include not just operating costs or energy efficiency but also the total value of hardware being protected, the cost of potential failure scenarios, the future-proofing value for next-generation hardware and the risk-adjusted return on cooling investment.

As AI continues to drive up the density and value of data center infrastructure, the industry must evolve its approach to cooling strategy. The question isn’t whether to move to two-phase cooling but when and how to transition while minimizing risk to existing operations and investments.

Smart operators are already making this shift, while others risk learning an expensive lesson. In an era where a single rack costs more than many data centers’ annual operating budgets, gambling on outdated cooling technology isn’t just risky – it’s potentially catastrophic. The time to act is now—before that risk becomes a reality.

#000#2024#Accelsius#aging#ai#AI Infrastructure#ai training#approach#budgets#Business#cooling#data#Data Center#Data Centers#disruption#Economics#efficiency#energy#energy efficiency#engineering#factor#financial#Fundamental#Future#gambling#Hardware#how#how to#Industry#Infrastructure

2 notes


Who provides the best Informatica MDM training?

1. Introduction to Informatica MDM Training

Informatica MDM (Master Data Management) is a crucial aspect of data management for organizations dealing with large volumes of data. With the increasing demand for professionals skilled in Informatica MDM, the need for quality training has become paramount. Choosing the right training provider can significantly impact your learning experience and career prospects in this field.

2. Importance of Choosing the Right Training Provider

Selecting the best Informatica MDM training provider is essential for acquiring comprehensive knowledge, practical skills, and industry recognition. A reputable training provider ensures that you receive the necessary guidance and support to excel in your career.

3. Factors to Consider When Choosing Informatica MDM Training

Reputation and Experience

A reputable training provider should have a proven track record of delivering high-quality training and producing successful professionals in the field of Informatica MDM.

Course Curriculum

The course curriculum should cover all essential aspects of Informatica MDM, including data modeling, data integration, data governance, and data quality management.

Training Methodology

The training methodology should be interactive, engaging, and hands-on, allowing participants to gain practical experience through real-world scenarios and case studies.

Instructor Expertise

Experienced and certified instructors with extensive knowledge of Informatica MDM ensure effective learning and provide valuable insights into industry best practices.

Flexibility of Learning Options

Choose a training provider that offers flexible learning options such as online courses, instructor-led classes, self-paced learning modules, and blended learning approaches to accommodate your schedule and learning preferences.

4. Comparison of Training Providers

When comparing Informatica MDM training providers, consider factors such as cost, course duration, support services, and reviews from past participants. Choose a provider that offers the best value for your investment and aligns with your learning objectives and career goals.

5. Conclusion

Selecting the right Informatica MDM training provider is crucial for acquiring the necessary skills and knowledge to succeed in this competitive field. Evaluate different providers based on factors such as reputation, course curriculum, instructor expertise, and flexibility of learning options to make an informed decision.

Contact us 👇

📞Call Now: +91-9821931210 📧E Mail: [email protected] 🌐Visit Website: https://inventmodel.com/course/informatica-mdm-online-live-training

#informatica#software engineering#MDM Training#Informatica MDM Training#Data Management courses#MDM online course#online informatica training

3 notes


Navigating the Data Landscape: A Deep Dive into ScholarNest's Corporate Training

In the ever-evolving realm of data, mastering the intricacies of data engineering and PySpark is paramount for professionals seeking a competitive edge. ScholarNest's Corporate Training offers an immersive experience, providing a deep dive into the dynamic world of data engineering and PySpark.

Unlocking Data Engineering Excellence

Embark on a journey to become a proficient data engineer with ScholarNest's specialized courses. Our Data Engineering Certification program is meticulously crafted to equip you with the skills needed to design, build, and maintain scalable data systems. From understanding data architecture to implementing robust solutions, our curriculum covers the entire spectrum of data engineering.

Pioneering PySpark Proficiency

Navigate the complexities of data processing with PySpark, the Python API for Apache Spark. ScholarNest's PySpark course, hailed as one of the best online, caters to both beginners and advanced learners. Explore the full potential of PySpark through hands-on projects, gaining practical insights that can be applied directly in real-world scenarios.

Azure Databricks Mastery

As part of our commitment to offering the best, our courses delve into Azure Databricks learning. Azure Databricks, seamlessly integrated with Azure services, is a pivotal tool in the modern data landscape. ScholarNest ensures that you not only understand its functionalities but also leverage it effectively to solve complex data challenges.

Tailored for Corporate Success

ScholarNest's Corporate Training goes beyond generic courses. We tailor our programs to meet the specific needs of corporate environments, ensuring that the skills acquired align with industry demands. Whether you are aiming for data engineering excellence or mastering PySpark, our courses provide a roadmap for success.

Why Choose ScholarNest?

Best PySpark Course Online: Our PySpark courses are recognized for their quality and depth.

Expert Instructors: Learn from industry professionals with hands-on experience.

Comprehensive Curriculum: Covering everything from fundamentals to advanced techniques.

Real-world Application: Practical projects and case studies for hands-on experience.

Flexibility: Choose courses that suit your level, from beginner to advanced.

Navigate the data landscape with confidence through ScholarNest's Corporate Training. Enrol now to embark on a learning journey that not only enhances your skills but also propels your career forward in the rapidly evolving field of data engineering and PySpark.

#data engineering#pyspark#databricks#azure data engineer training#apache spark#databricks cloud#big data#dataanalytics#data engineer#pyspark course#databricks course training#pyspark training

3 notes


50 Big Data Concepts Every Data Engineer Should Know

Big data is the primary force behind data-driven decision-making. It enables organizations to acquire insights and make informed decisions by utilizing vast amounts of data. Data engineers play a vital role in managing and processing big data, ensuring its accessibility, reliability, and readiness for analysis. To succeed in this field, data engineers must have a deep understanding of various big data concepts and technologies.

This article will introduce you to 50 big data concepts that every data engineer should know. These concepts encompass a broad spectrum of subjects, such as data processing, data storage, data modeling, data warehousing, and data visualization.

1. Big Data

Big data refers to datasets that are so large and complex that traditional data processing tools and methods are inadequate to handle them effectively.

2. Volume, Velocity, Variety

These are the three V’s of big data. Volume refers to the sheer size of data, velocity is the speed at which data is generated and processed, and variety encompasses the different types and formats of data.

3. Structured Data

Data that is organized into a specific format, such as rows and columns, making it easy to query and analyze. Examples include relational databases.

4. Unstructured Data

Data that lacks a predefined structure, such as text, images, and videos. Processing unstructured data is a common challenge in big data engineering.

5. Semi-Structured Data

Data that has a partial structure, often in the form of tags or labels. JSON and XML files are examples of semi-structured data.

6. Data Ingestion

The process of collecting and importing data into a data storage system or database. It’s the first step in big data processing.

7. ETL (Extract, Transform, Load)

ETL is a data integration process that involves extracting data from various sources, transforming it to fit a common schema, and loading it into a target database or data warehouse.
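To make the three stages concrete, here is a minimal sketch using only the Python standard library. The sample CSV, the `orders` table, and the function names are all made up for illustration; a real pipeline would read from actual source systems and load into a proper warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data: one row has a missing amount.
RAW_CSV = """order_id,amount,currency
1,10.50,usd
2,7.25,USD
3,,usd
"""


def extract(text):
    """Extract: read raw rows from the source (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop incomplete rows and normalise types and casing."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip rows with no amount
        cleaned.append(
            (int(row["order_id"]), float(row["amount"]), row["currency"].upper())
        )
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, amount, currency FROM orders ORDER BY order_id"
).fetchall()
```

Only the two complete orders reach the target table, with the currency codes already normalised before loading.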

8. Data Lake

A centralized repository that can store vast amounts of raw data in its native format, whether structured, semi-structured, or unstructured, allowing for flexible data processing and analysis.

9. Data Warehouse

A structured storage system designed for querying and reporting. It’s used to store and manage structured data for analysis.

10. Hadoop

An open-source framework for distributed storage and processing of big data. Hadoop includes the Hadoop Distributed File System (HDFS) for storage, YARN for resource management, and MapReduce for data processing.

11. MapReduce

A programming model and processing technique used in Hadoop for parallel computation of large datasets.
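The model is easiest to see in the classic word-count example. The sketch below runs in a single Python process, so it only imitates the map, shuffle, and reduce phases that Hadoop would distribute across a cluster; the function names are illustrative.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Shuffle: group emitted values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: sum the values collected for each key."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(documents):
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    return reduce_phase(shuffle_phase(mapped))


counts = word_count(["big data big pipelines", "data pipelines"])
print(counts)  # {'big': 2, 'data': 2, 'pipelines': 2}
```

Because each document is mapped independently and each key is reduced independently, both phases can run in parallel on different machines.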

12. Apache Spark

An open-source cluster-computing framework that provides in-memory data processing capabilities, which often makes it faster than MapReduce, especially for iterative and interactive workloads.

13. NoSQL Databases

Non-relational databases designed for handling unstructured and semi-structured data. Types include document, key-value, column-family, and graph databases.

14. SQL-on-Hadoop

Technologies like Hive and Impala that enable querying and analyzing data stored in Hadoop using SQL-like syntax.

15. Data Partitioning

Dividing data into smaller, manageable subsets based on specific criteria, such as date or location. It improves query performance.
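As a small illustration, the sketch below groups records by a date column, using `column=value` partition names in the style commonly seen in data lake directory layouts. The records and the `partition_by` helper are hypothetical.

```python
from collections import defaultdict


def partition_by(records, key):
    """Group records into partitions named '<key>=<value>'."""
    partitions = defaultdict(list)
    for record in records:
        partitions[f"{key}={record[key]}"].append(record)
    return dict(partitions)


events = [
    {"event_date": "2024-01-01", "user": "a"},
    {"event_date": "2024-01-02", "user": "b"},
    {"event_date": "2024-01-01", "user": "c"},
]

partitions = partition_by(events, "event_date")

# A query filtered on one date only has to read that partition.
jan_first = partitions["event_date=2024-01-01"]
```

The performance gain comes from skipping: a query for 1 January never touches the records stored under 2 January.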

16. Data Sharding

Distributing data across multiple databases or servers to improve data retrieval and processing speed.
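One common way to decide which server holds a record is to hash its key. The sketch below assumes a fixed number of shards and a hypothetical `shard_for` helper; production systems often use consistent hashing or range-based schemes instead, so that adding a shard does not move most of the data.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index using a stable hash.

    hashlib is used instead of the built-in hash() because hash() of a
    string changes between Python processes.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Route a handful of hypothetical user IDs across four shards.
assignments = {user: shard_for(user, 4) for user in ["user-1", "user-2", "user-3"]}
```

The same key always lands on the same shard, so reads can be routed without consulting a lookup table.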

17. Data Replication

Creating redundant copies of data for fault tolerance and high availability. It helps prevent data loss in case of hardware failures.

18. Distributed Computing

Computing tasks that are split across multiple nodes or machines in a cluster to process data in parallel.

19. Data Serialization

Converting data structures or objects into a format suitable for storage or transmission, such as JSON or Avro.
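A quick round trip with JSON shows the idea. The `Reading` class and its fields are invented for this example; Avro would follow the same pattern but needs a third-party library and a schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Reading:
    sensor_id: str
    temperature_c: float


def serialize(reading: Reading) -> bytes:
    """Turn the object into UTF-8 encoded JSON bytes for storage or transmission."""
    return json.dumps(asdict(reading)).encode("utf-8")


def deserialize(payload: bytes) -> Reading:
    """Rebuild the object from its serialized form."""
    return Reading(**json.loads(payload.decode("utf-8")))


original = Reading(sensor_id="s-1", temperature_c=21.5)
payload = serialize(original)
restored = deserialize(payload)
```

The bytes in `payload` can be written to a file or sent over a network, and the receiver gets back an equal object.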

20. Data Compression

Reducing the size of data to save storage space and improve data transfer speeds. Compression algorithms like GZIP and Snappy are commonly used.
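The effect is easy to try with Python's built-in `gzip` module. The repeated sample line below is made up, and highly repetitive data like this compresses far better than typical real-world data would.

```python
import gzip

# Hypothetical payload: the same CSV line repeated 1,000 times.
payload = ("2024-01-01,sensor-1,21.5\n" * 1000).encode("utf-8")

compressed = gzip.compress(payload)
restored = gzip.decompress(compressed)

ratio = len(compressed) / len(payload)
print(f"original: {len(payload)} bytes, compressed: {len(compressed)} bytes")
```

GZIP generally trades more CPU time for smaller output, while Snappy favours speed over compression ratio, which is why the choice depends on the workload.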

21. Batch Processing

Processing data in predefined batches or chunks. It’s suitable for tasks that don’t require real-time processing.
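In code, batching often comes down to slicing a stream of records into fixed-size chunks. The `batches` helper below is an illustrative sketch, not a library function.

```python
from itertools import islice


def batches(iterable, size):
    """Yield successive lists of at most `size` items from any iterable."""
    iterator = iter(iterable)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


chunks = list(batches(range(7), 3))
print(chunks)  # [[0, 1, 2], [3, 4, 5], [6]]
```

Each chunk can then be processed and committed as a unit, for example in a nightly job.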

22. Real-time Processing

Processing data as it’s generated, allowing for immediate insights and actions. Technologies like Apache Kafka and Apache Flink support real-time processing.

23. Machine Learning

Using algorithms and statistical models to enable systems to learn from data and make predictions or decisions without explicit programming.

24. Data Pipeline

A series of processes and tools used to move data from source to destination, often involving data extraction, transformation, and loading (ETL).

25. Data Quality

Ensuring data accuracy, consistency, and reliability. Data quality issues can lead to incorrect insights and decisions.

26. Data Governance

The framework of policies, processes, and controls that define how data is managed and used within an organization.

27. Data Privacy

Protecting sensitive information and ensuring that data is handled in compliance with privacy regulations like GDPR and HIPAA.

28. Data Security

Safeguarding data from unauthorized access, breaches, and cyber threats through encryption, access controls, and monitoring.

29. Data Lineage

A record of the data’s origins, transformations, and movement throughout its lifecycle. It helps trace data back to its source.

30. Data Catalog

A centralized repository that provides metadata and descriptions of available datasets, making data discovery easier.

31. Data Masking

The process of replacing sensitive information with fictional or scrambled data to protect privacy while preserving data format.
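A simple format-preserving example: hide every digit except the last four while leaving separators in place. The `mask_digits` function is a hypothetical sketch; real masking tools offer many more strategies, such as substitution and shuffling.

```python
def mask_digits(value: str, keep_last: int = 4, mask_char: str = "X") -> str:
    """Replace all but the last `keep_last` digits, keeping other characters as-is."""
    total_digits = sum(ch.isdigit() for ch in value)
    to_mask = max(total_digits - keep_last, 0)
    masked = []
    for ch in value:
        if ch.isdigit() and to_mask > 0:
            masked.append(mask_char)
            to_mask -= 1
        else:
            masked.append(ch)
    return "".join(masked)


masked_card = mask_digits("4111-1111-1111-1234")
print(masked_card)  # XXXX-XXXX-XXXX-1234
```

The masked value keeps its length and punctuation, so downstream systems that validate the format continue to work.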

32. Data Cleansing

Identifying and correcting errors or inconsistencies in data to improve data quality.

33. Data Archiving

Moving data to secondary storage or long-term storage to free up space in primary storage and reduce costs.

34. Data Lakehouse

An architectural approach that combines the benefits of data lakes and data warehouses, allowing for both storage and structured querying of data.

35. Data Warehouse as a Service (DWaaS)

A cloud-based service that provides on-demand data warehousing capabilities, reducing the need for on-premises infrastructure.

36. Data Mesh

An approach to data architecture that decentralizes data ownership and management, enabling better scalability and data access.

37. Data Governance Frameworks

Defined methodologies and best practices for implementing data governance, such as DAMA-DMBOK and DCAM.

38. Data Stewardship

Assigning data stewards responsible for data quality, security, and compliance within an organization.

39. Data Engineering Tools

Software and platforms used for data engineering tasks, including Apache NiFi, Talend, Apache Beam, and Apache Airflow.

40. Data Modeling

Creating a logical representation of data structures and relationships within a database or data warehouse.

41. ETL vs. ELT

ETL (Extract, Transform, Load) involves extracting data, transforming it, and then loading it into a target system. ELT (Extract, Load, Transform) loads data into a target system before performing transformations.
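The difference is mostly about where the transformation runs. In the ELT sketch below, raw strings are loaded untouched into a staging table and then cleaned with SQL inside the target system. SQLite stands in for a warehouse here, and the table and column names are invented for the example.

```python
import sqlite3

# Hypothetical raw records, loaded exactly as they arrive (all strings).
raw_events = [("1", " Alice ", "42"), ("2", "bob", "17"), ("3", "CAROL", "x")]

conn = sqlite3.connect(":memory:")

# Extract + Load: land the raw data in a staging table with no cleanup.
conn.execute("CREATE TABLE raw_events (id TEXT, name TEXT, score TEXT)")
conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", raw_events)

# Transform: clean and type the data inside the target system using SQL.
# Rows whose score is empty or contains a non-digit character are dropped.
conn.execute(
    """
    CREATE TABLE events AS
    SELECT CAST(id AS INTEGER)    AS id,
           LOWER(TRIM(name))      AS name,
           CAST(score AS INTEGER) AS score
    FROM raw_events
    WHERE score <> '' AND score NOT GLOB '*[^0-9]*'
    """
)

rows = conn.execute("SELECT id, name, score FROM events ORDER BY id").fetchall()
```

Because the raw table is kept, the transformation can be rewritten and rerun later without going back to the source, which is one reason ELT suits cloud warehouses with cheap storage.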

42. Data Virtualization

Providing a unified view of data from multiple sources without physically moving or duplicating the data.

43. Data Integration

Combining data from various sources into a single, unified view, often involving data consolidation and transformation.

44. Streaming Data

Data that is continuously generated and processed in real-time, such as sensor data and social media feeds.

45. Data Warehouse Optimization

Improving the performance and efficiency of data warehouses through techniques like indexing, partitioning, and materialized views.

46. Data Governance Tools

Software solutions designed to facilitate data governance activities, including data cataloging, data lineage, and data quality tools.

47. Data Lake Governance

Applying data governance principles to data lakes to ensure data quality, security, and compliance.

48. Data Curation

The process of organizing, annotating, and managing data to make it more accessible and valuable to users.

49. Data Ethics

Addressing ethical considerations related to data, such as bias, fairness, and responsible data use.

50. Data Engineering Certifications

Professional certifications, such as the Google Cloud Professional Data Engineer or Microsoft Certified: Azure Data Engineer Associate, that validate expertise in data engineering.

Elevate Your Data Engineering Skills

Data engineering is a dynamic field that demands proficiency in a wide range of concepts and technologies. To excel in managing and processing big data, data engineers must continually update their knowledge and skills.

If you’re looking to enhance your data engineering skills or start a career in this field, consider enrolling in Datavalley’s Big Data Engineer Masters Program. This comprehensive program provides you with the knowledge, hands-on experience, and guidance needed to excel in data engineering. With expert instructors, real-world projects, and a supportive learning community, Datavalley’s course is the ideal platform to advance your career in data engineering.

Don’t miss the opportunity to upgrade your data engineering skills and become proficient in the essential big data concepts. Join Datavalley’s Data Engineering Course today and take the first step toward becoming a data engineering expert. Your journey in the world of data engineering begins here.

#datavalley#data engineering#dataexperts#data analytics#data science course#data analytics course#data science#business intelligence#dataexcellence#power bi#data engineering roles#online data engineering course#data engineering training#data#data visualization

1 note


How Data Engineering Training Course Providers are Using AI

As the demand for data engineering expertise continues to surge, training course providers are increasingly integrating Artificial Intelligence (AI) to enhance the learning experience and equip professionals with advanced skills.

This article will explore how data engineering training course providers leverage AI to deliver comprehensive and innovative learning experiences.

AI-Powered Learning Platforms

Data engineering training course providers are harnessing AI to create dynamic and personalized learning platforms, revolutionizing how professionals acquire data engineering skills.

Personalized Learning Paths: AI algorithms analyze individual learning patterns and preferences, tailoring course content and pace to meet the unique needs of each learner.

Adaptive Assessments: AI-powered assessments dynamically adjust based on the learner's performance, providing targeted feedback and identifying areas for improvement.

Intelligent Recommendations: AI algorithms recommend supplementary learning materials, projects, or exercises based on the learner's progress and interests, fostering a holistic learning experience.

Enhanced Curriculum Design

AI is influencing the design and delivery of data engineering training courses, enriching the curriculum and ensuring its alignment with industry trends and emerging technologies.

AI-Driven Course Content: Course providers leverage AI to curate and update course content, ensuring it reflects the latest advancements in data engineering and aligns with industry best practices.

Real-Time Industry Insights: AI tools analyze industry trends and job market demands, enabling course providers to integrate relevant and future-focused topics into their curriculum.

Project Guidance: AI-powered project guidance systems offer real-time feedback and suggestions to learners, enhancing their practical application of data engineering concepts.

Intelligent Mentorship and Support

AI is being employed to augment mentorship and support services, providing learners with intelligent and responsive assistance throughout their learning journey.

AI-Powered Chatbots: Course providers integrate AI chatbots to offer instant support, answer learner queries, provide resources, and offer technical assistance.

Smart Feedback Systems: AI tools analyze learner submissions and interactions, offering targeted feedback and guidance to enhance comprehension and skill development.

Predictive Interventions: AI algorithms identify potential learning obstacles and provide proactive interventions to support learners in overcoming challenges.

Adaptive Learning Technologies

Data engineering training course providers are embracing AI-powered adaptive learning technologies to cater to diverse learning styles and optimize learning outcomes.

Personalized Learning Modules: AI systems customize learning modules based on individual learning styles, ensuring an adaptive and engaging learning experience.

Cognitive Skills Development: AI-powered platforms focus on developing cognitive skills such as problem-solving, critical thinking, and data interpretation through interactive exercises and scenarios.

Performance Analytics: AI analytics provide comprehensive insights into learner performance, enabling course providers to tailor interventions and support mechanisms.

AI-Enabled Certification Pathways

AI technologies are reshaping the certification pathways offered by data engineering training course providers, ensuring relevance, rigor, and industry recognition.

AI-Driven Assessment: Certification assessments are enhanced through AI technologies that offer advanced proctoring, plagiarism detection, and robust evaluation mechanisms.

Competency-Based Badging: AI-powered badging systems recognize and credential learners based on demonstrated competencies and skill mastery, offering a nuanced representation of expertise.

Real-Time Credentialing: AI streamlines and accelerates the credentialing process, providing instant certification outcomes and feedback to learners.

Conclusion

Data engineering training course providers are leveraging AI to revolutionize the learning experience, empowering professionals with advanced skills and expertise in data engineering.

By integrating AI technologies into their learning platforms, curriculum design, mentorship, and certification pathways, providers like Web Age Solutions ensure that learners receive a comprehensive, adaptive, and industry-relevant education in data engineering. For more details, visit: https://www.webagesolutions.com/courses/data-engineer-training


Text

Palantir Foundry Developer Training Program

Enhance your data engineering skills with the Palantir Foundry Developer Training Program. Learn to leverage Palantir’s powerful platform for building data solutions, managing complex workflows, and creating advanced data visualizations. This hands-on course is perfect for developers who want to dive into the world of big data and real-time analytics. Build your expertise and take your career to the next level by mastering Palantir Foundry. Start your journey today!


Text

*laughs in still 923 characters in my data set after cleaning up all the duplicate names* 🙃 (down from 975 (unique) old names)

#ship stats#coding#I love csv files they're so satisfying to look at#I'm like THIS 🤏 close to visualising this first version aaaah#I've assigned and completed/corrected (as much as possible) the demographic data#(you need to understand the amount of wikis I had to manually look through for this cleaning process)#now turning everything into csv files again so I can LOOK AT EM BETTER#and then need to look into vis softwares to use o.o#we had bootcamp graduation today also!! 🎉 I am officially a trained data engineer#what will I do with my time now until I have hunted myself a job? hoPEFULLY FINALLY EDIT MY REMAINING MATRIARCHY VIDEOS Q.Q


Text

Big Data, Big Opportunities: A Beginner's Guide

Big Data is a fast-growing field, and the number of specialists working in it is rising rapidly. If you are a beginner looking to enter the world of Big Data, you've come to the right place! This Beginner’s Guide will help you understand the basics of Big Data, Data Science, Data Analysis, and Data Engineering, and highlight the skills you need to build a career in this field.

What is Big Data?

Big Data refers to the massive volumes of structured and unstructured data that are too complex for traditional processing software. These Big Data concepts form the foundation for data professionals to extract valuable insights.

While the term might sound intimidating, think of Big Data as just a collection of data that's too large to be processed by conventional databases. Imagine the millions of transactions happening on Amazon or the vast amounts of data produced by a single flight from an airline. These are examples of Big Data in action. Learning the fundamentals will help you understand the potential of this massive resource.

Why Big Data Matters

Big Data enables companies to uncover trends, improve decision-making, and gain a competitive edge. This demand has created a wealth of opportunities in Data Science careers, Data Analysis, and Data Engineering.

Key Big Data Concepts

Some key Big Data concepts include:

Volume, Velocity, and Variety: Large volume of data, generated rapidly in various formats.

Structured vs. Unstructured Data: Organized data in databases versus raw, unstructured data.

Tools like Hadoop and Spark are crucial in handling Big Data efficiently.
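To make the structured vs. unstructured distinction above more concrete, here is a minimal Python sketch. The sample records, column names, and the regular expression are all made up for illustration; real Big Data workloads would use tools like Hadoop or Spark rather than in-memory strings.

```python
import csv
import io
import re

# Structured data: fixed, named columns (hypothetical sample records).
structured = "order_id,customer,amount\n1,alice,19.99\n2,bob,5.00\n"
rows = list(csv.DictReader(io.StringIO(structured)))
# Every row has the same fields, so aggregating is a simple lookup by column name.
total_structured = sum(float(r["amount"]) for r in rows)

# Unstructured data: free text with no schema (hypothetical sample).
unstructured = "Alice paid $19.99 for her order yesterday. Bob said his came to $5.00."
# There are no columns to rely on, so values must be extracted by pattern matching.
amounts = [float(m) for m in re.findall(r"\$(\d+\.\d{2})", unstructured)]
total_unstructured = sum(amounts)

print(rows[0]["customer"], total_structured)
print(amounts, total_unstructured)
```

The same information is present in both inputs, but the structured version can be queried directly, while the unstructured version needs an extraction step that is specific to how the text happens to be written.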

Data Engineering: The Backbone of Big Data

Data Engineering is the infrastructure behind Big Data. Data Engineering basics involve creating pipelines and processing systems to store and manage massive datasets. Learning these fundamentals is critical for those aspiring to Data Engineering jobs.
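As a rough illustration of what "creating pipelines" can mean in practice, the sketch below shows a tiny extract-transform-load (ETL) flow using only the Python standard library. The sample data, the `people` table, and the function names are hypothetical; production pipelines typically run on dedicated tooling and much larger datasets.

```python
import csv
import io
import sqlite3

# Hypothetical raw input with a duplicate (differing only in case/whitespace)
# and a row with a missing name.
RAW_CSV = """name,city
Alice,Paris
 alice ,Paris
Bob,Berlin
,Rome
"""

def extract(text):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, drop rows without a name, remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        name = row["name"].strip().title()
        city = row["city"].strip()
        if not name or (name, city) in seen:
            continue
        seen.add((name, city))
        cleaned.append((name, city))
    return cleaned

def load(records, conn):
    """Load: write the cleaned records into a SQLite table."""
    conn.execute("CREATE TABLE IF NOT EXISTS people (name TEXT, city TEXT)")
    conn.executemany("INSERT INTO people VALUES (?, ?)", records)
    conn.commit()

def run_pipeline(text):
    """Run extract -> transform -> load against an in-memory database."""
    conn = sqlite3.connect(":memory:")
    load(transform(extract(text)), conn)
    return conn

conn = run_pipeline(RAW_CSV)
result = conn.execute("SELECT name, city FROM people ORDER BY name").fetchall()
print(result)
```

Keeping extract, transform, and load as separate functions is one common way to structure a pipeline, since each stage can then be tested or replaced independently.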

Big Data Applications Across Industries

Big Data applications span across industries, from healthcare and finance to marketing and manufacturing. In healthcare, Big Data is used for predictive analytics and improving patient care. In finance, it helps detect fraud, optimize investment strategies, and manage risks. Marketing teams use Big Data to understand customer preferences, personalize experiences, and create targeted campaigns. The possibilities are endless, making Big Data one of the most exciting fields to be a part of today.

As a beginner, you might wonder how Big Data fits into everyday life. Think of online streaming services like Netflix, which recommend shows based on your previous viewing patterns, or retailers who send personalized offers based on your shopping habits. These are just a couple of ways Big Data is being applied in the real world.

Building a Career in Big Data

The demand for Big Data professionals is on the rise, and there are a variety of career paths you can choose from:

Data Science Career: As a Data Scientist, you'll focus on predictive modeling, machine learning, and advanced analytics. This career often involves a strong background in mathematics, statistics, and coding.

Data Analysis Jobs: As a Data Analyst, you’ll extract meaningful insights from data to support business decisions. This role emphasizes skills in statistics, communication, and data visualization.

Data Engineering Jobs: As a Data Engineer, you’ll build the infrastructure that supports data processing and analysis, working closely with Data Scientists and Analysts to ensure that data is clean and ready for use.

Whether you're interested in Data Science, Data Analysis, or Data Engineering, now is the perfect time to jumpstart your career. Each role has its own unique challenges and rewards, so finding the right fit will depend on your strengths and interests.

Career Opportunities in Big Data and Their Salaries

As the importance of Big Data continues to grow, so does the demand for professionals skilled in handling large data sets. Let’s look at the different career paths in Big Data, their responsibilities, and average salaries:

Data Scientist

Role: Data Scientists develop models and algorithms to extract insights from large data sets. They work on predictive analytics, machine learning, and statistical modeling.

Average Salary: $120,000 to $150,000 per year in the U.S.

Skills Needed: Strong background in math, statistics, programming (Python, R), and machine learning.

Data Analyst

Role: Data Analysts interpret data to provide actionable insights for decision-making. They focus on generating reports, dashboards, and business insights.

Average Salary: $60,000 to $90,000 per year in the U.S.

Skills Needed: Proficiency in SQL, Excel, Python, data visualization tools like Tableau or Power BI, and statistical analysis.

Data Engineer

Role: Data Engineers build and maintain the architecture (databases, pipelines, etc.) necessary for data collection, storage, and analysis.

Average Salary: $100,000 to $140,000 per year in the U.S.

Skills Needed: Knowledge of cloud platforms (AWS, Google Cloud), database management, ETL tools, and programming languages like Python, Scala, or Java.

Big Data Architect

Role: Big Data Architects design the infrastructure that supports Big Data solutions, ensuring scalability and performance.

Average Salary: $140,000 to $180,000 per year in the U.S.

Skills Needed: Expertise in cloud computing, distributed systems, database architecture, and technologies like Hadoop, Spark, and Kafka.

Machine Learning Engineer

Role: Machine Learning Engineers create algorithms that allow systems to automatically improve from experience, which is key in processing and analyzing large data sets.

Average Salary: $110,000 to $160,000 per year in the U.S.

Skills Needed: Proficiency in machine learning libraries (TensorFlow, PyTorch), programming (Python, R), and experience with large datasets.

Learn Big Data with Guruface

Guruface, an online learning platform, offers different Big Data courses. Whether you’re looking for an Introduction to Big Data, a Data Science tutorial, or Data Engineering basics, Guruface provides beginner-friendly resources to guide your learning. Their courses are ideal for those looking to learn Big Data concepts and practical applications in Data Science, Data Analysis, and Data Engineering.

Conclusion

With data being a driving force in today’s society, understanding Big Data concepts, tools, and applications is a key step towards an exciting Big Data career. Platforms like Guruface provide the ideal starting point for beginners interested in Big Data, Data Science, Data Analysis, or Data Engineering. Start your journey today and explore the vast potential of Big Data.


Text

Azure Data Engineer Online Training | Azure Data Engineer Online Course

Master Azure Data Engineering with AccentFuture. Get hands-on training in data pipelines, ETL, and analytics on Azure. Learn online at your pace with real-world projects and expert guidance.

🚀 Enroll Now: https://www.accentfuture.com/enquiry-form/

📞 Call Us: +91–9640001789

📧 Email Us: [email protected]

🌐 Visit Us: AccentFuture

#Azure data engineer course#Azure data engineer course online#Azure data engineer online training#Azure data engineer training


Text

Why You Need to Enroll in a Data Engineering Course

Data science teams consist of individuals specializing in data analysis, data science, and data engineering. The role of data engineers within these teams is to establish connections between various components of the data ecosystem within a company or institution. By doing so, data engineers assume a pivotal position in the implementation of data strategy, sparing others from this responsibility. They are the initial line of defense in managing the influx of both structured and unstructured data into a company’s systems, serving as the bedrock of any data strategy.

In essence, data engineers play a crucial role in amplifying the outcomes of a data strategy, acting as the pillars upon which data analysts and data scientists rely. So, why should you enroll in a data engineering course? And how will it help your career? Let’s take a look at the top reasons.

1. Job Security and Stability

In an era where data is considered one of the most valuable assets for businesses, data engineers enjoy excellent job security and stability. Organizations heavily rely on their data infrastructure, and the expertise of data engineers is indispensable in maintaining the smooth functioning of these systems. Data engineering roles are less susceptible to outsourcing since they involve hands-on work with sensitive data and complex systems that are difficult to manage remotely. As long as data remains crucial to businesses, data engineers will continue to be in demand, ensuring a stable and secure career path.

2. High Salary Packages

The increasing demand for data engineers has naturally led to attractive salary packages. Data engineers are among the highest-paid tech professionals because their work is so specialized. Companies are willing to offer competitive compensation to attract and retain top talent. In the United States, the average pay for a data engineer stands at approximately $109,000, while in India, it ranges from 8 to 9 lakhs per annum. Moreover, as you gain experience and expertise in the field, your earning potential will continue to grow.

3. Rewarding Challenges

Data engineering is a highly challenging yet rewarding profession. You’ll face tough challenges like data integration, data quality, scalability, and system reliability. Designing efficient data pipelines and optimizing data processing workflows can be intellectually stimulating and gratifying. As you overcome these challenges and witness your solutions in action, you will experience a sense of accomplishment that comes from playing a critical role in transforming raw data into actionable insights that drive business success.

4. Contributing to Cutting-Edge Technologies

Data engineering is at the forefront of technological advancements. As a data engineer, you will actively engage in creating and implementing innovative solutions to tackle big data challenges. This may include working with distributed systems, real-time data processing frameworks, and cloud-based infrastructure. By contributing to cutting-edge technologies, you become a key player in shaping the future of data management and analytics. The rapid evolution of data engineering technologies ensures that the field remains dynamic and exciting, providing continuous opportunities for learning and growth.

5. Positive Job Outlook

The demand for data engineers has consistently been high and is projected to continue growing. Companies of all sizes recognize the importance of data-driven decision-making and actively seek skilled data engineers to build and maintain their data infrastructure. The scarcity of qualified data engineers means that job opportunities are abundant, and you’ll have the flexibility to choose from a diverse range of industries and domains. Whether you’re interested in finance, healthcare, e-commerce, or any other sector, there will be a demand for your skills.

6. The Foundation of Data Science

Data engineering forms the backbone of data science. Without robust data pipelines and reliable storage systems, data scientists would be unable to extract valuable insights from raw data. As a data engineer, you get to collaborate with data scientists, analysts, and other stakeholders to design and implement data solutions that fuel an organization’s decision-making process. Your contributions will directly impact how organizations make strategic decisions, improve customer experiences, optimize operations, and gain a competitive edge in the market.

Why choose Datavalley’s Data Engineering Course?

Datavalley provides a Data Engineering course designed to be accessible to beginners. The course offers a structured learning path that covers the main facets of data engineering. Whatever your technical background, and even if you are moving over from a different professional domain, the course is suitable for all levels.

Here are some reasons to consider our course:

Comprehensive Curriculum: Our course teaches you all the essential topics and tools for data engineering. The topics include big data foundations, Python, data processing, AWS, Snowflake advanced data engineering, data lakes, and DevOps.

Hands-on Experience: We believe in experiential learning. You will work on hands-on exercises and projects to apply your knowledge.

Project-Ready, Not Just Job-Ready: Upon completion of our program, you will be prepared to start working immediately and carry out projects with confidence.

Flexibility: Self-paced learning is suitable for both full-time students and working professionals because it allows learners to learn at their own speed and convenience.

Cutting-Edge Curriculum: Our curriculum is regularly updated to reflect the latest trends and technologies in data engineering.

Career Support: We offer career guidance and support, including job placement assistance, to help you launch your data engineering career.

On-call Project Assistance After Landing Your Dream Job: Our experts are available to assist you with your projects for up to 3 months. This will help you succeed in your new role and confidently tackle any challenges that come your way.

Networking Opportunities: Joining our course opens doors to a network of fellow learners, professionals, and employers.

Course format:

Subject: Data Engineering

Classes: 200 hours of live classes

Lectures: 199 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 70% scholarship on all our courses

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Big Data Engineer Masters Program, visit Datavalley’s official website.

Conclusion

In conclusion, becoming a data engineer offers a promising and fulfilling career path for several compelling reasons. The demand for data engineers continues to soar as companies recognize the value of data-driven decision-making. This high demand translates into excellent job opportunities and competitive salaries. To start this exciting journey in data engineering, consider enrolling in a Big Data Engineer Masters Program at Datavalley. It will equip you with the skills and knowledge needed to thrive in the world of data and technology, opening up limitless possibilities for your career.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#data analytics course#data science course#power bi#business intelligence#data engineering course#data engineering training#data engineer#online data engineering course#data engineering roles


Text

Fastest Growing IT Roles in India 2025 Edition

Step into the most in-demand tech careers of 2025 with Evision Technoserve! Explore top career paths such as Cloud Administrator, Cybersecurity Analyst, AI & Data Science Engineer, Full Stack Developer, and DevOps Engineer. Gain real-world experience through hands-on projects and learn directly from industry mentors. Evision’s expert-led training programs are designed to fast-track your IT career with 100% placement assistance. Don’t miss out on exciting internship and job opportunities.

#fastest growing tech careers 2025 india evision#trending IT jobs for freshers india 2025#future-proof IT careers for students 2025#high demand IT roles with placement india#cloud administrator training with jobs 2025#cybersecurity analyst course with internship#ai & data science engineer course india freshers#devops engineer training with placement india#evisiontechnoserve#internshipprogram
