#difference between specialization and generalization in dbms


BCA vs. B.Tech: Which Path is Right for You?

Choosing the right educational path is one of the most critical decisions you will make as a student. With various options available, especially in the field of technology, two prominent choices stand out: Bachelor of Computer Applications (BCA) and Bachelor of Technology (B.Tech). Both degrees offer unique advantages and cater to different career aspirations, making the decision challenging for students. This article will explore the key differences, advantages, career opportunities, and factors to consider when deciding between BCA and B.Tech to help you determine which path is right for you.

Overview of BCA and B.Tech

What is BCA?

BCA, or Bachelor of Computer Applications, is a three-year undergraduate program that focuses on computer applications, software development, and information technology. It is designed for students interested in developing practical skills in programming, database management, networking, and web development. BCA emphasizes hands-on learning and projects, equipping students with the knowledge necessary to pursue careers in various IT domains.

What is B.Tech?

B.Tech, or Bachelor of Technology, is a four-year undergraduate program that provides a comprehensive education in engineering and technology. It is available in various specializations, including Computer Science, Information Technology, Electronics, Mechanical, Civil, and many others. B.Tech programs are structured to offer both theoretical knowledge and practical training in engineering principles, preparing students for complex technological challenges.

Curriculum and Learning Approach

BCA Curriculum

The BCA curriculum typically includes subjects such as:

- Programming Languages (C, C++, Java, Python)

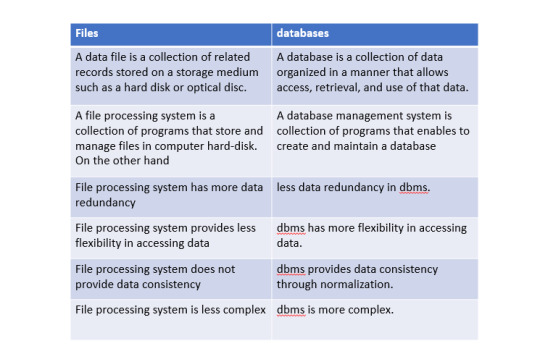

- Database Management Systems (DBMS)

- Software Engineering

- Web Technologies

- Networking

- Operating Systems

- Mobile Application Development

The focus is on developing practical skills through projects and real-world applications. BCA programs often encourage students to engage in internships and industry projects to gain hands-on experience, which is crucial for building a strong portfolio.

B.Tech Curriculum

The B.Tech curriculum is broader and more engineering-focused. It covers subjects such as:

- Mathematics and Physics

- Programming and Data Structures

- Algorithms

- Computer Networks

- Operating Systems

- Digital Logic Design

- Software Engineering

B.Tech students also engage in practical training through laboratory work, projects, and internships. The program is designed to provide a deep understanding of engineering principles and technology, preparing students for advanced roles in the field.

Key Differences Between BCA and B.Tech

Duration

- BCA: Typically a three-year program.

- B.Tech: Generally a four-year program.

Focus and Specialization

- BCA: Concentrates on software applications, programming, and IT management. Ideal for students interested in software development and application programming.

- B.Tech: Covers a broader range of engineering principles, allowing students to specialize in various fields like computer science, electronics, mechanical, and civil engineering.

Learning Approach

- BCA: More focused on practical skills and applications, often involving projects and hands-on training.

- B.Tech: Combines theoretical knowledge with practical applications, emphasizing engineering concepts and problem-solving.

Career Pathways

- BCA: Graduates typically pursue roles such as software developer, web developer, systems analyst, or IT support.

- B.Tech: Graduates can work in diverse engineering fields, including software engineering, hardware design, network administration, and research and development.

Career Opportunities

Career Paths After BCA

Graduating with a BCA opens various career opportunities, including:

1. Software Developer: Design, develop, and maintain software applications.

2. Web Developer: Create and manage websites, focusing on both front-end and back-end development.

3. Database Administrator: Manage databases, ensuring data integrity and security.

4. Systems Analyst: Analyze and improve IT systems and processes within organizations.

5. Network Administrator: Oversee and maintain computer networks, ensuring seamless connectivity.

6. IT Support Specialist: Provide technical support and troubleshooting for hardware and software issues.

BCA graduates can also pursue higher education, such as MCA (Master of Computer Applications) or MBA (Master of Business Administration), to enhance their career prospects.

Career Paths After B.Tech

B.Tech graduates enjoy a wider range of career opportunities, including:

1. Software Engineer: Develop and maintain software systems, applications, and tools.

2. Hardware Engineer: Design and develop computer hardware components and systems.

3. Network Engineer: Plan, implement, and manage computer networks and communication systems.

4. Data Scientist: Analyze complex data sets to inform business decisions and strategies.

5. Quality Assurance Engineer: Test software products for quality and performance.

6. Project Manager: Oversee engineering projects, ensuring they are completed on time and within budget.

Additionally, B.Tech graduates can pursue higher studies such as M.Tech (Master of Technology) or MBA for further specialization and career advancement.

Pros and Cons of BCA and B.Tech

Pros of BCA

- Shorter Duration: The three-year program allows students to enter the workforce sooner.

- Practical Focus: Emphasis on hands-on training equips students with relevant skills for the industry.

- Specialization in Software: Ideal for students passionate about software applications and development.

Cons of BCA

- Limited Scope: Career opportunities may be narrower compared to B.Tech, particularly in engineering roles.

- Higher Education Required: Graduates often pursue further studies for better job prospects.

Pros of B.Tech

- Broader Career Opportunities: Graduates can enter various engineering fields beyond software.

- Higher Salary Potential: B.Tech graduates often command higher starting salaries compared to BCA graduates.

- Robust Curriculum: Comprehensive education in engineering principles prepares students for complex problem-solving.

Cons of B.Tech

- Longer Duration: The four-year program means students spend more time before entering the workforce.

- Intensive Curriculum: The rigorous academic demands may be challenging for some students.

Factors to Consider When Choosing Between BCA and B.Tech

Career Goals

Consider your long-term career aspirations. If you aim to work specifically in software development or IT management, BCA may be a better fit. Conversely, if you are interested in a broader engineering career or wish to specialize in areas like electronics or civil engineering, B.Tech is the more suitable choice.

Personal Interests

Reflect on your interests and strengths. If you are passionate about coding and software applications, a BCA may align more closely with your passions. If you enjoy theoretical problem-solving and have an interest in engineering concepts, B.Tech might be the better path.

Job Market Trends

Research current job market trends in your desired field. B.Tech graduates may have access to a wider array of job opportunities, particularly in engineering and technology. BCA graduates can also find success, especially in software roles, but may face stiffer competition in certain areas.

Financial Considerations

Evaluate the financial aspects of each program, including tuition fees and potential salary outcomes. While B.Tech programs may be more expensive, they can lead to higher-paying jobs, potentially offsetting the initial costs.

Further Education

If you plan to pursue higher education, consider how each degree aligns with your future studies. Both BCA and B.Tech graduates can pursue master's degrees, but B.Tech may offer more options for specialization in engineering-related fields.

Choosing between BCA and B.Tech ultimately depends on your career goals, interests, and aspirations. BCA is an excellent choice for those looking to dive into software applications and IT management with a shorter study duration. On the other hand, B.Tech provides a broader engineering education, preparing students for various technical roles with the potential for higher salaries.

It’s essential to assess your strengths, interests, and long-term objectives before making a decision. Consider speaking with academic advisors, industry professionals, or recent graduates to gain further insights into each path. By understanding the nuances of BCA and B.Tech, you can make an informed choice that aligns with your passion and career aspirations, paving the way for a successful future in the ever-evolving world of technology.


DBMS in Hindi - Aggregation


In aggregation, the relationship between two entities is treated as a single entity. The relationship, together with its participating entities, is aggregated into a higher-level entity.

For example: if a visitor goes to a coaching center, they never ask only about the course or only about the center…
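A minimal sketch of how aggregation might look in a relational schema, using Python's built-in `sqlite3` module. The coaching-center example above is cut off, so this assumes it continues the usual way: the visitor enquires about a center and a course together. All table and column names (`center`, `course`, `offering`, `visitor`, `enquiry`) and the sample rows are hypothetical, chosen only for illustration.

```python
import sqlite3

# In-memory database; every name below is illustrative, not from the post.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE center (center_id INTEGER PRIMARY KEY, name  TEXT NOT NULL);
    CREATE TABLE course (course_id INTEGER PRIMARY KEY, title TEXT NOT NULL);

    -- The relationship "center offers course" gets its own key, so the
    -- relationship plus its two entities can be treated as one
    -- higher-level entity. This is the aggregation.
    CREATE TABLE offering (
        offering_id INTEGER PRIMARY KEY,
        center_id   INTEGER NOT NULL REFERENCES center(center_id),
        course_id   INTEGER NOT NULL REFERENCES course(course_id),
        UNIQUE (center_id, course_id)
    );

    CREATE TABLE visitor (visitor_id INTEGER PRIMARY KEY, name TEXT NOT NULL);

    -- Assumption: a visitor enquires about an offering (center and course
    -- together), not about a center or a course in isolation.
    CREATE TABLE enquiry (
        visitor_id  INTEGER NOT NULL REFERENCES visitor(visitor_id),
        offering_id INTEGER NOT NULL REFERENCES offering(offering_id),
        PRIMARY KEY (visitor_id, offering_id)
    );
""")

conn.execute("INSERT INTO center   VALUES (1, 'City Coaching')")
conn.execute("INSERT INTO course   VALUES (1, 'DBMS')")
conn.execute("INSERT INTO offering VALUES (1, 1, 1)")
conn.execute("INSERT INTO visitor  VALUES (1, 'Asha')")
conn.execute("INSERT INTO enquiry  VALUES (1, 1)")

# Resolve each enquiry back to the center and course behind the offering.
rows = conn.execute("""
    SELECT v.name, ce.name, co.title
    FROM enquiry e
    JOIN visitor  v  ON v.visitor_id  = e.visitor_id
    JOIN offering o  ON o.offering_id = e.offering_id
    JOIN center   ce ON ce.center_id  = o.center_id
    JOIN course   co ON co.course_id  = o.course_id
""").fetchall()
print(rows)
```

The design choice to note is that `enquiry` points at `offering` rather than at `center` and `course` separately, which is what treating the relationship as a single entity amounts to in table form.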


Which Is The Best PostgreSQL GUI? 2021 Comparison

PostgreSQL graphical user interface (GUI) tools help open source database users manage, manipulate, and visualize their data. In this post, we discuss the top 6 GUI tools for administering your PostgreSQL hosting deployments. PostgreSQL is the fourth most popular database management system in the world, and it is heavily used in applications of all sizes. The traditional way to work with databases is through a command-line interface (CLI) tool. However, this interface presents a number of issues:

It has a steep learning curve before you can get the best out of the DBMS.

The console display may not be to your liking, and it shows very little information at a time.

It is difficult to browse databases and tables, check indexes, and monitor databases through the console.

Many still prefer CLIs over GUIs, but that group keeps shrinking. I believe anyone who came into programming after 2010 will tell you that GUI tools make them more productive than a CLI does.

Why Use a GUI Tool?

Now that we understand the issues users face with the CLI, let’s take a look at the advantages of using a PostgreSQL GUI:

Shortcut keys make it easier to use, and much easier to learn for new users.

Offers great visualization to help you interpret your data.

You can remotely access and navigate another database server.

The window-based interface makes it much easier to manage your PostgreSQL data.

Easier access to files, features, and the operating system.

So, bottom line, GUI tools make PostgreSQL developers’ lives easier.

Top PostgreSQL GUI Tools

Today I will tell you about the 6 best PostgreSQL GUI tools. If you want a quick overview of this article, feel free to check out our infographic at the end of this post. Let’s start with the first and most popular one.

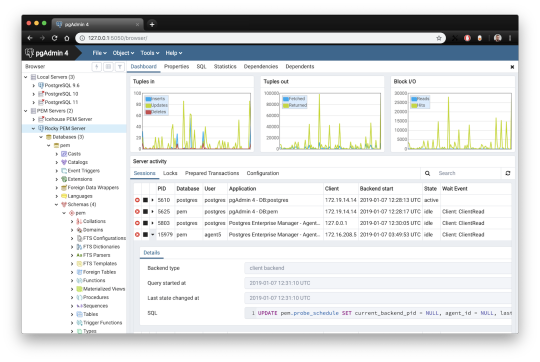

1. pgAdmin

pgAdmin is the de facto GUI tool for PostgreSQL, and the first tool anyone would use for PostgreSQL. It supports all PostgreSQL operations and features while being free and open source. pgAdmin is used by both novice and seasoned DBAs and developers for database administration.

Here are some of the top reasons why PostgreSQL users love pgAdmin:

Create, view, and edit all common PostgreSQL objects.

Offers a graphical query planning tool with color syntax highlighting.

The dashboard lets you monitor server activities such as database locks, connected sessions, and prepared transactions.

Since pgAdmin is a web application, you can deploy it on any server and access it remotely.

The pgAdmin UI consists of detachable panels that you can arrange to your liking.

Provides a procedural language debugger to help you debug your code.

pgAdmin has a portable version which can help you easily move your data between machines.

There are several cons of pgAdmin that users have generally complained about:

The UI is slow and non-intuitive compared to paid GUI tools.

pgAdmin uses too many resources.

pgAdmin can be used on Windows, Linux, and Mac OS. We listed it first as it’s the most used GUI tool for PostgreSQL, and the only native PostgreSQL GUI tool in our list. As it’s dedicated exclusively to PostgreSQL, you can expect it to update with the latest features of each version. pgAdmin can be downloaded from their official website.

pgAdmin Pricing: Free (open source)

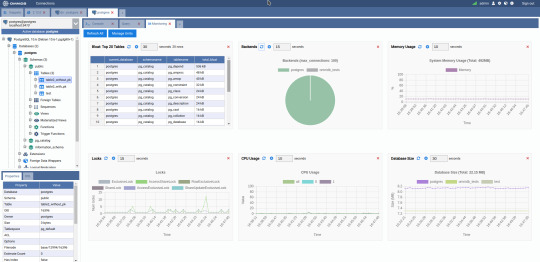

2. DBeaver

DBeaver is a major cross-platform GUI tool for PostgreSQL that both developers and database administrators love. DBeaver is not a native GUI tool for PostgreSQL, as it supports all the popular databases like MySQL, MariaDB, Sybase, SQLite, Oracle, SQL Server, DB2, MS Access, Firebird, Teradata, Apache Hive, Phoenix, Presto, and Derby – any database which has a JDBC driver (over 80 databases!).

Here are some of the top DBeaver GUI features for PostgreSQL:

Visual Query builder helps you to construct complex SQL queries without actual knowledge of SQL.

It has one of the best editors – multiple data views are available to support a variety of user needs.

Convenient navigation among data.

In DBeaver, you can generate fake data that looks like real data allowing you to test your systems.

Full-text data search against all chosen tables/views with search results shown as filtered tables/views.

Metadata search among rows in database system tables.

Import and export data with many file formats such as CSV, HTML, XML, JSON, XLS, XLSX.

Provides advanced security for your databases by storing passwords in secured storage protected by a master password.

Automatically generated ER diagrams for a database/schema.

Enterprise Edition provides a special online support system.

One of the cons of DBeaver is that it may be slow when dealing with large data sets compared to some expensive GUI tools like Navicat and DataGrip.

You can run DBeaver on Windows, Linux, and macOS, and easily connect DBeaver to PostgreSQL with or without SSL. It has a free open-source edition as well as an enterprise edition. You can buy the standard license for the enterprise edition at $199, or by subscription at $19/month. The free version is good enough for most companies, and many DBeaver users will tell you the free edition is better than pgAdmin.

DBeaver Pricing: Free community, $199 standard license

3. OmniDB

The next PostgreSQL GUI we’re going to review is OmniDB. OmniDB lets you add, edit, and manage data and all other necessary features in a unified workspace. Although OmniDB supports other database systems like MySQL, Oracle, and MariaDB, their primary target is PostgreSQL. This open source tool is mainly sponsored by 2ndQuadrant. OmniDB supports all three major platforms, namely Windows, Linux, and Mac OS X.

There are many reasons why you should use OmniDB for your Postgres developments:

You can easily configure it by adding and removing connections, and leverage encrypted connections when remote connections are necessary.

The smart SQL editor helps you write SQL code through autocomplete and syntax highlighting features.

An add-on provides debugging capabilities for PostgreSQL functions and procedures.

The monitoring dashboard offers customizable charts that show real-time information about your database.

Query plan visualization helps you find bottlenecks in your SQL queries.

It allows access from multiple computers with encrypted personal information.

Developers can add and share new features via plugins.

There are a couple of cons with OmniDB:

OmniDB lacks community support in comparison to pgAdmin and DBeaver. So, you might find it difficult to learn this tool, and could feel a bit alone when you face an issue.

It doesn’t have as many features as paid GUI tools like Navicat and DataGrip.

OmniDB users have favorable opinions about it, and you can download OmniDB for PostgreSQL from here.

OmniDB Pricing: Free (open source)

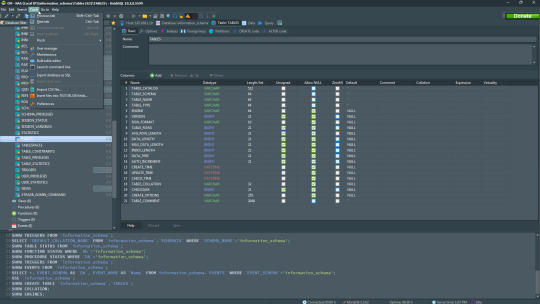

4. DataGrip

DataGrip is a cross-platform integrated development environment (IDE) that supports multiple database environments. The most important thing to note about DataGrip is that it’s developed by JetBrains, one of the leading brands for developing IDEs. If you have ever used PhpStorm, IntelliJ IDEA, PyCharm, or WebStorm, you won’t need an introduction to how good JetBrains IDEs are.

There are many exciting features to like in the DataGrip PostgreSQL GUI:

The context-sensitive and schema-aware auto-complete feature suggests more relevant code completions.

It has a beautiful and customizable UI along with an intelligent query console that keeps track of all your activities so you won’t lose your work. Moreover, you can easily add, remove, edit, and clone data rows with its powerful editor.

There are many ways to navigate schema between tables, views, and procedures.

It can immediately detect bugs in your code and suggest the best options to fix them.

It has an advanced refactoring process – when you rename a variable or an object, it can resolve all references automatically.

DataGrip is not just a GUI tool for PostgreSQL, but a full-featured IDE that has features like version control systems.

There are a few cons in DataGrip:

The obvious issue is that it’s not native to PostgreSQL, so it lacks PostgreSQL-specific features. For example, it is not easy to debug errors because not all of them are shown.

Like most JetBrains IDEs, DataGrip has a steep learning curve, which can make it a bit overwhelming for beginner developers.

It consumes a lot of resources, like RAM, from your system.

DataGrip supports a tremendous list of database management systems, including SQL Server, MySQL, Oracle, SQLite, Azure Database, DB2, H2, MariaDB, Cassandra, HyperSQL, Apache Derby, and many more.

DataGrip supports all three major operating systems, Windows, Linux, and Mac OS. One of the downsides is that JetBrains products are comparatively costly. DataGrip has two different prices for organizations and individuals. DataGrip for Organizations will cost you $19.90/month, or $199 for the first year, $159 for the second year, and $119 for the third year onwards. The individual package will cost you $8.90/month, or $89 for the first year. You can test it out during the free 30 day trial period.
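To make the organization pricing above easier to compare, here is a small arithmetic sketch in Python. It uses only the 2021 figures quoted in the paragraph and assumes that the monthly rate stays constant and that yearly billing simply follows the listed first-, second-, and third-year prices. The function name `org_cost_cents` is hypothetical.

```python
# Prices in US cents, copied from the paragraph above (2021 figures).
ORG_MONTHLY_CENTS = 1990                    # $19.90 per month
ORG_YEARLY_CENTS = [19900, 15900, 11900]    # year 1, year 2, year 3 onwards


def org_cost_cents(years: int, monthly: bool) -> int:
    """Total cost of one organization seat over `years` years.

    Assumption: monthly billing never changes, and yearly billing uses the
    listed price for each year, with the last price repeating afterwards.
    """
    if monthly:
        return ORG_MONTHLY_CENTS * 12 * years
    last = len(ORG_YEARLY_CENTS) - 1
    return sum(ORG_YEARLY_CENTS[min(year, last)] for year in range(years))


monthly_total = org_cost_cents(3, monthly=True)
yearly_total = org_cost_cents(3, monthly=False)

print(f"3 years, billed monthly: ${monthly_total / 100:.2f}")
print(f"3 years, billed yearly:  ${yearly_total / 100:.2f}")
print(f"difference:              ${(monthly_total - yearly_total) / 100:.2f}")
```

Working in integer cents avoids floating-point rounding in the totals. Under these assumptions, three years cost $716.40 billed monthly against $477.00 billed yearly.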

DataGrip Pricing: $8.90/month to $199/year

5. Navicat

Navicat is an easy-to-use graphical tool that targets both beginner and experienced developers. It supports several database systems such as MySQL, PostgreSQL, and MongoDB. One of the special features of Navicat is its collaboration with cloud databases like Amazon Redshift, Amazon RDS, Amazon Aurora, Microsoft Azure, Google Cloud, Tencent Cloud, Alibaba Cloud, and Huawei Cloud.

Important features of Navicat for Postgres include:

It has a very intuitive and fast UI. You can easily create and edit SQL statements with its visual SQL builder, and the powerful code auto-completion saves you a lot of time and helps you avoid mistakes.

Navicat has a powerful data modeling tool for visualizing database structures, making changes, and designing entire schemas from scratch. You can manipulate almost any database object visually through diagrams.

Navicat can run scheduled jobs and notify you via email when the job is done running.

Navicat is capable of synchronizing different data sources and schemas.

Navicat has an add-on feature (Navicat Cloud) that offers project-based team collaboration.

It establishes secure connections through SSH tunneling and SSL ensuring every connection is secure, stable, and reliable.

You can import and export data to diverse formats like Excel, Access, CSV, and more.

Despite all the good features, there are a few cons that you need to consider before buying Navicat:

The license is locked to a single platform. You need to buy different licenses for PostgreSQL and MySQL. Considering its high price, this can be hard on a small company or a freelancer.

It has so many features that a newcomer will need some time to get going.

You can use Navicat in Windows, Linux, Mac OS, and iOS environments. The quality of Navicat is endorsed by its world-popular clients, including Apple, Oracle, Google, Microsoft, Facebook, Disney, and Adobe. Navicat comes in three editions called enterprise edition, standard edition, and non-commercial edition. Enterprise edition costs you $14.99/month up to $299 for a perpetual license, the standard edition is $9.99/month up to $199 for a perpetual license, and then the non-commercial edition costs $5.99/month up to $119 for its perpetual license. You can get full price details here, and download the Navicat trial version for 14 days from here.

Navicat Pricing: $5.99/month up to $299/license

6. HeidiSQL

HeidiSQL is a new addition to our best PostgreSQL GUI tools list in 2021. It is a lightweight, free open source GUI that helps you manage tables, logs and users, edit data, views, procedures and scheduled events, and is continuously enhanced by the active group of contributors. HeidiSQL was initially developed for MySQL, and later added support for MS SQL Server, PostgreSQL, SQLite and MariaDB. Invented in 2002 by Ansgar Becker, HeidiSQL aims to be easy to learn and provide the simplest way to connect to a database, fire queries, and see what’s in a database.

Some of the advantages of HeidiSQL for PostgreSQL include:

Connects to multiple servers in one window.

Generates nice SQL-exports, and allows you to export from one server/database directly to another server/database.

Provides a comfortable grid to browse and edit table data, and perform bulk table edits such as move to database, change engine or collation.

You can write queries with customizable syntax-highlighting and code-completion.

It has an active community helping to support other users and GUI improvements.

Allows you to find specific text in all tables of all databases on a single server, and to optimize and repair tables in batch.

Provides a dialog for quick grid/data exports to Excel, HTML, JSON, PHP, even LaTeX.

There are a few cons to HeidiSQL:

Does not offer a procedural language debugger to help you debug your code.

Built for Windows, and currently only supports Windows (which is not a con for our Windows readers!)

HeidiSQL does have a lot of bugs, but the author is very attentive and active in addressing issues.

If HeidiSQL is right for you, you can download it here and follow updates on their GitHub page.

HeidiSQL Pricing: Free (open source)

Conclusion

Let’s summarize our top PostgreSQL GUI comparison. Almost everyone starts PostgreSQL with pgAdmin. It has great community support, and there are a lot of resources to help you if you face an issue. pgAdmin usually satisfies the needs of many developers to a great extent, so most developers do not look for other GUI tools. That’s why pgAdmin remains the most popular GUI tool.

If you are looking for an open source solution that has a better UI and visual editor, then DBeaver and OmniDB are great solutions for you. For users looking for a free lightweight GUI that supports multiple database types, HeidiSQL may be right for you. If you are looking for more features than what’s provided by an open source tool, and you’re ready to pay a good price for it, then Navicat and DataGrip are the best GUI products on the market.


While I believe one of these tools should surely support your requirements, there are other popular GUI tools for PostgreSQL that you might like, including Valentina Studio, Adminer, DbVisualizer, and SQL Workbench. I hope this article will help you decide which GUI tool suits your needs.

Which Is The Best PostgreSQL GUI? 2019 Comparison

Here are the top PostgreSQL GUI tools covered in our previous 2019 post:

pgAdmin

DBeaver

Navicat

DataGrip

OmniDB

Original source: ScaleGrid Blog


COMMUNICATION

I) Introduction :

“Communication is your ticket to success, if you pay attention and learn to do it effectively” - Theo Gold (Author of Positive Thinking)

A vital ingredient of life is the ability to share feelings and expressions, to be heard, and to add meaning. In fact, the key to life is the means to communicate. In other words, only through communication can human life hold meaning. Communication is the process of understanding each other, expressing ideas, sharing opinions, and passing on information or facts. It is therefore imperative to be equipped with effective communication skills and techniques, so that the communication process becomes more meaningful and efficient and we can eventually succeed in any desired aim or task. We are all bound in relationships, whether at home, in the workplace, or in social affairs. The base of a successful relationship is communication, and to do it effectively we have to master the art of effective communication. To communicate effectively, one must understand the emotion behind the information being conveyed. Understanding communication skills such as listening, verbal and non-verbal communication, and managing stress can help improve the relationships one has with others.

“Your ability to communicate is an important tool in your pursuit of your goals, whether it is with your family, your co-workers or your clients and customers” - Lee Brown (American politician, criminologist and businessman)

For many, communication seems like a gift. In reality, it is a skill that can be learned through education and practice. Thus, I strongly believe that every individual can grow, succeed in their respective field, and achieve their desired goals if they become proficient in effective communication and are eager to learn and adopt it as an essential skill.

II) About Me :

Born and brought up in a defense area, a town in India, my upbringing was greatly influenced by military culture. As retired naval personnel, my father has always given utmost importance to a disciplined life, be it in education, sports, or the workplace. My mother, a homemaker, truly believes in freedom of open thinking and expression. She has been a source of inspiration for us siblings to pursue our dreams and has given her immense support in every manner to achieve them. I, being the youngest, had more privilege to be with her and to be nurtured in the shadow of a warrior’s wife, a tough warrior in real life.

As a defense ward, I was fortunate to have had my schooling in military schools throughout, with the chance to meet and interact with classmates coming from different parts of the country. Spending my early life with friends, each with a distinct personality, be it in their language, culture, lifestyle, or faith, was a great learning experience. I must say, defense kids are a breed apart. They can adjust anywhere and have the ability to get along with everyone because of their wide exposure in their early days. They are truly blessed with the skills to express themselves effectively and to bond easily, creating a valuable network in life.

After completing my graduation, I moved to the metro city of New Delhi, a city full of scope and hope, with opportunity in every field and avenues to fulfill our dreams. I did my post-graduation (PG) here with the ambition of a successful career in the corporate world, and hence chose an MBA with finance and marketing as specializations. Since then I have been a working professional in different sectors, namely IT/ITES, HR consulting, and real estate. My work domain largely involved business development, marketing communication (MarCom), client relationship management (CRM), and event management. My key result areas (KRAs) also included database management (DBMS), management information systems (MIS), and team handling.

With 12 years of experience across different sectors and domains, I always find scope for learning and improvement, and areas in which to challenge myself to move a level ahead of where I last stood. Upgrading communication tactics and strategy is something organizations demand in order to align with a sophisticated corporate purpose and achieve core objectives. Sometimes rejection and disapproval are the obvious outcome. However, the answer to all of this is to keep brushing up and strengthening communication strategies, keep them effective, and nurture leadership qualities with a dynamic approach at the same time.

“When you give yourself permission to communicate what matters to you in every situation you will have peace despite rejection or disapproval. Putting a voice to your soul helps you to let go of the negative energy of fear and regret” - Shannon L. Alder (An inspirational author)

III) Communication strategy and leadership:

When taking on a senior executive role, it’s important to quickly establish or elevate communication skill sets or programs. I understand that the higher we go, the more people within the organization want to know what we are going to do and how we will do it. We may have inherited hundreds of staff distributed across the world, to whom we may need to communicate our renewed mission, strategy, or brand objectives. Furthermore, there may be numerous other stakeholders outside the company that we have to communicate with, such as investors, banks, and customers. A disciplined communication strategy is essential to get the critical message across to key stakeholders without it being drowned out by noise or lost in translation.

It is crucial to implement an excellent communication strategy for success in the business world, one that encourages members of a company to work together effectively. How teams and team members within a company interact determines whether projects will run smoothly or be fraught with challenges. This is where leadership comes in. Good leadership and effective communication go hand in hand. Leaders interact with every team and a large number of employees, so how a leader communicates sets the tone for the rest of the organization. A good leader should be able to motivate, persuade, and encourage others to work towards a common goal.

“When the conduct of men is designed to be influenced, persuasion, kind, unassuming persuasion, should ever be adopted. It is an old and a true maxim, that ‘a drop of honey catches more flies than a gallon of gall.’” - Abraham Lincoln (Statesman, lawyer and former US president)

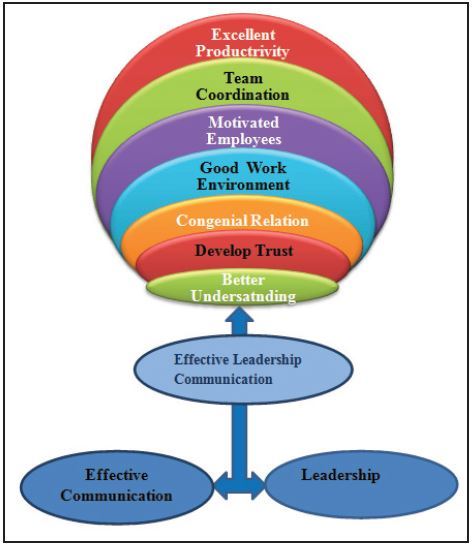

It’s essential to identify our leadership style to better understand how we interact with, and are perceived by, employees across the organization. Irrespective of position, we need to develop our individual leadership style and cultivate the essential habit of self-awareness. Even before entering a managerial position, leadership qualities are required, depending on the context and situation. The style may be goal-oriented, action-based, people-centric, behavioral, and so on. Excellent communication skills are required to manage a team in the workplace or to manage an organization efficiently, and communication is affected by different leadership styles. To conclude, effective communication and leadership together yield effective leadership communication. Communication makes a leader effective by developing better understanding within teams. This understanding builds employees’ trust in the leader and in each other and helps them work together, which further reinforces congenial relations among team members and creates an excellent work atmosphere. This enhances dedication towards work and eventually helps achieve the desired targets. A conceptual model of effective leadership communication can be explained as below:

Strategic Narrative -

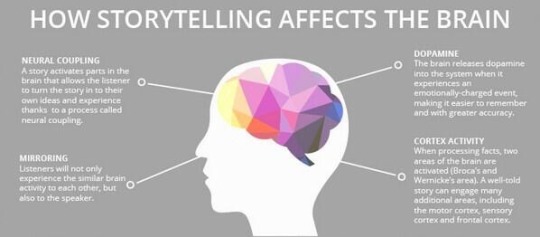

There has been a noticeable shift in the way communication is approached in organizations today: from a formal, directive method of communication to a more engaging and inclusive conversational style. The distance between the sender and the receiver is getting shorter, and the need for inclusivity and relationship building through communication is getting stronger. One of the major reasons for this shift is the evolution of the workforce and the relationships people hope to build in the workplace. Formality and hierarchy have made way for equality and a flatter organization structure. It’s a common refrain in executive suites these days: “We need a new narrative.” Storytelling is therefore a very effective way to excite and attract customers and to engage and motivate people, provided the story is concise but comprehensive.

“Storytelling can be described as the art of communication using stories and narratives”

When a person needs to be motivated or moved to action, communication in the form of stories generates a stronger reaction than data presented passively.

Active Listening, Receiving and Implementing Feedback –

“When people talk, listen completely. Most people never listen” - Ernest Hemingway (An American journalist, novelist, short-story writer, and sportsman)

Effective leaders know when they need to talk and, more importantly, when they need to listen. Employees’ opinions, ideas, and feedback are valuable. When they do share, actively engage in the conversation: pose questions, invite them to elaborate, and take notes. It’s important to stay in the moment and avoid interrupting. It’s critical, though, that you don’t just listen to the feedback; you also need to act on it to build faith, trust and transparency. By letting your employees know they were heard and then apprising them of any progress you make, you show that you value their perspective and are serious about improving.

IV) Conclusion:

Communication is the core of effective leadership. To influence and inspire a team, we have to champion transparency and practice empathy. We need to understand how others perceive our perspective on the basis of verbal and non-verbal cues, identify the scope for improvement, and align a development plan to guide and track progress.

#effective communication#leadership#leadership communication#storytelling#active listening#persuasion#empathy#compassion#teambuilding#transparency#feedback


Text

Laravel vs Yii: Best PHP Framework for Web Development

PHP is a popular general-purpose programming language that is well suited to building websites and can be embedded in HTML. According to BuiltWith, 3,090,319 active websites still use PHP. Beyond PHP's core functionality, developers have further options for building useful web applications. Laravel is a straightforward PHP framework, originally developed as a superior alternative to CodeIgniter, that is often used for developing web applications. Yii is an open-source PHP framework that allows for the quick development of modern web applications. Although both are built on PHP, the two frameworks each have unique features that give them a competitive advantage.

Components of Laravel

Some of the integral components of Laravel Framework are:

● Artisan, a command-line tool, makes it easier to create models and controllers, schedule tasks, and execute a wide range of custom commands.

● Eloquent is an Object-Relational Mapping (ORM) layer for database interaction.

● Support for additional database systems through packages.

● Migrations allow database schema changes to be versioned and applied.

● Simple PHP code may be used in views thanks to the Blade template engine.

● There are hundreds of Laravel standard libraries available.

● Extensive and practical official documentation that is constantly updated.

Components of Yii

Some of the key components of the Yii Framework include:

● A database access layer that supports a wide range of DBMS, including PostgreSQL, MySQL, SQLite, Oracle, and others.

● Support for third-party template engines.

● Strong community support with several tutorials and official documentation.

● The Gii extension accelerates coding by generating code automatically.

Key Differences between Laravel vs Yii

Although both frameworks are excellent at what they do and essential for web development, there are significant differences between them, including:

Installation

Both frameworks are compatible with PHP 5.4 or newer. Yii has all the required extensions built in, so installing it only requires unpacking the package into a web-accessible subdirectory. Laravel, on the other hand, may be installed using Composer or the Laravel installer and needs extra PHP extensions such as JSON and Mcrypt.

Client-side and scenario-based Validation

While Yii allows users to validate models in both ways, Laravel does not provide either sort of validation out of the box.

Routing Capabilities

Laravel supports resource routing, but the routes for each controller must be declared explicitly. Yii, by default, resolves routes automatically.

CRUD Generation

Only Yii can automatically generate frequently used CRUD code, through a specialized extension called Gii.

Extension Support

Compared to Yii, which supports only about 2,800 extensions, Laravel offers strong extension support with over 9,000 extensions.

Migrations

Both frameworks make migrations relatively simple since they both have methods for ensuring that no data is lost when switching from one database structure to another.

Testing Capabilities

Yii offers PHPUnit and Codeception out of the box, while Laravel offers PHPUnit and various Symfony testing components such as HttpKernel, DomCrawler, and BrowserKit for detecting and debugging bugs.

Security

Yii and Laravel both provide solutions for password security, authentication, and defence against SQL injection, cross-site scripting, and other security risks. Yii's role-based access control mechanism is more feature-rich, while Laravel needs third-party plugins for the same purpose.

Documentation

Yii does not have a steep learning curve, but its documentation is not particularly good. Laravel has vast, organized documentation with in-depth information, but its complexity makes it difficult to find the right information quickly.

Wrapping Up

When it comes to audience, Laravel is a fairly narrowly focused framework that solely targets web developers. Yii, on the other hand, has a wider audience and offers support for system administrators, novice web developers, and other users. Because Vindaloo Softtech has both Yii and Laravel engineers on staff, it can create strong, feature-rich web apps and websites that meet the complex demands of organizations with shorter response times.

#Laravel vs Yii#Laravel#Yii#PHP Development#Laravel Web Development#Laravel Framework#Yii Framework#Web Development#PHP Framework#Web App Development


Text

Most Popular SQL Interview Questions For Basic To Advanced

The way we maintain records has changed drastically with modernization: much of the data generated nowadays takes the form of pictures or videos, which have become our main means of keeping memories intact for years to come. Similarly, many organizations and firms now store company data as digitized documents in Database Management Systems (DBMS), which require a special language to query or extract the data the company needs from its database, and this is where SQL comes in.

What is SQL?

Structured Query Language (SQL) is a domain-specific programming language used by skilled professionals to manage data stored in a company’s database. SQL skills are in high demand in the market and form the foundation for any professional looking for a job or brighter prospects in the data industry. Here is a set of SQL interview questions that we believe you should prepare before going for an interview.

Here are Popular SQL Interview Questions & Answers Lists:-

Q1. Describe a DBMS?

A Database Management System (DBMS) is software responsible for creating, controlling, maintaining, and using a database. A DBMS may be thought of as a file manager that manages data in a database rather than saving it in a file system.

Q2. Define an RDBMS?

RDBMS stands for Relational Database Management System. It stores data in a collection of tables, which are related by common fields between the columns of the tables. It also provides relational operators to manipulate the data stored in the tables.

Q3. Describe SQL?

SQL stands for Structured Query Language and lets the user communicate with the database. It is a standard language used to perform tasks such as retrieving, updating, inserting, and deleting data in a database.

Q4. Define a Database?

It is an organized collection of data, structured so that the data is easy to access, store, retrieve, and manage.

Q5. Describe MySQL?

It is a multi-threaded, multi-user SQL database management system with more than 11 million installations across the globe. It is the second most well-known and widely used open-source database software.

MySQL is an Oracle-sponsored relational database management system (RDBMS) built on Structured Query Language. It runs on several operating systems, including Windows, Linux, and macOS.

Q6. In which language is MySQL written?

MySQL is written in C and C++.

Q7. Mention the technical specifications of MySQL?

Below are the technical specifications of MySQL:-

Drivers

Flexible structure

Geospatial support

Graphical tools

JSON support

OLTP and transactions

High performance

Manageable and easy to use

MySQL Enterprise Monitor

MySQL Enterprise Security

Replication and high availability

Security & storage management

Q8. Describe the difference between SQL and MySQL?

SQL stands for Structured Query Language and is used to interact with databases such as MySQL

MySQL is a database management system used for the structured storage of data

SQL statements, issued from a client or an application such as a PHP script, are used to store and retrieve data from this database

SQL is a computer language whereas MySQL is an application

SQL is used to query and manage data in many different Database Management Systems (DBMS)

Q9. Distinguish between the database and a table?

There are 4 noticeable differences between a database & a table:-

Tables showcase structured data in a database, whereas a database is a collection of tables

Tables are grouped with relations to create a dataset; the dataset forms the database.

Data stored in the table in any form is part of the database, but the other way around is not possible.

A table is a collection of rows and columns used to store data, whereas a database is a collection of organized data and features used to access tables.

Q10. Distinguish between Tables and fields?

A table is a collection of cells organized into columns and rows. A column is a vertical collection of cells, and a row is a horizontal collection of cells.

A column becomes a field once it is given a header (a name) that identifies the kind of data its cells hold.

A row, which holds one value for each field, constitutes a record.

E.g.

Table name:- Employee

Field names:- Emp ID, Emp Name, Date of Birth

Data:- 2866, Daniel Decker, 29/02/1984

Q11. What is the purpose of using a MySQL database server?

Below are some of the reasons why MySQL server is so famous with its users:-

MySQL is an open-source database management system that is free of charge for private developers and small enterprises.

MySQL’s community is vast and supportive, thus any issues faced are resolved at the earliest.

Has multiple stable versions available

It is extremely quick, dependable, and beginner-friendly

The download is free of cost

Q12. Describe the various tables present in MySQL?

There are five main table types (storage engines) in MySQL:-

MyISAM

Heap

Merge

InnoDB

ISAM

Q13. How can an Operator install MySQL?

There are multiple ways of installing MySQL on one’s system, but the best way is to do it manually. Manual installation gives the user a better understanding of the system and a firmer grasp of the database. There are several benefits linked to the manual installation of MySQL:-

Reinstalling, creating backups, or moving databases can be achieved in less than a minute.

Provides precise control over how and when MySQL starts or shuts down.

MySQL can even be installed on a USB drive

Q14. How can a user check the MySQL version?

On Windows, the MySQL command-line tool shows the version information without any flags, but for more detailed information the user can run the command below

mysql> SHOW VARIABLES LIKE '%version%';

and it will show a detailed description of the version of MySQL that the user is using.

Q15. How to add columns in MySQL?

A column is a vertical set of cells in a table, and a row is a horizontal set of cells. To add a column in MySQL, the ALTER TABLE statement may be used as follows:

ALTER TABLE table_name

ADD COLUMN column_name column_definition [FIRST|AFTER existing_column];
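The ALTER TABLE statement above can be tried out with a minimal sketch. It uses Python's built-in sqlite3 module as a stand-in for a MySQL server, and the `employee` table and its columns are hypothetical. One assumption to note: SQLite always appends the new column and does not accept MySQL's FIRST or AFTER placement options.

```python
import sqlite3

# In-memory SQLite database, used here only as a stand-in for MySQL.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, emp_name TEXT)")

# Add a new column; SQLite appends it after the existing columns.
conn.execute("ALTER TABLE employee ADD COLUMN date_of_birth TEXT")

# PRAGMA table_info returns one row per column; index 1 holds the column name.
columns = [row[1] for row in conn.execute("PRAGMA table_info(employee)")]
print(columns)
```

The printed list shows the new column at the end of the table definition.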

Q16. In MySQL how can you delete a table?

The DROP TABLE statement removes not only the data in the table but also its structure and definition from the database, permanently. The user therefore needs to be extremely careful with this command, because once a table is dropped MySQL offers no option to recover it. The command is as follows:-

DROP TABLE table_name;

Q17. Define a Primary Key?

A primary key is a combination of one or more fields that uniquely identifies a row. It is a special kind of unique key with an implicit NOT NULL constraint, meaning primary key values cannot be NULL.

Q18. Define a Unique Key?

A unique key is a constraint that uniquely identifies each record in a table by enforcing uniqueness for a column or a set of columns.

Q19. Define a Foreign Key?

This is a key in one table that refers to the primary key of another table. The relationship between the two tables is created by having the foreign key reference the primary key of the other table.

Q20. Describe a Join?

It is a keyword used to query data from multiple tables based on the relationships between the fields of the tables. Keys play a crucial part when JOINs are used.

Q21. Describe the multiple ‘JOIN’ and explain each?

JOINs help the user retrieve data based on the relationships between tables. Following are the types of ‘JOIN’ used in SQL:-

Inner JOIN – This JOIN returns rows only when there is at least one match between the tables

Right JOIN – This JOIN returns the rows that match between the tables plus all rows of the right-hand side table. Put simply, it returns all rows from the right-hand side table irrespective of any matches in the left-hand side table.

Left JOIN – This JOIN returns the rows that match between the tables plus all rows of the left-hand side table. Put simply, it returns all rows from the left-hand side table irrespective of any matches in the right-hand side table.

Full JOIN – This JOIN returns rows when there is a match in either of the tables. In other words, it returns all the rows from both the left-hand and right-hand side tables.
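The difference between an inner and a left join can be seen in a small sketch. It runs on Python's built-in sqlite3 module rather than MySQL, and the `employee` and `department` tables and their rows are made up for illustration. Right and full joins are left out because older SQLite versions do not support them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE department (dept_id INTEGER PRIMARY KEY, dept_name TEXT);
    CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, emp_name TEXT, dept_id INTEGER);
    INSERT INTO department VALUES (1, 'Sales'), (2, 'Finance');
    INSERT INTO employee VALUES (10, 'Asha', 1), (11, 'Ben', NULL);
""")

# INNER JOIN: only employees that have a matching department row.
inner_rows = conn.execute("""
    SELECT e.emp_name, d.dept_name
    FROM employee e
    INNER JOIN department d ON d.dept_id = e.dept_id
    ORDER BY e.emp_id
""").fetchall()

# LEFT JOIN: every employee, with NULL (None) where no department matches.
left_rows = conn.execute("""
    SELECT e.emp_name, d.dept_name
    FROM employee e
    LEFT JOIN department d ON d.dept_id = e.dept_id
    ORDER BY e.emp_id
""").fetchall()

print(inner_rows)
print(left_rows)
```

Ben has no department, so he appears only in the left join result, paired with `None`.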

Q22. Define Normalization?

It is the process of reducing redundancy and dependency by organizing the fields and tables of a database. The primary aim of normalization is to ensure that an addition, modification, or deletion of data can be made in a single table.

Q23. Define De-Normalization?

It is a technique for accessing data by moving from higher to lower normal forms of a database. It also deliberately introduces redundancy into a table by incorporating data from the related tables.

Q24. Distinguish the multiple normalizations?

The most commonly used normal forms are:-

First Normal Form (1NF):- Eliminates all duplicate columns from a table, creates tables for the related data, and identifies the unique columns.

Second Normal Form (2NF):- Meets all the requirements of 1NF, places subsets of data in separate tables, and creates relationships between tables using primary keys.

Third Normal Form (3NF):- Meets all the requirements of 2NF and removes the columns that are not dependent on the primary key.

Fourth Normal Form (4NF):- Meets all the requirements of 3NF and has no multi-valued dependencies.

Q25. Define a View?

A view is a virtual table that contains a subset of the data held in one or more tables. Views are not physically stored, so they take up less storage space. A view can combine data from one or more tables, depending on the relationship between them.
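As a minimal sketch of how a view behaves, the example below uses Python's built-in sqlite3 module in place of MySQL. The `employee` table, the `high_earners` view and the salary threshold are hypothetical. Because the view stores no data of its own, it reflects changes to the underlying table immediately.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, emp_name TEXT, salary INTEGER);
    INSERT INTO employee VALUES (1, 'Asha', 5000), (2, 'Ben', 3000);

    -- The view is only a stored query over the employee table.
    CREATE VIEW high_earners AS
        SELECT emp_id, emp_name FROM employee WHERE salary >= 4000;
""")

before = conn.execute("SELECT emp_name FROM high_earners ORDER BY emp_id").fetchall()

# Changing the base table changes what the view returns.
conn.execute("UPDATE employee SET salary = 4500 WHERE emp_id = 2")
after = conn.execute("SELECT emp_name FROM high_earners ORDER BY emp_id").fetchall()

print(before, after)
```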

Q26. Define an Index?

An index is a performance tuning method that allows faster retrieval of records from a table. An index creates an entry for each value, which makes data retrieval quicker.

Q27. Describe the distinct kinds of Indexes?

There are primarily 3 types of indexes:-

Unique Index – This index does not allow the field to hold duplicate values if the column is uniquely indexed. A unique index can be applied automatically when a primary key is defined.

Clustered Index – This index reorders the physical order of the table and searches based on key values. Each table may have only one clustered index.

Non-Clustered Index – This index does not alter the physical order of the table and maintains a logical order of the data. In SQL Server, each table may have up to 999 non-clustered indexes.
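To see an index being picked up by the query planner, here is a small sketch that uses Python's built-in sqlite3 module instead of MySQL or SQL Server. The table and the index name `idx_employee_name` are hypothetical. SQLite's EXPLAIN QUERY PLAN output differs in wording between versions, so the check only looks for the index name in the plan text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, emp_name TEXT, city TEXT);
    CREATE INDEX idx_employee_name ON employee (emp_name);
""")

# Ask SQLite how it would execute a lookup by name.
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT * FROM employee WHERE emp_name = 'Asha'"
).fetchall()

# The last field of each plan row is a human-readable description.
plan_text = " ".join(str(row[-1]) for row in plan)
uses_index = "idx_employee_name" in plan_text
print(plan_text)
```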

Q28. Define a Cursor?

A database cursor is a control structure that enables traversal over the rows or records in a table. It can be viewed as a pointer to one row in a set of rows. It is very useful for traversal tasks such as the retrieval, addition, and removal of database records.

Q29. Define a Database relationship and what are they in SQL?

It is defined as the link between the tables in a database. There are several database-based relationships, and they are as follows:-

One to One relationship

One to Many relationship

Many to One relationship

Self-referencing relationship

Q30. Describe a Query?

A database query is code written to retrieve information from the database. The query can be designed so that its result set matches what the user expects; put simply, it is a question put to the database.

Q31. Describe a Subquery?

As the name suggests, it is a query inside another query. The outer query is known as the main query and the inner query is called the subquery. A non-correlated subquery is executed first, and its result is then passed on to the main query.

Q32. Describe the types of Subqueries?

There are majorly 2 styles of subqueries:-

Correlated Subquery – This cannot be run as an independent query, because it refers to a column of a table listed in the FROM clause of the main query.

Non-Correlated Subquery – This can be run as an independent query, and its output is substituted into the main query.
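The two kinds of subquery can be compared side by side in a short sketch. It uses Python's built-in sqlite3 module rather than MySQL, and the `employee` table and salary figures are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, emp_name TEXT, dept TEXT, salary INTEGER);
    INSERT INTO employee VALUES
        (1, 'Asha', 'Sales',   5000),
        (2, 'Ben',  'Sales',   3000),
        (3, 'Chen', 'Finance', 6000),
        (4, 'Dana', 'Finance', 5500);
""")

# Non-correlated: the inner query runs on its own (company average = 4875).
above_company_avg = conn.execute("""
    SELECT emp_name FROM employee
    WHERE salary > (SELECT AVG(salary) FROM employee)
    ORDER BY emp_id
""").fetchall()

# Correlated: the inner query refers to e.dept from the outer query,
# so it is evaluated per outer row (Sales avg = 4000, Finance avg = 5750).
above_dept_avg = conn.execute("""
    SELECT e.emp_name FROM employee e
    WHERE e.salary > (SELECT AVG(salary) FROM employee WHERE dept = e.dept)
    ORDER BY e.emp_id
""").fetchall()

print(above_company_avg)
print(above_dept_avg)
```

Dana earns more than the company average but less than her department's average, which is why the two results differ.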

Q33. Define a Stored Procedure?

A stored procedure is a routine made up of several SQL statements that access the database system. The statements are consolidated into the stored procedure, which can then be executed whenever and wherever required.

Q34. Define a ‘Trigger’?

A trigger is code that executes automatically in response to some event on a table or view in a database. E.g., when a new hire joins, new records with fields such as employee ID, name, and date of birth need to be entered, and a trigger can do this automatically.
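A trigger can be sketched as follows, using Python's built-in sqlite3 module instead of MySQL (SQLite's trigger syntax is similar but not identical). The `audit_log` table and the `log_new_hire` trigger are hypothetical names chosen for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, emp_name TEXT);
    CREATE TABLE audit_log (emp_id INTEGER, action TEXT);

    -- Runs automatically after every INSERT on employee.
    CREATE TRIGGER log_new_hire AFTER INSERT ON employee
    BEGIN
        INSERT INTO audit_log VALUES (NEW.emp_id, 'hired');
    END;
""")

# Only the employee table is written to explicitly.
conn.execute("INSERT INTO employee VALUES (2866, 'Daniel Decker')")

log_rows = conn.execute("SELECT emp_id, action FROM audit_log").fetchall()
print(log_rows)
```

The audit row appears without any explicit insert into `audit_log`.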

Q35. Distinguish between DELETE and TRUNCATE commands?

The DELETE command removes rows from a table, and a WHERE clause may be used to restrict it to a conditional set of rows. COMMIT and ROLLBACK can be performed after a DELETE statement.

TRUNCATE removes every row from the table. A TRUNCATE operation cannot be rolled back.
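The rollback behaviour of DELETE can be demonstrated with a minimal sketch on Python's built-in sqlite3 module. This is an assumption-laden stand-in: SQLite has no TRUNCATE statement, so only the DELETE half of the comparison is shown, and the `employee` table is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, dept TEXT)")
conn.executemany(
    "INSERT INTO employee VALUES (?, ?)",
    [(1, "Sales"), (2, "Sales"), (3, "Finance")],
)
conn.commit()

# DELETE with a WHERE clause removes only the matching rows.
conn.execute("DELETE FROM employee WHERE dept = 'Sales'")
count_after_delete = conn.execute("SELECT COUNT(*) FROM employee").fetchone()[0]

# The deletion has not been committed yet, so it can still be undone.
conn.rollback()
count_after_rollback = conn.execute("SELECT COUNT(*) FROM employee").fetchone()[0]

print(count_after_delete, count_after_rollback)
```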

Q36. Define Local and global variables and describe their disparities?

Local variables are variables that are declared and exist only within a function. They are not known to other functions and cannot be referred to or used by them. They are created each time the function is called.

Global variables are variables that can be used or exist throughout the program. A variable declared as global cannot be declared again inside a function. Global variables are not created each time a function is called.

Q37. Define a CONSTRAINT?

A constraint is used to restrict the data that can be stored in a table. It can be specified when creating or altering the table. Some examples of constraints are:-

NOT NULL

CHECK

DEFAULT

UNIQUE

PRIMARY KEY

FOREIGN KEY
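Several of the constraints listed above can be seen rejecting bad data in one short sketch. It uses Python's built-in sqlite3 module in place of MySQL; the `employee` table, its columns and the `is_rejected` helper are hypothetical and exist only for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE employee (
        emp_id   INTEGER PRIMARY KEY,
        emp_name TEXT NOT NULL,
        email    TEXT UNIQUE,
        age      INTEGER CHECK (age >= 18),
        status   TEXT DEFAULT 'active'
    )
""")
conn.execute(
    "INSERT INTO employee (emp_id, emp_name, email, age) "
    "VALUES (1, 'Asha', 'asha@example.com', 30)"
)

def is_rejected(sql):
    """Return True if the statement violates a constraint."""
    try:
        conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True

null_name_rejected = is_rejected(
    "INSERT INTO employee (emp_id, emp_name, age) VALUES (2, NULL, 25)")
duplicate_email_rejected = is_rejected(
    "INSERT INTO employee (emp_id, emp_name, email, age) "
    "VALUES (3, 'Ben', 'asha@example.com', 25)")
underage_rejected = is_rejected(
    "INSERT INTO employee (emp_id, emp_name, age) VALUES (4, 'Chen', 16)")

# DEFAULT filled in the status column that the first INSERT omitted.
default_status = conn.execute(
    "SELECT status FROM employee WHERE emp_id = 1").fetchone()[0]
print(null_name_rejected, duplicate_email_rejected, underage_rejected, default_status)
```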

Q38. Define Data Integrity?

It defines the accuracy and consistency of the data stored in a database. It can also define integrity constraints that enforce business rules on the data when it is entered into the application or database.

Q39. Describe auto Increment?

This allows a new unique number to be generated automatically whenever a record is inserted into the table. The AUTO_INCREMENT keyword is used in MySQL and the IDENTITY keyword in SQL Server.

This keyword is typically used on the primary key column.
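Auto-incrementing keys can be tried with a small sketch on Python's built-in sqlite3 module. Note the assumption: SQLite spells the keyword AUTOINCREMENT and requires it on an INTEGER PRIMARY KEY column, which differs from the syntax of other database systems. The `employee` table is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employee ("
    "emp_id INTEGER PRIMARY KEY AUTOINCREMENT, emp_name TEXT)"
)

# No emp_id is supplied; the database generates one for each row.
conn.execute("INSERT INTO employee (emp_name) VALUES ('Asha')")
conn.execute("INSERT INTO employee (emp_name) VALUES ('Ben')")

ids = [row[0] for row in conn.execute("SELECT emp_id FROM employee ORDER BY emp_id")]
print(ids)
```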

Q40. Distinguish between Cluster and Non-Cluster Index?

A clustered index makes data retrieval easier by changing the way the records are stored. The database sorts the rows by the column that is set as the clustered index.

A non-clustered index does not change the way the data is stored; instead, it creates a completely separate object within the table. After a search, it points back to the original table rows.

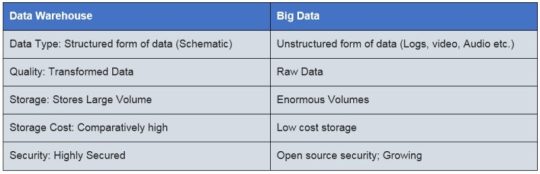

Q41. Describe a Datawarehouse?

It is a central repository of data from numerous data sources. The data is consolidated, transformed, and made available for mining and online processing. A subset of warehouse data is called a data mart.

Q42. Define a Self-Join?

It is a query in which a table is joined to itself. It is used to compare values in a column with other values in the same column of the same table.
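A self-join is easiest to see on a table that refers to itself. The sketch below uses Python's built-in sqlite3 module in place of MySQL; the `employee` table with a `manager_id` column is a hypothetical example, aliased twice as `e` (employee) and `m` (manager).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, emp_name TEXT, manager_id INTEGER);
    INSERT INTO employee VALUES (1, 'Asha', NULL), (2, 'Ben', 1), (3, 'Chen', 1);
""")

# The same table appears twice under different aliases.
pairs = conn.execute("""
    SELECT e.emp_name, m.emp_name
    FROM employee e
    JOIN employee m ON m.emp_id = e.manager_id
    ORDER BY e.emp_id
""").fetchall()
print(pairs)
```

Asha has no manager, so she appears only on the manager side of the pairs.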

Q43. Define a Cross-Join?

A cross join produces the Cartesian product of two tables: the number of rows in the result is the number of rows in the first table multiplied by the number of rows in the second table. If a WHERE clause is used in a cross join, the query works like an INNER JOIN.
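Both claims about the cross join can be checked in a few lines. This sketch uses Python's built-in sqlite3 module rather than MySQL, with hypothetical `employee` and `department` tables; the WHERE clause used here is one that relates the two tables.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE department (dept_id INTEGER PRIMARY KEY, dept_name TEXT);
    CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, emp_name TEXT, dept_id INTEGER);
    INSERT INTO department VALUES (1, 'Sales'), (2, 'Finance');
    INSERT INTO employee VALUES (10, 'Asha', 1), (11, 'Ben', 2), (12, 'Chen', 1);
""")

# 3 employees x 2 departments = 6 combinations.
cross_count = conn.execute(
    "SELECT COUNT(*) FROM employee CROSS JOIN department").fetchone()[0]

# A WHERE clause relating the tables makes the cross join act like an inner join.
cross_filtered = conn.execute("""
    SELECT e.emp_name, d.dept_name
    FROM employee e CROSS JOIN department d
    WHERE e.dept_id = d.dept_id
    ORDER BY e.emp_id
""").fetchall()

inner = conn.execute("""
    SELECT e.emp_name, d.dept_name
    FROM employee e INNER JOIN department d ON e.dept_id = d.dept_id
    ORDER BY e.emp_id
""").fetchall()
print(cross_count, cross_filtered == inner)
```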

Q44. Describe User Defined functions and their types?

User-defined functions are written to apply some logic whenever it is required, so the same logic need not be written multiple times. Instead, the function can be called or executed at any point.

There are 3 types of user-defined functions:-

Scalar functions – return a single value of the type defined in the RETURNS clause

Inline table-valued functions – return a table

Multi-statement table-valued functions – return a table

Q45. Describe Collation?

It is a set of rules that determines how character data is sorted and compared. It determines, for example, how A and a are compared, how characters of other languages are compared, and whether the width of the characters matters.

Character code values (for example ASCII) may be used to compare these character data.

Q46. Describe the distinct styles of Collation sensitivity?

These are the different types of collation sensitivities:-

Case Sensitivity – A & a, and B & b.

Accent Sensitivity

Kana Sensitivity – Japanese Kana characters.

Width Sensitivity – Single-byte & double-byte character.

Q47. Define recursive Stored Procedure?

A stored procedure that calls itself until it reaches some boundary condition. This recursive function or procedure helps programmers use the same set of code ‘n’ number of times.

Q48. How can a user add foreign keys in MySQL?

A foreign key links one or more tables together in MySQL. It matches the primary key field of another table in order to connect the two tables, which allows a parent-child relationship between the tables. It can be added in either of two ways:-

Using the CREATE TABLE command

Using the ALTER TABLE command

Following is the syntax used to define a foreign key using CREATE TABLE or ALTER TABLE

[CONSTRAINT constraint_name]

FOREIGN KEY [foreign_key_name] (col_name, …)

REFERENCES parent_tbl_name (col_name, …)
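The CONSTRAINT ... FOREIGN KEY ... REFERENCES syntax above can be exercised in a minimal sketch. It runs on Python's built-in sqlite3 module instead of MySQL, and one assumption matters: SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`. The table names and the constraint name `fk_employee_department` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite ignores foreign key constraints unless this is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE department (dept_id INTEGER PRIMARY KEY, dept_name TEXT);
    CREATE TABLE employee (
        emp_id   INTEGER PRIMARY KEY,
        emp_name TEXT,
        dept_id  INTEGER,
        CONSTRAINT fk_employee_department
            FOREIGN KEY (dept_id) REFERENCES department (dept_id)
    );
    INSERT INTO department VALUES (1, 'Sales');
    INSERT INTO employee VALUES (10, 'Asha', 1);
""")

# Department 99 does not exist, so the child row is refused.
try:
    conn.execute("INSERT INTO employee VALUES (11, 'Ben', 99)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
print(orphan_rejected)
```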

Q49. Describe how can the user create a database in MySQL workbench?

The steps are as follows:-

Launch MySQL Workbench and log in using a username and password

Choose the Schema menu from the navigation tab

Right-click under the schema menu and pick the ‘Create Schema’ option.

Or

Click on the database icon (similar to a barrel)

A new dialog box will appear

Fill in all the details in the dialog box

Click on Apply and Finish to complete the database creation.

Q50. How can the user create a Table in MySQL workbench?

Launch the MySQL workbench

Go to the navigation tab

Choose the ‘Schema Menu’ which will showcase all the previously created databases

Select any database and right-click on it

In the sub-menus, we need to select the tables option

Right-click on the tables sub-menu

Choose the ‘Create Table’ option


#tricky sql queries for interview#tricky sql queries for interview pdf#scenario based sql interview questions#sql queries interview questions


Text

Top 50 SQL Interview Questions and Answers

Auto increment allows the user to have a unique number generated whenever a new record is inserted into the table. AUTO_INCREMENT is the keyword in MySQL, and the IDENTITY keyword can be used in SQL Server for auto-incrementing; Oracle uses sequences or identity columns instead. This keyword is mainly used to create the primary key for the table. Normalization organizes the existing tables and their fields within the database, resulting in minimum duplication. It is used to simplify a table as much as possible while retaining the unique fields. If you have very little to say for yourself, the interviewer might think you have nothing to say. I make myself feel great before the interview begins. With this question, the recruiter will judge you on how you prioritise your task list. You might need a combination of different types of questions in order to fully cover the issue, and this may differ between individuals. Candidates show up to interviews with the aim of impressing you. A primary key is a special kind of unique key. A foreign key is used to maintain referential integrity between two data tables. It prevents actions that can destroy links between a child and a parent table. A primary key is used to define a column that uniquely identifies each row. NULL values and duplicate values are not allowed in the primary key column. However, you might not be given this hint, so it is on you to remember that in such a situation a subquery is exactly what you need. After you go through the fundamental SQL interview questions, you are likely to be asked something more specific. And there's no better feeling in the world than acing a question you practiced.
Yet if all you do is practice SQL interview questions while ignoring the basics, something is going to be missing. Asking too many questions may derail the interview, but asking none will make you look uninterested or unprepared. When you are taking the test, you should prioritise making sure that all parts of it run. Top 50 Google Analytics interview questions & answers. Nerve coaching from the interview guys will blast away your anxious feelings so that you can be laser-focused and sure-footed when you land in the hot seat. Great, challenging, and I'm kind of proud I was able to solve it under such pressure.

Those who pass the phone or video interview move on to the in-person interviews. Again, it's a tricky question, and not just in terms of working it out. Beyond allowing myself to get some jitters out, I genuinely enjoyed the chance to get a better feel for the campus and atmosphere. Knowing the very specific answer to some very specific SQL interview questions is great, but it's not going to help you if you're asked something unexpected. Don't get me wrong: targeted preparation can certainly help. Note that this is not a NOT NULL constraint; do not confuse the default value constraint with disallowing NULL entries. The default value for the column is set only when the row is created for the first time and the column value is omitted on the INSERT. Denormalization is a database optimization technique for increasing database performance by adding redundant data to one or more tables. Normalization is a database design technique that organizes tables to reduce data redundancy and data dependency. SQL constraints are the set of rules that restrict the insertion, deletion, or updating of data in the database. They limit the type of data going into a table in order to maintain data accuracy and integrity. CREATE: used to create the database or its objects such as tables, indexes, functions, views, triggers, and so on. A unique key is used to uniquely identify each record in a database. A CHECK constraint is used to limit the values or type of data that can be stored in a column. A primary key is a column whose values uniquely identify every row in a table. The main role of a primary key in a data table is to maintain the internal integrity of the table. Query/Statement: they're often used interchangeably, but there's a slight difference.
Below are various SQL interview questions and answers that reaffirm your knowledge of SQL and provide new insights into the language. Go through these SQL interview questions to refresh your knowledge before any interview. Therefore, your next task will not be about explaining what SQL constraints and keys mean in general, although you must be very familiar with the concept. You will instead be given the chance to demonstrate your ability to elaborate on a specific type of SQL constraint: the foreign key constraint. Write a SQL query to find the 10th tallest peak ("Elevation") from a "Mountain" table. Modifying the column to a NULL value, or even an INSERT operation specifying a NULL value for the column, is allowed. Click the Set Primary Key toolbar button to set the StudId column as the primary key column. A RIGHT OUTER JOIN is one of the JOIN operations that allows you to specify a JOIN clause. It preserves the unmatched rows from the Table2 table, joining them with NULLs in the shape of the Table1 table. "And then, as soon as we cut that candidate, everybody burst out laughing, but you can't be in on the trick." There are several "weaknesses" that you can turn into positive situations to give an answer that your interviewer will respect. This environment covers the hardware, servers, operating system, web browsers, other software systems, etc. Responsibilities that you were not able to complete. "I figure out which task is most important, and then I try to do that task first before finishing the next one." For example, there are accounting packages, inventory packages, and so on.
While it's easier to ask generic questions, you risk not getting the specific information you need to make the best hiring decision. Query optimization is a process in which the database system compares different query plans and selects the one with the least cost. A primary key created on more than one column is called a composite primary key. DELETE removes some or all rows from a table based on a condition. TRUNCATE removes ALL rows from a table by de-allocating the memory pages. In some database systems, such as Oracle and MySQL, the operation cannot be rolled back. The DROP command removes a table from the database completely. The main difference between the two is that a DBMS stores your data as files, whereas an RDBMS stores your data in tabular form. Also, as the keyword Relational implies, an RDBMS allows different tables to have relationships with one another using primary keys, foreign keys, and so on. This creates a dynamic chain of hierarchy between tables, which also places functional restrictions on the tables. Assume that there are at least 10 records in the Mountain table. That's why leading companies are increasingly moving away from generic questions and are instead giving candidates a crack at real-life internal scenarios. "At Airbnb, we gave prospective hires access to the tools we use and a vetted data set, one where we knew its limitations and problems. It allowed them to focus on the shape of the data and frame answers to problems that were meaningful to us," notes Geggatt. A CHECK constraint checks for a specific condition before data is inserted into a table. If the data passes all the CHECK constraints, it is inserted into the table; otherwise the insertion is rejected. The CHECK constraint ensures that all values in a column satisfy certain conditions.
A NOT NULL constraint restricts the insertion of NULL values into a column. If we use a NOT NULL constraint on a column, we cannot omit the value of that column when inserting data into the table. The DEFAULT constraint lets you set a default value for the column.
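
The NOT NULL, DEFAULT, and CHECK behaviour described in this post can be tried out with a short script. This is a minimal sketch using Python's built-in sqlite3 module. The `student` table, its columns, and the sample rows are hypothetical names chosen for illustration, and other database systems may report constraint violations differently.

```python
import sqlite3


def demo_constraints():
    """Exercise NOT NULL, DEFAULT, and CHECK on a hypothetical table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE student (
            stud_id INTEGER PRIMARY KEY,
            name    TEXT NOT NULL,
            city    TEXT DEFAULT 'Unknown',
            age     INTEGER CHECK (age >= 18)
        )
        """
    )
    results = {}

    # DEFAULT is applied only when the column is left out of the INSERT.
    conn.execute("INSERT INTO student (stud_id, name, age) VALUES (1, 'Asha', 20)")
    results["default_city"] = conn.execute(
        "SELECT city FROM student WHERE stud_id = 1"
    ).fetchone()[0]

    # DEFAULT is not NOT NULL: an explicit NULL is still accepted.
    conn.execute(
        "INSERT INTO student (stud_id, name, city, age) VALUES (2, 'Ravi', NULL, 21)"
    )
    results["explicit_null_city"] = conn.execute(
        "SELECT city FROM student WHERE stud_id = 2"
    ).fetchone()[0]

    # NOT NULL rejects a row that omits the name.
    try:
        conn.execute("INSERT INTO student (stud_id, age) VALUES (3, 22)")
        results["not_null_rejected"] = False
    except sqlite3.IntegrityError:
        results["not_null_rejected"] = True

    # CHECK rejects a row whose age fails the condition.
    try:
        conn.execute("INSERT INTO student (stud_id, name, age) VALUES (4, 'Mina', 15)")
        results["check_rejected"] = False
    except sqlite3.IntegrityError:
        results["check_rejected"] = True

    results["row_count"] = conn.execute("SELECT COUNT(*) FROM student").fetchone()[0]
    conn.close()
    return results
```

Calling `demo_constraints()` returns a dictionary showing which inserts were accepted; only the two valid rows end up in the table.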


Text

Top 50 SQL Interview Questions and Answers

Auto increment lets the user generate a unique number whenever a new record is inserted into the table. AUTO_INCREMENT is the keyword in MySQL, and the IDENTITY keyword can be used in SQL Server for auto-incrementing; Oracle uses sequences or, from version 12c onward, identity columns. This keyword is mostly used to generate the primary key for the table. Normalization organizes the existing tables and their fields within the database, resulting in minimal duplication. It is used to simplify a table as much as possible while retaining the unique fields. If you have very little to say for yourself, the interviewer might think you have nothing to say. I make myself feel great before the interview begins. With this question, the employer will assess how you prioritise your task list. I look forward to working with Damien again in the future. You might need a combination of different types of questions in order to fully cover the issue, and this may differ between individuals. Candidates show up to interviews with the aim of impressing you. A primary key is a special kind of unique key. A foreign key is used to maintain referential integrity between two data tables. It prevents actions that would destroy links between a child and a parent table. A primary key is used to define a column that uniquely identifies each row. NULL values and duplicate values are not allowed in the primary key column. However, you may not be given this hint, so it is on you to remember that in such a scenario a subquery is exactly what you need. After you go through the basic SQL interview questions, you are likely to be asked something more specific. And there's no better feeling in the world than acing a question you practiced.
But if all you do is practice SQL interview questions while neglecting the basics, something is going to be missing. Asking too many questions may derail the interview and irritate them, but asking none will make you look uninterested or unprepared. When you are taking the test, you should prioritise making sure that all parts of it run. Top 50 Google Analytics interview questions & answers. Nerve coaching from the interview experts that will blast away your anxious feelings so that you can be laser-focused and sure-footed once you land in the hot seat. Great, challenging, and I'm kind of proud I was able to solve it under such pressure. Those who pass the phone or video interview go on to the in-person interviews. Once again, it's a tricky question, and not just in terms of working it out. Beyond allowing myself to get some of the screaming meemies out, I really enjoyed the chance to get a better feel for the campus and atmosphere. Knowing the very specific answers to some very specific SQL interview questions is great, but it's not going to help you if you're asked something unexpected. Don't get me wrong: targeted preparation can certainly help. Keep in mind that this is not a NOT NULL constraint; do not confuse the DEFAULT value constraint with disallowing NULL entries. The default value for the column is set only when the row is first created and the column value is omitted from the INSERT. Denormalization is a database optimization technique that improves performance by adding redundant data to one or more tables. Normalization is a database design technique that organizes tables to reduce data redundancy and data dependency. SQL constraints are the set of rules that restrict the insertion, deletion, or updating of data in a database.
They restrict the type of data entering a table in order to maintain data accuracy and integrity. CREATE: used to create the database or its objects, such as tables, indexes, functions, views, and triggers. A unique key is used to uniquely identify each record in a database. A CHECK constraint is used to restrict the values or type of data that can be stored in a column. A primary key is a column whose values uniquely identify every row in a table. The main role of a primary key in a data table is to maintain the internal integrity of that table. Query/Statement: these terms are often used interchangeably, but there's a slight difference. Listed below are various SQL interview questions and answers that reinforce your knowledge of SQL and provide new insights into the language. Go through these SQL interview questions to refresh your knowledge before any interview.
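
The primary key, unique key, and foreign key rules discussed in this post can be checked with a small experiment. The sketch below uses Python's built-in sqlite3 module. The `department` and `employee` tables and all sample values are hypothetical, and note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`.

```python
import sqlite3


def demo_keys():
    """Show which statements primary, unique, and foreign keys reject."""
    conn = sqlite3.connect(":memory:")
    # SQLite checks foreign keys only when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(
        """
        CREATE TABLE department (
            dept_id INTEGER PRIMARY KEY,
            name    TEXT UNIQUE
        );
        CREATE TABLE employee (
            emp_id  INTEGER PRIMARY KEY,
            email   TEXT UNIQUE,
            dept_id INTEGER REFERENCES department(dept_id)
        );
        INSERT INTO department VALUES (1, 'Engineering');
        INSERT INTO employee VALUES (10, 'a@example.com', 1);
        """
    )

    # Each statement below violates exactly one key rule.
    attempts = {
        "duplicate_primary_key": "INSERT INTO employee VALUES (10, 'b@example.com', 1)",
        "duplicate_unique_email": "INSERT INTO employee VALUES (11, 'a@example.com', 1)",
        "unknown_department": "INSERT INTO employee VALUES (12, 'c@example.com', 99)",
        "delete_referenced_parent": "DELETE FROM department WHERE dept_id = 1",
    }
    rejected = {}
    for label, sql in attempts.items():
        try:
            conn.execute(sql)
            rejected[label] = False
        except sqlite3.IntegrityError:
            rejected[label] = True

    conn.close()
    return rejected
```

The last two attempts illustrate referential integrity: a child row cannot point at a missing parent, and a parent row cannot be deleted while a child still references it.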

As a result, your next task won't be about explaining what SQL constraints and keys mean in general, although you should be very familiar with the concept. Instead, you will be given the chance to demonstrate your ability to elaborate on a specific type of SQL constraint: the foreign key constraint. Write a SQL query to find the 10th tallest peak ("Elevation") from a "Mountain" table. Updating the column to a NULL value, or even an INSERT that explicitly specifies NULL for the column, is allowed. Click the Set Primary Key toolbar button to set the StudId column as the primary key column. A RIGHT OUTER JOIN is one of the JOIN operations that lets you specify a JOIN clause. It preserves the unmatched rows from Table2 (the right table), joining them with NULLs in place of the Table1 columns. And then, as soon as we cut that candidate, everyone burst out laughing, but you can't be in on the trick. There are many "weaknesses" that you can turn into positive situations to give an answer that your interviewer will respect and notice. This environment covers the hardware, servers, operating system, web browsers, other software systems, and so on. Responsibilities that you were not able to complete. "I figure out which task is most important, and then I try to do that task first before finishing the next one." For example, there are accounting packages, inventory packages, and so on. While it's easier to ask generic questions, you risk not getting the specific information you need to make the best hiring decision. Query optimization is a process in which the database system compares different query plans and selects the one with the least cost. A primary key created on more than one column is called a composite primary key. DELETE removes some or all rows from a table based on a condition.
TRUNCATE removes ALL rows from a table by de-allocating the memory pages. In some database systems, such as Oracle and MySQL, the operation cannot be rolled back. The DROP command removes a table from the database completely. The main difference between the two is that a DBMS stores your data as files, whereas an RDBMS stores your data in tabular form. Also, as the keyword Relational implies, an RDBMS allows different tables to have relationships with one another using primary keys, foreign keys, and so on. This creates a dynamic chain of hierarchy between tables, which also places functional restrictions on the tables. Assume that there are at least 10 records in the Mountain table. That's why leading companies are increasingly moving away from generic questions and are instead giving candidates a crack at real-life internal scenarios. "At Airbnb, we gave prospective hires access to the tools we use and a vetted data set, one where we knew its limitations and problems. It allowed them to focus on the shape of the data and frame answers to problems that were meaningful to us," notes Geggatt. A CHECK constraint checks for a specific condition before data is inserted into a table. If the data passes all the CHECK constraints, it is inserted into the table; otherwise the insertion is rejected. The CHECK constraint ensures that all values in a column satisfy certain conditions. A NOT NULL constraint restricts the insertion of NULL values into a column. If we use a NOT NULL constraint on a column, we cannot omit the value of that column when inserting data into the table. The DEFAULT constraint lets you set a default value for the column.
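
The "10th tallest peak" question from this post can be answered with a subquery, as the post hints. Below is one possible sketch using Python's built-in sqlite3 module. The `Mountain` table and `Elevation` column come from the question itself; the `Name` column, the helper name `tenth_tallest`, and the sample data are assumptions for illustration. SQLite uses `LIMIT` where SQL Server would use `TOP`, and the query assumes at least 10 rows; tied elevations count as separate rows.

```python
import sqlite3


def tenth_tallest(elevations):
    """Return the 10th highest Elevation in a Mountain table built from the input."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Mountain (Name TEXT, Elevation INTEGER)")
    conn.executemany(
        "INSERT INTO Mountain (Name, Elevation) VALUES (?, ?)",
        [(f"Peak {i}", e) for i, e in enumerate(elevations, start=1)],
    )
    # Inner query: the ten highest peaks. Outer query: the lowest of those ten.
    row = conn.execute(
        """
        SELECT Elevation FROM (
            SELECT Elevation FROM Mountain
            ORDER BY Elevation DESC
            LIMIT 10
        )
        ORDER BY Elevation ASC
        LIMIT 1
        """
    ).fetchone()
    conn.close()
    return row[0]
```

An alternative that avoids the subquery in SQLite is `ORDER BY Elevation DESC LIMIT 1 OFFSET 9`; the subquery form is shown because it ports more directly to systems that only offer `TOP`.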


Text

DBMS in Hindi - Specialization


specialization in dbms, what is specialization, dbms specialization in hindi

DBMS Specialization in Hindi

Specialization is a top-down approach, and it is the opposite of generalization. In specialization, a higher-level entity can be broken down into two or more lower-level entities.

Specialization is used to identify a subset of an entity set that shares some distinguishing characteristics.
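
One common way to map specialization onto tables is to keep the shared attributes in a table for the higher-level entity and give each lower-level entity its own table with the same key. The sketch below uses Python's built-in sqlite3 module. The `employee`, `developer`, and `tester` entities and their attributes are hypothetical examples, not taken from the post.

```python
import sqlite3


def demo_specialization():
    """Split a higher-level employee entity into developer and tester subsets."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(
        """
        -- Higher-level entity: attributes common to every employee.
        CREATE TABLE employee (
            emp_id INTEGER PRIMARY KEY,
            name   TEXT NOT NULL
        );
        -- Lower-level entities: each adds attributes specific to its subset.
        CREATE TABLE developer (
            emp_id   INTEGER PRIMARY KEY REFERENCES employee(emp_id),
            language TEXT
        );
        CREATE TABLE tester (
            emp_id INTEGER PRIMARY KEY REFERENCES employee(emp_id),
            tool   TEXT
        );
        INSERT INTO employee VALUES (1, 'Asha'), (2, 'Ravi');
        INSERT INTO developer VALUES (1, 'Java');
        INSERT INTO tester VALUES (2, 'Selenium');
        """
    )
    developers = conn.execute(
        "SELECT e.name, d.language FROM employee e "
        "JOIN developer d ON d.emp_id = e.emp_id"
    ).fetchall()
    testers = conn.execute(
        "SELECT e.name, t.tool FROM employee e "
        "JOIN tester t ON t.emp_id = e.emp_id"
    ).fetchall()
    conn.close()
    return developers, testers
```

Each subset table inherits its identity from `employee` through the shared `emp_id`, so the common attributes are stored once while the distinguishing ones live with the specialized entity.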
