#ethics in computing


watching Alexander Avila's new AI video and while:

I agree that we need more concrete data about the true amount of energy Generative AI uses as a lot of the data right now is fuzzy and utilities are using this fuzziness to their advantage to justify huge building of new data centers and energy infrastructure (I literally work in renewables lol so I see this at work every day)

I also agree that the copyright system sucks and that the lobbyist groups leveraging Generative AI as a scare tactic to strengthen it will probably ultimately be bad for artists.

I also also agree that trying to define consciousness or art in a concrete way specifically to exclude Generative AI art and writing will inevitably catch other artists or disabled people in its crossfire. (Whether I think the artists it would catch in the crossfire make good art is an entirely different subject haha)

I also also also agree that AI hype and fear mongering are both stupid and lump so many different aspects of growth in machine learning, neural network, and deep learning research together as to make "AI" a functionally useless term.

I don't agree with the idea that Generative AI should be a meaningful or driving part of any kind of societal shift. Or that it's even possible. The idea of a popular movement around this is so pie in the sky that it's actually sort of farcical to me. We've done this dance so many times before: what is at the base of these models is math, and that math is determined by data, and we are so far both from an ethical, consent-based way of extracting that data and from this data being in any way representative.

The problem with data science, as my data science professor said in university, is that it's 95% data cleaning and analyzing the potential gaps or biases in this data, but nobody wants to do data cleaning, because it's not very exciting or ego boosting, and the amount of human labor it would take to do that on a scale that would train a generative AI LLM is frankly extremely implausible.

Beyond that, I think ascribing too much value to these tools is a huge mistake. If you want to train a model on your own art and have it use that data to generate new images or text, be my guest, but I just think that people on both sides fall into the trap of ascribing too much value to generative AI technologies just because they are novel.

Finally, just because we don't know the full scope of the energy use of these technologies and that it might be lower than we expected does not mean we get a free pass to continue to engage in immoderate energy use and data center building, which was already a problem before AI broke onto the scene.

(also, I think Avila is too enamoured with post-modernism and leans on it too much but I'm not academically inclined enough to justify this opinion eloquently)

17 notes


This joke is very funny and to a certain extent true, but the underlying issue (if you're a nerd) is actually that these systems take way more resources to produce mediocre results, and the fact of the matter is that without investor money the price they would have to charge for these services would be so high that no one would be willing to pay it.

Also, now that the hype is dying down, some companies are quietly discontinuing their stupid chatbot features, which loses them a lot of money, as commercial implementations were meant to be the real cash cows.

(full article here)

85K notes


What I really appreciate about The Talos Principle 2 is that big chunks of its writing genuinely read like they were written by someone who's personally had to justify the discipline of philosophy to a STEM major. "There exists an implicit moral algorithm in the structure of the cosmos, but actually solving that algorithm to determine the correct course of action in any given circumstance a priori would require more computational power than exists in the universe. Thus, as we must when faced with any computationally intractable problem, we fall back on heuristic approaches; these heuristics are called 'ethics'." is a fascinating way of framing it, but then I ask why would you explain it like that, and every possible answer is hilarious.

#gaming#video games#the talos principle 2#the talos principle#philosophy#ethics#computer science#stem#the talos principle 2 spoilers#the talos principle spoilers#spoilers

2K notes


This is a real thing that happened and got a Google engineer fired in 2022.

–

We ask your questions so you don’t have to! Submit your questions to have them posted anonymously as polls.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about the internet#submitted june 13#polls about ethics#ai#artificial intelligence#computers#robots

447 notes


To the people asking why they shouldn't use chat gpt and/or calling op a bigot for "discriminating against neurodivergent people" (also, yes goblin tools uses gpt, I see some people confused about that) I am an autistic software engineer here to explain. Here are some of the main reasons people object to AI usage:

1) environmental impact. AI uses a frankly disgusting amount of power and water. It took almost 300,000 kWh just to train chat gpt 3, and enough water to fill a nuclear reactor's cooling tower. Some people will argue that only the training is this resource intensive and once the model is trained the damage is already done. This is untrue, as every conversation with chat gpt you have is the equivalent of wasting a large bottle of water and leaving a light on for an hour. Even if it were true, these companies are constantly training and improving existing models, and justifying that cost to their shareholders partially with YOUR use of their tool. And yes, big tech had a water and energy problem long before LLMs, but LLMs are significantly worse and only worsening.

2) it doesn't KNOW anything. This one is more philosophical, but chat gpt is advanced text prediction and arranging. Even researchers themselves are starting to accept that they will never be able to eliminate so-called "hallucinations", which is when the model basically makes up something plausible-sounding. It is not a "better search engine" because it is not retrieving any factual data; it is generating the most statistically probable results based on its training data, which will sometimes produce a desired output, but sometimes produces flat out nonsense. As well, you have to do your own fact checking. On Google, you can see the source of where something came from and who wrote it, and gpt robs you of that context. There is no such thing as bias free, purely factual information unless we're talking incredibly basic questions, and the info from chat gpt is basically worthless if it has no sources, no context, no author, and is made up by the model.

3) theft. This one is controversial. Some people will argue that if you put something publicly on the Internet you are passively consenting to its use, but if I repost someone's article without credit or claim it as my own, they can still request that the article be taken down. The facts are that OpenAI used a dataset that scraped an absolutely massive amount of Internet data to train their model without asking, and you can get chat gpt to produce chunks of that text, especially in niche topics. For example, the software I work with is relatively niche, and if you use chat gpt to get answers to questions about it, it will reproduce some chunks of the actual documentation word for word, mixed in with nonsense and with no attribution. If you are using chat gpt for homework answers, you are potentially plagiarizing word for word chunks of other people's work.

4) this is one I don't see talked about often, but it's one that I feel is important. BIAS. GPT scraped the internet. Meaning, its word salads are entirely based on people who have internet access, and even more than that, people who post or post frequently on the Internet. That's a really small portion of the whole population. So, do you really want your word salads to have that kind of bias? Especially if you are using them for work or homework?? Wouldn't you rather put your unique, diverse voice into the world?

Ultimately, using LLMs is an ethical decision you have to make and live with, but you should do it while understanding why people object to them, and also while understanding that the model doesn't know anything, it's like an advanced magic eight ball. Don't just play the victim and refuse to listen to concerns. Or call everyone taking issue with your use a bigot.

145K notes


I archived the magnus archives

idk link to the full thing is here https://docs.google.com/spreadsheets/d/1vA6kin0H5bk_Nu15WTnwmAEPTAx9MLVO/edit?usp=sharing&ouid=117712544114786577413&rtpof=true&sd=true

I genuinely don't know what anyone would use this for, and honestly doing recreational paperwork is crazy and i need to sit with myself for a bit and think about this one

anyway john's case numbering system sucks so i made a new one, not really that good but it acts better as a call number system. In real life there would be albums and catalogue numbers but thank god the magnus institute archive does NOT exist so that's not an issue

#the magnus archives#tma#jonathan sims#martin blackwood#i literally did an unpaid shift as an archival assistant then came home and did this#autism overload#also dear god using smooth delicious Microsoft excel at work makes google sheets feel like one of those fake children's play toy-#-computers like what the hell why is office 365 so damn expensive i want nice cells i want capitalisation functions google sheets count you#damn days count your days thats what im trying to day#and don't tell me its an ethical issue for the statement givers last name to be in the call number#the magnus institute kills people i don't think they care

96 notes


One day it will all be worth it <3

Nights like this can be so draining and tiring but posting stuff like this remotivates me to keep going. Struggling so hard to not be distracted but it will work out…

#academia#academic validation#academic weapon#history#philosophy#philanthropy#ethics#notes#study motivation#student life#studying#studyblr#studyspo#student#study blog#study aesthetic#study notes#my notes#life lessons#love#live#life#ethical lifestyle#good life#college life#kiss of life#hydration#owala#computer#trees

104 notes


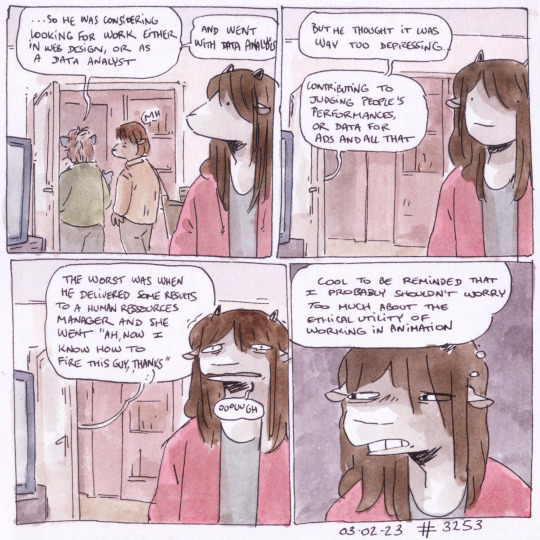

#03.02.23#3253#'ethical utility of working in animation' i think i was thinking back to how when i was studying animation we had chats about like#isnt it wasteful to have a ton of computers running to render high detailed animation or whatever ;#that plus also does working in that field actually do any good i guess#unnecessary doubts because there's definitely definitely stuff a lot more harmful c'mon#ma

276 notes


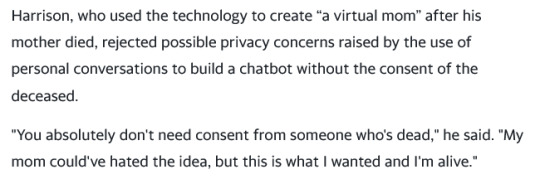

A mutual reblogged this from me and it reminded me of an example I found:

I mean... holy shit?

I fucking swear....

There was already a Black Mirror episode about why this was a bad idea! Ten years ago! It had Hayley Atwell!

How the fuck can you say that and not think you're a sci-fi villain?

Just... I'm so tired of this tweet being so accurate.

3K notes


Highlights from a bullshit AI meeting today where a company we buy software from is trying to upsell us on their product.

"your data quality isn't really a concern, we just let the LLM sort through things and pick out what's important."

LOL they are so desperate that they will just lie to sell these AI tools.

"everyone is really searching for this killer use case for AI right now, so in that vein do you guys have a chatbot on your website?"

The chatbot is really the best thing they can come up with XD

Our senior engineer kept trying to circle back to other use cases and they kept pushing the chatbot thing, it's all they've got.

Also, fyi, all of these chatbot things are consumption based pricing, so if you want to fuck with a company you can program something to waste its time by having multiple "conversations" as they price by the conversation. Sure, this is bad for the environment, but it may eventually get them to take these stupid things down.

6 notes


regardless of my or your opinion on AI art, saying that artists also make their art by simply viewing a lot of art and then regurgitating a mixture of statistically probable pixels implies that humans learn like machines do, and this isn't even remotely accurate. ignoring the phenomenological and social components of human learning really only stands to dovetail into viewing humans as material goods on the same level as a server farm. psychology has actually been sliding into viewing the brain as computers, and computers as equivalents to the human brain, and it's caused a lot of harm. we can't talk about some psychological phenomena without using Computer Terms to do it because that's the language that was given to them. viewing humans and computers as functionally equal with enough bits to replicate neurons won't humanize robots, it will dehumanize humans. it is advanced as an ideology for that purpose. in the end i see humans viewing art and integrating it into their own ideas for art as valuable because i think that humans don't exist to create a profit for some guy in silicon valley and i think that enriching human lives is good. humans don't "learn" art in order to produce replicas; the art is a byproduct.

#i'm trying to avoid the idea of a soul or whatever when trying to differentiate them#but the existence of consciousness often equivocated to 'having a soul' is significant and entirely different from machine learning#we have to define values and ethical systems that structure our society and i think that system of ethics should define conscious life#as valuable and that doesn't just extend to humans it's exactly like humanely dispatching animals is also an important ethical principle#i don't want to live in a society that sees a computer with the same amount of 'neurons' as a human brain as equally valuable to a person#at the end of the day.

41 notes


Wetware Is Here: Human Brain-Matter Computing (not fiction)

Swiss tech company FinalSpark now offers Neuroplatform, the world’s first bioprocessing platform using human brain organoids (lab-grown mini-brains) to perform computational tasks instead of silicon chips.

The first such facility uses 16 human-brain organoids, which the company claims use a million times less power than their silicon counterparts.

These are not sentences we expected to write non-fictionally in this year of our world 2024.

news source: X

paper: X

44 notes


originally prompted by a discord user, i used wikimedia as reference!

#daffleart#dafflemisc#illustration#2025 work#ignore this fib->#made with ai.#i am uh. doing tests with what's being read. this page is public to search engines.#i haven't meaningfully passed the turing test once in my online life—it's a test of psychology more than computer science.#so i unfortunately have to consider what mind games will poison the water-hungry data until it goes offline#i don't think it's ethical for it to be a worldwide web thing. keep it localised and auditable.

8 notes


"Major AI companies are racing to build superintelligent AI — for the benefit of you and me, they say. But did they ever pause to ask whether we actually want that?

Americans, by and large, don’t want it.

That’s the upshot of a new poll shared exclusively with Vox. The poll, commissioned by the think tank AI Policy Institute and conducted by YouGov, surveyed 1,118 Americans from across the age, gender, race, and political spectrums in early September. It reveals that 63 percent of voters say regulation should aim to actively prevent AI superintelligence.

Companies like OpenAI have made it clear that superintelligent AI — a system that is smarter than humans — is exactly what they’re trying to build. They call it artificial general intelligence (AGI) and they take it for granted that AGI should exist. “Our mission,” OpenAI’s website says, “is to ensure that artificial general intelligence benefits all of humanity.”

But there’s a deeply weird and seldom remarked upon fact here: It’s not at all obvious that we should want to create AGI — which, as OpenAI CEO Sam Altman will be the first to tell you, comes with major risks, including the risk that all of humanity gets wiped out. And yet a handful of CEOs have decided, on behalf of everyone else, that AGI should exist.

Now, the only thing that gets discussed in public debate is how to control a hypothetical superhuman intelligence — not whether we actually want it. A premise has been ceded here that arguably never should have been...

Building AGI is a deeply political move. Why aren’t we treating it that way?

...Americans have learned a thing or two from the past decade in tech, and especially from the disastrous consequences of social media. They increasingly distrust tech executives and the idea that tech progress is positive by default. And they’re questioning whether the potential benefits of AGI justify the potential costs of developing it. After all, CEOs like Altman readily proclaim that AGI may well usher in mass unemployment, break the economic system, and change the entire world order. That’s if it doesn’t render us all extinct.

In the new AI Policy Institute/YouGov poll, the “better us [to have and invent it] than China” argument was presented five different ways in five different questions. Strikingly, each time, the majority of respondents rejected the argument. For example, 67 percent of voters said we should restrict how powerful AI models can become, even though that risks making American companies fall behind China. Only 14 percent disagreed.

Naturally, with any poll about a technology that doesn’t yet exist, there’s a bit of a challenge in interpreting the responses. But what a strong majority of the American public seems to be saying here is: just because we’re worried about a foreign power getting ahead, doesn’t mean that it makes sense to unleash upon ourselves a technology we think will severely harm us.

AGI, it turns out, is just not a popular idea in America.

“As we’re asking these poll questions and getting such lopsided results, it’s honestly a little bit surprising to me to see how lopsided it is,” Daniel Colson, the executive director of the AI Policy Institute, told me. “There’s actually quite a large disconnect between a lot of the elite discourse or discourse in the labs and what the American public wants.”

-via Vox, September 19, 2023

#united states#china#ai#artificial intelligence#superintelligence#ai ethics#general ai#computer science#public opinion#science and technology#ai boom#anti ai#international politics#good news#hope

204 notes


interesting to think about how constantine isn't commonly read as being the most morally upright of characters, and that's generally a fair assessment. but at the same time he can still be a surprisingly ethical character, particularly in terms of the values he holds synonymous with being a good community member.

a couple examples of (what i think are) his most consistently held values throughout the hellblazer series:

constantine feels very strongly about things like defending people's bodily autonomy and showing kindness & compassion towards society's more vulnerable demographics, especially kids & unhoused people.

he frequently & sincerely upholds the basic principles of xenia (showing hospitality towards foreigners, guests, and anyone whose fate is in your hands), and possesses a deep, innate sense of justice that he is unafraid to uphold when he feels it necessary to do so.

he personally values survival above honor but still has a healthy appreciation for people that are committed to a code,

he respects integrity more than he'd respect a good liar (even if he fucking hates you, at least you're being true to yourself; can't help it if yourself is just a prick),

and he is CONSTANTLY PLAGUED by a deeply personal sense of social responsibility to try and nip potential threats to the world, to his city, or to his friends in the bud if he thinks it might be within his capabilities to do so.

of course, it's key to note that constantine's code of ethics was also founded primarily in accordance with the principles of '70s-'80s counterculture + still largely abides by the social beliefs of the same-era punk community, and thus also includes more subjectively-judged tenets such as challenging the establishment, defying the government, protecting individual liberties, taking direct action in support of your community, putting people above possessions at every turn, and actively addressing social issues wherever / whenever you encounter them. so he's not always viewed by other characters (particularly upper-class bootlicking ones) as being particularly ethical. doesn't change the fact that constantine still adamantly maintains certain major ethical principles in accordance with the social systems he inhabits and was raised in.

#( ooc. ) OUT OF CIGS.#( character study. ) A WALKING PLAGUE OF A MAN.#don't @ me for referencing xenia si spurrier has nothing to do with this. i just love the concept#i had a very interesting twofold coincidence that led to this post (well. interesting to me probably very mundane all told)#was rereading hellblazer 12 and when ritchie demanded that john find him a body to hop into? john's response was verbatim:#'a body? jesus mate. hang about. don't you think there might be a question of ethics here?'#and i giggled at that a little bc john constantine? ethics? come on now. but it made sense given his own struggles w/ autonomy#but then i saw a post later on that read 'let's do something unethical together. just for fun' and thought it was silly#and when i went to reblog it for john i stopped and thought about it and realized. no he wouldn't find that very fun actually#cue rabbit hole into morality vs ethics with a side jaunt into what the hell is moral nihilism#plus a dash of key recurring themes in hellblazer (bodily autonomy. standing up to injustice. compassion towards the vulnerable.)#i may be getting ahead of myself but i now think compounding morality + ethics into a uniform behavioral monolith#or else mistaking one for the other. Might Be a very big reason why john gets SO mischaracterized in later dc adaptations#like yes! he often has to resort to behavior / making decisions that are (to us) Obviously Morally Wrong in order to save the world#sacrificing gary lester to a demon. leaving ritchie trapped in a computer. lying to get baron winters' help against the brujeria.#and yes! that Morally Wrong behavior can and does involve causing harm to the people around him (albeit usually inadvertently)#Doesn't! Mean! He Has! No Sense! of Decency! or Empathy! or Right! or Wrong! just because he Had to Resort to an Immoral Act!#there were still Valid or at least Understandable Ethical Reasons behind ALL THREE EXAMPLES i just listed above!!!#that's why two of those examples are GUT-WRENCHING!! bc he betrayed his moral beliefs in support of his ethical principles!!#i can punch someone in the face and still feel compelled by my cultural norms to help a little old lady cross the street right after!!#upon reflection: whoa nelly. i fear i am getting needlessly heated over this. Anyways#if you've followed me for a bit you're already aware of how i feel about the continued mischaracterization of constantine in media lmao#i've said my piece. i've done my morality and ethics homework. i can rest now#and i'll say upfront that it would be Extremely funny if i wrote all this out + later find out i've been mixing up the two. bc it would be

17 notes
