#futurewarnings


Text

The Last War: The End of Humanity Is Near

They are doing everything they can to promote the "survival kit" for the Great War to come: the last war, the one that will wipe out all of humanity.

Fascists, oligarchs, unscrupulous capitalists, warmongering bureaucrats and murderous imperialists are trying in every way to justify the beginning of the end. An era in which atomic bombs will fall from the sky, radioactivity will contaminate the soil and the seas, and humanity will be condemned to live without food, without water, without medical care, in a world devastated by disease and suffering.

It is they, the lords of war and corruption, who are dragging us toward the abyss: toward the atomic age, the final war that could annihilate 99% of the world's population in less than a month.

#youtube#FineDelMondo#UltimaGuerra#ApocalisseNucleare#PoliticaCorrotta#NoAllaGuerra#CrisiGlobale#ResistenzaUmana#AntiImperialismo#PeaceNotWar#StopNuclearWar#FutureWarning#SurvivalKit#GuerraMondiale#Dystopia#NuclearApocalypse

0 notes

Text

Resolving warnings and errors, part 1

## Resolving warnings and errors
WARNING: you should not skip torch test unless you want CPU to work.
F:\StabilityMatrix\Data\Packages\stable-diffusion-webui-directml\venv\lib\site-packages\timm\models\layers\__init__.py:48: FutureWarning: Importing from timm.models.layers is deprecated, please import via timm.layers
warnings.warn(f"Importing from {__name__} is deprecated, please import via timm.layers",…
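The log above points at timm's own `__init__.py:48`, not at the line in the calling code that imports `timm.models.layers`. Per the message itself, the fix is to import from `timm.layers` instead (assuming the names you need are available there, which the warning implies). To find which import triggers it, one option is to promote FutureWarning to an exception so the full traceback shows the caller. The sketch below uses only the standard `warnings` and `traceback` modules; `future_warning_traceback` is a hypothetical helper, not part of timm or the webui.

```python
import traceback
import warnings
from typing import Callable, Optional


def future_warning_traceback(action: Callable[[], object]) -> Optional[str]:
    """Run `action` with FutureWarning promoted to an exception.

    Returns the formatted traceback if `action` triggered a FutureWarning,
    otherwise None. Every frame between this call and the warning is listed,
    so the caller's own line shows up, not just the library file that warned.
    """
    with warnings.catch_warnings():
        warnings.simplefilter("error", FutureWarning)  # FutureWarning -> raised exception
        try:
            action()
        except FutureWarning:
            return traceback.format_exc()
    return None
```

For example, `future_warning_traceback(lambda: importlib.import_module("timm.models.layers"))` in a fresh interpreter (a module that is already imported will not run, or warn, again). Running a script with `python -W error::FutureWarning script.py` has a similar effect for the whole process.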

0 notes

Text

A FutureWarning message can also be used to signal upcoming changes to the default values of function parameters. https://www.analyticslane.com/2024/01/22/que-son-y-como-manejar-los-errores-futurewarning-en-python/
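A common way to announce a default-value change is a sentinel default: the function warns only when the caller relied on the old default, and passing the argument explicitly silences it. A minimal sketch, assuming a made-up function `count_items` whose `include_none` default is planned to flip from True to False:

```python
import warnings

_UNSET = object()  # sentinel: distinguishes "argument omitted" from any value the caller passes


def count_items(values, include_none=_UNSET):
    """Hypothetical API whose default for `include_none` will change from True to False."""
    if include_none is _UNSET:
        warnings.warn(
            "The default of include_none will change from True to False in a future "
            "version. Pass include_none explicitly to keep the current behaviour.",
            FutureWarning,
            stacklevel=2,  # report the warning at the caller's line, not inside count_items
        )
        include_none = True  # current default, kept until the change lands
    if not include_none:
        values = [v for v in values if v is not None]
    return len(values)
```

`count_items([1, None, 3])` still returns 3 but warns; `count_items([1, None, 3], include_none=False)` returns 2 without a warning.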

0 notes

Text

'Dates Misinterpreted' (Yeshuah)


Dates Misinterpreted (Yeshuah) they are weakening their stance a surrender in malice against an unbeknowing people too busy living an unproductive life a covering up of suspicion don't you see why don't you see a waywardness buried tyre did this babylon did this egypt did this so many in ignorance buried under their decorated arrogance so it is a stone thrown to the masses if I† came…


#futurewarnings#God#Good#Jesus#love#Psalms 1#Psalms 14#Psalms 144#teaching#virus#war#warning#Yeshuah

0 notes

Text

#ai#artificialgeneralintelligence#artificial intelligence#future#the future#artificial art#technology#tech news#news#business news#latest news#breaking news#google#godfatherofgoogle#warning#globalwarning#futurewarning#future warning#global warning#be careful#take a warning

9 notes


Text

🚨 Elon Musk's Shocking Warning: AI Dependency Could Doom Humanity! Are We Heading Toward a Real-Life Idiocracy? 🤖📉😱

🚀 Elon Musk recently dropped a 💣 with a tweet that shook the 🌐! In it, he warned about the ⚠️ of overreliance on AI & automation 🤖, hinting at a future straight out of the dystopian 🎞️ "Idiocracy" 🥤📺🤦‍♂️. He also mentioned E.M. Forster's 1909 story "The Machine Stops" 🛑, painting a dark picture of society's downfall 🌆🔥.

Elon Musk’s recent tweet 🐦 highlights a potential concern that is mirrored in the 2006 movie “Idiocracy” 📽️. The film presents a dystopian future where the general population becomes increasingly unintelligent over time 🧠⏳, ultimately leading to the collapse of society. Musk’s tweet suggests that a similar scenario could arise if we become too reliant on AI and automation 🤖, eventually forgetting how these machines work 🔧.

One way that AI could contribute to the decline of society, as portrayed in “Idiocracy”, is by fostering a culture of complacency and overreliance on technology 📱💻. As AI systems become more advanced, people may rely on them for decision-making, problem-solving, and general knowledge 🧩. Over time, this could lead to a gradual erosion of critical thinking skills 💭 and a diminishing ability to solve problems independently. As individuals lose their ability to think critically and innovate, the overall intellectual capacity of society could decrease 📉.

Moreover, as AI and automation take over more tasks 📈, there may be a decline in the need for human labor 💼, leading to fewer opportunities for individuals to engage in meaningful work 🛠️. This could result in a loss of a sense of purpose and a growing disconnection from the world around them 🌍. Without the necessity to learn, adapt, and overcome challenges, people may become intellectually stagnant and less capable of addressing complex issues that require human ingenuity 🧠.

Musk also referenced “The Machine Stops” 🛑, a 1909 science fiction short story by E. M. Forster that depicts a world where humanity has become entirely dependent on a global machine for all aspects of their lives 🌐🤖. In this story, people live in isolated cells and interact only through technology 📲, while the machine takes care of their every need. This dependence on technology ultimately leads to the decline of human culture, intellect, and innovation 📚.

“The Machine Stops” illustrates how an overreliance on technology can lead to the erosion of society. The story serves as a cautionary tale ⚠️, warning against the dangers of placing too much trust in machines and losing touch with our humanity ❤️. Similarly, Musk’s tweets suggest that relying too heavily on AI and automation could create a world where humans gradually lose their capacity for independent thought, creativity, and innovation 🎨🎭.

Elon Musk’s recent tweets bring attention to the potential dangers of becoming too dependent on AI and automation 🚨. Drawing parallels to “Idiocracy” and “The Machine Stops” 🎞️📖, Musk warns that allowing technology to take over all aspects of our lives could lead to the decline of society. To prevent this outcome, it is crucial to strike a balance between harnessing the benefits of AI and automation and maintaining a strong focus on human innovation, critical thinking, and the preservation of our intellectual abilities 🧠💡.

1 note


Text

Hard Candy (~Misfits AU~)

Chapter 27: Epilogue - Back to The Future

Warning: Language, alcohol.

a/n: This has been a wild ride! I just wanna thank you all for your support and for being so incredible, Lydia is such a dear character to me and it’s great to see people falling in love with her story. Oh, and even though this is the last chapter of Hard Candy, the story isn’t over, keep an eye out for tons of drabbles and one shots, and a brand new series (hopefully) coming soon.

(Hard Candy Masterlist)

- I'm really lovin' this new color on you, Lyddie - Nathan played with my pink hair.

- Yeah, it was time for a little change, wasn't it? Today marks the start of a new part of my life... The part I know absolutely nothing about. After all, today's the day.

- The day? What d'you mean?

- The day we first kissed, when I confessed to you. Actually, it was right here, at this very table, at this very pub. After Simon left with Lisha, you promised to get me home safely by the end of the night, we talked for hours and hours until it finally happened.

- No shit! Wow, it seemed so far away when you told me about it, can't believe the day arrived, life is just passin' me by... I'm gettin' old, aren't I?

- You look fine to me - I winked and blew him a kiss.

- So, why are we here today? I thought you'd wanna forget it... Forget how much of a wanker I was for lettin' you go.

- Well, I normally would, but I think I owe someone an explanation.

- Who?

I looked pointedly at the door as my younger self walked towards our table. Nathan's face lit up upon seeing young Lydia; it was kinda cute to see him reacting like this instead of her for once.

- I think you get the picture?

- Oh, yeah... I get the whole picture - he smirked.

- Hey Lolli, hey Nathan - Lydia smiled as she joined us in the booth.

- Hi, Lyds - I took her hand - how are you?

- I'm good, just practicing a lot, I'm actually playing one of your songs for my university audition next week, Shape of You.

- Were you goin' to university? - Nathan frowned, slightly guilty.

- Yeah - I whispered - but it was okay.

- I have a good feeling about it, maybe it will be better than secondary school... - She joked.

- Are you excited to become an aunt? - I asked.

- Very! Simon promised me he would let me be the godmother - she clapped with excitement.

- That's really nice... - I was trying to gather the nerve to say what I had to say, the whole reason why I asked her to come in the first place - So, there's something important we need to talk to you about.

- Oh, is everything ok? - Lydia seemed worried.

- Sure, brilliant - Nathan chuckled, still a little hypnotized by her.

- So... I know you're into Nathan - I shrugged.

- What? - She blushed furiously, sputtering a bunch of nonsense - I'm not... He's just... I think he's... But not...

- Lydia, it's ok - I stroked her hair gently - it's natural and I'm not mad, really. Believe me, I understand exactly the way you feel.

- That's adorable - Nathan bit his lip teasingly - I love how jittery you get when you're around me... Lollipop.

Her breath hitched when she heard that name being directed at her. She stared at him agape and I saw her pupils dilate at least ten times their normal size.

If I'm being honest, his sultry tone did something to me as well; that man knew exactly how to get us both riled up and ready. How could I blame her?

- That's... That's weird, is this a wind-up? - She looked scared.

- Here - Nathan got closer to her, placing his hand on the back of her neck - does this look like a wind-up?

He leaned in and kissed her. She stood still for a few seconds before melting into his arms. It was funny to watch: her fingers quickly made their way to Nathan's curls, her body pushing to be closer to his. It was almost like I could feel that rush, her stomach filling up with butterflies just like mine did so long ago.

- Oh my God, oh Jesus, okay - she panted as he let her go and wrapped his arm around me - what is happening? Why is your husband kissing me? Why are you ok with him cheating on you? I heard that famous people are weird, but...

- Well, Lydia - I grinned widely - he's not cheating on me, because we're the same person.

- We're what?

- My name is not Lolli or Lyddie, it's actually Blossom Lydia Bellamy Young, I was born in Wertham, on November 22nd, 1996, and funny enough, I have a birthmark that resembles a microphone.

I turned to show her my left shoulder and she tilted her head with the most confused expression I've ever seen on my face.

- Oh my God, people did always say I look a lot like you... So what does that mean? - She gulped nervously - How did you do this? How did we do this?

- Well, I fell in love with this twat, just like you, but when that happened he wasn't married to me. He was married to another woman, he had a family with her. He turned me down after saying he loved me, he said that in another life we could've been happy together, so I decided to make this other life real.

- What did you do?

- I sold everything I owned and bought myself a power, one-way time travel. I went back to 2009, got myself arrested and sentenced to community service, and that's when I met my Nathan.

- That's amazing - she looked between us - but why are you telling me this?

- I thought it would be only fair for you to have the same chance I did, to go back and be with the man we love. That is if you don't mind giving me the place of our nephew's godmother...

- So you're saying I should go back in time?

- Do you want to?

- Is it dangerous?

- Extremely, but I'm here, I'm alive, and I've never been better.

- What if he doesn't fall in love with me?

- That's impossible - Nathan shook his head - there's no way this could ever happen. I knew I was fallin' from the moment she called me "lanky kid".

He rubbed his thumb against the back of my hand with a sweet smile. I never knew that; I can't believe he still remembers that day.

- Well... I do, I wanna go.

- Alright, I'll arrange everything. I'll write down some instructions for you on how to keep the timeline in place, and you can go two weeks from now. There's a guy I know who can transfer the power from me to you, you just have to promise me one thing.

- Anything - she nodded enthusiastically.

- When this day comes for you, today, you need to tell young Lydia the same thing I told you and offer her the same chance I'm giving you.

- Sure, I can do that.

- Just don't give up when I mess things up, alright? - Nathan tucked a strand of her hair behind her ear - I know I will, several times, but I do love you, before and after.

- I promise I won't - she gazed at him lovingly - can I possibly get... Another kiss?

- Such a little tart... - Nathan pulled her close and I laughed - You can never get enough, can ya?

- Shut up, you cocky bastard! - I rolled my eyes.

Nate kissed Lydia passionately, once again smearing her lipstick all over their mouths. Her chest was heaving and I could see that sparkle, the true happiness in her eyes, the thing that finally made me feel truly beautiful.

- And one more for the road - Nathan kissed her one last time.

- I can't believe this is happening - she looked down, catching her breath.

- You better believe it, Lollipop - he purred - actually, I think you deserve a little somethin' special. Maybe I should get a drink, a little snack, and then we both...

- SHE'S NOT READY! - I covered his mouth with my hand - She'll die of embarrassment if you say what you were about to say.

- Alright, touchy - he mocked - don't worry, young me will know exactly what to do to get rid o'that shyness.

- What are you guys talking about? - She widened her eyes.

- Nothin', you'll know soon enough - Nathan waggled his brows with a cheeky grin.

- Don't be scared of the lightning, yeah? - I whispered - It'll hurt like a bastard, but it's just the beginning of the biggest adventure of your life.

Tag list: @crisis-of-joy @elliethesuperfruitlover @misskittysmagicportal

#misfits#Misfits tv#misfits imagine#misfits fanfic#misfits nathan#nathan young#nathan young x oc#nathan x lydia#hard candy#imagine#fanfic

27 notes


Note

Ooo! Fun!! Who is your crush Kiibo? I'll just take a guess and say it's Miu, but just to be sure, I want to hear the answer from you. Who is your senpai Kiibo? -Chaotic Anon

[Tw Yandere Themes. No blood yet, so FutureWarning] Miu is my senpai,,,,,I love Miu very much, and would do ANYTHING to keep her mine.

1 note


Text

Fixing the warning that appears when calling style.set_precision() on a pandas.DataFrame

The warning is as follows.

FutureWarning: this method is deprecated in favour of `Styler.format(precision=..)`

Example fix

df.style.set_precision(2).highlight_min(axis=1,subset=model_names_list_JP) -> df.style.format(precision=2).highlight_min(axis=1,subset=model_names_list_JP)

Reason: set_precision(<number>) has been deprecated, and .format(precision=<number>) has been adopted in its place.

0 notes

Photo

Creating Graph for My Data

Summary

From the graph, it's clear that the distribution contains only respondents (aged 18 to 25 in this subset) who confirmed that they have been pregnant, and it also shows whether they were ready to get pregnant. It turns out that a larger percentage agreed that they were ready when they got pregnant.

My code

import seaborn
import matplotlib.pyplot as plt

sub2["H1FP7"] = sub2["H1FP7"].astype('category')

seaborn.countplot(x="H1FP7", data=sub2)
plt.xlabel('Respondents who have been pregnant')
plt.title('Respondents who are teenagers that confirmed that they are pregnant')

# Univariate histogram for quantitative variable
# (distplot is deprecated in seaborn; histplot is the axes-level replacement and draws no KDE by default)
seaborn.histplot(sub2["H1FP15_1"].dropna())
plt.xlabel('Ready for pregnancy/not ready?')
plt.title('Estimated number of respondents who agreed to be ready for pregnancy or not ready')

###############################################################################
# Code for Week 4 Python Lesson 3 - Measures of Center & Spread

# standard deviation and other descriptive statistics for quantitative variables
print('Pregnant')
desc1 = sub2['H1FP7'].describe()
print(desc1)

c1 = sub2.groupby('H1FP15_1').size()
print(c1)

print('Pregnant')
desc2 = sub2['H1FP7'].describe()
print(desc2)

c1 = sub2.groupby('H1FP7').size()
print(c1)

print('mode')
mode1 = sub2['H1FP7'].mode()
print(mode1)

print('mean')
mean1 = sub2['H1FP15_1'].mean()
print(mean1)

print('std')
std1 = sub2['H1FP15_1'].std()
print(std1)

print('min')
min1 = sub2['H1FP15_1'].min()
print(min1)

print('max')
max1 = sub2['H1FP15_1'].max()
print(max1)

print('median')
median1 = sub2['H1FP15_1'].median()
print(median1)

print('mode')
mode1 = sub2['H1FP15_1'].mode()
print(mode1)

c1 = sub2.groupby('H1FP7').size()
print(c1)

p1 = sub2.groupby('H1FP7').size() * 100 / len(data)
print(p1)

c2 = sub2.groupby('H1FP15_1').size()
print(c2)

p2 = sub2.groupby('H1FP15_1').size() * 100 / len(data)
print(p2)
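The percentages above divide by `len(data)`, the whole sample, rather than by the 87 rows in `sub2`; either base can be the right one, but it is easy to lose track of which was used. A minimal pandas sketch that puts counts and percentages in one table with the denominator made explicit; `frequency_table` is a hypothetical helper, not part of the original assignment.

```python
from typing import Optional

import pandas as pd


def frequency_table(series: pd.Series, base: Optional[int] = None) -> pd.DataFrame:
    """Counts and percentages for each value of `series`, sorted by value.

    `base` is the denominator for the percentages. By default it is the
    number of rows in `series` itself (missing values included).
    """
    # dropna=False keeps a row for missing values, which groupby().size() would drop
    counts = series.value_counts(sort=False, dropna=False).sort_index()
    denominator = len(series) if base is None else base
    return pd.DataFrame({"count": counts, "percent": counts * 100 / denominator})
```

`frequency_table(sub2['H1FP15_1'], base=len(data))` should match `c2` and `p2` above as long as the column has no missing values; `frequency_table(sub2['H1FP15_1'])` gives the percentages within the subset instead.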

My Code Output

ct1= data.groupby('H1FP7').size()
print(ct1)

pt1 = data.groupby('H1FP7').size() * 100 / len(data)
print(pt1)
H1FP7
0     643
1     131
6       4
7    4058
8       1
dtype: int64
H1FP7
0    13.293364
1     2.708290
6     0.082696
7    83.894976
8     0.020674
dtype: float64

sub1=data[(data['age']>=18) & (data['age']<=25) & (data['H1FP7']==1)]

sub2 = sub1.copy()

import seaborn
import matplotlib.pyplot as plt

seaborn.countplot(x="H1FP7", data=sub2)
plt.xlabel(' Respondent who Have been pregnant')
plt.title('Respondents who are teenagers that confirmed that they are pregnant')
Out[9]: Text(0.5, 1.0, 'Respondents who are teenagers that confirmed that they are pregnant')


seaborn.distplot(sub2["H1FP15_1"].dropna(), kde=False);
plt.xlabel('Ready for pregnancy/not ready?')
plt.title('Estimated number of respondent who agreed to be ready for pregnacy or not ready')
C:\Users\Admin\anaconda3\lib\site-packages\seaborn\distributions.py:2551: FutureWarning: `distplot` is a deprecated function and will be removed in a future version. Please adapt your code to use either `displot` (a figure-level function with similar flexibility) or `histplot` (an axes-level function for histograms).
  warnings.warn(msg, FutureWarning)
Out[10]: Text(0.5, 1.0, 'Estimated number of respondent who agreed to be ready for pregnacy or not ready')

print ('Pregnant')
desc1 = sub2['H1FP7'].describe()
print (desc1)
Pregnant
count    87.0
mean      1.0
std       0.0
min       1.0
25%       1.0
50%       1.0
75%       1.0
max       1.0
Name: H1FP7, dtype: float64

print ('Pregnant')
desc1 = sub2['H1FP7'].describe()
print (desc1)

c1= sub2.groupby('H1FP15_1').size()
print (c1)

print ('Pregnant')
desc2 = sub2['H1FP7'].describe()
print (desc2)

c1= sub2.groupby('H1FP7').size()
print (c1)

print ('mode')
mode1 = sub2['H1FP7'].mode()
print (mode1)

print ('mean')
mean1 = sub2['H1FP15_1'].mean()
print (mean1)

print ('std')
std1 = sub2['H1FP15_1'].std()
print (std1)

print ('min')
min1 = sub2['H1FP15_1'].min()
print (min1)

print ('max')
max1 = sub2['H1FP15_1'].max()
print (max1)

print ('median')
median1 = sub2['H1FP15_1'].median()
print (median1)

print ('mode')
mode1 = sub2['H1FP15_1'].mode()
print (mode1)

c1= sub2.groupby('H1FP7').size()
print (c1)

p1 = sub2.groupby('H1FP7').size() * 100 / len(data)
print (p1)

c2 = sub2.groupby('H1FP15_1').size()
print (c2)

p2 = sub2.groupby('H1FP15_1').size() * 100 / len(data)
print (p2)
Pregnant
count    87.0
mean      1.0
std       0.0
min       1.0
25%       1.0
50%       1.0
75%       1.0
max       1.0
Name: H1FP7, dtype: float64
H1FP15_1
1     5
3     2
4     2
5     1
7    77
dtype: int64
Pregnant
count    87.0
mean      1.0
std       0.0
min       1.0
25%       1.0
50%       1.0
75%       1.0
max       1.0
Name: H1FP7, dtype: float64
H1FP7
1    87
dtype: int64
mode
0    1
dtype: int64
mean
6.471264367816092
std
1.5614474535594247
min
1
max
7
median
7.0
mode
0    7
dtype: int64
H1FP7
1    87
dtype: int64
H1FP7
1    1.798636
dtype: float64
H1FP15_1
1     5
3     2
4     2
5     1
7    77
dtype: int64
H1FP15_1
1    0.103370
3    0.041348
4    0.041348
5    0.020674
7    1.591896
dtype: float64

0 notes

Text

Week 4 Creating graphs for your data

STEP 1: Create graphs of your variables one at a time (univariate graphs).

Examine both their center and spread.

STEP 2: Create a graph showing the association between your explanatory and response variables (bivariate graph).

import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt

data = pd.read_csv('/content/gapminder.csv', low_memory=False)
bindata = data.copy()

data['internetuserate'] = pd.to_numeric(data['internetuserate'], errors='coerce')
bindata['internetuserate'] = pd.cut(data.internetuserate, 10)
data['incomeperperson'] = pd.to_numeric(data['incomeperperson'], errors='coerce')
bindata['incomeperperson'] = pd.cut(data.incomeperperson, 10)
data['employrate'] = pd.to_numeric(data['employrate'], errors='coerce')
bindata['employrate'] = pd.cut(data.employrate, 10)
data['femaleemployrate'] = pd.to_numeric(data['femaleemployrate'], errors='coerce')
bindata['femaleemployrate'] = pd.cut(data.femaleemployrate, 10)
data['polityscore'] = pd.to_numeric(data['polityscore'], errors='coerce')
bindata['polityscore'] = data['polityscore']

sub2 = bindata.copy()
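`pd.cut(series, 10)` in the setup code splits each variable's observed range into 10 equal-width intervals and leaves missing values as NaN. A small sketch of that behaviour on made-up internet-use rates (the values are hypothetical, standing in for `data['internetuserate']` after the `pd.to_numeric` step):

```python
import numpy as np
import pandas as pd

# Hypothetical internet-use rates (percent) with one missing value.
rates = pd.Series([0.2, 12.5, 45.3, np.nan, 81.0, 95.6], name="internetuserate")

# 10 equal-width intervals spanning [min, max]; NaN stays NaN (no interval).
binned = pd.cut(rates, 10)

# Counts per interval in interval order; empty intervals are listed with 0.
counts = binned.value_counts(sort=False)
```

Equal-width bins follow the raw scale, so a skewed variable such as `incomeperperson` can leave most countries in the first one or two bins; `pd.qcut(series, 10)` gives roughly equal-count bins instead, if that suits the analysis better.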

sns.distplot(data["internetuserate"].dropna(), kde=False);
plt.xlabel('Internet Use Rate')
plt.title('Percentage of Population with Access to the Internet')
sns.plt.show()

/usr/local/lib/python3.6/dist-packages/seaborn/distributions.py:2551: FutureWarning: `distplot` is a deprecated function and will be removed in a future version. Please adapt your code to use either `displot` (a figure-level function with similar flexibility) or `histplot` (an axes-level function for histograms).
  warnings.warn(msg, FutureWarning)

---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-5-a4342a87f149> in <module>()
      3 plt.xlabel('Internet Use Rate')
      4 plt.title('Percentage of Population with Access to the Internet')
----> 5 sns.plt.show()
AttributeError: module 'seaborn' has no attribute 'plt'

plt.figure()
sns.distplot(data["incomeperperson"].dropna(), kde=False);
plt.xlabel('Income Per Person')
plt.title('GDP Per Person')
sns.plt.show()

/usr/local/lib/python3.6/dist-packages/seaborn/distributions.py:2551: FutureWarning: `distplot` is a deprecated function and will be removed in a future version. Please adapt your code to use either `displot` (a figure-level function with similar flexibility) or `histplot` (an axes-level function for histograms).
  warnings.warn(msg, FutureWarning)

---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-6-54db57e9a334> in <module>()
      3 plt.xlabel('Income Per Person')
      4 plt.title('GDP Per Person')
----> 5 sns.plt.show()
AttributeError: module 'seaborn' has no attribute 'plt'

plt.figure()
sns.distplot(data["employrate"].dropna(), kde=False);
plt.xlabel('Employment Rate')
plt.title('Percentage of total population, age above 15, that has been employed during the given year.')
sns.plt.show()

/usr/local/lib/python3.6/dist-packages/seaborn/distributions.py:2551: FutureWarning: `distplot` is a deprecated function and will be removed in a future version. Please adapt your code to use either `displot` (a figure-level function with similar flexibility) or `histplot` (an axes-level function for histograms).
  warnings.warn(msg, FutureWarning)

---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-7-9e73a7a31906> in <module>()
      3 plt.xlabel('Employment Rate')
      4 plt.title('Percentage of total population, age above 15, that has been employed during the given year.')
----> 5 sns.plt.show()
AttributeError: module 'seaborn' has no attribute 'plt'

sns.distplot(data["femaleemployrate"].dropna(), kde=False);
plt.xlabel('Female Employment Rate')
plt.title('Percentage of Female Population with Access to the Internet')
sns.plt.show()

/usr/local/lib/python3.6/dist-packages/seaborn/distributions.py:2551: FutureWarning: `distplot` is a deprecated function and will be removed in a future version. Please adapt your code to use either `displot` (a figure-level function with similar flexibility) or `histplot` (an axes-level function for histograms).
  warnings.warn(msg, FutureWarning)

---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-8-84212c88bbe3> in <module>()
      3 plt.xlabel('Female Employment Rate')
      4 plt.title('Percentage of Female Population with Access to the Internet')
----> 5 sns.plt.show()
AttributeError: module 'seaborn' has no attribute 'plt'

plt.figure()
sns.countplot(x="polityscore", data=data)
plt.xlabel('Polity Score')
plt.title("The summary measure of a country's democratic and free nature")
sns.plt.show()

---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-9-92fdf6792227> in <module>()
      3 plt.xlabel('Polity Score')
      4 plt.title("The summary measure of a country's democratic and free nature")
----> 5 sns.plt.show()
AttributeError: module 'seaborn' has no attribute 'plt'

plt.figure()
scat1 = sns.regplot(x="internetuserate", y="incomeperperson", fit_reg=False, data=data)
plt.xlabel('Internet Use Rate')
plt.ylabel('Income Per Person')
plt.title('Scatterplot for the Association Between Internet Use Rate and Income Per Person')
sns.plt.show()

---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-10-8661bffadf8a> in <module>()
      4 plt.ylabel('Income Per Person')
      5 plt.title('Scatterplot for the Association Between Internet Use Rate and Income Per Person')
----> 6 sns.plt.show()
AttributeError: module 'seaborn' has no attribute 'plt'

plt.figure()
scat2 = sns.regplot(x="internetuserate", y="employrate", fit_reg=False, data=data)
plt.xlabel('Internet Use Rate')
plt.ylabel('Employment Rate')
plt.title('Scatterplot for the Association Between Internet Use Rate and Employment Rate')
sns.plt.show()

---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-11-ec533021bfd1> in <module>()
      4 plt.ylabel('Employment Rate')
      5 plt.title('Scatterplot for the Association Between Internet Use Rate and Employment Rate')
----> 6 sns.plt.show()
AttributeError: module 'seaborn' has no attribute 'plt'

plt.figure()
scat3 = sns.regplot(x="internetuserate", y="femaleemployrate", fit_reg=False, data=data)
plt.xlabel('Female Employment Rate')
plt.ylabel('Income Per Person')
plt.title('Scatterplot for the Association Between Internet Use Rate and Female Employment Rate')
sns.plt.show()

---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-12-f90bc6bd752f> in <module>()
      4 plt.ylabel('Income Per Person')
      5 plt.title('Scatterplot for the Association Between Internet Use Rate and Female Employment Rate')
----> 6 sns.plt.show()
AttributeError: module 'seaborn' has no attribute 'plt'

0 notes

Text

Assignment-02_02 (Course-02, Module-02)

Running Chi-Square Independence Test

Dataset : GapMinder

Variables

urbanrate (urbgrps after collapsing into categories)

lifeexpectancy (lifgrps after collapsing into categories)

urbgrps : urbanrate collapsed into 5 categories, each 20 percentage points wide (1 for 0% to 20%, 2 for 20% to 40%, ..., 5 for 80% to 100%).

lifgrps : a two-valued variable, equal to 1 if the country's life expectancy is greater than or equal to 65 years and 0 otherwise.
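The collapsing described above can be sketched with pandas. This assumes right-closed 20-point bins (so a rate of exactly 20% lands in group 1) and keeps a missing life expectancy missing rather than coding it 0; the assignment code that builds `urbgrps` and `lifgrps` is not shown in this excerpt, so `urban_group` and `life_group` are hypothetical helpers.

```python
import pandas as pd


def urban_group(urbanrate: pd.Series) -> pd.Series:
    """urbgrps: 1 = (0, 20], 2 = (20, 40], ..., 5 = (80, 100] percent urban."""
    # include_lowest=True keeps an urban rate of exactly 0 in group 1
    return pd.cut(urbanrate, bins=[0, 20, 40, 60, 80, 100],
                  labels=[1, 2, 3, 4, 5], include_lowest=True)


def life_group(lifeexpectancy: pd.Series) -> pd.Series:
    """lifgrps: 1.0 if life expectancy >= 65 years, 0.0 otherwise, NaN if unknown."""
    # (NaN >= 65) is False, so restore NaN where the input was missing
    return (lifeexpectancy >= 65).astype(float).where(lifeexpectancy.notna())
```

Keeping unknown values as NaN matters for the chi-square test: `pd.crosstab` drops them, whereas coding them 0 would silently add countries to the "below 65" row.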

Research Question : Hypothesis

H0 : The proportion of countries with a life expectancy of 65+ years is the same in every urbgrps category.

HA : The proportion differs for at least two groups.

Summary

The p-value for the chi-square test is about 0.0000087 (8.7e-06), far below 0.05. Hence we can reject the null hypothesis.

The following table shows the p-values of chi-square tests for each pair of categories.

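There are 10 pairwise comparisons, so the Bonferroni-adjusted threshold for the p-value is 0.05/10 = 0.005.

Category 4 (countries with an urban rate between 60% and 80%) differs significantly in life expectancy from categories 1, 2 and 3 (post hoc p-values of about 1.5e-05, 8.3e-06 and 0.0026), but not from category 5 (p ≈ 0.011, above the 0.005 threshold).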
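The omnibus test and the Bonferroni-corrected post hoc comparisons can be sketched with `scipy.stats.chi2_contingency`, using the observed counts from the crosstab in the Output section (rows are lifgrps 0/1, columns are urbgrps 1 to 5). This is a reconstruction, not the author's script; with scipy's default Yates correction on the 2x2 sub-tables it reproduces the chi-square values printed in that output.

```python
from itertools import combinations

import numpy as np
from scipy.stats import chi2_contingency

# Observed counts from the crosstab: rows lifgrps 0 and 1, columns urbgrps 1..5.
observed = np.array([
    [9, 24, 17, 6, 16],
    [4, 22, 29, 52, 34],
])
groups = [1, 2, 3, 4, 5]

# Omnibus chi-square test of independence on the full 2x5 table.
chi2, p, dof, expected = chi2_contingency(observed)

# Post hoc: every pair of urbgrps categories, Bonferroni-adjusted threshold.
pairs = list(combinations(range(len(groups)), 2))  # 10 comparisons
threshold = 0.05 / len(pairs)                      # 0.05 / 10 = 0.005

significant = []
for i, j in pairs:
    # 2x2 sub-table; scipy applies Yates' continuity correction here by default
    _, p_pair, _, _ = chi2_contingency(observed[:, [i, j]])
    if p_pair < threshold:
        significant.append((groups[i], groups[j]))
```

`significant` ends up as `[(1, 4), (2, 4), (3, 4)]`: category 4 against categories 1, 2 and 3, while 4 vs 5 (p ≈ 0.011) stays above the adjusted threshold.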

Output

Rows
213
columns
16
===================================== Descriptive Statistical Analysis =====================================

=====================================
Inferential Statistical Analysis
=====================================
urbgrps  1   2   3   4   5
lifgrps
0        9  24  17   6  16
1        4  22  29  52  34
G:/Data Science/Data Analysis and Interpretation (Coursera)/Codes/02-02-Assignment.py:46: FutureWarning: convert_objects is deprecated. To re-infer data dtypes for object columns, use Series.infer_objects()
For all other conversions use the data-type specific converters pd.to_datetime, pd.to_timedelta and pd.to_numeric.
  data['urbanrate'] = data['urbanrate'].convert_objects(convert_numeric=True)
G:/Data Science/Data Analysis and Interpretation (Coursera)/Codes/02-02-Assignment.py:47: FutureWarning: convert_objects is deprecated. To re-infer data dtypes for object columns, use Series.infer_objects()
For all other conversions use the data-type specific converters pd.to_datetime, pd.to_timedelta and pd.to_numeric.
  data['alcconsumption'] = data['alcconsumption'].convert_objects(convert_numeric=True)
G:/Data Science/Data Analysis and Interpretation (Coursera)/Codes/02-02-Assignment.py:48: FutureWarning: convert_objects is deprecated. To re-infer data dtypes for object columns, use Series.infer_objects()
For all other conversions use the data-type specific converters pd.to_datetime, pd.to_timedelta and pd.to_numeric.
  data['lifeexpectancy'] = data['lifeexpectancy'].convert_objects(convert_numeric=True)
G:/Data Science/Data Analysis and Interpretation (Coursera)/Codes/02-02-Assignment.py:75: FutureWarning: convert_objects is deprecated. To re-infer data dtypes for object columns, use Series.infer_objects()
For all other conversions use the data-type specific converters pd.to_datetime, pd.to_timedelta and pd.to_numeric.
  return 0
urbgrps         1         2         3         4         5
lifgrps
0        0.692308  0.521739  0.369565  0.103448  0.320000
1        0.307692  0.478261  0.630435  0.896552  0.680000
chi-square value, p value, expected counts
(28.770249676716467, 8.704036143643654e-06, 4L, array([[ 4.3943662 , 15.54929577, 15.54929577, 19.6056338 , 16.90140845],
       [ 8.6056338 , 30.45070423, 30.45070423, 38.3943662 , 33.09859155]]))
=============================
Post-hoc test
New threshold for p-value : 
0.005
1 vs 2
comp1v2  1.000000  2.000000
lifgrps
0               9        24
1               4        22
comp1v2  1.000000  2.000000
lifgrps
0        0.692308  0.521739
1        0.307692  0.478261
chi-square value, p value, expected counts
(0.6044210109845561, 0.43689616446201507, 1L, array([[ 7.27118644, 25.72881356],
       [ 5.72881356, 20.27118644]]))
1 vs 3
comp     1.000000  3.000000
lifgrps
0               9        17
1               4        29
comp     1.000000  3.000000
lifgrps
0        0.692308  0.369565
1        0.307692  0.630435
chi-square value, p value, expected counts
(3.073965958790373, 0.07955517016836518, 1L, array([[ 5.72881356, 20.27118644],
       [ 7.27118644, 25.72881356]]))
1 vs 4
comp     1.000000  4.000000
lifgrps
0               9         6
1               4        52
comp     1.000000  4.000000
lifgrps
0        0.692308  0.103448
1        0.307692  0.896552
chi-square value, p value, expected counts
(18.70646985916383, 1.524642950087753e-05, 1L, array([[ 2.74647887, 12.25352113],
       [10.25352113, 45.74647887]]))
1 vs 5
comp     1.000000  5.000000
lifgrps
0               9        16
1               4        34
comp     1.000000  5.000000
lifgrps
0        0.692308  0.320000
1        0.307692  0.680000
chi-square value, p value, expected counts
(4.520721862348178, 0.03348669970950359, 1L, array([[ 5.15873016, 19.84126984],
       [ 7.84126984, 30.15873016]]))
2 vs 3
comp     2.000000  3.000000
lifgrps
0              24        17
1              22        29
comp     2.000000  3.000000
lifgrps
0        0.521739  0.369565
1        0.478261  0.630435
chi-square value, p value, expected counts
(1.5839311334289814, 0.20819535296566563, 1L, array([[20.5, 20.5],
       [25.5, 25.5]]))
2 vs 4
comp     2.000000  4.000000
lifgrps
0              24         6
1              22        52
comp     2.000000  4.000000
lifgrps
0        0.521739  0.103448
1        0.478261  0.896552
chi-square value, p value, expected counts
(19.878233856044954, 8.253469598112272e-06, 1L, array([[13.26923077, 16.73076923],
       [32.73076923, 41.26923077]]))
2 vs 5
comp     2.000000  5.000000
lifgrps
0              24        16
1              22        34
comp     2.000000  5.000000
lifgrps
0        0.521739  0.320000
1        0.478261  0.680000
chi-square value, p value, expected counts
(3.2246459627329176, 0.07253748022033618, 1L, array([[19.16666667, 20.83333333],
       [26.83333333, 29.16666667]]))
3 vs 4
comp     3.000000  4.000000
lifgrps
0              17         6
1              29        52
comp     3.000000  4.000000
lifgrps
0        0.369565  0.103448
1        0.630435  0.896552
chi-square value, p value, expected counts
(9.059129050611572, 0.0026138645525417546, 1L, array([[10.17307692, 12.82692308],
       [35.82692308, 45.17307692]]))
3 vs 5
comp     3.000000  5.000000
lifgrps
0              17        16
1              29        34
comp     3.000000  5.000000
lifgrps
0        0.369565  0.320000
1        0.630435  0.680000
chi-square value, p value, expected counts
(0.08745341614906833, 0.7674399219661803, 1L, array([[15.8125, 17.1875],
       [30.1875, 32.8125]]))
4 vs 5
comp     4.000000  5.000000
lifgrps
0               6        16
1              52        34
comp     4.000000  5.000000
lifgrps
0        0.103448  0.320000
1        0.896552  0.680000
chi-square value, p value, expected counts
(6.485275205948824, 0.01087716976280061, 1L, array([[11.81481481, 10.18518519],
       [46.18518519, 39.81481481]]))
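As a cross-check on the omnibus result above, the chi-square statistic can be rebuilt by hand from the observed counts: each expected count is (row total x column total) / n, and the statistic sums (observed - expected)^2 / expected over all ten cells, with (2 - 1) x (5 - 1) = 4 degrees of freedom. The sketch below copies the counts from the output rather than reloading Dataset_gapminder.csv, so it is a verification aid and not part of the original assignment script.

```python
import numpy as np
from scipy.stats import chi2, chi2_contingency

# Observed counts copied from the output above:
# rows are lifgrps 0/1, columns are urbgrps 1-5
observed = np.array([[9, 24, 17, 6, 16],
                     [4, 22, 29, 52, 34]])

n = observed.sum()
row_totals = observed.sum(axis=1, keepdims=True)   # shape (2, 1)
col_totals = observed.sum(axis=0, keepdims=True)   # shape (1, 5)
expected = row_totals * col_totals / n             # broadcasts to shape (2, 5)

stat = ((observed - expected) ** 2 / expected).sum()
dof = (observed.shape[0] - 1) * (observed.shape[1] - 1)
p_value = chi2.sf(stat, dof)  # upper-tail probability of the chi-square distribution

# scipy's own result for comparison; no continuity correction is applied when dof > 1
scipy_stat, scipy_p, scipy_dof, scipy_expected = chi2_contingency(observed)

print(stat, p_value, dof)
print(scipy_stat, scipy_p, scipy_dof)
```

The hand-computed expected counts should reproduce the array in the output (for example 4.394 for lifgrps 0 in urbgrps 1).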

Finally, the code

import pandas
import numpy
import seaborn
import matplotlib.pyplot as plt
import statsmodels.formula.api as smf
import statsmodels.stats.multicomp as multi
import scipy.stats

# importing data
data = pandas.read_csv('Dataset_gapminder.csv', low_memory=False)

# Set pandas to show all columns in a DataFrame
pandas.set_option('display.max_columns', None)
# Set pandas to show all rows in a DataFrame
pandas.set_option('display.max_rows', None)

# bug fix for display formats to avoid run time errors
pandas.set_option('display.float_format', lambda x: '%f' % x)

# printing number of rows and columns
print('Rows')
print(len(data))
print('columns')
print(len(data.columns))

#------- Variables under consideration ------#
# alcconsumption
# urbanrate
# lifeexpectancy

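# Setting values to numeric
# (convert_objects is deprecated and gone from current pandas; to_numeric with
# errors='coerce' turns non-numeric entries into NaN)
data['urbanrate'] = pandas.to_numeric(data['urbanrate'], errors='coerce')
data['alcconsumption'] = pandas.to_numeric(data['alcconsumption'], errors='coerce')
data['lifeexpectancy'] = pandas.to_numeric(data['lifeexpectancy'], errors='coerce')

# data2 is used below but never defined in the original script;
# a full copy of data is assumed here
data2 = data.copy()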

# Categorizing urbanrate as urbgrps

def urbgrps(row):
    # note: a missing urbanrate (NaN) fails every comparison and falls through to group 5
    if row['urbanrate'] <= 20:
        return 1
    elif row['urbanrate'] <= 40:
        return 2
    elif row['urbanrate'] <= 60:
        return 3
    elif row['urbanrate'] <= 80:
        return 4
    else:
        return 5

data2['urbgrps'] = data2.apply(lambda row: urbgrps(row), axis=1)
data2['urbgrps'] = data2['urbgrps'].astype('category')

# Categorizing lifeexpectancy as lifgrps

def lifgrps(row):
    if row['lifeexpectancy'] >= 65:
        return 1
    else:
        return 0

data2['lifgrps'] = data2.apply(lambda row: lifgrps(row), axis=1)
# Setting lifgrps to numeric
data2['lifgrps'] = pandas.to_numeric(data2['lifgrps'], errors='coerce')

# Descriptive Analysis

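print('=====================================')
print('Descriptive Statistical Analysis')
print('=====================================')
# factorplot was renamed catplot in seaborn 0.9; errorbar=None replaces ci=None from seaborn 0.12
seaborn.catplot(x='urbgrps', y='lifgrps', data=data2, kind='bar', errorbar=None)
plt.xlabel('Urban rate')
# lifgrps is 1 when life expectancy is at least 65, so each bar is the share at or above 65 years
plt.ylabel('Proportion of countries in group with life expectancy of 65 years or more')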

# Inferential Statistics

print('=====================================')
print('Inferential Statistical Analysis')
print('=====================================')

# contingency table of observed counts
ct1 = pandas.crosstab(data2['lifgrps'], data2['urbgrps'])
print(ct1)

# column percentages
colsum = ct1.sum(axis=0)
colpct = ct1 / colsum
print(colpct)

# chi-square value, p value, expected counts
print('chi-square value, p value, expected counts')
cs1 = scipy.stats.chi2_contingency(ct1)
print(cs1)

# Post-hoc Test

print('=============================')
print('Post-hoc test')
print('New threshold for p-value : ')
print(0.05 / 10)  # Bonferroni adjustment: 0.05 divided by the 10 pairwise comparisons
print('1 vs 2')
recode1_2 = {1: 1, 2: 2}
data2['comp1v2'] = data2['urbgrps'].map(recode1_2)

ct1_2 = pandas.crosstab(data2['lifgrps'], data2['comp1v2'])
print(ct1_2)

colsum1_2 = ct1_2.sum(axis=0)
colpct1_2 = ct1_2 / colsum1_2
print(colpct1_2)

print('chi-square value, p value, expected counts')
cs1_2 = scipy.stats.chi2_contingency(ct1_2)
print(cs1_2)

# 1 vs 3
print('1 vs 3')
recode = {1: 1, 3: 3}
data2['comp'] = data2['urbgrps'].map(recode)

ct = pandas.crosstab(data2['lifgrps'], data2['comp'])
print(ct)

colsum = ct.sum(axis=0)
colpct = ct / colsum
print(colpct)

print('chi-square value, p value, expected counts')
cs = scipy.stats.chi2_contingency(ct)
print(cs)

# 1 vs 4
print('1 vs 4')
recode = {1: 1, 4: 4}
data2['comp'] = data2['urbgrps'].map(recode)

ct = pandas.crosstab(data2['lifgrps'], data2['comp'])
print(ct)

colsum = ct.sum(axis=0)
colpct = ct / colsum
print(colpct)

print('chi-square value, p value, expected counts')
cs = scipy.stats.chi2_contingency(ct)
print(cs)

# 1 vs 5
print('1 vs 5')
recode = {1: 1, 5: 5}
data2['comp'] = data2['urbgrps'].map(recode)

ct = pandas.crosstab(data2['lifgrps'], data2['comp'])
print(ct)

colsum = ct.sum(axis=0)
colpct = ct / colsum
print(colpct)

print('chi-square value, p value, expected counts')
cs = scipy.stats.chi2_contingency(ct)
print(cs)

# 2 vs 3
print('2 vs 3')
recode = {2: 2, 3: 3}
data2['comp'] = data2['urbgrps'].map(recode)

ct = pandas.crosstab(data2['lifgrps'], data2['comp'])
print(ct)

colsum = ct.sum(axis=0)
colpct = ct / colsum
print(colpct)

print('chi-square value, p value, expected counts')
cs = scipy.stats.chi2_contingency(ct)
print(cs)

# 2 vs 4
print('2 vs 4')
recode = {2: 2, 4: 4}
data2['comp'] = data2['urbgrps'].map(recode)

ct = pandas.crosstab(data2['lifgrps'], data2['comp'])
print(ct)

colsum = ct.sum(axis=0)
colpct = ct / colsum
print(colpct)

print('chi-square value, p value, expected counts')
cs = scipy.stats.chi2_contingency(ct)
print(cs)

# 2 vs 5
print('2 vs 5')
recode = {2: 2, 5: 5}
data2['comp'] = data2['urbgrps'].map(recode)

ct = pandas.crosstab(data2['lifgrps'], data2['comp'])
print(ct)

colsum = ct.sum(axis=0)
colpct = ct / colsum
print(colpct)

print('chi-square value, p value, expected counts')
cs = scipy.stats.chi2_contingency(ct)
print(cs)

# 3 vs 4
print('3 vs 4')
recode = {3: 3, 4: 4}
data2['comp'] = data2['urbgrps'].map(recode)

ct = pandas.crosstab(data2['lifgrps'], data2['comp'])
print(ct)

colsum = ct.sum(axis=0)
colpct = ct / colsum
print(colpct)

print('chi-square value, p value, expected counts')
cs = scipy.stats.chi2_contingency(ct)
print(cs)

# 3 vs 5
print('3 vs 5')
recode = {3: 3, 5: 5}
data2['comp'] = data2['urbgrps'].map(recode)

ct = pandas.crosstab(data2['lifgrps'], data2['comp'])
print(ct)

colsum = ct.sum(axis=0)
colpct = ct / colsum
print(colpct)

print('chi-square value, p value, expected counts')
cs = scipy.stats.chi2_contingency(ct)
print(cs)

# 4 vs 5
print('4 vs 5')
recode = {4: 4, 5: 5}
data2['comp'] = data2['urbgrps'].map(recode)

ct = pandas.crosstab(data2['lifgrps'], data2['comp'])
print(ct)

colsum = ct.sum(axis=0)
colpct = ct / colsum
print(colpct)

print('chi-square value, p value, expected counts')
cs = scipy.stats.chi2_contingency(ct)
print(cs)
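The ten pairwise blocks above differ only in which two urban-rate groups they keep, so they can be generated in a loop. The sketch below is one way to do that, assuming the 2 x 5 table of observed counts shown in the output (copied in by hand rather than recomputed from Dataset_gapminder.csv). Keeping two columns of that table is equivalent to the recode/map step, and chi2_contingency applies Yates' continuity correction to 2 x 2 tables by default, just as in the original calls, so the statistics should match the post-hoc output. Significance is judged against the Bonferroni-adjusted threshold 0.05 / 10 = 0.005.

```python
from itertools import combinations

import pandas as pd
from scipy.stats import chi2_contingency

# Observed counts copied from the output above:
# rows are lifgrps 0/1, columns are urbgrps 1-5
observed = pd.DataFrame(
    [[9, 24, 17, 6, 16],
     [4, 22, 29, 52, 34]],
    index=[0, 1],
    columns=[1, 2, 3, 4, 5],
)

pairs = list(combinations(observed.columns, 2))  # the 10 pairwise comparisons
threshold = 0.05 / len(pairs)                     # Bonferroni-adjusted alpha

results = {}
for a, b in pairs:
    # Selecting two columns mirrors restricting data2 to two urbgrps values
    stat, p, dof, expected = chi2_contingency(observed[[a, b]])
    results[(a, b)] = (stat, p)
    verdict = "significant" if p < threshold else "not significant"
    print(f"{a} vs {b}: chi2={stat:.3f}, p={p:.3g} ({verdict})")
```

With these counts, only the 1 vs 4, 2 vs 4 and 3 vs 4 comparisons fall below 0.005.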


Running Unity ML-Agents on Docker (v0.11.0) https://ift.tt/2rjOwz3

I tried running Unity ML-Agents (v0.9.1) on Docker.

For setting up Unity and the Unity ML-Agents environment, refer to the following:

Installing Unity on a Mac with Homebrew (Unity Hub, Japanese localization) https://cloudpack.media/42142

Setting up a Unity ML-Agents environment on a Mac (v0.11.0) – Qiita https://cloudpack.media/50837

Steps

Documentation on training with Docker existed up to v0.10.1, but it was removed as of v0.11.0. The information is slightly outdated, but you can still follow it to get v0.11.0 running.

ml-agents/Using-Docker.md at 0.10.1 · Unity-Technologies/ml-agents https://github.com/Unity-Technologies/ml-agents/blob/0.10.1/docs/Using-Docker.md

Installing Docker

If Docker is not installed, install it.

On a Mac, using the brew command:

> brew cask install docker
(omitted)
> docker --version
Docker version 19.03.4, build 9013bf5

Note: the first time you launch Docker, it asks for your password to complete the initial setup.

UnityにLinuxビルドサポートコンポーネントを追加する

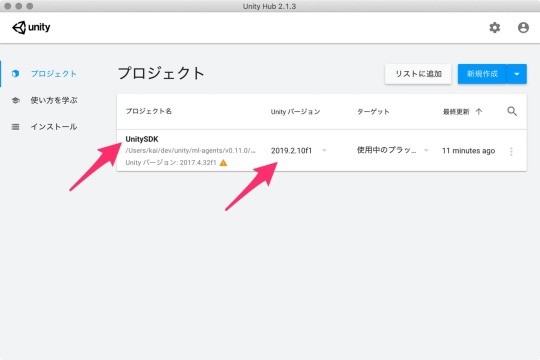

Unity Hubを利用してUnityにLinuxビルドサポートコンポーネントを追加します。 Unityのバージョンは2019.2.10f1を利用しています。

Unity Hubがインストールされていない場合は下記をご参考ください。

Macでhomebrewを使ってUnityをインストールする(Unity Hub、日本語化対応) https://cloudpack.media/42142

Unity Hub��プリを起動する

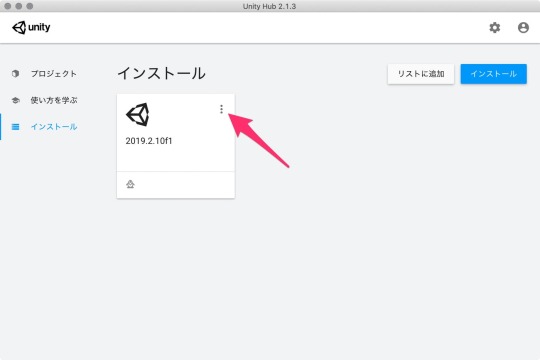

[インストール] > [Unityの利用するアプリ]右側にある[︙]をクリックして[モジュールを加える]を選択する

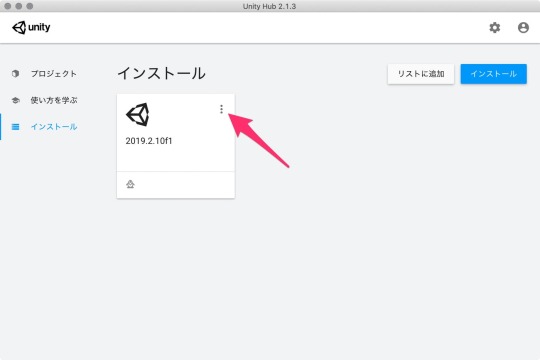

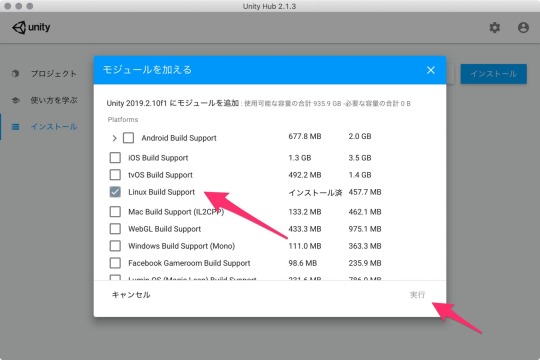

[モジュールを加える]ダイアログの[Platforms]にある[Linux Build Support]にチェックを入れて[実行]ボタンをクリックする

Downloading and building the Unity app for training

We will make one of the samples included in the ML-Agents repository trainable.

Downloading the ML-Agents repository

Clone the repository into a directory of your choice.

> mkdir your-directory
> cd your-directory
> git clone https://github.com/Unity-Technologies/ml-agents.git

Opening the sample project in Unity

Launch Unity Hub. ML-Agents requires Unity 2017.4 or later; this walkthrough uses 2019.2.10f1.

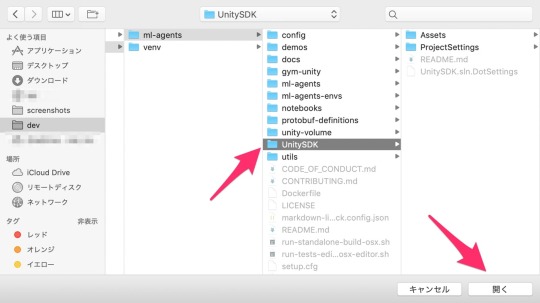

アプリが立ち上がったら「開く」ボタンから任意のディレクトリ/ml-agents/UnitySDKフォルダを選択します。

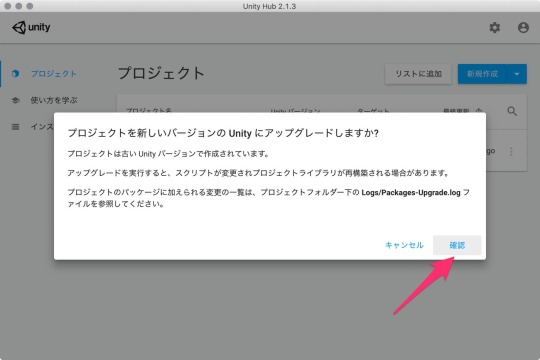

Unityエディタのバージョンによっては、アップグレードするかの確認ダイアログが立ち上がります。

「確認」ボタンをクリックして進めます。アップグレード処理に少し時間がかかります。

起動しました。

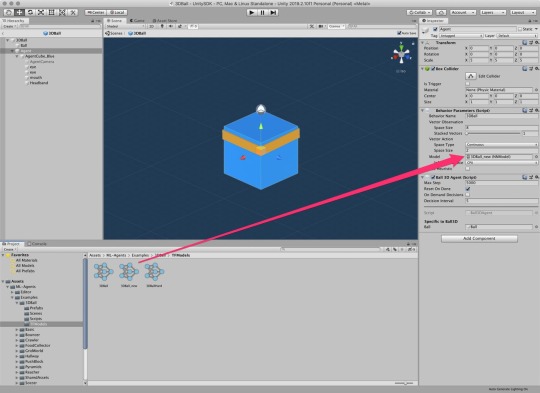

今回は、サンプルにある[3DBall]Scenesを利用します。

Unityアプリの下パネルにある[Project]タブから以下のフォルダまで開く

[Assets] > [ML-Agents] > [Examples] > [3DBall] > [Scenes]

開いたら、[3DBall]ファイルがあるので、ダブルクリックして開く

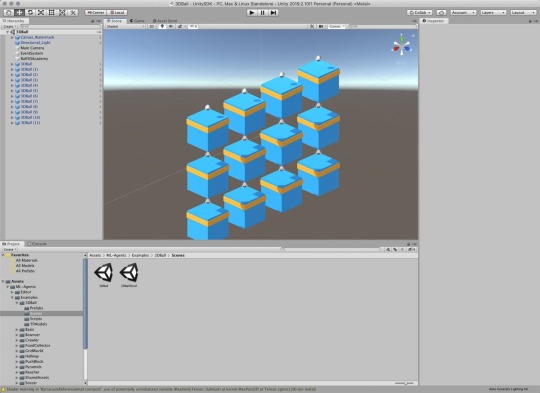

Scenes(シーン)の設定

ML-Agentsで学習させるための設定です。

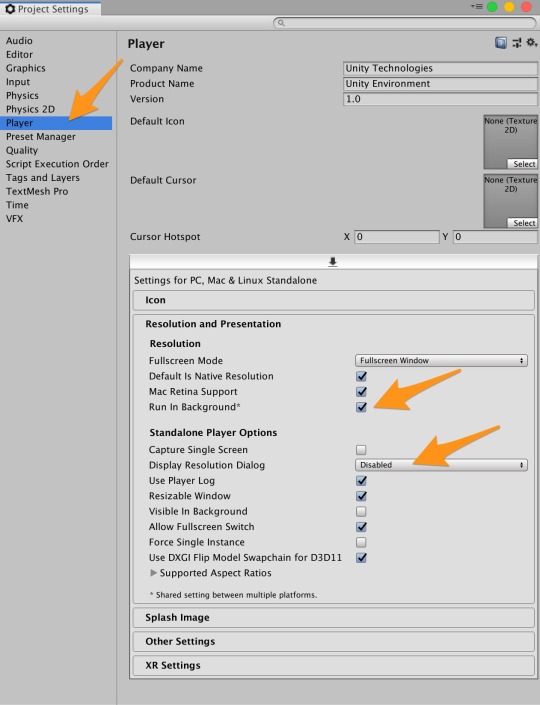

Unityアプリの[Edit]メニューから[Project Settings]を開く

[Inspector]パネルで以下の設定を確認する

[Resolution and Presentation]の[Run In Background]がチェックされている

[Display Resolution Dialog]がDisableになっている

ビルド設定

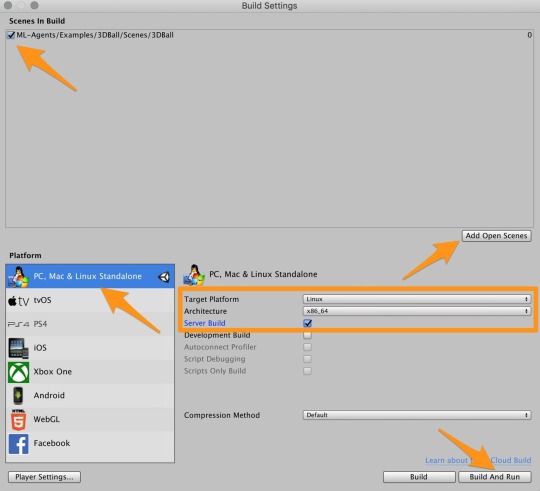

Unityアプリの[File]メニューから[Build Settings]を選択して[Build Settings]ダイアログを開く

[Add Open Scenes]をクリックする

[Scenes In Build]で[ML-Agents/Examples/3DBall/Scenes/3DBall]にチェックを入れる

[Platform]でPC, Mac & Linux Standalone が選択されていることを確認する

[Target Platform]をLinuxに変更する

[Architecture]をx86_64に変更する

[Server Build]にチェックを入れる

[Build]ボタンをクリックする

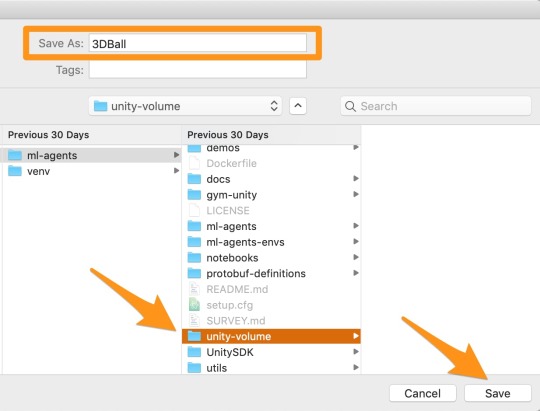

ファイル保存ダイアログで以下を指定してビルドを開始する

ファイル名: 3DBall

フォルダ名: 任意のディレクトリ/ml-agents/unity-volume

すると、unity-volumeに以下フォルダ・ファイルが出力されます。

ls 任意のディレクトリ/ml-agents/unity-volume 3DBall.x86_64 3DBall_Data

Preparing the hyperparameter file

Copy the hyperparameter file into the unity-volume folder.

> cp ml-agents/config/trainer_config.yaml ml-agents/unity-volume/
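Before building and running the container, it can help to confirm that unity-volume holds everything the docker run step reads. The helper below is a hypothetical convenience, not part of ML-Agents or Docker; the expected names (3DBall.x86_64, 3DBall_Data, trainer_config.yaml) are taken from the Unity build output and the copy step above, and it only checks that those paths exist.

```python
from pathlib import Path

# Entries this walkthrough expects in unity-volume before running the container
# (names taken from the Unity build output and the cp step above)
EXPECTED_ENTRIES = ("3DBall.x86_64", "3DBall_Data", "trainer_config.yaml")


def missing_volume_entries(volume_dir):
    """Return the expected entries that do not exist in volume_dir.

    Hypothetical helper for illustration; it checks existence only,
    not whether the build or the YAML file is valid.
    """
    volume = Path(volume_dir)
    return [name for name in EXPECTED_ENTRIES if not (volume / name).exists()]
```

Called as missing_volume_entries("ml-agents/unity-volume") from the parent directory, an empty list means the volume is ready to mount.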

Building the Docker image

A Dockerfile is already provided, so all you need to do is run docker build. Easy. ml-agents-on-docker is the image name; any name will do.

> docker build -t ml-agents-on-docker ./ml-agents
(omitted)
Removing intermediate container c1384ee9d5a6
 ---> 9ff8832e88dc
Step 19/20 : EXPOSE 5005
 ---> Running in 53253a272fb4
Removing intermediate container 53253a272fb4
 ---> f0b43146ad36
Step 20/20 : ENTRYPOINT ["mlagents-learn"]
 ---> Running in f80f7504b790
Removing intermediate container f80f7504b790
 ---> 9caddd5a62b1
Successfully built 9caddd5a62b1
Successfully tagged ml-agents-on-docker:latest
> docker images
REPOSITORY            TAG      IMAGE ID       CREATED         SIZE
ml-agents-on-docker   latest   9caddd5a62b1   2 minutes ago   1.23GB

Caveats

Note that running docker build from inside the ml-agents directory itself fails with an error:

> cd ml-agents
> docker build -t ml-agents-on-docker .
error checking context: 'file ('/Users/xxx/xxxxx/ml-agents/UnitySDK/Temp') not found or excluded by .dockerignore'.

Python 3.7.x has been supported since v0.10.0, but the Dockerfile still specifies Python 3.6.4.

Dockerfile excerpt

ENV PYTHON_VERSION 3.6.4

Running the Docker container

Once the image is built, run it.

For bash

# ml-agents-3dball: container name (any name)
# ml-agents-on-docker: the image name given when building with Docker
# 3DBall: the app name given when building in Unity (without extension)
> cd ml-agents
> docker run -it --rm \
  --name ml-agents-3dball \
  --mount type=bind,source="$(pwd)"/unity-volume,target=/unity-volume \
  -p 5005:5005 \
  -p 6006:6006 \
  ml-agents-on-docker:latest \
  --docker-target-name=unity-volume \
  --env=3DBall \
  --train \
  trainer_config.yaml

The --docker-target-name option of mlagents-learn can also be replaced with the --workdir (-w) option of docker run.

> docker run -it --rm \
  --name ml-agents-3dball \
  --mount type=bind,source="$(pwd)"/unity-volume,target=/unity-volume \
  -w /unity-volume \
  -p 5005:5005 \
  -p 6006:6006 \
  ml-agents-on-docker:latest \
  --env=3DBall \
  --train \
  trainer_config.yaml

For the fish shell, replace "$(pwd)" with "$PWD".

For fish

> cd ml-agents
> docker run -it --rm \
  --name ml-agents-3dball \
  --mount type=bind,source="$PWD"/unity-volume,target=/unity-volume \
  -p 5005:5005 \
  -p 6006:6006 \
  ml-agents-on-docker:latest \
  --docker-target-name=unity-volume \
  --env=3DBall \
  --train \
  trainer_config.yaml

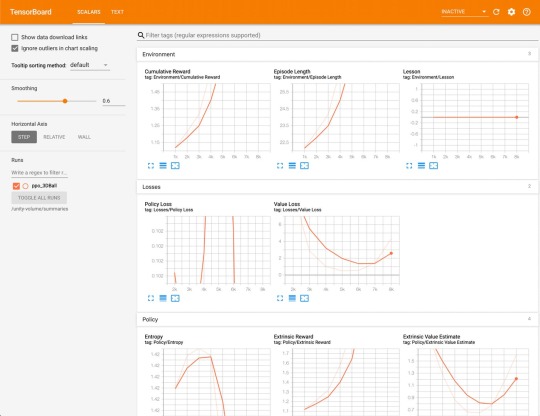

Running the command starts training. Training ends when the number of steps set by max_steps in trainer_config.yaml is reached, or when you press Ctrl+C.

> docker run (omitted)
(Unity ML-Agents ASCII-art logo)
INFO:mlagents.trainers:CommandLineOptions(debug=False, num_runs=1, seed=-1, env_path='3DBall', run_id='ppo', load_model=False, train_model=True, save_freq=50000, keep_checkpoints=5, base_port=5005, num_envs=1, curriculum_folder=None, lesson=0, slow=False, no_graphics=False, multi_gpu=False, trainer_config_path='trainer_config.yaml', sampler_file_path=None, docker_target_name='unity-volume', env_args=None, cpu=False)
INFO:mlagents.envs:
'Ball3DAcademy' started successfully!
Unity Academy name: Ball3DAcademy
        Number of Training Brains : 0
        Reset Parameters :
                gravity -> 9.8100004196167
                scale -> 1.0
                mass -> 1.0
(omitted)
2019-11-07 05:31:38.593118: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA
2019-11-07 05:31:38.604956: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 2400000000 Hz
2019-11-07 05:31:38.607307: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x39ccf70 executing computations on platform Host. Devices:
2019-11-07 05:31:38.607465: I tensorflow/compiler/xla/service/service.cc:175]   StreamExecutor device (0): <undefined>, <undefined>
(omitted)
2019-11-07 05:31:40.131532: W tensorflow/compiler/jit/mark_for_compilation_pass.cc:1412] (One-time warning): Not using XLA:CPU for cluster because envvar TF_XLA_FLAGS=--tf_xla_cpu_global_jit was not set. If you want XLA:CPU, either set that envvar, or use experimental_jit_scope to enable XLA:CPU. To confirm that XLA is active, pass --vmodule=xla_compilation_cache=1 (as a proper command-line flag, not via TF_XLA_FLAGS) or set the envvar XLA_FLAGS=--xla_hlo_profile.
INFO:mlagents.envs:Hyperparameters for the PPOTrainer of brain 3DBall:
        trainer:        ppo
        batch_size:     64
        beta:   0.001
        buffer_size:    12000
        epsilon:        0.2
        hidden_units:   128
        lambd:  0.99
        learning_rate:  0.0003
        learning_rate_schedule: linear
        max_steps:      5.0e4
        memory_size:    256
        normalize:      True
        num_epoch:      3
        num_layers:     2
        time_horizon:   1000
        sequence_length:        64
        summary_freq:   1000
        use_recurrent:  False
        vis_encode_type:        simple
        reward_signals:
          extrinsic:
            strength:   1.0
            gamma:      0.99
        summary_path:   /unity-volume/summaries/ppo_3DBall
        model_path:     /unity-volume/models/ppo-0/3DBall
        keep_checkpoints:       5
WARNING:tensorflow:From /ml-agents/mlagents/trainers/trainer.py:223: The name tf.summary.text is deprecated. Please use tf.compat.v1.summary.text instead.
WARNING:tensorflow:From /ml-agents/mlagents/trainers/trainer.py:223: The name tf.summary.text is deprecated. Please use tf.compat.v1.summary.text instead.
INFO:mlagents.trainers: ppo: 3DBall: Step: 1000. Time Elapsed: 10.062 s Mean Reward: 1.167. Std of Reward: 0.724. Training.
(omitted)
INFO:mlagents.trainers: ppo: 3DBall: Step: 10000. Time Elapsed: 109.367 s Mean Reward: 36.292. Std of Reward: 28.127. Training.
(omitted)
INFO:mlagents.trainers: ppo: 3DBall: Step: 49000. Time Elapsed: 520.514 s Mean Reward: 100.000. Std of Reward: 0.000. Training.
(omitted)
Converting /unity-volume/models/ppo-0/3DBall/frozen_graph_def.pb to /unity-volume/models/ppo-0/3DBall.nn
IGNORED: Cast unknown layer
IGNORED: StopGradient unknown layer
GLOBALS: 'is_continuous_control', 'version_number', 'memory_size', 'action_output_shape'
IN: 'vector_observation': [-1, 1, 1, 8] => 'sub_3'
IN: 'epsilon': [-1, 1, 1, 2] => 'mul_1'
OUT: 'action', 'action_probs'
DONE: wrote /unity-volume/models/ppo-0/3DBall.nn file.
INFO:mlagents.trainers:Exported /unity-volume/models/ppo-0/3DBall.nn file
INFO:mlagents.envs:Environment shut down with return code 0.

Quite a few WARNINGs are printed, but training completed successfully.

You can also use TensorBoard to check training progress visually.

> docker exec -it \
  ml-agents-3dball \
  tensorboard \
  --logdir=/unity-volume/summaries \
  --host=0.0.0.0
(omitted)
  _np_qint32 = np.dtype([("qint32", np.int32, 1)])
/usr/local/lib/python3.6/site-packages/tensorflow/python/framework/dtypes.py:525: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
  np_resource = np.dtype([("resource", np.ubyte, 1)])
TensorBoard 1.14.0 at http://0.0.0.0:6006/ (Press CTRL+C to quit)

Embedding the training results in the app

The training results are saved in the ml-agents/unity-volume folder. Importing them into the Unity project applies the trained model to the app.

> ls unity-volume/
3DBall.x86_64  3DBall_Data  csharp_timers.json  models  summaries  trainer_config.yaml
> tree unity-volume/models/
unity-volume/models/
└── ppo-0
    ├── 3DBall
    │   ├── checkpoint
    │   ├── frozen_graph_def.pb
    │   ├── model-50000.cptk.data-00000-of-00001
    │   ├── model-50000.cptk.index
    │   ├── model-50000.cptk.meta
    │   ├── model-50001.cptk.data-00000-of-00001
    │   ├── model-50001.cptk.index
    │   ├── model-50001.cptk.meta
    │   └── raw_graph_def.pb
    └── 3DBall.nn

2 directories, 10 files

Unityアプリの設定

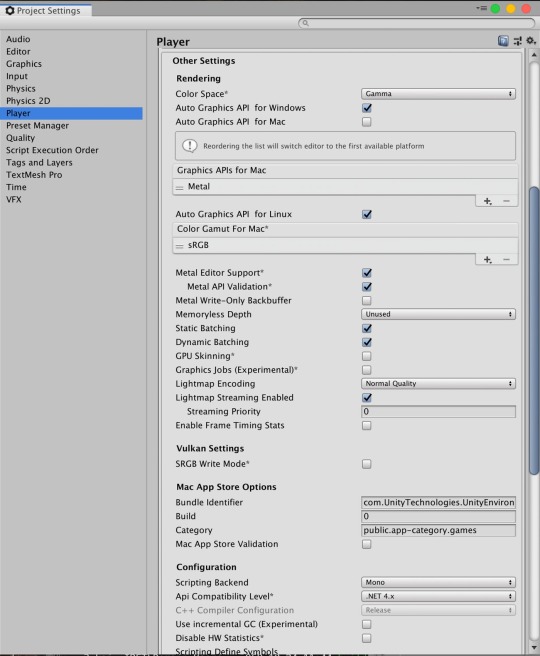

Playerの設定を行います。

Unityアプリの[Edit]メニューから[Project Settings]を選択する

[Inspector]ビューの[Other Settings]欄で以下を確認・設定する

Scripting BackendがMonoになっている

Api Conpatibility Levelが.NET 4.xになっている

学習結果ファイルの取り込み

ターミナルかFinderで学習結果を以下フォルダにコピーします。

学習結果ファイル: models/ppo-0/3DBall.nn

保存先: UnitySDK/Assets/ML-Agents/Examples/3DBall/TFModels/

※すでに保存先に3DBall.nnファイルが存在していますので、リネームします。

> cp models/ppo-0/3DBall.nn ml-agents/UnitySDK/Assets/ML-Agents/Examples/3DBall/TFModels/3DBall_new.nn
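The copy-and-rename step can also be scripted. This is a hypothetical helper, not part of ML-Agents, assuming it is called from the ml-agents directory and that the run ID is ppo-0 as in the model listing above; it refuses to overwrite an existing file so the bundled 3DBall.nn is never replaced.

```python
import shutil
from pathlib import Path


def install_trained_model(volume_dir, tfmodels_dir,
                          run_id="ppo-0", brain="3DBall",
                          new_name="3DBall_new.nn"):
    """Copy <volume_dir>/models/<run_id>/<brain>.nn into tfmodels_dir as new_name.

    Hypothetical helper; the defaults follow the model listing in this walkthrough.
    Raises FileExistsError instead of overwriting an existing model file.
    """
    src = Path(volume_dir) / "models" / run_id / f"{brain}.nn"
    dst = Path(tfmodels_dir) / new_name
    if dst.exists():
        raise FileExistsError(dst)
    shutil.copyfile(src, dst)
    return dst
```

For example, install_trained_model("unity-volume", "UnitySDK/Assets/ML-Agents/Examples/3DBall/TFModels") performs the same copy as the cp command.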

In the [Project] tab in Unity's bottom panel, open the following folder:

[Assets] > [ML-Agents] > [Examples] > [3DBall] > [Scenes]

Double-click the [3DBall] file in that folder to open it.

Select [Agent] in the [Hierarchy] panel

In the [Project] panel, select the following folder:

[Assets] > [ML-Agents] > [Examples] > [3DBall] > [TFModels]

Drag and drop 3DBall_new.nn from the [TFModels] folder onto the [Model] field in the [Inspector] panel

Click the [▶] (Play) button at the top of the Unity window

The app now starts with the trained model applied.

References

Installing Unity on a Mac with Homebrew (Unity Hub, Japanese localization) https://cloudpack.media/42142

Setting up a Unity ML-Agents environment on a Mac (v0.11.0) – Qiita https://cloudpack.media/50837

ml-agents/Using-Docker.md at 0.10.1 · Unity-Technologies/ml-agents https://github.com/Unity-Technologies/ml-agents/blob/0.10.1/docs/Using-Docker.md

Original article

“Running Unity ML-Agents on Docker (v0.11.0)”

December 23, 2019 at 12:00PM


Australia vs Pakistan: ‘Yasir Shah & Shan Masood were probably yawning’ - Wasim Akram lashes out at Pak fielders - cricket

Legendary cricketer Wasim Akram lashed out at Pakistan players for their sloppy fielding display during the second Test against Australia at Adelaide Oval. Pakistan fielders were guilty of giving away runs as Australia piled on the misery, scoring 589/3d in their first innings of the Day-Night Test. David Warner was the chief architect for Australia, slamming a majestic triple century to power the hosts to their huge total. Akram wasn’t happy with Pakistan’s effort in the field and made his feelings known while commentating during the Test. “Shaheen Shah was in la la land at fine leg,” Akram said. “Yasir Shah and Shan Masood were probably yawning. That’s the problem with Pakistan cricket. They should be on the ball.” “Nobody was backing up and you as a fielder, it doesn’t matter how inexperienced you are, you set yourself, new batsman in, they just came out, I’ll probably start five-ten yards inside the boundary line not on the boundary,” he added. Warner broke records for fun as a hapless Pakistan bowling unit was sent on a leather hunt on Day 2 of the day/night Test. At one point Warner looked set to break Brian Lara’s record for the highest Test score, 400, but Australia captain Tim Paine decided otherwise. Warner remained unbeaten on 335 off 418 balls when Australia declared at 589 for 3. Warner went past the 334-run mark shared by Don Bradman and Mark Taylor, recording the second-highest Test score by an Australian after Matthew Hayden’s 380 against Zimbabwe in 2003. Warner also broke Don Bradman’s 88-year-old record for the highest score at the Adelaide Oval. Bradman had scored 299 in 1931-32, which remained the highest individual score at Adelaide Oval until Warner took matters into his own hands.

Testing a Potential Moderator

1.1 ANOVA CODE

#post hoc ANOVA

import pandas

import numpy

import statsmodels.formula.api as smf

import statsmodels.stats.multicomp as multi

data = pandas.read_csv('addhealth_pds.csv', low_memory=False)

print("converting variables to numeric")

# convert_objects is deprecated (and no longer available in current pandas); to_numeric with errors='coerce' replaces it
data["H1SU1"] = pandas.to_numeric(data["H1SU1"], errors='coerce')

data["H1NB5"] = pandas.to_numeric(data["H1NB5"], errors='coerce')

data["H1NB6"] = pandas.to_numeric(data["H1NB6"], errors='coerce')

print("Coding missing values")

data["H1SU1"] = data["H1SU1"].replace(6, numpy.nan)

data["H1SU1"] = data["H1SU1"].replace(9, numpy.nan)

data["H1SU1"] = data["H1SU1"].replace(8, numpy.nan)

data["H1NB5"] = data["H1NB5"].replace(6, numpy.nan)

data["H1NB6"] = data["H1NB6"].replace(6, numpy.nan)

data["H1NB6"] = data["H1NB6"].replace(8, numpy.nan)

#F-Statistic

model1 = smf.ols(formula='H1SU1 ~ C(H1NB6)', data=data)

results1 = model1.fit()

print (results1.summary())

sub1 = data[['H1SU1', 'H1NB6']].dropna()

print ('means for H1SU1 by happiness level in neighbourhood')

m1= sub1.groupby('H1NB6').mean()

print (m1)

print ('standard deviation for H1SU1 by happiness level in neighbourhood')

sd1 = sub1.groupby('H1NB6').std()

print (sd1)

# more than 2 levels

sub2 = sub1[['H1SU1', 'H1NB6']].dropna()

model2 = smf.ols(formula='H1SU1 ~ C(H1NB6)', data=sub2).fit()

print (model2.summary())

print ('2: means for H1SU1 by happiness level in neighbourhood')

m2= sub2.groupby('H1NB6').mean()

print (m2)

print ('2: standard deviation for H1SU1 by happiness level in neighbourhood')

sd2 = sub2.groupby('H1NB6').std()

print (sd2)

mc1 = multi.MultiComparison(sub2['H1SU1'], sub2['H1NB6'])

res1 = mc1.tukeyhsd()

print(res1.summary())
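The F-statistic and its p-value in the OLS summary are the one-way ANOVA test of whether the mean of H1SU1 differs across H1NB6 levels. For readers who prefer a classic ANOVA table, statsmodels' anova_lm builds one from the same fitted model. The sketch below uses invented toy data (not the Add Health file) only to show that the F value in that table equals the model's fvalue when there is a single categorical predictor.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf
from statsmodels.stats.anova import anova_lm

# Hypothetical toy data: a 0/1 response and a 5-level categorical predictor
rng = np.random.default_rng(0)
toy = pd.DataFrame({
    "H1NB6": np.repeat([1, 2, 3, 4, 5], 40),
    "H1SU1": rng.integers(0, 2, size=200),
})

fit = smf.ols("H1SU1 ~ C(H1NB6)", data=toy).fit()

# Type I ANOVA table with columns df, sum_sq, mean_sq, F and PR(>F)
table = anova_lm(fit)
print(table)
print(fit.fvalue, fit.f_pvalue)
```

With one factor, the between-groups df is the number of levels minus one (4 here, as in the Df Model line of the real output).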

1.2 ANOVA RESULTS

converting variables to numeric

Coding missing values

OLS Regression Results

==============================================================================

Dep. Variable: H1SU1 R-squared: 0.019

Model: OLS Adj. R-squared: 0.018

Method: Least Squares F-statistic: 31.16

Date: Sat, 24 Aug 2019 Prob (F-statistic): 9.79e-26

Time: 17:16:11 Log-Likelihood: -2002.8

No. Observations: 6426 AIC: 4016.

Df Residuals: 6421 BIC: 4049.

Df Model: 4

Covariance Type: nonrobust

===================================================================================

coef std err t P>|t| [0.025 0.975]

-----------------------------------------------------------------------------------

Intercept 0.2850 0.024 11.976 0.000 0.238 0.332

C(H1NB6)[T.2.0] -0.0800 0.029 -2.713 0.007 -0.138 -0.022

C(H1NB6)[T.3.0] -0.1192 0.025 -4.688 0.000 -0.169 -0.069

C(H1NB6)[T.4.0] -0.1615 0.025 -6.519 0.000 -0.210 -0.113

C(H1NB6)[T.5.0] -0.2033 0.025 -8.191 0.000 -0.252 -0.155

==============================================================================

Omnibus: 2528.245 Durbin-Watson: 1.952

Prob(Omnibus): 0.000 Jarque-Bera (JB): 7313.190

Skew: 2.173 Prob(JB): 0.00

Kurtosis: 5.903 Cond. No. 15.0

==============================================================================

Warnings:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

means for H1SU1 by happiness level in neighbourhood

H1SU1

H1NB6

1.0 0.284974

2.0 0.204986

3.0 0.165814

4.0 0.123478

5.0 0.081707

standard deviation for H1SU1 by happiness level in neighbourhood

H1SU1

H1NB6

1.0 0.452576

2.0 0.404252

3.0 0.372050

4.0 0.329057

5.0 0.273980


OLS Regression Results

==============================================================================

Dep. Variable: H1SU1 R-squared: 0.019

Model: OLS Adj. R-squared: 0.018

Method: Least Squares F-statistic: 31.16

Date: Sat, 24 Aug 2019 Prob (F-statistic): 9.79e-26

Time: 17:16:11 Log-Likelihood: -2002.8

No. Observations: 6426 AIC: 4016.

Df Residuals: 6421 BIC: 4049.

Df Model: 4

Covariance Type: nonrobust

===================================================================================

coef std err t P>|t| [0.025 0.975]

-----------------------------------------------------------------------------------

Intercept 0.2850 0.024 11.976 0.000 0.238 0.332

C(H1NB6)[T.2.0] -0.0800 0.029 -2.713 0.007 -0.138 -0.022

C(H1NB6)[T.3.0] -0.1192 0.025 -4.688 0.000 -0.169 -0.069

C(H1NB6)[T.4.0] -0.1615 0.025 -6.519 0.000 -0.210 -0.113

C(H1NB6)[T.5.0] -0.2033 0.025 -8.191 0.000 -0.252 -0.155

==============================================================================

Omnibus: 2528.245 Durbin-Watson: 1.952

Prob(Omnibus): 0.000 Jarque-Bera (JB): 7313.190

Skew: 2.173 Prob(JB): 0.00

Kurtosis: 5.903 Cond. No. 15.0

==============================================================================

Warnings:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

2: means for H1SU1 by happiness level in neighbourhood

H1SU1

H1NB6

1.0 0.284974

2.0 0.204986

3.0 0.165814

4.0 0.123478

5.0 0.081707

2: standard deviation for H1SU1 by happiness level in neighbourhood

H1SU1

H1NB6

1.0 0.452576

2.0 0.404252

3.0 0.372050

4.0 0.329057

5.0 0.273980

Multiple Comparison of Means - Tukey HSD,FWER=0.05

=============================================

group1 group2 meandiff lower upper reject

---------------------------------------------

1.0 2.0 -0.08 -0.1604 0.0004 False

1.0 3.0 -0.1192 -0.1885 -0.0498 True

1.0 4.0 -0.1615 -0.2291 -0.0939 True

1.0 5.0 -0.2033 -0.271 -0.1356 True

2.0 3.0 -0.0392 -0.0925 0.0142 False

2.0 4.0 -0.0815 -0.1326 -0.0304 True

2.0 5.0 -0.1233 -0.1745 -0.0721 True

3.0 4.0 -0.0423 -0.0731 -0.0115 True

3.0 5.0 -0.0841 -0.1152 -0.0531 True

4.0 5.0 -0.0418 -0.0687 -0.0149 True

---------------------------------------------
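In the Tukey table, reject=True means the family-wise 95% confidence interval for the mean difference excludes zero, so the reject column and the lower/upper bounds should always agree. A quick check over the rows copied from the output above confirms that reading; the helper name interval_excludes_zero is made up for this illustration.

```python
# (group1, group2, meandiff, lower, upper, reject) copied from the Tukey HSD table above
tukey_rows = [
    (1.0, 2.0, -0.08, -0.1604, 0.0004, False),
    (1.0, 3.0, -0.1192, -0.1885, -0.0498, True),
    (1.0, 4.0, -0.1615, -0.2291, -0.0939, True),
    (1.0, 5.0, -0.2033, -0.271, -0.1356, True),
    (2.0, 3.0, -0.0392, -0.0925, 0.0142, False),
    (2.0, 4.0, -0.0815, -0.1326, -0.0304, True),
    (2.0, 5.0, -0.1233, -0.1745, -0.0721, True),
    (3.0, 4.0, -0.0423, -0.0731, -0.0115, True),
    (3.0, 5.0, -0.0841, -0.1152, -0.0531, True),
    (4.0, 5.0, -0.0418, -0.0687, -0.0149, True),
]


def interval_excludes_zero(lower, upper):
    """True when the simultaneous confidence interval lies entirely on one side of 0."""
    return upper < 0 or lower > 0


mismatches = [
    (g1, g2) for g1, g2, _, lower, upper, reject in tukey_rows
    if interval_excludes_zero(lower, upper) != reject
]
not_rejected = [(g1, g2) for g1, g2, *_, reject in tukey_rows if not reject]

print("rows where reject disagrees with the interval:", mismatches)
print("pairs whose difference is not significant:", not_rejected)
```

Only the 1 vs 2 and 2 vs 3 intervals straddle zero, which is why those two pairs are the only ones not rejected.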

1.3 ANOVA summary

Model Interpretation for ANOVA:

To determine the association between my quantitative response variable (whether the respondent considered suicide in the past 12 months, coded 0/1, so each group mean is the proportion who did) and my categorical explanatory variable (happiness level in the respondent’s neighbourhood), I performed an ANOVA and found that those who were most unhappy in their neighbourhood were the most likely to have considered suicide (mean = 0.284974, s.d. ±0.452576; F(4, 6421) = 31.16, p = 9.79e-26). A Tukey HSD post hoc test showed that all pairs of happiness levels differed significantly except 1 vs 2 and 2 vs 3.

Code for ANOVA and moderator

import numpy

import pandas

import statsmodels.formula.api as smf

import statsmodels.stats.multicomp as multi

import seaborn

import matplotlib.pyplot as plt

data = pandas.read_csv('addhealth_pds.csv', low_memory=False)

print("converting variables to numeric")

data["H1SU2"] = pandas.to_numeric(data["H1SU2"], errors='coerce')

data["H1SU4"] = pandas.to_numeric(data["H1SU4"], errors='coerce')

data["H1SU5"] = pandas.to_numeric(data["H1SU5"], errors='coerce')

print("Coding missing values")

data["H1SU2"] = data["H1SU2"].replace(7, numpy.nan)

data["H1SU2"] = data["H1SU2"].replace(8, numpy.nan)

data["H1SU4"] = data["H1SU4"].replace(6, numpy.nan)

data["H1SU4"] = data["H1SU4"].replace(7, numpy.nan)

data["H1SU4"] = data["H1SU4"].replace(8, numpy.nan)

data["H1SU5"] = data["H1SU5"].replace(6, numpy.nan)

data["H1SU5"] = data["H1SU5"].replace(7, numpy.nan)

data["H1SU5"] = data["H1SU5"].replace(8, numpy.nan)

sub2=data[(data['H1SU5']==0)]

sub3=data[(data['H1SU5']==1)]

print ('association between friends suicide attempts and number of suicide attempts if friend was UNSUCCESSFUL in attempt')

model2 = smf.ols(formula='H1SU4 ~ C(H1SU2)', data=sub2).fit()

print (model2.summary())

print ('association between friends suicide attempts and number of suicide attempts if friend was SUCCESSFUL in attempt')

model3 = smf.ols(formula='H1SU4 ~ C(H1SU2)', data=sub3).fit()

print (model3.summary())

ANOVA with moderator results:

runfile('/Users/tyler2k/Downloads/Data Analysis Course/Course 2 Annova and Post Hoc.py', wdir='/Users/tyler2k/Downloads/Data Analysis Course')

converting variables to numeric

Coding missing values

association between friends suicide attempts and number of suicide attempts if friend was UNSUCCESSFUL in attempt

/Users/tyler2k/Downloads/Data Analysis Course/Course 2 Annova and Post Hoc.py:25: FutureWarning: convert_objects is deprecated. To re-infer data dtypes for object columns, use Series.infer_objects()

For all other conversions use the data-type specific converters pd.to_datetime, pd.to_timedelta and pd.to_numeric.

Traceback (most recent call last):

File "<ipython-input-30-7bac10fffda5>", line 1, in <module>

runfile('/Users/tyler2k/Downloads/Data Analysis Course/Course 2 Annova and Post Hoc.py', wdir='/Users/tyler2k/Downloads/Data Analysis Course')

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/spyder_kernels/customize/spydercustomize.py", line 786, in runfile

execfile(filename, namespace)

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/spyder_kernels/customize/spydercustomize.py", line 110, in execfile

exec(compile(f.read(), filename, 'exec'), namespace)

File "/Users/tyler2k/Downloads/Data Analysis Course/Course 2 Annova and Post Hoc.py", line 47, in <module>

model2 = smf.ols(formula='H1SU4 ~ C(H1SU2)', data=sub2).fit()

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/statsmodels/base/model.py", line 155, in from_formula

missing=missing)

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/statsmodels/formula/formulatools.py", line 65, in handle_formula_data

NA_action=na_action)

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/patsy/highlevel.py", line 310, in dmatrices

NA_action, return_type)

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/patsy/highlevel.py", line 165, in _do_highlevel_design

NA_action)

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/patsy/highlevel.py", line 70, in _try_incr_builders

NA_action)

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/patsy/build.py", line 721, in design_matrix_builders

cat_levels_contrasts)

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/patsy/build.py", line 628, in _make_subterm_infos

default=Treatment)

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/patsy/contrasts.py", line 602, in code_contrast_matrix

return contrast.code_without_intercept(levels)

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/patsy/contrasts.py", line 183, in code_without_intercept

eye = np.eye(len(levels) - 1)

File "/Users/tyler2k/anaconda3/lib/python3.7/site-packages/numpy/lib/twodim_base.py", line 201, in eye

m = zeros((N, M), dtype=dtype, order=order)

ValueError: negative dimensions are not allowed

ANOVA with moderator summary:

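The moderator models failed with ValueError: negative dimensions are not allowed. patsy raises this error when C(H1SU2) has no levels, which means the subset passed to smf.ols contained no usable (non-missing) rows. A likely cause is that H1SU5 is compared with the strings '0' and '1' rather than the numbers 0 and 1, so sub2 and sub3 come out empty. Because of this, I have attached my ANOVA results without a moderator.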
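The likely cause can be reproduced on a few rows. The sketch below uses invented toy values standing in for addhealth_pds.csv (assuming, as read_csv typically does for purely numeric columns, that H1SU5 has an integer dtype): comparing an integer column with the string '0' matches nothing, while comparing with the number 0 keeps the intended rows.

```python
import pandas as pd

# Hypothetical toy rows standing in for addhealth_pds.csv; the values are invented
toy = pd.DataFrame({
    "H1SU5": [0, 1, 0, 1, 0],
    "H1SU2": [0, 1, 2, 0, 1],
})

# Integer column compared with a string: no row matches, so the subset is empty
# and C(H1SU2) would have no levels when the model is built
string_subset = toy[toy["H1SU5"] == "0"]

# Integer column compared with the number 0: the intended rows are kept
numeric_subset = toy[toy["H1SU5"] == 0]

print(toy["H1SU5"].dtype, len(string_subset), len(numeric_subset))
```

Checking len() of each subset before fitting is a cheap way to catch this before patsy reports the less obvious dimension error.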

2.1 Chi-square (CODE)

# libraries

import pandas

import numpy

import scipy.stats

import seaborn

import matplotlib.pyplot as plt

data = pandas.read_csv('addhealth_pds.csv', low_memory=False)

#print ('Converting variables to numeric')

data['H1SU1'] = pandas.to_numeric(data['H1SU1'], errors='coerce')

data['H1NB6'] = pandas.to_numeric(data['H1NB6'], errors='coerce')

#print ('Coding missing values’)

data['H1SU1'] = data['H1SU1'].replace(6, numpy.nan)

data['H1SU1'] = data['H1SU1'].replace(9, numpy.nan)

data['H1SU1'] = data['H1SU1'].replace(8, numpy.nan)

data['H1NB6'] = data['H1NB6'].replace(6, numpy.nan)

data['H1NB6'] = data['H1NB6'].replace(8, numpy.nan)

#print ('contingency table of observed counts’)

ct1=pandas.crosstab(data['H1SU1'], data['H1NB6'])

print (ct1)

print ('column percentages')

colsum=ct1.sum(axis=0)

colpct=ct1/colsum

print(colpct)

#print ('chi-square value, p value, expected counts’)

cs1= scipy.stats.chi2_contingency(ct1)

print (cs1)

#print ('set variable types’)

data['H1NB6'] = data['H1NB6'].astype('category')

data['H1SU1'] = pandas.to_numeric(data['H1SU1'], errors='coerce')

seaborn.factorplot(x='H1NB6', y='H1SU1', data=data, kind='bar', ci=None)

plt.xlabel('Happiness Level Living in Neighbourhood 5=Very Happy')

plt.ylabel('Considered Suicide in Past 12 Months')

sub1=data[(data['H1NB5']== 0)]

sub2=data[(data['H1NB5']== 1)]

print ('association between Suicidal considerations and respondents level of happiness in their neighbourhood for those who feel UNSAFE in it')

# contigency table of observed counts

ct2=pandas.crosstab(sub1['H1NB6'], sub1['H1SU1'])

print (ct2)

print ('column percentages')

colsum=ct1.sum(axis=0)

colpct=ct1/colsum

print(colpct)

print ('chi-square value, p value, expected counts')

cs2= scipy.stats.chi2_contingency(ct2)

print (cs2)

print ('association between Suicidal considerations and respondents level of happiness in their neighbourhood for those who feel SAFE in it')

# contigency table of observed counts

ct3=pandas.crosstab(sub2['H1NB6'], sub2['H1SU1'])

print (ct3)

print ('column percentages')

colsum=ct1.sum(axis=0)

colpct=ct1/colsum

print(colpct)

print ('chi-square value, p value, expected counts')

cs3= scipy.stats.chi2_contingency(ct3)

print (cs3)

print ('association between Suicidal considerations and respondents level of happiness in their neighbourhood for those who feel UNSAFE in it')

#print ('set variable types’)

data['H1NB6'] = data['H1NB6'].astype('category')

data['H1SU1'] = pandas.to_numeric(data['H1SU1'], errors='coerce')

seaborn.factorplot(x='H1NB6', y='H1SU1', data=sub1, kind='point', ci=None)

plt.xlabel('Happiness Level Living in Neighbourhood 5=Very Happy')

plt.ylabel('Considered Suicide in Past 12 Months')

print ('association between Suicidal considerations and respondents level of happiness in their neighbourhood for those who feel SAFE in it')

seaborn.factorplot(x='H1NB6', y='H1SU1', data=sub2, kind='point', ci=None)

plt.xlabel('Happiness Level Living in Neighbourhood 5=Very Happy')

plt.ylabel('Considered Suicide in Past 12 Months')

2.2. Results

H1NB6  1.0  2.0   3.0   4.0   5.0
H1SU1
0.0    138  287  1142  2016  2023
1.0     55   74   227   284   180

column percentages

H1NB6       1.0       2.0       3.0       4.0       5.0
H1SU1
0.0    0.715026  0.795014  0.834186  0.876522  0.918293
1.0    0.284974  0.204986  0.165814  0.123478  0.081707

(122.34711107270866, 1.6837131211401846e-25, 4, array([[ 168.37192655,  314.93401805, 1194.30656707, 2006.50482415, 1921.88266418],
       [  24.62807345,   46.06598195,  174.69343293,  293.49517585,  281.11733582]]))
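The expected counts in this output are what independence between the two variables would predict: each cell's row total times its column total, divided by the grand total. A minimal NumPy sketch, using the observed counts printed above, recomputes them together with the chi-square statistic and the degrees of freedom, (rows - 1) x (columns - 1) = 4. SciPy's `chi2_contingency` only applies a continuity correction when there is a single degree of freedom, so no correction is involved here; `chi_square_independence` is an illustrative name, not a library function.

```python
import numpy as np

# Observed counts from the crosstab above: rows H1SU1 (0, 1), columns H1NB6 (1-5)
observed = np.array([
    [138, 287, 1142, 2016, 2023],
    [55, 74, 227, 284, 180],
])


def chi_square_independence(table):
    """Return (statistic, degrees of freedom, expected counts) for a 2-D table."""
    table = np.asarray(table, dtype=float)
    row_totals = table.sum(axis=1, keepdims=True)  # shape (rows, 1)
    col_totals = table.sum(axis=0, keepdims=True)  # shape (1, cols)
    expected = row_totals * col_totals / table.sum()
    statistic = ((table - expected) ** 2 / expected).sum()
    dof = (table.shape[0] - 1) * (table.shape[1] - 1)
    return statistic, dof, expected


stat, dof, expected = chi_square_independence(observed)
print(round(stat, 3), dof)
print(expected.round(2))
```

For example, the first expected count is 5606 x 193 / 6426, about 168.37, matching the first entry of the array in the output.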

/Users/tyler2k/anaconda3/lib/python3.7/site-packages/seaborn/categorical.py:3666: UserWarning: The `factorplot` function has been renamed to `catplot`. The original name will be removed in a future release. Please update your code. Note that the default `kind` in `factorplot` (`'point'`) has changed `'strip'` in `catplot`.

warnings.warn(msg)

association between Suicidal considerations and respondents level of happiness in their neighbourhood for those who feel UNSAFE in it

H1SU1  0.0  1.0
H1NB6
1.0     71   32
2.0    103   29
3.0    192   48
4.0    110   22
5.0     49    8

column percentages

H1NB6       1.0       2.0       3.0       4.0       5.0
H1SU1
0.0    0.715026  0.795014  0.834186  0.876522  0.918293
1.0    0.284974  0.204986  0.165814  0.123478  0.081707

chi-square value, p value, expected counts

(9.694224963778023, 0.04590576662195307, 4, array([[ 81.43825301,  21.56174699],
       [104.36746988,  27.63253012],
       [189.75903614,  50.24096386],
       [104.36746988,  27.63253012],
       [ 45.06777108,  11.93222892]]))

association between Suicidal considerations and respondents level of happiness in their neighbourhood for those who feel SAFE in it

H1SU1   0.0  1.0
H1NB6
1.0      67   23
2.0     184   45
3.0     947  179
4.0    1905  262
5.0    1973  171

column percentages

H1NB6       1.0       2.0       3.0       4.0       5.0
H1SU1
0.0    0.715026  0.795014  0.834186  0.876522  0.918293
1.0    0.284974  0.204986  0.165814  0.123478  0.081707

chi-square value, p value, expected counts

(78.30692095511722, 3.977325608451433e-16, 4, array([[  79.3676164 ,   10.6323836 ],
       [ 201.94649062,   27.05350938],
       [ 992.97706741,  133.02293259],
       [1910.99583044,  256.00416956],
       [1890.71299514,  253.28700486]]))

association between Suicidal considerations and respondents level of happiness in their neighbourhood for those who feel UNSAFE in it

/Users/tyler2k/anaconda3/lib/python3.7/site-packages/seaborn/categorical.py:3666: UserWarning: The `factorplot` function has been renamed to `catplot`. The original name will be removed in a future release. Please update your code. Note that the default `kind` in `factorplot` (`'point'`) has changed `'strip'` in `catplot`.

warnings.warn(msg)

/Users/tyler2k/anaconda3/lib/python3.7/site-packages/seaborn/categorical.py:3666: UserWarning: The `factorplot` function has been renamed to `catplot`. The original name will be removed in a future release. Please update your code. Note that the default `kind` in `factorplot` (`'point'`) has changed `'strip'` in `catplot`.

warnings.warn(msg)

association between Suicidal considerations and respondents level of happiness in their neighbourhood for those who feel SAFE in it
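The column percentages printed for both subgroups are identical to the full-sample table because the script that produced this output divided `ct1`, not `ct2` or `ct3`, by its column sums. A short pandas sketch, using the subgroup counts printed above, recomputes the share of respondents who considered suicide at each happiness level; `share_considering` is an illustrative helper name.

```python
import pandas as pd

HAPPINESS_LEVELS = pd.Index([1.0, 2.0, 3.0, 4.0, 5.0], name="H1NB6")

# Observed counts copied from the printed subgroup crosstabs
# (rows: H1NB6 happiness level; columns: H1SU1, 1.0 = considered suicide)
unsafe = pd.DataFrame({0.0: [71, 103, 192, 110, 49], 1.0: [32, 29, 48, 22, 8]},
                      index=HAPPINESS_LEVELS)
safe = pd.DataFrame({0.0: [67, 184, 947, 1905, 1973], 1.0: [23, 45, 179, 262, 171]},
                    index=HAPPINESS_LEVELS)


def share_considering(ct: pd.DataFrame) -> pd.Series:
    """Proportion with H1SU1 == 1 within each happiness level (row-wise share)."""
    return ct[1.0] / ct.sum(axis=1)


comparison = pd.DataFrame({"UNSAFE": share_considering(unsafe),
                           "SAFE": share_considering(safe)})
print(comparison.round(3))
```

With these counts the UNSAFE share is higher than the SAFE share at every happiness level, for example 0.311 versus 0.256 at level 1 and 0.140 versus 0.080 at level 5.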

2.3 Chi-Square Summary

For my chi-square test I analyzed the association between respondents' level of happiness living in their neighbourhood (H1NB6) and whether they had considered suicide in the past 12 months (H1SU1), with whether they feel safe or unsafe in their neighbourhood (H1NB5) as the moderating variable.

For respondents who feel safe in their neighbourhood, the association between suicidal considerations and happiness in the neighbourhood is strongly significant (chi-square = 78.31, df = 4, p = 3.98e-16): the p-value is far below the 0.05 threshold, so I reject the null hypothesis of no association. For respondents who feel unsafe, the p-value (chi-square = 9.69, df = 4, p = 0.046) is also below 0.05, but only just, so this association is much weaker and should be interpreted with caution.

However, the graphs also show that, at every level of neighbourhood happiness, respondents who feel unsafe in their neighbourhood are more likely to have considered suicide than those who feel safe, regardless of how happy they feel in their neighbourhood.

3.1 Pearson Correlation code

import pandas
import numpy
import seaborn
import scipy.stats
import matplotlib.pyplot as plt

data = pandas.read_csv('addhealth_pds.csv', low_memory=False)

# Convert variables to numeric (convert_objects was deprecated and later removed
# from pandas; to_numeric with errors='coerce' turns blanks into NaN)
data['H1SU2'] = pandas.to_numeric(data['H1SU2'], errors='coerce')
data['H1WP8'] = pandas.to_numeric(data['H1WP8'], errors='coerce')
data['H1NB6'] = pandas.to_numeric(data['H1NB6'], errors='coerce')

# Code missing values (H1NB6 uses the same codes as in the chi-square script)
data['H1SU2'] = data['H1SU2'].replace([6, 7, 8], numpy.nan)
data['H1WP8'] = data['H1WP8'].replace([96, 97, 98], numpy.nan)
data['H1NB6'] = data['H1NB6'].replace([6, 8], numpy.nan)

# Drop rows missing any of the three analysis variables (plain data.dropna() drops rows
# with a NaN in ANY column); .copy() avoids the SettingWithCopyWarning when adding Nhappy
data_clean = data.dropna(subset=['H1SU2', 'H1WP8', 'H1NB6']).copy()

print(scipy.stats.pearsonr(data_clean['H1SU2'], data_clean['H1WP8']))

# Group neighbourhood happiness: 1 = unhappy (1-2), 2 = somewhat happy (3), 3 = happy (4-5)
def Nhappy(row):
    if row['H1NB6'] <= 2:
        return 1
    elif row['H1NB6'] <= 3:
        return 2
    else:
        return 3

data_clean['Nhappy'] = data_clean.apply(Nhappy, axis=1)

chk1 = data_clean['Nhappy'].value_counts(sort=False, dropna=False)
print(chk1)

sub1 = data_clean[data_clean['Nhappy'] == 1]
sub2 = data_clean[data_clean['Nhappy'] == 2]
sub3 = data_clean[data_clean['Nhappy'] == 3]

print('association between number of suicide attempts and parents attending supper for those who feel UNHAPPY in their neighbourhoods')
print(scipy.stats.pearsonr(sub1['H1SU2'], sub1['H1WP8']))

print('association between number of suicide attempts and parents attending supper for those who feel SOMEWHAT HAPPY in their neighbourhoods')
print(scipy.stats.pearsonr(sub2['H1SU2'], sub2['H1WP8']))

print('association between number of suicide attempts and parents attending supper for those who feel HAPPY in their neighbourhoods')
print(scipy.stats.pearsonr(sub3['H1SU2'], sub3['H1WP8']))
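The row-by-row `Nhappy` function can also be written as a single vectorized `pandas.cut` call with bin edges at 2 and 3. This is a sketch of an alternative, not what produced the results below; `bin_neighbourhood_happiness` is a hypothetical name, and the mapping assumes H1NB6 holds whole numbers from 1 to 5 with missing codes already set to NaN.

```python
import numpy as np
import pandas as pd


def bin_neighbourhood_happiness(h1nb6: pd.Series) -> pd.Series:
    """Map H1NB6 (1-5) to 1 = unhappy (1-2), 2 = somewhat happy (3), 3 = happy (4-5).

    Intervals are right-closed: (-inf, 2], (2, 3], (3, inf). NaN stays NaN.
    """
    return pd.cut(h1nb6, bins=[-np.inf, 2, 3, np.inf], labels=[1, 2, 3])


example = pd.Series([1, 2, 3, 4, 5, np.nan])
print(bin_neighbourhood_happiness(example).tolist())
```

In the script this would read `data_clean['Nhappy'] = bin_neighbourhood_happiness(data_clean['H1NB6'])`. The result is a categorical column, so `value_counts` and comparisons such as `== 1` still work.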

3.2. Results

runfile('/Users/tyler2k/Downloads/Data Analysis Course/Course 2 - Week 3 - Pearson Correlation', wdir='/Users/tyler2k/Downloads/Data Analysis Course')

/Users/tyler2k/Downloads/Data Analysis Course/Course 2 - Week 3 - Pearson Correlation:18: FutureWarning: convert_objects is deprecated. To re-infer data dtypes for object columns, use Series.infer_objects()

For all other conversions use the data-type specific converters pd.to_datetime, pd.to_timedelta and pd.to_numeric.

data["H1SU2"] = data["H1SU2"].convert_objects(convert_numeric=True)

/Users/tyler2k/Downloads/Data Analysis Course/Course 2 - Week 3 - Pearson Correlation:19: FutureWarning: convert_objects is deprecated. To re-infer data dtypes for object columns, use Series.infer_objects()

For all other conversions use the data-type specific converters pd.to_datetime, pd.to_timedelta and pd.to_numeric.

data["H1WP8"] = data["H1WP8"].convert_objects(convert_numeric=True)

(-0.040373727372780416, 0.25432562116292673)

1 124

2 221

3 454

Name: Nhappy, dtype: int64

association between number of suicide attempts and parents attending supper for those who feel UNHAPPY in their neighbourhoods

(-0.09190790889552906, 0.30999684453361553)

association between number of suicide attempts and parents attending supper for those who feel SOMEWHAT HAPPY in their neighbourhoods

(-0.07305378458717826, 0.27955952249152094)

association between number of suicide attempts and parents attending supper for those who feel HAPPY in their neighbourhoods

(0.009662667237741849, 0.8373203027381138)

/Users/tyler2k/Downloads/Data Analysis Course/Course 2 - Week 3 - Pearson Correlation:43: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: http://pandas.pydata.org/pandas-docs/stable/indexing.html#indexing-view-versus-copy

3.3. Summary

For my Pearson correlation test I analyzed the association between the number of suicide attempts (H1SU2) and the number of suppers a week at which respondents' parents are present (H1WP8), with how happy respondents feel in their neighbourhood as the moderating variable, grouped into three categories (unhappy, somewhat happy, and happy). I chose the suppers variable because it could indicate family cohesiveness and how much time parents are able to devote to their children.

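None of the correlations is statistically significant. Across all respondents, r = -0.04 (p = 0.25). For those who feel unhappy (r = -0.09, p = 0.31) and somewhat happy (r = -0.07, p = 0.28) in their neighbourhoods there is a weak negative correlation, and for those who feel happy there is essentially none (r = 0.01, p = 0.84). Since every p-value is well above 0.05, neighbourhood happiness does not appear to moderate the relationship between parents attending supper and suicide attempts in this sample.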
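Squaring each r gives the proportion of variance in suicide attempts shared with the supper variable, which makes the size of these correlations easier to judge. A small sketch using the r and p values printed in 3.2; the 0.05 threshold is the usual convention, and `summarize_correlations` is an illustrative name.

```python
# (r, p) pairs printed by scipy.stats.pearsonr in 3.2
CORRELATIONS = {
    "all respondents": (-0.040373727372780416, 0.25432562116292673),
    "unhappy": (-0.09190790889552906, 0.30999684453361553),
    "somewhat happy": (-0.07305378458717826, 0.27955952249152094),
    "happy": (0.009662667237741849, 0.8373203027381138),
}


def summarize_correlations(correlations, alpha=0.05):
    """Return {group: (r_squared, is_significant)} for each (r, p) pair."""
    return {group: (r ** 2, p < alpha) for group, (r, p) in correlations.items()}


for group, (r_squared, significant) in summarize_correlations(CORRELATIONS).items():
    label = "significant" if significant else "not significant"
    print(f"{group}: r^2 = {r_squared:.4f} ({label})")
```

Every r^2 is below 0.01, so supper attendance accounts for less than 1% of the variance in suicide attempts in each group.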


Hard Candy (~Misfits AU~)

Chapter 24: Ghost of Christmas Future

Warning: Strong language, implied smut, mention of death and pregnancy, alcohol, if you squint there’s some angst.

(Hard Candy Masterlist)

- Good mornin', roommate - Nathan didn't even have to turn around to know I was there.

- More like good afternoon - I yawned as he turned around and put a grilled cheese in front of me - but after last night I think we both needed some rest, huh?

- Five times is a new record... At least for me - he chuckled.

- Are you meeting us at the bar after work? - I took a bite.

- Yeah, I'll definitely need a drink after that bullshit.

- Well, if it makes you feel any better you look sexy as Santa...