#grpc


Text

just typed into google "java grpc client screaming" and had a good laugh

7 notes


Text

Understanding gRPC: A Complete Guide for Modern Developers

Have you been reading about gRPC and wondering what it's all about? Believe me, I was in the same boat not too long ago. I didn't even know exactly what gRPC meant.

If you're curious about how to inspect gRPC traffic, check out this guide on capturing gRPC traffic going out from a server.

In this blog, I will walk you through everything I have learned about this game-changing technology and how it is reshaping distributed systems.

What is gRPC?

Imagine this: you are building a microservices architecture, and you want your services to talk to each other efficiently. Traditional APIs work, but what if I told you there is something faster, more secure, and more developer-friendly? That's gRPC!

gRPC (a recursive acronym for "gRPC Remote Procedure Calls"; the framework was originally developed at Google) is a high-performance, open-source framework that lets applications call each other as if they were calling local functions. It is like having a direct route between services that speaks multiple languages fluently.

The beauty of gRPC lies in its simplicity from a developer's point of view. Instead of hand-crafting HTTP requests, you call methods that feel like methods on a local object. Under the hood, though, it does some seriously impressive work to make that happen smoothly.

Key Components of gRPC

Let me walk you through the key components of gRPC:

Protocol Buffers (Protobuf): This is the secret sauce. Think of it as a super-efficient way to define your data structures and service contracts, like a universal translator that every programming language understands.

gRPC Runtime: This handles the heavy lifting: serialization, network communication, error handling, and more. You define your services, and the runtime takes care of making them work across the network.

Code Generation: Here is where it gets cool. From your Protocol Buffer definitions, gRPC generates client and server code in your language of choice. No more writing HTTP client boilerplate! Yes, you heard that right.

Channel Management: Channels manage connections intelligently, handling things like load balancing, retries, and timeouts (deadlines) once you configure them.

Implementing gRPC: Best Practices

After reading about and working with gRPC for a while now, I have picked up some best practices that will save you headaches and wasted time.

Start with your .proto files and get them right. They are your contracts, and changing them later can be tricky. Version your services from day one, even if you think you don't need to. Trust me on this one.

Use proper error handling. gRPC has a rich error model, so don't just return a generic error; return the status code that matches the failure. Your teammates will thank you!
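To make the "rich error model" point concrete: gRPC defines a fixed set of status codes (for example INVALID_ARGUMENT = 3, NOT_FOUND = 5, UNAVAILABLE = 14), and a handler should return the one that matches the failure. In grpc-go you would normally write `status.Error(codes.NotFound, "...")` using the `google.golang.org/grpc/status` and `codes` packages and read it back with `status.Code(err)`. The sketch below avoids that dependency: `StatusError`, `CodeOf`, and `getUser` are hypothetical stand-ins that only illustrate the idea.

```go
package main

import (
	"errors"
	"fmt"
)

// Code mirrors a few of the canonical gRPC status codes (numeric values from the gRPC spec).
type Code int

const (
	OK              Code = 0
	Unknown         Code = 2
	InvalidArgument Code = 3
	NotFound        Code = 5
	Unavailable     Code = 14
)

// StatusError is a hypothetical stand-in for a gRPC status: a code plus a message.
type StatusError struct {
	Code    Code
	Message string
}

func (e *StatusError) Error() string {
	return fmt.Sprintf("rpc error: code = %d desc = %s", e.Code, e.Message)
}

// CodeOf returns OK for nil, the code of a (possibly wrapped) *StatusError,
// and Unknown for any other error, similar in spirit to grpc-go's status.Code.
func CodeOf(err error) Code {
	if err == nil {
		return OK
	}
	var se *StatusError
	if errors.As(err, &se) {
		return se.Code
	}
	return Unknown
}

// getUser is a hypothetical handler that reports *why* it failed via the code.
func getUser(id int32, users map[int32]string) (string, error) {
	if id <= 0 {
		return "", &StatusError{InvalidArgument, "id must be positive"}
	}
	name, ok := users[id]
	if !ok {
		return "", &StatusError{NotFound, fmt.Sprintf("user %d not found", id)}
	}
	return name, nil
}

func main() {
	_, err := getUser(42, map[int32]string{1: "Ada"})
	fmt.Println(CodeOf(err), err)
}
```

A caller can now branch on the code (retry on UNAVAILABLE, surface NOT_FOUND to the user) instead of parsing error strings.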

Implement proper logging and monitoring. gRPC calls can fail in many different ways, and you want to know what is happening. Interceptors are your best friend here.
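Here is a minimal sketch of a logging interceptor, assuming the shapes of grpc-go's `UnaryHandler`, `UnaryServerInfo`, and `UnaryServerInterceptor` types. They are re-declared locally (with `UnaryServerInfo` trimmed to `FullMethod`) so the example runs with the standard library only. In a real server you would pass the function to `grpc.UnaryInterceptor(...)` or `grpc.ChainUnaryInterceptor(...)` when calling `grpc.NewServer`. The method name and handler below are hypothetical.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// Local copies of the grpc-go handler/interceptor shapes (simplified).
type UnaryHandler func(ctx context.Context, req any) (any, error)

type UnaryServerInfo struct {
	FullMethod string // e.g. "/user.UserService/GetUser"
}

type UnaryServerInterceptor func(ctx context.Context, req any, info *UnaryServerInfo, handler UnaryHandler) (any, error)

// LoggingInterceptor logs the method, latency and error of every call via logf,
// and returns the handler's response and error unchanged.
func LoggingInterceptor(logf func(format string, args ...any)) UnaryServerInterceptor {
	return func(ctx context.Context, req any, info *UnaryServerInfo, handler UnaryHandler) (any, error) {
		start := time.Now()
		resp, err := handler(ctx, req)
		logf("method=%s duration=%s err=%v", info.FullMethod, time.Since(start), err)
		return resp, err
	}
}

// exampleCall runs a hypothetical GetUser handler through the interceptor and
// returns the response, the captured log lines and the error.
func exampleCall() (any, []string, error) {
	var logs []string
	logf := func(format string, args ...any) { logs = append(logs, fmt.Sprintf(format, args...)) }
	getUser := func(ctx context.Context, req any) (any, error) { return "Ada", nil }

	intercept := LoggingInterceptor(logf)
	resp, err := intercept(context.Background(), 1, &UnaryServerInfo{FullMethod: "/user.UserService/GetUser"}, getUser)
	return resp, logs, err
}

func main() {
	resp, logs, err := exampleCall()
	fmt.Println(resp, err)
	for _, l := range logs {
		fmt.Println(l)
	}
}
```

Because every call passes through the same function, this is also the natural place for metrics, tracing, or authentication checks.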

Lastly, choose your deployment strategy early. gRPC works great in Kubernetes, but if you are dealing with browser clients, you will need gRPC-Web and a proxy.

What Makes gRPC So Popular?

You know what's more interesting about gRPC? It has gone from being a Google internal tool to one of the most widely adopted technologies in the cloud-native world, and there is a reason for that.

gRPC is a CNCF Incubation Project

Yes, you read that correctly! The Cloud Native Computing Foundation doesn't accept just any project, so this is a big deal. As an incubating project, gRPC has proven itself in production, has a healthy ecosystem, and is maintained by an active community.

It is not just a Google pet project anymore; it has become a cornerstone of modern cloud-native architecture.

Why gRPC Has Taken Off

1. Performance

Let's talk about numbers, because numbers are what most of us believe. gRPC is generally faster than traditional REST APIs; in the real-world applications I have seen, the improvements were in the range of 5 to 10 times, though your results will depend on your workload. The combination of HTTP/2, binary serialization, and efficient compression makes a big difference, especially when you are dealing with large payloads.

2. Language Support

This is where gRPC really outshines the alternatives. You can have a Python service talking to a Java service, which calls a Go service, all seamlessly. The generated code feels native in each language.

I have worked with a team that had microservices in different languages, and trust me, gRPC made it feel like we were working with a monolith in terms of type safety and ease of integration.

3. Streaming

Real-time features are painful to implement. With gRPC streaming, you can build real-time dashboards, chat applications, or live data feeds with little code. Streaming can also be bidirectional, so clients and servers can both send data as needed.

4. Interoperability

The cross-platform nature of gRPC is incredible. Mobile apps can talk to backend services using the same efficient protocol, IoT devices can communicate with cloud services, and web apps (through gRPC-Web) can use the same contracts as server-to-server communication.

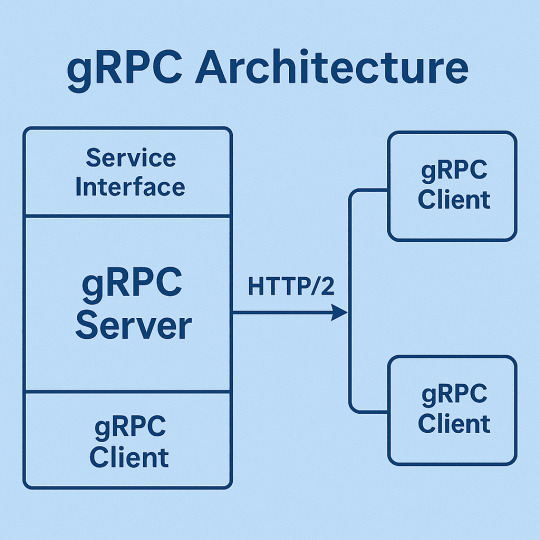

gRPC Architecture

This is one of the most important topics to understand: how gRPC works and what its architecture looks like.

The architecture is pretty simple. You have a gRPC server that implements your service interface, and clients that call methods on that service as if they were local functions. The gRPC runtime handles serialization, network transport, and deserialization transparently.

What's interesting is how it uses HTTP/2 as the transport layer. This gives you all the benefits of HTTP/2 (multiplexing, flow control, header compression) while keeping the simplicity of procedure calls. Your client and server code doesn't need to deal with HTTP at all; it just sees method calls and responses.
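To see the "call a remote method as if it were local" idea in runnable form without the gRPC toolchain, the sketch below uses Go's standard `net/rpc` package over an in-memory `net.Pipe`. This is not gRPC: `net/rpc` (a frozen standard-library package) uses gob encoding and its own framing instead of Protocol Buffers over HTTP/2, and the `UserService` types are hypothetical. The shape is the same, though: a server registers an implementation, and the client makes what reads like a method call while the runtime handles serialization and transport.

```go
package main

import (
	"fmt"
	"net"
	"net/rpc"
)

// GetUserArgs and User are hypothetical request/response types.
type GetUserArgs struct{ ID int32 }

type User struct {
	ID   int32
	Name string
}

// UserService is the server-side implementation. net/rpc requires methods of
// the form func (t *T) Method(args A, reply *R) error.
type UserService struct{ users map[int32]string }

func (s *UserService) GetUser(args GetUserArgs, reply *User) error {
	name, ok := s.users[args.ID]
	if !ok {
		return fmt.Errorf("user %d not found", args.ID)
	}
	*reply = User{ID: args.ID, Name: name}
	return nil
}

// fetchUser wires a client and server together over an in-memory pipe and
// makes one remote call that reads like a local method call.
func fetchUser(id int32) (User, error) {
	srv := rpc.NewServer()
	if err := srv.Register(&UserService{users: map[int32]string{123: "John Doe"}}); err != nil {
		return User{}, err
	}
	clientConn, serverConn := net.Pipe()
	go srv.ServeConn(serverConn) // the "server runtime": decode, dispatch, encode

	client := rpc.NewClient(clientConn) // the "client stub"
	defer client.Close()

	var u User
	err := client.Call("UserService.GetUser", GetUserArgs{ID: id}, &u)
	return u, err
}

func main() {
	u, err := fetchUser(123)
	fmt.Println(u, err)
}
```

With gRPC, the `client.Call("UserService.GetUser", ...)` string lookup is replaced by a generated, typed `client.GetUser(ctx, req)` method.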

Why is gRPC So Fast?

1. HTTP/2

This is huge. REST APIs typically run on HTTP/1.1, where each connection handles one request at a time, but gRPC leverages HTTP/2 multiplexing: many requests can be in flight simultaneously over a single connection. No more connection-pooling headaches or HTTP-level head-of-line blocking.
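The multiplexing claim can be checked with Go's standard library alone. The sketch below is plain HTTP/2 from `net/http` (not gRPC, which layers its own message framing on top): it starts a local TLS test server with `EnableHTTP2`, sends one warm-up request, then several concurrent requests, and counts how many distinct client connections (`RemoteAddr` values) the server saw. The function name and request count are illustrative assumptions.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"sync"
)

// concurrentOverHTTP2 sends one warm-up request and then n concurrent requests
// to a local HTTP/2 test server. It returns the negotiated protocol and the
// number of distinct client connections the server observed.
func concurrentOverHTTP2(n int) (string, int, error) {
	var mu sync.Mutex
	remotes := map[string]bool{}

	ts := httptest.NewUnstartedServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		mu.Lock()
		remotes[r.RemoteAddr] = true // same value for every stream on one connection
		mu.Unlock()
		io.WriteString(w, "ok")
	}))
	ts.EnableHTTP2 = true
	ts.StartTLS()
	defer ts.Close()
	client := ts.Client() // trusts the test certificate and negotiates h2 via ALPN

	// The warm-up request opens the single HTTP/2 connection.
	resp, err := client.Get(ts.URL)
	if err != nil {
		return "", 0, err
	}
	io.Copy(io.Discard, resp.Body)
	resp.Body.Close()

	var wg sync.WaitGroup
	errs := make(chan error, n)
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			r, err := client.Get(ts.URL)
			if err != nil {
				errs <- err
				return
			}
			io.Copy(io.Discard, r.Body)
			r.Body.Close()
		}()
	}
	wg.Wait()
	close(errs)
	if err, ok := <-errs; ok {
		return "", 0, err
	}

	mu.Lock()
	defer mu.Unlock()
	return resp.Proto, len(remotes), nil
}

func main() {
	proto, conns, err := concurrentOverHTTP2(5)
	fmt.Println(proto, conns, err)
}
```

All requests arrive as separate streams on one TCP connection, which is the property gRPC relies on for concurrent calls.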

2. Binary serialisation

JSON is my best friend, and I bet yours too; it is great for humans but not for machines. Protocol Buffers produce much smaller payloads: I have seen 60 to 80% size reductions compared to equivalent JSON. Smaller payloads mean faster network transmission and less bandwidth usage.
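To make the size difference concrete, the sketch below hand-encodes the `User` message from the .proto example in the Protobuf binary wire format (each field is a varint key `(field_number << 3) | wire_type` followed by its value) and compares it with `encoding/json`. The sample values are made up, the exact savings depend on your data, and real code would use generated types with `proto.Marshal` rather than hand-rolled encoding.

```go
package main

import (
	"encoding/binary"
	"encoding/json"
	"fmt"
)

// User mirrors the User message from the .proto example (fields 1-4).
type User struct {
	ID        int32  `json:"id"`
	Name      string `json:"name"`
	Email     string `json:"email"`
	CreatedAt int64  `json:"created_at"`
}

// appendVarint appends v as a base-128 varint, the integer encoding Protobuf uses.
func appendVarint(b []byte, v uint64) []byte {
	var buf [binary.MaxVarintLen64]byte
	n := binary.PutUvarint(buf[:], v)
	return append(b, buf[:n]...)
}

// appendKey appends a field key: (field_number << 3) | wire_type.
func appendKey(b []byte, field, wireType uint64) []byte {
	return appendVarint(b, field<<3|wireType)
}

// encodeUser hand-encodes u in the Protobuf wire format. proto3 skips fields
// that hold their default value, so this does too.
func encodeUser(u User) []byte {
	var b []byte
	if u.ID != 0 {
		b = appendKey(b, 1, 0) // wire type 0: varint
		b = appendVarint(b, uint64(u.ID))
	}
	for _, f := range []struct {
		num uint64
		s   string
	}{{2, u.Name}, {3, u.Email}} {
		if f.s != "" {
			b = appendKey(b, f.num, 2) // wire type 2: length-delimited
			b = appendVarint(b, uint64(len(f.s)))
			b = append(b, f.s...)
		}
	}
	if u.CreatedAt != 0 {
		b = appendKey(b, 4, 0)
		b = appendVarint(b, uint64(u.CreatedAt))
	}
	return b
}

// sizes returns the encoded size of u as Protobuf and as JSON.
func sizes(u User) (protoLen, jsonLen int, err error) {
	js, err := json.Marshal(u)
	if err != nil {
		return 0, 0, err
	}
	return len(encodeUser(u)), len(js), nil
}

func main() {
	u := User{ID: 123, Name: "John Doe", Email: "john@example.com", CreatedAt: 1700000000}
	p, j, err := sizes(u)
	fmt.Printf("protobuf: %d bytes, JSON: %d bytes, err: %v\n", p, j, err)
}
```

For this sample user it reports 36 bytes of Protobuf versus 79 bytes of JSON, mostly because field names never go on the wire, only small numeric keys.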

3. Compression

gRPC also supports message compression (gzip, for example), which you enable per call or per server rather than getting it automatically. Combined with the compact binary format, this makes data transfer very efficient, which matters most in mobile applications or whenever bandwidth is limited.
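On the wire, every gRPC message travels as a length-prefixed frame: a 1-byte compressed flag, a 4-byte big-endian length, then the message bytes, as described in the gRPC-over-HTTP/2 protocol spec. The sketch below builds and parses such frames and gzips the payload when asked, roughly what happens when a call uses the `gzip` encoding. The `frame`/`unframe` helpers are hypothetical; real gRPC libraries do this for you.

```go
package main

import (
	"bytes"
	"compress/gzip"
	"encoding/binary"
	"fmt"
	"io"
)

// frame wraps one serialized message in gRPC's length-prefixed format:
// a 1-byte compressed flag, a 4-byte big-endian length, then the payload.
// With compress=true the payload is gzipped first and the flag is set to 1.
func frame(msg []byte, compress bool) ([]byte, error) {
	flag := byte(0)
	if compress {
		var buf bytes.Buffer
		zw := gzip.NewWriter(&buf)
		if _, err := zw.Write(msg); err != nil {
			return nil, err
		}
		if err := zw.Close(); err != nil {
			return nil, err
		}
		msg, flag = buf.Bytes(), 1
	}
	out := make([]byte, 5, 5+len(msg))
	out[0] = flag
	binary.BigEndian.PutUint32(out[1:5], uint32(len(msg)))
	return append(out, msg...), nil
}

// unframe parses one frame produced by frame and decompresses it if needed.
func unframe(b []byte) ([]byte, error) {
	if len(b) < 5 {
		return nil, fmt.Errorf("short frame: %d bytes", len(b))
	}
	n := binary.BigEndian.Uint32(b[1:5])
	if uint32(len(b)-5) != n {
		return nil, fmt.Errorf("length prefix %d does not match payload size %d", n, len(b)-5)
	}
	payload := b[5:]
	if b[0] == 0 {
		return payload, nil
	}
	zr, err := gzip.NewReader(bytes.NewReader(payload))
	if err != nil {
		return nil, err
	}
	defer zr.Close()
	return io.ReadAll(zr)
}

func main() {
	msg := bytes.Repeat([]byte("user-record;"), 100) // 1200 bytes of repetitive data
	plain, _ := frame(msg, false)
	zipped, _ := frame(msg, true)
	back, err := unframe(zipped)
	fmt.Println(len(plain), len(zipped), bytes.Equal(back, msg), err)
}
```

Compression costs CPU, so it pays off mainly for large or repetitive payloads on slow links.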

4. Streaming

Instead of making multiple round trips, you can stream data continuously over a single call. In scenarios like real-time analytics or live updates, this eliminates the latency of setting up a new request for every update.

Features of gRPC

The features are pretty cool. You get authentication and encryption (TLS) out of the box. Load balancing is built in. Configurable retries and deadlines help with resilience. Health checking is standardized.
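Retries and deadlines work together: the deadline bounds the whole call, and only failures that are safe to retry (typically UNAVAILABLE) are retried, with growing backoff. In grpc-go, deadlines come from `context.WithTimeout`, and automatic retries are configured through a service config `retryPolicy` (with fields such as `maxAttempts`, `initialBackoff`, and `retryableStatusCodes`). The hand-written loop below is only a sketch of that behavior, with a hypothetical `errUnavailable` standing in for the status code.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// errUnavailable stands in for a gRPC UNAVAILABLE status, the classic retryable error.
var errUnavailable = errors.New("unavailable")

// callWithRetry calls fn up to maxAttempts times, retrying only on errUnavailable
// and doubling the backoff between attempts. It stops as soon as ctx is done, so
// the caller's deadline bounds the total time spent, retries included.
func callWithRetry(ctx context.Context, maxAttempts int, backoff time.Duration, fn func(context.Context) error) error {
	var err error
	for attempt := 1; attempt <= maxAttempts; attempt++ {
		if err = fn(ctx); !errors.Is(err, errUnavailable) {
			return err // success (nil) or a non-retryable error
		}
		if attempt == maxAttempts {
			break
		}
		timer := time.NewTimer(backoff)
		select {
		case <-timer.C:
			backoff *= 2
		case <-ctx.Done():
			timer.Stop()
			return ctx.Err()
		}
	}
	return err
}

// runDemo calls a hypothetical RPC that fails with errUnavailable `failures`
// times before succeeding, under an overall deadline of timeoutMs milliseconds.
// It returns how many attempts were made and the final error.
func runDemo(failures, maxAttempts, timeoutMs int) (int, error) {
	ctx, cancel := context.WithTimeout(context.Background(), time.Duration(timeoutMs)*time.Millisecond)
	defer cancel()

	calls := 0
	flaky := func(ctx context.Context) error {
		calls++
		if calls <= failures {
			return errUnavailable
		}
		return nil
	}
	err := callWithRetry(ctx, maxAttempts, 10*time.Millisecond, flaky)
	return calls, err
}

func main() {
	fmt.Println(runDemo(2, 4, 1000))  // succeeds on the third attempt
	fmt.Println(runDemo(10, 3, 1000)) // gives up after three attempts
	fmt.Println(runDemo(10, 100, 50)) // the deadline stops the retries
}
```

Retrying only specific codes matters: retrying INVALID_ARGUMENT, for example, would just repeat the same failure.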

But what I love most about gRPC is the developer experience. IntelliSense works perfectly because everything is strongly typed, API documentation can be generated from the .proto files, and refactoring across service boundaries becomes safe.

Working with Protocol Buffers

You might be wondering what Protocol Buffers are. Protocol Buffers are the heart of gRPC. Think of them as a more efficient, strongly typed JSON: you define your data structures in .proto files using a simple syntax, and the compiler (protoc) generates code for the language you want.

The syntax is intuitive. You define messages like structs and services like interfaces. Field numbers are important for backward compatibility, and there are rules about how to evolve your schemas safely.

Let me show you how this actually works. Here is a simple .proto file that defines a user service:

```protobuf
syntax = "proto3";

package user;

service UserService {
  rpc GetUser(GetUserRequest) returns (User);
  rpc CreateUser(CreateUserRequest) returns (User);
  rpc ListUsers(ListUsersRequest) returns (ListUsersResponse);
}

message User {
  int32 id = 1;
  string name = 2;
  string email = 3;
  int64 created_at = 4;
}

message GetUserRequest {
  int32 id = 1;
}

message CreateUserRequest {
  string name = 1;
  string email = 2;
}

message ListUsersRequest {
  int32 page_size = 1;
  string page_token = 2;
}

message ListUsersResponse {
  repeated User users = 1;
  string next_page_token = 2;
}
```

From this single .proto file, you can generate client and server code in multiple languages.

Here is how the implementation will look in Golang:

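```go
import (
	"context"
	"time"
	pb "example.com/user/pb" // hypothetical import path of the code generated from user.proto
)

// Embedding UnimplementedUserServiceServer keeps server compiling as new RPCs are added.
type server struct{ pb.UnimplementedUserServiceServer }

func (s *server) GetUser(ctx context.Context, req *pb.GetUserRequest) (*pb.User, error) {
	// Your business logic here
	user := &pb.User{
		Id:        req.GetId(),
		Name:      "John Doe",
		Email:     "john@example.com",
		CreatedAt: time.Now().Unix(),
	}
	return user, nil
}
```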

Protocol Buffer Versions

Protocol Buffers have two main versions: proto2 and proto3.

Proto3 is simpler and more widely supported. Unless you have specific requirements that need proto2 features, go with proto3. The syntax is cleaner, and it is what most new projects use.

gRPC Method Types

gRPC supports four types of methods:

Unary RPC: A traditional request-response call, like a REST API call but typically faster.

Server Streaming: The client sends one request, and the server sends back a stream of responses. This is great for downloading large datasets or for real-time updates.

Client Streaming: The client sends a stream of requests, and the server replies with a single response. This is perfect for uploading data or aggregating information.

Bidirectional Streaming: Both sides send streams independently. This is where things get really interesting for real-time applications.
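Here is a minimal sketch of the server side of a server-streaming RPC, assuming a hypothetical `rpc StreamUsers(ListUsersRequest) returns (stream User);` (the .proto example only defines unary methods). For such an RPC, grpc-go generates a stream type with a `Send` method; `UserSender` below re-declares just that method so the example runs without generated code, and `collector` is an in-memory fake of the kind you might use in unit tests.

```go
package main

import "fmt"

// User is a hypothetical message type.
type User struct {
	ID   int32
	Name string
}

// UserSender mirrors the Send method grpc-go generates for a server-streaming
// RPC (the real generated interface also embeds grpc.ServerStream).
type UserSender interface {
	Send(*User) error
}

// StreamUsers sends each user as its own message instead of building one large
// response. It stops at the first send error, which is how a cancelled or
// disconnected client shows up on the server side.
func StreamUsers(users []User, stream UserSender) error {
	for i := range users {
		if err := stream.Send(&users[i]); err != nil {
			return err
		}
	}
	return nil
}

// collector is an in-memory fake stream; limit > 0 makes Send fail after that many messages.
type collector struct {
	got   []User
	limit int
}

func (c *collector) Send(u *User) error {
	if c.limit > 0 && len(c.got) >= c.limit {
		return fmt.Errorf("stream closed after %d messages", c.limit)
	}
	c.got = append(c.got, *u)
	return nil
}

func main() {
	users := []User{{1, "Ada"}, {2, "Grace"}, {3, "Linus"}}
	c := &collector{}
	err := StreamUsers(users, c)
	fmt.Println(len(c.got), err)
}
```

The client side mirrors this with a `Recv` loop that runs until it sees `io.EOF`.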

gRPC vs. REST

This is the question everyone asks, right? REST is not going anywhere, but gRPC has some great advantages. It is faster, more efficient, and comes with better tooling. The type safety alone makes it worth considering, and API evolution is more structured thanks to Protocol Buffers.

REST, on the other hand, has broader ecosystem support and is used almost everywhere, especially for public APIs. It is easier to debug with standard HTTP tools, and browser support is more straightforward.

Let me show you the difference in a real scenario: getting a user by ID.

REST Approach

```javascript
// Client code
const response = await fetch('/api/users/123', {
  method: 'GET',
  headers: {
    'Accept': 'application/json',
    'Authorization': 'Bearer ' + token
  }
});
const user = await response.json();

// You need to manually handle:
// - HTTP status codes
// - JSON parsing errors
// - Type checking (user.name could be undefined)
// - Error response formats
```

gRPC Approach

```javascript
// Client code (with gRPC-Web)
const request = new GetUserRequest();
request.setId(123);

client.getUser(request, {}, (err, response) => {
  if (err) {
    // Structured error handling
    console.log('Error:', err.message);
  } else {
    // Type-safe response
    console.log('User:', response.getName());
  }
});

// You get:
// - Automatic serialization/deserialization
// - Type safety (response.getName() is guaranteed to exist)
// - Structured error handling
// - Better performance
```

The difference is night and day. With REST, you're dealing with strings, manual parsing, and hoping the API contract hasn't changed. With gRPC, everything is typed and the generated code enforces the contract, at compile time in statically typed languages such as Go, Java, or TypeScript.

So my take is: use gRPC for service-to-service communication, especially in microservice architectures, and consider REST for public APIs or when you need maximum compatibility.

What is gRPC used for?

The use cases are pretty diverse. Microservices communication is the obvious one, but I have read about gRPC being used for mobile app backends, IoT device communication, real-time features, and even as a replacement for message queues in some places.

Big tech companies use it today. Netflix uses it for its internal services, and Dropbox has written about migrating its internal service-to-service RPC to gRPC. Even traditional enterprises are adopting it to modernize their architectures.

Integration Testing With Keploy

Now, here is something really exciting that I found recently. You know how testing gRPC can be a real pain, right? Well, there's a tool called Keploy that makes it much easier.

Keploy supports gRPC integration testing, and it works well for testing gRPC services.

It watches your gRPC calls while your app is running and automatically creates test cases from real interactions. I'm not kidding: you just use your application normally, and it records everything. Later, it replays those exact interactions as tests. You can read more about integration testing with Keploy here.

The dependency handling is genius: remember how we always struggle with mocking databases and external services in our tests? Keploy captures those calls too, so when it replays your tests, it uses the same data that was returned during recording. That means no more hours spent setting up test databases or writing complex mocks.

Catching regressions: this is where it really stands out. When you change your gRPC services, Keploy compares the new responses with the recorded ones and flags anything that changed unexpectedly.

Keploy represents the future of API testing: intelligent, automated, and developer friendly. If you're building gRPC services, check out what Keploy can do for your testing workflow. It's one of those tools that makes you wonder how you ever tested APIs without it.

Benefits of gRPC

The benefits are quite interesting:

Performance improvements are real and measurable.

Development velocity increases because of the strong typing and code generation.

Cross-language interoperability becomes much easier.

Operational complexity decreases because of standardized health checks, metrics, and tracing.

The gRPC ecosystem is rich, too. There are interceptors for logging, authentication, and monitoring, and cloud providers offer native support.

Challenges of gRPC

Let's be honest about the downsides, since this is the part everyone looks for. Browser support requires gRPC-Web, which adds complexity. The learning curve can be steep if your team is used to REST. Debugging is also trickier, since you can't just fire off a plain curl request.

The binary format makes manual testing more difficult; you need tools like grpcurl or GUI clients. Protocol Buffer schema evolution also needs careful planning.

Load balancers and proxies need to understand gRPC (which runs over HTTP/2), and that might require infrastructure changes. Some organizations find the initial operational overhead challenging.

Reference Articles:

gRPC vs. REST: A Comparative Guide - In this blog, you will learn the key differences between gRPC and REST and their use cases, so you can decide which one is the best fit for your project.

Capture gRPC Traffic going out from a Server - This article captures (logs) the requests and responses of a gRPC client and server. It assumes that you have access to the server's source code.

Everything You Need to Know About API Testing - This article explains API endpoint testing: its importance, best practices, and tools that streamline the process.

Conclusion

Look, gRPC is not a silver bullet, but it is a powerful tool that can improve your distributed systems. The performance gains alone make it worth considering, but it is the developer experience that makes teams stick with it.

So, if you are building new services or modernizing existing ones, give gRPC a look. I recommend starting small, maybe with one or two service pairs, and seeing how it feels. I think you will be surprised at how much it can simplify your architecture while making everything faster.

The cloud-native world is moving toward gRPC for good reasons, so it is worth understanding even if you don't plan to use it right now. And who knows? It might become your favourite way to build distributed systems.

Frequently Asked Questions (FAQ)

Q1. Can I use gRPC with web browsers directly?

Not directly, but gRPC-Web solves this. You will need a proxy such as Envoy, but it works well and gives you most of gRPC's benefits in the browser.

Q2. How do I handle versioning when my API changes?

Follow the Protocol Buffer compatibility rules: you can add new fields safely, but never change or reuse existing field numbers (mark removed ones as reserved instead). It also helps to version your services from day one, for example with names like UserServiceV1.
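Why are added fields safe while changed field numbers are not? Readers identify fields only by number and skip numbers they don't know, using the wire type to tell how many bytes to skip. The sketch below hand-decodes a `GetUserRequest` the way an old reader would, assuming a hypothetical newer version that added field 2 (a string) and field 3 (a varint); generated Protobuf code does the same skipping for you.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// appendVarint appends v as a Protobuf base-128 varint.
func appendVarint(b []byte, v uint64) []byte {
	var buf [binary.MaxVarintLen64]byte
	n := binary.PutUvarint(buf[:], v)
	return append(b, buf[:n]...)
}

// encodeV2 builds a hypothetical newer GetUserRequest that added field 2
// (string locale) and field 3 (varint flags) next to the original field 1 (id).
func encodeV2(id int32, locale string, flags uint64) []byte {
	var b []byte
	b = appendVarint(b, 2<<3|2) // field 2, wire type 2 (length-delimited)
	b = appendVarint(b, uint64(len(locale)))
	b = append(b, locale...)
	b = appendVarint(b, 1<<3|0) // field 1, wire type 0 (varint)
	b = appendVarint(b, uint64(id))
	b = appendVarint(b, 3<<3|0) // field 3, wire type 0 (varint)
	b = appendVarint(b, flags)
	return b
}

// decodeIDV1 is what an old reader of GetUserRequest knows: only field 1 (id).
// Unknown field numbers are skipped using their wire type.
func decodeIDV1(b []byte) (int32, error) {
	var id int32
	for len(b) > 0 {
		key, n := binary.Uvarint(b)
		if n <= 0 {
			return 0, errors.New("bad field key")
		}
		b = b[n:]
		field, wireType := key>>3, key&7
		switch wireType {
		case 0: // varint
			v, m := binary.Uvarint(b)
			if m <= 0 {
				return 0, errors.New("bad varint")
			}
			b = b[m:]
			if field == 1 {
				id = int32(v)
			}
		case 2: // length-delimited: skip the declared number of bytes
			l, m := binary.Uvarint(b)
			if m <= 0 || l > uint64(len(b)-m) {
				return 0, errors.New("bad length")
			}
			b = b[m+int(l):]
		default:
			return 0, fmt.Errorf("unsupported wire type %d", wireType)
		}
	}
	return id, nil
}

func main() {
	id, err := decodeIDV1(encodeV2(123, "en-GB", 7))
	fmt.Println(id, err)
}
```

If someone had instead renumbered `id` to 4, the old reader would silently skip it and see 0, which is exactly the kind of bug the compatibility rules prevent.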

Q3. Is gRPC overkill for simple CRUD applications?

Maybe. For basic web apps with simple CRUD, REST is fine. gRPC shines with complex service interactions, high performance needs, or microservices architectures.

Q4. How does gRPC handle authentication and security?

gRPC has built-in support for TLS encryption, and you can use interceptors for authentication logic based on JWT, OAuth, or client certificates. Cloud providers also offer seamless integration.

Q5. What happens if my gRPC service is down?

Use the built-in retry logic, deadlines (timeouts), and status codes to handle failures, implement health checks and monitoring, and design for failure from the start.

0 notes

Text

I'm here for the night and I'd love to see some apps or reserves! Or even any questions you might have.

0 notes

Text

Apigee Extension Processor v1.0: CLB Policy Decision Point

V1.0 Apigee Extension Processor

This powerful new capability increases Apigee's reach and versatility and makes managing and protecting more backend services and modern application architectures easier than ever.

Teams running modern, scalable containerised apps on Cloud Run can apply Apigee policies to them through the Extension Processor's seamless Cloud Run integration.

Additionally, the Extension Processor enables powerful new connections. gRPC bidirectional streaming makes complex real-time interactions easy, enabling low-latency, engaging apps. For event-driven systems, the Extension Processor manages and protects Server-Sent Events (SSE), enabling data streaming to clients.

The benefits extend beyond communication protocols and application deployment. When used with Google Token Injection policies, the Apigee Extension Processor simplifies secure access to Google Cloud infrastructure. Apigee's consistent security architecture lets you connect to and manage services such as Bigtable and Vertex AI for machine learning workloads.

Finally, by connecting to the advanced traffic management features of Google's Cloud Load Balancing, the Extension Processor offers great flexibility in routing and controlling different traffic flows. Even complex API landscapes can be managed with this combination.

This blog demonstrates a technique for managing gRPC streaming in Apigee, a major concern for high-performance and real-time systems. gRPC is essential to many microservices, but organisations that use Google Cloud's Apigee as an inline proxy (traditional mode) run into problems because of gRPC's streaming nature.

We will examine how Apigee can govern gRPC streaming traffic by having the ALB call out to the Extension Processor. A Service Extension (specifically a traffic extension) allows efficient management and routing without passing the gRPC stream through the Apigee gateway.

Read on to learn about this solution's major features, its benefits, and a Cloud Run backend use case.

Overview of Apigee Extension Processor

The Apigee Extension Processor is a traffic extension that lets Cloud Load Balancing send callouts to Apigee for API management. Apigee can apply API management policies to requests before the ALB forwards them to user-managed backend services, extending its API management capabilities to Cloud Load Balancing workloads.

Infrastructure and data flow

Apigee Extension Processor requirements

Setting up the Apigee Extension Processor requires several components, including Service Extensions, an ALB, and an Apigee instance with the Extension Processor enabled.

The numbered steps below match the numbered arrows in the flow diagram:

1. The ALB receives client requests.
2. The ALB, acting as the Policy Enforcement Point (PEP), processes the traffic. This includes callouts to Apigee through the Service Extension (traffic extension).
3. After receiving a callout, the Apigee Extension Processor, acting as the Policy Decision Point (PDP), applies API management policies and returns the request to the ALB.
4. After processing, the ALB forwards the request to the backend.
5. The backend service sends its response to the ALB. Before responding to the client, the ALB may use the Service Extension to call Apigee again to enforce policies.
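The PEP/PDP split can be sketched in a few lines of Go. This is only an analogy under stated assumptions: `PEP` plays the load balancer and `apiKeyPDP` plays the Apigee runtime, but nothing here is the ext-proc protocol or an Apigee API, and the `x-api-key` check is a made-up policy. What it shows is the division of labor: the enforcement point asks for a decision on every request and forwards only allowed traffic to the backend.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// Decision is what the hypothetical policy decision point (PDP) returns.
type Decision struct {
	Allow  bool
	Status int // HTTP status to send when the request is denied
}

// PDP stands in for the Apigee runtime: it decides, but never forwards traffic.
type PDP func(r *http.Request) Decision

// apiKeyPDP is a toy policy that allows requests carrying a known x-api-key header.
func apiKeyPDP(validKeys map[string]bool) PDP {
	return func(r *http.Request) Decision {
		if validKeys[r.Header.Get("x-api-key")] {
			return Decision{Allow: true}
		}
		return Decision{Allow: false, Status: http.StatusUnauthorized}
	}
}

// PEP stands in for the load balancer: it calls out to the PDP (steps 2-3) and
// forwards only allowed requests to the backend (step 4).
func PEP(decide PDP, backend http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if d := decide(r); !d.Allow {
			http.Error(w, "denied by policy", d.Status)
			return
		}
		backend.ServeHTTP(w, r)
	})
}

// newDemoLB wires a hypothetical log-streaming backend behind the PEP.
func newDemoLB() http.Handler {
	backend := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "log line")
	})
	return PEP(apiKeyPDP(map[string]bool{"secret-key": true}), backend)
}

// statusFor sends one request through h and returns the HTTP status code.
func statusFor(h http.Handler, apiKey string) int {
	req := httptest.NewRequest(http.MethodGet, "/logs", nil)
	if apiKey != "" {
		req.Header.Set("x-api-key", apiKey)
	}
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Code
}

func main() {
	lb := newDemoLB()
	fmt.Println(statusFor(lb, "secret-key"), statusFor(lb, "wrong-key"))
}
```

Because the PDP never sits in the data path, the backend traffic itself (in the article's case, a gRPC stream) does not have to pass through it.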

Making gRPC streaming pass-through possible

Apigee as an inline proxy does not support streaming gRPC, even though many modern apps rely on it. The Apigee Extension Processor helps here: the ALB processes the streaming gRPC traffic and acts as the PEP, while the Apigee runtime acts as the PDP.

Important components for Apigee's gRPC streaming pass-through

Using the Apigee Extension Processor for gRPC streaming pass-through requires the following components. The "Get started with the Apigee Extension Processor" guide has detailed setup instructions.

gRPC streaming backend service: A bidirectional, server, or client streaming service.

Application Load Balancer (ALB): Routes traffic and calls the Apigee Service Extension for client requests.

Apigee instance with the Extension Processor enabled: An Apigee instance and environment with the Extension Processor enabled, which uses a targetless API proxy to process Service Extension communication over ext-proc.

Traffic extension: Links the ALB and the Apigee runtime, ideally over Private Service Connect (PSC).

When everything is configured properly, the client can reach the ALB, and the ALB can reach both Apigee and the backend.

Apigee secures and manages cloud gRPC streaming services

Imagine a customer building a high-performance backend service that delivers real-time application logs over gRPC. For scalability and ease of administration, the customer hosts this backend on Cloud Run in their primary Google Cloud project, and wants a secure API gateway to expose the gRPC streaming service to its clients. They choose Apigee for its API management capabilities, including authentication, authorisation, rate limiting, and other policies.

Challenge

Apigee's inline proxy mode doesn't support gRPC streaming, so a typical Apigee installation cannot directly expose the Cloud Run gRPC service for client, server, or bidirectional streaming.

Solution

The Apigee Extension Processor bridges gRPC streaming traffic to a Cloud Run backend application in the same Google Cloud project.

The flow in brief:

Client initiation

Client applications initiate gRPC streaming requests.

The request targets the public IP address or DNS name of the ALB, which serves as the entry point.

ALB and Service Extension callout

The ALB receives gRPC streaming requests.

A serverless Network Endpoint Group connects the ALB's backend service to Cloud Run.

The ALB also features a Service Extension (Traffic extension) with an Apigee runtime backend.

The ALB calls this Service Extension for relevant traffic.

Apigee proxy processing

The Service Extension sends callouts for the gRPC requests to an Apigee API proxy.

The Apigee X proxy applies API management controls such as rate limiting, authorisation, and authentication.
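Rate limiting is one of the controls the Apigee proxy applies. Apigee does this with its own configurable policies (such as SpikeArrest and Quota), not with code you write; the token bucket below is only a generic sketch of the idea, with hypothetical names and numbers. Passing the current time in explicitly keeps it deterministic.

```go
package main

import (
	"fmt"
	"time"
)

// TokenBucket holds up to capacity tokens and refills at rate tokens per second;
// each allowed request spends one token.
type TokenBucket struct {
	capacity, tokens, rate float64
	last                   time.Time
}

func NewTokenBucket(capacity, ratePerSec float64, now time.Time) *TokenBucket {
	return &TokenBucket{capacity: capacity, tokens: capacity, rate: ratePerSec, last: now}
}

// Allow refills the bucket for the time elapsed since the last call and
// reports whether a request arriving at now may proceed.
func (b *TokenBucket) Allow(now time.Time) bool {
	if elapsed := now.Sub(b.last).Seconds(); elapsed > 0 {
		b.tokens += elapsed * b.rate
		if b.tokens > b.capacity {
			b.tokens = b.capacity
		}
		b.last = now
	}
	if b.tokens >= 1 {
		b.tokens--
		return true
	}
	return false
}

// simulate replays requests arriving at the given millisecond offsets and
// returns which of them were allowed.
func simulate(capacity, ratePerSec float64, offsetsMs []int) []bool {
	start := time.Unix(0, 0)
	b := NewTokenBucket(capacity, ratePerSec, start)
	allowed := make([]bool, len(offsetsMs))
	for i, ms := range offsetsMs {
		allowed[i] = b.Allow(start.Add(time.Duration(ms) * time.Millisecond))
	}
	return allowed
}

func main() {
	// Burst of 2, refilling 1 token per second.
	fmt.Println(simulate(2, 1, []int{0, 0, 0, 1000})) // [true true false true]
}
```

The capacity controls how large a burst is tolerated, while the refill rate sets the sustained limit.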

No target endpoint is defined on the Apigee proxy in this scenario; the ALB finalises the routing.

Return to ALB

Because the Apigee proxy has no target, the Service Extension response returns control to the ALB after policy processing.

Load balancer routing to the Cloud Run backend

Based on its backend service configuration, the ALB routes the gRPC streaming request to the serverless NEG that fronts the Cloud Run service.

The ALB, not Apigee, handles routing to the Cloud Run service.

Managing responses

The response flow mirrors the request flow. The backend sends its response to the ALB, which may call Apigee through the Service Extension (traffic extension) to enforce policies before responding to the client.

This simplified use case shows how to use the Apigee Extension Processor to apply API management policies to gRPC streaming traffic bound for a Cloud Run application in the same Google Cloud project, with the ALB routing to Cloud Run through its serverless NEG configuration.

Advantages of Apigee Extension Processor for gRPC Streaming

Using the Apigee Extension Processor to manage backend gRPC streaming services brings Apigee's core features to these services, with several benefits:

Extending Apigee's reach

This technique extends Apigee's API management tools to gRPC streaming, which the core proxy does not handle natively.

Leveraging existing investments

Businesses already using Apigee for RESTful APIs can now manage their gRPC streaming services from Apigee too. Although it requires the Extension Processor, this approach uses familiar API management techniques and avoids the need for extra tools.

Centralised policy management

Apigee centralises the creation and enforcement of API management policies. Bringing gRPC streaming in through the Extension Processor gives all API endpoints consistent governance and security.

Monetisation potential

Apigee's monetisation features can be used for gRPC streaming services: rate plans on Apigee API products let you generate revenue when gRPC streaming APIs are accessed.

Better visibility and traceability

Although protocol-level gRPC analytics are limited in a pass-through setup, Apigee still provides relevant data on streaming traffic, including connection attempts, error rates, and usage trends. This observability is essential for troubleshooting and monitoring.

Apigee's distributed tracing support can help you trace requests through distributed systems that use gRPC streaming services, with end-to-end visibility across apps, services, and databases.

Business intelligence

Apigee API Analytics collects the large volume of data flowing through your load balancer and provides UI visualisations or exports for offline analysis. This data helps businesses make informed decisions, identify performance bottlenecks, and understand usage trends.

These benefits show that the Apigee Extension Processor can bring essential API management functionality to gRPC streaming services on Google Cloud.

Looking Ahead

The Apigee Extension Processor expands Apigee's functionality. Over time, Apigee's policy enforcement is expected to become available across more gateways: the Apigee runtime will serve as the Policy Decision Point (PDP), and the ext-proc protocol will allow many Envoy-based load balancers and gateways to act as Policy Enforcement Points (PEPs). This should help organisations manage and protect their digital assets in more varied environments.

#technology#technews#govindhtech#news#technologynews#Apigee Extension Processor#Apigee Extension#gRPC#Cloud Run#Apigee#Apigee Extension Processor v1.0#Application Load Balancer

0 notes

Note

My name is Kes and a really, really long time ago I mainly played Destery Dawson for the Hazel Run RP. I was very close with Ken and pretty close with Inez. I miss you both dearly and hope life is treating you well. If this gets posted I hope you all reach out if you see this. To anyone I hurt, I’m truly sorry. For my contribution to petty drama, I’m regretful and owe my sincerest apologies. I’m a much different person now and I know you all are too… I’m darn near 30 now and still think about you guys often

Not making confessions anymore, but if anyone wishes to reach out to this person then their inbox is open.

Thank you Kes for reaching out.

#hazelrunrp#glee rp#glee rp confessions#glee rpg#glee roleplay#gleerpconfessions#glee au rp#grpcapology#grpc

1 note


Text

Just wrapped up the assignments on the final chapter of the #mlzoomcamp on model deployment in Kubernetes clusters. Got foundational hands-on experience with TensorFlow Serving, gRPC, the Protobuf data format, docker compose, kubectl, kind, and actual Kubernetes clusters on EKS.

#mlzoomcamp#tensorflow serving#grpc#protobuf#kubectl#kind#Kubernetes#docker-compose#artificial intelligence#machinelearning#Amazon EKS

0 notes

Text

1 note


Text

🚀 Getting Started with Golang: A Developer's Journey 🛠️

Golang is a game-changer for developers seeking efficiency and simplicity. Whether you’re building web servers 🌐 or mastering concurrency 🧵, Go’s clean syntax and robust performance make it ideal for high-performance apps.

In this blog, I’ll share byte-sized tips 💡, tutorials, and insights to help you level up your Go skills. For more in-depth resources and courses, check out ByteSizeGo. Let’s dive into debugging, gRPC, and everything Go! 🎯

Stay tuned! 🔥

1 note


Text

A Comprehensive Guide to Building Microservices with Node.js

Introduction: The microservices architecture has become a popular approach for developing scalable and maintainable applications. Unlike monolithic architectures, where all components are tightly coupled, microservices allow you to break down an application into smaller, independent services that can be developed, deployed, and scaled independently. Node.js, with its asynchronous, event-driven…

0 notes

Text

0 notes

Text

more people should know about bird banding abbreviation codes because they make talking about certain species so much easier. they're four-letter codes that correspond to a bird's common name.*

for most bird names, they take the first two letters of the first part of the name and the first two letters of the last part and combine them. so GREG is GReat EGret, SPTO is SPotted TOwhee, LEGO is LEsser GOldfinch, etc. for birds with more than two parts to their names, the letters are taken from all of the parts. so NSWO is Northern Saw-Whet Owl, GHOW is Great Horned OWl, GRPC is GReater Prairie-Chicken, etc.

of course, some birds only have one part to their name. so for those birds the first four letters become the code. CANV is CANVasback, WILL is WILLet, GYRF is GYRFalcon.

but there are birds that would normally overlap, like AMerican GOshawk and AMerican GOldfinch, or CAnada GOose and CAckling GOose. so their codes need to be rearranged and break the typical convention--now AGOL is American GOLdfinch and AGOS is American GOShawk, and CANG is CANada Goose and CACG is CACkling Goose. these ones are less intuitive but usually still easy to figure out.

so now you know you can say NOPO when you see a northern pygmy-owl, SNEG when you see a snowy egret, BRBL when you see a brewer's blackbird, or AMCR when you see an american crow! good luck figuring out how to pronounce some of those.

(*things get more complicated when you start using the six-letter codes for the latin names... like ABTO/abert's towhee, which is Melozone aberti/MELABE. although it's definitely less time-consuming when you can say PHEFAS for Pheugopedius fasciatoventris, the black-bellied wren (BBEW). we'll do that some other day.)
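for the programmers in the audience: here's a rough go sketch of the rules above. it treats hyphens as splitting a name into parts and, for three-part names, infers from the examples (GHOW vs GRPC and NOPO) that a hyphenated final pair gets 2+1+1 letters while other names get 1+1+2. it doesn't know the hand-picked exceptions like AGOL/AGOS or CANG/CACG, so it's a starting point, not the official list, and the function names are made up.

```go
package main

import (
	"fmt"
	"strings"
)

// part is one piece of an English bird name; hyphenated reports whether it
// was joined to the previous piece by a hyphen (as in Prairie-Chicken).
type part struct {
	text       string
	hyphenated bool
}

func splitName(name string) []part {
	var parts []part
	for _, word := range strings.Fields(strings.ToUpper(name)) {
		for i, p := range strings.Split(word, "-") {
			if p != "" {
				parts = append(parts, part{text: p, hyphenated: i > 0})
			}
		}
	}
	return parts
}

// prefix returns the first n bytes of s (names are assumed to be ASCII).
func prefix(s string, n int) string {
	if len(s) < n {
		return s
	}
	return s[:n]
}

// alphaCode builds a four-letter banding code from the simple rules only.
func alphaCode(name string) string {
	p := splitName(name)
	switch len(p) {
	case 0:
		return ""
	case 1: // CANVasback
		return prefix(p[0].text, 4)
	case 2: // GReat EGret
		return prefix(p[0].text, 2) + prefix(p[1].text, 2)
	case 3:
		if p[2].hyphenated { // GReater Prairie-Chicken, NOrthern Pygmy-Owl
			return prefix(p[0].text, 2) + prefix(p[1].text, 1) + prefix(p[2].text, 1)
		}
		return prefix(p[0].text, 1) + prefix(p[1].text, 1) + prefix(p[2].text, 2) // Great Horned OWl
	default: // Northern Saw-Whet Owl: first letter of each of the first four parts
		var b strings.Builder
		for _, x := range p[:4] {
			b.WriteString(prefix(x.text, 1))
		}
		return b.String()
	}
}

func main() {
	for _, n := range []string{"Great Egret", "Northern Saw-Whet Owl", "Greater Prairie-Chicken", "Canvasback"} {
		fmt.Println(alphaCode(n), n)
	}
}
```

note that american goldfinch comes out as AMGO here instead of AGOL, because the collisions get sorted out by hand.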

23 notes


Text

In the world of APIs, there are many architectural styles, each with its own benefits, drawbacks, and ideal use cases, but two prominent approaches dominate the landscape: gRPC and REST. Both have unique strengths and weaknesses that make them suitable for different scenarios.

In this blog, we’ll delve into the key differences between gRPC and REST, explore their use cases, and help you decide which one might be the best fit for your project.

What is REST?

REST (Representational State Transfer) is an architectural style for designing networked applications. It relies on a stateless, client-server, cacheable communication protocol, which in practice is almost always HTTP.

RESTful applications use HTTP requests to perform CRUD (Create, Read, Update, Delete) operations on resources, which are most commonly represented as JSON.

What are the advantages of using REST?

REST has several advantages that make it a popular choice for designing APIs. Here are the key benefits:

Simplicity: REST APIs are easy to understand and use, thanks to their reliance on standard HTTP methods (GET, POST, PUT, DELETE).

Statelessness: Each request from a client to a server must contain all the information needed to understand and process the request.

Scalability: REST's stateless nature makes it highly scalable, as servers don't need to maintain session state between requests.

Caching: Responses can be marked as cacheable, reducing the need for redundant server processing.
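Statelessness and caching work together in practice. The Python sketch below (standard library only) shows one common way to make responses cacheable: the server derives an ETag from the JSON representation, and a client that sends it back in If-None-Match receives a bodyless 304 Not Modified when nothing has changed. The book resource, the hash-based ETag scheme, and the max-age=60 policy are illustrative assumptions, and If-None-Match handling is simplified to a single tag.

```python
import hashlib
import json
from typing import Dict, Optional, Tuple


def make_etag(representation: bytes) -> str:
    """Derive a strong ETag from the exact bytes the server would send."""
    return '"' + hashlib.sha256(representation).hexdigest()[:16] + '"'


def conditional_get(
    resource: dict, if_none_match: Optional[str] = None
) -> Tuple[int, Dict[str, str], bytes]:
    """Handle GET for one resource, returning (status, headers, body).

    Stateless: the decision depends only on the resource and on the
    If-None-Match header sent with this request; no session is kept.
    """
    body = json.dumps(resource, sort_keys=True).encode("utf-8")
    etag = make_etag(body)
    # Assumed policy: caches may reuse the response for 60 seconds.
    headers = {"ETag": etag, "Cache-Control": "max-age=60"}
    if if_none_match == etag:
        # The client's cached copy is current, so no body is sent.
        return 304, headers, b""
    headers["Content-Type"] = "application/json"
    return 200, headers, body


book = {"id": 1, "title": "Dune"}
status, headers, _ = conditional_get(book)  # first fetch
status_again, _, body_again = conditional_get(book, headers["ETag"])  # revalidation
print(status, status_again, body_again)
```

Because the ETag is computed from the response bytes, any change to the resource produces a new tag, and the old cached copy is automatically treated as stale.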

How can REST be implemented?

Implementing a RESTful API involves several key steps to ensure it is well-structured, efficient, and easy to use. Here's a high-level overview:

1. Set Up Your Environment

Choose your programming language (e.g., Python, JavaScript, Java).

Select a web framework (e.g., Flask for Python, Express for Node.js).

Install necessary libraries and tools.

2. Design Your API Endpoints

Define the resources your API will manage.

Plan the endpoints and HTTP methods (e.g., GET, POST, PUT, DELETE).

Use a consistent URL structure.

3. Implement CRUD Operations

Create routes for Create, Read, Update, Delete operations.

Handle data in a consistent format, typically JSON.

Ensure your API follows REST principles, such as statelessness and resource representation.

4. Test Your API

Use tools like Postman or curl to test API endpoints.

Validate the response data and status codes.

Ensure all edge cases are handled.

5. Add Error Handling and Data Validation

Implement error handling for various HTTP status codes (e.g., 404 Not Found, 400 Bad Request).

Validate incoming data to ensure it meets the required format and constraints.
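The steps above can be sketched without committing to a framework. The following Python function is a minimal illustration of steps 3 and 5: CRUD routes for a hypothetical /books resource, JSON validation, and the status code each outcome returns. The in-memory BOOKS dict, the title/year fields, and the handle() signature are all assumptions; in Flask or Express, the framework would do the routing for you.

```python
import json
from typing import Optional, Tuple

# Hypothetical in-memory storage standing in for a database.
BOOKS: dict = {}
_next_id = 1


def validate_book(data) -> list:
    """Return a list of validation errors; an empty list means valid."""
    if not isinstance(data, dict):
        return ["body must be a JSON object"]
    errors = []
    title = data.get("title")
    if not isinstance(title, str) or not title.strip():
        errors.append("title is required and must be a non-empty string")
    if "year" in data and not isinstance(data["year"], int):
        errors.append("year must be an integer")
    return errors


def handle(method: str, path: str, body: bytes = b"") -> Tuple[int, Optional[dict]]:
    """Route one request to a CRUD operation and return (status, JSON body)."""
    global _next_id
    parts = path.strip("/").split("/")
    if parts[0] != "books" or len(parts) > 2 or (len(parts) == 2 and not parts[1].isdecimal()):
        return 404, {"error": "not found"}
    book_id = int(parts[1]) if len(parts) == 2 else None

    data = None
    if method in ("POST", "PUT"):
        try:
            data = json.loads(body or b"null")
        except ValueError:  # includes json.JSONDecodeError
            return 400, {"error": "malformed JSON"}
        errors = validate_book(data)
        if errors:
            return 400, {"errors": errors}

    if book_id is None:  # collection: /books
        if method == "GET":  # Read (list)
            return 200, {"books": list(BOOKS.values())}
        if method == "POST":  # Create
            book = {**data, "id": _next_id}
            BOOKS[_next_id] = book
            _next_id += 1
            return 201, book
        return 405, {"error": "method not allowed"}

    if book_id not in BOOKS:  # single item: /books/<id>
        return 404, {"error": "not found"}
    if method == "GET":  # Read (one)
        return 200, BOOKS[book_id]
    if method == "PUT":  # Update (full replacement)
        BOOKS[book_id] = {**data, "id": book_id}
        return 200, BOOKS[book_id]
    if method == "DELETE":  # Delete
        del BOOKS[book_id]
        return 204, None
    return 405, {"error": "method not allowed"}
```

Keeping the routing logic in a plain function like this also makes step 4 easier: you can unit-test every status code without starting a server.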

The overall request flow looks like this:

Client Requests: Clients send HTTP requests (GET, POST, PUT, DELETE) to the API.

API Endpoints: The server has defined endpoints (e.g., /books, /books/<id>) to handle these requests.

CRUD Operations: Each endpoint corresponds to a CRUD operation, interacting with the database or data storage.

Responses: The server processes the request and sends back an appropriate response (data, status codes).
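To see that request/response cycle end to end, here is a small sketch that uses only Python's standard library: http.server on the server side and urllib.request on the client side. The /books routes and seed data are hypothetical, and only Read and Create are shown; a production API would normally use a framework such as Flask or Express.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.error import HTTPError
from urllib.request import ProxyHandler, Request, build_opener

# Hypothetical seed data standing in for a database.
BOOKS = {1: {"id": 1, "title": "Dune"}}


class BookHandler(BaseHTTPRequestHandler):
    def _send(self, status: int, payload) -> None:
        """Serialize the response as JSON and write status, headers, and body."""
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self) -> None:  # Read
        parts = self.path.strip("/").split("/")
        if parts == ["books"]:
            self._send(200, list(BOOKS.values()))
        elif (len(parts) == 2 and parts[0] == "books" and parts[1].isdecimal()
              and int(parts[1]) in BOOKS):
            self._send(200, BOOKS[int(parts[1])])
        else:
            self._send(404, {"error": "not found"})

    def do_POST(self) -> None:  # Create
        if self.path.strip("/") != "books":
            self._send(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        data = json.loads(self.rfile.read(length) or b"{}")
        new_id = max(BOOKS, default=0) + 1
        BOOKS[new_id] = {**data, "id": new_id}
        self._send(201, BOOKS[new_id])

    def log_message(self, format, *args) -> None:
        pass  # silence the default per-request logging for the demo


def start_server():
    """Start the API on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), BookHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_address[1]}"


# Ignore any proxy settings from the environment for these local calls.
_opener = build_opener(ProxyHandler({}))


def call(base: str, method: str, path: str, payload=None):
    """Client side: send one request and return (status, decoded JSON)."""
    data = None if payload is None else json.dumps(payload).encode("utf-8")
    req = Request(base + path, data=data, method=method,
                  headers={"Content-Type": "application/json"})
    try:
        with _opener.open(req) as resp:
            return resp.status, json.loads(resp.read())
    except HTTPError as err:  # urllib raises 4xx/5xx responses instead of returning them
        return err.code, json.loads(err.read())


if __name__ == "__main__":
    server, base = start_server()
    print(call(base, "GET", "/books/1"))
    print(call(base, "POST", "/books", {"title": "Emma"}))
    print(call(base, "GET", "/books/42"))
    server.shutdown()
```

Binding to port 0 lets the operating system pick a free port, which keeps the demo from colliding with anything already running locally.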

What is gRPC?

gRPC (gRPC Remote Procedure Calls) is an open-source framework originally developed by Google. It uses HTTP/2 for transport and Protocol Buffers (protobufs) as its interface description language, and it provides features such as authentication, load balancing, and more.
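On the wire, gRPC sends each serialized protobuf message in the body of an HTTP/2 stream with a 5-byte prefix: a 1-byte compressed flag followed by a 4-byte big-endian message length (the "Length-Prefixed-Message" format in the gRPC over HTTP/2 protocol description). The Python sketch below shows only that framing, using the struct module; gRPC libraries do this for you, and the sketch sets the compressed flag without actually compressing anything.

```python
import struct
from typing import Iterator, Tuple

# 1-byte flag + 4-byte big-endian unsigned length = 5-byte prefix.
PREFIX = struct.Struct(">BI")


def frame_message(message: bytes, compressed: bool = False) -> bytes:
    """Wrap one serialized message in gRPC's length prefix."""
    return PREFIX.pack(1 if compressed else 0, len(message)) + message


def read_messages(data: bytes) -> Iterator[Tuple[bool, bytes]]:
    """Split a stream body back into (compressed, message) pairs."""
    offset = 0
    while offset < len(data):
        if len(data) - offset < PREFIX.size:
            raise ValueError("truncated length prefix")
        flag, length = PREFIX.unpack_from(data, offset)
        offset += PREFIX.size
        message = data[offset:offset + length]
        if len(message) != length:
            raise ValueError("truncated message body")
        offset += length
        yield bool(flag), message


framed = frame_message(b"\x08\x96\x01")  # a tiny protobuf message: field 1 = 150
print(framed.hex())
```

Because every message carries its own length, a single stream can carry many messages back to back, which is what makes gRPC's streaming modes possible.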

What are the advantages of using gRPC?

gRPC is a powerful choice for high-performance, real-time, and efficient communication in microservices architectures, as well as for applications that need robust, strongly typed APIs. Here's why:

Performance: gRPC is designed for high performance. Thanks to HTTP/2 and the compact binary Protocol Buffers format, it typically achieves smaller message payloads and lower latency than JSON-based REST APIs.

Bi-Directional Streaming: gRPC supports client, server, and bi-directional streaming, making it suitable for real-time applications.

Strongly Typed Contracts: Using Protocol Buffers ensures a strongly typed contract between client and server, reducing errors and improving reliability.

Built-in Code Generation: gRPC can generate client and server code in multiple languages, speeding up development.
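The streaming modes are easiest to understand side by side. This dependency-free Python sketch uses a hypothetical CounterServicer with plain integers instead of protobuf messages. Its method shapes mirror the Python servicers used with grpcio, where streaming requests arrive as an iterator and streaming responses are produced with yield; the real methods also take a context argument, which is omitted here.

```python
from typing import Iterable, Iterator


class CounterServicer:
    """Hypothetical service showing the four gRPC call types with plain ints."""

    def Square(self, request: int) -> int:
        # Unary: one request in, one response out.
        return request * request

    def CountUpTo(self, request: int) -> Iterator[int]:
        # Server streaming: one request in, a stream of responses out.
        for i in range(1, request + 1):
            yield i

    def Sum(self, request_iterator: Iterable[int]) -> int:
        # Client streaming: a stream of requests in, one response out.
        return sum(request_iterator)

    def RunningTotal(self, request_iterator: Iterable[int]) -> Iterator[int]:
        # Bi-directional streaming: respond to each request as it arrives.
        total = 0
        for value in request_iterator:
            total += value
            yield total


servicer = CounterServicer()
print(servicer.Square(4), list(servicer.CountUpTo(3)),
      servicer.Sum([1, 2, 3]), list(servicer.RunningTotal([1, 2, 3])))
```

Using generators means a streaming handler can start sending responses before it has seen the whole request stream, which is what makes bi-directional streaming useful for real-time applications.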

How to create a gRPC API?

Implementing a gRPC API involves several steps, including defining the service and messages using Protocol Buffers, generating the necessary client and server code, implementing the service logic, and testing the API. Here’s a high-level overview:

1. Set Up Your Environment

Choose your programming language (e.g., Python, Go, Java, C++).

Install gRPC and the Protocol Buffers compiler (protoc).

Install necessary libraries and tools.

2. Define Your Service Using Protocol Buffers

Create a .proto file that defines the service and the messages it uses.

Specify the methods and their request/response types.

3. Generate Client and Server Code

Use the Protocol Buffers compiler to generate the gRPC client and server code from the .proto file.

This step creates the stubs and data classes needed for the service implementation.

4. Implement the Service Logic

Implement the server logic by extending the generated base classes.

Define the functionality for each service method.

5. Run the Server and Implement the Client

Start the gRPC server to listen for client requests.

Implement the client to make requests to the gRPC server.

6. Test Your gRPC API

Use tools like grpcurl or specific client implementations to test your gRPC endpoints.

Validate the response data and status codes.

Ensure all edge cases are handled.

In summary, the pieces of a gRPC API fit together like this:

Service Definition: Define your service and messages in a .proto file.

Code Generation: Generate client and server code using the Protocol Buffers compiler.

Server Implementation: Implement the server-side logic to handle requests.

Client Implementation: Implement the client to make requests to the server.

Communication: Clients communicate with the server using the generated stubs and data classes.
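Under the hood, a call made through a generated stub is an HTTP/2 POST whose :path is /<package>.<Service>/<Method>, and the outcome arrives at the end of the stream as a grpc-status trailer holding a gRPC status code, which is not an HTTP status code. The Python sketch below builds those request headers and decodes the status trailers, following the gRPC over HTTP/2 protocol description; the bookstore.BookService/GetBook names are hypothetical, and real gRPC clients handle all of this internally.

```python
from typing import Dict, List, Optional, Tuple
from urllib.parse import unquote

# Canonical gRPC status codes (distinct from HTTP status codes).
GRPC_STATUS = {
    0: "OK", 1: "CANCELLED", 2: "UNKNOWN", 3: "INVALID_ARGUMENT",
    4: "DEADLINE_EXCEEDED", 5: "NOT_FOUND", 6: "ALREADY_EXISTS",
    7: "PERMISSION_DENIED", 8: "RESOURCE_EXHAUSTED", 9: "FAILED_PRECONDITION",
    10: "ABORTED", 11: "OUT_OF_RANGE", 12: "UNIMPLEMENTED", 13: "INTERNAL",
    14: "UNAVAILABLE", 15: "DATA_LOSS", 16: "UNAUTHENTICATED",
}


def request_headers(authority: str, service: str, method: str,
                    timeout_ms: Optional[int] = None) -> List[Tuple[str, str]]:
    """HTTP/2 headers for a call to /<fully.qualified.Service>/<Method>."""
    headers = [
        (":method", "POST"),  # every gRPC call is an HTTP/2 POST
        (":scheme", "https"),
        (":path", f"/{service}/{method}"),
        (":authority", authority),
        ("te", "trailers"),  # required; helps detect proxies that drop trailers
        ("content-type", "application/grpc+proto"),
    ]
    if timeout_ms is not None:
        if not 0 <= timeout_ms < 10**8:  # the timeout value is limited to 8 digits
            raise ValueError("timeout must fit in 8 digits")
        headers.append(("grpc-timeout", f"{timeout_ms}m"))  # "m" means milliseconds
    return headers


def parse_status(trailers: Dict[str, str]) -> Tuple[str, str]:
    """Decode the grpc-status and grpc-message trailers sent at the end of a call."""
    # This sketch's choice: a missing or unrecognized code is reported as UNKNOWN.
    code = int(trailers.get("grpc-status", "2"))
    message = unquote(trailers.get("grpc-message", ""))  # percent-encoded on the wire
    return GRPC_STATUS.get(code, "UNKNOWN"), message


for name, value in request_headers("api.example.com", "bookstore.BookService", "GetBook", 500):
    print(f"{name}: {value}")
print(parse_status({"grpc-status": "5", "grpc-message": "book%20not%20found"}))
```

This is also why testing a gRPC API means checking gRPC status codes such as NOT_FOUND rather than HTTP codes such as 404.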

gRPC vs REST: how are they different?

This table provides a side-by-side comparison to help you understand the differences and make an informed decision based on your project's needs.

Conclusion

Choosing between gRPC and REST depends largely on your specific use case and requirements. REST’s simplicity, wide adoption, and flexibility make it a great choice for public APIs and web services. On the other hand, gRPC’s performance, efficiency, and advanced features like bi-directional streaming make it ideal for internal APIs, microservices, and real-time applications.

Understanding the strengths and limitations of each approach will help you make an informed decision, ensuring your API is robust, scalable, and performant. Whether you opt for the simplicity of REST or the power of gRPC, both have their place in the modern API ecosystem.

FAQs

What are the main differences between REST and gRPC?

REST is an architectural style that is most often implemented over HTTP/1.1 with text-based formats like JSON, which makes it simple and widely adopted. gRPC, developed by Google, uses HTTP/2 and Protocol Buffers (a binary format), providing high performance, bi-directional streaming, and strongly typed contracts.

When should I use gRPC instead of REST?

gRPC is ideal for scenarios requiring high performance, low latency, and real-time communication, such as microservices architectures and internal APIs. It's also well-suited for applications that benefit from strongly typed contracts and built-in code generation, such as complex backend systems.

Can gRPC and REST be used together in the same project?

Yes, they can be used together. For example, you might use REST for public-facing APIs due to its simplicity and widespread support while employing gRPC for internal service-to-service communication to take advantage of its performance benefits and advanced features like streaming.

Is it difficult to learn and implement gRPC?

gRPC has a steeper learning curve than REST, primarily because it requires an understanding of Protocol Buffers and involves more setup for code generation. However, the robust documentation and tooling provided by the gRPC community can help mitigate these challenges.

Text

The poll has now closed, which means we're staying with the plot of following the characters 10 years into the future! I'm gonna make sure that everything is correct, but I'm happy to open for apps and reserves right now! Quinn is the only character taken and we'd love to see more join in the fun!

Text

CloudFront Now Supports gRPC Calls For Your Applications

Amazon CloudFront now accepts gRPC calls from your applications.

You can now place Amazon CloudFront, AWS's global content delivery network (CDN), in front of your gRPC API endpoints.

An Overview of gRPC

gRPC makes it easier to build distributed applications and services, because a client application can call a method on a server application on a different machine as if it were a local object. As in many RPC systems, gRPC is based on the idea of defining a service: specifying the methods that can be called remotely, along with their parameters and return types. On the server side, the server implements this interface and runs a gRPC server to handle client calls. On the client side, the client has a stub (referred to as just a client in some languages) that provides the same methods as the server.
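The stub idea can be illustrated without a network. In this Python sketch, GreeterStub exposes the same SayHello method as the server-side GreeterServicer and forwards each call as serialized bytes through a stand-in channel. The Greeter/SayHello names follow gRPC's hello-world convention, but every class here is hypothetical: the channel dispatches in process and uses JSON only to stay dependency-free, whereas a real channel sends protobuf bytes over HTTP/2.

```python
import json


class GreeterServicer:
    """Server side: implements the (hypothetical) Greeter service interface."""

    def SayHello(self, request: dict) -> dict:
        return {"message": f"Hello, {request['name']}!"}


class InProcessChannel:
    """Stand-in for a network channel that hands serialized bytes to a servicer.

    A real gRPC channel sends protobuf bytes over HTTP/2 to another process;
    JSON and an in-process call are used here only to stay dependency-free.
    """

    def __init__(self, servicer) -> None:
        self._servicer = servicer

    def unary_call(self, path: str, request_bytes: bytes) -> bytes:
        # "/helloworld.Greeter/SayHello" -> "SayHello"
        method_name = path.rsplit("/", 1)[-1]
        handler = getattr(self._servicer, method_name)
        response = handler(json.loads(request_bytes))
        return json.dumps(response).encode("utf-8")


class GreeterStub:
    """Client side: offers the same methods as the server, so calls look local."""

    def __init__(self, channel: InProcessChannel) -> None:
        self._channel = channel

    def SayHello(self, request: dict) -> dict:
        raw = self._channel.unary_call(
            "/helloworld.Greeter/SayHello", json.dumps(request).encode("utf-8")
        )
        return json.loads(raw)


stub = GreeterStub(InProcessChannel(GreeterServicer()))
reply = stub.SayHello({"name": "world"})  # reads like a local method call
print(reply)
```

The point of the design is that application code only ever touches the stub; swapping the in-process channel for a real network channel would not change the calling code.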

gRPC clients and servers can run and talk to each other in a variety of environments, from servers inside Google to your own desktop, and can be written in any of gRPC's supported languages. For example, you can easily create a gRPC server in Java with clients in Go, Python, or Ruby. In addition, the latest Google APIs will have gRPC versions of their interfaces, making it easy to build Google functionality into your applications.

Using Protocol Buffers

By default, gRPC uses Protocol Buffers, Google's mature open-source mechanism for serializing structured data, although it can also be used with other data formats such as JSON.

The first step when working with protocol buffers is to define the structure of the data you want to serialize in a proto file: an ordinary text file with a .proto extension. Protocol buffer data is structured as messages, where each message is a small logical record of information containing a series of name-value pairs called fields.

After you define your data structures, you use the protocol buffer compiler, protoc, to generate data access classes in your preferred language or languages from your proto definition. These provide simple accessors for each field, such as name() and set_name(), along with methods to serialize the whole structure to raw bytes and parse it back. For example, if your proto file defines a Person message and you choose C++ as your language, running the compiler generates a class called Person, which your application can then use to populate, serialize, and retrieve Person protocol buffer messages.
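To make "serialize to raw bytes" concrete, here is a hand-written Python sketch of the protocol buffer wire format for a hypothetical Person message with two fields, string name = 1 and int32 id = 2. Each field is written as a varint key, (field_number << 3) | wire_type, followed by either a varint value or a length-prefixed byte string. This is for illustration only: protoc-generated classes do this for you, and the sketch handles only non-negative integers and these two wire types.

```python
from typing import Tuple

WIRE_VARINT, WIRE_LEN = 0, 2  # protobuf wire types used by this sketch


def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 bits per byte, high bit set on all but the last byte."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative varints")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def decode_varint(data: bytes, pos: int) -> Tuple[int, int]:
    """Return (value, position just after the varint)."""
    result = shift = 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        shift += 7
        if not byte & 0x80:
            return result, pos


def encode_person(name: str, person_id: int) -> bytes:
    out = bytearray()
    if name:  # proto3 does not write fields that hold their default value
        raw = name.encode("utf-8")
        out += encode_varint((1 << 3) | WIRE_LEN) + encode_varint(len(raw)) + raw
    if person_id:
        out += encode_varint((2 << 3) | WIRE_VARINT) + encode_varint(person_id)
    return bytes(out)


def decode_person(data: bytes) -> dict:
    fields, pos = {}, 0
    while pos < len(data):
        key, pos = decode_varint(data, pos)
        number, wire_type = key >> 3, key & 0x7
        if wire_type == WIRE_VARINT:
            value, pos = decode_varint(data, pos)
        elif wire_type == WIRE_LEN:
            length, pos = decode_varint(data, pos)
            value, pos = data[pos:pos + length], pos + length
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        fields[number] = value  # unknown field numbers are kept but ignored below
    return {"name": fields.get(1, b"").decode("utf-8"), "id": fields.get(2, 0)}


encoded = encode_person("Ann", 150)
print(encoded.hex(" "), decode_person(encoded))
```

For name "Ann" and id 150, encode_person produces the 8 bytes 0a 03 41 6e 6e 10 96 01, compared with 26 bytes for the JSON text {"name": "Ann", "id": 150}. Field names never go on the wire, only field numbers, which is a large part of why protobuf payloads tend to be smaller.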

You define gRPC services in ordinary proto files, with RPC method parameters and return types specified as protocol buffer messages.

gRPC uses protoc with a special gRPC plugin to generate code from your proto file. The output includes the generated gRPC client and server code, as well as the regular protocol buffer code for populating, serializing, and retrieving your message types.

Versions of protocol buffers

Protocol buffers have been available to open source users for some time, but most gRPC examples use protocol buffers version 3 (proto3), which has a slightly simplified syntax, some useful new features, and support for more languages. Proto3 is currently available in Java, C++, Dart, Python, Objective-C, C#, a lite runtime (Android Java), Ruby, and JavaScript from the protocol buffers GitHub repository, as well as through a Go language generator from the golang/protobuf package, with more languages in development.

Although you can use proto2 (the current default protocol buffers version), the gRPC documentation recommends proto3, because it lets you use the full range of gRPC-supported languages and avoids compatibility issues between proto2 clients and proto3 servers.

What is gRPC?

gRPC is a modern, open-source, high-performance Remote Procedure Call (RPC) framework that can run in any environment. With pluggable support for load balancing, tracing, health checking, and authentication, it can efficiently connect services in and across data centers. It also applies to the last mile of distributed computing, connecting devices, mobile applications, and browsers to backend services.

Simple service definition

Define your service using Protocol Buffers, a powerful binary serialization toolset and language.

Start quickly and scale

Install the runtime and development environments with a single line, and scale to millions of RPCs per second with the framework.

Works across languages and platforms

Automatically generate idiomatic client and server stubs for your service in a variety of languages and platforms.

Bi-directional streaming and integrated authentication

Bi-directional streaming and fully integrated pluggable authentication with HTTP/2-based transport.

gRPC is a modern, efficient, language-neutral framework for building APIs. Its interface definition language (IDL), Protocol Buffers (protobuf), lets you design services and message types independently of any platform. With gRPC, remote procedure calls (RPCs) over HTTP/2 are lightweight and fast, which makes it a strong fit for microservice architectures that need efficient, low-latency communication between services.

gRPC provides features such as flow control, bidirectional streaming, and automatic code generation for multiple programming languages. It is a good fit when you need real-time data streaming, efficient communication, and high performance, and it can be an excellent option if your application handles large volumes of data or needs low-latency communication between client and server. However, it can be harder to master than REST: because gRPC uses the protobuf serialization format, developers must define their data structures and service methods in .proto files.

We see two advantages to putting CloudFront in front of your gRPC API endpoints.

First, it reduces latency between your API implementation and the client application. CloudFront provides a global network of more than 600 edge locations, with intelligent routing to the closest edge. Edge locations offer TLS termination and optional caching for your static content, and CloudFront forwards client application requests to your gRPC origin over the fully managed, high-bandwidth, low-latency private AWS network.

Second, your applications benefit from additional security services configured at the edge locations, such as traffic encryption, HTTP header validation by AWS Web Application Firewall (AWS WAF), and AWS Shield Standard protection against distributed denial of service (DDoS) attacks.

Pricing and Availability

gRPC origins are available at no additional cost in all of CloudFront's more than 600 edge locations. Standard request and data transfer charges apply.

Read more on govindhtech.com

#CloudFront#SupportsgRPC#Applications#Google#AmazonCloudFront#distributeddenialservice#DDoS#Accessibility#ProtocolBuffers#gRPC#technology#technews#news#govindhtech

Note

Hey there! I haven’t been in the grpc in YEARS but I’ve been really missing it lately; this looks so much like it used to back in the day and I’m really excited! Anyway, I’m thinking about Dave Karofsky, but I’m not sure which direction I’d go with him. Just curious if you have any FC’s in mind (or if his og fc, Max Adler, is still a viable option? Again, been so long since I’ve been a part of the community, I’m unsure!) I was also wondering how closely I should stick to his original canon storyline and how much I can headcanon or change slightly.

Hey there, thank you so much! Max Adler is definitely good to use, though if you'd like some alternate options for a FC, here's a list of some we think would work great as well: Oliver Stark, Brant Daugherty, Taron Egerton, Aaron Taylor-Johnson, Cody Christian, Derek Theler.

As for his character, the setting itself is very much "alternate universe" so specifics with storylines don't have to be a part of the character if you don't want to. It's more about keeping the canon vibe of the character, plus as the show was (for the most part) depicting them in high school and they're all adults in this verse, there's room to have growth within that character vibe as well. Hope this helps and we hope to see an app from you!
