#learn data warehousing

Get Industry-Ready with Data Warehouse Training

Introduction

In today’s data-driven world, businesses rely on structured information to make smart decisions. That’s where data warehousing comes in. A solid understanding of data warehouse concepts, architecture, and business intelligence (BI) tools can set the foundation for a strong career in IT and analytics.

For students and freshers aiming to enter the tech or business analytics industry, enrolling in a data warehousing course for beginners in Yamuna Vihar or Uttam Nagar can be a smart move.

Why Learn Data Warehousing?

Data warehousing is not just about storing data; it’s about organizing, analyzing, and making it useful for business strategies. Companies across the world use data warehouses to gain insights, spot trends, and plan for the future.

With growing demand for professionals skilled in data analysis, BI, and architecture, there’s a need for individuals trained in both basic and advanced concepts. Whether you’re from a technical or non-technical background, learning data warehouse technologies can make you job-ready.

What Will You Learn in a Data Warehouse Course?

A well-structured data warehouse and architecture course in Yamuna Vihar or Uttam Nagar teaches you the foundational and practical aspects of:

Data warehouse concepts and components

Dimensional schema design (star schema, snowflake schema)

ETL (Extract, Transform, Load) processes

Data modeling techniques

Business Intelligence and reporting tools

Project-based learning with real-world data
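The star schema and ETL topics in this list fit together in the following way. This is a minimal sketch that uses Python's built-in sqlite3 module as a stand-in for a real data warehouse. The table names (dim_product, fact_sales) and the sample sales records are hypothetical. Raw records are extracted, transformed (text trimmed, strings converted to numbers), and loaded into one dimension table and one fact table. The two tables are then joined for a typical aggregate report.

```python
import sqlite3

# Hypothetical raw extract, e.g. rows read from a CSV export of a sales system.
RAW_SALES = [
    {"product": " Laptop ", "category": "Electronics", "qty": "2", "price": "55000"},
    {"product": "Desk", "category": "Furniture", "qty": "1", "price": "12000"},
    {"product": "Laptop", "category": "Electronics", "qty": "1", "price": "55000"},
]


def transform(raw):
    """Transform: trim text fields and convert quantity/price strings to numbers."""
    return [
        (r["product"].strip(), r["category"].strip(), int(r["qty"]), float(r["price"]))
        for r in raw
    ]


def load(conn, rows):
    """Load: a product dimension table plus a sales fact table that references it."""
    conn.execute(
        "CREATE TABLE dim_product ("
        "product_id INTEGER PRIMARY KEY, name TEXT UNIQUE, category TEXT)"
    )
    conn.execute(
        "CREATE TABLE fact_sales ("
        "product_id INTEGER REFERENCES dim_product(product_id), qty INTEGER, amount REAL)"
    )
    for name, category, qty, price in rows:
        # Each product is stored once in the dimension; repeats reuse its surrogate key.
        conn.execute(
            "INSERT OR IGNORE INTO dim_product (name, category) VALUES (?, ?)",
            (name, category),
        )
        (product_id,) = conn.execute(
            "SELECT product_id FROM dim_product WHERE name = ?", (name,)
        ).fetchone()
        conn.execute(
            "INSERT INTO fact_sales (product_id, qty, amount) VALUES (?, ?, ?)",
            (product_id, qty, qty * price),
        )
    conn.commit()


def revenue_by_category(conn):
    """Typical star-schema query: join the fact table to a dimension and aggregate."""
    return conn.execute(
        """
        SELECT d.category, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_product AS d ON d.product_id = f.product_id
        GROUP BY d.category
        ORDER BY d.category
        """
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(conn, transform(RAW_SALES))
    print(revenue_by_category(conn))
```

A snowflake schema would normalize this further. For example, it could move category into its own dimension table referenced from dim_product.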

If you are looking for a professional data warehouse training course in Uttam Nagar or Yamuna Vihar, look for institutes offering a mix of theoretical understanding and hands-on practice.

Learning Options That Suit Your Schedule

For students who prefer flexible learning, there are options for the best data warehouse training online in Yamuna Vihar or Uttam Nagar. These courses allow you to learn at your own pace while still gaining the necessary practical skills.

Many learners in Yamuna Vihar and nearby areas also choose online data warehouse classes, especially those with live sessions, mentor guidance, and access to real datasets.

If you’re ready to take your skills to the next level, you might also consider an advanced data warehouse training program in Uttam Nagar. These programs are ideal for those who already have basic knowledge and want to deepen their understanding of architecture, tools, and design.

Career Paths After Completing the Course

Completing a program at a data warehouse and BI training institute in Yamuna Vihar or Uttam Nagar opens doors to a range of career opportunities, such as:

Data Analyst

BI Developer

Data Warehouse Engineer

ETL Developer

Reporting Analyst

Data Architect

Professionals with certifications and training from the top institute for data warehouse and architecture in Uttam Nagar are more likely to get noticed by recruiters, especially those hiring for MNCs and startups looking to build data teams.

Finding the Right Institute

When choosing a learning center, students should always look for the best data warehouse training institute in Uttam Nagar or Yamuna Vihar that offers:

Certified training programs

Updated curriculum with current industry tools

Live projects and assignments

Doubt-clearing sessions

Job support or placement assistance

These features ensure that your learning experience is not just academic but practical and aligned with real industry needs.

Final Thoughts

In a world where data is the new currency, having the right skills to store, manage, and analyze that data is incredibly valuable. Quality training in data warehouse architecture and design in Yamuna Vihar or Uttam Nagar can empower students and working professionals to build a strong career foundation in analytics and business intelligence.

Whether you're just starting out or upgrading your skills, now is the perfect time to enroll in a data warehousing course for beginners in Uttam Nagar or explore more advanced options in your local area. Get industry-ready. Get data-warehouse-ready.

#data warehouse#learn data warehousing#data warehouse corse#learn data mining#data mining techniques

Unlock powerful decision-making with MindCoders’ Data Analytics Course! Gain hands-on expertise in SQL, Python, R, Tableau/Power BI, statistical modeling, and data storytelling through real-world projects. Demand for analytics professionals is surging: businesses across finance, healthcare, e-commerce, and more rely on data insights to drive innovation and efficiency. Equip yourself with in-demand skills to analyze complex datasets, visualize trends, and deliver actionable business outcomes, future-proofing your career in one of the fastest-growing fields worldwide.

#data analytics#business intelligence#data visualization#predictive analytics#big data analytics#data mining#machine learning#data warehousing#data analysis tools#data-driven decision making

Deep Learning Solutions for Real-World Applications: Trends and Insights

Deep learning is revolutionizing industries by enabling machines to process and analyze vast amounts of data with unprecedented accuracy. As AI-powered solutions continue to advance, deep learning is being widely adopted across various sectors, including healthcare, finance, manufacturing, and retail. This article explores the latest trends in deep learning, its real-world applications, and key insights into its transformative potential.

Understanding Deep Learning in Real-World Applications

Deep learning, a subset of machine learning, uses artificial neural networks (ANNs) with many stacked layers, loosely inspired by the way biological neurons process information. These networks learn from large datasets, enabling AI systems to recognize patterns, make predictions, and automate complex tasks.

The adoption of deep learning is driven by its ability to:

Process unstructured data such as images, text, and speech.

Improve accuracy with more data and computational power.

Adapt to real-world challenges with minimal human intervention.

With these capabilities, deep learning is shaping the future of AI across industries.

Key Trends in Deep Learning Solutions

1. AI-Powered Automation

Deep learning is driving automation by enabling machines to perform tasks that traditionally required human intelligence. Industries are leveraging AI to optimize workflows, reduce operational costs, and improve efficiency.

Manufacturing: AI-driven robots are enhancing production lines with automated quality inspection.

Customer Service: AI chatbots and virtual assistants are improving customer engagement.

Healthcare: AI automates medical imaging analysis for faster diagnosis.

2. Edge AI and On-Device Processing

Deep learning models are increasingly deployed on edge devices, reducing dependence on cloud computing. This trend enhances:

Real-time decision-making in autonomous systems.

Faster processing in mobile applications and IoT devices.

Privacy and security by keeping data local.

3. Explainable AI (XAI)

As deep learning solutions become integral to critical applications like finance and healthcare, explainability and transparency are essential. Researchers are developing Explainable AI (XAI) techniques to make deep learning models more interpretable, ensuring fairness and trustworthiness.

4. Generative AI and Creative Applications

Generative AI models, such as GPT (text generation) and DALL·E (image synthesis), are transforming creative fields. Businesses are leveraging AI for:

Content creation (automated writing and design).

Marketing and advertising (personalized campaigns).

Music and video generation (AI-assisted production).

5. Self-Supervised and Few-Shot Learning

AI models traditionally require massive datasets for training. Self-supervised learning and few-shot learning are emerging to help AI learn from limited labeled data, making deep learning solutions more accessible and efficient.

Real-World Applications of Deep Learning Solutions

1. Healthcare and Medical Diagnostics

Deep learning is transforming healthcare by enabling AI-powered diagnostics, personalized treatments, and drug discovery.

Medical Imaging: AI detects abnormalities in X-rays, MRIs, and CT scans.

Disease Prediction: AI models predict conditions like cancer and heart disease.

Telemedicine: AI chatbots assist in virtual health consultations.

2. Financial Services and Fraud Detection

Deep learning enhances risk assessment, automated trading, and fraud detection in the finance sector.

AI-Powered Fraud Detection: AI analyzes transaction patterns to flag potentially fraudulent activity.

Algorithmic Trading: Deep learning models attempt to forecast price movements, although consistently accurate market prediction remains difficult.

Credit Scoring: AI evaluates creditworthiness based on financial behavior.

3. Retail and E-Commerce

Retailers use deep learning for customer insights, inventory optimization, and personalized shopping experiences.

AI-Based Product Recommendations: AI suggests products based on user behavior.

Automated Checkout Systems: AI-powered cameras and sensors enable cashier-less stores.

Demand Forecasting: Deep learning predicts inventory needs for efficient supply chain management.

4. Smart Manufacturing and Industrial Automation

Deep learning improves quality control, predictive maintenance, and process automation in manufacturing.

Defect Detection: AI inspects products for defects in real-time.

Predictive Maintenance: AI predicts machine failures, reducing downtime.

Robotic Process Automation (RPA): Software bots, increasingly combined with AI, automate repetitive rule-based tasks such as order processing and production reporting.

5. Transportation and Autonomous Vehicles

Self-driving cars and smart transportation systems rely on deep learning for real-time decision-making and navigation.

Autonomous Vehicles: AI processes sensor data to detect obstacles and navigate safely.

Traffic Optimization: AI analyzes traffic patterns to improve city traffic management.

Smart Logistics: AI-powered route optimization reduces delivery costs.

6. Cybersecurity and Threat Detection

Deep learning strengthens cybersecurity defenses by detecting anomalies and preventing cyber attacks.

AI-Powered Threat Detection: Identifies suspicious activities in real time.

Biometric Authentication: AI enhances security through facial and fingerprint recognition.

Malware Detection: Deep learning models analyze patterns to identify potential cyber threats.

7. Agriculture and Precision Farming

AI-driven deep learning is improving crop monitoring, yield prediction, and pest detection.

Automated Crop Monitoring: AI analyzes satellite images to assess crop health.

Smart Irrigation Systems: AI optimizes water usage based on weather conditions.

Disease and Pest Detection: AI detects plant diseases early, reducing crop loss.

Key Insights into the Future of Deep Learning Solutions

1. AI Democratization

With the rise of open-source AI frameworks like TensorFlow and PyTorch, deep learning solutions are becoming more accessible to businesses of all sizes. This democratization of AI is accelerating innovation across industries.

2. Ethical AI Development

As AI adoption grows, concerns about bias, fairness, and privacy are increasing. Ethical AI development will focus on creating fair, transparent, and accountable deep learning solutions.

3. Human-AI Collaboration

Rather than replacing humans, deep learning solutions will enhance human capabilities by automating repetitive tasks and enabling AI-assisted decision-making.

4. AI in Edge Computing and 5G Networks

The integration of AI with edge computing and 5G will enable faster data processing, real-time analytics, and enhanced connectivity for AI-powered applications.

Conclusion

Deep learning solutions are transforming industries by enhancing automation, improving efficiency, and unlocking new possibilities in AI. From healthcare and finance to retail and cybersecurity, deep learning is solving real-world problems with remarkable accuracy and intelligence.

As technology continues to advance, businesses that leverage deep learning solutions will gain a competitive edge, driving innovation, efficiency, and smarter decision-making. The future of AI is unfolding rapidly, and deep learning remains at the heart of this transformation.

Stay ahead in the AI revolution—explore the latest trends and insights in deep learning today!

#Deep learning solutions#Big Data and Data Warehousing service#Data visualization#Predictive Analytics#Data Mining#Deep Learning

Unveiling the Power of Delta Lake in Microsoft Fabric

Discover how Microsoft Fabric and Delta Lake can revolutionize your data management and analytics. Learn to optimize data ingestion with Spark and unlock the full potential of your data for smarter decision-making.

In today’s digital era, data is the new gold. Companies are constantly searching for ways to efficiently manage and analyze vast amounts of information to drive decision-making and innovation. However, with the growing volume and variety of data, traditional data processing methods often fall short. This is where Microsoft Fabric, Apache Spark and Delta Lake come into play. These powerful…

#ACID Transactions#Apache Spark#Big Data#Data Analytics#data engineering#Data Governance#Data Ingestion#Data Integration#Data Lakehouse#Data management#Data Pipelines#Data Processing#Data Science#Data Warehousing#Delta Lake#machine learning#Microsoft Fabric#Real-Time Analytics#Unified Data Platform

Explore the seamless integration of AWS Data Warehouse and Machine Learning in our comprehensive guide. Learn the essentials of AWS data warehousing and unlock the power of dynamic insights.

What Is the Difference Between Data Scientists and Data Engineers?

In today’s data-driven world, organizations harness the power of data to gain valuable insights, make informed decisions, and drive innovation. Two key players in this data-centric landscape are data scientists and data engineers. Although their roles are closely related, each possesses unique skills and responsibilities that contribute to the successful extraction and utilization of data. In…

#Big Data#Business Intelligence#Data Analytics#Data Architecture#Data Compliance#Data Engineering#Data Infrastructure#Data Insights#Data Integration#Data Mining#Data Pipelines#Data Science#data security#Data Visualization#Data Warehousing#Data-driven Decision Making#Exploratory Data Analysis (EDA)#Machine Learning#Predictive Analytics

SQL for Hadoop: Mastering Hive and SparkSQL

In the ever-evolving world of big data, having the ability to efficiently query and analyze data is crucial. SQL, or Structured Query Language, has been the backbone of data manipulation for decades. But how does SQL adapt to the massive datasets found in Hadoop environments? Enter Hive and SparkSQL—two powerful tools that bring SQL capabilities to Hadoop. In this blog, we'll explore how you can master these query languages to unlock the full potential of your data.

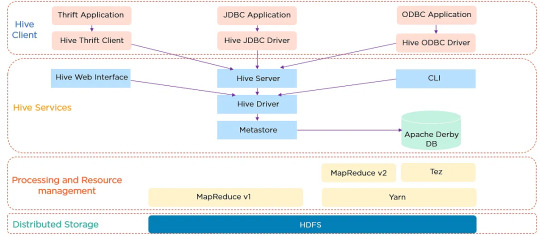

Hive Architecture and Data Warehouse Concept

Apache Hive is data warehouse software built on top of Hadoop. It provides an SQL-like interface to query and manage large datasets residing in distributed storage such as HDFS. Hive's architecture is designed to make reading, writing, and managing large datasets easier. It consists of three main components. The Hive Metastore stores metadata about tables, partitions, and schemas. The Hive Driver receives queries, then compiles, optimizes, and coordinates their execution. The execution engine (MapReduce, Apache Tez, or Spark) runs the resulting jobs on the cluster.

Hive Architecture

Hive's data warehouse concept revolves around the idea of abstracting the complexity of distributed storage and processing, allowing users to focus on the data itself. This abstraction makes it easier for users to write queries without needing to know the intricacies of Hadoop.

Writing HiveQL Queries

HiveQL, or Hive Query Language, is a SQL-like query language that allows users to query data stored in Hadoop. While similar to SQL, HiveQL is specifically designed to handle the complexities of big data. Here are some basic HiveQL queries to get you started:

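Creating a table (the ROW FORMAT clause tells Hive to split each line on commas; without it, Hive's default field delimiter is Ctrl-A (\001), and a CSV file would load as NULL values. DECIMAL is used for salary to avoid floating-point rounding):

```sql
CREATE TABLE employees (id INT, name STRING, salary DECIMAL(10,2))
ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE;
```

Loading data (INPATH moves the file from its HDFS location into the table's directory; use LOAD DATA LOCAL INPATH to copy a file from the local filesystem instead):

```sql
LOAD DATA INPATH '/user/hive/data/employees.csv' INTO TABLE employees;
```

Querying data:

```sql
SELECT name, salary FROM employees WHERE salary > 50000;
```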

HiveQL supports a wide range of functions and features, including joins, group by, and aggregations, making it a versatile tool for data analysis.

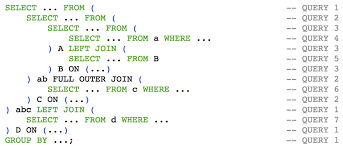

HiveQL Queries

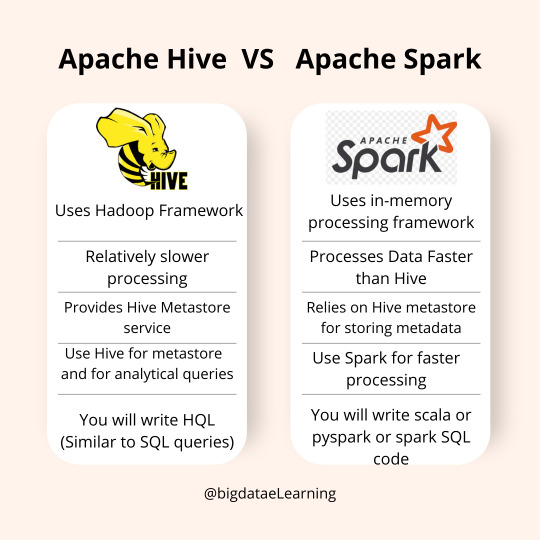

SparkSQL vs HiveQL: Similarities & Differences

Both SparkSQL and HiveQL offer SQL-like querying capabilities, but they have distinct differences:

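Execution Engine: HiveQL queries were traditionally compiled into Hadoop MapReduce jobs. These jobs can be slow because each stage writes intermediate results to disk. MapReduce execution is deprecated in Hive 2 and later, and Hive deployments commonly run on Apache Tez or Spark instead. SparkSQL, on the other hand, leverages Apache Spark's in-memory computing, which often results in faster query execution.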

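Ease of Use: Both accept familiar SQL syntax. SparkSQL queries can be written as plain SQL, for example through spark.sql() or the spark-sql shell. However, getting the most out of SparkSQL usually involves some familiarity with Spark's DataFrame API.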

Integration: SparkSQL integrates well with Spark's ecosystem, allowing for seamless data processing and machine learning tasks. HiveQL is more focused on data warehousing and batch processing.

Despite these differences, both languages provide powerful tools for interacting with big data, and knowing when to use each is key to mastering them.

SparkSQL vs HiveQL

Running SQL Queries on Massive Distributed Data

Running SQL queries on massive datasets requires careful consideration of performance and efficiency. Hive and SparkSQL both offer powerful mechanisms to optimize query execution, such as partitioning and bucketing.

Partitioning, Bucketing, and Performance Tuning

Partitioning and bucketing are techniques used to optimize query performance in Hive and SparkSQL:

Partitioning: Divides data into distinct subsets, allowing queries to skip irrelevant partitions and reduce the amount of data scanned. For example, partitioning by date can significantly speed up queries that filter by specific time ranges.

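Bucketing: Splits data into a fixed number of buckets based on a hash of a chosen column. If the table is partitioned, this happens within each partition. When two tables are bucketed on the same join key with compatible bucket counts, rows with matching keys fall into corresponding buckets. A join can then process bucket pairs instead of shuffling the full datasets.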

Performance tuning in Hive and SparkSQL involves understanding and leveraging these techniques, along with optimizing query logic and resource allocation.

Hive and SparkSQL Partitioning & Bucketing
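Partitioning and bucketing can be simulated in a few lines of plain Python. This is a minimal sketch, not how Hive or Spark implement them. The sample rows, the hire_date partition column, the id bucketing column, and the bucket count of 4 are all hypothetical. A simple modulo of the integer key stands in for the engine's real hash function. The sketch shows that a date filter only needs to read one partition, and that two tables bucketed on the same key can be joined one bucket pair at a time.

```python
from collections import defaultdict

NUM_BUCKETS = 4  # hypothetical bucket count, like CLUSTERED BY (id) INTO 4 BUCKETS

# Hypothetical tables: employees(id, name, hire_date) and salaries(id, salary).
EMPLOYEES = [
    (101, "Asha", "2024-01-15"),
    (102, "Ravi", "2024-01-15"),
    (103, "Meena", "2024-02-01"),
    (104, "Karan", "2024-02-01"),
    (105, "Divya", "2024-03-10"),
]
SALARIES = [(101, 52000), (103, 61000), (105, 48000)]


def partition_by(rows, col):
    """Group rows by a partition column, like one storage directory per value."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row[col]].append(row)
    return dict(partitions)


def scan_partition(partitions, value):
    """Partition pruning: a filter on the partition column reads only one group."""
    return partitions.get(value, [])


def bucket_of(key, num_buckets=NUM_BUCKETS):
    """Stand-in hash for non-negative int keys (not Hive's exact bucketing function)."""
    return key % num_buckets


def bucketize(rows, num_buckets=NUM_BUCKETS):
    """Split rows into a fixed number of buckets using the first column (id) as key."""
    buckets = [[] for _ in range(num_buckets)]
    for row in rows:
        buckets[bucket_of(row[0], num_buckets)].append(row)
    return buckets


def bucketed_join(left, right, num_buckets=NUM_BUCKETS):
    """Join two tables bucketed on the same key by pairing bucket i with bucket i only."""
    joined = []
    for lb, rb in zip(bucketize(left, num_buckets), bucketize(right, num_buckets)):
        lookup = {row[0]: row[1:] for row in rb}  # small hash table per bucket
        for row in lb:
            if row[0] in lookup:
                joined.append(row + lookup[row[0]])
    return joined


if __name__ == "__main__":
    by_date = partition_by(EMPLOYEES, col=2)
    print(scan_partition(by_date, "2024-02-01"))  # only the February partition is read
    print(bucketed_join(EMPLOYEES, SALARIES))
```

Every row with a given id lands in the same bucket index in both tables. As a result, each bucket pair can be joined independently (and in parallel) without comparing rows across buckets. This only holds when both tables use the same hash function and compatible bucket counts.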

FAQ

1. What is the primary use of Hive in a Hadoop environment? Hive is primarily used as a data warehousing solution, enabling users to query and manage large datasets with an SQL-like interface.

2. Can HiveQL and SparkSQL be used interchangeably? While both offer SQL-like querying capabilities, they have different execution engines and integration capabilities. HiveQL is suited for batch processing, while SparkSQL excels in in-memory data processing.

3. How do partitioning and bucketing improve query performance? Partitioning reduces the data scanned by dividing it into subsets, while bucketing organizes data within partitions, optimizing joins and aggregations.

4. Is it necessary to know Java or Scala to use SparkSQL? No, SparkSQL can be used with Python, R, and SQL, though understanding Spark's APIs in Java or Scala can provide additional flexibility.

5. How does SparkSQL achieve faster query execution compared to HiveQL? SparkSQL utilizes Apache Spark's in-memory computation, reducing the latency associated with disk I/O and providing faster query execution times.

#Hive#SparkSQL#DistributedComputing#BigDataProcessing#SQLOnBigData#ApacheSpark#HadoopEcosystem#DataAnalytics#SunshineDigitalServices#TechForAnalysts#Instagram

Supply Chain Management in India by Everfast Freight: Driving Efficiency, Reliability, and Growth

In today’s fast-paced global economy, efficient supply chain management (SCM) is not just a competitive advantage—it’s a necessity. In India, where logistics is the backbone of a rapidly growing economy, choosing the right supply chain partner can make or break a business. Everfast Freight stands out as a trusted name, delivering end-to-end supply chain management solutions in India tailored to diverse business needs.

Why Supply Chain Management Matters in India

India's dynamic business landscape, with its vast geography, diverse consumer base, and evolving infrastructure, demands smart, scalable, and efficient logistics solutions. From e-commerce giants to manufacturing units, every business requires a streamlined supply chain to:

Minimize delivery timelines

Reduce operational costs

Optimize inventory levels

Enhance customer satisfaction

That’s where Everfast Freight comes in—with a commitment to innovation, transparency, and on-time delivery.

Everfast Freight: Leaders in Supply Chain Management in India

With years of experience in logistics and freight forwarding, Everfast Freight offers comprehensive supply chain management services in India that include:

1. Warehousing & Inventory Management

Everfast Freight provides strategically located warehouses across India with advanced inventory tracking systems. This allows clients to manage stock levels effectively and reduce holding costs.

2. Transportation & Distribution

Our robust transportation network covers every major city and remote region. Whether it's road, air, rail, or sea, we ensure seamless movement of goods with real-time tracking and reduced transit times.

3. Customs Clearance & Documentation

Navigating India’s complex customs regulations can be challenging. Our in-house experts simplify the process, ensuring timely clearances and hassle-free international shipments.

4. Last-Mile Delivery

Timely delivery is the key to customer satisfaction. Our last-mile delivery solutions are optimized for speed, cost-efficiency, and reliability, especially for e-commerce and retail clients.

5. Technology-Driven SCM

We use cutting-edge logistics tech including IoT-enabled tracking, AI-based forecasting, and data analytics to provide full visibility and smarter decision-making for your supply chain.

Industries We Serve

Everfast Freight’s supply chain management solutions are tailored for a wide range of sectors, including:

E-commerce & Retail

Pharmaceuticals & Healthcare

FMCG & Consumer Goods

Manufacturing & Industrial Goods

Automotive & Engineering

Why Choose Everfast Freight?

Pan-India Network: Extensive reach across India’s Tier-1, Tier-2, and Tier-3 cities

Expertise & Experience: Decades of logistics and freight forwarding excellence

Real-Time Tracking: 24/7 visibility into every shipment

Customized Solutions: SCM plans tailored to your business model

Cost-Effective & Scalable: Optimize costs without compromising on speed or quality

Empower Your Business with Everfast Freight

Whether you're a startup scaling operations or an established enterprise expanding across India, Everfast Freight is your reliable logistics partner for supply chain management in India. We don’t just deliver packages—we deliver peace of mind, performance, and growth.

📞 Get in touch today to learn how we can streamline your supply chain operations.

#logistics#shipping#freightforwarding#transportation#cargo services#sea freight#cargo shipping#air cargo#custom-clearance#everfast

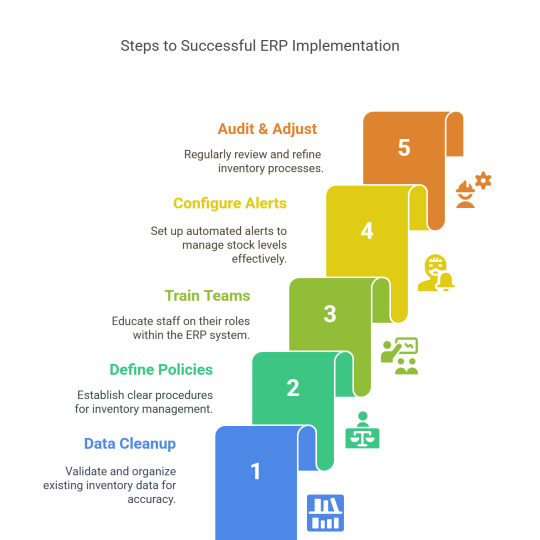

Better Cement Inventory Starts with Better Data

Sigzen’s ERPNext-based inventory management module is the key to smart warehousing and optimized production in cement.

📌 Learn how ERP is driving inventory success: 🔗 https://medium.com/@sigzencement/inventory-management-in-the-cement-industry-unlocking-efficiency-with-stock-inventory-management-003da22193dc

#erpnext#erpsoftware#businessefficiency#inventorymanagement#erpnextimplementation#erpnextintegration#erp#erpsolutions

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/ Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

Empowering Businesses with Comprehensive Data Analytics Services

In today’s digital landscape, the significance of data-driven decision-making cannot be overstated. As organizations grapple with an overwhelming influx of data, the ability to harness, manage, and analyze this information effectively is key to gaining a competitive advantage. **Data analytics services** have emerged as essential tools that enable companies to transform raw data into valuable insights, driving strategic growth and operational efficiency.

The Role of Data Management in Modern Enterprises

Effective data management lies at the heart of any successful data analytics strategy. It involves systematically organizing, storing, and protecting data to ensure its quality and accessibility. A robust data management framework not only supports data compliance but also allows for the seamless integration of data from various sources, making it possible for businesses to have a unified view of their operations. This foundational layer is critical for maximizing the potential of data analytics services.

Turning Raw Data into Strategic Insights

Raw data, on its own, has little value unless it is processed and analyzed to reveal trends and patterns that can inform business strategies. Data analytics services help organizations unlock the true value of their data by converting it into actionable insights. These services leverage sophisticated techniques such as predictive analytics, data mining, and statistical modeling to deliver deeper insights into customer behavior, market trends, and operational efficiencies.

By employing data analytics, companies can optimize their decision-making processes, anticipate market changes, and enhance their products or services based on customer needs and feedback. This approach ensures that businesses stay ahead of the curve in an ever-evolving market landscape.

Driving Innovation Through Advanced Analytics

Data analytics services are not only about analyzing past performance; they are also instrumental in shaping the future. By integrating advanced analytics techniques, such as machine learning and artificial intelligence, businesses can identify emerging patterns and predict future outcomes with higher accuracy. This predictive capability enables organizations to mitigate risks, identify new revenue streams, and innovate more effectively.

The integration of real-time analytics further enhances a company’s ability to respond promptly to changing market conditions. It empowers decision-makers to take immediate actions based on the latest data insights, ensuring agility and resilience in dynamic environments.

Implementing a Data-Driven Culture

For businesses to truly benefit from data analytics services, it is crucial to foster a data-driven culture. This involves training teams to understand the value of data and encouraging data-centric decision-making across all levels of the organization. A culture that prioritizes data-driven insights helps break down silos, promotes transparency, and supports continuous improvement.

Organizations that embrace a data-driven mindset are better positioned to leverage analytics to drive strategic growth and deliver superior customer experiences.

Partnering for Success

Choosing the right partner for data management and analytics is vital. Companies like SG Analytics offer a range of tailored solutions designed to help organizations manage their data more effectively and gain valuable insights. From data warehousing and data integration to advanced analytics, these services provide end-to-end support, ensuring businesses can make the most of their data assets.

By leveraging data analytics services from experienced partners, companies can focus on their core objectives while simultaneously evolving into data-driven enterprises that thrive in the digital age.

Conclusion

In the era of big data, the ability to transform information into insights is a crucial differentiator. With comprehensive data analytics services, businesses can harness the power of their data to drive informed decisions, innovate, and maintain a competitive edge. The key lies in effective data management, advanced analytics, and fostering a culture that values data-driven insights.

AWS Security 101: Protecting Your Cloud Investments

In the ever-evolving landscape of technology, few names resonate as strongly as Amazon.com. This global giant, known for its e-commerce prowess, has a lesser-known but equally influential arm: Amazon Web Services (AWS). AWS is a powerhouse in the world of cloud computing, offering a vast and sophisticated array of services and products. In this comprehensive guide, we'll embark on a journey to explore the facets and features of AWS that make it a driving force for individuals, companies, and organizations seeking to utilise cloud computing to its fullest capacity.

Amazon Web Services (AWS): A Technological Titan

At its core, AWS is a cloud computing platform that empowers users to create, deploy, and manage applications and infrastructure with unparalleled scalability, flexibility, and cost-effectiveness. It's not just a platform; it's a digital transformation enabler. Let's dive deeper into some of the key components and features that define AWS:

1. Compute Services: The Heart of Scalability

AWS boasts services like Amazon EC2 (Elastic Compute Cloud), a scalable virtual server solution, and AWS Lambda for serverless computing. These services provide users with the capability to efficiently run applications and workloads with precision and ease. Whether you need to host a simple website or power a complex data-processing application, AWS's compute services have you covered.
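Here is a rough illustration of the serverless model. A Python Lambda function is simply a handler that receives an `event` payload and a `context` object. The sketch below assumes the console's default handler name `lambda_handler` and an API Gateway-style response. The `name` field in the event is hypothetical.

```python
import json


def lambda_handler(event, context):
    """Entry point Lambda calls once per event (handler name is set in the function config)."""
    # 'name' is a hypothetical field in the incoming event payload.
    name = event.get("name", "world")
    # statusCode/body is the shape API Gateway proxy integrations expect;
    # other triggers (S3, SQS, ...) may ignore the return value.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: in AWS, Lambda supplies a real context object.
    print(lambda_handler({"name": "AWS"}, None))
```

You pay only while the handler runs. Scaling comes from AWS starting more concurrent copies of it, not from you provisioning servers.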

2. Storage Services: Your Data's Secure Haven

In the age of data, storage is paramount. AWS offers a diverse set of storage options. Amazon S3 (Simple Storage Service) caters to scalable object storage needs, while Amazon EBS (Elastic Block Store) is ideal for block storage requirements. For archival purposes, the Amazon S3 Glacier storage classes are the go-to solution. This comprehensive array of storage choices ensures that diverse storage needs are met and your data is stored securely.

3. Database Services: Managing Complexity with Ease

AWS provides managed database services that simplify the complexity of database management. Amazon RDS (Relational Database Service) is perfect for relational databases, while Amazon DynamoDB offers a seamless solution for NoSQL databases. Amazon Redshift, on the other hand, caters to data warehousing needs. These services take the headache out of database administration, allowing you to focus on innovation.

4. Networking Services: Building Strong Connections

Network isolation and robust networking capabilities are made easy with Amazon VPC (Virtual Private Cloud). AWS Direct Connect facilitates dedicated network connections, and Amazon Route 53 takes care of DNS services, ensuring that your network needs are comprehensively addressed. In an era where connectivity is king, AWS's networking services rule the realm.
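This minimal sketch makes VPC network planning concrete. It uses Python's standard `ipaddress` module to carve a VPC range into subnets. The `10.0.0.0/16` block and the /24 subnet size are illustrative assumptions. The constant of five reserved addresses per subnet reflects AWS reserving the first four addresses and the last address in each subnet.

```python
import ipaddress
from itertools import islice

# Illustrative VPC range (an RFC 1918 private block).
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")

# AWS reserves the first four addresses and the last address of every subnet.
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc, new_prefix, count):
    """Return the first `count` subnets of size /new_prefix carved from `vpc`."""
    return list(islice(vpc.subnets(new_prefix=new_prefix), count))


def usable_hosts(subnet):
    """Addresses left for instances after AWS's per-subnet reservation."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET


if __name__ == "__main__":
    for subnet in plan_subnets(VPC_CIDR, 24, 4):
        print(subnet, "usable:", usable_hosts(subnet))
```

Running it lists 10.0.0.0/24 through 10.0.3.0/24. Each subnet has 251 usable addresses.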

5. Security and Identity: Fortifying the Digital Fortress

In a world where data security is non-negotiable, AWS prioritizes security with services like AWS IAM (Identity and Access Management) for access control and AWS KMS (Key Management Service) for encryption key management. Your data remains fortified, and access is strictly controlled, giving you peace of mind in the digital age.
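In IAM, access control is expressed as JSON policy documents. The sketch below builds a least-privilege, read-only policy for a single S3 bucket. The bucket name is hypothetical. In practice you would attach the resulting JSON to a role or user rather than print it.

```python
import json

# Hypothetical bucket name used only for illustration.
BUCKET = "example-reports-bucket"


def read_only_bucket_policy(bucket):
    """Build an identity-based policy that allows listing and reading one bucket."""
    return {
        "Version": "2012-10-17",  # current IAM policy language version
        "Statement": [
            {
                # ListBucket applies to the bucket ARN itself.
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # GetObject applies to the objects inside the bucket.
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(read_only_bucket_policy(BUCKET), indent=2))
```

Anything not explicitly allowed is implicitly denied. Granting only these two actions is the "strictly controlled" access the paragraph describes.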

6. Analytics and Machine Learning: Unleashing the Power of Data

In the era of big data and machine learning, AWS is at the forefront. Services like Amazon EMR (Elastic MapReduce) handle big data processing, while Amazon SageMaker provides the tools for developing and training machine learning models. Your data becomes a strategic asset, and innovation knows no bounds.

7. Application Integration: Seamlessness in Action

AWS fosters seamless application integration with services like Amazon SQS (Simple Queue Service) for message queuing and Amazon SNS (Simple Notification Service) for event-driven communication. Your applications work together harmoniously, creating a cohesive digital ecosystem.

8. Developer Tools: Powering Innovation

AWS equips developers with a suite of powerful tools, including AWS CodeDeploy, AWS CodeCommit, and AWS CodeBuild. These tools simplify software development and deployment processes, allowing your teams to focus on innovation and productivity.

9. Management and Monitoring: Streamlined Resource Control

Effective resource management and monitoring are facilitated by AWS CloudWatch for monitoring and AWS CloudFormation for infrastructure as code (IaC) management. Managing your cloud resources becomes a streamlined and efficient process, reducing operational overhead.
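With CloudFormation, infrastructure as code means declaring resources in a template (JSON or YAML) and letting the service create them. This sketch generates a minimal JSON template for one versioned S3 bucket. The logical ID `ReportsBucket` is an arbitrary, hypothetical choice. Deploying the template would still require the AWS CLI or console.

```python
import json


def bucket_template(logical_id="ReportsBucket"):
    """Return a minimal CloudFormation template declaring one versioned S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative single-bucket stack",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Keep prior object versions so overwrites and deletes are recoverable.
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
        "Outputs": {
            # Ref on an S3 bucket resolves to the generated bucket name.
            "BucketName": {"Value": {"Ref": logical_id}},
        },
    }


if __name__ == "__main__":
    # Print the JSON you would hand to CloudFormation.
    print(json.dumps(bucket_template(), indent=2))
```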

10. Global Reach: Empowering Global Presence

AWS groups its data centers into Availability Zones across multiple regions worldwide, which lets users deploy applications close to end users. This reduces latency and improves performance, both crucial for global digital operations.

In conclusion, Amazon Web Services (AWS) is not just a cloud computing platform; it's a technological titan that empowers organizations and individuals to harness the full potential of cloud computing. Whether you're an aspiring IT professional looking to build a career in the cloud or a seasoned expert seeking to sharpen your skills, understanding AWS is paramount.

In today's technology-driven landscape, AWS expertise opens doors to endless opportunities. At ACTE Institute, we recognize the transformative power of AWS, and we offer comprehensive training programs to help individuals and organizations master the AWS platform. We are your trusted partner on the journey of continuous learning and professional growth. Embrace AWS, embark on a path of limitless possibilities in the world of technology, and let ACTE Institute be your guiding light. Your potential awaits, and together, we can reach new heights in the ever-evolving world of cloud computing. Welcome to the AWS Advantage, and let's explore the boundless horizons of technology together!

Data Engineering Concepts, Tools, and Projects

Every organization in the world has large amounts of data. If it is not processed and analyzed, this data does not amount to anything. Data engineers are the ones who make this data fit for use. Data engineering refers to the process of developing, operating, and maintaining software systems that collect, analyze, and store an organization's data. In modern data analytics, data engineers build data pipelines, which form the infrastructure backbone.

How to become a data engineer:

While there is no specific degree requirement for data engineering, a bachelor's or master's degree in computer science, software engineering, information systems, or a related field can provide a solid foundation. Courses in databases, programming, data structures, algorithms, and statistics are particularly beneficial. Data engineers should have strong programming skills. Focus on languages commonly used in data engineering, such as Python, SQL, and Scala. Learn the basics of data manipulation, scripting, and querying databases.

Familiarize yourself with various database systems, such as MySQL and PostgreSQL, and with NoSQL databases such as MongoDB or Apache Cassandra. Build knowledge of data warehousing concepts, including schema design, indexing, and optimization techniques.
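The following small star schema illustrates schema design and indexing: one fact table and two dimension tables, built with Python's built-in `sqlite3`. SQLite is only a stand-in for a real warehouse such as Redshift or Snowflake. All table names and rows are hypothetical.

```python
import sqlite3

SCHEMA = """
CREATE TABLE dim_product (
    product_id INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    category   TEXT NOT NULL
);
CREATE TABLE dim_date (
    date_id INTEGER PRIMARY KEY,   -- e.g. 20240115
    year    INTEGER NOT NULL,
    month   INTEGER NOT NULL
);
CREATE TABLE fact_sales (
    product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
    date_id    INTEGER NOT NULL REFERENCES dim_date(date_id),
    quantity   INTEGER NOT NULL,
    amount     REAL    NOT NULL
);
-- Index the foreign keys that queries join and filter on most often.
CREATE INDEX idx_sales_product ON fact_sales(product_id);
CREATE INDEX idx_sales_date    ON fact_sales(date_id);
"""


def build_warehouse():
    """Create an in-memory star schema and load a few hypothetical rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)", [
        (1, "Laptop", "Electronics"),
        (2, "Phone", "Electronics"),
        (3, "Desk", "Furniture"),
    ])
    conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?)", [
        (20240115, 2024, 1),
        (20240210, 2024, 2),
    ])
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)", [
        (1, 20240115, 2, 2000.0),
        (2, 20240115, 1, 500.0),
        (3, 20240210, 1, 300.0),
        (1, 20240210, 1, 1000.0),
    ])
    conn.commit()
    return conn


def revenue_by_category(conn):
    """Typical star-schema query: join the fact table to a dimension, then aggregate."""
    return conn.execute(
        """
        SELECT p.category, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_product AS p ON f.product_id = p.product_id
        GROUP BY p.category
        ORDER BY p.category
        """
    ).fetchall()


if __name__ == "__main__":
    print(revenue_by_category(build_warehouse()))
```

Facts (measurable events) stay narrow and numeric. Descriptive attributes live in the dimensions. This keeps the big table compact and makes the indexed joins cheap.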

Data engineering tools recommendations:

Data engineering uses a variety of languages and tools to accomplish its objectives. These tools let data engineers carry out tasks such as building pipelines and algorithms more easily and effectively.

1. Amazon Redshift: A widely used cloud data warehouse built by Amazon, Redshift is the go-to choice for many teams and businesses. It is a comprehensive tool that enables the setup and scaling of data warehouses, making it incredibly easy to use.

One of the most popular tools for business purposes is Amazon Redshift, which provides a powerful platform for managing large amounts of data. It allows users to quickly analyze complex datasets, build models that can be used for predictive analytics, and create visualizations that make results easier to interpret. With its scalability and flexibility, Amazon Redshift has become one of the go-to solutions for data engineering tasks.

2. BigQuery: Like Redshift, BigQuery is a cloud data warehouse, fully managed by Google. It is especially favored by companies that already have experience with Google Cloud Platform. BigQuery not only scales but also has robust machine learning features that make data analysis much easier.

3. Tableau: A powerful BI tool, Tableau was the second most popular one in our survey. It helps extract and gather data stored in multiple locations and comes with an intuitive drag-and-drop interface. Tableau makes data across departments readily available for data engineers and managers to create useful dashboards.

4. Looker: An essential BI tool, Looker helps visualize data more effectively. Unlike traditional BI tools, Looker has developed LookML, a language for describing data, aggregates, calculations, and relationships in a SQL database. Spectacles is a newly released tool that assists in deploying the LookML layer, making it much simpler for non-technical personnel to use company data.

5. Apache Spark: An open-source unified analytics engine, Apache Spark excels at processing large data sets. It distributes work across a cluster and runs easily alongside other distributed computing programs, making it essential for data mining and machine learning.

6. Airflow: With Airflow, workflows can be programmed and scheduled quickly and accurately, and users can monitor them through the built-in UI. It is the most used workflow solution, with 25% of data teams reporting that they use it.

7. Apache Hive: A data warehouse project built on Apache Hadoop, Hive simplifies data querying and analysis with its SQL-like interface. Its query language, HiveQL, lets queries be executed as MapReduce jobs on Hadoop, and it is mainly used for data summarization, analysis, and querying.

8. Segment: An efficient and comprehensive tool, Segment helps collect and use data from digital properties. It transforms, sends, and archives customer data, and makes the entire process much more manageable.

9. Snowflake: This cloud data warehouse has become very popular lately due to its data storage and compute capabilities. Snowflake's unique shared data architecture supports a wide range of applications, making it an ideal choice for large-scale data storage, data engineering, and data science.

10. dbt: A command-line tool that uses SQL to transform data, dbt is an excellent choice for data engineers and analysts. It streamlines the entire transformation process and is highly praised by many data engineers.
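Workflow orchestrators such as Airflow model a pipeline as a directed acyclic graph (DAG) of tasks. The sketch below does not use Airflow's API. It uses Python's standard `graphlib` (Python 3.9+) to show the underlying idea: resolve dependencies, then find which tasks could run in parallel. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_join": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform_join"},
    "refresh_dashboard": {"load_warehouse"},
}


def run_order(dag):
    """One valid sequential order; raises graphlib.CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())


def parallel_batches(dag):
    """Group tasks into waves whose members have no unmet dependencies."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        # A real scheduler would mark each task done as it finishes.
        sorter.done(*ready)
    return batches


if __name__ == "__main__":
    for wave in parallel_batches(PIPELINE):
        print(wave)
```

The two extract tasks form the first wave because neither depends on anything. Each later task waits for its upstream tasks to complete.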

Data Engineering Projects:

Data engineering is an important process that businesses need to understand and use to gain insights from their data. It involves designing, constructing, maintaining, and troubleshooting databases to ensure they run optimally. Data engineers have many tools available, such as MySQL, SQL Server, Oracle RDBMS, OpenRefine, Trifacta, Data Ladder, Keras, Watson, and TensorFlow. Each tool has its strengths and weaknesses, so it is important to research each one thoroughly before recommending which ones to use for specific tasks or projects.

Smart IoT Infrastructure:

As the IoT continues to develop, the volume of data generated at high velocity is growing at a daunting rate. This creates challenges for companies in storage, analysis, and visualization.

Data Ingestion:

Data ingestion is the process of moving data from one or more sources to a target destination for further preparation and analysis. This destination is generally a data warehouse, a specialized database designed for efficient reporting.
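A minimal ingestion step might read a CSV export, cast each column to its proper type, and load the rows into a staging table in one transaction. In this sketch, an in-memory CSV string stands in for a real source and SQLite stands in for the target warehouse. Both are assumptions for illustration.

```python
import csv
import io
import sqlite3

# Stand-in for a source export; a real pipeline would read a file, bucket, or API.
RAW_CSV = """order_id,customer,amount
1001,alice,25.50
1002,bob,13.00
1003,alice,7.25
"""


def ingest_csv(text, conn):
    """Parse CSV text, cast column types, and load the rows into a staging table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS stg_orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT NOT NULL, amount REAL NOT NULL)"
    )
    reader = csv.DictReader(io.StringIO(text))
    rows = [(int(r["order_id"]), r["customer"], float(r["amount"])) for r in reader]
    # `with conn` commits on success and rolls back if any insert fails,
    # so the batch lands all-or-nothing.
    with conn:
        conn.executemany("INSERT INTO stg_orders VALUES (?, ?, ?)", rows)
    return len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print("rows loaded:", ingest_csv(RAW_CSV, conn))
```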

Data Quality and Testing:

Understand the importance of data quality and testing in data engineering projects. Learn about techniques and tools to ensure data accuracy and consistency.
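Many data quality checks boil down to three rules: keys must be unique, required fields must be present, and values must fall within expected ranges. The function below is a hypothetical, dependency-free sketch of those three checks. Dedicated testing tools offer far richer versions.

```python
from collections import Counter


def check_quality(rows, key, required, ranges):
    """Return human-readable data quality violations for a list of dict rows.

    key: column that must be unique; required: columns that must be non-empty;
    ranges: {column: (low, high)} inclusive bounds for numeric columns.
    """
    problems = []
    # Uniqueness: every key value should appear exactly once.
    for value, n in Counter(r.get(key) for r in rows).items():
        if n > 1:
            problems.append(f"duplicate {key}={value!r} ({n} rows)")
    for i, row in enumerate(rows):
        # Completeness: required fields must be present and non-empty.
        for col in required:
            if row.get(col) in (None, ""):
                problems.append(f"row {i}: missing {col}")
        # Validity: numeric fields must fall inside the expected range.
        for col, (low, high) in ranges.items():
            value = row.get(col)
            if value is not None and not low <= value <= high:
                problems.append(f"row {i}: {col}={value} outside [{low}, {high}]")
    return problems


if __name__ == "__main__":
    sample = [
        {"order_id": 1, "customer": "alice", "amount": 25.5},
        {"order_id": 2, "customer": "", "amount": 13.0},
        {"order_id": 2, "customer": "bob", "amount": -4.0},
    ]
    for problem in check_quality(sample, "order_id", ["customer"], {"amount": (0, 10_000)}):
        print(problem)
```

Running such checks right after ingestion, and failing the pipeline when they report problems, keeps bad rows from reaching reports.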

Streaming Data:

Familiarize yourself with real-time data processing and streaming frameworks like Apache Kafka and Apache Flink. Develop your problem-solving skills through practical exercises and challenges.
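Windowed aggregation is a core idea in stream processing frameworks such as Apache Flink. The sketch below is not Flink or Kafka code. It is a plain-Python tumbling-window counter that assumes events arrive in timestamp order. Real engines use watermarks to handle late or out-of-order events.

```python
from collections import Counter


def tumbling_windows(events, size):
    """Yield (window_start, counts_per_key) for fixed, non-overlapping windows.

    events: iterable of (timestamp_seconds, key) pairs, assumed sorted by time.
    A window is emitted as soon as the first event of a later window arrives.
    """
    current_start = None
    counts = Counter()
    for ts, key in events:
        start = ts - ts % size  # align the timestamp to its window boundary
        if current_start is not None and start != current_start:
            yield current_start, dict(counts)  # previous window is closed
            counts = Counter()
        current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final window at end of stream


if __name__ == "__main__":
    stream = [(0, "sensor-a"), (5, "sensor-a"), (9, "sensor-b"), (10, "sensor-a"), (14, "sensor-b")]
    for window_start, counts in tumbling_windows(stream, size=10):
        print(window_start, counts)
```

Because it is a generator, results come out window by window while the stream is still flowing, rather than after all data has arrived.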

Conclusion:

Data engineers use these tools to build data systems. Systems built on MySQL, SQL Server, and Oracle RDBMS collect, store, manage, transform, and analyze large amounts of data to generate insights. Data engineers are responsible for designing efficient solutions that handle high volumes of data while ensuring accuracy and reliability. They use a variety of technologies, including databases, programming languages, and machine learning algorithms, to create powerful applications that help businesses make better decisions based on the data they collect.

Azure Data Engineering Tools For Data Engineers

Azure is a cloud computing platform from Microsoft that offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure. Azure data engineering tools also make it possible to create and manage data systems tailored to an organization's unique requirements.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, distributed NoSQL database service (with a serverless capacity option) that supports multiple APIs, including NoSQL, MongoDB, Apache Cassandra, and PostgreSQL. It offers automatic and instant scalability, single-digit millisecond reads and writes, and high availability. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB integrates seamlessly with Azure Web Apps and supports popular open-source frameworks, languages, and applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure Database for PostgreSQL is a fully managed open-source database service designed to let teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers strong security, AI-driven performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps
Classes: 180+ hours of live classes
Lectures: 300 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 67% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training

Digital Logistics Company in India: Zipaworld

The Role of Digital Logistics Company in India

1. Enabling Trade and Commerce: Logistics companies in India play a crucial role in facilitating trade and commerce by ensuring the smooth movement of goods across the vast and diverse geographical expanse of the country.

2. Efficient Supply Chain Management: They act as a linchpin in the supply chain, managing the flow of goods from manufacturers to distributors and eventually to the end consumers. This involves handling multiple processes, including transportation, warehousing, inventory management, and last-mile delivery.

3. Industry Tailored Solutions: Logistics providers offer scalable and specialized solutions to cater to different industries, such as FMCG, pharmaceuticals, automotive, and e-commerce. This customization optimizes the supply chain for specific requirements, reducing lead times and operational costs.

4. Boosting Productivity: By streamlining operations and optimizing routes, logistics companies boost productivity for businesses. Faster delivery times and cost-effective solutions help enterprises stay competitive in the market.

5. Navigating Regulatory Complexities: With international trade becoming more prevalent, logistics companies ensure compliance with various regulations and provide swift customs clearance, making cross-border transactions smoother.

The Need for Digital Logistics

1. Real-time Tracking: Digital logistics leverages IoT and GPS technologies to provide real-time tracking of shipments. This enhances visibility, allowing businesses to monitor their cargo's location and status at every step of the journey.

2. Predictive Analytics: Advanced analytics tools analyze historical data to predict future trends and demand patterns accurately. This foresight enables businesses to make proactive decisions, reducing delays and preventing potential bottlenecks.

3. Automated Route Optimization: AI-driven route optimization algorithms help freight forwarders find the most efficient and cost-effective routes for transportation, saving time and reducing fuel consumption.
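Production route optimization engines are far more sophisticated, but many build on classic shortest-path search. The sketch below is a simplified illustration that uses Dijkstra's algorithm, not AI. It runs over a hypothetical network whose edge weights could represent distance, time, or fuel cost.

```python
import heapq

# Hypothetical network: node -> {neighbor: non-negative cost}.
NETWORK = {
    "warehouse": {"hub_a": 4, "hub_b": 1},
    "hub_b": {"hub_a": 2, "customer": 7},
    "hub_a": {"customer": 3},
}


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm: return (total_cost, path), or (inf, []) if unreachable."""
    queue = [(0, start, [start])]
    best = {start: 0}
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # stale entry: a cheaper route to this node was already found
        for nxt, weight in graph.get(node, {}).items():
            new_cost = cost + weight
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                heapq.heappush(queue, (new_cost, nxt, path + [nxt]))
    return float("inf"), []


if __name__ == "__main__":
    print(shortest_route(NETWORK, "warehouse", "customer"))
```

Here the cheapest route goes through both hubs (cost 6) rather than taking the direct-looking leg from hub_b (cost 8). This is the kind of saving route optimization aims for.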

4. Customer-Centric Approach: Digital logistics empowers logistics companies to offer better customer experiences. Improved visibility allows them to keep customers informed about their shipments' status, leading to enhanced satisfaction and loyalty.

5. Data-Driven Decision Making: With access to vast amounts of data, logistics companies can make data-driven decisions to optimize their operations continuously. This improves efficiency, reduces waste, and increases overall profitability.

How Freight Forwarding Companies are Leading the Digital World

1. Digital Platforms: Freight forwarding companies are investing in user-friendly digital platforms that allow customers to manage their shipments online. These platforms offer features such as instant quotes, online booking, and shipment tracking.

2. Blockchain Technology: Embracing blockchain technology, freight forwarders ensure secure and transparent transactions, reducing the risk of fraud and improving trust among stakeholders.
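The tamper-evidence that blockchain brings to freight records comes from hash-linking. Each record stores the hash of the one before it, so altering any entry breaks the links that follow. The sketch below shows only that idea, using hypothetical shipment records. It omits the distribution, consensus, and digital signatures a real blockchain requires.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(record, prev_hash):
    """SHA-256 over the record and the previous block's hash (canonical JSON)."""
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link records so that each block commits to everything before it."""
    chain, prev = [], GENESIS
    for rec in records:
        h = block_hash(rec, prev)
        chain.append({"record": rec, "prev": prev, "hash": h})
        prev = h
    return chain


def verify_chain(chain):
    """Recompute every hash; any edited record or broken link returns False."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or block_hash(block["record"], prev) != block["hash"]:
            return False
        prev = block["hash"]
    return True


if __name__ == "__main__":
    chain = build_chain([{"shipment": "SHP-1", "qty": 10}, {"shipment": "SHP-2", "qty": 4}])
    print(verify_chain(chain))
    chain[0]["record"]["qty"] = 999  # tamper with an earlier record
    print(verify_chain(chain))
```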

3. AI and Machine Learning: Integrating AI and machine learning algorithms, freight forwarders analyze data to optimize freight routes, predict demand, and enhance operational efficiency.

4. Collaborative Ecosystems: Leading freight forwarding companies are creating collaborative ecosystems by connecting shippers, carriers, and other service providers on a single platform. This fosters seamless communication and coordination among all stakeholders.

5. Sustainability Initiatives: Many freight forwarding companies are adopting digital solutions to reduce their environmental impact. Optimized routes and better load planning help in minimizing fuel consumption and carbon emissions.

In conclusion, logistics companies in India are pivotal to the nation's economic growth, while digital logistics is the need of the hour in this ever-changing business landscape. Freight forwarding companies, at the forefront of the digital revolution, are harnessing technology to optimize operations, provide better customer experiences, and contribute to a more sustainable future.

Embracing digital logistics is not merely an option but a strategic imperative for businesses seeking to thrive in today's competitive global market. The fusion of logistics expertise with cutting-edge digital tools will pave the way for a more efficient, transparent, and customer-centric logistics industry in India and beyond.

Zipaworld Innovation Pvt. Ltd.

+91 1206916910

Unlock Efficiency: Your Pallet Master Guide

Wooden pallets are the unsung heroes of global commerce. At USA Pallet Warehousing Inc., we've mastered the art of blending durability, cost savings, and eco-smart design. Here's how to leverage pallet solutions that boost your bottom line.

🔥 1. Pallet Types Decoded: Match Wood to Your Workflow

Not all pallets are created equal. Choosing the correct pallet type reduces shipping damage by up to 60% (Industry Report, 2023):

Hardwood Pallets: Ideal for machinery weighing 2,000 pounds or more (made from oak/maple).

Custom Wood Pallets: Built to unique dimensions (e.g., pharma/automotive).

Recycled GMA Pallets: Cost-effective for standard shipments (48"x40").

Pro Tip: Identify treated wood quickly by checking for ISPM 15 stamps (HT for heat-treated, MB for methyl bromide fumigated). Learn more about pallet identification.

♻️ 2. Wood Waste Recycling: Profit from Sustainability

85% of businesses overlook the potential savings from pallet recycling. Our Illinois hub turns waste into revenue:

Recycling Process: Collection → Sorting → Repairs → Resale (72-hour turnaround).

Eco-Benefits: 1 recycled pallet = 7.5 kWh energy saved (EPA Data).

Case Study: A Joliet warehouse cut disposal costs by 40% using our wood waste recycling program.

📦 3. Shipping Container Synergy: Maximize Space, Minimize Cost

Pair pallets with containers for unbreakable logistics:

Stack Smart: Use standardized 48"x40" pallets in 20ft/40ft containers.

Damage Control: Hardwood pallets + steel corner guards = 0% product loss.

Explore our shipping container solutions for Chicago warehouses.

🚀 4. The Illinois Advantage: Fast, Local, Reliable

Why 500+ IL businesses choose us:

Same-Day Delivery: From Bloomington to Streator.

Bulk Discounts: 1,000+ pallets = 25% wholesale rates.

Hot Deal: Custom pallets built to your specs in 48 hours.

Your Pallet Profit Playbook (Action Steps)

Audit Your Needs: Use our pallet dimension guide to optimize loads.

Recycle & Save: Request a Wood Waste Pickup.

Go Custom: Design pallets that slash shipping costs by 30%.

Limited Offer: The first 10 readers receive free pallet recycling and delivery on orders of 100 or more pallets. Claim Now

#wood waste recycling#usa pallet#wooden pallets for sale#pallets for sale#wood pallets#custom wood pallets#pallets usa#pallets#pallet dimensions#shipping container#WoodPallets#CustomPallets#PalletWoodTypes#IllinoisPallets
