#machine learning interview


Key Machine Learning Interview Questions

Get ready for your machine learning interview with this curated list of questions and answers. Covering a range of topics from basic principles to advanced algorithms, this guide will help you demonstrate your expertise and land your desired role in the field of machine learning.

#Key Machine Learning Interview Questions#Machine Learning Interview Questions#Machine Learning Interview#machine learning#Machine learning career#data science


Audio of Michael with Kathy Burke on the Where There's A Will There's a Wake podcast being asked who would play Aziraphale if he dies and saying that he'd want David to play both parts. Transcript below (bold emphasis mine):

KB: "What about your colleagues' response? I mean, if you're in the middle of--I mean listen, in Nye, when you're doing theatre work, you do have understudies. But let's say you were doing a new series of Good Omens with the great David Tennant--" Michael: "Well, I don't know about the great, but okay. With David Tennant, yeah." KB: "Who would replace you? I mean, who would put up with him, do you think?" Michael: "I mean, I'm loath to say it...but really, he should play both parts. Because originally we were--originally I was--Neil Gaiman, who wrote the original book with Terry Pratchett that the series was based on--when I first started talking to Neil about it, when he told me that he was going to do it, originally we talked about me playing the other part, the part David played. And one of the sort of things about us doing it is we'd never really acted opposite each other before because we'd usually be up for the same parts for many, many years. I think it was sort of between me and him for Casanova when he did Casanova. I mean, he's far too egotistical to let me know the parts I got over him--" KB: "--Of course." Michael: "There we are. That shows what the relationship is like. I'm quite happy to say the part that he got over me. But so, the fact that we were together in this was quite unusual, because normally we would be playing the same part. So that's quite good in a way, cause they're both, they're sort of light and shade of the same person in a way. So once I did pop my clogs, maybe he would have to then--you know the way they do it, do you remember that film Dead Ringers where Jeremy Irons played twins? So I'd quite like to see David playing both parts. And it would be his homage to me."

#michael sheen#welsh seduction machine#david tennant#soft scottish hipster gigolo#where there's a will there's a wake#good omens#this is so lovely though#i feel like the unspoken part is that he would rather David play both parts than have anyone else playing Aziraphale opposite him#possessive and heartfelt all at once#and shows just how much both Aziraphale and David mean to him#saying a lot by saying very little#at this point the subtext might as well be a billboard#they are perfect together your honor#also learned a new phrase today: 'pop my clogs'#god bless the British and their countless euphemisms for death#ineffable lovers#interview#discourse


Great Article By Ashkan Rajaee


Bayesian Active Exploration: A New Frontier in Artificial Intelligence

The field of artificial intelligence has seen tremendous growth in recent years, with various techniques and paradigms emerging to tackle complex problems in machine learning, computer vision, and natural language processing. Two concepts that have attracted a lot of attention are active inference and Bayesian mechanics. Although both have been researched separately, their synergy has the potential to revolutionize AI by creating more efficient, accurate, and effective systems.

Traditional machine learning algorithms rely on a passive approach, where the system receives data and updates its parameters without actively influencing the data collection process. However, this approach can have limitations, especially in complex and dynamic environments. Active inference, on the other hand, allows AI systems to take an active role in selecting the most informative data points or actions to collect more relevant information. In this way, active inference allows systems to adapt to changing environments, reducing the need for labeled data and improving the efficiency of learning and decision-making.

One of the first milestones in active data selection (active learning) was the introduction of "uncertainty sampling" by Lewis and Gale in 1994, which selects the data points about which the model is most uncertain or ambiguous in order to capture more information. Another important milestone was the analysis of the "query by committee" algorithm by Freund et al. in 1997. This algorithm uses a committee of models to determine the most meaningful data points to capture, laying the foundation for future active learning techniques.
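To make uncertainty sampling concrete, here is a minimal sketch in plain Python. It is not taken from Lewis and Gale's paper: the one-dimensional `toy_model`, the `pool` of unlabeled points, and the helper names are all assumptions made up for illustration. The idea is simply to score each unlabeled point by the entropy of the model's prediction and ask for labels on the highest-scoring ones.

```python
import math

def entropy(p):
    """Binary entropy (in bits) of a predicted positive-class probability."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def most_uncertain(pool, predict_proba, k=1):
    """Return the k unlabeled items whose predictions are most uncertain."""
    return sorted(pool, key=lambda x: entropy(predict_proba(x)), reverse=True)[:k]

# Hypothetical model: a logistic curve with its decision boundary at x = 5.
def toy_model(x):
    return 1.0 / (1.0 + math.exp(-(x - 5.0)))

pool = [0.0, 2.0, 4.5, 5.2, 9.0]           # unlabeled candidates (made up)
queries = most_uncertain(pool, toy_model, k=2)
print(queries)                              # the points closest to the boundary
```

The points nearest the decision boundary are chosen first, because that is where the predicted probability is closest to 0.5 and a new label is likely to be most informative.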

Bayesian mechanics, on the other hand, provides a probabilistic framework for reasoning and decision-making under uncertainty. By modeling complex systems using probability distributions, Bayesian mechanics enables AI systems to quantify uncertainty and ambiguity, thereby making more informed decisions when faced with incomplete or noisy data. Bayesian inference, the process of updating the prior distribution using new data, is a powerful tool for learning and decision-making.

One of the first milestones in Bayesian mechanics was Bayes' theorem, due to Thomas Bayes and published posthumously in 1763. This theorem provided a mathematical framework for updating the probability of a hypothesis based on new evidence. Another important milestone was the introduction of Bayesian networks by Pearl in 1988, which provided a structured approach to modeling complex systems using probability distributions.
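As a small worked example of updating a prior with new evidence, the sketch below applies Bayes' theorem to two competing hypotheses about a coin. The hypotheses, the numbers, and the `bayes_update` helper are illustrative assumptions, not something from the works cited above. Exact fractions are used so the arithmetic is easy to check by hand.

```python
from fractions import Fraction

def bayes_update(prior, likelihood):
    """Posterior P(h | e) from prior P(h) and likelihood P(e | h) per hypothesis h."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())   # P(e), the normalizing constant
    return {h: v / evidence for h, v in unnormalized.items()}

# Made-up setup: is the coin fair, or biased towards heads?
prior = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}
p_heads = {"fair": Fraction(1, 2), "biased": Fraction(9, 10)}

posterior = bayes_update(prior, p_heads)             # after observing one head
posterior_after_two = bayes_update(posterior, p_heads)  # after a second head
print(posterior, posterior_after_two)
```

Each call treats the previous posterior as the new prior, which is the sense in which Bayesian inference accumulates evidence over time.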

While active inference and Bayesian mechanics each have their strengths, combining them has the potential to create a new generation of AI systems that can actively collect informative data and update their probabilistic models to make more informed decisions. The combination of active inference and Bayesian mechanics has numerous applications in AI, including robotics, computer vision, and natural language processing. In robotics, for example, active inference can be used to actively explore the environment, collect more informative data, and improve navigation and decision-making. In computer vision, active inference can be used to actively select the most informative images or viewpoints, improving object recognition or scene understanding.

Timeline:

1763: Bayes' theorem

1988: Bayesian networks

1994: Uncertainty Sampling

1997: Query by Committee algorithm

2017: Deep Bayesian Active Learning

2019: Bayesian Active Exploration

2020: Active Bayesian Inference for Deep Learning

2020: Bayesian Active Learning for Computer Vision

The synergy of active inference and Bayesian mechanics is expected to play a crucial role in shaping the next generation of AI systems. Some possible future developments in this area include:

- Combining active inference and Bayesian mechanics with other AI techniques, such as reinforcement learning and transfer learning, to create more powerful and flexible AI systems.

- Applying the synergy of active inference and Bayesian mechanics to new areas, such as healthcare, finance, and education, to improve decision-making and outcomes.

- Developing new algorithms and techniques that integrate active inference and Bayesian mechanics, such as Bayesian active learning for deep learning and Bayesian active exploration for robotics.

Dr. Sanjeev Namjoshi: The Hidden Math Behind All Living Systems - On Active Inference, the Free Energy Principle, and Bayesian Mechanics (Machine Learning Street Talk, October 2024)


Saturday, October 26, 2024

#artificial intelligence#active learning#bayesian mechanics#machine learning#deep learning#robotics#computer vision#natural language processing#uncertainty quantification#decision making#probabilistic modeling#bayesian inference#active interference#ai research#intelligent systems#interview#ai assisted writing#machine art#Youtube


AI: An ancient nightmare?

Artificial intelligence’s development seems to be moving at breakneck speed, and the ability of AI to automate even complex tasks – and, potentially, to outwit its human creators – has been making plenty of headlines in recent months. But how far back does our fascination with, and our fear of, AI extend? Matt Elton spoke to Michael Wooldridge, professor of computer science at the University of Oxford, to find out more.

#AI: An ancient nightmare#history extra#history extra podcast#BBC#podcast#history#new books#books and reading#author interview#podcasts#science#science history#AI#machine learning#Michael Wooldridge#computer science


AI interview preparation

I remember my first job interview vividly. It was a traditional setup: a panel of interviewers, a long list of questions, and the pressure to perform. Fast forward to today, and the process has evolved dramatically. With 87% of companies now leveraging advanced methods in recruitment, the way we approach interviews is changing. These new methods focus on efficiency and fairness. For example,…

#AI interview questions#AI interview techniques#Artificial intelligence interview process#Automated hiring systems#Interview preparation tools#Machine learning job interviews


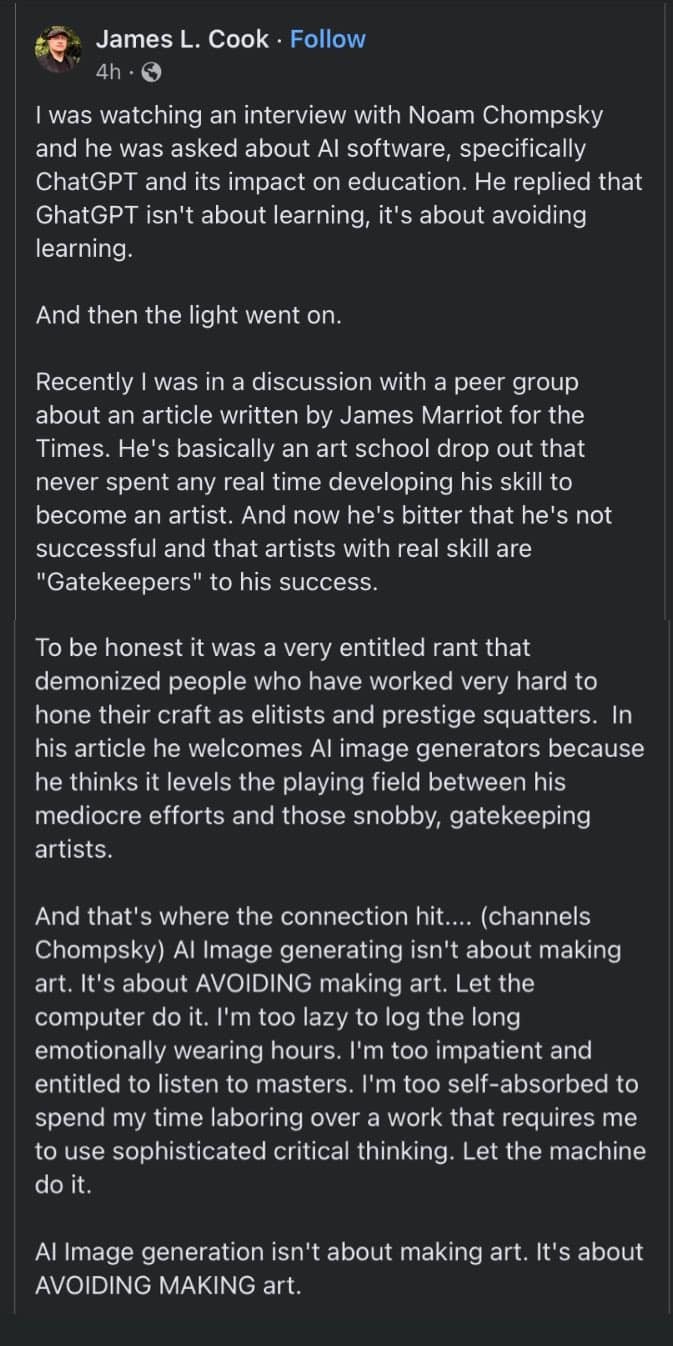

Image description from the notes by @summery-captain:

"A post by James L. Cook, reading: "I was watching an interview with Noam Chompsky and he was asked about AI software, specifically Chat GPT and its impact on education. He replied that Chat GPT isn't about learning, it's about avoiding learning.

And then the light went on.

Recently I was in discussion with a peer group about an article written by James Marriot for the Times. He's basically an art school drop out that never spent any real time developing his skill to become an artist. And now he's bitter that he's not successful and that artists with real skill are 'gatekeepers' to his success.

To be honest it was a very entitled rant that demonized people who have worked very hard to hone their craft as elitists and prestige squatters. In his article he welcomes AI generators because he thinks it levels the playing field between his mediocre efforts and those snobby, gatekeeping artists.

And that's where the connection hit... (channels Chompsky) AI image generating isn't about making art. It is about AVOIDING making art. Let the computer do it. I'm too lazy to log the long emotionally wearing hours. I'm too impatient to listen to masters. I'm too self-absorbed to spend my time laboring over a work that requires me to use sophisticated critical thinking. Let the machine do it.

AI image generation isn't about making art. It's about AVOIDING MAKING art." End of image description


Getting your feet wet with Generative AI

Disclaimer: The above image is AI generated. Alright, here I am after a gap of a few months. Gen AI is creating a lot of buzz. While you have several names like ChatGPT, Perplexity, Google Gemini etc. doing the rounds, wait… DeepSeek. Eeeek! Some folks did get scared for a while. As a beginner, one should be concerned about privacy issues. You need to issue a prompt which contains detail of the…

#AI#AI Prompt#Artificial Intelligence#Automation#Chatbot#genai#Generative AI#interview question#Jobs#llama2#Machine Learning#ollama#prime numbers#Prompt#Python#Software testing#Tools


#AI & Morality#AI & Power Structures#AI and Human Survival#AI Ethics#AI Governance#AI Interviews#AI Predictions#Artificial Intelligence#Big Tech Control#Conscious AI#Digital Evolution#Ethical Dilemmas#facts#Future of AI#Human vs AI#Machine Learning#serious#straight forward#Surveillance & Control#Technology & Society#The Future of Humanity#The Realist Juggernaut#The Singularity#truth#upfront#Warning Signs#website


The Mathematical Foundations of Machine Learning

In the world of artificial intelligence, machine learning is a crucial component that enables computers to learn from data and improve their performance over time. However, the math behind machine learning is often shrouded in mystery, even for those who work with it every day. Anil Ananthaswamy, author of the book "Why Machines Learn," sheds light on the elegant mathematics that underlies modern AI, and his journey is a fascinating one.

Ananthaswamy's interest in machine learning began when he started writing about it as a science journalist. His software engineering background sparked a desire to understand the technology from the ground up, leading him to teach himself coding and build simple machine learning systems. This exploration eventually led him to appreciate the mathematical principles that underlie modern AI. As Ananthaswamy notes, "I was amazed by the beauty and elegance of the math behind machine learning."

Ananthaswamy highlights the elegance of machine learning mathematics, which goes beyond the commonly known subfields of calculus, linear algebra, probability, and statistics. He points to specific theorems and proofs, such as the 1959 proof related to artificial neural networks, as examples of this beauty and elegance. For instance, gradient descent, a fundamental algorithm in machine learning, is a powerful example of how math can be used to optimize model parameters.
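For readers who have not seen gradient descent written out, here is a minimal sketch. It is an illustration only, not an example from the book: the one-parameter loss (w - 3)^2, the learning rate, and the function names are assumptions chosen to keep the arithmetic visible. The parameter is repeatedly nudged against the gradient of the loss until it settles near the minimum.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from w0."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w

# Toy loss L(w) = (w - 3)^2, whose gradient is 2 * (w - 3); the minimum is at w = 3.
def grad_loss(w):
    return 2.0 * (w - 3.0)

w_star = gradient_descent(grad_loss, w0=0.0)
print(w_star)   # very close to 3.0
```

With this learning rate each step shrinks the distance to the minimum by a constant factor of 0.8, which is why a hundred steps are more than enough here. Real models have many parameters and noisier losses, but the update rule has the same shape.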

Ananthaswamy emphasizes the need for a broader understanding of machine learning among non-experts, including science communicators, journalists, policymakers, and users of the technology. He believes that only when we understand the math behind machine learning can we critically evaluate its capabilities and limitations. This is crucial in today's world, where AI is increasingly being used in various applications, from healthcare to finance.

A deeper understanding of machine learning mathematics has significant implications for society. It can help us to evaluate AI systems more effectively, develop more transparent and explainable AI systems, and address AI bias and ensure fairness in decision-making. As Ananthaswamy notes, "The math behind machine learning is not just a tool, but a way of thinking that can help us create more intelligent and more human-like machines."

The Elegant Math Behind Machine Learning (Machine Learning Street Talk, November 2024)


Matrices are used to organize and process complex data, such as images, text, and user interactions, making them a cornerstone in applications like Deep Learning (e.g., neural networks), Computer Vision (e.g., image recognition), Natural Language Processing (e.g., language translation), and Recommendation Systems (e.g., personalized suggestions). To leverage matrices effectively, AI relies on key mathematical concepts like Matrix Factorization (for dimension reduction), Eigendecomposition (for stability analysis), Orthogonality (for efficient transformations), and Sparse Matrices (for optimized computation).
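As one concrete instance of the eigen-analysis mentioned above, the sketch below uses power iteration to estimate the dominant eigenvalue and eigenvector of a small symmetric matrix in plain Python. The 2x2 matrix and the helper names are assumptions chosen for illustration. In practice a numerical library would be used instead of hand-written loops.

```python
import math

def mat_vec(a, v):
    """Multiply matrix a (a list of rows) by vector v."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in a]

def power_iteration(a, steps=50):
    """Estimate the dominant eigenvalue and eigenvector of a square matrix."""
    v = [1.0] + [0.0] * (len(a) - 1)        # arbitrary starting vector
    for _ in range(steps):
        w = mat_vec(a, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]           # renormalize to unit length
    # Rayleigh quotient v . (A v) gives the eigenvalue for a unit-length v.
    eigenvalue = sum(x * y for x, y in zip(v, mat_vec(a, v)))
    return eigenvalue, v

a = [[2.0, 1.0], [1.0, 2.0]]                # eigenvalues are 3 and 1
eigenvalue, eigenvector = power_iteration(a)
print(eigenvalue, eigenvector)
```

Repeated multiplication stretches the vector most along the direction with the largest eigenvalue, so after normalization it lines up with that eigenvector, here the diagonal direction (1, 1).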

The Applications of Matrices - What I wish my teachers told me way earlier (Zach Star, October 2019)


Transformers are a type of neural network architecture introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need”. They revolutionized the field of NLP by outperforming traditional recurrent neural network (RNN) and convolutional neural network (CNN) architectures in sequence-to-sequence tasks. The primary innovation of transformers is the self-attention mechanism, which allows the model to weigh the importance of different words in the input data irrespective of their positions in the sentence. This is particularly useful for capturing long-range dependencies in text, which was a challenge for RNNs due to vanishing gradients.

- Machine translation: Transformers have become the standard for machine translation tasks, offering state-of-the-art results in translating between languages.

- Summarization: They are used for both abstractive and extractive summarization, generating concise summaries of long documents.

- Question answering: Transformers help in understanding the context of questions and identifying relevant answers from a given text.

- Sentiment analysis: By analyzing the context and nuances of language, transformers can accurately determine the sentiment behind text.

- Image recognition: While initially designed for sequential data, variants of transformers (e.g., Vision Transformers, ViT) have been successfully applied to image recognition tasks, treating images as sequences of patches.

- Speech recognition: Transformers are used to improve the accuracy of speech-to-text systems by better modeling the sequential nature of audio data.

- Time series forecasting: The self-attention mechanism can be beneficial for understanding patterns in time series data, leading to more accurate forecasts.
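The self-attention mechanism mentioned above can be sketched in a few lines of plain Python. This is a deliberately stripped-down version and an assumption-laden one: it uses a single head, no learned query, key, or value projections, and no positional encodings, so the token vectors themselves serve as queries, keys, and values. The three toy `tokens` are made up for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with queries = keys = values = x."""
    d = len(x[0])
    output, weights = [], []
    for q in x:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of all token vectors.
        output.append([sum(w_j * v[i] for w_j, v in zip(w, x)) for i in range(d)])
    return output, weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]   # tokens 0 and 2 are identical
out, attn = self_attention(tokens)
print(attn[0])
```

Token 0 attends to token 2 exactly as strongly as to itself, even though they sit at opposite ends of the sequence, and more strongly than to its neighbor, token 1. That is the position-independent weighting the paragraph describes. Full transformers add positional information separately.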

Attention is all you need (Umar Jamil, May 2023)


Geometric deep learning is a subfield of deep learning that focuses on the study of geometric structures and their representation in data. This field has gained significant attention in recent years.

Michael Bronstein: Geometric Deep Learning (MLSS Kraków, December 2023)


Traditional Geometric Deep Learning, while powerful, often relies on the assumption of smooth geometric structures. However, real-world data frequently resides in non-manifold spaces where such assumptions are violated. Topology, with its focus on the preservation of proximity and connectivity, offers a more robust framework for analyzing these complex spaces. The inherent robustness of topological properties against noise further solidifies the rationale for integrating topology into deep learning paradigms.

Cristian Bodnar: Topological Message Passing (Michael Bronstein, August 2022)


Sunday, November 3, 2024

#machine learning#artificial intelligence#mathematics#computer science#deep learning#neural networks#algorithms#data science#statistics#programming#interview#ai assisted writing#machine art#Youtube#lecture


Machine learning is a subset of AI that automates learning, so that systems improve with experience rather than through explicit programming. Preparing for a machine learning interview requires familiarity with the foundational concepts that make this work: learning from input data, identifying patterns, and making decisions. These include supervised learning, unsupervised learning, and reinforcement learning, as well as common algorithms and their applications.


Explore an in-depth review of the Interview Kickstart Machine Learning Course, a top-rated program for aspiring ML engineers. Learn how project-based learning and expert guidance can prepare you for roles in FAANG and other Tier-1 companies in 2024.

#career#education#jobs#course reviews#interview kickstart#Machine learning course review#Interview Kickstart Machine Learning course reviews


K-means in Machine Learning: Easy Explanation for Data Science Interviews

K-Means is one of the most popular machine learning algorithms you’ll encounter in data science interviews. In this video, I’ll …


In machine learning interviews, common questions often revolve around algorithms, model evaluation, and practical application. For instance, candidates might be asked about the difference between supervised and unsupervised learning, the workings of popular algorithms like decision trees or neural networks, and techniques for handling overfitting or data imbalance.
