#maters in AI and data science


Text

Why Pursue a Master’s in AI and Data Science: Skills, Careers, and Future Scope

Starting a master’s in AI and data science is a journey full of discovery. You learn vital skills. You open the door to great jobs. You shape the future of technology. With simple steps and clear goals, you can begin this path today. Good luck on your learning adventure!

#asian university delhi#south indian education society#maters in AI and data science#btech computer science

0 notes

Text

Top Companies Hiring AWS AI Certified Practitioners in 2025

In 2025, AI is no longer a buzzword. It's not a future promise. It's the battlefield. And if you're not armed with real skills, you're just another spectator watching the war from the sidelines. But here's the secret: becoming an AWS AI Certified Practitioner is the fastest ticket to the frontlines.

Yes, that exact certification from the Coursera AWS Certified AI Practitioner Specialization. Once seen as "nice to have," it's now the red-hot badge that makes recruiters stop scrolling and start dialing.

Why This Certification? Why Now?

Let's cut the fluff. Most AI certifications are either too academic, too theoretical, or worse, outdated the moment you get them. The AWS AI Practitioner program isn't one of them. It's built with Amazon's tech, on Amazon's infrastructure, teaching you how to wield AI in the real world, not in a sandbox.

You'll go from zero to building AI-powered apps, understanding SageMaker, and deploying machine learning pipelines that companies need. In 2025, practical skill beats PhD-level pontificating. Every. Single. Time.

The Power Shift: Who's Hiring AWS AI Certified Practitioners?

Now, here's where things get juicy. Let's talk about the companies behind the biggest AI hiring sprees—and why they specifically want AWS-certified talent.

Amazon (No surprise, right?)

Let's start with the behemoth in the room. Amazon isn't just promoting the certification—it's hiring directly from it. In 2025, over 40% of junior and mid-level AI roles at Amazon's cloud and retail divisions require direct experience with AWS AI tools. Guess what the specialization teaches?

Internal leaks (yep, we have sources) suggest that Amazon prioritizes certified applicants for its "AI-First" initiative—a sweeping project to embed machine learning into every Amazon service from Alexa to supply chain optimization.

Accenture and Deloitte

These consulting giants aren't messing around. They've realized their clients want real-time, cloud-native AI solutions—and AWS is the preferred cloud for 70% of them. They've rolled out exclusive hiring programs in 2025 to fast-track AWS AI-certified candidates into data science and ML ops roles.

We've even seen listings that say "AWS AI Certification preferred over master's degree." Bold move? Absolutely. But it tells you where the market is headed.

JP Morgan Chase, Goldman Sachs, and Citi

Finance has become a data arms race. From fraud detection to AI-driven trading algorithms, banks are doubling down on AWS AI infrastructure. The catch? They need practitioners who speak the language of machine learning, Python, and SageMaker pipelines.

These giants are now partnering with training platforms (including Coursera) to funnel certified talent into their data teams. One insider quipped, "We don't need AI researchers. We need AI builders. AWS-certified folks deliver."

Salesforce and Adobe

Customer experience is the new battleground, and AI is the weapon of choice. Both Adobe and Salesforce are embedding machine learning across their products. In 2025, their recruitment priorities include cloud-savvy AI specialists who understand API integrations, personalization algorithms, and data ethics—all covered in the AWS certification.

Also, rumor has it that Salesforce is integrating AWS AI models into Einstein, and they want talent to help make it happen.

Startups and AI Unicorns

Here's the twist: It's not just the giants. Early-stage startups and Series A-funded AI companies are cherry-picking AWS AI-certified candidates because they can't afford to train from scratch. They need plug-and-play AI talent who can architect, build, and ship.

These startups care less about your alma mater and more about whether you can deploy a recommender system or a generative model on AWS with minimal hand-holding.

Is the Certification Replacing Traditional Degrees?

Let's address the elephant in the room. There's growing tension in tech hiring circles: Is a certification like AWS AI Practitioner enough to replace a degree?

Some say yes, and the data backs it up. In a recent survey of 500 tech recruiters, 68% said they would prioritize a strong AWS AI certification over a generalist computer science degree for entry-level AI roles.

Universities are not happy. Professors argue that certifications are "shallow." But let's be real, companies don't want AI theory anymore. They want results. They want proof that you can work in AWS and ship scalable ML solutions. And that's exactly what this certification demonstrates.

Final Thoughts: The AI Talent War Is On. Are You Ready?

The hiring frenzy isn't slowing down. It's only heating up. Whether you want to work at Amazon, crack into fintech, or join a stealth-mode AI startup, the AWS Certified AI Practitioner certification is a strategic power play.

In 2025, it's not about how long you studied but what you can build. Companies are no longer impressed by jargon. They want doers—and this certification proves you're one of them.

So, if you're still debating whether to take that course on Coursera, here's the truth:

While others are waiting to "get ready," AWS AI-certified professionals are already getting hired.

Ready to join them? Start your journey with the AWS Certified AI Practitioner Specialization today and turn that badge into a badge of power.

0 notes

Note

How do u feel about ai? I feel like im “wrong” for being really fascinated by it (ik its a broad subject but its all so cool). Should i study ai and data science in college or will i be contributing to some like evil force idk

to the extent that AI is an "evil force" (pretty strong language imo), it's entirely due to how it's wielded by assholes for capitalist ends and not anything inherent to the technology itself*. that being said, like any other STEM field these days, the majority of your employment opportunities (and almost all of the high-paying ones) will probably involve using it for those "evil" ends because that's where the money is. this is endemic to STEM, unfortunately. my alma mater's career fair has reserved table spots for the Army, the Navy, Raytheon, Lockheed Martin, etc. i could be making six figures right now if i hadn't turned down an offer from Lincoln Labs in 2019 to make missile guidance systems because i figured my conscience and ability to live with myself/sleep at night was worth more than that.

sorry, that kind of got away from me for a bit there. basically just study what you want because trying to force yourself into a sub-field of STEM that you hate will make you suicidal, but keep a finger on the pulse of the field because "the coolest/most useful thing to do with a given technology" and "the most profitable thing to do with a given technology" are so far apart it's actually insane i mean neurodivergent

111 notes


Text

How AI can falsify satellite images

- By Kim Eckart, University of Washington -

A fire in Central Park seems to appear as a smoke plume and a line of flames in a satellite image. Colorful lights on Diwali night in India, seen from space, seem to show widespread fireworks activity. Both images exemplify what a new University of Washington-led study calls “location spoofing.”

The photos — created by different people, for different purposes — are fake but look like genuine images of real places. And with the more sophisticated AI technologies available today, researchers warn that such “deepfake geography” could become a growing problem.

So, using satellite photos of three cities and drawing upon methods used to manipulate video and audio files, a team of researchers set out to identify new ways of detecting fake satellite photos, warn of the dangers of falsified geospatial data and call for a system of geographic fact-checking.

Image: What may appear to be an image of Tacoma is, in fact, a simulated one, created by transferring visual patterns of Beijing onto a map of a real Tacoma neighborhood. Credit: Zhao et al., 2021, Cartography and Geographic Information Science.

“This isn’t just Photoshopping things. It’s making data look uncannily realistic,” said Bo Zhao, assistant professor of geography at the UW and lead author of the study, which published April 21 in the journal Cartography and Geographic Information Science. “The techniques are already there. We’re just trying to expose the possibility of using the same techniques, and of the need to develop a coping strategy for it.”

As Zhao and his co-authors point out, fake locations and other inaccuracies have been part of mapmaking since ancient times. That’s due in part to the very nature of translating real-life locations to map form, as no map can capture a place exactly as it is. But some inaccuracies in maps are spoofs created by the mapmakers. The term “paper towns” describes discreetly placed fake cities, mountains, rivers or other features on a map to prevent copyright infringement. On the more lighthearted end of the spectrum, an official Michigan Department of Transportation highway map in the 1970s included the fictional cities of “Beatosu” and “Goblu,” a play on “Beat OSU” and “Go Blue,” because the then-head of the department wanted to give a shoutout to his alma mater while protecting the copyright of the map.

But with the prevalence of geographic information systems, Google Earth and other satellite imaging systems, location spoofing involves far greater sophistication, researchers say, and carries with it more risks. In 2019, the director of the National Geospatial Intelligence Agency, the organization charged with supplying maps and analyzing satellite images for the U.S. Department of Defense, implied that AI-manipulated satellite images can be a severe national security threat.

To study how satellite images can be faked, Zhao and his team turned to an AI framework that has been used in manipulating other types of digital files. When applied to the field of mapping, the algorithm essentially learns the characteristics of satellite images from an urban area, then generates a deepfake image by transferring those learned characteristics onto a different base map, similar to how popular image filters can map the features of a human face onto a cat.

Image: This simplified illustration shows how a simulated satellite image (right) can be generated by putting a base map (City A) into a deepfake satellite image model. This model is created by distinguishing a group of base map and satellite image pairs from a second city (City B). Credit: Zhao et al., 2021, Cartography and Geographic Information Science.

Next, the researchers combined maps and satellite images from three cities — Tacoma, Seattle and Beijing — to compare features and create new images of one city, drawn from the characteristics of the other two. They designated Tacoma their “base map” city and then explored how geographic features and urban structures of Seattle (similar in topography and land use) and Beijing (different in both) could be incorporated to produce deepfake images of Tacoma.

In the example below, a Tacoma neighborhood is shown in mapping software (top left) and in a satellite image (top right). The subsequent deepfake satellite images of the same neighborhood reflect the visual patterns of Seattle and Beijing. Low-rise buildings and greenery mark the “Seattle-ized” version of Tacoma on the bottom left, while Beijing’s taller buildings, which AI matched to the building structures in the Tacoma image, cast shadows, hence the dark appearance of the structures in the image on the bottom right. Yet in both, the road networks and building locations are similar.

Image: These are maps and satellite images, real and fake, of one Tacoma neighborhood. The top left shows an image from mapping software, and the top right is an actual satellite image of the neighborhood. The bottom two panels are simulated satellite images of the neighborhood, generated from geospatial data of Seattle (lower left) and Beijing (lower right). Credit: Zhao et al., 2021, Cartography and Geographic Information Science.

The untrained eye may have difficulty detecting the differences between real and fake, the researchers point out. A casual viewer might attribute the colors and shadows simply to poor image quality. To try to identify a “fake,” researchers homed in on more technical aspects of image processing, such as color histograms and frequency and spatial domains.

Some simulated satellite imagery can serve a purpose, Zhao said, especially when representing geographic areas over periods of time to, say, understand urban sprawl or climate change. There may be a location for which there are no images for a certain period of time in the past, or in forecasting the future, so creating new images based on existing ones — and clearly identifying them as simulations — could fill in the gaps and help provide perspective.

The study’s goal was not to show that geospatial data can be falsified, Zhao said. Rather, the authors hope to learn how to detect fake images so that geographers can begin to develop the data literacy tools, similar to today’s fact-checking services, for public benefit.

“As technology continues to evolve, this study aims to encourage more holistic understanding of geographic data and information, so that we can demystify the question of absolute reliability of satellite images or other geospatial data,” Zhao said. “We also want to develop more future-oriented thinking in order to take countermeasures such as fact-checking when necessary,” he said.

Co-authors on the study were Yifan Sun, a graduate student in the UW Department of Geography; Shaozeng Zhang and Chunxue Xu of Oregon State University; and Chengbin Deng of Binghamton University.

--

Source: University of Washington

Full study: “Deep fake geography? When geospatial data encounter Artificial Intelligence”, Cartography and Geographic Information Science.

https://doi.org/10.1080/15230406.2021.1910075

Read Also

Microsoft launches anti-disinformation tool ‘Video Authenticator’

#deepfake#ai#artificial intelligence#satellite#satellite imaging#imaging#ethics#geography#geospatial data#misinformation#disinformation

1 note


Photo

Unleashing Big Data of the Past – Europe builds a Time Machine

Iconem is proud to announce that the European Commission has chosen the Time Machine project as one of the six proposals retained for preparing large scale research initiatives to be strategically developed in the next decade.


A €1 million fund has been granted for preparing the detailed roadmaps of this initiative, which aims at extracting and utilising the Big Data of the past. Time Machine plans to design and implement advanced new digitisation and Artificial Intelligence (AI) technologies to mine Europe’s vast cultural heritage, providing fair and free access to information that will support future scientific and technological developments in Europe.

Alongside 33 prestigious institutions, Iconem will provide its tools and knowledge on the digitisation of endangered cultural heritage sites in 3D.

One of the most advanced Artificial Intelligence systems ever built

The Time Machine will create advanced AI technologies to make sense of vast amounts of information from complex historical data sets. This will enable the transformation of fragmented data – with content ranging from medieval manuscripts and historical objects to smartphone and satellite images – into useable knowledge for industry. Considering the unprecedented scale and complexity of the data, The Time Machine’s AI even has the potential to create a strong competitive advantage for Europe in the global AI race.

Cultural Heritage as a valuable economic asset

Cultural Heritage is one of our most precious assets, and the Time Machine’s ten-year research and innovation program will strive to show that rather than being a cost, cultural heritage investment will actually be an important economic driver across industries. For example, services for comparing territorial configurations across space and time will become an essential tool in developing modern land use policy or city planning. Likewise, the tourism industry will be transformed by professionals capable of creating and managing newly possible experiences at the intersection of the digital and physical world. These industries will have a pan-European platform for knowledge exchange which will add a new dimension to their strategic planning and innovation capabilities.

Time Machine promotes a unique alliance of leading European academic and research organisations, Cultural Heritage institutions and private enterprises that are fully aware of the huge potential of digitisation and of the very promising new paths for science, technology and innovation that can be opened through the information system that will be developed, based on the Big Data of the Past. In addition to the 33 core institutions that will be funded by the European Commission, more than 200 organisations from 33 countries are participating in the initiative, including seven national libraries, 19 state archives, famous museums (Louvre, Rijksmuseum), 95 academic and research institutions, 30 European companies and 18 governmental bodies.

Background

In early 2016, the European Commission held a public consultation of the research community to gather ideas on science and technology challenges that could be addressed through future FET Flagships. A call for preparatory actions for future research initiatives was launched in October 2017 as part of the Horizon 2020 FET Work Programme 2018. From the 33 proposals submitted, six were selected after a two-stage evaluation by independent high-level experts.

The 33 institutions receiving parts of the €1 million to develop Time Machine

1. ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE

2. TECHNISCHE UNIVERSITAET WIEN

3. INTERNATIONAL CENTRE FOR ARCHIVAL RESEARCH

4. KONINKLIJKE NEDERLANDSE AKADEMIE VAN WETENSCHAPPEN

5. NAVER FRANCE

6. UNIVERSITEIT UTRECHT

7. FRIEDRICH-ALEXANDER-UNIVERSITAET ERLANGEN NUERNBERG

8. ECOLE NATIONALE DES CHARTES

9. ALMA MATER STUDIORUM - UNIVERSITÀ DI BOLOGNA

10. INSTITUT NATIONAL DE L'INFORMATION GEOGRAPHIQUE ET FORESTIERE

11. UNIVERSITEIT VAN AMSTERDAM

12. UNIWERSYTET WARSZAWSKI

13. UNIVERSITE DU LUXEMBOURG

14. BAR-ILAN UNIVERSITY

15. UNIVERSITA CA' FOSCARI VENEZIA

16. UNIVERSITEIT ANTWERPEN

17. QIDENUS GROUP GMBH

18. TECHNISCHE UNIVERSITEIT DELFT

19. CENTRE NATIONAL DE LA RECHERCHE SCIENTIFIQUE

20. STICHTING NEDERLANDS INSTITUUT VOOR BEELD EN GELUID

21. FIZ KARLSRUHE- LEIBNIZ-INSTITUT FUR INFORMATIONS INFRASTRUKTUR GMBH

22. FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V.

23. UNIVERSITEIT GENT

24. TECHNISCHE UNIVERSITAET DRESDEN

25. TECHNISCHE UNIVERSITAT DORTMUND

26. OESTERREICHISCHE NATIONALBIBLIOTHEK

27. ICONEM

28. INSTYTUT CHEMII BIOORGANICZNEJ POLSKIEJ AKADEMII NAUK

29. PICTURAE BV

30. CENTRE DE VISIÓ PER COMPUTADOR

31. EUROPEANA FOUNDATION

32. INDRA

33. UBISOFT

Time Machine website: http://www.timemachine.eu/

Contact: Kevin Baumer, Ecole Polytechnique Fédérale de Lausanne, [email protected]

2 notes


Text

Best Data Science Course In Delhi, Top Data Science Course In Delhi

Over these years I had a great experience in every area, be it studies, sports, social service, or anything else. What I am today after engineering is the result of the combined efforts and valuable guidance of all the teachers and staff. Political Science, Physics, English Literature, and History attract most of the candidates from Kerala. In the 2019-'22 batch, the Physics UG course at Hindu College has 39 Malayalis out of 141 students. The average fees for online courses vary from INR 10,000 to 50,000. For UG courses they are between INR 6 and 10 lakh, and for PG courses between INR 7 and 15 lakh. There are 134 colleges in India that offer data science courses.

For those who find our culture and working standards admirable, we are always keen to provide industry exposure within our organisation. Every student's monthly growth and skill evaluation is recorded and shared with them during a one-on-one mentorship session in order to make them future-ready. The Indraprastha Institute of Information Technology, Delhi, in collaboration with IBM, has launched a nine-month PG Diploma program in Data Science and AI (PGDDS&AI) for students and IT professionals. The program will help cater to the upskilling needs of IT professionals and meet industry requirements.

COPS has the best Data Science faculty with relevant experience in the field. We introduce real-life projects so that our students can put their learning into action. We share valuable insights about real-world projects that make use of this technology. The purpose is to showcase the individual’s readiness to use data science knowledge in real-life scenarios. Learners use data science approaches like machine learning, statistical modeling, and artificial intelligence to solve problems. IMS Proschool has been ranked 4th among the top 5 training institutes in India for analytics and data science by Analytics India Magazine. The Office of Alumni Relations at National Forensic Sciences University was established to give alumni a platform to contact and connect with their alma mater. Alumni of the National Forensic Sciences University can contact the Alumni Relations office for any requests related to the institute. It will also keep alumni updated about various activities happening on campus, helping them stay connected with National Forensic Sciences University.

This is the 31st module of the data science course in Delhi. Text mining is one of the most critical ways to analyze and process unstructured data. This will enable the student to learn the data science course syllabus in depth to become an outstanding data scientist. This is the 29th module of the data science course in Delhi. Take a data science course online at Techstack Academy, which aims to deliver nothing but the best. We teach our students every aspect of the course they choose, starting from the very beginning, to give them thorough knowledge of the subject.

Address :

M 130-131, Inside ABL Work Space, Second Floor, Connaught Circle, Connaught Place, New Delhi, Delhi 110001

9632156744

Click here to get directions; https://g.page/ExcelRDataScienceDelhi?share

0 notes

Text

5 Essential Tips to Choose The Right Data Science Learning Resources

A Beginner’s Guide by Learnbay

Data science learning resources are everywhere. Unfortunately, the sheer number of online courses, practice websites, competition sites, and e-books makes it easy to choose the wrong one. So, to make your data science career transition mistake-proof when it comes to learning resources, I have put together five pieces of professional advice for you.

Let’s see what those are.

1. Choose a Course that offers Interactive Learning Mode and end-to-end career transition support.

Recorded sessions are the best option for brushing up. When you are learning from scratch, however, the mode of learning has to be interactive. Such sessions are usually not available for free; you need to pay for them. So, before finalising a paid data science course, check whether it provides complete career transition assistance. Assess for the following:
● Live online classes / offline classes
● Domain-specific, hands-on industrial project experience
● Capstone project opportunity
● Job assistance with interview questions and mock interviews
● Domain-specific internship (added advantage)
● Lifelong access to course modules and class recordings

2. Choose a tools training resource that supports your domain expertise

Opting for an additional online resource to practise a data science tool you have learned is fine. But make sure you are:
● Learning and practising the right tool
● Accessing the latest version of each tool
● Following guidelines and techniques for the tools that are in demand in your industrial domain
● Learning the best-fit usage strategies currently available

3. Keep your focus on learning modules.

To gain the maximum possible benefit from a learning resource, check that the associated learning modules:
● Focus on your domain expertise
● Match your level of working experience
● Are aligned with current job market demand
● Offer you the scope to learn from scratch
● Include all the theoretical concepts, practical tools, and soft-skill development opportunities for a sustainable data science career transition within your domain

4. For projects, cross-check data resource reliability.

Finding a large data set is quite easy, but finding the right one is not. With the massive development of IoT, the availability of data sets is expanding, but so are reliability concerns. Before using a data set in your project, ensure it is:
● Free from legal hazards
● Cross-verified
● Bug-free
● Up-to-date
● Authenticated
● Original

It is better to verify data sets against multiple resource platforms.
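
A few of these checks can be partly automated. The sketch below is only an illustration, under the assumption that the data set is already loaded as a list of dictionaries with an ISO-formatted date column; the function name `audit_dataset` and the one-year freshness limit are hypothetical choices. Legal status, authenticity, and originality still need manual review.

```python
from datetime import date
from typing import Dict, List


def audit_dataset(
    rows: List[Dict[str, str]],
    date_field: str,
    today: date,
    max_age_days: int = 365,
) -> Dict[str, int]:
    """Count duplicate rows, rows with missing values, and stale rows."""
    seen = set()
    report = {"rows": len(rows), "duplicates": 0, "missing_values": 0, "stale": 0}
    for row in rows:
        # A row is a duplicate if every field matches an earlier row.
        key = tuple(sorted(row.items()))
        if key in seen:
            report["duplicates"] += 1
        seen.add(key)

        # Treat None and blank strings as missing values.
        if any(v is None or str(v).strip() == "" for v in row.values()):
            report["missing_values"] += 1

        # A row is stale if its date is older than max_age_days.
        raw = row.get(date_field)
        if raw:
            try:
                age_days = (today - date.fromisoformat(raw)).days
            except ValueError:
                age_days = None  # unparseable date: not counted as stale
            if age_days is not None and age_days > max_age_days:
                report["stale"] += 1
    return report


if __name__ == "__main__":
    sample = [
        {"id": "1", "updated": "2024-01-01"},
        {"id": "1", "updated": "2024-01-01"},
        {"id": "2", "updated": ""},
        {"id": "3", "updated": "2020-01-01"},
    ]
    print(audit_dataset(sample, "updated", today=date(2024, 6, 1)))
```

Running the same audit on copies of the data set obtained from different platforms is one simple way to cross-verify it.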

[Related Blog: The Secrets of Completing Highly Creditable Hand-on Project in 2021]

5. For e-books, tutorial videos, and technical blogs, choose only updated ones.

Following blogs, streaming YouTube videos, and reading e-books are the best strategies for on-the-go continued learning in our busy lifestyles. But stick only to resources that are:
● Regularly updated
● Updated for the tech tool’s new features, bug fixes, etc.
● Republished or launched with the latest conceptual and informative updates (for books and blogs)

[Related blog: Top 7 Books Every Data Science Aspirants Should Read in 2021| Learnbay]

Master Advice

Whatever resource you choose, it should give you in-depth understanding, not just a cheat sheet. It should also help you become a smart, knowledgeable data scientist rather than merely a hardworking one.

The best strategy is to choose such a resource that offers ample opportunities for dealing with practice problems.

You can confidently opt for Learnbay data science and AI courses for a comprehensive and foolproof online data science certification course. Once you register for the Learnbay data science course, you'll be rid of all the headaches of searching for domain-based learning modules, finding reliable data sets for industrial projects, hunting for creditable internship resources, looking for e-book references, and so on. The data courses by Learnbay provide a complete package for all of your data science learning needs.

0 notes

Text

Deepfake geography: Why fake satellite images are a growing problem

Research indicates that "deepfake geography," or realistic but fake images of real places, could become a growing problem.

For example, a fire in Central Park seems to appear as a smoke plume and a line of flames in a satellite image. In another, colorful lights on Diwali night in India, seen from space, seem to show widespread fireworks activity.

Both images exemplify what the new study calls "location spoofing." The photos—created by different people, for different purposes—are fake but look like genuine images of real places.

So, using satellite photos of three cities and drawing upon methods used to manipulate video and audio files, a team of researchers set out to identify new ways of detecting fake satellite photos, warn of the dangers of falsified geospatial data, and call for a system of geographic fact-checking.

"This isn't just Photoshopping things. It's making data look uncannily realistic," says Bo Zhao, assistant professor of geography at the University of Washington and lead author of the study in the journal Cartography and Geographic Information Science. "The techniques are already there. We're just trying to expose the possibility of using the same techniques, and of the need to develop a coping strategy for it."

Putting lies on the map

As Zhao and his coauthors point out, fake locations and other inaccuracies have been part of mapmaking since ancient times. That's due in part to the very nature of translating real-life locations to map form, as no map can capture a place exactly as it is. But some inaccuracies in maps are spoofs that the mapmakers created. The term "paper towns" describes discreetly placed fake cities, mountains, rivers, or other features on a map to prevent copyright infringement.

For example, on the more lighthearted end of the spectrum, an official Michigan Department of Transportation highway map in the 1970s included the fictional cities of "Beatosu" and "Goblu," a play on "Beat OSU" and "Go Blue," because the then-head of the department wanted to give a shout-out to his alma mater while protecting the copyright of the map.

But with the prevalence of geographic information systems, Google Earth, and other satellite imaging systems, location spoofing involves far greater sophistication, researchers say, and carries with it more risks. In 2019, the director of the National Geospatial Intelligence Agency, the organization charged with supplying maps and analyzing satellite images for the US Department of Defense, implied that AI-manipulated satellite images can be a severe national security threat.

Tacoma, Seattle, Beijing

To study how satellite images can be faked, Zhao and his team turned to an AI framework that has been used in manipulating other types of digital files. When applied to the field of mapping, the algorithm essentially learns the characteristics of satellite images from an urban area, then generates a deepfake image by transferring those learned characteristics onto a different base map, similar to how popular image filters can map the features of a human face onto a cat.

Next, the researchers combined maps and satellite images from three cities—Tacoma, Seattle, and Beijing—to compare features and create new images of one city, drawn from the characteristics of the other two. They designated Tacoma their "base map" city and then explored how geographic features and urban structures of Seattle (similar in topography and land use) and Beijing (different in both) could be incorporated to produce deepfake images of Tacoma.

In the example below, a Tacoma neighborhood is shown in mapping software (top left) and in a satellite image (top right). The subsequent deepfake satellite images of the same neighborhood reflect the visual patterns of Seattle and Beijing. Low-rise buildings and greenery mark the "Seattle-ized" version of Tacoma on the bottom left, while Beijing's taller buildings, which AI matched to the building structures in the Tacoma image, cast shadows—hence the dark appearance of the structures in the image on the bottom right. Yet in both, the road networks and building locations are similar.

These are maps and satellite images, real and fake, of one Tacoma neighborhood. The top left shows an image from mapping software, and the top right is an actual satellite image of the neighborhood. The bottom two panels are simulated satellite images of the neighborhood. (Credit: Zhao et al., 2021, Cartography and Geographic Information Science)

The untrained eye may have difficulty detecting the differences between real and fake, the researchers point out. A casual viewer might attribute the colors and shadows simply to poor image quality. To try to identify a "fake," researchers homed in on more technical aspects of image processing, such as color histograms and frequency and spatial domains.
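As a rough illustration of the kinds of features mentioned above, the sketch below computes a per-channel color histogram and a simple frequency-domain summary for a row of pixel intensities. It is not the researchers' detection method; the function names, the bin count, and the "high-frequency share" measure are all assumptions chosen for illustration, and a real analysis would typically run a 2-D transform over the whole image with a numerical library.

```python
import cmath

def channel_histogram(pixels, channel, bins=4):
    """Count how many pixels fall into each intensity bin for one RGB channel."""
    counts = [0] * bins
    for px in pixels:
        value = px[channel]                      # expected range: 0..255
        counts[min(value * bins // 256, bins - 1)] += 1
    return counts

def dft_magnitudes(signal):
    """Naive discrete Fourier transform of a 1-D signal; returns magnitudes."""
    n = len(signal)
    return [
        abs(sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)))
        for k in range(n)
    ]

def high_frequency_share(signal):
    """Share of non-DC spectral magnitude that sits in the upper half of the band.

    Hypothetical summary statistic: values near 1 mean rapid pixel-to-pixel
    variation dominates, values near 0 mean slow variation dominates.
    """
    mags = dft_magnitudes(signal)
    half = len(signal) // 2
    band = mags[1:half + 1]                      # skip the DC term, keep positive frequencies
    total = sum(band)
    if total == 0:
        return 0.0
    return sum(band[len(band) // 2:]) / total

# Toy inputs (made up for illustration, not taken from the study).
pixels = [(0, 0, 0), (255, 0, 0), (64, 0, 0), (128, 0, 0)]
red_histogram = channel_histogram(pixels, channel=0)
checkerboard_row = [0, 255] * 4                  # intensity flips at every pixel
share = high_frequency_share(checkerboard_row)
```

Comparing such histograms and frequency summaries between a known-real image and a suspect one is one plausible way to look for the subtle color and texture differences a casual viewer would miss.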

Could 'location spoofing' prove useful?

Some simulated satellite imagery can serve a purpose, Zhao says, especially when representing geographic areas over periods of time to, say, understand urban sprawl or climate change. There may be a location for which there are no images for a certain period of time in the past, or in forecasting the future, so creating new images based on existing ones—and clearly identifying them as simulations—could fill in the gaps and help provide perspective.

The study's goal was not to show that it's possible to falsify geospatial data, Zhao says. Rather, the authors hope to learn how to detect fake images so that geographers can begin to develop the data literacy tools, similar to today's fact-checking services, for public benefit.

"As technology continues to evolve, this study aims to encourage more holistic understanding of geographic data and information, so that we can demystify the question of absolute reliability of satellite images or other geospatial data," Zhao says. "We also want to develop more future-oriented thinking in order to take countermeasures such as fact-checking when necessary," he says.

Coauthors of the study are from the University of Washington, Oregon State University, and Binghamton University.

Source: University of Washington. Reprinted with permission of Futurity. Read the original article.


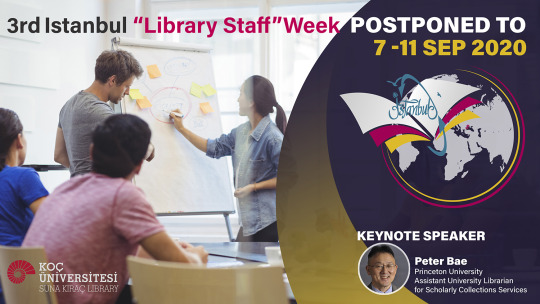

First Poster: Istanbul International “Library Staff” Week Project

Poster Creator: Sina Mater

Institution: Koç University Suna Kıraç Library

Poster Abstract: This year the week will focus on engagement. Library and Information Science is an evolving sector that follows current trends both in education and technology, trying to meet the various information and research needs of the users. Librarians are relationship builders for their campuses and they recognize the value of building essential, foundational community relationships...

Engagement can be established around academic issues, users, research, scholarship, technology, and much more. Our workshops, presentations and discussions can cover different areas of ongoing engagement efforts such as Data Science and User Research, Assessment, Open Science, Scholarly Publishing, Data Management, Metrics, Information Visualization (IV), Research oriented IL, Artificial Intelligence (AI), Fake News, Outreach services, Digital Transformation, Sustainability, Digital Humanities, Conservation – Preservation of audiovisual Cultural Heritage materials, etc.

Through this week, library staff and visitors will have the chance to engage in fruitful discussions and exchange professional and personal experiences, which will broaden their perspective on issues related to Information Science, strengthen their skills and hopefully plant the roots for future collaboration on joint projects.


10 Best Online Assessment Software For Teachers In 2020

2020 started as a pretty shaky year. The COVID-19 quarantine has ruled out in-person interviews, and personal assessments have become a pretty stressful endeavor.

Some places are on lockdown, so individual evaluations, discussions, and meetings are entirely out of the question.

That is the exact reason why you should consider giving online assessment a shot. Online assessment tools have been available for quite some time and were always a relatively popular option.

Online assessment software allows teachers to quiz their students through the internet with ease while cutting down on any cheater’s chances of passing.

As stated above, online assessment software has been around for quite some time, but not all of it is created equal.

People are taking the new opportunity to advertise their online assessment software, and it’s flooding the market with weak technology.

Top 10 Online Assessment Tools For Teachers in 2020

ProProfs Online Assessment Software

Easy LMS

ExamSoft

Cognician

GLIDER.ai

Nearpod

iSpring Suite

PowerSchool Assessment

CodeSignal

ReadnQuiz

For this purpose, we’ve decided to provide you with only the best online assessment tools for teachers in 2020, which you can find neatly listed below.

1) ProProfs Online Assessment Software

ProProfs has a long-standing history as one of the internet’s best online assessment and quiz tools. Their online assessment tool comes chock full of features that every teacher can benefit from.

Creating online assessment tests is a simple process. It allows you to import questions from a question bank, which contains over 100,000 different ready-to-use questions on various topics.

With the ProProfs online assessment software, you can pick the questions, and the type of answers you want to receive. It’s the ultimate tool for creating online quizzes, allowing you even to automate grading and scoring.

Your job as a teacher is further simplified by this feature, as ProProfs Online Assessment Software allows you to give instant feedback and scores based on the controlled answers.

That will give your students a brand new level of insight into their online assessment, allowing you to track their performance through reports.

The reports are very detailed and allow you to view virtually every aspect of the assessment. Another thing ProProfs is renowned for is its extensive customizability.

It’s fully customizable, allowing you to create a unique quiz carefully calibrated for your specific alma mater, topic, or subject.

2) Easy LMS

Easy LMS is also a popular online assessment software, specializing in educational technology.

Their online quizzing and assessment software is renowned for its user experience and customer support.

Not only is the software easy to use, making online assessment a breeze, but it is also fully immersive and allows for detailed quiz creation without any prior coding knowledge.

The online assessment creation tool comes jam-packed with a myriad of different customization features, allowing for much greater ease of use.

The Easy LMS online assessment tool is simple for both teachers and students, making for a very user-friendly experience.

The quizzes are available on all platforms and are fully optimized for phones, computers, and tablets.

What’s also exciting about this platform is its capability. It has several anti-cheating measures put in place and allows you to modify your quiz to your liking.

Any issue that you find with the software is bound to be quickly resolved by the ever active software customer support team.

3) ExamSoft

ExamSoft is a renowned online assessment software, which takes a very professional approach to the matter. Unlike the previous two additions on this list, ExamSoft is far more capable, at the price of added complexity.

If you’re prepared to put in the effort and fully invest your time into online assessment creation, you can rest assured you’re going to get an excellent end product.

ExamSoft’s power is considerable, allowing for fully customizable online assessments.

Cheating is a thing of the past, as ExamSoft has powerful randomization possibilities based on the number of questions.

With ExamSoft, you can expect nothing but the top-tier efficiency that online assessment tools have to offer.

4) Cognician

Cognician is an online e-learning platform proposed for personal development, but it also comes with useful online assessment tools.

A tool like Cognician allows for quick and straightforward quiz creation, without any prior programming or quiz-making knowledge.

And the online assessment is constructed to let you reap critical data about your students. As a teacher, you should always strive to improve your efficiency and boost your students’ performance in the classroom.

With the data reports that Cognician brings to the table, you can completely modify your future exams and assessments, and influence better student performance.

Alternatively, the data garnered from these assessments will give you essential information when creating your next assessment.

5) GLIDER.ai

Glider.ai is a revolutionary new online assessment tool that allows you to create online assessments for your students or your employees.

It uses advanced machine learning and AI technology to help you get meaningful insight into your students’ or employees’ performance.

It’s a powerful tool that will help you to gather an abundance of essential data, all with a straightforward creation process.

Even if it sounds complicated because of the AI and machine learning involved, the actual user experience is relatively easy. The question options are abundant and very interactive.

All of these advanced features are completely automated, allowing you, the teacher, to effortlessly create online assessments for your students.

It has many question options, and you can pick from 40 different question types.

6) Nearpod

Nearpod is an online assessment tool with a specific focus on education, making it a superb tool for teachers.

It’s a powerful tool that allows teachers to create detailed online assessments, without much time, effort, or prior quiz creation knowledge.

The self-titled “ed-tech tool” has quite a lot to offer. It’s not only an online quiz creation tool, as you can round up your whole educational process and lessons through it.

Educational experiences, polls, and questionnaires are all fully customizable and allow for detailed reports.

You can use these detailed reports to optimize your educational quizzing process, all through Nearpod. It allows for many quizzing and question types, but what sets it apart is its draw and optional answer features.

These features make Nearpod one of the best online assessment tools for teachers, as Nearpod is available and useful for basically every single niche of education.

Another essential thing to note about Nearpod is its active customer service, which will resolve any issue you might encounter while using their platform.

7) iSpring Suite

iSpring Suite is a powerful educational online tool kit that allows educators to create a myriad of quizzes, online assessments, and tests for their students.

The potential of this software is vast, and it will enable you to create a complete and complex course in a matter of minutes.

These courses, quizzes, and questionnaires are all very expansive, as their creative potential exceeds that of many online assessment tools.

It’s another e-learning platform taking a note from PowerPoint’s book.

It allows users to create interactive and educational learning opportunities for their students and get some critical insight into student behavior and performance.

Working with this toolkit is as easy as dragging and dropping things. Its potential is vast, as you can add video narrations, conduct a screencast, and insert quizzes and polls into your educational effort.

Finishing up this process is as easy as publishing it, and uploading the published result to your LMS.

The generated reports that iSpring Suite provides you with will give you some inside knowledge about your students’ behavior, allowing you to optimize your educational strategy even further.

It’s an ideal tool for these quarantined times.

8) PowerSchool Assessment

PowerSchool Assessment provides a myriad of educational technology solutions, which work great for teachers looking to bring their classroom to the internet.

This tool offers a multitude of online assessment opportunities and lets you thoroughly analyze the critical data you get from the assessment.

You can deliver specially calibrated content, online quizzes, and assessments, and use reports to analyze any student interaction with your exam.

The quiz creation tool itself is relatively simple to use and is moderately powerful when it comes to getting a proper online assessment.

Where PowerSchool Assessment shines is its analytical opportunities. The collection of the data is completely automated and filtered to get accurate data.

This data can then be thoroughly analyzed and allow teachers to further work on their assessment and quizzing.

9) CodeSignal

CodeSignal is a unique tool on this list, as it specializes in programmer education. It gives programming teachers purpose-built student assessment software.

In an age where online teaching tools are highly expensive, and when programming and software are such a vast educational prospect, a purpose-built tool is a must.

CodeSignal is an assessment platform that allows teachers to analyze and assess quizzing results and provide valuable insight.

CodeSignal has quite an extensive educational history and has been used for numerous stimulative projects at MIT.

It strives to turn the computer sciences into an exciting and interactive educational experience through the process of gamification.

Teachers can use this tool with relative ease, simulating real-life situations and posing interactive questions through customized tests.

10) ReadnQuiz

Unlike the previous addition to this list, ReadnQuiz is a much simpler online assessment software, which allows teachers to quiz their students on a variety of topics.

It’s a straightforward tool to use and get into but provides detailed reports and in-depth analysis.

The teacher can completely customize the quiz, choosing between different types of questions and answers.

With ReadnQuiz, the teacher is in complete control. And since you’re allowed to create as many questions as you like, the automatic randomization of each round of testing will significantly discourage any cheating.

It’s purposefully built to encourage kids to read and is carefully designed for classrooms with any number of designated teachers.

If your classroom age demographic is from small kids to teenagers — this software is for you.

In Conclusion

Online assessment software is readily available, and it’s vital to pick out the best tool for your specific needs. Our list covers all education niches, age demographics, and analytical levels, respectively.

Find the tool that fits your specific requirements and approach, and don’t let the quarantine stop

The post 10 Best Online Assessment Software For Teachers In 2020 appeared first on CareerMetis.com.

10 Best Online Assessment Software For Teachers In 2020 published first on https://skillsireweb.tumblr.com/


Best Data Science Course In Delhi, Top Data Science Course In Delhi

Over these years I had a great experience in every field, be it studies, sports, social service, or anything else. Whatever I am today after engineering is the result of the combined efforts and valuable guidance of all the teachers and staff. Political Science, Physics, English Literature, and History attract most of the candidates from Kerala. In the 2019-'22 batch, the Physics UG course at Hindu College has 39 Malayalis out of 141 students. The average fee for online courses varies from INR 10,000 to 50,000. For UG courses it is between INR 6-10 L, and for PG courses it is INR 7-15 L. There are 134 colleges in India that offer data science courses. For those who find our culture and working standards admirable, we are always keen to provide industry exposure within our organisation. Monthly growth and skill evaluation of every student is recorded and shared with them during one-on-one mentorship sessions in order to make them future ready.

The Indraprastha Institute of Information Technology, Delhi, in collaboration with IBM, has launched a nine-month-long PG Diploma program in Data Science and AI (PGDDS&AI) for students and IT professionals. The program will help cater to the upskilling needs of IT professionals and meet industry requirements. COPS has the best Data Science faculty with relevant experience in the field.

We introduce real-life projects to our students so they can put their learning into practice. We share some valuable insights about real-world projects that make use of this specific technology. Its purpose is to showcase the individual’s readiness to use data science knowledge in real-life scenarios. Students use data science approaches like machine learning, statistical modeling, and artificial intelligence to solve problems. IMS Proschool has been ranked 4th among the top 5 training institutes in India for analytics and data science by Analytics India Magazine. The Office of Alumni Relations at National Forensic Sciences University was established to give alumni a platform to contact and connect with their alma mater. Alumni of the National Forensic Sciences University can contact the Alumni Relations office for any requests related to the institute. It will also keep alumni updated about various activities happening on campus, thus helping them stay connected with National Forensic Sciences University.

This is the 31st module of our data science course in Delhi. Text mining is one of the most critical ways to analyze and process unstructured data. This will enable the student to learn the data science course syllabus in depth and become an outstanding data scientist. This is the 29th module of our data science course in Delhi. Take a data science course online at Techstack Academy, which intends to deliver nothing but the best. We educate our students in every aspect of the course they choose, starting from the very beginning, to give them thorough knowledge of the subject.

Address :

M 130-131, Inside ABL Work Space, Second Floor, Connaught Circle, Connaught Place, New Delhi, Delhi 110001

9632156744

Click here to get directions; https://g.page/ExcelRDataScienceDelhi?share


Voices in AI – Episode 104: A Conversation with Anirudh Koul

About this Episode

On Episode 104 of Voices in AI, Byron Reese discusses the nature of intelligence and how artificial intelligence evolves and becomes viable in today’s world.

Listen to this episode or read the full transcript at www.VoicesinAI.com

Transcript Excerpt

Byron Reese: This is Voices in AI brought to you by GigaOm, I’m Byron Reese. Today, my guest is Anirudh Koul. He is the head of Artificial Intelligence and Research at Aira and the founder of Seeing AI. Before that he was a data scientist at Microsoft for six years. He has a Masters of Computational Data Science from Carnegie Mellon and some of his work was just called by Time magazine, ‘One of the best inventions of 2018,’ which I’m sure we will come to in a minute. Welcome to the show Anirudh.

Anirudh Koul: It’s a pleasure being here. Hi to everyone.

So I always like to start off with—I don’t wanna call it a philosophical question—but it’s sort of definitional question which is, what is artificial intelligence and more specifically, what is intelligence?

Technology has always been here to fill the gaps between whatever ability is in our task and we are noticing this transformational technology—artificial intelligence—which can now try to mimic and predict based on previous observations, and hopefully try to mimic human intelligence which is like the long term goal—which might probably take 100 years to happen. Just noticing the evolution of it over the last few decades, where we are and where the future is going to be based on how much we have achieved so far, is just exciting to be in and be playing a part of it.

It’s interesting you use the word ‘mimic’ human intelligence as opposed to achieve human intelligence. So do you think artificial intelligence isn’t really intelligence? All it can do is kind of look like intelligence, but it is not really intelligence?

From the outside, when you see something happen for the first time, it’s like magical. When you see the demo of an image being described by a computer in an English sentence, if you saw one of those demos in 2015, it just knocks your socks off when you see it the first time. But then, if you ask a researcher, they’d say, “Well, it kind of has, you know, sort of learned the data, the pattern behind the scenes, and it does make mistakes. It’s like a three year old. It knows a little bit, but the more of the world they show it, the smarter it gets.” So from the outside, from the point of press, the reason why there’s a lot of hype is because of the magical effect when you see it happen for the first time. But the more you play with it, you also start to learn how far it has to go. So right now, mimicking might probably be a better word to use for it and hopefully in the future, maybe go closer to real intelligence. Maybe in a few centuries.

I notice the closer people are to actually coding, the further off they think general intelligence is. Have you observed that?

Yeah. If you look at the industrial trend and especially talking to people who are actively working on it, if you try to ask them when is artificial general intelligence (the field that you’re just talking about) going to come, most people on average will give you the year 20… They’ll basically give the end of this century. That’s when they think that artificial general intelligence will be achieved. And the reason is because of how far we have to go to achieve it.

At the same time, as the year 2017/18 comes, you start to learn that AI is really often an optimization problem trying to achieve a goal, and that many times these goals can be misaligned: no matter how, it needs to achieve the goal. Some of the fun examples are famous failure cases, like one where there was a robot which was trying to minimize the time a pancake should be on the surface of the pancake maker. What it would do is, it would basically flip the pancake up in the air, but because the optimization problem was to minimize the time, it would flip the pancake so high in the air that it would basically go to space during simulation, and you minimize the time.

A lot of those failure cases are now being studied to understand the best practices and also learn the fact that, “Hey, we need to be keeping a realistic view of how to achieve that.” They’re just fun on both sides of what you can achieve realistically. Maybe some of those failure cases and just keeping appreciation for [the fact that] we have a long way to achieve that.

Who do you think is actually working on general intelligence? Because 99% of all the money put in AI is, like you said, to solve problems like get that pancake cooked as fast as you can. When I start to think about who’s working on general intelligence, it’s an incredibly short list. You could say OpenAI, the Human Brain Project in Europe, maybe your alma mater Carnegie Mellon. Who is working on it? Or will we just get it eventually by getting so good at narrow AI, or is narrow AI just really a whole different thing?

So when you try to achieve any task, you break it down into subtasks that you can achieve well, right? So if you’re building a self-driving car, you would divide it into different teams. One team would just be working on one single problem of lane finding. Another team would just be working on the single problem of how to back up a car or park it. And if you want to achieve a long term vision, you have to divide it into smaller sub pieces of things that are achievable, that are bite sized, and then in those smaller near-term goals, you can get to some wins.

In a very similar way, when you try to build a complex thing, you bring it down to pieces. Some are obviously: Google, Microsoft Research, OpenAI, especially OpenAI. This is probably the bigger one who is betting on this particular field, making investments in this field. Obviously, universities are getting into it but interestingly, there are other factors even from the point of funding. So, for example, DARPA is trying to get in this field of putting funding behind AI. As an example, they put in like a $2 billion investment on something called the ‘AI Next’ program. What they’re really trying to achieve is to overcome the limitations of the current state of AI.

To give a few examples: Right now if you’re creating an image recognition system, that typically takes somewhere around a million images to train for something like ImageNet, which is considered the benchmark. What DARPA is saying is, “Look, this is great, but could you do it at one tenth of the data, or could you do that at one hundredth of the data? But we’ll give you the real money if you can do it at 1000th of the data.” They literally want to cut the scale logarithmically by half, which is amazing.

Byron explores issues around artificial intelligence and conscious computers in his new book The Fourth Age: Smart Robots, Conscious Computers, and the Future of Humanity.

from Gigaom https://gigaom.com/2020/01/09/voices-in-ai-episode-104-a-conversation-with-aniruhd-koul/


New Artificial Intelligence System To Aid In Materials Fabrication

Using a new artificial-intelligence system that would pore through research papers to deduce “recipes” for producing particular materials, scientists hope to close the materials-science automation gap.

In recent years, research efforts such as the Materials Genome Initiative and the Materials Project have produced a wealth of computational tools for designing new materials useful for a range of applications, from energy and electronics to aeronautics and civil engineering.

But developing processes for producing those materials has continued to depend on a combination of experience, intuition, and manual literature reviews.

A team of scientists at MIT, the University of Massachusetts at Amherst, and the University of California at Berkeley hope to close that materials-science automation gap, with a new artificial-intelligence system that would pore through research papers to deduce “recipes” for producing particular materials.

“Computational materials scientists have made a lot of progress in the ‘what’ to make — what material to design based on desired properties,” says Elsa Olivetti, the Atlantic Richfield Assistant Professor of Energy Studies in MIT’s Department of Materials Science and Engineering (DMSE). “But because of that success, the bottleneck has shifted to, ‘Okay, now how do I make it?’”

The scientists envision a database that contains materials recipes extracted from millions of papers. Scientists and engineers could enter the name of a target material and any other criteria — precursor materials, reaction conditions, fabrication processes — and pull up suggested recipes.

As a step toward realizing that vision, Olivetti and her colleagues have developed a machine-learning system that can analyze a research paper, deduce which of its paragraphs contain materials recipes, and classify the words in those paragraphs according to their roles within the recipes: names of target materials, numeric quantities, names of pieces of equipment, operating conditions, descriptive adjectives, and the like.

In a paper appearing in the latest issue of the journal Chemistry of Materials, they also demonstrate that a machine-learning system can analyze the extracted data to infer general characteristics of classes of materials, such as the different temperature ranges that their synthesis requires, or particular characteristics of individual materials, such as the different physical forms they will take when their fabrication conditions vary.

Olivetti is the senior author on the paper, and she’s joined by Edward Kim, an MIT graduate student in DMSE; Kevin Huang, a DMSE postdoc; Adam Saunders and Andrew McCallum, computer scientists at UMass Amherst; and Gerbrand Ceder, a Chancellor’s Professor in the Department of Materials Science and Engineering at Berkeley.

Filling in the gaps

The scientists trained their system using a combination of supervised and unsupervised machine-learning techniques. “Supervised” means that the training data fed to the system is first annotated by humans; the system tries to find correlations between the raw data and the annotations. “Unsupervised” means that the training data is unannotated, and the system instead learns to cluster data together according to structural similarities.

Because materials-recipe extraction is a new area of research, Olivetti and her colleagues didn’t have the luxury of large, annotated data sets accumulated over years by diverse teams of researchers. Instead, they had to annotate their data themselves — ultimately, about 100 papers.

By machine-learning standards, that’s a pretty small data set. To improve it, they used an algorithm developed at Google called Word2vec. Word2vec looks at the contexts in which words occur, meaning the words’ syntactic roles within sentences and the other words around them, and groups together words that tend to have similar contexts. So, for instance, if one paper contained the sentence “We heated the titanium tetrachloride to 500 C,” and another contained the sentence “The sodium hydroxide was heated to 500 C,” Word2vec would group “titanium tetrachloride” and “sodium hydroxide” together.
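The idea that words sharing contexts end up grouped together can be sketched with simple co-occurrence counts. The snippet below is not Word2vec itself, which learns dense vectors with a neural network over a large corpus; it is a count-based stand-in that uses only the two example sentences, with each compound name pre-joined into a single token and a window of three words, both of which are assumptions made for illustration.

```python
from collections import Counter
from math import sqrt

# Toy corpus: the two example sentences, lowercased, with each compound
# name joined into one token (an assumption made here for simplicity).
SENTENCES = [
    "we heated the titanium_tetrachloride to 500 c",
    "the sodium_hydroxide was heated to 500 c",
]

def context_counts(sentences, window=3):
    """Map each word to a Counter of the words seen within `window` positions."""
    contexts = {}
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            neighbors = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
            contexts.setdefault(word, Counter()).update(neighbors)
    return contexts

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

contexts = context_counts(SENTENCES)
similarity = cosine(contexts["titanium_tetrachloride"], contexts["sodium_hydroxide"])
```

The two chemical names share the neighbors "heated", "the", and "to", so their context vectors overlap. With only two sentences the signal is weak; the grouping becomes meaningful only when contexts are aggregated over many papers.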

With Word2vec, the researchers were able to greatly expand their training set, since the machine-learning system could infer that a label attached to any given word was likely to apply to other words clustered with it. Instead of 100 papers, the researchers could thus train their system on around 640,000 papers.
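The expansion step described here, where a label on one word is assumed to apply to the other words clustered with it, might look roughly like the sketch below. The function name, the cluster contents, and the rule of propagating only when a cluster's labeled words agree are all hypothetical choices for illustration; the paper's actual procedure is not specified in this article.

```python
def propagate_labels(seed_labels, clusters):
    """Copy each human-assigned label to unlabeled words in the same cluster.

    seed_labels: dict mapping a word to its annotated role.
    clusters: iterable of word groups (e.g. produced by context similarity).
    A label is propagated only when all labeled words in a cluster agree.
    """
    expanded = dict(seed_labels)
    for cluster in clusters:
        labels_in_cluster = {seed_labels[w] for w in cluster if w in seed_labels}
        if len(labels_in_cluster) == 1:
            label = next(iter(labels_in_cluster))
            for word in cluster:
                expanded.setdefault(word, label)   # never overwrite a human label
    return expanded

# Hypothetical annotations and clusters, invented for this example.
seed_labels = {
    "titanium tetrachloride": "material",
    "500 C": "operating condition",
}
clusters = [
    ["titanium tetrachloride", "sodium hydroxide"],
    ["500 C", "300 C"],
    ["furnace", "autoclave"],                      # no labeled member, stays unlabeled
]
expanded = propagate_labels(seed_labels, clusters)
```

Starting from two annotated terms, the sketch ends with four labeled terms, which mirrors on a tiny scale how a small hand-annotated set can stretch across a much larger body of unannotated text.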

Tip of the iceberg

To test the system’s accuracy, however, they had to rely on the labeled data, since they had no criterion for evaluating its performance on the unlabeled data. In those tests, the system was able to identify with 99 percent accuracy the paragraphs that contained recipes and to label with 86 percent accuracy the words within those paragraphs.

The scientists hope that further work will improve the system’s accuracy, and in ongoing work they are exploring a battery of deep learning techniques that can make further generalizations about the structure of materials recipes, with the goal of automatically devising recipes for materials not considered in the existing literature.

Much of Olivetti’s prior research has concentrated on finding more cost-effective and environmentally responsible ways to produce useful materials, and she hopes that a database of materials recipes could abet that project.

“This is landmark work,” says Ram Seshadri, the Fred and Linda R. Wudl Professor of Materials Science at the University of California at Santa Barbara. “The authors have taken on the difficult and ambitious challenge of capturing, through AI methods, strategies employed for the preparation of new materials. The work demonstrates the power of machine learning, but it would be accurate to say that the eventual judge of success or failure would require convincing practitioners that the utility of such methods can enable them to abandon their more instinctual approaches.”

This research was supported by the National Science Foundation, the Office of Naval Research, the Department of Energy, and seed support through the MIT Energy Initiative. Kim was partially supported by the Natural Sciences and Engineering Research Council of Canada.

Publication: Edward Kim, et al. “Materials Synthesis Insights from Scientific Literature via Text Extraction and Machine Learning,” Chem. Mater., 2017, DOI: 10.1021/acs.chemmater.7b03500

#vehicle router#iot vending machine#remote maintenance#azure iot certified#branch networking#touch screen for vending machine#iot gateway manufacturer#m2m-iot#QR code vending machine#IPC remote access

0 notes

Text

Georgia State Professor—and Student Success Innovator—Awarded McGraw Prize in Education

For 30 years, the Harold W. McGraw, Jr. Prize in Education has been one of the most prestigious awards in the field, honoring outstanding individuals who have dedicated themselves to improving education through innovative and successful approaches. The prize is awarded annually through an alliance between The Harold W. McGraw, Jr. Family Foundation, McGraw-Hill Education and Arizona State University.

This year, there were three prizes: for work in pre-K-12 education, higher education and a newly created prize, for learning science research.

From among hundreds of nominations, the award team gave the Learning Science Research prize to Arthur Graesser, Professor in the Department of Psychology and the Institute of Intelligent Systems at the University of Memphis. Reshma Saujani, Founder and CEO of Girls Who Code, won the pre-K-12 award. The higher ed award honored Timothy Renick, Senior Vice President for Student Success and Professor of Religious Studies at Georgia State University. The three winners received an award of $50,000 each and an iconic McGraw Prize bronze sculpture.

At Georgia State, Dr. Renick has overseen some of the country’s fastest-improving graduation rates while wiping out achievement gaps based on students’ race, ethnicity and income. EdSurge spoke with Dr. Renick about upending conventional wisdom, the moral imperative of helping students succeed and how navigating college is a lot like taking a road trip.

EdSurge: You’ve said that instead of focusing so much attention on making students more college-ready, we should be making colleges more student-ready. That’s a fascinating concept. How was Georgia State failing in that regard, and how did you address the problem?

Tim Renick: We were failing in multiple ways. Ten years ago, our graduation rates hovered around 30%, meaning seven out of ten students who came to us left with no degree and many with debt and nothing to show for it. If students aren’t succeeding semester after semester, it’s easy to assume it’s not us, it’s them.

But what we’ve done at Georgia State is look at where we were creating obstacles. When we began to remove those obstacles, students not only started doing better overall, but the students who were least successful under the old system—our low-income students, our first-generation students, our students of color—did exponentially better.

One of your hallmark initiatives is GPS Advising, which uses predictive analytics. What drew you to that technology to help students?

We are a big institution, which means students leave a large electronic footprint every day—when they sign up for classes, when they drop a class, when they get a grade, and so forth. We thought, why don’t we use all this data we’re already collecting for their benefit? So back in 2011, we ran a big data project. We looked initially at about ten years of data, two and a half million Georgia State grades, and 140,000 student records to try to find early warning signs that a student would drop out.

We thought we’d find a couple dozen behaviors that had statistical significance, but we actually found 800. So every night when we update our student information systems, we’re looking for any of those 800 behaviors by any of our enrolled students. If one is discovered—say a bad grade on an early math quiz—the advisor assigned to that student gets an alert and within 48 hours there’s some kind of outreach to the student.
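The nightly check described above can be sketched roughly as follows. This is a minimal illustration only, not Georgia State's actual system: the record fields, the single `low_first_math_quiz` indicator, and the 60-point threshold are all hypothetical, standing in for the roughly 800 behaviors mentioned in the interview. Only the 48-hour outreach window comes from the text.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, List, Optional, Tuple


@dataclass
class StudentRecord:
    # Hypothetical record shape; the real student information system is not described.
    student_id: str
    advisor: str
    first_math_quiz_score: Optional[float]  # None if the quiz is not graded yet


@dataclass
class Alert:
    student_id: str
    advisor: str
    indicator: str
    outreach_due: datetime


# Each indicator is a name plus a predicate over one record.
# A single made-up indicator stands in for the full set of risk behaviors.
INDICATORS: List[Tuple[str, Callable[[StudentRecord], bool]]] = [
    (
        "low_first_math_quiz",
        lambda r: r.first_math_quiz_score is not None
        and r.first_math_quiz_score < 60.0,  # assumed threshold
    ),
]

# The interview states that outreach happens within 48 hours of an alert.
OUTREACH_WINDOW = timedelta(hours=48)


def nightly_scan(records: List[StudentRecord], now: datetime) -> List[Alert]:
    """Check every enrolled student against every indicator and collect alerts."""
    alerts = []
    for record in records:
        for name, triggered in INDICATORS:
            if triggered(record):
                alerts.append(
                    Alert(record.student_id, record.advisor, name, now + OUTREACH_WINDOW)
                )
    return alerts
```

Each alert carries the assigned advisor and an outreach deadline, so a follow-up step could route it to the right person and flag any alert that passes its deadline without contact.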

Past McGraw Prize winners include:

Barbara Bush, Founder, Barbara Bush Foundation for Family Literacy

Chris Anderson, Curator, TED

Sal Khan, Founder and Executive Director, Khan Academy

Wendy Kopp, President and Founder, Teach for America

How has this changed the student experience?

In the past, for example, a STEM student would get a low grade in a math course. The next semester they’d take chemistry. They’d get a failing grade in that course because they didn’t have the math skills. The next semester they’d take organic chemistry. By the time anybody noticed, they already had three Ds and an F under their belts and were out of chemistry as a major.

Now when a student is taking that first quiz, if they didn’t do well on it and especially if they are a STEM major, there’s an alert that goes off. Somebody engages the student and says, do you realize that students who don’t do well on the first quiz often struggle on the midterm and the final, but we have resources for you. There’s a near-peer mentor for your class. There’s a math center. You can go to faculty office hours. There are all these things you can do!

If we can catch these problems early and get the student back on path, we can increase the chances they will get to their destination, which is graduation.

How widespread is this type of predictive analytic use? Could elite, well-resourced schools such as your alma maters—Dartmouth and Princeton—benefit from GPS Advising?

When we launched our GPS Advising back in 2012, we were one of maybe three schools in the country that had anything like daily tracking of every student using predictive analytics. There are now over 400 schools that are using platforms along these lines.

And yes, even elite institutions suffer from achievement gaps. Like us, they are interested in delivering better services. One of the innovations we developed out of necessity was the use of an AI-enhanced chatbot, an automated texting platform that uses artificial intelligence to allow students to ask questions 24/7 and get immediate responses. In the first three months after we launched this chatbot, we had 200,000 student questions answered.

That might seem like a need for a lower-resourced campus like Georgia State. But then you go to a place like Harvard, and they say, ‘well our students live on their smartphones, too,’ and they don’t want to come into an office or call up some stranger to get an answer to a question. They want the answers at their fingertips. So there’s a lot that we developed by necessity that may be part of what becomes the norm across higher education over the next few years.

How much has your background as a professor in Religious Studies influenced your current work?

Quite a bit. My specialty is Religious Ethics. I believe unabashedly that there’s a moral component to the work we’re doing. It’s not ethically acceptable for us to continue to enroll low-income, first-generation college students—almost all of whom are taking out large loans in order to get the credential that we’re promising them—and then not provide them the support that gives them every chance to succeed.

Can you tell us about how your work has supported one of those students?

My favorite story is of Austin Birchell, a first-generation, low-income student from northern Georgia who was a heavy user of our chatbot when we first launched it. Sadly, Austin’s dad died when he was 14. His mom was having great difficulty getting a job again. She said specifically that everybody who was getting the job she was up for had a college degree. So Austin made a vow that he wasn’t going to let that happen to him.

He did everything right—got straight As, earned a big scholarship and decided to attend Georgia State. Then he got his first bill for the fall semester. It came in the middle of July and it was for $4000 or $5000 more than he had anticipated. Like a lot of first-generation students, he initially blamed himself. But he got on the chatbot and discovered that on some form his social security number had been transposed, so his scholarship wasn’t getting applied to his account. The problem got fixed. Austin and his mother were so relieved they took a bus to campus to pay at the cashier’s office because they didn’t want to leave anything to chance.

If we hadn’t changed the way we approach the process, Austin had every chance of being one of those students who never makes it to college, not through any fault of his own. It’s not acceptable to live in a world where someone like Austin loses out on college because we didn’t do our job.

How would you like to see your work impact higher education in general?

That’s a good question. What I’d like to be part of is a change in the conversation. For a generation, we’ve thought that the key is getting students college-ready. So all the pressure falls upon K-12 or state governments or public educational systems to prepare students better. What the Georgia State story shows is that we at the post-secondary level have a lot of control over the outcomes of our students. Changing simple things—like the way we communicate with them before they enroll, or the advice we give them about their academic progress, or the small grants we award students when they run into financial difficulty—can be the difference between graduation rates that are well below the national average and graduation rates that are well above it.

We are graduating over 2800 students more than we were in 2011, and we are now conferring more bachelor’s degrees to African-Americans than any other college or university in the United States. We’re not doing it because the students are more college-ready—our incoming SAT scores are actually down 33 points—we’re doing it because the campus is more student-ready.

Georgia State Professor—and Student Success Innovator—Awarded McGraw Prize in Education published first on https://medium.com/@GetNewDLBusiness

0 notes

Link

ANCONA – KUM!, the new project conceived by Massimo Recalcati and devoted to developing the contemporary concept of care, opens this afternoon at 3:30 p.m. at the Mole. The event will be held annually and aims to become the leading festival in its field. Guests for 2017 include sociologists Aldo Bonomi and Luigi Manconi, philosopher Adriana Cavarero, radio host Massimo Cirri, journalists Gad Lerner and Stefano Bartezzaghi, and philosopher Bernard Stiegler. Over the three days the speakers will alternate among Lectio, featuring some of the most prestigious names in Italian and international thought, from philosophy to psychoanalysis, from literature to medicine; Dialoghi on the big questions of the world of care; A tre voci discussions on the most pressing current affairs and everyday issues, from migration to schools by way of the world of childhood; Conversazioni on open questions such as gambling or how to face illness; Ritratti of great thinkers; Aperitivi filosofici; Spazio cinema; and Psicologia da tè, readings and reflections on the great classics of psychoanalysis over a steaming cup of tea.

Alongside the humanities program there is also an experimental, innovative section, KUM!Lab, divided into three parts. The first is a contest among the eight most innovative health and wellness research projects under development in our region. It is called Science Factor and involves the winning projects of the FESR Marche – POR 2014-2020 call, which present themselves before a special jury, as if in a science talent show. The second is Walking along the chromosomes, workshops open to all (bookable at www.kumfestival.it) but aimed especially at 15- to 18-year-olds, which let participants discover the great new prospects of science and medicine in the post-genomic era. The activity is run by Cinzia Grazioli and Lidia Pirovano of CusMiBio, the University of Milan's center for the dissemination of biosciences. The last section is called Parole ingovernabili and is a social contest.

The event is organized by the Comune di Ancona – Assessorato alla Cultura, in collaboration with the Assessorato ai servizi sociali, with funding from the Regione Marche and the Fondazione Cariverona, and organizational coordination by the cultural association Esserci.