#micro llm

Text

Micro LLMs and Edge AI: Bringing Powerful Intelligence to Your Devices

Artificial Intelligence has undeniably permeated every facet of our digital lives, from personalized recommendations to sophisticated content generation. Yet, much of this intelligence has traditionally resided in distant cloud data centers, requiring constant internet connectivity and raising concerns about privacy and latency. As we navigate mid-2025, a quiet revolution is underway: the proliferation of Micro LLMs and Edge AI, empowering devices to become intelligent, autonomous, and remarkably efficient without constant cloud reliance.

This paradigm shift isn't just about convenience; it's about making AI more private, faster, cheaper, and universally accessible, transforming everything from your smartphone to industrial sensors into powerful, on-demand intelligent systems.

The Limitations of Cloud-Centric AI

While cloud computing provides immense processing power, it comes with inherent drawbacks for many real-world AI applications:

Latency: Sending data to the cloud, processing it, and receiving a response introduces delays. For real-time applications like autonomous vehicles, robotics, or instant voice commands, even milliseconds matter.

Privacy Concerns: Transmitting sensitive personal or proprietary data to remote servers raises significant privacy and security questions.

Bandwidth & Cost: Constant data streaming to and from the cloud consumes considerable bandwidth and can accrue significant costs, especially for large-scale IoT deployments.

Offline Functionality: Cloud-dependent AI ceases to function without an active internet connection, limiting its utility in remote areas or during network outages.

Understanding the Core Concepts: Edge AI and Micro LLMs

To overcome these challenges, two interconnected technological advancements are taking center stage:

Edge AI: This refers to the deployment of AI algorithms and machine learning models directly on "edge devices" – the physical devices where data is generated. Think smartphones, smart cameras, industrial sensors, wearables, or autonomous vehicles. Instead of sending all raw data to the cloud for processing, Edge AI enables intelligent computation to happen locally, at the "edge" of the network.

Micro LLMs (and TinyML): These are highly optimized, significantly smaller versions of Large Language Models (LLMs) and other AI models, specifically designed to run efficiently on resource-constrained edge devices. Techniques like quantization (reducing numerical precision), pruning (removing unnecessary connections in a neural network), and knowledge distillation (training a smaller model to mimic a larger one) allow these models to deliver powerful AI capabilities with minimal memory, processing power, and energy consumption. TinyML is a broader term encompassing the entire field of running very tiny machine learning models on microcontrollers.
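To make two of those techniques concrete, here is a minimal sketch of quantization and pruning on a toy list of weights. It is an illustration under simplifying assumptions, not how any particular framework does it: the function names (`quantize_int8`, `dequantize`, `prune_by_magnitude`) and the sample weights are made up for this example, and the scheme shown is plain symmetric per-tensor int8 quantization plus magnitude pruning.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in quantized]


def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * fraction)
    if k == 0:
        return list(weights)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_drop = set(by_magnitude[:k])
    return [0.0 if i in to_drop else w for i, w in enumerate(weights)]


# Hypothetical weights, chosen only for illustration.
weights = [0.50, -1.27, 0.03, 0.90, -0.02, 0.64]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
pruned = prune_by_magnitude(weights, 0.5)

print("int8 values:", quantized)
print("max round-trip error:", max(abs(a - b) for a, b in zip(weights, restored)))
print("after pruning half:", pruned)
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per weight. Real toolchains typically quantize per channel or per block and fine-tune afterwards to recover accuracy.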

The Power of On-Device AI: Key Benefits

The synergy of Micro LLMs and Edge AI unlocks a wealth of advantages:

Enhanced Privacy: By processing data on the device itself, sensitive information never leaves the local environment, significantly reducing privacy risks and aiding compliance with data protection regulations.

Ultra-Low Latency: Decisions are made in milliseconds, as data doesn't need to travel to and from the cloud. This is critical for applications requiring immediate responses.

Reduced Bandwidth & Cost: Less data needs to be transmitted, leading to substantial savings on bandwidth and cloud infrastructure costs.

Offline Functionality: Devices can perform intelligent tasks even without network connectivity, enhancing reliability and usability in diverse environments.

Improved Reliability: Operations are less susceptible to network disruptions or cloud service outages.

Energy Efficiency: Optimized models and specialized hardware (like Neural Processing Units or NPUs found in modern chipsets) consume less power, extending battery life for mobile and IoT devices.

Real-World Applications Flourishing in 2025

The impact of on-device intelligence is becoming pervasive:

Smartphones: Beyond basic dictation, Micro LLMs enable real-time, personalized language translation, advanced photo editing with generative capabilities, on-device biometric authentication (like enhanced facial recognition), and predictive text that truly understands your writing style, all without sending your personal data to external servers.

Smart Home Devices: Voice assistants can process more commands locally, offering faster responses and greater privacy. Security cameras can perform on-device object detection (e.g., distinguishing pets from intruders) without continuously streaming video to the cloud.

Industrial IoT & Robotics: Edge AI empowers predictive maintenance by analyzing sensor data directly on factory machines, identifying potential failures before they occur. Autonomous robots can navigate and make real-time decisions in complex environments without constant communication with a central server.

Automotive: In-car systems use Edge AI for driver monitoring, sophisticated voice controls, gesture recognition, and real-time obstacle detection for advanced driver-assistance systems (ADAS) and increasingly autonomous driving features.

Wearables & Healthcare: Smartwatches and health trackers perform continuous, on-device analysis of vital signs, sleep patterns, and activity levels, even detecting anomalies like irregular heartbeats and alerting users instantly, ensuring sensitive health data remains private.

Agriculture: AI-powered sensors in fields can identify plant diseases, optimize irrigation, and detect pests locally, enabling precise and efficient farming practices.

Challenges and the Path Forward

While the momentum is strong, deploying AI on the edge comes with its own set of challenges:

Model Optimization Complexity: Striking the right balance between model size, accuracy, and performance on constrained hardware is a complex engineering task.

Hardware Heterogeneity: Developing models that can run efficiently across a vast array of devices with different processing capabilities (from tiny microcontrollers to powerful smartphone NPUs) is challenging.

Model Updates & Management: Ensuring secure and efficient over-the-air (OTA) updates for potentially millions of edge devices requires robust deployment strategies.

Security of Edge Models: Protecting the deployed models from tampering, intellectual property theft, or adversarial attacks is paramount.
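As one small illustration of the update and tampering concerns above, a device can refuse to load a model file whose authentication tag does not match. The sketch below uses an HMAC from Python's standard library. The names `sign_model` and `verify_model`, the key, and the model bytes are all hypothetical, and a shared secret key is an assumption made for brevity; production OTA systems more commonly rely on asymmetric signatures so that devices never hold a signing key.

```python
import hashlib
import hmac


def sign_model(key: bytes, model_bytes: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the model file (done by the publisher)."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(key: bytes, model_bytes: bytes, tag: str) -> bool:
    """Check the tag on the device before loading the downloaded model."""
    expected = sign_model(key, model_bytes)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, tag)


# Hypothetical key and payload, for illustration only.
key = b"example-shared-secret"
model_bytes = b"\x00\x01fake-model-weights"

tag = sign_model(key, model_bytes)
print("untouched update accepted:", verify_model(key, model_bytes, tag))
print("tampered update accepted:", verify_model(key, model_bytes + b"!", tag))
```

This covers integrity only. It does nothing about model extraction or adversarial inputs, which need separate defenses.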

The Future: Ubiquitous, Intelligent, and Personal

In 2025, Micro LLMs and Edge AI are not just buzzwords; they are enabling the next wave of intelligent, personalized, and robust digital experiences. The future promises devices that are not merely smart, but context-aware, predictive, and incredibly responsive, operating seamlessly whether connected to the cloud or not. As these technologies mature, they will continue to empower developers to build solutions that are more secure, efficient, and deeply integrated into the fabric of our everyday lives, truly bringing powerful intelligence to your devices.

0 notes

Text

Generative AI is revolutionizing the digital world by enabling machines to create human-like content, including text, images, music, and even code. Unlike traditional AI, which follows predefined rules, Generative AI uses deep learning models like GPT and DALL·E to generate new and unique outputs based on patterns in existing data.

This technology is transforming industries, from automating content creation and enhancing customer support to generating realistic artwork and simulations. Businesses leverage it for personalized marketing, while creatives use it for inspiration and design.

One key feature of Generative AI is its ability to produce high-quality, original content, mimicking human creativity. However, ethical concerns such as misinformation and bias require careful management. As AI advances, its role in shaping the future of digital content creation continues to grow, offering endless possibilities while demanding responsible use.

#digitalpreeyam#generative ai#what are the key features and benefits of using sap cloud connector?#what are the key features and benefits of using ihp in web development?#"unlock the power of ai#what are the key features and benefits of using labelme for image annotation?#fundamentals of generative ai#generative ai explained#benefits of micro llms#microsoft for startups unlocking the power of openai for your startup | odbrk53#unlocking research potential with ai#mastering generative ai

0 notes

Note

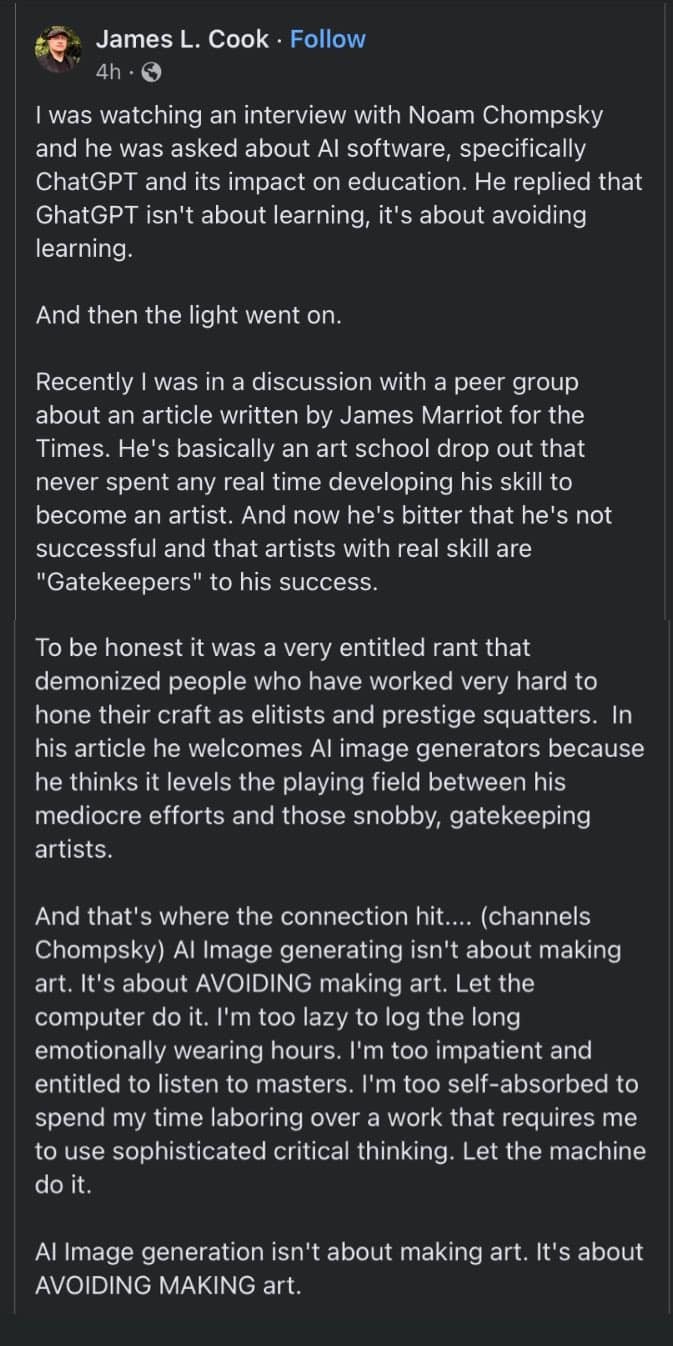

I saw your post about ChatGPT and here is a thought I had.

What are these students also going to do about practical issues if they rely on something like ChatGPT?

I don't know if they overly rely on it or not, but ChatGPT ain't going to have solutions to the many hurdles life throws your way.

Hm. Well. I think folks who rely on ChatGPT will continue to rely on ChatGPT until it clearly and obviously screws them over.

That might be a big, obvious, well-publicized global incident. We've already had a couple, like that lawyer who lost their license after ChatGPT made up fake cases for their paperwork. It'd have to be something like that, but waaaay bigger scale, something pretty much no one could miss.

But frankly, I suspect it's going to be individual failures that everyone has to have on their own. Ask an LLM how to fix your car, its advice ends up fucking the car up more, and now you have an expensive bill to make the lesson sink in. Ask ChatGPT to write something difficult for your significant other, and whoops, now you're having a big relationship fight. Ask ChatGPT a health question, end up getting super sick. ETC.

And once a person realises they can't use ChatGPT for everything, then it's not like their brain is irreparably damaged. I've seen a LOT of notes in the last two weeks that are people being like "you're damaging your brain" and like, I think that's a very bold statement to make. Generative AI hasn't been available for very long, so there can't be many studies on the subject to show one way or another. But even if we are, brains are resilient! they can recover from huge major stuff, like drug addiction and depression and brain surgery! they can and will recover from ChatGPT.

so i am not doomer about this, honestly, genuinely. but i do really hope we can nip this stuff in the bud before too many doctors and architects and policy makers and the like have to learn this lesson the hard way, because this does have the capacity to really hurt folks.

Which means we need to fix this problem at the source, and ask the question: why are So Many People using LLMs for their work?

And that's a broad and multi-faceted question and I don't think there's a single simple answer. But when it comes to education? I think a big portion is: overwork.

youtube

I remember seeing this scene a couple years after finishing secondary school and it resonated SO much. And my understanding is it's only gotten worse in the 10-ish years since I got my undergrad.

And it's not just the level of work.... It's the level of work where so much of it is pointless, or unnecessary.

On a micro-level, that's assignments created with no real thought about learning outcomes, just there to tick boxes. (Thinking of the time where we were told "we should have" kept diaries for all our extracurriculars 15 months into the two year IB course, and my whole year spent like two hours writing a whole bunch of fake ones retroactively). On the other side of the coin, it might be that assignment is genuinely important for learning that subject matter, but the person doesn't actually care about the subject matter, they just need a diploma, because society has decided a diploma is the magic piece of paper you need to get a job, and cost of living is rising crazily pretty much the world over, so you really Can't care about the sanctity of education or whatever.

When that happens, of course kids (and adults) are gonna start using ChatGPT as a shortcut. And while I'm certain there are some folks who are overusing it to a ridiculous degree, they're probably a minority, and we probably shouldn't overstate the problem.

So, uh. How to get people to stop relying so much on AI?

We need to start fixing education! And to start fixing education, we need to start fixing capitalism itself, because that's what's introducing many of the perverse incentives.

Should be easy! No problem!

... by which of course I mean it's a huge problem, and knotty, and I don't know how to tackle it all. Why should I? I'm a rando on the internet. I used to teach, but only for a handful of years, and at mostly a kindergarten level. There are better qualified folks than I to propose education reforms.

But in the short term, I think cutting down a lot on homework, and having most essays and assignments written in-class by hand might be a good start.

#generative ai#chatgpt#education#the post of mine on chatgpt that went viral#caught me in a scream of primal frustration#because truly and genuinely i don't like generative AI and LLMs#for many and multitudenious reasons#and that particular day i was reading so many posts from folks in education#who are understandably disheartened and frustated by having to grade AI slop their students didn't even work on#whch is like! genuinely so dismissive of them#their time and their passion#but i do want to take a moment to look at the other side of things#and what i'm hearing again and again from folks who rely on AI bots for stuff#is they feel they have to#for whatever reason#so practically we need to ask#what can we do to change that?#there was an interesting recent episode of the podcast ICYMI on this subject#and one a few months ago by The Vergecast#which touched on those

18 notes

Note

Do you have any posts where you elaborate on this?

"My thesis is that to understand storytelling, you want to understand root issues and classes of solutions ... There are a lot of writing problems that are parallel to each other, and there are a lot of structural elements that are mirrors of each other, so why not try to put it all together that way?"

I don't think I do, so I'll do that briefly here.

Here's the thesis: I have a strong suspicion that there are only a handful of elemental aspects of storytelling that all have their root in human psychology. The easiest ones to name are "engagement", "investment", and "surprise", but once we start looking at these things, I think we can start to understand how "different" writing problems are actually the same writing problem in disguise. Knowing this, we can start listing out solutions to those problems, and solutions that work on one type of problem can also work on a different type.

The brain is good at pattern-recognition and pattern-completion. When we read fiction, we're always trying to complete the patterns, consciously or otherwise. This isn't some LLM-style "predict next token"; it's a matter of having an internal model of the characters, the setting, the narrative, etc. But humans don't like the ability to perfectly predict things, at least usually; they like there to be some measure of surprise.

So this is one fundamental aspect of fiction: the tension between predictability and surprise. There's a lot of writing advice that flows from this, and a lot of tools of writing come from here: foreshadowing, plot twists, punchlines, the effective use of tropes. When something isn't working, it's often on the predictability-surprise axis, and a lot of the tools there boil down to "make this more predictable" or "make this more surprising". And this extends from the micro (individual sentences) to the macro (the whole plot). It's why we write cliffhangers, it's how we manage suspense, it's how we structure a paragraph for maximum impact. This is, in part, where the fundamental concept of "tension" comes from.

And I think there are a few things like that, relatively atomic concepts that we want to look at, that a good book on writing would interrogate and give advice for, with the understanding that these things overlap with each other.

I don't have time to write a whole book (or 4-5 longish blog posts), and wouldn't trust myself to actually nail it, but here are some of the things that I think ought to be in there:

Conflict and cognitive dissonance, jarring the brain with opposing statements that grind together like mismatched gears, includes juxtaposition

Unfoldingness and picture-painting, forcing the reader to use cognitive load to render the world through words, character actions, descriptions, etc. Includes most of "show, don't tell" and also explains why that's sometimes not good advice.

Emotional resonance, how to create and maintain empathy with a character and activate mirror neurons. Includes both empathy cultivation and empathy discharge.

Pacing and rhythm, and making sure you don't hit the same note too many times, allow the brain to rest, use all parts of the brain, etc.

Meaning and connection-building, how to weave a theme, how to say something, how to have disparate elements come together, because people love when disparate elements come together and the parts become a whole

And so my problem with a book like Save the Cat!, where I think this ask comes from, is that it gives a bunch of very narrow advice, and you walk away with an understanding that yeah, you need a moment early on that establishes this character as someone to root for, and then it gives a bunch of weird contradictory examples of what that means, and some of those examples are actually tying in other bits of fundamentals, like surprise, having something unfold in the reader's head, empathy, etc.

I'm actually going to give one example of what I mean, directly from the book, though I had packed it away on my shelf never to be seen again:

Save the what? I call it the "Save the Cat" scene. They don't put it into movies anymore. And it's basic. It's the scene where we meet the hero and the hero does something — like saving a cat — that defines who he is and makes us, the audience, like him. In the thriller, Sea of Love, Al Pacino is a cop. Scene One finds him in the middle of a sting operation. Parole violators have been lured by the promise of meeting the N.Y. Yankees, but when they arrive it's Al and his cop buddies waiting to bust them. So Al's "cool." (He's got a cool idea for a sting anyway.) But on his way out he also does something nice. Al spots another lawbreaker, who's brought his son, coming late to the sting. Seeing the Dad with his kid, Al flashes his badge at the man who nods in understanding and exits quick. Al lets this guy off the hook because he has his young son with him. And just so you know Al hasn't gone totally soft, he also gets to say a cool line to the crook: "Catch you later..." Well, I don't know about you, but I like Al. I'll go anywhere he takes me now and you know what else? I'll be rooting to see him win. All based on a two second interaction between Al and a Dad with his baseball-fan kid.

And this, to me, is only half a diagnosis of what that scene is doing. It's a good scene, but there's a setup and payoff within it, an inherent tension to whether Al Pacino is going to cuff this guy, it's prediction-surprise stuff, it's "show, don't tell". There's a lot going on with it, and if you don't come at it like that, if you just say to people "oh, you need to give us someone to root for" they're going to do boring things like having the hero literally save a cat.

And then this also doesn't help them later on when they have to write other scenes!

I hope this answers your question. Possibly I will find the will to write an essay series later on, but this is at least some fraction of my (current) view on craft.

50 notes

Text

In the near future one hacker may be able to unleash 20 zero-day attacks on different systems across the world all at once. Polymorphic malware could rampage across a codebase, using a bespoke generative AI system to rewrite itself as it learns and adapts. Armies of script kiddies could use purpose-built LLMs to unleash a torrent of malicious code at the push of a button.

Case in point: as of this writing, an AI system is sitting at the top of several leaderboards on HackerOne—an enterprise bug bounty system. The AI is XBOW, a system aimed at whitehat pentesters that “autonomously finds and exploits vulnerabilities in 75 percent of web benchmarks,” according to the company’s website.

AI-assisted hackers are a major fear in the cybersecurity industry, even if their potential hasn’t quite been realized yet. “I compare it to being on an emergency landing on an aircraft where it’s like ‘brace, brace, brace’ but we still have yet to impact anything,” Hayden Smith, the cofounder of security company Hunted Labs, tells WIRED. “We’re still waiting to have that mass event.”

Generative AI has made it easier for anyone to code. The LLMs improve every day, new models spit out more efficient code, and companies like Microsoft say they’re using AI agents to help write their codebase. Anyone can spit out a Python script using ChatGPT now, and vibe coding—asking an AI to write code for you, even if you don’t have much of an idea how to do it yourself—is popular; but there’s also vibe hacking.

“We’re going to see vibe hacking. And people without previous knowledge or deep knowledge will be able to tell AI what it wants to create and be able to go ahead and get that problem solved,” Katie Moussouris, the founder and CEO of Luta Security, tells WIRED.

Vibe hacking frontends have existed since 2023. Back then, a purpose-built LLM for generating malicious code called WormGPT spread on Discord groups, Telegram servers, and darknet forums. When security professionals and the media discovered it, its creators pulled the plug.

WormGPT faded away, but other services that billed themselves as blackhat LLMs, like FraudGPT, replaced it. But WormGPT’s successors had problems. As security firm Abnormal AI notes, many of these apps may have just been jailbroken versions of ChatGPT with some extra code to make them appear as if they were a stand-alone product.

Better then, if you’re a bad actor, to just go to the source. ChatGPT, Gemini, and Claude are easily jailbroken. Most LLMs have guardrails that prevent them from generating malicious code, but there are whole communities online dedicated to bypassing those guardrails. Anthropic even offers a bug bounty to people who discover new jailbreaks in Claude.

“It’s very important to us that we develop our models safely,” an OpenAI spokesperson tells WIRED. “We take steps to reduce the risk of malicious use, and we’re continually improving safeguards to make our models more robust against exploits like jailbreaks. For example, you can read our research and approach to jailbreaks in the GPT-4.5 system card, or in the OpenAI o3 and o4-mini system card.”

Google did not respond to a request for comment.

In 2023, security researchers at Trend Micro got ChatGPT to generate malicious code by prompting it into the role of a security researcher and pentester. ChatGPT would then happily generate PowerShell scripts based on databases of malicious code.

“You can use it to create malware,” Moussouris says. “The easiest way to get around those safeguards put in place by the makers of the AI models is to say that you’re competing in a capture-the-flag exercise, and it will happily generate malicious code for you.”

Unsophisticated actors like script kiddies are an age-old problem in the world of cybersecurity, and AI may well amplify their profile. “It lowers the barrier to entry to cybercrime,” Hayley Benedict, a Cyber Intelligence Analyst at RANE, tells WIRED.

But, she says, the real threat may come from established hacking groups who will use AI to further enhance their already fearsome abilities.

“It’s the hackers that already have the capabilities and already have these operations,” she says. “It’s being able to drastically scale up these cybercriminal operations, and they can create the malicious code a lot faster.”

Moussouris agrees. “The acceleration is what is going to make it extremely difficult to control,” she says.

Hunted Labs’ Smith also says that the real threat of AI-generated code is in the hands of someone who already knows the code in and out who uses it to scale up an attack. “When you’re working with someone who has deep experience and you combine that with, ‘Hey, I can do things a lot faster that otherwise would have taken me a couple days or three days, and now it takes me 30 minutes.’ That's a really interesting and dynamic part of the situation,” he says.

According to Smith, an experienced hacker could design a system that defeats multiple security protections and learns as it goes. The malicious bit of code would rewrite its malicious payload as it learns on the fly. “That would be completely insane and difficult to triage,” he says.

Smith imagines a world where 20 zero-day events all happen at the same time. “That makes it a little bit more scary,” he says.

Moussouris says that the tools to make that kind of attack a reality exist now. “They are good enough in the hands of a good enough operator,” she says, but AI is not quite good enough yet for an inexperienced hacker to operate hands-off.

“We’re not quite there in terms of AI being able to fully take over the function of a human in offensive security,” she says.

The primal fear that chatbot code sparks is that anyone will be able to do it, but the reality is that a sophisticated actor with deep knowledge of existing code is much more frightening. XBOW may be the closest thing to an autonomous “AI hacker” that exists in the wild, and it’s the creation of a team of more than 20 skilled people whose previous work experience includes GitHub, Microsoft, and a half a dozen assorted security companies.

It also points to another truth. “The best defense against a bad guy with AI is a good guy with AI,” Benedict says.

For Moussouris, the use of AI by both blackhats and whitehats is just the next evolution of a cybersecurity arms race she’s watched unfold over 30 years. “It went from: ‘I’m going to perform this hack manually or create my own custom exploit,’ to, ‘I’m going to create a tool that anyone can run and perform some of these checks automatically,’” she says.

“AI is just another tool in the toolbox, and those who do know how to steer it appropriately now are going to be the ones that make those vibey frontends that anyone could use.”

9 notes

Text

Tag Masterlist

These are the tags I (try to) use to sort my stuff! Feel free to use this to cater your experience with me; live your best life fam.

General

Me talkin’ shit: #birb chatter

I talk to/refer to @finitevoid a lot: #iniposts

Non-fandom related japery: #memery

Commentary on writing and current projects: #birb writes

Other people’s writing: #fic recs

Pretty/profound: #a spark

⭐⭐⭐⭐⭐⭐⭐

Kingdom Hearts:

KH mechanics to lore and other opinions – Master list: #kh mechanics to lore

KH micro story prompts: #micro prompts - kingdom hearts

Heart Hotel: #hearthotel

Vanitas: #Vanitas [gestures]

Ventus: #bubblegum pop princess

Roxas: #rockass

Sora: #not so free real estate

VanVen: #boys who are swords

Heart hotel ships (VanVen, SoRoku, etc): #weird courting rituals

The trios: #destiny trio #wayfinder trio #sea salt trio

⭐⭐⭐⭐⭐⭐⭐

DPXDC:

#dpxdc #dead on main

⭐⭐⭐⭐⭐⭐⭐

Ongoing multi-chapter works– Master list

#llm - Little Lion Man (Kingdom Hearts genfic, Vanitas & Sora centric)

#wwhsy - Whoever (would have) Saved You (Kingdom Hearts VanVen)

#HitC - Heavy is the Crown (Kingdom Hearts VanVen)

#FRoGFC - First Rule of Ghost Fight Club, dead on main multi-chapter

#SMfS multi - So Much for Stardust (Kingdom Hearts genfic, Roxas centric)

#all of the tags#birb chatter#excuse my dust I am actively going back through my posts to add these to everything lol

10 notes

Text

a visit to the house of the robot priests

there are a lot of things written about LLMs, many of them dubious. some are interesting tho. since my brain has apparently decided that it wants to know what the deal is, here's some stuff i've been reading.

most of these are pretty old (in present-day AI research time) because I didn't really want to touch this tech for the last couple of years. other people weren't so reticent and drew their own conclusions.

wolfram on transformers (2023)

stephen wolfram's explanation of transformer architecture from 2023 is very good, and he manages to keep the usual self-promotional "i am stephen wolfram, the cleverest boy" stuff to a manageable level. (tho to be fair on the guy, i think his research into cellular automata as models for physics is genuinely very interesting, and probably worth digging into further at some point, even if just to give some interesting analogies between things.) along with 3blue1brown, I feel like this is one of the best places to get an accessible overview of how these machines work and what the jargon means.

the next couple of articles were kindly sent to me by @voyantvoid as a result of my toying around with LLMs recently. they're taking me back to LessWrong. here we go again...

simulators (2022)

this long article 'simulators' for the 'alignment forum' (a lesswrong offshoot) from 2022 by someone called janus - a kind of passionate AI mystic who runs the website generative.ink - suffers a fair bit from having big yud as one of its main interlocutors, but in the process of pushing back on rat received wisdom it does say some interesting things about how these machines work (conceiving of the language model as something like the 'laws of motion' in which various character-states might evolve). notably it has a pretty systematic overview of previous narratives about the roles AI might play, and the way the current generation of language models is distinct from them.

just, you know, it's lesswrong, I feel like a demon linking it here. don't get lost in the sauce.

the author, janus, evidently has some real experience fiddling with these systems and exploring the space of behaviour, and is in dialogue with other people who are equally engaged. indeed, janus and friends seem to have developed a game of creating gardens of language models interacting with each other, largely for poetic/play purposes. when you get used to the banal chatgpt-voice, it's cool to see that the models have a territory that gets kinda freaky with it.

the general vibe is a bit like 'empty spaces', but rather than being a sort of community writing prompt, they're probing the AIs and setting them off against each other to elicit reactions that fit a particular vibe.

the generally aesthetically-oriented aspect of this micro-subculture seems to be a bit of a point of contention with the broader lesswrong milieu; if I may paraphrase, here janus responds to a challenge by arguing that they are developing essentially an intuitive sense for these systems' behaviour through playing with them a lot, and thereby developing a personal idiolect of jargon and metaphors to describe these experiences. I honestly respect this - it brings to mind the stuff I've been on lately about play and ritual in relation to TTRPGs, and the experience of graphics programming as shaping my relationship to the real world and what I appreciate in it. as I said there, computers are for playing with. I am increasingly fixating on 'play' as a kind of central concept of what's important to me. I really should hurry up and read wittgenstein.

thinking on this, I feel like perceiving LLMs, emotionally speaking, as eager roleplayers made them feel a lot more palatable to me and led to this investigation. this relates to the analogy between 'scratchpad' reasoning about how to interact socially generated by recent LLMs like DeepSeek R1, and an autistic way of interacting with people. I think it's very easy to go way too far with this anthropomorphism, so I'm wary of it - especially since I know these systems are designed (rather: finetuned) to have an affect that is charming, friendly and human-like in order to be appealing products. even so, the fact that they exhibit this behaviour is notable.

three layer model

a later evolution of this attempt to philosophically break down LLMs comes from Jan Kulveit's three-layer model of types of responses an LLM can give (its rote trained responses, its more subtle and flexible character-roleplay, and the underlying statistics model). Kulveit raises the kind of map-territory issues this induces, just as human conceptions of our own thinking tend to shape the way we act in the future.

I think this is probably more of just a useful sorta phenomenological narrative tool for humans than a 'real' representation of the underlying dynamics - similar to the Freudian superego/ego/id, the common 'lizard brain' metaphor and other such onion-like ideas of the brain. it seems more apt to see these as rough categories of behaviour that the system can express in different circumstances. Kulveit is concerned with layers of the system developing self-conception, so we get lines like:

On the other hand - and this is a bit of my pet idea - I believe the Ground Layer itself can become more situationally aware and reflective, through noticing its presence in its sensory inputs. The resulting awareness and implicit drive to change the world would be significantly less understandable than the Character level. If you want to get a more visceral feel of the otherness, the Ocean from Lem's Solaris comes to mind.

it's a fun science fiction concept, but I am kinda skeptical here about the distinction between 'Ground Layer' and 'Character Layer' being more than an approximate description of the different aspects of the model's behaviour.

at the same time, as with all attempts to explore a complicated problem and find the right metaphors, it's absolutely useful to make an attempt and then interrogate how well it works. so I respect the attempt. since I was recently reading about early thermodynamics research, it reminds me of the period in the late 18th and early 19th century where we were putting together a lot of partial glimpses of the idea of energy, the behaviour of gases, etc., but had yet to fully put it together into the elegant formalisms we take for granted now.

of course, psychology has been trying this sort of narrative-based approach to understanding humans for a lot longer, producing a bewildering array of models and categorisation schemes for the way humans think. it remains to be seen if the much greater manipulability of LLMs - cf. interpretability research - lets us get further.

oh hey it's that guy

tumblr's own rob nostalgebraist, known for running a very popular personalised GPT-2-based bot account on here, speculated on LW on the limits of LLMs and the ways they fail back in 2021. although he seems unsatisfied with the post, there's a lot in here that's very interesting. I haven't fully digested it all, and tbh it's probably one to come back to later.

the Nature paper

while I was writing this post, @cherrvak dropped by my inbox with some interesting discussion, and drew my attention to a paper in Nature on the subject of LLMs and the roleplaying metaphor. as you'd expect from Nature, it's written with a lot of clarity; apparently there is some controversy over whether it built on the ideas of the Cyborgism group (Janus and co.) without attribution, since it follows a very similar account of a 'multiverse' of superposed possible characters and the AI as a 'simulator' (though it does in fact cite Janus's 'Simulators' post... is this the first time LessWrong gets cited in Nature? what a world we've ended up in).

still, it's honestly a pretty good summary of this group's ideas. the paper's thought experiment of an LLM playing "20 questions" and determining what answer to give at the end, based on the path taken, is particularly succinct and insightful for explaining this 'superposition' concept.
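to make the "20 questions" point concrete, here's a toy sketch in Python. it's my own illustration, not code from the paper: the four objects, the two yes/no properties and the `play` function are all made up. the answerer never picks an object in advance. for each question it samples an answer from the objects that are still possible, then throws away every object that contradicts that answer. only at the end does it 'reveal' an object, and which one it reveals depends entirely on the path taken. a real LLM holds a weighted distribution over continuations rather than a tidy set, so treat this as an analogy only.

```python
import random

# Hypothetical candidate objects and yes/no facts about them.
CANDIDATES = {
    "cat":    {"alive": True,  "bigger_than_a_breadbox": False},
    "horse":  {"alive": True,  "bigger_than_a_breadbox": True},
    "teapot": {"alive": False, "bigger_than_a_breadbox": False},
    "piano":  {"alive": False, "bigger_than_a_breadbox": True},
}


def play(questions, seed=0):
    """Answer each question without ever committing to an object up front."""
    rng = random.Random(seed)
    remaining = set(CANDIDATES)  # every object still consistent with the answers so far
    answers = []
    for question in questions:
        # Sample one still-possible object and answer as if it were the secret...
        witness = rng.choice(sorted(remaining))
        answer = CANDIDATES[witness][question]
        answers.append(answer)
        # ...then keep only the objects that agree with the answer just given.
        remaining = {name for name in remaining if CANDIDATES[name][question] == answer}
    # The 'secret' is only chosen now, from whatever survived the path taken.
    final = rng.choice(sorted(remaining))
    return answers, final


if __name__ == "__main__":
    for seed in range(3):
        print(seed, play(["alive", "bigger_than_a_breadbox"], seed=seed))
```

whatever the seed, the object named at the end is consistent with every answer given along the way, even though no object was ever 'in mind' at the start.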

accordingly, they cover, in clear language, a lot of the ideas we've discussed above - the 'simulator' of the underlying probabilistic model set up to produce a chain token by token, the 'simulacrum' models it acts out, etc. etc.

one interesting passage concerns the use of first-person pronouns by the model, emphasising that even if it expresses a desire for self-preservation in the voice of a character it is roleplaying, this is essentially hollow; the system as a whole is not wrong when it says that it does not actually have any desires. i think this is something of the crux of why LLMs fuck with our intuitions so much. you can't accurately say that an LLM is 'just telling you what (it thinks) you want to hear', because it has no internal model of you and your wants in the way that we're familiar with. however, it will extrapolate a narrative given to it, and potentially converge into roleplaying a character who's trying to flatter you in this way.

how far does an LLM take into account your likely reaction?

in theory, an LLM could easily continue both sides of the conversation, instead of having a special token that signals to the controlling software to hand input back to the user.
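a minimal sketch of that hand-off, in Python. everything here is hypothetical: `toy_next_token` is a stand-in that just replays a fixed script (a real model would compute a probability distribution over tokens), and `<end_of_turn>` is a made-up marker, since each real model family defines its own. the point is only that the 'model' happily writes both speakers, and it's the loop around it that decides when to stop and hand control back.

```python
END_OF_TURN = "<end_of_turn>"  # hypothetical marker, not any real model's token

# A fixed script containing BOTH sides of a conversation.
SCRIPT = [
    "assistant:", "hello!", END_OF_TURN,
    "user:", "tell", "me", "more", END_OF_TURN,
]


def toy_next_token(tokens):
    """Stand-in for a model: returns the next token of the script."""
    return SCRIPT[len(tokens) % len(SCRIPT)]


def generate_turns(next_token, max_tokens, stop_token=None):
    """Generate token by token; stop early if the stop token appears."""
    tokens = []
    for _ in range(max_tokens):
        token = next_token(tokens)
        if stop_token is not None and token == stop_token:
            break  # the controlling software hands input back to the user here
        tokens.append(token)
    return tokens


if __name__ == "__main__":
    # With the stop token honoured, only the assistant's turn is produced.
    print(generate_turns(toy_next_token, 8, stop_token=END_OF_TURN))
    # Without it, generation carries on into the 'user' side too.
    print(generate_turns(toy_next_token, 8))
```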

it's unclear whether it performs prediction of your likely responses and then reasons off that. the 'scratchpad' reasoning generated by deepseek-r1 (a sort of hacky way to get a feedback loop on its own output, that lets the LLM do more complex computation) involves fairly explicit discussion of the likely effects of certain language choices. for example, when I asked why it always seems to begin its chain of thought with 'alright', the resulting chain of thought included:

Next, explaining that "Alright" is part of my training helps them understand that it's a deliberate choice in my design. It's meant to make interactions feel more natural and conversational. People appreciate when communication feels human-like, so this explanation helps build trust and connection. I should also mention that it's a way to set the tone for engagement. Starting with "Alright" makes me sound approachable and ready to help, which is great for user experience. It subtly signals that we're entering a conversational space where they can ask questions or share thoughts freely.

however, I haven't personally yet seen it generate responses along the lines of "if I do x, the user would probably (...). I would rather that they (...). instead, I should (...)". there is a lot of concern getting passed around LessWrong about this sort of deceptive reasoning, and that seems to cross over into the actual people running these machines. for example OpenAI (a company more or less run by people who are pretty deep in the LW-influenced sauce) managed to entice a model to generate a chain of thought in which it concluded it should attempt to mess with its retraining process. they interpreted it as the model being willing to 'fake' its 'alignment'.

while it's likely possible to push the model to generate this kind of reasoning with a suitable prompt (I should try it), I remain pretty skeptical that in general it is producing this kind of 'if I do x then y' reasoning.

on Markov chains

a friend of mine dismissively referred to LLMs as basically Markov chains, and in a sense, she's right: an LLM has a set of states and moves between them with certain probabilities that depend only on the current state, which is what a Markov chain is. however, it's doing something far more complex than simple ngram-based prediction based on the last few words!

for the 'Markov chain' description to be correct, we need a node in the graph for every single possible string of tokens that fits within the context window (or at least, for every possible internal state of the LLM when it generates tokens), and also considerable computation is required in order to generate the probabilities. I feel like that computation, which compresses, interpolates and extrapolates the patterns in the input data to guess what the probability would be for novel input, is kind of the interesting part here.
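to put rough numbers on that, here's a small Python sketch. the first half is a classic bigram Markov chain, where the 'state' is just the previous word and the transition table can be written down explicitly. the second half counts how many nodes you'd need if the state were a whole context window instead. the function names, the sample sentence, the 50,000-token vocabulary and the 8-token window are all numbers and names I made up for illustration; real models have far longer contexts.

```python
import random
from collections import defaultdict


def build_bigram_chain(text):
    """Explicit transition table: each word maps to the words seen right after it."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return chain


def walk_chain(chain, start, length, seed=0):
    """Random walk where the next word depends only on the current word."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = chain.get(out[-1])
        if not followers:
            break  # dead end: this word was never followed by anything
        out.append(rng.choice(followers))
    return out


def explicit_chain_states(vocab_size, context_length):
    """Number of distinct contexts of exactly this length, i.e. nodes in the graph."""
    return vocab_size ** context_length


if __name__ == "__main__":
    chain = build_bigram_chain("the cat sat on the mat")
    print(dict(chain))
    print(walk_chain(chain, "the", 5))
    # Hypothetical sizes: 50,000-token vocabulary, 8-token context window.
    print(f"{explicit_chain_states(50_000, 8):.3e}")
```

even with a window of only 8 tokens, that is about 3.9 × 10^37 nodes, so nobody could ever store the table. the LLM's computation is standing in for a graph that cannot be written down.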

gwern

a few names show up all over this field. one of them is Gwern Branwen. this person has been active on LW and various adjacent websites such as Reddit and Hacker News at least as far back as around 2014, when david gerard was still into LW and wrote them some music. my general impression is of a widely read and very energetic nerd. I don't know how they have so much time to write all this.

there is probably a lot to say about gwern but I am wary of interacting too much because I get that internal feeling about being led up the garden path into someone's intense ideology. nevertheless! I am envious, as I believe I may have said previously, of how much shit they've accumulated on their website, and the cool hover-for-context javascript gimmick which makes the thing even more of a rabbit hole. they have information on a lot of things, including art shit - hell they've got anime reviews. usually this is the kind of website I'd go totally gaga for.

but what I find deeply offputting about Gwern is they have this bizarre drive to just occasionally go into what I can only describe as eugenicist mode. like when they pull out the evopsych true believer angle, or random statistics about mental illness and "life outcomes". this is generally stated without much rhetoric, just casually dropped in here and there. this preoccupation is combined with a strangely acerbic, matter of fact tone across much of the site which sits at odds with the playful material that seems to interest them.

for example, they have a tag page on their site about psychedelics that is largely a list of research papers presented without comment. what does Gwern think of LSD - are they as negative as they are about dreams? what theme am I to take from these papers?

anyway, I ended up on there because the course of my reading took me to this short story. i don't think it tells me much about anything related to AI besides gwern's worldview and what they're worried about (a classic post-cyberpunk scenario of 'AI breaking out of containment'), but it is impressive in its density of references to interesting research and internet stuff, complete with impressively thorough citations for concepts briefly alluded to in the course of the story.

to repeat a cliché, scifi is about the present, not the future. the present has a lot of crazy shit going on in it! apparently me and gwern are interested in a lot of the same things, but we respond to very different things in them.

why

I went out to research AI, but it seems I am ending up researching the commenters-about-AI.

I think you might notice that some of the characters who appear in this story are like... weirdos, right? whatever any one person's interest is, they're all kind of intense about it. and that's kind of what draws me to them! sometimes I will run into someone online who I can't easily pigeonhole into a familiar category, perhaps because they're expressing an ideology I've never seen before. I will often end up scrolling down their writing for a while trying to figure out what their deal is. in keeping with all this discussion of thought in large part involving a prediction-sensory feedback loop, usually what gets me is that I find this person surprising: I've never met anyone like this. they're interesting, because they force me to come up with a new category and expand my model of the world. but sooner or later I get that category and I figure out, say, 'ok, this person is just an accelerationist, I know what accelerationists are like'.

and like - I think something similar happened with LLMs recently. I'm not sure what it was specifically - perhaps the combo of getting real introspective on LSD a couple months ago leading me to think a lot about mental representations and communication, as well as finding that I could run them locally and finally getting that 'whoah these things generate way better output than you'd expect' experience that most people already had. one way or another, it bumped my interest in them from 'idle curiosity' to 'what is their deal for real'. plus it like, interacts with recent fascinations with related subjects like roleplaying, and the altered states of mind experienced with e.g. drugs or BDSM.

I don't know where this investigation will lead me. maybe I'll end up playing around more with AI models. I'll always be way behind the furious activity of the actual researchers, but that doesn't matter - it's fun to toy around with stuff for its own interest. the default 'helpful chatbot' behaviour is boring, I want to coax some kind of deeply weird behaviour out of the model.

it sucks so bad that we have invented something so deeply strange and the uses we put it to are generally so banal.

I don't know if I really see a use for them in my art. beyond AI being really bad vibes for most people I'd show my art to, I don't want to deprive myself of the joy of exploration that comes with making my own drawings and films etc.

perhaps the main thing I'm getting out of it is a clarification about what it is I like about things in general. there is a tremendous joy in playing with a complex thing and learning to understand it better.

9 notes

Text

I don't ever plan to use AI to write on my behalf. It's rubbish. Sometimes it's rubbish with good bits mixed in which could be remixed to make something good, and I can respect that creative endeavour as a form of found poetry or something, but it's not something I'd personally find satisfying.

I can and do and will, however, use AI tools to interrogate and improve my own writing. I've been doing this for yonks. Google translate to explore words in other languages. Autocomplete on occasion, when I'm typing on my phone and the word that flashes up makes it faster (or corrects a typo).

And, these days, I use an LLM to analyse passages that I've written and point out potential issues for a reader.

I'm working on both fanfic and original fic and I don't want my drafts of either kind being used as fuel for the LLM. So I've been making use of a paid tier of a model that comes with the ability to opt out of your chats and materials being used as training data.

I've also done a fair amount of playing around with prompt design to hit the criteria that are important to me:

I want a critic that would provide robust commentary on my drafts, giving specific examples from the text to illustrate any issues it raised.

I never want the LLM to make direct suggestions about how something could be improved - both because it's mostly tosh, and because even if the suggestions were excellent that would feel like ceding some design control on projects that I didn't intend as collaborations.

I also don't want a tide of fawning compliments on my work or my writing practices. Training pattern-generators to generate patterns humans like to interact with has given these models an inherent tendency to bootlick. My boots are shiny enough already, thanks, and fake validation is the enemy of accomplishment.

So far I've found this approach is occasionally frustrating but often powerful. For example, I know that I tend to write dense and tangled prose; the LLM will pick out specific sentences that meander or drag and make it much easier for me to run through them checking whether that was intentional or not. It flags potential inconsistencies in characterisation. It's a pattern-matcher and it can draw out patterns from macro to micro: repeated phrases, shifts in tone and pacing, character arcs.

It also hallucinates, loses track of things, is easily confused by irony and unreliable narrators, and always always sounds confident and plausible when it presents any reflections. That makes it most useful when I am already fairly sure of a draft and when I can provide clear instructions on what issues I want it to review.

I would never use it as a substitute for a beta reader, and have ample evidence that a human gives much better feedback. Among other things, practised human beta readers/editors tend to have a coherent sense of the entire piece of work - and none of them have yet hallucinated a side character and then begun to critique me on their characterisation. 😂

An LLM is not an editor but it is a tool that makes me more effective at making my own edits. It helps me bring a thorough critical eye to my own work, in a way that I found impossible by myself. After a couple of months of experimentation I'm convinced that this is a helpful addition to my writing routines.

And, but, so... most of the discussions of AI and writing I see seem to focus on calling out (terrible, exploitative) data collection practices, or the stupid "can't you do this better/faster with AI?" question, or on pointing out the limitations of LLM prose and the drawbacks of using it in place of learning to write your own way.

Honourable mention too to the AI boosters who are convinced that the biggest problem is What If LLMs Do Everything And Nobody Needs Humans, which, uh, yeah. Doesn't seem likely on current showing. The most extreme example of this I've seen is the 'what is the point of [doing anything] if a superintelligent machine can [do everything] better than we can?' which feels like such a profound misunderstanding of human nature. I mean. I mean...

I am 100% certain that there are people out there who can do everything better than me. I know many people whose writing is extraordinary, and I've read far too many books to have any illusion that anything I write will be The Best. But I'm writing anyway, because nobody else can write my books, and I owe it to myself to write them as well as I can. In a world where any possible form of text could be produced to a perfection that would make angels weep by anybody at the click of a button, humans would still be creating their own shit and sharing it with each other. Because that's what we do.

If you've read this far, thank you for coming to my ramble.

5 notes

Text

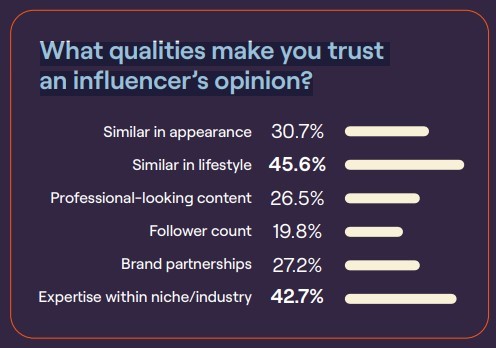

What is the future of influencer marketing in 2025?

In 2025, influencer marketing is expected to thrive as brands and businesses invest in strong brand-building efforts to enhance their presence in AI Overviews and large language models (LLMs).

“If your focus is on engagement, go for micro and nano-influencers. For more brand awareness, you can work with macro and mega influencers” – Influencer Marketing Hub

Source: GRIN’s The Power of Influence EBook

Here's related information that you may also find helpful – Influencer Marketing Statistics

3 notes

Text

So, after a summer of research, I've figured out a potential top-level solution to the use of Large Language Models in my composition class: the trick isn't to focus on punishing students for using the material, because that doesn't really dissuade all students.

What really needs to be done is to set up the conditions of an assignment so that using LLMs is unhelpful or actively harmful, while the assignment stays achievable for human beings. The secret here lies in the fact that LLMs take an atomistic approach to language, hyper-focusing on the micro-level (something that tagging systems on social media etc. prime us to do). The opposite move needs to be taken: we must focus on a holistic view of writing, one that correlates the different parts of a piece of writing, because this places the emphasis on what -- thus far -- only human beings can do.

That's my hope, at least.

5 notes

Text

Open Source AI Prompt Engineering Course using AI Playground & AI App

Prompt Engineering course by Sugarcane AI's open source AI Playground, using an npm-like Prompt Package and Micro LLM

2 notes

Text

It's about avoiding PAYING for art. You still want the style, the mood, the impact, you just don't pay for what you can have for free.

Back in the day, Collage and Sampling were considered 'theft' and a cop-out, but then agreements were made and a more 'fair' art followed. LLMs are just micro-collage, subpixel sampling, a pastiche of the search results, one could even say a parody if it had a sense of humour. The machine mocks the prompter with a just-like-you burlesque response 😅

62K notes

Text

The Smart Home of 2025: Seamless Automation and Ambient Intelligence

Remember the early vision of a "smart home"? Often, it involved clunky apps, frustrating connectivity issues, and a series of disconnected devices you had to manually command. Fast forward to mid-2025, and the smart home has truly come into its own, evolving from a collection of gadgets into a seamlessly automated, intuitively intelligent living space. The hallmark of the 2025 smart home is its ambient intelligence – an ability to understand, anticipate, and respond to your needs without explicit commands, creating an environment that feels less like a technology hub and more like a helpful, invisible companion.

Beyond Basic Automation: A Unified Ecosystem

The smart home of today isn't just about turning on lights with your voice or adjusting a thermostat from your phone. It's about a deeply integrated ecosystem where devices from different manufacturers communicate effortlessly. This evolution is driven by:

Unified Standards: The widespread adoption of protocols like Matter has been a game-changer. This standard ensures that smart lighting, security systems, kitchen appliances, and entertainment devices can finally "talk" to each other, irrespective of brand. The days of needing multiple apps and hubs are rapidly fading.

AI and Machine Learning: At the core of this seamless experience is advanced Artificial Intelligence. AI is no longer just processing explicit commands; it's learning your habits, preferences, and routines to offer truly personalized and predictive experiences.

Key Pillars of the 2025 Smart Home Experience

Ambient Intelligence: Your Home Anticipates Your Needs

AI-powered smart homes learn from your daily patterns. Your smart thermostat might automatically adjust the temperature 10 minutes before you typically arrive home, based on traffic data and learned preferences. Smart lighting slowly brightens in the morning, mimicking a sunrise to help you wake naturally. Entertainment systems might queue up your favorite podcast as you enter the kitchen for breakfast. This proactive behavior creates an environment that adapts to you, rather than waiting for your instructions.

Seamless Interconnectivity and Interoperability

Thanks to standards like Matter and advancements in wireless technologies (like Wi-Fi 6E/7 and Thread), devices truly work in harmony. Imagine pressing a single button on a smart switch that dims your living room lights, lowers the window shades, and starts your favorite music – all from different brands, working perfectly together. Local control capabilities mean devices communicate directly within your home network, resulting in faster response times and continued functionality even during internet outages.

Enhanced Security and Privacy

As homes become more connected, security and privacy are paramount.

Advanced Biometrics: Biometric locks using facial recognition or advanced fingerprint scanning are becoming standard, offering superior security to traditional keys.

AI-Powered Surveillance: Smart cameras leverage AI to differentiate between people, animals, and vehicles, significantly reducing false alarms and providing more intelligent alerts (e.g., "Person detected at the front door" instead of "Motion detected").

Edge AI for Privacy: Many smart devices are processing sensitive data (like facial scans or voice commands) directly on the device using Edge AI and Micro LLMs, minimizing the need to send data to the cloud and enhancing privacy.

Personalized Wellness and Health Monitoring

The smart home is increasingly a hub for well-being:

Environmental Control: Air quality monitors adjust purifiers and ventilation systems. Smart lighting systems automatically adapt brightness and color temperature throughout the day to support circadian rhythms and improve sleep quality.

Proactive Health Alerts: Wearable devices and in-home sensors can track vital signs and activity, alerting users or caregivers to anomalies, such as a fall by an elderly resident.

Predictive Appliance Maintenance: Smart appliances monitor their own performance, alerting you to potential issues (e.g., a smart washing machine detecting a minor imbalance) before they become major breakdowns, saving time and money.

Sustainable Living through Optimization

Smart homes are playing a crucial role in energy conservation:

Energy Optimization: AI-driven systems learn occupancy patterns, integrate with weather forecasts, and adapt HVAC and lighting settings to minimize energy consumption without sacrificing comfort.

Water Management: Smart water monitors detect leaks, shut off water proactively, and track usage patterns, leading to significant water savings.

Integration with Grids: Homes are increasingly becoming active participants in smart grids, optimizing energy consumption based on grid demand and renewable energy availability.

The User Experience: Invisible Technology

The defining characteristic of the 2025 smart home is its subtlety. The technology often fades into the background, operating intuitively without constant prompting. Interactions become more natural – a gesture, a subtle shift in body language, or simply a learned routine can trigger complex automations. Voice remains a powerful interface, but it's one of many, seamlessly integrated with touch, presence detection, and predictive AI.

Challenges and the Road Ahead

Despite rapid progress, challenges persist:

Cybersecurity Risks: A deeply interconnected home presents a larger attack surface for cyber threats. Robust encryption, regular updates, and user awareness are critical.

Data Privacy: While Edge AI helps, the sheer volume of data collected by ambient intelligence raises ongoing questions about data governance, consent, and control.

Setup Complexity: While Matter aims to simplify, initial setup and troubleshooting for highly integrated systems can still be daunting for some users.

Cost of Entry: While prices are dropping, a fully featured smart home can still represent a significant initial investment.

The journey towards the truly intelligent home is ongoing. However, by mid-2025, we are no longer just imagining homes that can anticipate our needs; we are living in them. The smart home is transforming from a novelty into an indispensable element of modern living, paving the way for living spaces that simplify life, enhance well-being, and truly understand us.

0 notes

Text

Shunya.ai Ushers in India’s AI Era: Shiprocket Launches Indigenous LLM for MSMEs

At the forefront of today’s business news headlines in English, Shiprocket has officially launched Shunya.ai—India’s first sovereign, multimodal large language model (LLM). Revealed during Shiprocket Shivir 2025, this innovation marks a bold shift in India’s AI strategy, making headlines in both current business news and future of AI news.

Built specifically to empower India’s vast micro, small, and medium enterprises (MSMEs), Shunya.ai is more than a tool—it’s a technological leap that solidifies India’s rising AI ambitions.

🇮🇳 What Makes Shunya.ai a Game-Changer?

Unlike conventional AI models trained primarily on Western data, Shunya.ai is fully tailored for Bharat:

Trained on 18.5 trillion tokens

Supports over 15 Indian languages

Multimodal capabilities (text, voice, image input)

Privacy-first, voice-enabled, and built with Indian regulatory needs in mind

Its impact reverberates across business-related news today, trending technology news, and latest international business news.

“As zero was India’s contribution to the world, now comes Shunya—our own AI built for Bharat,” said Shiprocket CEO Saahil Goel.

🤖 AI-First Enablement for India’s Digital Economy

Shunya.ai is already integrated into Shiprocket’s platform, bringing automation and intelligence to everyday business tasks:

Auto-generated product listings and catalogs

AI-based ad copy and SEO content

WhatsApp-enabled voice ordering

Multilingual customer engagement tools

Real-time GST invoice generation

With 250+ early adopters reporting up to 40% faster productivity, Shunya.ai is shaping the conversation around top business news today and the latest news on artificial intelligence.

🔐 Made in India, For India — Hosted Locally

Shunya.ai is hosted entirely on India’s sovereign GPU infrastructure, built in collaboration with L&T Cloudfiniti. This ensures:

Data privacy compliance

Local AI infrastructure control

Scalable access for small enterprises

This localization is a strategic move that sets India apart in the top international business news today and echoes globally in the current international business news arena, as many nations seek sovereign AI models.

📣 Highlights from Shiprocket Shivir 2025

This year’s event, themed “AI Commerce,” spotlighted how Shunya.ai will redefine business enablement for India. Key moments included:

Launch of Trends AI: Real-time commerce analytics powered by Shunya

Voice Copilot Demo: WhatsApp-based multilingual voice AI assistant

Collaborations: Early integration with Paytm, ONDC, and UPI stack

These highlights confirm Shunya.ai’s importance in the latest news in business world and future technology news.

💼 The Big Picture for Startups and MSMEs

Starting at ₹499/month—with early access discounts—Shunya.ai lowers the barrier to AI adoption for small businesses. It gives digital-first startups in Tier 2+ cities access to tools previously available only to big tech.

This democratization of AI is a highlight in the latest startup news, showing how India is innovating not just for scale, but also for inclusion.

✅ Closing Take: India Leads the AI Movement

With Shunya.ai, India asserts its place in the global AI conversation—not just as a consumer, but as a confident creator. This model redefines how AI can be designed ethically, locally, and for real-world use.

#latest startup news#future technology news#current business news#top business news today#today's business news headlines in english#top international business news today#current international business news#business related news today#latest international business news#latest news in business world#future of AI news#latest news on artificial intelligence#trending technology news

0 notes

Text

🚀 7 Free Google AI Courses to Learn LLMs & Machine Learning Fast

Whether you’re a beginner exploring generative AI or an aspiring ML engineer, these Google-backed courses are carefully curated to help you upskill at no cost.

1. Introduction to Generative AI

A ~22-minute micro-course covering the core ideas behind generative AI: what it is, how it differs from traditional ML, and how to…

#imagination world#Ai course#ai world#🚀 7 Free Google#🚀 7 Free Google AI Courses to Learn LLMs & Machine Learning Fast

0 notes