#oauth2 authentication flow


Building a Scalable, Secure, and Efficient System

Agritech Platform

A comprehensive Seed-to-Sale Cannabis ERP solution designed to streamline operations for businesses within the cannabis industry. It offers tools for regulatory compliance, sales enhancement, production efficiency, and inventory management, with features like regulatory software integration, dynamic demand alerts, and real-time inventory updates. It’s tailored for cultivators, manufacturers, and vertically integrated businesses, promising visibility, control, and streamlined workflows across multiple sites and operations.

UX Audit

Task Flow Optimization

Navigational structure Redesign

Wireframing

Visual Design

The Engagement

Feature Gating Based on Pricing Plans

Plan Definition with Dynamic UI Rendering: Created multiple pricing plans (e.g., Basic, Pro, Enterprise), each offering a distinct set of features / limitations. Adjusted the UI dynamically to show or hide features based on the user's plan.

Feature Flags: Used feature flags to enable or disable features dynamically based on the user's plan. This was managed using a centralized feature flagging service.
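
As a rough illustration of plan-based gating, the check can be reduced to a lookup from plan to allowed features. This is a minimal sketch, not the project's actual flag service: the plan names come from the text above, while the feature names and the `is_enabled` and `visible_features` helpers are hypothetical.

```python
# Hypothetical plan-to-feature mapping; plan names are from the text,
# feature names are invented for illustration.
PLAN_FEATURES = {
    "basic": {"inventory"},
    "pro": {"inventory", "reports"},
    "enterprise": {"inventory", "reports", "multi_site"},
}


def is_enabled(plan: str, feature: str) -> bool:
    """Return True if the plan includes the feature; unknown plans get nothing."""
    return feature in PLAN_FEATURES.get(plan.lower(), set())


def visible_features(plan: str, all_features: list[str]) -> list[str]:
    """Filter the UI's feature list down to what the plan allows, keeping order."""
    return [f for f in all_features if is_enabled(plan, f)]
```

A UI layer could call `visible_features` once per session and render only the returned entries, while the backend repeats the `is_enabled` check on every request so that hiding a button is never the only safeguard.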

RBAC (Role-Based Access Control)

Roles and Permissions: Defined roles such as Admin (Tenant Owner), Manager, Team Member and Customer, each with specific permissions. Permissions were managed using a centralized policy engine.

UI Access: Customized interfaces based on user roles, ensuring that users only see and interact with the features relevant to their responsibilities. This was achieved by dynamically rendering components based on the user's role.

Authentication and Authorization: Implemented using JWT (JSON Web Tokens) and OAuth2 for secure authentication and fine-grained access control.
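
A centralized policy check of the kind described here might look like the sketch below. The four roles are taken from the text. The permission strings, the `POLICY` table, and the `can` and `require` helpers are assumptions made for illustration, not the engine used on the project.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "admin"
    MANAGER = "manager"
    TEAM_MEMBER = "team_member"
    CUSTOMER = "customer"


# Hypothetical policy table: permission strings are invented for illustration.
POLICY = {
    Role.ADMIN: {"orders:read", "orders:write", "users:manage"},
    Role.MANAGER: {"orders:read", "orders:write"},
    Role.TEAM_MEMBER: {"orders:read"},
    Role.CUSTOMER: set(),
}


def can(role: Role, permission: str) -> bool:
    """Check a single permission against the central policy table."""
    return permission in POLICY.get(role, set())


def require(role: Role, permission: str) -> None:
    """Raise PermissionError when the role lacks the permission."""
    if not can(role, permission):
        raise PermissionError(f"{role.value} lacks {permission}")
```

Keeping the table in one place means the UI (to decide what to render) and the API (to decide what to allow) can consult the same source of truth.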

Monorepo Structure

Frontend

Two Progressive Web Apps (PWAs) for user interaction, built with Vue and Nuxt for faster development and a better development experience.

Backend

Backend server built with Node.js and Express, leveraging GraphQL for flexible and efficient data querying, enabling more precise and efficient data retrieval.

Shared Component Library

Maintained a shared component library using Vue and Nuxt to promote code reuse, maintain consistency across both PWAs, and speed up the development process by providing pre-built, standardized components.

Server-less Functions

Offloaded data-heavy tasks to serverless functions to optimize performance and scalability. These functions handled data processing, report generation, and task automation.

Apollo GraphQL API

Utilized with code generation to ensure type-safe queries and mutations across the frontend, enhancing developer productivity and reducing errors.

Integration

Government Compliant Body

Two-way Integration: Ensured real-time synchronization with the government body, facilitating compliance and inventory management. Implemented using REST APIs to sync data.

Inventory Audit: Automated highlighting of inconsistent data, improving data accuracy and compliance.

Task Dependencies: Implemented a dependency engine to link dependencies between tasks and resolve them once executed. Enabled users to perform multiple tasks, syncing them in a compliant order with the body.
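
One common way to resolve task dependencies into a valid execution order is a topological sort. The sketch below uses Python's standard `graphlib` module. It is an assumption about how such an engine could work, not a description of the one that was built, and the `sync_order` helper and task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def sync_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return tasks in an order where every task follows its prerequisites.

    `dependencies` maps a task to the set of tasks it depends on.
    Raises ValueError if the dependencies contain a cycle.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        # graphlib exposes the detected cycle as the second element of args.
        raise ValueError(f"circular task dependency: {exc.args[1]}") from exc
```

For example, `sync_order({"transfer": {"package"}, "package": {"harvest"}})` yields the harvest task first, then packaging, then the transfer, which is the order in which they would need to be reported.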

Shipment Provider (On Fleet)

Two-way Integration: Provided seamless tracking of sales order shipments. Integrated using On Fleet's API, with real-time updates pushed to the frontend using WebSockets.

QuickBooks Integration

CSV Export: Facilitated easy generation of invoices for QuickBooks, streamlining financial operations.

Performance Improvements

Jobs Kanban Board

Achieved a 70% faster load time by optimizing database queries, implementing client-side caching, and using service workers for offline capabilities.

Real-time Reports

COGS (Cost of Goods Sold)

Top Performing Products

Sales Reports

Inventory Value

Average Inventory Age

Others

Multi-Tenancy

Security

Data Isolation: Implemented a secure multi-tenant architecture ensuring no data leakage between tenants. Achieved using a combination of tenant-specific database schemas and logical data isolation in shared databases.

Document Sharing: Used signed URLs expiring after 1 minute for secure document sharing within tenants. Implemented using AWS S3 pre-signed URLs.
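
The idea behind an expiring signed URL can be shown with a small HMAC sketch. This is deliberately not the AWS signing algorithm: it is a simplified stand-in using only the standard library, and `SECRET`, `sign_url`, and `verify_url` are hypothetical names.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

SECRET = b"demo-secret"  # assumption: a server-side key, never sent to clients


def _signature(path: str, expires: int) -> str:
    return hmac.new(SECRET, f"{path}:{expires}".encode(), hashlib.sha256).hexdigest()


def sign_url(path: str, ttl_seconds: int = 60, now: Optional[float] = None) -> str:
    """Append an expiry timestamp and an HMAC signature to the path."""
    expires = int(time.time() if now is None else now) + ttl_seconds
    query = urlencode({"expires": expires, "sig": _signature(path, expires)})
    return f"{path}?{query}"


def verify_url(url: str, now: Optional[float] = None) -> bool:
    """Accept the URL only if the signature matches and it has not expired."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, ValueError):
        return False
    current = time.time() if now is None else now
    return current <= expires and hmac.compare_digest(sig, _signature(parsed.path, expires))
```

With S3 itself, the equivalent is typically a call to boto3's `generate_presigned_url` with `ExpiresIn=60`, which delegates signing and expiry enforcement to AWS.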

Compliance and Labeling

QR Codes Generation and Scanning

Barcodes Generation and Scanning

Compliance Labels

Inventory Value, Average Inventory Age Linked to COA reported by labs

DFS (Drug Fact Sheet)


What Web Development Companies Do Differently for Fintech Clients

In the world of financial technology (fintech), innovation moves fast—but so do regulations, user expectations, and cyber threats. Building a fintech platform isn’t like building a regular business website. It requires a deeper understanding of compliance, performance, security, and user trust.

A professional Web Development Company that works with fintech clients follows a very different approach—tailoring everything from architecture to front-end design to meet the demands of the financial sector. So, what exactly do these companies do differently when working with fintech businesses?

Let’s break it down.

1. They Prioritize Security at Every Layer

Fintech platforms handle sensitive financial data—bank account details, personal identification, transaction histories, and more. A single breach can lead to massive financial and reputational damage.

That’s why development companies implement robust, multi-layered security from the ground up:

End-to-end encryption (both in transit and at rest)

Secure authentication (MFA, biometrics, or SSO)

Role-based access control (RBAC)

Real-time intrusion detection systems

Regular security audits and penetration testing

Security isn’t an afterthought—it’s embedded into every decision from architecture to deployment.

2. They Build for Compliance and Regulation

Fintech companies must comply with strict regulatory frameworks like:

PCI-DSS for handling payment data

GDPR and CCPA for user data privacy

KYC/AML requirements for financial onboarding

SOX, SOC 2, and more for enterprise-level platforms

Development teams work closely with compliance officers to ensure:

Data retention and consent mechanisms are implemented

Audit logs are stored securely and access-controlled

Reporting tools are available to meet regulatory checks

APIs and third-party tools also meet compliance standards

This legal alignment ensures the platform is launch-ready—not legally exposed.

3. They Design with User Trust in Mind

For fintech apps, user trust is everything. If your interface feels unsafe or confusing, users won’t even enter their phone number—let alone their banking details.

Fintech-focused development teams create clean, intuitive interfaces that:

Highlight transparency (e.g., fees, transaction histories)

Minimize cognitive load during onboarding

Offer instant confirmations and reassuring microinteractions

Use verified badges, secure design patterns, and trust signals

Every interaction is designed to build confidence and reduce friction.

4. They Optimize for Real-Time Performance

Fintech platforms often deal with real-time transactions—stock trading, payments, lending, crypto exchanges, etc. Slow performance or downtime isn’t just frustrating; it can cost users real money.

Agencies build highly responsive systems by:

Using event-driven architectures with real-time data flows

Integrating WebSockets for live updates (e.g., price changes)

Scaling via cloud-native infrastructure like AWS Lambda or Kubernetes

Leveraging CDNs and edge computing for global delivery

Performance is monitored continuously to ensure sub-second response times—even under load.

5. They Integrate Secure, Scalable APIs

APIs are the backbone of fintech platforms—from payment gateways to credit scoring services, loan underwriting, KYC checks, and more.

Web development companies build secure, scalable API layers that:

Authenticate via OAuth2 or JWT

Throttle requests to prevent abuse

Log every call for auditing and debugging

Easily plug into services like Plaid, Razorpay, Stripe, or banking APIs

They also document everything clearly for internal use or third-party developers who may build on top of your platform.
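
Request throttling is often implemented with a token bucket. The following is a minimal single-process sketch under that assumption. The `TokenBucket` class is hypothetical, and a production API layer would more likely rely on a gateway or a shared store for its counters.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A caller would keep one bucket per API key or client IP and reject the request (commonly with HTTP 429) whenever `allow()` returns False.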

6. They Embrace Modular, Scalable Architecture

Fintech platforms evolve fast. New features—loan calculators, financial dashboards, user wallets—need to be rolled out frequently without breaking the system.

That’s why agencies use modular architecture principles:

Microservices for independent functionality

Scalable front-end frameworks (React, Angular)

Database sharding for performance at scale

Containerization (e.g., Docker) for easy deployment

This allows features to be developed, tested, and launched independently, enabling faster iteration and innovation.

7. They Build for Cross-Platform Access

Fintech users interact through mobile apps, web portals, embedded widgets, and sometimes even smartwatches. Development companies ensure consistent experiences across all platforms.

They use:

Responsive design with mobile-first approaches

Progressive Web Apps (PWAs) for fast, installable web portals

API-first design for reuse across multiple front-ends

Accessibility features (WCAG compliance) to serve all user groups

Cross-platform readiness expands your market and supports omnichannel experiences.

Conclusion

Fintech development is not just about great design or clean code—it’s about precision, trust, compliance, and performance. From data encryption and real-time APIs to regulatory compliance and user-centric UI, the stakes are much higher than in a standard website build.

That’s why working with a Web Development Company that understands the unique challenges of the financial sector is essential. With the right partner, you get more than a website—you get a secure, scalable, and regulation-ready platform built for real growth in a high-stakes industry.


How Scalable API Development Gives Startups a Competitive Edge

In the fast-paced digital world, startups must build technology that is not just functional but also scalable and future-ready. API development plays a critical role in this ecosystem by enabling seamless integration, efficient data flow, and faster go-to-market strategies. For startups aiming to innovate quickly and respond to user needs with agility, robust APIs are not just a backend function—they are strategic assets.

Why APIs Matter to Startup Growth

Modern startups operate in a hyper-connected ecosystem where success depends on how well your application interacts with other platforms. Whether you’re connecting with third-party services or expanding features across devices, API development serves as the communication backbone.

Additionally, APIs allow developers to decouple frontend and backend operations, facilitating easier upgrades and more agile product iterations. In this context, many startups prioritize integrating their Web application development with well-structured APIs from the beginning.

The Role of API Development in Building Product Ecosystems

Building an ecosystem around your product adds long-term value. For example, if your startup offers a scheduling app, an API can allow third-party platforms to integrate scheduling features into their systems, extending your reach without additional user acquisition costs.

By integrating iOS App Development efforts with scalable APIs, startups can ensure consistent performance and design language across devices. APIs also reduce redundancy in coding efforts, saving both time and money—two things startups can't afford to waste.

How APIs Improve Efficiency and Reduce Development Time

Time-to-market is often the difference between a startup's success or failure. Well-architected API development streamlines feature rollouts and reduces development time by allowing modular work. Frontend and backend teams can work simultaneously, accelerating timelines significantly.

Startups that plan for API scalability from the start can avoid the growing pains of retrofitting systems later. This becomes especially important when integrating smart devices, such as wearables or home automation systems. Building an IoT Mobile App becomes easier and more efficient with scalable APIs that handle data influx from multiple sources.

Choosing the Right API Architecture for Your Startup

Selecting the right architecture—REST, GraphQL, or gRPC—can affect your application's scalability and performance. REST remains the most popular for its simplicity, but newer startups may benefit from GraphQL's flexibility in querying exactly what is needed.

Regardless of the approach, maintaining proper documentation and security standards is essential. It’s also wise to consider versioning strategies early in your API development process to accommodate future changes without breaking existing integrations.

Custom Software Solutions and API Integration

Startups often need unique functionalities that off-the-shelf software cannot provide. Custom Software Development combined with scalable APIs creates tailored solutions that can grow as the business evolves.

Custom APIs offer flexibility, allowing startups to adapt quickly to market demands or pivot when needed. Whether integrating CRM systems, analytics tools, or customer-facing features, a well-thought-out API layer can streamline operations and open up new revenue streams.

Security and Compliance Considerations in API Development

Security should never be an afterthought, especially for startups handling sensitive user data. APIs must be secured with authentication protocols like OAuth2, and encrypted communication should be enforced via HTTPS.

Incorporating robust security practices during API development also helps ensure compliance with regulations like GDPR or HIPAA, depending on your industry. Startups gain user trust and avoid legal pitfalls when they make secure development a priority from day one.
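
For public clients such as mobile or single-page apps, the OAuth2 authorization code flow is usually paired with PKCE (RFC 7636). The sketch below shows only the client-side step of generating the verifier and challenge. The function names are hypothetical, and the surrounding redirect and token exchange are omitted.

```python
import base64
import hashlib
import secrets


def challenge_for(verifier: str) -> str:
    """Derive the S256 code challenge: base64url(SHA-256(verifier)) without padding."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def make_pkce_pair() -> tuple[str, str]:
    """Return (code_verifier, code_challenge) for the S256 method."""
    # 64 random bytes encode to 86 URL-safe characters, within the 43-128 limit.
    verifier = secrets.token_urlsafe(64)
    return verifier, challenge_for(verifier)
```

The client sends the challenge with the authorization request and the verifier with the token request, so an intercepted authorization code is useless without the verifier.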

Scalability: Planning for the Future

One of the biggest advantages of modern API development is its ability to scale. As your startup grows, APIs must handle increased traffic and data processing without degrading performance. Cloud platforms like AWS and Azure offer scalable API gateway services that can grow with your user base.

Planning for scalability includes choosing the right database, implementing caching mechanisms, and using load balancers. These infrastructure choices help your startup remain agile while managing growth efficiently.
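
As one example of a caching mechanism, a small time-to-live cache can sit in front of an expensive lookup. This is a minimal in-process sketch. The `TTLCache` class is hypothetical, and a multi-instance API would more likely use a shared cache such as Redis.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Cache loader results per key and reload them once they are older than the TTL."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, Any]] = {}  # key -> (expiry time, value)

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # still fresh
        value = loader()  # missing or expired: fetch and remember
        self._store[key] = (now + self.ttl, value)
        return value
```

The trade-off is staleness: a longer TTL reduces load on the database but lets clients see older data for longer.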

Real-World Examples of Successful API-Led Startups

Many successful startups attribute their rapid growth to strong API strategies. For instance, Stripe made its name by offering a simple yet powerful payment API that could be embedded in any application. Twilio did the same for communication services.

These companies became platforms rather than just products—thanks largely to their API-centric approach. Whether you're building a SaaS tool or a consumer app, your API can be a product in itself.

Best Practices for Building Scalable APIs

To make the most of your API development, follow these best practices:

Use consistent naming conventions and standards like RESTful practices.

Employ throttling and rate limiting to protect against abuse.

Maintain clear and updated API documentation.

Monitor APIs using tools like Postman, Swagger, or API Gateway metrics.

Regularly review and refactor code to eliminate bottlenecks.

These practices ensure that your API infrastructure supports growth rather than hinders it.

Conclusion: API Development Is Not Optional for Startups

In today's digital economy, API development is a necessity, not a luxury. It forms the backbone of your startup's digital architecture, influencing everything from speed and efficiency to scalability and innovation.

Startups that invest in scalable, secure, and well-documented APIs are better positioned to compete, grow, and adapt in a fast-changing landscape. Don’t just build an app—build an ecosystem. It starts with the right API.


Azure Data Factory for Healthcare Data Workflows

Introduction

Azure Data Factory (ADF) is a cloud-based ETL (Extract, Transform, Load) service that enables healthcare organizations to automate data movement, transformation, and integration across multiple sources. ADF is particularly useful for handling electronic health records (EHRs), HL7/FHIR data, insurance claims, and real-time patient monitoring data while ensuring compliance with HIPAA and other healthcare regulations.

1. Why Use Azure Data Factory in Healthcare?

✅ Secure Data Integration: Connects securely to EHR systems (e.g., Epic, Cerner), cloud databases, and APIs.

✅ Data Transformation: Supports mapping, cleansing, and anonymizing sensitive patient data.

✅ Compliance: Supports compliance with standards such as HIPAA, HITRUST, and GDPR.

✅ Real-time Processing: Can ingest and process real-time patient data for analytics and AI-driven insights.

✅ Cost Optimization: Pay-as-you-go model, eliminating infrastructure overhead.

2. Healthcare Data Sources Integrated with ADF

3. Healthcare Data Workflow with Azure Data Factory

Step 1: Ingesting Healthcare Data

Batch ingestion (EHR, HL7, FHIR, CSV, JSON)

Streaming ingestion (IoT sensors, real-time patient monitoring)

Example: Ingest HL7/FHIR data from an API

```json
{
  "source": {
    "type": "REST",
    "url": "https://healthcare-api.com/fhir",
    "authentication": {
      "type": "OAuth2",
      "token": "<ACCESS_TOKEN>"
    }
  },
  "sink": {
    "type": "AzureBlobStorage",
    "path": "healthcare-data/raw"
  }
}
```

Step 2: Data Transformation in ADF

Using Mapping Data Flows, you can:

Convert HL7/FHIR JSON to structured tables

Standardize ICD-10 medical codes

Encrypt or de-identify PHI (Protected Health Information)

Example: SQL Query for Data Transformation

```sql
-- Uppercase the first name and mask all but the first three digits of the SSN.
SELECT
    patient_id,
    diagnosis_code,
    UPPER(first_name) AS first_name,
    LEFT(ssn, 3) + '-XX-XXXX' AS masked_ssn
FROM raw_healthcare_data;
```
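
De-identification can also be applied in code before data reaches the warehouse. The sketch below is illustrative only: it keeps the first three SSN digits and replaces patient identifiers with a keyed hash so records remain joinable. `PEPPER`, `pseudonymize`, and `mask_ssn` are hypothetical names, and whether this level of masking is sufficient for a given HIPAA use case is a decision for a compliance team.

```python
import hashlib
import hmac
import re

PEPPER = b"replace-with-a-secret"  # assumption: loaded from a secret store in practice


def pseudonymize(patient_id: str) -> str:
    """Replace an identifier with a truncated keyed hash; the same input gives the same output."""
    return hmac.new(PEPPER, patient_id.encode(), hashlib.sha256).hexdigest()[:16]


def mask_ssn(ssn: str) -> str:
    """Keep the first three digits of a 9-digit SSN and mask the rest."""
    digits = re.sub(r"\D", "", ssn)  # drop dashes, spaces, and other separators
    if len(digits) != 9:
        raise ValueError("expected a 9-digit SSN")
    return f"{digits[:3]}-XX-XXXX"
```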

Step 3: Storing Processed Healthcare Data

Processed data can be stored in:

✅ Azure Data Lake (for large-scale analytics)

✅ Azure SQL Database (for structured storage)

✅ Azure Synapse Analytics (for research & BI insights)

Example: Writing transformed data to a SQL Database

```json
{
  "type": "AzureSqlDatabase",
  "connectionString": "Server=tcp:healthserver.database.windows.net;Database=healthDB;",
  "query": "INSERT INTO Patients (patient_id, name, diagnosis_code) VALUES (?, ?, ?)"
}
```

Step 4: Automating & Monitoring Healthcare Pipelines

Trigger ADF Pipelines daily/hourly or based on event-driven logic

Monitor execution logs in Azure Monitor

Set up alerts for failures & anomalies

Example: Create a pipeline trigger to refresh data every 6 hours

```json
{
  "type": "ScheduleTrigger",
  "recurrence": {
    "frequency": "Hour",
    "interval": 6
  },
  "pipeline": "healthcare_data_pipeline"
}
```

4. Best Practices for Healthcare Data in ADF

🔹 Use Azure Key Vault to securely store API keys and database credentials.

🔹 Encrypt data at rest and in transit, and authenticate with Azure Managed Identity instead of stored credentials.

🔹 Optimize ETL performance by using partitioning and incremental loads.

🔹 Enable data lineage in Azure Purview (now Microsoft Purview) for audit trails.

🔹 Use Databricks or Synapse Analytics for AI-driven predictive healthcare analytics.

5. Conclusion

Azure Data Factory is a powerful tool for automating, securing, and optimizing healthcare data workflows. By integrating with EHRs, APIs, IoT devices, and cloud storage, ADF helps healthcare providers improve patient care, optimize operations, and ensure compliance with industry regulations.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/


Essential Components of a Production Microservice Application

DevOps Automation Tools and modern practices have revolutionized how applications are designed, developed, and deployed. Microservice architecture is a preferred approach for enterprises, IT sectors, and manufacturing industries aiming to create scalable, maintainable, and resilient applications. This blog will explore the essential components of a production microservice application, ensuring it meets enterprise-grade standards.

1. API Gateway

An API Gateway acts as a single entry point for client requests. It handles routing, composition, and protocol translation, ensuring seamless communication between clients and microservices. Key features include:

Authentication and Authorization: Protect sensitive data by implementing OAuth2, OpenID Connect, or other security protocols.

Rate Limiting: Prevent overloading by throttling excessive requests.

Caching: Reduce response time by storing frequently accessed data.

Monitoring: Provide insights into traffic patterns and potential issues.

API Gateways like Kong, AWS API Gateway, or NGINX are widely used.

Mobile App Development Agency professionals often integrate API Gateways when developing scalable mobile solutions.

2. Service Registry and Discovery

Microservices need to discover each other dynamically, as their instances may scale up or down or move across servers. A service registry, like Consul, Eureka, or etcd, maintains a directory of all services and their locations. Benefits include:

Dynamic Service Discovery: Automatically update the service location.

Load Balancing: Distribute requests efficiently.

Resilience: Ensure high availability by managing service health checks.

3. Configuration Management

Centralized configuration management is vital for managing environment-specific settings, such as database credentials or API keys. Tools like Spring Cloud Config, Consul, or AWS Systems Manager Parameter Store provide features like:

Version Control: Track configuration changes.

Secure Storage: Encrypt sensitive data.

Dynamic Refresh: Update configurations without redeploying services.

4. Service Mesh

A service mesh abstracts the complexity of inter-service communication, providing advanced traffic management and security features. Popular service mesh solutions like Istio, Linkerd, or Kuma offer:

Traffic Management: Control traffic flow with features like retries, timeouts, and load balancing.

Observability: Monitor microservice interactions using distributed tracing and metrics.

Security: Encrypt communication using mTLS (Mutual TLS).

5. Containerization and Orchestration

Microservices are typically deployed in containers, which provide consistency and portability across environments. Container orchestration platforms like Kubernetes or Docker Swarm are essential for managing containerized applications. Key benefits include:

Scalability: Automatically scale services based on demand.

Self-Healing: Restart failed containers to maintain availability.

Resource Optimization: Efficiently utilize computing resources.

6. Monitoring and Observability

Ensuring the health of a production microservice application requires robust monitoring and observability. Enterprises use tools like Prometheus, Grafana, or Datadog to:

Track Metrics: Monitor CPU, memory, and other performance metrics.

Set Alerts: Notify teams of anomalies or failures.

Analyze Logs: Centralize logs for troubleshooting using ELK Stack (Elasticsearch, Logstash, Kibana) or Fluentd.

Distributed Tracing: Trace request flows across services using Jaeger or Zipkin.

Hire Android App Developers to ensure seamless integration of monitoring tools for mobile-specific services.

7. Security and Compliance

Securing a production microservice application is paramount. Enterprises should implement a multi-layered security approach, including:

Authentication and Authorization: Use protocols like OAuth2 and JWT for secure access.

Data Encryption: Encrypt data in transit (using TLS) and at rest.

Compliance Standards: Adhere to industry standards such as GDPR, HIPAA, or PCI-DSS.

Runtime Security: Employ tools like Falco or Aqua Security to detect runtime threats.
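
To make the JWT part concrete, the sketch below signs and verifies an HS256 token using only the standard library. It is meant to show what the token contains and what verification checks, under the assumption of a single shared secret. The `sign` and `verify` helpers are hypothetical, and a production service would normally use a maintained JWT library instead.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign(claims: dict, secret: bytes) -> str:
    """Build header.payload.signature with an HMAC-SHA256 signature."""
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}, separators=(",", ":")).encode())
    payload = _b64url(json.dumps(claims, separators=(",", ":")).encode())
    mac = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url(mac)}"


def verify(token: str, secret: bytes, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if the signature is valid and `exp` is in the future, else None."""
    try:
        header, payload, signature = token.split(".")
        expected = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
        if not hmac.compare_digest(_b64url_decode(signature), expected):
            return None
        if json.loads(_b64url_decode(header)).get("alg") != "HS256":
            return None
        claims = json.loads(_b64url_decode(payload))
    except ValueError:  # wrong segment count, bad base64, or bad JSON
        return None
    current = time.time() if now is None else now
    return claims if claims.get("exp", 0) > current else None
```

Note that tokens without an `exp` claim are rejected here, which is a deliberately strict choice for the sketch.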

8. Continuous Integration and Continuous Deployment (CI/CD)

A robust CI/CD pipeline ensures rapid and reliable deployment of microservices. Using tools like Jenkins, GitLab CI/CD, or CircleCI enables:

Automated Testing: Run unit, integration, and end-to-end tests to catch bugs early.

Blue-Green Deployments: Minimize downtime by deploying new versions alongside old ones.

Canary Releases: Test new features on a small subset of users before full rollout.

Rollback Mechanisms: Quickly revert to a previous version in case of issues.
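
A canary release needs a stable way to decide which users see the new version. One simple approach, sketched below as an assumption rather than a feature of any particular CI/CD tool, is to hash the user ID into one of 100 buckets. The `in_canary` function and the `salt` parameter are hypothetical.

```python
import hashlib


def in_canary(user_id: str, percent: int, salt: str = "release-1") -> bool:
    """Deterministically place a user in the canary group for `percent` of traffic."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < percent
```

Because the bucket depends only on the salt and the user ID, a user who is in the canary at 10% stays in it when the rollout widens to 50%, and changing the salt reshuffles the groups for the next release.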

9. Database Management

Microservices often follow a database-per-service model to ensure loose coupling. Choosing the right database solution is critical. Considerations include:

Relational Databases: Use PostgreSQL or MySQL for structured data.

NoSQL Databases: Opt for MongoDB or Cassandra for unstructured data.

Event Sourcing: Leverage Kafka or RabbitMQ for managing event-driven architectures.

10. Resilience and Fault Tolerance

A production microservice application must handle failures gracefully to ensure seamless user experiences. Techniques include:

Circuit Breakers: Prevent cascading failures using tools like Hystrix or Resilience4j.

Retries and Timeouts: Ensure graceful recovery from temporary issues.

Bulkheads: Isolate failures to prevent them from impacting the entire system.
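
The circuit breaker pattern can be sketched in a few lines. This is a simplified, single-threaded illustration rather than a substitute for a library such as Resilience4j, and the `CircuitBreaker` class and its parameters are hypothetical.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after: float = 30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call skipped")
            # Cool-down elapsed: half-open, let one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, callers fail fast instead of waiting on a dependency that is already struggling, which is what prevents the cascading failures mentioned above.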

11. Event-Driven Architecture

Event-driven architecture improves responsiveness and scalability. Key components include:

Message Brokers: Use RabbitMQ, Kafka, or AWS SQS for asynchronous communication.

Event Streaming: Employ tools like Kafka Streams for real-time data processing.

Event Sourcing: Maintain a complete record of changes for auditing and debugging.

12. Testing and Quality Assurance

Testing in microservices is complex due to the distributed nature of the architecture. A comprehensive testing strategy should include:

Unit Tests: Verify individual service functionality.

Integration Tests: Validate inter-service communication.

Contract Testing: Ensure compatibility between service APIs.

Chaos Engineering: Test system resilience by simulating failures using tools like Gremlin or Chaos Monkey.

13. Cost Management

Optimizing costs in a microservice environment is crucial for enterprises. Considerations include:

Autoscaling: Scale services based on demand to avoid overprovisioning.

Resource Monitoring: Use tools like AWS Cost Explorer or Kubernetes Cost Management.

Right-Sizing: Adjust resources to match service needs.

Conclusion

Building a production-ready microservice application involves integrating numerous components, each playing a critical role in ensuring scalability, reliability, and maintainability. By adopting best practices and leveraging the right tools, enterprises, IT sectors, and manufacturing industries can achieve operational excellence and deliver high-quality services to their customers.

Understanding and implementing these essential components, such as DevOps Automation Tools and robust testing practices, will enable organizations to fully harness the potential of microservice architecture. Whether you are part of a Mobile App Development Agency or looking to Hire Android App Developers, staying ahead in today’s competitive digital landscape is essential.


25 ChatGPT Prompts for Full-Stack Developers

Today, full-stack developers have a lot to handle. They work with tools like Node.js, React, and MongoDB to build websites and apps. These tools help developers create powerful applications, but they also come with challenges. That’s where ChatGPT can come in to help. ChatGPT makes your job easier by solving problems, speeding up tasks, and even writing code for you.

In this post, we’ll show 25 ChatGPT prompts that full-stack developers can use to speed up their work. Each prompt helps you solve common development issues across backend, frontend, and database management tasks.

Backend Development with ChatGPT Prompts for Node.js

1. Debugging Node.js Apps

When your app runs slowly or isn’t working right, finding the problem can take a lot of time. ChatGPT can help you find and fix these issues quickly.

ChatGPT Prompt:

“Help me debug a performance issue in my Node.js app related to MongoDB queries.”

2. Generate Node.js Code Faster

Writing the same type of code over and over is boring. ChatGPT can generate this basic code for you, saving you time.

ChatGPT Prompt:

“Generate a REST API boilerplate with authentication using Node.js and Express.”

3. Speed Up MongoDB Queries

Sometimes, MongoDB searches (called “queries”) take too long. ChatGPT can suggest ways to make these searches faster.

ChatGPT Prompt:

“How can I optimize a MongoDB aggregation pipeline to reduce query execution time in large datasets?”

4. Build Secure APIs

APIs help different parts of your app talk to each other. Keeping them secure is important. ChatGPT can guide you to make sure your APIs are safe.

ChatGPT Prompt:

“Help me design a secure authentication flow with JWT for a multi-tenant SaaS app using Node.js.”

5. Handle Real-Time Data with WebSockets

When you need real-time updates (like chat messages or notifications), WebSockets are the way to go. ChatGPT can help you set them up easily.

ChatGPT Prompt:

“Help me set up real-time notifications in my Node.js app using WebSockets and React.”

Frontend Development with ChatGPT Prompts for React

6. Improve React Component Performance

Sometimes React components can be slow, causing your app to lag. ChatGPT can suggest ways to make them faster.

ChatGPT Prompt:

“Can you suggest improvements to enhance the performance of this React component?”

7. Use Code Splitting and Lazy Loading

These techniques help your app load faster by only loading the parts needed at that time. ChatGPT can help you apply these techniques correctly.

ChatGPT Prompt:

“How can I implement lazy loading and code splitting in my React app?”

8. Manage State in React Apps

State management helps you keep track of things like user data in your app. ChatGPT can guide you on the best ways to do this.

ChatGPT Prompt:

“Explain how to implement Redux with TypeScript in a large-scale React project for better state management.”

9. Set Up User Authentication

Logging users in and keeping their data safe is important. ChatGPT can help set up OAuth2 and other secure login systems.

ChatGPT Prompt:

“Help me set up Google OAuth2 authentication in my Node.js app using Passport.js.”

10. Use Server-Side Rendering for Better SEO

Server-side rendering (SSR) can improve initial load time and make pages easier for search engines to index, which can help your ranking in Google searches. ChatGPT can help you set up SSR with Next.js.

ChatGPT Prompt:

“How do I implement server-side rendering (SSR) in my React app using Next.js?”

Database Management with ChatGPT Prompts for MongoDB

11. Speed Up MongoDB Searches with Indexing

Indexing helps MongoDB find data faster. ChatGPT can help you set up the right indexes to improve performance.

ChatGPT Prompt:

“How can I optimize MongoDB indexes to improve query performance in a large collection with millions of records?”

12. Write MongoDB Aggregation Queries

ChatGPT can help write complex aggregation queries, which let you summarize data in useful ways (like creating reports).

ChatGPT Prompt:

“How can I use MongoDB’s aggregation framework to generate reports from a large dataset?”

13. Manage User Sessions with Redis

Handling lots of users at once can be tricky. ChatGPT can help you use Redis to manage user sessions and make sure everything runs smoothly.

ChatGPT Prompt:

“How can I implement session management using Redis in a Node.js app to handle multiple concurrent users?”

14. Handle Large File Uploads

When your app lets users upload large files, you need to store them in a way that’s fast and secure. ChatGPT can help you set this up.

ChatGPT Prompt:

“What’s the best way to handle large file uploads in a Node.js app and store file metadata in MongoDB?”

15. Automate MongoDB Schema Migrations

When you change how your database is organized (called schema migration), ChatGPT can help ensure it’s done without errors.

ChatGPT Prompt:

“How can I implement database migrations in a Node.js project using MongoDB?”

Boost Productivity with ChatGPT Prompts for CI/CD Pipelines

16. Set Up a CI/CD Pipeline

CI/CD pipelines help automate the process of testing and deploying code. ChatGPT can help you set up a smooth pipeline to save time.

ChatGPT Prompt:

“Guide me through setting up a CI/CD pipeline for my Node.js app using GitHub Actions and Docker.”

17. Secure Environment Variables

Environment variables hold important info like API keys. ChatGPT can help you manage these safely so no one else can see them.

ChatGPT Prompt:

“How can I securely manage environment variables in a Node.js app using dotenv?”

18. Automate Error Handling

When errors happen, it’s important to catch and fix them quickly. ChatGPT can help set up error handling to make sure nothing breaks without you knowing.

ChatGPT Prompt:

“What are the best practices for implementing centralized error handling in my Express.js app?”

19. Refactor Apps into Microservices

Breaking a large app into smaller, connected pieces (called microservices) can make it faster and easier to maintain. ChatGPT can help you do this.

ChatGPT Prompt:

“Show me how to refactor my monolithic Node.js app into microservices and ensure proper communication between services.”

20. Speed Up API Response Times

When APIs are slow, it can hurt your app’s performance. ChatGPT can help you find ways to make them faster.

ChatGPT Prompt:

“What are the strategies to reduce API response times in my Node.js app with MongoDB as the database?”

Common Web Development Questions Solved with ChatGPT Prompts

21. Real-Time Data with WebSockets

Handling real-time updates, like notifications, can be tricky. ChatGPT can help you set up WebSockets to make this easier.

ChatGPT Prompt:

“Help me set up real-time notifications in my Node.js app using WebSockets and React.”

22. Testing React Components

Testing makes sure your code works before you release it. ChatGPT can help you write unit tests for your React components.

ChatGPT Prompt:

“Show me how to write unit tests for my React components using Jest and React Testing Library.”

23. Paginate Large Datasets in MongoDB

Pagination splits large amounts of data into pages, making it easier to load and display. ChatGPT can help set this up efficiently.

ChatGPT Prompt:

“How can I implement efficient server-side pagination in a Node.js app that fetches data from MongoDB?”
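To make the idea concrete, here is a minimal sketch of the arithmetic behind page-based pagination. It is written in Python purely for illustration; the names `page_window`, `total_pages` and the `max_page_size` cap are hypothetical. The resulting values map onto MongoDB's `skip()` and `limit()` cursor methods.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PageWindow:
    skip: int   # documents to skip before the page starts
    limit: int  # maximum number of documents to return


def page_window(page: int, page_size: int, max_page_size: int = 100) -> PageWindow:
    """Translate a 1-based page number into skip/limit values."""
    if page < 1:
        raise ValueError("page must be >= 1")
    if page_size < 1:
        raise ValueError("page_size must be >= 1")
    size = min(page_size, max_page_size)  # cap the size to protect the database
    return PageWindow(skip=(page - 1) * size, limit=size)


def total_pages(total_documents: int, page_size: int) -> int:
    """Number of pages needed to show every document (ceiling division)."""
    return -(-total_documents // page_size)
```

Skip-based pagination gets slower as the skip value grows, because the server still walks past the skipped documents. For very deep pages, a range query on an indexed field (for example, "documents after the last `_id` seen") is a common alternative.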

24. Manage Roles and Permissions

If your app has different types of users, you need to control what each type can do. ChatGPT can help you set up roles and permissions using JWT.

ChatGPT Prompt:

“Guide me through setting up role-based access control (RBAC) in a Node.js app using JWT.”
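As a rough illustration of what such a setup involves, the sketch below signs and verifies an HS256 JWT and then checks a role claim against a permission table. It uses only Python's standard library; the same steps apply in Node.js. The `role` claim name, the `ROLE_PERMISSIONS` table and all function names are assumptions made for this example. A production app would normally rely on a maintained JWT library rather than hand-rolled verification.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical role-to-permission table for illustration.
ROLE_PERMISSIONS = {
    "admin": {"read", "write", "delete"},
    "editor": {"read", "write"},
    "viewer": {"read"},
}


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Create a compact JWT signed with HMAC-SHA256."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def verify_hs256(token: str, secret: bytes) -> dict:
    """Return the claims if the signature and expiry are valid, else raise PermissionError."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError as exc:
        raise PermissionError("malformed token") from exc
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        raise PermissionError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):
        raise PermissionError("bad signature")
    if not isinstance(claims, dict) or claims.get("exp", 0) <= time.time():
        raise PermissionError("token expired")
    return claims


def is_allowed(token: str, secret: bytes, permission: str) -> bool:
    """Check whether the role carried in the token grants the permission."""
    try:
        claims = verify_hs256(token, secret)
    except PermissionError:
        return False
    role = claims.get("role")
    return isinstance(role, str) and permission in ROLE_PERMISSIONS.get(role, set())
```

Keeping the permission table on the server, and only the role name in the token, means permissions can change without reissuing tokens.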

25. Implement Caching with Redis

Caching stores data temporarily so it can be accessed quickly later. ChatGPT can help you set up caching to make your app faster.

ChatGPT Prompt:

“Guide me through implementing Redis caching in a Node.js app to reduce database load.”

Conclusion: Use ChatGPT Prompts for Smarter Web Development

ChatGPT makes it easier for developers to manage their work. Whether you’re building with Node.js, React, or MongoDB, ChatGPT can help with debugging, writing code, and improving performance.

Using ChatGPT prompts can help you work smarter, not harder!


Oracle Apex Oauth2 Example

OAuth2 in Oracle APEX: A Practical Guide and Example

OAuth2 is a contemporary and secure authorization framework that allows third-party applications to access protected resources on behalf of a user. By implementing OAuth2 in Oracle APEX, you can provide controlled access to your APEX application’s data and functionality, enabling seamless integration with other services.

Why use OAuth2 with APEX?

Enhanced Security: OAuth2 offers a robust security layer compared to traditional username and password-based authentication. It uses tokens rather than directly passing user credentials.

Fine-grained Access Control: OAuth2 allows you to define specific scopes (permissions) determining the access level granted to third-party applications.

Improved User Experience: Users can conveniently authorize applications without repeatedly sharing their primary credentials.

Prerequisites

A basic understanding of Oracle APEX development

An Oracle REST Data Services (ORDS) instance, if you want to integrate with ORDS-defined REST APIs

Steps for Implementing OAuth2 in Oracle APEX

Create an OAuth2 Client:

Within your APEX workspace, navigate to Shared Components -> Web Credentials.

Click Create and select the OAuth2 Client type.

Provide a name, ID, client secret, and any necessary authorization scopes.

Obtain an Access Token:

The method for obtaining an access token will depend on the OAuth2 flow you choose (e.g., Client Credentials flow, Authorization Code flow).

Utilize the Access Token:

Include the access token in the Authorization header of your API requests to protected resources. Use the format: Bearer <access_token>.
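For a client written outside APEX, attaching the token might look like the following minimal Python sketch. The endpoint URL and token value are placeholders, and `bearer_request` is a hypothetical helper name.

```python
import urllib.request


def bearer_request(url: str, access_token: str) -> urllib.request.Request:
    """Build a GET request that carries the token in the Authorization header."""
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Bearer {access_token}")
    request.add_header("Accept", "application/json")
    return request


# Placeholder endpoint and token, for illustration only.
req = bearer_request("https://example.com/ords/hr/employees/", "example-access-token")
print(req.get_header("Authorization"))  # prints "Bearer example-access-token"
```

Passing `req` to `urllib.request.urlopen` would send the request; the resource server then validates the token before returning data.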

Example: Accessing an ORDS-based REST API

Let’s assume you have an ORDS-based REST API endpoint for fetching employee data that requires OAuth2 authentication. Here’s how you would configure APEX to interact with it:

Create a REST Data Source:

Go to Shared Components -> REST Data Sources.

Set the authentication type to OAuth2 Client Credentials Flow.

Enter your OAuth2 token endpoint URL, client ID, and client secret.

Use the REST Data Source in Your APEX Application:

Create APEX pages or components that utilize the REST Data Source to fetch and display employee data. APEX will automatically handle obtaining and using the access token.

Additional Considerations

Access Token Expiration: OAuth2 access tokens usually have expiration times. Implement logic to refresh access tokens before they expire.

OAuth2 Flows: Choose the most suitable OAuth2 flow for your integration use case. The Client Credentials flow is often used for server-to-server integrations, while the Authorization Code flow is more common for web applications where a user is directly involved.
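When you call a token endpoint from your own code rather than through an APEX REST Data Source, the "refresh before expiry" advice above can be sketched as a small cache. This is an illustrative Python sketch: `TokenCache`, the 60-second margin and the `fetch` callback (which would perform the real token request) are all assumptions.

```python
import time
from typing import Callable, Optional, Tuple

# A fetcher returns (access_token, lifetime_in_seconds), e.g. by calling the token endpoint.
TokenFetcher = Callable[[], Tuple[str, float]]


class TokenCache:
    """Hands out a cached access token and refreshes it shortly before it expires."""

    def __init__(self, fetch: TokenFetcher, refresh_margin: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._margin = refresh_margin
        self._clock = clock
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        now = self._clock()
        # Refresh when there is no token yet or it is within the safety margin of expiry.
        if self._token is None or now >= self._expires_at - self._margin:
            token, lifetime = self._fetch()
            self._token = token
            self._expires_at = now + lifetime
        return self._token
```

The margin covers clock skew and request latency, so a token is never sent in the last moments of its lifetime.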


Conclusion:

Unogeeks is the No.1 IT Training Institute for Oracle Apex Training. Anyone Disagree? Please drop in a comment


Quote

Ever since we announced our intention to disable Basic Authentication in Exchange Online we said that we would add Modern Auth (OAuth 2.0) support for the IMAP, POP and SMTP AUTH protocols. Today, we’re excited to announce the availability of OAuth 2.0 authentication for IMAP and SMTP AUTH protocols to Exchange Online mailboxes.

This feature announcement is for interactive applications to enable OAuth for IMAP and SMTP. For additional information about non-interactive applications, please see our blog post Announcing OAuth 2.0 Client Credentials Flow support for POP and IMAP protocols in Exchange Online.

Application developers who have built apps that send, read or otherwise process email using these protocols will be able to implement secure, modern authentication experiences for their users. This functionality is built on top of Microsoft Identity platform (v2.0) and supports access to email of Microsoft 365 (formerly Office 365) users. Detailed step-by-step instructions for authenticating to IMAP and SMTP AUTH protocols using OAuth are now available for you to get started.

What’s supported?

With this release, apps can use one of the following OAuth flows to authorize and get access tokens on behalf of a user.

1. OAuth2 authorization code flow

2. OAuth2 Device authorization grant flow

Follow these detailed step-by-step instructions to implement OAuth 2.0 authentication if your in-house application needs to access IMAP and SMTP AUTH protocols in Exchange Online, or work with your vendor to update any apps or clients that you use that could be impacted.

Announcing OAuth 2.0 support for IMAP and SMTP AUTH protocols in Exchange Online - Microsoft Community Hub


YouTube Short | What is Difference Between OAuth2 and SAML | Quick Guide to SAML Vs OAuth2

Hi, a short #video on #oauth2 Vs #SAML #authentication & #authorization is published on #codeonedigest #youtube channel. Learn OAuth2 and SAML in 1 minute. #saml #oauth #oauth2 #samlvsoauth2 #samlvsoauth

What is SAML? SAML stands for Security Assertion Markup Language. Its primary role in online security is to enable single sign-on (SSO), so that you can access multiple web applications with one login. What is OAuth2? OAuth2 is an open-standard authorization framework that gives applications the ability for “secure designated access.” OAuth2 doesn’t share…


OAuth 2 on the Tumblr API

Ten years ago HTTPS wasn't nearly as widespread as it is today. It is hard to believe that HTTPS was essentially opt-in, if available at all! Back then, people also had to get creative when inventing means to delegate access to someone else. One solution was OAuth 1, published by the IETF as RFC 5849 and adopted by Tumblr in 2011.

Time went by, and here we are in 2021, with hardly any popular website not shielded with HTTPS (including your own blog!). Today, it wouldn't make much sense to adopt OAuth 1 as inconvenient as it is. Yet here we are, still asking people to use outdated protocols for their new fancy Tumblr apps. Not anymore!

Starting today, you have another option: we're officially opening up OAuth 2 support for the Tumblr API!

Get started

OAuth 2 flow requires you to know two key URIs:

For authorization requests, you should use /oauth2/authorize

To exchange authorization codes and refresh tokens, you'll need to use /v2/oauth2/token

If you're familiar with OAuth 2, register an application and check out our API documentation (specifically the section on OAuth 2) to get up and running.
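As a rough sketch of how the two URIs above fit into the authorization code flow, the Python below builds the authorization URL and the form body for the code exchange. The host names (`www.tumblr.com`, `api.tumblr.com`), the `basic` scope and the helper names are assumptions for illustration; the post itself gives only the paths, so check the API documentation for the authoritative values.

```python
import secrets
from urllib.parse import urlencode

# Paths come from the post; the hosts are assumed.
AUTHORIZE_URL = "https://www.tumblr.com/oauth2/authorize"
TOKEN_URL = "https://api.tumblr.com/v2/oauth2/token"


def build_authorize_url(client_id: str, redirect_uri: str, scope: str, state: str) -> str:
    """URL the user's browser is sent to in order to approve the app."""
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,  # echoed back on the redirect; compare it to detect CSRF
    })
    return f"{AUTHORIZE_URL}?{query}"


def build_code_exchange_body(code: str, client_id: str, client_secret: str,
                             redirect_uri: str) -> str:
    """Form-encoded body to POST to TOKEN_URL to swap the code for tokens."""
    return urlencode({
        "grant_type": "authorization_code",
        "code": code,
        "client_id": client_id,
        "client_secret": client_secret,
        "redirect_uri": redirect_uri,
    })


state = secrets.token_urlsafe(16)
url = build_authorize_url("my-client-id", "https://example.com/callback", "basic", state)
```

After the user approves the request, the redirect back to `redirect_uri` carries a `code` parameter, which the server exchanges at the token endpoint.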

The future of OAuth 1

There are no plans to shut down OAuth 1. Your app will continue to work as usual. But be sure to keep an eye on this blog just in case anything new pops up that would prevent us from serving OAuth 1 requests.

What's more, if you wish to adopt OAuth 2 in your app, given its superior simplicity, you don't have to migrate entirely to OAuth 2 at once. Instead, you can keep the old sign-up / log-in flow working, and exchange an OAuth 1 access token for OAuth 2 tokens on the fly. There's only one catch: this exchange will invalidate the original access token, so you should be using only the OAuth 2 Bearer authentication for any subsequent requests.

Next steps

We'll be adding support for OAuth 2 to our API clients in the coming months. Follow this blog to learn firsthand when this happens.

Although we do support client-side OAuth 2 flow, we can't recommend using it unless absolutely required. We might harden it with PKCE someday, though.

That's all from us today. Happy hacking!


Lessons Learned: How to avoid getting asked for tokens in MS Azure console apps


Microsoft has been migrating most of Azure’s functionality to the “Microsoft Graph API”, which is fantastic as that allows us to automate a bunch of things within the Azure cloud. However, the Graph API is secured behind an OAuth2 flow, and if you’ve ever dealt with OAuth2 you’d know that it revolves around “tokens” which are issued by a central authentication/authorization server for any given…


Innovating on Authentication Standards

By George Fletcher and Lovlesh Chhabra

When Yahoo and AOL came together a year ago as a part of the new Verizon subsidiary Oath, we took on the challenge of unifying their identity platforms based on current identity standards. Identity standards have been a critical part of the Internet ecosystem over the last 20+ years. From single-sign-on and identity federation with SAML; to the newer identity protocols including OpenID Connect, OAuth2, JOSE, and SCIM (to name a few); to the explorations of “self-sovereign identity” based on distributed ledger technologies; standards have played a key role in providing a secure identity layer for the Internet.

As we navigated this journey, we ran across a number of different use cases where there was either no standard or no best practice available for our varied and complicated needs. Instead of creating entirely new standards to solve our problems, we found it more productive to use existing standards in new ways.

One such use case arose when we realized that we needed to migrate the identity stored in mobile apps from the legacy identity provider to the new Oath identity platform. For most browser (mobile or desktop) use cases, this doesn’t present a huge problem; some DNS magic and HTTP redirects and the user will sign in at the correct endpoint. Also it’s expected for users accessing services via their browser to have to sign in now and then.

However, for mobile applications it's a completely different story. The normal user pattern for mobile apps is for the user to sign in (via OpenID Connect or OAuth2) and for the app to then be issued long-lived tokens (well, the refresh token is long lived) and the user never has to sign in again on the device (entering a password on the device is NOT a good experience for the user).

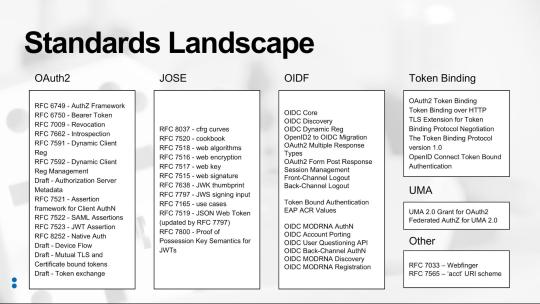

So the issue is, how do we allow the mobile app to move from one identity provider to another without the user having to re-enter their credentials? The solution came from researching what standards currently exist that might address this use case (see figure “Standards Landscape” below) and finding the OAuth 2.0 Token Exchange draft specification (https://tools.ietf.org/html/draft-ietf-oauth-token-exchange-13).

The Token Exchange draft allows for a given token to be exchanged for new tokens in a different domain. This could be used to manage the “audience” of a token that needs to be passed among a set of microservices to accomplish a task on behalf of the user, as an example. For the use case at hand, we created a specific implementation of the Token Exchange specification (a profile) to allow the refresh token from the originating Identity Provider (IDP) to be exchanged for new tokens from the consolidated IDP. By profiling this draft standard we were able to create a much better user experience for our consumers and do so without inventing proprietary mechanisms.
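To give a feel for what such a request looks like on the wire, here is a minimal Python sketch that builds a token exchange body using the parameter names and URN values defined by the Token Exchange specification. Which parameters the Oath profile actually used is not stated in this post, so the choice of `requested_token_type`, the inclusion of `client_id` and the helper name are assumptions.

```python
from typing import Optional
from urllib.parse import urlencode

TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
REFRESH_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:refresh_token"


def build_token_exchange_body(legacy_refresh_token: str, client_id: str,
                              audience: Optional[str] = None) -> str:
    """Form body asking the new IDP to swap a legacy refresh token for new tokens."""
    params = {
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "subject_token": legacy_refresh_token,     # token issued by the originating IDP
        "subject_token_type": REFRESH_TOKEN_TYPE,  # tells the server how to interpret it
        "requested_token_type": REFRESH_TOKEN_TYPE,
        "client_id": client_id,
    }
    if audience is not None:
        params["audience"] = audience  # optional: the service the new token is meant for
    return urlencode(params)
```

The app would POST this body to the consolidated IDP's token endpoint and store the returned tokens in place of the legacy ones, so the user never sees a sign-in prompt.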

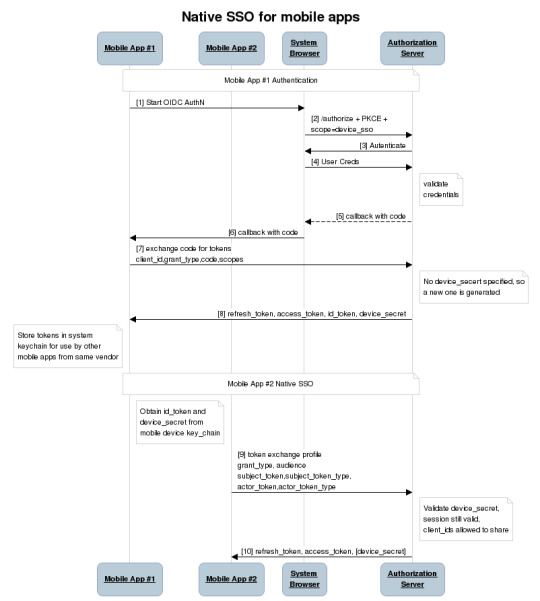

During this identity technical consolidation we also had to address how to support sharing signed-in users across mobile applications written by the same company (technically, signed with the same vendor signing key). Specifically, how can a user signed in to Yahoo Mail avoid signing in again when they start using the Yahoo Sports app? The current best practice for this is captured in OAuth 2.0 for Native Apps (RFC 8252). However, the flow described by this specification requires that the mobile device system browser hold the user’s authenticated sessions. This has some drawbacks, such as users clearing their cookies or using private browsing mode, or, even worse, requiring the IDPs to support multiple users signed in at the same time (not something most IDPs support).

While RFC 8252 provides a mechanism for single-sign-on (SSO) across mobile apps provided by any vendor, we wanted a better solution for apps provided by Oath. So we looked at how we could enable mobile apps signed by the same vendor to share the signed-in state in a more “back channel” way. One important fact is that mobile apps cryptographically signed by the same vendor can securely share data via the device keychain on iOS and Account Manager on Android.

Using this as a starting point we defined a new OAuth2 scope, device_sso, whose purpose is to require the Authorization Server (AS) to return a unique “secret” assigned to that specific device. The precedent for using a scope to define specification behavior is OpenID Connect itself, which defines the “openid” scope as the trigger for the OpenID Provider (an OAuth2 AS) to implement the OpenID Connect specification. The device_secret is returned to a mobile app when the OAuth2 code is exchanged for tokens, and the mobile app then stores it in the device keychain along with the id_token identifying the user who signed in.

At this point, a second mobile app signed by the same vendor can look in the keychain and find the id_token, ask the user if they want to use that identity with the new app, and then use a profile of the token exchange spec to obtain tokens for the second mobile app based on the id_token and the device_secret. The full sequence of steps looks like this:

As a result of our identity consolidation work over the past year, we derived a set of principles identity architects should find useful for addressing use cases that don’t have a known specification or best practice. Moreover, these are applicable in many contexts outside of identity standards:

Spend time researching the existing set of standards and draft standards. As the diagram shows, there are a lot of standards out there already, so understanding them is critical.

Don’t invent something new if you can just profile or combine already existing specifications.

Make sure you understand the spirit and intent of the existing specifications.

For those cases where an extension is required, make sure to extend the specification based on its spirit and intent.

Ask the community for clarity regarding any existing specification or draft.

Contribute back to the community via blog posts, best practice documents, or a new specification.

As we learned during the consolidation of our Yahoo and AOL identity platforms, and as demonstrated in our examples, there is no need to resort to proprietary solutions for use cases that at first look do not appear to have a standards-based solution. Instead, it’s much better to follow these principles, avoid the NIH (not-invented-here) syndrome, and invest the time to build solutions on standards.


Configure IdentityServer for Xamarin Forms

In this new post, I explain how to configure IdentityServer for Xamarin Forms to integrate Web Authenticator using Xamarin Essentials.

Previously, I wrote a post about how to implement authentication in Xamarin Forms with IdentityServer, focusing only on the Xamarin side. Here I want to explain the IdentityServer configuration needed for the login to succeed.

Create a new client

Have you ever wondered how hard it would be to set up a minimal viable authentication server that uses industry standards and is usable from your mobile Xamarin application? Well, I have, and I believe I have found a solution that can be a great starting point and that you can expand should you ever need to do so.

One common industry standard is OpenID / OAuth2, which provides a standardized authentication mechanism that allows user identification securely and reliably. You can think of the identity service as a web server that identifies a user and enables the client (website, mobile app, etc.) to authenticate itself with another application server that the client uses.

The recommended flow for a mobile app

While the OAuth standard is open to anyone with a computer and an internet connection, I generally do not recommend writing your own implementation. My go-to solution for setting up an identity provider is the IdentityServer.

IdentityServer4 is built based on the OAuth spec. It is built on the trusted ASP.NET Core but requires quite some know-how to get the configurations and other settings ready for use. Luckily, there is a quickstart template that you can install via the dotnet command line and then make your server. You can find the repository here on GitHub. After following the install instructions, we can create a server with the following command:

dotnet new sts -n XamarinIdentity.Auth

The solution is pretty much ready to go but let’s look at the configuration of the IdentityServer in Config.cs and make some adjustments in the GetClients method.

Add a client

Based on the template, let’s make some changes that leave us with the following final configuration:

    public static IEnumerable<Client> GetClients(IConfigurationSection stsConfig)
    {
        return new List<Client>
        {
            // mobile client
            new Client
            {
                ClientName = "mobileclient-name-shown-in-logs",
                ClientId = "the-mobileclient-id-of-your-choice",
                AllowedGrantTypes = GrantTypes.Code,
                AllowOfflineAccess = true, // allow refresh tokens
                RequireClientSecret = false,
                RedirectUris = new List<string>
                {
                    "oidcxamarin101:/authorized"
                },
                PostLogoutRedirectUris = new List<string>
                {
                    "oidcxamarin101:/unauthorized",
                },
                AllowedScopes = new List<string>
                {
                    "openid",
                    "role",
                    "profile",
                    "email"
                }
            }
        };
    }

Generally, you can set the ClientName, ClientId, RedirectUris and PostLogoutRedirectUris to values of your choosing. The scopes represent the defaults. Further note that by setting AllowOfflineAccess to true, the client can request refresh tokens, which means that as long as the refresh token is valid, the user will not have to log in again: the app can use the refresh token to request a new access token. In mobile apps, this is generally the preferred behaviour since users usually have their personal device and therefore expect the app to “store” their login.

As you can see, the RedirectUris and PostLogoutRedirectUris use a custom URL scheme, oidcxamarin101:/, that identifies my app.

IdentityServer Admin

Very often we have to create a front-end to manage users, integrate the authentication with external services such as Facebook and Twitter, and secure API calls. IdentityServer offers a nice UI for administration but it is quite expensive. I found a very nice project on GitHub: Skoruba.IdentityServer4.Admin.

This is cool! The application is written in ASP.NET Core MVC using .NET 5.

Skoruba IdentityServer4 Admin UI

Add a new client

Adding a new client with this UI is quite easy. In the IdentityServer Admin website, create a new Client. Then, the important settings are:

Under Basic

Add this RedirectUris : oidcxamarin101:/authenticated

Allowed Grant Types: authorization_code

Under Authentication/Logout

Add Post Logout Redirect Uris: oidcxamarin101:/signout-callback-oidc

Basic configuration

Authentication/Logout configuration

I hope this is useful! Did you know that Microsoft is releasing .NET MAUI, which will replace Xamarin? Here is how to test it with Visual Studio 2022.

If you have any questions, please use the Forum. Happy coding!

The post Configure IdentityServer for Xamarin Forms appeared first on PureSourceCode.

from WordPress https://www.puresourcecode.com/dotnet/xamarin/configure-identityserver-for-xamarin-forms/


Top Practices to enhance Mobile App Security for Developers!

Mobile apps have changed how businesses and individuals operate. They make it much easier to reach a target audience, which boosts profits, so it is no wonder that demand for mobile application development is high worldwide. However, app development brings security risks that businesses should not ignore. Apps that are not engineered to withstand security threats become easy targets for attackers. Companies must therefore work proactively on building secure apps and follow mobile app security standards throughout the development process.

Do you know what hackers with malicious intent do?

Tamper with your app’s code and reverse-engineer it to create a hoax app containing malware.

Hack customer data and use it for fraud or identity theft.

Inject malware into apps to access data, grab screen passcodes, record keystrokes, etc.

Steal sensitive data, intellectual property, business assets, etc.

Access your IP and launch harmful attacks

Would you ever want something like this happening to your app? Never! So, mobile app security cannot be taken for granted. Yet, it is quite shocking that over 75% of the mobile apps fail to meet the basic security standards.

This blog outlines some of the crucial mobile app security measures that every mobile application development company must employ while they architect their apps. Before we delve deeper, let us quickly glance at some common security lapses that can occur while architecting secure mobile apps.

Notable Security Lapses in the Mobile Application Development Process

Not checking the cache appropriately and not using a cache cleaning cycle

Not doing thorough testing of the app

Applying weak encryption algorithms or no algorithms at all

Utilizing an unreliable data storage system

Neglecting the Binary protection

Picking up a code written by hackers by mistake

Neglecting the transport layer security

Not ensuring the server-side security

Mobile App Security Best Practices

Here are a few common security tips that are endorsed by various industry experts. These are applicable to both Android and iOS apps; however, some additional tips and guidelines are available for both platforms, which we will cover in another blog. That simply means, after applying the below practices, one can also implement best security practices for iOS app and Android app meant for respective platforms. For now, let’s get started with the common security measures for mobile apps.

App-code Encryption:

Encryption of the code and testing it for vulnerabilities is one of the most fundamental and crucial steps in the app development process. Before launching the app, mobile app developers protect the app code with encryption and practices like obfuscation and minification. Also, it is necessary to code securely for the detection of jailbreaks, checksum controls, debugger detection control, etc.

Data Encryption:

Along with the code encryption, it is essential to encrypt all the vital data that is exchanged over the apps. In the case of data theft, hackers shouldn’t be able to access and harm the data without the security key. So, key management must be a priority. File-level encryption secures the data in the files. The encryption of mobile databases is equally important. Also, various encryption algorithms can be used, such as the Advanced Encryption Standard (AES), the Triple Data Encryption Standard (Triple DES), and RSA.

Robust Authentication:

If the authentication is weak, severe data breaches can take place. Hence, it is imperative to ensure a powerful authentication in the apps. Make sure that your app only allows strong passwords. Utilizing two-factor authentication is a good practice. Also, biometric authentications like a fingerprint, retina scan, etc. are widely being used these days in mobile apps to assure high security.

Protecting the Binary Files:

Negligence towards binary protection gives a free-hand to hackers for injecting malware in apps. It can even cause severe data thefts and lead to monetary losses ultimately. Therefore, binary hardening procedures must be utilized to ensure the protection of binary files against any threats. Several hardening techniques like Buffer overflow protection or Binary Stirring can be applied in this scenario.

Servers’ and other Network Connections’ Security:

The security of servers and network connections is an integral part of mobile app security, as these are a leading target of hackers. To keep them secure, it is advisable to use HTTPS connections. Also, the APIs must be thoroughly verified to prevent eavesdropping on data transferred between the client and the servers. Another security measure is to scan the app frequently with automated scanners. Security can be enhanced further with encrypted connections or a VPN, i.e. a virtual private network.

API Security:

Since mobile application development hinges so much on APIs, protecting them from threats is not an option but a necessity. APIs are the channels for the flow of data, functionality, content, etc. between the cloud, apps, and users. Vital security measures like authorization, authentication, and identification help in the creation of a secure and robust API. To enhance the app security, an API gateway can be integrated. Moreover, for secure communication between APIs, mobile app developers can use authorization frameworks such as OAuth and OAuth2.

Exhaustive Testing and Updating the Apps:

To speed up the time-to-market, testing often falls by the wayside. But this step helps to catch security loopholes in the apps. So, before launching the apps and even after their launch, rigorous security testing must be conducted. Thus, potential security threats can be identified and resolved proactively. Also, updating the apps from time to time will help to eliminate security bugs, along with other issues that arise after the app is out in the market.

Code Signing Certificates:

Code signing certificates help in enhancing mobile code security. In this process, the publisher digitally signs the scripts and executables with a certificate issued by a certificate authority. These certificates help in authenticating the author and assure users that the code has not been edited or tampered with since it was signed. A Code Signing Certificate is a must for every publisher or mobile app developer.

Final Verdict:

Thousands of mobile apps arrive in the market daily, but if they aren’t protected well, they can pose a threat to the entire ecosystem. Needless to say, hackers and fraudsters are lurking around to steal important data and destroy app security. By contrast, a well-secured mobile app can prove to be highly efficient, reliable, and profitable for the business as well as the end-users.

So we can conclude that mobile app security holds the utmost importance in the whole process. A smart strategy along with the guidelines mentioned in this blog can help you build a powerful impeccable app with high-level security.

To know more about our core technologies, refer to links below

React Native App Development Company

Angular App Development Company

Ionic App Development Company


Cross Platform Google OAuth2

Implementing Google’s OAuth on the various platforms involved in one of our projects was far more challenging than we expected. We needed to implement OAuth on a .NET server, a .NET MVC web app, Reactjs, Chromebooks, and iOS. Each one required a unique library and flow.

.NET server

download the library here: https://www.nuget.org/packages?q=Google.Apis.oauth2&prerelease=true&sortOrder=relevance

general guide: https://developers.google.com/api-client-library/dotnet/guide/aaa_oauth

how to exchange an authorization code from a web app and get a refresh token: https://developers.google.com/identity/sign-in/web/server-side-flow

Chrome App

Use the chrome.identity library: https://developer.chrome.com/apps/app_identity

In the chrome.identity library you can use identity.getAuthToken to get an access token, or you can use identity.launchWebAuthFlow to get an authorization code that you can then send to your API to get a refresh token. See my StackOverflow answer here: https://stackoverflow.com/questions/42562115/access-code-for-google-oauth2-in-chrome-app-invalid-credentials/49696037#49696037

Reactjs Web App

https://github.com/anthonyjgrove/react-google-login

iOS Cordova App

https://github.com/EddyVerbruggen/cordova-plugin-googleplus

.NET MVC

We were not able to get the .NET MVC tutorials to work, and ended up using the js library. Followed this guide: https://developers.google.com/identity/sign-in/web/sign-in, then this one in order to get the refresh token: https://developers.google.com/identity/sign-in/web/server-side-flow


IFS Authentication flow with OAuth and OpenID Connect


In my previous article I wrote about Capturing IFS Aurena requests using Postman, which is a shortcut method for calling IFS main cluster resources that require OAuth2 and OpenID Connect authentication. This time I wanted to dive a little deeper and try out how we can create a successful request to IFS with OAuth authentication. What this post is about… What is OAuth and how it’s used in…
