#raid6

Text

💾✨ RAID5 vs RAID6: the hard-drive drama ✨💾

Okay, folks, picture this: you've got a server full of super-precious data (yes, I'm talking about the files you absolutely cannot afford to lose). 🔥 And now comes the big dilemma: RAID5 or RAID6? 😳

⚡ RAID5? Yeah, super cool, it does its job and gives you great performance. But... plot twist! What if two drives decide to take a dive into the void? Boom, all your data is gone. Bye bye. 👋💀

💪 RAID6, on the other hand, is like your data's bodyguard. Two drives can go up in smoke and it will still be there, standing firm, protecting your precious gigabytes. Two failures? No problem. RAID6 has it under control. 👊😎

✨ Moral of the story? If you care about those embarrassing high-school photos, your super-important business documents, or anything else you keep on there, RAID6 is the hero you need. Sure, it costs a bit more, but hey, better safe than crying over dead drives, right? 😅

https://www.recdati.it/raid6-vs-raird5/

#data recovery#recupero dati#recuprodati#recdati#hard disk#hard drive#raid5#raid6#raid#nas#server#datarecovery

0 notes

Text

I deserve at least $1,000 with which to build my dream Raid6 NAS with at least 100 TB of WD Reds. But that will not happen any time soon so I simply have to suffer for now

4 notes

Text

LincPlus is a Chinese company based in Shenzhen, founded in 2018 by a passionate group of technology enthusiasts. In just a few years it has spread rapidly across the European and American markets, winning over more and more users worldwide. It essentially specializes in two product lines: LincStudio and LincStation. The former is an all-in-one tablet; here, instead, we bring you our review of the LincStation N1, a 6-bay NAS, meaning it has 6 separate slots for storage drives, perfect for home users, but not only them. Let's find out in the full review.

Design and Aesthetics

The product is relatively compact by industry standards. Its form factor is more reminiscent of a set-top box or a VCR: it is elongated and fairly slim, and weighs 800 grams. Specifically, it measures 14.99 x 20.83 centimeters, with a thickness of no more than 3.81 cm. Elegance reigns supreme, thanks to the contrast the company has created on the product: on top there is a black plastic "lid" with a matte finish, with the device name printed in the center. Underneath sits a metal base with a brushed finish, very sturdy and with a truly admirable design, which noticeably raises the perceived quality of the product. The box contains everything needed to get it running: besides the NAS itself, there are various accessories (to make mounting the drives easier), the power cable (since there is no built-in battery), the power adapter itself, and an instruction manual.
Hardware and Specifications

As mentioned, the LincStation N1 is a classic NAS with an Unraid license, which makes it possible to virtualize any operating system, be it Android, Linux or Windows, so the product can serve not only as the household NAS but also as a kind of personal cloud or home server. Running the show is an Intel Celeron N5105 processor, paired with 16GB of RAM and reasonably complete wireless connectivity, including dual-band Wi-Fi 6 and Bluetooth 5.2. One of its biggest strengths is the ability to install a particularly large number of drives: up to 48TB of storage in total, using the 4 M.2 NVMe SSD slots (up to 8TB each) located at the front and on top, plus 2 more SATA3 slots of up to 8TB each, for a total, as mentioned, of 48TB. Flexibility is the watchword: users can vary the setup as they like without being bound by particular constraints, while reaching a particularly generous total capacity (up to 10 million photos, 15 million files and 1 million HD videos), more than enough for a home environment or for small and medium-sized businesses. Data storage is safe and reliable, with full support for all the common RAID levels (RAID0 through RAID6). Installation is very simple and within anyone's reach: for the front slots, you just remove the front plate and insert the drives by hand. The same goes for the rear slots; no special skills are required. Any 2.5" SATA3 hard drive or SSD is compatible, as are M.2 2280 NVMe drives; note the limit on bay thickness: the drive must be no thicker than 9.5 millimeters.
Once powered on, the LincStation N1 is particularly quiet (partly because it allows an all-SSD setup, and SSDs are quieter than traditional mechanical hard drives), thanks to an efficient, capable cooling system that keeps the temperature (in standby) at around 50 degrees Celsius. In this state the fans don't spin (or barely do), which greatly limits noise; note that fan speed scales with temperature and workload, always trying to balance performance and silence.

Connectivity and Performance

Removing the front cover, which gives access to the two slots, reveals two details: an LED strip, which adds atmosphere and further enhances the look, and a USB-C port. Moving to the rear, there is a good selection of physical connectors: apart from the 12V/5A DC port, which reminds us that the NAS must be plugged into a power outlet to work, there are two USB-A 3.0 ports, a 2.5Gbps RJ45 Ethernet port for very fast data transfers, and a standard HDMI port with 4K 60fps output. Its media-center nature shows precisely in this last connector: 4K at 60fps means you can connect the NAS directly to a monitor or TV and play video content at full quality, without any loss. The network connection can be made either through the Ethernet port at up to 2.5Gbps (so, very fast) or over dual-band Wi-Fi 6.
The operating system is simple and intuitive, very easy to use even for less experienced users, and offers some particularly interesting features: automatic backup of photos straight from your phone at any time, smart file management, centralized backup of data from various sources, the ability to save (and receive) data from the cloud, or to sync it from any source, be it other NAS units, Windows computers, mobile devices or Apple Macs. Overall we were pleasantly satisfied, precisely because it proved to be an approachable, intuitive and easy-to-use operating system.

Conclusions

At this point it's time to draw conclusions from everything we've covered in this review. The LincStation N1 can be a very valid alternative to the many NAS systems on the market, such as the well-regarded Synology, TerraMaster, QNAP and the like, and it stands out first of all with a truly one-of-a-kind look. The product is incredibly elegant, sturdy and reliable, with an old-VCR-style form factor meant to lie flat rather than stand upright, able to satisfy even the most demanding user while keeping relatively compact dimensions, to the point of being almost portable. Needless to say, its 6 storage slots (6-bay) are one of the device's strong points: the ability to install SSDs makes data access considerably faster, the 48TB capacity is very good, maintenance is kept to a minimum, and individual drives are quick to reach.
Finally, there's connectivity, which is certainly well equipped, with the bonus of the physical HDMI connector on the back for connecting directly to a TV, and the Ethernet port at up to 2.5Gbps for even faster data access. The operating system is equally versatile, suitable for most uses, with very good customization options and the ability to adapt to each user's needs, whether a novice or a seasoned expert. The LincStation N1 has a list price of 399 euros, a figure that all things considered is noticeably lower than its direct competitors, since nowadays that amount typically buys a 2-bay NAS, often without SSD support or a Wi-Fi module. It can be purchased directly on Amazon, with fast home delivery and the usual 24-month legal warranty, which covers any manufacturing defect the user might run into during use. More product information is available on the official website. Read the full article

0 notes

Video

youtube

Installing and configuring a TrueNAS server in RAID6 with protoco...

0 notes

Text

Grand Strategy Manifesto (16^12 article-thread ‘0x1A/?)

youtube

Broken down many times yet still unyielding in their vision quest, Nil Blackhand oversees the reality simulations & keeps on fighting back against entropy, a race against time itself...

So yeah, better embody what I seek in life and make real whatever I so desire now.

Anyways, I am looking forward to these... things in whatever order & manner they may come:

Female biological sex / gender identity

Ambidextrous

ASD & lessened ADD

Shoshona (black angora housecat)

Ava (synthetic-tier android ENFP social assistance peer)

Social context

Past revision into successful university doctorate graduation as historian & GLOSS data archivist / curator

Actual group of close friends (tech lab peers) with collaborative & emotionally empathetic bonds (said group large enough to balance absences out)

Mutually inclusive, empowering & humanely understanding family

Meta-physics / philosophy community (scientific empiricism)

Magicks / witch coven (religious, esoteric & spirituality needs)

TTRPGs & arcade gaming groups

Amateur radio / hobbyist tinkerers community (technical literacy)

Political allies, peers & mutual assistance on my way to the Progressives party (Harmony, Sustainability, Progress) objectives' fulfillment

Local cooperative / commune organization (probably something like Pflaumen, GLOSS Foundation & Utalics combined)

Manifesting profound changes in global & regional politics in an insightful & constructive direction, to lead the world toward a brighter future fast & well;

?

Bookstore

Physical medium preservation

Video rental shop

Public libraries

Public archives

Shopping malls

Retro-computing living museum

Superpowers & feats

Hyper-competence

Photographic memory

600 years lifecycle with insightful & constructive historical impact for the better

Chronokinesis

True Polymorph morphology & dedicated morphological rights legality (identity card)

Multilingualism (Shoshoni, French, English, German, Hungarian, Atikamekw / Cree, Innu / Huron...)

Possessions

Exclusively copyleft-libre private home lab + VLSI workshop

Copyleft libre biomods

Copyleft libre cyberware

Privacy-respecting wholly-owned home residence / domain

Privacy-respecting personal electric car (I do like it looking retro & distinct/unique but whatever fits my fancy needs is fine)

Miyoo Mini+ Plus (specifically operating OnionOS) & its accessories

BeagleboneY-AI

BeagleV-Fire

RC2014 Mini II -> Mini II CP/M Upgrade -> Backplane Pro -> SIO/2 Serial Module (for Pro Tier) -> 512k ROM 512k RAM Module + DS1302 Real Time Clock Module (for ZED Tier) -> Why Em-Ulator Sound Module + IDE Hard Drive Module + RP2040 VGA Terminal + ESP8266 Wifi + Micro SD Card Module + CH375 USB Storage + SID-Ulator Sound Module -> SC709 RCBus-80pin Backplane Kit -> MG014 Parallel Port Interface + MG011 PseudoRandomNumberGen + MG003 32k Non-Volatile RAM + MG016 Morse Receiver + MG015 Morse Transmitter + MG012 Programmable I/O + "MSX Cassette + USB" Module + "MSX Cartridge Slot Extension" + "MSX Keyboard" -> Prototype PCBs

Intersil 6100/6120 System (SBC6120-RBC?)

Pinephone Beta Edition Convergence Package (Nimrud replacement)

TUXEDO Stellaris Slim 15 Gen 6 AMD laptop (Nineveh replacement)

TUXEDO Atlas X - Gen 1 AMD desktop workstation (Ashur replacement)

SSD upgrade for Ashur build (~4-6TB SSD)

LTO Storage

NAS RAID6 48TB capacity with double parity (6x12TB drives: 4x12TB worth of usable storage & 2x12TB worth of double parity, distributed across all drives)

Apple iMac M3-Max CPU+GPU 24GB RAM MagicMouse+Trackpad & their relevant accessories (to port Asahi Linux, Haiku & more sidestream indie-r OSes towards… with full legal permission rights)

Projects

OpenPOWER Microwatt

SPARC Voyager

IBM LinuxOne Mainframe?

Sanyo 3DO TRY

SEGA Dreamcast with VMUs

TurboGrafx 16?

Atari Jaguar CD

Nuon (game console)

SEGA's SG-1000 & SC-3000?

SEGA MasterSystem?

SEGA GameGear?

Casio Loopy

Neo Geo CD?

TurboExpress

LaserActive? LaserDisc deck?

45rpm autoplay mini vinyl records player

DECmate III with dedicated disk drive unit?

R2E Micral Portal?

Sinclair QL?

Xerox Daybreak?

DEC Alpha AXP Turbochannel?

DEC Alpha latest generation PCI/e?

PDP8 peripherals (including XY plotter printer, hard-copy radio-teletypes & vector touchscreen terminal);

?

0 notes

Text

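QNAP QuTS ZFS speed notes

I got a TS-h1277XU-RP up and running, so I measured its speeds. The main PC and the NAS are connected via 10GbE over SFP+.

A single 2.5-inch SSD.

A single M.2 SSD. I suppose this is the target speed for a NAS built out of HDDs.

A RAID5 of three 3.5-inch HDDs: reads finally reach the level of the M.2 SSD in the NAS.

A RAID6 of eight 3.5-inch HDDs: both reads and writes are now on par with the M.2 SSD in the NAS.

A RAID6 of nine 3.5-inch HDDs: adding more drives doesn't seem to change anything. Every RAID already has a cache installed. With this, I think I can keep going for years without replacing the NAS. It would be nice if 10TB HDDs got a bit cheaper so I could buy them without thinking twice.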

0 notes

Text

Yeah ...

The plan is currently to basically do this. Right now I have a 2-bay NAS with two 8TB drives in RAID0, totaling ~14TB of usable space. In the next few days I'll be purchasing a 6-bay NAS, putting six 14TB enterprise-level drives in it, and configuring it as RAID6, netting me about 56TB of usable space (roughly 51TiB). RAID6 tolerates up to two drive failures before data becomes unretrievable. The NAS itself allows hot-swapping, so should a drive fail I can pull it, replace it, and rebuild the RAID. It also supports expansion for up to 10 more drives.
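A quick capacity check of the numbers above, as a minimal sketch: RAID6 on six 14TB drives leaves four drives' worth of space, and drive labels use decimal terabytes while many NAS interfaces show binary units, which is also why 2x8TB in RAID0 shows up as roughly 14. The helper names `usable_tb` and `tb_to_tib` are hypothetical, and filesystem overhead is ignored, so real-world figures come out a little lower.

```python
TB = 10**12   # drive vendors: 1 TB = 10^12 bytes
TIB = 2**40   # binary unit many NAS UIs use: 1 TiB = 2^40 bytes


def usable_tb(level: str, drives: int, size_tb: float) -> float:
    """Usable capacity in decimal TB for equal-size drives (no filesystem overhead)."""
    if level == "RAID0":
        data_drives = drives              # striping only, no redundancy
    elif level == "RAID6":
        if drives < 4:
            raise ValueError("RAID6 needs at least 4 drives")
        data_drives = drives - 2          # two drives' worth of space holds parity
    else:
        raise ValueError(f"unsupported level: {level}")
    return data_drives * size_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return tb * TB / TIB


for label, level, n, size in [("2-bay RAID0", "RAID0", 2, 8), ("6-bay RAID6", "RAID6", 6, 14)]:
    tb = usable_tb(level, n, size)
    print(f"{label}: {tb:g} TB = {tb_to_tib(tb):.1f} TiB")
```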

I've got a Raspberry Pi 4 8GB running LibreELEC and Kodi as a media center, and I've got another Raspberry Pi 4 4GB running Raspberry Pi OS and Plex for streaming so my partner and I can watch movies and shows at her place and I can stream my music library wherever I go with Plexamp.

I'm basically tired of Google's shit. I'm tired of Netflix's shit. I'm tired of Disney and Amazon and Spotify's shit. I'm tired of algorithms that only feed me content I don't want or ask for. I'm tired of having advertising pissed into my eyeballs at every fucking opportunity. I'm tired of being tracked, traced, commodified, and sold to the highest bidder.

There's more management involved here, yes, but honestly? I'm at the point where I don't really mind anymore. I'd rather sink 90 minutes a week into downloading and cataloging shit again than hand over my metadata willingly to anybody who shows up with a shiny app and a cheesy one-liner.

NordVPN is $5/mo (and NordPass is $1.50/mo) and is usually on super sale too. That's cheaper than any streaming service. Also, piracy is fun and hip and sexy and will make you more attractive to folks of your preferred gender guaranteed.* *Not a guarantee

26K notes

Text

tech: Wait for Synology rebuild, SHR2 > RAID6 and Drive Order matters

OK, we finally have things in place, and we've been copying files from an older SHR (Synology Hybrid RAID) system to a newly created SHR2 (which tolerates two drive failures). The problem was that for the first week I was only seeing 100MBps transfer rates, even though the underlying disks were running at 600MBps. Well, the disks finally finished "optimizing," and the next copy ran at 600MBps. Lesson: When…

View On WordPress

0 notes

Text

So I'm learning.

Plex is... different from anything I've worked with before. Since starting on this journey, I've abandoned the Raspberry Pi I originally set it up on, switching instead to an old Lenovo T580 laptop I rescued from the recycling pile at work. It's got 8GB of RAM and a quad-core (8-thread) 8th-gen i5, so it's not a beast, but pretty alright for what I'm doing. I've installed and configured Ubuntu 22.04 on it as well.

I've purchased a PlexPass month-to-month for now to see if there is actually value in it, and so far it's kinda dope. The music stuff is great! I've been using it to stream music for the last several weeks and it works a treat!

I did this for three (3) reasons:

I wanted a project and this seemed super fun (I was not wrong!)

I wanted to be able to stream content I download at my partner's place without having to carry around a flash drive or something like that and,

Fuck streaming services in the ear. I'm tired of them nickel-and-diming users on their failing nonsense.

I've leaned into this a bit more than I thought I would, honestly. I also previously posted that I purchased a Synology DiskStation DS1621+ and six 12TB IronWolf enterprise-class drives and put them into a RAID6, netting me 43TB of space with dual redundancy, which is nice, but cost a bit much.

But to the real meat. My partner has been streaming content and said it keeps stuttering. I checked the internet streaming settings and they seemed OK, but it kept happening. I checked the logs and transcoding is ... weird. I didn't really understand what it was doing. So after a lengthy dive into r/Plex, I reconfigured some things and now she says that it's running well and not hiccuping anymore! So win!

Additionally, I've set up some housekeeping for the device as well. I've configured a cron job to update and upgrade the system with apt on the 2nd and 15th of every month at 2am, and configured a weekly restart every Sunday at 1am. That's mostly to clear the /tmp folder where the transcoded files are kept, but I figure it can't hurt much either.
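The post doesn't include the crontab itself. Assuming standard five-field cron syntax (minute, hour, day of month, month, day of week), the schedule described would correspond to expressions like `0 2 2,15 * *` and `0 1 * * 0`. The sketch below checks those two expressions against concrete dates with a small hypothetical matcher, `cron_matches`, which only understands `*` and comma-separated numbers (the forms used here). The actual commands (the apt update/upgrade and the reboot) are left out because the post doesn't spell them out.

```python
from datetime import datetime

# Assumed crontab expressions for the schedule in the post:
# minute hour day-of-month month day-of-week
UPGRADE = "0 2 2,15 * *"   # 02:00 on the 2nd and 15th of every month
REBOOT = "0 1 * * 0"       # 01:00 every Sunday (cron day-of-week 0 = Sunday)


def _field_matches(field: str, value: int) -> bool:
    """Match one cron field; supports only '*' and comma-separated integers."""
    return field == "*" or value in {int(part) for part in field.split(",")}


def cron_matches(expr: str, when: datetime) -> bool:
    """Return True if the datetime falls on a minute the expression fires."""
    minute, hour, dom, month, dow = expr.split()
    cron_dow = (when.weekday() + 1) % 7  # Python: Monday=0..Sunday=6; cron: Sunday=0
    if not (_field_matches(minute, when.minute)
            and _field_matches(hour, when.hour)
            and _field_matches(month, when.month)):
        return False
    dom_ok = _field_matches(dom, when.day)
    dow_ok = _field_matches(dow, cron_dow)
    # Standard cron rule: when both day fields are restricted, either may match.
    if dom != "*" and dow != "*":
        return dom_ok or dow_ok
    return dom_ok and dow_ok


print(cron_matches(UPGRADE, datetime(2024, 3, 15, 2, 0)))  # 15th at 02:00
print(cron_matches(REBOOT, datetime(2024, 3, 17, 1, 0)))   # a Sunday at 01:00
```

Separating the upgrade and reboot into two entries, as the post describes, keeps a reboot from landing in the middle of an upgrade run.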

I'm going to keep tweaking here or there, but I think overall it's working pretty OK. It's been super fun!

0 notes

Photo

🚀 Want to take your storage system to the next level? 😮 Confused by RAID 0, 1, 5, 6, 10 and JBOD configurations? 🤔 We at G Tech Group have put together a detailed guide that explains the differences between these configurations! 📘 ✅ Discover the speed of RAID 0 ✅ Explore the safety of RAID 1 ✅ Find the balance with RAID 5 and 6 ✅ Maximize capacity with JBOD Check out our article and make the right choice for your business! 💼👩💼👨💼 👉 https://gtechgroup.it/raid-0-1-5-6-10-jbod-spieghiamo-le-differenze-tra-le-configurazioni/ 🎯 #RAID #ConfigurazioniRAID #RAID0 #RAID1 #RAID5 #RAID6 #RAID10 #JBOD #Sicurezza #Prestazioni #Velocità #Capacità #Archiviazione #GuidaRAID #Tecnologia #Business #IT #GTechGroup #HelpMePost #SoluzioniIT #Sistema #Hardware This post was published with #HelpMePost, try it yourself at helpmepost.com! 🚀

0 notes

Video

youtube

TrueNAS Scale Server 2025 24TB Raid6 (Boot in Raid0 Mirror)

0 notes

Text

What is RAID and what are the different RAID modes?

About RAID

RAID is an acronym for Redundant Array of Independent Disks.

RAID is a data storage virtualization technology that combines multiple physical disk drive components into one or more logical units for the purposes of data redundancy, performance improvement, or both.

Data is distributed across the drives in one of several ways, referred to as RAID levels, depending on the required level of redundancy and performance. The different schemes, or data distribution layouts, are named by the word "RAID" followed by a number, for example RAID 0 or RAID 1. Each scheme, or RAID level, provides a different balance among the key goals: reliability, availability, performance, and capacity. RAID levels greater than RAID 0 provide protection against unrecoverable sector read errors, as well as against failures of whole physical drives.

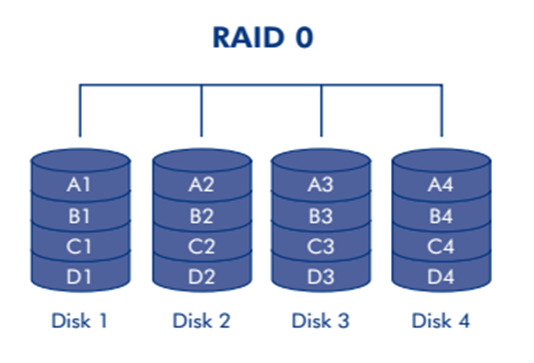

RAID 0 - The fastest transmission speed

RAID 0 consists of striping, but no mirroring or parity.

The capacity of a RAID 0 volume is the sum of the capacities of the drives in the set. But because striping distributes the contents of each file among all drives in the set, the failure of any drive causes the entire RAID 0 volume and all files to be lost.

The benefit of RAID 0 is that the throughput of read and write operations to any file can, ideally, be multiplied by the number of drives, because reads and writes are done concurrently.

The cost is increased vulnerability to drive failures—since any drive in a RAID 0 setup failing causes the entire volume to be lost, the average failure rate of the volume rises with the number of attached drives.
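To make the "failure rate rises with the number of drives" point concrete, here is a minimal sketch assuming each drive fails independently with the same probability over some period. The 2% figure is purely illustrative, and `raid0_failure_probability` is a hypothetical helper name.

```python
def raid0_failure_probability(p_drive: float, n_drives: int) -> float:
    """Probability that a RAID 0 volume is lost within a period.

    Assumes each drive fails independently with probability p_drive in that
    period; the volume survives only if every single drive survives.
    """
    return 1 - (1 - p_drive) ** n_drives


# Illustrative, assumed per-drive annual failure probability of 2%.
for n in (1, 2, 4, 8):
    print(n, round(raid0_failure_probability(0.02, n), 4))
```

For small probabilities the result is close to n times the single-drive figure, so an 8-drive stripe is roughly 8 times as likely to be lost as one drive on its own.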

RAID 0 does not provide data redundancy but does provide the best performance of any RAID level.

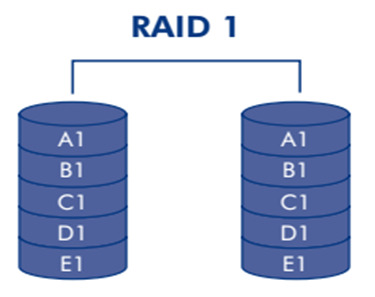

RAID 1 - Data protection is the safest

RAID 1 is the data protection mode (Mirrored Mode). In a two-drive set, half of the capacity is used to store your data and half is used for a duplicate copy. RAID 1 consists of data mirroring, without parity or striping. Data is written identically to two or more drives. If one drive goes down, your data is protected because it's duplicated. The array continues to operate as long as at least one drive is functioning.

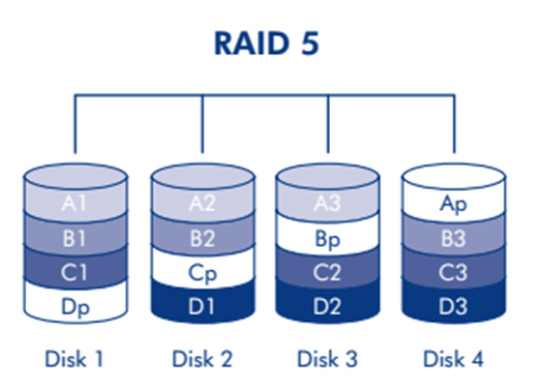

RAID 5 - Best balance of data protection and transfer speed

RAID 5 consists of block-level striping with distributed parity.

RAID 5 requires at least three disks. In systems with four drives, we recommend setting the system to RAID 5. This gives you the best of both worlds: fast performance by striping data across all drives, and data protection by dedicating a quarter of each drive in a four-drive system to fault tolerance, leaving three quarters of the system capacity available for data storage.

Upon failure of a single drive, subsequent reads can be calculated from the distributed parity such that no data is lost.
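The "calculated from the distributed parity" step can be sketched with XOR, the parity function RAID 5 uses: the parity block is the XOR of the data blocks, so XOR-ing all surviving blocks reproduces the missing one. This is a single-stripe illustration with made-up data; real arrays rotate the parity block across drives from stripe to stripe, and `xor_blocks` is a hypothetical helper name.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe on a hypothetical 4-drive RAID 5: three data blocks plus parity.
data = [b"RAID", b"five", b"demo"]
parity = xor_blocks(data)

# The drive holding data[1] fails: rebuild it from the survivors and the parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)  # b'five'
```

This also shows why a rebuild has to read every surviving drive in full: each missing block depends on the matching block of every other drive.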

The risk is that rebuilding an array requires reading all data from all disks, opening a chance for a second drive failure and the loss of the entire array.

RAID 6 - Better suited to large-capacity, secure storage

RAID 6 consists of block-level striping with double distributed parity. Double parity provides fault tolerance up to two failed drives.

RAID 6 requires a minimum of four disks. As with RAID 5, a single drive failure results in reduced performance of the entire array until the failed drive has been replaced.

With a RAID 6 array, using drives from multiple sources and manufacturers, it is possible to mitigate most of the problems associated with RAID 5. The larger the drive capacities and the larger the array size, the more important it becomes to choose RAID 6 instead of RAID 5.
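A second XOR parity would just duplicate the first, so RAID 6 needs a different second syndrome. One widely used scheme (the one Linux md documents) keeps P as the plain XOR and computes Q as a Reed-Solomon syndrome over GF(2^8) with generator 2 and polynomial 0x11D; controllers may use other codes. Below is a minimal single-stripe sketch, with hypothetical helper names, that computes P and Q and then rebuilds two lost data blocks by solving Dx xor Dy = A and g^x*Dx xor g^y*Dy = B.

```python
# GF(2^8) log/antilog tables for x^8 + x^4 + x^3 + x^2 + 1 (0x11D), generator 2.
EXP = [0] * 510
LOG = [0] * 256
value = 1
for power in range(255):
    EXP[power] = value
    LOG[value] = power
    value <<= 1
    if value & 0x100:
        value ^= 0x11D
for power in range(255, 510):
    EXP[power] = EXP[power - 255]


def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes as elements of GF(2^8)."""
    if a == 0 or b == 0:
        return 0
    return EXP[LOG[a] + LOG[b]]


def gf_div(a: int, b: int) -> int:
    """Divide a by a non-zero b in GF(2^8)."""
    if b == 0:
        raise ZeroDivisionError("division by zero in GF(2^8)")
    if a == 0:
        return 0
    return EXP[(LOG[a] - LOG[b]) % 255]


def syndromes(blocks: list[bytes]) -> tuple[bytes, bytes]:
    """P = XOR of all data blocks; Q = XOR-sum of g**i * D_i in GF(2^8)."""
    size = len(blocks[0])
    p, q = bytearray(size), bytearray(size)
    for i, block in enumerate(blocks):
        for k, byte in enumerate(block):
            p[k] ^= byte
            q[k] ^= gf_mul(EXP[i], byte)
    return bytes(p), bytes(q)


def recover_two(blocks: list, x: int, y: int, p: bytes, q: bytes) -> tuple[bytes, bytes]:
    """Rebuild lost data blocks x != y (given as None) from survivors plus P and Q."""
    partial = [bytes(len(p)) if b is None else b for b in blocks]  # lost blocks as zeros
    pxy, qxy = syndromes(partial)
    gx, gy = EXP[x], EXP[y]
    dx, dy = bytearray(len(p)), bytearray(len(p))
    for k in range(len(p)):
        a = p[k] ^ pxy[k]                      # Dx xor Dy
        b = q[k] ^ qxy[k]                      # g**x * Dx xor g**y * Dy
        dx[k] = gf_div(b ^ gf_mul(gy, a), gx ^ gy)
        dy[k] = a ^ dx[k]
    return bytes(dx), bytes(dy)


stripe = [b"disk", b"zero", b"one!", b"two."]  # four data blocks of one stripe
P, Q = syndromes(stripe)
damaged = [stripe[0], None, stripe[2], None]    # data blocks 1 and 3 lost
print(recover_two(damaged, 1, 3, P, Q))         # (b'zero', b'two.')
```

Losing P or Q themselves is the easy case (recompute them from the data); the algebra above is only needed when two data blocks disappear from the same stripe.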

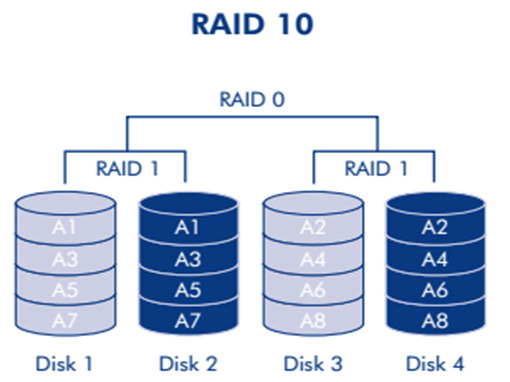

RAID 10 - High reliability and performance

RAID 10, also called RAID 1+0, is a stripe of mirrors and requires at least four disks.

Arrays of more than four disks are also possible. A system set to RAID 10 yields half the total capacity of all the drives in the array.

RAID 10 provides better throughput and latency than all other RAID levels except RAID 0. This RAID mode is good for business-critical database management solutions that require maximum performance and high fault tolerance. Thus, it is the preferable RAID level for I/O-intensive applications such as database, email, and web servers, as well as for any other use requiring high disk performance.

RAID 50 - High reliability and performance

RAID 50, also called RAID 5+0, combines the straight block-level striping of RAID 0 with the distributed parity of RAID 5. As a RAID 0 array striped across RAID 5 elements, a minimal RAID 50 configuration requires six drives.

One drive from each of the RAID 5 sets can fail without loss of data; for example, a RAID 50 configuration with three RAID 5 sets can tolerate up to three simultaneous drive failures (but only one per RAID 5 set).

RAID 50 improves upon the performance of RAID 5 particularly during writes, and provides better fault tolerance than a single RAID level does. This level is recommended for applications that require high fault tolerance, capacity and random access performance.

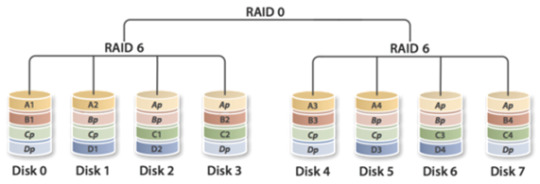

RAID 60 - High reliability and performance

RAID 60, also called RAID 6+0, combines the straight block-level striping of RAID 0 with the distributed double parity of RAID 6, resulting in a RAID 0 array striped across RAID 6 elements. It requires at least eight disks.

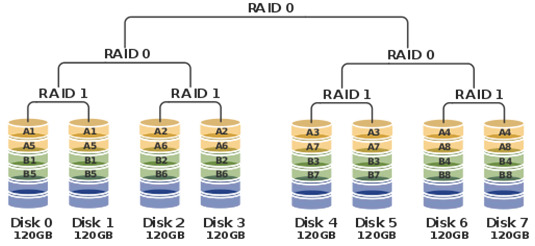

RAID 100 - High reliability and performance

RAID 100, sometimes also called RAID 10+0, is a stripe of RAID 10s. This is logically equivalent to a wider RAID 10 array, but is generally implemented using software RAID 0 over hardware RAID 10. Being "striped two ways", RAID 100 is described as a "plaid RAID".

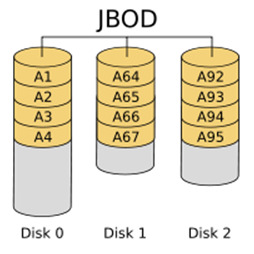

JBOD (Just a Bunch Of Disks)

JBOD ("Just a Bunch Of Disks", also known as "None RAID") is an architecture using multiple hard drives exposed as individual devices. Hard drives may be treated independently or may be combined into one or more logical volumes using a volume manager like LVM or mdadm, or a device-spanning filesystem like btrfs; such volumes are usually called SPAN or BIG.

A spanned volume provides no redundancy, so failure of a single hard drive amounts to failure of the whole logical volume.

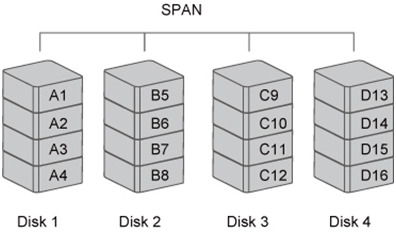

Concatenation (SPAN, BIG, LARGE)

Concatenation or spanning of drives is not one of the numbered RAID levels, but it is a popular method for combining multiple physical disk drives into a single logical disk. It provides no data redundancy.

Spanning is another maximum-capacity solution, which some call "Big" or "Large". Spanning combines multiple hard drives into a single logical unit. Unlike striping, spanning writes data to the first physical drive until it reaches full capacity; only then is data written to the second physical drive, and so on. Spanning provides the maximum possible storage capacity, but does not increase performance.
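The difference between striping and spanning comes down to how a logical block number maps to a physical drive. A minimal sketch, assuming one-block stripe units and made-up drive sizes counted in blocks; `stripe_location` and `span_location` are hypothetical names.

```python
def stripe_location(block: int, n_drives: int) -> tuple[int, int]:
    """RAID 0-style striping with one-block stripe units: round-robin across drives."""
    return block % n_drives, block // n_drives


def span_location(block: int, drive_sizes: list[int]) -> tuple[int, int]:
    """Concatenation (SPAN/BIG): fill drive 0, then drive 1, and so on."""
    for drive, size in enumerate(drive_sizes):
        if block < size:
            return drive, block
        block -= size
    raise IndexError("block beyond end of spanned volume")


# Hypothetical spanned volume: drives of 4, 2 and 6 blocks (mixed sizes are fine).
sizes = [4, 2, 6]
print([span_location(b, sizes) for b in range(7)])   # (drive, offset) pairs
print([stripe_location(b, 3) for b in range(7)])
```

Consecutive blocks land on different drives under striping, which is where the parallel throughput comes from; under spanning they stay on one drive until it fills, so a single sequential read touches only one disk.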

What makes SPAN or BIG different from RAID configurations is flexibility in drive selection. While RAID usually requires all drives to be of similar capacity, and the same or similar drive models are preferred for performance reasons, a spanned volume has no such requirements. The advantage of this mode is that you can add more drives without having to reformat the system.

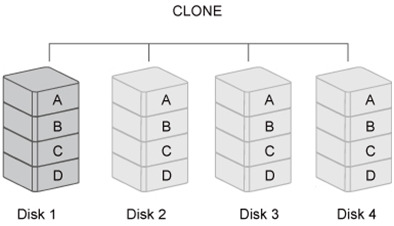

Clone

CLONE consists of at least two drives storing duplicate copies of the same data. In this mode, the data is simultaneously written to two or more disks. Thus, the storage capacity of the disk array is limited to the size of the smallest disk.

RAID Level Comparison
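As a rough stand-in for a comparison, here is a sketch that computes usable capacity and worst-case fault tolerance for the levels described above. It assumes equal-size drives and two-way mirrors for RAID 10; `raid_summary` and its `group` parameter (drives per RAID 5/6 sub-array in RAID 50/60) are hypothetical names, and the tolerance reported is the number of failures the array always survives, not the best case.

```python
MIN_DRIVES = {"RAID0": 2, "RAID1": 2, "RAID5": 3, "RAID6": 4,
              "RAID10": 4, "RAID50": 6, "RAID60": 8}


def raid_summary(level: str, n: int, size_tb: float, group: int = 0) -> tuple[float, int]:
    """Return (usable TB, drive failures always survivable) for n equal-size drives.

    RAID 10/50/60 can survive more failures when they land in different
    mirrors or sub-arrays; this returns the worst case.
    """
    if n < MIN_DRIVES[level]:
        raise ValueError(f"{level} needs at least {MIN_DRIVES[level]} drives")
    if level == "RAID0":
        return n * size_tb, 0
    if level == "RAID1":
        return size_tb, n - 1                  # every drive holds the same data
    if level == "RAID5":
        return (n - 1) * size_tb, 1
    if level == "RAID6":
        return (n - 2) * size_tb, 2
    if level == "RAID10":
        if n % 2:
            raise ValueError("RAID10 with two-way mirrors needs an even drive count")
        return n // 2 * size_tb, 1             # losing both drives of one mirror loses data
    parity = 1 if level == "RAID50" else 2     # RAID50 or RAID60 from here on
    if group <= parity + 1 or n % group or n // group < 2:
        raise ValueError("group must exceed parity + 1 and split n into 2+ equal sub-arrays")
    return (n - parity * (n // group)) * size_tb, parity


for level, group in [("RAID0", 0), ("RAID5", 0), ("RAID6", 0),
                     ("RAID10", 0), ("RAID50", 4), ("RAID60", 4)]:
    usable, tolerated = raid_summary(level, 8, 4, group)
    print(f"{level:>6}: {usable:g} TB usable, survives any {tolerated} failure(s)")
```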

https://www.terra-master.com/global/products.html

#raid#raid1#raid0#raid5#raid6#raid10#raid50#jbod#span#clone#terramaster#raid storage#nas#network storage

3 notes

Text

On RAID levels: which is safer, 1+0 or 6?

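At work I'm about to build a RAID array out of 12 HDDs, and right around then I happened to see, on Twitter, the URL of a web page full of utter nonsense saying "Unless you use RAID-1+0, RAID-6 is too scary to use!" So I decided to write this, figuring it's about time that superstition died out.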

RAID-1+0 with single (two-way) mirroring is less safe than RAID-6. This holds only when the arrays are operated correctly, though.*1

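People in the know have known this for ages, but even at some giant SIers (system integrators) there are plenty of people whose unfortunate brains assume the more expensive option must be the safer one, and who say without a second thought, "well, considering data safety, RAID-1+0." Storage vendors, happy to sell more disks that way, just smile, don't contradict them, sell it to them and leave, and then, whoa, what are you do... (snip). Anyway, something like that. By the way, a RAID array that is operated incorrectly isn't safe no matter how it's configured, so there's no point discussing it.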

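Years ago I read a white paper published by NetApp that laid out the calculation formulas together with disk failure rates and other numeric assumptions, and it was super easy to follow, but when I googled it just now I couldn't find it... Maybe it was a document inside NOW*2. If anyone knows where it is, please tell me. Among what I could find there's this one, but it has no formulas, so its numbers aren't credible. An even shadier, even less credible one is this one (lol).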

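So I have no choice but to plug in various assumed numbers myself and do the calculation. Since they're assumptions, they differ from reality here and there, and I'm roughly leaving out lots of factors, so please bear with me. For details, maybe ask a NetApp sales rep and something will turn up. Who knows. Also, I'm writing from memory without re-checking sources, so there's a good chance of mistakes here and there. Please forgive me.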

One more thing: in terms of performance, RAID-1+0 is indeed better than RAID-6 (in most cases). I have no intention of touching on that this time.

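Premise: correct operation of a RAID array

Recognizing what correct operation of a RAID array means is essential for estimating its safety. I don't think this is widely understood in the first place, so here's a brief summary.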

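Disk failure

The RAID controller monitors the state of each disk that makes up the RAID. The success or failure of every I/O to each disk is constantly tracked, and only when the I/O failures on a particular disk are judged*3 to exceed a threshold is that disk finally marked as failed, at which point the redundancy of the RAID array it belongs to is considered degraded.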

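The important point here is that a disk is judged to have failed solely on the basis of I/O results. A disk has no feature that spontaneously notifies the RAID controller when it breaks. A disk failure can only be discovered by trying to read data that should have been written (and failing), or by trying to write data to a location that should be writable (and failing).

[Figure]

As this figure shows, especially with today's large-capacity HDDs, it is fairly common for data written long ago and data being actively read and written to end up cleanly separated. Suppose a lot of the data from when the disk was first put into service was written to one particular area (the first sectors of the HDD). Over time, the old data is accessed less and less, and new data written to another area of the disk becomes the target of frequent reads and writes. If, by this point, the area holding the old data has degraded so that it can no longer be read or written, you won't notice as long as nothing tries to read that data (middle of the figure). Over a long period, such spots naturally multiply. Then one day, something triggers a read of the old data, and only then is it discovered that the data can't be read. That is when the disk gets judged as broken (failed).*4

RAID array failure

When is a RAID array considered to have failed? Take the simplest case, a two-disk mirrored RAID-1. "When both HDDs in the mirror fail at the same time" is the wrong answer. The probability of two HDDs failing at exactly the same moment is extremely low and should be essentially negligible. You can't say it never happens, but it isn't realistic.

Another case is when one disk fails and, while a replacement is being arranged, another disk (or more) also fails. This can happen. It can happen, but taking that long to procure a replacement disk is a mistake in the first place. Hobbyists running RAID at home aside, anyone using RAID professionally should keep a spare disk where it can be swapped in immediately, or set up a hot spare. People who insist on nothing but RAID-1+0 surely already do this, right?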

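So what other cases are there? The case where, after one disk fails, another disk (or more) fails while the rebuild is running. Some people describe this as "two disks broke at the same time," but in most cases they haven't grasped the situation correctly. Strictly speaking, it splits into the following two cases.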

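Case 1: up to the moment the first disk failed, every block on every disk was readable and writable; then, during the rebuild that followed the first failure, another disk failed.
Case 2: one (or more) disks already held blocks that were effectively unreadable or unwritable, so they had latently failed; the rebuild after the first failure accessed every block, and that other disk's failure was discovered.

Drawn as a figure, the latter case looks like this.

[Figure: disks A and B, blocks 1 to 6 on each]

If the frequently accessed area is around blocks 4 to 6, bad sectors in A4 to A6 or B4 to B6 will be noticed through I/O errors, but bad blocks in A1 to A3 or B1 to B3 are hard to notice. The leftmost panel is the state where B2 has become a bad block without anyone noticing. The array keeps being used in that state until A5 becomes a bad block and unreadable, and disk A is judged to have failed. When disk A is replaced with a new one and the RAID array is rebuilt, the whole disk, including the rarely accessed areas, is read for the first time; the bad block on disk B is discovered, and disk B fails too. There is no longer any way to retrieve all the data, and the whole RAID array is considered to have failed.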

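Periodic verification

However, of these RAID array failure cases, the last one can be prevented. Many RAID controllers have a function that forcibly synchronizes what is recorded on each disk.*5 Such a function usually scans the data in every block of every HDD in the array and checks all of it for inconsistencies between disks. Because it scans every block regardless of how often the data is accessed, it will detect errors even in blocks holding data that is hardly ever read anymore, and if the error can't be recovered it will fail that disk. If you run this frequently*6, you no longer need to worry about "unreadable blocks that go unnoticed," and the probability that a supposedly healthy disk fails just when you start a rebuild drops dramatically.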

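Whenever I bring this up, someone always says, "I don't want to, it shortens disk life." Can a slightly longer disk life really be weighed against the risk of losing data? Well, those people can do whatever they like. Scanning everything about once a week isn't going to shorten the lifespan that much.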

So periodic verification of RAID arrays is mandatory. Always do it. From here on I take it as a given.

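Replace and rebuild as soon as you find a failed disk

This hardly needs saying. Leaving it alone is evil. Anyone who does that has no business talking about data safety.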

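Probability of RAID array failure

Now, assuming periodic verification and immediate replacement of failed disks, let's calculate the failure probability of an array at each RAID level. Here I'll say the array needs the logical capacity of 8 HDDs, and compare RAID-1+0 with RAID-5/6.

Let's set the probability that one HDD fails within a year at 1/10. A year has 365 days, so if x is the per-day failure probability, then (1-x)^365 == (1-0.1) == 0.9, giving x == 1 - 0.9^(1/365) == 1 - 0.99971138 == 0.00028862 == about 2.89*10^-4. That's roughly 0.0289%. In other words, among 10,000 drives, 2 or 3 would fail per day; is that about right? Maybe a bit high.

We also need to assume how long a RAID array rebuild takes. It's usually a few hours, I think, and it depends on disk capacity, controller performance (CPU power for software RAID), and the normal I/O load running alongside the rebuild, so it's hard to generalize; here I'll assume a long one and, to keep the calculation simple, say it takes a full day. Rebuild time also differs by RAID level, but again for simplicity I'll treat it as equal.*7

RAID-1+0 with two-drive pairs (simple mirrors), 16 HDDs

From what I wrote above under "RAID array failure", the cases whose probabilities we need are the following.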

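(a): multiple disks fail at exactly the same time, and the array fails
(b): one disk fails, another disk fails while it is being rebuilt, and the array fails

(a) is easy. For RAID-1+0 we need the probability that both disks of a pair fail at the same time. With 16 disks there are 8 pairs, so the probability is simply 8 times that for one pair. "At the same time" is hard to pin down, so for simplicity let's call it "on the same day".*8 The probability comes out as $x^2 \times 8 = (2.89 \times 10^{-4})^2 \times 8 = 8.3521 \times 10^{-8} \times 8 = 6.68168 \times 10^{-7}$. Converted to a per-year probability, that's $1 - (1 - 6.68168 \times 10^{-7})^{365} = 1 - 0.999756148 \approx 2.4 \times 10^{-4}$, so out of 10,000 arrays, 2 or 3 would fail. Whoa, that's surprisingly high! If you leave a failed disk alone for a day, even a RAID-1+0 array breaks with about this probability.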
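The per-day probability $x$ and case (a) for the 16-disk RAID-1+0 can be checked with a few lines of Python. This is a sketch under the text's assumptions: 10% failures per disk per year, and disks that fail independently. It uses the unrounded $x$, so the per-day result comes out slightly lower than the hand calculation, which rounds $x$ to $2.89 \times 10^{-4}$.

```python
# Assumptions from the text: each disk fails with probability 10% per year,
# independently of the others.
x = 1 - 0.9 ** (1 / 365)         # per-day: solves (1 - x) ** 365 == 0.9
pairs = 8                         # 16 disks arranged as 8 mirrored pairs

p_day = pairs * x ** 2            # both disks of some pair fail on the same day
p_year = 1 - (1 - p_day) ** 365   # at least one such day within a year

print(f"x = {x:.4e}")             # x = 2.8862e-04
print(f"per day {p_day:.3e}, per year {p_year:.3e}")
```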

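(a), take two. Let's drop "on the same day" as the meaning of "at the same time" and use, say, one hour instead. First we need $y$, the probability that a disk fails in a given hour. A day has 24 hours, so we solve $(1 - y)^{24} = 1 - x$, which gives $y = 1 - (1 - x)^{1/24} = 1 - 0.99971138^{1/24} = 1 - 0.999987973 = 1.2027 \times 10^{-5}$. Using this, the probability that "multiple disks fail in the same hour and the array fails" is $y^2 \times 8 = (1.2027 \times 10^{-5})^2 \times 8 = 1.15718983 \times 10^{-9}$. Per year (8,760 hours), that's $1 - (1 - 1.15718983 \times 10^{-9})^{8760} = 1 - 0.999989863$, so among 100,000 arrays, about 1 or 2 would hit it. Wow, safe! Honestly, do we even need to consider this case? Data loss in the real world surely happens at rates many orders of magnitude higher than this.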
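The same check for the one-hour window can be done in Python. This is a minimal sketch, again assuming independent failures at 10% per disk per year. Because $(1 - x)^{365} = 0.9$ and $(1 - y)^{24} = 1 - x$, the per-hour probability can be taken directly from the 8,760 hours in a year.

```python
hours_per_year = 365 * 24
# (1 - y) ** 24 == 1 - x and (1 - x) ** 365 == 0.9, so (1 - y) ** 8760 == 0.9
y = 1 - 0.9 ** (1 / hours_per_year)

p_hour = 8 * y ** 2                          # both disks of some pair fail in the same hour
p_year = 1 - (1 - p_hour) ** hours_per_year  # at least one such hour within a year

print(f"y = {y:.4e}, per hour {p_hour:.4e}, per year {p_year:.4e}")
```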

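(b) Now let's consider the chance that "the other disk of the pair" fails during the rebuild. We treat the first disk failure as having already happened, so we don't need its probability. This is conditional probability. Everyone did that in junior high, right? (Or was it high school?) Assume the rebuild takes one day. With single-mirror RAID-1+0, the array fails if the partner of the failed disk fails, so we only need the probability that this one disk fails. Under "correct operation" a verify runs every week. So we only need the probability that the disk "actually broke" within at most that week plus the one day needed for the rebuild: 8 days in total.*9

Now for the calculation. The probability that one specific disk (the partner of the HDD being rebuilt) fails in the 8 days around the rebuild is $1 - (1 - x)^8 = 1 - 0.99971138^8 = 0.0023066289 \approx 0.23\%$. Hm, that's quite high, isn't it?*10

RAID-5 with 9 HDDs

I haven't said much about RAID-5 so far, but let's work out the same probabilities for it.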

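(a): multiple disks (any 2) fail at exactly the same time, and the array fails
(b): one disk fails, any other single disk fails while it is being rebuilt, and the array fails

(a) We need the probability that any 2 of the 9 disks fail in the same hour: ${}_9C_2 \times y^2 = \frac{9 \times 8}{2} \times y^2 = 36 \times (1.2027 \times 10^{-5})^2 = 5.20735424 \times 10^{-9}$. Right, that's about 4.5 times the RAID-1+0 probability. Per year, though, it's $1 - (1 - 5.20735424 \times 10^{-9})^{8760} = 1 - 0.999954385$, so maybe 5 out of 100,000 arrays would hit it. Either way, it's still a small probability.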
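Case (a) for the 9-disk RAID-5 is sketched below, under the same independence assumption. Python's `math.comb` gives ${}_9C_2$. The ratio to the RAID-1+0 figure is just the number of failing combinations, 36 pairs versus 8 pairs.

```python
from math import comb

hours_per_year = 365 * 24
y = 1 - 0.9 ** (1 / hours_per_year)   # per-disk, per-hour failure probability

p_hour = comb(9, 2) * y ** 2          # any 2 of the 9 disks fail in the same hour
p_year = 1 - (1 - p_hour) ** hours_per_year

print(f"per hour {p_hour:.3e}, per year {p_year:.3e}")
print(f"ratio to RAID-1+0: {comb(9, 2) / 8}")   # 36 failing pairs vs 8
```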

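(b) For RAID-5, the array fails if any other single disk fails during the rebuild. As before, compute the probability that any 1 of the 8 disks other than the failed one fails in the 8 days around the rebuild: $8 \times (1 - (1 - x)^8) = 0.0184530312 \approx 1.85\%$. In short, it's 8 times the RAID-1+0 figure, which shows that RAID-5 is a dangerous configuration.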
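Both rebuild-window cases, RAID-1+0 (b) and RAID-5 (b), can be reproduced together in Python. This is a sketch assuming the 7-day verify interval plus 1-day rebuild used in the text. Multiplying by 8, as the text does, adds up the individual probabilities and so slightly overestimates. The exact "at least one of 8" complement is shown alongside, and the conclusion doesn't change.

```python
x = 1 - 0.9 ** (1 / 365)            # per-disk, per-day failure probability
window = 7 + 1                      # weekly verify interval + one-day rebuild

# RAID-1+0 (b): only the failed disk's mirror partner matters.
p_one = 1 - (1 - x) ** window

# RAID-5 (b): any of the 8 surviving disks matters.
p_sum = 8 * p_one                   # the text's figure: 8 times RAID-1+0
p_exact = 1 - (1 - p_one) ** 8      # at least one of 8 independent disks fails

print(f"RAID-1+0 {p_one:.3%}, RAID-5 {p_sum:.3%} (exact {p_exact:.3%})")
```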

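RAID-6 with 10 HDDs

(a) For RAID-6, it's the probability that any 3 of the 10 disks fail in the same hour: ${}_{10}C_3 \times y^3 = \frac{10 \times 9 \times 8}{3 \times 2} \times y^3 = 120 \times (1.2027 \times 10^{-5})^3 = 2.08762832 \times 10^{-13}$. That already makes RAID-6 roughly four orders of magnitude safer than the RAID-1+0 array. Per year, it's $1 - (1 - 2.08762832 \times 10^{-13})^{8760} = 1 - 0.999999998$, so, well, this thing basically never breaks.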
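Case (a) for the 10-disk RAID-6 is sketched below under the same assumptions. The per-hour probability is so small that `1 - (1 - p) ** n` starts to lose floating-point precision. This sketch therefore uses `math.log1p` and `math.expm1`, which compute the same quantity more accurately.

```python
from math import comb, expm1, log1p

hours_per_year = 365 * 24
y = 1 - 0.9 ** (1 / hours_per_year)    # per-disk, per-hour failure probability

p_hour = comb(10, 3) * y ** 3          # any 3 of the 10 disks fail in the same hour
# 1 - (1 - p) ** n, computed as -expm1(n * log1p(-p)) to keep precision for tiny p
p_year = -expm1(hours_per_year * log1p(-p_hour))

print(f"per hour {p_hour:.3e}, per year {p_year:.3e}")
```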

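(b) Here it's the probability that any 2 of the 9 disks other than the failed one fail in the 8 days around the rebuild. The probability that one disk fails in 8 days is $1 - (1 - x)^8$. The probability we want is therefore ${}_9C_2 \times (1 - (1 - x)^8)^2 = \frac{9 \times 8}{2} \times (1 - 0.99971138^8)^2 = 0.000191539328 \approx 1.915 \times 10^{-4} = 0.01915\%$. That's roughly 1/10 of the RAID-1+0 probability.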
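RAID-6 (b) and its comparison with RAID-1+0 (b) can be sketched in Python. It follows the text's pair-counting approximation and the same 8-day window. The variable names are illustrative only.

```python
from math import comb

x = 1 - 0.9 ** (1 / 365)              # per-disk, per-day failure probability
p_one = 1 - (1 - x) ** 8              # one disk fails within the 8-day window

p_raid6 = comb(9, 2) * p_one ** 2     # any 2 of the 9 survivors fail in the window
p_raid10 = p_one                      # only the failed disk's partner matters

print(f"RAID-6 {p_raid6:.4%}, RAID-1+0 {p_raid10:.4%}, ratio {p_raid10 / p_raid6:.1f}")
```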

What the estimates show

This simple estimate shows the following:

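- Looking only at the probability that multiple disks really fail at the same time:
  - RAID-1+0 loses data with a probability of about 0.001% per year
  - RAID-6 loses data with a probability of about 0.0000002% per year
  - Both are basically too low to be worth thinking about, but RAID-6 is still orders of magnitude safer
- Looking at the probability of failure during recovery after one disk has failed:
  - RAID-1+0 loses data with a probability of 0.23%
  - RAID-6 loses data with a probability of 0.019%
  - These numbers are an order of magnitude off from NetApp's estimate, but they shift with assumptions such as the number of disks in the array and how the window around the rebuild is chosen
  - If you doubt them, run the numbers for your own array configuration; all the formulas are above

Conclusion

If you consider only raw safety, RAID-6 is better than (two-way mirrored) RAID-1+0.*11 Of course, a real choice also has to weigh performance, the trade-off between redundancy and the trend of per-HDD capacity against the capacity you need, and the features and prices of the RAID controllers you can get. Safety alone isn't the whole story. Still, demanding performance requirements are actually not that common. After thinking it through properly, choosing the cheaper and safer RAID-6 is a realistic option.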

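*1: When three or more HDDs have failed, RAID-1+0 is the level where the data might survive; with RAID-6 the data is definitely lost. But if you have to plan for three or more HDD failures in the first place, your operations are wrong.

*2: NetApp on the Web: NetApp's technical site, which requires registration and login.

*3: This criterion depends on the RAID controller implementation and also on the type of disk, so it can't really be generalized. However, almost no controller should mark a disk as failed after a single I/O failure on one sector. Usually a disk is only treated as failed after some bad blocks have been remapped to spare blocks and the count or frequency of that exceeds a threshold. I'm no expert on this myself, so ask someone on the hardware side.

*4: This figure greatly oversimplifies I/O success and failure. Read/write success and failure at the block/sector level has all sorts of complications of its own. Or so I hear.

*5: This is also possible with Linux Software RAID (md).

*6: That said, I don't think there's much point in running it more often than once a week...

*7: How much rebuild time differs by RAID level depends on CPU and I/O load, and so on (rest omitted).

*8: You can read this as assuming there is always one hour between a disk failing and the rebuild starting.

*9: Up to this point we were dealing with "the probability that a disk fails," but here we also cover the case where a disk "had actually broken but was not yet visible as failed." However, "the per-unit-time probability that a disk fails" and "the per-unit-time probability that a disk that had actually broken is detected as failed" are numerically equal, so the same value can be used here.

*10: Conversely, if you look at this number and think "we fail with a much higher probability than that!", you are very likely not operating your arrays correctly.

*11: Without even calculating, RAID-1+0 with three-way or higher mirroring is always safer than RAID-6. RAID-6 fails as soon as any 3 disks fail, while a three-way mirror only fails when all 3 disks of one set fail at once.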

https://tagomoris.hatenablog.com/entry/20110419/1303181958

I spent the weekend going through and backing up data to my server. That included the long-overdue task of downloading the fanfic I'd read over the past 3 years and wanted to save. I should have done it sooner, because a fair amount had been deleted or moved to a hidden collection. I intend to automate this process in the future, especially for fics that are still in progress and then get deleted before they are ever completed.

Searching through this blog, I found that in April 2018 I recorded the "earliest fanfic I have goes back to 2008 ... w/ 2,045 Files, 93 Folders". Slight correction: I found a really old BTVS crossover dating to 2007.

As of Feb 2023 I have 3,370 files. About a hundred of them aren't individual stories but individually saved chapters of now-deleted fics, from before I started using FanFictionDownloader. RAID6 storage hates lots of small files, so I went through and re-downloaded every story I could as one compiled work instead of separate chapters. That means the actual number of new stories added is probably larger than the file count suggests.

My folder organization has changed a lot since then. It's now separated into 102 fandom folders, 15 author folders (for those who write a lot across different fandoms), and a mostly unorganized Crossovers folder.

My top 5 fandoms by size were DC, Transformers, Marvel, RvB and Warhammer 40k. The top 10 by number of files were Warhammer 40k, DC, RvB, Destiny, Star Wars, Marvel, Transformers, Fallout, Naruto and Star Trek. 40k is definitely an outlier, because it includes a lot of meta analysis posts and PDF versions of Tumblr pages where the CSS doesn't save well. That RvB, Destiny, and Fallout rank up there with juggernaut fandoms really speaks to my small-to-medium fandom bias. I read a lot more shorter works by more authors, the kind that would normally get overshadowed in bigger fandoms.

Reading through the earlier stuff I saved, a lot of the fics haven't aged well: bad writing, super clichéd plots, flat characters. These are fics I wouldn't finish reading now, let alone save. But aside from deleting a few, I'm glad to have this record of what I used to like. That's a lot easier with fanfic than with the bad pulp novels I sold to the secondhand bookstore, because physical books take up a lot more space.

Btrfs improvements in Linux 6.2

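I saw news of Btrfs improvements on Hacker News: "Btrfs With Linux 6.2 Bringing Performance Improvements, Better RAID 5/6 Reliability". The corresponding discussion is at "Btrfs in Linux 6.2 brings performance improvements, better RAID 5/6 reliability (phoronix.com)". Since ext4 itself is very mature, and for special needs I'd rather use OpenZFS, I haven't followed Btrfs for a long time. Seeing these Btrfs improvements in Linux 6.2 is a good chance to revisit the situation. The changes appear to target the RAID modes, covering both reliability and performance, though they seem to focus a bit more on RAID5. Judging from the current "state of affairs", Btrfs…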

#btrfs#cddl#filesystem#fs#gpl#kernel#license#linux#openzfs#performance#raid#raid5#raid6#reliability#zfs
