#sagemaker

Explore tagged Tumblr posts

Text

Wie jedes Jahr eine Zertifizierung und mein Bericht darüber, wie man sich effizient dafür vorbereitet. Dieses Jahr ist das Thema der AWS Certified AI Practitioner. AI ist das Thema der letzten Jahre in meiner Industrie und ich habe vieles gelernt. Viel Spass.

#AI#Artificial Intelligence#AWS#Bedrock#Deep Learning#Generative AI#Künstliche Intelligenz#KI#Machine Learning#Responsible AI#SageMaker

0 notes

Text

Configurar autoapagado de space en JupiterLAB - [AWS]

Para realizar el apagado automático de un Spacename de JupiterLab (Shutdown) definiendo un tiempo de inactividad, se deberá configurar un LifeCycle de Dominio, para ello dentro de la consola de AWS se deberá ir a la siguiente opción: Amazon SageMaker > Admin configurations > Lifecycle configurations > Create configuration Aquí se deberá pegar el siguiente código, que lo que hará es de manera…

View On WordPress

0 notes

Text

How Einstein 1 And Data Cloud Enable AI-driven Results

The artificial intelligence capabilities of Einstein 1 have already generated a great deal of hype and development, which will be a significant emphasis of Dreamforce 2024. A corresponding focus has also been observed on the Data Cloud, which is the mechanism that combines data from many sources to enable this AI magic. However, precisely how do the two cooperate? Does Einstein 1 need to be enabled with Data Cloud?

Data Unification: Data Cloud as the Basis

Salesforce has made significant investments in Data Cloud over the last few years. As a result, it went from being a mid-tier customer data platform to the Leader position in the Gartner Magic Quadrant for 2023. Ultimately, it is clear that inside the Salesforce ecosystem, Cloudera Data Platform (CDP) offers the strongest basis for a complete data solution.

Data Cloud absorbs and unifies data from throughout the company to release data that has become imprisoned. Data can be included and connected to the Salesforce data model with more than 200 native connectors, such as those for AWS, Snowflake, and IBM Db2. As a result, it may be used for analytics, Customer 360 profiles, marketing campaigns, and sophisticated AI features.

To put it simply, you can do more with your data the better it is. This necessitates a careful examination of the data prior to Data Cloud ingestion: Do you possess the information points required for customization? Do the various data sources employ the same formats required for sophisticated analytics? Are you able to train the AI models with enough data?

Recall that your teams will still require proper usage of the data after it has been imported and mapped in Data Cloud. This could entail using a “two in a box” approach to work with a partner in order to quickly absorb and put those lessons into practice. On the other hand, it necessitates extensive training, change management, and a readiness to embrace the new instruments. Teams must have documentation such as a “Data Dictionary for Marketers” in order to completely comprehend the data points they are utilizing in their campaigns.

Improved AI tools are offered by Einstein 1 Studio

Once Data Cloud is operational, you can utilize Einstein 1 Studio, which houses Salesforce’s most advanced and potent AI tools.

Salesforce’s low-code platform for integrating AI throughout its product line is called Einstein 1 Studio, and it can only be accessed through Data Cloud. Salesforce is making significant investments in the Einstein 1 Studio roadmap, and with each new release, the features get better. As of early September 2024, when this article is written, Einstein 1 Studio is made up of three parts:

Prompt builder

Salesforce users may integrate these generative AI capabilities into any item, including contact records, by creating reusable AI prompts with the prompt builder. AI commands such as record summarization, enhanced analytics, and suggested offers and actions are triggered by these prompts.

Copilot builder

Salesforce copilots are artificial intelligence interfaces that are generative and based on natural language processing. They are designed to enhance customer experiences and increase productivity, both internally and externally. In addition to triggering actions and updates using Apex and Flow, Copilot Builder lets you personalize the built-in Copilot functions with prompt builder features like summarizations and AI-driven search.

Model builder

Businesses can utilize the standard large language models from Salesforce by utilizing the Bring Your Own Model (BYOM) option. If they want to employ the ideal AI model for their company, they can also integrate their own, such as SageMaker, OpenAI, or IBM Granite. Furthermore, a new model can be constructed using Model Builder and reliable Data Cloud data.

How can you determine which model produces the greatest outcomes? You can test and validate responses with the BYOM tool, and you should also take a look at this model comparison tool.

As Salesforce continues to make significant investments in this field, expect to see frequent updates and new features.

Salesforce AI features in the absence of Data Cloud

If you haven’t yet utilized Data Cloud or absorbed a significant amount of data, Salesforce nevertheless offers a range of artificial intelligence functionalities. These can be accessed using Tableau, Sales Cloud, Service Cloud, Marketing Cloud, and Commerce Cloud. These built-in AI features include suggestions for products, generative AI content, and case and call summary. The potential for AI outputs increases with the quality and coherence of the data.

This is a really useful feature, and you ought to utilize Salesforce’s AI capabilities in the following ways:

Optimization of campaigns

Marketing campaign subject lines and message copy can be created using Einstein Generative AI, and Einstein Copy Insights can even compare the suggested copy to past campaigns to estimate engagement rates. This feature extends beyond Marketing Cloud; depending on CRM record data, it may also suggest AI-generated copy for Sales Cloud messages.

Recommendations

Based on CRM data, product catalogs, and past behavior, Einstein Recommendations can be utilized across clouds to suggest goods, information, and engagement tactics. The recommendation could take many different forms, such as customized copy depending on the situation or a recommendation for a product that is the next best offer.

Search and self-service

Based on the query’s natural language processing, past interactions, and a variety of tool data points, Einstein Search offers customized search results. Based on Salesforce’s foundation of security and trust, Einstein Article Answers can enable self-service by promoting responses from a chosen knowledge base.

Advanced analytics

Although Tableau CRM (previously Einstein Analytics), a specialized analytics visualization and insights tool, is available from Salesforce, Salesforce itself has AI-based advanced analytics capabilities built in. Numerous reports and dashboards, such as Einstein Lead Scoring, sales summary, and marketing campaigns, emphasize these business-focused advanced analytics.

AI, Data, CRM, and Trust

Salesforce offers strong tools within the Salesforce ecosystem thanks to its focus on Einstein 1 as “CRM + AI + Data + Trust.” The only way to improve these tools is to use Data Cloud as the primary tool for activating, combining, and aggregating data. You should anticipate considerably more improvement from this in the future. The AI industry is changing at an astonishing rate, and Salesforce is still setting the standard with its investments and methods.

Einstein 1 pricing

Pricing for Einstein 1 is offered in two editions:

Einstein 1 for Sales:

Cost: $500 per month for each user

Features: Consists of all the features found in Sales Cloud Unlimited Edition plus Data Cloud, Sales Planning, Maps, Revenue Intelligence, Generative AI, Enablement, Slack, and Slack Sales Elevate.

Einstein 1 for Service:

Cost: $500 per month for each user

Features: All the features of Service Cloud Unlimited Edition plus Data Cloud, Digital Engagement, Feedback Management, Self-Service, Slack, and CRM Analytics are included. Generative AI is also included.

Remember that these are list rates, and that actual pricing may change based on things like the quantity of users, the features that are needed, and any deals that may have been worked out.

Read more on Govindhtech.com

#Einstein1#DataCloud#Salesforce#ClouderaDataPlatform#IBMDb2#AIfeatures#AImodels#AIcapabilities#SageMaker#OpenAI#news#technews#technology#technologynews

0 notes

Text

Enhancing ML Efficiency with Amazon SageMaker

Taking machine learning models from conceptualization to production is complex and time-consuming. Companies need to handle a huge amount of data to train the model, choose the best algorithm for training and manage the computing capacity while training it. Moreover, another major challenge is to deploy the models into a production environment.

Amazon SageMaker simplifies such complexities. It makes easier for businesses to build and deploy ML models. It offers the required underlying infrastructure to scale your ML models at petabyte level and easily test and deploy them to production.

Read our blog to understand A comprehensive guide to Amazon SageMaker

Scaling data processing with SageMaker

The typical workflow of a machine learning project involves the following steps:

Build: Define the problem, gather and clean data

Train: Engineer the model to learn the patterns from the data

Deploy: Deploy the model into a production system

This entire cycle is highly iterative. There are chances that the changes made at any stage of the process can loop back the progress to any state. Amazon SageMaker provides various built-in training algorithms and pre-trained models. You can choose the model according to your requirements for quick model training. This allows you to scale your ML workflow.

SageMaker offers Jupyter NoteBooks running R/Python kernels with a compute instance that you can choose as per the data engineering requirements. After data engineering, data scientists can easily train models using a different compute instance based on the model’s compute demand. The tool offers cost-effective solutions for:

Provisioning hardware instances

Running high-capacity data jobs

Orchestrating the entire flow with simple commands

Enabling serverless elastic deployment works with a few lines of code

Three main components of Amazon SageMaker

SageMaker allows data scientists, engineers and machine learning experts to efficiently build, train and host ML algorithms. This enables you to accelerate your ML models to production. It consists of three components:

Authoring: you can run zero-setup hosted Jupyter notebook IDEs on general instance types or GPU-powered instances for data exploration, cleaning and pre-processing.

Model training: you can use built-in supervised and unsupervised algorithms to train your own models. Amazon SageMaker trained models are not code dependent but data dependent. This enables easy deployment.

Model hosting: to get real-time interferences, you can use AWS’ model hosting service with HTTPs endpoints. These endpoints can scale to support traffic and allow you do A/B testing on multiple models simultaneously.

Benefits of using Amazon SageMaker

Cost-efficient model training

Training deep learning models requires high GPU utilization. Moreover, the ML algorithms that are CPU-intensive should switch to another instance type with a higher CPU:GPU ratio.

With AWS SageMaker heterogeneous clusters, data engineers can easily train the models with multiple instance types. This takes some of the CPU tasks from the GPU instances and transfers them to dedicated compute-optimized CPU instances. This ensures higher GPU utilization as well as faster and more cost-efficient training.

Rich algorithm library

Once you have defined a use case for your machine learning project, you can choose an appropriate built-in algorithm offered by SageMaker that is valid for your respective problem type. It provides a wide range of pre-trained models, pre-built solution templates and examples relevant for various problem types.

ML community

With AWS ML researchers, customers, influencers and experts, SageMaker offers a niche ML community where data scientists and engineers come together to discuss ML uses and issues. It offers a range of videos, blogs and tutorials to help accelerate ML model deployment.

ML community is a place to discuss, learn and chat with experts and influencers regarding machine learning algorithms.

Pay-as-you-use model

One of the best advantages of Amazon SageMaker is the fee structure. Amazon SageMaker is free to try. As a part of AWS Free Tier, you can get started with Amazon SageMaker for free. Moreover, once the trial period is over, you need to pay only for what you use.

You have two types of payment choices:

On-demand pricing – it offers no minimum fees. You can utilize SageMaker services without any upfront commitments.

SageMaker savings plans – AWS offers a flexible, usage-based pricing model. You need to pay a consistent amount of usage in return.

If you use a computing instance for a few seconds, billed at a few dollars per hour, you will still be charged only for the seconds you use the instance. Compared to other cloud-based self-managed solutions, SageMaker provides at least 54% lower total cost of ownership over three years.

Read more: Deploy Machine Learning Models using Amazon SageMaker

Amazon SageMaker – making machine learning development and deployment efficient

Building machine learning models is a continuous cycle. Even after deploying a model, you should monitor inferences and evaluate the model to identify drift. This ensures an increase in the accuracy of the model. Amazon SageMaker, with its built-in library of algorithms, accelerates building and deploying machine learning models at scale.

Amazon SageMaker offers the following benefits:

Scalability

Flexibility

High-performing built-in ML models

Cost-effective solutions

Softweb Solutions offers Amazon SageMaker consulting services to address your machine learning challenges. Talk to our SageMaker consultants to know more about its applications for your business.

Originally published at softwebsolutions on November 28th, 2022.

1 note

·

View note

Text

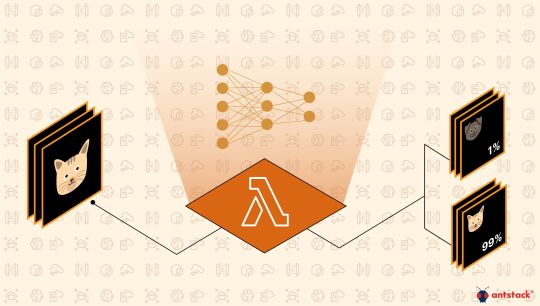

Serverless Image Classifier with AWS SageMaker| AntStack

Building a deep learning project locally on your machine is a great feat. What’s even better is to actually deploy the project as an application in the cloud. Deploying an application gives a lot of knowledge as you will encounter a lot of issues which helps you understand the project on a great level. This blog covers how to build, train and deploy an Image Classifier using Amazon SageMaker, EFS, Lambda and even more.

0 notes

Text

Best AWS Sagemaker Developer

SoftmaxAI is a reputed AWS cloud consultant in India. We have the experience and knowledge to build comprehensive strategies and develop solutions that maximize your return on AWS investment. We have a certified team of AWS DeepLens, Amazon Forecast, PyTorch on AWS, and AWS Sagemaker developer to leverage machine tools and design custom algorithms and improve your ROI.

2 notes

·

View notes

Text

Software tools to use today for Predictive Modelling

0 notes

Link

The Amazon EU Design and Construction (Amazon D&C) team is the engineering team designing and constructing Amazon warehouses. The team navigates a large volume of documents and locates the right information to make sure the warehouse design meets the highest standards. In the post A generative AI-powered solution on Amazon SageMaker to help Amazon EU […]

0 notes

Text

Use no-code machine learning to derive insights from product reviews using Amazon SageMaker Canvas sentiment analysis and text analysis models

Exciting news! 🎉 Want to use machine learning to gain insights from product reviews without any coding or ML expertise? Check out this step-by-step guide on how to leverage Amazon SageMaker Canvas sentiment analysis and text analysis models. 🤩 According to Gartner, 85% of software buyers trust online reviews as much as personal recommendations. With machine learning, you can analyze large volumes of customer reviews and uncover valuable insights to improve your products and services. 💡 Amazon SageMaker Canvas offers ready-to-use AI models for sentiment analysis and text analysis of product reviews, eliminating the need for ML specialists. In this blog post, we provide sample datasets and walk you through the process of leveraging these models. 📈 Elevate your company with AI and stay competitive. Don't miss out on this opportunity to derive insights from product reviews. Check out the blog post here: [Link](https://ift.tt/tRcfO83) Stay updated on AI and ML trends by following Itinai on Twitter @itinaicom. And for a free consultation, join our AI Lab in Telegram @aiscrumbot. 🚀 #AI #MachineLearning #AmazonSageMakerCanvas #ProductReviews #Insights List of Useful Links: AI Scrum Bot - ask about AI scrum and agile Our Telegram @itinai Twitter - @itinaicom

#itinai.com#AI#News#Use no-code machine learning to derive insights from product reviews using Amazon SageMaker Canvas sentiment analysis and text analysis mod#AI News#AI tools#AWS Machine Learning Blog#Gavin Satur#Innovation#LLM#t.me/itinai Use no-code machine learning to derive insights from product reviews using Amazon SageMaker Canvas sentiment analysis and text

0 notes

Text

End To End Machine Learning Project Implementation Using AWS Sagemaker

In this video we will be implementing an end-to-end machine learning project using AWS SageMaker! In this video, we will walk … source

0 notes

Text

Real-time Model Oversight: Amazon SageMaker vs Databricks ML Monitoring Features

Model monitoring is crucial in the lifecycle of machine learning models, especially for models deployed in production environments. Model monitoring is not just a "nice-to-have" but is essential to ensure the models' robustness, accuracy, fairness, and reliability in real-world applications. Without monitoring, model predictions can be unreliable, or even detrimental to the business or end-users. As a model builder, how often have you thought about how models’ behavior will change over time? In my professional life, I have seen many production systems managing model retraining life cycle using heuristic, gut feel or scheduled basis, either leading to the wastage of precious resources or performing retraining too late.

This is a ripe problem space as many models have been deployed in production. Hence there are many point solutions such as Great Expectations, Neptune.ai, Fiddler.ai who all boast really cool features either in terms of automatic metrics computation, differentiated statistical methods or Responsible AI hype that has become a real need of time (Thanks to ChatGPT and LLMs). In this Op-ed, I would like to touch upon two systems that I am familiar with and are widely used.

Amazon SageMaker Model Monitor

Amazon SageMaker is AWS’s flagship fully managed ML service to Build, Train, Deploy & “Monitor” Machine Learning models. The service provides click through experience for set up using SageMaker Studio or API experience using SageMaker SDK. SageMaker assumes you to have clean datasets for training and can capture inference request/response based on user defined time interval. The system works for model monitoring if models are the problem, BUT What if Data that is fed to the model is a problem or a pipeline well upstream in ETL pipeline is a problem. AWS provides multiple Data Lake architectures and patterns to stitch end-2-end data and AI systems together but tracking data lineage is easy if not impossible.

The monitoring solution is flexible thanks to SageMaker processing job which is underlying mechanism to execute underlying metrics. SageMaker processing also lets you build your custom container. SageMaker model monitoring is integrated with Amazon SageMaker Clarify and can provide Bias Drift which is important for Responsible AI. Overall SageMaker monitoring does a decent job of alerting when model drifts.

Databricks Lakehouse Monitoring

Let's look at the second contender. Databricks is a fully managed Data and AI platform available across all major clouds and also boasts millions of downloads of MLFlow OSS. I have recently come across Databricks Lakehouse Monitoring which IMO is a really cool paradigm of Monitoring your Data assets.

Let me explain why you should care if you are an ML Engineer or Data Scientist?

Let's say you have built a cool customer segmentation model and deployed it in production. You have started monitoring the model using one of the cool bespoke tools I mentioned earlier which may pop up an alert blaming a Data field. Now What?

✔ How do you track where that field came from cobweb of data ETL pipeline?

✔ How do you find the root cause of the drift?

✔ How do you track where that field came from cobweb of data ETL pipeline?

Here comes Databricks Lakehouse Monitoring to the rescue. Databricks Lakehouse Monitoring lets you monitor all of the tables in your account. You can also use it to track the performance of machine learning models and model-serving endpoints by monitoring inference tables created by the model’s output.

Let's put this in perspective, Data Layer is a foundation of AI. When teams across data and AI portfolios work together in a single platform, productivity of ML Teams, Access to Data assets and Governance is much superior compared to siloed or point solution.

The Vision below essentially captures an ideal Data and Model Monitoring solution. The journey starts with raw data with Bronze -> Silver -> Golden layers. Moreover, Features are also treated as another table (That’s refreshing and new paradigm, Goodbye feature stores). Now you get down to ML brass tacks by using Golden/Feature Tables for Model training and serve that model up.

Databricks recently launched in preview awesome Inference table feature. Imagine all your requests/responses captured as a table than raw files in your object store. Possibilities are limitless if the Table can scale. Once you have ground truth after the fact, just start logging it in Groundtruth Table. Since all this data is being ETLed using Databricks components, the Unity catalog offers nice end-2-end data lineage similar to Delta Live Tables.

Now you can turn on Monitors, and Databricks start computing metrics. Any Data Drift or Model Drift can be root caused to upstream ETL tables or source code. Imagine that you love other tools in the market for monitoring, then just have them crawl these tables and get your own insights.

Looks like Databricks want to take it up the notch by extending Expectations framework in DLT to extend to any Delta Table. Imagine the ability to set up column level constraints and instructing jobs to fail, rollback or default. So, it means problems can be pre-empted before they happen. Can't wait to see this evolution in the next few months.

To summarize, I came up with the following comparison between SageMaker and Databricks Model Monitoring.CapabilityWinnerSageMakerDatabricksRoot cause AnalysisDatabricksConstraint and violations due to concept and model driftExtends RCA to upstream ETL pipelines as lineage is maintainedBuilt-in statisticsSageMakerUses Deque Spark library and SageMaker Clarify for Bias driftUnderlying metrics library is not exposed but most likely Spark libraryDashboardingDatabricksAvailable using SageMaker Studio so it is a mustRedash dashboards are built and can be customized or use your favorite BI tool.AlertingDatabricksNeeds additional configuration using Event BridgeBuilt in alertingCustomizabilityBothUses Processing jobs so customization of your own metricsMost metrics are built-in, but dashboards can be customizedUse case coverageSageMakerCoverage for Tabular and NLP use casesCoverage for tabular use casesEase of UseDatabricksOne-click enablementOne-click enablement but bonus for monitoring upstream ETL tables

Hope you enjoyed the quick read. Hope you can engage Propensity Labs for your next Machine Learning project no matter how hard the problem is, we have a solution. Keep monitoring.

0 notes

Text

today i have a stuffy nose and my teeth hurt and my throat hurts.

BUT i will continue reading the kuberntes book !! and also im very excited about working on a ml project i will use sagemaker with docker this time (docker is completely new to me)

plus 1 hour of cost optimized mcqs for saac03

and decide when to give that assessment and also apply to jobs. yay?

3 notes

·

View notes

Text

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Python is the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation with tools like Ansible, Terraform, and Boto3.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Python Enables Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python is an interpreted language, which may not be as fast as compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and OpenSSL, enabling encryption, authentication, and cloud monitoring for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark, Pandas, and NumPy, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments

2 notes

·

View notes

Text

Hire Best AWS Sagemaker Developer

At SoftmaxAI, we provide custom AWS cloud consulting services to help your business grow. Our AWS sagemaker developer assists you automate software release processes and focus on building and delivering applications and services more efficiently. Hire the best AWS sagemaker developer today!

0 notes

Text

February Goals

1. Reading Goals (Books & Authors)

LLM Twin → Paul Iusztin

Hands-On Large Language Models → Jay Alammar

LLM from Scratch → Sebastian Raschka

Implementing MLOps → Mark Treveil

MLOps Engineering at Scale → Carl Osipov

CUDA Handbook → Nicholas Wilt

Adventures of a Bystander → Peter Drucker

Who Moved My Cheese? → Spencer Johnson

AWS SageMaker documentation

2. GitHub Implementations

Quantization

Reinforcement Learning with Human Feedback (RLHF)

Retrieval-Augmented Generation (RAG)

Pruning

Profile intro

Update most-used repos

3. Projects

Add all three projects (TweetGen, TweetClass, LLMTwin) to the resume.

One easy CUDA project.

One more project (RAG/Flash Attn/RL).

4. YouTube Videos

Complete AWS dump: 2 playlists.

Complete two SageMaker tutorials.

Watch something from YouTube “Watch Later” (2-hour videos).

Two CUDA tutorials.

One Azure tutorial playlist.

AWS tutorial playlist 2.

5. Quizzes/Games

Complete AWS quiz

2 notes

·

View notes

Text

How can you optimize the performance of machine learning models in the cloud?

Optimizing machine learning models in the cloud involves several strategies to enhance performance and efficiency. Here’s a detailed approach:

Choose the Right Cloud Services:

Managed ML Services:

Use managed services like AWS SageMaker, Google AI Platform, or Azure Machine Learning, which offer built-in tools for training, tuning, and deploying models.

Auto-scaling:

Enable auto-scaling features to adjust resources based on demand, which helps manage costs and performance.

Optimize Data Handling:

Data Storage:

Use scalable cloud storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage for storing large datasets efficiently.

Data Pipeline:

Implement efficient data pipelines with tools like Apache Kafka or AWS Glue to manage and process large volumes of data.

Select Appropriate Computational Resources:

Instance Types:

Choose the right instance types based on your model’s requirements. For example, use GPU or TPU instances for deep learning tasks to accelerate training.

Spot Instances:

Utilize spot instances or preemptible VMs to reduce costs for non-time-sensitive tasks.

Optimize Model Training:

Hyperparameter Tuning:

Use cloud-based hyperparameter tuning services to automate the search for optimal model parameters. Services like Google Cloud AI Platform’s HyperTune or AWS SageMaker’s Automatic Model Tuning can help.

Distributed Training:

Distribute model training across multiple instances or nodes to speed up the process. Frameworks like TensorFlow and PyTorch support distributed training and can take advantage of cloud resources.

Monitoring and Logging:

Monitoring Tools:

Implement monitoring tools to track performance metrics and resource usage. AWS CloudWatch, Google Cloud Monitoring, and Azure Monitor offer real-time insights.

Logging:

Maintain detailed logs for debugging and performance analysis, using tools like AWS CloudTrail or Google Cloud Logging.

Model Deployment:

Serverless Deployment:

Use serverless options to simplify scaling and reduce infrastructure management. Services like AWS Lambda or Google Cloud Functions can handle inference tasks without managing servers.

Model Optimization:

Optimize models by compressing them or using model distillation techniques to reduce inference time and improve latency.

Cost Management:

Cost Analysis:

Regularly analyze and optimize cloud costs to avoid overspending. Tools like AWS Cost Explorer, Google Cloud’s Cost Management, and Azure Cost Management can help monitor and manage expenses.

By carefully selecting cloud services, optimizing data handling and training processes, and monitoring performance, you can efficiently manage and improve machine learning models in the cloud.

2 notes

·

View notes