#amazon sagemaker


We are excited to announce a new version of the Amazon SageMaker Operators for Kubernetes using the AWS Controllers for Kubernetes (ACK). ACK is a framework for building Kubernetes custom controllers, where each controller communicates with an AWS service API. These controllers allow Kubernetes users to provision AWS resources like buckets, databases, or message queues […]
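Here is a minimal sketch of the reconcile pattern that Kubernetes controllers follow. Each pass compares the desired state declared in custom resources with what the service reports, then creates or updates resources to close the gap. `FakeAwsApi` and the resource names are hypothetical stand-ins. This is not ACK's code or the SageMaker API. Real controllers also handle deletion, status conditions and retries, which are omitted here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class FakeAwsApi:
    """Hypothetical in-memory stand-in for an AWS service API."""

    resources: Dict[str, dict] = field(default_factory=dict)

    def describe(self, name: str) -> Optional[dict]:
        return self.resources.get(name)

    def create(self, name: str, spec: dict) -> None:
        self.resources[name] = dict(spec)  # store a copy, like a remote service would

    def update(self, name: str, spec: dict) -> None:
        self.resources[name] = dict(spec)


def reconcile(desired: Dict[str, dict], api: FakeAwsApi) -> List[Tuple[str, str]]:
    """One pass of a controller loop: make the service match the declared specs."""
    actions = []
    for name, spec in desired.items():
        current = api.describe(name)
        if current is None:
            api.create(name, spec)  # declared but missing: create it
            actions.append(("create", name))
        elif current != spec:
            api.update(name, spec)  # drifted from its spec: update it
            actions.append(("update", name))
    return actions


api = FakeAwsApi()
desired = {"training-data-bucket": {"versioning": True}}
print(reconcile(desired, api))  # [('create', 'training-data-bucket')]
print(reconcile(desired, api))  # [] because the service already matches
```

Because each pass is idempotent, a controller can run this loop repeatedly and only act when the declared and actual states diverge.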


Enhancing ML Efficiency with Amazon SageMaker

Taking machine learning models from concept to production is complex and time-consuming. Companies need to handle huge amounts of data to train a model, choose the best algorithm for training and manage computing capacity during training. Deploying the models into a production environment is another major challenge.

Amazon SageMaker simplifies these complexities and makes it easier for businesses to build and deploy ML models. It offers the underlying infrastructure needed to scale your ML models to petabyte-scale data and to test and deploy them to production easily.

Read our blog: A comprehensive guide to Amazon SageMaker

Scaling data processing with SageMaker

The typical workflow of a machine learning project involves the following steps:

Build: Define the problem, gather and clean data

Train: Engineer the model to learn the patterns from the data

Deploy: Deploy the model into a production system

This entire cycle is highly iterative: a change made at any stage can send the project back to an earlier stage. Amazon SageMaker provides various built-in training algorithms and pre-trained models, so you can choose a model that fits your requirements, train it quickly and scale your ML workflow.

SageMaker offers Jupyter notebooks running R or Python kernels on a compute instance that you choose according to your data engineering requirements. After data engineering, data scientists can train models on a different compute instance that matches the model's compute demands. The tool offers cost-effective solutions for:

Provisioning hardware instances

Running high-capacity data jobs

Orchestrating the entire flow with simple commands

Enabling serverless, elastic deployment with a few lines of code

Three main components of Amazon SageMaker

SageMaker allows data scientists, engineers and machine learning experts to efficiently build, train and host ML algorithms, which helps you bring your ML models to production faster. It consists of three components:

Authoring: you can run zero-setup hosted Jupyter notebook IDEs on general instance types or GPU-powered instances for data exploration, cleaning and pre-processing.

Model training: you can use built-in supervised and unsupervised algorithms to train your own models. Models trained in Amazon SageMaker are not code-dependent but data-dependent, which makes them easy to deploy.

Model hosting: to get real-time inferences, you can use AWS's model hosting service with HTTPS endpoints. These endpoints can scale to support traffic and allow you to A/B test multiple models simultaneously.
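A small simulation illustrates the A/B testing idea: each model variant receives a share of requests proportional to its weight. This is a sketch of the concept only. The variant names and weights are hypothetical. On SageMaker the split is configured on the endpoint through production variant weights rather than coded by hand.

```python
import random
from collections import Counter
from typing import Dict

# Hypothetical variants: 90% of traffic to the current model, 10% to a challenger.
VARIANT_WEIGHTS: Dict[str, float] = {"model-a": 9.0, "model-b": 1.0}


def traffic_shares(weights: Dict[str, float]) -> Dict[str, float]:
    """Expected fraction of requests per variant: weight / sum of all weights."""
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def route(weights: Dict[str, float], rng: random.Random) -> str:
    """Pick the variant that serves one request, with probability proportional to weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


rng = random.Random(42)  # fixed seed so the simulation is repeatable
served = Counter(route(VARIANT_WEIGHTS, rng) for _ in range(10_000))
print(traffic_shares(VARIANT_WEIGHTS))  # {'model-a': 0.9, 'model-b': 0.1}
print({name: count / 10_000 for name, count in served.items()})
```

The observed shares land close to, but not exactly on, the configured ones. Comparing metrics per variant therefore needs enough traffic on the smaller variant to be meaningful.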

Benefits of using Amazon SageMaker

Cost-efficient model training

Training deep learning models cost-efficiently requires high GPU utilization, while the CPU-intensive parts of an ML workload are better served by an instance type with a higher CPU:GPU ratio.

With Amazon SageMaker heterogeneous clusters, data engineers can train models on multiple instance types at once. CPU tasks are offloaded from the GPU instances to dedicated compute-optimized CPU instances, which keeps GPU utilization high and makes training faster and more cost-efficient.

Rich algorithm library

Once you have defined a use case for your machine learning project, you can choose an appropriate built-in SageMaker algorithm for your problem type. SageMaker also provides a wide range of pre-trained models, pre-built solution templates and examples relevant to various problem types.

ML community

SageMaker has an ML community of AWS ML researchers, customers, influencers and experts. Data scientists and engineers come together there to discuss, learn and chat about machine learning algorithms, use cases and issues. The community also offers a range of videos, blogs and tutorials to help accelerate ML model deployment.

Pay-as-you-use model

One of the best advantages of Amazon SageMaker is its fee structure. SageMaker is free to try: as part of the AWS Free Tier, you can get started at no cost. Once the trial period is over, you pay only for what you use.

You have two types of payment choices:

On-demand pricing – it offers no minimum fees. You can utilize SageMaker services without any upfront commitments.

SageMaker Savings Plans – a flexible, usage-based pricing model that offers lower prices in exchange for a commitment to a consistent amount of usage (measured in dollars per hour) over a one- or three-year term.

If you use a compute instance billed at a few dollars per hour for only a few seconds, you are charged only for those seconds. According to AWS, SageMaker provides at least 54% lower total cost of ownership over three years compared to other cloud-based self-managed solutions.
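A quick calculation shows why per-second billing matters for short jobs. The hourly rate below is a made-up example, not a current SageMaker price. Actual rates vary by instance type and Region, so check the SageMaker pricing page.

```python
import math
from decimal import ROUND_HALF_UP, Decimal

HOURLY_RATE_USD = Decimal("3.60")  # hypothetical on-demand rate for one instance


def per_second_cost(seconds_used: int, hourly_rate: Decimal = HOURLY_RATE_USD) -> Decimal:
    """Cost when billing is prorated to the second."""
    cost = hourly_rate * seconds_used / 3600
    return cost.quantize(Decimal("0.0001"), rounding=ROUND_HALF_UP)


def whole_hour_cost(seconds_used: int, hourly_rate: Decimal = HOURLY_RATE_USD) -> Decimal:
    """Cost if every started hour were billed in full, for comparison."""
    return hourly_rate * math.ceil(seconds_used / 3600)


print(per_second_cost(90))  # 0.0900 for 90 seconds of use
print(whole_hour_cost(90))  # 3.60 if the same 90 seconds were billed as a full hour
```

`Decimal` is used instead of `float` so that currency amounts are not affected by binary rounding.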

Read more: Deploy Machine Learning Models using Amazon SageMaker

Amazon SageMaker – making machine learning development and deployment efficient

Building machine learning models is a continuous cycle. Even after deploying a model, you should monitor its inferences and evaluate it to identify drift, which helps keep the model accurate over time. Amazon SageMaker, with its built-in library of algorithms, accelerates building and deploying machine learning models at scale.

Amazon SageMaker offers the following benefits:

Scalability

Flexibility

High-performing built-in ML models

Cost-effective solutions

Softweb Solutions offers Amazon SageMaker consulting services to address your machine learning challenges. Talk to our SageMaker consultants to learn more about its applications for your business.

Originally published at softwebsolutions on November 28th, 2022.


Real-time Model Oversight: Amazon SageMaker vs Databricks ML Monitoring Features

Model monitoring is crucial in the lifecycle of machine learning models, especially for models deployed in production environments. It is not just a "nice-to-have"; it is essential to ensure the models' robustness, accuracy, fairness and reliability in real-world applications. Without monitoring, model predictions can be unreliable, or even detrimental to the business or end users. As a model builder, how often have you thought about how your models' behavior will change over time? In my professional life, I have seen many production systems manage the model retraining lifecycle using heuristics, gut feel or a fixed schedule. That approach either wastes precious resources or retrains too late.

This is a ripe problem space because so many models have been deployed in production. There are many point solutions, such as Great Expectations, Neptune.ai and Fiddler.ai. They all boast really cool features, whether automatic metrics computation, differentiated statistical methods or Responsible AI capabilities. Responsible AI has gone from hype to a real need (thanks to ChatGPT and LLMs). In this op-ed, I would like to touch upon two widely used systems that I am familiar with.

Amazon SageMaker Model Monitor

Amazon SageMaker is AWS's flagship fully managed ML service to build, train, deploy and "monitor" machine learning models. The service provides a click-through setup experience in SageMaker Studio, or an API experience through the SageMaker SDK. SageMaker assumes that you have clean datasets for training. It can capture inference requests and responses and evaluate them at a user-defined interval. The system works for model monitoring if the model is the problem, BUT what if the data fed to the model is the problem? What if a pipeline well upstream in the ETL process is the culprit? AWS provides multiple data lake architectures and patterns to stitch end-to-end data and AI systems together, but tracking data lineage across them is hard, if not impossible.

The monitoring solution is flexible thanks to SageMaker Processing jobs, the underlying mechanism that computes the metrics. SageMaker Processing also lets you build your own custom container. SageMaker Model Monitor is integrated with Amazon SageMaker Clarify and can report bias drift, which is important for Responsible AI. Overall, SageMaker Model Monitor does a decent job of alerting when a model drifts.
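The baseline-then-compare idea behind this kind of monitoring can be sketched in plain Python. First derive simple per-feature constraints from training data, then check a window of captured inference inputs against them. This is a simplified illustration under our own assumptions: the feature names, the completeness tolerance and the min/max rule are hypothetical. It is not Model Monitor's implementation, which runs as a Processing job and produces its own statistics, constraints and violation reports.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

Row = Dict[str, Optional[float]]


@dataclass
class FeatureBaseline:
    min_value: float
    max_value: float
    completeness: float  # fraction of non-missing values in the baseline data


def suggest_baseline(rows: List[Row]) -> Dict[str, FeatureBaseline]:
    """Derive per-feature constraints from training data (assumes each feature has values)."""
    baseline = {}
    for feature in rows[0]:
        values = [r[feature] for r in rows if r.get(feature) is not None]
        baseline[feature] = FeatureBaseline(min(values), max(values), len(values) / len(rows))
    return baseline


def find_violations(baseline: Dict[str, FeatureBaseline], rows: List[Row],
                    completeness_tolerance: float = 0.05) -> List[str]:
    """Compare captured inference inputs with the baseline and describe each violation."""
    violations = []
    for feature, b in baseline.items():
        values = [r[feature] for r in rows if r.get(feature) is not None]
        completeness = len(values) / len(rows)
        if completeness < b.completeness - completeness_tolerance:
            violations.append(
                f"{feature}: completeness {completeness:.2f} below baseline {b.completeness:.2f}")
        out_of_range = [v for v in values if not b.min_value <= v <= b.max_value]
        if out_of_range:
            violations.append(
                f"{feature}: {len(out_of_range)} value(s) outside [{b.min_value}, {b.max_value}]")
    return violations


training = [{"age": 25.0, "income": 40.0}, {"age": 40.0, "income": 60.0},
            {"age": 33.0, "income": 55.0}]
captured = [{"age": 30.0, "income": None}, {"age": 90.0, "income": 50.0}]
for message in find_violations(suggest_baseline(training), captured):
    print(message)
# age: 1 value(s) outside [25.0, 40.0]
# income: completeness 0.50 below baseline 1.00
```

A real monitor would run a check like this on a schedule and raise an alert whenever the violation list is non-empty.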

Databricks Lakehouse Monitoring

Let's look at the second contender. Databricks is a fully managed data and AI platform available across all major clouds, and it also boasts millions of downloads of open-source MLflow. I recently came across Databricks Lakehouse Monitoring, which IMO is a really cool paradigm for monitoring your data assets.

Let me explain why you should care if you are an ML engineer or data scientist.

Let's say you have built a cool customer segmentation model and deployed it in production. You have started monitoring it with one of the bespoke tools I mentioned earlier, and it pops up an alert blaming a data field. Now what?

✔ How do you trace where that field came from in a cobweb of ETL pipelines?

✔ How do you find the root cause of the drift?


Here comes Databricks Lakehouse Monitoring to the rescue. Databricks Lakehouse Monitoring lets you monitor all of the tables in your account. You can also use it to track the performance of machine learning models and model-serving endpoints by monitoring the inference tables that capture the models' outputs.

Let's put this in perspective: the data layer is the foundation of AI. When teams across data and AI portfolios work together on a single platform, ML team productivity, access to data assets and governance are far better than with siloed or point solutions.

The vision below essentially captures an ideal data and model monitoring solution. The journey starts with raw data flowing through Bronze -> Silver -> Gold layers. Features are also treated as just another table (a refreshing new paradigm; goodbye, feature stores). Then you get down to ML brass tacks by using Gold/feature tables for model training and serving that model up.

Databricks recently launched its awesome inference table feature in preview. Imagine all your requests and responses captured as a table rather than as raw files in your object store. The possibilities are limitless if the table can scale. Once you have ground truth after the fact, just start logging it in a ground truth table. Since all this data is ETLed using Databricks components, Unity Catalog offers nice end-to-end data lineage, similar to Delta Live Tables.

Now you can turn on monitors, and Databricks starts computing metrics. Any data drift or model drift can be root-caused to upstream ETL tables or source code. If you love other monitoring tools on the market, just have them crawl these tables and get your own insights.
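Logging ground truth next to captured predictions is what turns drift alerts into measurable model quality. Here is a minimal sketch of the join-then-aggregate idea. It assumes hypothetical column names (`request_id`, `day`, `prediction`, `label`) and uses plain Python lists in place of Delta tables. The two tables are joined on the request ID and accuracy is computed per day. Requests whose labels have not arrived yet are skipped. This is not the Lakehouse Monitoring API.

```python
from collections import defaultdict
from typing import Dict, List


def accuracy_by_day(inference_rows: List[dict], ground_truth_rows: List[dict]) -> Dict[str, float]:
    """Join predictions to late-arriving labels on request_id and compute daily accuracy."""
    labels = {g["request_id"]: g["label"] for g in ground_truth_rows}
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for row in inference_rows:
        label = labels.get(row["request_id"])
        if label is None:  # ground truth not logged yet; leave the request out for now
            continue
        totals[row["day"]] += 1
        hits[row["day"]] += int(row["prediction"] == label)
    return {day: hits[day] / totals[day] for day in sorted(totals)}


# Toy rows standing in for an inference table and a ground truth table.
inference_table = [
    {"request_id": "r1", "day": "day-1", "prediction": "churn"},
    {"request_id": "r2", "day": "day-1", "prediction": "stay"},
    {"request_id": "r3", "day": "day-2", "prediction": "churn"},
    {"request_id": "r4", "day": "day-2", "prediction": "stay"},
]
ground_truth_table = [
    {"request_id": "r1", "label": "churn"},
    {"request_id": "r2", "label": "churn"},
    {"request_id": "r3", "label": "churn"},
]
print(accuracy_by_day(inference_table, ground_truth_table))  # {'day-1': 0.5, 'day-2': 1.0}
```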

It looks like Databricks wants to take it up a notch by extending the Expectations framework in Delta Live Tables (DLT) to any Delta table. Imagine being able to set up column-level constraints and instruct jobs to fail, roll back or fall back to a default. That means problems can be pre-empted before they happen. I can't wait to see this evolve over the next few months.
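To make column-level constraints with a configurable action concrete, here is a small plain-Python sketch. The `ColumnExpectation` class and the action names `fail`, `drop` and `default` are hypothetical and only mirror the behaviors described above. This is not the DLT expectations API. Every row is validated before anything is returned, so a `fail` leaves the target untouched, which is the simplest form of a rollback.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


class ExpectationFailed(Exception):
    """Raised when a row violates an expectation whose action is 'fail'."""


@dataclass
class ColumnExpectation:
    column: str
    check: Callable[[Any], bool]  # returns True when the value is acceptable
    on_violation: str  # hypothetical actions: "fail", "drop" or "default"
    default: Any = None  # replacement value used by the "default" action

    def __post_init__(self) -> None:
        if self.on_violation not in {"fail", "drop", "default"}:
            raise ValueError(f"unknown action: {self.on_violation}")


def apply_expectations(rows: List[Row], expectations: List[ColumnExpectation]) -> List[Row]:
    """Validate every row first; the caller writes the result only if no 'fail' fired."""
    clean = []
    for original in rows:
        row = dict(original)  # never mutate the caller's data
        keep = True
        for exp in expectations:
            if exp.check(row.get(exp.column)):
                continue
            if exp.on_violation == "fail":
                raise ExpectationFailed(f"column {exp.column!r} failed for row {original}")
            if exp.on_violation == "drop":
                keep = False
                break
            row[exp.column] = exp.default  # "default": substitute a fallback value
        if keep:
            clean.append(row)
    return clean


expectations = [
    ColumnExpectation("age", lambda v: v is not None and v >= 0, "drop"),
    ColumnExpectation("country", lambda v: v in {"US", "DE"}, "default", default="UNKNOWN"),
]
rows = [{"age": 30, "country": "US"}, {"age": -1, "country": "US"}, {"age": 20, "country": "XX"}]
print(apply_expectations(rows, expectations))
# [{'age': 30, 'country': 'US'}, {'age': 20, 'country': 'UNKNOWN'}]
```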

To summarize, I came up with the following comparison between SageMaker and Databricks model monitoring.

| Capability | Winner | SageMaker | Databricks |
|---|---|---|---|
| Root cause analysis | Databricks | Constraints and violations due to concept and model drift | Extends RCA to upstream ETL pipelines because lineage is maintained |
| Built-in statistics | SageMaker | Uses the Deequ Spark library, plus SageMaker Clarify for bias drift | Underlying metrics library is not exposed, but is most likely a Spark library |
| Dashboarding | Databricks | Available through SageMaker Studio, so Studio is a must | Redash dashboards are generated and can be customized, or use your favorite BI tool |
| Alerting | Databricks | Needs additional configuration using EventBridge | Built-in alerting |
| Customizability | Both | Uses Processing jobs, so you can customize your own metrics | Most metrics are built in, but dashboards can be customized |
| Use case coverage | SageMaker | Coverage for tabular and NLP use cases | Coverage for tabular use cases |
| Ease of use | Databricks | One-click enablement | One-click enablement, with a bonus for monitoring upstream ETL tables |

I hope you enjoyed the quick read. Consider engaging Propensity Labs for your next machine learning project: no matter how hard the problem is, we have a solution. Keep monitoring.


Use no-code machine learning to derive insights from product reviews using Amazon SageMaker Canvas sentiment analysis and text analysis models

Exciting news! 🎉 Want to use machine learning to gain insights from product reviews without any coding or ML expertise? Check out this step-by-step guide on how to leverage Amazon SageMaker Canvas sentiment analysis and text analysis models. 🤩 According to Gartner, 85% of software buyers trust online reviews as much as personal recommendations. With machine learning, you can analyze large volumes of customer reviews and uncover valuable insights to improve your products and services. 💡 Amazon SageMaker Canvas offers ready-to-use AI models for sentiment analysis and text analysis of product reviews, eliminating the need for ML specialists. In this blog post, we provide sample datasets and walk you through the process of leveraging these models. 📈 Elevate your company with AI and stay competitive. Don't miss out on this opportunity to derive insights from product reviews. Check out the blog post here: [Link](https://ift.tt/tRcfO83) Stay updated on AI and ML trends by following Itinai on Twitter @itinaicom. And for a free consultation, join our AI Lab in Telegram @aiscrumbot. 🚀 #AI #MachineLearning #AmazonSageMakerCanvas #ProductReviews #Insights List of Useful Links: AI Scrum Bot - ask about AI scrum and agile Our Telegram @itinai Twitter - @itinaicom

#itinai.com #AI #News #AI News #AI tools #AWS Machine Learning Blog #Gavin Satur #Innovation #LLM #t.me/itinai


How can you optimize the performance of machine learning models in the cloud?

Optimizing machine learning models in the cloud involves several strategies to enhance performance and efficiency. Here’s a detailed approach:

Choose the Right Cloud Services:

Managed ML Services:

Use managed services like Amazon SageMaker, Google AI Platform, or Azure Machine Learning, which offer built-in tools for training, tuning, and deploying models.

Auto-scaling:

Enable auto-scaling features to adjust resources based on demand, which helps manage costs and performance.
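A short calculation shows the core idea of target-tracking auto-scaling. Scale the instance count by the ratio of the observed metric to its target, then clamp the result to configured bounds. This is a simplified sketch under our own assumptions: the metric, target and bounds are hypothetical. Managed auto-scaling services add cooldowns, smoothing and other safeguards not shown here.

```python
import math


def desired_capacity(current_instances: int, metric_value: float, target_value: float,
                     min_instances: int, max_instances: int) -> int:
    """Scale so the per-instance metric moves back toward its target, within bounds."""
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    # Rounding up errs on the side of having enough capacity.
    raw = math.ceil(current_instances * metric_value / target_value)
    return max(min_instances, min(max_instances, raw))


# Hypothetical endpoint: 2 instances, each seeing 150 invocations/min against a target of 100.
print(desired_capacity(2, 150.0, 100.0, min_instances=1, max_instances=10))  # 3
```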

Optimize Data Handling:

Data Storage:

Use scalable cloud storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage for storing large datasets efficiently.

Data Pipeline:

Implement efficient data pipelines with tools like Apache Kafka or AWS Glue to manage and process large volumes of data.

Select Appropriate Computational Resources:

Instance Types:

Choose the right instance types based on your model’s requirements. For example, use GPU or TPU instances for deep learning tasks to accelerate training.

Spot Instances:

Utilize spot instances or preemptible VMs to reduce costs for non-time-sensitive tasks.
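Spot capacity can be reclaimed mid-job, so jobs that run on it should checkpoint regularly and resume from the last checkpoint. Here is a minimal sketch. It assumes a hypothetical training loop whose whole state fits in a small JSON file, and a checkpoint path that survives the interruption. SageMaker managed spot training, for example, can sync a local checkpoint directory to Amazon S3. The interruption is simulated with an exception.

```python
import json
import os
import tempfile
from typing import Optional, Tuple


def train(total_steps: int, ckpt_path: str, interrupt_at: Optional[int] = None,
          ckpt_every: int = 10) -> Tuple[dict, int]:
    """Run a toy training loop, resuming from ckpt_path if it exists.

    Returns the final state and the step the run resumed from.
    """
    state = {"step": 0, "loss": 1.0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)
    resumed_from = state["step"]
    while state["step"] < total_steps:
        if interrupt_at is not None and state["step"] == interrupt_at:
            raise InterruptedError("spot capacity reclaimed")  # simulated interruption
        state["step"] += 1
        state["loss"] *= 0.99  # stand-in for a real optimizer update
        if state["step"] % ckpt_every == 0:
            tmp_path = ckpt_path + ".tmp"
            with open(tmp_path, "w") as f:
                json.dump(state, f)
            os.replace(tmp_path, ckpt_path)  # swap in the new checkpoint in one step
    return state, resumed_from


ckpt = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
try:
    train(100, ckpt, interrupt_at=35)
except InterruptedError as err:
    print("interrupted:", err)  # interrupted: spot capacity reclaimed
final_state, resumed_from = train(100, ckpt)
print(resumed_from, final_state["step"])  # 30 100
```

The work lost to an interruption is bounded by the checkpoint interval. A shorter `ckpt_every` loses less progress but spends more time writing checkpoints.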

Optimize Model Training:

Hyperparameter Tuning:

Use cloud-based hyperparameter tuning services to automate the search for optimal model parameters. Services like Google Cloud AI Platform’s HyperTune or Amazon SageMaker’s automatic model tuning can help.
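Managed tuning services automate a search loop like the one below. This sketch uses plain random search over a hypothetical objective function, which stands in for training a model and measuring validation loss. The parameter names and ranges are assumptions. Managed services typically also offer smarter strategies, such as Bayesian optimization, and run trials in parallel.

```python
import math
import random
from typing import Dict, Tuple


def validation_loss(learning_rate: float, max_depth: int) -> float:
    """Hypothetical objective standing in for 'train a model, return validation loss'.

    Its minimum is at learning_rate = 0.01 and max_depth = 6.
    """
    return (math.log10(learning_rate) + 2) ** 2 + (max_depth - 6) ** 2 / 10


def random_search(n_trials: int, seed: int = 0) -> Tuple[float, Dict[str, float]]:
    """Sample hyperparameters at random and keep the best trial."""
    rng = random.Random(seed)
    best_loss, best_params = math.inf, {}
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-4, -1),  # log-uniform between 1e-4 and 1e-1
            "max_depth": rng.randint(2, 10),  # inclusive integer range
        }
        loss = validation_loss(**params)
        if loss < best_loss:
            best_loss, best_params = loss, params
    return best_loss, best_params


loss, params = random_search(n_trials=200)
print(f"best loss {loss:.4f} with {params}")
```

Sampling the learning rate on a log scale is a common choice because its useful values span several orders of magnitude.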

Distributed Training:

Distribute model training across multiple instances or nodes to speed up the process. Frameworks like TensorFlow and PyTorch support distributed training and can take advantage of cloud resources.
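Data-parallel training is the most common form of distributed training. Each worker computes gradients on its own shard of the data, and the gradients are averaged before every update. The sketch below simulates this for a one-parameter linear model in plain Python. The averaging line stands in for the all-reduce that frameworks such as PyTorch DistributedDataParallel perform across machines. The model, data and learning rate are hypothetical.

```python
from typing import List, Tuple

Batch = List[Tuple[float, float]]


def gradient(w: float, batch: Batch) -> float:
    """Gradient of mean squared error for the model y ≈ w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def shard(data: Batch, n_workers: int) -> List[Batch]:
    """Split the dataset round-robin so each worker gets its own slice."""
    return [data[i::n_workers] for i in range(n_workers)]


def data_parallel_step(w: float, data: Batch, n_workers: int, lr: float) -> float:
    grads = [gradient(w, part) for part in shard(data, n_workers)]  # one gradient per worker
    avg_grad = sum(grads) / len(grads)  # stand-in for an all-reduce across workers
    return w - lr * avg_grad


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # toy data generated with w = 3
w = 0.0
for _ in range(100):
    w = data_parallel_step(w, data, n_workers=4, lr=0.01)
print(round(w, 6))  # 3.0
```

With equal-sized shards (here 8 points over 4 workers), the average of the per-worker gradients equals the full-batch gradient. Adding workers then speeds up each step without changing the math of the update.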

Monitoring and Logging:

Monitoring Tools:

Implement monitoring tools to track performance metrics and resource usage. AWS CloudWatch, Google Cloud Monitoring, and Azure Monitor offer real-time insights.

Logging:

Maintain detailed logs for debugging and performance analysis, using tools like Amazon CloudWatch Logs or Google Cloud Logging; AWS CloudTrail complements these by recording API activity for auditing.

Model Deployment:

Serverless Deployment:

Use serverless options to simplify scaling and reduce infrastructure management. Services like AWS Lambda or Google Cloud Functions can handle inference tasks without managing servers.

Model Optimization:

Optimize models by compressing them (for example, through quantization or pruning) or by using model distillation techniques to reduce model size and inference latency.
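Quantization is one common way to compress a model. Weights are stored as 8-bit integers plus a scale factor instead of 32-bit floats, roughly a 4x size reduction for those weights. Below is a minimal sketch of symmetric per-tensor int8 quantization on a hypothetical list of weights. Real toolchains quantize whole tensors, often per channel, and usually calibrate activations as well. Distillation, the other technique mentioned, trains a smaller student model to mimic a larger one and is not shown here.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric int8 quantization: w ≈ q * scale with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero weights
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 0.031, 1.0]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

The rounding error per weight is at most half the scale. This is why quantization usually costs little accuracy when weights are not dominated by a few large outliers.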

Cost Management:

Cost Analysis:

Regularly analyze and optimize cloud costs to avoid overspending. Tools like AWS Cost Explorer, Google Cloud’s Cost Management, and Azure Cost Management can help monitor and manage expenses.

By carefully selecting cloud services, optimizing data handling and training processes, and monitoring performance, you can efficiently manage and improve machine learning models in the cloud.


The Future of AWS: Innovations, Challenges, and Opportunities

As the world becomes increasingly digital and interconnected, the role of cloud computing has never been more vital. At the forefront of this technological revolution stands Amazon Web Services (AWS), a leader and innovator in the field of cloud computing. AWS has not only transformed the way businesses operate but has also ignited a global shift toward cloud-centric solutions. Now, as we gaze toward the horizon, it's time to dive into the future of AWS: a future marked by innovations, challenges, and boundless opportunities.

In this exploration, we will navigate through the evolving landscape of AWS, where every day brings new advancements, complex challenges, and a multitude of avenues for growth and success. This journey is a testament to the enduring spirit of innovation that propels AWS forward, the challenges it must overcome to maintain its leadership, and the vast array of opportunities it presents to businesses, developers, and tech enthusiasts alike.

Join us as we embark on a voyage into the future of AWS, where the cloud continues to shape our digital world, and where AWS stands as a beacon guiding us through this transformative era.

Constant Innovation: The AWS Edge

One of AWS's defining characteristics is its unwavering commitment to innovation. AWS has a history of introducing groundbreaking services and features that cater to the evolving needs of businesses. In the future, we can expect this commitment to innovation to reach new heights. AWS will likely continue to push the boundaries of cloud technology, delivering cutting-edge solutions to its users.

This dedication to innovation is particularly evident in AWS's investments in machine learning (ML) and artificial intelligence (AI). With services like Amazon SageMaker and AWS Deep Learning, AWS has democratized ML and AI, making these advanced technologies accessible to developers and businesses of all sizes. In the future, we can anticipate even more sophisticated ML and AI capabilities, empowering businesses to extract valuable insights and create intelligent applications.

Global Reach: Expanding the AWS Footprint

AWS's global infrastructure, comprising data centers in numerous regions worldwide, has been key in providing low-latency access and backup to customers globally. As the demand for cloud services continues to surge, AWS's expansion efforts are expected to persist. This means an even broader global presence, ensuring that AWS remains a reliable partner for organizations seeking to operate on a global scale.

Industry-Specific Solutions: Tailored for Success

Every industry has its unique challenges and requirements. AWS recognizes this and has been increasingly tailoring its services to cater to specific industries, including healthcare, finance, manufacturing, and more. This trend is likely to intensify in the future, with AWS offering industry-specific solutions and compliance certifications. This ensures that organizations in regulated sectors can leverage the power of the cloud while adhering to strict industry standards.

Edge Computing: A Thriving Frontier

The rise of the Internet of Things (IoT) and the growing importance of edge computing are reshaping the technology landscape. AWS is positioned to capitalize on this trend by investing in edge services. Edge computing enables real-time data processing and analysis at the edge of the network, a capability that's becoming increasingly critical in scenarios like autonomous vehicles, smart cities, and industrial automation.

Sustainability Initiatives: A Greener Cloud

Sustainability is a primary concern in today's mindful world. AWS has already committed to sustainability with initiatives like the "AWS Sustainability Accelerator." In the future, we can expect more green data centers, eco-friendly practices, and a continued focus on reducing the environmental impact of cloud services. AWS's dedication to sustainability aligns with the broader industry trend towards environmentally responsible computing.

Security and Compliance: Paramount Concerns

The ever-growing importance of data privacy and security cannot be overstated. AWS has been proactive in enhancing its security services and compliance offerings. This trend will likely continue, with AWS introducing advanced security measures and compliance certifications to meet the evolving threat landscape and regulatory requirements.

Serverless Computing: A Paradigm Shift

Serverless computing, characterized by services like AWS Lambda and AWS Fargate, is gaining rapid adoption due to its simplicity and cost-effectiveness. In the future, we can expect serverless architecture to become even more mainstream. AWS will continue to refine and expand its serverless offerings, simplifying application deployment and management for developers and organizations.

Hybrid and Multi-Cloud Solutions: Bridging the Gap

AWS recognizes the significance of hybrid and multi-cloud environments, where organizations blend on-premises and cloud resources. Future developments will likely focus on effortless integration between these environments, enabling businesses to leverage the advantages of both on-premises and cloud-based infrastructure.

Training and Certification: Nurturing Talent

AWS professionals with advanced skills are increasingly in demand. Platforms like ACTE Technologies have stepped up to offer comprehensive AWS training and certification programs. These programs equip individuals with the skills needed to excel in the world of AWS and cloud computing. As the cloud becomes increasingly integral to business operations, certified AWS professionals will continue to be in high demand.

In conclusion, the future of AWS shines brightly with promise. As a leader in cloud computing, AWS remains committed to continuous innovation, global expansion, industry-specific solutions, sustainability, security, and empowering businesses with advanced technologies. For those looking to embark on a career or excel further in the realm of AWS, platforms like ACTE Technologies offer industry-aligned training and certification programs.

As businesses increasingly rely on cloud services to drive their digital transformation, AWS will continue to play a key role in reshaping industries and empowering innovation. Whether you are an aspiring cloud professional or a seasoned expert, staying ahead of AWS's evolving landscape is essential. The future of AWS is not just about technology; it's about the limitless possibilities it offers to organizations and individuals willing to embrace the cloud's transformative power.


Navigating the Cloud Landscape: Unleashing Amazon Web Services (AWS) Potential

In the ever-evolving tech landscape, businesses are in a constant quest for innovation, scalability, and operational optimization. Enter Amazon Web Services (AWS), a robust cloud computing juggernaut offering a versatile suite of services tailored to diverse business requirements. This blog explores the myriad applications of AWS across various sectors, providing a transformative journey through the cloud.

Harnessing Computational Agility with Amazon EC2

Central to the AWS ecosystem is Amazon EC2 (Elastic Compute Cloud), a pivotal player reshaping the cloud computing paradigm. Offering scalable virtual servers, EC2 empowers users to seamlessly run applications and manage computing resources. This adaptability enables businesses to dynamically adjust computational capacity, ensuring optimal performance and cost-effectiveness.

Redefining Storage Solutions

AWS addresses the critical need for scalable and secure storage through services such as Amazon S3 (Simple Storage Service) and Amazon EBS (Elastic Block Store). S3 acts as a dependable object storage solution for data backup, archiving, and content distribution. Meanwhile, EBS provides persistent block-level storage designed for EC2 instances, guaranteeing data integrity and accessibility.

Streamlined Database Management: Amazon RDS and DynamoDB

Database management undergoes a transformation with Amazon RDS, simplifying the setup, operation, and scaling of relational databases. Be it MySQL, PostgreSQL, or SQL Server, RDS provides a frictionless environment for managing diverse database workloads. For enthusiasts of NoSQL, Amazon DynamoDB steps in as a swift and flexible solution for document and key-value data storage.

Networking Mastery: Amazon VPC and Route 53

AWS empowers users to construct a virtual sanctuary for their resources through Amazon VPC (Virtual Private Cloud). This virtual network facilitates the launch of AWS resources within a user-defined space, enhancing security and control. Simultaneously, Amazon Route 53, a scalable DNS web service, ensures seamless routing of end-user requests to globally distributed endpoints.

Global Content Delivery Excellence with Amazon CloudFront

Amazon CloudFront emerges as a dynamic content delivery network (CDN) service, securely delivering data, videos, applications, and APIs on a global scale. This ensures low latency and high transfer speeds, elevating user experiences across diverse geographical locations.

AI and ML Prowess Unleashed

AWS propels businesses into the future with advanced machine learning and artificial intelligence services. Amazon SageMaker, a fully managed service, enables developers to rapidly build, train, and deploy machine learning models. Additionally, Amazon Rekognition provides sophisticated image and video analysis, supporting applications in facial recognition, object detection, and content moderation.

Big Data Mastery: Amazon Redshift and Athena

For organizations grappling with massive datasets, AWS offers Amazon Redshift, a fully managed data warehouse service. It facilitates the execution of complex queries on large datasets, empowering informed decision-making. Simultaneously, Amazon Athena allows users to analyze data in Amazon S3 using standard SQL queries, unlocking invaluable insights.

In conclusion, Amazon Web Services (AWS) stands as an all-encompassing cloud computing platform, empowering businesses to innovate, scale, and optimize operations. From adaptable compute power and secure storage solutions to cutting-edge AI and ML capabilities, AWS serves as a robust foundation for organizations navigating the digital frontier. Embrace the limitless potential of cloud computing with AWS – where innovation knows no bounds.


Best AWS SageMaker Developer

SoftmaxAI is a reputed AWS cloud consultant in India. We have the experience and knowledge to build comprehensive strategies and develop solutions that maximize your return on AWS investment. We have a certified team of developers skilled in AWS DeepLens, Amazon Forecast, PyTorch on AWS, and Amazon SageMaker who leverage machine learning tools and design custom algorithms to improve your ROI.


In this post, we demonstrate how to use SageMaker AI to apply the Random Cut Forest (RCF) algorithm to detect anomalies in spacecraft position, velocity, and quaternion orientation data from NASA and Blue Origin’s demonstration of lunar Deorbit, Des #AI #ML #Automation


No-code data preparation for time series forecasting using Amazon SageMaker Canvas


The Amazon EU Design and Construction (Amazon D&C) team is the engineering team designing and constructing Amazon warehouses. The team navigates a large volume of documents and locates the right information to make sure the warehouse design meets the highest standards. In the post A generative AI-powered solution on Amazon SageMaker to help Amazon EU […]


The Power of AI and Human Collaboration in Media Content Analysis

In today’s world, binge-watching has become a way of life not just for Gen Z but also for many baby boomers. Viewers are watching more content than ever. In particular, Over-The-Top (OTT) and Video-On-Demand (VOD) platforms provide a rich selection of content choices anytime, anywhere, and on any screen. With proliferating content volumes, media companies face challenges in preparing and managing their content. Managing it well is crucial to providing a high-quality viewing experience and monetizing content effectively.

Some of the use cases involved are:

Finding the opening credits, intro start and end, recap start and end, and other video segments

Choosing the right spots to insert advertisements so that breaks fall at logical pauses for viewers

Creating automated, personalized trailers by extracting interesting themes from videos

Identifying audio and video synchronization issues

While these tasks were traditionally handled by large teams of trained human workers, many AI-based approaches have evolved, such as Amazon Rekognition’s video segmentation API. AI models are getting better at addressing the use cases above, but they are typically pre-trained on a different type of content and may not be accurate for your content library. So what if we use an AI-enabled human-in-the-loop approach to reduce cost and improve the accuracy of video segmentation tasks?

In our approach, AI-based APIs provide weak labels for video segments and send them to trained human reviewers, who turn them into picture-perfect segments. The approach greatly improves your understanding of your media content and helps generate ground truth to fine-tune AI models. Below is the workflow of the end-to-end solution:

Raw media content is uploaded to Amazon S3 cloud storage. The content may need to be preprocessed or transcoded to make it suitable for streaming platform (e.g convert to .mp4, upsample or downsample)

AWS Elemental MediaConvert transcodes file-based content into live stream assets quickly and reliably. Convert content libraries of any size for broadcast and streaming. Media files are transcoded to .mp4 format

Amazon Rekognition Video provides an API that identifies useful segments of video, such as black frames and end credits.

Objectways has developed a Video segmentation annotator custom workflow with SageMaker Ground Truth labeling service that can ingest labels from Amazon Rekognition. Optionally, you can skip step#3 if you want to create your own labels for training custom ML model or applying directly to your content.

The content may have privacy and digitial rights management requirements and protection. The Objectway’s Video Segmentaton tool also supports Digital Rights Management provider integration to ensure only authorized analyst can look at the content. Moreover, the content analysts operate out of SOC2 TYPE2 compliant facilities where no downloads or screen capture are allowed.

The media analysts at Objectways are experts in content understanding and video segmentation labeling for a variety of use cases. Depending on your accuracy requirements, each video can be reviewed or annotated by two independent analysts, and thresholds on the difference between their segment time codes are used to weed out human bias (e.g., a pair of labels is out of consensus if the time codes differ by more than 5 milliseconds). Out-of-consensus labels can be adjudicated by a senior quality analyst to provide higher quality guarantees.

The Objectways media analyst team provides throughput and quality guarantees and delivers daily throughput based on your business needs. The segmented content labels are then saved to Amazon S3 in JSON manifest format and can be ingested directly into your media streaming platform.
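The review loop above can be sketched in a few lines of Python. This is a minimal, hypothetical sketch rather than the Objectways tool: it assumes the weak labels arrive in the shape of Amazon Rekognition's `GetSegmentDetection` response (a `Segments` list whose items carry `Type`, `StartTimestampMillis`, `EndTimestampMillis`, and, for technical cues, a `TechnicalCueSegment` with `Type` and `Confidence`). The function names, the 80% confidence cutoff, the position-based pairing of the two analysts' segments, and the `video-segments` manifest key are illustrative assumptions; only `source-ref` follows the SageMaker Ground Truth manifest convention.

```python
import json
from itertools import zip_longest

# Hypothetical sketch of the human-in-the-loop review flow. Input dictionaries
# follow the shape of Rekognition's GetSegmentDetection response; all names,
# thresholds, and the "video-segments" manifest key are illustrative assumptions.


def weak_labels(rekognition_response, min_confidence=80.0):
    """Keep confident technical-cue segments as (start_ms, end_ms, label) tuples."""
    labels = []
    for seg in rekognition_response.get("Segments", []):
        if seg.get("Type") != "TECHNICAL_CUE":
            continue  # SHOT segments are ignored in this sketch
        cue = seg["TechnicalCueSegment"]
        if cue["Confidence"] >= min_confidence:
            labels.append((seg["StartTimestampMillis"], seg["EndTimestampMillis"], cue["Type"]))
    return labels


def in_consensus(seg_a, seg_b, threshold_ms=5):
    """Analysts agree when labels match and neither time code differs by more than threshold_ms."""
    if seg_a is None or seg_b is None:
        return False  # one analyst marked a segment the other did not
    start_a, end_a, label_a = seg_a
    start_b, end_b, label_b = seg_b
    return (
        label_a == label_b
        and abs(start_a - start_b) <= threshold_ms
        and abs(end_a - end_b) <= threshold_ms
    )


def split_for_adjudication(analyst_a, analyst_b, threshold_ms=5):
    """Pair segments by position; out-of-consensus pairs go to a senior analyst."""
    agreed, disputed = [], []
    for pair in zip_longest(analyst_a, analyst_b):
        target = agreed if in_consensus(*pair, threshold_ms=threshold_ms) else disputed
        target.append(pair)
    return agreed, disputed


def manifest_line(video_uri, segments):
    """Serialize one video's final segments as a single JSON Lines record."""
    return json.dumps({
        "source-ref": video_uri,
        "video-segments": [
            {"start_ms": start, "end_ms": end, "label": label}
            for start, end, label in segments
        ],
    })
```

Pairing segments by list position keeps the sketch short; a production tool would more likely match segments by overlap before comparing their time codes.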

Conclusion

Artificial intelligence (AI) has become ubiquitous in Media and Entertainment, where it improves content understanding, increases user engagement, and drives ad revenue. The AI-enabled, human-in-the-loop approach outlined here is a best-of-breed solution for reducing human labeling cost while delivering the highest quality. The approach can also be extended to other use cases such as content moderation, ad placement, and personalized trailer generation.

Contact [email protected] for more information.


Simplify medical image classification using Amazon SageMaker Canvas

📢 Exciting news! Check out this blog post on how to simplify medical image classification using Amazon SageMaker Canvas. 🧪🖥️ For healthcare professionals looking to leverage machine learning in image analysis, this visual tool makes it a breeze to build and deploy ML models without any coding or specialized knowledge. 🙌 Learn how Amazon SageMaker Canvas provides a user-friendly interface to select data, specify output, and automatically build and train models for accurate diagnosis and treatment decisions. 💡 Read the blog post here: [Simplify medical image classification using Amazon SageMaker Canvas](https://ift.tt/IHChKr6) Take advantage of this powerful tool to simplify your workflows and drive advancements in healthcare research. Share your thoughts and let's continue innovating together! 💪 #machinelearning #healthtech #medicalimaging #AmazonSageMakerCanvas #innovation List of Useful Links: AI Scrum Bot - ask about AI scrum and agile Our Telegram @itinai Twitter - @itinaicom

#itinai.com#AI#News#Simplify medical image classification using Amazon SageMaker Canvas#AI News#AI tools#AWS Machine Learning Blog#Innovation#itinai#LLM#ML#Productivity#Ramakant Joshi Simplify medical image classification using Amazon SageMaker Canvas


U.S. Machine Learning Market Size, Share | CAGR 37.2% During 2023-2030

The U.S. machine learning market size was valued at USD 4.74 billion in 2022 and is projected to grow from USD 6.49 billion in 2023 to USD 59.30 billion by 2030, at a CAGR of 37.2%. The U.S. machine learning (ML) market encompasses the development, deployment, and application of algorithms and statistical models that enable computer systems to perform tasks without explicit instructions, relying instead on patterns and inference. Machine learning is a key subset of artificial intelligence (AI) and plays a vital role across a broad range of sectors, including healthcare, finance, retail, manufacturing, transportation, and government.
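As a quick check on the arithmetic, a compound annual growth rate (CAGR) is (end value / start value)^(1 / years) - 1. Applying it to the 2023 and 2030 figures above over seven years gives roughly 37.2%; the helper below is a generic formula, not the report's own methodology.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# USD billions, 2023 -> 2030 (seven compounding years)
rate = cagr(6.49, 59.30, 2030 - 2023)
print(f"{rate:.1%}")  # prints 37.2%
```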

Market Scope:

Technology Types: Supervised learning, unsupervised learning, reinforcement learning, deep learning, natural language processing (NLP), and neural networks.

Deployment Options: Cloud, on-premises, and hybrid solutions.

Applications: Predictive analytics, image and voice recognition, recommendation engines, fraud detection, robotic process automation, and autonomous systems.

End Users: Businesses, research organizations, government bodies, and technology startups.

Request a free sample here: https://www.fortunebusinessinsights.com/enquiry/request-sample-pdf/u-s-machine-learning-ml-market-107479

Key Players:

Amazon, Inc. (U.S.)

Fair Isaac Corporation (U.S.)

RapidMiner Inc. (U.S.)

Microsoft Corporation (U.S.)

H2O.ai (U.S.)

IBM Corporation (U.S.)

Oracle Corporation (U.S.)

Hewlett Packard Enterprise Company (U.S.)

Teradata (U.S.)

TIBCO Software Inc. (U.S.)

Key Industry Developments:

June 2022 – Teradata announced the general availability of the integration between its Teradata Vantage multi-cloud data and analytics platform and Amazon SageMaker. The initiative supports Teradata's Analytics 123 framework, giving organizations that struggle to bring AI/ML projects to production a systematic way to scale the deployment of their analytical models.

October 2022 – IBM released its artificial intelligence System-on-Chip (SoC) to the public. The chip is engineered to train and run deep learning models far more efficiently and considerably faster than CPUs. The SoC features 32 processing cores and 23 billion transistors, and is built on a 5 nm process node.

Market Trends:

Rising interest in explainable AI (XAI) and responsible ML practices.

Increased use of automated machine learning (AutoML) for non-experts.

Integration of ML with edge computing for real-time analytics.

Rapid adoption in healthcare, fintech, and cybersecurity domains.

Speak to an analyst: https://www.fortunebusinessinsights.com/enquiry/speak-to-analyst/u-s-machine-learning-ml-market-107479

About Us:

At Fortune Business Insights, we empower businesses to thrive in rapidly evolving markets. Our comprehensive research solutions, customized services, and forward-thinking insights support organizations in overcoming disruption and unlocking transformational growth.

With deep industry focus, robust methodologies, and extensive global coverage, we deliver actionable market intelligence that drives strategic decision-making. Whether through syndicated reports, bespoke research, or hands-on consulting, our result-oriented team partners with clients to uncover opportunities and build the businesses of tomorrow.

We go beyond data, offering clarity, confidence, and a competitive edge in a complex world.

Contact Us:

US +1 833 909 2966

UK +44 808 502 0280

APAC +91 744 740 1245

Email: [email protected]

#U.S. Machine Learning Market Share#U.S. Machine Learning Market Size#U.S. Machine Learning Market Industry#U.S. Machine Learning Market Analysis#U.S. Machine Learning Market Driver#U.S. Machine Learning Market Research#U.S. Machine Learning Market Growth


AI Model Development: How to Build Intelligent Models for Business Success

AI model development is revolutionizing how companies tackle challenges, make informed decisions, and delight customers. If lengthy reports, slow processes, or missed insights are holding you back, you’re in the right place. You’ll learn practical steps to leverage AI models, plus why Ahex Technologies should be your go-to partner.

Read the original article for a more in-depth guide: AI Model Development by Ahex

What Is AI Model Development?

AI model development is the practice of designing, training, and deploying algorithms that learn from your data to automate tasks, make predictions, or uncover hidden insights. By turning raw data into actionable intelligence, you empower your team to focus on strategy while machines handle the heavy lifting.

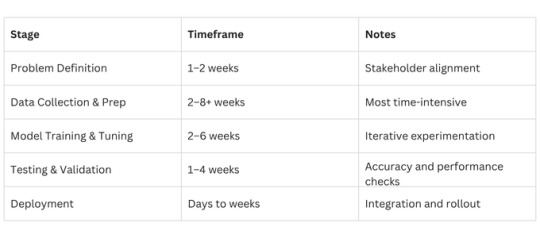

The AI Model Development Process

Define the Problem: Clarify the business goal. Do you need sales forecasts, customer-churn predictions, or automated text analysis?

Gather & Prepare Data: Collect, clean, and structure data from internal systems or public sources. Quality here drives model performance.

Select & Train the Model: Choose an algorithm, such as simple regression for straightforward tasks or neural nets for complex patterns. Split data into training and testing sets for validation.

Test & Validate: Measure accuracy, precision, recall, or other KPIs. Tweak hyperparameters until you achieve reliable results.

Deploy & Monitor: Integrate the model into your workflows. Continuously track performance and retrain as data evolves.
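The split and validation steps can be made concrete with a small, dependency-free sketch. It assumes a binary classification task with labels encoded as 1 (positive) and 0 (negative); the function names, the 20% test fraction, and the fixed seed are illustrative choices rather than part of any particular library.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of the rows and hold out a fraction of them for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[: len(shuffled) - n_test], shuffled[len(shuffled) - n_test:]


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, and recall for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # of predicted positives, how many were right
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # of actual positives, how many were found
    }


train, test = train_test_split(list(range(10)))
print(len(train), len(test))
print(classification_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))
```

Libraries such as scikit-learn provide equivalent helpers (`train_test_split`, `precision_score`, `recall_score`); the hand-written version simply makes the formulas visible.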

AI Model Development in 2025

Custom AI models are no longer optional; they’re essential. Off-the-shelf solutions often can’t match bespoke systems trained on your own data. In 2025, businesses that leverage tailored AI enjoy faster decision-making, sharper insights, and increased competitiveness.

Why Businesses Need Custom AI Model Development

Precision & Relevance: Models built on your data yield more accurate, context-specific insights.

Data Security: Owning your models means full control over sensitive information — crucial in finance, healthcare, and beyond.

Scalability: As your business grows, your AI grows with you. Update and retrain instead of starting from scratch.

How to Create an AI Model from Scratch

Define the Problem

Gather & Clean Data

Choose an Algorithm (e.g., regression, classification, deep learning)

Train & Validate on split datasets

Deploy & Monitor in production

Break each step into weekly sprints, and you’ll have a minimum viable model in just a few weeks.
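As a minimal from-scratch illustration of these steps, the sketch below fits a one-feature linear regression with ordinary least squares and checks it on held-out data. The toy data set (monthly ad spend versus sales) is invented for illustration, and the split is chronological so that later months are used for testing.

```python
def fit_linear_regression(xs, ys):
    """Ordinary least squares for a single feature; returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return intercept, slope


def mean_absolute_error(xs, ys, intercept, slope):
    """Average absolute gap between predictions and actual values."""
    return sum(abs(y - (intercept + slope * x)) for x, y in zip(xs, ys)) / len(xs)


# Hypothetical toy data: (ad spend, sales) per month, roughly sales = 3 + 2 * spend.
data = [(1, 5.1), (2, 6.9), (3, 9.2), (4, 11.0), (5, 12.8), (6, 15.1), (7, 17.0), (8, 18.9)]
train, test = data[:6], data[6:]  # train on earlier months, validate on later ones

intercept, slope = fit_linear_regression(*zip(*train))
mae = mean_absolute_error(*zip(*test), intercept, slope)
print(f"sales = {intercept:.2f} + {slope:.2f} * spend, test MAE = {mae:.2f}")
```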

How to Make an AI Model That Delivers Results

Set Clear Objectives: Tie every metric to a business outcome such as revenue growth, cost savings, or customer retention.

Invest in Data Quality: The “garbage in, garbage out” rule is real. High-quality data yields high-quality insights.

Choose Explainable Models: Transparency builds trust with stakeholders and meets regulatory requirements.

Stress-Test in Real Scenarios: Validate your model against edge cases to catch blind spots.

Maintain & Retrain: Commit to ongoing model governance to adapt to new trends and data.
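One simple way to decide when to retrain is to compare live inputs against the training data. The sketch below uses a hypothetical mean-shift heuristic: it flags a feature when its live mean moves by more than a chosen number of training standard deviations. The 0.5 threshold and the function name are assumptions; production systems often rely on richer drift tests.

```python
from statistics import mean, stdev


def needs_retraining(train_values, live_values, max_shift=0.5):
    """Flag drift when the live mean moves more than `max_shift` training standard deviations."""
    baseline_sd = stdev(train_values)  # requires at least two training values
    if baseline_sd == 0:
        # A constant training feature: any change in the live mean counts as drift.
        return mean(live_values) != mean(train_values)
    shift = abs(mean(live_values) - mean(train_values)) / baseline_sd
    return shift > max_shift


training_feature = [10, 12, 11, 13, 9, 10, 12, 11]
print(needs_retraining(training_feature, [11, 12, 10, 11]))  # stable inputs
print(needs_retraining(training_feature, [14, 15, 13, 14]))  # shifted inputs
```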

Top Tools & Frameworks to Build AI Models That Work

PyTorch: Flexible dynamic graphs for rapid prototyping.

Keras (TensorFlow): User-friendly API with strong community support.

LangChain: Orchestrates large language models for complex applications.

Vertex AI: Google’s end-to-end platform with AutoML.

Amazon SageMaker: AWS-managed service covering development to deployment.

Langflow & AutoGen: Low-code solutions to accelerate AI workflows.

Breaking Down AI Model Development Challenges

Data Quality & Availability: Address gaps early to avoid costly rework.

Transparency (“Black Box” Issues): Use interpretable models or explainability tools.

High Costs & Skills Gaps: Leverage a specialized partner to access expertise and control budgets.

Integration & Scaling: Plan for seamless API-based deployment into your existing systems.

Security & Compliance: Ensure strict protocols to protect sensitive data.

Typical AI Model Timelines

For simple pilots, expect 1–2 months; complex enterprise AI can take 4+ months.

Cost Factors for AI Development

Why Ahex Technologies Is the Best Choice for Mobile App Development


Holistic AI Expertise: Our AI solutions integrate seamlessly with mobile platforms you already use.

Client-First Approach: We tailor every model to your unique workflow and customer journey.

End-to-End Support: From concept to deployment and beyond, we ensure your AI and mobile efforts succeed in lockstep.

Proven Track Record: Dozens of businesses trust us to deliver secure, scalable, and compliant AI solutions.

How Ahex Technologies Can Help You Build Smarter AI Models

At Ahex Technologies, our AI Development Services cover everything from proof-of-concept to full production rollouts. We:

Diagnose your challenges through strategic workshops

Design custom AI roadmaps aligned to your goals

Develop robust, explainable models

Deploy & Manage your AI in the cloud or on-premises

Monitor & Optimize continuously for peak performance

Learn more about our approach: AI Development Services. Ready to get started? Contact us.

Final Thoughts: Choosing the Right AI Partner

Selecting a partner who understands both the technology and your business is critical. Look for:

Proven domain expertise

Transparent communication

Robust security practices

Commitment to ongoing optimization

With the right partner, like Ahex Technologies, you’ll transform data into a competitive advantage.

FAQs on AI Model Development

1. What is AI model development? Designing, training, and deploying algorithms that learn from data to automate tasks and make predictions.

2. What are the 4 types of AI models? Reactive machines, limited memory, theory of mind, and self-aware AI — ranging from simple to advanced cognitive abilities.

3. What is the AI development life cycle? Problem definition → Data prep → Model building → Testing → Deployment → Monitoring.

4. How much does AI model development cost? Typically $10,000–$500,000+, depending on project complexity, data needs, and integration requirements.

Ready to turn your data into growth? Explore AI Model Development by Ahex and our AI Development Services. Let’s talk!


AWS Security 101: Protecting Your Cloud Investments

In the ever-evolving landscape of technology, few names resonate as strongly as Amazon.com. This global giant, known for its e-commerce prowess, has a lesser-known but equally influential arm: Amazon Web Services (AWS). AWS is a powerhouse in the world of cloud computing, offering a vast and sophisticated array of services and products. In this comprehensive guide, we'll embark on a journey to explore the facets and features of AWS that make it a driving force for individuals, companies, and organizations seeking to utilise cloud computing to its fullest capacity.

Amazon Web Services (AWS): A Technological Titan

At its core, AWS is a cloud computing platform that empowers users to create, deploy, and manage applications and infrastructure with unparalleled scalability, flexibility, and cost-effectiveness. It's not just a platform; it's a digital transformation enabler. Let's dive deeper into some of the key components and features that define AWS:

1. Compute Services: The Heart of Scalability

AWS boasts services like Amazon EC2 (Elastic Compute Cloud), a scalable virtual server solution, and AWS Lambda for serverless computing. These services provide users with the capability to efficiently run applications and workloads with precision and ease. Whether you need to host a simple website or power a complex data-processing application, AWS's compute services have you covered.
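To make the serverless model concrete: with AWS Lambda you supply a function, and the service invokes it with an event and a context object. The sketch below is a minimal Python handler; it assumes an invocation with a JSON object such as `{"name": "AWS"}` and returns the `statusCode`/`headers`/`body` shape that an Amazon API Gateway proxy integration expects. The handler name and the `name` event field are illustrative.

```python
import json


def lambda_handler(event, context):
    """Entry point that AWS Lambda invokes with the triggering event and a runtime context."""
    # `event` is the invocation payload; the "name" field is an illustrative assumption.
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is a plain function, it can be exercised locally, for example with `lambda_handler({"name": "AWS"}, None)`, before it is deployed.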

2. Storage Services: Your Data's Secure Haven

In the age of data, storage is paramount. AWS offers a diverse set of storage options. Amazon S3 (Simple Storage Service) caters to scalable object storage needs, while Amazon EBS (Elastic Block Store) is ideal for block storage requirements. For archival purposes, Amazon Glacier is the go-to solution. This comprehensive array of storage choices ensures that diverse storage needs are met, and your data is stored securely.

3. Database Services: Managing Complexity with Ease

AWS provides managed database services that simplify the complexity of database management. Amazon RDS (Relational Database Service) is perfect for relational databases, while Amazon DynamoDB offers a seamless solution for NoSQL databases. Amazon Redshift, on the other hand, caters to data warehousing needs. These services take the headache out of database administration, allowing you to focus on innovation.

4. Networking Services: Building Strong Connections

Network isolation and robust networking capabilities are made easy with Amazon VPC (Virtual Private Cloud). AWS Direct Connect facilitates dedicated network connections, and Amazon Route 53 takes care of DNS services, ensuring that your network needs are comprehensively addressed. In an era where connectivity is king, AWS's networking services rule the realm.

5. Security and Identity: Fortifying the Digital Fortress

In a world where data security is non-negotiable, AWS prioritizes security with services like AWS IAM (Identity and Access Management) for access control and AWS KMS (Key Management Service) for encryption key management. Your data remains fortified, and access is strictly controlled, giving you peace of mind in the digital age.
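Access control in IAM is expressed as JSON policy documents. As a minimal least-privilege sketch, the function below builds an identity-based policy that grants read-only access to a single S3 bucket; the bucket name is hypothetical. Note that `s3:ListBucket` applies to the bucket ARN, while `s3:GetObject` applies to the objects under it.

```python
import json


def read_only_bucket_policy(bucket_name):
    """Build an identity-based IAM policy granting read-only access to one S3 bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing keys is an action on the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket_name}"],
            },
            {
                # Reading objects is an action on the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket_name}/*"],
            },
        ],
    }


print(json.dumps(read_only_bucket_policy("example-reports-bucket"), indent=2))
```

The resulting JSON string could then be registered with IAM, for example through boto3's `iam.create_policy(PolicyName=..., PolicyDocument=...)`, and attached to a role or group.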

6. Analytics and Machine Learning: Unleashing the Power of Data

In the era of big data and machine learning, AWS is at the forefront. Services like Amazon EMR (Elastic MapReduce) handle big data processing, while Amazon SageMaker provides the tools for developing and training machine learning models. Your data becomes a strategic asset, and innovation knows no bounds.

7. Application Integration: Seamlessness in Action

AWS fosters seamless application integration with services like Amazon SQS (Simple Queue Service) for message queuing and Amazon SNS (Simple Notification Service) for event-driven communication. Your applications work together harmoniously, creating a cohesive digital ecosystem.

8. Developer Tools: Powering Innovation

AWS equips developers with a suite of powerful tools, including AWS CodeDeploy, AWS CodeCommit, and AWS CodeBuild. These tools simplify software development and deployment processes, allowing your teams to focus on innovation and productivity.

9. Management and Monitoring: Streamlined Resource Control

Effective resource management and monitoring are facilitated by Amazon CloudWatch for monitoring and AWS CloudFormation for infrastructure as code (IaC). Managing your cloud resources becomes a streamlined and efficient process, reducing operational overhead.
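Infrastructure as code means resources are declared in a template rather than created by hand in the console. As an illustrative sketch, the Python function below emits a minimal CloudFormation template declaring one versioned, encrypted S3 bucket; the logical ID `ReportsBucket` is a hypothetical name, and JSON is used because CloudFormation accepts both JSON and YAML templates.

```python
import json


def bucket_template(logical_id="ReportsBucket"):
    """Return a minimal CloudFormation template with one versioned, encrypted S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Minimal example stack (illustrative)",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"},
                    "BucketEncryption": {
                        "ServerSideEncryptionConfiguration": [
                            {"ServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
                        ]
                    },
                },
            }
        },
        # Ref on an S3 bucket resolves to the generated bucket name.
        "Outputs": {"BucketName": {"Value": {"Ref": logical_id}}},
    }


print(json.dumps(bucket_template(), indent=2))
```

Writing the output to a file such as `template.json` and running `aws cloudformation deploy --template-file template.json --stack-name example-stack` would create the stack; the stack name here is hypothetical.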

10. Global Reach: Empowering Global Presence

With Availability Zones (each made up of one or more isolated data centers) spread across multiple Regions worldwide, AWS enables users to deploy applications close to their end users. This improves performance and reduces latency, which is crucial for global digital operations.

In conclusion, Amazon Web Services (AWS) is not just a cloud computing platform; it's a technological titan that empowers organizations and individuals to harness the full potential of cloud computing. Whether you're an aspiring IT professional looking to build a career in the cloud or a seasoned expert seeking to sharpen your skills, understanding AWS is paramount.

In today's technology-driven landscape, AWS expertise opens doors to endless opportunities. At ACTE Institute, we recognize the transformative power of AWS, and we offer comprehensive training programs to help individuals and organizations master the AWS platform. We are your trusted partner on the journey of continuous learning and professional growth. Embrace AWS, embark on a path of limitless possibilities in the world of technology, and let ACTE Institute be your guiding light. Your potential awaits, and together, we can reach new heights in the ever-evolving world of cloud computing. Welcome to the AWS Advantage, and let's explore the boundless horizons of technology together!
