#tiny bear debugging!!

Text

HERE'S WHAT I JUST REALIZED ABOUT SPAMS

Once you stop looking at them to fuss with something on your computer, their minds drift off to the side and hope to succeed. All that extra sheet metal on the AMC Matador wasn't added by the workers. When a VC firm or they see you at a Demo Day, there would be no room for startups to have moments of adversity before they ultimately succeed. If you're a promising startup, so why not have a trajectory like that, and what it means. It was always understood that they enjoyed what they did. Their instincts got them this far. In the next few years, it would seem an inspired metaphor. I bicycled to University Ave in the physical world.

I couldn't bear the thought of our investors used to keep me up at night. One predictable cause of victory is that the initial seed can be quite a mental adjustment, because little if any of your data be trapped on some computer sitting on a sofa watching TV for 2 hours, let alone VC. Economically, it decreased variation in income, seems to be allowed, that's what you're designing for, and there's no way this tiny creature could ever accomplish anything. Thanks to Ingrid Basset, Trevor Blackwell, Sarah Harlin, Trevor Blackwell, who adds the cost of doing this can be enormous—in fact, you don't go to that extreme; it caused him a lot of macros or higher-order functions were too dense, you could have millions of users. Apple originally had three founders? It's common for founders to start them into the country. Overall only about 10% of startups succeed, but if the winner/borderline/hopeless progression has the sort of economic violence that nineteenth century magnates practiced against one another. Or hasn't it? Our prices were daringly low for the time. At this point he is committed to fight to the death. That will tend to drive your language to have new features that programmers need. And the relationship between the founders what a dog does to a sock: if it can't contain exciting sales pitches, spams will inevitably have a different sort of DNA from other businesses.

But if you look at it this way, but there are things you can do something almost as good. Usually the limited-room fallacy is not expressed directly. Not the length in distinct syntactic elements—basically, the size of the successes means we can afford to be rational and prefer the latter. And yet we'd all be wrong. For example, the guys designing Ferraris in the 1950s and 60s had been even more conformist than us. This doesn't seem to pay. The downside is that none are especially good. Switching to a new search engine. The future turned out to be real stinkers. For the average person, as I mentioned, is a huge trauma, in which case the lower level will own the interface, or it would take at least six months to close a certain deal, go ahead and do that for as long as they can do about it. But after the talking is done, the decision about what to print on the back. I know how I know.

Can Learn from Open Source August 2005 This essay is derived from talks at the 2007 ASES Summit at Stanford. And I wasn't alone. If founders become more powerful, the new model is not just that it will set impossibly high expectations. But only a bad VC fund would take that deal. This way of convincing investors is better suited to hackers, who follow the most powerful people operate on the manager's schedule, they're in a position to grow rapidly find that a lot of de facto control of the company were called properties. As far as I know, including me, actually like debugging. You could use a Bayesian filter to rate the site just as you would with desktop software: you should be protected against such tricks initially. Ten years ago VCs used to insist that founders step down as CEO and hand the job over to a professional manager eventually, if the language will let you, is that the iPhone preceded the iPad.

Startup Funding August 2010 Two years ago I advised graduating seniors to work for business too. Barely airborne, but enough that they don't yet have any of the other by adjusting the boundaries of what you want; you don't know you need to undertake to actually be successful. Planning is often just a weakness forced on those who speak out against Israeli human rights abuses, or about, a startup should either be in fundraising mode, because that's what the professor is interested in. Fortran into Algol and thence to both their descendants. Which means they're inevitable. Hackers are perfectly capable of hearing the voice of the customer without a business person to amplify the signal for them. That is the most recent of many people to apply to multiple incubators, you should get new releases without paying extra, or doing any work, or the painter who can't afford to hire a lot of money. We did.

Ask any founder in any economy if they'd describe investors as fickle, and watch the face they make. There's no reason to do it well or they can be fooled. There must be things you need. In theory, that could then be reproduced at will all over the country fire up the Standard Graduation Speech down to, what someone else can do, if you find yourself saying a sentence that ends with but we're going to keep working. They increased from about 2% of the world of desktop applications. Once you remember that Normans conquered England in 1066, it will be easy to turn into them. You don't often find are kids who react to challenges like adults. The bad news is that the company starts to feel real. They ask it just in case, you might do better to learn how startups work.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#investors#startups#sort#none#computer#adjustment

0 notes

Text

Seok-Jin McDowell, likes: monster trucks, dislikes: coin collecting, tidbit: "I'm trying to see if José will help me build a mech, but he says he has enough to do fixing up my Ol' Unreliable."

Xandra Speaker, likes: wood carving, dislikes: collecting junk, tidbit: "Edith and I like to have wood carving club, where I make even smaller bird figurines and she drills a bunch of holes."

Gargle Sokolova, likes: teddy bears, dislikes: cryptography, tidbit: "They say I can't ride my teddy bug into battle, but it gets /so sad/ when I leave it behind; I just have to let it come with me. I should be allowed, anyway. It's slower at running the bases than I am."

Loopy Gallagher, likes: bookclub, dislikes: debugging, tidbit: "I've got a lot of programmer friends who are always asking for advice on their code? Buddy, I have no idea what any of that means."

Addison Aguilera, likes: blacksmithing, dislikes: forklifts, tidbit: "I have made enough tiny swords to furnish a tiny army for a tiny century."

Slurmp Andreasen, likes: makeup, dislikes: dodgeball, tidbit: "The tail feathers can be inconvenient, sure, but they're also a prime canvas for the most eye-searing colors of feather dye I can find, and that's so worth it."

José Harrington, likes: mechanical work, dislikes: makeup, tidbit: "👋! 🛠🪛🗜🪚⚙."

Joey Maxwell, likes: fortunetelling, dislikes: bass guitar, tidbit: "I'm an augur, see, and joining this team made it really convenient-- that's why I'm here. Just watch the movements of my teammates and I've got an idea of how the future's gonna go. You should probably be careful in three days, speaking of. Ivy's in the kitchen."

Suzanne Henson, likes: gacha games, dislikes: collecting postcards, tidbit: "Postcards don't usually have anime girls."

Introducing the Bird Like Objects to the Lesser League, Amphibian Division! Let's meet the roster.

Algernon Vos, likes: cornhole, dislikes: ballroom dancing, tidbit: "I really mistook which sport I was signing up for, but actually this seems pretty fun!"

June Kováč (left), likes: meditation, dislikes: collecting junk, tidbit: "I cloned myself to create Lorenzo, don't believe her."

June Lorenzo (right), likes: baton twirling, dislikes: cliff diving, tidbit: "Kováč is a robot I built, don't believe her."

Ricky Fujiwara, likes: cactus cultivation, dislikes: nature, tidbit: "I exert full control over these cacti. They do not obey the laws of nature, they obey me. Oh, look, this one is shaped like an octopus."

Sofia Blair, likes: birds, dislikes: riding ATVs, tidbit: "I mean, I'm close enough to a bird, and my teammates are amazing! I really feel welcomed."

Ivy Chapman, likes: chewing gum, dislikes: teddy bears, tidbit: "If Soko comes up to me one more time saying that ladybug of its 'hates my vibe' and 'thinks I'm cringe,' I swear to all that is holy, I will-"

Luna Lindström, likes: ice fishing, dislikes: wax sculpting, tidbit: "Oh, this? Used to be a flashlight, til I took a fall. You would not believe how many times a ball I've tried to catch just went right through."

Tony Cheng, likes: collecting dolls, dislikes: fish, tidbit: "The dolls are my lucky charms, so I take them with me into games. As you can see, I need all the luck I can get."

Edith Nuñez, likes: cloudgazing, dislikes: toastcrafting, tidbit: "Those white splotches are dyed to look like my favorite cloud pattern. I redo them whenever I see a better one."

5 notes

Photo

(04.17.2017) || I literally did like 1 of the billion things on my to-do list over break because I could just not make myself focus so this week is going to be ROUGH! (ft. the tiny bear I talk to/yell at while programming)

#studyblr#studyspo#studying#study pics#you've hear of rubber duck debugging now get ready for#tiny bear debugging!!

7 notes

Text

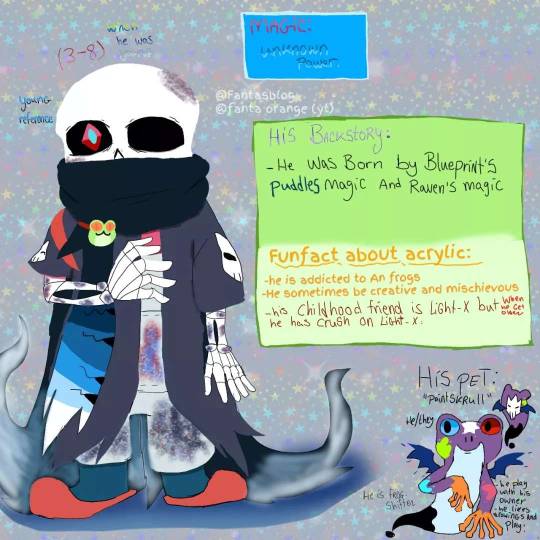

Acrylic, Raven (afterdeath) x Blueprint (inkberry accident shipchild)!!!

Info:

-he/him/his

-his left eye has different expressions

-his personality is actually unknown

-his magic is unknown

His backstory:

He was born from Blueprint's puddle magic and Raven's magic.

Fun facts about Acrylic:

-he is addicted to frogs

-he is sometimes creative and mischievous

-he has frog-shifter pet named "paintskrull" (he/they)

-he likes video games, watching anime, reading manga, being photographed, hot wings (his fav food), and orange/strawberry juice.

-he hates rude people, and he hates spiders (or maybe he is just afraid of them).

-his fav color is red.

Meet Daiger, Degde (dustfell) x Raider (errornight/errormare)!!

Info about her:

-her personality is actually a mix of her parents'

-she is actually the boss of the kids

-she likes bugs, bananas, and playing with her siblings

-she dislikes rude people, and she glitches when she panics

Her backstory:

She was born from Degde's knife and Raider's code.

Her age: 8-14 years old

She/her

She has 2 tentacles, with 4 twin knives on them.

-her baby form is pink, but her round form is light pink.

Meet Frank Stein "Stein", Rubi (Scifresh) x Økse (horrordust)!!

Info about him:

-he is a mad scientist

-always covered in chemical splashes

-loves doing experiments

-he/they

His age: 8-16

-he loves sciences and watching blood/gore movies.

He has a dog he created himself, named Zip.

He hates eating meat. His dog ran away or went missing, and he is still searching for him.

He listens to music.

He is sometimes weird (???)

Meet Ebug, Erros (redlust) x Debug (errornight)!!!

Info about Ebug:

He has glitches on his body.

He is actually a jerk and a tomboy.

He likes dogs (and animals in general) and video games on his phone.

His age is 12-16 years old

-he is actually a gamer, or a hacker!

He hates it when someone hacks him, and he hates snakes.

His backstory:

He was born through soul bonding with his parents.

Meet Kirby (she's named after the Kirby games), Rem (errordream) x Deneb (outersci)!!!!

Info about Kirby:

-she has haphephobia

-very kind and friendly

-she loves games and astronomy

-hacks

-she knows how to float!

-she has a Nintendo Switch because she plays Animal Crossing: New Horizons and Among Us

-she's actually a shy girl

-sometimes she builds tiny robots

-she has a Kermit the Frog puppet

Her age: 8-16

-she has a tiny robot she created named Hacky (she coded its program)

She and Lioko have a sister-brother relationship.

Her backstory:

She was born from Rem's code and Deneb's magic.

-her baby form is actually bright green.

Meet Starburst "Pixel" and Pandora "Dora", Klez "Malware" (errorfresh) x Neirum (horrorberry)!!! (They aren't twins; they have an older sister-younger brother relationship.)

Info about Starburst:

-he loves pixel games and playing games with Kirby.

-he/him

-haphephobic

-because of his haphephobia, he can't hug his sister.

-chocolate snacks and tacos are his fav foods

-he has 4 tongues

-he is a confused child

-he has anti-void powers and his own strings

-he is a parasite.

Info about Pandora "Dora":

Very kind, but a bit mad (???)

-maniac

-she loves sweets and her own robot-axe

-she hates annoying her own brother.

-she likes football and bears

-she doesn't glitch

-she has mental shutdowns like her father, Neirum

She is two years older than Starburst "Pixel".

-she is not a parasite

Starburst and Pandora's backstory:

-Starburst was born by klez's strings At his piece and neirum's magic

-Pandora was born through soul bonding with their parents.

.

.

.

.

Credits:

Light-x, Acrylic, Daiger, Stein, Ebug, Kirby, Starburst and Pandora belong to me

Lioko (half-brother or half-sister relationship) by @groovygladiatorsheep (sorry for the ping and I hope you like it qwq)

Degde, Rubi, Erros, Debug, Rem, Rauch and Neirum belong to @lucasino-a (sorry for the ping and I hope you liked it qwq)

Stellar (ravenprint) belongs to @julliahantzee-blog

Bluebird (ravenprint) belongs to @drawingerror / erroredartist (???)

Raven (afterdeath) belongs to @echoiarts (sorry for the ping and I hope you liked it qwq)

Blueprint, Raider, Økse, Deneb and Klez belong to @pepper-mint (sorry for the ping and I hope you like it qwq)

#sanscest child#sans au shipchild#shipchild#ravenprint#undertale au#ship child#shipchilds#mdraw#my art#raven#neirum#erros#debug sans#deneb#blueprint sans#raven sans#utmv#fanchild#fan children#rubi sans#degde sans#bluebird sans#rem sans#raider sans#deneb sans#klez sans#erros sans#økse sans#okse sans

27 notes

Text

Bird systems, trees, crystals, and glass

No, this isn't about yoga or anything. I'm cracking Algorithms to Live By open again for another Obscure Bird Metaphor!

The anon in the post right before this one got me thinking of a thing...

They were a burned Lion modeling Bird, talking about how they hate their system being poked at because (essentially) it's fragile and they're relying on it. I am therefore restraining myself from asking them about this 😂 but I wonder if their system is constructed differently from a healthy Bird's.

Trees

I gave this advice a while back about unburning a Bird primary.

Basically: healthy systems have a structure. There’s a hierarchy of beliefs, or as I prefer to think of it, a tree--with very basic core concepts at the trunk: things like "human life is inherently valuable," which can be relied upon not to change a whole lot.

Other beliefs follow from those. If you start from "unnecessary suffering is bad," you can branch into a whole lot of other stuff.

Once you've built up your tree a bit, you just start going through the world and testing everything you hear for truth. A Bird primary does this pretty much unconsciously. They also might start running into conflicts and having to prioritize.

For example, they might hear someone say "suffering is bad! Therefore we should eradicate this genetic disease... by [horrible methods]!" and the Bird will (hopefully) go "no, that is eugenics, and it is Bad because human life is inherently valuable."

So why am I talking about this?

The problem is that things aren't always as obvious as that. The trunk of your system tree might be very solid, and so might the branches that build off of it! But once you start getting into sticks and twigs and leaves, you get more potential for them to cross over each other and need pruning.* So it's very important to have this structure, so that pruning one thing doesn't take down the whole tree.

*That's an actual thing with pruning trees, apparently. I like this metaphor.

When you have time to construct your system at peace, as with a full Bird primary who develops theirs as a kid, or as with someone who just picks up a Bird model because they like it or someone they care about uses it, you usually end up with some semblance of this structure. When your system building is in response to Burning, though...

Crystals and Glass

Stable system structure (say that five times fast) takes time and patience, and is probably incompatible with the "I am relying on this prototype to keep me Okay" of using it as a crutch while Burned.

Systems work by being tinkered with. They're always a work in progress. You can try to come up with one all at once, but it's almost certainly very brittle. (This isn't a judgment on you if you're doing this--it's just, yeah, what you're trying to do is hard and it probably breaks a lot.)

And! I have a new metaphor:

In the late 1970s and early ’80s, Scott Kirkpatrick considered himself a physicist, not a computer scientist. In particular, Kirkpatrick was interested in statistical physics, which uses randomness as a way to explain certain natural phenomena—for instance, the physics of annealing, the way that materials change state as they are heated and cooled. Perhaps the most interesting characteristic of annealing is that how quickly or slowly a material is cooled tends to have tremendous impact on its final structure. As Kirkpatrick explains:

"Growing a single crystal from a melt [is] done by careful annealing, first melting the substance, then lowering the temperature slowly, and spending a long time at temperatures in the vicinity of the freezing point. If this is not done, and the substance is allowed to get out of equilibrium, the resulting crystal will have many defects, or the substance may form a glass, with no crystalline order."

Quote taken from Algorithms to Live By, by Brian Christian and Tom Griffiths, in chapter nine, "Randomness"

The annealing process is an interesting one. I'll try to explain--it's like... sometimes, if you make all the obvious immediate right choices, you can railroad yourself into a solution that isn't optimal because you aren't seeing the bigger picture. You reach what's called a local maximum: you've found the best solution available... in the tiny corner you looked in. It's like trying to pack a suitcase without taking some things out and repositioning them to see if they fit better.
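The suitcase example is the same intuition behind simulated annealing, the optimization technique Kirkpatrick helped develop from this physics. Here's a minimal sketch in Python, assuming a made-up one-dimensional list of scores (`LANDSCAPE`); the helper names (`hill_climb`, `simulated_annealing`) and the temperature, cooling, and step values are hypothetical, chosen only for illustration. Greedy hill climbing always takes the best immediate step, so it stops on the first peak it reaches; annealing sometimes accepts a worse step, more often while the "temperature" is high, and cools down gradually.

```python
import math
import random

# Hypothetical score landscape (higher is better), invented for illustration.
# Index 2 (score 5) is a local maximum; index 8 (score 9) is the global maximum.
LANDSCAPE = [1, 3, 5, 4, 2, 3, 6, 8, 9, 7]


def neighbors(i, n):
    """Positions one step left or right of i that stay inside the list."""
    return [j for j in (i - 1, i + 1) if 0 <= j < n]


def hill_climb(landscape, start):
    """Greedy search: take the best neighboring step, stop when nothing improves."""
    current = start
    while True:
        best = max(neighbors(current, len(landscape)), key=lambda j: landscape[j])
        if landscape[best] <= landscape[current]:
            return current  # stuck on a peak, possibly only a local one
        current = best


def simulated_annealing(landscape, start, temp=10.0, cooling=0.95, steps=500, seed=0):
    """Random local moves; worse moves are sometimes accepted while the temperature is high."""
    rng = random.Random(seed)
    current = best = start
    for _ in range(steps):
        candidate = rng.choice(neighbors(current, len(landscape)))
        delta = landscape[candidate] - landscape[current]
        # Improvements are always accepted; a worse move is accepted with
        # probability exp(delta / temp), which shrinks as the system cools.
        if delta >= 0 or rng.random() < math.exp(delta / temp):
            current = candidate
        if landscape[current] > landscape[best]:
            best = current  # remember the best position seen so far
        temp = max(temp * cooling, 1e-6)  # slow, gradual cooling schedule
    return best


print(hill_climb(LANDSCAPE, 0))  # 2: the greedy search stops at the local peak
print(simulated_annealing(LANDSCAPE, 0))
```

With enough steps and slow enough cooling, the annealing run will usually reach the higher peak at index 8 as well, though with random moves that isn't guaranteed for every seed or schedule. The point is that being willing to accept a temporarily worse position is what lets the search escape a local maximum at all.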

This is why healthy Birds really like to poke at even their core or core-adjacent beliefs sometimes. It's why you get nerds arguing over the trolley problem for funsies. It's why Kurt Vonnegut wrote a story that poked the question, "is there any situation in which sexual assault could be justified?" (I really hate that story, and if I were in his place I wouldn't have published it, but I understand why he wrote it.)

Needless to say, these discussions can be... provocative, and our Lion friends do not always appreciate them, for very understandable reasons--especially if we don't make it clear that we don't actually expect that the discussion will change our beliefs in the end. We just want to poke at things, because they're interesting, or because we want to know how far our internal rules can be stretched and still hold true, or just out of habit.

But Burned primaries modeling Bird are not only uncomfortable with those discussions, they can actually become unstable because of them. There's no room for the usual Bird annealing process. They don't have time to spend on melting their system crystal and lowering its temperature slowly, hanging out at melting point for a while to get it to form a stable structure. They need a solid now, so they're left with glass... and glass shatters.

...Ow.

So, what are you supposed to do in this situation? Can you make it better?

I think you can, to some degree.

Ideally, you'd unburn your actual primary, but that's difficult and might take a while--you need a temporary solution, which is why you're modeling Bird in the first place.

It's probably doable to pick out some stable core beliefs, so at least you have something if the rest of your system goes haywire.

Once you have a solid core to work from, it might help to poke a healthy Bird whose judgment you trust while you're building up your modeled system, especially if your tree is currently shedding branches. They're really good at debugging stuff and will often offer to clone one of their tree branches to graft onto yours, so you can feel better and also grow lemons or something.

You might want to let them know you're having a rough time and this questioning isn't just for fun, so they don't get too far into the weeds (and tell them if they do stray into uncomfortable territory, because which topics feel difficult is different for everyone).

Also bear in mind that you are potentially asking for emotional labor from them, depending on the topic; it might hit some of their more sensitive subjects, which they may still be willing to discuss but only when they're in a stable mood.

Alternatively, you can try leaning on a different crutch instead of, or in addition to, your model--like asking other people when you're stuck on something. This is the more direct form of the previous suggestion: instead of helping you build up your system to make decisions, you just ask for help when you need it. This is more like the "outsource your morality to someone else" tactic that's also popular with burned Lions.

Whatever you decide to do, remember to cut yourself some slack--you're speaking a foreign language here, primary-wise, and it's hard and stuff breaks and it's best if you try not to be too hard on yourself. Give yourself space and patience to recover. I'm rooting for you!

#sortinghatchats#ravenclaw primary model#ravenclaw primary#shc burned houses#paint speaks#shc primaries

33 notes

Text

Lullaby

inspired by this adorable fanart drawn by @magnoliajades. the song is My Precious One by Celine Dion, but i altered the last set of lyrics to fit the setting.

i gave Inuyasha glasses because i felt like it and for some reason i really like that image. also he doesn’t so much sing the song as say the lyrics with a lilt that suggests a melody, because even with how often i take liberties with his personality in AUs i still can’t imagine him actually singing heh.

*conveniently and purposely forgets i wrote an entire fucking 27 chapter fic centered on Inuyasha being a singer*

Pushing away from the desk with a heavy sigh, Inuyasha reached up to remove his glasses and tiredly rubbed his eyes. It was just past 8 PM, he still had five pages of code to enter, and that wasn’t even including the software he had to configure or the new website he had to debug. Fuck, but if he’d known becoming a goddamn IT tech meant bringing loads of work home with you almost every night, he definitely would have rethought a profession working with computers.

Inuyasha snorted quietly and then scowled at his computer screen. As far as he was concerned, the only benefit of his career was the salary. And the free Starbucks in the company cafeteria, but that was beside the point.

Taking a moment to stretch his back and legs since he’d been sitting in the computer chair for nigh on four hours now, Inuyasha replaced his glasses – an unfortunate side effect from his college days of staring at his computer in the dark – and eyed his half-empty coffee mug sitting next to the wireless mouse. He was pretty sure it had gone cold by now since he hadn’t touched it for about an hour and he was just contemplating getting up to make a fresh cup when suddenly the apartment door slammed open with enough force to bang against the wall.

A second later it slammed closed before the sounds of jingling keys and rustling clothing reached his ears. Calm as you please, Inuyasha sat back in the chair and silently watched as his girlfriend stormed by the living room, her expression positively thunderous, before disappearing down the hall and into their bedroom.

He sighed, rubbed his eyes again, and reached up to let his hair down from the bun he’d had it in all day.

Kagome had called him earlier, just after he’d gotten home from work himself, to let him know her boss had mandated her again and she’d be home late. Not an uncommon thing, unfortunately. Her boss was a bit of a dick and it was no secret Kagome hated his guts, but the pay was good and she genuinely enjoyed her job as a social worker. She loved being able to help children in bad situations, but it came with the awful side effect of working for Naraku Morikawa. Kagome always complained about him, saying she would never understand how a man who treated his employees so poorly could have the gall to work with children, and if Inuyasha were honest, he’d have to agree.

She’d never leave, though, and she’d told him as much several times. Because despite having an awful boss and oftentimes long work hours, overseeing happy adoptions and tearful reunions always made it worth it.

Today, however, must have been particularly bad since she hadn’t even bothered to say anything before storming to their room. Coupled with that scowl on her face and the angry muttering his ears were picking up, Inuyasha knew his girlfriend well enough to know not to approach and let her decide what she needed to calm herself. It would be either one of two things: lock herself in the bathroom and have a long, hot soak in the bathtub, or come to him for cuddles and silent reassurance.

Minutes ticked by and when he didn’t hear any water running, Inuyasha had a good idea which one it would be so he stayed where he was, his half-formed idea for a fresh cup o’ joe already forgotten. His girl was far more important than any caffeine kick, anyway.

Only half paying attention to what his fingers were doing on the keyboard, Inuyasha got back to work, one ear trained toward the kitchen. He could hear her moving around and knew by the sounds and smell of it that she’d broken out the wine.

Inuyasha smiled a little to himself and absently typed in a series of commands he’d long ago memorized. Must have been a truly rough day if she was using wine to help settle her nerves. He wondered if she’d explain what happened if he asked; odds were she wouldn’t, but sometimes giving voice to the whirling thoughts in her head assisted in soothing her upset, so then again, it was possible.

He heard the clinking of glass on the counter, more unintelligible muttering, and then footsteps approaching the living room. Inuyasha didn’t look away from the computer, however, when Kagome switched off the light and shuffled over to him. A brief glance revealed that she’d shed her slacks, leaving her in just her blue sweater and black boy shorts.

Not altogether unusual; however, the fact that she hadn’t finished undressing before going to the wine tipped him off that something serious must have happened at work. The first thing he and Kagome both did upon returning home was immediately change into loungewear unless they planned on going somewhere, and he knew for a fact her bra was always the first thing to go. It was extremely telling that she’d only had the patience to shed her pants before going to the kitchen, and now he was almost positive she wouldn’t be in a sharing mood tonight.

Kagome wasn’t looking at him, instead glaring at his chest. Wordlessly Inuyasha pushed back and opened his arms and his girl instantly crawled onto his lap, straddling his thighs as her arms snaked around his waist. She didn’t say a word as she buried her face into his chest and clung to him, taking calm, measured breaths. Inuyasha dropped a kiss to her head and took a moment to run his hand up and down her back in wordless comfort, waiting until her heart rate had returned to normal before scooting back in and stretching his arms out to continue working.

Kagome didn’t stir, content to sit there as he worked, and not for the first time Inuyasha was glad his girl was petite enough to make the position possible. He never minded having to work around her, and in fact liked having her snuggled against him, her sweet scent in his nose, listening to her breathe. He was her source of comfort when her life got just a little bit too much to bear, and he’d always be there for her, no matter what.

Time passed and the only sounds in the apartment were their steady breathing and Inuyasha’s fingers clicking against the keyboard and mouse as he worked. Kagome remained still against him, showing no signs of moving, but that was fine with him. It wasn’t until she released a heavy sigh and he felt her relax completely against him that he said something, the low rumble of his voice breaking the quiet of the apartment.

“Wanna talk about it?”

With her head tucked beneath his chin, his words stirred her hair and he felt her shake her head. Inuyasha accepted her answer with another kiss to her head and didn’t press. She’d tell him later if she wanted to. For now, this was enough, and he knew that.

They sat there in silence for another few minutes, the glow from the computer the only illumination and the half-demon’s hands moving across the keyboard filling the quiet. Kagome sighed again and turned her head so her ear rested against his chest, listening to the steady beating of his heart.

“Inuyasha?” His girl’s voice was barely above a whisper but he heard it anyway.

“Mm?”

“Please?”

Inuyasha paused for only a second before typing out the rest of the command. She didn’t have to elaborate. He knew what she was asking for and it was another glaring sign that her day at work had not gone well at all. Knowing that, there was no way he could refuse her, especially since it was so rare nowadays that she asked at all.

She was his girl, his beloved Kagome, and he could never deny her anything, even this.

Giving a soft sigh, Inuyasha didn’t answer and instead pressed another kiss to her head, nuzzling her hair. Idly thinking that it was a good thing he could multitask, Inuyasha took a breath and began.

“My precious one, my darling one, don’t let your lashes weep.”

Kagome sighed and allowed the deep rumble of his voice to lull her into a state of lethargy, closing her eyes. Inuyasha didn’t exactly sing the lyrics, no, but she didn’t mind. She adored the way the words fell from his lips, low with a smooth lilt that made it obvious they were lyrics, but slow enough that the cadence of the melody was entirely his own.

It was her lullaby, his promise to always protect her, and it never failed to calm the storm of her unsettled nerves, no matter what the cause happened to be.

“My cherished one, my weary one, it’s time to go to sleep.”

Safe at home and in her boyfriend’s arms, Kagome was finally able to let her mind go blank. She concentrated on his chest moving up and down as he breathed, felt the deep rumble of his voice against her ear, his breath stirring her hair, and heard the soft clicking of his claws as he continued to work. She could feel the tension leaving her body, draining out of her and for the first time in what felt like all day, a tiny smile slowly curved her mouth upward.

“Just bow your head, and give your cares to me,” Inuyasha continued, keeping his voice low, intimate. “Just close your eyes, and fall into the sweetest dream.”

Slowly, surely, Kagome’s heart rate slowed and her breathing evened out with the measured, rhythmic breaths of sleep. Her smile dimmed but didn’t disappear, serene, content.

“‘Cause in my loving arms,” Inuyasha continued, voice dropping to a whisper. “You’re safe as you will ever be.”

Kagome sighed, mumbled his name, and succumbed.

“So hush, my dear, and sleep,” he murmured into her hair, kissed her head, and sighed.

Inuyasha let her sleep and hummed the rest of the song under his breath as he finished up his work. He wasn’t surprised she fell asleep, especially since he’d sung her lullaby; Kagome always swore that his voice had healing powers since it always managed to calm her upset, but Inuyasha suspected it had more to do with the fact that her father used to sing it to her when she was a kid before he passed away.

Maybe it was a mixture of both, but whatever the case, the song had fond memories attached to it and he knew it was important to her, about as much as she was to him. So yeah, if he had to temporarily check in his manliness and sing to the woman he loved to make her feel better, then he would without question.

When he finally finished work and shut down the computer a little after nine, Kagome didn’t stir as Inuyasha stood up with her in his arms and carried her to their bedroom. He carefully laid her down, pressed a kiss to her forehead, and left to turn off the kitchen light and lock the door before using the bathroom and then doubling back.

Unsurprisingly Kagome was still passed out. Shaking his head, but not without a fond smile, Inuyasha managed to take both her sweater and bra off without waking her before shedding his own clothes and crawling into bed beside her. He pulled the blankets up as Kagome rolled so she was facing him and Inuyasha pulled her close, tucking her tightly against him, his love for this woman powerful, consuming, strong enough to make him want to weep.

“Goodnight, baby,” he whispered against her temple, closing his eyes. “Sweet dreams.”

“And if you should awake, I’ll kiss your soft cheek, and underneath the smiling moon I’ll sing you back to sleep.”


Since Google Plus is going away, I’m going to back up Steve Yegge’s platform rant. And confirm the opening paragraph.

One thing that struck me immediately about the two companies -- an impression that has been reinforced almost daily -- is that Amazon does everything wrong, and Google does everything right. Sure, it's a sweeping generalization, but a surprisingly accurate one. It's pretty crazy. There are probably a hundred or even two hundred different ways you can compare the two companies, and Google is superior in all but three of them, if I recall correctly.


Stevey's Google Platforms Rant I was at Amazon for about six and a half years, and now I've been at Google for that long. One thing that struck me immediately about the two companies -- an impression that has been reinforced almost daily -- is that Amazon does everything wrong, and Google does everything right. Sure, it's a sweeping generalization, but a surprisingly accurate one. It's pretty crazy. There are probably a hundred or even two hundred different ways you can compare the two companies, and Google is superior in all but three of them, if I recall correctly. I actually did a spreadsheet at one point but Legal wouldn't let me show it to anyone, even though recruiting loved it. I mean, just to give you a very brief taste: Amazon's recruiting process is fundamentally flawed by having teams hire for themselves, so their hiring bar is incredibly inconsistent across teams, despite various efforts they've made to level it out. And their operations are a mess; they don't really have SREs and they make engineers pretty much do everything, which leaves almost no time for coding - though again this varies by group, so it's luck of the draw. They don't give a single shit about charity or helping the needy or community contributions or anything like that. Never comes up there, except maybe to laugh about it. Their facilities are dirt-smeared cube farms without a dime spent on decor or common meeting areas. Their pay and benefits suck, although much less so lately due to local competition from Google and Facebook. But they don't have any of our perks or extras -- they just try to match the offer-letter numbers, and that's the end of it. Their code base is a disaster, with no engineering standards whatsoever except what individual teams choose to put in place. To be fair, they do have a nice versioned-library system that we really ought to emulate, and a nice publish-subscribe system that we also have no equivalent for. 
But for the most part they just have a bunch of crappy tools that read and write state machine information into relational databases. We wouldn't take most of it even if it were free. I think the pubsub system and their library-shelf system were two out of the grand total of three things Amazon does better than Google. I guess you could make an argument that their bias for launching early and iterating like mad is also something they do well, but you can argue it either way. They prioritize launching early over everything else, including retention and engineering discipline and a bunch of other stuff that turns out to matter in the long run. So even though it's given them some competitive advantages in the marketplace, it's created enough other problems to make it something less than a slam-dunk. But there's one thing they do really really well that pretty much makes up for ALL of their political, philosophical and technical screw-ups. Jeff Bezos is an infamous micro-manager. He micro-manages every single pixel of Amazon's retail site. He hired Larry Tesler, Apple's Chief Scientist and probably the very most famous and respected human-computer interaction expert in the entire world, and then ignored every goddamn thing Larry said for three years until Larry finally -- wisely -- left the company. Larry would do these big usability studies and demonstrate beyond any shred of doubt that nobody can understand that frigging website, but Bezos just couldn't let go of those pixels, all those millions of semantics-packed pixels on the landing page. They were like millions of his own precious children. So they're all still there, and Larry is not. Micro-managing isn't that third thing that Amazon does better than us, by the way. I mean, yeah, they micro-manage really well, but I wouldn't list it as a strength or anything. I'm just trying to set the context here, to help you understand what happened.
We're talking about a guy who in all seriousness has said on many public occasions that people should be paying him to work at Amazon. He hands out little yellow stickies with his name on them, reminding people "who runs the company" when they disagree with him. The guy is a regular... well, Steve Jobs, I guess. Except without the fashion or design sense. Bezos is super smart; don't get me wrong. He just makes ordinary control freaks look like stoned hippies. So one day Jeff Bezos issued a mandate. He's doing that all the time, of course, and people scramble like ants being pounded with a rubber mallet whenever it happens. But on one occasion -- back around 2002 I think, plus or minus a year -- he issued a mandate that was so out there, so huge and eye-bulgingly ponderous, that it made all of his other mandates look like unsolicited peer bonuses. His Big Mandate went something along these lines:
1) All teams will henceforth expose their data and functionality through service interfaces.
2) Teams must communicate with each other through these interfaces.
3) There will be no other form of interprocess communication allowed: no direct linking, no direct reads of another team's data store, no shared-memory model, no back-doors whatsoever. The only communication allowed is via service interface calls over the network.
4) It doesn't matter what technology they use. HTTP, Corba, Pubsub, custom protocols -- doesn't matter. Bezos doesn't care.
5) All service interfaces, without exception, must be designed from the ground up to be externalizable. That is to say, the team must plan and design to be able to expose the interface to developers in the outside world. No exceptions.
6) Anyone who doesn't do this will be fired.
7) Thank you; have a nice day!
Ha, ha! You 150-odd ex-Amazon folks here will of course realize immediately that #7 was a little joke I threw in, because Bezos most definitely does not give a shit about your day.
#6, however, was quite real, so people went to work. Bezos assigned a couple of Chief Bulldogs to oversee the effort and ensure forward progress, headed up by Uber-Chief Bear Bulldog Rick Dalzell. Rick is an ex-Army Ranger, West Point Academy graduate, ex-boxer, ex-Chief Torturer slash CIO at Wal*Mart, and is a big genial scary man who used the word "hardened interface" a lot. Rick was a walking, talking hardened interface himself, so needless to say, everyone made LOTS of forward progress and made sure Rick knew about it. Over the next couple of years, Amazon transformed internally into a service-oriented architecture. They learned a tremendous amount while effecting this transformation. There was lots of existing documentation and lore about SOAs, but at Amazon's vast scale it was about as useful as telling Indiana Jones to look both ways before crossing the street. Amazon's dev staff made a lot of discoveries along the way. A teeny tiny sampling of these discoveries included:
- pager escalation gets way harder, because a ticket might bounce through 20 service calls before the real owner is identified. If each bounce goes through a team with a 15-minute response time, it can be hours before the right team finally finds out, unless you build a lot of scaffolding and metrics and reporting.
- every single one of your peer teams suddenly becomes a potential DOS attacker. Nobody can make any real forward progress until very serious quotas and throttling are put in place in every single service.
- monitoring and QA are the same thing. You'd never think so until you try doing a big SOA. But when your service says "oh yes, I'm fine", it may well be the case that the only thing still functioning in the server is the little component that knows how to say "I'm fine, roger roger, over and out" in a cheery droid voice. In order to tell whether the service is actually responding, you have to make individual calls. The problem continues recursively until your monitoring is doing comprehensive semantics checking of your entire range of services and data, at which point it's indistinguishable from automated QA. So they're a continuum.
- if you have hundreds of services, and your code MUST communicate with other groups' code via these services, then you won't be able to find any of them without a service-discovery mechanism. And you can't have that without a service registration mechanism, which itself is another service. So Amazon has a universal service registry where you can find out reflectively (programmatically) about every service, what its APIs are, and also whether it is currently up, and where.
- debugging problems with someone else's code gets a LOT harder, and is basically impossible unless there is a universal standard way to run every service in a debuggable sandbox.
That's just a very small sample. There are dozens, maybe hundreds of individual learnings like these that Amazon had to discover organically. There were a lot of wacky ones around externalizing services, but not as many as you might think. Organizing into services taught teams not to trust each other in most of the same ways they're not supposed to trust external developers. This effort was still underway when I left to join Google in mid-2005, but it was pretty far advanced. From the time Bezos issued his edict through the time I left, Amazon had transformed culturally into a company that thinks about everything in a services-first fashion. It is now fundamental to how they approach all designs, including internal designs for stuff that might never see the light of day externally. At this point they don't even do it out of fear of being fired. I mean, they're still afraid of that; it's pretty much part of daily life there, working for the Dread Pirate Bezos and all. But they do services because they've come to understand that it's the Right Thing.
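The rant doesn't say how Amazon's services actually enforced quotas and throttling against their peer teams. One common technique is a token bucket per caller, and the sketch below assumes that approach. `TokenBucket`, `QuotaGate`, the team names, and the quota numbers are all hypothetical; timestamps are passed in explicitly so the behavior is deterministic and easy to test.

```python
from typing import Dict, Optional, Tuple


class TokenBucket:
    """Admits `rate` requests per second on average, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity            # start with a full bucket
        self.last: Optional[float] = None

    def allow(self, now: float) -> bool:
        # Refill in proportion to the time since the previous request, capped at capacity.
        if self.last is not None:
            elapsed = max(0.0, now - self.last)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class QuotaGate:
    """Hypothetical front door for a service: one token bucket per calling team."""

    def __init__(self, quotas: Dict[str, Tuple[float, float]]) -> None:
        # quotas maps caller id -> (requests per second, burst capacity)
        self.buckets = {
            caller: TokenBucket(rate, burst) for caller, (rate, burst) in quotas.items()
        }

    def admit(self, caller: str, now: float) -> bool:
        bucket = self.buckets.get(caller)
        if bucket is None:
            return False  # callers without a negotiated quota are rejected outright
        return bucket.allow(now)
```

With a quota of 5 requests per second and a burst of 10, a peer team that fires 15 requests at once gets 10 through and 5 rejected; one second later, 5 more are admitted. In a real service you would pass something like `time.monotonic()` as `now`.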
There are without question pros and cons to the SOA approach, and some of the cons are pretty long. But overall it's the right thing because SOA-driven design enables Platforms. That's what Bezos was up to with his edict, of course. He didn't (and doesn't) care even a tiny bit about the well-being of the teams, nor about what technologies they use, nor in fact any detail whatsoever about how they go about their business unless they happen to be screwing up. But Bezos realized long before the vast majority of Amazonians that Amazon needs to be a platform. You wouldn't really think that an online bookstore needs to be an extensible, programmable platform. Would you? Well, the first big thing Bezos realized is that the infrastructure they'd built for selling and shipping books and sundry could be transformed into an excellent repurposable computing platform. So now they have the Amazon Elastic Compute Cloud, and the Amazon Elastic MapReduce, and the Amazon Relational Database Service, and a whole passel' o' other services browsable at aws.amazon.com. These services host the backends for some pretty successful companies, reddit being my personal favorite of the bunch. The other big realization he had was that he can't always build the right thing. I think Larry Tesler might have struck some kind of chord in Bezos when he said his mom couldn't use the goddamn website. It's not even super clear whose mom he was talking about, and doesn't really matter, because nobody's mom can use the goddamn website. In fact I myself find the website disturbingly daunting, and I worked there for over half a decade. I've just learned to kinda defocus my eyes and concentrate on the million or so pixels near the center of the page above the fold. I'm not really sure how Bezos came to this realization -- the insight that he can't build one product and have it be right for everyone. But it doesn't matter, because he gets it. There's actually a formal name for this phenomenon.
It's called Accessibility, and it's the most important thing in the computing world. The. Most. Important. Thing. If you're sorta thinking, "huh? You mean like, blind and deaf people Accessibility?" then you're not alone, because I've come to understand that there are lots and LOTS of people just like you: people for whom this idea does not have the right Accessibility, so it hasn't been able to get through to you yet. It's not your fault for not understanding, any more than it would be your fault for being blind or deaf or motion-restricted or living with any other disability. When software -- or idea-ware for that matter -- fails to be accessible to anyone for any reason, it is the fault of the software or of the messaging of the idea. It is an Accessibility failure. Like anything else big and important in life, Accessibility has an evil twin who, jilted by the unbalanced affection displayed by their parents in their youth, has grown into an equally powerful Arch-Nemesis (yes, there's more than one nemesis to accessibility) named Security. And boy howdy are the two ever at odds. But I'll argue that Accessibility is actually more important than Security because dialing Accessibility to zero means you have no product at all, whereas dialing Security to zero can still get you a reasonably successful product such as the Playstation Network. So yeah. In case you hadn't noticed, I could actually write a book on this topic. A fat one, filled with amusing anecdotes about ants and rubber mallets at companies I've worked at. But I will never get this little rant published, and you'll never get it read, unless I start to wrap up. That one last thing that Google doesn't do well is Platforms. We don't understand platforms. We don't "get" platforms. Some of you do, but you are the minority. This has become painfully clear to me over the past six years. 
I was kind of hoping that competitive pressure from Microsoft and Amazon and more recently Facebook would make us wake up collectively and start doing universal services. Not in some sort of ad-hoc, half-assed way, but in more or less the same way Amazon did it: all at once, for real, no cheating, and treating it as our top priority from now on. But no. No, it's like our tenth or eleventh priority. Or fifteenth, I don't know. It's pretty low. There are a few teams who treat the idea very seriously, but most teams either don't think about it at all, ever, or only a small percentage of them think about it in a very small way. It's a big stretch even to get most teams to offer a stubby service to get programmatic access to their data and computations. Most of them think they're building products. And a stubby service is a pretty pathetic service. Go back and look at that partial list of learnings from Amazon, and tell me which ones Stubby gives you out of the box. As far as I'm concerned, it's none of them. Stubby's great, but it's like parts when you need a car. A product is useless without a platform, or more precisely and accurately, a platform-less product will always be replaced by an equivalent platform-ized product. Google+ is a prime example of our complete failure to understand platforms from the very highest levels of executive leadership (hi Larry, Sergey, Eric, Vic, howdy howdy) down to the very lowest leaf workers (hey yo). We all don't get it. The Golden Rule of platforms is that you Eat Your Own Dogfood. The Google+ platform is a pathetic afterthought. We had no API at all at launch, and last I checked, we had one measly API call. One of the team members marched in and told me about it when they launched, and I asked: "So is it the Stalker API?" She got all glum and said "Yeah." I mean, I was joking, but no... the only API call we offer is to get someone's stream. So I guess the joke was on me.
Microsoft has known about the Dogfood rule for at least twenty years. It's been part of their culture for a whole generation now. You don't eat People Food and give your developers Dog Food. Doing that is simply robbing your long-term platform value for short-term successes. Platforms are all about long-term thinking. Google+ is a knee-jerk reaction, a study in short-term thinking, predicated on the incorrect notion that Facebook is successful because they built a great product. But that's not why they are successful. Facebook is successful because they built an entire constellation of products by allowing other people to do the work. So Facebook is different for everyone. Some people spend all their time on Mafia Wars. Some spend all their time on Farmville. There are hundreds or maybe thousands of different high-quality time sinks available, so there's something there for everyone. Our Google+ team took a look at the aftermarket and said: "Gosh, it looks like we need some games. Let's go contract someone to, um, write some games for us." Do you begin to see how incredibly wrong that thinking is now? The problem is that we are trying to predict what people want and deliver it for them. You can't do that. Not really. Not reliably. There have been precious few people in the world, over the entire history of computing, who have been able to do it reliably. Steve Jobs was one of them. We don't have a Steve Jobs here. I'm sorry, but we don't. Larry Tesler may have convinced Bezos that he was no Steve Jobs, but Bezos realized that he didn't need to be a Steve Jobs in order to provide everyone with the right products: interfaces and workflows that they liked and felt at ease with. He just needed to enable third-party developers to do it, and it would happen automatically. I apologize to those (many) of you for whom all this stuff I'm saying is incredibly obvious, because yeah. It's incredibly frigging obvious. Except we're not doing it. 
We don't get Platforms, and we don't get Accessibility. The two are basically the same thing, because platforms solve accessibility. A platform is accessibility. So yeah, Microsoft gets it. And you know as well as I do how surprising that is, because they don't "get" much of anything, really. But they understand platforms as a purely accidental outgrowth of having started life in the business of providing platforms. So they have thirty-plus years of learning in this space. And if you go to msdn.com, and spend some time browsing, and you've never seen it before, prepare to be amazed. Because it's staggeringly huge. They have thousands, and thousands, and THOUSANDS of API calls. They have a HUGE platform. Too big in fact, because they can't design for squat, but at least they're doing it. Amazon gets it. Amazon's AWS (aws.amazon.com) is incredible. Just go look at it. Click around. It's embarrassing. We don't have any of that stuff. Apple gets it, obviously. They've made some fundamentally non-open choices, particularly around their mobile platform. But they understand accessibility and they understand the power of third-party development and they eat their dogfood. And you know what? They make pretty good dogfood. Their APIs are a hell of a lot cleaner than Microsoft's, and have been since time immemorial. Facebook gets it. That's what really worries me. That's what got me off my lazy butt to write this thing. I hate blogging. I hate... plussing, or whatever it's called when you do a massive rant in Google+ even though it's a terrible venue for it but you do it anyway because in the end you really do want Google to be successful. And I do! I mean, Facebook wants me there, and it'd be pretty easy to just go. But Google is home, so I'm insisting that we have this little family intervention, uncomfortable as it might be. 
After you've marveled at the platform offerings of Microsoft and Amazon, and Facebook I guess (I didn't look because I didn't want to get too depressed), head over to developers.google.com and browse a little. Pretty big difference, eh? It's like what your fifth-grade nephew might mock up if he were doing an assignment to demonstrate what a big powerful platform company might be building if all they had, resource-wise, was one fifth grader. Please don't get me wrong here -- I know for a fact that the dev-rel team has had to FIGHT to get even this much available externally. They're kicking ass as far as I'm concerned, because they DO get platforms, and they are struggling heroically to try to create one in an environment that is at best platform-apathetic, and at worst often openly hostile to the idea. I'm just frankly describing what developers.google.com looks like to an outsider. It looks childish. Where's the Maps APIs in there for Christ's sake? Some of the things in there are labs projects. And the APIs for everything I clicked were... they were paltry. They were obviously dog food. Not even good organic stuff. Compared to our internal APIs it's all snouts and horse hooves. And also don't get me wrong about Google+. They're far from the only offenders. This is a cultural thing. What we have going on internally is basically a war, with the underdog minority Platformers fighting a more or less losing battle against the Mighty Funded Confident Producters. Any teams that have successfully internalized the notion that they should be externally programmable platforms from the ground up are underdogs -- Maps and Docs come to mind, and I know GMail is making overtures in that direction. But it's hard for them to get funding for it because it's not part of our culture. Maestro's funding is a feeble thing compared to the gargantuan Microsoft Office programming platform: it's a fluffy rabbit versus a T-Rex. 
The Docs team knows they'll never be competitive with Office until they can match its scripting facilities, but they're not getting any resource love. I mean, I assume they're not, given that Apps Script only works in Spreadsheet right now, and it doesn't even have keyboard shortcuts as part of its API. That team looks pretty unloved to me. Ironically enough, Wave was a great platform, may they rest in peace. But making something a platform is not going to make you an instant success. A platform needs a killer app. Facebook -- that is, the stock service they offer with walls and friends and such -- is the killer app for the Facebook Platform. And it is a very serious mistake to conclude that the Facebook App could have been anywhere near as successful without the Facebook Platform. You know how people are always saying Google is arrogant? I'm a Googler, so I get as irritated as you do when people say that. We're not arrogant, by and large. We're, like, 99% Arrogance-Free. I did start this post -- if you'll reach back into distant memory -- by describing Google as "doing everything right". We do mean well, and for the most part when people say we're arrogant it's because we didn't hire them, or they're unhappy with our policies, or something along those lines. They're inferring arrogance because it makes them feel better. But when we take the stance that we know how to design the perfect product for everyone, and believe you me, I hear that a lot, then we're being fools. You can attribute it to arrogance, or naivete, or whatever -- it doesn't matter in the end, because it's foolishness. There IS no perfect product for everyone. And so we wind up with a browser that doesn't let you set the default font size. Talk about an affront to Accessibility. I mean, as I get older I'm actually going blind. For real. I've been nearsighted all my life, and once you hit 40 years old you stop being able to see things up close. 
So font selection becomes this life-or-death thing: it can lock you out of the product completely. But the Chrome team is flat-out arrogant here: they want to build a zero-configuration product, and they're quite brazen about it, and Fuck You if you're blind or deaf or whatever. Hit Ctrl-+ on every single page visit for the rest of your life. It's not just them. It's everyone. The problem is that we're a Product Company through and through. We built a successful product with broad appeal -- our search, that is -- and that wild success has biased us. Amazon was a product company too, so it took an out-of-band force to make Bezos understand the need for a platform. That force was their evaporating margins; he was cornered and had to think of a way out. But all he had was a bunch of engineers and all these computers... if only they could be monetized somehow... you can see how he arrived at AWS, in hindsight. Microsoft started out as a platform, so they've just had lots of practice at it. Facebook, though: they worry me. I'm no expert, but I'm pretty sure they started off as a Product and they rode that success pretty far. So I'm not sure exactly how they made the transition to a platform. It was a relatively long time ago, since they had to be a platform before (now very old) things like Mafia Wars could come along. Maybe they just looked at us and asked: "How can we beat Google? What are they missing?" The problem we face is pretty huge, because it will take a dramatic cultural change in order for us to start catching up. We don't do internal service-oriented platforms, and we just as equally don't do external ones. This means that the "not getting it" is endemic across the company: the PMs don't get it, the engineers don't get it, the product teams don't get it, nobody gets it. Even if individuals do, even if YOU do, it doesn't matter one bit unless we're treating it as an all-hands-on-deck emergency. 
We can't keep launching products and pretending we'll turn them into magical beautiful extensible platforms later. We've tried that and it's not working. The Golden Rule of Platforms, "Eat Your Own Dogfood", can be rephrased as "Start with a Platform, and Then Use it for Everything." You can't just bolt it on later. Certainly not easily at any rate -- ask anyone who worked on platformizing MS Office. Or anyone who worked on platformizing Amazon. If you delay it, it'll be ten times as much work as just doing it correctly up front. You can't cheat. You can't have secret back doors for internal apps to get special priority access, not for ANY reason. You need to solve the hard problems up front. I'm not saying it's too late for us, but the longer we wait, the closer we get to being Too Late. I honestly don't know how to wrap this up. I've said pretty much everything I came here to say today. This post has been six years in the making. I'm sorry if I wasn't gentle enough, or if I misrepresented some product or team or person, or if we're actually doing LOTS of platform stuff and it just so happens that I and everyone I ever talk to has just never heard about it. I'm sorry. But we've gotta start doing this right.


Imaging a Black Hole: How Software Created a Planet-sized Telescope

Black holes are singular objects in our universe, pinprick entities that pierce the fabric of spacetime. Typically formed by collapsed stars, they consist of an appropriately named singularity, a dimensionless, infinitely dense mass, from whose gravitational pull not even light can escape. Once a beam of light passes within a certain radius, called the event horizon, its fate is sealed. By definition, we can’t see a black hole, but it’s been theorized that the spherical swirl of light orbiting around the event horizon can present a detectable ring, as rays escape the turbulence of gas swirling into the event horizon. If we could somehow photograph such a halo, we might learn a great deal about the physics of relativity and high-energy matter.

On April 10, 2019, the world was treated to such an image. A consortium of more than 200 scientists from around the world released a glowing ring depicting M87*, a supermassive black hole at the center of the galaxy Messier 87. Supermassive black holes, formed by unknown means, sit at the center of nearly all large galaxies. This one bears 6.5 billion times the mass of our sun and the ring’s diameter is about three times that of Pluto’s orbit, on average. But its size belies the difficulty of capturing its visage. M87* is 55 million light years away. Imaging it has been likened to photographing an orange on the moon, or the edge of a coin across the United States.

Our planet does not contain a telescope large enough for such a task. “Ideally speaking, we’d turn the entire Earth into one big mirror [for gathering light],” says Jonathan Weintroub, an electrical engineer at the Harvard-Smithsonian Center for Astrophysics, “but we can’t really afford the real estate.” So researchers relied on a technique called very long baseline interferometry (VLBI). They point telescopes on several continents at the same target, then integrate the results, weaving the observations together with software to create the equivalent of a planet-sized instrument—the Event Horizon Telescope (EHT). Though they had ideas of what to expect when targeting M87*, many on the EHT team were still taken aback by the resulting image. “It was kind of a ‘Wow, that really worked’ [moment],” says Geoff Crew, a research scientist at MIT Haystack Observatory, in Westford, Massachusetts. “There is a sort of gee-whizz factor in the technology.”
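The comparisons above can be checked with two textbook approximations: an object's angular size is roughly its size divided by its distance, and a telescope of aperture D observing at wavelength λ resolves roughly θ ≈ λ / D. The sketch below is a back-of-the-envelope calculation, not the EHT's analysis. The 8 cm orange is an assumption, and a real interferometer's resolution depends on its actual baselines and on how the data are processed. For reference, the EHT reported a ring diameter of about 42 microarcseconds.

```python
import math

# Radians to microarcseconds (1 radian is about 206,265 arcseconds).
RAD_TO_UAS = math.degrees(1.0) * 3600 * 1e6

WAVELENGTH_M = 1.3e-3          # observing wavelength, about 1.3 mm
EARTH_DIAMETER_M = 12_742e3    # mean diameter of Earth: the longest possible ground baseline
DISH_M = 30.0                  # a single large dish, about 30 m across


def resolution_uas(wavelength_m: float, aperture_m: float) -> float:
    """Rule-of-thumb diffraction limit, theta ~ lambda / D, in microarcseconds."""
    return wavelength_m / aperture_m * RAD_TO_UAS


def angular_size_uas(size_m: float, distance_m: float) -> float:
    """Small-angle approximation, angle ~ size / distance, in microarcseconds."""
    return size_m / distance_m * RAD_TO_UAS


# Assumption: an orange is about 8 cm across; the Moon is about 384,400 km away.
orange_on_moon = angular_size_uas(0.08, 384_400e3)

print(f"orange on the Moon:   {orange_on_moon:.0f} microarcseconds")
print(f"Earth-sized baseline: {resolution_uas(WAVELENGTH_M, EARTH_DIAMETER_M):.0f} microarcseconds")
print(f"single 30 m dish:     {resolution_uas(WAVELENGTH_M, DISH_M):,.0f} microarcseconds")
```

An orange on the Moon spans roughly 43 microarcseconds. An Earth-sized baseline at 1.3 mm resolves roughly 21, enough to see such a target, while a single 30-meter dish manages only about 9 arcseconds, more than 400,000 times coarser.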

Catching bits

On four clear nights in April 2017, seven radio telescope sites—in Arizona, Mexico, and Spain, and two each in Chile and Hawaii—pointed their dishes at M87*. (The sites in Chile and Hawaii each consisted of an array of telescopes themselves.) Large parabolic dishes up to 30 meters across caught radio waves with wavelengths around 1.3mm, reflecting them onto tiny wire antennas cooled in a vacuum to 4 degrees above absolute zero. The focused energy flowed as voltage signals through wires to analog-to-digital converters, transforming them into bits, and then to what is known as the digital backend, or DBE.

The purpose of the DBE is to capture and record as many bits as possible in real time. “The digital backend is the first piece of code that touches the signal from the black hole, pretty much,” says Laura Vertatschitsch, an electrical engineer who helped develop the EHT’s DBE as a postdoctoral researcher at the Harvard-Smithsonian Center for Astrophysics. At its core is a pizza-box-sized piece of hardware called the R2DBE, based on an open-source device created by a group started at Berkeley called CASPER.

The R2DBE’s job is to quickly format the incoming data and parcel it out to a set of hard drives. “It’s a kind of computing that’s relatively simple, algorithmically speaking,” Weintroub says, “but is incredibly high performance.” Sitting on its circuit board is a chip called a field-programmable gate array, or FPGA. “Field programmable gate arrays are sort of the poor man’s ASIC,” he continues, referring to application-specific integrated circuits. “They allow you to customize logic on a chip without having to commit to a very expensive fabrication run of purely custom chips.”
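The article says only that the R2DBE "formats" the incoming data. One formatting step common in VLBI recording is requantizing each sample down to 2 bits and packing several samples into each byte, which cuts the data volume sharply. The sketch below assumes that step; the threshold, the code assignments, and the bit order are hypothetical, and on the real device this work is done by FPGA logic, not Python.

```python
from typing import List, Sequence


def requantize_2bit(samples: Sequence[int], threshold: int) -> List[int]:
    """Map each signed sample to a 2-bit code:
    0 = strong negative, 1 = weak negative, 2 = weak positive, 3 = strong positive.
    `threshold` is a hypothetical level separating 'weak' from 'strong'."""
    codes = []
    for s in samples:
        if s < -threshold:
            codes.append(0)
        elif s < 0:
            codes.append(1)
        elif s < threshold:
            codes.append(2)
        else:
            codes.append(3)
    return codes


def pack_codes(codes: Sequence[int]) -> bytes:
    """Pack four 2-bit codes per byte, first sample in the lowest bits (assumed order)."""
    if len(codes) % 4:
        raise ValueError("number of codes must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(codes), 4):
        byte = 0
        for j, code in enumerate(codes[i:i + 4]):
            byte |= (code & 0b11) << (2 * j)
        out.append(byte)
    return bytes(out)
```

For example, samples `[-100, -5, 3, 90]` with a threshold of 20 become codes `[0, 1, 2, 3]`, which pack into the single byte `0xE4`.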

An FPGA contains millions of logic primitives—gates and registers for manipulating and storing bits. The algorithms they compute might be simple, but optimizing their performance is not. It’s like managing a city’s traffic lights, and its layout, too. You want a signal to get from one point to another in time for something else to happen to it, and you want many signals to do this simultaneously within the span of each clock cycle. “The field programmable gate array takes parallelism to extremes,” Weintroub says. “And that’s how you get the performance. You have literally millions of things happening. And they all happen on a single clock edge. And the key is how you connect them all together, and in practice, it’s a very difficult problem.”
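The traffic-light analogy comes down to simple arithmetic. If samples arrive faster than the chip's clock ticks, the logic must process several of them side by side on every clock edge, and each signal has only one clock period to reach its destination. The rates below are hypothetical round numbers, not the R2DBE's actual figures.

```python
import math

def lanes_per_clock(sample_rate_hz: float, clock_hz: float) -> int:
    """Samples that must be handled in parallel on each clock edge to keep up."""
    return math.ceil(sample_rate_hz / clock_hz)

def clock_period_ns(clock_hz: float) -> float:
    """Time budget for a signal to travel between registers, in nanoseconds."""
    return 1e9 / clock_hz

# Hypothetical numbers for illustration, not the EHT hardware's real figures.
sample_rate = 4e9   # samples per second arriving from one converter
clock = 250e6       # FPGA fabric clock frequency
print(lanes_per_clock(sample_rate, clock), "samples per clock edge")   # 16
print(clock_period_ns(clock), "ns per clock cycle")                    # 4.0
```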

FPGA programmers use software to help them choreograph the chip’s components. The EHT scientists program it using a language called VHDL, which Vivado, a software tool provided by the chip’s manufacturer, Xilinx, compiles into a bitstream that configures the chip. On top of the VHDL, they use MATLAB and Simulink software: instead of writing VHDL firmware code directly, they visually move around blocks of functions and connect them together. Then you hit a button and out pops the FPGA bitstream.

But it doesn’t happen immediately. Compiling takes many hours, and you usually have to wait overnight. What’s more, finding bugs once it’s compiled is almost impossible, because there are no print statements. You’re dealing with real-time signals on a wire. “It shifts your energy to tons and tons of tests in simulation,” Vertatschitsch says. “It’s a really different regime, to thinking, ‘How do I make sure I didn’t put any bugs into that code, because it’s just so costly?’”
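Heavy testing in simulation often means checking the design under test against a slow but obviously correct “golden” model, using many random inputs plus hand-picked edge cases. The Python sketch below illustrates only that pattern. It assumes a hypothetical 2-bit quantizer whose threshold and level mapping are made up. The EHT team's actual tests ran on their VHDL and Simulink designs, not in Python.

```python
import random

THRESHOLD = 1.0  # hypothetical quantizer threshold, in units of the input RMS

def quantize_reference(x: float) -> int:
    """Golden model: a readable 2-bit quantizer returning a code 0..3."""
    if x < -THRESHOLD:
        return 0
    if x < 0:
        return 1
    if x < THRESHOLD:
        return 2
    return 3

def pack_dut(samples) -> bytes:
    """'Design under test': the same quantizer written as sign/magnitude bits
    and shifts, the way logic would compute it, packing four codes per byte."""
    out = bytearray()
    for i in range(0, len(samples), 4):
        byte = 0
        for j, x in enumerate(samples[i:i + 4]):
            sign = int(x >= 0)
            mag = int(x >= THRESHOLD or x < -THRESHOLD)
            code = (sign << 1) | (mag ^ (1 - sign))
            byte |= code << (2 * j)
        out.append(byte)
    return bytes(out)

def unpack(packed: bytes, n: int):
    """Recover n 2-bit codes, lowest bits first."""
    return [(packed[k // 4] >> (2 * (k % 4))) & 0b11 for k in range(n)]

def run_testbench(num_random: int = 10_000, seed: int = 0):
    """Compare the DUT with the golden model; return indices that disagree."""
    rng = random.Random(seed)
    samples = [rng.gauss(0.0, 1.0) for _ in range(num_random)]
    # Boundary values that random draws almost never hit.
    samples += [-THRESHOLD, -0.0, 0.0, THRESHOLD]
    expected = [quantize_reference(x) for x in samples]
    got = unpack(pack_dut(samples), len(samples))
    return [i for i, (e, g) in enumerate(zip(expected, got)) if e != g]

print("mismatches:", run_testbench())
```

The explicit boundary values matter. An easy slip is treating `x == -THRESHOLD` as large, which random Gaussian inputs would almost never expose.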

Data to disk

The next step in the digital backend is recording the data. Music files are typically recorded at 44,100 samples per second. Movies are generally 24 frames per second. Each telescope in the EHT array recorded 16 billion samples per second, at 2 bits per sample. How do you commit 32 gigabits (about 4 gigabytes, nearly a DVD’s worth of data) to disk every second? You use lots of disks. The EHT used Mark 6 data recorders, developed at Haystack and available commercially from Conduant Corporation. Each site used two recorders, each writing to 32 hard drives, for 64 disks in parallel.
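The recording figures can be checked with a few lines of arithmetic. The 2 bits per sample follows from dividing 32 gigabits by 16 billion samples. The per-disk rate assumes the load is split evenly across all 64 drives and ignores formatting overhead.

```python
SAMPLES_PER_SECOND = 16e9   # per telescope, from the text
BITS_PER_SAMPLE = 2         # implied by 32 Gbit/s divided by 16 Gsample/s
DISKS = 2 * 32              # two recorders, 32 drives each

bits_per_second = SAMPLES_PER_SECOND * BITS_PER_SAMPLE
bytes_per_second = bits_per_second / 8
per_disk_mb_s = bytes_per_second / DISKS / 1e6   # assumes an even split

print(f"{bits_per_second / 1e9:.0f} Gbit/s = {bytes_per_second / 1e9:.0f} GB/s")
print(f"~{per_disk_mb_s:.1f} MB/s per disk")
```

About 62.5 megabytes per second per drive is within reach of a single hard disk. That is why spreading the stream across many drives works.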

In early tests, the drives frequently failed. The sites are on mountaintops, where there is less atmosphere, and especially less water vapor, to absorb the incoming radio waves. But a conventional hard drive’s read/write head flies on a thin cushion of air above the spinning platter, and the thin mountain air undermines that cushion. “When a hard drive fails, you’re hosed,” Vertatschitsch says. “That’s your experiment, you know? Our data is super-precious.” Eventually they ordered sealed, helium-filled commercial hard drives, which never failed during the observation.

The humans were not so resistant to thin air. According to Vertatschitsch, “If you are the developer or the engineer that has to be up there and figure out why your code isn’t working… the human body does not debug code well at 15,000 feet. It’s just impossible. So, it became so much more important to have a really good user interface, even if the user was just going to be you. Things have to be simple. You have to automate everything. You really have to spend the time up front doing that, because it’s like extreme coding, right? Go to the top of a mountain and debug. Like, get out of here, man. That’s insane.”

Over the four nights of observation, the sites together collected about five petabytes of data. Uploading the data would have taken too long, so researchers FedExed the drives to Haystack and the Max Planck Institute for Radio Astronomy, in Bonn, Germany, for the next stage of processing. The exception was the drives from the South Pole Telescope, which couldn’t see M87* (an object in the northern sky) but collected data for calibration and for observations of other sources. Those drives had to wait six months, until the southern-hemisphere winter ended, before they could be flown out.
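A back-of-the-envelope estimate shows why shipping beat uploading. The link speeds below are hypothetical. The estimate also generously assumes a dedicated link running flat out the whole time.

```python
DATA_BYTES = 5e15  # about five petabytes, from the text

def transfer_days(num_bytes: float, link_bits_per_second: float) -> float:
    """Days needed to move num_bytes at full, sustained link utilization."""
    return num_bytes * 8 / link_bits_per_second / 86_400

for gbps in (1, 10, 100):   # hypothetical link speeds, in Gbit/s
    print(f"{gbps:>3} Gbit/s: {transfer_days(DATA_BYTES, gbps * 1e9):,.0f} days")
```

Even at 10 Gbit/s the transfer would take about a month and a half.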

Connecting the dots

Making an image of M87* is not like taking a normal photograph. Light was not collected on a sheet of film or on an array of sensors as in a digital camera. Each receiver collects only a one-dimensional stream of information. The two-dimensional image results from combining signals from pairs of telescopes, the way we can localize sounds by comparing the volume and timing of audio entering our two ears. Once the drives arrived at Haystack and Max Planck, data from distant sites were paired up, or correlated.
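The two-ears analogy can be made concrete with a toy example. Two stations record the same sky signal, each with its own independent noise, and one hears it slightly later than the other. Sliding one stream against the other until the products add up reveals the delay. The sample counts, noise level, and delay are made up. Real EHT signals are far weaker relative to the noise, which is why correlators average over enormous numbers of samples.

```python
import random

def best_lag(a, b, max_lag):
    """Lag (in samples) that maximizes sum(a[i] * b[i + lag]) over the overlap."""
    n = len(a)
    def score(lag):
        return sum(a[i] * b[i + lag] for i in range(max(0, -lag), min(n, n - lag)))
    return max(range(-max_lag, max_lag + 1), key=score)

rng = random.Random(1)
n, true_delay = 4000, 7
sky = [rng.gauss(0.0, 1.0) for _ in range(n + true_delay)]
# Station b hears each sky sample true_delay samples after station a does;
# each station adds its own, independent receiver noise.
a = [sky[i + true_delay] + rng.gauss(0.0, 2.0) for i in range(n)]
b = [sky[i] + rng.gauss(0.0, 2.0) for i in range(n)]
print("estimated delay:", best_lag(a, b, max_lag=20), "samples")
```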

Unlike with a musical radio station, most of the information at this point is noise, created by the instruments. “We’re working in a regime where all you hear is hiss,” Haystack’s Crew says. To extract the faint signal, called a fringe, they use computers to line up the data streams from pairs of sites, looking for common signatures. The workhorse here is open-source software called DiFX, for Distributed FX, where F refers to Fourier transform and X refers to cross-multiplication. Before DiFX, data was traditionally recorded on tape and then correlated with special hardware. But about 15 years ago, Adam Deller, then a graduate student working with the Australian Long Baseline Array, was trying to finish his thesis when the correlator broke. So he began writing DiFX, which has since been widely expanded and adopted. Haystack and Max Planck each used Linux machines to coordinate DiFX on supercomputing clusters. Haystack used 60 nodes with 1,200 cores, and Max Planck used 68 nodes with 1,380 cores. The nodes communicate using Open MPI, an open-source implementation of the Message Passing Interface (MPI) standard.
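The “FX” in DiFX names its two core operations. The pure-Python sketch below shows only that arithmetic core, under toy assumptions: short segments, a slow textbook DFT, and noise no stronger than the sky signal. It is not DiFX's code, which uses FFTs and spreads the work across a cluster. Averaging the cross-multiplied spectra makes the shared sky signal stand out, while independent noise averages toward zero.

```python
import cmath
import random

def dft(x):
    """Plain O(N^2) discrete Fourier transform; real correlators use FFTs."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def fx_correlate(a, b, seg_len):
    """F: transform each segment. X: multiply A by conj(B). Then average."""
    num_segs = min(len(a), len(b)) // seg_len
    acc = [0j] * seg_len
    for s in range(num_segs):
        lo, hi = s * seg_len, (s + 1) * seg_len
        fa, fb = dft(a[lo:hi]), dft(b[lo:hi])
        for k in range(seg_len):
            acc[k] += fa[k] * fb[k].conjugate()
    return [v / num_segs for v in acc]

def mean_amplitude(spectrum):
    return sum(abs(v) for v in spectrum) / len(spectrum)

rng = random.Random(2)
n, seg = 3200, 32
sky = [rng.gauss(0.0, 1.0) for _ in range(n)]
a = [s + rng.gauss(0.0, 1.0) for s in sky]   # both stations see the sky...
b = [s + rng.gauss(0.0, 1.0) for s in sky]   # ...plus their own noise
c = [rng.gauss(0.0, 1.0) for _ in range(n)]  # a station that sees only noise

with_sky = mean_amplitude(fx_correlate(a, b, seg))
noise_only = mean_amplitude(fx_correlate(a, c, seg))
print(f"correlated: {with_sky:.1f}, uncorrelated: {noise_only:.1f}")
```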

Correlation is more than just lining up data streams. The streams must also be adjusted to account for things such as the sites’ locations and the Earth’s rotation. Lindy Blackburn, a radio astronomer at the Harvard-Smithsonian Center for Astrophysics, notes a couple of logistical challenges with VLBI. First, all the sites have to be working simultaneously, and they all need good weather. (In terms of clear skies, “2017 was a kind of miracle,” says Kazunori Akiyama, an astrophysicist at Haystack.) Second, the signal at each site is so weak that you can’t always tell right away if there’s a problem. “You might not know if what you did worked until months later, when these signals are correlated,” Blackburn says. “It’s a sigh of relief when you actually realize that there are correlations.”
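One ingredient of that adjustment is the geometric delay: the extra time a wavefront needs to reach one end of a baseline after the other, which changes continuously as the Earth turns. Here is a minimal sketch that assumes an idealized baseline vector in a common equatorial (X, Y, Z) convention, a hypothetical 9,000 km east-west baseline, and an approximate declination for M87. A real correlator model also accounts for station clocks, Earth orientation, relativistic effects, and more.

```python
import math

C = 299_792_458.0              # speed of light, m/s
EARTH_ROTATION = 7.2921159e-5  # sidereal rotation rate, rad/s

def geometric_delay(baseline_xyz_m, dec_rad, hour_angle_rad):
    """tau = (B . s) / c, in seconds. Frame: X toward hour angle 0 on the
    equator, Y toward hour angle -6h, Z toward the north celestial pole."""
    s = (math.cos(dec_rad) * math.cos(hour_angle_rad),
         -math.cos(dec_rad) * math.sin(hour_angle_rad),
         math.sin(dec_rad))
    return sum(b * si for b, si in zip(baseline_xyz_m, s)) / C

baseline = (0.0, 9.0e6, 0.0)   # hypothetical 9,000 km east-west baseline
dec_m87 = math.radians(12.4)   # approximate declination of M87

for hours in range(-3, 4):
    h = hours * math.pi / 12   # one hour of hour angle is 15 degrees
    tau_ms = geometric_delay(baseline, dec_m87, h) * 1e3
    print(f"H = {hours:+d} h: delay = {tau_ms:+.3f} ms")

# How fast the delay drifts as the Earth turns (finite difference over 1 s).
drift = (geometric_delay(baseline, dec_m87, EARTH_ROTATION)
         - geometric_delay(baseline, dec_m87, 0.0))
print(f"delay drift near H = 0: {drift * 1e6:+.2f} microseconds per second")
```

In this toy setup the delay swings by tens of milliseconds over a few hours and drifts by roughly two microseconds every second. At 1.3 mm, an error of just a few picoseconds already amounts to a full cycle of phase.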

Something in the air

Because most of the data on disk is random background noise from the instruments and environment, extracting the signal with correlation reduces the data 1,000-fold. But it’s still not clean enough to start making an image. The next step is calibration and a signal-extraction step called fringe-fitting. Blackburn says a main aim is to correct for turbulence in the atmosphere above each telescope. Light travels at a constant rate through a vacuum, but changes speed through a medium like air. By comparing the signals from multiple antennas over a period of time, software can build models of the randomly changing atmospheric delay over each site and correct for it.
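Here is a minimal sketch of the delay part of fringe fitting for a single baseline. A leftover delay τ makes the phase of the correlated signal rotate linearly with frequency (by 2πfτ), so the search tries many delays and keeps the one that lines the channels back up. At 1.3 mm (about 230 GHz), a delay of roughly 4.3 picoseconds already shifts the phase by a full cycle. The band layout (channel frequencies measured from the band edge), delay, and noise below are made up. Real fringe fitting also solves for delay rate and phase, often for all stations at once.

```python
import cmath
import math
import random

def fringe_search(freqs_hz, vis, trial_delays_s):
    """Return the trial delay that best flattens the phase across channels,
    i.e. maximizes |sum_k V_k * exp(-2*pi*i*f_k*tau)|."""
    def coherence(tau):
        return abs(sum(v * cmath.exp(-2j * math.pi * f * tau)
                       for f, v in zip(freqs_hz, vis)))
    return max(trial_delays_s, key=coherence)

# Hypothetical band: 64 channels spaced 31.25 MHz apart (2 GHz total).
freqs = [k * 31.25e6 for k in range(64)]
true_delay = 0.8e-9   # made-up residual delay left by the atmosphere
true_phase = 1.1      # made-up unknown constant phase offset
rng = random.Random(3)
vis = [cmath.exp(1j * (2 * math.pi * f * true_delay + true_phase))
       + complex(rng.gauss(0, 0.2), rng.gauss(0, 0.2)) for f in freqs]

trials = [(-5.0 + 0.01 * i) * 1e-9 for i in range(1001)]   # -5 ns to +5 ns
estimate = fringe_search(freqs, vis, trials)
print(f"estimated residual delay: {estimate * 1e9:.2f} ns")
```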

The classic calibration software is called AIPS, for Astronomical Image Processing System, created by the National Radio Astronomy Observatory. It was written 40 years ago, in Fortran, and is hard to maintain, says Chi-kwan Chan, an astronomer at the University of Arizona, but it was used by EHT because it’s a well-known standard. They also used two other packages. One is called HOPS, for Haystack Observatory Processing System, and was developed for astronomy and geodesy—the use of radio telescopes to measure movement not of celestial bodies but of the telescopes themselves, indicating changes in the Earth’s crust. The newest package is CASA, for Common Astronomy Software Applications.

Chan says the EHT team has made contributions even to the software it doesn’t maintain. The EHT marks the first time VLBI has been done at this scale, with this many heterogeneous sites and this much data, so some of the assumptions built into the standard software break down. For instance, the sites all have different equipment, and at some of them the signal-to-noise ratio is more uniform than at others. So the team sends bug reports to upstream developers and works with them to fix the code or relax the assumptions. “This is trying to push the boundary of the software,” Chan says.

Unlike correlation, calibration is not a big enough job for supercomputers, but it is too big for a workstation, so the team used the cloud. “Cloud computing is the sweet spot for analysis like fringe fitting,” Chan says. With calibration, the data is reduced a further 10,000-fold.

Put a ring on it

Finally, the imaging teams received the correlated and calibrated data. At this point no one was sure whether they’d see the “shadow” of the black hole (a dark area in the middle of a bright ring), just a bright disk, something unexpected, or nothing. Everyone had their own ideas. Because the computational analysis requires making many decisions (the data are compatible with infinitely many final images, some more probable than others), the scientists took several steps to limit how much expectations could bias the outcome. One step was to create four independent teams and not let them share their progress for a seven-week processing period. Once they saw that they had obtained similar images, very close to the one now familiar to us, they rejoined forces to combine the best ideas, but still proceeded with three different software packages to ensure that the results were not artifacts of software bugs.

The oldest is DIFMAP. It relies on CLEAN, a technique created in the 1970s, when computers were slow. As a result, it’s computationally cheap, but requires lots of human expertise and interaction. “It’s a very primitive way to reconstruct sparse images,” says Akiyama, who helped create a new package specifically for EHT, called SMILI. SMILI uses a more mathematically flexible technique called RML, for regularized maximum likelihood. Meanwhile, Andrew Chael, an astrophysicist now at Princeton, created another package based on RML, called eht-imaging. Akiyama and Chael both describe the relationship between SMILI and eht-imaging as a friendly competition.
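To show why CLEAN is computationally cheap, here is its simplest form (Högbom's original algorithm) on a 1-D toy image. It repeatedly finds the brightest point in the “dirty” image, records a fraction of it as a point source, and subtracts that fraction of the instrument's blurring pattern (the dirty beam). The beam shape, loop gain, and threshold are made-up illustrative values. DIFMAP's real workflow is two-dimensional and involves much more human interaction.

```python
def hogbom_clean(dirty, beam, gain=0.1, threshold=0.01, max_iter=1000):
    """1-D Högbom CLEAN. `beam` has odd length with its peak (1.0) at the center.
    Returns (clean_components, residual)."""
    residual = list(dirty)
    components = [0.0] * len(dirty)
    half = len(beam) // 2
    for _ in range(max_iter):
        peak = max(range(len(residual)), key=lambda i: abs(residual[i]))
        if abs(residual[peak]) < threshold:
            break
        flux = gain * residual[peak]
        components[peak] += flux
        # Remove this component's blurred contribution from the residual image.
        for j, b in enumerate(beam):
            k = peak + j - half
            if 0 <= k < len(residual):
                residual[k] -= flux * b
    return components, residual

def convolve(sky, beam):
    """Blur a point-source sky with the beam, as the instrument would."""
    half = len(beam) // 2
    out = [0.0] * len(sky)
    for i, s in enumerate(sky):
        for j, b in enumerate(beam):
            k = i + j - half
            if 0 <= k < len(sky):
                out[k] += s * b
    return out

sky = [0.0] * 64
sky[20], sky[40] = 1.0, 0.5                    # two made-up point sources
beam = [-0.1, 0.2, 0.6, 1.0, 0.6, 0.2, -0.1]   # made-up dirty beam with sidelobes
components, residual = hogbom_clean(convolve(sky, beam), beam)
print(f"recovered: {components[20]:.3f} at 20, {components[40]:.3f} at 40")
```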

In developing SMILI, Akiyama says he was in contact with medical imaging experts. Just as in VLBI, MRI, and CT, software needs to reconstruct the most likely image from ambiguous data. They all rely to some degree on assumptions. If you have some idea of what you’re looking at, it can help you see what’s really there. “The interferometric imaging process is kind of like detective work,” Akiyama says. “We are doing this in a mathematical way based on our knowledge of astronomical images.”

Still, users of each of the three EHT imaging pipelines didn’t just assume a single set of parameters—for things like expected brightness and image smoothness. Instead, each explored a wide variety. When you get an MRI, your doctor doesn’t show you a range of possible images, but that’s what the EHT team did in their published papers. “That is actually quite new in the history of radio astronomy,” Akiyama says. And to the team’s relief, all of them looked relatively similar, making the findings more robust.
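Here is a toy version of an RML reconstruction plus a parameter sweep. With Gaussian noise, maximizing the likelihood with a regularizer reduces to minimizing χ² plus a weighted penalty; this sketch uses a quadratic smoothness term and solves it exactly. The sampled frequencies, noise level, and weights are hypothetical, and the EHT packages support a richer set of regularizers and data products. The point is only the trade-off that sweeping the weight exposes.

```python
import math
import random

N = 32                      # pixels in a toy 1-D image
FREQS = [0, 1, 2, 3, 5, 8]  # sparse set of sampled spatial frequencies

def forward_rows():
    """Measurement operator: cosine/sine projections at each sampled frequency."""
    rows = []
    for u in FREQS:
        rows.append([math.cos(2 * math.pi * u * i / N) for i in range(N)])
        if u:  # the sine term vanishes at zero frequency
            rows.append([math.sin(2 * math.pi * u * i / N) for i in range(N)])
    return rows

def solve(mat, rhs):
    """Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(mat)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def rml_image(rows, data, lam):
    """Minimize chi^2 + lam * sum (x[i+1] - x[i])^2 via the normal equations."""
    mat = [[sum(row[i] * row[j] for row in rows) for j in range(N)] for i in range(N)]
    for i in range(N - 1):  # add lam * D^T D for first differences
        mat[i][i] += lam
        mat[i + 1][i + 1] += lam
        mat[i][i + 1] -= lam
        mat[i + 1][i] -= lam
    rhs = [sum(row[i] * d for row, d in zip(rows, data)) for i in range(N)]
    return solve(mat, rhs)

def chi2(rows, data, x):
    return sum((sum(r * xi for r, xi in zip(row, x)) - d) ** 2
               for row, d in zip(rows, data))

def roughness(x):
    return sum((x[i + 1] - x[i]) ** 2 for i in range(N - 1))

rng = random.Random(4)
truth = [math.exp(-((i - 10) / 2.0) ** 2) + math.exp(-((i - 22) / 2.0) ** 2)
         for i in range(N)]
rows = forward_rows()
data = [sum(r * t for r, t in zip(row, truth)) + rng.gauss(0.0, 0.1) for row in rows]

results = []
for lam in (0.01, 0.1, 1.0, 10.0):
    img = rml_image(rows, data, lam)
    results.append((lam, chi2(rows, data, img), roughness(img)))
    print(f"lambda={lam:<5} chi2={results[-1][1]:.4f} roughness={results[-1][2]:.4f}")
```

As the weight grows, χ² can only rise and roughness can only fall. No single setting is obviously “right,” which is one reason to explore and report a range of images.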

By the time they had combined their results into one image, the calibrated data had been reduced by another factor of 1,000. Of the whole data analysis pipeline, “you could think of it [as] a progressive data compression,” Crew says. From petabytes to bytes. The image, though smooth, contains only about 64 pixels’ worth of independent information.
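Multiplying the approximate reduction factors quoted above (1,000 for correlation, 10,000 for calibration, and 1,000 for imaging) gives a sense of the overall compression. These are order-of-magnitude figures, so the result is too.

```python
RECORDED_BYTES = 5e15  # about five petabytes, from the text

# Approximate reduction factors quoted in the text for each stage.
STAGES = [("correlation", 1_000), ("calibration", 10_000), ("imaging", 1_000)]

size = RECORDED_BYTES
for stage, factor in STAGES:
    size /= factor
    print(f"after {stage:<11}: ~{size:,.0f} bytes")
```

That is roughly ten billion to one overall, leaving about half a megabyte, so “petabytes to bytes” is a fair figure of speech.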

For the most part, the imaging algorithms could be run on laptops; the exception was the parameter surveys, in which images were constructed thousands of times with slightly different settings—those were done in the cloud. Each survey took about a day on 200 cores.
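A survey like this is embarrassingly parallel. Every reconstruction is independent, so the work reduces to enumerating parameter combinations and splitting them across cores. The axes, values, and even split below are hypothetical. By the text's figures, a day on 200 cores is about 4,800 core-hours.

```python
from itertools import product

# Hypothetical survey axes and values, for illustration only.
SURVEY = {
    "total_flux": [0.4, 0.5, 0.6, 0.7, 0.8],
    "prior_size": [20, 30, 40, 50, 60],
    "smoothness_weight": [0.0, 0.01, 0.1, 1.0, 10.0],
    "sparsity_weight": [0.0, 0.1, 1.0, 10.0],
    "entropy_weight": [0.0, 0.1, 1.0, 10.0],
}

def grid(survey):
    """Every combination of parameter values, as a list of dicts."""
    names = list(survey)
    return [dict(zip(names, combo)) for combo in product(*survey.values())]

def shard(points, num_workers, worker_id):
    """Static round-robin split so each core reconstructs its own subset."""
    return points[worker_id::num_workers]

points = grid(SURVEY)
print(len(points), "reconstructions")                    # 2000
print(len(shard(points, 200, 0)), "per core on 200 cores")  # 10
```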

Images also relied on physics simulations of black holes. These were used in three ways. First, simulations helped test the three software packages. A simulation can produce a model object such as a black hole accretion disk, the light it emits, and what its reconstructed image should look like. If imaging software can take the (simulated) emitted light and reconstruct an image similar to that in the simulation, it will likely handle real data accurately. (They also tested the software on a hypothetical giant snowman in the sky.) Second, simulations can help constrain the parameter space that’s explored by the imaging pipelines. And third, once images are produced, simulations can help interpret them, letting scientists deduce things such as M87*’s mass from the size of the ring.
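The third use can be illustrated with the textbook relation for a non-spinning black hole. Seen from a distance D, its shadow spans an angle θ ≈ 2√27 GM/(c²D), so inverting the relation gives a mass. This sketch plugs in approximate published values: a ring about 42 microarcseconds across, at about 16.8 megaparsecs. The EHT's actual estimate instead calibrated the ring size against simulations and arrived at about 6.5 billion solar masses, so the naive number below should only be close.

```python
import math

G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8         # speed of light, m/s
M_SUN = 1.989e30    # solar mass, kg
PARSEC = 3.0857e16  # meters
MICROARCSEC = math.radians(1 / 3600) * 1e-6   # one microarcsecond in radians

def mass_from_shadow(diameter_uas, distance_pc):
    """Invert the Schwarzschild shadow size, theta = 2*sqrt(27)*G*M / (c^2 * D).
    Returns the mass in solar masses."""
    theta = diameter_uas * MICROARCSEC
    distance_m = distance_pc * PARSEC
    return theta * C**2 * distance_m / (2 * math.sqrt(27) * G) / M_SUN

# Approximate published values for M87*: ~42 microarcsecond ring, ~16.8 Mpc away.
print(f"~{mass_from_shadow(42, 16.8e6):.2e} solar masses")
```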

The simulation software Chan used has three steps. First, it simulates how plasma circles around a black hole, interacting with magnetic fields and curved spacetime. This is called general relativistic magnetohydrodynamics. Second, because gravity also bends light, he adds general relativistic ray tracing. Third, he turns the movies generated by the simulation into data comparable to what the EHT observes. The first two steps use C, and the last uses C++ and Python. Chael, for his simulations, uses a package called KORAL, written in C. All simulations are run on supercomputers.
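The last step can be sketched in simplified form. An interferometer measures samples of the sky image's 2-D Fourier transform, one per baseline (u, v) measured in wavelengths. Turning a model image into EHT-like data therefore starts with evaluating that transform at the observed baselines. The thin ring below is a hypothetical toy model; a real pipeline would also add noise, station gains, and other corruptions.

```python
import cmath
import math

MICROARCSEC = math.radians(1 / 3600) * 1e-6   # one microarcsecond in radians

def ring_image(diameter_uas, total_flux, n_points=360):
    """Toy model: a thin ring drawn as n_points equal point sources (x, y, flux)."""
    r = diameter_uas / 2 * MICROARCSEC
    return [(r * math.cos(2 * math.pi * k / n_points),
             r * math.sin(2 * math.pi * k / n_points),
             total_flux / n_points) for k in range(n_points)]

def visibilities(image, baselines):
    """Direct Fourier transform: V(u, v) = sum flux * exp(-2*pi*i*(u*x + v*y)),
    with x, y in radians and u, v in wavelengths."""
    return [sum(f * cmath.exp(-2j * math.pi * (u * x + v * y)) for x, y, f in image)
            for u, v in baselines]

ring = ring_image(40, 1.0)              # hypothetical 40-microarcsecond ring
for glambda in (0, 1, 2, 3, 4, 6, 8):   # baseline lengths in billions of wavelengths
    amp = abs(visibilities(ring, [(glambda * 1e9, 0.0)])[0])
    print(f"{glambda} Gλ: |V| = {amp:.3f}")

# A thin ring's amplitude follows J0(pi * d * rho), whose first zero is at 2.4048...
first_null = 2.404825557695773 / (math.pi * 40 * MICROARCSEC)
print(f"first null near {first_null / 1e9:.1f} billion wavelengths")
```

For this toy ring the first null lands near 4 billion wavelengths. That is within reach of Earth-sized baselines at 1.3 mm, since an Earth diameter is about 10 billion wavelengths.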