#u-v graph method


Text

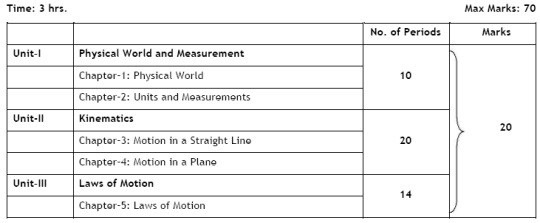

Experiment: To Determine the Focal Length of a Convex lens by Plotting Graphs Between u and v and 1/u and 1/v

Focal length of a convex lens: Objective

To find the focal length of a convex lens using the lens formula, by plotting a u-v graph and a graph of 1/u against 1/v.

Focal length of a convex lens: Apparatus and Material Required

- Convex lens (let's assume f = 10 cm)
- Optical bench with 3 uprights (with clamps)
- Optic needles
- Meter scale
- Spirit level

Focal length of a convex lens: Ray Diagram

Focal length of a convex…
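The teaser cuts off before the calculation, so here is a minimal sketch of how u, v readings are commonly reduced to a focal length. It assumes the New Cartesian sign convention (lens formula 1/v - 1/u = 1/f, with u negative for a real object). The readings are hypothetical values generated from the post's assumed f = 10 cm, not measurements, and the function names are illustrative only.

```python
from statistics import mean

# Hypothetical readings in cm (object distance u, image distance v) using the
# New Cartesian sign convention: u < 0 for a real object, v > 0 for a real image.
# They were generated from the post's assumed f = 10 cm; they are NOT measured data.
READINGS = [(-12.0, 60.0), (-15.0, 30.0), (-20.0, 20.0), (-30.0, 15.0), (-60.0, 12.0)]


def focal_length(u, v):
    """Lens formula 1/v - 1/u = 1/f, rearranged as f = uv / (u - v)."""
    return u * v / (u - v)


def focal_length_from_reciprocal_graph(readings):
    """Estimate f from a least-squares line through (1/|u|, 1/|v|).

    Ideally 1/|u| + 1/|v| = 1/f, so the line has slope -1 and cuts both
    axes at 1/f; f is taken as the mean of the two reciprocal intercepts.
    """
    xs = [1 / abs(u) for u, _ in readings]
    ys = [1 / abs(v) for _, v in readings]
    x_bar, y_bar = mean(xs), mean(ys)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    y_intercept = y_bar - slope * x_bar   # where the line meets the 1/v axis
    x_intercept = -y_intercept / slope    # where the line meets the 1/u axis
    return mean([1 / y_intercept, 1 / x_intercept])


for u, v in READINGS:
    print(f"u = {u:6.1f} cm, v = {v:5.1f} cm, f = {focal_length(u, v):.2f} cm")
print(f"f from 1/u-1/v graph: {focal_length_from_reciprocal_graph(READINGS):.2f} cm")
```

With real readings the per-pair values scatter, which is why the graph is used: in the 1/u-1/v plot (using magnitudes) the best-fit line should have a slope close to -1 and cut both axes at 1/f. On the u-v graph, the curve meets the line |v| = |u| at the point (2f, 2f).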

#1/u vs 1/v graph#CBSE PHYSICS#CBSE/ICSE physics practical#Convex lens#Convex lens experiment#Focal length#Focal length determination#Lens formula experiment#lens properties#Optics experiment#Optics lab experiment#PHYSICS PRACTICAL#Ray optics#Ray optics experiment#Science lab projects#u-v graph method


Text

Large Language Models and industrial manufacturing, a bibliography

References

Autodesk. (n.d.). Autodesk simulation. Retrieved July 14, 2023, from https://www.autodesk.com/solutions/simulation/overview

Chen, M., Tworek, J., Jun, H., Yuan, Q., de Oliveira Pinto, H. P., Kaplan, J., Edwards, H., Burda, Y., Joseph, N., Brockman, G., Ray, A., Puri, R., Krueger, G., Petrov, M., Khlaaf, H., Sastry, G., Mishkin, P., Chan, B., Gray, S., . . . Zaremba, W. (2021). Evaluating large language models trained on code. ArXiv. https://doi.org/10.48550/arXiv.2107.03374

Christiano, P. F., Leike, J., Brown, T., Martic, M., Legg, S., & Amodei, D. (2017). Deep reinforcement learning from human preferences. In I. Guyon, U. Von Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, & R. Garnett (Eds.), Advances in neural information processing systems (Vol. 30, pp. 4299–4307). Curran Associates. https://papers.nips.cc/paper_files/paper/2017/hash/d5e2c0adad503c91f91df240d0cd4e49-Abstract.html

Dassault Systèmes. (n.d.). Dassault Systèmes simulation. Retrieved July 14, 2023, from https://www.3ds.com/products-services/simulia/overview/

Dhariwal, P., Jun, H., Payne, C., Kim, J. W., Radford, A., & Sutskever, I. (2020). Jukebox: A generative model for music. ArXiv. https://doi.org/10.48550/arXiv.2005.00341

Du, T., Inala, J. P., Pu, Y., Spielberg, A., Schulz, A., Rus, D., Solar-Lezama, A., & Matusik, W. (2018). InverseCSG: Automatic conversion of 3D models to CSG trees. ACM Transactions on Graphics (TOG), 37(6), Article 213. https://doi.org/10.1145/3272127.3275006

Du, T., Wu, K., Ma, P., Wah, S., Spielberg, A., Rus, D., & Matusik, W. (2021). DiffPD: Differentiable projective dynamics. ACM Transactions on Graphics (TOG), 41(2), Article 13. https://doi.org/10.1145/3490168

Du, Y., Li, S., Torralba, A., Tenenbaum, J. B., & Mordatch, I. (2023). Improving factuality and reasoning in language models through multiagent debate. ArXiv. https://doi.org/10.48550/arXiv.2305.14325

Erez, T., Tassa, Y., & Todorov, E. (2015). Simulation tools for model-based robotics: Comparison of Bullet, Havok, MuJoCo, ODE and PhysX. In N. Amato (Ed.), Proceedings of the 2015 IEEE International Conference on Robotics and Automation (pp. 4397–4404). IEEE. https://doi.org/10.1109/ICRA.2015.7139807

Erps, T., Foshey, M., Luković, M. K., Shou, W., Goetzke, H. H., Dietsch, H., Stoll, K., von Vacano, B., & Matusik, W. (2021). Accelerated discovery of 3D printing materials using data-driven multiobjective optimization. Science Advances, 7(42), Article eabf7435. https://doi.org/10.1126/sciadv.abf7435

FeatureScript introduction. (n.d.). Retrieved July 11, 2023, from https://cad.onshape.com/FsDoc/

Ferruz, N., Schmidt, S., & Höcker, B. (2022). ProtGPT2 is a deep unsupervised language model for protein design. Nature Communications, 13(1), Article 4348. https://doi.org/10.1038/s41467-022-32007-7

Guo, M., Thost, V., Li, B., Das, P., Chen, J., & Matusik, W. (2022). Data-efficient graph grammar learning for molecular generation. ArXiv. https://doi.org/10.48550/arXiv.2203.08031

Jiang, B., Chen, X., Liu, W., Yu, J., Yu, G., & Chen, T. (2023). MotionGPT: Human motion as a foreign language. ArXiv. https://doi.org/10.48550/arXiv.2306.14795

JSCAD user guide. (n.d.). Retrieved July 14, 2023, from https://openjscad.xyz/dokuwiki/doku.php

Kashefi, A., & Mukerji, T. (2023). ChatGPT for programming numerical methods. Journal of Machine Learning for Modeling and Computing, 4(2), 1–74. https://doi.org/10.1615/JMachLearnModelComput.2023048492

Koo, B., Hergel, J., Lefebvre, S., & Mitra, N. J. (2017). Towards zero-waste furniture design. IEEE Transactions on Visualization and Computer Graphics, 23(12), 2627–2640. https://doi.org/10.1109/TVCG.2016.2633519

Li, J., Rawn, E., Ritchie, J., Tran O’Leary, J., & Follmer, S. (2023). Beyond the artifact: Power as a lens for creativity support tools. In S. Follmer, J. Han, J. Steimle, & N. H. Riche (Eds.), Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology (Article 47). Association for Computing Machinery. https://doi.org/10.1145/3586183.3606831

Liu, R., Wu, R., Van Hoorick, B., Tokmakov, P., Zakharov, S., & Vondrick, C. (2023). Zero-1-to-3: Zero-shot one image to 3D object. ArXiv. https://doi.org/10.48550/arXiv.2303.11328

Ma, P., Du, T., Zhang, J. Z., Wu, K., Spielberg, A., Katzschmann, R. K., & Matusik, W. (2021). DiffAqua: A differentiable computational design pipeline for soft underwater swimmers with shape interpolation. ACM Transactions on Graphics (TOG), 40(4), Article 132. https://doi.org/10.1145/3450626.3459832

Makatura, L., Wang, B., Chen, Y.-L., Deng, B., Wojtan, C., Bickel, B., & Matusik, W. (2023). Procedural metamaterials: A unified procedural graph for metamaterial design. ACM Transactions on Graphics, 42(5), Article 168. https://doi.org/10.1145/3605389

Mathur, A., & Zufferey, D. (2021). Constraint synthesis for parametric CAD. In M. Okabe, S. Lee, B. Wuensche, & S. Zollmann (Eds.), Pacific Graphics 2021: The 29th Pacific Conference on Computer Graphics and Applications: Short Papers, Posters, and Work-in-Progress Papers (pp. 75–80). The Eurographics Association. https://doi.org/10.2312/pg.20211396

Mirchandani, S., Xia, F., Florence, P., Ichter, B., Driess, D., Arenas, M. G., Rao, K., Sadigh, D., & Zeng, A. (2023). Large language models as general pattern machines. ArXiv. https://doi.org/10.48550/arXiv.2307.04721

Müller, P., Wonka, P., Haegler, S., Ulmer, A., & Van Gool, L. (2006). Procedural modeling of buildings. In ACM SIGGRAPH 2006 papers (pp. 614–623). Association for Computing Machinery. https://doi.org/10.1145/1179352.1141931

Ni, B., & Buehler, M. J. (2024). MechAgents: Large language model multi-agent collaborations can solve mechanics problems, generate new data, and integrate knowledge. Extreme Mechanics Letters, 67, Article 102131. https://doi.org/10.1016/j.eml.2024.102131

O’Brien, J. F., Shen, C., & Gatchalian, C. M. (2002). Synthesizing sounds from rigid-body simulations. In Proceedings of the 2002 ACM SIGGRAPH/Eurographics Symposium on Computer Animation (pp. 175–181). Association for Computing Machinery. https://doi.org/10.1145/545261.545290

OpenAI. (2023). GPT-4 technical report. ArXiv. https://doi.org/10.48550/arXiv.2303.08774

Ouyang, L., Wu, J., Jiang, X., Almeida, D., Wainwright, C., Mishkin, P., Zhang, C., Agarwal, S., Slama, K., Ray, A., Schulman, J., Hilton, J., Kelton, F., Miller, L., Simens, M., Askell, A., Welinder, P., Christiano, P. F., Leike, J., & Lowe, R. (2022). Training language models to follow instructions with human feedback. In S. Koyejo, S. Mohamed, A. Agarwal, D. Belgrave, K. Cho, & A. Oh (Eds.), Advances in Neural Information Processing Systems (Vol. 35, pp. 27730–27744). Curran Associates. https://proceedings.neurips.cc/paper_files/paper/2022/hash/b1efde53be364a73914f58805a001731-Abstract-Conference.html

Özkar, M., & Stiny, G. (2009). Shape grammars. In ACM SIGGRAPH 2009 courses (Article 22). Association for Computing Machinery. https://doi.org/10.1145/1667239.1667261

Penedo, G., Malartic, Q., Hesslow, D., Cojocaru, R., Cappelli, A., Alobeidli, H., Pannier, B., Almazrouei, E., & Launay, J. (2023). The RefinedWeb dataset for Falcon LLM: Outperforming curated corpora with web data, and web data only. ArXiv. https://doi.org/10.48550/arXiv.2306.01116

Prusinkiewicz, P., & Lindenmayer, A. (1990). The algorithmic beauty of plants. Springer Science & Business Media.

Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., & Sutskever, I. (2019, February 14). Language models are unsupervised multitask learners. OpenAI. https://openai.com/index/better-language-models/

Ramesh, A., Pavlov, M., Goh, G., Gray, S., Voss, C., Radford, A., Chen, M., & Sutskever, I. (2021). Zero-shot text-to-image generation. Proceedings of Machine Learning Research, 139, 8821–8831. https://proceedings.mlr.press/v139/ramesh21a.html

Repetier. (n.d.). Repetier software. Retrieved July 20, 2023, from https://www.repetier.com/

Richards, T. B. (n.d.). AutoGPT. Retrieved February 11, 2024, from https://github.com/Significant-Gravitas/AutoGPT

Rozenberg, G., & Salomaa, A. (1980). The mathematical theory of L systems. Academic Press.

Slic3r. (n.d.). Slic3r - Open source 3D printing toolbox. Retrieved July 20, 2023, from https://slic3r.org/

Stiny, G. (1980). Introduction to shape and shape grammars. Environment and Planning B: Planning and Design, 7(3), 343–351. https://doi.org/10.1068/b070343

Sullivan, D. M. (2013). Electromagnetic simulation using the FDTD method. John Wiley & Sons. https://doi.org/10.1002/9781118646700

Todorov, E., Erez, T., & Tassa, Y. (2012). MuJoCo: A physics engine for model-based control. In 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (pp. 5026–5033). IEEE. https://doi.org/10.1109/IROS.2012.6386109

Turing, A. M. (1936). On computable numbers, with an application to the Entscheidungsproblem. Proceedings of the London Mathematical Society, s2-42(1), 230–265. https://doi.org/10.1112/plms/s2-42.1.230

Willis, K. D., Pu, Y., Luo, J., Chu, H., Du, T., Lambourne, J. G., Solar-Lezama, A., & Matusik, W. (2021). Fusion 360 gallery: A dataset and environment for programmatic CAD construction from human design sequences. ACM Transactions on Graphics (TOG), 40(4), Article 54. https://doi.org/10.1145/3450626.3459818

Xu, J., Chen, T., Zlokapa, L., Foshey, M., Matusik, W., Sueda, S., & Agrawal, P. (2021). An end-to-end differentiable framework for contact-aware robot design. ArXiv. https://doi.org/10.48550/arXiv.2107.07501

Yu, L., Shi, B., Pasunuru, R., Muller, B., Golovneva, O., Wang, T., Babu, A., Tang, B., Karrer, B., Sheynin, S., Ross, C., Polyak, A., Howes, R., Sharma, V., Xu, P., Tamoyan, H., Ashual, O., Singer, U., . . . Aghajanyan, A. (2023). Scaling autoregressive multi-modal models: Pretraining and instruction tuning. ArXiv. https://doi.org/10.48550/arXiv.2309.02591

Zhang, Y., Yang, M., Baghdadi, R., Kamil, S., Shun, J., & Amarasinghe, S. (2018). GraphIt: A high-performance graph DSL. Proceedings of the ACM on Programming Languages, 2(OOPSLA), Article 121. https://doi.org/10.1145/3276491

Zhao, A., Xu, J., Konaković-Luković, M., Hughes, J., Spielberg, A., Rus, D., & Matusik, W. (2020). RoboGrammar: Graph grammar for terrain-optimized robot design. ACM Transactions on Graphics (TOG), 39(6), Article 188. https://doi.org/10.1145/3414685.3417831

Zilberstein, S. (1996). Using anytime algorithms in intelligent systems. AI Magazine, 17(3), 73–83. https://doi.org/10.1609/aimag.v17i3.1232


Note

1/2 i think ill be able to list all of them but im not sure so i will try? the eye, the web, the hunt, the flesh, corruption, the vast, the buried, the stranger, the spiral, the dark, desolation, the lonely, the end, extinction technically. this took me some time tho and i still am missing one bc i know that there are 14 (15 with extinction)

2/2 and for the method. entities usually come in pairs or at least i associate them this way (for example vast and buried being opposites or spiral and stranger being similar, hunt and flesh also feel like a pair to me even though it doesnt really make sense) also im thinking of any meta theory graphs ive seen or trying to remember avatars/statements

thats valid and makes a lot of sense!! the 1 u forgot was the slaughter which is understandable lol. i remember them in little groups too but not grouped conceptually just grouped alphabetically bc its easy for me to remember like. B-C-2D-3E-F-H-L and then theres a big jump in the alphabet and it goes to 3S-V-W and thats all of em. also i like to automatically count the extinction bc it saves me from the confusion of like listing it and then counting 14 and thinking thats all of them when i actually forgot one of the others and replaced it w the extinction


Note

im in ap bio and our teacher doesn’t actually teach us anything but he gives us 9 page packets to fill out during the unit (which is like. 4 classes?) and literally every single question aside from graphs or labeling images is on quizlet so we use that and now we’re all gonna fail our ap exam

ahhhhhhhhhhh!!!!!!!!!!!!!!!!

god that sounds a w f u l !!!! but i also know that that is the experience of a lot of kids who take ap bio and it sucks!!! bio is super super interesting if you have a teacher whose methods align with your learning style and is just genuinely really excited to teach it. i still remember lots of random things about hormones or cell processes just because of how my teacher taught them

if you want some genuine recommendations from me, i always recommend the review book. it’s basically your textbook but without a lot of the language that makes it hard to understand. it’s just super streamlined and i would use it to study before each individual test, not just the ap exam. it’s so much more straightforward and i found that really helps and it also has practice problems and answers*

other than that i recommend crash course and bozeman science on youtube. crash course is (obviously) a crash course, so it’s not as detailed, but i really like using it for overviews. if i don’t really remember or understand something hank mentions, i know that it’s something i need to look at again. and then i always would listen to it as i fell asleep and all morning the day before tests, because i just really really hoped that would just. help. bozeman science is (in my opinion) a lot more boring, but they’re specifically made for the ap course and exam and are a lot more detailed. if you’re really struggling, i recommend those**

i’m sorry your teacher sucks :// i really do genuinely believe that way more people would love biology if they just had a better teacher. but hey, don’t give up the ap just yet! you’ve got time! (don’t bother studying plants. no one cares about plants)

[footnotes below cut cause i don’t shut up]

*btw this is true of all the ap books i ever used. i highly recommend them for sciences and history, and as much as i still didn’t understand calculus, i understood it a tiny bit more and it helped to be able to see what felt really really confusing simplified on a page. it’s just the barebones knowledge and i know for me, it’s so much easier to process and figure out what you need to know. i really wish all textbooks were written the way the review books were honestly

**i apply the same principle to both world and us ap history. crash course videos are VERY good for basic overview and finding holes in your knowledge. i also would put it on in the background as i reviewed other notes or read my review book because i was like “this is a ridiculous amount of information, maybe i should just like surround myself in a bubble of it” (i have no idea if it helped). and then for the more thorough videos, i would check out joczproductions, also on youtube. they’re longer and kind of boring but they’re good for like. getting a lot of information at once. AND there are videos that compare different areas and themes and whatnot which are REALLY important to keep in mind on the exam!!!

(also if you google ‘apush notes site’..............)

(i l o v e amsco review book for apush btw, it doesn’t have answers for the practice questions but like i really do love it and have genuinely considered buying a new copy, i gave mine away, just to have to read through. for other books, i looked at both the official college board ones and other unofficial versions. for those just go with whatever looks like it’ll help you more, for my ap psych book i literally just went back and forth between two different review books because i hated the textbook that much)

#apush#ap bio#ap biology#ap#advanced placement#ap advice#not pjo#answered#school#a;sdlkfjLKSDJF SORRY I HAVE A LOT OF THINGS TO SAY ABOUT APS!!!!!!#and they can be uber overwhelming so like i wanna tell ppl how i dealt w that#bc w know i DONT do it well pfff#you can do it i believe in you#please take my long rambly advice with many grains of salt#Anonymous#Thanks from the Argo!


Text

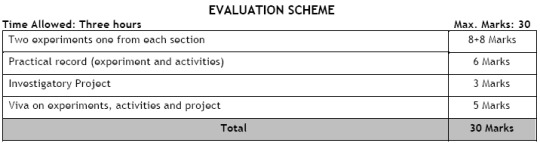

1. To determine the resistivity of two / three wires by plotting a graph of potential difference versus current.

2. To find the resistance of a given wire / standard resistor using a metre bridge.

OR

To verify the laws of combination (series) of resistances using a metre bridge.

OR

To verify the laws of combination (parallel) of resistances using a metre bridge.

3. To compare the EMF of two given primary cells using a potentiometer.

OR

To determine the internal resistance of a given primary cell using a potentiometer.

4. To determine resistance of a galvanometer by half-deflection method and to find its figure of merit.

5. To convert the given galvanometer (of known resistance and figure of merit) into a voltmeter of desired range and to verify the same.

OR

To convert the given galvanometer (of known resistance and figure of merit) into an ammeter of desired range and to verify the same.

6. To find the frequency of AC mains with a sonometer.

Experiments Assigned for Term-II

1. To find the focal length of a convex lens by plotting graphs between u and v or between 1/u and 1/v.

2. To find the focal length of a concave lens, using a convex lens.

OR

To find the focal length of a convex mirror, using a convex lens.

3. To determine angle of minimum deviation for a given prism by plotting a graph between angle of incidence and angle of deviation.

4. To determine refractive index of a glass slab using a travelling microscope.

5. To find the refractive index of a liquid by using a convex lens and a plane mirror.

6. To draw the I-V characteristic curve for a p-n junction in forward bias and reverse bias.

_______________________________

#isc #icse #icsevscbse #icsememes #education #likeforlikes #like4likes #likesforlike #likeforfollow #liketime #likeforlikeback #likelike #likers #likeme #followforfollowback #follow #follow4followback #followers #followme #friends #study #instadaily #instagram #instagood #instalike #insta #instamood #instaphoto #instacool #india 📖📖📖📖


Text

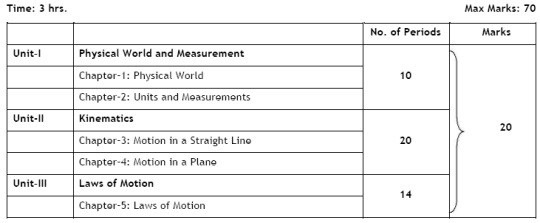

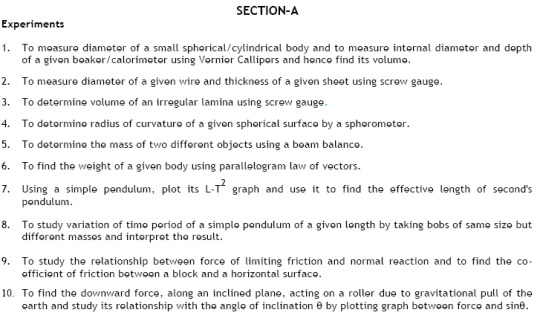

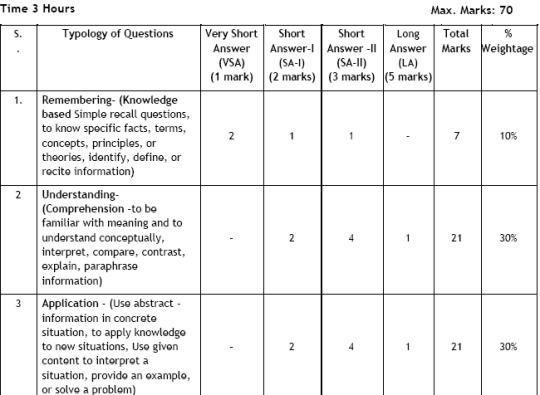

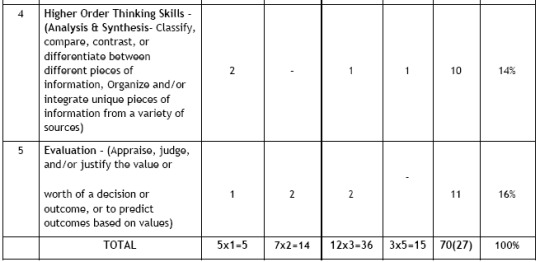

Board practical exam paper

All India Senior Secondary Certificate Exam (2020–21) SUB: PHYSICS (042)

Date: ………… Time: 3 hrs

Section A

1. To determine resistivity of two/three wires by plotting a graph for potential difference versus current.

2. To find resistance of a given wire/ standard resistor using meter bridge.

3. To determine resistance of a galvanometer by half-deflection method and to find its figure of merit.

4. To convert the given galvanometer (of known resistance and figure of merit) into a voltmeter of desired range and to verify the same.

Section B

1. To find the focal length of a convex lens by plotting graphs between u and v or between 1/u and 1/v.

2. To find the focal length of a convex mirror, using a convex lens.

3. To determine angle of minimum deviation for a given prism by plotting a graph between angle of incidence and angle of deviation.

4. To draw the I-V characteristic curve for a p-n junction diode in forward bias and reverse bias.

Evaluation Scheme:

- Two experiments, one from each section: 8 + 8 marks
- Practical record (experiments and activities): 7 marks
- Viva based on experiments: 7 marks

Total: 30 marks

Internal examiner signature External examiner signature


Text

Game Theory (SSG) in Physical and Cybersecurity

Most organizations only have so many resources to go around, forcing them to choose where and when to use their money and assets to improve security. In the past, this pushed the cyber community to develop different methods and routes to best keep businesses safe. Among these were algorithmic game-theoretic approaches that needed to be tested, leading to what we know today as “cyber games” (Sinha, et al.). Many of us have heard of Red Team versus Blue Team events or of a company hiring a security group to mock attack or break in. Even disaster recovery tabletop games are reminiscent of a kind of play, like cards or escape rooms.

Here are some of the algorithms that helped randomize and strengthen security: ARMOR, IRIS, PROTECT, TRUSTS, PAWS, and MIDAS. These specifically were all used for physical security in some way, usually to randomize patrol units or to protect wildlife. Applying these algorithms to cybersecurity is relatively new, but they have already been used for “deep packet inspection, optimal use of honey pots, and enforcement of privacy policies” (Sinha, et al.; Vanek O, Yin Z, Jain M, et al.; Durkota K, Lisy V, Kiekintveld C, et al.; Blocki J, Christin N, Datta A, et al.).

Sometimes you may see these games called “Stackelberg Security Games” (SSG). In these games, one side has to protect something and the other uses adversarial reasoning to try to invade, destroy, or defeat it. The defender moves first by committing to a (usually randomized) strategy, and the attacker observes that strategy before choosing where to strike. The article I read explains more about research on the results of these games, as well as the challenges of doing this research and real-world difficulties. For example, when you sit down to play a game and you’re given three options, you’re going to try to go with the best option. A real-world challenge is that humans don’t always pick the best option, especially under pressure or in a time crunch, and especially in an emergency. So, since no one is perfect, these games simply ask: Is this a better security profile than before (Sinha, et al.)?
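To make the defender-first, attacker-second idea concrete, here is a minimal sketch of a heavily simplified, zero-sum Stackelberg security game. It is not ARMOR, IRIS, or any other system named above; the payoff model (the attacker gains a target's value only when that target is left uncovered), the fractional-coverage assumption, and the function name are all assumptions for illustration. Because the attacker sees the coverage probabilities and hits whichever target pays best, the defender's best move is to spread coverage until the attacker's expected payoff is the same on every covered target.

```python
def optimal_coverage(values, resources, iterations=100):
    """Defender's optimal coverage in a simplified zero-sum security game.

    Assumptions (for illustration only):
      * values[i] > 0 is what the attacker gains by hitting target i unprotected;
      * coverage c[i] is a probability in [0, 1] with sum(c) <= resources;
      * the attacker's expected payoff on target i is values[i] * (1 - c[i]),
        and the attacker observes c before picking the best target.
    The defender minimises the attacker's best payoff, which means driving every
    covered target down to a common payoff t; t is found by bisection.
    """
    if resources >= len(values):
        return [1.0] * len(values), 0.0  # enough resources to cover everything

    def resources_needed(t):
        # Coverage required so that no target pays the attacker more than t.
        return sum(max(0.0, 1 - t / w) for w in values)

    low, high = 0.0, max(values)
    for _ in range(iterations):
        mid = (low + high) / 2
        if resources_needed(mid) > resources:
            low = mid   # t is unaffordable: we would need more coverage than we have
        else:
            high = mid  # t is affordable: try to push the attacker's payoff lower
    coverage = [max(0.0, 1 - high / w) for w in values]
    return coverage, high


coverage, attacker_payoff = optimal_coverage([10.0, 5.0], resources=1.0)
print([round(c, 3) for c in coverage], round(attacker_payoff, 3))
```

For two targets worth 10 and 5 with one patrol unit, this gives coverage of about 0.67 and 0.33, leaving the attacker an expected payoff of about 3.33 whichever target it picks. A real patrol schedule would then be randomized day to day so that each target is actually covered with those probabilities.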

Most of these algorithms work by producing schedules, so they depend on organizations actually following them. For example, researchers compared how well a metro in Los Angeles ran on a human-made schedule versus an algorithmically designed one. Riders gave their opinions on their experience, not knowing which had made the schedule for the day. Not only did the algorithm win, but letting a computer do that work saves a human employee, on average, an entire day’s worth of work creating the schedule (Sinha, et al.)!

Another example is using these algorithms to pick and inspect data packets in an extremely large network, as if taking a random survey sample. A network can’t be expected to “deep dive” into every single data packet that hops to or from a device, so we’re back to a limited allocation of resources.

Game theory to the rescue: “The objective of the defender is to prevent the intruder from succeeding by selecting the packets for inspection, identifying the attacker, and subsequently thwarting the attack … The authors provide polynomial time approximation algorithm that benefits from the submodularity property of the discretized zero-sum variant of the game and finds solutions with bounded error in polynomial time” (Sinha, et al.).

That’s pretty technical but basically means that the game will help the company playing it decide how often they need to set up their system to do these random checks. For example, PetCo may only have to check once an hour, while PetSmart might have to set their system to check a random data packet every minute.
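A back-of-the-envelope version of the “how often should we check?” question can use a much simpler model than the game-theoretic one quoted above: assume packets are inspected uniformly at random without replacement, and an attack needs a fixed number of malicious packets. The chance of inspecting at least one malicious packet is then 1 - C(N - m, k) / C(N, k) for N packets, m malicious, and k inspected. This hypergeometric model, the function names, and the numbers are assumptions for illustration, not the method from the article.

```python
from math import comb


def detection_probability(total, inspected, malicious):
    """Chance that at least one malicious packet is inspected.

    Assumes `inspected` of `total` packets are chosen uniformly at random
    without replacement and `malicious` of them belong to the attack
    (a hypergeometric model, not the game-theoretic model quoted above).
    """
    # comb(n, k) returns 0 when k > n, so inspecting more packets than there are
    # benign ones correctly yields probability 1.
    return 1 - comb(total - malicious, inspected) / comb(total, inspected)


def min_inspections(total, malicious, target):
    """Smallest number of random inspections reaching the target detection rate."""
    for k in range(total + 1):
        if detection_probability(total, k, malicious) >= target:
            return k
    return total


print(min_inspections(10, 1, 0.5), min_inspections(10, 3, 0.5))
```

With one malicious packet in a batch of 10, five random inspections give a 50% detection chance; if the attack needs three malicious packets, two inspections already clear 50%. That is one rough way to see why two companies, like the PetCo/PetSmart example, could land on very different inspection rates.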

Most people think physical security and cybersecurity are different, but they have a lot of common ground. The biggest overlap is that a computer algorithm can help when time and assets are tight!

Resources

Blocki J, Christin N, Datta A et al. Audit games. In: Proceedings of the 23rd International Joint Conference on Artificial Intelligence, 2013.

Durkota K, Lisy V, Kiekintveld C et al. Game-theoretic algorithms for optimal network security hardening using attack graphs. In: Proceedings of the 2015 International Conference on Autonomous Agents and Multiagent Systems (AAMAS '15), 2015. Richland, SC: International Foundation for Autonomous Agents and Multiagent Systems (IFAAMAS).

Sinha, Arunesh, et al. "From physical security to cybersecurity." Journal of Cybersecurity, 2015, p. 19+. Gale OneFile: Computer Science, link.gale.com/apps/doc/A468772624/CDB?u=lirn55593&sid=CDB&xid=435a0a02. Accessed 22 Apr. 2021.

Vanek O, Yin Z, Jain M et al. Game-theoretic resource allocation for malicious packet detection in computer networks. In: Proceedings of the 11th International Conference on Autonomous Agents and Multiagent Systems (AAMAS), 2012. Richland, SC: International Foundation for Autonomous Agents and Multiagent Systems (IFAAMAS).


Text

Baseline to Measurement

I completed the same tasks for my first measurement that I did for my baseline performance. This included the two strumming patterns and the chord progression. However, I noticed that for my baseline I played Fadd9 instead of Am. This mix-up ended up helping me in the long run (more on that later). When practicing, I realized that playing along to a song helped me know when to switch chords. So I tried this out with the chorus of “House of Gold” (vocals only). I set the playback speed to 0.75x, which was a good starting speed for me.

Practice: After a week of practicing, I noticed that I was starting to develop anticipatory behaviors while playing. For example, if I was going from F to G, while playing F I would look at the chord shape for G. Additionally, I would think about the positioning of my fingers on the G chord. This was a highlight of my practice time, because it was some sort of evidence that I was retaining something from this practice. My ultimate goal is to flow and become fluid in every aspect of playing the ukulele, and this gave me a little confidence and proof that it’s possible. I think that’s a crucial factor in learning any skill: having the motivation to pick it up, but also keeping that motivation when no progress (or no interesting progress) is shown.

As for the mistake of practicing the wrong four chords that I mentioned earlier, it helped my methods by diminishing some of the practice/order effects of playing the same four chords in the same order. My first measurement includes the same four chords, but my practice with the song switches up the order and replaces a chord (remember Am → Fadd9). On a side note, Fadd9 is still a beginner chord; here’s a video that provides some explanation: https://www.youtube.com/watch?v=8zOgwD-TQME.

Measurement: I followed the same procedure as baseline when measuring my first performance level by recording myself playing the chord progression with the strumming pattern (with all fingers and then just thumb): D-D-U-U-D-U. This pattern was executed 2x for each chord.

My initial goal in the semester was to work on my strumming; however, I got the hang of it more quickly than I had anticipated, moving from a simple down strum to the D-D-U-U-D-U pattern. So I felt like my next goal was to get comfortable with the fret side of the uke. This would include the motor movement involved in forming my fingers into the correct chords, pressing down on the strings (with enough pressure), and switching between chords with ease. I struggled with these areas the most at baseline, so I felt they were the most appropriate to measure. I decided to count the total errors of the whole session and separated the errors into three groups: dead notes, finger pressure, and finger placement/adjustment.

Here’s a graph comparing errors at baseline and the first measurement:

I also have a graph of completion time for each session:

Note: I did not focus on timing for this first performance session since that wasn’t part of my goal, however I wanted to note that it took slightly less time for me to do the same task a week later.

Thoughts on improvement:

Pros: I enjoyed my new technique of playing chords in a song with the vocals included. I find that knowing the words to a song helps with knowing which notes to produce and with timing (for when I start to practice that). Overall, my measurement session felt less “calculated” when it came to decision-making. I felt like my chord switches and strumming were a bit more fluid compared to baseline. I think I have gotten a lot better at the motor aspect. One major change I have noticed is my shift in hand position. I find myself slanting my fingers so that I don’t touch other strings, which would result in a buzzing or dead note sound.

Cons: I am feeling a little uncomfortable in my wrist after playing for a while, and I’m not sure if it is from this shift I am making. I also notice that I’m sometimes using my thumb to hold up the ukulele when I make these shifts. I know that people play in a way that’s comfortable for them; however, there is a standard posture that a ukulele player should adopt. They say there’s a YouTube video for everything, so I plan to find a few to help fix elements relating to posture.


Text

Will it be a ‘V’ or a ‘K’? The many shapes of recessions and recoveries

A 'V' recovery is seen as the best way to bounce back from a recession. Steve Stone/Moment via Getty Images

Recessions, sometimes defined as two consecutive quarters of declining economic output, are always painful in terms of how they affect our financial well-being. Like all bad things, fortunately, they eventually end and a recovery begins.

But not all recoveries or recessions look the same. And economists tend to compare their different paths to letters of the alphabet.

For example, in the current situation, you may have heard the path the recovery might take compared with a “V,” a “W” or even a “K.”

As a macroeconomist, I know this alphabet soup can be confusing for a lay reader. So here’s a guide to some of the most commonly used letters.

‘V’ for victory

While recessions are never a good thing, the “V-shaped” recovery is considered the best-case scenario. In a recession with a V shape, the decline is rapid, but so is the recovery.

An example of this type of recession occurred in 1981 and 1982. That recession happened after then-Federal Reserve Chair Paul Volcker rapidly raised interest rates starting in 1979 in an effort to curb high inflation. This caused a sharp recession, leading to what was then the highest unemployment rate in the U.S. since the Great Depression.

But outside economic circles, this recession is little remembered. Why? Mainly because the recovery was so rapid. After Volcker began cutting interest rates in the second half of 1982, the economy entered a recovery as sharp as the recession.

‘U’ and a prolonged bottom

Conversely, a “U-shaped” recession typically has a longer duration, both for the downturn and the recovery period. The 2001 recession that followed the dot-com bubble and the 9/11 attacks fits into this category.

In some ways, the post-dot-com recession was a mild one. The fall in employment from the job market’s peak in February 2001 until the trough in August 2003 was only slightly less than 2%. Yet it took over two years of decline for the economy to bottom out, and it took another year and a half for the number of jobs to exceed the pre-recession peak. Moreover, the amount of time spent near the bottom of the recession was relatively long.

The reclining ‘L’

The last U.S. recession, which coincided with the financial crisis of 2008, was especially brutal.

Economists call it an “L-shaped” recession because there was an initial sharp downturn, but a very plodding recovery. To see the L, you have to imagine the letter sort of reclining backward on its end.

The economy declined rapidly after the September 2008 failure of Lehman Brothers, and employment plunged about 6.3% from its pre-recession peak before reaching its low point. The pace of job creation in the recovery was very slow. It took almost 4½ years to recover all the jobs lost.

‘K’ and a two-track recovery

It may be hard to see how a K could be applied to data on a graph, but it’s the letter increasingly being used to describe the path of the current recession and eventual recovery.

Fed Chair Jerome Powell didn’t call it a “K,” but that’s basically what he meant when he discussed the current economic trajectory in a recent address. He expressed concerns that the U.S. will experience a “two-track recovery” in which things get better quickly for some people, while staying bad for others.

Is that the shape of recession we’re in?

It’s unclear. So far, looking at the whole economy, the U.S. has what has been called a “checkmark” or “swoosh” recession. It started to look something like a V, with a sharp drop in employment and then the beginnings of a rapid increase. But that recovery has begun to stall, though not for everyone.


As Powell suggested, the recovery may look different to various groups. White-collar workers may see a “V,” as their jobs are more easily done remotely. Blue-collar workers are seeing something closer to a U or L. One analysis shows that medium- to high-wage workers have regained almost all the jobs lost during the shutdown earlier this year. Conversely, employment of lower-wage workers is still more than 20% below its pre-COVID-19 peak.

Recessions are tough for anyone to live through. However, the shape of the recovery can make it more or less bearable.

William Hauk does not work for, consult for, own shares in or receive funding from any organization that could benefit from this article, and has disclosed no affiliations other than his research institution.

from Growth News https://growthnews.in/will-it-be-a-v-or-a-k-the-many-shapes-of-recessions-and-recoveries/ via https://growthnews.in


The "dichotomy theorem" is a statement about finding homomorphisms between graphs. A homomorphism from a graph G to a graph H is a function f : V(G) -> V(H), so that f(u) and f(v) are neighbors whenever u and v are neighbors. For example: if H has a vertex p that is its own neighbor, a self-loop, then you could map every vertex in G to p. Since f(u) = p = f(v), and p is its own neighbor, this trivially satisfies the requirements. Another example is when H = K3, a triangle. We can call these three vertices r, g, and b. Now finding a homomorphism f means mapping each vertex to one of these three "colors", [r]ed [g]reen or [b]lue. If two vertices in G are neighbors, then applying f to that pair has to give two different colors. So, finding a homomorphism from G to K3 is equivalent to finding a 3-coloring of G.
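A minimal brute-force sketch of the definition, assuming graphs are given as vertex lists plus sets of ordered pairs (both (u, v) and (v, u) for an undirected edge, and (p, p) for a self-loop); the helper names are hypothetical. It checks the two examples above: a looped vertex absorbs any graph, and a map into K3 is exactly a proper 3-coloring. The search tries all |V(H)|^|V(G)| maps, so it is exponential in the size of G and only meant to make the definition concrete.

```python
from itertools import product

def undirected(edges):
    """Store each undirected edge as both ordered pairs."""
    return {(u, v) for (u, v) in edges} | {(v, u) for (u, v) in edges}

def is_homomorphism(f, g_edges, h_edges):
    """True if f sends every edge (u, v) of G to an edge (f[u], f[v]) of H."""
    return all((f[u], f[v]) in h_edges for (u, v) in g_edges)

def find_homomorphism(g_vertices, g_edges, h_vertices, h_edges):
    """Try all |V(H)| ** |V(G)| maps; return the first homomorphism or None."""
    g_vertices = list(g_vertices)
    for images in product(h_vertices, repeat=len(g_vertices)):
        f = dict(zip(g_vertices, images))
        if is_homomorphism(f, g_edges, h_edges):
            return f
    return None

# K3 with "colors" r, g, b and no self-loops
K3_VERTICES = ["r", "g", "b"]
K3_EDGES = undirected([("r", "g"), ("g", "b"), ("b", "r")])

k4_vertices = [0, 1, 2, 3]
k4_edges = undirected([(a, b) for a in range(4) for b in range(a + 1, 4)])
c5_vertices = [0, 1, 2, 3, 4]
c5_edges = undirected([(i, (i + 1) % 5) for i in range(5)])

# A 5-cycle is 3-colorable; K4 is not.
print(find_homomorphism(c5_vertices, c5_edges, K3_VERTICES, K3_EDGES) is not None)  # True
print(find_homomorphism(k4_vertices, k4_edges, K3_VERTICES, K3_EDGES) is None)      # True

# A single vertex p with a self-loop absorbs any graph.
LOOP_EDGES = {("p", "p")}
print(find_homomorphism(k4_vertices, k4_edges, ["p"], LOOP_EDGES))  # {0: 'p', 1: 'p', 2: 'p', 3: 'p'}
```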

The dichotomy theorem states that for any fixed H, the problem of "Given G, is there a homomorphism from G to H?" is either in P or is NP-Complete. For undirected graphs this was proved by Hell and Nešetřil in 1990 (the problem is in P when H is bipartite or has a self-loop, and NP-Complete otherwise); the general version for directed graphs is a deep theorem, and wasn't proved until 2017 (independently by Bulatov and by Zhuk), with pretty advanced methods. A not-so-hard extension of this is that any "finite constraint problem", like 3-SAT, or a set of bounded-size equations over a finite field, is also necessarily either P or NP-Complete. This is shown by establishing a polynomial-time computable equivalence between a constraint problem and an appropriate (directed) graph homomorphism problem, such that either can always be written in terms of the other.

As nice and broad as this result is, it doesn't capture the problem of graph isomorphism, which is not known to be in P or NP-Complete. This problem can also be naturally phrased in terms of graph homomorphisms: given two graphs G and H, are there graph homomorphisms f1 : G -> H, and f2 : H -> G, such that f1 is the inverse of f2? There is no known way to encode graph isomorphism as a constraint problem, though. In the same way that many constraint problems can be described by a graph homomorphism problem to a fixed graph, many isomorphism problems (of different structures) can be described by a graph isomorphism problem.
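A brute-force sketch of the isomorphism-as-homomorphisms phrasing, assuming the same hypothetical edge-set representation (ordered pairs, both directions stored). Mutually inverse maps are bijections, so the search tries each bijection f1, takes f2 as its inverse, and checks that both are homomorphisms. The triangle example shows why both directions matter: every bijection from a 3-vertex path onto a triangle is a homomorphism, but its inverse is not.

```python
from itertools import permutations

def undirected(edges):
    """Store each undirected edge as both ordered pairs."""
    return {(u, v) for (u, v) in edges} | {(v, u) for (u, v) in edges}

def is_homomorphism(f, src_edges, dst_edges):
    """True if f sends every edge of the source graph to an edge of the target."""
    return all((f[u], f[v]) in dst_edges for (u, v) in src_edges)

def find_isomorphism(g_vertices, g_edges, h_vertices, h_edges):
    """Look for homomorphisms f1 : G -> H and f2 : H -> G with
    f2 . f1 = id_G and f1 . f2 = id_H. Exponential (n!) brute force."""
    g_vertices, h_vertices = list(g_vertices), list(h_vertices)
    if len(g_vertices) != len(h_vertices):
        return None  # no bijection exists
    for images in permutations(h_vertices):
        f1 = dict(zip(g_vertices, images))   # a bijection G -> H
        f2 = {v: u for u, v in f1.items()}   # its inverse H -> G
        if is_homomorphism(f1, g_edges, h_edges) and is_homomorphism(f2, h_edges, g_edges):
            return f1, f2
    return None

path_v = ["a", "b", "c"]
path_e = undirected([("a", "b"), ("b", "c")])
relabeled_v = [1, 2, 3]
relabeled_e = undirected([(1, 3), (3, 2)])  # the same path, relabeled
triangle_v = [1, 2, 3]
triangle_e = undirected([(1, 2), (2, 3), (3, 1)])

print(find_isomorphism(path_v, path_e, relabeled_v, relabeled_e) is not None)  # True
print(find_isomorphism(path_v, path_e, triangle_v, triangle_e))               # None
```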

I'm trying to figure out if there's a natural problem space that captures both constraint problems and isomorphism problems, that could permit a "trichotomy theorem" kind of thing, where everything is either P or NP-Complete or GI-complete (graph-isomorphism complete). An interesting candidate space I thought of would be a sort of 'graph category completion' problem. You're given a small category of graphs, where some are fixed and some are part of the parameters of the problem, and your goal is to find a set of graph morphisms that satisfy given relations. So a constraint problem would be:

Category with 2 objects G and H, H is fixed, G is part of the problem, one morphism f : G -> H, no relations.

and a graph isomorphism problem would be:

Category with 2 objects G and H, neither is fixed, G and H are both part of the problem, two morphisms f1 : G -> H and f2 : H -> G, relations are that f2∘f1=id_G, and f1∘f2=id_H.

One more important example is:

Two objects G and H, H is fixed, and you want a morphism f from H to G. This problem is always in P, because you can enumerate all potential functions f in time O(|G|^|H|). Since |H| is a constant, this is polynomial time.
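A sketch of that enumeration with the fixed graph H as the source, assuming the same hypothetical edge-set representation. As a concrete instance, take H to be the triangle K3: a simple graph has no self-loops, so a homomorphism from K3 must send its vertices to three pairwise-adjacent distinct vertices, which gives exactly 6 homomorphisms per triangle of G, found with |V(G)|^3 candidate maps.

```python
from itertools import product

def undirected(edges):
    """Store each undirected edge as both ordered pairs."""
    return {(u, v) for (u, v) in edges} | {(v, u) for (u, v) in edges}

def homomorphisms_from_fixed(h_vertices, h_edges, g_vertices, g_edges):
    """Yield every homomorphism H -> G by trying all |V(G)| ** |V(H)| maps.

    H is the fixed graph, so the exponent is a constant and the number of
    candidate maps is polynomial in the size of G.
    """
    h_vertices = list(h_vertices)
    for images in product(list(g_vertices), repeat=len(h_vertices)):
        f = dict(zip(h_vertices, images))
        if all((f[u], f[v]) in g_edges for (u, v) in h_edges):
            yield f

K3_V = [0, 1, 2]
K3_E = undirected([(0, 1), (1, 2), (2, 0)])

k4_v = ["w", "x", "y", "z"]
k4_e = undirected([(a, b) for i, a in enumerate(k4_v) for b in k4_v[i + 1:]])
c5_v = list(range(5))
c5_e = undirected([(i, (i + 1) % 5) for i in range(5)])

# 6 homomorphisms per triangle: K4 has 4 triangles, the 5-cycle has none.
print(sum(1 for _ in homomorphisms_from_fixed(K3_V, K3_E, k4_v, k4_e)))  # 24
print(sum(1 for _ in homomorphisms_from_fixed(K3_V, K3_E, c5_v, c5_e)))  # 0
```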

And then the question is, is any such "find the morphisms" problem necessarily either P/NP-Complete/GI-Complete? For most of the simple categories I can think of, I could pretty quickly show it was one of these three. Here's a more complicated example:

Category with four objects: {P, H, G1, G2}; P and H are fixed; G1 and G2 are part of the problem; six morphisms {h1 : H->G1, g1 : G1 -> G2, g2 : G2 -> G1, h2 : G2 -> H, p1 : P -> H, p2 : H -> P}; relations are {g2∘g1=id_G1, g1∘g2=id_G2, h2∘g1∘h1 = p1∘p2}.

That's a long statement, so let me explain why it's interesting -- I also have a picture attached above. The composition p1∘p2 is going to be some endomorphism of H, so, not necessarily an isomorphism, or the identity. The laws relating g1 and g2 mean that G1 is isomorphic to G2. Then instead of finding h2, we can define a new morphism h3 : G1 -> H, where h3 = h2∘g1. So now we're finding endomorphisms of H given by h3∘h1 and p1∘p2, that go through G1 and P respectively. If we have found any 4 morphisms h3, h1, p1, and p2, that don't necessarily satisfy the laws we want, we can define new ones: h1' = h1∘p1∘p2, and p1' = h3∘h1∘p1. Now h3∘h1' = h3∘h1∘p1∘p2 = p1'∘p2, and so these new morphisms will satisfy the laws. So we find these morphisms in polynomial time since |H| is a constant (or reject if we fail), and then we're left with finding an isomorphism between G1 and G2, so the whole thing is GI-complete.
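The fix-up step is just composition (h3∘(h1∘p1∘p2) and (h3∘h1∘p1)∘p2 are the same map), so it can be checked mechanically. A sketch on a hypothetical small instance: H and P are single edges, G1 is a 4-cycle, and maps are stored as dicts. It starts from four homomorphisms that violate the law and confirms that h1' and p1' satisfy it and are still homomorphisms. It only illustrates this step, not the cost of the other searches.

```python
def undirected(edges):
    """Store each undirected edge as both ordered pairs."""
    return {(u, v) for (u, v) in edges} | {(v, u) for (u, v) in edges}

def compose(*maps):
    """compose(f, g, h) is f . g . h as a dict; the rightmost map is applied first."""
    def apply(x):
        for m in reversed(maps):
            x = m[x]
        return x
    return {x: apply(x) for x in maps[-1]}

def is_homomorphism(f, src_edges, dst_edges):
    """True if f sends every edge of the source graph to an edge of the target."""
    return all((f[u], f[v]) in dst_edges for (u, v) in src_edges)

# Hypothetical instance: H and P are single edges, G1 is a 4-cycle.
H_E = undirected([(0, 1)])
P_E = undirected([("x", "y")])
G1_E = undirected([("a", "b"), ("b", "c"), ("c", "d"), ("d", "a")])

h1 = {0: "a", 1: "b"}                    # H  -> G1
h3 = {"a": 0, "b": 1, "c": 0, "d": 1}    # G1 -> H (a 2-coloring of the 4-cycle)
p2 = {0: "x", 1: "y"}                    # H  -> P
p1 = {"x": 1, "y": 0}                    # P  -> H (swaps the two ends of H)

# The law fails as given: h3 . h1 is the identity on H, p1 . p2 swaps 0 and 1.
print(compose(h3, h1) == compose(p1, p2))  # False

h1_new = compose(h1, p1, p2)  # h1' = h1 . p1 . p2 : H -> G1
p1_new = compose(h3, h1, p1)  # p1' = h3 . h1 . p1 : P -> H

print(compose(h3, h1_new) == compose(p1_new, p2))  # True
print(is_homomorphism(h1_new, H_E, G1_E), is_homomorphism(p1_new, P_E, H_E))  # True True
```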

This general strategy of dividing up the category into isomorphism-like subproblems (GI-complete), and problems of finding morphisms from a fixed structure to a graph (which are P-time), seems to address most cases. I wonder how hard it would be to prove this works in general. Any reasonable proof would certainly use the normal dichotomy theorem as a giant hammer.

Some things I'm wondering about, as a potentially interesting thing to allow in this "category completion" problem:

"Morphism f is faithful", i.e. f(u) and f(v) are neighbors only if u and v are neighbors

"Morphism f is injective" / "Morphism f is surjective".

Filling in a category with new graphs. Instead of just having to solve for morphisms, now finding objects is also part of the problem. Without putting bounds on the size of the "answer" graph, it's not immediately clear that the resulting problem is even in NP. I suspect you could show that it's always at most the product of the sizes of the other graphs given, or something like that, which would suffice to put it in NP.
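For a given candidate map, each of the first two kinds of extra condition is cheap to check. A sketch, assuming the same hypothetical edge-set representation and reading "faithful" literally as defined above, over all ordered pairs (so a self-loop at f(u) requires one at u). The examples contrast a fold of a path onto a single edge with a bijection of the path onto a triangle.

```python
def undirected(edges):
    """Store each undirected edge as both ordered pairs."""
    return {(u, v) for (u, v) in edges} | {(v, u) for (u, v) in edges}

def is_faithful(f, g_vertices, g_edges, h_edges):
    """f(u), f(v) adjacent only if u, v adjacent, over all ordered pairs."""
    return all((u, v) in g_edges
               for u in g_vertices for v in g_vertices
               if (f[u], f[v]) in h_edges)

def is_injective(f):
    """No two vertices share an image."""
    return len(set(f.values())) == len(f)

def is_surjective(f, h_vertices):
    """Every vertex of the target is hit."""
    return set(f.values()) == set(h_vertices)

path_v = ["a", "b", "c"]
path_e = undirected([("a", "b"), ("b", "c")])
k2_v, k2_e = [0, 1], undirected([(0, 1)])
tri_v, tri_e = [1, 2, 3], undirected([(1, 2), (2, 3), (3, 1)])

fold = {"a": 0, "b": 1, "c": 0}   # path -> single edge
embed = {"a": 1, "b": 2, "c": 3}  # path -> triangle; f(a), f(c) adjacent but a, c are not
print(is_faithful(fold, path_v, path_e, k2_e), is_injective(fold), is_surjective(fold, k2_v))        # True False True
print(is_faithful(embed, path_v, path_e, tri_e), is_injective(embed), is_surjective(embed, tri_v))  # False True True
```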


Letter to an interested student.

I had the good luck to chat with a high-school student who was interested in doing the most good she could do with hacker skills. So I wrote the letter I wish someone had written me when I was an excitable, larval pre-engineer. Here it is, slightly abridged.

Hi! You said you were interested in learning IT skills and using them for the greater good. I've got some links for learning to code, and opportunities for how to use those skills. There's a lot to read in here--I hope you find it useful!

First, on learning to code. You mentioned having a Linux environment set up, which means that you have a Python runtime readily available. Excellent! There are a lot of resources available, and a lot of languages to choose from. I recommend Python--it's easy to learn, it doesn't have a lot of sharp edges, and it's powerful enough to use professionally (my current projects at work are in Python). And in any case, mathematically at least, all programming languages are equally powerful; they just make some things easier or more difficult.

I learned a lot of Python by doing Project Euler; be warned that the problems do get very challenging, but I had fun with them. (I'd suggest attempting them in order.) I've heard good things about Zed Shaw's Learn Python the Hard Way, as well, though I haven't used that method to teach myself anything. It can be very, very useful to have a mentor or community to work with; I suggest finding a teacher who's happy to help you with your code, or at the very least signing up for Stack Overflow, a developer community and a very good place to ask questions. (See also /r/learnprogramming's FAQ.) The really important thing here is that you have something you want to do with the skills you want to learn. (As it is written, "The first virtue is curiosity. A burning itch to know is higher than a solemn vow to pursue truth.") Looking at my miscellaneous-projects directory on my laptop, the last thing I wrote was a Python script to download airport diagrams from the FAA's website (via some awful screenscraping logic), convert them from PDFs to SVGs, and upload them to Wikimedia Commons. It was something I was doing by hand, and then I automated it. I've also used R (don't use R if you can help it; it's weird and clunky) to make choropleth maps for internet arguments, and more Python to shuffle data to make Wikipedia graphs. It's useful to think of programming as powered armor for your brain.

You asked about ethical hacking. Given that the best minds of my generation are optimizing ad clicks for revenue, this is a really virtuous thing to want to do! So here's what I know about using IT skills for social good.

I mentioned the disastrous initial launch of healthcare.gov; TIME had a narrative of what happened there; see also the speech to SXSW by Mikey Dickerson (a former SRE manager at Google) about recruiting for the United States Digital Service. The main public-service organizations in the federal government are 18F (a sort of contracting organization in San Francisco) and the United States Digital Service, which works on larger projects and tries to set up standards. The work may sound unexciting, but it's extraordinarily vital--veterans getting their disability, immigrants not getting stuck in limbo, or a child welfare system that works. It's easy to imagine that providing services starts and ends with passing laws, but if our programs don't actually function, people don't get the benefits or services we fought to allocate to them. (See also this TED talk.)

The idea is that most IT professionals spend a couple of years in public service at one of these organizations before going into the industry proper. (I'm not sure what the future of 18F/USDS is under the current administration, but this sort of thing is less about what policy is and more about basic competence in executing it.)

For a broader look, you may appreciate Bret Victor's "What Can a Technologist Do About Climate Change?", or consider Vi Hart and Nicky Case's "Parable of the Polygons", a cute web-based 'explorable' which lets you play with Thomas Schelling's model of housing segregation (i.e., you don't need actively bitter racism in order to get pretty severe segregation, which is surprising).

For an idea of what's at stake with certain safety-critical systems, read about the Therac-25 disaster and the Toyota unintended-acceleration bug. (We're more diligent about testing the software we use to put funny captions on cat pictures than they were with the software that controls how fast the car goes.) Or consider the unintended consequences of small, ubiquitous devices.

And for an example of what 'white hat' hacking looks like, consider Google's Project Zero, which is a group of security researchers finding and reporting vulnerabilities in widely-used third-party software. Some of their greatest hits include "Cloudbleed" (an error in a proxying service leading to private data being randomly dumped into web pages en masse), "Rowhammer" (edit memory you shouldn't be able to control by exploiting physical properties of RAM chips), and amazing bug reports for products like TrendMicro Antivirus.

To get into that sort of thing, security researchers read reports like those linked above, do exercises like "capture the flag" (trying to break into a test system), and generally cultivate a lateral mode of thinking--similar to what stage magicians do, in a way. (Social engineering is related to, and can multiply the power of, traditional hacking; Kevin Mitnick's "The Art of Deception" is a good read. He gave a public talk a few years ago; I think that includes his story of how he stole proprietary source code from Motorola with nothing but an FTP drop, a call to directory assistance and unbelievable chutzpah.)

The rest of this is more abstract, hacker-culture advice; it's less technical, but it's the sort of thing I read a lot of on my way here.

For more about ethical hacking, I'd be remiss if I didn't mention Aaron Swartz; he was instrumental in establishing Creative Commons licensing, the RSS protocol, the Markdown text-formatting language, Reddit and much else. As part of his activism, he mass-harvested academic journal articles from JSTOR using a guest account at MIT. The feds arrested him and threatened him with thirty-five years in prison, and he took his own life before going to trial. It's one of the saddest stories of the internet age, I think, and it struck me particularly because it seemed like the kind of thing I'd have done, if I'd been smarter, more civic-minded, and more generally virtuous. There's a documentary, The Internet's Own Boy, about him.

Mark Pilgrim is a web-standards guy who previously blogged a great deal, but disappeared from public (internet) life around 2011. He wrote about the freedom to tinker, early internet history, long-term preservation (see also), and old-school copy protection, among other things.

I'll leave you with two more items. First, a very short talk, "wat", by Gary Bernhardt, on wacky edge cases in programming languages. And second, a book recommendation. If you haven't read it before, Gödel, Escher, Bach is a wonderfully fun and challenging read; it took me most of my senior year of high school to get through it, but I'd never quite read anything like it. It's not directly about programming, but it's a marvelous example of the hacker mindset. MIT OpenCourseWare has a supplemental summer course on it. (The author's style isn't for everyone; if you do like it, his follow-up Le Ton beau de Marot (about language and translation) is also very, very good.)

I hope you enjoy; please feel free to send this around to your classmates--let me know if you have any more specific questions, or any feedback. Thanks!


As we Coron-on

To explain my “humor”: “Coron-on” means to “drone on” with the coronavirus blues.

I have taken a few weeks off from commenting about this “pandemic.” But first, let us look at the numbers for today, 7/6/20: worldwide, total cases are 11,483,400, with 534,938 total deaths. In the USA, we have 2,288,915 cases, with 130,007 deaths. Worldwide, that is 0.147% of the population infected and a 4.66% death rate among confirmed cases. The USA is almost 5 times greater at 0.695% infected, with a death rate among confirmed cases of about 5.68% (130,007 / 2,288,915). When looking at these numbers, remember that the Spanish Flu of 1918 had an overall infection rate of 27% and a death rate between 3.4% and 20%, so we are not close to those “pandemic levels.”

The one thing these numbers tell us is that while, yes, they are still large and will remain so, the virus is more widespread in the USA than in the world overall, and possibly more widespread than we know. Still, the share of the population infected remains small, although the USA is trending to reach the 1% mark on 7/13. Also, the USA is testing far more people than anywhere else in the world, which appears to be part of why our infection rate is approximately 5x greater, while our death rate among confirmed cases is slightly higher than the world's.

The CCP-Virus is so named because the Chinese Communist Party (CCP) has been controlling the information concerning the source and the initial infection rate. Also, the virus appears to have been developed in the lab in Wuhan, where it seems to have escaped. Just so it’s clear, the USA is not blameless in this: Dr. Fauci’s agency, NIAID, gave the Wuhan lab $3.4M to study “gain of function” research with coronavirus in bats. For clarity, “gain of function” research is genetically modifying the virus to “see” what they can do with it.

Second, this has become hyper-political; we had to close the economy for a while before people got a handle on the problem. This problem has been compounded by a conflict between President Trump and others. For example, Trump suggesting HCQ as a “cure” based on anecdotal data from using the common low-cost drug was lambasted by his opponents and science for having no clear tests. The press latched on to the conflict without looking at the data, and science was complicit for not doing any meaningful tests. Well, they are starting to trickle in with amazing results compared to the drugs that cost thousands yet are still being promoted while HCQ results are ignored—no idea why this is going on, but it’s interesting to observe.

In watching the debate, I notice it’s similar to the Climate Change debate, where only one group of individuals (including scientists) is allowed to have an opinion, while other groups of individuals (including scientists) are not allowed to speak. As I have mentioned before, social media has suppressed opinions outside what they wish to promote, which is why I no longer participate on Facebook (other than promoting our business). The question I have is, why? If there is data suggesting something is wrong, why suppress it? Why not discuss it? Isn’t that what SCIENCE is about? Think about what we would not know if the debate had been suppressed in the past!!

Anyway, look at the numbers and look at what we were told. In case you did not know, I pull the numbers off the Johns Hopkins site and compile them, and I also do a “curve fitting” graph to help predict the future. When I first reported, the rate of infection was an 11.29% daily increase; now it’s around 1.75%. Now the press has been talking about a “spike” in new cases as we open. My charts show this “spike” in the USA as going from a 1.45% daily increase in new cases to 1.83%, not really a “spike.” Now I will say the daily number of cases has risen, and the percentage has gone down, since the overall number of cases this percentage is based on is getting larger, but this is a numbers game. The overall percentages are where we live for perspective. A million is huge when compared to my bank account but small when compared to the national debt; that is why you use percentages to get a perspective on the overall size.
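The point that the daily count can rise while the daily percentage falls is plain arithmetic: the percentage is new cases divided by a cumulative total that keeps growing. A minimal sketch, assuming “daily increase” means new cases over the previous day's cumulative total (the exact formula isn't spelled out here) and using made-up counts rather than the Johns Hopkins data.

```python
def daily_growth_rates(cumulative):
    """Return (new_cases, percent_increase) for each day after the first.

    percent_increase is new cases divided by the previous day's cumulative
    total, so the same number of new cases is a smaller percentage of a
    larger base.
    """
    out = []
    for prev, cur in zip(cumulative, cumulative[1:]):
        new = cur - prev
        out.append((new, 100.0 * new / prev))
    return out

# Hypothetical cumulative case counts, not real data.
cumulative = [1000, 1100, 1205, 1315]
for new, pct in daily_growth_rates(cumulative):
    print(f"{new} new cases, {pct:.2f}% daily increase")
```

Here new cases rise each day (100, 105, 110) while the daily percentage falls.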

One thing I have noticed: a month ago, after the country opened, irresponsible protesters held massive protests in the USA without following the “social distancing” rule, and those protests seem to correlate more closely with the increase in cases than the “opening” has.

In Oregon, we are only partially open. I say partially since many restaurants are remaining take-out only because they don’t want to open only to have the Governor close them down again. It’s a cost and risk they will wait on taking. We are now required to wear masks indoors, and that’s receiving resistance. Why? Because there is mixed information on their effectiveness. We were initially told only N95 masks would work and that “bandanas” were worthless. Now anything over the face is fine. Hence it’s starting to look like government control rather than health concerns. This rule, combined with the 4th of July events being shut down while a BLM protest of over 1000 people was allowed in Salem, has caused division. You can see why many believe this is starting to look more like a political/cabal-driven population control method.

On a Biblical side, massive floods and locust infestations in China make this look closer and closer to “end times” prophecies. A YouTuber said it best: we as Christians must pray in the name of Jesus, by NAME, for those who need salvation in Jesus. So … if you’re reading this and don’t understand Jesus, feel free to contact me via email c v s (at) b e l l s o u t h (dot) n e t (spaced out to prevent spam :) ).


Integration | Exercise 9.2 | Question 3 Solution | Elements of Mathematics

topic 9 integration and national unity

1 note

·

View note

Text

On statistical learning in graphical models, and how to make your own [this is a work in progress...]

I’ve been doing a lot of work on graphical models, and at this point if I don’t write it down I’m gonna blow. I just recently came up with an idea for a model that might work for natural language processing, so I thought that for this post I’ll go through the development of that model, and see if it works. Part of the reason why I’m writing this is that the model isn’t working right now (it just shrieks “@@@@@@@@@@”). That means I have to do some math, and if I’m going to do that why not write about it.

So, the goal will be to develop what the probabilistic people in natural language processing call a “language model” – don’t ask me why. This just means a probability distribution on strings (i.e. lists of letters and punctuation and numbers and so on). It’s what you have in your head that makes you like “hi how are you” more than “1$…aaaXD”. Actually I like the second one, but the point is we’re trying to make a machine that doesn’t. Anyway, once you have a language model, you can do lots of things, which hopefully we’ll get to if this particular model works. (I don’t know if it will.)

The idea here is that if we go through the theory and then apply it to develop a new model, then maybe You Can Too TM. In a move that is pretty typical for me, I misjudged the scope of the article that I was going for, and I ended up laying out a lot of theory around graphical models and Boltzmann machines. It’s a lot, so feel free to skip things. The actual new model is developed at the end of the post using the theory.

Graphical models and Gibbs measures

If we are going to develop a language model, then we are going to have to build a probability distribution over, say, strings of length 200. Suppose we only allow lowercase letters and maybe some punctuation in our strings, so that our whole alphabet has size around 30. Then our state space already has size $30^{200}$. In general, a state space this huge will be very hard to explore, and ordinary strategies for sampling from or studying our probability distribution (like rejection or importance sampling) will not work at all. But what if we knew something nice about the structure of the interdependence of our 200 variables? For instance, a reasonable assumption for a language model to make is that letters which are very far away from each other have very little correlation; $n$-gram models use this assumption and let that distance be $n$.

It would be nice to find a way to express that sort of structure so that it could be exploited. Graphical models formalize this in an abstract setting by using a graph to encode the interdependence of the variables, and they do so in such a way that statistical ideas like marginal distributions and independence can be expressed neatly in the language of graphs.

For an example, let $V=\{1,\dots,n\}$ index some random variables $X_V=(X_1,\dots,X_n)$ with joint probability mass function $p(x_V)$. What would it mean for these variables to respect a graph like the following (here $n=5$)?

In other words, we’d like to say something about the pmf $p$ that would guarantee that observing $X_5$ doesn’t tell us anything interesting about $X_1$ if we already knew $X_4$. Well,

Def 0. (Gibbs random field, pmf/pdf version) Let $\mathcal{C}$ be the set of cliques in a graph $G=(V,E)$. Then we say that a collection of random variables $X_V$ with joint probability mass function or probability density function $p$ is a Gibbs random field with respect to $G$ if $p$ is of the form $$p(x_V) = \frac{1}{Z_\beta} e^{-\beta H(x_V)},$$ where the energy $H$ factorizes into clique potentials: $$H(x_V)=\sum_{C\in\mathcal{C}} V_C(x_C).$$

Here, if $A\subset V$, we use the notation $x_A$ to indicate the vector $x_A=(x_i;i\in A)$.

For completeness, let’s record the measure-theoretic definition. We won’t be using it here so feel free to skip it, but it can be helpful to have it when your graphical model mixes discrete variables with continuous ones.

Def 1. (Gibbs random field) For the same $G$, we say that a collection of random variables $X_V\thicksim\mu$ is a Gibbs random field with respect to $G$ if $\mu$ can be written $$\mu(dx_V)=\frac{\mu_0(dx_V)}{Z_\beta} e^{-\beta H(x_V)},$$ for some energy $H$ that factorizes over the cliques as above.

Here, $\mu_0$ is the “base” or “prior” measure that appears in the constrained maximum-entropy derivation of the Gibbs measure (notice that this is the same as in exponential families: it’s the measure that $X_V$ would obey if there were no constraint on the energy statistic), but we won’t care about it here, since it’ll just be Lebesgue measure or counting measure on whatever our state space is. Also, $Z_\beta$ is just a normalizing constant, and $\beta$ is the “inverse temperature,” which acts like an inverse variance parameter. See footnote:Klenke 538 for more info and a large deviations derivation.
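To make Def 0 concrete, here is a small brute-force sketch in Python. Everything specific in it is an assumption for illustration: a hypothetical five-node graph (the 4-cycle 1-2-4-3 with node 5 attached to node 4), spins $x_i\in\{-1,+1\}$, and Ising-style edge potentials $V_{\{i,j\}}(x_i,x_j)=-J\,x_ix_j$ with zero singleton potentials. It enumerates all $2^5$ states, so it only works for tiny graphs, but it shows $Z_\beta$ as a plain sum and how raising $\beta$ pushes mass onto low-energy states.

```python
import itertools
import math

# Hypothetical five-node graph (an assumption for illustration): the 4-cycle
# 1-2-4-3 plus node 5 hanging off node 4. Its cliques are single nodes and edges.
NODES = (1, 2, 3, 4, 5)
EDGES = ((1, 2), (1, 3), (2, 4), (3, 4), (4, 5))


def energy(x, J=1.0):
    """H(x_V) = sum over edge cliques of V_C(x_C) = -J * x_i * x_j (Ising-style).

    Singleton cliques are given zero potential here.
    """
    return sum(-J * x[i] * x[j] for i, j in EDGES)


def gibbs_pmf(beta=1.0, J=1.0):
    """Brute-force p(x_V) = exp(-beta * H(x_V)) / Z_beta over all of {-1, +1}^5."""
    weights = {}
    for values in itertools.product((-1, 1), repeat=len(NODES)):
        x = dict(zip(NODES, values))  # x[i] is the spin at node i
        weights[values] = math.exp(-beta * energy(x, J))
    Z = sum(weights.values())  # the partition function Z_beta
    return {state: w / Z for state, w in weights.items()}


# Larger beta (lower temperature) concentrates mass on the two aligned states,
# which are the lowest-energy configurations for J > 0.
for beta in (0.0, 0.5, 2.0):
    pmf = gibbs_pmf(beta)
    print(beta, pmf[(1,) * 5] + pmf[(-1,) * 5])
```

Enumeration costs $2^{|V|}$ evaluations of $H$, which is the same exponential blow-up as the $30^{200}$ strings; at $\beta=0$ the pmf is uniform, and as $\beta$ grows it sharpens around the minimizers of $H$.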

There are two main reasons to care about Gibbs random fields. First, the measures that they obey (Gibbs or Boltzmann distributions) show up in statistical physics: under reasonable assumptions, physical systems that have a notion of potential energy will have the statistics of this distribution. For more details and a plain-language derivation, I like Terry Tao’s post footnote:https://terrytao.wordpress.com/2007/08/20/math-doesnt-suck-and-the-chayes-mckellar-winn-theorem/.

Second, and more to the point here, they have a lot of nice properties from a statistical point of view. For one, they have the following nice conditional independence property:

Def 2a. (Global Markov property) We say that $X_V$ has the global Markov property on $G$ if for any disjoint $A,B,S\subset V$ such that $S$ separates $A$ and $B$ (i.e., any path from $A$ to $B$ in $G$ must pass through $S$), $X_A\perp X_B\mid X_S$, or in other words, $X_A$ is conditionally independent of $X_B$ given $X_S$.

Using the graphical example from above, for instance, we can see what happens when we condition on $X_4=x_4$:

This is what happens in Def 2a. when $S=\{4\}$. Since the node 4 separates the graph into partitions $A=\{1,2,3\}$ and $B=\{5\}$, we can say that $X_1,X_2,X_3$ are independent of $X_5$ given $X_4$, or in symbols $X_1,X_2,X_3\perp X_5\mid X_4$.

The global Markov property directly implies a local property:

Def 2b. (Local Markov property) We say that $X_V$ has the local Markov property on $G$ if for any node $i\in V$, $X_i$ is conditionally independent from the rest of the graph given its neighbors.

In a picture,

Here, we are expressing that $X_2\perp X_3,X_5\mid X_1,X_4$, or in words, $X_2$ is conditionally independent from $X_3$ and $X_5$ given its neighbors $X_1$ and $X_4$. I hope that these figures (footnote:tikz-cd) have given a feel for how easy it is to understand the dependence structure of random variables that respect a simple graph.
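The two Markov statements above can be checked numerically on a small example. This is a brute-force sketch under assumptions: a hypothetical five-node graph chosen to agree with the text (node 4 separates $\{1,2,3\}$ from $\{5\}$, and node 2's neighbors are 1 and 4), with Ising-style pairwise potentials and $\beta=0.7$. It tests conditional independence through the identity $p(x_A,x_B,x_S)\,p(x_S)=p(x_A,x_S)\,p(x_B,x_S)$, checked over every configuration.

```python
import itertools
import math
from collections import defaultdict

# Hypothetical five-node graph, assumed for illustration and chosen to agree with
# the text: node 4 separates {1, 2, 3} from {5}, and node 2 has neighbors {1, 4}.
NODES = (1, 2, 3, 4, 5)
EDGES = ((1, 2), (1, 3), (2, 4), (3, 4), (4, 5))


def gibbs_pmf(beta=0.7, J=1.0):
    """Gibbs pmf with Ising-style energy H(x) = -J * sum_{ij in E} x_i x_j."""
    w = {}
    for s in itertools.product((-1, 1), repeat=len(NODES)):
        x = dict(zip(NODES, s))
        w[s] = math.exp(beta * J * sum(x[i] * x[j] for i, j in EDGES))  # exp(-beta*H)
    Z = sum(w.values())
    return {s: v / Z for s, v in w.items()}


def marginal(pmf, keep):
    """Marginal pmf of the nodes listed in `keep`, keyed by their values in that order."""
    idx = [NODES.index(i) for i in keep]
    out = defaultdict(float)
    for s, p in pmf.items():
        out[tuple(s[k] for k in idx)] += p
    return out


def cond_indep(pmf, A, B, S, tol=1e-12):
    """True iff p(a, b, s) * p(s) == p(a, s) * p(b, s) for every configuration."""
    p_abs = marginal(pmf, A + B + S)
    p_as, p_bs, p_s = marginal(pmf, A + S), marginal(pmf, B + S), marginal(pmf, S)
    for key, p in p_abs.items():
        a, b, s = key[:len(A)], key[len(A):len(A) + len(B)], key[len(A) + len(B):]
        if abs(p * p_s[s] - p_as[a + s] * p_bs[b + s]) > tol:
            return False
    return True


pmf = gibbs_pmf()
print(cond_indep(pmf, (1, 2, 3), (5,), (4,)))  # global Markov with S = {4}
print(cond_indep(pmf, (2,), (3, 5), (1, 4)))   # local Markov at node 2
print(cond_indep(pmf, (1,), (5,), ()))         # no conditioning: dependent
```

With this graph the first two checks print `True` and the last prints `False`: $X_1$ and $X_5$ are correlated until we condition on the separating node.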

It’s not so hard to check that a Gibbs measure satisfies these properties on its graph. If $X_V\thicksim p$ where $p$ is a Gibbs density/pmf, then $p$ factorizes over the cliques as follows: $$p(x_V)=\frac{1}{Z_\beta}\prod_{C\in\mathcal{C}} \psi_C(x_C),\qquad \psi_C(x_C)=e^{-\beta V_C(x_C)}.$$ (The factors $\psi_C$ are nonnegative functions of $x_C$ alone; in general they are not marginal distributions.) This factorization means that $X_V$ is a “Markov random field” in addition to being a Gibbs random field, and the Markov properties follow directly from this factorization footnote:mrfwiki. The details of the equivalence between Markov random fields and Gibbs random fields are given in the Hammersley-Clifford theorem footnote:HCThm.

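This means that in situations where $V$ is large and $X_V$ is hard to sample directly, there is still a nice way to sample from the distribution: by the local Markov property, the conditional distribution of each $X_i$ given all the other variables depends only on its neighbors, and only the clique potentials containing $i$ enter into it. These conditionals get cheaper to work with as $G$ gets sparser.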
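To see why sparsity helps, here is a sketch under the same kind of assumptions (a hypothetical five-node graph and the Ising-style energy $H(x)=-J\sum_{\{i,j\}\in E}x_ix_j$) comparing the conditional $p(x_i=+1\mid x_{V\setminus\{i\}})$ computed from the full joint weights with the same quantity computed from the neighbors of $i$ alone. For this energy the local formula is $1/(1+e^{-2\beta J s_i})$, where $s_i$ is the sum of the neighboring spins, and the partition function never has to be computed.

```python
import itertools
import math

# Hypothetical five-node graph and Ising-style energy (assumptions for illustration):
# H(x) = -J * sum over edges of x_i * x_j, with spins x_i in {-1, +1}.
NODES = (1, 2, 3, 4, 5)
EDGES = ((1, 2), (1, 3), (2, 4), (3, 4), (4, 5))
NEIGHBORS = {i: [j for e in EDGES if i in e for j in e if j != i] for i in NODES}


def weight(x, beta, J):
    """Unnormalized Gibbs weight exp(-beta * H(x)); touches every edge."""
    return math.exp(beta * J * sum(x[i] * x[j] for i, j in EDGES))


def brute_force_conditional(x, i, beta, J):
    """p(X_i = +1 | all other X_j), from the full joint weights (Z_beta cancels)."""
    w_up, w_down = weight({**x, i: 1}, beta, J), weight({**x, i: -1}, beta, J)
    return w_up / (w_up + w_down)


def local_conditional(x, i, beta, J):
    """The same conditional, using only the neighbors of i.

    Every edge term not containing i is identical for x_i = +1 and x_i = -1,
    so it cancels, leaving a logistic function of the neighbor sum.
    """
    s = sum(x[j] for j in NEIGHBORS[i])
    return 1.0 / (1.0 + math.exp(-2.0 * beta * J * s))


beta, J = 0.7, 1.0
max_diff = 0.0
for values in itertools.product((-1, 1), repeat=len(NODES)):
    x = dict(zip(NODES, values))
    for i in NODES:
        diff = abs(local_conditional(x, i, beta, J) - brute_force_conditional(x, i, beta, J))
        max_diff = max(max_diff, diff)
print(NEIGHBORS[2], max_diff < 1e-12)
```

This prints `[1, 4] True`. The local version costs work proportional to the degree of $i$ rather than to $|E|$, which is what makes per-variable updates cheap on sparse graphs.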

The Gibbs sampler

The Gibbs sampler is a simpler alternative to methods like Metropolis-Hastings, first named in an amazing paper by Stu and Don Geman footnote:thatpaper. It’s best explained algorithmically. So, say that you had a pair of variables $X,Y$ whose joint distribution $p(x,y)$ is unknown or hard to sample from, but where the conditional distributions $p(x\mid y)$ and $p(y\mid x)$ are easy to sample. (This might sound contrived, but the language model we’re working towards absolutely fits into this category, so we will be using this a lot.) How can we sample faithfully from $p(x,y)$ using these conditional distributions?

Well, consider the following scheme. For the first step, given some initialization $x_0$ for $X$, sample $y_0\thicksim p(\cdot\mid x_0)$. Then at the $i$th step, sample $x_{i}\thicksim p(\cdot\mid y_{i-1})$, and then sample $y_{i}\thicksim p(\cdot \mid x_i)$. The claim is that, as $i$ grows, the distribution of the Markov chain $(X_i,Y_i)$ approaches $p(x,y)$, so that late iterates serve as approximate samples from $p(x,y)$.
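Here is a minimal Python sketch of the two-variable scheme above. The joint pmf on $\{0,1\}\times\{0,1,2\}$ is made up for illustration; the sampler only ever uses its rows and columns as unnormalized conditionals, and the burn-in length, run length, and seed are arbitrary choices.

```python
import random
from collections import Counter

# Hypothetical joint pmf p(x, y) on {0, 1} x {0, 1, 2} (an assumption for
# illustration). Rows are x, columns are y; all entries are positive.
P = [[0.10, 0.20, 0.05],
     [0.25, 0.15, 0.25]]
XS, YS = range(2), range(3)


def sample_x_given_y(y, rng):
    """x ~ p(. | y): weight each x by P[x][y]; the weights need not sum to one."""
    return rng.choices(XS, weights=[P[x][y] for x in XS])[0]


def sample_y_given_x(x, rng):
    """y ~ p(. | x): weight each y by P[x][y]."""
    return rng.choices(YS, weights=P[x])[0]


def gibbs(num_steps, x0=0, burn_in=1_000, seed=0):
    """Run the two-variable Gibbs sampler and return the post-burn-in pairs."""
    rng = random.Random(seed)
    x = x0
    y = sample_y_given_x(x, rng)          # y_0 ~ p(. | x_0)
    samples = []
    for i in range(1, num_steps + 1):
        x = sample_x_given_y(y, rng)      # x_i ~ p(. | y_{i-1})
        y = sample_y_given_x(x, rng)      # y_i ~ p(. | x_i)
        if i > burn_in:
            samples.append((x, y))
    return samples


samples = gibbs(50_000)
counts = Counter(samples)
for x in XS:
    # Empirical frequencies next to the target row P[x].
    print([round(counts[(x, y)] / len(samples), 3) for y in YS], P[x])
```

With 49,000 kept samples the empirical frequencies should land close to the entries of `P`. Consecutive samples are correlated, so the effective sample size is smaller than the raw count; for this small table the chain mixes quickly.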

It is easy to see that $p$ is an invariant distribution for this Markov chain. Indeed, suppose that $(X_0,Y_0)\thicksim p(X,Y)$, and draw $X_1\thicksim p(X\mid Y=Y_0)$. Since $Y_0$ on its own is distributed according to its marginal $p(Y)$, we get $(X_1,Y_0)\thicksim p(X\mid Y)p(Y)=p(X,Y)$. In particular, $X_1$ is distributed according to its marginal $p(X)$, so that if $Y_1$ is drawn from $p(Y\mid X=X_1)$, then by the same logic the pair $(X_1,Y_1)\thicksim p(Y\mid X)p(X)=p(X,Y)$.
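The invariance argument can also be checked exactly, without sampling, by pushing a whole distribution through one sweep. This sketch uses a made-up $2\times 3$ pmf with exact `Fraction` arithmetic (an assumption for illustration) and implements the two half-steps as maps on joint distributions: resampling $X$ sends $q(x,y)$ to $q(y)\,p(x'\mid y)$, and resampling $Y$ sends it to $q(x)\,p(y'\mid x)$. Starting from $p$, one sweep returns $p$ exactly; starting from a point mass, the distance to $p$ shrinks sweep by sweep.

```python
from fractions import Fraction as F

# Hypothetical joint pmf p(x, y) on {0, 1} x {0, 1, 2}, stored as exact fractions.
P = [[F(10, 100), F(20, 100), F(5, 100)],
     [F(25, 100), F(15, 100), F(25, 100)]]
XS, YS = range(2), range(3)
p_x = [sum(P[x][y] for y in YS) for x in XS]  # marginal p(x)
p_y = [sum(P[x][y] for x in XS) for y in YS]  # marginal p(y)


def resample_x(Q):
    """Law of (X', Y) when (X, Y) ~ Q and X' ~ p(. | Y): Q(y) * p(x' | y)."""
    return [[sum(Q[x][y] for x in XS) * P[x_new][y] / p_y[y] for y in YS]
            for x_new in XS]


def resample_y(Q):
    """Law of (X, Y') when (X, Y) ~ Q and Y' ~ p(. | X): Q(x) * p(y' | x)."""
    return [[sum(Q[x][y] for y in YS) * P[x][y_new] / p_x[x] for y_new in YS]
            for x in XS]


# One full sweep leaves p exactly unchanged: p is invariant.
print(resample_y(resample_x(P)) == P)

# Starting instead from a point mass at (0, 0), repeated sweeps move toward p.
Q = [[F(1), F(0), F(0)], [F(0), F(0), F(0)]]
for sweep in range(5):
    Q = resample_y(resample_x(Q))
    print(sweep, float(max(abs(Q[x][y] - P[x][y]) for x in XS for y in YS)))
```

The first line prints `True`. The shrinking distances are a numerical hint of convergence for this particular chain, not a proof of it in general.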

The proof that this invariant distribution is the limiting distribution of the Markov chain is more involved and can be found in the Geman paper, but to me this is the main intuition.

This sampler is especially useful in the context of graphical models, and more so when the graph has some nice regularity. The Gemans make great use of this to create a parallel algorithm for sampling from their model, which would otherwise be very hard to study. For a simpler example of the utility, consider a lattice model (you might think of an Ising model), i.e. say that $G$ is a piece of a lattice: take $V=\{1,\dots,n\}\times\{1,\dots,n\}$ and let $E=\{((h,i),(j,k)) : \lvert h-j\rvert + \lvert i-k\rvert=1\}$ be the nearest-neighbor edge system on $V$. Say that $X_V$ form a Gibbs random field with respect to that lattice (here we let $n=4$):